1. Introduction

Let ![]() $F\,:\,\mathbb{R}^d \rightarrow \mathbb{R}^d, d \geq 1$, be a vector field. For much of what follows F arises as the gradient of a potential function V, namely

$F\,:\,\mathbb{R}^d \rightarrow \mathbb{R}^d, d \geq 1$, be a vector field. For much of what follows F arises as the gradient of a potential function V, namely ![]() $V\,:\,\mathbb{R}^d \rightarrow \mathbb{R}$, and

$V\,:\,\mathbb{R}^d \rightarrow \mathbb{R}$, and ![]() $F=-\nabla V$. Now, we define a system driven by

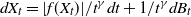

$F=-\nabla V$. Now, we define a system driven by

To elaborate on the parameters, let $\mathcal{F}_n$ be a filtration; then $a_n,\xi_n$ are adapted, and the $\xi_n$ constitute martingale differences, i.e. $\mathbb{E}(\xi_{n+1}|\mathcal{F}_n) = 0$. For the purposes of this introduction we will simplify and assume, without any great loss of abstraction, that $a_n$ is deterministic and either is a constant number or is converging to zero comparably to $n^{-\gamma}$ (i.e. it is $\Theta\left(n^{-\gamma}\right)$), where $\gamma \in (1/2,1]$. Also, some additional assumptions on the noise are usually required: one is a boundedness constraint, that is, we assume the existence of a constant M such that $|\xi_n|\leq M$ almost surely (a.s.); and secondly we want $\xi_n$ to be quasi-isotropic (see [DKLH18]), i.e., $\mathbb{P}( (\theta \cdot \xi_n)^{+}>\delta )>\delta$ for any unit direction $\theta \in \mathbb{R}^d$. This condition makes sure that the process gets jiggled in every direction. This versatile system is well studied, and it arises naturally in many different areas. In machine learning and statistics, (1) can be a powerful tool for quick optimization and statistical inference (see [AAZB+17], [LLKC18], [CdlTTZ16]), among other uses. Furthermore, many urn models are represented by (1). These processes play a central role in probability theory due to their wide applicability in physics, biology, and social sciences; for a comprehensive exposition on the subject see [Pem07].
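
As a rough illustration of the recursion (1), the following minimal sketch assumes the update takes the standard stochastic-approximation form $X_{n+1}=X_n+a_n\,(F(X_n)+\xi_{n+1})$ with $a_n=n^{-\gamma}$. The function name `simulate`, the quadratic test potential $V(x)=|x|^2/2$, and the noise with independent coordinates uniform on $[-1,1]$ (bounded, mean zero, and spread over every direction) are illustrative assumptions, not taken from the text.

```python
import math
import random


def simulate(grad_v, x0, gamma, n_steps, rng):
    """Iterate X_{n+1} = X_n + a_n * (F(X_n) + xi_{n+1}) with F = -grad V.

    This is an assumed form of recursion (1), with a_n = n**(-gamma).
    """
    x = list(x0)
    for n in range(1, n_steps + 1):
        a_n = n ** (-gamma)
        g = grad_v(x)
        # Bounded, mean-zero noise: i.i.d. coordinates uniform on [-1, 1].
        xi = [rng.uniform(-1.0, 1.0) for _ in x]
        x = [x_i + a_n * (-g_i + xi_i) for x_i, g_i, xi_i in zip(x, g, xi)]
    return x


def grad_quadratic(x):
    """Gradient of the hypothetical test potential V(x) = |x|^2 / 2."""
    return list(x)


final = simulate(grad_quadratic, [2.0, -1.0], gamma=0.75, n_steps=20000,
                 rng=random.Random(0))
print("distance from the minimizer:", math.hypot(*final))
```

For this strongly convex toy potential the decreasing step size averages the noise out, so the iterates settle near the unique minimizer; the interesting behavior studied here concerns potentials for which the limit point is a saddle instead.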

In machine learning, processes satisfying (1) appear in stochastic gradient descent (SGD). First, to provide context, let us briefly introduce the gradient descent method (GD) and then see why SGD arises naturally from it. The GD is an optimization technique which finds local minima for a potential function V via the iteration

$$x_{n+1} = x_n - \eta_n \nabla V(x_n), \tag{2}$$

where in many applications we take $\eta_n$ to be positive and constant. Notice that (2) is a specialization of (1), with $F=-\nabla V$, $\xi_{n+1}\equiv 0$, and $a_n=\eta_n$. The above method when applied to non-convex functions has the shortcoming that it may get stuck near saddle points (i.e. points where the gradient vanishes, that are neither local minima nor local maxima), or may locate local minima instead of global ones. The former issue can be resolved by adding noise into the system, which, consequently, helps in pushing the particle downhill and eventually escaping saddle points (see [Pem90] and [KY03, Section 5.8]). For the latter, in general, avoiding local minima is a difficult problem ([GM91] and [RRT17]); however, fortunately, in many instances finding local minima is satisfactory. Recently there have been several problems of interest where this is indeed the case, either because all local minima are global minima ([GHJY15] and [SQW17]), or, in other cases, because local minima provide equally good results as global minima [CHM15]. Furthermore, in certain applications saddle points lead to highly suboptimal results ([JJKN15] and [SL16]), which highlights the importance of escaping saddle points.
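
The effect of noise near a saddle can be seen in a toy computation. The sketch below is only an illustration under stated assumptions: the potential $V(x,y)=x^2-y^2$ (a strict saddle at the origin), the helper names `grad_v` and `descend`, the constant step size, and the uniform noise are all hypothetical choices made for the example.

```python
import random


def grad_v(x, y):
    """Gradient of the toy potential V(x, y) = x**2 - y**2 (strict saddle at 0)."""
    return 2.0 * x, -2.0 * y


def descend(x, y, eta, n_steps, noise=0.0, rng=None):
    """Run x_{n+1} = x_n - eta * (grad V(x_n) + noise * xi_{n+1})."""
    for _ in range(n_steps):
        gx, gy = grad_v(x, y)
        xi_x = xi_y = 0.0
        if noise > 0.0:
            xi_x, xi_y = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        x -= eta * (gx + noise * xi_x)
        y -= eta * (gy + noise * xi_y)
    return x, y


# Plain GD started on the stable axis y = 0 converges to the saddle (0, 0).
gd_x, gd_y = descend(1.0, 0.0, eta=0.1, n_steps=200)

# With noise, the unstable y-direction is excited and the iterate leaves.
sgd_x, sgd_y = descend(1.0, 0.0, eta=0.1, n_steps=200, noise=1.0,
                       rng=random.Random(1))

print("GD end point:", (gd_x, gd_y))
print("noisy |y|   :", abs(sgd_y))
```

Since this toy potential is unbounded below, "escaping" simply means that the $y$-coordinate grows without bound; in a realistic potential the iterate would instead continue downhill towards a local minimum.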

As described in the previous paragraph, escaping saddle points when performing SGD is an important problem. The saddle problem is well understood when nondegeneracy conditions are imposed. Results showing that asymptotically SGD will escape saddle points date back to works of Pemantle [Pem91] and, more recently, [LSJR16], where the authors prove that random initialization guarantees almost sure convergence to minimizers. The establishment of asymptotic convergence subsequently led to results on how this can be done efficiently [LSJR16].

Processes satisfying (1), when $a_n$ goes to zero, are known as stochastic approximations, after [RM51]. These processes have been extensively studied since then [KY03]. An important feature is that the step size $a_n$ satisfies

$$\sum_{n\geq 1} a_n = \infty \quad\text{and}\quad \sum_{n\geq 1} a_n^2 < \infty.$$

This property balances the effects of the noise in the system, so that there is an implicit averaging that, eventually, eliminates the effects of the noise. The previously described system hence behaves similarly to the mean flow: the ordinary differential equation whose right-hand side corresponds to the expectation of the driving term ($F(X_t)$). This heuristic can help us identify the support S of the limiting process $X_\infty := \lim_{n \rightarrow \infty} X_n$ in terms of the topological properties of the dynamical system $\frac{\mathrm{d}X_t}{\mathrm{d}t}=F(X_t)$ (see [KY03, Chapter 5]). More specifically, in most instances, one can argue that attractors are in S, whereas repellers or ‘strict’ saddle points are not (see [KY03, Section 5.8]). However, there has not been a systematic approach to finding when a degenerate saddle point, i.e. a point that is neither an attractor nor a repeller, belongs in S.
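
For the polynomial step sizes $a_n=n^{-\gamma}$ the role of the range $\gamma\in(1/2,1]$ can be checked directly. The computation below is a sketch which assumes that the step-size property in question is the classical Robbins-Monro pair of conditions (infinite total step length, finite sum of squared steps).

```latex
\begin{aligned}
\sum_{n\ge 1} a_n &= \sum_{n\ge 1} n^{-\gamma} = \infty
  &&\Longleftrightarrow\quad \gamma \le 1,\\
\sum_{n\ge 1} a_n^2 &= \sum_{n\ge 1} n^{-2\gamma} < \infty
  &&\Longleftrightarrow\quad \gamma > \tfrac12,\\
\sum_{m\ge n} a_m^2 &\sim \frac{n^{1-2\gamma}}{2\gamma-1} \longrightarrow 0
  &&\text{as } n\to\infty \text{ when } \gamma > \tfrac12 .
\end{aligned}
```

The first line says the iterates can travel arbitrarily far, so the drift is never switched off; the last line says that the variance still to be injected after time $n$ (for noise with bounded conditional variance) vanishes, which is the implicit averaging referred to above.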

Stochastic approximations arise naturally in many different contexts. Some early results were published by [Rup88] and [PJ92]. There, the authors dealt with averaged stochastic gradient descent (ASGD) arising from a strongly convex potential V with step size $n^{-\gamma}, \gamma \in (1/2,1]$. In their work they proved that one can build, with proper scaling, consistent estimators $\tilde{x}_n$ (for the $\arg \min(V)$) whose limiting distribution is Gaussian. In learning problems, a modified version of ASGD [RSS12] provides convergence rates to global minima of order $n^{-1}$. Additionally, many classical urn processes can be described via (1), where $a_{n}$ is of the order of $n^{-1}$. Certain efforts are being made towards understanding the support of the limiting process $X_{\infty}$. In specific instances, the underlying problem boils down to understanding an SGD problem: to characterize the support of $X_\infty$ in terms of the class of critical points of the corresponding potential V. For a comprehensive exposition on urn processes see [Pem07].

From the previous discussion, some fundamental questions of interest regarding (1) are the following:

1. Does $X_n$ converge?

2. When does $X_n$ converge to (local) minima, consequently avoiding saddle points?

3. When does $X_n$ converge to global minima?

4. How fast does $X_n$ converge to local minima?

When F arises from a potential function V, the first question is for the most part settled: the limit of the process converges, and it is supported on a subset of the set of critical points of V (see [KY03, Chapter 5]).

Here, our primary focus will be understanding the second question in a one-dimensional setting. More specifically, we will work with processes that solve

To put this in context, the antiderivative of $-f$ would correspond to the potential function $-V$. Therefore, if a point p has a neighborhood $\mathcal{N}$ such that f is positive except for $f(p)=0$, then the point p would be a saddle point.

Problem 1.1. Let $(X_n)_{n\geq 1}$ solve (3). Suppose that p is a saddle point. Find the threshold value, denoted $\tilde{\gamma}$, for $\gamma$, should it exist, such that the following hold:

1. When $\gamma \in (1/2, \tilde{\gamma})$, $\mathbb{P}(X_n\rightarrow p) = 0$.

2. When $\gamma \in (\tilde{\gamma},1]$, $\mathbb{P}(X_n\rightarrow p)>0$.

Part 1 of Problem 1.1 guarantees that the SGD avoids saddle points, and hence converges to local minima. Choosing $\gamma$ appropriately in the first regime (i.e. $\gamma\in (1/2, \tilde{\gamma})$) enables us to optimize the performance of the SGD. In practice, choosing a small step size can slow the rate of convergence; however, a bigger step size may lead the process to bounce around (see [BR95] and [SL87]). In [EDM01] the authors study the rate of convergence for polynomial step sizes in the context of Q-learning for Markov decision processes, and they experimentally demonstrate that for $\gamma$ approximately $\tfrac{17}{20}$ the rate of convergence is optimal.

In the literature there are many results of this type. However, as already mentioned, the vast majority of them require the saddle points to satisfy certain nondegeneracy conditions. In fact, nondegenerate saddle points will never be in the support of $X_\infty$. Interestingly enough, the previous conclusion is not always valid for degenerate ones; see [Pem91], in which the support of $X_\infty$ for a generalized urn model [HLS80] fitting (3) for $\gamma=1$ is characterized in terms of a ‘general’ function f. However, we show that for any V, under some mild conditions, we can find $\gamma$ such that saddle points do not belong to S (see Theorems 1.2 and 1.4). Hence, we demonstrate that implementing SGD, by adding enough noise, gives the desired asymptotic behavior even in the degenerate case.

It is the hope of the author that this work is a step towards understanding a broader class of non-convex problems. One prospective application would be analyzing complex systems that can be studied by finding a corresponding simpler one-dimensional system. Although non-convex optimization problems are, generally, NP-hard (for a discussion in the context of escaping saddle points see [AG16]), it would be possible to extend the results of this paper to certain classes of problems in higher dimensions, as we are focusing on the asymptotic behavior of the system. Potentially, such an extension can be achieved by reducing the multidimensional problem to a suitable one-dimensional problem and then applying the results of this paper. For an example where the analysis of the asymptotic behavior of a system of stochastic approximations relies on reducing the problem to a one-dimensional problem, see [Pem90]. Also we are trying to establish that if we understand the underlying dynamical system sufficiently, then by adding enough noise, we can guarantee that the process will wander until it is captured by a downhill path, and thus it will eventually escape the unstable neighborhood. Finally, this paper, and even more so a multidimensional extension of it, can serve as a theoretical guarantee of convergence, much in the spirit of the works of [LSJR16] and [Pem90], which were succeeded by efficient algorithms [JGN+17].

To extend results to the multidimensional setting using this paper, one would need to find a suitable corresponding one-dimensional system. One potential path to accomplish this is to use a Łojasiewicz-type inequality; for a reference see [Spr, Theorem 2] and [Son12, Lemma 3.2, p. 315]. Before we state the inequality we will need a definition.

Definition 1.1. Suppose that $V\,:\,\mathbb{R}^n \to \mathbb{R}$. The zero set of V is denoted by $Z_V = \{x\in \mathbb{R}^n : V(x)=0 \}$.

Theorem 1.1. Let V be defined as before. Let $Z_V$ denote the zero set of V. Then there is an open set $0\in \mathcal{O}$ such that there is a positive constant $k\in(1,2)$ such that the following holds:

Now suppose that $X_n$ satisfies (1), where $F=-\nabla V$ and $a_n = \frac{1}{n^\gamma}$. Furthermore, assume $V\,:\,\mathbb{R}^d\rightarrow \mathbb{R}$ is an analytic function such that $V(0)=\nabla V(0)=0$. Hereby, we assume that 0 is a saddle point and that it is also an isolated critical point.

To study whether $X_n\to 0$, our candidate line of attack consists of three distinct steps.

• We start by studying the process $(V(X_n))_{n\geq 1}$. Then Theorem 1.1 should give an upper bound on $|V(X_n)|$.

• Then the process $X_n$ may wander into the realm where $V(X_n)<0$ with probability bounded from below.

• Lastly, we show that when $V(X_n) <0$, the process may stay negative with probability bounded from below; hence we conclude that $\mathbb{P}(X_n\rightarrow 0) =0$.

For the second part of the strategy we notice that the path from $X_n$ to $z\in Z_V$ along the flow $x'_t= -\nabla V(x_t)$ has length $V(X_n)$. So we should expect that as long as $V(X_n)$ and the remaining noise in the recursion (1) are comparable, then $X_n$ may wander into the realm where $V(X_n)<0$.

To expand on the third step we need the following definition.

Definition 1.2. Suppose that $V\,:\,\mathbb{R}^n\to \mathbb{R}$ and let $x \in \mathbb{R}^n$ such that $V(x)<0$. Denote by $\mathcal{O}_x$ the connected component of $\{ x \in \mathbb{R}^n\,:\,V(x) \leq 0 \}$ such that $x \in \mathcal{O}_x$.

For the last step of the strategy we ought to understand the geometry of the conical region $\mathcal{O}_{X_n}\cap Z_V$. For instance, the surface $\mathcal{O}_{X_n}\cap Z_V$ may be very steep, so that under the slightest perturbation the iterates $X_n$ may return to the realm $V(X_n)>0$. It is important to note that in certain instances, depending on the surface $\mathcal{O}_{X_n}\cap Z_V$, there could be a degenerate saddle point where the iterates could get stuck. However, if we assume, for example, that V is a homogeneous polynomial, then the angular size of this surface near the origin is not changing over time, and so we should expect a probability bounded from below that the process crosses into the region with $V(X_n)<-\delta t^{\frac{1-\gamma}{1-k }}$, where k is given by Theorem 1.1. To gain intuition on the previous bound one can look at the proof of Proposition 6.1.

1.1. Results for the continuous model

We proceed by transitioning to a continuous model. For that purpose we need a potential, a step size, and a noise. The natural choice, which requires no further deliberation, is a process defined by

We assume that $f(0)=0$ and that f is otherwise positive in a neighborhood $\mathcal{N}$ of zero. What we wish to investigate is whether $L_t$ will not converge to 0 with probability 1, or will converge there with some positive probability. The answer to these questions depends only on the local behavior of f on $\mathcal{N}$.
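
To experiment with such a continuous model numerically, one can use an Euler-Maruyama discretization. The sketch below is built on an assumption: it takes the process to solve $\mathrm{d}L_t = t^{-\gamma}\bigl(f(L_t)\,\mathrm{d}t + \mathrm{d}B_t\bigr)$ for $t\geq 1$, which is one natural continuous analogue of the discrete recursion and is not quoted from the text. The function name `euler_maruyama`, the `noise_scale` switch, and the truncated cubic drift are hypothetical choices made for the example.

```python
import math
import random


def euler_maruyama(f, gamma, l1, t_end, dt, rng, noise_scale=1.0):
    """Approximate dL_t = t**(-gamma) * (f(L_t) dt + noise_scale dB_t) on [1, t_end].

    The SDE is an assumed continuous analogue of the recursion.
    """
    n_steps = int(round((t_end - 1.0) / dt))
    t, level = 1.0, l1
    for _ in range(n_steps):
        db = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment over [t, t + dt]
        level += t ** (-gamma) * (f(level) * dt + noise_scale * db)
        t += dt
    return level


# Noise-free sanity check: with f(x) = |x| and gamma = 1 the ODE L' = L / t
# started from L_1 = 1 has the exact solution L_t = t.
check = euler_maruyama(abs, 1.0, 1.0, 2.0, 1e-3, random.Random(0),
                       noise_scale=0.0)

# One noisy path with a drift equal to |x|**3 near zero (truncated away from
# zero to keep the scheme stable), gamma = 0.6, started slightly below zero.
path_end = euler_maruyama(lambda x: min(abs(x), 1.0) ** 3, 0.6, -0.1, 50.0,
                          1e-2, random.Random(0))

print("noise-free check at t = 2:", check)
print("end point of one noisy path:", path_end)
```

Truncating the drift away from zero is harmless for the question at hand, since only the behavior of f on $\mathcal{N}$ matters for convergence to 0; a single path proves nothing, but repeating the run over many seeds gives a feel for how often paths stay near the origin.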

The main non-convergence result is the following.

Theorem 1.2. Suppose that $\mathcal{N}$ is a neighborhood of zero. Let $(L_t)_{t\geq 1}$ be a solution of (4), where f(x) is Lipschitz. We distinguish two cases depending on f and the parameters of the system:

1. $k|x| \leq f(x)$, $k> \frac{1}{2}$, and $\gamma =1$ for all $x\in \mathcal{N}$.

2. $|x|^k \leq f(x)$, $\frac{1}{2}+\frac{1}{2k} \geq \gamma$, and $k>1$ for all $x\in \mathcal{N}$.

If either 1 or 2 holds, then $\mathbb{P}(L_t\rightarrow 0)=0$.

In the first part of the theorem, the result holds even in the case $k=\frac{1}{2}$; however, the proof is omitted to avoid repetition. In Part 1, we have only considered $\gamma=1$ since that is the only critical case; that is, for $\gamma<1$ the effects of the noise would be overwhelming and for all k we would obtain $\mathbb{P}(L_t\rightarrow 0)=0$.

We now state the main convergence theorem.

Theorem 1.3. Suppose that $\mathcal{N}$ is a neighborhood of zero. Let $(L_t)_{t\geq 1}$ be a solution of (4). We distinguish two cases depending on f and the parameters of the system:

1. $k_1|x|\leq f(x)\leq k_2|x|$, $0<k_i<1/2$, and $\gamma=1$ for all $x\in \mathcal{N}\cap(-\infty,0]$.

2. $0<c|x|^k \leq f(x)\leq|x|^k$, $\frac{1}{2}+\frac{1}{2k} <\gamma$, and $k>1$ for all $x\in \mathcal{N}\cap(-\infty,0]$.

If either 1 or 2 holds, then $\mathbb{P}(L_t\rightarrow 0)>0$.

This is proved by first establishing the previous results for monomials, i.e. $f(x)=|x|^k$ or $f(x)=k|x|$, which is done in Sections 3 and 4. We prove the stated theorems in Section 5, by utilizing the comparison results found in Section 2.

In Section 3 we deal with the linear case, i.e. $f(x)=k|x|$. There, the stochastic differential equation (SDE) can be explicitly solved, which simplifies matters to a great extent. Firstly, in Subsection 3.2, we prove that when $k>1/2$, the corresponding process a.s. will not converge to 0, which is accomplished by proving that it will converge to infinity a.s. Secondly, in Subsection 3.3, we show that when $k<1/2$, the process will converge to 0 with some positive probability.
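
To see heuristically why $k=1/2$ separates the two behaviors in the linear case, one can solve the equation on the side where the drift pushes towards the origin. The computation below is a sketch resting on an assumption: it takes the SDE to be $\mathrm{d}L_t = t^{-\gamma}\bigl(f(L_t)\,\mathrm{d}t+\mathrm{d}B_t\bigr)$ with $\gamma=1$, and it is valid only up to the first time $L_t$ reaches 0.

```latex
\begin{aligned}
&\text{On } \{L_t<0\},\ f(L_t)=k|L_t|=-kL_t:\qquad
 \mathrm{d}L_t = -\frac{k}{t}\,L_t\,\mathrm{d}t + \frac{1}{t}\,\mathrm{d}B_t,\\[2pt]
&\mathrm{d}\bigl(t^{k}L_t\bigr) = t^{k-1}\,\mathrm{d}B_t
 \quad\Longrightarrow\quad
 L_t = t^{-k}\Bigl(L_1 + \int_1^t s^{k-1}\,\mathrm{d}B_s\Bigr),\\[2pt]
&\operatorname{Var}\Bigl(\int_1^t s^{k-1}\,\mathrm{d}B_s\Bigr)
 = \int_1^t s^{2k-2}\,\mathrm{d}s = \frac{t^{2k-1}-1}{2k-1}
 \qquad \bigl(k\neq\tfrac12\bigr).
\end{aligned}
```

For $k<1/2$ the variance stays bounded, so the stochastic integral converges a.s.; on the event that the bracket remains negative, $L_t$ tends to 0 at rate $t^{-k}$. For $k>1/2$ the variance diverges, the bracket changes sign a.s., and the path is carried across the origin to the side where the drift pushes it away.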

In Section 4 we move on to the higher-order monomials, i.e. $f(x)=|x|^k$. Here we show that the process will behave as the ‘mean flow’ process h(t) infinitely often; this is accomplished by studying the process $L_t/h(t)$. In Subsection 4.2, the main theorem is that when $\frac{1}{2}+\frac{1}{2k}\geq\gamma$, $L_t\rightarrow \infty$ a.s. In Subsection 4.3, we show that when $\frac{1}{2}+\frac{1}{2k}<\gamma$, the process may converge to 0 with positive probability.
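
The two regimes described for the monomial case can be tabulated with a small helper. This is a minimal sketch: the names `critical_gamma` and `regime` are hypothetical, and the classification simply encodes the threshold $\frac{1}{2}+\frac{1}{2k}$ as stated for $f(x)=|x|^k$ with $k>1$. Exact rationals are used so that the boundary case is not blurred by floating-point rounding.

```python
from fractions import Fraction


def critical_gamma(k):
    """Threshold 1/2 + 1/(2k) separating the two regimes for f(x) = |x|**k, k > 1."""
    k = Fraction(k)
    if k <= 1:
        raise ValueError("the monomial case requires k > 1")
    return Fraction(1, 2) + 1 / (2 * k)


def regime(k, gamma):
    """Classify gamma in (1/2, 1] against the threshold for exponent k."""
    gamma = Fraction(gamma)
    if not Fraction(1, 2) < gamma <= 1:
        raise ValueError("gamma must lie in (1/2, 1]")
    if gamma <= critical_gamma(k):
        return "no convergence to 0 (a.s.)"
    return "convergence to 0 with positive probability"


for k in (2, 3, 5):
    print(k, critical_gamma(k), regime(k, Fraction(7, 10)))
```

For instance, `critical_gamma(3)` is `Fraction(2, 3)`, so with $k=3$ a step-size exponent of $3/5$ falls in the non-convergence regime while $9/10$ does not.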

Qualitatively, the previous constraints on the parameters are in accordance with our intuition. To be more specific, when k increases, f becomes steeper, which should indicate it is easier for the process to escape. When $\gamma$ decreases the remaining variance increases; hence we should expect that the process visits the unstable trajectory with greater ease, due to higher fluctuations.

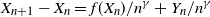

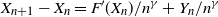

1.2. Results for the discrete model

The asymptotic behavior of the discrete processes is the expected one, depending on the parameters of the problem. Here, we study processes satisfying

or

where $Y_n$ are a.s. bounded (i.e. there is a constant M such that $|Y_n|<M$ a.s.), $\mathbb{E}(Y_{n+1}|\mathcal{F}_n)=0$, and $\mathbb{E}(Y_{n+1}^2|\mathcal{F}_n )\geq l>0$. The main non-convergence theorem is the following.

Theorem 1.4. Suppose that $\mathcal{N}$ is a neighborhood of zero. Let $(X_n)_{n\geq 1}$ solve (5). If $|x|^k \leq f(x)$, $\frac{1}{2}+\frac{1}{2k} > \gamma$, and $k>1$ for all $x\in \mathcal{N}$, then $\mathbb{P}(X_n\rightarrow 0)=0$.

For the convergence result the nondegeneracy condition $\mathbb{E}(Y_{n+1}^2|\mathcal{F}_n)\geq l$ is replaced with the assumption stated in Part 1 of Theorem 1.5.

Theorem 1.5. Let $\mathcal{N}=(-3\epsilon,3\epsilon)$ be a neighborhood of zero. Suppose $(X_n)_{n\geq 1}$ solve (6). Assume the following:

1. There exist $-\epsilon_2>-3\epsilon$ and $-\epsilon_1<-\epsilon$ such that for all $M>0$, there exists $n>M$ such that $\mathbb{P}(X_n \in (-\epsilon_2,-\epsilon_1 ) ) >0$.

2. $0<f(x)\leq |x|^k$, $\frac{1}{2}+\frac{1}{2k}< \gamma$, and $k>1$ for all $x\in \mathcal{N}$.

Then $\mathbb{P}(X_n\rightarrow 0 )>0$.
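The parameter conditions of Theorems 1.4 and 1.5 can be summarized in a small helper. The following is only a sketch: the function names and the returned labels are hypothetical, and it checks just the relation between k and $\gamma$, not the remaining hypotheses (the bounds on f, the form of the recursion, or Part 1 of Theorem 1.5).

```python
def critical_exponent(k: float) -> float:
    """Return the threshold 1/2 + 1/(2k) that gamma is compared against."""
    if k <= 1:
        raise ValueError("Theorems 1.4 and 1.5 assume k > 1")
    return 0.5 + 1.0 / (2.0 * k)


def regime(k: float, gamma: float) -> str:
    """Classify (k, gamma) by the parameter conditions of Theorems 1.4 and 1.5.

    The labels are illustrative names, not terminology from the text.
    """
    threshold = critical_exponent(k)
    if gamma < threshold:
        return "non-convergence"        # Theorem 1.4: P(X_n -> 0) = 0
    if gamma > threshold:
        return "convergence possible"   # Theorem 1.5: P(X_n -> 0) > 0
    return "boundary"                   # gamma equals the threshold: not covered
```

For instance, `regime(3, 0.75)` falls in the regime of Theorem 1.5, since $\frac{1}{2}+\frac{1}{6}<\frac{3}{4}$.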

The assumption imposed on $X_n$, Part 1 of Theorem 1.5, says that the process should be able to visit a neighborhood of the origin for large enough n. If this constraint is not imposed on the process, the previous result need not hold. For instance, the drift could dominate the noise, and consequently the process might, with probability 1, never reach a neighborhood of the origin. There are processes that naturally satisfy this property; an example is the urn process defined in Section 1 (see [Pem91]).

Example 1.1. Suppose that $X_n$ satisfies

where the $U_n$ are independent and identically distributed random variables, uniformly distributed on $(-2,2)$. As the $U_n$ dominate the driving term, assumption 1 is satisfied. And since $\frac{1}{2} +\frac{1}{2\cdot 3}<\frac{3}{4}$, we expect that $X_n\to 0$ holds with positive probability. In Figure 1 we can see a typical example where convergence of the iterates occurs.

Figure 1. $(X_n)_{n\geq 10}$ and $X_{10}=-1$.
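A path like the one in Figure 1 can be generated with a few lines of code. The sketch below rests on an assumption: the recursion is taken to be $X_{n+1} = X_n + n^{-\gamma}(|X_n|^k + U_{n+1})$ with $k=3$ and $\gamma = 3/4$, which matches the parameters quoted in the example but is not confirmed by the text. The function name, the seed, and the `escape` cutoff are hypothetical.

```python
import random


def simulate_example(n_start=10, n_steps=100_000, x_start=-1.0,
                     k=3, gamma=0.75, seed=0, escape=10.0):
    """Iterate the ASSUMED recursion
        X_{n+1} = X_n + (|X_n|**k + U_{n+1}) / n**gamma,
    with U_n i.i.d. uniform on (-2, 2), starting from X_{n_start} = x_start.

    Iteration stops early once |X_n| exceeds `escape`, because the drift
    then dominates and the iterates would overflow.
    """
    rng = random.Random(seed)
    x = x_start
    path = [x]
    for n in range(n_start, n_start + n_steps):
        step = n ** (-gamma)
        x = x + step * (abs(x) ** k + rng.uniform(-2.0, 2.0))
        path.append(x)
        if abs(x) > escape:
            break
    return path
```

Running `simulate_example` for several seeds and plotting each path against n gives a rough picture of how often the iterates stay near zero and how often they escape; a single run says nothing about the probabilities involved.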

2. Preliminary results

We will now prove two important lemmas that will be needed throughout. Let $f\,:\,\mathbb{R}\rightarrow \mathbb{R}$ be Lipschitz such that for every $\epsilon>0$ there exists c such that $f(x)>c>0$ for all $x \in \mathbb{R}\setminus (-\epsilon,\epsilon)$. Also, let $g\,:\,\mathbb{R}_{\geq 0}\rightarrow \mathbb{R}$ be a continuous function such that $\int_{0}^{\infty} g^2(t) \text{d}t <\infty$. Let $X_t$ satisfy

\begin{equation*} {\rm d}X_t = f(X_t)\,{\rm d}t + g(t)\,{\rm d}B_t. \end{equation*}

Lemma 2.1. $\limsup_{t \rightarrow \infty} X_t \geq 0$ a.s.

Proof. We will argue by contradiction. Assume that $\limsup_{t \rightarrow \infty} X_t < 0$ with positive probability, and pick $\delta >0$ such that $\limsup_{t \rightarrow \infty} X_t< -\delta$ with positive probability. On this event there is a time u such that $X_t \leq -\delta$ for all $t\geq u$. But this has as an immediate consequence that $\int_{1}^{t} f(X_s) {\rm d}s \rightarrow \infty$. However, since the process $G_t= \int_{1}^{t} g(s) {\rm d}B_s$ has finite quadratic variation, i.e. $\sup_t \langle G_t \rangle\leq\int_{0}^{\infty} g^2(s) \text{d}s <\infty$, $G_t$ stays a.s. finite. The last two observations imply that $X_t \rightarrow \infty$, which is a contradiction.

Lemma 2.2. $\liminf_{t \rightarrow \infty} X_t \geq 0$ a.s.

Proof. We will again argue by contradiction. Assume that $\liminf_{t \rightarrow \infty} X_t < 0$ on a set of positive probability. Take an enumeration of the pairs of positive rationals $(q_n,p_n)$ such that $q_n>p_n$. Now, define $A_{n}= \{ X_t \leq -q_n \text{\ i.o.}, X_t\geq -p_n \text{\ i.o.} \}$. Since $\limsup_{t \rightarrow \infty} X_t \geq 0$, we have $\bigcup_{n\geq 0} A_{n} = \{ \liminf_{t \rightarrow \infty} X_t < 0 \}$. Now, for $t_1<t_2$ assume that $X_{t_1} \geq -p_n$ and $X_{t_2} \leq -q_n$. Then we see that $X_{t_2} -X_{t_1} \leq -q_n + p_n$; however,

\begin{align*} X_{t_2} - X_{t_1} &= \int_{t_1}^{t_2} f(X_s) {\rm d} s + \int_{t_1}^{t_2} g(s){\rm d}B_s \\[4pt]
&\geq \int_{t_1}^{t_2} g(s) {\rm d}B_s . \end{align*}

Hence we conclude that $\int_{t_1}^{t_2} g(s){\rm d}B_s \leq -q_n + p_n$. By the definition of $A_n$, on the event $A_n$ we can find a sequence of times $(t_{2k}, t_{2k+1})$ tending to infinity such that $t_{2k}< t_{2k+1}$ and $\int_{t_{2k}}^{t_{2k+1}} g(s) {\rm d}B_s \leq -q_n + p_n$. Now, if we define $G_{u,t} = \int_u ^t g(s) {\rm d}B_s$, we see that $G_{1,t}$ converges a.s. since it is a martingale of bounded quadratic variation. Its increments $G_{t_{2k},t_{2k+1}}$ therefore tend to zero, which is incompatible with the bound $-q_n+p_n<0$ holding for every k. Hence $\mathbb{P} (A_n) =0$, i.e. $\mathbb{P} (\liminf_{t \rightarrow \infty} X_t < 0 )=0$.

The next comparison result is intuitively obvious; however, it will be useful for comparing processes with different drifts.

Proposition 2.1. Let $(C_t)_{t\geq 0}$ and $(D_t)_{t\geq 0}$ be stochastic processes in the same Wiener space that satisfy

respectively, where $g,f_1,f_2$ are deterministic real-valued functions. Assume that $f_1(x) > f_2( x)$ for all $x \in \mathbb{R}$, and $C_{s_0}> D_{s_0}$. Then $C_{t} > D_{t}$ for every $t \geq s_0$ a.s.

Proof. Define $\tau =\inf\{ t> s_0 | C_t= D_t \}$, and set $D_\tau=C_\tau=c$, for $\tau<\infty$. Now, from continuity of $f_1$ and $f_2$, we can find $\delta$ such that $f_1(x) >f_2(x)$ for every $x \in ( c-\delta , c ]$. However, for all s we have

Thus, for s such that $C_y, D_y \in (c-\delta,c)$ for every $y \in ( s,\tau )$, we have

\begin{align*} 0&> -( C_s - D_s ) \\[4pt]
&= \int_ s ^ \tau \big( f_1(C_u) - f_2 ( D_u) \big) {\rm d} u \\[4pt]
&>0. \end{align*}

Therefore $\{\tau<\infty\}$ has zero probability.

In what follows, we will prove two important lemmas, corresponding to Lemma 2.1 and Lemma 2.2, for the discrete case. We will assume that $X_n$ satisfies

where f has the property that for every $\epsilon>0$ there exists $c>0$ such that $f(x)\geq c$ for every $x \in (-\infty,-\epsilon)$, and the $Y_n$ are defined similarly as in (5).

Lemma 2.3. $\limsup X_n \geq 0$ a.s.

Proof. The proof is nearly identical to that of the continuous case (Lemma 2.1).

Lemma 2.4. $\liminf_{n \rightarrow \infty} X_n \geq 0$ a.s.

Proof. The proof is identical to that of the continuous case (Lemma 2.2).

We provide a suitable version of the Borel–Cantelli lemma (for a reference see Theorem 5.3.2 in [Dur13]).

Lemma 2.5. Let $\mathcal{F}_n$, $n \geq 0$, be a filtration with $\mathcal{F}_0 = \{ \emptyset, \Omega \}$, and let $A_n$, $n\geq 1$, be a sequence of events with $A_n\in \mathcal{F}_n$. Then

\begin{equation*}\{A_n \mathrm{\,i.o.} \} = \left \{ \sum_{n\geq 1} \mathbb{P}(A_n | \mathcal{F}_{n-1} ) = \infty \right \}.\end{equation*}

3. Continuous model, simplest case

3.1. Introduction

Let $L_t$ be defined by (4), for $f(x)=k|x|$ and $\gamma=1$. To simplify, we make a time change and consider $X_t := L_{e^t}$, and subsequently we obtain

\begin{align*}X_{t+{\rm d}t} - X_t &= L_{e^t+e^t {\rm d}t} -L_{e^t}\\&= k|L_{e^t}| {\rm d}t + e^{-t} (B_{e^t + e^t {\rm d}t} - B_{e^t}) \\&= k|X_t| {\rm d}t + e^{-\frac{t}{2}} {\rm d}B_t,\end{align*}

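which is the model we will study. We begin with some definitions: first,

\begin{equation} {\rm d}X_t = k|X_t|\,{\rm d}t + e^{-\frac{t}{2}}\,{\rm d}B_t. \tag{9}\end{equation}

We introduce another SDE closely related to the previous one, which will be useful:

\begin{equation} {\rm d}K_t = kK_t\,{\rm d}t + e^{-\frac{t}{2}}\,{\rm d}B_t. \tag{10}\end{equation}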

It is easy to see that both of these SDEs admit unique strong solutions; for a reference see Theorem 11.2 in Chapter 6 in [RWW87]. Therefore we can construct $X_t,K_t$ in the classical Wiener space $(\Omega, \mathcal{F}, \mathbb{P})$. The solution for the SDE (10) is given by $K_t = e^{ k t} (e^{ -t_0k } K_{t_0} + \int_{t_0}^t e^{-s (k +\frac{1}{2} ) } {\rm d} B_s )$. Indeed, substituting in (10) and using Itô’s formula, we get

\begin{align*} {\rm d} K_t &= a'(t) \Big(K_{t_0} + \int_{t_0}^{t} b(s) {\rm d} B_s \Big) {\rm d}t + a(t)b(t){\rm d} B_t \\&= \frac{a'(t)}{a(t)} K_t \,{\rm d}t+ a(t)b(t) {\rm d}B_t ,\end{align*}

where $a(t)= e^{ k (t-t_0)}$ and $b(t) = e^{-t(\frac{1}{2}+k) +kt_0 }$, so that $\frac{a'(t)}{a(t)} = k$ and $a(t)b(t)= e^{-\frac{t}{2}}$.
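The closed-form expression for $K_t$ can be checked numerically. The sketch below drives an Euler scheme for the linear SDE ${\rm d}K_t = kK_t\,{\rm d}t + e^{-t/2}\,{\rm d}B_t$ obtained in the computation above, and a discretized version of the closed form, with the same simulated Brownian increments. The function name, step size, and default parameters are illustrative choices, and agreement is only expected up to discretization error.

```python
import math
import random


def compare_closed_form(k=1.0, t0=0.0, t_end=2.0, k0=1.0, dt=1e-3, seed=0):
    """Return (euler, closed): two approximations of K at time t_end.

    euler  : Euler scheme for dK = k K dt + e^{-t/2} dB, started at K_{t0} = k0.
    closed : e^{k t} (e^{-k t0} k0 + sum of e^{-s(k + 1/2)} dB), i.e. the
             closed form with the stochastic integral replaced by a left-point sum.
    Both use the same simulated Brownian increments.
    """
    rng = random.Random(seed)
    n_steps = int(round((t_end - t0) / dt))
    euler = k0
    stoch_integral = 0.0
    t = t0
    for _ in range(n_steps):
        dB = rng.gauss(0.0, math.sqrt(dt))
        euler += k * euler * dt + math.exp(-t / 2.0) * dB
        stoch_integral += math.exp(-t * (k + 0.5)) * dB
        t += dt
    closed = math.exp(k * t) * (math.exp(-k * t0) * k0 + stoch_integral)
    return euler, closed
```

Decreasing `dt` should bring the two returned values closer together, which is a quick sanity check on the formula rather than a proof.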

Proposition 3.1. Let $(X_t)_{t \geq t_0},\,(K_t)_{t \geq t_0}$ in the Wiener probability space $(\Omega,\mathcal{F},\mathbb{P})$ be the solutions of (9), (10) respectively, started at time $t_0$ with $X_{t_0} \geq K_{t_0} \geq 0$. Then $X_t \geq K_t$ for every $t\geq t_0$.

Proof. This is a direct application of Proposition 2.1.

3.2. Analysis of $X_t$ when $k>1/2$

We start by stating the main result of this section, which we will prove at the end of the subsection.

Theorem 3.1. Let $(X_t)_{t\geq 1}$ be the solution of (9) for $k>\frac{1}{2}$; then $X_t \rightarrow \infty$ a.s.

Now we will show that $(X_t)_{t\geq 1}$ cannot stay negative for all times. This will be accomplished by a direct computation after solving the SDE.

Proposition 3.2. Let $(X_t)_{t\geq 1}$ be the solution of (9) for $k>\frac{1}{2}$. Assume that at time s, $X_{s} < 0$. Then $X_t$ will reach 0 with probability 1, i.e. $\mathbb{P}( \sup_{u\geq s} X_u > 0 )=1$.

Proof. First, note that the solution of the SDE (9), run from time s with initial condition $X_{s}<0$, coincides with the solution of the SDE ${\rm d}X_t = -k X_t {\rm d}t + e^{-\frac{t}{2}}{\rm d} B_t$ before $X_t$ hits 0. Formally, we define $\tau_0 = \inf \{ t| t \geq s,\,X_t=0 \}$. Using the same method as when solving SDE (10), we obtain $X_t =e^{-kt} (e^{ks} X_{s} + \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u )$ on $\{t <\tau_0 \}$. Set $G_t= \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u$, and calculate the quadratic variation of $G_t$, namely $\left \langle G_t \right \rangle = ( e^{2t(k-\frac{1}{2}) } -e^{2s(k-\frac{1}{2}) } )/(2k-1)$. Next, we compute the probability of never returning to zero:

\begin{align*}\mathbb{P}(\tau_0 =\infty) & = \mathbb{P}\left(\sup_{s < u < \infty} X_u \leq 0 \right)\\[6pt]
& = \mathbb{P}\left(\sup_{s < u < \infty} G_u \leq-e^{ks} X_{s} \right)\\[6pt]
&= 1- \mathbb{P}\left(\sup_{s < u < \infty} G_u > -e^{ks} X_{s} \right) \\[6pt]
&=1- \lim_{t\rightarrow \infty}\mathbb{P}\left(\sup_{s < u < t} G_u > -e^{ks} X_{s} \right) \\[6pt]
&= 1- \lim_{t\rightarrow \infty}2 \mathbb{P}\left( G_t > -e^{ks} X_{s}\right), \text{ by the reflection principle} \\[6pt]
&= 1- \lim_{t\rightarrow \infty}2 \mathbb{P}\left(N\left( 0, \frac{e^{2t(k-\frac{1}{2})}-e^{2s(k-\frac{1}{2})}}{2k -1} \right) > -e^{ks} X_{s} \right) \\[4pt]
&=0,\end{align*}

since

We will now prove two important lemmas that are true for solutions of (9) for any $k>0$.

Lemma 3.1. Let $(X_t)_{t\geq 1}$ be the solution of (9). Then on the event $\{X_t \geq 0 \text{\ i.o.} \}$, there is a positive constant $c<1$ such that $\{ X_t \geq c e^{-t/2} \text{\ i.o.} \}$ holds a.s.

Proof. Assume we start the SDE at time $t_i$ with initial condition $X_{t_i}\geq 0$. Then we see that

Set $G_t =\int_{t_i}^t e^{-\frac{u}{2}} {\rm d}B_u$. The quadratic variation of $G_t$ is $\langle G_t\rangle = e^{-t_i} - e^{-t}$. Fix $0<c<1$. Now, observe that we can always choose t big enough so that $\langle G_t \rangle \geq c e^{-t_i}$ for any $t_i$.

Then

\begin{align*}\mathbb{P} \Big( \sup _{t_i<u<t }X_u >e^{-t_i/2 } \Big) &\geq \mathbb{P} \Big( \sup _{t_i<u<t }G_u >e^{-t_i/2 } \Big) \\[4pt]
&= 2\mathbb{P} \big( G_t > e^{-t_i/2 } \big) \\[4pt]
& \geq 2\mathbb{P} \big( N(0,c e^{-t_i}) > e^{-t_i/2 } \big) \\[4pt]
& = 2\mathbb{P} \big( N(0, c) > 1 \big) > \gamma > 0.\end{align*}

Let $g(x) = \inf \{ y| e^{-x} - e^{-y} \geq c e^{-x} \}$. Now we can formally define the sequence of the stopping times. The first stopping time is $\tau_1 = \inf \{t |X_{t} \geq 0 \}$; then we define recursively $\tau_{i+1} = \inf \{ t | t> \tau_i, t> g(\tau_i), \, X_t \geq 0 \}$. We also define the associated filtration $\mathcal{F}_n = \mathcal{F}_{ \tau_n}$, for $n\geq 1$ and $\mathcal{F}_0 =\{ \emptyset , \Omega \}$. Now let $A_n =\{ \exists t,\, \tau_{n-1} <t< \tau_{n} \, , \text{ s.t.\ } X_t \geq c e^{- t/2} \}$. By definition $A_n \in \mathcal{F}_n$. We find a lower bound for $\mathbb{P} (A_n | \mathcal{F}_{n-1} )$:

\begin{align*}\mathbb{P} (A_n | \mathcal{F}_{n-1}) & \geq \mathbb{P} \Big( \sup_{\tau_{n-1} < u < \tau_n} X_u > ce^{-\tau_{n-1} /2} | \mathcal{F}_{n-1} \Big) \\
&\geq \mathbb{P} \Big( \sup_{\tau_{n-1} < u < g(\tau_{ n-1})} X_u > ce^{-\tau_{n-1} /2} | \mathcal{F}_{n-1}\Big) \\
& > \gamma .\end{align*}

On $\{X_t\geq 0 \text{\ i.o.}\}$ the sum $\sum_{n\geq 1} \mathbb{P}(A_n | \mathcal{F}_{n-1} )$ has infinitely many terms bigger than $\gamma$; hence $\sum_{n\geq 1} \mathbb{P}(A_n | \mathcal{F}_{n-1} ) = \infty$ a.s. Finally, by Lemma 2.5 (Borel–Cantelli) we conclude.
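Two quantities in the proof above have simple closed forms, which the following sketch evaluates. Solving $e^{-x}-e^{-y}\geq ce^{-x}$ for y gives $g(x) = x - \log(1-c)$, and the Gaussian bound $2\mathbb{P}(N(0,c)>1)$ equals $\mathrm{erfc}(1/\sqrt{2c})$. The function names are hypothetical.

```python
import math


def waiting_time(x: float, c: float) -> float:
    """g(x) = inf{y : e^{-x} - e^{-y} >= c e^{-x}} = x - log(1 - c), for 0 < c < 1."""
    if not 0.0 < c < 1.0:
        raise ValueError("c must lie in (0, 1)")
    return x - math.log(1.0 - c)


def excursion_probability(c: float) -> float:
    """2 * P(N(0, c) > 1), written with the complementary error function."""
    if not 0.0 < c < 1.0:
        raise ValueError("c must lie in (0, 1)")
    return math.erfc(1.0 / math.sqrt(2.0 * c))
```

Note that `waiting_time(x, c) - x` does not depend on x, so the stopping times in the proof are separated by at least a fixed amount, and `excursion_probability(c)` is strictly positive for every admissible c.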

The next lemma uses the previous lemma to establish that on $\{X_t \geq 0 \text{\ i.o.} \}$ we have $\liminf_{t \rightarrow \infty} X_t >0$.

Lemma 3.2. Let $(X_t)_{t\geq 1}$ be the solution of (9). Then on the event $\{X_t \geq 0 \text{\ i.o.} \}$ we have that $\{\liminf_{t \rightarrow \infty} X_t >0 \}$ holds a.s.

Proof. Indeed, if we start the process at time s with initial condition $X_{s} \geq ce^{- \frac{s}{2}}$, then the solution of (9), before hitting 0, is given by

Define $G_t=\int_{s}^{t} e^{-u(k+\frac{1}{2}) } {\rm d}B_u$. We calculate its quadratic variation:

Taking $t \rightarrow \infty$ shows $\langle G_{\infty }\rangle= \dfrac{ e^{-2sk-s} }{2k+1 }$. Therefore,

\begin{align}\mathbb{P} \left(\inf_{s \leq u <\infty } X_u >\frac{c}{2}e^{ -\frac{s}{2} } \right)&=\mathbb{P} \left(\inf_{s \leq u <\infty } e^{ku}\left(ce^{-s\left(k +\frac{1}{2}\right)} + G_u \right) > \frac{c}{2}e^{ -\frac{s}{2} } \right)\nonumber\\[4pt]
&\geq \mathbb{P} \left(\inf_{s \leq u <\infty } e^{ks}\left(ce^{-s\left(k +\frac{1}{2}\right)} + G_u \right) > \frac{c}{2}e^{ -\frac{s}{2} } \right)\nonumber \\[4pt]
&=\mathbb{P} \left(\inf_{s \leq u <\infty } ce^{-s\left(k +\frac{1}{2}\right)} + G_u > \frac{c}{2}e^{ -s\left(k +\frac{1}{2}\right) } \right)\nonumber \\[4pt]
&=\mathbb{P} \left(\inf_{s \leq u <\infty } G_u > -\frac{c}{2}e^{ -s\left(k +\frac{1}{2} \right)} \right)\\[4pt]
&= 1- \mathbb{P} \left(\sup_{s \leq u <\infty } G_u >\frac{c}{2}e^{ - s\left(k +\frac{1}{2} \right) } \right)\nonumber \\[4pt]
&= 1-2\lim_{t\rightarrow \infty} \mathbb{P} \left(G_t>\frac{c}{2}e^{ -s\left(k +\frac{1}{2}\right) } \right),\text{ by the reflection principle} \nonumber\\[4pt]
&=1-2\mathbb{P} \left(N\left(0, \dfrac{ e^{-s(2k+1)} }{2k+1 }\right) > \frac{c}{2}e^{ -s(k +\frac{1}{2} ) } \right)\nonumber \\[4pt]
&=1-2\mathbb{P}\left(N\left(0,\frac{1}{2k+1}\right) > \frac{c}{2} \right)>\delta>0. \nonumber\end{align}
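The key point of the bound above is that it does not depend on the starting time s. The sketch below evaluates $1-2\mathbb{P}(N(0,v_s)>a_s)$ directly from the unscaled quantities $v_s = e^{-s(2k+1)}/(2k+1)$ and $a_s = \frac{c}{2}e^{-s(k+\frac{1}{2})}$, so the cancellation of the factor $e^{-s(k+\frac{1}{2})}$ can be observed numerically. The function name is hypothetical.

```python
import math


def stay_positive_bound(s: float, k: float, c: float) -> float:
    """Evaluate 1 - 2 * P(N(0, v) > a) with
        v = e^{-s(2k+1)} / (2k+1)   (the limiting quadratic variation),
        a = (c/2) e^{-s(k+1/2)}     (the threshold in the display above).
    """
    variance = math.exp(-s * (2.0 * k + 1.0)) / (2.0 * k + 1.0)
    threshold = 0.5 * c * math.exp(-s * (k + 0.5))
    # P(N(0, v) > a) = erfc(a / sqrt(2 v)) / 2
    tail = 0.5 * math.erfc(threshold / math.sqrt(2.0 * variance))
    return 1.0 - 2.0 * tail
```

Evaluating `stay_positive_bound` at several values of s with k and c fixed returns the same number up to rounding, namely $\mathrm{erf}\big(\tfrac{c}{2}\sqrt{(2k+1)/2}\big)$, which is the s-independent constant the proof relies on.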

We know that on $\{X_t\geq 0 \text{\ i.o.}\}$ the event $\{X_t\geq c e^{-\frac{t}{2}} \text{\ i.o.} \}$ holds a.s. Therefore, on $\{X_t\geq 0 \text{\ i.o.}\}$, if we define $\tau_0=0$ and $\tau_{n+1} = \inf\{t>\tau_n+1| X_t \geq c e^{-\frac{t}{2}} \}$, we see that $\tau_n<\infty$ a.s., and $\tau_n \rightarrow \infty$ a.s. Also, we define the corresponding filtration, namely $\mathcal{F}_n = \sigma( \tau_n)$.

To show that on the event $\{X_t\geq c e^{-\frac{t}{2}} \text{\ i.o.}\}$ the event $A=\{\liminf_{t\rightarrow \infty} X_t\leq 0\}$ has probability zero, it suffices to argue that there is a $\delta$ such that $\mathbb{P}( A|\mathcal{F}_n )<1-\delta$ a.s. for all $n\geq 1$. This is immediate from the previous calculation. Indeed,

\begin{align*}\mathbb{P}( A|\mathcal{F}_n ) & \leq 1-\mathbb{P} \left(\inf_{ \tau_{n}\leq u <\infty } X_u >\frac{c}{2}e^{ -\frac{\tau_n}{2} } |\mathcal{F}_n \right)\\&<1-\delta.\end{align*}

Now we can prove Theorem 3.1.

Proof of Theorem 3.1. From Proposition 3.2 we know that $\{X_t\geq 0 \text{\ i.o.} \}$ has probability 1. Therefore from Lemma 3.2 we deduce $\liminf_{t \rightarrow \infty} X_t >0$ a.s. Consequently, $\int_{0}^t |X_u| { \rm d} u \rightarrow \infty$ a.s. as $t\rightarrow\infty$. At the same time $\limsup_{t \rightarrow \infty} \int_{0}^{t} e^{-\frac{u}{2}} {\rm d } B_u < \infty$ a.s.; hence $X_t\rightarrow \infty$ a.s.
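To get a feel for the dichotomy between the regimes $k>1/2$ and $k<1/2$, one can simulate the SDE ${\rm d}X_t = k|X_t|\,{\rm d}t + e^{-t/2}\,{\rm d}B_t$ with an Euler–Maruyama scheme. The following is a minimal sketch, not part of the argument: the function name, step size, and the extra `noise` switch (added only so that the drift can be inspected in isolation) are all illustrative assumptions.

```python
import math
import random


def euler_maruyama(k, x0, t0=1.0, t_end=10.0, dt=1e-3, seed=0, noise=1.0):
    """Euler-Maruyama path of dX = k|X| dt + noise * e^{-t/2} dB on [t0, t_end].

    noise=1.0 corresponds to the SDE in the text; noise=0.0 switches the
    martingale term off and leaves the pure drift dX = k|X| dt.
    Returns the list of simulated values, including the starting point.
    """
    rng = random.Random(seed)
    n_steps = int(round((t_end - t0) / dt))
    t, x = t0, x0
    path = [x]
    for _ in range(n_steps):
        dB = rng.gauss(0.0, math.sqrt(dt))
        x += k * abs(x) * dt + noise * math.exp(-t / 2.0) * dB
        t += dt
        path.append(x)
    return path
```

Comparing many seeded runs with, say, `k=1.0` and `k=0.25` from the same negative starting value gives a qualitative picture of how often paths cross zero and then grow; such experiments only illustrate the theorems and cannot replace them.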

3.3. Analysis of $X_t$ when $k<1/2$

As before, $(X_t)_{t\geq 1}$ is the solution of the stochastic differential equation ${\rm d}X_t= k |X_t|{\rm d}t +e^{-\frac{t}{2}} {\rm d}B_t$.

The behavior of $X_t$ when $k<1/2$ is different. The process in this regime can converge to 0 with positive probability. More specifically, we have the following theorem.

Theorem 3.2. Let $(X_t)_{t \geq 1}$ solve (9) with $k<\frac{1}{2}$, and define $A= \{X_t\rightarrow 0 \}$, $B=\{X_t\rightarrow \infty \}$. Then the following hold:

1. Let A, B be as above. Then $\mathbb{P}( A\cup B)=1$.

2. Both A and B are nontrivial, i.e., $\mathbb{P}(A) >0$ and $\mathbb{P}(B) >0$.

3. On $\{X_t\geq 0 \text{\ i.o.} \}$ we get $X_t\rightarrow \infty$.

Before proving the theorem, we first need to prove a proposition. We will show that the process, starting from a negative value, has positive probability of never reaching 0.

Proposition 3.3. Let $(X_t)_{t \geq 1}$ solve (9) with $k<\frac{1}{2}$. Assume that at time s, $X_{s} < 0$. Then $(X_t)_{t \geq 1}$ will hit 0 with probability $\alpha$, where $0<\alpha<1$.

Proof. Define the stopping time ![]() $\tau_1 =\inf\{ t\geq s| X_t =0 \}$. As in Proposition 3.2, the solution for

$\tau_1 =\inf\{ t\geq s| X_t =0 \}$. As in Proposition 3.2, the solution for ![]() $X_t$ started at time s up to time

$X_t$ started at time s up to time ![]() $\tau_1$ is given by

$\tau_1$ is given by ![]() $X_t =e^{-kt} (e^{ks} X_{s} + \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u ) $. We have

$X_t =e^{-kt} (e^{ks} X_{s} + \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u ) $. We have

\begin{align*}\mathbb{P}( \tau_1 =\infty )&= \mathbb{P}\Big( \sup_{ s <u <\infty } X_u \leq 0 \Big)\\
&= 1-\lim_{t\rightarrow \infty }2 \mathbb{P}\Bigg( N\Bigg( 0, \frac{ e^{2t(k-\frac{1}{2}) } -e^{2s(k-\frac{1}{2}) } }{2k -1 } \Bigg) >-e^{ks} X_{s}\Bigg),\text{ as in Proposition 3.2} \\
&= 1- 2 \mathbb{P}\big( N\big( 0, -e^{2s(k-\frac{1}{2}) } /(2k-1) \big) >-e^{ks} X_{s}\big)\\
&=1-\alpha.\end{align*}

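Since $X_s<0$, the threshold $-e^{ks}X_s$ is strictly positive, and the limiting variance $-e^{2s(k-\frac{1}{2})}/(2k-1)$ is finite and positive because $k<\frac{1}{2}$; hence the Gaussian tail probability lies strictly between 0 and $\frac{1}{2}$. Therefore $0<\alpha<1$.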
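The closed form for $\alpha$ obtained in the proof above is easy to evaluate numerically. The following Python sketch does so, assuming the limiting variance $e^{2s(k-\frac{1}{2})}/(1-2k)$ from the last display and using the identity $2\,\mathbb{P}(N(0,v)>a)=\operatorname{erfc}(a/\sqrt{2v})$ for $a>0$. The function name `hitting_probability` and the sample arguments are illustrative choices, not part of the original text.

```python
import math


def hitting_probability(x_s: float, s: float, k: float) -> float:
    """Evaluate alpha = 2 * P(N(0, v) > a) for a process started at x_s < 0 at time s.

    Here a = -exp(k*s) * x_s is the (positive) threshold and
    v = exp(s*(2k - 1)) / (1 - 2k) is the limiting variance of the
    stochastic integral; both are taken from the proof of Proposition 3.3.
    """
    if x_s >= 0.0:
        raise ValueError("the starting value x_s must be negative")
    if k >= 0.5:
        raise ValueError("the formula requires k < 1/2")

    threshold = -math.exp(k * s) * x_s
    variance = math.exp(s * (2.0 * k - 1.0)) / (1.0 - 2.0 * k)

    # 2 * P(N(0, v) > a) equals erfc(a / sqrt(2 v)) for a > 0.
    return math.erfc(threshold / math.sqrt(2.0 * variance))


if __name__ == "__main__":
    # Illustrative values only: starting points closer to 0 are more likely to hit 0.
    for x_s in (-0.1, -0.5, -2.0):
        print(x_s, hitting_probability(x_s, s=1.0, k=0.25))
```

For moderate inputs the returned value lies strictly between 0 and 1, in line with the proposition; for extreme inputs floating-point rounding can return exactly 0.0 or 1.0.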

Proof of Theorem 3.2.

1. Define the events $N=\{ \exists s \text{ s.t. } X_t <0 \ \forall t\geq s \}$ and $P=\{X_t \geq 0 \text{ i.o.} \}$. Of course N and P are disjoint and $\mathbb{P}(P \cup N )=1$. To prove Part 1, we will show that $N\subset \{ X_t \rightarrow 0 \}$ up to a null set and $P= \{ X_t \rightarrow \infty \} $. From Lemma 2.2 we know that $\liminf_{t\rightarrow \infty} X_{t}\geq 0$ a.s.; therefore $N\subset \{X_t\rightarrow 0 \}$ up to a null set.

To show that $P= \{ X_t \rightarrow \infty \} $, note that Lemma 3.2 shows that on $\{ X_t \geq 0 \text{ i.o.} \} $, $\liminf_{t \rightarrow \infty }X_t >0$ a.s. Consequently, on $\{X_t \geq 0 \text{ i.o.}\}$ we have $X_t\to \infty $, as $ \int_{0}^{t} |X_u| { \rm d} u \rightarrow \infty$ as $t\to\infty$ and $ \limsup_{t \rightarrow \infty} \int_{0}^{t} e^{-\frac{u}{2}} {\rm d } B_u < \infty$ a.s. Therefore, $P= \{ X_t \rightarrow \infty \} $, which concludes Part 1.

2. The fact that $\mathbb{P}(A)>0$ follows immediately from Proposition 3.3. Now, we will prove that $\mathbb{P}(B)>0$. Define the stopping time $\tau_0=\inf\{t \mid X_t=0 \}$. Also, define $Y(t,\omega)=1$ if $X_s\geq 0$ for all $s\geq t+1$. Observe that $\{ Y_{\tau_0}=1, \tau_0 < \infty \} \subset P $. Hence, using the strong Markov property,

\begin{align*} \mathbb{P}(Y_{\tau_0}=1, \tau_0 < \infty ) &= \int_{0}^{\infty} \mathbb{ P} ( \tau_0=u ) \mathbb{P}_0 ( X_t\geq 0, \forall t\geq 1 ){\rm d} u\\
&\geq \int_{0}^{\infty} \mathbb{ P} ( \tau_0=u ) \mathbb{P}_0 ( K_t\geq 0, \forall t\geq 1 ){\rm d} u \quad \text{since } X_t\geq K_t\\
&=\alpha \mathbb{P}_0 ( K_t\geq 0, \forall t\geq 1 ) \\
&>0. \end{align*}

Since $\{ Y_{\tau_0}=1, \tau_0 < \infty \} \subset P$ and $P=B$ by Part 1, this gives $\mathbb{P}(B)>0$.

3. This follows immediately from the proof of Part 1.

Lastly, we prove a proposition that will be used in Section 5.

Proposition 3.4. Suppose $(X_t)_{t\geq 1}$, $(Y_t)_{t \geq 1}$ solve (9), with constants k and $k_1$ respectively. Suppose that $0<k_1<k<1/2$. Let $\epsilon >0$. If $X_s,Y_s \in(-2\epsilon,-\epsilon)$ a.s., then there is an event A with positive probability on which $X_t,Y_t\in (-3\epsilon,0)$ for every $t>s$.

Proof. Solving the SDE before it hits zero, we find $X_t =e^{-kt} (e^{ks} X_{s} + \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u ) $ and $Y_t =e^{-k_1t} (e^{k_1s} Y_{s} + \int_{s}^{t} e^{u(k_1-\frac{1}{2}) } {\rm d}B_u ) $. Since the process $G_{t}=\int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u $ has bounded quadratic variation, $\langle G \rangle_\infty=\int_{s}^{\infty} e^{u(2k-1)} {\rm d}u<\infty$, the event $A=\{G_{t}\in(-\epsilon,\epsilon) \ \forall t>s \}$ has positive probability. Set $\tilde{G}_{t}=\int_{s}^{t} e^{u(k_1-\frac{1}{2}) } {\rm d}B_u $, and define $N_t= G_te^{t(k_1-k)}$. Using Itô’s formula, we find $\text{d}N_t=e^{t(k-\frac{1}{2})} e^{(k_1-k) t} \text{d}B_t+ (k_1-k)e^{(k_1-k) t}G_t\text{d}t$. Therefore,

So

To bound $|\tilde{G}_t|$ observe that

\begin{align*}-\int_{s}^t (k_1-k)e^{(k_1-k) u} G_u\text{d}u &\leq -\epsilon \int_{s}^t (k_1-k)e^{(k_1-k) u} \text{d}u\\[4pt]
&=-\epsilon \left(e^{(k_1-k) t}- e^{(k_1-k) s}\right).\end{align*}

Similarly we obtain $-\int_{s}^t (k_1-k)e^{(k_1-k) u} G_u\text{d}u \geq \epsilon( e^{(k_1-k) t}- e^{(k_1-k) s})$. Thus on A, we obtain the following inequalities:

Simplifying, we obtain $ | \tilde{G}_t | \leq \epsilon e^{(k_1-k) s}\leq \epsilon$. Now we will estimate $X_t$ on A. Using that $\epsilon<|e^{ks}X_s| $ we obtain the upper bound

\begin{align*}X_t &= e^{-kt} \left(e^{ks} X_{s} + \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u \right ) \\[4pt]
&\leq e^{-kt} (e^{ks} X_{s} +\epsilon )\\[4pt]
&<0\end{align*}

and the lower bound

\begin{align*}X_t &= e^{-kt} \left(e^{ks} X_{s} + \int_{s}^{t} e^{u(k-\frac{1}{2}) } {\rm d}B_u \right) \\[4pt]
&\geq e^{-kt} (-2e^{ks} \epsilon -\epsilon )\\[4pt]
&\geq -3\epsilon.\end{align*}

Arguing similarly for $Y_t$, with $\tilde{G}_t$ and $k_1$ in place of $G_t$ and $k$, we conclude.
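The bound on $\tilde{G}_t$ used in the proof above can be traced step by step from the definitions of $N_t$ and $\tilde{G}_t$. The following is a sketch of that computation, assuming only $G_s=\tilde{G}_s=0$, $k_1<k$ and $s>0$; it is worked out from the surrounding definitions and is not a quotation of the original displays.

\begin{align*}{\rm d}N_t &= e^{t(k_1-\frac{1}{2})} {\rm d}B_t + (k_1-k)e^{(k_1-k) t}G_t {\rm d}t = {\rm d}\tilde{G}_t + (k_1-k)e^{(k_1-k) t}G_t {\rm d}t,\\[4pt]
\tilde{G}_t &= G_t e^{(k_1-k) t} - \int_{s}^t (k_1-k)e^{(k_1-k) u} G_u {\rm d}u,\\[4pt]
|\tilde{G}_t| &\leq \epsilon e^{(k_1-k) t} + \epsilon \left(e^{(k_1-k) s}- e^{(k_1-k) t}\right) = \epsilon e^{(k_1-k) s} \leq \epsilon \quad \text{on } A.\end{align*}

The second line integrates the first from $s$ to $t$ and uses $N_s=0$; the third combines $|G_t|<\epsilon$ on $A$ with the two-sided bound on the integral term.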

4. Analysis of ${\rm d}L_t = \frac{|L_t|^{k}}{t^{\gamma}} {\rm d}t + \frac{1}{t^{\gamma}} {\rm d}B_t$

4.1. Introduction

As in the previous section, to simplify matters, we will work with a reparametrization of $L_t$. Set $\theta (t) = t^{ \frac{1}{1-\gamma} }$, and let $ X_t= L_{ \theta (t)}$. To obtain the SDE that $X_t$ obeys, notice that $ {\rm d} B_{ \theta (t) } = \sqrt{\theta ' (t)} {\rm d} B_t $. Therefore

\begin{align*}{ \rm d }X_t &= \frac{|X_t|^k}{\theta(t)^{\gamma}} \theta'(t){ \rm d}t + \frac{1}{\theta(t)^\gamma} \sqrt{\theta ' (t)}{\rm d} B_t \\
&= c_1|X_t|^k { \rm d}t + t^{-\frac{\gamma}{1-\gamma}} \sqrt{\theta ' (t)}{\rm d} B_t \\
&=c_1|X_t|^k { \rm d}t + c_2t^{-\frac{\gamma}{2(1-\gamma)}} {\rm d} B_t,\end{align*}

where $c_2^2=c_1= 1/(1-\gamma)$. By abuse of notation we write $X_t$ for $X_t/c_2$, which satisfies an SDE of the form

where $k>1$, $\gamma\in(1/2,1)$, and $c\in(0,\infty)$. By a time scaling, we may assume that $X_t$ solves

where $k>1$ and $\gamma\in(1/2,1)$. Notice that the noise is scaled differently. However, it will be evident that only the order of the noise is relevant. The SDE (13) will be the primary focus of the next subsection, and the results will apply to solutions of (12) as well.
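The coefficient simplifications in the time change $\theta(t)=t^{\frac{1}{1-\gamma}}$ above can be checked numerically. The Python sketch below evaluates $\theta'(t)/\theta(t)^{\gamma}$ and $\sqrt{\theta'(t)}/\theta(t)^{\gamma}$ directly, so that they can be compared with $c_1$ and $c_2 t^{-\frac{\gamma}{2(1-\gamma)}}$, where $c_2^2=c_1=1/(1-\gamma)$. The function name `time_change_coefficients` and the sample values of $t$ and $\gamma$ are illustrative assumptions, not part of the original text.

```python
import math


def time_change_coefficients(t: float, gamma: float) -> tuple[float, float]:
    """Return (drift factor, noise factor) produced by theta(t) = t**(1/(1-gamma)).

    drift factor = theta'(t) / theta(t)**gamma
    noise factor = sqrt(theta'(t)) / theta(t)**gamma
    """
    if not 0.5 < gamma < 1.0:
        raise ValueError("gamma must lie in (1/2, 1)")
    if t <= 0.0:
        raise ValueError("t must be positive")

    exponent = 1.0 / (1.0 - gamma)
    theta = t ** exponent
    theta_prime = exponent * t ** (exponent - 1.0)

    drift = theta_prime / theta ** gamma
    noise = math.sqrt(theta_prime) / theta ** gamma
    return drift, noise


if __name__ == "__main__":
    for gamma in (0.6, 0.75, 0.9):
        c1 = 1.0 / (1.0 - gamma)
        c2 = math.sqrt(c1)
        for t in (1.0, 2.0, 10.0):
            drift, noise = time_change_coefficients(t, gamma)
            predicted_noise = c2 * t ** (-gamma / (2.0 * (1.0 - gamma)))
            # Each printed pair should agree up to floating-point rounding.
            print(gamma, t, (drift, c1), (noise, predicted_noise))
```

The drift factor does not depend on $t$, while the noise factor decays like $t^{-\frac{\gamma}{2(1-\gamma)}}$, which is the order of the noise referred to above.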

We define another process that will be fundamental for our analysis, namely $Z_t=-\frac{X_t}{h(t)}$, where $h(t)= -t^{\frac{1}{1-k}} $. Next, we find the SDE that $Z_t$ satisfies.

Proposition 4.1. Suppose that $(X_t)_{t\geq 1}$ solves (12), and set $C(c)=\frac{1}{c(k-1)}$, $h(t)= -t^{\frac{1}{1-k}} $. Then the process $Z_t= -\frac{X_t}{h(t)}$ satisfies

Also, before $X_t$ hits zero we get a solution purely in terms of $Z_t$:

Proof. Recall that since h(t) is a deterministic, continuously differentiable function (hence of finite variation), the covariation $ \langle h(t) , Z_t \rangle$ is 0. Using Itô’s formula we obtain

Thus,

\begin{align*}Z_t - Z_s &= \int_{ s }^{t} -\frac{1}{h(u)} c|X_u| ^ k { \rm d } u + \int_{ s }^{t} -\frac{1}{h(u)}u^{-\frac{\gamma}{2(1-\gamma)}} { \rm d }B_u + \int_{ s }^{t} X_u\frac{h'(u)}{ h(u) ^2} {\rm d }u \\[8pt]

&= \int_{ s }^{t} X_u\frac{h'(u)}{ h(u) ^2} -\frac{1}{h(u)} c|X_u| ^ k { \rm d } u + \int_{ s }^{t} -\frac{1}{h(u)}u^{-\frac{\gamma}{2(1-\gamma)}} { \rm d }B_u \\[8pt]

&=\int_{ s }^{t}c\dfrac{X_u}{h(u) } \left(\frac{h'(u)}{c h(u) } - \frac{|X_u| ^k }{X_u}\right) { \rm d } u + \int_{ s }^{t} -\frac{1}{h(u)}u^{-\frac{\gamma}{2(1-\gamma)}} { \rm d }B_u \\[8pt]