1. Introduction

Random evolutions with finite velocity have drawn the attention of many scientists in the last decades. Indeed, they provide a good alternative to diffusion processes, which often appear unsuitable for describing natural phenomena in life sciences. For instance, the integrated telegraph random process, which is one of the basic models for the description of finite-velocity random motions, can be regarded as a finite-velocity counterpart of the one-dimensional Brownian motion, thanks to the relationship between the corresponding probability density functions. This explains the growing interest in this topic and also the numerous papers published in the literature in recent years. Indeed, starting from the seminal papers [Reference Goldstein23] and [Reference Kac28], many scientists have devoted their research to this theme by proposing generalizations of the integrated telegraph random process (see e.g. [Reference López and Ratanov34, Reference Bshouty, Di Crescenzo, Martinucci and Zacks7, Reference Crimaldi, Di Crescenzo, Iuliano and Martinucci10, Reference Di Crescenzo, Iuliano, Martinucci and Zacks13, Reference Di Crescenzo, Martinucci, Paraggio and Zacks16, Reference Orsingher37, Reference Garra and Orsingher21]) and also applications in many fields (see e.g. [Reference Weiss52, Reference Di Crescenzo and Pellerey17, Reference Ratanov48, Reference Travaglino, Di Crescenzo, Martinucci and Scarpa51]). An in-depth analysis of the one-dimensional telegraph process can be found in the books of Kolesnik and Ratanov [Reference Kolesnik and Ratanov33] and Zacks [Reference Zacks54]. The present paper is part of the aforementioned research and can be regarded as a further step in the path of investigation of random motions.

The standard integrated telegraph process $X_t$, $t>0$, describes the position of a particle traveling at constant speed on the real line. The direction of the motion is reversed according to the arrival epochs of a homogeneous Poisson process. This results in exponentially distributed random times between consecutive reversals of direction of motion.
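The mechanism just described can be sketched in a few lines of Python. This is only an illustrative simulation: the function name `simulate_telegraph`, the starting point $X_0=0$, and the uniformly random initial direction are assumptions made here, not taken from the text.

```python
import random

def simulate_telegraph(t, c=1.0, lam=1.0, rng=None):
    """Return X_t for one path of the standard telegraph process.

    Assumptions (illustrative): X_0 = 0 and the initial direction is
    chosen uniformly at random from {+1, -1}.
    """
    rng = rng or random.Random()
    direction = rng.choice((1, -1))
    position, clock = 0.0, 0.0
    while True:
        # Exponential waiting time until the next Poisson arrival.
        wait = rng.expovariate(lam)
        if clock + wait >= t:
            # No further reversal before t: finish the current segment.
            return position + direction * c * (t - clock)
        position += direction * c * wait
        clock += wait
        direction = -direction  # reverse the motion at the Poisson epoch

rng = random.Random(0)
samples = [simulate_telegraph(2.0, c=1.5, lam=3.0, rng=rng) for _ in range(1000)]
```

Every sampled value lies in $[-ct, ct]$, which reflects the finite velocity of the motion.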

The process $X_t$ is also called a piecewise linear Markov process or Markovian fluid [Reference Asmussen2, Reference Ramaswami46, Reference Rogers49]. Piecewise linear processes were first studied in [Reference Gnedenko and Kovalenko22] and represent a subclass of the family of piecewise deterministic processes [Reference Davis11]. A piecewise deterministic process $\Xi$ is defined as $\Xi(t)=\phi_{\epsilon(\tau_n)}(t),\,\tau_n\leq t<\tau_{n+1}$, where (1) $\epsilon = (\epsilon(t))_{t\geq0}$ is an arbitrary measurable and adapted process with values in a finite space $\{1,\ldots, N\}$, (2) $\phi_1,\;\ldots,\;\phi_N$ are N deterministic flows, and (3) $\{\tau_n\}_{n\geq1}$ is the sequence of switching times of $\epsilon$. Given a fixed starting point, a piecewise deterministic Markov process evolves according to the flow $\phi_i$ for an exponentially distributed random time; then a switch occurs, and the evolution is governed by another flow $\phi_j$, $j\neq i$, for another exponential time; then it switches again. The standard integrated telegraph process $X_t$ represents the simplest example of a piecewise linear process based on a two-state Markov process $\epsilon(t)\in\{0, 1\}$. Its sample paths are composed of straight lines whose slopes alternate between two values. The process $X_t$ alone is not Markovian, whereas if we supply $X_t$ with a second stochastic process keeping track of the driving flow, we obtain a two-dimensional Markov process.

Piecewise linear processes whose inter-switching times are not exponentially distributed are much less studied. Some examples can be found in [Reference Di Crescenzo and Ratanov18] and [Reference Ratanov47]. Generally, for the integrated telegraph process, there have been many papers in the literature considering a more general setting, but most of them are Markovian. Unfortunately, the assumption of exponentially distributed intertimes is not suitable for many important applications in physics, biology, and engineering, since it gives higher probability to very short intervals. Hence, special attention should be reserved for non-Markovian cases. For instance, in [Reference Di Crescenzo12], the author analyses the case when the random times separating consecutive velocity reversals of the particle have Erlang distribution with possibly unequal parameters. This hypothesis, if we recall that the sum of independent and identically distributed exponential random variables has Erlang distribution, can be interpreted as stating that the particle undergoes a fixed number of collisions arriving according to a Poisson process before reversing its motion direction. Some connections of the model with queueing, reliability theory, and mathematical finance are also presented in the same paper. The study of a one-dimensional random motion with Erlang distribution for the sojourn times is also performed in [Reference Pogorui and Rodríguez-Dagnino40], where the authors apply the methodology of random evolutions to find the partial differential equations governing the particle motion and obtain a factorization of these equations. Moreover, motivated by applications in mathematical biology concerning randomly alternating motion of micro-organisms, in [Reference Di Crescenzo and Martinucci14] the authors consider gamma-distributed random intertimes and obtain the probability law of the process, expressed in terms of series of incomplete gamma functions. Similarly, the case of exponentially distributed random intertimes with linearly increasing parameters has been treated in [Reference Di Crescenzo and Martinucci15], thus modeling a damping behavior sometimes appearing in particle systems. We recall also the papers [Reference Pogorui and Rodríguez-Dagnino41] and [Reference Pogorui and Rodríguez-Dagnino42], where isotropic random motions in higher dimensions with, respectively, Erlang- and gamma-distributed steps are studied.

In the present paper, along the lines of [Reference Di Crescenzo and Zacks19] and [Reference Zacks54], we provide a general expression for the probability law of $X_t$ in terms of the distributions of the n-fold convolutions of the random times between motion reversals. The key idea is to relate the probability law of $X_t$ to that of the occupation time, which gives the fraction of time that the motion moved with positive velocity in [0, t]. Starting from this result, Section 3 is devoted to the explicit derivations of the probability law of the integrated telegraph process in some special cases, when the upward U and downward D random intertimes are distributed as follows: (i) both are gamma-distributed; (ii) both are Erlang-distributed; (iii) U is exponentially distributed and D gamma-distributed; (iv) U is exponentially distributed and D Erlang-distributed. In Cases (i) and (ii), for some values of the parameters involved, we obtain closed-form results for the probability law of the process, which, to the best of our knowledge, is a new result in the field of finite-time random evolutions. This shows the great advantage of this novel expression for the probability law compared to previously existing results: it has good mathematical tractability. Hence, Section 3 offers a collection of explicit expressions for the probability law of the generalized telegraph process, which could be useful for all scholars interested in non-Markovian generalizations of the telegraph process.

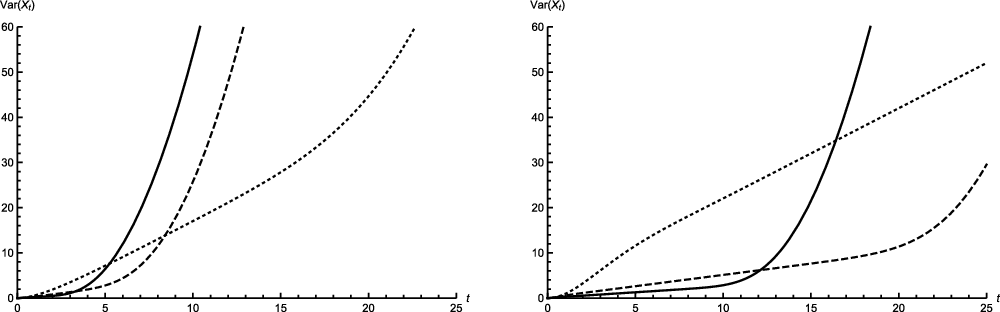

In Section 4 we obtain the Laplace transform of the moment generating function $M_{X}^s(t)$ of the generalized telegraph process. This result allows us to do the following:

- (a) Prove the existence of a Kac-type condition for the integrated telegraph process with identically distributed gamma intertimes. Under such a condition, the probability density function of $X_t$ converges to the probability density function of the standard Brownian motion, thus generalizing the well-known result for the standard integrated telegraph process.

- (b) Provide an explicit expression for $M_{X}^s(t)$ in the case of gamma- and Erlang-distributed random times between consecutive velocity reversals.

As further validation, we also derive the moment generating function for exponentially distributed intertimes, recovering a well-known result. The first and second moments of $X_t$ under the assumption of gamma-distributed intertimes are also given.

Finally, Section 5 is devoted to the study of certain functionals of the generalized telegraph process. In particular, we provide an explicit expression for the distribution function of the square of $X_t$, under the assumption of exponential distribution for the upward intertimes U and gamma distribution for the downward intertimes D. We recall that the square of the standard integrated telegraph process has been studied in [Reference Martinucci and Meoli35], where its relationship with the square of the Brownian motion is also stressed. See also [Reference Kolesnik and Ratanov33, Chapter 7] and [Reference Kolesnik32] for other relevant functionals of the telegraph processes.

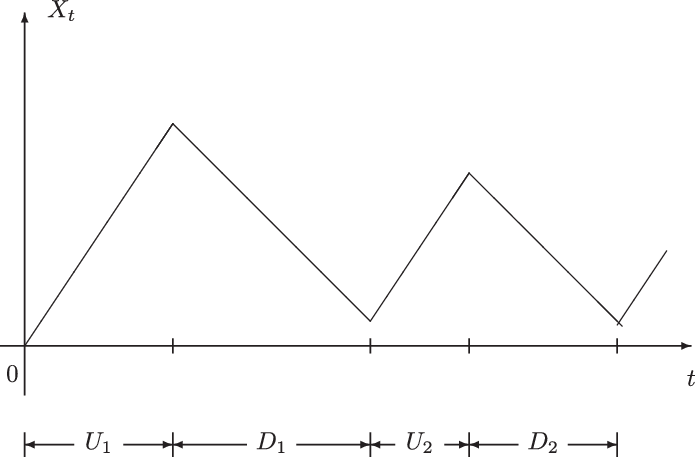

2. The generalized telegraph process

Let

![]() $\big\{X_t;\,t\geq 0\big\}$

be a generalized integrated telegraph process. Such a process describes the position of a particle moving on the real axis with velocity c or

$\big\{X_t;\,t\geq 0\big\}$

be a generalized integrated telegraph process. Such a process describes the position of a particle moving on the real axis with velocity c or

![]() $-c(c>0)$

, according to an independent alternating counting process

$-c(c>0)$

, according to an independent alternating counting process

![]() $\big\{N_t;\, t\geq 0\big\}$

. The latter is governed by sequences of positive independent random times

$\big\{N_t;\, t\geq 0\big\}$

. The latter is governed by sequences of positive independent random times

![]() $\big\{U_{1},U_{2},\cdots\big\}$

and

$\big\{U_{1},U_{2},\cdots\big\}$

and

![]() $\left\{D_{1},D_{2},\cdots\right\} $

, which in turn are assumed to be mutually independent. The random variable

$\left\{D_{1},D_{2},\cdots\right\} $

, which in turn are assumed to be mutually independent. The random variable

![]() $U_{i}$

(resp.

$U_{i}$

(resp.

![]() $D_i$

),

$D_i$

),

![]() $i=1,2,\ldots$

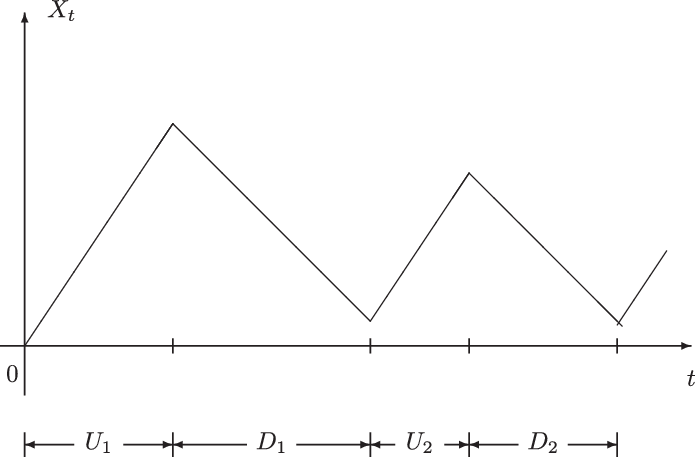

, describes the ith random period during which the motion proceeds with positive (resp. negative) velocity. A sample path of

$i=1,2,\ldots$

, describes the ith random period during which the motion proceeds with positive (resp. negative) velocity. A sample path of

![]() $X_t$

with initial velocity

$X_t$

with initial velocity

![]() $V_0=c$

is shown in Figure 1.

$V_0=c$

is shown in Figure 1.

Let us denote by $V_t$ the particle velocity at time $t\geq 0$. Assuming that $X_0=0$, and $V_0\in\{-c,c\}$, with $V_0$ independent of $N_t$, we have

where

\begin{equation*}N_t=\sum_{n=1}^{+\infty}{\bf 1}_{\{T_n\leq t\}},\qquad N_0=0,\end{equation*}

with

\begin{equation*} T_{2n}=U^{(n)}+D^{(n)}, \qquad T_{2n+1}=T_{2n}+ \begin{cases} U_{n+1} & \hbox{if}\quad V_0=c,\\[5pt] D_{n+1} & \hbox{if}\quad V_0=-c, \end{cases} \qquad n=0,1,\ldots,\end{equation*}

and $U^{(0)}=D^{(0)}=0$, $U^{(n)}\,:\!=\,U_{1}+\dots+U_{n}$, $D^{(n)}\,:\!=\,D_{1}+\dots+D_{n}$.

Figure 1. A sample path of $X_t$.

Hence, if the motion does not change velocity in [0, t], we have $X_t=V_0\,t$. Otherwise, if there is at least one velocity change in [0, t], then $-c t<X_t<c t$ with probability 1.
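As an illustration of how the switching times $T_n$ determine the path, the following minimal sketch evaluates the position at time $t$ for given realizations of the upward and downward periods. The function name `position_and_occupation` and its argument layout are hypothetical; it also returns the time spent moving upward, a helper quantity introduced here for convenience.

```python
from itertools import chain

def position_and_occupation(t, c, v0_positive, ups, downs):
    """Return (X_t, time spent with velocity +c in [0, t]).

    ups and downs hold realizations U_1, U_2, ... and D_1, D_2, ...;
    v0_positive is True when V_0 = c and False when V_0 = -c.
    """
    # Periods alternate U_1, D_1, U_2, ... if V_0 = c, else D_1, U_1, D_2, ...
    first, second = (ups, downs) if v0_positive else (downs, ups)
    periods = chain.from_iterable(zip(first, second))
    moving_up = v0_positive
    clock, time_up = 0.0, 0.0
    for period in periods:
        step = min(period, t - clock)  # truncate the last period at time t
        if moving_up:
            time_up += step
        clock += step
        if clock >= t:
            break
        moving_up = not moving_up  # velocity reversal at a switching time
    else:
        raise ValueError("not enough periods to reach time t")
    # Upward travel minus downward travel.
    x = c * time_up - c * (t - time_up)
    return x, time_up
```

For example, with $c=2$, $V_0=c$ and all periods equal to 1, the call `position_and_occupation(1.5, 2.0, True, [1, 1], [1, 1])` returns `(1.0, 1.0)`: the particle climbs to 2 during the first period and then descends for half a time unit.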

Hereafter, according to the assumptions of the standard symmetric telegraph process [Reference Kac28], we assume that the initial velocity is random, i.e.

\begin{equation*}\mathbb{P}(V_0=c)=\mathbb{P}(V_0=-c)=\frac{1}{2}.\end{equation*}

Let $F_{U_{i}}({\cdot})$ and $G_{D_{i}}({\cdot})$ be the absolutely continuous distribution functions of $U_i$ and $D_{i}$ $(i=1,2,{\dots})$ respectively, with densities $f_{U_{i}}({\cdot})$ and $g_{D_{i}}({\cdot})$, and denote by $\overline{F}_{U_{i}}({\cdot})$ and $\overline{G}_{D_{i}}({\cdot})$ their complementary distribution functions. In the sequel, we shall denote the distribution functions of $U^{(n)}$ and $D^{(n)}$ by $F_{U}^{(n)}({\cdot})$ and $G_{D}^{(n)}({\cdot})$, and their densities by $f_{U}^{(n)}({\cdot})$ and $g_{D}^{(n)}({\cdot})$, respectively. If the random variables $U_{i}$ are independent and identically distributed for $i=1,2,\dots, n$, then $F_{U}^{(n)}({\cdot})$ is the n-fold convolution of $F_{U_{1}}({\cdot})$, and similarly for $G_{D}^{(n)}({\cdot})$. Moreover, we set $F_{U}^{\left(0\right)}\left(x\right)=G_{D}^{\left(0\right)}\left(x\right)=1$ for $x\geq 0$.

In order to provide the probability law of $X_t$, we refer to the general method proposed by Zacks in [Reference Zacks53]. The key idea is to relate the probability law of $X_t$ to that of the occupation time

which gives the fraction of time that the motion moved with positive velocity in [0, t]. Indeed, for all $t\geq 0$, $X_t$ and $W_t$ are linked by the following relationship:

Hence, denoting by

the absolutely continuous component of the probability law of $W_t$ over (0, t), we can express the probability law of $X_t$ in terms of that of $W_t$, according to the following proposition.
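A short worked step may help to see how the two laws are tied together. It is a sketch under the assumption that $W_t$ is measured as the total time in $[0,t]$ spent with velocity $c$ (so that $0\leq W_t\leq t$, as the interval $(0,t)$ above suggests); the symbol $w$ is introduced here only for illustration. The particle moves upward for a time $W_t$ and downward for a time $t-W_t$, so that

\begin{align*}
X_t &= c\,W_t-c\,(t-W_t)=c\,(2W_t-t), \qquad W_t=\frac{ct+X_t}{2c},\\
\frac{\partial}{\partial x}\,\mathbb{P}(X_t\leq x) &= \frac{1}{2c}\,\frac{\partial}{\partial w}\,\mathbb{P}(W_t\leq w)\,\Big|_{w=\frac{ct+x}{2c}}, \qquad -ct<x<ct.
\end{align*}

This change of variable accounts for the factor $1/(2c)$ and for the argument $(ct+x)/(2c)$ that appear in the densities of Theorem 2.2.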

Proposition 2.1. For all $t>0$ we have

and

The distribution of $W_t$ was derived by Perry et al. (see [Reference Perry, Stadje and Zacks38], [Reference Perry, Stadje and Zacks39]) in terms of the distribution of the first time at which a compound process crosses a linear boundary. Proceeding along the lines of Lemmas 5.1 and 5.2 of [Reference Zacks54], in the following theorem we provide the probability law of $W_t$.

Theorem 2.1. For all $t>0$ it holds that

and, for $0<x<t$,

where

\begin{align*}\psi_c(x,t;\,c) & \,:\!=\,\frac{\partial}{\partial x} P(W_t\leq x, V_t=c\,|\,V_0=c)=\sum_{n=1}^{+\infty}\left[F_{U}^{(n)}(x)-F_{U}^{(n+1)}(x)\right]g_{D}^{(n)}(t-x),\\\psi_{-c}(x,t;\,c) & \,:\!=\,\frac{\partial}{\partial x} P(W_t\leq x, V_t=c\,|\,V_0=-c)=\sum_{n=0}^{+\infty}\left[F_{U}^{(n)}(x)-F_{U}^{(n+1)}(x)\right]g_{D}^{(n+1)}(t-x),\\\psi_c(x,t;\,-c) & \,:\!=\,\frac{\partial}{\partial x} P(W_t\leq x, V_t=-c\,|\,V_0=c)=\sum_{n=0}^{+\infty}\left[G_{D}^{(n)}(t-x)-G_{D}^{(n+1)}(t-x)\right]f_{U}^{(n+1)}(x),\\\psi_{-c}(x,t;\,-c) & \,:\!=\,\frac{\partial}{\partial x} P(W_t\leq x, V_t=-c\,|\,V_0=-c)=\sum_{n=1}^{+\infty}\left[G_{D}^{(n)}(t-x)-G_{D}^{(n+1)}(t-x)\right]f_{U}^{(n)}(x).\end{align*}

Using Theorem 2.1 and Proposition 2.1, we finally obtain the expression for the probability law of $X_t$.

Theorem 2.2. For all $t>0$ it holds that

and, for $-c t<x<c t$,

where

\begin{align}f_{c}\!\left(x,t\right)\,:\!=\,& \frac{\partial }{\partial x}\mathbb{P}\big(X_t\leq x\,|\,V_0=c\big)=\frac{1}{2c}\left\{\sum_{n=0}^{+\infty}\left[G_{D}^{\left(n\right)}\!\left(t-\frac{ct+x}{2c}\right)-G_{D}^{\left(n+1\right)}\!\left(t-\frac{ct+x}{2c}\right)\right]\right.\nonumber\\& \left.\times\, f_{U}^{\left(n+1\right)}\!\left(\frac{ct+x}{2c}\right) +\sum_{n=1}^{+\infty}\left[F_{U}^{\left(n\right)}\!\left(\frac{ct+x}{2c}\right)-F_{U}^{\left(n+1\right)}\!\left(\frac{ct+x}{2c}\right)\right]g_{D}^{\left(n\right)}\!\left(t-\frac{ct+x}{2c}\right)\right\}\!,\end{align}

and

\begin{align}f_{-c}\!\left(x,t\right)\,:\!=\, & \frac{\partial }{\partial x}\mathbb{P}\big(X_t\leq x\,|\,V_0=-c\big)=\frac{1}{2c}\left\{\sum_{n=0}^{+\infty}\left[F_{U}^{\left(n\right)}\!\left(\frac{ct+x}{2c}\right)-F_{U}^{\left(n+1\right)}\!\left(\frac{ct+x}{2c}\right)\right]\right.\nonumber\\[2pt]& \times\, g_{D}^{\left(n+1\right)}\!\left(t-\frac{ct+x}{2c}\right)+\sum_{n=1}^{+\infty}\left[G_{D}^{\left(n\right)}\!\left(t-\frac{ct+x}{2c}\right)\right.\nonumber\\[2pt]& \left.\left.- G_{D}^{\left(n+1\right)}\!\left(t-\frac{ct+x}{2c}\right)\right]f_{U}^{\left(n\right)}\!\left(\frac{ct+x}{2c}\right)\right\}.\end{align}
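To make the series for $f_c$ concrete, the sketch below evaluates a truncated version of it in the simplest setting, chosen here purely for illustration: $U_i$ exponential with rate `lam` and $D_i$ exponential with rate `mu`, so that the n-fold convolutions are Erlang laws with standard closed forms. All function names are hypothetical. As a sanity check, the absolutely continuous part of the law given $V_0=c$ should carry mass $1-\overline{F}_{U_1}(t)=1-e^{-\lambda t}$, the probability of at least one velocity change in [0, t].

```python
import math

def erlang_cdf(n, rate, x):
    """CDF of the sum of n i.i.d. Exp(rate) variables; equals 1 for n = 0, x >= 0."""
    if n == 0:
        return 1.0 if x >= 0 else 0.0
    if x <= 0:
        return 0.0
    term, partial = 1.0, 0.0
    for k in range(n):
        partial += term              # accumulates (rate*x)^k / k!
        term *= rate * x / (k + 1)
    return 1.0 - math.exp(-rate * x) * partial

def erlang_pdf(n, rate, x):
    """Density of the sum of n >= 1 i.i.d. Exp(rate) variables."""
    if x <= 0:
        return 0.0
    return math.exp(n * math.log(rate) + (n - 1) * math.log(x)
                    - rate * x - math.lgamma(n))

def f_c(x, t, c, lam, mu, terms=40):
    """Truncated series for f_c(x, t) with U_i ~ Exp(lam), D_i ~ Exp(mu)."""
    w = (c * t + x) / (2 * c)   # argument (ct + x) / (2c) of the theorem
    r = t - w                   # remaining time t - (ct + x) / (2c)
    total = 0.0
    for n in range(terms):      # first sum, n = 0, 1, ...
        total += (erlang_cdf(n, mu, r) - erlang_cdf(n + 1, mu, r)) * erlang_pdf(n + 1, lam, w)
    for n in range(1, terms):   # second sum, n = 1, 2, ...
        total += (erlang_cdf(n, lam, w) - erlang_cdf(n + 1, lam, w)) * erlang_pdf(n, mu, r)
    return total / (2 * c)

def continuous_mass(t, c, lam, mu, points=400):
    """Midpoint-rule integral of f_c over (-ct, ct)."""
    h = 2 * c * t / points
    return h * sum(f_c(-c * t + (i + 0.5) * h, t, c, lam, mu) for i in range(points))
```

The truncation level `terms` and the number of quadrature points are arbitrary choices; for moderate values of $\lambda t$ and $\mu t$ the neglected terms are negligible.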

An alternative approach to deriving the probability law of the generalized integrated telegraph process is based on solving the hyperbolic system of partial differential equations related to the probability density of $(X_t,V_t)$. For instance, in [Reference Di Crescenzo and Martinucci15], for $t>0$, $-c t<x<c t$, $j=1,2$, and $n=1,2,\ldots$, the authors define the conditional densities of $(X_t,V_t)$, joint with $\big\{T_{2n-j}\leq t< T_{2n-j+1}\big\}$, and provide the related system of partial differential equations. Unfortunately, solving such a system is so hard a task that the authors are forced to follow a different approach.

3. Special cases of the probability law of $\boldsymbol{{X}}_{\boldsymbol{{t}}}$

In this section we make use of Theorem 2.2 to obtain an explicit expression for the probability law of the motion under suitable choices of

![]() $F_{U_{i}}\!\left(\cdot\right)$

and

$F_{U_{i}}\!\left(\cdot\right)$

and

![]() $G_{D_{i}}\!\left(\cdot\right)$

,

$G_{D_{i}}\!\left(\cdot\right)$

,

![]() $i=1,2,\ldots$

. First of all, in Theorem 3.1 we assume that the random intertimes

$i=1,2,\ldots$

. First of all, in Theorem 3.1 we assume that the random intertimes

![]() $U_{i}$

and

$U_{i}$

and

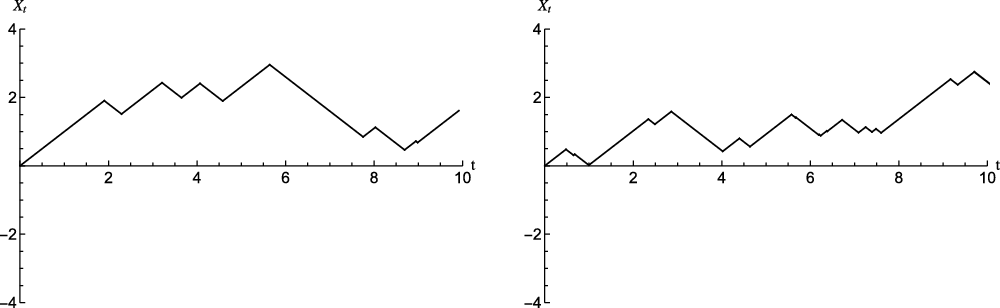

![]() $D_{i}$

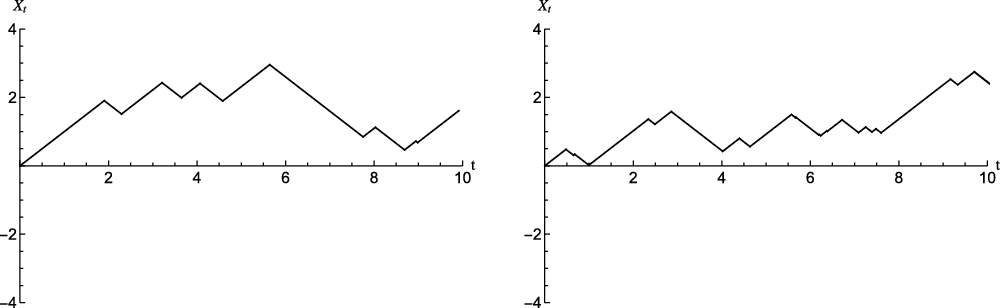

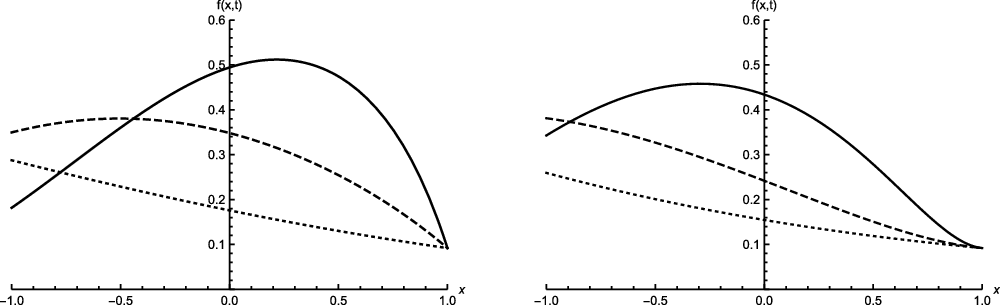

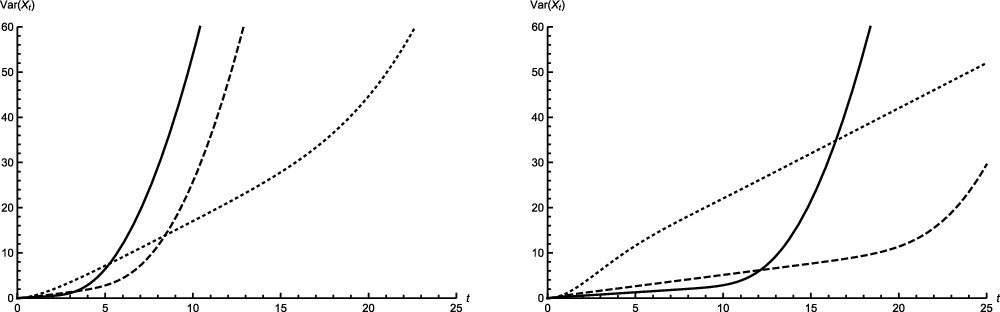

are identically gamma-distributed. Figure 2 provides some simulated sample paths of the related integrated telegraph process for two different values of the coefficient of variation. We recall that the joint probability law of

$D_{i}$

are identically gamma-distributed. Figure 2 provides some simulated sample paths of the related integrated telegraph process for two different values of the coefficient of variation. We recall that the joint probability law of

![]() $\{(X_t, V_t), t\geq 0\}$

, under the assumption of gamma-distributed intertimes, has been expressed in [Reference Di Crescenzo and Martinucci14] in terms of a series of the incomplete gamma function. In the next theorem, we provide an expression for the probability law of

$\{(X_t, V_t), t\geq 0\}$

, under the assumption of gamma-distributed intertimes, has been expressed in [Reference Di Crescenzo and Martinucci14] in terms of a series of the incomplete gamma function. In the next theorem, we provide an expression for the probability law of

![]() $X_t$

in terms of the generalized Wright function. The latter is a very simple and mathematically tractable expression, and, as shown in Propositions (3.2) and (3.3), it allows us to obtain closed-form results for the probability law of the generalized integrated telegraph process in the case of fixed values of the parameters involved. These results appear to be of great interest owing to the absence of similar outcomes in the literature for the one-dimensional case.

$X_t$

in terms of the generalized Wright function. The latter is a very simple and mathematically tractable expression, and, as shown in Propositions (3.2) and (3.3), it allows us to obtain closed-form results for the probability law of the generalized integrated telegraph process in the case of fixed values of the parameters involved. These results appear to be of great interest owing to the absence of similar outcomes in the literature for the one-dimensional case.

Figure 2. Simulated sample paths of $X_t$ under the assumptions of gamma intertimes with coefficient of variation less than 1 (left-hand side) or greater than 1 (right-hand side).

Theorem 3.1. For all

![]() $ i=1,2,\dots$

, let

$ i=1,2,\dots$

, let

![]() $ U_{i} $

and

$ U_{i} $

and

![]() $ D_{i} $

be gamma-distributed with shape parameter

$ D_{i} $

be gamma-distributed with shape parameter

![]() $ \alpha>0 $

and rate parameter

$ \alpha>0 $

and rate parameter

![]() $\beta>0$

, and set

$\beta>0$

, and set

\begin{equation} A^k_{l}(x,t)\,:\!=\,{}_1 \Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[4pt] \left(\alpha,\alpha\right)&\left(k+1+l \alpha,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right], \end{equation}

\begin{equation} A^k_{l}(x,t)\,:\!=\,{}_1 \Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[4pt] \left(\alpha,\alpha\right)&\left(k+1+l \alpha,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right], \end{equation}

where, for

![]() $z\in\mathbb{C}$

,

$z\in\mathbb{C}$

,

![]() $a_{i},b_{j}\in{\mathbb C}$

,

$a_{i},b_{j}\in{\mathbb C}$

,

![]() $\alpha_{i},\beta_{j}\in{\mathbb{R}}$

,

$\alpha_{i},\beta_{j}\in{\mathbb{R}}$

,

![]() $\alpha_{i},\beta_{j}\neq 0$

,

$\alpha_{i},\beta_{j}\neq 0$

,

\begin{equation} {}_p \Psi_q\left[\begin{matrix}&\left(a_{l},\alpha_{l}\right)_{1,p}\\[4pt]&\left(b_{l},\beta_{l}\right)_{1,q} \end{matrix}\,\Bigg|\,z\right]={}_p \Psi_q\left[\begin{matrix}&\left(a_{1},\alpha_{1}\right)\cdots \left(a_{p},\alpha_{p}\right) \\[4pt]&\left(b_{1},\beta_{1}\right)\cdots \left(b_{q},\beta_{q}\right) \end{matrix}\,\Bigg|\,z\right]\,:\!=\,\sum_{k=0}^{+\infty}\frac{\displaystyle\prod_{l=1}^{p}\Gamma\big(a_{l}+\alpha_{l}k\big)}{\displaystyle\prod_{j=1}^{q}\Gamma\!\left(b_{j}+\beta_{j}k\right)}\frac{z^{k}}{k!} \end{equation}

\begin{equation} {}_p \Psi_q\left[\begin{matrix}&\left(a_{l},\alpha_{l}\right)_{1,p}\\[4pt]&\left(b_{l},\beta_{l}\right)_{1,q} \end{matrix}\,\Bigg|\,z\right]={}_p \Psi_q\left[\begin{matrix}&\left(a_{1},\alpha_{1}\right)\cdots \left(a_{p},\alpha_{p}\right) \\[4pt]&\left(b_{1},\beta_{1}\right)\cdots \left(b_{q},\beta_{q}\right) \end{matrix}\,\Bigg|\,z\right]\,:\!=\,\sum_{k=0}^{+\infty}\frac{\displaystyle\prod_{l=1}^{p}\Gamma\big(a_{l}+\alpha_{l}k\big)}{\displaystyle\prod_{j=1}^{q}\Gamma\!\left(b_{j}+\beta_{j}k\right)}\frac{z^{k}}{k!} \end{equation}

is the generalized Wright function. Then, for

![]() $t>0$

, we have

$t>0$

, we have

with

![]() $\gamma\!\left(s,x\right)$

denoting the lower incomplete gamma function, and, for

$\gamma\!\left(s,x\right)$

denoting the lower incomplete gamma function, and, for

![]() $-c t<x<c t$

,

$-c t<x<c t$

,

\begin{align} f\!\left(x,t\right)=\, & \frac{\beta^{\alpha} {\textrm{e}}^{-\beta t}}{4 c} \left\{\sum_{k=0}^{+\infty} \beta^{k} A^k_{0}(x,t) \left[ \left(\frac{ct+x}{2c}\right)^{\alpha-1}\,\left(\frac{ct-x}{2c}\right)^{k} +\left(\frac{ct-x}{2c}\right)^{\alpha-1}\,\left(\frac{ct+x}{2c}\right)^{k} \right]\right. \nonumber \\[4pt]& \!\left. - \sum_{k=0}^{+\infty} \beta^{k+2\alpha } A^k_{2}(x,t)\!\left[ \left(\frac{ct+x}{2c}\right)^{\alpha-1}\!\left(\frac{ct-x}{2c}\right)^{k+2\alpha} +\left(\frac{ct-x}{2c}\right)^{\alpha-1}\!\left(\frac{ct+x}{2c}\right)^{k+2\alpha}\right]\right\}\!. \end{align}

Proof. The first result is due to Equation (2). Under the given assumptions, recalling that

and by setting

(cf. Equation 6.5.4 of [Reference Abramowitz and Stegun1]), from Equation (4) we get

\begin{align} f_{c}\!\left(x,t\right) & =\frac{1}{2c}\left\{\sum_{n=0}^{+\infty}\left[\frac{\gamma\!\left(n\alpha,\beta\!\left(\frac{ct-x}{2c}\right)\right)}{\Gamma\!\left(n\alpha\right)}-\frac{\gamma\!\left(\left(n+1\right)\alpha,\beta\!\left(\frac{ct-x}{2c}\right)\right)}{\Gamma\!\left(\left(n+1\right)\alpha\right)}\right]\right.\nonumber\\[3pt]& \qquad\qquad\qquad\times \frac{\beta^{\left(n+1\right)\alpha}\!\!\left(\frac{ct+x}{2c}\right)^{n\alpha+\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct+x}{2c}\right)}}{\Gamma\!\left(\left(n+1\right)\alpha\right)}\nonumber\\[3pt]& \quad \left.+\sum_{n=1}^{+\infty}\left[\frac{\gamma\!\left(n\alpha,\beta\!\left(\frac{ct+x}{2c}\right)\right)}{\Gamma\!\left(n\alpha\right)}-\frac{\gamma\!\left(\left(n+1\right)\alpha,\beta\!\left(\frac{ct+x}{2c}\right)\right)}{\Gamma\!\left(\left(n+1\right)\alpha\right)}\right]\frac{\beta^{n\alpha}\!\left(\frac{ct-x}{2c}\right)^{n\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct-x}{2c}\right)}}{\Gamma\!\left(n\alpha\right)}\right\}\nonumber\\[3pt]& =\frac{1}{2c}\left\{\sum_{n=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{n\alpha}\gamma^{\star}\!\left(n\alpha,\beta\!\left(\frac{ct-x}{2c}\right)\right)\frac{\beta^{n\alpha+\alpha}\!\left(\frac{ct+x}{2c}\right)^{n\alpha+\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct+x}{2c}\right)}}{\Gamma\!\left(\left(n+1\right)\alpha\right)}\right. \nonumber\\[3pt]& \quad \left. -\sum_{n=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{n\alpha+\alpha}\!\!\!\gamma^{\star}\!\left(\left(n+1\right)\alpha,\beta\!\left(\frac{ct-x}{2c}\right)\right)\frac{\beta^{n\alpha+\alpha}\!\left(\frac{ct+x}{2c}\right)^{n\alpha+\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct+x}{2c}\right)}}{\Gamma\!\left(\left(n+1\right)\alpha\right)}\right. 
\nonumber \\[3pt]& \quad \left.+\sum_{n=1}^{+\infty}\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{n\alpha}\gamma^{\star}\!\left(n\alpha,\beta\!\left(\frac{ct+x}{2c}\right)\right)\frac{\beta^{n\alpha}\!\left(\frac{ct-x}{2c}\right)^{n\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct-x}{2c}\right)}}{\Gamma\!\left(n\alpha\right)}\right. \nonumber \\[3pt] & \quad \left.-\sum_{n=1}^{+\infty}\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{n\alpha+\alpha}\gamma^{\star}\!\left(\left(n+1\right)\alpha,\beta\!\left(\frac{ct+x}{2c}\right)\right)\frac{\beta^{n\alpha}\!\left(\frac{ct-x}{2c}\right)^{n\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct-x}{2c}\right)}}{\Gamma\!\left(n\alpha\right)}\right\}. \end{align}

Let us focus on the first term on the right-hand side of (11). Recalling that

\begin{equation}\gamma^{\star}\!\left(a,z\right)={\textrm{e}}^{-z}\sum_{n=0}^{+\infty}\frac{z^{n}}{\Gamma\!\left(a+n+1\right)},\end{equation}

and using Equation (7), we obtain

\begin{align*}&\sum_{n=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{n\alpha}\gamma^{\star}\!\left(n\alpha,\beta\!\left(\frac{ct-x}{2c}\right)\right)\frac{\beta^{n\alpha+\alpha}\!\left(\frac{ct+x}{2c}\right)^{n\alpha+\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct+x}{2c}\right)}}{\Gamma\!\left(\left(n+1\right)\alpha\right)}\\[3pt]=\,&\beta^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{\alpha-1}{\textrm{e}}^{-\beta\left(\frac{ct+x}{2c}\right)}\sum_{n=0}^{+\infty}\frac{\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{n\alpha}}{\Gamma\!\left(\left(n+1\right)\alpha\right)}{\textrm{e}}^{-\beta\left(\frac{ct-x}{2c}\right)}\sum_{k=0}^{+\infty}\frac{\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}}{\Gamma\!\left(n\alpha+k+1\right)}\\[3pt]=\,&\beta^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{\alpha-1}{\textrm{e}}^{-\beta t}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}\sum_{n=0}^{+\infty}\frac{\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{n\alpha}}{\Gamma\!\left(\left(n+1\right)\alpha\right)\Gamma\!\left(n\alpha+k+1\right)}\\[3pt]=\,&\beta^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{\alpha-1}{\textrm{e}}^{-\beta t}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k} {}_1\Psi_2\left[\begin{matrix}&\left(1,1\right)\\[3pt]&\left(\alpha,\alpha\right)&\left(k+1,\alpha\right)\end{matrix}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right].\end{align*}
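As a numerical sanity check on the series for $\gamma^{\star}$ used in the derivation above, one can compare it with two cases that admit elementary closed forms. The sketch below assumes the normalization $\gamma^{\star}(a,z)=z^{-a}\gamma(a,z)/\Gamma(a)$ of Equation 6.5.4 of [Reference Abramowitz and Stegun1]; the function name `gamma_star_series` and the truncation level are hypothetical choices, not taken from the paper.

```python
import math


def gamma_star_series(a, z, terms=120):
    """Truncated series e^{-z} * sum_n z^n / Gamma(a + n + 1), for a >= 0, z > 0."""
    log_z = math.log(z)
    series = sum(math.exp(n * log_z - math.lgamma(a + n + 1)) for n in range(terms))
    return math.exp(-z) * series


z = 1.3
# a = 1: gamma(1, z) = 1 - e^{-z}, hence gamma*(1, z) = (1 - e^{-z}) / z.
check_one = (1.0 - math.exp(-z)) / z
# a = 1/2: gamma(1/2, z) = sqrt(pi) * erf(sqrt(z)), hence gamma*(1/2, z) = erf(sqrt(z)) / sqrt(z).
check_half = math.erf(math.sqrt(z)) / math.sqrt(z)
print(gamma_star_series(1.0, z), check_one)
print(gamma_star_series(0.5, z), check_half)
```

For $a=0$ the series reduces to ${\textrm{e}}^{-z}\sum_{n}z^{n}/n!=1$, so the same function also covers that case.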

By applying similar reasoning for each term in the right-hand side of (11), we finally get

\begin{align}f_{c}\!\left(x,t\right) = & \frac{{\textrm{e}}^{-\beta t}}{2c}\left\{\beta^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{\alpha-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}\right.\nonumber\\[4pt]& \qquad\qquad\qquad\qquad\qquad\qquad\qquad \left.\times{}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right) & \\[4pt] \left(\alpha,\alpha\right) & \left(k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\right.\nonumber\\[4pt]& -\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}\nonumber\\[4pt]& \qquad\qquad\qquad\qquad\qquad\qquad \times {}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right) & \\[4pt]\left(\alpha,\alpha\right) & \left(\alpha+k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\nonumber\\[4pt]& +\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\!\left(\frac{ct-x}{2c}\right)^{-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{k}\nonumber\\[4pt]& \qquad\qquad\qquad\qquad\qquad\qquad \times {}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[4pt]\left(\alpha,\alpha\right) & \left(\alpha+k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\nonumber\\[4pt]& \left.-\beta^{\alpha}\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{\alpha}\!\left(\frac{ct-x}{2c}\right)^{-1}\right.\nonumber\\[4pt]& 
\left.\times\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{k}{}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[4pt]\left(\alpha,\alpha\right)&\left(2\alpha+k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\right\}.\end{align}

Similarly, in the case of negative initial velocity, we have

\begin{align}f_{-c}\!\left(x,t\right) =& \frac{{\textrm{e}}^{-\beta t}}{2c}\left\{\beta^{\alpha}\!\left(\frac{ct-x}{2c}\right)^{\alpha-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{k}\right.\nonumber\\[3pt]& \qquad\qquad\qquad\qquad\qquad\qquad \times {}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[3pt]\left(\alpha,\alpha\right) & \left(k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\nonumber\\[3pt]& -\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\!\left(\frac{ct-x}{2c}\right)^{-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{k}\nonumber\\[3pt]& \qquad\qquad\qquad\qquad\qquad\qquad \times {}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[3pt]\left(\alpha,\alpha\right) & \left(\alpha+k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\nonumber\\[3pt]& +\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}\nonumber\\[3pt]& \qquad\qquad\qquad\qquad\qquad\qquad \times {}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[4pt]\left(\alpha,\alpha\right) & \left(\alpha+k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\nonumber \\[3pt]& \left.-\beta^{\alpha}\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\!\left(\frac{ct-x}{2c}\right)^{\alpha}\!\left(\frac{ct+x}{2c}\right)^{-1}\right.\nonumber\\[3pt]& 
\left.\times\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}{}_1\Psi_2\left[\begin{array}{l@{\quad}l}\left(1,1\right)\\[3pt]\left(\alpha,\alpha\right)&\left(2\alpha+k+1,\alpha\right)\end{array}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]\right\}.\end{align}

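The proof finally follows by recalling the assumption of random initial velocity, so that $f\!\left(x,t\right)=\frac{1}{2}\left[f_{c}\!\left(x,t\right)+f_{-c}\!\left(x,t\right)\right]$.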

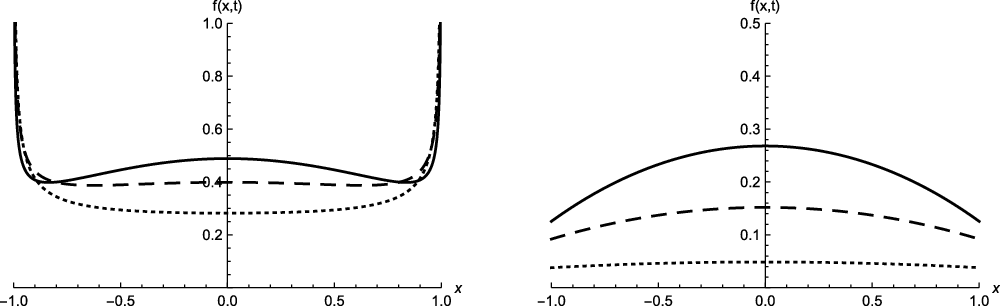

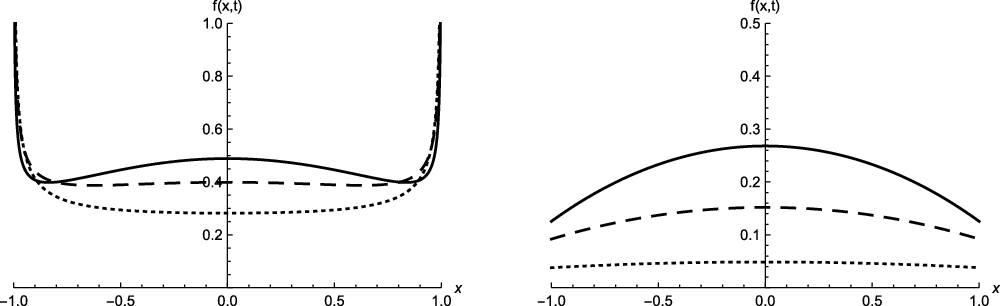

Hereafter we analyze the behavior of the density $f\!\left(x,t\right)$ given in Equation (8) at the extreme points of the interval $({-}c t, c t)$, for any fixed $t$.

Proposition 3.1. For $t,\,\alpha,\,\beta>0$, it holds that

\begin{equation*}\lim_{x\rightarrow (c t)^{-}}f\!\left(x,t\right)=\lim_{x\rightarrow ({-}c t)^{+}}f\!\left(x,t\right)=\left\{ \begin{array}{c} \frac{1}{4 c} \frac{{\textrm{e}}^{-\beta t} \beta^{\alpha} t^{\alpha-1}}{\Gamma\!\left(\alpha\right)}, \,\quad \hbox{if} \quad \alpha\geq 1, \\[4pt] + \infty,\quad \hbox{if} \quad \alpha<1. \end{array} \right.\end{equation*}

Proof. Because of the symmetry properties of $X_t$, it is enough to analyze the behavior of $f\!\left(x,t\right)$ as $x\rightarrow (ct)^{-}$.

Let us fix $t,\,\alpha,\,\beta>0$ and assume $-c t<x<c t$. By Corollary 1.1 of [Reference Kilbas, Saigo and Trujillo30], the generalized Wright functions appearing in the density (8) are entire functions for all nonnegative integers $k$. Hence they are continuous, and, recalling their analytical expression (7), we obtain

\begin{align*}\lim_{x\rightarrow (ct)^{-}} {}_1 \Psi_2\left[\begin{matrix}&\left(1,1\right)\\[4pt]&\left(\alpha,\alpha\right)&\left(k+1,\alpha\right)\end{matrix}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]&=\frac{1}{\Gamma\!\left(\alpha\right)\Gamma\!\left(k+1\right)},\\[4pt]\lim_{x\rightarrow (ct)^{-}} {}_1 \Psi_2\left[\begin{matrix}&\left(1,1\right)\\[4pt]&\left(\alpha,\alpha\right)&\left(2\alpha+k+1,\alpha\right)\end{matrix}\,\Bigg|\,\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{\alpha}\right]&=\frac{1}{\Gamma\!\left(\alpha\right)\Gamma\!\left(2\alpha+k+1\right)},\end{align*}

so that, by Equation (12), after simple calculations we get

\begin{align*}\lim_{x\rightarrow (ct)^{-}}f\!\left(x,t\right) & =\frac{\beta^{\alpha}}{4c}{\textrm{e}}^{-\beta t}\left\{\frac{t^{\alpha-1}}{\Gamma\!\left(\alpha\right)}-\frac{\left(\beta t\right)^{2\alpha}}{\Gamma\!\left(\alpha\right)\!\left(2c\right)^{\alpha-1}}\sum_{k=0}^{+\infty}\frac{\left(\beta t\right)^{k}}{\Gamma\!\left(2\alpha+k+1\right)}\lim_{x\rightarrow (ct)^{-}}\!\left(ct-x\right)^{\alpha-1}\right.\nonumber\\[3pt]& \quad \left. +\frac{1}{\Gamma\!\left(\alpha\right)}\!\left(\frac{1}{2c}\right)^{\alpha-1}\sum_{k=0}^{+\infty}\frac{\left(\beta t\right)^{k}}{\Gamma\!\left(k+1\right)}\lim_{x\rightarrow (ct)^{-}}\!\left(ct-x\right)^{\alpha-1}\right\}\nonumber\\[3pt]& =\frac{{\textrm{e}}^{-\beta t}\!\left(\beta t\right)^{\alpha}}{4 c t\, \Gamma\!\left(\alpha\right)}+\frac{1}{2\Gamma\!\left(\alpha\right)}\!\left(\frac{\beta}{2c}\right)^{\alpha}\!\left(1-\frac{\gamma\!\left(2\alpha,\beta t\right)}{\Gamma\!\left(2\alpha\right)}\right)\lim_{x\rightarrow (ct)^{-}}\!\left(ct-x\right)^{\alpha-1}.\end{align*}

In the following proposition we provide a closed-form expression for the probability density function (8) in the case $\alpha=1/2$.

Proposition 3.2. In the case $\alpha=1/2$ the probability density function (8) has the following expression:

\begin{equation*}f\!\left(x,t\right)= \frac{1}{4c}\sqrt{\frac{\beta}{\pi}}e^{\frac{\beta}{c}\!\left(\sqrt{c^{2}t^{2}-x^{2}}-ct\right)}\left\{\left(\frac{ct+x}{2c}\right)^{-\frac{1}{2}}+\left(\frac{ct-x}{2c}\right)^{-\frac{1}{2}}\right\},\quad -c t<x <c t.\end{equation*}

Proof. For $\alpha=1/2$ and recalling that, for $n\in\mathbb{N}$,

one can easily prove that $A^{k}_{2}\!\left(x,t\right)=A^{k+1}_{0}\!\left(x,t\right)$, where the function $A_l^{k}\!\left(x,t\right)$ has been defined in (6). Hence we obtain

\begin{equation}f(x,t)=\frac{\sqrt{\beta}e^{-\beta t}}{4c}A^{0}_{0}\!\left(x,t\right)\left\{\left(\frac{ct+x}{2c}\right)^{-\frac{1}{2}}+\left(\frac{ct-x}{2c}\right)^{-\frac{1}{2}}\right\}.\end{equation}

Moreover, by setting

and using Equation (15), we get

\begin{align*}A^{0}_{0}\!\left(z,t\right) & =\sum_{n=0}^{+\infty}\frac{z^{2n}}{\Gamma\!\left(n+\frac{1}{2}\right)\Gamma\!\left(n+1\right)}+\sum_{n=0}^{+\infty}\frac{z^{2n+1}}{\Gamma\!\left(n+1\right)\Gamma\!\left(1+\frac{1}{2}+n\right)}\\& =\frac{1}{\sqrt{\pi}}\sum_{n=0}^{+\infty}\frac{\left(2z\right)^{2n}}{\left(2n\right)!}+\frac{2}{\sqrt{\pi}}\sum_{n=0}^{+\infty}\frac{\left(2z\right)^{2n+1}\!\left(n+1\right)!}{n!\left(2n+2\right)!}\\& =\frac{1}{\sqrt{\pi}}\cosh\left(2z\right)+\frac{1}{\sqrt{\pi}}\sinh\!\left(2z\right)=\frac{1}{\sqrt{\pi}}e^{2z}.\end{align*}

Finally, the proof follows from substituting the previous expression in (16) and recalling the definition of z.
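The series manipulation in the proof and the resulting closed form lend themselves to a quick numerical check. The following Python sketch is not part of the original paper: it sums the two series in the first line of the expansion of $A^{0}_{0}$, compares the result with $e^{2z}/\sqrt{\pi}$, and evaluates the density of Proposition 3.2. The names `a00_series` and `density_half`, as well as the truncation level, are illustrative assumptions.

```python
import math


def a00_series(z, terms=60):
    """Truncated even and odd series appearing in the expansion of A_0^0 (moderate z only)."""
    even = sum(z ** (2 * n) / (math.gamma(n + 0.5) * math.gamma(n + 1)) for n in range(terms))
    odd = sum(z ** (2 * n + 1) / (math.gamma(n + 1) * math.gamma(n + 1.5)) for n in range(terms))
    return even + odd


def density_half(x, t, c, beta):
    """Closed-form density of Proposition 3.2 (alpha = 1/2) on -c*t < x < c*t."""
    if not -c * t < x < c * t:
        raise ValueError("x must lie in the open interval (-c*t, c*t)")
    prefactor = math.sqrt(beta / math.pi) / (4.0 * c)
    exponent = (beta / c) * (math.sqrt(c * c * t * t - x * x) - c * t)
    bracket = ((c * t + x) / (2.0 * c)) ** -0.5 + ((c * t - x) / (2.0 * c)) ** -0.5
    return prefactor * math.exp(exponent) * bracket


z = 0.8
print(a00_series(z), math.exp(2 * z) / math.sqrt(math.pi))
print(density_half(0.3, 1.0, 1.0, 2.0), density_half(-0.3, 1.0, 1.0, 2.0))
```

The second printed pair illustrates the symmetry of the density in $x$, while the bracketed term shows the divergence at the endpoints $x=\pm ct$, in agreement with Proposition 3.1 for $\alpha<1$.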

Starting from Theorem 3.1, in the next corollary we provide the expression for the probability law of $X_t$ under the assumption of identically Erlang-distributed random intertimes $U_{i}$ and $D_{i}$, $i=1,2,\dots$. Such results are in agreement with those obtained in [Reference Di Crescenzo and Zacks19].

Corollary 3.1. If $U_{i}$ and $D_{i}$, $i=1,2,\dots,$ both have Erlang distribution with parameters $m\in {\mathbb N}$ and $\beta>0$, then for $t>0$, we have

and for $-c t<x<c t$, we have

\begin{align*} f\!\left(x,t\right) = &\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\left\{\sum_{j=0}^{2m-1}S^{\left(m,m\right)}_{\big(m-1,j\big)}\!\!\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right.\\& \qquad\qquad\left. +\sum_{j=0}^{2m-1}S^{\left(m,m\right)}_{\left(\,j,m-1\right)}\!\!\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right\}, \end{align*}

where

\begin{equation}S_{i,j}^{\left(k,r\right)}\!\left(x,y\right)=\sum_{l=0}^{+\infty}\frac{x^{kl+i}y^{rl+j}}{\left(kl+i\right)!\left(rl+j\right)!},\quad k,r\geq 1,\quad i,j\geq 0,\end{equation}

is a two-index pseudo-Bessel function.
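The two-index pseudo-Bessel function can be evaluated by truncating its defining series. The Python sketch below is only illustrative: the function name `pseudo_bessel`, the truncation level `terms`, and the restriction to $x,y>0$ (which holds for the arguments $\beta(ct\pm x)/(2c)$ on the open interval) are assumptions, not part of the original statement.

```python
import math


def pseudo_bessel(i, j, k, r, x, y, terms=80):
    """Truncated series S_{i,j}^{(k,r)}(x, y) for x, y > 0, k, r >= 1, i, j >= 0."""
    if x <= 0 or y <= 0:
        raise ValueError("this sketch assumes x > 0 and y > 0")
    log_x, log_y = math.log(x), math.log(y)
    total = 0.0
    for l in range(terms):
        p, q = k * l + i, r * l + j
        # x^p y^q / (p! q!) computed in log scale to avoid overflow of the factorials
        total += math.exp(p * log_x + q * log_y - math.lgamma(p + 1) - math.lgamma(q + 1))
    return total


# For k = r = 1 and i = j = 0 the series is sum_l (x*y)^l / (l!)^2.
print(pseudo_bessel(0, 0, 1, 1, 1.0, 1.0))
print(sum(1.0 / math.factorial(l) ** 2 for l in range(30)))
```

In the special case $k=r=1$, $i=j=0$ the series coincides with the modified Bessel function $I_{0}\!\left(2\sqrt{xy}\right)$, which provides an independent reference value.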

Proof. The result follows from Theorem 3.1 by letting $\alpha=m\in\mathbb{N}$. For instance, for the first term in (8), by (7), and recalling Equation (17), we have

\begin{eqnarray*}&& \frac{\beta^{m}}{4c}{\textrm{e}}^{-\beta t}\!\left(\frac{ct+x}{2c}\right)^{m-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}{}_1 \Psi_2\left[\begin{array}{l} \left(1,1\right)\\[2pt]\left(m,m\right) \!\left(k+1,m\right)\end{array}\,\Bigg|\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{m}\right]\\[2pt]&& =\frac{\beta^{m}}{4c}{\textrm{e}}^{-\beta t}\!\left(\frac{ct+x}{2c}\right)^{m-1}\sum_{k=0}^{+\infty}\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{k}\sum_{h=0}^{+\infty}\frac{\Gamma\!\left(1+h\right)}{\Gamma\!\left(m+m h\right)\Gamma\!\left(k+1+m h\right)}\frac{\left[\beta^{2}\!\left(\frac{c^{2}t^{2}-x^{2}}{4c^{2}}\right)\right]^{mh}}{h!}\\[2pt]&& =\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\sum_{k=0}^{+\infty}S^{\left(m,m\right)}_{\left(m-1,k\right)}\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right].\end{eqnarray*}
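The reduction above can be verified numerically for a fixed index $k$. In the following Python sketch both sides are computed without the common factor ${\textrm{e}}^{-\beta t}/(4c)$ and without the outer sum over $k$; the helper names `wright_1psi2`, `pseudo_bessel`, and `first_term_both_ways`, together with the parameter values, are hypothetical and chosen only for illustration.

```python
import math


def wright_1psi2(m, b, z, terms=80):
    """1Psi2[(1,1); (m,m), (b,m) | z] for z > 0; the factor Gamma(1+h)/h! equals 1."""
    log_z = math.log(z)
    return sum(
        math.exp(h * log_z - math.lgamma(m + m * h) - math.lgamma(b + m * h))
        for h in range(terms)
    )


def pseudo_bessel(i, j, k, r, x, y, terms=80):
    """Truncated series S_{i,j}^{(k,r)}(x, y) for x, y > 0."""
    log_x, log_y = math.log(x), math.log(y)
    total = 0.0
    for l in range(terms):
        p, q = k * l + i, r * l + j
        total += math.exp(p * log_x + q * log_y - math.lgamma(p + 1) - math.lgamma(q + 1))
    return total


def first_term_both_ways(m, k, beta, c, t, x):
    """Return (Wright-function form, pseudo-Bessel form) of the k-th summand."""
    u = beta * (c * t + x) / (2 * c)
    v = beta * (c * t - x) / (2 * c)
    lhs = beta ** m * ((c * t + x) / (2 * c)) ** (m - 1) * v ** k * wright_1psi2(m, k + 1, (u * v) ** m)
    rhs = beta * pseudo_bessel(m - 1, k, m, m, u, v)
    return lhs, rhs


print(first_term_both_ways(m=2, k=3, beta=1.5, c=1.0, t=2.0, x=0.5))
```

The agreement of the two returned values reflects the identity $\beta^{m}\!\left(\frac{ct+x}{2c}\right)^{m-1}=\beta\,u^{m-1}$ with $u=\beta\!\left(\frac{ct+x}{2c}\right)$, which is the only rearrangement needed to pass from one form to the other.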

Using similar reasoning, we obtain

\begin{align*}f\!\left(x,t\right) = & \frac{\beta}{4c}{\textrm{e}}^{-\beta t}\left\{\sum_{k=0}^{+\infty}S^{\left(m,m\right)}_{\left(m-1,k\right)}\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right.\nonumber\\& -\sum_{k=0}^{+\infty}S^{\left(m,m\right)}_{\left(m-1,2m+k\right)}\left[\beta\!\left(\frac{ct-x}{2c}\right),\beta\!\left(\frac{ct+x}{2c}\right)\right]\\&+\sum_{k=0}^{+\infty}S^{\left(m,m\right)}_{\left(m-1,k\right)}\left[\beta\!\left(\frac{ct-x}{2c}\right),\beta\!\left(\frac{ct+x}{2c}\right)\right]\\& \left.-\sum_{k=0}^{+\infty}S^{\left(m,m\right)}_{\left(m-1,2m+k\right)}\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right\}.\end{align*}

Hence, straightforward calculations and the identities (10) and (12) give

\begin{align*}f\!\left(x,t\right)&=\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\left\{{\textrm{e}}^{\beta\left(\frac{ct-x}{2c}\right)}\sum_{h=0}^{+\infty}\frac{\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{mh+m-1}}{\left(mh+m-1\right)!}\left[\frac{\gamma\!\left(mh,\beta\!\left(\frac{ct-x}{2c}\right)\right)}{\Gamma\!\left(mh\right)}\right.\right.\\[3pt]& \qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\left.-\,\frac{\gamma\!\left(m\!\left(h+2\right),\beta\!\left(\frac{ct-x}{2c}\right)\right)}{\Gamma\!\left(m\!\left(h+2\right)\right)}\right]\\&\left.+\,{\textrm{e}}^{\beta\left(\frac{ct+x}{2c}\right)}\sum_{h=0}^{+\infty}\frac{\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{mh+m-1}}{\left(mh+m-1\right)!}\left[\frac{\gamma\!\left(mh,\beta\!\left(\frac{ct+x}{2c}\right)\right)}{\Gamma\!\left(mh\right)}-\frac{\gamma\!\left(m\!\left(h+2\right),\beta\!\left(\frac{ct+x}{2c}\right)\right)}{\Gamma\!\left(m\!\left(h+2\right)\right)}\right]\right\}.\end{align*}

By setting

\begin{equation}e_{n}\!\left(x\right)\,:\!=\,\sum_{j=0}^{n}\frac{x^{\,j}}{j!}\end{equation}

(cf. Equation 6.5.11 of [Reference Abramowitz and Stegun1]) and recalling that

\begin{equation}\frac{\gamma\!\left(n,x\right)}{\Gamma\!\left(n\right)}=1-{\textrm{e}}^{-x}e_{n-1}\!\left(x\right)\end{equation}

(cf. Equations 6.5.2 and 6.5.13 of [Reference Abramowitz and Stegun1]), we have

\begin{align*} f\!\left(x,t\right)& =\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\left\{\sum_{h=0}^{+\infty}\frac{\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{mh+m-1}}{\left(mh+m-1\right)!}\left[e_{mh+2m-1}\!\left(\beta\!\left(\frac{ct-x}{2c}\right)\right)-e_{mh-1}\!\left(\beta\!\left(\frac{ct-x}{2c}\right)\right)\right]\right.\\[3pt]& \quad\left.+\sum_{h=0}^{+\infty}\frac{\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{mh+m-1}}{\left(mh+m-1\right)!}\!\left[e_{mh+2m-1}\!\!\left(\beta\!\left(\frac{ct+x}{2c}\right)\!\right)-e_{mh-1}\!\left(\!\beta\!\left(\frac{ct+x}{2c}\right)\!\right)\right]\!\right\}\!=\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\\[3pt]& \quad\times \left\{\sum_{h=0}^{+\infty}\sum_{j=0}^{2m-1}\frac{\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{mh+m-1}}{\left(mh+m-1\right)!}\frac{\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{j+mh}}{\left(\,j+mh\right)!}\right.\\[3pt]& \qquad\qquad \left. + \sum_{h=0}^{+\infty}\sum_{j=0}^{2m-1}\frac{\left[\beta\!\left(\frac{ct-x}{2c}\right)\right]^{mh+m-1}}{\left(mh+m-1\right)!}\frac{\left[\beta\!\left(\frac{ct+x}{2c}\right)\right]^{j+mh}}{\left(\,j+mh\right)!}\right\}\\& =\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\left\{\sum_{j=0}^{2m-1}S^{\left(m,m\right)}_{\left(m-1,j\right)}\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right.\\& \qquad\qquad\qquad \left. +\sum_{j=0}^{2m-1}S^{\left(m,m\right)}_{\left(m-1,j\right)}\left[\beta\!\left(\frac{ct-x}{2c}\right),\beta\!\left(\frac{ct+x}{2c}\right)\right]\right\}\\& =\frac{\beta}{4c}{\textrm{e}}^{-\beta t}\left\{\sum_{j=0}^{2m-1}S^{\left(m,m\right)}_{\left(m-1,j\right)}\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right.\\ & \qquad\qquad\qquad \left. +\sum_{j=0}^{2m-1}S^{\left(m,m\right)}_{\left(\,j,m-1\right)}\left[\beta\!\left(\frac{ct+x}{2c}\right),\beta\!\left(\frac{ct-x}{2c}\right)\right]\right\},\end{align*}

where the last equality comes from the symmetry property $ S^{\left(k,r\right)}_{\left(i,j\right)}\!\left(x,y\right)=S^{\left(r,k\right)}_{\left(\,j,i\right)}\!\left(y,x\right) $ of the pseudo-Bessel function.

Some properties of the two-index pseudo-Bessel function (17) can be found in Remark 3.2 of [Reference Di Crescenzo12].
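
The expansions above suggest the series form $S^{(k,r)}_{(i,j)}(x,y)=\sum_{h\geq 0}x^{kh+i}y^{rh+j}/[(kh+i)!\,(rh+j)!]$ for the two-index pseudo-Bessel function. The following Python sketch assumes this form for definition (17), which is not restated here, and truncates the series after a hypothetical number of `terms`. The names `pseudo_bessel` and `e_n` and the sample values of $x$, $y$, $m$ are illustrative only. Under these assumptions it checks numerically the symmetry property and the collapse of the difference $e_{mh+2m-1}-e_{mh-1}$ into the finite sum over $j=0,\ldots,2m-1$.

```python
from math import exp, factorial, isclose, lgamma, log

def pseudo_bessel(k, r, i, j, x, y, terms=60):
    """Truncated series assumed for S^{(k,r)}_{(i,j)}(x, y); needs x, y > 0."""
    total = 0.0
    for h in range(terms):
        a, b = k * h + i, r * h + j
        # x^a y^b / (a! b!), evaluated in log space to avoid overflow
        total += exp(a * log(x) + b * log(y) - lgamma(a + 1) - lgamma(b + 1))
    return total

def e_n(n, x):
    """Truncated exponential sum_{j=0}^{n} x^j / j!; the empty sum gives e_{-1} = 0."""
    return sum(x**j / factorial(j) for j in range(n + 1))

x, y, m = 0.7, 1.3, 2  # illustrative values, not taken from the paper

# Symmetry: S^{(k,r)}_{(i,j)}(x, y) = S^{(r,k)}_{(j,i)}(y, x)
sym_lhs = pseudo_bessel(2, 3, 1, 0, x, y)
sym_rhs = pseudo_bessel(3, 2, 0, 1, y, x)

# Series in h with the bracket e_{mh+2m-1}(y) - e_{mh-1}(y) ...
telescoped = sum(
    x ** (m * h + m - 1) / factorial(m * h + m - 1)
    * (e_n(m * h + 2 * m - 1, y) - e_n(m * h - 1, y))
    for h in range(30)
)
# ... against the finite sum of pseudo-Bessel functions over j = 0, ..., 2m-1
finite_form = sum(pseudo_bessel(m, m, m - 1, j, x, y) for j in range(2 * m))

assert isclose(sym_lhs, sym_rhs, rel_tol=1e-10)
assert isclose(telescoped, finite_form, rel_tol=1e-10)
```

The log-space evaluation is a design choice of this sketch: it keeps each term finite for large $h$, at the cost of requiring strictly positive arguments, which holds for $-ct<x<ct$.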

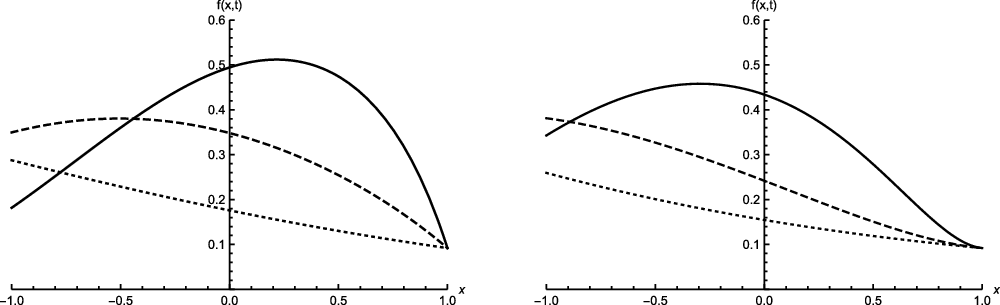

In the following proposition we provide a closed-form expression for the probability density function (8) in the case $\alpha=2$.

Proposition 3.3. In the case $\alpha=2$ the probability density function (8), for $-c t<x<c t$, has the following expression:

\begin{align*}f\!\left(x,t\right) = & \frac{\lambda}{8c}e^{-\lambda t}\sum_{j=0}^{3}\left[\left(\frac{ct-x}{ct+x}\right)^{\frac{j-1}{2}} + \left(\frac{ct-x}{ct+x}\right)^{\!\!-\frac{j-1}{2}}\right]\left[I_{j-1}\!\left(\frac{\lambda}{c}\sqrt{c^{2}t^{2}-x^{2}}\right)\right.\\& \qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\qquad\left. -J_{j-1}\!\left(\frac{\lambda}{c}\sqrt{c^{2}t^{2}-x^{2}}\right)\right],\end{align*}

where, for $ \nu\in\mathbb{R}$,

\begin{equation}J_{\nu}\!\left(z\right)=\sum_{l=0}^{+\infty}\frac{\left({-}1\right)^{l}}{l!\Gamma\!\left(l+\nu+1\right)}\!\left(\frac{z}{2}\right)^{2l+\nu},\end{equation}

is the Bessel function of the first kind, and

\begin{equation}I_{\nu}\!\left(z\right)=\sum_{k=0}^{+\infty}\dfrac{1}{k! \Gamma\!\left(\nu+k+1\right)} \!\left(\frac{z}{2}\right)^{2 k+\nu}\end{equation}

is the modified Bessel function of the first kind.
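
As a numerical illustration of Proposition 3.3, the following Python sketch evaluates the density directly from the series (20) and (21). The helper names `rgamma`, `bessel_series` and `density_alpha2`, the truncation length `terms`, and the sample parameter values are assumptions made for illustration. The sketch adopts the convention $1/\Gamma(n)=0$ for $n=0,-1,-2,\ldots$, which is needed for the order $\nu=-1$ arising at $j=0$.

```python
from math import exp, gamma, isclose, sqrt

def rgamma(z):
    """Reciprocal gamma 1/Gamma(z), set to 0 at the poles z = 0, -1, -2, ..."""
    if z <= 0 and z == int(z):
        return 0.0
    return 1.0 / gamma(z)

def bessel_series(nu, z, sign, terms=40):
    """Truncated series (20) for J_nu (sign=-1) or (21) for I_nu (sign=+1), z > 0."""
    return sum(
        sign**l * rgamma(l + 1) * rgamma(l + nu + 1) * (z / 2) ** (2 * l + nu)
        for l in range(terms)
    )

def density_alpha2(x, t, lam, c, terms=40):
    """Expression of Proposition 3.3, defined for -c*t < x < c*t."""
    if not -c * t < x < c * t:
        raise ValueError("x must lie strictly inside (-c t, c t)")
    ratio = (c * t - x) / (c * t + x)
    z = lam / c * sqrt(c * c * t * t - x * x)
    total = 0.0
    for j in range(4):
        nu = j - 1
        weight = ratio ** (nu / 2) + ratio ** (-nu / 2)
        diff = bessel_series(nu, z, +1, terms) - bessel_series(nu, z, -1, terms)
        total += weight * diff
    return lam / (8 * c) * exp(-lam * t) * total

# Illustrative parameters (not from the paper): lambda = 2, c = 1, t = 1
f_right = density_alpha2(0.3, 1.0, lam=2.0, c=1.0)
f_left = density_alpha2(-0.3, 1.0, lam=2.0, c=1.0)

# The expression is invariant under x -> -x, since the two powers of the ratio swap
assert isclose(f_right, f_left, rel_tol=1e-12)
assert f_right > 0
```

For production use a library implementation of $I_{\nu}$ and $J_{\nu}$ would be preferable to truncated series; the plain series is used here only to stay close to (20) and (21).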

Proof. Note that, by (20) and (21),

\begin{align*}I_{1}\!\left(2\lambda\sqrt{xy}\right)+J_{1}\!\left(2\lambda\sqrt{xy}\right)&=\sum_{m=0}^{+\infty}\frac{\left(\lambda\sqrt{xy}\right)^{2m+1}}{m!\Gamma\!\left(m+2\right)}+\sum_{m=0}^{+\infty}\frac{\left({-}1\right)^{m}\!\left(\lambda\sqrt{xy}\right)^{2m+1}}{m!\Gamma\!\left(m+2\right)}\\&=2\lambda\sqrt{xy}\sum_{n=0}^{+\infty}\frac{\left(\lambda^{2}xy\right)^{2n}}{\left(2n\right)!\left(2n+1\right)!}.\end{align*}

Hence, setting $\tilde{x}\,:\!=\,\frac{ct+x}{2c}$ and $y\,:\!=\,\frac{ct-x}{2c}$, we can write the two-index pseudo-Bessel function (17) as

\begin{align*} S^{\left(2,2\right)}_{\left(1,0\right)}\left[\lambda \tilde{x},\lambda y\right] & = \sum_{l=0}^{+\infty}\frac{\left(\lambda \tilde{x}\right)^{2l+1}\left(\lambda y\right)^{2l}}{\left(2l+1\right)!\left(2l\right)!}\\& =\left(\lambda \tilde{x}\right)\sum_{l=0}^{+\infty}\frac{\left(\lambda^{2}\tilde{x}y\right)^{2l}}{\left(2l+1\right)!\left(2l\right)!}=\frac{1}{2}\left(\frac{y}{\tilde{x}}\right)^{-\frac{1}{2}}\left[I_{1}\left(2\lambda\sqrt{\tilde{x}y}\right)+J_{1}\!\left(2\lambda\sqrt{\tilde{x}y}\right)\right]\\& =\frac{1}{2}\left(\frac{y}{\tilde{x}}\right)^{-\frac{1}{2}}\left[I_{-1}\!\left(2\lambda\sqrt{\tilde{x}y}\right)-J_{-1}\!\left(2\lambda\sqrt{\tilde{x}y}\right)\right],\end{align*}

where the last equality follows from the identities $ I_{-n}\!\left(x\right)=I_{n}\!\left(x\right) $ and $ J_{-n}\!\left(x\right)=\left({-}1\right)^{n}J_{n}\!\left(x\right)$, which hold for integer $n$.

Moreover, for $j\geq 1$,