R1. Introduction

The commentaries stimulated by our target article on the integrated information theory (IIT) of consciousness are truly diverse in style and content and offer a wide range of verdicts regarding the merits of our critique and of IIT. This diversity samples the remarkable heterogeneity of opinion in consciousness theory generally. Thus, on the question of the problem of consciousness itself, they span from the conviction that the problem was solved decades ago (Graziano), to the suspicion that it may be intractable short of a scientific revolution (Feldman). On the question of the ontology of consciousness, they range over suggestions that consciousness might be lodged in matter as such (Velmans), involve quantum entanglement (Riva) or something like electromagnetic fields (Gur), or may be a product of neural circuitry of some kind (most commentators), perhaps of a mosaic kind (Zadra and Levitin), and not necessarily implemented at the cortical level (Devor, Koukoui, & Baron [Devor et al.]; Delafield-Butt & Trevarthen).

Whether this heterogeneity reflects a prehistory stage of disciplinary development, as we suggested, or is a post-science phenomenon, as Verschure suggests, remains to be seen. The heterogeneity is there; so, for present purposes we first briefly survey the commentaries generically, positioning them in relation to our target article, and then consider some in greater detail for their bearing on our critique of IIT.

A number of commentaries deal primarily with the question of consciousness in the large without foregrounding the details of our critique of IIT (Delafield-Butt & Trevarthen; Feldman; Fitch; Graziano; Kotchoubey; Morsella, Velasquez, Yankulova, Li, & Gazzaley [Morsella et al.]; Schmidt and Biafora; Riva; Veit). They either broach constitutive issues, propose criteria to define consciousness, suggest stances that would promote progress in its study, or do more than one of these, as do a few other commentators not in this general category (Desmond & Huneman; Eagleman, Eagleman, Menon, & Meador [Eagleman et al.]; Velmans; Verschure).

Of those who deal more specifically with our critique of IIT, a few tend to frame their comments as if our target article argues the relative merits of IIT versus a geometric, viewpoint-based, theory of our own (Pennartz; Velmans; Zadra and Levitin; and to some extent Morsella et al.). This was not our intention, however. Only in the concluding section of our target article, while dealing with constitutive questions of consciousness, do we introduce a formal definition of the origin of a perspectival space. We did so to exemplify a construct that may be germane to that topic, but not as a presentation of the theory of consciousness from which that formal construct is taken. It has been presented elsewhere (Rudrauf et al., 2017, 2020, 2022), and is under active development (Rudrauf, Sergeant-Perthuis, Belli, Tisserand, & Serugendo, 2022; Williford, Bennequin, & Rudrauf, under review). This same exclusive focus on IIT explains why the ideas regarding consciousness developed by the first author are not featured in the target article. Delafield-Butt and Trevarthen asked about this, and they can rest assured that those ideas stand in all essentials very much the way they were published as far as their author is concerned.

With these preliminaries, we turn to the suggestion that consciousness research is a fool's errand, in the sense that the problem has already been solved (Graziano) or that it lies beyond the reach of science as currently conceived (Feldman).

R2. Premature celebrations of victory as well as defeat

Feldman doubts that the record of success on the part of psychology and neuroscience in demystifying once mysterious aspects of our experience and behaviour will continue to dispel some of the remaining mysteries, including “the mechanism of subjective experience.” To support his pessimism, he refers to aspects of subjective experience that appear to be inconsistent with neuroscience, specifically our impression that the visual field is rich in detail throughout its expanse whereas visual resolution drops drastically with eccentricity, and the apparently single moment of touch experienced when we touch our nose with our finger, although the neural signal from the finger has a far longer distance to travel to the brain than that from the nose.

Yet these examples are open to demystification. In the nose-touching example, what is relevant is not the signals' travel time to the brain, but their time and mode of arrival at the brain's mechanism of consciousness, yet to be identified. Their mode of arrival, moreover, is likely to be shaped by the well-known discounting of the consequences of self-produced movement as far as the active body part is concerned; witness the difference in subjective experience between touching one's nose with the finger and touching a stationary finger with the nose via a head movement. Similarly, regarding our unreflective sense of a uniformly rich visual field, not everyone shares it, and even a cursory exercise in phenomenology suffices to dispel it. The belief in uniformity, meanwhile, is underwritten by the fact that wherever we look, the world, as revealed by focal vision, is rich in detail. The predictive and generative operation of our cerebral machinery is likely to build such overwhelming statistical evidence into our priors, making a sense of visual-field uniformity an unreflective default assumption, one that pertains more to our sense of what the world is like than to the spatial distribution of our visual resolving power.

It is our guess that Feldman's neuro-scepticism does not stand or fall with the examples he provided but turns, rather, on the kind of constitutive questions we broached in our concluding section. From Feldman's relative pessimism we turn then to Graziano's conviction that the problem of consciousness has already been solved. At the heart of this claim lies the idea that consciousness is not what naïve introspection suggests and that we have no sure first-person access to its actual properties. Our beliefs, rather, encode a distorting model of the underlying phenomenon they target. Thus, an adequate theory need not capture the properties we are inclined to attribute to consciousness.

However, the fact that a model differs from the item being modelled (Graziano's “Principle 2”) does not ipso facto render the model useless, any more than the differences between a forensic artist's pencil sketch and the actual suspect render it useless in a manhunt. If the sketch did not resemble the suspect at all, it would be useless; and it is the relevant resemblances that make it useful. But, when Graziano argues against realist theories of consciousness (theories such as IIT and many others that grant introspection at least provisional evidentiary status), he adopts the stance that the differences between model and modelled do render introspection completely useless to theory. And, if introspection yields no accurate information at all about consciousness, then, Graziano suggests, the explanation of consciousness reduces to the explanation of our beliefs purporting to be about consciousness; and “consciousness” turns out to be whatever it is that those beliefs actually track (attention, according to him).

However, Graziano's own theory is not as “illusionist” as it may seem. First, our knowledge of the very existence of our introspectively based beliefs about consciousness is itself something that depends, in part, on introspection (and not on mere verbal behaviour). Thus, in that regard at least, Graziano must accept that our introspective “model” is not inaccurate. Second, surely Graziano would agree that there are some phenomenologically derived constraints on what it is plausible to think our introspective beliefs about consciousness could actually track. Indeed, one must concede that the equation of consciousness and attention has at least some prima facie phenomenological plausibility to it that is roughly on a par with that of the equations proposed by other theories of consciousness (e.g., global availability and integration-cum-differentiation). These points suggest that Graziano is a realist, in our sense, malgré lui and that his “2 + 2 = 4” argument against such realism is overblown – none of the theories just mentioned actually contradicts his two principles.

With these reasons for putting the stances “already solved” and “won't be solved” to one side as far as the problem of consciousness goes, we turn to issues raised by commentators who engage with our critique of IIT without themselves being adherents of the theory.

R3. Issues bearing on our critique of IIT in commentaries other than those by IIT authors

Negro's commentary provides an admirably concise and accurate outline of the conceptual structure of our IIT critique. From the welter of our arguments he has extracted its conceptual backbone, and we recommend his summary to readers as a guide to its salient points. We address points where Negro considers that our criticism falls short in section R4, in direct response to the four commentaries by Tononi, Boly, Grasso, Hendren, Juel, Mayner, Marshall, and Koch (Tononi et al.) and colleagues (Albantakis; Haun; and Massimini, Sarasso, Casarotto, & Rosanova [Massimini et al.]). Negro's commentary also pinpoints the weak link in the attempt to clothe IIT in an axiomatic form, namely the transition from its axioms to its postulates (cf. Bayne, 2018), an issue also considered further in the next section.

The title of Brette's commentary perfectly captures our principal problem with the way in which IIT casts the trivial fact that an experience differs from other experiences as the informativeness of that experience. This is typically done by presenting to the imagination of the reader – a third-person party, as Brette points out – a number of potential experiences from which it does indeed differ. Although that comparison makes intuitive sense to the reader, it does not take place in the experience of the experiencing subject, who is having only experience x, not the experience of comparing experience x with experience y or any other experience. We have more to say about this matter in the next section.

Pennartz raises a number of germane issues. We attempted to guard against an exclusively visual construal of our invocation of a viewpoint in the concluding section of our target article. Taste is indeed experienced as located in the mouth, and smell “around the nose,” just as a pinprick is experienced as located in the finger, but we are neither in our mouths when we experience taste, nor in our finger when we experience the pinprick, any more than we are in the tree we experience outside our window. All these percepts are located in a common projective space relative to an underlying origin of projection, the elusive location of the subjective point of view you occupy in experiencing them.

As shared by different modalities, this viewpoint is specific to none of them, just as the space it helps define (without being one of its points) does not belong to any one modality, but is shared by them all. Our expression supramodal, accordingly, is not to be taken in the sense of amodal, but only indicates that each distinctive sensory modality shares the same spatial framework, as in the well-matched coincidence of the boundary of our body when defined by either sight or touch. It is to mark the singleness of this spatial framework shared by multiple modalities that we call it “supramodal.”

Four commentaries point to empirical findings which in one way or another challenge assumptions or claims made by IIT. The detailed studies involving double dissociations in measures of stimulus awareness cited by Schmidt and Biafora are not easily reconciled with a conception of consciousness as the product of a single unitary mechanism of the kind that IIT would seem to offer. The studies cited by Gur, for their part, are not easily reconciled with IIT's dependence on recurrent processing to define consciousness. The commentaries by Devor et al. and by Zadra and Levitin review empirical findings that challenge IIT on the basis of the effects of brain lesions, in the former case, and on complexities encumbering the relation between the physiology and the phenomenology of sleep and waking, in the latter. We refer to these two commentaries as well as that by Devor et al. in section R4, in responding to the commentary by Massimini et al.

Zadra and Levitin then go on to extend what might be called a “mosaic” conception of the physiology of sleep and waking to the constitution of consciousness itself by invoking Dennett's and Kinsbourne's “multiple drafts” conjecture. However, as Fitch notes in his commentary, in order to relate to either coherent behaviour (which is always of one body) or to coherent memory, the putative drafts must be selected down to a single one, and it is the selected one alone that defines our experience at any one time. The competitive mechanism required for such selection is “where they all come together” to settle their claims, and although the particular mechanism responsible for selecting the contents of our experience remains to be positively identified, the search for it is not subject to a homuncular fallacy. As Dennett himself noted: “Homunculi are bogeymen only if they duplicate entirely the talents they are rung in to explain” (Dennett, 1978, p. 123). Whatever piecemeal and fragmentary processes may take place in the brain, by the time they contribute to consciousness, that to which they contribute is a unitary and integrated phenomenon, just as IIT and many another theory insists.

A few commentaries bring up the function of consciousness, “what it is for,” an issue one might think would be a central concern of consciousness theory. Velmans notes that the issue plays a key role in what he calls discontinuity theories, as when the transition to consciousness at a given stage of evolution is attributed to the function it performs. Exemplifying this, Veit proposes that consciousness arose as a means for organisms to deal with what he calls pathological complexity. We assume that what he has in mind is the kind of complexity that arises in coevolution and evolutionary arms races, say of the predator–prey kind, which became acute with the evolution of large, image-forming eyes, hence his reference to the Cambrian Explosion. As Velmans notes, such proposals will need to show why, say, conscious vision, rather than simply better visually based performance operating unconsciously, is needed to meet the transition's functional challenge. The constitutive issue we raised in the concluding section of our target article will persist until it is successfully addressed.

The function of consciousness proposed by Fitch is intrinsic to brains with parallel capacity capable of learning from the outcome of their operations. The serial nature of the actions of the body imposes a competitive and selective bottleneck on the operation of its parallel functional architecture. Fitch casts consciousness as mediator of credit assignment among parallel contributors to outcomes in light of the outcome actually achieved. That role provides a natural functional rationale for crucial attributes of consciousness such as its unity and limited capacity (see, e.g., Baars, 1993; Mandler, 1975, 2002, Ch. 2; McFarland & Sibly, 1975; Merker, 2007, p. 70). We note that no convincing rationale for the limited capacity of consciousness compared to the massive informational capacity of the cerebral hemispheres is to be found in IIT.

Desmond and Huneman ask not what consciousness, but rather what integrated information as defined by IIT, might be for, and suggest that it supplies a constitutive principle for the concept and ubiquitous biological phenomenon of agency. This fits well with our discussion of a system “acting back on itself” in our response to the lead commentary in section R4. We would suggest, however, that this plausible interpretation of the function of integrated information is unlikely to find consciousness to be its “double” in any formal, mathematical, sense. Rather, we suggest, consciousness performs a specialized subfunction within some of the systems that integrate information to sustain their agency, making conscious agents a proper subset of the set of agents. This might give us, say, agential but unconscious bacteria along with conscious agents such as ourselves.

Few commentaries address the “impasse” in the search for so-called neural correlates of consciousness to which we pointed in the concluding section of our target article. Only Fitch and Negro mention it directly, whereas Schmidt and Biafora do so by implication. If theorists differ in their conception of the explanandum, better data, data sharing, and data analysis, the means to progress suggested by Eagleman et al. in their commentary, are unlikely to resolve the impasse, which is why we pointed to the need to address constitutive issues. Metacriteria of the kind proposed by Kotchoubey are useful devices in that regard, although not without difficulties, particularly as regards the first of his metacriteria, that is, “objectively phenomenological,” as he himself appears to acknowledge. We share his belief that, with due caution, these difficulties are not insurmountable, as our remarks on the relevance of psychophysical data in the target article make clear.

Morsella et al. raise questions germane to constitutive issues when they ask us about the very relevant but seldom discussed “encapsulation” attribute of conscious contents they have defined, as well as about the “something it is like” locution from Thomas Nagel's 1974 paper. That locution, thus truncated, is sometimes taken to say something defining about consciousness. We do not think it does, but must defer discussion of these interesting issues. We, thus, turn to the four commentaries by authors directly identified with IIT, starting with the lead commentary by Tononi et al.

R4. The four commentaries by Tononi et al. and co-authors of his on IIT publications

R4.1. Lead commentary by Giulio Tononi and seven co-authors

The lead commentary's first specific critique of our treatment of IIT faults us for concentrating our analysis on the concepts of differentiation (axiom/postulate of information) and integration (axiom/postulate of integration), whereas IIT encompasses a set of five such essential pairs. Thus, Tononi et al.: “In the target article, the main refrain is that while integration and information may well be necessary requirements for consciousness, they are not sufficient. But who said they would be? […] IIT explicitly says the PSC [physical substrate of consciousness] must satisfy all five postulates, not just two.” Our characterization of IIT as underconstrained is not, however, predicated on confining ourselves to those two pairs. Instead, as we explain in the opening portions of section 2 of our target article, we base it on IIT's identification of integrated information as implemented in its algorithm for computing Φ-max with consciousness, for which we give several verbatim quotes from IIT publications. It is this identification, we claim, that casts far too wide a net across the universe of candidate conscious systems to allow it to catch only the actually conscious ones. Because, nevertheless, Tononi et al. insist on making appeal to the IIT axioms their principal bulwark against our critique, we add an analysis of their attempt to place IIT on an axiomatic footing at the end of this response to their commentary.

Regarding our target article's actual line of criticism, in order to ensure that it was understood that our critique concerns first and foremost the just mentioned computational heart of IIT in its identification with consciousness, we provided, in the second paragraph of section 2 of our target article, a capsule summary of the method by which IIT computes Φ. To avoid having to explain the specialized technical terminology of IIT, which varies across versions of IIT, we used the expression “information transfer” as a verbal shorthand for causal change within a system, and particularly for the difference, under perturbation, between the cause–effect behaviour of partitioned and unpartitioned system subsets. Our repeated reference to the behaviour of subsets under perturbation in that paragraph should have made it clear that no specialized sense of either “information” or “transfer” except for causal change, and certainly no transfer of messages – a term which is nowhere to be found in our treatment, whether in the Shannon or any other sense – was implied by this informal usage.

We should have anticipated that advocates of IIT, given the highly specialized sense in which the concept of information figures in IIT, would seize on this verbal shorthand of ours as a means of wholesale dismissal. With this clarification, we stand by our capsule summary of the bare bones essentials of the IIT computational method, no other part of which met with objections either in the lead commentary or in the three additional commentaries whose first authors have co-authored IIT publications (i.e., Albantakis, Haun, and Massimini et al.).

Similarly, in characterizing the generic category of dynamical networks, which, for short, we labelled “efficient networks” – of which we claim all networks with high Φ are instances – as possessing “high capacity for efficient global information transfer,” the locution “global information transfer” refers ultimately to nothing other than their particular causal behaviour under perturbation. “Global” here refers to the crucial fact that such systems “causally act back on themselves” in a comprehensive manner, which is what the IIT formalism has been designed to pick out, whereas the term “efficient” indicates their doing so with causal economy, which increases Φ (see footnote 1). The context in which we introduce the construct and the literature we cite in so doing should have made this clear, but we provide this clarification, given that Tononi et al. do not properly relate our characterization to the operations by which Φ is computed in IIT.

The matter is of central importance for the assessment of IIT's claim to embody a theory of consciousness. The entirety of the claim that IIT captures “subjectivity” as a defining attribute of consciousness rests on identifying it with this property of a comprehensive “causal acting back on itself” on the part of the physical substrate of consciousness, because this “acting back on itself” is the key to and basis of the “intrinsicality” that IIT identifies with subjectivity and codifies in its Intrinsicality axiom and corresponding postulate. Thus, the lead commentary (p. 44): “Intrinsicality means that every experience is subjective – it is for the subject of experience, from its own private, intrinsic perspective, rather than for something extrinsic to it.” But no “subject of experience” is defined by the IIT formalism: There is nothing in it that corresponds to the phenomenological notion of an experience being for a subject or present to a subject, let alone the Cartesian cogito. The claim of “intrinsicality” (qua postulate) rests only on this: That by examining the system's behaviour alone, under a sufficiently rich set of specified perturbations, it is possible to identify a domain or part of the system that is distinguished by maximally acting back on itself under these perturbations. It, thus, in a manner of speaking, “defines itself” under these manipulations.

The fact that “defines itself” is a reflexive grammatical construction implies nothing more about selfhood, subjectivity, or “intrinsic perspective” than the fact that under temperature perturbations those parts of a building that are heated by a thermostat-controlled heating system are picked out “intrinsically” (i.e., “define themselves”) by their behaviour alone vis-à-vis temperature. The reflexive constructions by which our grammar conveys such circumstances imply nothing about “subjectivity,” “for a subject,” “private,” or “perspective” in the case of the building, any more than in the case of the brain, or any other system that acts back on itself in this particular way. This “acting back on itself” can be as simple as the photodiode arrangement that Oizumi, Albantakis, and Tononi (2014) declared to be conscious in the publication that defines IIT 3.0. In that case, the crucial “acting back on itself” is supplied by the one-bit memory latch that is part of the arrangement. That suffices to deliver a positive Φ score as well as make the grammatical reflexivity apt, but it does not even touch on the subjectivity that is a defining property of consciousness, which, we would argue, is a structure that IIT is not suited to capture (see below).

By equating “subjectivity,” “existing for itself,” and so on with “intrinsicality” defined in the manner just explicated, IIT brings within its compass a number of candidate conscious systems whose conscious status the authors of the lead commentary attempt to disavow. Among them are large-scale electrical power grids and human social networks, in addition to the many absurdly simple systems, like the photodiode arrangement, they are willing to attribute consciousness to. In the case of power grids, of course “it all depends on the nature of the units, on their fan-in and fan-out, and on the level of noise,” as the lead commentary says. Therefore, why not apply IIT by actually measuring the integrated information of, say, the US power grid by at least a proxy method (given the intractability of the IIT formalism for almost all real-world applications), instead of resting content with the intuition that “Most likely, power grids should harbor little hope of being conscious”? We look forward to seeing the results of such an exercise conducted with a proxy measure of causal behaviour appropriate to power grids, and similarly for other systems such as our gene-expression networks, the internet, and social networks. We predict that in each case the effort will yield an estimate of Φ substantially in excess of that which sufficed to qualify the photodiode arrangement of IIT 3.0 as conscious (Oizumi et al., 2014).

Consider, then, the grounds on which the lead commentary authors attempt to disavow “group mind”: “a society to which I belong cannot be conscious simply because I am conscious, which excludes from existence any superset that includes my PSC (no ‘group mind’)”. This argument at first blush might seem plausible given the IIT “postulate of exclusion.” It violates, nevertheless, the norm prescribed by the IIT formalism, because, adhering to it strictly, we do not know what PSC (physical substrate of consciousness) is claiming to be conscious in this instance! IIT requires us to ascertain what that substrate is by finding that part of the system as a whole that maximizes Φ. The system as a whole includes all network parts that are causally connected to one another. For a human body that includes a vast and multifaceted assembly of networks, including neural, immune, metabolic, and hormonal ones. They are all causally interconnected with one another by intricate feedforward and feedback causal pathways, and thus are necessarily part of what defines the system as a whole. Even if we stop there, which as we shall presently see we cannot do, it is on this body-wide system that the IIT formalism directs us to calculate Φ, and not on some part of this causal network favoured by our extra-IIT intuitions regarding the relevant object of inquiry.

But we cannot even stop at that body-wide system, because human bodies engage in causally consequential interactions with one another that not only form social networks (Milgram, 1967), but also networks that exhibit the kind of connectivity that leads one to expect substantial magnitudes of Φ were the IIT formalism to be applied to them (Boccaletti et al., 2006). Until that has been done, claims to the effect that “I am conscious” are undecidable – by the formal principles of IIT – with respect to which part of this vastly ramified physical system as a whole is voicing its conscious status by using the first-person pronoun. For all we know it might be the highly integrated gene-expression network serving our immune system or the social interconnectivity of human beings (today capped by the efficient network called the world-wide web), that is speaking to us in the claims of one or another author of the lead commentary to be conscious.

Needless to say, we do not believe any of this to be the case. We are merely explicating the consequences of the way IIT asks us to conduct the search for the physical substrate of consciousness. Until the formal IIT calculus has been applied to the actually existing causal structures within which we are enmeshed there is no way of knowing (by IIT standards) whether, say, Tononi is conscious and the social system of which he is a part is not, or whether his nervous system and body have simply served as a convenient output pathway – unconscious by the postulate of exclusion – by which the social system of which he is a part is voicing its conscious status. By IIT principles, such questions should be answered by calculating Φ for the system as a whole, and not by extra-IIT intuitions about what physical substrate is expressing itself in this way.

Regarding our point that a subjective “point of view” or “projective perspectival origin” is a necessary attribute of consciousness that is missing from IIT, the lead commentary claims that IIT is capable of defining such a point of view, citing Haun and Tononi (2019), a matter we address in our response to Haun, who also makes this claim. Finally, before turning to the axioms, Tononi et al. misunderstand the referent of “integrated information” where we say that it is “a hypothetical construct in search of a unique and well-defined formal definition and measure.” This was not meant to single out IIT's particular bid for such a measure, nor even the IIT-related efforts to design more tractable means to compute the same, but refers to the generic concept of integrated information itself, which IIT is not alone in pursuing. Hence our reference in this connection is not only to Tegmark's taxonomy, but also to “complexity theory.” Whether IIT has, in fact, found a unique measure for the difference that partitioning a mechanism makes in its cause–effect repertoire, a matter internal to its particular formal framework, remains to be seen (see footnote 2). The confidence with which IIT's claims about consciousness are asserted has in any case not varied with successively abandoned distance measures (Kullback-Leibler divergence, Earth Mover's distance).

We conclude our response to the lead commentary by addressing its complaint that we have not taken the IIT phenomenological “axioms” seriously in our critique. In this regard, our favourable reference to Bayne's 2018 article on the matter was intended to obviate the need for a longer discussion. To our knowledge, Bayne's concerns have not been seriously addressed in any primary IIT publication. Here, we echo Bayne's criticisms and supplement them with the following considerations.

The history of phenomenology and of psychology as well as work on consciousness since at least the 1970s in philosophy and neuroscience demonstrate the difficulty of distilling a set of “axioms” from phenomenological reflection, let alone a complete set of such axioms, at least if one intends one's generalizations to impose substantive constraints on a theory. The attempted “axiomatization” of IIT roundly illustrates this difficulty. What lends its axioms an air of indubitability is their formulation at a level of generality that robs them of the specificity needed to constrain a theory of consciousness. Of how many referents besides an experience can it not be said that they are structured (axiom of composition), or that they “are the specific way they are” (axiom of information), or unitary (axiom of integration), or that they are definite, that they “contain what they contain, neither less nor more” (axiom of exclusion)? Strictly speaking, it is only the axiom of intrinsicality – that “experience is subjective” – that is clearly consciousness-specific, and we have already covered how the IIT construct “intrinsicality,” qua postulate, fails to define subjectivity.

Added to this over-generality of the axioms is the fact, noted already by Bayne, as well as by Negro, that even if we take the IIT “axioms” to reflect certain widely accepted phenomenological truisms (and, depending on their interpretation, they can be taken to do so), this is very far from securing the case for IIT's postulates. It is the postulates that bear on IIT's mechanistic account of consciousness and its method for computing Φ; so without deriving the postulates from the axioms, the bearing of the latter on the method is moot. That is, unlike geometrical axioms and postulates, IIT's “axioms” and “postulates” do not have the same logical status; rather, the “postulates” provide a kind of conjectural interpretation of the “axioms” tailored to fit the formalism of the method. The rigour lent to IIT by the use of such language is apparent only.

It is in virtue of their underspecification that the IIT axioms seem undeniable and obvious. As Bayne points out, proposals for more precisely formulated, nuanced, and substantive characterizations of such putative consciousness-invariants come at the expense of controversy, and the indubitability vanishes. Yet, by lending the axioms, via underspecification, the appearance of being elevated above the controversies that have long surrounded serious phenomenological efforts, and by identifying them, without formal warrant, with the corresponding postulates, doubts concerning the postulates can be portrayed as unreasonable doubts about the obvious. We illustrate the anomaly of this situation by means of the crucial axiom of exclusion as follows.

The phenomenological data reflected in the axiom of exclusion are by no means inconsistent with the denial of the postulate of exclusion. For aught we can tell via introspection, a conscious super-system could have individually conscious parts all of which are none the wiser. That a consciousness is definite and contains no more and no less than what it does, which is what the axiom says, is fully compatible with its containing conscious parts that it does not experience as such (but rather, say, in terms of various qualitative distinctions) as well as with its being a conscious part of a larger conscious super-system. Moreover, positing this is, as these things go, perhaps less metaphysically bizarre than what the postulate of exclusion entails. If some relatively simple entities can be conscious, as the logic of IIT tells us, why would they not keep their individual consciousness even as they combine to make conscious super-systems?

By the latched photodiode norm of IIT, an individual bacterium is bound to have a non-zero Φ score, and hence to be conscious to some extent. If, as is technologically feasible, we could move it in and out of a microbiome with a higher Φ score than that of the bacterium itself, are we to seriously imagine that its consciousness would flicker on and off as it is moved in and out? [Footnote 3] This, at least, is certain: The fact that it would thus flicker in and out is hardly indubitable and obvious in the way that the proposition that an experience is “definite, that it contains what it contains, neither less nor more” (axiom of exclusion) is indubitable. The exclusion postulate, by contrast, is not only not determined by its corresponding phenomenological axiom, it has some of the most metaphysically bizarre consequences of anything in consciousness theory, as just noted.

The formal hiatus between axioms and postulates noted by both Bayne and Negro, the fact that postulates do not follow deductively from the axioms, undermines any suggestion that by questioning the postulates one is thereby questioning obvious truisms about consciousness. [Footnote 4] Our critique of IIT recognizes this less than compelling connection between IIT axioms and the formalism by which IIT assesses a system's integrated information, identified with consciousness, and measured by Φ. Our critique, therefore, moves on the postulate, indeed on the formal method, side of that hiatus, as underscored by our thumbnail sketch of that method, and leaves the axioms as the rhetorical embellishment of IIT that they on closer inspection turn out to be. It is unproblematic to accept some version of all of the axioms as statements of truisms about consciousness (and, in most cases, not only consciousness). But, as we, in effect, argue extensively in the target article, the postulates of IIT do not offer the “best” explanation (see footnote 4) of these truisms as truisms specifically about consciousness.

R4.2. Larissa Albantakis

We would have no problem with the IIT information axiom if, as Albantakis states, it “merely asserts that experience is specific, not generic,” explicated as its being “the particular way it is.” This “may seem like a truism,” Albantakis acknowledges, but immediately goes on to assert that “it nevertheless implies the possibility of a myriad of experiences that differ from the current one, all being the particular way they are.” [Footnote 5] This “nevertheless implies” is quite problematic if it is taken to mean, as Albantakis and a long line of IIT publications going all the way back to Tononi and Edelman (1998) apparently do, that the informativeness of an experience to the person who is having that experience is attributable to experiences that the person is not having at the time, in fact to a myriad of such at-the-time-non-experienced experiences (see also our response to Brette above).

This theoretical commitment, which serves as one of the foundation stones of IIT as a theory of consciousness, is difficult to understand as anything other than a conflation of the informativeness of an experience being had with the logistics of state transitions in a probabilistic model of causal network operations such as that employed in IIT. In such a probabilistic model of a causal system, there is a sense in which its transition probability matrix as a whole (in the limit) conditions state transitions. [Footnote 6] As beneficiaries of such transitions, assuming for the sake of argument that they occur exactly as in the IIT model, human consciousness dwells in the results of such causal operations – the experience itself – without being privy to the operations “behind the scenes” that bring them about (Kihlstrom, 1996; Morsella et al., 2020; Velmans, 1991).

It is, therefore, the contents of an accomplished transition, rather than the logistics of its genesis, that must define the informativeness of an experience to the one who is having it, and not the myriad non-experienced experiences invoked by IIT, excluded from consciousness as they are by virtue of our having the experience we are having. The only currently unrealized patterns out of the many available in a system's transition probability matrix that, even in theory, may contribute to the actual contents of an experience we are, in fact, having are those defined by points of that matrix traversed by the system in its past experiential history, provided the occurrence of those states effected more or less durable changes in the physical properties of the system itself. Such operations, needless to say, are not available to a system built, as in IIT, of memoryless units. That is how the issue of memory entered our discussion of informativeness, in which we employed the standard usage of the term memory in psychology and neuroscience, which refers to the way a system's activity alters, more or less lastingly, the system itself, typically by altering the response characteristics of its constituent elements.

That is what makes the literature we cite in that connection relevant to understanding the informativeness of experience as it actually occurs in conscious creatures such as ourselves, and therefore to our critique of “informativeness” as construed in IIT. That literature explores how the significance (informativeness) of current experience depends on past experience in creatures whose physical substrate of consciousness consists of other than memoryless elements.

Given that humans are such creatures, and that the lead commentary insists that “the only viable scientific avenue is to develop a theory that adequately accounts for the presence and properties of our consciousness – for its quality and quantity – based on its physical substrate in our brain, and to extrapolate from there,” it follows that IIT's claims regarding “informativeness” based on networks of memoryless units do not, in any non-trivial sense, bear on the informativeness of a given human experience, because the units that compose the physical substrate of the latter are equipped with memory at a variety of temporal scales. We conclude that the IIT idea that the informativeness of a current experience is based on or somehow involves the myriad experiences that are excluded by having that experience is an error of principle embedded in its very foundations.
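
The contrast between memoryless units and units equipped with memory can be made concrete with a toy sketch. It is an illustration only, not IIT's formalism and not a model of any neural element: the transition probabilities in `FIXED_TPM`, the `PlasticUnit` class, and its update rule are all hypothetical choices made for the example. Two units are driven through different histories that end in the same current state; the memoryless unit then behaves identically in both cases, whereas the unit whose response characteristics were altered by its history does not.

```python
# Toy illustration (all names and numbers are assumptions for this sketch).
# P(next state = 1 | current state), fixed for the memoryless unit.
FIXED_TPM = {0: 0.2, 1: 0.8}


class MemorylessUnit:
    """Next-state probability depends only on the current state."""

    def __init__(self):
        self.state = 0

    def p_next_on(self):
        return FIXED_TPM[self.state]

    def observe(self, new_state):
        # Past states leave no trace in the unit itself.
        self.state = new_state


class PlasticUnit:
    """Hypothetical unit whose response characteristics drift with its history."""

    def __init__(self, rate=0.1):
        self.state = 0
        self.tpm = dict(FIXED_TPM)  # private copy that will be modified
        self.rate = rate

    def p_next_on(self):
        return self.tpm[self.state]

    def observe(self, new_state):
        # Nudge the probability attached to the state just left toward
        # the outcome that actually followed it (a simple, assumed rule).
        prev = self.state
        self.tpm[prev] += self.rate * (new_state - self.tpm[prev])
        self.state = new_state


def run(unit, history):
    """Drive a unit through a history and return P(next = 1) afterwards."""
    for s in history:
        unit.observe(s)
    return unit.p_next_on()


# Two different pasts that end in the same current state (0).
history_a = [1, 1, 1, 1, 0]
history_b = [0, 0, 0, 0, 0]

same = run(MemorylessUnit(), history_a) == run(MemorylessUnit(), history_b)
differs = run(PlasticUnit(), history_a) != run(PlasticUnit(), history_b)
print("memoryless units agree:", same)
print("plastic units differ:", differs)
```

In this sketch the only way a past state can bear on what follows from the present one is by having changed the unit itself, which is the sense of memory used in the argument above.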

A final terminological note in the margin: Albantakis puts quotation marks around “access” when referring to our ordinary language use of the word in our treatment, apparently attributing some special significance to our use of that word. Lest Albantakis should have misunderstood us to allude to the “access consciousness” distinction introduced by Ned Block, we hasten to disavow any such connection or intention. That distinction plays no role in our critique. Apart from this possibility, the quotation marks, along with Albantakis's focus on that word, are hard to understand.

R4.3. Marcello Massimini et al.

Massimini et al. accurately portray the challenge our “systems consolidation” interpretation of the integration properties of our cerebral equipment poses to IIT. Their attempt to meet that challenge on the basis of results obtained in different conditions and impairments of consciousness employing the perturbation complexity index (PCI) is problematic on multiple counts, however. First, there is the general problem encumbering all empirical tests of IIT, namely whether the proxy measure is a valid stand-in for Φ. There are three grounds for doubting this:

(1) The PCI settles for a grain in space and time chosen for the convenient measurement instrumentation that is available at those levels of “graining” rather than after an assessment of Φ at all levels, as IIT stipulates. The authors of the lead commentary themselves violate this stricture when they ask us to “Consider the grain of our own PSC: is it neurons, micro-columns, or minicolumns? Is it over microseconds, milliseconds, or seconds?”. Why start at the level of neurons, and why stop at the level of minicolumns, and similarly for time, when IIT itself requires all levels of graining to be canvassed in order to find Φ? If we are to take IIT seriously, it is not acceptable to substitute, when convenient for the sake of argument or measurement, extra-IIT intuitions regarding function for the formal commitments of IIT.

(2) We cite Mediano et al. (2019b) to the effect that “measures that share similar theoretical properties can behave in substantially different ways, even on simple systems.” To spell this out in words that bring out the methodological problem for proxy measures: Minor differences in proxy measures can cause major differences in outcome. Without a comparison of the proxy measure and integrated information as measured by Φ in the target system itself we do not at this point know whether any proxy measure provides even an approximation of Φ.

(3) In raising objections to the way our target article subjects the combination of differentiation with integration that plays a central role in IIT to particularly close scrutiny, authors of the lead commentary note that “IIT explicitly says the PSC must satisfy all five postulates, not just two. So countless substrates may score high on information integration, but only maxima of Φ can be a PSC” (italics added). But the PCI proxy measure is based on exactly the two attributes of differentiation and integration we highlight (see the title of the Massimini et al. commentary). According to Tononi himself (a co-author on the proxy measure studies Massimini et al. cite), this leaves room for countless false positives in applying such a measure to the assessment of consciousness.

Given these basic problems encumbering IIT proxy measures in general, and the PCI more specifically, there is no assurance that the results of the studies Massimini et al. cite in fact reflect what a full and formal assessment of Φ in the same circumstances would have disclosed. But let us assume, for the sake of argument, that they have happened upon an adequate stand-in for Φ nevertheless. That by no means disposes of the potential confound between consciousness and the logistics of information storage that we suggest encumbers those studies, particularly because those storage logistics involve the very combination of differentiation with integration that the PCI measures. The reason is this: Dreams (and hallucinations under sedation) are recallable, and thus stored. We certainly do not recall every dream we have on waking up, but that does not mean that they are not stored, as we learn in instances where, even days after having had a dream we did not recall on waking from it, we encounter, in real life, a concrete reminder of something that figured in the dream, and that triggers recall of a dream of which, without that reminder, we would know nothing. Preservation of idiosyncratic detail across years in thematic dreams unrelated to the dreamer's everyday world betokens the same.

Similarly, for ketamine anaesthesia: At issue is not the subjects' ability to “retain memory of external events occurring during sedation” as Massimini et al. claim, but the fact that subjects, post-sedation, recall experiences that transpired during sedation. That is how we know that subjects experience hallucinations while cut off from the world by the sedative. Being recalled means that those experiences were stored. As these examples show, the required dissociation between consciousness and the function we propose for the high-Φ organization of our cerebral apparatus is by no means easy to attain. As we noted in our target article, evidence like that cited by Massimini et al. cannot be taken to support IIT until such a dissociation is known to have been achieved in the sorts of cases in question.

Beyond these basic considerations, the details of how dreaming and non-rapid eye movement (non-REM) sleep are related to the operation of the cerebral-cortex-wide efficient network organization that we suggest serves systems consolidation must await studies that take the full complexity of the sleep phenomenon into account, a complexity usefully illustrated by the kind of findings reviewed in brief in the Devor et al. and the Zadra and Levitin commentaries. The fact that memory consolidation is “topped off” during parts of non-REM sleep – the proverbial advantage conferred by “sleeping on” a memorization task – does not mean that consolidation as such is dependent on that sleep stage (during which PCI is lower than in REM sleep). Consolidation is obviously operational in the waking state itself. According to the hypothesis that identifies the high-Φ organization of our cerebral equipment with systems consolidation for the coherence of memory storage rather than with consciousness itself, the cortex is free to allocate aspects of the consolidation process to whatever phase of the diurnal cycle can make a contribution in that regard, as in the case of the ripple activity of non-REM sleep (the conscious content of which sleep stage, moreover, is hardly nil as Devor et al. note, to complicate matters further). In sum, there is a long way to go before the kind of studies Massimini et al. cite can be considered to provide anything like an adequate test of the claims of IIT.

R4.4. Andrew Haun

The basic claims of IIT's approach to the experience of space and spatial extension are contained in the opening paragraph of the Haun commentary. They rely on three component claims, regarding phenomenology, topology, and physical realization. We shall comment on all three briefly, beginning with the confounding of the first two in the Haun and Tononi treatment of 2019. In place of actual, first-hand, phenomenology, that publication presents the reader with an exercise in what might be called “guided imagery.” Guided by what? By the concepts and primitives of mereology and the topology of spatial extension (“connection,” “fusion,” “inclusion,” etc.). These are presented, in the paper's introduction, as simple examples that make the essential elements of mereotopology intuitively accessible, but from the outset, the approach is problematic.

To wit, how could even cursory first-hand phenomenology fail to disclose that human experience presents space as extended in three dimensions, rather than as a two-dimensional “extended canvas” on which its contents are “painted, image after image” (Haun and Tononi, 2019, p. 3, italics added)? [Footnote 7] How could primary phenomenology fail to disclose that the experienced content of both visual and haptic space consists of distinctly shaped three-dimensional objects rather than “spots”? And what kind of phenomenology delivers the datum that “a spot always overlaps itself” (Haun and Tononi, 2019, p. 14), a notion directly derived from the “reflexivity” property that figures in mereotopology? Apparently, these phenomenological claims originate in the formalisms of mereotopology and mereology, as applied to surface extension more specifically. [Footnote 8] That the spatial content of experience fulfils formal criteria for spatial extendedness is trivial. But the experience of spatial extension requires more, namely a point of view; and that, in turn, requires a projective geometrical frame, which is not recoverable from the framework Haun and Tononi deploy [Footnote 9] (cf. our concluding paragraph below).

It was the need to do justice to primary phenomenology in its own right and as a resource for consciousness theory, as well as to avoid the risk of making a pre-conceived theory into a Procrustean bed, that motivated us to point to psychophysics as both a model and a source of data, given that its dependent variable is typically what subjects actually experience, thus making it relevant to consciousness theory. Haun is, of course, right in noting that we misconstrued IIT's “difference that makes a difference” by equating it with the JND of psychophysics, and we stand corrected on that point. The larger point, however, remains, namely the need for genuine primary phenomenology to inform consciousness theory, and the availability of psychophysical data to constrain theorizing.

A striking instance of IIT's failure to avail itself of easily accessible constraints from these sources is its proposal that the physical substrate of our experience of space and spatial extension is to be found in the spatially organized sensory maps of early sensory cortices, both visual and somatosensory. Thus, Haun and Tononi (2019): “grid-like areas, primarily located in posterior cortex, specify cause-effect structures that correspond in a one-to-one manner to the experience of space” (p. 32). And the Haun commentary, with reference to that paper: “IIT can account, in a highly detailed manner, for spatial phenomenology, demonstrating that topological features of spatial experience are identical to the features of the cause-effect structures specified by the kinds of networks that compose early sensory cortices” (opening paragraph).

The toy network on the basis of which this identity claim is made can be said to “model” those cortical areas in only the most rudimentary manner. A linear row of 8 units with only next neighbour interactions captures nothing specific to these early cortical sensory areas that is not also true of many other topographic maps that populate the central nervous system at various levels, from spinal cord to prefrontal cortex. There are, however, features of the early cortical sensory areas that rule them out as candidate physical substrates for our experience of spatial extension. Haun and Tononi envisage a quite specific correspondence between the detailed features of these maps and our spatial experience, because they propose to explain quite subtle (and controversial) anisotropies of visual phenomenology of our experience of space by detailed differences within and between these cortical sensory areas. Thus, “the precise features of the experience would depend on the precise pattern of connections” (Haun & Tononi, 2019, p. 32).

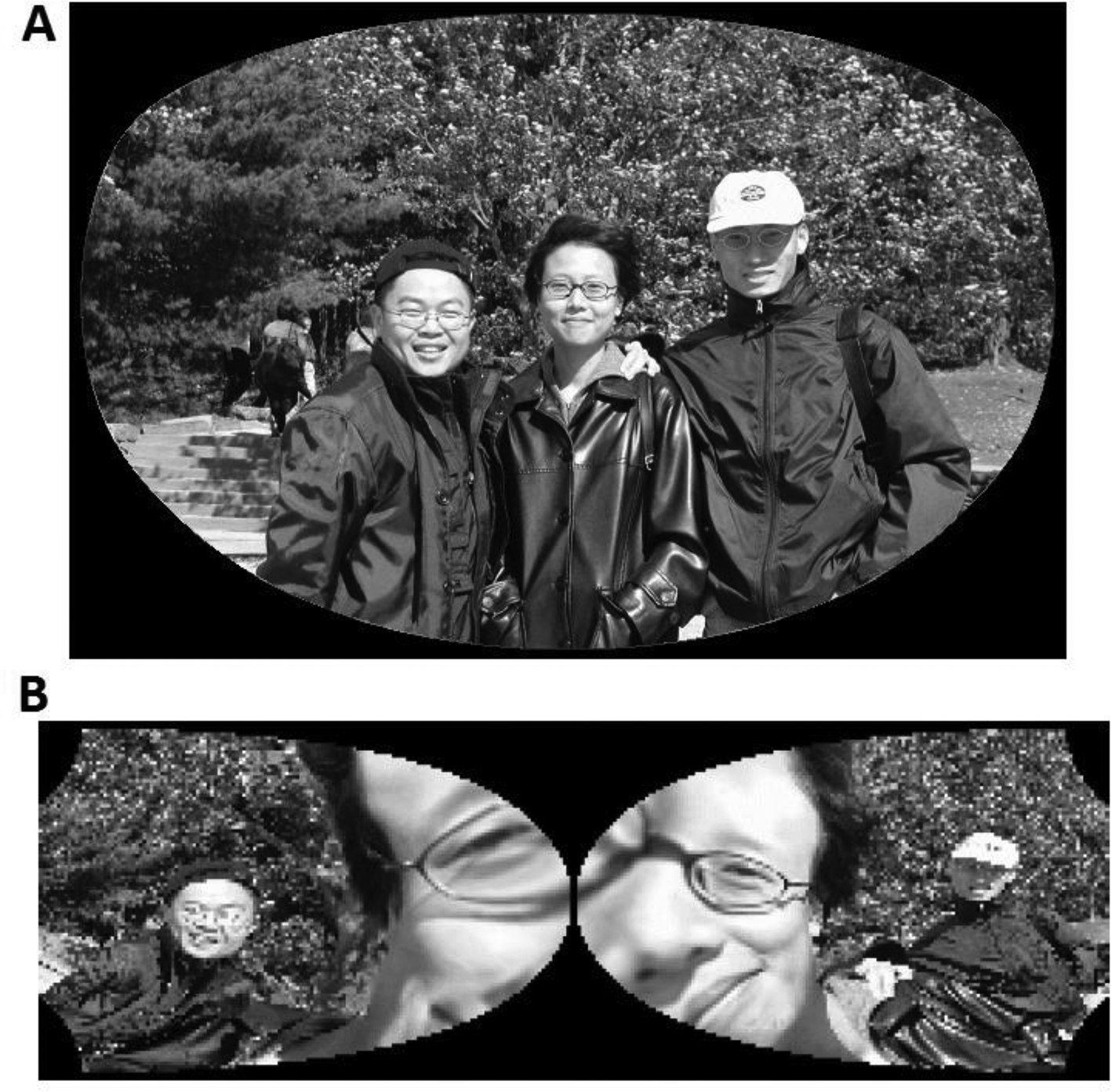

Yet there is a massive anisotropy, built into the basic anatomy and connectivity of every one of these areas, that completely dwarfs these subtle differences, yet is conspicuous by its absence from our experience of spatial extension, whether visual or haptic. Each of these cortical sensory maps, both visual and somatosensory, exhibits massive spatial magnification factors physically implemented in gross anatomy, a feature which in the case of vision concentrates sensory resolving power to central parts of the represented space (Schwartz, 1977, 1980), as illustrated in Feldman's commentary figure. Reflected in our experience of spatial extension this basic cortical sensory feature would make the snapshot of panel A in our figure appear as it does in panel B of the figure, which depicts the snapshot as it is represented spatially in primary visual cortex (Fig. R1).

Figure R1. Spatial representation of the visual field in primary visual cortex can be approximated by a log-polar mapping (Schwartz, 1977, 1980). (A) The original image, with line of sight directed to the lower left edge of the frame of the glasses covering the central subject's right eye. Image supplied with a mask approximating the shape of the human visual field. (B) The scene of the snapshot as it would appear to us if primary visual cortex (and with slight modifications any early cortical visual area) served as the physical substrate of our spatial experience. The two visual half-fields are allocated to separate hemispheres through the partial decussation at the optic chiasm, hence the split image of panel B. Figure adapted from Fig. 2.14 of Gennan Chen's doctoral dissertation (2002), with permission of the author and copyright holder.
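
The kind of distortion a log-polar approximation produces can be sketched numerically. The following is a minimal illustration, not the procedure used to generate the figure: it assumes the common complex-logarithm form w = log(z + a), where z = x + iy is a visual-field position in degrees, and the offset `a = 0.5` as well as the function names are hypothetical choices made for the example. Only the right half-field is handled, mirroring the allocation of half-fields to separate hemispheres.

```python
import cmath
import math


def cortical_position(x_deg, y_deg, a=0.5):
    """Map a right-half-field point (degrees) to w = log(z + a).

    Returns (u, v) in arbitrary cortical units. The offset `a` keeps the
    mapping finite at the point of fixation; its value here is an assumption.
    """
    if x_deg < 0:
        raise ValueError("this sketch covers the right half-field only")
    w = cmath.log(complex(x_deg, y_deg) + a)
    return w.real, w.imag


def magnification(ecc_deg, a=0.5):
    """Magnitude of dw/dz along the horizontal meridian: 1 / (ecc + a)."""
    return 1.0 / (ecc_deg + a)


# The same one-degree step in the visual field, near fixation and far from it.
near_step = cortical_position(1.0, 0.0)[0] - cortical_position(0.0, 0.0)[0]
far_step = cortical_position(21.0, 0.0)[0] - cortical_position(20.0, 0.0)[0]

print("cortical extent of 1 degree near fixation:", near_step)
print("cortical extent of 1 degree at 20 degrees:", far_step)
print("ratio:", near_step / far_step)
```

Under these assumptions, equal steps in the visual field occupy rapidly shrinking extents of the map as eccentricity grows, which is the magnification effect that panel B makes visible.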

Even a cursory exercise in phenomenology suffices to rule out our primary visual cortical area as the physical substrate of our experience of visual space, and substantial magnification factors incompatible with our visual experience persist up the visual hierarchy to the level of MT/V5 (Gattass et al., 2005; Kolster, Peeters, & Orban, 2010) and beyond. For the early somatosensory areas of posterior cortex the corresponding anatomical distortion of spatial extent – exactly the attribute Haun and Tononi attempt to model – pertains to the distal extremities and mouth region. The functional architecture of the visual and somatosensory areas of posterior cortex is accordingly ruled out as a plausible candidate for the anatomical substrate of our experience of spatial extension, contrary to what Haun and Tononi propose. A corollary of these circumstances is that the physical substrate of the visual as well as haptic experience of space is to be found elsewhere than early sensory areas of the cerebral cortex.

To conclude this excursion into the phenomenology and neurophenomenology of spatial experience stimulated by the Haun commentary, we note that Haun and Tononi propose that to equip a sensory space with “the feeling of a perspectival ‘center’ of space,” “a natural center may be provided by grids specifying body space, which would be heavily bound by relations to the middle of visual space, and by grids responsible for initiating movements near the body midline.” However, to find a suitable midpoint of a sensory space does not amount to equipping it with a perspectival viewpoint of the kind that performs a constitutive role in defining consciousness. Many definable spaces have a midpoint of some sort, but only conscious spaces have a viewpoint, which requires a projective frame and a “horizonal” structure. The midpoint of a space is one of the points of that space, and as we explained in brief in our target article, the viewpoint, in the sense of the origin of projection, must not exist as one of the points of the dimensional space for which it provides the perspective point. That rules out the Haun and Tononi solution to equipping their space with a perspectival viewpoint. Ultimately, what is missing from IIT is the subject of experience itself, which we already had occasion to comment on in relation to IIT claims regarding “intrinsicality.” [Footnote 10]

In sum, IIT's extension into the phenomenology of spatial experience is as problematic as other parts of the IIT project, for reasons that we believe can all be traced back to the fact that IIT is a theory of something other than consciousness, as developed at length in our target article.

R5. Concluding remarks

Again, as in our target article, we end by turning towards the future. A principal motive behind our proffering this analysis of IIT as mere critics instead of as advocates of an alternative theory has been our conviction that IIT not only fails to support its claims, but attempts to do so in ways that would hamper the search for a scientific account of consciousness unless those ways are clearly identified and rejected. This includes (1) presenting theory-driven constructs as if they were the deliverances of phenomenology, specifically by simply interpreting its axioms in terms of postulates motivated by the method for computing Φ, (2) adopting the external trappings of formal rigour (axioms and postulates) that lack the substance of formal rigour (for which see Bayne [2018], the commentary by Negro, and our response to the lead commentary), (3) presenting toy model exercises as bearing on neural structures (such as cerebellum, the thalamocortical complex, and “early cortical sensory areas”) without their actually doing so, (4) claiming that results based on proxy measures confirm a theory whose own principles, taken seriously, cast serious doubt on the validity of the proxy measure (see our response to Massimini et al.), and (5) disavowing, without having applied the theory to relevant instances, inconvenient – indeed, potentially disconfirmatory – consequences of the theory, such as conscious power grids (lead commentary), while (6) embracing absurdly simple systems (e.g., a two-element photodiode circuit) as possessed of consciousness (Oizumi et al., 2014). Such practices are not acceptable if consciousness theory is to be taken seriously as a part of science, and we therefore concur with Verschure's forceful statement in his commentary that what is at stake in our judgement of IIT is not just the validity of a proposed theory of consciousness, but the integrity of science as a path to knowledge.

Acknowledgment

We give special thanks to Gennan Chen for his permission to use the figure from his 2002 Dissertation.

Financial support

David Rudrauf was supported by the Swiss National Science Foundation, project funding in Mathematics, Natural Sciences and Engineering (division II) No. 205121_188753.

Conflict of interest

The authors declare no conflict of interest.
