1. Introduction

The Erdős–Rényi random graph, denoted by G(n, p), is obtained from the complete graph with vertex set [n] by independently retaining each edge with probability $p\in [0,1]$ and deleting it with probability $1-p$. We are interested in the size of the largest connected component $\mathcal C_{\max}$, or a typical connected component $\mathcal{C}(v)$ for $v\in[n]$. It is well known (see e.g. [Reference Bollobás7, Reference van der Hofstad32] or [Reference Janson, Luczak and Rucinski15] for more details) that, if $p=p(n)=\gamma/n$ for constant $\gamma$, then G(n, p) undergoes a phase transition as $\gamma$ passes 1:

- i. if $\gamma < 1$ (the subcritical case), then $|\mathcal C_{\max}|$ is of order $\log n$;
- ii. if $\gamma=1$ (the critical case), then $|\mathcal C_{\max}|$ is of order $n^{2/3}$;
- iii. if $\gamma > 1$ (the supercritical case), then $|\mathcal C_{\max}|$ is of order n.
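The three regimes can be observed numerically. The following is a minimal simulation sketch, not part of the original argument: the function name `largest_component_size`, the union-find bookkeeping and the choice $n=1000$ are illustrative assumptions, and a single sample per value of $\gamma$ only gives a rough impression of the orders $\log n$, $n^{2/3}$ and $n$.

```python
import math
import random
from collections import Counter


def largest_component_size(n, p, rng):
    """Sample G(n, p) and return the size of its largest connected component.

    Hypothetical helper for illustration; components are tracked with union-find.
    """
    parent = list(range(n))

    def find(x):
        # Find the root of x, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Retain each of the n(n-1)/2 edges independently with probability p.
    for u in range(n):
        for v in range(u + 1, n):
            if rng.random() < p:
                ru, rv = find(u), find(v)
                if ru != rv:
                    parent[ru] = rv

    sizes = Counter(find(x) for x in range(n))
    return max(sizes.values())


if __name__ == "__main__":
    rng = random.Random(0)
    n = 1000
    for gamma in (0.5, 1.0, 1.5):
        size = largest_component_size(n, gamma / n, rng)
        print(f"gamma={gamma}: |C_max|={size}, "
              f"log n={math.log(n):.1f}, n^(2/3)={n ** (2 / 3):.0f}, n={n}")
```

Averaging over many samples, or increasing n, would give a clearer picture; the quadratic loop over pairs is kept only for transparency.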

Motivated by the lack of a simple proof of (ii), Nachmias and Peres [Reference Nachmias and Peres24] used a martingale argument to prove that for any $n>1000$ and $A>8$,

and

They also gave bounds when $p = \frac{1+\lambda n^{-1/3}}{n}$ for fixed $\lambda\in\mathbb{R}$. The best known bound on the latter quantity is due originally to Pittel [Reference Pittel26], who showed that for p of this form,

converges as $A\to\infty$ to a specific constant, which is stated to be $(2\pi)^{-1/2}$ but should be $(8/9\pi)^{1/2}$ due to a small oversight in the proof. More details, and a stronger result that allows A and $\lambda$ to depend on n, are available in [Reference Roberts28]. Both [Reference Pittel26] and [Reference Roberts28] rely on a combinatorial formula for the expected number of components with exactly k vertices and $k+\ell$ edges, which is specific to Erdős–Rényi graphs and appears difficult to adapt to other models, together with analytic approximations.

We provide a new proof of asymptotics for $\mathbb{P}(|\mathcal{C}(v)|>An^{2/3})$ and $\mathbb{P}(|\mathcal{C}_{\max}|>An^{2/3})$ that combines the strengths of the results mentioned above:

- it gives accurate bounds for large A as $n\to\infty$;
- it allows A and $\lambda$ to depend on n;
- it uses only robust probabilistic tools and therefore has the potential to be adapted to other models of random graphs.

This is the purpose of our main theorem, which we now state.

Theorem 1.1 There exists

![]() $A_0>0$

such that if

$A_0>0$

such that if

![]() $A=A(n)$

satisfies

$A=A(n)$

satisfies

![]() $A_0\le A = o(n^{1/30})$

and

$A_0\le A = o(n^{1/30})$

and

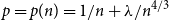

![]() $p=p(n)=1/n+\lambda/n^{4/3}$

with

$p=p(n)=1/n+\lambda/n^{4/3}$

with

![]() $\lambda = \lambda(n)$

such that

$\lambda = \lambda(n)$

such that

![]() $|\lambda|\leq A/3$

, then for sufficiently large n and any vertex

$|\lambda|\leq A/3$

, then for sufficiently large n and any vertex

![]() $v\in[n]$

, we have

$v\in[n]$

, we have

and

for some constants $0<c_1\le c_2<\infty$.

Although our methods are not accurate enough to give the correct constant factor in the asymptotic, identified in [Reference Roberts28], we believe that the substantially more robust approach is worth the small sacrifice in precision. Indeed, the probabilistic arguments in [Reference Nachmias and Peres24] have been adapted to critical random d-regular graphs by Nachmias and Peres [Reference Nachmias and Peres23], the configuration model with bounded degrees by Riordan [Reference Riordan27], and more recently a particular model of inhomogeneous random graphs by the first author and Pachon [Reference De Ambroggio and Pachon11].

At the end of Sections 3 and 4 (see Remarks 3.7 and 4.15) we will comment further on the problem of finding the right constant in the asymptotic using methods similar to the ones in this paper. We believe that it may be possible but would require significantly more technical work. We also remark that Nachmias and Peres [Reference Nachmias and Peres24] gave specific values of n and A for which their bounds hold, namely $n>1000$ and $A>8$. We could do the same, but it would again require significantly more technical details given the more precise nature of our bounds.

We remark that our proofs of the upper bounds in Theorem 1.1 are particularly straightforward, perhaps even more so than those in [Reference Nachmias and Peres24], despite giving a much more accurate bound. A key part of the argument will be the following simple ballot-type result, which may be of independent interest.

Lemma 1.2 Fix $n\in\mathbb{N}$. Let $X_1,\dots,X_n$ be $\mathbb{Z}$-valued random variables and suppose that the law of $(X_1,\dots,X_n)$ is invariant under rotations (it may depend on n). Define $S_0 = 0$ and $S_t = \sum_{i=1}^{t}X_i$, for $t\in [n]$. Then for any $j\in\mathbb{N}$,

\begin{equation*}\mathbb{P}(S_t>0\ \forall t\in [n],\,S_n=j)\leq \frac{j}{n}\mathbb{P}(S_n=j).\end{equation*}

Our proofs of the lower bounds in Theorem 1.1 will be more complicated than those for the upper bounds, although they still use only robust probabilistic techniques such as a generalised ballot theorem, Poisson approximations for the binomial distribution and Brownian approximations to random walks. In future work we intend to demonstrate the adaptability of our new approach by applying our methods to other random graph models. As the first step in this direction, for applications of Lemma 1.2 to a random intersection graph, an inhomogeneous random graph and percolation on a d-regular graph, see [Reference De Ambroggio10].

Structure of the paper. We start by introducing ballot-type results in Section 2, where we prove Lemma 1.2 and a corollary which will be the main tool to obtain the upper bounds in Theorem 1.1. We also state a generalised ballot theorem due to Addario-Berry and Reed [Reference Addario-Berry and Reed2] that will be used for our lower bounds. Subsequently, in Section 3 we prove the upper bounds in (a) and (b) of Theorem 1.1, whereas the corresponding lower bounds will be proved in Section 4.

Notation. We write $\mathbb{N}_0 = \mathbb{N}\cup\{0\}$, $[n]=\{1,2,\ldots,n\}$, and $[\![a,b]\!] = [a,b]\cap{\mathbb{Z}}$. The abbreviation i.i.d. means ‘independent and identically distributed’. The empty sum is defined to be 0, and the empty product is defined to be 1. In particular we use the convention that $\sum_{i=n+1}^n a_i$ is zero, for any n and any sequence $(a_i)$. For brevity we simply write A rather than A(n), $\lambda$ instead of $\lambda(n)$, and p in place of $p(n)=1/n + \lambda n^{-4/3}$. We will often write c to mean a constant in $(0,\infty)$ and use c many times in a single proof even though the constant may change from line to line.

1.1. Related work

Besides his Proposition 2, which gives asymptotics for $\mathbb{P}(|\mathcal C_{\max}|>An^{2/3})$ in the case of constant (large) A and $\lambda$, Pittel [Reference Pittel26] includes several other results which we make no attempt to rework. These include asymptotics for $\mathbb{P}(|\mathcal C_{\max}|< an^{2/3})$ when a is small. Nachmias and Peres [Reference Nachmias and Peres24] also gave a simple but inaccurate upper bound on this quantity, and it would be interesting to give an intuitive probabilistic proof of more accurate asymptotics. Pittel’s paper is partially based on an earlier article by Luczak, Pittel and Wierman [Reference Łuczak, Pittel and Wierman20].

For G(n, p) outside the critical scaling window, i.e. when $\lambda$ is not bounded in n, $n^{2/3}$ is not the most likely size for the largest component of the graph, and therefore our results (while still true, at least provided $|\lambda| \le A/3 = o(n^{1/30})$) appear less natural than those by Nachmias and Peres [Reference Nachmias and Peres22], Bollobás and Riordan [Reference Bollobás and Riordan8] or Riordan [Reference Riordan27].

A local limit theorem for the size of the k largest components (for arbitrary k) was given by Van der Hofstad et al. [Reference van der Hofstad, Kager and Müller34]. See also Van der Hofstad et al. [Reference van der Hofstad, Kliem and van Leeuwaarden36], where similar results to those established by Pittel [Reference Pittel26] are proved in the context of inhomogeneous random graphs.

Aldous [Reference Aldous3] used a breadth-first search algorithm to explore G(n, p) for p within the critical window and showed that the sizes of the largest components, if rescaled by $n^{2/3}$, converge (in an appropriate sense) to some limit, which he described in detail. The same type of argument has been used by Van der Hofstad [Reference van der Hofstad, Janssen and van Leeuwaarden33] to investigate critical SIR epidemics. The work of Aldous was then developed by Addario-Berry, Broutin and Goldschmidt [Reference Addario-Berry, Broutin and Goldschmidt1] who showed that the rescaled components themselves converge to metric spaces characterised by excursions of Brownian motion with parabolic drift, decorated by a Poisson point process.

There are several other models that share similar properties with the near-critical Erdős–Rényi graph. For instance, there are many critical models whose component sizes, when suitably rescaled, converge to the lengths of excursions of Brownian motion with parabolic drift just as for the Erdős–Rényi graph. Some examples include inhomogeneous random graphs (see e.g. [Reference Bhamidi, van der Hofstad and van Leeuwaarden5] and [Reference Bhamidi, van der Hofstad and van Leeuwaarden6]), the configuration model (see [Reference Dhara, van der Hofstad, van Leeuwaarden and Sen13], [Reference Joseph16] and [Reference Riordan27]), and quantum random graphs (see [Reference Dembo, Levit and Vadlamani12]).

In another direction we mention [Reference O’Connell25], where a large deviations rate function is provided for the size of the maximal component divided by n, valid for the $G(n,\gamma/n)$ model with $\gamma>0$. For a very recent work in this direction, see [Reference Andreis, König and Patterson4].

Finally, the results of [Reference Roberts28] were used to show the existence of times when a dynamical version of the Erdős–Rényi graph has an unusually large connected component. Related results about the structure of dynamical Erdős–Rényi graphs were given by Rossignol [Reference Rossignol29].

2. Ballot-style results

Let $X_1,\dots,X_n$ be i.i.d. random variables taking values in $\{-1,1\}$, with $\mathbb{P}(X_i=1)=\mathbb{P}(X_i=-1)=1/2$, and let $S_t = \sum_{i=1}^{t}X_i$. In its simplest form the ballot theorem concerns the probability that $S_t$ stays positive for all times $t\in [n]$, given that $S_n=k \in \mathbb{N}$, and says that the answer is $k/n$; see e.g. [Reference Addario-Berry and Reed2, Reference Kager17, Reference Konstantopoulos19, Reference van der Hofstad and Keane35] and references therein. However, we will be interested in evaluating probabilities of the following type:

where $k\geq 1$ and $X_1,\dots,X_n$ are i.i.d. random variables taking values in $\{-1,0,1,2,\dots\}$. A possible solution might be to apply the following generalised ballot theorem.

Theorem 2.1 (Addario-Berry and Reed [Reference Addario-Berry and Reed2]) Suppose X is a random variable satisfying $\mathbb{E}[X]=0$, $\text{Var}(X)>0$, $\mathbb{E}[X^{2+\alpha}]<\infty$ for some $\alpha >0$, and X is a lattice random variable with period d (meaning that dX is an integer random variable and d is the smallest positive real number for which this holds). Then given independent random variables $X_1,X_2,\dots$ distributed as X with associated partial sums $S_t = \sum_{i=1}^{t}X_i$, for all j such that $0\leq j =O\left(\sqrt{n}\right)$ and such that j is a multiple of $1/d$ we have

This result will indeed be useful in the proof of the lower bounds in our Theorem 1.1. However, for the upper bound we will need a result that holds when j is much larger than $\sqrt{n}$. Our Lemma 1.2 shows that the upper bound remains true more generally. We now aim to prove that result.

Fix $n\in \mathbb{N}$. Let $X = (X_1,\dots,X_n)$ be random variables taking values in $\mathbb{Z}$. Define $S_0 = 0$ and $S_t = \sum_{i=1}^{t}X_i$ for all $t\in [n]$. Given $r\in [n]$, define the rotation of $S=(S_0,S_1,\dots,S_n)$ by r as the walk $S^{r}=(S_0^{r},S_1^{r},\dots,S_n^{r})$ corresponding to the rotated sequence $X^{r} = (X_{r+1},\dots,X_n,X_1,\dots,X_r)$. That is,

- if $0\leq t\leq n-r$, then $S_t^{r} = S_{t+r}-S_r = \sum_{i=r+1}^{t+r}X_i$;
- if $n-r<t\leq n$, then $S_t^{r} = S_n+S_{t+r-n}-S_r = \sum_{i=r+1}^{n}X_i+\sum_{i=1}^{t+r-n}X_i$.

In particular, $S_n^{r}=\sum_{i=r+1}^{n}X_i+\sum_{i=1}^{r}X_i=S_n$ (for every $r\in [n]$) and $S^n=S$.

Definition 2.2 We say that $r\in [n]$ is favourable if $S_t^{r}>0$ for every $t\in [n]$.

The following lemma contains the key observation needed to prove Lemma 1.2.

Lemma 2.3 Fix $j\in \mathbb{N}$. If $S_n=j$, then

\begin{equation*}|\{r\in [n] \,{:}\, r \text{ is favourable}\}|\leq j.\end{equation*}

Proof. The idea is simply that between each two favourable indices, the partial sum must increase by at least one. To see this, let $1\leq I_1<\dots <I_L\leq n$ denote the indices (if any) such that $I_k$ is favourable for $1\leq k\leq L$. We need to show that $L\leq j$. Observe that for each $1\leq k\leq L-1$, since $I_k$ is favourable and $X_1,\ldots,X_n$ are integer-valued, we have $S^{I_k}_t\ge 1$ for all $t\in[n]$; in particular $S_{I_{k+1}-I_k}^{I_k}\geq 1$. For the same reason, $S_{(I_1+n)-I_L}^{I_L}\geq 1$. Consequently

\begin{equation*}S_n= \sum_{k=1}^{L-1}S_{I_{k+1}-I_k}^{I_k}+S_{(I_1+n)-I_L}^{I_L} \ge \sum_{k=1}^{L-1} 1 + 1 = L.\end{equation*}

But our assumption is that $S_n=j$, so we must have $L\le j$ as required.
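The counting statement just proved (at most $S_n$ favourable rotations) is easy to test on concrete sequences. The sketch below is an illustration only: the helper names `rotated_walk` and `favourable_indices` are hypothetical, the sequence is stored as a Python list with `xs[i-1]` playing the role of $X_i$, and the step set $\{-1,0,1,2\}$ is an arbitrary choice.

```python
import random


def rotated_walk(xs, r):
    """Return (S_0^r, ..., S_n^r), the partial sums of (x_{r+1}, ..., x_n, x_1, ..., x_r)."""
    rotated = xs[r:] + xs[:r]
    walk = [0]
    for x in rotated:
        walk.append(walk[-1] + x)
    return walk


def favourable_indices(xs):
    """Return all r in {1, ..., n} with S_t^r > 0 for every t in {1, ..., n}."""
    n = len(xs)
    return [r for r in range(1, n + 1)
            if all(s > 0 for s in rotated_walk(xs, r)[1:])]


if __name__ == "__main__":
    rng = random.Random(1)
    for _ in range(1000):
        xs = [rng.choice([-1, 0, 1, 2]) for _ in range(12)]
        j = sum(xs)
        count = len(favourable_indices(xs))
        # At most j favourable rotations when S_n = j >= 1, and none when
        # S_n <= 0, because S_n^r = S_n for every r.
        assert count <= max(j, 0)
    print(favourable_indices([1, -1, 2, 1]))
```

A random search of this kind cannot replace the proof, but it is a quick sanity check of the indexing conventions for $S^r$.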

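Proof of Lemma 1.2. For any $r\in[n]$, since $(X_1,X_2,\ldots,X_n)$ is invariant under rotations,

\begin{equation*}\mathbb{P}(S_t>0\ \forall t\in [n],\,S_n=j) = \mathbb{P}(S_t^r>0\ \forall t\in [n],\,S_n^r=j),\end{equation*}

and since $S_n^r=S_n$, we obtain that

\begin{equation*}\mathbb{P}(S_t>0\ \forall t\in [n],\,S_n=j) = \mathbb{P}(r \text{ is favourable},\,S_n=j).\end{equation*}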

Summing over $r\in[n]$ and applying Lemma 2.3 we have

\begin{align*}n\mathbb{P}(S_t>0\ \forall t\in [n],\,S_n=j) & = \sum_{r=1}^{n}\mathbb{E}[\unicode{x1D7D9}_{\{r \text{ is favourable}\}}\unicode{x1D7D9}_{\{S_n=j\}}] \\
& = \mathbb{E}\bigg[\unicode{x1D7D9}_{\{S_n=j\}}\sum_{r=1}^{n}\unicode{x1D7D9}_{\{r \text{ is favourable}\}}\bigg]\\
&\leq \mathbb{E}\left[\unicode{x1D7D9}_{\{S_n=j\}}j\right]= j\mathbb{P}(S_n=j)\end{align*}

which completes the proof.
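The inequality $n\mathbb{P}(S_t>0\ \forall t\in [n],\,S_n=j)\leq j\mathbb{P}(S_n=j)$ obtained above can be checked exactly for small n by enumerating all step sequences. The sketch below assumes i.i.d. steps (a special case of rotation invariance) with a finite step distribution given as a dictionary; the function name `ballot_check` and the two example distributions are illustrative choices, not taken from the paper.

```python
from fractions import Fraction
from itertools import product


def ballot_check(pmf, n):
    """Return {j: (lhs, rhs)} for every attainable j >= 1, where

    lhs = P(S_t > 0 for all t in [n], S_n = j) and rhs = (j / n) * P(S_n = j),
    for a walk with i.i.d. steps distributed according to pmf (a dict
    mapping integer step values to exact Fraction probabilities).
    """
    stay_positive = {}
    total = {}
    for steps in product(pmf, repeat=n):
        prob = Fraction(1)
        for x in steps:
            prob *= pmf[x]
        partial, positive = 0, True
        for x in steps:
            partial += x
            if partial <= 0:
                positive = False
        # After the loop, partial equals the final value S_n.
        total[partial] = total.get(partial, 0) + prob
        if positive:
            stay_positive[partial] = stay_positive.get(partial, 0) + prob
    return {j: (stay_positive.get(j, Fraction(0)), Fraction(j, n) * total[j])
            for j in total if j >= 1}


if __name__ == "__main__":
    half, quarter = Fraction(1, 2), Fraction(1, 4)
    # Simple symmetric walk: the classical ballot theorem gives equality.
    for j, (lhs, rhs) in sorted(ballot_check({-1: half, 1: half}, 6).items()):
        print(j, lhs, rhs)
    # Steps in {-1, 0, 2}: only the inequality lhs <= rhs is guaranteed.
    for j, (lhs, rhs) in sorted(ballot_check({-1: half, 0: quarter, 2: quarter}, 6).items()):
        print(j, lhs, rhs)
```

Exact rational arithmetic is used so that the comparison of the two sides is not affected by rounding.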

The following corollary will be used to prove the upper bounds of Theorem 1.1.

Corollary 2.4 Fix $n\in\mathbb{N}$ and let $(X_i)_{i\ge 1}$ be i.i.d. random variables taking values in $\mathbb{Z}$, whose distribution may depend on n. Let $h\in \mathbb{N}$, and suppose that $\mathbb{P}(X_1=h)>0$. Define $S_t = \sum_{i=1}^{t}X_i$ for $t\in\mathbb{N}_0$. Then for any $j\geq 1$ we have

\begin{equation*}\mathbb{P}(h+S_t>0\ \forall t\in [n],\, h+S_{n}=j)\leq \mathbb{P}(X_1=h)^{-1}\frac{j}{n+1}\mathbb{P}(S_{n+1}=j).\end{equation*}

Proof. Let $X_0$ be an independent copy of $X_1$. Define $S_t^*=X_0+S_t$ for $0\leq t\leq n$. Then

\begin{align}&\mathbb{P}(h+S_t>0\ \forall t\in [n],\, h+S_{n}=j)\nonumber\\[2pt]
&=\mathbb{P}(X_0=h)^{-1}\mathbb{P}(h+S_t>0\ \forall t\in [n],\,h+S_{n}=j,\,X_0=h)\nonumber\\[2pt]
&=\mathbb{P}(X_1=h)^{-1}\mathbb{P}(S_t^*>0\ \forall t\in [n],\,S_{n}^*=j,\,S_0^*=h)\nonumber\\[2pt]
&\leq \mathbb{P}(X_1=h)^{-1}\mathbb{P}(S_t^*>0\ \forall t\in \{0\}\cup [n],\,S_{n}^*=j).\end{align}

Now since $(S_0^*,S_1^*,\dots,S_n^*)\overset{d}{=}(S_1,S_2,\dots,S_{n+1})$, applying Lemma 1.2 we obtain that

\begin{align*}\mathbb{P}(S_t^*>0\ \forall t\in \{0\}\cup [n],\,S_{n}^*=j)&=\mathbb{P}(S_t>0\ \forall t\in [n+1]\,,S_{n+1}=j)\\[2pt]
&\leq \frac{j}{n+1}\mathbb{P}(S_{n+1}=j),\end{align*}

and substituting this into (1) gives the result.

3. Proof of the upper bounds in Theorem 1.1

A main ingredient in our analysis is an exploration process, which is a procedure to sequentially discover the component containing a given vertex, and which reduces the study of component sizes to the analysis of the trajectory of a stochastic process. Such exploration processes are well known, dating back at least to [Reference Martin-Löf21], and several variants exist. Our description closely follows the one appearing in [Reference Roberts28]; see also [Reference Nachmias and Peres24].

Let G be any (undirected) graph with vertex set [n], and let $v\in [n]$ be any given vertex. Fix an ordering of the n vertices with v first. At each time $t\in \{0\}\cup [n]$ of the exploration, each vertex will be active, explored or unseen; the number of explored vertices will be t whereas the (possibly random) number of active vertices will be denoted by $Y_t$. At time $t=0$, vertex v is declared to be active whereas all other vertices are declared unseen, so that $Y_0=1$. At each step $t\in [n]$ of the procedure, if $Y_{t-1}>0$ then we let $u_t$ be the first active vertex; if $Y_{t-1}=0$, we let $u_t$ be the first unseen vertex (here the term first refers to the ordering that we fixed at the beginning of the procedure). Note that at time $t=1$ we have $u_1=v$. Denote by $\eta_t$ the number of unseen neighbours of $u_t$ in G and change the status of these vertices to active. Then, set $u_t$ itself explored. From this description we see that:

- $Y_t=Y_{t-1}+\eta_t-1$, if $Y_{t-1}>0$;
- $Y_t=\eta_t$, if $Y_{t-1}=0$.
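The exploration procedure described above can be written out directly. The following sketch is illustrative only: the function names `explore` and `component_size`, the adjacency-dictionary input and the use of the natural vertex order (with v moved to the front) are assumptions made here, and the graph is assumed to have no self-loops.

```python
def explore(adj, v):
    """Run the exploration from v and return the list (Y_0, ..., Y_n).

    adj maps each vertex to the set of its neighbours (undirected, no loops).
    """
    n = len(adj)
    order = [v] + [u for u in sorted(adj) if u != v]  # fixed ordering, v first
    rank = {u: i for i, u in enumerate(order)}
    status = {u: "unseen" for u in adj}
    status[v] = "active"
    ys = [1]  # Y_0 = 1
    for _ in range(n):
        # u_t is the first active vertex if one exists, else the first unseen one.
        wanted = "active" if ys[-1] > 0 else "unseen"
        u_t = min((u for u in adj if status[u] == wanted), key=rank.get)
        unseen_neighbours = [w for w in adj[u_t] if status[w] == "unseen"]
        for w in unseen_neighbours:
            status[w] = "active"
        eta = len(unseen_neighbours)
        # Y_t = Y_{t-1} + eta_t - 1 if Y_{t-1} > 0, and Y_t = eta_t otherwise.
        ys.append(ys[-1] + eta - 1 if ys[-1] > 0 else eta)
        status[u_t] = "explored"
    return ys


def component_size(ys):
    """Size of the component of v: the first time t at which Y_t = 0."""
    return ys.index(0)


if __name__ == "__main__":
    # Path 1 - 2 - 3 together with an isolated vertex 4, explored from v = 2.
    adj = {1: {2}, 2: {1, 3}, 3: {2}, 4: set()}
    ys = explore(adj, 2)
    print(ys, component_size(ys))
```

In this formulation the component of v has more than k vertices exactly when $Y_t>0$ for all $t\in[k]$.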

We now specialise to the Erdős–Rényi random graph, i.e. we now take $G=G(n,p)$. Let us denote by $U_t=n-Y_t-t$ the number of unseen vertices in G(n, p) at time t, and define $\mathcal{F}_0 = \{\Omega,\emptyset\}$ and $\mathcal{F}_t = \sigma(\{\eta_j\,{:}\,1\leq j\leq t\})$ for $t\in [n]$. Then for $t\in [n]$, given $\mathcal{F}_{t-1}$, we see that $\eta_t\sim \textrm{Bin}(U_{t-1},p)$. Since $U_t\leq n-t$, we can couple the process $(\eta_i)_{i \in [n]}$ with a sequence $(\tau_i)_{i \in [n]}$ of independent $\textrm{Bin}(n-i,p)$ random variables such that $\tau_i\geq \eta_i$ for all i. It follows that, for any $k\in[n]$,

\begin{align}\mathbb{P}(|\mathcal{C}(v)|> k) &= \mathbb{P}(Y_t>0\ \forall t\in [k])\nonumber\\
&=\mathbb{P}\bigg(1+\sum_{i=1}^{t}(\eta_i-1)>0\ \forall t\in [k]\bigg)\nonumber\\
&\leq \mathbb{P}\bigg(1+\sum_{i=1}^{t}(\tau_i-1)>0\ \forall t\in [k] \bigg).\end{align}

We would like to apply Corollary 2.4 to the sequence $(1+\sum_{i=1}^{t}(\tau_i-1))_{t\in [k]}$, and to this end we need to turn the latter process into a random walk with identically distributed increments. This is achieved in Lemma 3.1 below.

Lemma 3.1 There exists a finite constant c such that for any $k\in[n]$,

\begin{equation*}\mathbb{P}\bigg(1+\sum_{i=1}^{t}(\tau_i-1)>0\ \forall t\in [k] \bigg) \leq c\mathbb{P}\left(1+R_t>0\ \forall t\in [k],\, 1+R_{k}\ge \frac{k^2p}{2} - \frac{k}{n^{1/2}}\right),\end{equation*}

where $(R_t)_{t\geq 0}$ is a random walk with $R_0=0$ and i.i.d. steps each having distribution $\textrm{Bin}(n,p)-1$.

The idea behind this lemma is that by adding an independent Bin(i, p) random variable to $\tau_i$, we transform it into a Bin(n, p) random variable which forms one of the steps of the random walk $R_t$ appearing on the right-hand side. If the sum of the $\tau_i$ up to t remains positive then $R_t$, which is larger, must certainly also remain positive; but also the final value $R_k$ must be larger than the sum of the additional contributions from the Bin(i, p) random variables. A standard bound shows that these additional contributions are concentrated about their mean, which is approximately $k^2p/2$.
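The mechanism behind Lemma 3.1 can be checked exactly on a small instance. The sketch below is only an illustration: the values of `n`, `p` and `k` and the helper names `binom_pmf` and `convolve` are our own choices, not taken from the paper. It verifies that the law of an independent sum Bin(n-i, p) + Bin(i, p) coincides with Bin(n, p), and computes the mean of the added variables for comparison with $k^2p/2$.

```python
from fractions import Fraction
from math import comb

def binom_pmf(m, p):
    """Exact pmf of Bin(m, p) as a list indexed by the values 0..m."""
    return [comb(m, j) * p**j * (1 - p)**(m - j) for j in range(m + 1)]

def convolve(a, b):
    """pmf of the sum of two independent variables with pmfs a and b."""
    out = [Fraction(0)] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out

# Illustrative parameters (assumptions, chosen small so exact arithmetic is fast).
n, p = 12, Fraction(1, 12)

for i in range(1, n + 1):
    tau_i = binom_pmf(n - i, p)   # law of tau_i ~ Bin(n - i, p)
    b_i = binom_pmf(i, p)         # law of the added variable B_i ~ Bin(i, p)
    assert convolve(tau_i, b_i) == binom_pmf(n, p)

# Mean of B_1 + ... + B_k, printed next to the approximation k^2 p / 2.
k = 6
mean_extra = sum(i * p for i in range(1, k + 1))
print(mean_extra, k * k * p / 2)
```

The exact mean is $k(k+1)p/2$, which differs from $k^2p/2$ by $kp/2$; this is the same correction that appears in the choice of t in the proof of Lemma 3.1.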

We postpone the details until Section 3.1 and continue with the proof of the upper bounds in Theorem 1.1. By summing over the possible values of $R_k$, we can apply Corollary 2.4 with $h=1$ to the quantity on the right-hand side of Lemma 3.1: it is at most

\begin{equation}\frac{c}{k+1}\sum_{j=h(k,n)}^{(k+1)(n-1)} j \mathbb{P}\left( R_{k+1}=j\right),\end{equation}

where $h(k,n)=\lceil \frac{k^2}{2}p - \frac{k}{n^{1/2}} \rceil$, and the upper limit on the sum is due to the fact that $R_{k+1}\leq (k+1)(n-1)$ (because each step of $R_t$ is at most $n-1$, and in $R_{k+1}$ we are summing $k+1$ of them).

We now rewrite the above sum in a way that is easier to analyse, using the following elementary observation. If X is a random variable taking values in $\mathbb{Z}\cap(-\infty,N]$ for some $N\in \mathbb{N}$, then for any $h\ge1$, we have

\begin{align*}\mathbb{E}[X\unicode{x1D7D9}_{\{X\ge h\}}] = \mathbb{E}\Big[\sum_{i=1}^N \unicode{x1D7D9}_{\{i\le X\}}\unicode{x1D7D9}_{\{X\ge h\}}\Big]&= \mathbb{E}\Big[\sum_{i=1}^h \unicode{x1D7D9}_{\{X\ge h\}}\Big] + \mathbb{E}\Big[\sum_{i=h+1}^N \unicode{x1D7D9}_{\{X\ge i\}}\Big]\\
&= h\mathbb{P}(X\ge h) + \sum_{i=h+1}^N \mathbb{P}(X\ge i).\end{align*}
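The identity above can be sanity-checked in exact arithmetic. The following sketch uses a hypothetical toy distribution on $\{-2,\dots,4\}$ (the weights are arbitrary and not from the paper) and compares both sides for every integer h between 1 and N.

```python
from fractions import Fraction

def lhs(pmf, h):
    """E[X 1{X >= h}] for a pmf given as {value: probability}."""
    return sum(x * q for x, q in pmf.items() if x >= h)

def rhs(pmf, h, N):
    """h P(X >= h) + sum_{i=h+1}^{N} P(X >= i)."""
    def tail(i):
        return sum(q for x, q in pmf.items() if x >= i)
    return h * tail(h) + sum(tail(i) for i in range(h + 1, N + 1))

# Hypothetical toy law; X may take negative values, as in the statement.
weights = {-2: 1, -1: 2, 0: 3, 1: 4, 2: 3, 3: 2, 4: 1}
total = sum(weights.values())
pmf = {x: Fraction(w, total) for x, w in weights.items()}
N = max(pmf)

for h in range(1, N + 1):
    assert lhs(pmf, h) == rhs(pmf, h, N)
```

Only the values of X that are at least h contribute to either side, which is why the possibly negative part of the distribution plays no role.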

Applying this to $R_{k+1}$, and using that $h(k,n)/(k+1) \le k/n$ when n is large, we have

\begin{equation*}\frac{1}{k+1}\sum_{j=h(k,n)}^{(k+1)(n-1)} j \mathbb{P}\left( R_{k+1}=j\right) \le \frac{k}{n} \mathbb{P}(R_{k+1}\ge h(k,n)) + \frac{1}{k+1} \sum_{j=h(k,n)+1}^{(k+1)(n-1)} \mathbb{P}(R_{k+1}\ge j),\end{equation*}

and putting this together with (2), Lemma 3.1 and (3), we have shown that

\begin{equation*}\mathbb{P}\left(|\mathcal{C}(v)|>k \right)\leq \frac{ck}{n} \mathbb{P}(R_{k+1}\ge h(k,n)) + \frac{c}{k+1} \sum_{j=h(k,n)+1}^{(k+1)(n-1)} \mathbb{P}(R_{k+1}\ge j).\end{equation*}

The next two lemmas conclude the proof of the upper bound in part (a) of Theorem 1.1 by showing that, when we take $k=\lceil An^{2/3}\rceil$ with $A\ge1$, the right-hand side above is bounded by $cA^{-1/2}n^{-1/3}\exp\{-A^3/8+\lambda A^2/2-\lambda^2A/2\}$. Let

\begin{equation*}H(A,n) = h(\lceil An^{2/3}\rceil, n).\end{equation*}

Lemma 3.2 Suppose that $1\le A=o\left(n^{1/12}\right)$, $\lambda = o(n^{1/12})$ and $\lambda\le A/3$. There exists a finite constant c such that

\begin{equation*}\frac{\lceil An^{2/3}\rceil}{n} \mathbb{P}(R_{\lceil An^{2/3}\rceil+1}\ge H(A,n)) \leq \frac{c}{A^{1/2} n^{1/3}}e^{-A^3/8+\lambda A^2/2 - \lambda^2 A/2}.\end{equation*}

Lemma 3.3 Suppose that $1\le A=o\left(n^{1/12}\right)$, $\lambda = o(n^{1/12})$ and $\lambda\le A/3$. There exists a finite constant c such that

\begin{equation*}\frac{1}{\lceil An^{2/3}\rceil+1} \sum_{j=H(A,n)+1}^{(\lceil An^{2/3}\rceil+1)(n-1)} \mathbb{P}(R_{\lceil An^{2/3}\rceil+1}\ge j) \leq \frac{c}{A^2 n^{1/3}}e^{-A^3/8+\lambda A^2/2 - \lambda^2 A/2}.\end{equation*}

Since $R_{\lceil An^{2/3}\rceil+1}$ is simply a binomial random variable shifted by a constant, the proofs of Lemmas 3.2 and 3.3 are exercises in applying standard estimates to binomial random variables. We carry out the details in Section 3.1. Subject to these and the proof of Lemma 3.1, the proof of the upper bound in part (a) of Theorem 1.1 is complete.

The upper bound of part (b) in Theorem 1.1 is deduced from the upper bound in part (a) using the following standard procedure, used for example in [Reference Nachmias and Peres24]. For any $k\in[n]$, denote by

the number of vertices that are contained in components of size larger than k. If u is any fixed vertex in G(n, p), we have

and then taking $k=\lceil An^{2/3}\rceil$ and applying part (a), this is at most

as required. This concludes the proof for the upper bounds (a) and (b) in Theorem 1.1, subject to proving Lemmas 3.1, 3.2 and 3.3.
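
As a hedged sketch of the standard procedure just used, write $N_k$ for the number of vertices contained in components of size larger than k (the symbol $N_k$ is our own label, introduced only for this illustration). Since $|\mathcal{C}_{\max}|>k$ forces $N_k>k$, Markov's inequality and the exchangeability of the vertices give

\begin{equation*}\mathbb{P}(|\mathcal{C}_{\max}|>k) \le \mathbb{P}(N_k>k) \le \frac{\mathbb{E}[N_k]}{k} = \frac{n}{k}\mathbb{P}(|\mathcal{C}(u)|>k).\end{equation*}

With $k=\lceil An^{2/3}\rceil$ the prefactor satisfies $n/k\le n^{1/3}/A$, so a bound of the form $cA^{-1/2}n^{-1/3}\exp\{\cdots\}$ on $\mathbb{P}(|\mathcal{C}(u)|>k)$ turns into one of the form $cA^{-3/2}\exp\{\cdots\}$ with the same exponent.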

3.1. Proofs of Lemmas 3.1, 3.2 and 3.3

To prove Lemmas 3.1, 3.2 and 3.3 we will make use of the following two preliminary results on the concentration of binomial random variables about their means. The first of these results is Theorem 1.3 in [Reference Bollobás7], while the second is Theorem 2.1 in [Reference Janson, Luczak and Rucinski15].

Lemma 3.4 Let $S\sim \textrm{Bin}(n,p)$, and suppose that $np\geq 1$ and $1\leq h\leq (1-p)n/3$. Then

Lemma 3.5 Let $S\sim \textrm{Bin}(n,p)$ and define $\phi(x) = (1+x)\log(1+x)-x$ for $x\geq -1$. Then for every $t\geq 0$ we have that

- a. $\mathbb{P}(S\geq \mathbb{E}[S]+t)\leq \exp\{-\mathbb{E}[S]\phi(t/\mathbb{E}[S])\}\leq \exp\left\{-\frac{t^2}{2(\mathbb{E}[S]+t/3)}\right\}$;

- b. $\mathbb{P}(S\leq \mathbb{E}[S]-t)\leq \exp\left\{-\frac{t^2}{2\mathbb{E}[S]}\right\}$.
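
To see how the bounds of Lemma 3.5 compare with exact tail probabilities, the following sketch evaluates them numerically. The parameters `n = 200` and `mean = 4` are illustrative assumptions, and `pmf`, `upper_tail` and `lower_tail` are helper names of our own.

```python
from math import comb, exp, log

def pmf(n, p, j):
    """P(Bin(n, p) = j)."""
    return comb(n, j) * p**j * (1 - p)**(n - j)

def upper_tail(n, p, a):
    """Exact P(Bin(n, p) >= a)."""
    return sum(pmf(n, p, j) for j in range(max(a, 0), n + 1))

def lower_tail(n, p, a):
    """Exact P(Bin(n, p) <= a)."""
    return sum(pmf(n, p, j) for j in range(0, min(a, n) + 1))

def phi(x):
    """phi(x) = (1 + x) log(1 + x) - x, as in Lemma 3.5."""
    return (1 + x) * log(1 + x) - x

# Illustrative parameters (assumptions): S ~ Bin(200, 0.02), so E[S] = 4.
n, mean = 200, 4
p = mean / n

for t in range(1, mean + 1):
    exact_up = upper_tail(n, p, mean + t)
    first_bound = exp(-mean * phi(t / mean))            # part (a), first bound
    second_bound = exp(-t**2 / (2 * (mean + t / 3)))    # part (a), second bound
    assert exact_up <= first_bound <= second_bound
    exact_low = lower_tail(n, p, mean - t)
    assert exact_low <= exp(-t**2 / (2 * mean))         # part (b)
```

The check is not a proof, but it makes visible that the first bound in part (a) is the sharper of the two, which is why it is the one used in the proof of Lemma 3.6.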

We are now ready to start with the proofs of the lemmas stated in the previous section.

Proof of Lemma 3.1. We want to bound

\begin{equation*}\mathbb{P}\Big(1+\sum_{i=1}^{t}(\tau_i-1)>0\ \forall t\in [k] \Big)\end{equation*}

from above, where $\tau_i\sim \textrm{Bin}(n-i,p)$ are independent. We do this by adding extra terms to the sum $\sum_{i=1}^{t}(\tau_i-1)$ to create a random walk with identically distributed steps. To this end, let $(B_i)_{i\in [n]}$ be a sequence of independent random variables, also independent from $(\tau_i)_{i \in [n]}$, and such that $B_i\sim \textrm{Bin}(i,p)$ for every $i \in [n]$. Moreover, define $S_t = \sum_{i=1}^{t}B_i$ for $t\in [n]$. Let

\begin{equation*}P = \mathbb{P}\bigg(S_{k}\ge\frac{k^2}{2}p-\frac{k}{n^{1/2}}\bigg).\end{equation*}

Since $S_{k} \sim \textrm{Bin}\left(k(k+1)/2,p\right)$, an application of Lemma 3.5(b) with $t=kn^{-1/2} + kp/2$ yields that $P\geq c$ for some $c>0$. Now using the independence of $(\tau_i)_{i\in [n]}$ and $(B_i)_{i \in [n]}$ we obtain that

\begin{equation*}\mathbb{P}\bigg(1+\sum_{i=1}^{t}(\tau_i-1)>0\ \forall t \in [k] \bigg) =P^{-1}\mathbb{P}\bigg(1+\sum_{i=1}^{t}(\tau_i-1)>0\ \forall t \in [k],\, S_{k}\ge\frac{k^2}{2}p-\frac{k}{n^{1/2}}\bigg).\end{equation*}

Setting $R_t = \sum_{i=1}^{t}(\tau_i + B_i-1)$, we see that the last quantity is bounded from above by

so noting that the random variables $\tau_i+B_i$, $i\in [n]$, are i.i.d. with distribution $\textrm{Bin}(n,p)$ completes the proof.

Proof of Lemma 3.2. Write

and recall that

We want to use Lemma 3.4 to bound from above the quantity

where we recall that $(R_t)_{t\ge 0}$ is a random walk with $R_0=0$ and i.i.d. steps each having distribution $\textrm{Bin}(n,p)-1$. Letting $B_{j,p}$ be a binomial random variable with parameters j and p, setting $h := H-K\lambda/n^{1/3}$ (which is of order $A^2n^{1/3}$ since $\lambda\le A/3$) and observing that

applying Lemma 3.4 we obtain

\begin{align}\mathbb{P}(R_{K}\ge H) &= \mathbb{P}( B_{nK,p} \geq K+H)\nonumber\\[2pt]
&=\mathbb{P}( B_{nK,p} \geq nKp+H-K\lambda/n^{1/3})\nonumber\\[2pt]
&\leq \frac{(p(1-p)nK)^{1/2}}{h\sqrt{2\pi}}\exp\left\{-\frac{1}{2}\frac{h^2}{p(1-p)nK}+\frac{h}{p(1-p)nK}+\frac{h^3}{2(1-p)^3(nK)^3}\right\}\nonumber\\[2pt]
&\leq c \frac{K^{1/2}}{A^2n^{1/3}}\exp\left\{-\frac{1}{2}\frac{h^2}{p(1-p)nK}\right\}\nonumber\\[2pt]
&\leq \frac{c}{A^{3/2}}\exp\left\{-\frac{1}{2}\frac{h^2}{p(1-p)nK}\right\}.\end{align}

Define

Elementary estimates using the fact that $A=o(n^{1/12})$ and $\lambda=o(n^{1/12})$ show that

From (4) we therefore have, for large n,

\begin{align*}\frac{K-1}{n} \mathbb{P}(R_{K}\ge H) &\le c\frac{A}{n^{1/3}} \frac{1}{A^{3/2}}e^{-x(A,n,\lambda)^2/2}\\
&\le \frac{c}{\sqrt{A}n^{1/3}}\exp\left(-\frac{A^3}{8}+\frac{\lambda A^2}{2} - \frac{\lambda^2 A}{2} \right),\end{align*}

which completes the proof of Lemma 3.2.

Before we prove Lemma 3.3, we will need the following bound, which is an easy application of Lemma 3.5.

Lemma 3.6 Suppose that $B_{N,p}$ is a binomial random variable with parameters $N\ge 1$ and $p\in[0,1]$. Let $C\in(0,\infty)$ be constant. Then for all $x\in(0, C(Np)^{2/3}]$ we have that

\begin{equation*}\mathbb{P}\left(B_{N,p}\ge Np + x\right) \le c\exp\Big(-\frac{x^2}{2Np}\Big),\end{equation*}

where c is another finite constant.

Proof. Applying Lemma 3.5, we have

and since $\log(1+t)>t-t^2/2$ for every $t>0$,

\begin{align*}\mathbb{P}\left(B_{N,p}\ge Np + x\right) &\le \exp\Big(-Np\Big[\Big(1+\frac{x}{Np}\Big)\Big(\frac{x}{Np}-\frac{x^2}{2(Np)^2}\Big)-\frac{x}{Np}\Big]\Big)\\
&= \exp\Big(-Np\Big[\frac{x^2}{2(Np)^2} - \frac{x^3}{2(Np)^3}\Big]\Big)\\
&= \exp\Big(-\frac{x^2}{2Np}+\frac{x^3}{2(Np)^2}\Big),\end{align*}

which establishes the result with $c=\exp(C^3/2)$.

Proof of Lemma 3.3. Writing

we aim to bound

\begin{equation*}\frac{1}{K}\sum_{j=H+1}^{K(n-1)}\mathbb{P}(R_{K} \ge j)\end{equation*}

from above. We first note that

\begin{equation}\frac{1}{K}\sum_{j=H+1}^{K(n-1)}\mathbb{P}(R_{K} \ge j) \le \frac{1}{K}\sum_{j=H+1}^{\lfloor K^{2/3}\rfloor} \mathbb{P}(R_K\ge j) + n\mathbb{P}(R_K \ge K^{2/3}).\end{equation}

To bound the second term on the right-hand side of (5) observe that, since $A=o(n^{1/12})$ and $\lambda = o(n^{1/12})$, we have $K\ge nKp - K^{2/3}/2$ when n is large. Thus, when n is large,

\begin{align}n\mathbb{P}(R_K \ge K^{2/3}) &= n\mathbb{P}(B_{nK,p}\ge K + K^{2/3})\nonumber\\[3pt]
&\leq n\mathbb{P}(B_{nK,p}\ge nKp+K^{2/3}/2).\end{align}

Using the second inequality in part (a) of Lemma 3.5 we obtain

\begin{align}(6)&\le n \exp\left\{-\frac{K^{4/3}}{8(nKp+\frac{1}{6}K^{2/3})}\right\} \leq n\exp\left\{-cA^{1/3}n^{2/9}\right\}\end{align}

and for sufficiently large n we have that

Next, for the first term on the right-hand side of (5), note that

\begin{align}\frac{1}{K}\sum_{j=H+1}^{\lfloor K^{2/3}\rfloor} \mathbb{P}(R_K\ge j)&=\frac{1}{K}\sum_{j=H+1}^{\lfloor K^{2/3}\rfloor} \mathbb{P}(B_{nK,p}\ge K+j)\nonumber\\[3pt]
&=\frac{1}{K}\sum_{j=H+1}^{\lfloor K^{2/3}\rfloor} \mathbb{P}(B_{nK,p}\ge nKp+j-K\lambda n^{-1/3}).\end{align}

Since $A=o(n^{1/12})$ and $\lambda=o(n^{1/12})$, we have $K\lambda n^{-1/3}=o(K^{2/3})$, and therefore we may apply Lemma 3.6 to obtain

\begin{align*}(8)\le \frac{c}{K}\sum_{j=H+1}^{\lfloor K^{2/3} \rfloor}\exp\left\{-\frac{(j-K\lambda n^{-1/3})^2}{2nKp}\right\}\leq \frac{c}{\sqrt{K}}\mathbb{P}\left(G\ge \frac{H+1-K\lambda/n^{1/3}}{\sqrt{nKp}}\right),\end{align*}

where G denotes a Gaussian random variable with mean zero and unit variance. Recalling the standard bound $\mathbb{P}(G\geq t)\leq (t\sqrt{2\pi})^{-1}e^{-t^2/2}$, which is valid for every $t>0$, we obtain

\begin{equation*}\mathbb{P}\left(G\ge \frac{H+1-K\lambda/n^{1/3}}{\sqrt{nKp}}\right) \leq \frac{1}{\sqrt{2\pi}}\frac{\sqrt{nKp}}{H+1-K\lambda/n^{1/3}}\exp\Big(-\frac{(H+1-K\lambda/n^{1/3})^2}{2nKp}\Big).\end{equation*}
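
The standard Gaussian tail bound used here is easy to check numerically. The sketch below compares the exact tail, computed through the complementary error function, with the bound $(t\sqrt{2\pi})^{-1}e^{-t^2/2}$ at a few sample points; the function names and the chosen values of t are our own.

```python
from math import erfc, exp, pi, sqrt

def gaussian_tail(t):
    """P(G >= t) for a standard normal G, via the complementary error function."""
    return 0.5 * erfc(t / sqrt(2))

def mills_bound(t):
    """The upper bound (t sqrt(2 pi))^{-1} exp(-t^2 / 2), valid for t > 0."""
    return exp(-t * t / 2) / (t * sqrt(2 * pi))

# Arbitrary sample points; the bound is loose for small t and tight for large t.
for t in (0.1, 0.5, 1.0, 2.0, 4.0, 8.0):
    assert gaussian_tail(t) <= mills_bound(t)
    print(t, gaussian_tail(t), mills_bound(t))
```

The ratio of the two quantities approaches 1 as t grows, so little is lost by this step when the argument of the tail is large, as it is here for large A.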

An easy computation reveals that

and consequently we obtain

\begin{align*}\frac{c}{\sqrt{K}}\mathbb{P}\left(G\ge \frac{H+1-K\lambda/n^{1/3}}{\sqrt{nKp}}\right) \le \frac{c}{A^2n^{1/3}}\exp\left\{-\frac{A^3}{8}+\lambda\frac{A^2}{2}-\lambda^2\frac{A}{2}\right\},\end{align*}

as required.

Remark 3.7 Here we comment on the possibility of obtaining the correct constant factor in the upper bound of the asymptotic for $\mathbb{P}(|\mathcal{C}_{\text{max}}|>An^{2/3})$ using the above methodology. We will give a corresponding comment for the lower bound in Remark 4.15.

There are three places in our proof of the upper bounds stated in Theorem 1.1 where unspecified constants appear. One of these is from Lemma 3.1. The constant appearing within this result could be eliminated by amending the argument to use $k/n^\gamma$ rather than $k/n^{1/2}$, for example with $\gamma=5/12$, and strengthening our assumption on A appropriately to ensure that the error coming from Lemma 3.5 is smaller than our desired bound.

Another step requiring a more detailed analysis comes from the application of Lemma 3.4 in the proof of Lemma 3.2. Similarly to the first case above, this should be possible but would require stronger conditions on the relationship between A and $\lambda$, or error bounds that depend on $A/\lambda$.

Finally, a further constant appears when we apply Corollary 2.4 in (3). Finding the correct constant here would require a different approach; either an improvement to Corollary 2.4, or an attempt to apply other results about random walks to $(R_t)_{t\ge0}$. The latter option would be complicated by the fact that the step distribution of $R_t$ depends on n.

4. Proof of the lower bounds in Theorem 1.1

Let $v\in [n]$ be any vertex in G(n, p) from which we start running the exploration process described at the beginning of Section 3. We write $T_2 = \lceil An^{2/3}\rceil$; we will in due course also have a time $T_1$ which is smaller than $T_2$.

Recall from Section 3 that $\eta_{i}$ denotes the number of unseen vertices which become active during the ith step of the exploration process, and $Y_t=1+\sum_{i=1}^{t}(\eta_i-1)$ is the number of active vertices at step t of the procedure. Moreover, recall that

where $\mathcal{F}_i = \sigma(\{\eta_{1},\dots,\eta_i\})$ and $p=p(n)=1/n+\lambda n^{-4/3}$. We will start by proving the lower bound in part (a) of Theorem 1.1; that is, by bounding from below the probability

\begin{equation*}\mathbb{P}\left(|\mathcal{C}(v)|>T_2\right)=\mathbb{P}\left(1+\sum_{i=1}^{t}(\eta_i-1)>0\ \forall t \in [T_2]\right).\end{equation*}
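
The identity between the component size and the survival time of the exploration path can be illustrated by simulation. The sketch below is an assumption-laden illustration rather than the exploration process of Section 3 verbatim: the order in which active vertices are explored, the graph size `n = 300` and the random seed are our own choices. It samples G(n, 1/n), runs an exploration from every vertex, and checks that the first time the number of active vertices hits zero equals the component size.

```python
import random

def sample_gnp(n, p, rng):
    """Adjacency sets of an Erdos-Renyi graph G(n, p) on vertices 0..n-1."""
    adj = [set() for _ in range(n)]
    for u in range(n):
        for w in range(u + 1, n):
            if rng.random() < p:
                adj[u].add(w)
                adj[w].add(u)
    return adj

def explore(adj, v):
    """Explore from v; return the path Y_0, Y_1, ... up to its first zero."""
    unseen = set(range(len(adj))) - {v}
    active = [v]
    path = [1]                        # Y_0 = 1: only v is active
    while active:
        u = active.pop()              # vertex explored at this step
        new = adj[u] & unseen         # eta_i: unseen neighbours of u
        unseen -= new
        active.extend(new)
        path.append(path[-1] + len(new) - 1)
    return path

def component_size(adj, v):
    """Size of the component of v by a plain graph search, for comparison."""
    seen, stack = {v}, [v]
    while stack:
        u = stack.pop()
        for w in adj[u]:
            if w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen)

rng = random.Random(0)                # fixed seed, for reproducibility only
n = 300
adj = sample_gnp(n, 1 / n, rng)
for v in range(n):
    path = explore(adj, v)
    tau0 = len(path) - 1              # first t >= 1 with Y_t = 0
    assert path[-1] == 0 and all(y > 0 for y in path[:-1])
    assert tau0 == component_size(adj, v)
```

In particular $|\mathcal{C}(v)|>T$ holds exactly when the path stays positive up to time T, which is the event whose probability is bounded in the rest of this section.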

We note that the random variables $\eta_i$ are not independent, which makes our analysis more difficult. The first part of our argument, therefore, consists of replacing the $\eta_{i}$ with a sequence of independent binomial random variables which are easier to analyse. The idea is that $\eta_i$, which is the number of neighbours of our ith vertex that are unseen, is roughly $\textrm{Bin}(n-i,p)$, but the first parameter is slightly smaller due to the (random) number of active vertices that are present at the beginning of the ith step of the exploration process. If we can bound the number of active vertices above by some deterministic value K with high probability, then we can remove this source of randomness and obtain a sequence of independent increments in place of the $\eta_i$.

To this end, fix $K\in\mathbb{N}$ and suppose that $(\delta_i)_{i\in [T_2]}$ is a sequence of independent random variables with $\delta_i\sim \textrm{Bin}(n-K-i,p)$ and set $R_t = 1+\sum_{i=1}^{t}(\delta_i-1)$. We note that the definitions of $\delta_i$ and $R_t$ depend implicitly on K; sometimes for clarity we will write $\delta_i^{(K)}$ and $R_t^{(K)}$. We will soon fix $K=\lfloor n^{2/5}\rfloor$, but the following lemma works for any $K<n-T_2$. We postpone the proof, which constructs a coupling between $\eta_i$ and $\delta_i$, until Section 4.3.

Lemma 4.1 Suppose that $K+T_2<n$. Define $\tau_0 := \min\{t\geq 1:Y_t=0\}$, the first time at which the set of active vertices becomes empty. Then

Our next result shows that if we choose $K=\lfloor n^{2/5}\rfloor$, then we do not have to worry about the last probability on the right-hand side of (10).

Lemma 4.2 Let $\tau_0$ be as in the previous lemma. As $n\rightarrow \infty$,

The proof of Lemma 4.2, which follows easily from Lemma 3.5, is again postponed to Section 4.3.

Given (11), we can now fix $K=\lfloor n^{2/5}\rfloor$ and focus on providing a lower bound for

Observe that, although we now have a process with independent increments, obtaining a lower bound for (12) remains a non-trivial task, because the $\delta_i$ that are used to define $R_t$ are not identically distributed. We consider two options to produce a random walk with i.i.d. increments from $(R_t)_{t\in [T_2]}$. The first is to view $\delta_i$ as a sum of i.i.d. Bernoulli random variables, with $\delta_1$ summing more Bernoullis than $\delta_2$ and so on; and then to rearrange the same Bernoullis amongst sums $\delta_i^{\prime}$ that all have equal length. The second is simply to add an independent $\textrm{Bin}(K+i,p)$ random variable to $\delta_i$ for each i.

It turns out that neither of these two options works on its own. The first has problems if we try to cover too many values of i, since the more Bernoullis that we have to rearrange, the less accurate our estimates become. The second has problems when i is small, as the variance of the added $\textrm{Bin}(K+i,p)$ random variables is too large when our random walk is near the origin.

We therefore combine the two techniques. We take $T_1\in[T_2]$ and carry out the first strategy for times $t\in [T_1]$, and the second strategy for $t\in [\![ T_1, T_2]\!]$.

We note first that for any deterministic $H\in\mathbb{N}$ and $T_1\in[T_2]$,

\begin{align}&\mathbb{P}\big(R_t>0\ \forall t \in [T_2]\big)\nonumber\\[3pt] &\quad \geq \mathbb{P}\big(R_t>0\ \forall t\in [T_1],\,\, R_{T_1}\in [H,2H],\,\, R_t>0\ \forall t\in [\![ T_1, T_2]\!]\big)\nonumber\\[3pt]&\quad \ge \mathbb{P}\big(R_t>0\ \forall t\in [T_1],\,\, R_{T_1}\in [H,2H]\big)\mathbb{P}\big( R_t > 0\ \forall t\in [\![ T_1, T_2]\!] \,\big|\, R_{T_1}=H\big).\end{align}

We now fix $T_1 = 2\lfloor n^{2/3}/A^2\rfloor-1$ and $H = \lceil n^{1/3}/A\rceil$.

Proposition 4.3 There exists $c>0$ such that for sufficiently large n and A,

Proposition 4.4 There exists $c>0$ such that for sufficiently large n and A,

We will prove Proposition 4.3 in Section 4.1 and Proposition 4.4 in Section 4.2. For now we show how these results can be used to complete the proof of the lower bounds in Theorem 1.1.

Proof of lower bounds in Theorem 1.1. By Lemmas 4.1 and 4.2,

In light of (13), it then follows from Propositions 4.3 and 4.4 that

This concludes the proof of the lower bound in part (a) of Theorem 1.1. In order to prove the lower bound in part (b), we will need to use the fact that for any $\mathbb{N}_0$-valued random variable X,

This can be proved by applying the Cauchy–Schwarz inequality to $X\unicode{x1D7D9}_{\{X\ge 1\}}$.
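
A sketch of that Cauchy–Schwarz step, under the assumption that the fact in question is the usual second-moment bound $\mathbb{P}(X\ge 1)\ge \mathbb{E}[X]^2/\mathbb{E}[X^2]$ (which matches the numerator and denominator discussed below): since X takes values in $\mathbb{N}_0$, we have $X = X\unicode{x1D7D9}_{\{X\ge 1\}}$, and therefore

\begin{equation*}\mathbb{E}[X]^2 = \mathbb{E}\big[X\unicode{x1D7D9}_{\{X\ge 1\}}\big]^2 \le \mathbb{E}[X^2]\,\mathbb{E}\big[\unicode{x1D7D9}_{\{X\ge 1\}}^2\big] = \mathbb{E}[X^2]\,\mathbb{P}(X\ge 1).\end{equation*}

Dividing by $\mathbb{E}[X^2]$, when it is positive and finite, gives the bound in the assumed form.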

To proceed with the proof of the lower bound in part (b), let us denote by $X = \sum_{i=1}^{n}\unicode{x1D7D9}_{\{|\mathcal{C}(i)|\in [T_2,2T_2]\}}$ the number of vertices contained in components of size between $T_2$ and $2T_2$. Observe that $X\geq 1$ implies $|\mathcal{C}_{\max}|\geq T_2$. Therefore using (15) we obtain

For the numerator, we have

Next we bound the denominator from above. Given vertices $i,j\in [n]$, write $i\leftrightarrow j$ if there exists a path of open edges between i and j. Then we can write

where

\begin{equation*}S_1 = \mathbb{E}\bigg[\sum_{i=1}^{n}\sum_{j\neq i}\unicode{x1D7D9}_{\{|\mathcal{C}(i)|\in [T_2,2T_2] \}}\unicode{x1D7D9}_{\{|\mathcal{C}(j)|\in [T_2,2T_2] \}}\unicode{x1D7D9}_{\{i \nleftrightarrow j \}}\bigg]\end{equation*}

and

\begin{equation*}S_2 = \mathbb{E}\bigg[\sum_{i=1}^{n}\sum_{j\neq i}\unicode{x1D7D9}_{\{|\mathcal{C}(i)|\in [T_2,2T_2] \}}\unicode{x1D7D9}_{\{|\mathcal{C}(j)|\in [T_2,2T_2] \}}\unicode{x1D7D9}_{\{i \leftrightarrow j \}}\bigg].\end{equation*}

For $S_1$ we have

\begin{align*}S_1&\leq n^2\sum_{k=T_2}^{2T_2}\mathbb{P}\Big(|\mathcal{C}(1)|=k, 1\nleftrightarrow 2\Big)\mathbb{P}\Big(|\mathcal{C}(2)|\in [T_2,2T_2] \,\Big|\, |\mathcal{C}(1)|=k, 1\nleftrightarrow 2\Big)\\[3pt] &\leq n^2 \mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2] \right) \mathbb{P}\left(|\mathcal{C}(2)|\ge T_2\right).\end{align*}

For $S_2$ we have

\begin{equation*}\begin{split}S_2=\mathbb{E}\bigg[\sum_{i=1}^{n}\sum_{k=T_2}^{2T_2}\unicode{x1D7D9}_{\{|\mathcal{C}(i)|=k\}}\sum_{j\neq i}\unicode{x1D7D9}_{\{j\in \mathcal{C}(i)\}}\bigg]&\leq \mathbb{E}\bigg[\sum_{i=1}^{n}\sum_{k=T_2}^{2T_2}\unicode{x1D7D9}_{\{|\mathcal{C}(i)|=k\}}k\bigg]\\[3pt] &\leq 2T_2 n\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right).\end{split}\end{equation*}

Returning to (18) and recalling that $T_2 = \lceil An^{2/3}\rceil$, we obtain

\begin{multline*}\mathbb{E}[X^2]\leq n\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right) + n^2 \mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2] \right)\mathbb{P}\left(|\mathcal{C}(2)|\ge T_2\right)\\[3pt]+ 3An^{5/3}\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right).\end{multline*}
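
The constant $3A$ in the last term comes from the bound on $S_2$ once the ceiling in $T_2$ has been absorbed. A short check, under the assumption (not restated in this section) that $An^{2/3}\ge 2$, might read:

```latex
\begin{equation*}
2T_2\, n
  = 2\lceil An^{2/3}\rceil\, n
  \le 2\big(An^{2/3}+1\big)\, n
  \le 3An^{2/3}\cdot n
  = 3An^{5/3}.
\end{equation*}
```

The second inequality is equivalent to $2\le An^{2/3}$, which is where the assumption is used.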

By the upper bound in part (a) of Theorem 1.1,

and therefore

Substituting this and (17) into (16) and then applying (14), we obtain

\begin{align}\nonumber\mathbb{P}\left(|\mathcal{C}_{\max}|\geq \lceil An^{2/3} \rceil\right)&\geq\frac{n^{2}\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right)^2}{cAn^{5/3}\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right)}\\[3pt]&=c\frac{n^{1/3}}{A}\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right).\tag{19}\end{align}

Observe that

\begin{equation*}\mathbb{P}\left(|\mathcal{C}(1)|\in [T_2,2T_2]\right) = \mathbb{P}\left(|\mathcal{C}(1)|\geq T_2\right) - \mathbb{P}\left(|\mathcal{C}(1)|> 2T_2\right),\end{equation*}

and by applying the upper bound in Theorem 1.1(a) with 2A in place of A, together with (14), for A sufficiently large we may ensure that $\mathbb{P}(|\mathcal{C}(1)|> 2T_2)\le \mathbb{P}(|\mathcal{C}(1)|\geq T_2)/2$. Therefore (19) is at least

as required. This completes the proof of Theorem 1.2, subject to the proofs of Lemmas 4.1 and 4.2 and Propositions 4.3 and 4.4.

4.1. Rearranging Bernoullis and applying the ballot theorem: proof of Proposition 4.3

We first introduce a technical result which will be used to transform $(R_t)_{t\in [T_1]}$ into a process with i.i.d. increments.

Lemma 4.5. Suppose that $N\in\mathbb{N}$, and that $L\in[N]$ is odd. Let $(I_j^{i})_{i,j\ge 1}$ be i.i.d. non-negative random variables and set $X_i=\sum_{j=1}^{N-i}I_j^{i}$ for $i=1,\ldots,L$. Then there exist i.i.d. random variables $(\tilde I_j^i)_{i,j\ge1}$ with the same distribution as $I^i_j$ such that if we set $\tilde X_i=\sum_{j=1}^{N-(L+1)/2}\tilde I_j^{i}$ then

- $\sum_{i=1}^{t} \tilde X_i\leq \sum_{i=1}^{t}X_i$ for all $1\leq t\leq L$;
- $\sum_{i=1}^{L} \tilde X_i=\sum_{i=1}^{L}X_i$.

The reader can think of the $I_j^i$ as Bernoulli(p)-distributed, so that $X_i\sim \textrm{Bin}(N-i,p)$ and $\tilde X_i\sim \textrm{Bin}(N-(L+1)/2,p)$. The idea behind the proof is that $X_1$ has more summands than $X_L$, so if we transfer some of the summands from $X_1$ to $X_L$, we do not change the value of $\sum_{i=1}^L X_i$ but we decrease $X_1$. Then we move on to $X_2$ and transfer some of its summands to $X_{L-1}$, which decreases $\sum_{i=1}^2 X_i$ without changing $\sum_{i=1}^L X_i$; and so on. We postpone the details until Section 4.3.
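
A quick count of summands suggests why $N-(L+1)/2$ is the natural common length. The pairing of $X_i$ with $X_{L+1-i}$ used below is only an illustrative reading of this sketch; the actual construction is the one given in Section 4.3.

```latex
\begin{align*}
\sum_{i=1}^{L}(N-i) &= LN-\frac{L(L+1)}{2} = L\Big(N-\frac{L+1}{2}\Big),\\
(N-i)+\big(N-(L+1-i)\big) &= 2\Big(N-\frac{L+1}{2}\Big)
  \quad\text{for } 1\le i\le L.
\end{align*}
```

So the $X_i$ together use exactly as many summands as $L$ sums of length $N-(L+1)/2$, and moving $(L+1)/2-i$ summands from $X_i$ to $X_{L+1-i}$ for each $i<(L+1)/2$ would equalise the lengths. The middle index $i=(L+1)/2$, which exists because L is odd, already has the target length.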

Before we can proceed with the proof of Proposition 4.3, we need one more tool. We can use Lemma 4.5 to transform

![]() $(R_t)_{t\in [T_1]}$