1. INTRODUCTION

Modern shipping requires ever-increasing accuracy and reliability from navigation systems. Marine radars offer real-time information about targets above the water surface, but they cannot offer comprehensive hydrographic information. The Electronic Navigational Chart (ENC), in contrast, enhances ship navigation by displaying static information including coastlines and fixed/floating aids to navigation. Multi-sensor data fusion allows analysing and synthesising information from multiple sources according to certain criteria to improve decision-making and control (Hall and Llinas, 2002). Radar echo images are the primary non-visual source of information for ship collision avoidance, whereas ENCs provide hydrographic information for the waters in which the ship is sailing. These two sources of information are complementary in supporting navigation safety. Therefore, fusing ENC data and marine radar images could facilitate the identification and positioning of moving ships and improve both the automation level and navigation safety (Zhang and Fan, 2011).

The marine radar performance and test standard of the International Electrotechnical Commission (IEC), IEC 62388 (2013), allows ENC data to be overlaid on marine radar images, forming a chart radar (IEC 62388, 2013: 115). A chart radar provides a more intuitive understanding of the ship navigation scenario and quickly reveals the Global Navigation Satellite System (GNSS) accuracy and the proper operation of other navigation instruments. Nevertheless, mismatches occur between the objects obtained from radar and from the Electronic Chart Display and Information System (ECDIS) due to heading signal transmission, shielding of electromagnetic waves, and the extension of echoes in range. Mismatches can be compensated manually if their causes are identified. However, manual compensation is cumbersome and requires experience.

Data fusion for navigation instruments usually addresses aspects such as filtering optimisation and image fusion from different sensors. For instance, Hu et al. (2015) and Gao et al. (2018) explored filtering algorithms for GNSS/Inertial Navigation System (INS) integrated navigation. Likewise, an adaptive data-fusion fuzzy algorithm has been proposed by Al-Sharman et al. (2018) to improve estimation accuracy while an aircraft approaches the landing surface. Radar/Automatic Identification System (AIS) fusion is also a basis for navigational decision support systems, as demonstrated by Borkowski and Zwierzewicz (2011). A target tracking fusion algorithm for data from radar and AIS was proposed by Kazimierski and Stateczny (2015). Du and Gao (2017) proposed a method for image fusion of data from different navigation instruments and multi-focus image fusion using orientation information excitation on a pulse-coupled neural network. Similarly, image registration based on feature points or edges has been intensively explored (Ma et al., 2018).

Extensive research has been conducted on fusion (including superposition) of ENC data and marine radar images. Donderi et al. (2004) conducted simulations on navigation, confirming the suitability of overlaying ENC on radar images for navigation safety. Some empirical research on integrating marine radar images and ENC was conducted by Kazimierski and Stateczny (2015) by superimposing fused AIS and radar signals on ECDIS. A method for evaluating the accuracy of overlaying surveillance marine radar images has been proposed by Lubczonek (2015). Liu et al. (2005) and Yang et al. (2010) thoroughly evaluated the real-time overlapping of ECDIS and superposition of ENC and radar images. Zhang and Fan (2011) proposed automatic fusion of radar images and ENC data using the Harris corner detector to detect image features. Methods of this type use different operators to detect features, and those features are often affected by echo quality and target deformation. As a result, the feature detection results are often not ideal.

High-level fusion of ENC data and marine radar images has been addressed to a limited extent. Moreover, apart from classical algorithms for estimation and recognition, recent approaches such as artificial intelligence, information theory and advanced filtering algorithms are being increasingly applied to multi-sensor data fusion (Zhao et al., 2014). Deep learning has been successfully applied in computer vision for determining high-level abstraction. Specifically, a Convolutional Neural Network (CNN) processes inputs to maintain important features and exclude irrelevant information (Goyal et al., 2017).

In this study, we propose an algorithm employing deep learning for extracting target features from marine radar images to enable feature-level fusion of ENC data and radar images, taking the characteristics of marine radar signals into consideration. We verified the performance of the proposed method and explored solutions for fusion failure due to sensor errors in ship positioning and heading. Section 2 of this paper introduces the proposed target detection method, which is based on the characteristics of marine radar images, and Section 3 presents the details of the proposed fusion algorithm. Simulation results to verify the performance of the method are presented in Section 4, and the conclusions of the study are summarised in Section 5.

2. MARINE RADAR IMAGE PROCESSING

Owing to the working principles of marine radar, clutter pixels in the resulting radar image and irregular expansion and adhesion deformation in the echoes from some objects are inevitable. Other objects, easily identified from high-quality echoes, are typical targets, which include land, islands, navigation equipment, ships, navigation aids and bridges. These targets reflect electromagnetic waves with less distortion and can be used as suitable references for fusion of ENC data and marine radar images. However, the echoes of some typical targets, such as shorelines, change shape with the tide, and their positions are not stable. Likewise, the shapes of radar echoes from targets are affected by aspects such as meteorological conditions and ship draughts. Before fusion, we applied target detection based on deep learning to identify typical targets in marine radar images. These targets then serve as reference points for consistent radar image fusion with ENC data. In the following subsections, details of the proposed marine radar image processing are presented.

2.1. Marine radar data pre-processing

To apply deep learning to marine radar images, we first compiled the images into a dataset. This was then augmented to improve detection. Subsequently, we performed annotation of typical targets.

Before target detection, a typical radar target dataset should be constructed. We selected real-time ship radar images referenced by duty officers or pilots from vessels in different narrow channels as samples for typical target detection. After the vessels docked, we used the Voyage Data Recorder (VDR) monitor to acquire ENC data and marine radar images from the corresponding narrow channel over the previous 12 hours.

We used a data augmentation method to avoid overfitting and increase data availability during training, as the obtained dataset was small for deep learning (Wu et al., 2017). This data augmentation approach consists of geometrical transformations, such as horizontal flipping, resizing, rotation and cropping, to generate synthetic images.
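The geometrical transformations listed above can be sketched with NumPy alone. This is a minimal illustration, not the pipeline used in the study: the function name `augment`, the 80 % crop ratio, the restriction of rotations to multiples of 90 degrees and the nearest-neighbour resize are all assumptions made for the example.

```python
import numpy as np

def augment(image, rng):
    """Return geometrically transformed copies of a 2-D radar image (illustrative only)."""
    h, w = image.shape[:2]
    out = [np.fliplr(image)]                                  # horizontal flip
    out.append(np.rot90(image, k=int(rng.integers(1, 4))))    # rotation by 90, 180 or 270 degrees
    # Random crop covering 80 % of each side (assumed ratio).
    ch, cw = int(0.8 * h), int(0.8 * w)
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    crop = image[top:top + ch, left:left + cw]
    out.append(crop)
    # Nearest-neighbour resize of the crop back to the original size.
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    out.append(crop[rows][:, cols])
    return out

image = np.arange(100).reshape(10, 10)
samples = augment(image, np.random.default_rng(0))
```

In a detection setting the bounding-box annotations would have to be transformed together with the pixels; that step is omitted here.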

Our team was supported by ten senior seafarers who manually annotated typical targets from marine radar images using bounding boxes and then labelled their classes. These annotations were verified with reference to the chart, and typical targets were placed at the centre of the bounding boxes.

2.2. Target detection using neural networks

For efficient and robust detection of typical marine radar targets, we selected two models with strong generalisation capability, namely, Faster Region-based CNN (Faster R-CNN) and Region-based Fully Convolutional Network (R-FCN) (Dai et al., 2016). In addition, we selected the 16-layer deep CNN of the Oxford Visual Geometry Group (VGG-16) and the 101-layer residual neural network (ResNet-101) from the ImageNet Large Scale Visual Recognition Challenge (He et al., 2016; Simonyan and Zisserman, 2015), which offer fast training and testing, as the feature extractors.

2.2.1. Faster R-CNN

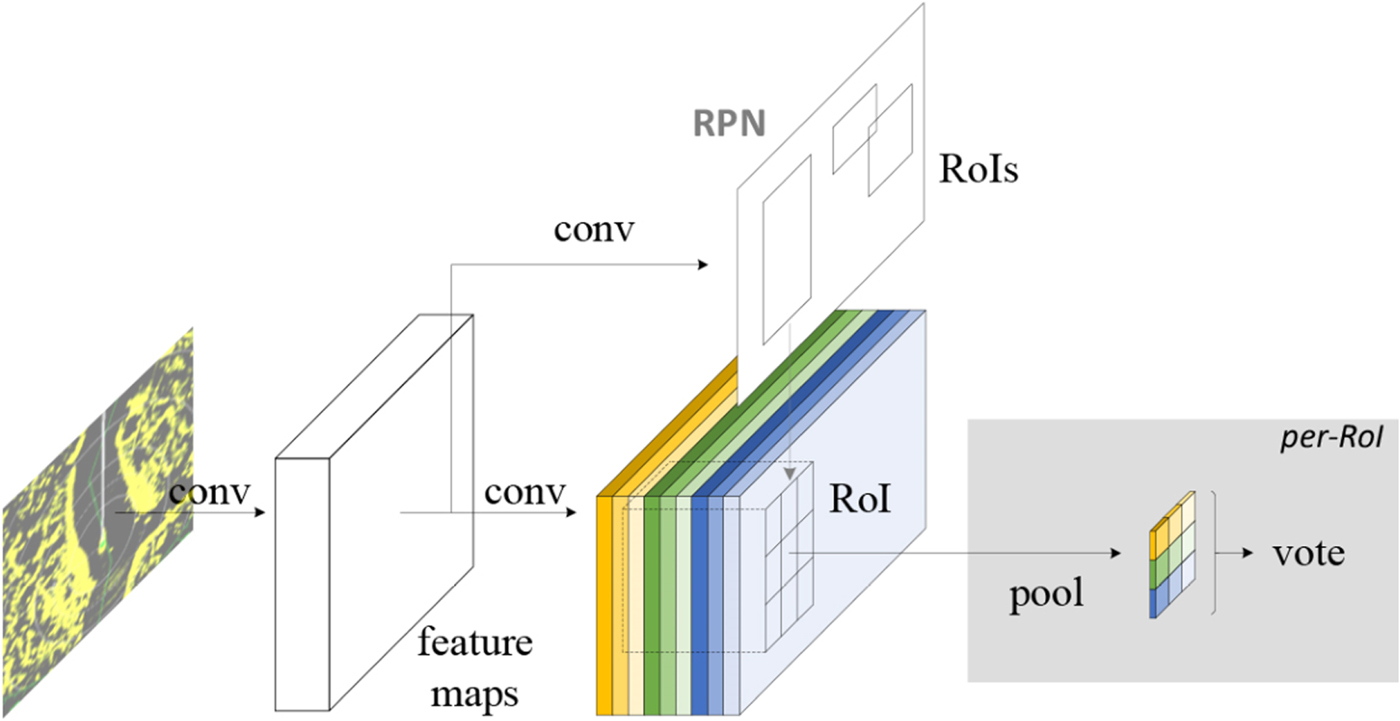

Faster R-CNN performs detection in two stages. In the first stage, a Region Proposal Network (RPN) accepts a marine radar image as input and extracts its features to indicate to the subsequent detection model which regions it should analyse. We slid a small network over the obtained convolutional feature map of the radar image to generate region proposals. Each feature is mapped into a lower-dimensional feature, which is fed into box-regression and box-classification layers. To train the RPN, we assigned each anchor a binary class label indicating whether it is an object; the label is determined by comparing the intersection-over-union overlap of the anchor with any ground-truth box against a threshold. The loss function for the image is defined as:

$$L(\{p_i\},\{t_i\}) = \frac{1}{N_{cls}}\sum_i L_{cls}(p_i, p_i^*) + \lambda\frac{1}{N_{reg}}\sum_i p_i^* L_{reg}(t_i, t_i^*)$$

where $i$ is the anchor index in a mini-batch, $p_i$ is the predicted probability of anchor $i$ being an object, $p_i^*$ is the ground-truth label (1 for a positive anchor and 0 otherwise), $t_i$ is a vector representing the four parameterised coordinates of the predicted bounding box, $t_i^*$ is the vector of the ground-truth box associated with a positive anchor, $N_{cls}$ and $N_{reg}$ are normalisation terms, and $\lambda$ is a balancing weight (Ren et al., 2015). The classification loss is the log loss over two classes as follows:

$$L_{cls}(p_i, p_i^*) = -\log\left[p_i^* p_i + (1 - p_i^*)(1 - p_i)\right]$$

and the regression loss is given by:

$$L_{reg}(t_i, t_i^*) = R(t_i - t_i^*)$$

where $R$ is the robust (smooth $L_1$) loss function.

For bounding box regression, we adopted the parameterisation of the four coordinates as follows:

$$\begin{aligned} t_x &= (x_p - x_a)/w_a, & t_y &= (y_p - y_a)/h_a \\ t_w &= \log(w_p/w_a), & t_h &= \log(h_p/h_a) \\ t_x^* &= (x^* - x_a)/w_a, & t_y^* &= (y^* - y_a)/h_a \\ t_w^* &= \log(w^*/w_a), & t_h^* &= \log(h^*/h_a) \end{aligned}$$

where $x_p$, $x_a$ and $x^*$ correspond to the predicted box, anchor box and ground-truth box, respectively (likewise for $y$, $w$ and $h$). Variables $x_p$, $y_p$, $w_p$ and $h_p$ denote the two centre coordinates of the bounding box and its width and height. The RPN and subsequent detection are illustrated in Figure 1.
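As a small illustration of the box parameterisation and of the intersection-over-union overlap used to label anchors, the following sketch encodes a box relative to an anchor, inverts the encoding, and computes the overlap of two boxes. Boxes are assumed to be given as (centre x, centre y, width, height); the function names `encode`, `decode` and `iou` are hypothetical and not taken from any detection library.

```python
import numpy as np

def encode(box, anchor):
    """Map a box (x, y, w, h) to (t_x, t_y, t_w, t_h) relative to an anchor box."""
    x, y, w, h = box
    xa, ya, wa, ha = anchor
    return np.array([(x - xa) / wa, (y - ya) / ha, np.log(w / wa), np.log(h / ha)])

def decode(t, anchor):
    """Invert encode(): recover (x, y, w, h) from the parameterised coordinates."""
    tx, ty, tw, th = t
    xa, ya, wa, ha = anchor
    return np.array([xa + tx * wa, ya + ty * ha, wa * np.exp(tw), ha * np.exp(th)])

def iou(a, b):
    """Intersection-over-union of two boxes in centre format."""
    iw = min(a[0] + a[2] / 2, b[0] + b[2] / 2) - max(a[0] - a[2] / 2, b[0] - b[2] / 2)
    ih = min(a[1] + a[3] / 2, b[1] + b[3] / 2) - max(a[1] - a[3] / 2, b[1] - b[3] / 2)
    inter = max(iw, 0.0) * max(ih, 0.0)
    return inter / (a[2] * a[3] + b[2] * b[3] - inter)

anchor = (50.0, 40.0, 16.0, 32.0)
box = (54.0, 44.0, 20.0, 24.0)
t = encode(box, anchor)
```

An anchor identical to the ground-truth box encodes to the zero vector, which is why the regression targets stay small for well-placed anchors.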

Figure 1. Diagram of the RPN architecture (left) and example of detections using RPN outcomes (right).

In the second stage, the box proposals are used to detect marine radar image features from the feature map. These features are fed into the remaining layers of the feature extractor for predicting the class probability and bounding box for each proposal.

The entire process is implemented on a unified network as shown in Figure 2, enabling sharing of full-image convolutional features with the detection network and significantly reducing the number of weights for simplifying and speeding-up training.

Figure 2. Block diagram of Faster R-CNN (ROI, region of interest).

2.2.2. R-FCN

The R-FCN illustrated in Figure 3 uses convolution for prediction and then performs region-of-interest pooling. Adding location information before pooling prevents the fully convolutional network from losing such information and specifying different score maps allows detection of different locations of the target. After pooling, the score maps obtained from different locations can be combined to reconstruct the original location information, whereas the fully convolutional structure reduces training time.

Figure 3. R-FCN with fully convolutional network.

We evaluated the performance of the models using the mean average precision (Everingham et al., 2010). The average precision is the area under the precision ($p$)–recall ($r$) curve of the detection task, and its mean is computed over all the classes in a task. The average precision and mean average precision are respectively given by:

$$\begin{aligned} AP &= \sum_{i=1}^{n_d} p_i \Delta r \\ mAP &= \frac{1}{C}\sum_{c=1}^{C} AP_c \end{aligned}$$

The average precision $AP$ is a dimensionless quantity that integrates both the precision and recall indicators, which are commonly used to measure the ability of neural networks to detect targets; higher values indicate that the model is more suitable for the data set. Likewise, the mean average precision $mAP$ is the mean of the average precision over all $C$ object classes (including background) and measures the overall target detection ability of the model.
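The two quantities can be computed directly from ranked detections. The sketch below is one possible reading of the formulas, assuming that detections are sorted by confidence and that the sum runs over the recall increments without interpolation; the PASCAL VOC protocol cited above additionally interpolates precision, which is not reproduced here. Function and argument names are illustrative.

```python
import numpy as np

def average_precision(scores, is_true_positive, n_ground_truth):
    """AP as the sum of precision times the recall increment over ranked detections."""
    order = np.argsort(-np.asarray(scores, dtype=float))       # highest confidence first
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    precision = cum_tp / np.arange(1, len(tp) + 1)
    recall = cum_tp / n_ground_truth
    delta_r = np.diff(np.concatenate(([0.0], recall)))         # recall gained at each rank
    return float(np.sum(precision * delta_r))

def mean_average_precision(ap_per_class):
    """mAP as the mean of the per-class AP values."""
    return float(np.mean(ap_per_class))

ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], n_ground_truth=2)
m_ap = mean_average_precision([ap, 0.5])
```

With three detections of which the first and third are correct, precision is 1, 1/2 and 2/3 at the three ranks and recall rises by 1/2 at ranks one and three, giving AP = 1/2 + 1/3.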

2.3. Transfer learning

We used transfer learning to fine-tune the detection model as the employed dataset is small. By comparison, modern object detection typically relies on a large number of data samples and over a thousand class labels. The hidden layers in a CNN provide distinct feature representations, with lower layers providing general feature extraction and higher layers providing more specific information about the intended task (Dai et al., 2016). For instance, lower layers act as edge detectors, whereas higher layers provide more specific representations of the input image. Therefore, generalisation is provided by the feature extraction and representation of lower layers, and fine-tuning to the specific problem is provided by higher layers. The transfer learning employed in this study to improve detection is illustrated in Figure 4. We used a dataset available online, namely the UC Merced Land Use Dataset with 21 object classes (Yang and Newsam, 2010), to perform pre-training. The main purpose was to guide the lower layers of the network to extract features in the proper direction. Then, fine-tuning was performed on higher layers using the radar data set generated in the present study.

Figure 4. Diagram of transfer learning to improve target detection.

3. FUSION BASED ON DETECTED TARGETS

3.1. Scaling and display area of ENC

The coordinate systems of the ENC and corresponding radar images are different, leading to superposition errors, which can be mitigated by performing real-time coordinate transformations. For the ENC, the projection mode defaults to the Mercator projection as this type of projection is simpler to calculate and yields higher accuracy in a small scanning range than similar methods. We used the Mercator projection by considering the scanning range of the radar, which is usually within six nautical miles when ships sail in confined waters.

The effect of the curvature of the Earth between the radar detection coordinate system and the Mercator coordinate system can be ignored, as the radar range is very small compared to the radius of the Earth. When the range is small, the detection distance of the radar is approximately equal to the geographical distance considering the curvature of the Earth. The conversion of the geodetic coordinate system into Mercator projection proceeds as follows (Yang et al., 2010):

$$\begin{aligned} & \begin{cases} x_m = r_0 q \\ y_m = r_0 \lambda \end{cases} \\ & r_0 = \frac{\alpha}{\sqrt{1 - e^2\sin^2\varphi_r}} \times \cos\varphi_r \\ & q = \ln\tan\left(\frac{\pi}{4} + \frac{\varphi}{2}\right) - \frac{e}{2}\ln\frac{1 + e\sin\varphi}{1 - e\sin\varphi} \end{aligned}$$

where $x_m$ and $y_m$ are the Mercator Cartesian coordinates, $\lambda$ and $\varphi$ are the longitude and latitude, respectively, $\alpha$ is the semi-major axis of the Earth's ellipsoid, $e$ is the first eccentricity of the Earth, $\varphi_r$ is the reference latitude, $q$ is the isometric latitude, and $r_0$ is the radius of the parallel circle at the reference latitude.
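For a concrete feel of the conversion, the sketch below evaluates the standard ellipsoidal Mercator formulas, with $\sin^2\varphi_r$ under the square root and $\varphi/2$ inside the tangent. The WGS-84 ellipsoid constants are an assumption of this example, since the text does not state which ellipsoid was used, and the function name `mercator` is illustrative.

```python
import math

A_WGS84 = 6378137.0          # semi-major axis in metres (WGS-84, assumed)
E_WGS84 = 0.0818191908426    # first eccentricity (WGS-84, assumed)

def mercator(lon_deg, lat_deg, ref_lat_deg, a=A_WGS84, e=E_WGS84):
    """Return (x_m, y_m): x_m = r0 * q (northing), y_m = r0 * lambda (easting)."""
    lam = math.radians(lon_deg)
    phi = math.radians(lat_deg)
    phi_r = math.radians(ref_lat_deg)
    # Radius of the parallel circle at the reference latitude.
    r0 = a * math.cos(phi_r) / math.sqrt(1.0 - (e * math.sin(phi_r)) ** 2)
    # Isometric latitude.
    q = (math.log(math.tan(math.pi / 4.0 + phi / 2.0))
         - (e / 2.0) * math.log((1.0 + e * math.sin(phi)) / (1.0 - e * math.sin(phi))))
    return r0 * q, r0 * lam

x_m, y_m = mercator(lon_deg=121.5, lat_deg=31.2, ref_lat_deg=31.0)
```

Choosing a reference latitude close to the operating area keeps the scale distortion small over the few nautical miles covered by the radar.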

According to the projection transformation of chart pixels, the latitude and longitude of the radar scan can be obtained as:

$$\begin{cases} (\lambda_{\min}, \varphi_{\min}) = \phi^{-1}\left(\phi(\lambda_0, \varphi_0) - (L, L)\right) \\ (\lambda_{\max}, \varphi_{\max}) = \phi^{-1}\left(\phi(\lambda_0, \varphi_0) + (L, L)\right) \end{cases}$$

where $\lambda_0$ and $\varphi_0$ are the longitude and latitude of the radar centre, respectively, $(\lambda_{\min}, \varphi_{\min})$ and $(\lambda_{\max}, \varphi_{\max})$ bound the longitude and latitude ranges of the radar scan, $\phi(\lambda_0, \varphi_0)$ is the position of the radar centre in Cartesian coordinates with $\phi$ being the Mercator projection map, $\phi^{-1}$ is the inverse of the Mercator map for chart projection, and $L$ is the detection range of the radar. Then, we searched the area of the ENC to be registered using the results obtained.

For registration, the ENC and marine radar scales must be consistent. The radar scale can be changed only among several fixed range settings, whereas ENC data can be set to any desired scale. Therefore, we matched the electronic chart to the radar data (Yang et al., 2010). The radar image scale and the display scale of the ENC are determined by:

$$\begin{aligned} \frac{1}{s_r} &= \frac{c \times d}{R} \\ z &= \frac{c \times d \times s_0}{R} \end{aligned}$$

where $R$ is the detection radius of the radar, $c$ is the number of pixels along the radial scan line, $d$ is the pixel spacing, and $1/s_r$ is the original scale of the radar. The remaining area of the radar display screen, excluding the radar image area, is displayed in black. Thus, $1/s_r$ can also be regarded as the scale of the square circumscribing the circular radar image. $1/s_0$ is the original scale of the ENC, and $z$ is the scaling parameter of the ENC.
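A short numerical example may help to fix the meaning of the two scale expressions. All numbers below are hypothetical: a radial scan line of 512 pixels, a pixel spacing of 0·25 mm on the display, a 6 nautical mile range (11,112 m) and an ENC compiled at 1:50,000. The spacing and the range must be expressed in the same unit.

```python
def radar_scale_denominator(c, d, R):
    """Return s_r such that the radar image scale is 1/s_r = (c * d) / R."""
    return R / (c * d)

def enc_zoom(c, d, R, s0):
    """Return the ENC scaling parameter z = (c * d * s0) / R."""
    return c * d * s0 / R

c = 512            # pixels along the radial scan line (hypothetical)
d = 0.00025        # pixel spacing in metres (hypothetical)
R = 6 * 1852.0     # 6 nautical miles in metres
s0 = 50000.0       # ENC original scale 1:50,000 (hypothetical)

s_r = radar_scale_denominator(c, d, R)
z = enc_zoom(c, d, R, s0)
```

Under these assumptions the radar image is displayed at roughly 1:86,800, so the chart has to be shrunk by a factor of about 0·58 to match it; equivalently, z equals s0 divided by s_r.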

According to the azimuth and distance of the corners of the bounding boxes obtained from radar target detection with respect to the registration reference point, areas outside the bounding box in the ENC are treated as background, whereas the area within the bounding box of the ENC data and radar image is the Registration Interest Area (RIA).

3.2. RIA processing

3.2.1. Filtering algorithm

Unlike registration based on entire radar images, filtering and parameter selection considering only RIAs can be more accurate for extracting the edges. For enhanced applicability, we consider real-time fusion and adaptive mean filtering, whose kernel is expressed as:

$$K(x) = c_k\,k\left(\|x\|^2\right)$$

where $x$ is a pixel in the plane, $c_k$ is a constant greater than 0, the profile $k(x)$ satisfies $\int_0^\infty k(r)\,dr < \infty$, and $K(x)$ is a kernel function. The kernel density estimate is given by:

$$\begin{aligned} f(x) &= \frac{1}{n}\sum_{i=1}^{n} K_H(x - x_i) \\ K_H(x) &= |H|^{-1/2} K\left(H^{-1/2}x\right) \end{aligned}$$

where $H = h^2 I$. Simplifying the density function, we obtain:

$$f(x) = \frac{c_k}{nh^d}\sum_{i=1}^{n} k\left(\left\|\frac{x - x_i}{h}\right\|^2\right)$$

whose gradient is given by:

$$\nabla f(x) = \frac{2c_k}{nh^{d+2}}\sum_{i=1}^{n} (x - x_i)\,k'\left(\left\|\frac{x - x_i}{h}\right\|^2\right).$$

Assuming $g(x) = -k'(x)$ and $G(x) = c_k\,g\left(\|x\|^2\right)$, with $K(x)$ termed the shadow kernel of $G(x)$, we obtain:

$$\begin{aligned} \nabla f(x) &= \frac{2c_k}{nh^{d+2}}\left[\sum_{i=1}^{n} g\left(\left\|\frac{x - x_i}{h}\right\|^2\right)\right]\left[\frac{\sum_{i=1}^{n} x_i\,g\left(\left\|\frac{x - x_i}{h}\right\|^2\right)}{\sum_{i=1}^{n} g\left(\left\|\frac{x - x_i}{h}\right\|^2\right)} - x\right] \\ &= \frac{2c_k}{nh^{d+2}}\left[\sum_{i=1}^{n} g\left(\left\|\frac{x - x_i}{h}\right\|^2\right)\right]\left[m_h(x) - x\right] \end{aligned}$$

with $m_h(x)$ being the weighted average of the sample points, used to update the centre of the search area with radius $h$, and $m_h(x) - x$ being the drift (mean shift) vector. The iteration terminates when the magnitude of this vector falls below a given tolerance.
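The iteration implied by the drift vector can be sketched for a cloud of points as follows. A Gaussian profile is assumed here, for which $g$ is again Gaussian up to a constant that cancels in $m_h(x)$; the function name, tolerance and sample data are illustrative. Filtering an image in this way would additionally combine pixel position and intensity into one feature vector, which this sketch does not do.

```python
import numpy as np

def mean_shift_point(x, samples, h, tol=1e-3, max_iter=100):
    """Move x to the weighted mean m_h(x) until the drift vector is shorter than tol."""
    x = np.asarray(x, dtype=float)
    samples = np.asarray(samples, dtype=float)
    for _ in range(max_iter):
        u = np.sum(((samples - x) / h) ** 2, axis=1)    # ||(x - x_i) / h||^2
        g = np.exp(-0.5 * u)                            # g = -k' for a Gaussian profile, up to a constant
        m = (g[:, None] * samples).sum(axis=0) / g.sum()
        if np.linalg.norm(m - x) < tol:                 # drift vector below tolerance: stop
            return m
        x = m
    return x

samples = np.array([[0.0, 0.0], [0.2, 0.0], [0.0, 0.2], [-0.2, 0.0], [0.0, -0.2],
                    [10.0, 10.0], [10.2, 10.0]])
mode = mean_shift_point([1.0, 1.0], samples, h=1.0)
```

Starting near the first cluster, the point converges to that cluster's centre and is essentially unaffected by the distant samples, which is the behaviour that makes the filter edge-preserving.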

3.2.2. Binarization

To avoid intensive processing of colour images, we binarized greyscale images using the method of Otsu (1979) to prevent disparity between the RIA foreground and background size ratios. This method adaptively searches for a threshold according to the characteristics of the image and converts a greyscale image into a binary one. The between-class variance used in the search can be expressed as:

$$g = w_0 w_1 (u_0 - u_1)^2$$

where $w_0$ is the ratio of foreground to image with $u_0$ being its average greyscale value, and $w_1$ is the ratio of background to image with $u_1$ being its average greyscale value. The threshold $t$ that yields the highest variance $g$ maximises the difference between foreground and background and is therefore the optimum.
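The threshold search can be written as an exhaustive loop over the 256 grey levels. The sketch assumes the usual between-class variance $g = w_0 w_1 (u_0 - u_1)^2$ and treats pixels brighter than the threshold as foreground; both conventions, and the function name, are assumptions of this example.

```python
import numpy as np

def otsu_threshold(grey):
    """Return the grey level t that maximises g = w0 * w1 * (u0 - u1)^2."""
    hist = np.bincount(grey.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    best_t, best_g = 0, -1.0
    for t in range(256):
        w1 = prob[:t + 1].sum()          # background share: levels <= t
        w0 = 1.0 - w1                    # foreground share: levels > t
        if w0 <= 0.0 or w1 <= 0.0:
            continue
        u1 = (levels[:t + 1] * prob[:t + 1]).sum() / w1
        u0 = (levels[t + 1:] * prob[t + 1:]).sum() / w0
        g = w0 * w1 * (u0 - u1) ** 2
        if g > best_g:
            best_g, best_t = g, t
    return best_t

grey = np.concatenate([np.full(50, 50, dtype=np.uint8), np.full(50, 200, dtype=np.uint8)])
t = otsu_threshold(grey)
binary = grey > t
```

For an image with two well-separated grey levels, any threshold between them gives the same variance, and the loop returns the lowest such level.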

3.2.3. Morphological processing

Binarization may produce some isolated noise pixels besides the foreground target, affecting its integrity. We performed noise removal by applying erosion, dilation, opening, and closing, which can be expressed using standard set theory as:

$$\begin{aligned} A \ominus B &= \{ w \in Z : w + b \in A,\ \forall b \in B \} \\ A \oplus B &= \{ w \in Z : w = a + b,\ a \in A,\ b \in B \} \\ A \circ B &= (A \ominus B) \oplus B \\ A \bullet B &= (A \oplus B) \ominus B \end{aligned}$$

where $Z$ represents the foreground, $w$ is a pixel at different positions in the foreground image, and $a$ and $b$ are members of the sets $A$ and $B$, respectively, with $B$ acting as the structuring element.
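The four operators can be illustrated on a small binary image. The sketch below assumes a boolean image, an odd-sized symmetric structuring element (so that reflection of the element can be ignored) and background outside the image border; the function names are illustrative.

```python
import numpy as np

def _shifted_views(img, se):
    """Yield the image shifted by every active offset of the structuring element."""
    sh, sw = se.shape
    ph, pw = sh // 2, sw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), constant_values=False)
    rows, cols = img.shape
    for i in range(sh):
        for j in range(sw):
            if se[i, j]:
                yield padded[i:i + rows, j:j + cols]

def dilate(img, se):
    out = np.zeros(img.shape, dtype=bool)
    for view in _shifted_views(img, se):
        out |= view          # a pixel is set if any neighbour under the element is set
    return out

def erode(img, se):
    out = np.ones(img.shape, dtype=bool)
    for view in _shifted_views(img, se):
        out &= view          # a pixel survives only if all neighbours under the element are set
    return out

def opening(img, se):
    return dilate(erode(img, se), se)

def closing(img, se):
    return erode(dilate(img, se), se)

se = np.ones((3, 3), dtype=bool)
img = np.zeros((7, 7), dtype=bool)
img[2:5, 2:5] = True         # target echo
img[0, 6] = True             # isolated noise pixel
opened = opening(img, se)
```

Opening removes the isolated pixel while restoring the 3 × 3 target, and closing fills small holes inside a target, which is the noise-removal behaviour described above.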

We used the improved Canny operator (Othman and Abdullah, 2018) to extract the edge of the radar image and the boundary of the echoes in the RIAs:

$$\begin{aligned} E_x &= \frac{\partial P}{\partial x} * f(x,y), \quad E_y = \frac{\partial P}{\partial y} * f(x,y) \\ A(i,j) &= \sqrt{E_x^2(i,j) + E_y^2(i,j)} \\ a(i,j) &= \arctan\left(\frac{E_x(i,j)}{E_y(i,j)}\right) \end{aligned}$$

where $P(x, y)$ is a two-dimensional Gaussian function, $f(x, y)$ is the image data, $A(i, j)$ is the edge strength at point $(i, j)$ in the image, and $a(i, j)$ is the direction of the normal vector of the image at point $(i, j)$. Figure 5 illustrates the complete image processing for an RIA.

Figure 5. Image processing of RIA. (a) RIA; (b) Filtering and Binarization; (c) Morphological processing; (d) Canny.

3.3. Image registration

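Image registration can be based on transformations of greyscale images, features and domain. Considering the notable divergence between marine radar images and ENC, we used a feature-based affine transformation for their fusion. Three or more reference points should be selected for the transformation, and its principle can be expressed as:

$$\begin{cases} x_i' = k'(x_i\cos\theta + y_i\sin\theta) + x_0 \\ y_i' = k'(-x_i\sin\theta + y_i\cos\theta) + y_0 \end{cases}$$

where $(x_i, y_i)$ are the coordinates of a pixel in the ENC, $(x_i', y_i')$ are the coordinates of the corresponding pixel in the radar image, and $k'$, $\theta$, $x_0$ and $y_0$ are the parameters of the affine transformation: $\theta$ corresponds to the image rotation, $k'$ is a scale factor, and $x_0$ and $y_0$ correspond to the image shift. Thus, we obtain:

$$\begin{bmatrix} x_i' \\ y_i' \end{bmatrix} = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{bmatrix} \begin{bmatrix} x_i \\ y_i \\ 1 \end{bmatrix}$$

with $a_{11} = a_{22} = k'\cos\theta$, $a_{13} = x_0$, $a_{12} = -a_{21} = k'\sin\theta$, and $a_{23} = y_0$.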
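Given matched reference points, the four parameters can be estimated by linear least squares, because the model is linear in $a = k'\cos\theta$, $b = k'\sin\theta$, $x_0$ and $y_0$. The sketch below assumes the form $x' = a x + b y + x_0$, $y' = -b x + a y + y_0$ implied by the coefficients listed above; the function name `fit_similarity` and the test points are hypothetical, and this is one possible estimator rather than the one used in the study.

```python
import numpy as np

def fit_similarity(src, dst):
    """Estimate (k', theta, x0, y0) mapping ENC points src to radar points dst."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    A = np.zeros((2 * n, 4))
    rhs = np.zeros(2 * n)
    # Rows for x' = a*x + b*y + x0 and y' = a*y - b*x + y0, unknowns (a, b, x0, y0).
    A[0::2] = np.column_stack([src[:, 0], src[:, 1], np.ones(n), np.zeros(n)])
    A[1::2] = np.column_stack([src[:, 1], -src[:, 0], np.zeros(n), np.ones(n)])
    rhs[0::2] = dst[:, 0]
    rhs[1::2] = dst[:, 1]
    a, b, x0, y0 = np.linalg.lstsq(A, rhs, rcond=None)[0]
    return np.hypot(a, b), np.arctan2(b, a), x0, y0

k_true, th_true, x0_true, y0_true = 2.0, 0.3, 5.0, -3.0
src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [2.0, 3.0]])
dst = np.column_stack([
    k_true * (src[:, 0] * np.cos(th_true) + src[:, 1] * np.sin(th_true)) + x0_true,
    k_true * (-src[:, 0] * np.sin(th_true) + src[:, 1] * np.cos(th_true)) + y0_true,
])
estimate = fit_similarity(src, dst)
```

Using more than the minimum number of point pairs makes the estimate less sensitive to an inaccurate reference point, at the cost of requiring more reliable detections.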

Therefore, correlating radar images with ENC data requires at least three pairs of reference points with accurate correspondence to determine the transformation parameters. The accuracy of the reference points directly determines the fusion outcome. After the transformation, however, some deformation may occur, but it is generally negligible. On the other hand, if the deformation undermines fusion, then according to “the display of radar information shall have priority” from IEC 62388 (2013), priority should be given to the display of radar images.

To determine the reference points for registration, we considered various aspects. After target detection from the radar image, targets with small sizes and inconspicuous shapes may interfere with the detection results, as registration is less effective for small objects such as buoys. However, according to the International Convention on Standards of Training, Certification and Watchkeeping for Seafarers, when ships are sailing along the coast in crowded waters, the crew should check the position of the ship at appropriate intervals through independent positioning methods, and reference objects are mainly used for navigation. Therefore, small reference objects that can accurately correspond to the ENC goal should not be ignored. Consequently, pixels between the radar image and ENC are suitable as registration reference points.

Depending on the target resolution of the radar, manual selection of registration reference points cannot be performed on large ranges. Therefore, we implemented the automatic registration algorithm illustrated in Figure 6, which focuses on objects that perform well in target detection as reference points for registration. The algorithm first locates a pair of accurate reference points on the ENC and radar images whose respective coordinates are $(x_1, y_1)$ and $(x_1', y_1')$. When two or more bounding boxes are found, a ray is emitted from $(x_1, y_1)$ and $(x_1', y_1')$ to the geometric centre of the RIA in the ENC and radar images to acquire two or more groups of coordinates in front of the radar echo edge. If there is only one bounding box, a specific angle on the bow is selected, emitting two rays to the areas around $(x_1, y_1)$ and $(x_1', y_1')$ to obtain the coordinates of the two registration reference points.

Figure 6. Complete process for automatic registration from detected typical targets. (a) Detection result; (b) Binarization; (c) Morphological processing; (d) Canny; (e) Registration.

4. RESULTS AND DISCUSSION

4.1. Typical target detection

We leveraged the UC Merced Land Use Dataset with 21 object classes (Yang and Newsam, 2010) as a dataset sample for pre-training the network toward the problem domain of specific object classes for detection. The annotated typical target dataset (ENC data and marine radar images acquired from the voyage data recorder monitor) was used to fine-tune the pre-trained model using transfer learning. We used stochastic gradient descent with momentum and weight decay of 0·9 and 0·0005, respectively. The initial learning rate of 0·001 was divided by 10 every 12,000 iterations (step decay). For the RPN in the Faster R-CNN and R-FCN, we set the batch size to 256.
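The training schedule can be made concrete with a short sketch. The step-decay function follows the numbers quoted above; the update rule is the common momentum form with L2 weight decay added to the gradient, which is an assumption of this example since the exact solver is not specified in the text.

```python
def learning_rate(iteration, base_lr=0.001, step=12000, gamma=0.1):
    """Step decay: divide the learning rate by 10 every `step` iterations."""
    return base_lr * gamma ** (iteration // step)

def sgd_step(w, v, grad, lr, momentum=0.9, weight_decay=0.0005):
    """One momentum SGD update with L2 weight decay (assumed form); returns (w, v)."""
    v_new = momentum * v - lr * (grad + weight_decay * w)
    return w + v_new, v_new

lr_start = learning_rate(0)
lr_late = learning_rate(25000)
w, v = sgd_step(w=1.0, v=0.0, grad=0.0, lr=lr_start)
```

With a zero gradient the weight still shrinks slightly at each step, which is the regularising effect of the weight decay term.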

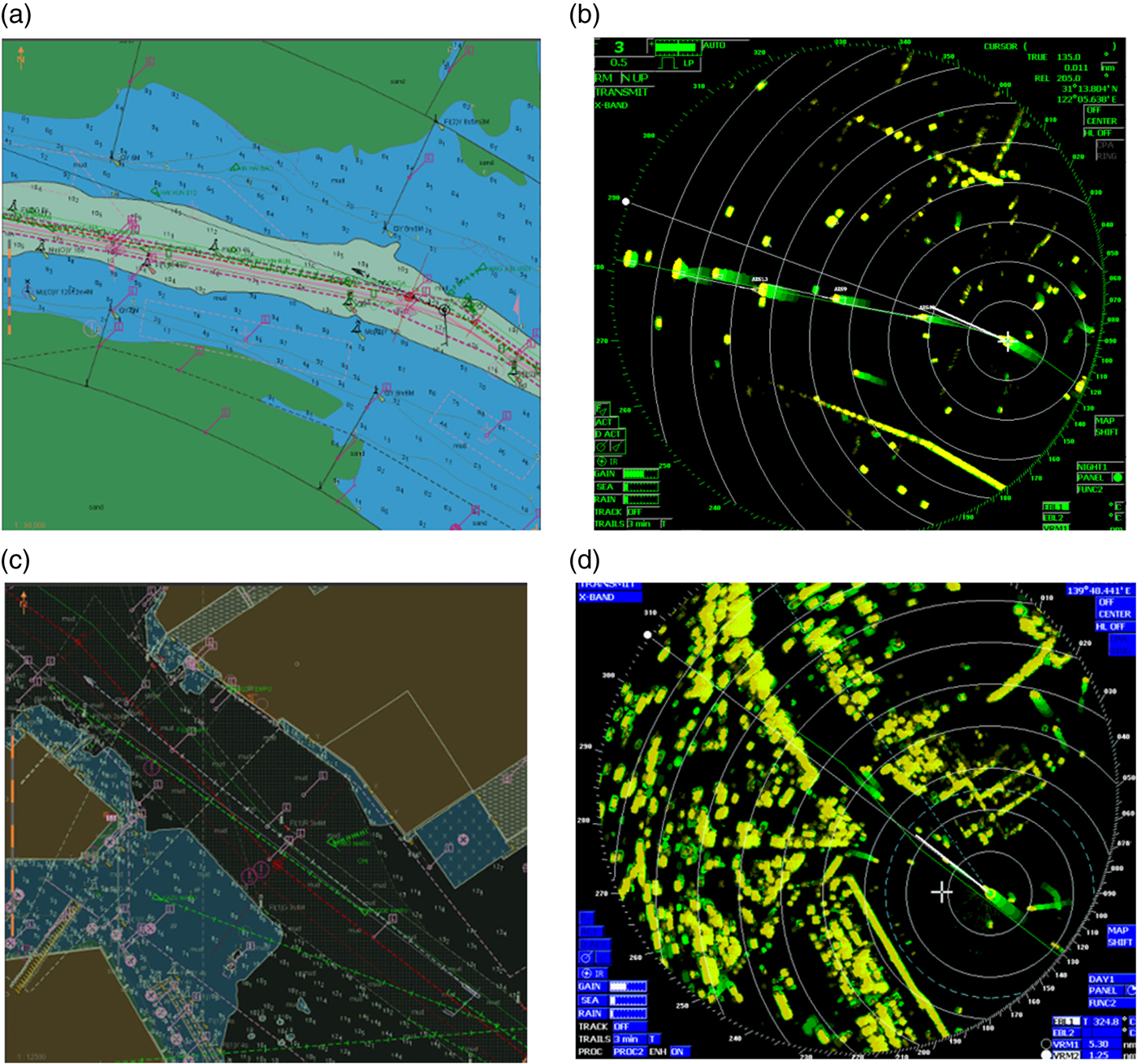

Table 1 lists the performance of the Faster R-CNN and R-FCN on the data set we constructed. Neither model achieves ideal detection performance for typical marine radar targets. However, the R-FCN performed better than the Faster R-CNN, as its mAP is higher; a higher mAP indicates that a detection model classifies the target data set better when precision and recall are considered together. Thus, the R-FCN is considered more suitable to perform the detection task on our data set efficiently. From these results, we determined that various factors should be considered for typical radar target detection. First, radar information from different target types may be very similar, thus undermining the detection accuracy. Figure 7 shows examples of indistinguishable objects, namely a shoal (Figure 7(b)) and a breakwater (Figure 7(d)).

Table 1. Detection result of Faster R-CNN and R-FCN for typical radar target.

Figure 7. Typical target with undistinguishable echoes: (a) ENC of a shoal; (b) radar echoes of a shoal; (c) ENC of a breakwater; (d) Civil Marine Radar (CMR) echoes of a breakwater.

In addition, targets with small sizes and inconspicuous shapes may interfere with the detection results, because such targets yield a low average precision. Furthermore, although the position of a ship is stable and its regular geometric shape reflects Civil Marine Radar (CMR) echoes strongly, the average precision for vessels is low, and both missed detections and false detections occur, as illustrated in Figure 8. Therefore, ships cannot be used as reference points for automatic registration.

Figure 8. Target detection of ships showing detection omission (a) without and (b) with radar persistence.

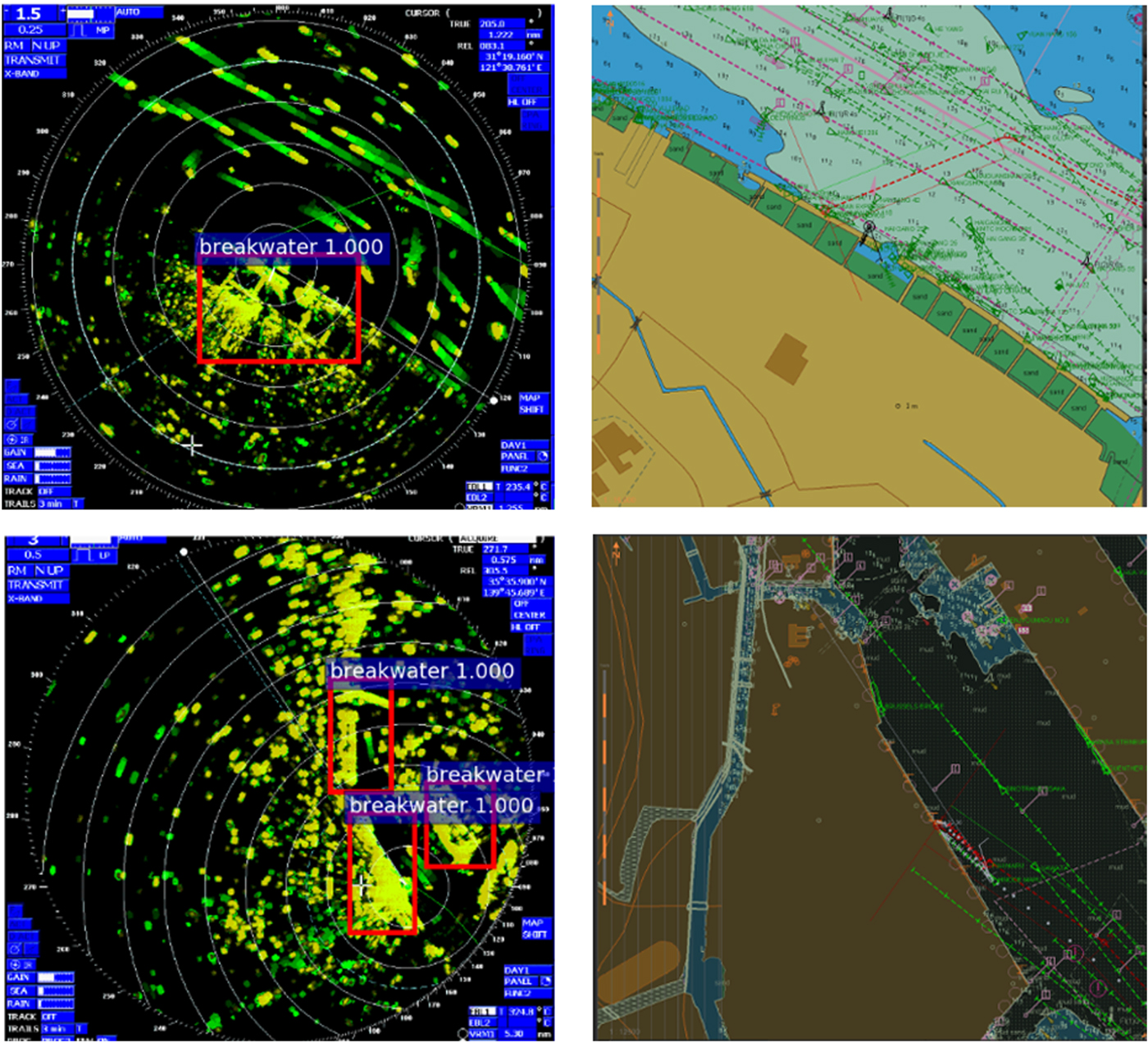

To improve the detection performance, we considered typical targets in the radar images with different characteristics and trained different models, which reduces the number of detection classes and avoids similarity among different targets. For example, we considered the waters near the Yangtze River estuary (Figure 9). According to the Shanghai Gang sailing directions, arriving ships mostly rely on lights and lightships for positioning and navigation. However, it is difficult to distinguish lighthouses and lightships from a large number of densely anchored ships. In addition, the position and shape of echoes from smooth beach shorelines and mudflats can change with the tide. Moreover, radar images usually do not show notable distinctions between steep and smooth beach shorelines, which are thus not suitable as reference points for registration and navigation. In these types of waters, structures with stable echoes, such as breakwaters and quayside edges, are preferred as typical targets, as shown in Figure 9. When these targets were used for the R-FCN, we obtained better detection performance. Specifically, target detection in the waters of the Yangtze River and waters near the Kobe Port estuary yielded an average precision for the breakwater of up to 0·4372, which is higher than that obtained with other targets.

Figure 9. Breakwater selected as typical target in the area of the Yangtze River and Kobe Port.

We also verified the feasibility of the R-FCN in the inland waters near the Port of Caledon, Vietnam; the results are listed in Table 2 and shown in Figure 10. The detection performance notably improved, with a mAP of 0·5178, which is significantly higher than the values previously obtained (0·2973 and 0·3649). This indicates that reducing the number of target classes improves the detection performance of the model in terms of both precision and recall.

Table 2. Detection of typical targets from multiple radars in waters near the Port of Caledon, Vietnam.

Figure 10. Detection of typical targets near the Port of Caledon, Vietnam.
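The mAP values compared above combine precision and recall for every class. The text does not state which average precision (AP) variant was used, so the sketch below assumes the all-point interpolated AP (area under the precision-recall curve after making precision monotonically non-increasing) and takes mAP as the unweighted mean over classes. The function names, the input format and the class names in the usage example are hypothetical.

```python
def average_precision(detections, num_ground_truth):
    """All-point interpolated AP for one class (assumed variant).

    detections: iterable of (score, is_true_positive) pairs, one per
    detected box, where is_true_positive says whether the box matched
    a ground-truth object. num_ground_truth: number of annotated objects.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)

    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))

    # Precision envelope: each value becomes the maximum precision
    # reached at this recall level or any higher one.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum rectangle areas under the enveloped precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    """Unweighted mean of per-class AP values (dict: class name -> AP)."""
    values = list(per_class_ap.values())
    if not values:
        raise ValueError("at least one class is required")
    return sum(values) / len(values)


if __name__ == "__main__":
    # Toy data: three detections for a class with two annotated objects.
    ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], 2)
    print(ap, mean_average_precision({"breakwater": ap, "buoy": 0.5}))
```

Because mAP is a mean over classes, removing classes with low AP from the detection task raises it, which is consistent with the improvement observed when fewer target classes are used.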

4.2. Data fusion

The affine transformation parameters k, θ, x_o, and y_o in Equation (17) were determined to perform fusion simulations on ENC data and CMR images at intervals of 5 min. The results are shown in Figure 11.

Figure 11. Data fusion of ENC and radar images every five minutes using fixed parameters.
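Once the four parameters are fixed, fusion amounts to mapping radar image coordinates into the chart frame. Equation (17) is not reproduced in this section, so the sketch below assumes the usual four-parameter form with scale k, rotation θ and translation (x_o, y_o); the sign convention of the rotation and the function name `similarity_transform` are assumptions, not taken from the paper.

```python
import math


def similarity_transform(points, k, theta, x_o, y_o):
    """Apply scale k, rotation theta (radians) and translation (x_o, y_o).

    Assumed form (may differ in convention from Equation (17)):
        x' = k * (x * cos(theta) - y * sin(theta)) + x_o
        y' = k * (x * sin(theta) + y * cos(theta)) + y_o
    points: iterable of (x, y) tuples; returns a list of (x', y') tuples.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [
        (k * (x * c - y * s) + x_o, k * (x * s + y * c) + y_o)
        for x, y in points
    ]


if __name__ == "__main__":
    # Hypothetical values: double the scale, rotate by 90 degrees, shift.
    radar_points = [(1.0, 0.0), (0.0, 1.0)]
    print(similarity_transform(radar_points, 2.0, math.pi / 2, 10.0, -5.0))
```

With fixed parameters, the same call can be repeated for each radar frame, which matches the fixed-parameter simulation at 5 min intervals.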

The algorithm performs well: after fusion, the shorelines from the ENC and the radar images coincide. Moreover, the shoreline and shoal are separated in Figures 12(a) and 12(b) without loss of radar information. The image details confirm successful fusion through the consistent position of the land in Figure 11(a) and that of the buoys in Figures 12(a)–(c). Thus, image fusion of ENC and marine radar images can be performed even without the identification of accurate feature points.

Figure 12. Image fusion using reference points on the left and right of the ship. (a) ENC; (b) CMR image; (c) Binarization; (d) Morphological; (e1) Left side of ship; (e2) Right side of ship.

We executed training and registration using an NVIDIA GeForce GTX 1080 GPU and Intel Core i5–7 processors. With this equipment, the average time for target detection on a single test image was 0·15 s, and registration was completed within 0·5 s. Both times are shorter than the 1·25 s that the marine radar antenna needs for one rotation at its maximum rate (48 rpm), suggesting that the proposed algorithm satisfies the requirement for real-time performance.
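The real-time argument compares processing time with the antenna rotation period, which is 60/48 = 1·25 s at 48 rpm. The sketch below makes the check explicit. It assumes, as a worst case, that detection and registration run one after the other so that their times add; the text does not say whether the 0·5 s figure already includes detection. The function names are hypothetical.

```python
def rotation_period_s(rpm):
    """Time in seconds for one antenna rotation at the given rate."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    return 60.0 / rpm


def fits_in_one_sweep(processing_time_s, rpm):
    """True if processing finishes before the next radar image arrives."""
    return processing_time_s < rotation_period_s(rpm)


if __name__ == "__main__":
    detection_s, registration_s = 0.15, 0.5   # values quoted in the text
    total_s = detection_s + registration_s    # worst-case assumption
    print(rotation_period_s(48), fits_in_one_sweep(total_s, 48))
```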

The success of the registration algorithm depends on the quality of marine radar image processing. In particular, if the radar detects contiguous objects near the reference points, these objects cause pixel adhesion, and the Canny operator cannot extract their edge features. We performed a simulation to demonstrate this situation. As shown in Figure 12(e2), the shoreline on the left side is steep without occlusion from other objects. However, the shoreline on the right of the ship is covered by a building. Taking the shoreline on the left and right of the ship as RIAs to perform automatic registration with the corresponding reference points yields the results shown in Figure 12.

Fusion using the RIA from the left of the ship successfully matches the shoreline and the bridge, but that from the right of the ship fails. The failure is caused by limitations in the working principle and performance of a CMR. Even with human assessment, it is difficult to find pixels that can accurately correspond to the ENC position among many erratic pixels. This complicates the search for registration reference points in such areas. Therefore, registration reference points in the RIA should be searched only when no object interference occurs.

Now, consider the case in which the ship is used as the reference point and the GNSS position has a large error, which drastically undermines fusion. In this case, fusion requires reference points other than the ship. For instance, if a buoy that is detected by radar with clear echoes and has a stable position on the ENC is used as a registration reference point, the algorithm yields the result shown in Figure 13.

Figure 13. Data fusion using other reference points detected by radar under large error of the ship GNSS. (a) ENC; (b) Typical target detection; (c) Binarization; (d) Morphological; (e) Canny operator; (f) Auto Registration Algorithm; (g) Registration.

The automatic fusion of ENC data and marine radar images is realised using the buoy echo detected by the radar, without relying on information about the ship position. The fusion results show that the ship position on the radar is consistent with the GNSS signal on the ENC, and the radar echo of the shoreline is accurately integrated with ENC data, verifying the high performance of the proposed algorithm under this scenario.
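To illustrate how reference points other than the ship can fix the transformation, the sketch below recovers k, θ, x_o and y_o from two matched point pairs. This is a generic construction, not the registration algorithm of this paper: it assumes a four-parameter transformation (scale, rotation, translation) and that two matched points are available, for example two contour points of a detected target. The function name and the input format are hypothetical.

```python
import cmath


def estimate_similarity(src, dst):
    """Recover (k, theta, x_o, y_o) from two matched point pairs.

    src, dst: sequences of two (x, y) tuples; src[i] corresponds to dst[i].
    Points are treated as complex numbers, so the transformation reads
    w = a * z + b with a = k * exp(i * theta) and b = x_o + i * y_o.
    """
    z1, z2 = complex(*src[0]), complex(*src[1])
    w1, w2 = complex(*dst[0]), complex(*dst[1])
    if z1 == z2:
        raise ValueError("source reference points must be distinct")
    a = (w2 - w1) / (z2 - z1)   # scale and rotation
    b = w1 - a * z1             # translation
    return abs(a), cmath.phase(a), b.real, b.imag


if __name__ == "__main__":
    # Hypothetical radar-frame points and their chart-frame counterparts.
    radar = [(0.0, 0.0), (1.0, 0.0)]
    chart = [(10.0, -5.0), (10.0, -3.0)]
    print(estimate_similarity(radar, chart))
```

Two exact correspondences determine the four parameters uniquely; with noisy echoes, more points and a least-squares fit would normally be preferred.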

5. CONCLUSIONS

High-level data fusion is not realised by conventional chart radar, as it depends on the measurement environment and is a cumbersome process requiring much experience. Recent studies have mostly focused on target detection over complete images and demand the adjustment of several parameters that relate more to image pre-processing than to RIAs. In addition, detection with different operators is limited to fixed types of detection features and is affected by radar echo quality and target deformation, often failing to produce ideal results. To avoid these limitations, we proposed a method based on deep learning to detect RIAs on marine radar images. The proposed method can extract varied and robust image feature maps through successive convolution layers, so that the reference points for registration obtained after contour extraction from the detected targets are more accurate and robust. We employed an affine transformation to fuse corresponding ENC data and marine radar images. The proposed algorithm was verified for fusion of ENC data and marine radar images retrieved from real ships sailing in narrow channels. Fusion can be performed even under considerable errors in both the ship positioning measurements and the heading signal. Fusion results demonstrate the effectiveness and consistency of the proposed algorithm. The results from data fusion between ENC data and marine radar images can help in the interpretation of navigation scenarios. Moreover, the fused image can provide the real-time dynamic information of the marine radar and suitably reflect the static navigation information of the ENC, thus improving aspects such as safety during navigation. In a broader sense, the proposed method can serve as a guideline for future integration and data fusion of multiple navigation sensors.

ACKNOWLEDGMENTS

This work was supported by the National Natural Science Foundation of China (Nos. 51579024, 51879027, 61374114) and the Fundamental Research Funds for the Central Universities (DMU no. 3132016311).