1. Introduction

A large number of biological sequences are currently available. The local score in sequence analysis, first defined in [Reference Karlin and Altschul8], quantifies the highest level of a certain quantity of interest, such as hydrophobicity or polarity, that can be found locally inside a given sequence. This allows us, for example, to detect atypical segments in biological sequences. In order to distinguish significantly interesting segments from ones that may have appeared by chance alone, it is necessary to evaluate the p-value of a given local score. Different results have already been established using different probabilistic models for sequences: models of independent and identically distributed (i.i.d.) variables [Reference Cellier, Charlot and Mercier2, Reference Karlin and Altschul8, Reference Karlin and Dembo9, Reference Mercier and Daudin12], Markovian models [Reference Hassenforder and Mercier7, Reference Karlin and Dembo9], and hidden Markov models [Reference Durbin, Eddy, Krogh and Mitchison4]. In this article we will focus on the Markovian model.

An exact method was proposed in [Reference Hassenforder and Mercier7] to calculate the distribution of the local score for a Markovian sequence, but this method is computationally time-consuming for long sequences (of length ${>}10^3$). Karlin and Dembo [Reference Karlin and Dembo9] established the limit distribution of the local score for a Markovian sequence and a random scoring scheme depending on the pairs of consecutive states in the sequence. They proved that, in the case of a non-lattice scoring scheme, the distribution of the local score is asymptotically a Gumbel distribution, as in the i.i.d. case. In the lattice case, they gave asymptotic lower and upper bounds of Gumbel type for the local score distribution. In spite of its importance, their result in the Markovian case is unfortunately rarely cited or used in the literature. A possible explanation could be that the random scoring scheme defined in [Reference Karlin and Dembo9] is more general than the ones classically used in practical approaches. In [Reference Fariello5] and [Reference Guedj6], the authors verify by simulations that the local score in a certain dependence model follows a Gumbel distribution, and use simulations to estimate the two parameters of this distribution.

In this article we study the Markovian case for a more classical scoring scheme. We propose a new approximation, given as an asymptotic equivalence when the length of the sequence tends to infinity, for the distribution of the local score of a Markovian sequence. We compare it to the asymptotic bounds of [Reference Karlin and Dembo9] and illustrate the improvements both in a simple application case and on the real data examples proposed in [Reference Karlin and Altschul8].

1.1. Mathematical framework

Let $(A_i)_{i \geq 0}$ be an irreducible and aperiodic Markov chain taking its values in a finite set $\mathcal{A}$ containing r states denoted $\alpha$, $\beta$, $\dots$ for simplicity. Let ${\bf P}=(p_{{\alpha}{\beta}})_{\alpha,\beta \in \mathcal{A}}$ be its transition probability matrix and $\pi$ its stationary frequency vector. In this work we suppose that P is positive (for all $\alpha,\beta,\ p_{\alpha\beta}>0$). We also suppose that the initial distribution of $A_0$ is given by $\pi$, so that the Markov chain is stationary. $\textrm{P}_{\alpha}$ will stand for the conditional probability given $\{A_0 = \alpha\}$. We consider a lattice score function $f\,:\, \mathcal{A}\rightarrow d\mathbb{Z}$, with $d\in\mathbb{N}$ being the lattice step. Note that, since $\mathcal{A}$ is finite, we have a finite number of possible scores. Since the Markov chain $(A_i)_{i \geq 0}$ is stationary, the distribution of $A_i$ is $\pi$ for every $i \geq 0$. We will simply denote by $\textrm{E}[\,f(A)]$ the average score.

In this article we make the hypothesis that the average score is negative, i.e.

\begin{eqnarray}\text{Hypothesis (1): }\ \ \textrm{E}[\,f(A)]=\sum_{\alpha}f({\alpha})\pi_{\alpha}<0. \end{eqnarray}

We will also suppose that for every ${\alpha} \in \mathcal{A}$ we have

\begin{eqnarray}\text{Hypothesis (2): }\ \ \textrm{P}_{\alpha}(\,f(A_1) > 0) > 0. \end{eqnarray}

Note that, since $\textrm{P}_{\alpha}(\,f(A_1) > 0) = \sum_{\beta\,:\,f(\beta)>0} p_{\alpha\beta}$ and thanks to the assumption ${p}_{{\alpha\beta}}>0$ for all $\alpha,\beta$, Hypothesis (2) is equivalent to the existence of $\beta \in \mathcal{A}$ such that $f({\beta}) > 0$.
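For a concrete illustration, the Python sketch below (NumPy assumed; the helper names `stationary_vector` and `check_hypotheses` are ours and purely illustrative) computes the stationary vector $\pi$ as the left eigenvector of P for the eigenvalue 1, and checks Hypotheses (1) and (2) for a given integer score vector f.

```python
import numpy as np

def stationary_vector(P):
    """Stationary frequency vector pi of an irreducible stochastic matrix (pi P = pi, sum = 1)."""
    vals, vecs = np.linalg.eig(np.asarray(P, dtype=float).T)
    v = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])  # left eigenvector for eigenvalue 1
    return v / v.sum()

def check_hypotheses(P, f):
    """Return pi, E[f(A)], and the truth values of Hypotheses (1) and (2)."""
    P = np.asarray(P, dtype=float)
    f = np.asarray(f)
    pi = stationary_vector(P)
    mean_score = float(pi @ f)          # E[f(A)] = sum_alpha f(alpha) pi_alpha
    hyp1 = mean_score < 0               # Hypothesis (1): negative average score
    # Hypothesis (2): P_alpha(f(A_1) > 0) = sum over beta with f(beta) > 0 of p_{alpha beta} > 0
    hyp2 = bool(np.all(P[:, f > 0].sum(axis=1) > 0))
    return pi, mean_score, hyp1, hyp2

# Two-state example with scores (+2, -2).
P = [[0.6, 0.4], [0.2, 0.8]]
f = [2, -2]
pi, mean_score, hyp1, hyp2 = check_hypotheses(P, f)
```

In this example $\pi=(1/3,2/3)$ and $\textrm{E}[\,f(A)]=2/3-4/3=-2/3$, so both hypotheses hold.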

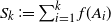

Let us introduce some definitions and notation. Let $S_{0}\coloneqq 0$ and $S_{k}\coloneqq \sum_{i=1}^kf(A_i)$ for $k\geq 1$ be the successive partial sums. Let $S^+$ be the maximal non-negative partial sum: $S^+\coloneqq \max\{0,S_k \,:\, k \geq 0\}$.

Further, let $\sigma^-\coloneqq \inf\{k\geq 1\,:\,S_k<0\}$ be the time of the first negative partial sum. Note that $\sigma^-$ is an almost surely (a.s.) finite stopping time due to Hypothesis (1). Let $Q_1 \coloneqq \max_{0 \leq k < \sigma^-} S_k$ be the height of the first excursion.

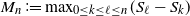

First introduced in [Reference Karlin and Altschul8], the local score, denoted $M_n$, is defined as the maximum segmental score for a sequence of length n: $M_{n}\coloneqq \max_{0\leq k\leq \ell\leq n}(S_{\ell}-S_{k})$.
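The definitions of $S_k$, $\sigma^-$, $Q_1$ and $M_n$ can be made concrete on a finite score sequence $f(A_1),\dots,f(A_n)$. The following Python sketch (NumPy assumed; function names are illustrative, not from the article) evaluates them by direct enumeration. On a finite sample $\sigma^-$ may not be observed, in which case the sketch returns `None` for it.

```python
import numpy as np

def partial_sums(scores):
    """S_0 = 0 and S_k = f(A_1) + ... + f(A_k) for k = 1..n."""
    return np.concatenate(([0], np.cumsum(scores))).astype(int)

def first_excursion(scores):
    """(sigma^-, Q_1) on a finite sample; sigma^- is None if no S_k < 0 occurs."""
    S = partial_sums(scores)
    neg = np.flatnonzero(S[1:] < 0)
    if neg.size == 0:
        return None, int(S.max())       # first excursion not completed within the sample
    sigma = int(neg[0]) + 1             # first k >= 1 with S_k < 0
    return sigma, int(S[:sigma].max())  # Q_1 = max_{0 <= k < sigma^-} S_k

def local_score_brute(scores):
    """M_n = max_{0 <= k <= l <= n} (S_l - S_k), by O(n^2) enumeration."""
    S = partial_sums(scores)
    return max(int(S[l] - S[k]) for k in range(len(S)) for l in range(k, len(S)))

scores = [1, 2, -1, -3, 2, 2, -1]       # partial sums 0, 1, 3, 2, -1, 1, 3, 2
sigma_minus, q1 = first_excursion(scores)
m_n = local_score_brute(scores)
```

For these scores $\sigma^-=4$, $Q_1=3$ and $M_7=S_6-S_4=4$.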

Note that in order to study the distributions of the variables $S^+$, $Q_1$, and $M_n$, which all take values in $d\mathbb{N}$, it suffices, after dividing all scores by d, to focus on the case $d=1$. We will thus consider $d=1$ throughout the article.

Remark 1.1. Karlin and Dembo [Reference Karlin and Dembo9] considered a more general model, with a random score function defined on pairs of consecutive states of the Markov chain: they associated with each transition $(A_{i-1},A_{i})=(\alpha,\beta)$ a bounded random score $X_{{\alpha}{\beta}}$ whose distribution depends on the pair $(\alpha,\beta)$. Moreover, they supposed that, for $i \neq j$ with $(A_{i-1},A_i)=(A_{j-1},A_j)=(\alpha,\beta)$, the random scores $X_{A_{i-1} A_i}$ and $X_{A_{j-1} A_j}$ are independent and identically distributed as $X_{\alpha\beta}$. Their model is also more general in that the scores are not restricted to the lattice case and may be continuous random variables.

The framework of this article corresponds to the case where the score function is deterministic and lattice, with $X_{A_{i-1}A_{i}}=f(A_i)$.

Note also that in our case Hypotheses (1) and (2) ensure so-called cycle positivity, i.e. the existence of some state $\alpha \in \mathcal{A}$ and of some $m \geq 2$ satisfying $\textrm{P}(\bigcap_{k=1}^{m-1} \{S_k>0\} \mid A_0=A_m=\alpha ) > 0$. In [Reference Karlin and Dembo9], in order to simplify the presentation, the authors strengthened the assumption of cycle positivity by assuming that $\textrm{P}(X_{\alpha\beta} > 0) > 0$ and $\textrm{P}(X_{\alpha\beta} < 0) > 0$ for all $\alpha, \beta \in \mathcal{A}$ (see (1.19) of [Reference Karlin and Dembo9]), but in fact cycle positivity is sufficient for their results to hold.

In Section 2 we first introduce a few more definitions and some notation. We then present the main results: in Theorem 2.1 we propose a recursive result for the exact distribution of the maximal non-negative partial sum $S^+$ for an infinite sequence, and in Theorem 2.3, based on the exact distribution of $S^+$, we further propose a new approximation for the tail behaviour of the height of the first excursion $Q_1$. We also establish in Theorem 2.4 an asymptotic equivalence result for the distribution of the local score $M_n$ when the length n of the sequence tends to infinity. Section 3 contains the proofs of the results of Section 2 and of some useful lemmas, which use techniques of Markov renewal theory and large deviations. In Section 4 we propose a computational method for deriving the quantities appearing in the main results. A simple scoring scheme is developed in Section 4.4, for which we compare our approximations to those proposed by Karlin and Dembo [Reference Karlin and Dembo9] in the Markovian case. In Section 4.5 we also show the improvements brought by the new approximations on the real data examples of [Reference Karlin and Altschul8].

2. Statement of the main results

2.1. Definitions and notation

Let $K_0 \coloneqq 0$ and, for $i \geq 1$, let $K_i \coloneqq \inf\{k > K_{i-1} \,:\, S_{k} - S_{K_{i-1}} < 0\}$ be the successive decreasing ladder times of $(S_k)_{k\geq 0}$. Note that $K_1 = \sigma^-$.

We now consider the subsequence $(A_i)_{ 0 \leq i \leq n}$ for a given length $n \in \mathbb{N}\setminus \{0\}$. Denote by $m(n)\coloneqq \max\{i \geq 0\ :\ K_i \leq n\}$ the random number of decreasing ladder times (excluding $K_0$) occurring up to time n. For every $i=1,\dots,m(n)$, we call the sequence $(A_j)_{K_{i-1}< j\leq K_{i}}$ the ith excursion above 0.

Note that due to the negative drift we have $\textrm{E}[K_1] < \infty$ (see Lemma 3.7) and $m(n) \to \infty$ a.s. when $n \to \infty$. With every excursion $i=1,\dots,m(n)$ we associate its maximal segmental score (also called height) $Q_{i}$ defined by $Q_i \coloneqq \max_{K_{i-1}\leq k < K_{i}} (S_k - S_{K_{i-1}})$.

Note that $M_{n}={\max}(Q_1,\dots,Q_{m(n)},Q^*)$, with $Q^*$ being the maximal segmental score of the last incomplete excursion $(A_j)_{K_{m(n)}< j\leq n}$. Mercier and Daudin [Reference Mercier and Daudin12] gave an alternative expression for $M_n$ using the Lindley process $(W_k)_{k \geq 0}$ describing the excursions above zero between the successive stopping times $(K_i)_{i\geq 0}$. With $W_0\coloneqq 0$ and $W_{k+1}\coloneqq {\max}(W_{k}+f(A_{k+1}),0)$, we have $M_{n}=\max_{0\leq k\leq n}W_k$.
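Both expressions of $M_n$ can be compared numerically. The Python sketch below (NumPy assumed; all function names are hypothetical) splits a score sequence into excursions at the decreasing ladder times, returning the heights $Q_1,\dots,Q_{m(n)}$ and $Q^*$, computes $M_n$ with the Lindley recursion in a single pass, and includes an $O(n^2)$ brute-force evaluation of the definition for cross-checking. Scores are simulated from a stationary two-state chain chosen only for illustration.

```python
import numpy as np

def ladder_decomposition(scores):
    """Ladder times K_0 = 0 < K_1 < ..., heights Q_1..Q_{m(n)}, and Q* of the last excursion."""
    S = np.concatenate(([0], np.cumsum(scores))).astype(int)
    ladder, heights, height = [0], [], 0
    for k in range(1, len(S)):
        rel = int(S[k] - S[ladder[-1]])
        if rel < 0:                      # k is the next decreasing ladder time K_i
            heights.append(height)       # Q_i = max of S_j - S_{K_{i-1}} for K_{i-1} <= j < K_i
            ladder.append(k)
            height = 0
        else:
            height = max(height, rel)
    return ladder, heights, height       # the last running maximum is Q*

def local_score_lindley(scores):
    """M_n = max_k W_k, with W_0 = 0 and W_{k+1} = max(W_k + f(A_{k+1}), 0)."""
    w = best = 0
    for x in scores:
        w = max(w + int(x), 0)
        best = max(best, w)
    return best

def local_score_brute(scores):
    """M_n = max_{0 <= k <= l <= n} (S_l - S_k), by O(n^2) enumeration (for checking)."""
    S = np.concatenate(([0], np.cumsum(scores)))
    return max(int(S[l] - S[k]) for k in range(len(S)) for l in range(k, len(S)))

def simulate_scores(P, f, n, rng):
    """Scores f(A_1), ..., f(A_n) of the stationary chain started from A_0 ~ pi."""
    P = np.asarray(P, dtype=float)
    vals, vecs = np.linalg.eig(P.T)
    pi = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    pi = pi / pi.sum()
    a = rng.choice(len(pi), p=pi)
    scores = np.empty(n, dtype=int)
    for i in range(n):
        a = rng.choice(len(pi), p=P[a])
        scores[i] = f[a]
    return scores

rng = np.random.default_rng(1)
scores = simulate_scores([[0.6, 0.4], [0.2, 0.8]], [2, -2], 200, rng)
ladder, heights, q_star = ladder_decomposition(scores)
m_n = local_score_lindley(scores)
```

For instance, the scores $(-1,2,-3,1,1,-5,3)$ give ladder times $0,1,3,6$, heights $Q_1=0$, $Q_2=Q_3=2$, and $Q^*=3=M_7$. The Lindley recursion is the natural choice for long sequences, since it needs O(n) time and constant memory.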

For every $\alpha, \beta \in \mathcal{A}$, we denote $q_{\alpha\beta}\coloneqq \textrm{P}_{\alpha}(A_{K_1} = \beta)$ and ${\bf Q}\coloneqq (q_{\alpha\beta})_{\alpha,\beta \in \mathcal{A}}$. Define $\mathcal{A}^-\coloneqq \{\alpha\in\mathcal{A}\,:\,f(\alpha)<0\}$ and $\mathcal{A}^+\coloneqq \{\alpha\in\mathcal{A}\,:\,f(\alpha)>0\}$. Note that the matrix Q is stochastic, with $q_{\alpha\beta}=0$ for $\beta \in \mathcal{A}\setminus \mathcal{A}^-$, since the step at time $K_1$ must be negative. Its restriction $\tilde{\mathbf{Q}}$ to $\mathcal{A}^-$ is stochastic and irreducible, since $q_{\alpha \beta}\geq p_{\alpha \beta} > 0$ for all $\alpha, \beta \in \mathcal{A}^-$ (if $f(\beta)<0$, then $A_1=\beta$ implies $K_1=1$). The states $(A_{K_i})_{i \geq 1}$ of the Markov chain at the end of the successive excursions define a Markov chain on $\mathcal{A}^-$ with transition probability matrix $\tilde{\mathbf{Q}}$.

For every $i \geq 2$ we thus have $\textrm{P}(A_{K_i} = \beta \mid A_{K_{i-1}} = \alpha ) = q_{\alpha \beta}$ if $\alpha, \beta \in \mathcal{A}^-$ and 0 otherwise. Denote by $\tilde{z} > 0$ the stationary frequency vector of the irreducible stochastic matrix $\tilde{\mathbf{Q}}$, and let $z\coloneqq (z_{\alpha})_{\alpha\in\mathcal{A}}$, with $z_{\alpha}=\tilde{z}_{\alpha} > 0$ for $\alpha\in\mathcal{A}^-$ and $z_{\alpha}=0$ for $\alpha \in \mathcal{A} \setminus \mathcal{A}^-$. Note that z is invariant for the matrix Q, i.e. $z{\bf Q}=z$.
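The matrix Q and the vector z can also be approximated numerically. The Python sketch below (NumPy assumed; the helper names and the cap B are our own choices, not the article's method) propagates the law of $(A_n,S_n)$ on the event $\{\sigma^->n\}$ for $0\leq S_n\leq B$ and records the state in which the partial sum first becomes negative. Mass pushed above B is discarded, which costs at most $\textrm{P}_\alpha(S^+>B)$. The sketch then extracts z from the restriction $\tilde{\mathbf{Q}}$ to $\mathcal{A}^-$.

```python
import numpy as np

def excursion_end_matrix(P, f, B=200, tol=1e-13, max_iter=100_000):
    """Approximate q[a, b] = P_a(A_{K_1} = b), the state in which the first excursion ends."""
    P = np.asarray(P, dtype=float)
    f = np.asarray(f, dtype=int)
    r = len(f)
    s_grid = np.arange(B + 1)
    Q = np.zeros((r, r))
    for a in range(r):
        mass = np.zeros((r, B + 1))            # mass[g, s] = P_a(A_n = g, S_n = s, sigma^- > n)
        mass[a, 0] = 1.0                       # A_0 = a, S_0 = 0
        for _ in range(max_iter):
            inc = P.T @ mass                   # inc[b, s]: mass stepping into b from partial sum s
            new = np.zeros_like(mass)
            for b in range(r):
                s_new = s_grid + f[b]
                down = s_new < 0               # first negative partial sum: K_1 reached in state b
                Q[a, b] += inc[b, down].sum()
                keep = (s_new >= 0) & (s_new <= B)   # mass above B is dropped (truncation)
                new[b, s_new[keep]] += inc[b, keep]
            mass = new
            if mass.sum() < tol:
                break
    return Q

def excursion_stationary_vector(Q, f):
    """z: stationary vector of Q restricted to A^- = {f < 0}, padded with zeros elsewhere."""
    neg = np.asarray(f) < 0
    Qt = Q[np.ix_(neg, neg)]
    vals, vecs = np.linalg.eig(Qt.T)
    zt = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    z = np.zeros(len(neg))
    z[neg] = zt / zt.sum()
    return z

P = [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3], [0.2, 0.4, 0.4]]
f = [1, -1, -2]
Q = excursion_end_matrix(P, f)
z = excursion_stationary_vector(Q, f)
```

On this three-state example (with $\textrm{E}[\,f(A)]<0$) one can verify numerically the properties stated above: the rows of Q sum to 1, $q_{\alpha\beta}=0$ outside $\mathcal{A}^-$, $q_{\alpha\beta}\geq p_{\alpha\beta}$ on $\mathcal{A}^-$, and $z{\bf Q}=z$.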

Remark 2.1. Note that in Karlin and Dembo’s Markovian model of [Reference Karlin and Dembo9] the matrix Q is irreducible, thanks to their random scoring function and to their hypotheses recalled in Remark 1.1.

Using the strong Markov property, conditionally on $(A_{K_i})_{i \geq 0}$ the random variables $(Q_i)_{i \geq 1}$ are independent, with the distribution of $Q_i$ depending only on $A_{K_{i-1}}$ and $A_{K_{i}}$.

For every $\alpha \in \mathcal{A}$, $\beta \in \mathcal{A}^-$, and $y \geq 0$, let $F_{Q_1,\alpha, \beta}(y)\coloneqq \textrm{P}_\alpha(Q_1 \leq y \mid A_{\sigma^-} = \beta)$ and $F_{Q_1,\alpha}(y)\coloneqq \textrm{P}_\alpha(Q_1 \leq y)$. Note that for any $\alpha \in \mathcal{A}^-$ and $i \geq 1$, $F_{Q_1,\alpha, \beta}$ represents the cumulative distribution function (CDF) of the height $Q_i$ of the ith excursion given that it starts in state $\alpha$ and ends in state $\beta$, i.e. $F_{Q_1,\alpha, \beta}(y)=\textrm{P}(Q_i \leq y \mid A_{K_i} = \beta, A_{K_{i-1}} = \alpha)$, whereas $F_{Q_1,\alpha}$ represents the CDF of $Q_i$ conditionally on the ith excursion starting in state $\alpha$, i.e. $F_{Q_1,\alpha}(y)=\textrm{P}(Q_i \leq y \mid A_{K_{i-1}} = \alpha)$. Since $q_{\alpha\beta}=0$ for $\beta \notin \mathcal{A}^-$, we thus have

\[F_{Q_1,\alpha}(y)= \sum_{\beta \in \mathcal{A}^-} F_{Q_1,\alpha, \beta}(y)\, q_{\alpha \beta}.\]

We also introduce the stopping time $\sigma^+\coloneqq \inf\{k\geq 1\,:\,S_k>0\}$ with values in $\mathbb{N} \cup \{\infty\}$. Due to Hypothesis (1) we have $\textrm{P}_\alpha(\sigma^+ < \infty) < 1$ for all $\alpha\in \mathcal{A}$.

For every $\alpha, \beta \in \mathcal{A}$ and $\xi > 0$, let $L_{\alpha\beta}(\xi)\coloneqq \textrm{P}_{\alpha}(S_{\sigma^+} \leq \xi,\, \sigma^+<\infty,\, A_{\sigma^+}=\beta)$. Note that $L_{\alpha\beta}(\xi) = 0$ for $\beta \in \mathcal{A} \setminus \mathcal{A}^+$, and $L_{\alpha\beta}(\infty) \leq \textrm{P}_\alpha(\sigma^+ < \infty) < 1$; therefore

\[\int_{0}^{\infty} \textrm{d} L_{\alpha\beta}(\xi) = L_{\alpha\beta}(\infty) <1.\]

Let us also denote by $L_{\alpha}(\xi)\coloneqq \sum_{\beta \in \mathcal{A}^+}L_{\alpha \beta}(\xi) = \textrm{P}_{\alpha}(S_{\sigma^+} \leq \xi,\, \sigma^+<\infty)$ the conditional CDF of the first positive partial sum, when it exists, given that the Markov chain starts in state $\alpha$, and $L_{\alpha}(\infty) \coloneqq \lim_{\xi \to \infty} L_{\alpha}(\xi) = \textrm{P}_{\alpha}(\sigma^+<\infty)$.

For any $\theta \in \mathbb{R}$ we introduce the matrix ${\boldsymbol \Phi}(\theta)\coloneqq \left(p_{\alpha\beta}\cdot{\exp}(\theta f(\beta))\right)_{\alpha,\beta \in \mathcal{A}}$. Since the transition matrix P is positive, by the Perron–Frobenius theorem the spectral radius $\rho(\theta) > 0$ of the matrix ${\boldsymbol \Phi}(\theta)$ coincides with its dominant eigenvalue, for which there exists a unique positive right eigenvector $u(\theta)=(u_i(\theta))_{1\leq i \leq r}$ (seen as a column vector) normalized so that $\sum_{i=1}^r u_i(\theta)=1$. Moreover, $\theta \mapsto \rho(\theta)$ is differentiable and strictly log-convex (see [Reference Dembo and Karlin3, Reference Karlin and Ost10, Reference Lancaster11]). In Lemma 3.5 we prove that $\rho'(0) = \textrm{E}[\,f(A)]$, hence $\rho'(0) < 0$ by Hypothesis (1). Together with the strict log-convexity of $\rho$, the fact that $\rho(0)= 1$, and the fact that $\rho(\theta)\geq p_{\beta\beta}\textrm{e}^{\theta f(\beta)}\to\infty$ as $\theta\to\infty$ for any $\beta$ with $f(\beta)>0$ (such a $\beta$ exists by Hypothesis (2)), this implies that there exists a unique $\theta^* > 0$ such that $\rho(\theta^*)=1$ (see [Reference Dembo and Karlin3] for more details).
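The constant $\theta^*$ and the eigenvector $u(\theta^*)$ can be obtained numerically. The Python sketch below (NumPy assumed; function names are ours) evaluates $\rho(\theta)$ with `numpy.linalg.eigvals` and locates $\theta^*$ by bisection, relying on the convexity argument above: $\rho(\theta)\leq 1$ on $[0,\theta^*]$ and $\rho(\theta)>1$ beyond $\theta^*$. Bisection is simply one convenient root-finding choice, not necessarily the procedure used in Section 4.

```python
import numpy as np

def phi_matrix(P, f, theta):
    """Phi(theta) = (p_{ab} exp(theta f(b)))_{a,b}: column b of P scaled by exp(theta f(b))."""
    return np.asarray(P, dtype=float) * np.exp(theta * np.asarray(f, dtype=float))[None, :]

def spectral_radius(P, f, theta):
    """rho(theta), the spectral radius of Phi(theta)."""
    return float(np.max(np.abs(np.linalg.eigvals(phi_matrix(P, f, theta)))))

def theta_star(P, f, tol=1e-12):
    """Unique theta* > 0 with rho(theta*) = 1, by bisection (assumes Hypotheses (1) and (2))."""
    hi = 1.0
    while spectral_radius(P, f, hi) <= 1.0:   # expand until rho(hi) > 1
        hi *= 2.0
        if hi > 1e6:
            raise ValueError("rho(theta) <= 1 for large theta: is Hypothesis (2) satisfied?")
    lo = 0.0                                  # invariant: lo <= theta* < hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if spectral_radius(P, f, mid) > 1.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def perron_vector(P, f, theta):
    """Positive right eigenvector u(theta) of Phi(theta), normalized so that sum(u) = 1."""
    vals, vecs = np.linalg.eig(phi_matrix(P, f, theta))
    u = np.real(vecs[:, np.argmax(np.real(vals))])
    return u / u.sum()                        # also fixes the arbitrary sign of the eigenvector

P = [[0.3, 0.7], [0.3, 0.7]]
f = [1, -1]
ts = theta_star(P, f)
u = perron_vector(P, f, ts)
```

With identical rows $(0.3,0.7)$ and scores $(+1,-1)$, $\rho(\theta)=0.3\,\textrm{e}^{\theta}+0.7\,\textrm{e}^{-\theta}$, so $\theta^*=\log(7/3)$ and $u(\theta^*)=(1/2,1/2)$.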

2.2. Main results: Improvements on the distribution of the local score

Let $\alpha \in \mathcal{A}$. We start by giving a result which allows us to recursively compute the CDF of the maximal non-negative partial sum $S^+$. We denote by $F_{S^+,\alpha}$ the CDF of $S^+$ conditionally on starting in state $\alpha$: $F_{S^+,\alpha}(\ell) \coloneqq \textrm{P}_{\alpha}(S^+\leq\ell)$ for all $\ell \in \mathbb{N}$. For every $k \in \mathbb{N}\setminus \{0\}$ and $\beta \in \mathcal{A}$, we also let $L^{(k)}_{\alpha \beta}\coloneqq \textrm{P}_\alpha(S_{\sigma^+} = k,\, \sigma^+ < \infty,\, A_{\sigma^+} = \beta)$. Note that $L^{(k)}_{\alpha \beta}=0$ for $\beta \in \mathcal{A} \setminus \mathcal{A}^+$ and

\[L_{\alpha}(\infty) = \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\infty} L^{(k)}_{\alpha \beta}.\]

The following result gives a recurrence relation for the double sequence $(F_{S^+,\alpha}{(\ell )})_{\alpha,\ell}$ involving the coefficients $L_{\alpha\beta}^{(k)}$, which can be computed recursively (see Section 4.2).
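Section 4.2 computes the coefficients $L^{(k)}_{\alpha\beta}$ recursively. As a simple alternative for experimentation (our own truncation-based approximation, not the article's method), one can propagate the law of $(A_n,S_n)$ on the event $\{\sigma^+>n\}$ and collect the mass that first crosses above 0. Partial sums below a floor $-B$ are discarded; under the negative drift of Hypothesis (1), such paths return above 0 only with probability roughly of order $\textrm{e}^{-\theta^* B}$. The Python sketch below (NumPy assumed; names hypothetical) stores $L^{(k)}_{\alpha\beta}$ in `L[a, b, k-1]`, using that $0<S_{\sigma^+}\leq \max_\beta f(\beta)$.

```python
import numpy as np

def ladder_height_probs(P, f, B=60, tol=1e-13, max_iter=100_000):
    """Approximate L[a, b, k-1] = P_a(S_{sigma+} = k, sigma+ < inf, A_{sigma+} = b)."""
    P = np.asarray(P, dtype=float)
    f = np.asarray(f, dtype=int)
    r = len(f)
    K = max(int(f.max()), 1)             # S_{sigma+} <= max_b f(b)
    j_grid = np.arange(B + 1)            # column j stores the partial sum s = -j
    L = np.zeros((r, r, K))
    for a in range(r):
        mass = np.zeros((r, B + 1))      # mass[g, j] = P_a(A_n = g, S_n = -j, sigma+ > n)
        mass[a, 0] = 1.0
        for _ in range(max_iter):
            inc = P.T @ mass             # inc[b, j]: mass stepping into b from s = -j
            new = np.zeros_like(mass)
            for b in range(r):
                s_new = f[b] - j_grid
                up = s_new > 0           # first positive partial sum: sigma+ reached in state b
                L[a, b, s_new[up] - 1] += inc[b, up]
                keep = (s_new <= 0) & (s_new >= -B)   # mass below -B is dropped (truncation)
                new[b, -s_new[keep]] += inc[b, keep]
            mass = new
            if mass.sum() < tol:
                break
    return L

# +1/-1 walk with up-probability 0.3 (identical rows): classically P(sigma+ < inf) = 0.3/0.7.
P = [[0.3, 0.7], [0.3, 0.7]]
f = [1, -1]
L = ladder_height_probs(P, f)
L_inf = L.sum(axis=(1, 2))               # L_a(infinity) = P_a(sigma+ < infinity)
```

Here $L^{(1)}_{\alpha\beta}\approx 3/7$ for the positive state $\beta$, and all other coefficients vanish.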

Theorem 2.1. (Exact result for the distribution of $S^+$). For all $\alpha\in\mathcal{A}$ and $\ell \geq 1$:

\begin{align*}F_{S^+,\alpha}(0) &=\textrm{P}_\alpha(\sigma^+ = \infty) = 1 - L_{\alpha}(\infty),\\F_{S^+,\alpha}{(\ell )} &=1 - L_{\alpha}(\infty) + \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\ell} L^{(k)}_{\alpha \beta} \ F_{S^+,\beta}{(\ell-k)}.\end{align*}

The proof will be given in Section 3.
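The recursion of Theorem 2.1 translates directly into code. In the Python sketch below (NumPy assumed; the name `cdf_S_plus` and the array layout are ours), `L[a, b, k-1]` stores $L^{(k)}_{\alpha\beta}$ for $k=1,\dots,K$. Since $L^{(k)}_{\alpha\beta}=0$ for $k>K$, the inner sum can stop at $\min(\ell,K)$, and summing over all $\beta$ is harmless because $L^{(k)}_{\alpha\beta}=0$ outside $\mathcal{A}^+$.

```python
import numpy as np

def cdf_S_plus(L, ell_max):
    """F[a, l] = P_a(S^+ <= l) for l = 0..ell_max, via the recursion of Theorem 2.1."""
    L = np.asarray(L, dtype=float)
    r, _, K = L.shape
    L_inf = L.sum(axis=(1, 2))              # L_a(infinity) = P_a(sigma+ < infinity)
    F = np.zeros((r, ell_max + 1))
    F[:, 0] = 1.0 - L_inf                   # F_{S+,a}(0) = P_a(sigma+ = infinity)
    for ell in range(1, ell_max + 1):
        F[:, ell] = 1.0 - L_inf
        for k in range(1, min(ell, K) + 1):
            # add sum over b of L^{(k)}_{ab} F_{S+,b}(ell - k)
            F[:, ell] += L[:, :, k - 1] @ F[:, ell - k]
    return F

# +1/-1 walk with up-probability 0.3: L^{(1)}_{a, +} = 3/7 for both starting states.
L = np.zeros((2, 2, 1))
L[:, 0, 0] = 3 / 7
F = cdf_S_plus(L, 10)
```

In this example the recursion reduces to $F(\ell)=1-3/7+(3/7)F(\ell-1)$, which reproduces the classical geometric law $F_{S^+,\alpha}(\ell)=1-(3/7)^{\ell+1}$.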

In Theorem 2.2 we obtain an asymptotic result for the tail behaviour of $S^+$ using Theorem 2.1 and ideas inspired by [Reference Karlin and Dembo9] adapted to our framework (see also the discussion in Remark 1.1). Before stating this result we need to introduce some more notation.

For every $\alpha, \beta \in \mathcal{A}$ and $k \in \mathbb{N}$ we denote

\[G_{\alpha\beta}^{(k)} \coloneqq \frac{u_{\beta}(\theta^{*})}{u_{\alpha}(\theta^*)} \textrm{e}^{\theta^* k }L_{\alpha\beta}^{(k)},\qquad G_{\alpha\beta}{(k)} \coloneqq \sum_{\ell=0}^{k} G_{\alpha\beta}^{(\ell)},\qquad G_{\alpha\beta}{(\infty)} \coloneqq \sum_{k=0}^{\infty} G_{\alpha\beta}^{(k)}.\]

The matrix ${\bf G(\infty)}\coloneqq (G_{\alpha\beta}(\infty))_{\alpha, \beta}$ is stochastic (see Lemma 3.3); the subset $\mathcal{A}^+$ is a recurrent class, whereas the states in $\mathcal{A}\setminus \mathcal{A}^+$ are transient. The restriction of ${\bf G(\infty)}$ to $\mathcal{A}^+$ is stochastic and irreducible; we denote by $\tilde{w} > 0$ the corresponding stationary frequency vector. Define $w=(w_{\alpha})_{\alpha\in\mathcal{A}}$, with $w_{\alpha}=\tilde{w}_{\alpha} > 0$ for $\alpha\in\mathcal{A}^+$ and $w_{\alpha}=0$ for $\alpha \in \mathcal{A}\setminus \mathcal{A}^+$. The vector w is invariant for $\bf G(\infty)$, i.e. $w{\bf G(\infty)}=w$.
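Given $\theta^*$, $u(\theta^*)$ and the coefficients $L^{(k)}_{\alpha\beta}$, the matrix ${\bf G(\infty)}$ and the vector w can be sketched as follows (Python with NumPy; the helper names and the convention `L[a, b, k-1]` $=L^{(k)}_{\alpha\beta}$ are our own). The term $k=0$ vanishes since $L^{(0)}_{\alpha\beta}=0$. In the $\pm1$ example with identical rows $(0.3,0.7)$, we have $\theta^*=\log(7/3)$, $u=(1/2,1/2)$ and $L^{(1)}_{\alpha\beta}=3/7$ for the positive state, so every row of ${\bf G(\infty)}$ puts all its mass on that state and $w=(1,0)$.

```python
import numpy as np

def G_infinity(L, theta_star, u):
    """G(inf)_{ab} = sum_k (u_b / u_a) exp(theta* k) L^{(k)}_{ab}, with L[a, b, k-1] = L^{(k)}_{ab}."""
    L = np.asarray(L, dtype=float)
    u = np.asarray(u, dtype=float)
    k = np.arange(1, L.shape[2] + 1)
    ratio = u[None, :, None] / u[:, None, None]          # entry [a, b] is u_b / u_a
    G_k = ratio * np.exp(theta_star * k)[None, None, :] * L
    return G_k.sum(axis=2)

def w_vector(G, f):
    """w: stationary vector of G(inf) restricted to A^+ = {f > 0}, padded with zeros."""
    pos = np.asarray(f) > 0
    Gt = G[np.ix_(pos, pos)]
    vals, vecs = np.linalg.eig(Gt.T)
    wt = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    w = np.zeros(len(pos))
    w[pos] = wt / wt.sum()
    return w

L = np.zeros((2, 2, 1))
L[:, 0, 0] = 3 / 7
G = G_infinity(L, np.log(7 / 3), [0.5, 0.5])
w = w_vector(G, [1, -1])
```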

Remark 2.2. Note that in Karlin and Dembo’s Markovian model of [Reference Karlin and Dembo9] the matrix ${\bf G(\infty)}$ is positive, hence irreducible, thanks to their random scoring function and to their hypotheses recalled in Remark 1.1.

Remark 2.3. In Section 4.3 we detail a recursive procedure for computing the CDF $F_{S^+,\alpha}$, based on Theorem 2.1. Note also that, for every $\alpha, \beta \in \mathcal{A}$, only finitely many of the terms $L_{\alpha\beta}^{(k)}$ are non-zero (since $0 < S_{\sigma^+} \leq \max_{\gamma \in \mathcal{A}} f(\gamma)$), and therefore the sum defining $G_{\alpha \beta}(\infty)$ has only finitely many non-null terms.

The following result is the analogue, in our setting, of Lemma 4.3 of [Reference Karlin and Dembo9].

Theorem 2.2. (Asymptotics for the tail behaviour of $S^+$.) For every $\alpha\in \mathcal{A}$ we have

\begin{equation}\lim_{k \rightarrow +\infty} \frac{{ \textrm{e}}^{{\theta^{*}}{k}}\textrm{P}_{\alpha}(S^+>k)}{u_{\alpha}(\theta^{*})} = \frac{1}{c} \cdot \sum_{\gamma \in \mathcal{A}^{+}} \frac{w_{\gamma}}{u_{\gamma}(\theta^{*})}\sum_{\ell \geq 0} (L_{\gamma}(\infty)-L_{\gamma}(\ell )) \textrm{e}^{\theta^{*}\ell }\coloneqq c(\infty),\end{equation}

where $w=(w_{\alpha})_{\alpha\in \mathcal{A}}$ is the stationary frequency vector of the matrix ${\bf G(\infty)}$ and

\[c\coloneqq \sum_{\gamma,\beta \in \mathcal{A}^+} \frac{w_{\gamma}}{u_{\gamma}(\theta^*)}u_{\beta}(\theta^*) \sum_{\ell \geq 0} \ell \cdot \textrm{e}^{\theta^*{\ell }} \, L^{(\ell)}_{\gamma \beta}.\]

The proof is deferred to Section 3.

Remark 2.4. Note that the above sums over $\ell$ have only finitely many non-null terms. We also have the following alternative expression for $c(\infty)$:

\[c(\infty) = \frac{1}{c(\textrm{e}^{\theta^*}-1)} \cdot \sum_{\gamma \in \mathcal{A}^+} \frac{w_{\gamma}}{u_{\gamma}(\theta^*)} \big\{\textrm{E}_\gamma\big[\textrm{e}^{\theta^* S_{\sigma^+}};\, \sigma^+ < \infty\big] - L_\gamma(\infty)\big\}.\]

Indeed, by the summation by parts formula

\[\sum_{\ell=m}^k f_\ell (g_{\ell+1}-g_\ell) = f_{k+1} g_{k+1} - f_m g_m - \sum_{\ell=m}^k (\,f_{\ell+1}-f_\ell)g_{\ell+1},\]

applied with $m=0$, $f_\ell = L_{\gamma}(\infty)-L_{\gamma}(\ell)$ and $g_\ell = \textrm{e}^{\theta^*\ell}$ (recall that $L_{\gamma}(0)=0$), we obtain the following, where the limit term vanishes because $L_{\gamma}(k) = L_{\gamma}(\infty)$ for all $k$ large enough:

\begin{align*}& \sum_{\ell = 0}^{\infty} (L_{\gamma}(\infty)-L_{\gamma}(\ell )) \textrm{e}^{\theta^*\ell }= \frac{1}{\textrm{e}^{\theta^*}-1} \sum_{\ell = 0}^{\infty} (L_{\gamma}(\infty)-L_{\gamma}(\ell )) (\textrm{e}^{\theta^*(\ell+1) } - \textrm{e}^{\theta^*\ell } )\\[3pt] & = \frac{1}{\textrm{e}^{\theta^*}-1}\\[3pt] &\quad\times \bigg\{ \lim_{k \to \infty} (L_{\gamma}(\infty)-L_{\gamma}(k )) \textrm{e}^{\theta^*k } - L_{\gamma}(\infty) - \sum_{\ell = 0}^{\infty} (L_{\gamma}(\ell )-L_{\gamma}(\ell+1) ) \textrm{e}^{\theta^*(\ell+1) } \bigg\}\\[3pt] & = \frac{1}{\textrm{e}^{\theta^*}-1} \bigg\{ - L_{\gamma}(\infty) + \sum_{\ell = 0}^{\infty} \textrm{e}^{\theta^*(\ell+1) } \, \textrm{P}_{\gamma}(S_{\sigma^+} = \ell+1 , \, \sigma^+<\infty) \bigg\}\\[3pt] & = \frac{1}{\textrm{e}^{\theta^*}-1} \big\{\textrm{E}_\gamma\big[\textrm{e}^{\theta^* S_{\sigma^+}};\, \sigma^+ < \infty\big] - L_\gamma(\infty)\big\}.\end{align*}
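As a numerical sanity check of Remark 2.4, the Python sketch below evaluates $c$ and $c(\infty)$ both from (3) and from the alternative expression. The arrays $L^{(\ell)}_{\gamma\beta}$, $w$ and $u(\theta^*)$ are assumed to have been computed elsewhere; the function name `c_constants` and the array layout `L[l, g, b]` are our own hypothetical choices. The worked example is a walk with i.i.d. steps $+1$ (probability $1/3$) and $-1$ (probability $2/3$), encoded as a two-state chain (state 0 has score $-1$, state 1 has score $+1$, so $\mathcal{A}^+=\{1\}$). For this walk $\theta^*=\log 2$, $u(\theta^*)$ is constant, $w=(0,1)$ and $S_{\sigma^+}=1$ with probability $p/q=1/2$ by the gambler's ruin formula.

```python
import numpy as np


def c_constants(L, w, u, theta, A_plus):
    """Return (c, c_inf from (3), c_inf from Remark 2.4).

    L      : array of shape (K+1, n, n), L[l, g, b] = L^{(l)}_{g b}, L[0] = 0.
    w, u   : stationary vector of G(inf) and vector u(theta^*), assumed given.
    theta  : the exponent theta^* > 0.
    A_plus : indices of the states of A^+.
    """
    L = np.asarray(L, dtype=float)
    ells = np.arange(L.shape[0])
    e = np.exp(theta * ells)                       # e^{theta^* l}
    L_cum = np.cumsum(L.sum(axis=2), axis=0)       # L_g(l), shape (K+1, n)
    L_inf = L_cum[-1]                              # L_g(infinity)
    # c = sum_{g,b in A+} (w_g / u_g) u_b sum_l l e^{theta l} L^{(l)}_{g b}
    inner = np.einsum("l,lgb->gb", ells * e, L)
    c = sum(w[g] / u[g] * u[b] * inner[g, b] for g in A_plus for b in A_plus)
    # Formula (3); terms with l >= K vanish since L_g(l) = L_g(inf) there.
    tail = ((L_inf - L_cum) * e[:, None]).sum(axis=0)
    c_inf = sum(w[g] / u[g] * tail[g] for g in A_plus) / c
    # Remark 2.4: E_g[e^{theta S_{sigma+}}; sigma+ < inf] - L_g(inf).
    mgf = np.einsum("l,lgb->g", e, L)
    c_inf_alt = sum(w[g] / u[g] * (mgf[g] - L_inf[g]) for g in A_plus)
    c_inf_alt /= c * (np.exp(theta) - 1.0)
    return c, c_inf, c_inf_alt


# +/-1 walk: from either state, S_{sigma+} = 1 and A_{sigma+} = 1 w.p. 1/2.
L_walk = [[[0.0, 0.0], [0.0, 0.0]],
          [[0.0, 0.5], [0.0, 0.5]]]
print(c_constants(L_walk, [0.0, 1.0], [1.0, 1.0], np.log(2.0), [1]))
# approximately (1, 0.5, 0.5)
```

Since the summation by parts identity is exact, the two values of $c(\infty)$ agree for any non-negative input arrays, not only for genuine model quantities.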

Before stating the next results, let us define, for every integer $\ell < 0$ and $\alpha, \beta \in \mathcal{A}$, $Q_{\alpha\beta}^{(\ell)}\coloneqq \textrm{P}_{\alpha}(S_{\sigma^-}=\ell , \, A_{\sigma^-}=\beta)$. Note that $Q_{\alpha\beta}^{(\ell)} = 0$ for $\beta \in \mathcal{A} \setminus \mathcal{A}^-$. In Section 4 we give a recursive method for computing these quantities.

Using Theorem 2.2 we obtain the following result, where the notation $f_k \underset{k\rightarrow\infty}{\sim} g_k$ means $f_k - g_k = o(g_k)$, or equivalently $\displaystyle \frac{f_k}{g_k} \underset{k\rightarrow\infty}{\rightarrow} 1$.

Theorem 2.3. (Asymptotic approximation for the tail behaviour of $Q_1$.) We have the following asymptotic result on the tail distribution of the height of the first excursion: for every $\alpha \in \mathcal{A}$ we have

\begin{equation}\textrm{P}_{\alpha}(Q_1>k)\underset{k\rightarrow\infty}{\sim}\textrm{P}_{\alpha}(S^+>k)-\sum_{\ell<0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}\left (S^+>k-\ell\right )\cdot Q_{\alpha\beta}^{(\ell)}.\end{equation}

The proof will be given in Section 3.

Remark 2.5. Note that, as a straightforward consequence of Theorems 2.2 and 2.3, we recover the following limit result of Karlin and Dembo [Reference Karlin and Dembo9] (Lemma 4.4):

\[\lim_{k \rightarrow +\infty} \frac{\textrm{e}^{\theta^*{k}}\textrm{P}_{\alpha}(Q_1>k)}{u_{\alpha}(\theta^*)}= c(\infty)\bigg\{1 - \sum_{\beta \in \mathcal{A}^-} \frac{u_{\beta}(\theta^*)}{u_{\alpha}(\theta^*)} \sum_{\ell < 0} \textrm{e}^{\theta^*\ell }Q^{(\ell)}_{\alpha \beta}\bigg\}.\]
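The limit constant of Remark 2.5 is a finite sum once $c(\infty)$, $u(\theta^*)$ and the $Q^{(\ell)}$ are available. The Python sketch below assumes these inputs are given; the function name `first_excursion_constant` and the dictionary layout `Q_levels[l][alpha][beta]` are hypothetical. For the $\pm 1$ walk with up-probability $1/3$ (state 0 has score $-1$, so $\mathcal{A}^-=\{0\}$), we have $c(\infty)=1/2$, $\theta^*=\log 2$, constant $u$, and $S_{\sigma^-}=-1$ surely, so the constant is $\tfrac12(1-\tfrac12)=\tfrac14$.

```python
import math


def first_excursion_constant(c_inf, u, Q_levels, theta, alpha, A_minus):
    """Limit of e^{theta^* k} P_alpha(Q_1 > k) / u_alpha(theta^*) (Remark 2.5).

    Q_levels : dict mapping each negative integer l to a nested list/array with
               Q_levels[l][alpha][beta] = Q^{(l)}_{alpha beta} (assumed given).
    """
    s = 0.0
    for ell, Q_ell in Q_levels.items():        # ell < 0
        for beta in A_minus:
            s += u[beta] / u[alpha] * math.exp(theta * ell) * Q_ell[alpha][beta]
    return c_inf * (1.0 - s)


# +/-1 walk: A_{sigma-} = 0 and S_{sigma-} = -1 with probability one.
const = first_excursion_constant(0.5, [1.0, 1.0], {-1: [[1.0, 0.0], [1.0, 0.0]]},
                                 math.log(2.0), 0, [0])
print(const)
```

For this walk, assuming $Q_1$ is the maximum of the partial sums before $\sigma^-$, the gambler's ruin formula gives $\textrm{P}(Q_1>k)=1/(2^{k+2}-1)$, so that $2^k\,\textrm{P}(Q_1>k)\to 1/4$, in agreement with the value returned above.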

Using Theorems 2.2 and 2.3, we finally obtain the following result on the asymptotic distribution of the local score $M_n$ for a sequence of length $n$.

Theorem 2.4. (Asymptotic distribution of the local score $M_n$.) For every $\alpha \in \mathcal{A}$ and $x \in {\mathbb R}$ we have:

\begin{align}&\textrm{P}_\alpha \left(M_n\leq \frac{\log (n)}{\theta^*}+x\right ) \underset{n\rightarrow\infty}{\sim} \exp\bigg \{-\frac{n}{A^*}\sum_{\beta \in \mathcal{A}^-} z_{\beta} \textrm{P}_{\beta}\left (S^+> \left \lfloor \log(n)/\theta^*+x\right\rfloor \right )\bigg \} \nonumber \\[3pt] &\quad \times\exp\bigg\{ \frac{n}{A^{*}}\sum_{k < 0}\sum_{\gamma \in \mathcal{A}^{-}} \textrm{P}_{\gamma}\left(S^+>\left \lfloor\log(n)/\theta^{*}+x\right\rfloor -k\right) \cdot\sum_{\beta \in \mathcal{A}^{-}}z_{\beta}Q_{\beta\gamma}^{(k)}\bigg \},\end{align}

where $z=(z_{\alpha})_{\alpha\in \mathcal{A}}$ is the invariant probability measure of the matrix ${\bf Q}$ defined in Section 2.1, and

\begin{align*}\displaystyle A^* \coloneqq \lim_{m\rightarrow +\infty} \frac{K_m}{m} = \frac{1}{\textrm{E}(\,f(A))}\sum_{\beta \in \mathcal{A}^-} z_{\beta} \textrm{E}_\beta[S_{\sigma^-}]\mbox{ a.s.}\end{align*}
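The right-hand side of (5) can be evaluated directly once the tail probabilities $\textrm{P}_\beta(S^+>m)$ (for instance from the recursion of Theorem 2.1), the $Q^{(k)}$, $z$ and $A^*$ are available. The Python sketch below takes all of these as inputs; the names `local_score_cdf_approx`, `tail` and `Q_levels` are hypothetical. In the usage example we again take the $\pm1$ walk with up-probability $1/3$, for which $\textrm{P}_\beta(S^+>m)=2^{-(m+1)}$, $\mathcal{A}^-=\{0\}$ (so $z=(1,0)$), $S_{\sigma^-}=-1$ surely, $\textrm{E}f(A)=-1/3$ and hence $A^*=3$.

```python
import math


def local_score_cdf_approx(n, x, theta, A_star, z, tail, Q_levels, A_minus):
    """Right-hand side of (5), approximating P(M_n <= log(n)/theta^* + x).

    tail(beta, m) : callable returning P_beta(S^+ > m).
    Q_levels      : dict {k: Q^{(k)}} over negative integers k, with
                    Q_levels[k][beta][gamma] = Q^{(k)}_{beta gamma}.
    z, A_star     : invariant measure z and constant A^* (assumed given).
    """
    m = math.floor(math.log(n) / theta + x)
    first = sum(z[b] * tail(b, m) for b in A_minus)
    second = 0.0
    for k, Q_k in Q_levels.items():             # k < 0, hence m - k > m
        for g in A_minus:
            weight = sum(z[b] * Q_k[b][g] for b in A_minus)
            second += tail(g, m - k) * weight
    return math.exp(-n / A_star * first) * math.exp(n / A_star * second)


# +/-1 walk: state 0 has score -1, state 1 has score +1.
p = local_score_cdf_approx(1024, 0.5, math.log(2.0), 3.0, [1.0, 0.0],
                           lambda b, m: 0.5 ** (m + 1),
                           {-1: [[1.0, 0.0], [1.0, 0.0]]}, [0])
print(p)
```

With $n=1024$ and $x=0.5$ the floor equals $10$, and the two exponents combine to $-\tfrac{1024}{3}\,(2^{-11}-2^{-12}) = -1/12$.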

• Note that the asymptotic equivalent in (5) does not depend on the initial state $\alpha$.

• We recall, for comparison, the asymptotic lower and upper bounds of [Reference Karlin and Dembo9] for the distribution of $M_n$:

\begin{equation}\liminf_{n\rightarrow +\infty}\textrm{P}_\alpha \left(M_n\leq \frac{\log (n)}{\theta^*}+x\right ) \geq \exp\left \{-K^+ {\exp}({-}\theta^*x)\right\}\!,\tag{6}\end{equation}

\begin{equation}\limsup_{n\rightarrow +\infty}\textrm{P}_\alpha \left(M_n\leq \frac{\log (n)}{\theta^*}+x\right )\leq \exp\left \{-K^*{\exp}({-}\theta^*x) \right\}\!,\tag{7}\end{equation}

with $K^+= K^*{\exp}(\theta^*)$ and $K^*=v(\infty)\cdot c(\infty)$, where $c(\infty)$ is given in Theorem 2.2 and is related to the defective distribution of the first positive partial sum $S_{\sigma^+}$ (see also Remark 2.4), and $v(\infty)$ is related to the distribution of the first negative partial sum $S_{\sigma^-}$ (see (5.1) and (5.2) of [Reference Karlin and Dembo9] for more details). A more explicit formula for $K^*$ is given in Section 4.4 for an application in a simple case.

• Even if the expression of our asymptotic equivalent in (5) seems more cumbersome than the asymptotic bounds recalled in (6) and (7), the practical implementations are equivalent.
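For comparison with (5), the Karlin and Dembo bounds (6) and (7) only require $K^*$ and $\theta^*$. The Python sketch below assumes $K^*=v(\infty)\cdot c(\infty)$ has been computed beforehand; the function name `karlin_dembo_bounds` is hypothetical.

```python
import math


def karlin_dembo_bounds(K_star, theta, x):
    """Asymptotic lower bound (6) and upper bound (7) for
    P(M_n <= log(n)/theta^* + x), with K^+ = K^* exp(theta^*)."""
    K_plus = K_star * math.exp(theta)
    lower = math.exp(-K_plus * math.exp(-theta * x))
    upper = math.exp(-K_star * math.exp(-theta * x))
    return lower, upper


lower, upper = karlin_dembo_bounds(0.3, 0.7, 1.0)
print(lower, upper)
```

Since $K^+\textrm{e}^{-\theta^*x}=K^*\textrm{e}^{-\theta^*(x-1)}$, the lower bound at $x$ coincides with the upper bound at $x-1$: the two Gumbel-type curves are shifts of each other by one unit, which reflects the lattice nature of the scores.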

3. Proofs of the main results

3.1. Proof of Theorem 2.1

\begin{align*}F_{S^+,\alpha}(\ell )&= \textrm{P}_\alpha(\sigma^+ = \infty) + \textrm{P}_\alpha(S^+ \leq \ell ,\, \sigma^+ < \infty)\\[3pt] &=1 - L_{\alpha}(\infty) + \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\ell} \textrm{P}_\alpha(S^+ \leq \ell ,\, \sigma^+ < \infty,\, S_{\sigma^+} = k ,\, A_{\sigma^+} = \beta)\\[3pt] &= 1 - L_{\alpha}(\infty) + \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\ell} L^{(k)}_{\alpha \beta} \, \textrm{P}_\alpha(S^+ \leq \ell \mid \sigma^+ < \infty,\, S_{\sigma^+} = k ,\, A_{\sigma^+} = \beta).\end{align*}

It then suffices to note that, on $\{\sigma^+ < \infty\}$, we have $S^+ - S_{\sigma^+} = \sup_{m \geq \sigma^+}(S_m - S_{\sigma^+})$, since $S_m \leq 0 < S_{\sigma^+}$ for all $m < \sigma^+$, and that

\[\textrm{P}_\alpha(S^+ - S_{\sigma^+} \leq \ell-k \mid \sigma^+ < \infty,\, S_{\sigma^+} = k,\, A_{\sigma^+} = \beta)= \textrm{P}_\beta(S^+ \leq \ell-k) ,\]

by the strong Markov property applied to the stopping time $\sigma^+$. $\hfill\square$
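Theorem 2.1 expresses $F_{S^+,\alpha}(\ell)$ in terms of the values $F_{S^+,\beta}(\ell-k)$ with $k\geq1$, so the CDF can be filled in level by level starting from $F_{S^+,\alpha}(0)=1-L_\alpha(\infty)$. The Python sketch below is only a direct transcription of that identity (it is not the procedure of Section 4.3); it assumes the $L^{(k)}_{\alpha\beta}$ are given, and the function name `cdf_S_plus` is hypothetical. The usage example is the two-state encoding of the $\pm1$ walk with up-probability $1/3$, for which $S^+$ is geometric: $F_{S^+,\alpha}(\ell)=1-2^{-(\ell+1)}$.

```python
import numpy as np


def cdf_S_plus(L, A_plus, ell_max):
    """F[l, alpha] = F_{S^+,alpha}(l) for l = 0, ..., ell_max (Theorem 2.1).

    L      : array of shape (K+1, n, n), L[k, a, b] = L^{(k)}_{a b}, L[0] = 0.
    A_plus : indices of the states of A^+.
    """
    L = np.asarray(L, dtype=float)
    K, n = L.shape[0] - 1, L.shape[1]
    L_inf = L.sum(axis=(0, 2))                 # L_alpha(inf) = P_alpha(sigma^+ < inf)
    F = np.zeros((ell_max + 1, n))
    for ell in range(ell_max + 1):
        F[ell] = 1.0 - L_inf                    # P_alpha(sigma^+ = inf)
        for k in range(1, min(ell, K) + 1):     # L^{(k)} = 0 for k > K
            for b in A_plus:
                # strong Markov property at sigma^+: P_b(S^+ <= ell - k)
                F[ell] += L[k, :, b] * F[ell - k, b]
    return F


L_walk = [[[0.0, 0.0], [0.0, 0.0]],
          [[0.0, 0.5], [0.0, 0.5]]]
F = cdf_S_plus(L_walk, [1], 5)
print(F[:, 0])                                  # 1 - 2^{-(l+1)} for l = 0..5
```

In this example $\textrm{e}^{\ell\log 2}\,(1-F_{S^+,\alpha}(\ell))=1/2$ for every $\ell$, which matches the value $c(\infty)\,u_\alpha(\theta^*)=1/2$ given by Theorem 2.2 for this walk.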

3.2. Proof of Theorem 2.2

We first prove some preliminary lemmas.

Lemma 3.1. $\lim_{k \to \infty} \textrm{P}_\alpha(S^+ > k)= 0$ for every $\alpha \in \mathcal{A}$.

Proof. With $F_{S^+,\alpha}$ defined in Theorem 2.1, we introduce, for every $\alpha$ and $\ell \geq 0$,

\[b_{\alpha}(\ell ) \coloneqq \frac{1-F_{S^+,\alpha}(\ell )}{u_{\alpha}(\theta^*)}\textrm{e}^{\theta^*\ell }, \qquad a_{\alpha}(\ell ) \coloneqq \frac{L_{\alpha}(\infty) - L_{\alpha}(\ell )}{u_{\alpha}(\theta^*)}\textrm{e}^{\theta^*\ell }.\]

Theorem 2.1 allows us to obtain the following renewal system for the family $(b_{\alpha})_{\alpha\in\mathcal{A}}$:

\begin{equation}\text{for all } \ell > 0, \text{ for all }\alpha \in \mathcal{A},\quad b_{\alpha}(\ell )= a_{\alpha}(\ell ) + \sum_{\beta} \sum_{k = 0}^{\ell} b_{\beta}(\ell-k) G^{(k)}_{\alpha \beta}.\end{equation}

Since the restriction ${\tilde{\mathbf{G}}(\infty)}$ of ${\bf G(\infty)}$ to $\mathcal{A}^+$ is stochastic, its spectral radius equals 1 and the corresponding right eigenvector is the vector having all components equal to 1; the left eigenvector is the stationary frequency vector $\tilde w > 0$.

Step 1: For every $\alpha \in \mathcal{A}^+$, a direct application of Theorem 2.2 of [Reference Athreya and Rama Murthy1] gives the formula in (3) for the limit $c(\infty)$ of $b_{\alpha}(\ell )$ when $\ell \to \infty$, which implies, since $\theta^* > 0$, that $\displaystyle \lim_{k \to \infty} \textrm{P}_\alpha(S^+ > k)= 0$.

Step 2: Now consider $\alpha \notin \mathcal{A}^+$. By Theorem 2.1 we have

\[\textrm{P}_\alpha(S^+ > \ell ) = L_{\alpha}(\infty) - \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\ell} L^{(k)}_{\alpha \beta} \left\{1-\textrm{P}_\beta(S^+ > \ell-k) \right\}\!.\]

Since $\textrm{P}_\beta(S^+ > \ell-k ) = 1$ for $k > \ell$ and $L_{\alpha}(\infty) = \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\infty} L^{(k)}_{\alpha \beta}$, we deduce that

\begin{equation}\textrm{P}_\alpha(S^+ > \ell ) = \sum_{\beta \in \mathcal{A}^+} \sum_{k=1}^{\infty} L^{(k)}_{\alpha \beta} \, \textrm{P}_\beta(S^+ > \ell-k ).\end{equation}

Note that for fixed $\alpha$ and $\beta$ the above sum over $k$ has only finitely many non-null terms. Since, for fixed $\beta \in \mathcal{A}^+$ and $k \geq 1$, we have $\textrm{P}_\beta(S^+ > \ell-k) \rightarrow 0$ when $\ell \to \infty$, as shown in Step 1, the stated result follows.

Lemma 3.2. Let $\theta > 0$. With $u(\theta)$ defined in Section 2.1, the sequence of random variables $(U_m(\theta))_{m \geq 0}$ defined by $U_0(\theta)\coloneqq 1$ and

\[U_m(\theta)\coloneqq \prod_{i=0}^{m-1}\left [ \frac{{\exp}(\theta f(A_{i+1}))}{u_{A_i}(\theta)}\cdot\frac{u_{A_{i+1}}(\theta)}{\rho(\theta)}\right ]=\frac{{\exp}(\theta S_m)u_{A_m}(\theta)}{\rho(\theta)^m u_{A_0}(\theta)}, \qquad \text{for } m \geq 1, \]

is a martingale with respect to the canonical filtration $\mathcal{F}_m = \sigma(A_0,\ldots, A_m)$.

Proof. For every $m \in \mathbb{N}$ and $\theta >0$, $U_m(\theta)$ is clearly measurable with respect to $\mathcal{F}_m$ and integrable, since $\mathcal{A}$ is finite. We can write

\[U_{m+1}(\theta)=U_m(\theta) \frac{{\exp}(\theta f(A_{m+1})) u_{A_{m+1}}(\theta)}{u_{A_m}(\theta)\rho(\theta)}.\]

Since $U_m(\theta)$ and $u_{A_m} (\theta)$ are measurable with respect to $\mathcal{F}_m$, we have

\[\textrm{E}[U_{m+1}(\theta) \mid \mathcal{F}_m] = U_m(\theta) \frac{\textrm{E}[{\exp}(\theta f(A_{m+1})) u_{A_{m+1}}(\theta) \mid \mathcal{F}_m] }{u_{A_m} (\theta)\rho(\theta)}.\]

By the Markov property we further have

\[\textrm{E}[{\exp}(\theta f(A_{m+1})) u_{A_{m+1}}(\theta) \mid \mathcal{F}_m]= \textrm{E}[{\exp}(\theta f(A_{m+1})) u_{A_{m+1}}(\theta) \mid A_m],\]

and by definition of $u(\theta)$,

\[\textrm{E}[{\exp}(\theta f(A_{m+1})) u_{A_{m+1}}(\theta) \mid A_m = \alpha] = \sum_{\beta} {\exp}(\theta f(\beta)) u_{\beta}(\theta) p_{\alpha \beta} =u_{\alpha}(\theta)\rho(\theta). \]

We deduce that $\textrm{E}[{\exp}(\theta f(A_{m+1})) u_{A_{m+1}}(\theta) \mid A_m] = u_{A_m}(\theta)\rho(\theta)$, and hence $\textrm{E}[U_{m+1}(\theta) \mid \mathcal{F}_m]=U_{m}(\theta)$, which finishes the proof. $\hfill\square$
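The last display says that $u(\theta)$ is a right eigenvector, with eigenvalue $\rho(\theta)$, of the matrix with entries $p_{\alpha\beta}\,\textrm{e}^{\theta f(\beta)}$. The Python sketch below computes $\rho(\theta)$ and $u(\theta)$ from this eigenproblem, locates $\theta^*$ as the positive root of $\rho(\theta)=1$ (the property used in the proof of Lemma 3.3) by bisection, and checks the martingale identity $\textrm{E}[U_{m+1}(\theta)/U_m(\theta)\mid A_m=\alpha]=1$ state by state. The function names, the normalisation of $u$ to sum to one (only ratios of its components matter here), the bisection scheme and the 3-state chain are our own illustrative assumptions; we also assume $\textrm{E}[f(A)]<0$ and that some score is positive, so that the positive root exists.

```python
import numpy as np


def perron(P, f, theta):
    """rho(theta) and positive right eigenvector u(theta) of the matrix
    Phi[a, b] = p_{ab} exp(theta f(b)); u is normalised to sum to 1."""
    Phi = np.asarray(P, dtype=float) * np.exp(theta * np.asarray(f, dtype=float))[None, :]
    vals, vecs = np.linalg.eig(Phi)
    i = int(np.argmax(vals.real))               # Perron root has the largest real part
    u = np.abs(vecs[:, i].real)                 # fix the sign of the Perron vector
    return float(vals[i].real), u / u.sum()


def theta_star(P, f, hi=1.0, tol=1e-12):
    """Positive root of rho(theta) = 1, found by bisection."""
    def rho(t):
        return perron(P, f, t)[0]
    while rho(hi) <= 1.0:                       # grow hi until rho(hi) > 1
        hi *= 2.0
    lo = hi / 2.0
    while rho(lo) >= 1.0:                       # shrink lo until rho(lo) < 1
        lo /= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if rho(mid) < 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def martingale_increment(P, f, theta):
    """E[U_{m+1}(theta) / U_m(theta) | A_m = a] for every state a."""
    rho, u = perron(P, f, theta)
    P = np.asarray(P, dtype=float)
    step = np.exp(theta * np.asarray(f, dtype=float))[None, :] * u[None, :]
    return (P * step).sum(axis=1) / (u * rho)


P = np.array([[0.5, 0.3, 0.2], [0.4, 0.4, 0.2], [0.6, 0.3, 0.1]])
f = np.array([-2.0, 0.0, 1.0])
th = theta_star(P, f)
print(th, perron(P, f, th)[0])                  # rho(theta^*) is 1 up to rounding
print(martingale_increment(P, f, th))           # every entry is 1 up to rounding
```

Bisection is safe here because $\log\rho(\theta)$ is convex with $\rho(0)=1$, so $\rho<1$ on $(0,\theta^*)$ and $\rho>1$ beyond $\theta^*$. For an i.i.d. sequence with scores $\pm1$ and $\textrm{P}(+1)=1/3$, the same code returns $\theta^*\approx\log 2$.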

Lemma 3.3. With $\theta^*$ defined at the end of Section 2.1, we have, for all $\alpha \in \mathcal{A}$,

\begin{equation*} \frac{1}{u_\alpha(\theta^*)} \sum_{\beta \in \mathcal{A}^+} \sum_{\ell = 1}^{\infty} L^{(\ell)}_{\alpha \beta} \, \textrm{e}^{\theta^* \ell } \ u_\beta(\theta^*) = 1.\end{equation*}

Proof. The proof uses Lemma 3.1 and ideas inspired by [Reference Karlin and Dembo9] (Lemma 4.2). First note that the above equation is equivalent to $\textrm{E}_{\alpha}[U_{\sigma^+}(\theta^*);\,\sigma^+<\infty]=1$, with $U_m(\theta)$ defined in Lemma 3.2. By applying the optional sampling theorem to the bounded stopping time $\tau_n \coloneqq \min(\sigma^+,n)$ and to the martingale $(U_m(\theta^*))_m$, we obtain

\[1=\textrm{E}_\alpha[U_0(\theta^*)]=\textrm{E}_\alpha[U_{\tau_n}(\theta^*)] = \textrm{E}_\alpha[U_{\sigma^+}(\theta^*);\, \sigma^+ \leq n] + \textrm{E}_\alpha[U_{n}(\theta^*);\, \sigma^+ > n].\]

We will show that $\textrm{E}_\alpha[U_{n}(\theta^*); \,\sigma^+ > n] \rightarrow 0$ when $n \to \infty$. Passing to the limit in the previous relation will then give the desired result. Since $\rho(\theta^*)=1$ we have

\[U_n(\theta^*) =\frac{{\exp}(\theta^* S_n)u_{A_n}(\theta^*)}{u_{A_0}(\theta^*)},\]

and it suffices to show that $\lim_{n \to \infty}\textrm{E}_\alpha[{\exp}(\theta^* S_n);\, \sigma^+ > n] = 0$.

For a fixed $a > 0$ we can write

\begin{align}\textrm{E}_{\alpha}[{\exp}(\theta^{*} S_{n});\, \sigma^{+} > n] &= \textrm{E}_{\alpha}[{\exp}(\theta^{*} S_{n});\, \sigma^{+} > n, \text{ there exists } k \leq n\,:\,S_k \leq -2a] \nonumber\\[4pt] &\quad + \textrm{E}_{\alpha}[{\exp}(\theta^{*} S_n);\, \sigma^{+} > n, -2a \leq S_{k} \leq 0, \text{ for all } 0 \leq k \leq n].\end{align}

The first expectation on the right-hand side of (10) can be bounded further:

\begin{align} &\textrm{E}_\alpha[{\exp}(\theta^* S_n); \, \sigma^+ > n , \text{ there exists } k \leq n\,:\,S_k \leq -2a] \nonumber\\[4pt] &\quad\leq \textrm{E}_\alpha[{\exp}(\theta^* S_n);\, \sigma^+ > n, S_n \leq -a] \nonumber\\[4pt] &\qquad + \textrm{E}_\alpha[{\exp}(\theta^* S_n);\, \sigma^+ > n, S_n > -a, \text{ there exists } k < n\,:\,S_k \leq -2a].\end{align}

Since ${\exp}(\theta^* S_n) \leq {\exp}({-}\theta^* a)$ on the event $\{S_n \leq -a\}$, we have

\begin{equation}\textrm{E}_\alpha[{\exp}(\theta^* S_n);\, \sigma^+ > n, \,S_n \leq -a] \leq {\exp}({-}\theta^* a).\end{equation}

Let us further define the stopping time $T \coloneqq \inf\{k \geq 1 \,:\, S_k \leq -2a\}$. Note that $T < \infty$ a.s., since $S_n \rightarrow -\infty$ a.s. when $n \to \infty$. Indeed, by the ergodic theorem, we have $S_n/n \rightarrow \textrm{E}[\,f(A)] < 0$ a.s. when $n \to \infty$. Therefore we have

\begin{align*} &\textrm{E}_\alpha[{\exp}(\theta^* S_n); \sigma^+ > n, S_n > -a,\, \text{there exists }k < n\,:\,S_k \leq -2a] \leq \textrm{P}_\alpha(T \leq n, S_n > -a)\\[3pt] &\quad = \sum_{\beta \in \mathcal{A}^-} \textrm{P}_\alpha(T \leq n,\, S_n > -a \mid A_T = \beta)\textrm{P}_\alpha(A_T = \beta)\\[3pt] &\quad \leq \sum_{\beta \in \mathcal{A}^-} \textrm{P}_\alpha(S_n - S_T > a \mid A_T = \beta)\textrm{P}_\alpha(A_T = \beta)\leq \sum_{\beta \in \mathcal{A}^-} \textrm{P}_\beta(S^+ > a) \textrm{P}_\alpha(A_T = \beta) ,\end{align*}

by the strong Markov property. For every $a > 0$ we thus have

\begin{align}\limsup_{n \to \infty} \textrm{E}_\alpha[{\exp}(\theta^* S_n);\, \sigma^+ > n,\, S_n > -a, \, \text{there exists } k < n\,:\,S_k \leq -2a] \nonumber\\ \leq \sum_{\beta \in \mathcal{A}^-} \textrm{P}_\beta(S^+ > a).\end{align}

Considering the second expectation on the right-hand side of (10), we note that it is bounded by $\textrm{P}_\alpha({-}2a \leq S_k \leq 0, \, \text{for all } 0 \leq k \leq n)$, since ${\exp}(\theta^* S_n) \leq 1$ on $\{\sigma^+ > n\}$, and that

\begin{equation}\lim_{n \to \infty} \textrm{P}_\alpha({-}2a \leq S_k \leq 0, \, \text{for all } 0 \leq k \leq n) = \textrm{P}_\alpha({-}2a \leq S_k \leq 0, \, \text{for all } k \geq 0) = 0,\end{equation}

again since $S_n \rightarrow -\infty$ a.s. when $n \to \infty$.

Equations (10)–(14) imply that, for every $a > 0$, we have

\[\limsup_{n \to \infty} \textrm{E}_\alpha[{\exp}(\theta^* S_n); \, \sigma^+ > n] \leq {\exp}({-}\theta^*a) + \sum_{\beta \in \mathcal{A}^-} \textrm{P}_\beta(S^+ > a).\]

Using Lemma 3.1 and taking $a \to \infty$ we obtain $\lim_{n \to \infty}\textrm{E}_\alpha[{\exp}(\theta^* S_n); \sigma^+ > n] = 0.$ $\hfill\square$

Proof of Theorem 2.2. For $\alpha \in \mathcal{A}^+$ the formula has already been shown in Step 1 of the proof of Lemma 3.1. For $\alpha \notin \mathcal{A}^+$ we prove the stated formula using Theorem 2.1. Equation (9) implies the formula in (8).

Note that for every $\alpha$ and $\beta$ the above sum over $k$ has only finitely many non-null terms. Moreover, as shown in Step 1 of the proof of Lemma 3.1, we have, for all $\beta \in \mathcal{A}^+$ and $k\geq 0$,

\[ \frac{\textrm{e}^{\theta^*(\ell-k)}\textrm{P}_{\beta}(S^+>\ell-k)}{u_{\beta}(\theta^*)} \underset{\ell \to \infty}{\longrightarrow} c(\infty).\]

We finally obtain

\[\lim_{\ell \rightarrow +\infty} \frac{\textrm{e}^{\theta^*{\ell }}\textrm{P}_{\alpha}(S^+> \ell )}{u_{\alpha}(\theta^*)} = \frac{c(\infty)}{u_\alpha(\theta^*)} \sum_{\beta \in \mathcal{A}^+} \sum_{k = 1}^{\infty} L^{(k)}_{\alpha \beta} \, \textrm{e}^{\theta^* k } \ u_\beta(\theta^*),\]

which equals $c(\infty)$, as desired, by Lemma 3.3.

3.3. Proof of Theorem 2.3

Since $S^+ \geq Q_1$, for every $\alpha \in \mathcal{A}$ we have

\[\textrm{P}_{\alpha}(S^+>k) = \textrm{P}_{\alpha}(Q_1>k) + \textrm{P}_{\alpha}(S^+>k,\, Q_1 \leq k).\]

By applying the strong Markov property to the stopping time $\sigma^-$, we can further decompose the last probability with respect to the values taken by $S_{\sigma^-}$ and $A_{\sigma^-}$:

\begin{align*}& \textrm{P}_{\alpha}(S^+>k,\, Q_1 \leq k) = \sum_{\ell<0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\alpha}(S^+>k,\, Q_1 \leq k,\, S_{\sigma^-}=\ell,\, A_{\sigma^-}=\beta)\\[3pt] &= \sum_{\ell<0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\alpha}(S^+ -S_{\sigma^-} >k-\ell \mid A_{\sigma^-}=\beta,\, Q_1 \leq k,\, S_{\sigma^-}=\ell ) \\[3pt] & \ \ \ \ \times \textrm{P}_{\alpha}(Q_1 \leq k,\, S_{\sigma^-}=\ell ,\, A_{\sigma^-}=\beta)\\[3pt] &= \sum_{\ell<0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}(S^+ >k-\ell ) \cdot \{Q_{\alpha\beta}^{(\ell)}- \textrm{P}_{\alpha}(Q_1 > k,\, S_{\sigma^-}=\ell ,\, A_{\sigma^-}=\beta) \}.\end{align*}


We thus obtain

\begin{align*} &\textrm{P}_{\alpha}(S^+>k)-\sum_{\ell <0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}(S^+>k-\ell )\cdot Q_{\alpha\beta}^{(\ell )} - \textrm{P}_{\alpha}(Q_1>k) \\[3pt] &\quad = - \sum_{\ell <0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}(S^+ >k-\ell ) \ \textrm{P}_{\alpha}(Q_1 > k, S_{\sigma^-}=\ell, A_{\sigma^-}=\beta).\end{align*}


By Theorem 2.2 we have $\textrm{P}_{\beta}(S^+ >k ) = O(\textrm{e}^{-\theta^*k})$ as $k \to \infty$ for every $\beta \in \mathcal{A}^-$, from which we deduce that the left-hand side of the previous equation is $o(\textrm{P}_{\alpha}(Q_1 > k))$ when $k \to \infty$. The stated result then easily follows. $\hfill\square$
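For completeness, the $o(\textrm{P}_{\alpha}(Q_1 > k))$ step at the end of the proof of Theorem 2.3 can be made explicit as follows. Since $k-\ell>k$ for $\ell<0$ and $m \mapsto \textrm{P}_{\beta}(S^+>m)$ is non-increasing, the double sum that appears (with a minus sign) on the right-hand side of the last displayed identity of that proof satisfies

\begin{align*}\sum_{\ell <0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}(S^+ >k-\ell )\, \textrm{P}_{\alpha}(Q_1 > k, S_{\sigma^-}=\ell, A_{\sigma^-}=\beta) &\leq \max_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}(S^+>k) \sum_{\ell <0}\sum_{\beta \in \mathcal{A}^-}\textrm{P}_{\alpha}(Q_1 > k, S_{\sigma^-}=\ell, A_{\sigma^-}=\beta)\\[3pt] &\leq \max_{\beta \in \mathcal{A}^-}\textrm{P}_{\beta}(S^+>k) \cdot \textrm{P}_{\alpha}(Q_1>k).\end{align*}

Because $\mathcal{A}^-$ is finite and each $\textrm{P}_{\beta}(S^+>k)$ is $O(\textrm{e}^{-\theta^*k})$ by Theorem 2.2, the prefactor tends to $0$ as $k\to\infty$, which is exactly the $o(\textrm{P}_{\alpha}(Q_1 > k))$ estimate.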

3.4. Proof of Theorem 2.4

We will first prove some useful lemmas.

Lemma 3.4. There exists a constant $C > 0$ such that, for every $\alpha \in \mathcal{A}$, $\beta \in \mathcal{A}^-$, and $y > 0$, we have $\textrm{P}_\alpha (Q_1 > y \mid A_{\sigma^-} = \beta) \leq C \textrm{e}^{-\theta^*y}.$

Proof. The proof is partly inspired by [Reference Karlin and Dembo9]. Let $y > 0$ and denote by $\sigma(y)$ the first exit time of $S_n$ from the interval $[0,y]$. Applying the optional sampling theorem to the martingale $(U_m(\theta^*))_m$ (see Lemma 3.2) and to the stopping time $\sigma(y)$, we get

\begin{equation}\textrm{E}_{\alpha} [U_{\sigma(y)}(\theta^*) ]=\textrm{E}_{\alpha} [U_0(\theta^*) ]=1.\end{equation}


The applicability of the optional sampling theorem is guaranteed by the fact that there exists $\tilde{C} > 0$ such that, for every $n \in \mathbb{N}$, we have $0 < U_{\min(\sigma(y),n) }(\theta^*) \leq \tilde{C}$ a.s. Indeed, the entries of $u(\theta^*)$ are positive and finitely many, and the partial sums stay bounded above up to time $\sigma(y)$: when $\sigma(y) > n$ we have $0 \leq S_n \leq y$, and when $\sigma(y) \leq n$ either $S_{\sigma(y)} < 0$ or $y < S_{\sigma(y)} \leq y + \max\left\{\kern1pt f(\alpha)\,:\, \alpha \in \mathcal{A}^+ \right \}$.

We deduce from (15) that, for some constant $K > 0$, we have

\begin{align*}1 &= \textrm{E}_{\alpha}\bigg [\textrm{e}^{\theta^* S_{\sigma(y)}}\frac{u_{A_{\sigma(y)}}(\theta^*)}{u_{A_{0}}(\theta^*)} \bigg ] \geq K \textrm{e}^{\theta^*y}\, \textrm{E}_{\alpha} [ \textrm{e}^{\theta^* (S_{\sigma(y)}-y)} \mid S_{\sigma(y)} > y ] \cdot \textrm{P}_{\alpha}(S_{\sigma(y)} > y)\\[5pt] & \geq K \textrm{e}^{\theta^*y} \, \textrm{P}_{\alpha}(S_{\sigma(y)} > y) \geq K \textrm{e}^{\theta^*y} \, \textrm{P}_{\alpha}(S_{\sigma(y)} > y \mid A_{\sigma^-}=\beta)q_{\alpha \beta}.\end{align*}

Note further that, $\mathcal{A}$ being finite, there exists $c > 0$ such that for all $\alpha \in \mathcal{A}$ and $\beta \in \mathcal{A}^-$ we have $q_{\alpha \beta}=\textrm{P}_\alpha(A_{\sigma^-}=\beta) \geq p_{\alpha \beta} \geq c$ (the first inequality holds because a first step from $\alpha$ to $\beta \in \mathcal{A}^-$ already gives $\sigma^-=1$ and $A_{\sigma^-}=\beta$). In order to obtain the bound in the statement, it remains to note that $\textrm{P}_{\alpha}(Q_1 > y \mid A_{\sigma^-}=\beta) = \textrm{P}_{\alpha}(S_{\sigma(y)} > y \mid A_{\sigma^-}=\beta )$; combined with the previous display, this yields the claim with $C = 1/(Kc)$.
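As a numerical illustration of (15) and of Lemma 3.4 (not part of the argument), the Python/NumPy sketch below simulates a hypothetical three-state chain; the transition matrix `P` and the scores `f` are our own toy choices, not taken from the paper. It assumes $S_0=0$, $S_n=f(A_1)+\dots+f(A_n)$ and $U_m(\theta^*)=\textrm{e}^{\theta^* S_m}u_{A_m}(\theta^*)/u_{A_0}(\theta^*)$ (the form used in the display following (15), with $\rho(\theta^*)=1$), and $Q_1=\max_{0\leq n<\sigma^-}S_n$ with $\sigma^-$ the first $n\geq 1$ such that $S_n<0$. $\theta^*$ is located by bisection as the positive root of $\rho(\theta)=1$, and the constant is taken as $C=1/(Kc)$ with the explicit (hypothetical) choices $K=\min_\gamma u_\gamma(\theta^*)/\max_\gamma u_\gamma(\theta^*)$ and $c=\min\{p_{\alpha\beta}:\alpha\in\mathcal{A},\,\beta\in\mathcal{A}^-\}$.

```python
import numpy as np

# Hypothetical toy chain (our choice, not from the paper): states {0, 1, 2},
# scores f(0) = f(1) = -1 (so A^- = {0, 1}) and f(2) = 2 (so A^+ = {2}).
P = np.array([[0.5, 0.3, 0.2],
              [0.4, 0.4, 0.2],
              [0.3, 0.4, 0.3]])
f = np.array([-1.0, -1.0, 2.0])
CUM = np.cumsum(P, axis=1)
CUM[:, -1] = 1.0  # guard against rounding in the last cumulative probability


def perron(theta):
    """Perron root rho(theta) and right eigenvector u(theta), normalised to sum to 1,
    of Phi(theta)_{ab} = p_ab * exp(theta * f(b))."""
    vals, vecs = np.linalg.eig(P * np.exp(theta * f)[None, :])
    k = np.argmax(vals.real)
    u = vecs[:, k].real
    return vals[k].real, u / u.sum()


def theta_star(lo=1e-3, hi=10.0, tol=1e-12):
    """Bisection for the positive root of rho(theta) = 1 (needs rho(lo) < 1 < rho(hi))."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if perron(mid)[0] < 1.0 else (lo, mid)
    return 0.5 * (lo + hi)


def step(a, rng):
    """Draw the next state from row a of P."""
    return int(np.searchsorted(CUM[a], rng.random(), side="right"))


def exit_U(alpha, y, ts, u, rng):
    """U_{sigma(y)}(theta*) = exp(theta* S) u_A / u_alpha at the first exit of S_n from [0, y]."""
    a, s = alpha, 0.0
    while 0.0 <= s <= y:
        a = step(a, rng)
        s += f[a]
    return np.exp(ts * s) * u[a] / u[alpha]


def first_excursion(alpha, rng):
    """Return (Q_1, A_{sigma^-}), with Q_1 the maximum of S_n before sigma^-."""
    a, s, q = alpha, 0.0, 0.0
    while True:
        a = step(a, rng)
        s += f[a]
        if s < 0:
            return q, a
        q = max(q, s)


ts = theta_star()
_, u = perron(ts)
rng = np.random.default_rng(1)
alpha, n = 0, 20000

# (15): the sample mean of U_{sigma(y)}(theta*) should be close to 1.
U = np.array([exit_U(alpha, 4.0, ts, u, rng) for _ in range(n)])

# Lemma 3.4: exp(theta* y) * P_alpha(Q_1 > y | A_{sigma^-} = beta) should stay below C.
runs = [first_excursion(alpha, rng) for _ in range(n)]
Q = np.array([q for q, _ in runs])
B = np.array([b for _, b in runs])
minus = [int(b) for b in np.flatnonzero(f < 0)]
C = (u.max() / u.min()) / P[:, minus].min()  # C = 1/(K c) with the choices above
ys = np.arange(1, 9)
ratios = {b: np.exp(ts * ys) * np.array([np.mean(Q[B == b] > y) for y in ys]) for b in minus}

print(ts, U.mean(), C)  # theta* = log(4/3) ≈ 0.2877 and C = 3.75 for this toy chain
for b, r in ratios.items():
    print(b, np.round(r, 3))
```

For this toy chain one can check by hand that $\theta^*=\log(4/3)$ and $u(\theta^*)=(0.32,0.32,0.36)$, so that $C=3.75$; the simulated ratios should stay well below this value, and the sample mean of $U_{\sigma(y)}(\theta^*)$ should equal $1$ up to Monte Carlo error.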

Lemma 3.5. $\rho'(0)=\textrm{E}[\,f(A)]<0$.
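Lemma 3.5 can be sanity-checked numerically. The Python/NumPy sketch below uses a hypothetical three-state chain (our choice, not from the paper): it approximates $\rho'(0)$ by a central finite difference of the Perron root of ${\boldsymbol \Phi}(\theta)$, whose entries are $p_{\alpha\beta}\textrm{e}^{\theta f(\beta)}$ as in the proof below, and compares it with $\textrm{E}[\,f(A)]=\sum_\beta \pi_\beta f(\beta)$, where $\pi$ is the stationary distribution.

```python
import numpy as np

# Hypothetical toy chain (our choice, not from the paper).
P = np.array([[0.5, 0.3, 0.2],
              [0.4, 0.4, 0.2],
              [0.3, 0.4, 0.3]])
f = np.array([-1.0, -1.0, 2.0])


def rho(theta):
    """Perron root of Phi(theta)_{ab} = p_ab * exp(theta * f(b))."""
    vals = np.linalg.eigvals(P * np.exp(theta * f)[None, :])
    return vals.real.max()


def stationary(P):
    """Stationary distribution pi: left eigenvector of P for eigenvalue 1, summing to 1."""
    vals, vecs = np.linalg.eig(P.T)
    k = np.argmin(np.abs(vals - 1.0))
    pi = vecs[:, k].real
    return pi / pi.sum()


pi = stationary(P)
h = 1e-5
rho_prime_0 = (rho(h) - rho(-h)) / (2 * h)  # central-difference approximation of rho'(0)
mean_score = pi @ f                          # E[f(A)] with A distributed as pi
print(rho_prime_0, mean_score)               # both close to -1/3 for this toy chain
```

Here $\pi=(34/81,\,29/81,\,18/81)$, so $\textrm{E}[\,f(A)]=-1/3<0$, and the finite difference agrees with it to high accuracy.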

Proof. Since $\rho(\theta)$ is an eigenvalue of the matrix ${\boldsymbol \Phi}(\theta)$ with corresponding eigenvector $u(\theta)$, we have $\rho(\theta)u_{\alpha}(\theta)=\left ({\boldsymbol \Phi}(\theta)u(\theta)\right )_{\alpha}=\sum_\beta p_{\alpha\beta}\textrm{e}^{\theta f(\beta)}u_\beta(\theta)$.

Differentiating the previous relation with respect to $\theta$, we obtain

\[\frac{\textrm{d}}{\textrm{d} \theta}(\rho (\theta)u_\alpha(\theta))=\sum_\beta p_{\alpha\beta} (\,f(\beta)\textrm{e}^{\theta f(\beta)}u_\beta(\theta)+\textrm{e}^{\theta f(\beta)}u^{\prime}_{\beta}(\theta) ).\]


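We have $\rho(0)=1$ and $u(0)={}^t(1/r,\dots,1/r)$. Evaluating the previous relation at $\theta=0$, multiplying it by $\pi_\alpha$, summing over $\alpha$, and using the stationarity relation $\sum_\alpha \pi_\alpha p_{\alpha\beta}=\pi_\beta$, we then get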

\begin{equation}\hspace*{-8pt}\sum_\alpha \pi_{\alpha} \frac{\textrm{d}}{\textrm{d} \theta}(\rho (\theta)u_\alpha(\theta)) \bigg |_{\theta=0} =\frac{1}{r}\textrm{E}[\,f(A)]+\sum_{\alpha,\beta}\pi_{\alpha} p_{\alpha\beta}u^{{\prime}}_{\beta}(0)=\frac{1}{r}\textrm{E}[\,f(A)]+\sum_{\beta}\pi_{\beta}u^{\prime}_{\beta}(0).\end{equation}


On the other hand,