Psychological research investigating sound and music has increasingly been adapted to the evaluation of soundtracking for games, and is now being considered in the development and design stages of some titles. This chapter summarizes the main findings of this body of knowledge. It also describes the application of emotional measurement techniques and player responses using biophysiological metering and analysis. The distinction between different types of psychophysiological responses to sound and music is explored, with the advantages and limitations of such techniques considered. The distinction between musically induced and perceived emotional response is of particular relevance to future game design, and the use of biophysiological metering presents a unique opportunity to create fast, continuous control signals for gaming and game sound to maximize player experiences. The world of game soundtracking also presents a unique opportunity to explore sound and music evaluation in ways which traditional musicology might not offer (for example, deliberately antagonistic music, non-linear sound design and so on). The chapter concludes with directions for future research based on the paradigm of biofeedback and bio-controlled game audio.

Introduction

As readers of this book will doubtless be aware, creating effective soundtracking for games (which readers should take to include music, dialogue and/or sound effects) presents particular challenges that are distinct from creating music for its own sake, or music for static sound-to-picture formats (films, TV). This is because gameplay typically affords players agency over the timing of events in the game. One of the great benefits of effective soundtracking is the ability to enhance emotional engagement and immersion with the gameplay narrative for the player – accentuating danger, success, challenges and so on. Different types of game can employ established musical grammars which are often borrowed from linear musical domains.

Emotional Engagement through Avatar and Control

There is a distinction to be made between active and passive physiological control over the audio (or indeed, more generally over the game environment). Active control means that the user must be able to exert clear agency over the resulting actions, for example, by imagining an action and seeing an avatar follow this input with a direct correlation in the resulting sound world. Passive control would include detection of control signals which are not directly controllable by the end user (for example, heart rate, or galvanic skin response for emotional state estimation). This has been proposed as a control signal in horror games such as 2011’s Nevermind,Footnote 1 in which the gameplay is responsive to electro-dermal activity – world designs which elicit higher sweat responses from the player are chosen over worlds with less player responseFootnote 2 – and becomes particularly interesting in game contexts when the possibility of adapting the music to biofeedback is introduced.Footnote 3 Here, the potential to harness unconscious processes (passive control) for music and sound generation may be realized, for example, through individually adaptive, responsive or context-dependent remixing. Such technology could be married together with the recent significant advances in music information retrieval (MIR), non-linear music creationFootnote 4 and context-adaptive music selection. For example, it could be used to create systems for unsupervised music selection based on individual player preference, performance, narrative or directly from biophysiological activity. As with any such system, there are ethical concerns, not just in the storage of individual data, but also in the potential to cause harm to the player, for example by creating an experience that is too intense or scary in this context.

Psychophysiological Assessment as a Useful Tool for Both Analysing Player Response to Music in Games, and Creating New Interactive Musical Experiences

Psychophysiological assessment can provide clues as to player states and how previous experience with sound and music in linear domains (traditional non-interactive music and media) might influence a player in a game context (learned associations with major or minor keys, for example). Moreover, it suggests that these states are not necessarily distinct (for example, a player might be both afraid and excited – consider, for example, riding a rollercoaster, which can be both scary and invigorating, with the balance depending on the individual). In other words, there is not necessarily an emotional state which is universally ‘unwanted’ in gameplay, but there may be states which are ‘inappropriate’ or emotionally incongruous. These are difficult phenomena to evaluate through traditional measurement techniques, especially in the midst of gameplay, yet they remain of vital importance for the game sound designer or composer. Biophysiological measurement, which includes central and peripheral nervous system measurement (i.e., heart and brain activity, as well as sweat and muscle myography) can be used both to detect emotional states, and to use physical responses to control functional music, for example, in the design of new procedural audio techniques for gaming.Footnote 5 Music can have a huge impact on our day-to-day lives, and is increasingly being correlated with mental health and well-being.Footnote 6 In such contexts, music has been shown to reduce stress, improve athletic performance, aid mindfulness and increase concentration. With such functional qualities in mind, there is significant potential for music in game soundtracking, which might benefit from biophysiologically informed computer-aided music or sound design. 
This chapter gives an overview of this type of technology and considers how biosensors might be married with traditional game audio strategies (e.g., procedural audio, or algorithmically generated music), but the general methodology for design and application might be equally suited to a wide variety of artistic applications. Biophysiological measurement hardware is becoming increasingly affordable and accessible, giving rise to music-specific applications in the emerging field of brain–computer music interfacing (BCMI).Footnote 7

For these types of system to be truly successful, the mapping between neurophysiological cues and audio parameters must be intuitive for the audience (players) and for the composer/sound designers involved. Several such systems have been presented in research,Footnote 8 but commercial take-up is limited. To understand why this might be, we must first consider how biosensors might be used in a game music context, and how emotions are generally measured in psychological practice, as well as how game music might otherwise adapt to game contexts which require non-linear, emotionally congruous soundtracking in order to maximize immersion. Therefore, this chapter will provide an overview of emotion and measurement strategies, including duration, scale and dimensionality, before considering experimental techniques using biosensors, including a discussion of the use of biosensors in overcoming the difficulty of differentiating between perceived and induced emotional states. This section will include a brief overview of potential biosensors for use in game audio contexts. We will then review examples of non-linearity and adaptive audio in game soundtracking, before considering some possible examples of potential future applications.

Theoretical Overview of Emotion

There are generally three categories of emotional response to music:

Emotion: A shorter response; usually to an identifiable stimulus, giving rise to a direct perception and possibly an action (e.g., fight/flight).

Affect/Subjective feeling: A longer evoked experiential episode.

Mood: The most diverse and diffuse category. Perhaps indiscriminate to particular eliciting events, and as such less pertinent to gameplay, though it may influence cognitive state and ability (e.g., negative or depressed moods).

Emotion (in response to action) is the most relevant to gameplay scenarios, where player state might be influenced by soundtracking, and as such this chapter will henceforth refer exclusively to emotion.

Emotional Descriptions and Terms

Psychological evaluations of music as a holistic activity (composition, performance and listening) exist, as do evaluations of the specific contributions of individual musical features to overall perception. These generally use self-report mechanisms (asking the composer, performer or listener what they think or feel in response to stimulus sets which often feature multiple varying factors). The domain of psychoacoustics tends to consider the contribution of individual, quantifiable acoustic features to perception (for example, correlation between amplitude and perceived loudness, spectro-temporal properties and timbral quality, or acoustic frequency and pitch perception). More recently this work has begun to consider how such factors contribute to emotional communication in a performance and an emotional state in a listener (see, for example, research which considers such acoustic cues as emotional correlates in the context of speech and dialogue).Footnote 9

At this stage it is useful to define common terminology and the concepts that shape psychophysiological assessments of perceptual responses to sound and music. Immersion is a popular criterion in the design and evaluation of gaming.

The term ‘immersion’ is often used interchangeably in this context with ‘flow’,Footnote 10 yet empirical research on the impact of music on immersion in the context of video games is still in its infancy. A player-focused definition (as opposed to the perceptual definition of Slater,Footnote 11 wherein simulated environments combine with perception to create coherence) has been suggested as a sense of the loss of time perception,Footnote 12 but this was contested in later work as anecdotal.Footnote 13 Some studies have considered players’ perceived, self-reported state in an ecological context,Footnote 14 that is, while actually playing – suggesting that players underestimate how long they actually play for if asked retrospectively. Later research has suggested that attention might be better correlated to immersion.Footnote 15

Whichever definition the reader prefers, the cognitive processes involved are likely to be contextually dependent; in other words the music needs to be emotionally congruent with gameplay events.Footnote 16 This has implications for soundtrack timing, and for emotional matching of soundtrack elements with gameplay narrative.Footnote 17

Despite the increasing acknowledgement of the effect of sound or music on immersion in multimodal scenarios (which would include video game soundtracking, sound in film, interactive media, virtual reality (VR) and so on), there is a problem in the traditional psychophysical evaluation of such a process: simply put, the experimenter will break immersion if they ask players to actively react to a change in the stimulus (e.g., stop playing when music changes), and clearly this makes self-report mechanisms problematic when evaluating game music in an authentic manner.

Distinguishing Felt Emotion

We must consider the distinction between perceived and induced emotions, which is typically individual, depending on the listener’s preferences and current emotional state. The player might tell themselves: ‘I understand this section of play is supposed to make me feel sad’ as opposed to ‘I feel sad right now at this point in the game’. We might look for increased heart rate variability, skin conductance (in other words, more sweat), particular patterns of brain activity (particularly frontal asymmetry or beta frequencies)Footnote 18 and so on, as evidence of induced, as opposed to perceived emotions. Methods for measuring listeners’ emotional responses to musical stimuli have included self-reporting and biophysiological measurement, and in many cases combinations thereof.
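As a minimal sketch of how two of the measures named above might be turned into numbers, the following computes RMSSD (a standard time-domain heart rate variability statistic) from successive beat-to-beat (RR) intervals, and the fractional change of any measure, such as skin conductance, relative to a resting baseline. The function names and the example values are assumptions for illustration only; how large a change would count as evidence of an induced emotion is not specified here and would need to be established empirically.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def relative_change(baseline, current):
    """Fractional change of a measure relative to a resting baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


# Hypothetical values: RR intervals in milliseconds, skin conductance in microsiemens.
hrv_now = rmssd([800, 810, 790, 800])
scl_change = relative_change(baseline=2.0, current=3.0)
```

Comparing against a per-player baseline, rather than a fixed threshold, reflects the point made above that responses are individual.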

The distinction between perceived and induced or experienced emotions in response to musical stimuli is very important. For example, induced emotions might be described as ‘I felt this’, or ‘I experienced this’, while perceived emotions could be reported as ‘this was intended to communicate’, or ‘I observed’, and so on. The difference has been well documented,Footnote 19 though the precise terminology used can vary widely.

Perceived emotions are more commonly noted than induced emotions: ‘Generally speaking, emotions were less frequently felt in response to music than they were perceived as expressive properties of the music.’Footnote 20 This represents a challenge, which becomes more important when considering game soundtracking that aims to induce emotionally congruent states in the player, rather than to simply communicate them. In other words, rather than assuming a simple mapping, for example ‘play sad music = sad player’, the goal is to measure the player’s own bioresponse and adapt the music to their actual arousal, which the system interprets as an emotional indicator: biophysiological responses help us to investigate induced rather than perceived emotions. Does the music communicate an intended emotion, or does the player really feel this emotion?

Models of Mapping Emotion

A very common model for emotions is the two-dimensional or circumplex model of affect,Footnote 21 wherein positivity of emotion (valence) is placed on the horizontal axis, and intensity of emotion (arousal) on the vertical axis of the Cartesian space. This space has been proposed to represent the blend of interacting neurophysiological systems dedicated to the processing of hedonicity (pleasure–displeasure) and arousal (quiet–activated).Footnote 22 In similar ways, the Geneva Emotional Music Scale (GEMS) describes nine dimensions that represent the semantic space of musically evoked emotions,Footnote 23 but unlike the circumplex model, no assumption is made as to the neural circuitry underlying these semantic dimensions.Footnote 24 Other common approaches include single bipolar dimensions (such as happy/sad), or multiple bipolar dimensions (happy/sad, boredom/surprise, pleasant/frightening etc.). Some models also utilize free-choice responses to musical stimuli to determine emotional correlations. Perhaps unsurprisingly, many of these approaches share commonality in the specific emotional descriptors used. Game music systems that are meant to produce emotionally loaded music could potentially be mapped to these dimensional theories of emotion drawn from psychology. Finally, much of the literature on emotion and music uses descriptive qualifiers for the emotion expressed by the composer or experienced by the listener in terms of basic emotions, like ‘sad’, ‘happy’.Footnote 25 We feel it is important to note here that, even though listeners may experience these emotions in isolation, the affective response to music in a gameplay context is more likely to reflect collections and blends of emotions, including, of course, those coloured by gameplay narrative.
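The two-dimensional model described above can be sketched as a simple lookup from a (valence, arousal) coordinate to a quadrant of the Cartesian space. This is an illustrative sketch only: the normalization of both axes to the range -1 to 1, the function name and the quadrant labels are assumptions, not part of the model as published.

```python
def circumplex_quadrant(valence, arousal):
    """Name the quadrant of a point in a valence/arousal plane.

    Both inputs are assumed to be normalized to the range [-1, 1];
    valence is the horizontal axis and arousal the vertical axis.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    if arousal >= 0:
        return "positive-activated" if valence >= 0 else "negative-activated"
    return "positive-deactivated" if valence >= 0 else "negative-deactivated"


# A very negative, very active state falls in the upper-left quadrant.
state = circumplex_quadrant(valence=-0.8, arousal=0.9)
```

A quadrant is a coarse description: several quite different named emotions can share one region of the plane, which is one reason the blended responses noted above are hard to capture with two dimensions alone.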

Interested readers can find more exhaustive reviews on the link between music and emotion in writing by Klaus R. Scherer,Footnote 26 and a special issue of Musicae Scientiae.Footnote 27 Much of this literature describes models of affect, including definitions of emotions, emotional components and emotional correlates that may be modulated by musical features.

Introduction to Techniques of Psychophysiological Measurement

While biosensors are now becoming more established, with major brands like Fitbit and Apple Watch giving consumers access to biophysiological data, harnessing this technology for game music, or even more general interaction with music (for example, using sensors to select music playlists autonomously) is less common and something of an emerging field. The challenges can be broadly summarized as (1) extracting meaningful control information from the raw data with a high degree of accuracy and signal clarity (utility), and (2) designing game audio soundtracks which respond in a way that is congruent with both the game progress and the player state as measured in the extracted control signal (congruence).

Types of Sensor and Their Uses

Often research has a tendency to focus on issues of technical implementation, beyond the selection and definition of signal collection metrics outlined above. For example, it can be challenging to remove misleading data artefacts caused by blinking, eye movement, head movement and other muscle movement.Footnote 28 Related research challenges include increased speed of classification of player emotional state (as measured by biofeedback), for example, by means of machine-learning techniques,Footnote 29 or accuracy of the interface.Footnote 30 Generally these are challenges related to the type of data processing employed, and can be addressed either by statistical data reduction or noise reduction and artefact removal techniques, which can be borrowed from other measurement paradigms, such as functional magnetic resonance imaging (fMRI).Footnote 31 Hybrid systems, utilizing more than one method of measurement, include joint sensor studies correlating affective induction by means of music with neurophysiological cues;Footnote 32 measurement of subconscious emotional state as adapted to musical control;Footnote 33 and systems combining galvanic skin responses (GSR) for the emotional assessment of audio and music.Footnote 34 One such sensor which has yet to find mainstream use in game soundtracking but is making steady advances in the research community is the electroencephalogram (EEG), a device for measuring electrical activity across the brain via electrodes placed across the scalp.Footnote 35 Electrode placement varies in practice, although there is a standard 10/20 arrangementFootnote 36 – however, the number of electrodes, and potential difficulties with successful placement and quality of signal are all barriers to mainstream use in gaming at the time of writing, and are potentially insurmountable in VR contexts due to the need to place equipment around the scalp (which might interfere with VR headsets, and be adversely affected by the magnetic field induced by headphones or speakers).

Mapping Sensory Data

There is a marked difference between systems for controlling music in a game directly by means of these biosensors, and systems that translate the biophysiological data itself into audio (sonification or musification).Footnote 37 In turn, the distinction between sonification and musification is the complexity and intent of the mapping, and I have discussed this further elsewhere.Footnote 38 Several metrics for extracting control data from EEG for music are becoming common. The P300 ERP (Event Related Potential, or ‘oddball’ paradigm) has been used to allow active control over note selection for real-time sound synthesis.Footnote 39 In this type of research, the goal is that the user might consciously control music selection using thought alone. Such methods are not dissimilar to systems that use biophysiological data for spelling words, which are now increasingly common in the BCI (brain–computer interface) world,Footnote 40 though the system is adapted to musical notes rather than text input. Stimulus-responsive input measures, for example, SSVEP responses (a measurable response to visual stimulation at a given frequency)Footnote 41 have been adapted to real-time score selection.Footnote 42 SSVEP allows users to focus their gaze on a visual stimulus oscillating at a given rate. As well as active selection in a manner which is analogous to the ERP, described above, SSVEP also allows for a second level of control by mapping the duration of the gaze with non-linear features. This has clear possibilities in game soundtracking, wherein non-linearity is crucial, as it might allow for a degree of continuous control: for example, after selecting a specific musical motif or instrument set, the duration of a user’s gaze can be used to adjust the ratio for the selected passages accordingly. A similar effect could be achieved using eye-tracking in a hybrid system, utilizing duration of gaze as a secondary non-linear control. 
Active control by means of brain patterns (Mu frequency rhythm) and the player’s imagination of motor movement are also becoming popular as control signals for various applications, including avatar movement in virtual reality, operation of spelling devices, and neuroprostheses.Footnote 43 The challenge, then, is in devising and evaluating the kinds of mappings which are most suited to game-specific control (non-linear and narratively congruent mappings).
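The SSVEP selection and gaze-duration control described above can be sketched in two steps: first estimate which flicker rate dominates a window of EEG samples, then map how long the gaze has been held to a continuous mix value. The sketch below uses the Goertzel algorithm to measure signal power at each candidate frequency; this is one possible choice made for illustration, not necessarily the method used in the cited systems. The flicker rates, sample rate, four-second dwell range and function names are all hypothetical, and a real system would need artefact removal before this stage.

```python
import math


def goertzel_power(samples, sample_rate, target_hz):
    """Squared magnitude of the DFT bin nearest target_hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def attended_target(samples, sample_rate, flicker_rates_hz):
    """Return the candidate flicker rate with the most power in the window."""
    return max(flicker_rates_hz,
               key=lambda f: goertzel_power(samples, sample_rate, f))


def dwell_to_mix(dwell_seconds, full_dwell_seconds=4.0):
    """Map gaze duration to a mix ratio in [0, 1] (range is an assumption)."""
    return max(0.0, min(1.0, dwell_seconds / full_dwell_seconds))


# Synthetic one-second window standing in for a player attending a 12 Hz stimulus.
rate = 256
window = [math.sin(2.0 * math.pi * 12.0 * t / rate) for t in range(rate)]
chosen = attended_target(window, rate, [8.0, 12.0, 15.0])
mix = dwell_to_mix(2.0)
```

Here the selection step plays the role of choosing a motif or instrument set, and the dwell value could then set the ratio for the selected passages.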

Non-Linearity

Non-linearity is a relatively straightforward concept for game soundtracking – music with a static duration may not fit synchronously with player actions. Emotional congruence can also be quickly explained: Let us imagine an MMORPG such as World of Warcraft. The player might be in a part of a sequence which requires generically happy-sounding music – no immediate threat, for example. The tune which is selected by the audio engine might be stylistically related to ambient, relaxing music, but in this particular case, wind chimes and drone notes have negative connotations for the player. This soundscape might then create the opposite of the intended emotional state in the player. In related work, there is a growing body of evidence which suggests that when sad music is played to listeners who are in a similar emotional state, the net effect can actually be that the listeners’ emotional response is positive, due to an emotional mirroring effect which releases some neurosympathetic responses.Footnote 44 There is some suggestion that music has the power to make the hearer feel as though it is a sympathetic listener, and to make people in negative emotional states feel listened to – essentially, by mirroring. So, simply giving generically sad music to the player at a challenging point in the narrative (e.g., low player health, death of an NPC or similar point in the narrative) might not achieve the originally intended effect.

One solution might be to apply procedural audio techniques to an individual player’s own selection of music (a little like the way in which Grand Theft Auto games allow players to switch between radio stations), or in an ideal world, to use biophysiological measures of emotion to manipulate a soundtrack according to biofeedback response in order to best maximize the intended, induced emotional response in an individual player on a case-by-case basis.

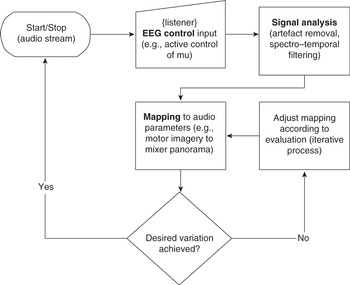

Figure 17.1 shows a suggested signal flow illustrating how existing biosensor research might be implemented in this fashion, for example in a non-linear, biofeedback-informed game audio system based on metrics including heart rate, electro-dermal activity, and so on, as previously mentioned.

Figure 17.1 Overview of signal flow and iterative evaluation process

In Figure 17.1, real-time input is analysed and filtered, including artefact removal to produce simple control signals. Control signals are then mapped to mixer parameters, for example, combining different instrumentation or musical motifs. In the case of a horror game, is the user ‘scared’ enough? If not, adjust the music. Is the user ‘bored’? If so, adjust the music, and so on. Any number of statistical data reduction techniques might be used in the signal analysis block. For details of previous studies using principal component analysis for music measurement when analysing EEG, the interested reader is referred to previous work.Footnote 45
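The evaluation loop described for Figure 17.1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the system in the figure: it assumes that upstream analysis has already reduced the sensor data to a single arousal estimate between 0 and 1, uses an exponential moving average as a stand-in for the filtering stage, and nudges a single hypothetical music intensity parameter whenever the player falls outside a target band. The band limits, step size and function names are invented for the example.

```python
def smooth(previous, sample, alpha=0.2):
    """Exponential moving average; a stand-in for the filtering stage."""
    return previous + alpha * (sample - previous)


def adjust_intensity(intensity, arousal, target_low=0.4, target_high=0.7, step=0.1):
    """Raise intensity if the player is under-aroused, lower it if over-aroused."""
    if arousal < target_low:
        intensity += step
    elif arousal > target_high:
        intensity -= step
    # Clamp so the control value always stays within the mixer's range.
    return max(0.0, min(1.0, intensity))


def run_loop(arousal_samples, intensity=0.5):
    """Apply smoothing and adjustment once per incoming sample."""
    estimate = arousal_samples[0]
    history = []
    for sample in arousal_samples:
        estimate = smooth(estimate, sample)
        intensity = adjust_intensity(intensity, estimate)
        history.append(round(intensity, 2))
    return history


# A player who is not 'scared' enough: intensity is stepped up on each pass.
bored_player = run_loop([0.2, 0.2, 0.2])
```

The upper limit of the target band also acts as a simple ceiling on how far the system will push the player, which may be one practical place to address concerns about an experience becoming too intense.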

One of the best-known examples of interactive, narratively congruous music is the iMUSE system by LucasArts (popularized in Monkey Island 2, amongst other games), in which musical material adapts to the player character’s location and the game action.Footnote 46 Its musical transformations include vertical sequencing (adding or removing instrumentation) and horizontal (time-based) resequencing (such as looping sections, or jumping to different sections of a theme). Adding biosensor feedback would allow such changes to respond to a further control signal: that of the player’s emotional state.
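To illustrate how a biosensor-derived control signal might drive the two kinds of transformation just described, the sketch below maps a single control value between 0 and 1 to per-layer gains (vertical) and to a choice of the next section to play (horizontal). It is not a description of how iMUSE works internally; the layer names, section names, thresholds and fade width are all hypothetical.

```python
# Hypothetical layers: (name, control level at which the layer is fully audible).
LAYERS = [("pads", 0.0), ("percussion", 0.3), ("strings", 0.6), ("brass", 0.8)]


def layer_gains(control, layers=LAYERS, fade_width=0.2):
    """Vertical sequencing: fade each layer in as the control value rises."""
    gains = {}
    for name, full_at in layers:
        gain = 1.0 - (full_at - control) / fade_width
        gains[name] = max(0.0, min(1.0, gain))
    return gains


def next_section(control):
    """Horizontal resequencing: pick the section to jump to at the next boundary."""
    if control < 0.33:
        return "calm_loop"
    if control < 0.66:
        return "tension_loop"
    return "climax"


gains = layer_gains(0.5)
section = next_section(0.9)
```

In practice such changes would usually be quantized to bar or phrase boundaries so that transitions remain musical; that scheduling is omitted here.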

There are ethical concerns when collecting a player’s biophysiological responses, beyond the immediate data protection requirements. Should the game report when it notes unusual biophysiological responses? The hardware is likely to be well below medical grade, and false reporting could create psychological trauma. What if, in the case of a game like Nevermind, the adaptations using biofeedback are too effective? Again, this could result in psychological trauma. Perhaps we should borrow the principle of the Hippocratic oath – first, do no harm – when considering these scenarios, and moreover it could be argued that playing a game gives some degree of informed consent in the case of extremely immersive experiences. Within the context of gameplay, the idea of ‘harm’ becomes somewhat nebulous, but there is certainly room to consider levels of consent for the player in particularly intense games.

Adaptive Game Soundtracking: Biofeedback-Driven Soundtracks?

For video game soundtracking to move towards enhancing player immersion, we might suggest that simple modulation and procedural audio techniques need to be made more sophisticated to be able to adapt musical features to players’ emotional responses to music in full synchronization. A fully realized system might also need to incorporate elements of surprise, for example. Biomarkers indicating lack of attention or focus could be used as control signals for such audio cues, depending on the gameplay – ERP in brain activity would be one readily available biophysiological cue. As such, there is a serious argument to be made for the use of active biophysiological sensor mapping to assist access in terms of inclusion for gaming: players who might otherwise be unable to enjoy gaming could potentially take part using this technology, for example in the field of EEG games.Footnote 47 EEG games remain primarily the domain of research activity rather than commercial practice at the time of writing, though Facebook have very recently announced the acquisition of the MYO brand of ‘mind-reading wrist wearables’. Significant advances have already been made in the field of brain–computer music interfacing. Might bio-responsive control signals be able to take these advances to the next level by providing inclusive soundtrack design for players with a wide range of access issues, as in the case of the EEG games above? The use of musical stimuli to mediate or entrain a player’s emotional state (i.e., through biofeedback) also remains a fertile area for research activity.Footnote 48 Neurofeedback is becoming increasingly common in the design of brain–computer music interfacing for specific purposes such as therapeutic applications, and this emerging field might be readily adaptable to gaming scenarios. 
This would potentially offer game soundtrack augmentation to listeners who would benefit from context-specific or adaptive audio, including non-linear immersive audio in gaming or virtual reality, by harnessing the power of music to facilitate emotional contagion, communication and perhaps most importantly, interaction with other players in the context of multiplayer games (e.g., MMORPGs).

Conclusions

Music has been shown to induce physical responses on conscious and unconscious levels.Footnote 49 Such measurements can be used as indicators of affective states. There are established methods for evaluating emotional responses in traditional psychology and cognitive sciences. These can be, and have been, adapted to the evaluation of emotional responses to music. One such popular model is the two-dimensional (or circumplex) model of affect. However, this type of model is not without its problems. For example, let us consider a state which is very negative and also very active (low valence and high arousal). How would you describe the player in this condition: afraid, or angry? Both are very active, negative states, but they are quite different types of emotional response. Hence many other dimensional or descriptive approaches to emotion measurement have been proposed by music psychologists. This is a difficult question to answer based on the current state of research – we might at this stage simply have to say that existing models do not yet give us the full range we need when thinking about emotional response in active gameplay. Again, biosensors potentially offer a solution to overcoming these challenges in evaluating player emotional states.Footnote 50 However, player immersion remains well understood by the gaming community and as such is a desirable, measurable attribute.Footnote 51 Emotional congruence between soundtracking and gameplay is also a measurable attribute, provided that the players evaluating this attribute have a shared understanding of exactly what it is they are being asked to evaluate. Considering the affective potential of music in video games is a useful way of understanding a player’s experience of emotion in the gameplay.

These solutions may sound somewhat far-fetched, but we have already seen a vast increase in consumer-level wearable biosensor technology, and decreasing computational cost associated with technology like facial recognition of emotional state – so these science-fiction ideas may well become commercially viable in the world of game audio soon. Scholarship in musicology has shown an increasing interest in the psychology of music, and the impact of music on emotions – but the interplay of music, emotion and play more generally remains a fertile area for further work, which this type of research may help to facilitate.