1. Introduction

A feedback control method for skin-friction drag reduction by Choi, Moin & Kim (Reference Choi, Moin and Kim1994), called opposition control, is a physics-based control strategy that mitigates the strength of near-wall streamwise vortices in a channel by providing blowing and suction at the wall ![]() $(\phi )$ which is 180

$(\phi )$ which is 180![]() $^{\circ }$ out-of-phase with the instantaneous wall-normal velocity

$^{\circ }$ out-of-phase with the instantaneous wall-normal velocity ![]() $v$ above the wall. Choi et al. (Reference Choi, Moin and Kim1994) showed that the sensing-plane location of

$v$ above the wall. Choi et al. (Reference Choi, Moin and Kim1994) showed that the sensing-plane location of ![]() $y^+ \approx 10$ (i.e.

$y^+ \approx 10$ (i.e. ![]() $\phi =-v_{y^+\approx 10}$) was the optimal location providing 25 % skin-friction drag reduction in a turbulent channel flow, where

$\phi =-v_{y^+\approx 10}$) was the optimal location providing 25 % skin-friction drag reduction in a turbulent channel flow, where ![]() $y^+=yu_{\tau }/\nu$,

$y^+=yu_{\tau }/\nu$, ![]() $y$ is the wall-normal distance from the wall,

$y$ is the wall-normal distance from the wall, ![]() $u_{\tau }$ is the wall shear velocity and

$u_{\tau }$ is the wall shear velocity and ![]() $\nu$ is the kinematic viscosity. Later, a number of studies have investigated the detailed characteristics of opposition control. Hammond, Bewley & Moin (Reference Hammond, Bewley and Moin1998) showed that the sensing-plane location of

$\nu$ is the kinematic viscosity. Later, a number of studies have investigated the detailed characteristics of opposition control. Hammond, Bewley & Moin (Reference Hammond, Bewley and Moin1998) showed that the sensing-plane location of ![]() $y^+ = 15$ provided slightly more drag reduction than that of

$y^+ = 15$ provided slightly more drag reduction than that of ![]() $y^+ = 10$. Chung & Talha (Reference Chung and Talha2011) reported that the maximum drag-reduction rate with a given sensing location depended on the amplitude of blowing/suction. For example, approximately 10 % drag reduction was obtained with

$y^+ = 10$. Chung & Talha (Reference Chung and Talha2011) reported that the maximum drag-reduction rate with a given sensing location depended on the amplitude of blowing/suction. For example, approximately 10 % drag reduction was obtained with ![]() $\phi =-(v_{y^+=25}/5)$, whereas the drag increased with

$\phi =-(v_{y^+=25}/5)$, whereas the drag increased with ![]() $\phi =-v_{y^+=25}$. The effect of the Reynolds number had been also investigated; the maximum drag-reduction rate decreased as the Reynolds number increased (Chang, Collis & Ramakrishnan Reference Chang, Collis and Ramakrishnan2002; Iwamoto, Suzuki & Kasagi Reference Iwamoto, suzuki and Kasagi2002), but drag reduction of 20 % was still achieved at

$\phi =-v_{y^+=25}$. The effect of the Reynolds number had been also investigated; the maximum drag-reduction rate decreased as the Reynolds number increased (Chang, Collis & Ramakrishnan Reference Chang, Collis and Ramakrishnan2002; Iwamoto, Suzuki & Kasagi Reference Iwamoto, suzuki and Kasagi2002), but drag reduction of 20 % was still achieved at ![]() $Re_{\tau } = 1000$ with a sensing location of

$Re_{\tau } = 1000$ with a sensing location of ![]() $y^+ = 13.5$ (Wang, Huang & Xu Reference Wang, Huang and Xu2016), where

$y^+ = 13.5$ (Wang, Huang & Xu Reference Wang, Huang and Xu2016), where ![]() $Re_{\tau } = u_{\tau } \delta / \nu$ and

$Re_{\tau } = u_{\tau } \delta / \nu$ and ![]() $\delta$ is the channel half-height. Rebbeck & Choi (Reference Rebbeck and Choi2001, Reference Rebbeck and Choi2006) experimentally conducted opposition control with a single pair of sensing probe and actuator, and showed that strong downwash motions near the wall were suppressed by the blowing at the wall.

$\delta$ is the channel half-height. Rebbeck & Choi (Reference Rebbeck and Choi2001, Reference Rebbeck and Choi2006) experimentally conducted opposition control with a single pair of sensing probe and actuator, and showed that strong downwash motions near the wall were suppressed by the blowing at the wall.

Since it is difficult and even impractical to measure the instantaneous wall-normal velocity ![]() $v$ at

$v$ at ![]() $y^+=10$ (

$y^+=10$ (![]() $v_{10}$ hereafter), opposition controls using predicted

$v_{10}$ hereafter), opposition controls using predicted ![]() $v_{10}$'s (

$v_{10}$'s (![]() $v_{10}^{pred}$'s) from wall variables such as the wall pressure and shear stresses have been searched for. For example, Choi et al. (Reference Choi, Moin and Kim1994) conducted a Taylor series expansion on near-wall wall-normal velocity,

$v_{10}^{pred}$'s) from wall variables such as the wall pressure and shear stresses have been searched for. For example, Choi et al. (Reference Choi, Moin and Kim1994) conducted a Taylor series expansion on near-wall wall-normal velocity,

where ![]() $y=0$ is the wall location and the subscript

$y=0$ is the wall location and the subscript ![]() $w$ denotes the wall. Due to the continuity

$w$ denotes the wall. Due to the continuity ![]() $({\partial v}/{\partial y} = - {\partial u}/{\partial x} - {\partial w}/{\partial z})$,

$({\partial v}/{\partial y} = - {\partial u}/{\partial x} - {\partial w}/{\partial z})$,

where ![]() $x$ and

$x$ and ![]() $z$ are the streamwise and spanwise directions, respectively, and

$z$ are the streamwise and spanwise directions, respectively, and ![]() $u$ and

$u$ and ![]() $w$ are the corresponding velocity components. Because the first term in the bracket had a negligible correlation with v 10,

$w$ are the corresponding velocity components. Because the first term in the bracket had a negligible correlation with v 10,

and they applied

resulting in approximately 6 % drag reduction. The correlation coefficient between ![]() $v_{10}$ and

$v_{10}$ and ![]() $v$ predicted using this Taylor series expansion was

$v$ predicted using this Taylor series expansion was ![]() $\rho _{v_{10}} \approx 0.75$, which is not low but not high enough to produce a significant amount of drag reduction. Here, the correlation coefficient between

$\rho _{v_{10}} \approx 0.75$, which is not low but not high enough to produce a significant amount of drag reduction. Here, the correlation coefficient between ![]() $v_{10}$ and

$v_{10}$ and ![]() $\psi$ is defined as

$\psi$ is defined as ![]() $\rho _{v_{10}} = \langle v_{10}(x,z,t) \psi (x,z,t) \rangle /({v_{10,rms}}{\psi _{rms}})$, where

$\rho _{v_{10}} = \langle v_{10}(x,z,t) \psi (x,z,t) \rangle /({v_{10,rms}}{\psi _{rms}})$, where ![]() $\langle \ \rangle$ denotes the averaging in the homogeneous directions (

$\langle \ \rangle$ denotes the averaging in the homogeneous directions (![]() $x, z$) and time, and the subscript

$x, z$) and time, and the subscript ![]() $rms$ indicates the root-mean square. Bewley & Protas (Reference Bewley and Protas2004) retained even high-order terms (up to the terms of

$rms$ indicates the root-mean square. Bewley & Protas (Reference Bewley and Protas2004) retained even high-order terms (up to the terms of ![]() $O(y^5)$) in the Taylor series expansion, but high-order terms rather degraded the correlation. Several studies have presented methods of predicting the near-wall velocity from the flow variables at the wall or away from the wall using direct numerical simulation (DNS) data. Podvin & Lumley (Reference Podvin and Lumley1998) conducted a proper orthogonal decomposition (POD) to the streamwise and spanwise wall velocity gradients (

$O(y^5)$) in the Taylor series expansion, but high-order terms rather degraded the correlation. Several studies have presented methods of predicting the near-wall velocity from the flow variables at the wall or away from the wall using direct numerical simulation (DNS) data. Podvin & Lumley (Reference Podvin and Lumley1998) conducted a proper orthogonal decomposition (POD) to the streamwise and spanwise wall velocity gradients (![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$), and showed that near-wall streamwise streaks were reconstructed well but wall-normal and spanwise velocities were not very well reproduced. Bewley & Protas (Reference Bewley and Protas2004) developed an adjoint-based estimator which was optimized by solving the adjoint Navier–Stokes equations. An estimator using all three wall variables (

$\partial w/\partial y \vert _w$), and showed that near-wall streamwise streaks were reconstructed well but wall-normal and spanwise velocities were not very well reproduced. Bewley & Protas (Reference Bewley and Protas2004) developed an adjoint-based estimator which was optimized by solving the adjoint Navier–Stokes equations. An estimator using all three wall variables (![]() $\partial u/\partial y \vert _w$,

$\partial u/\partial y \vert _w$, ![]() $\partial w/\partial y \vert _w$ and

$\partial w/\partial y \vert _w$ and ![]() $p_w$ (wall pressure)) showed a better prediction of near-wall velocity components for a turbulent channel flow at

$p_w$ (wall pressure)) showed a better prediction of near-wall velocity components for a turbulent channel flow at ![]() $Re_{\tau }=100$ than that from the Taylor series expansion, showing

$Re_{\tau }=100$ than that from the Taylor series expansion, showing ![]() $\rho _{v_{10}} \approx 0.88$. Hœpffner et al. (Reference Hœpffner, Chevalier, Bewley and Henningson2005) and Chevalier et al. (Reference Chevalier, Hœpffner, Bewley and Henningson2006) developed a linear estimation model based on the linearized Navier–Stokes equations and a Kalman filter. They improved the performance of the estimator by treating nonlinear terms in the Navier–Stokes equations as the external forcings which were sampled from DNS data, and obtained

$\rho _{v_{10}} \approx 0.88$. Hœpffner et al. (Reference Hœpffner, Chevalier, Bewley and Henningson2005) and Chevalier et al. (Reference Chevalier, Hœpffner, Bewley and Henningson2006) developed a linear estimation model based on the linearized Navier–Stokes equations and a Kalman filter. They improved the performance of the estimator by treating nonlinear terms in the Navier–Stokes equations as the external forcings which were sampled from DNS data, and obtained ![]() $\rho _{v_{10}} \approx 0.85$ using three wall variables of

$\rho _{v_{10}} \approx 0.85$ using three wall variables of ![]() $\omega _y \vert _w$,

$\omega _y \vert _w$, ![]() $\partial ^2 v/\partial y^2 \vert _w$, and

$\partial ^2 v/\partial y^2 \vert _w$, and ![]() $p_w$ for a turbulent channel flow at

$p_w$ for a turbulent channel flow at ![]() $Re_{\tau }=100$, where

$Re_{\tau }=100$, where ![]() $\omega _y \vert _w$ is the wall-normal vorticity at the wall. Illingworth, Monty & Marusic (Reference Illingworth, Monty and Marusic2018) applied a linear estimator similar to that of Chevalier et al. (Reference Chevalier, Hœpffner, Bewley and Henningson2006) to a turbulent channel flow at

$\omega _y \vert _w$ is the wall-normal vorticity at the wall. Illingworth, Monty & Marusic (Reference Illingworth, Monty and Marusic2018) applied a linear estimator similar to that of Chevalier et al. (Reference Chevalier, Hœpffner, Bewley and Henningson2006) to a turbulent channel flow at ![]() $Re_{\tau }=1000$, and predicted large scale

$Re_{\tau }=1000$, and predicted large scale ![]() $u$ at an arbitrary

$u$ at an arbitrary ![]() $y$ location using all three velocity components at

$y$ location using all three velocity components at ![]() $y^+=197$. A linear estimator based on

$y^+=197$. A linear estimator based on ![]() $\partial u/\partial y \vert _w$ also reasonably predicted large scale

$\partial u/\partial y \vert _w$ also reasonably predicted large scale ![]() $u$ at an arbitrary

$u$ at an arbitrary ![]() $y$, but its performance was not better than that using all three velocity components at

$y$, but its performance was not better than that using all three velocity components at ![]() $y^+=400$ in a turbulent channel flow at

$y^+=400$ in a turbulent channel flow at ![]() $Re_{\tau }=2000$ (Oehler, Garcia-Gutiérrez & Illingworth Reference Oehler, Garcia-Gutiérrez and Illingworth2018). Oehler & Illingworth (Reference Oehler, Illingworth, Lau and Kelso2018) used an estimator to impose a body forcing

$Re_{\tau }=2000$ (Oehler, Garcia-Gutiérrez & Illingworth Reference Oehler, Garcia-Gutiérrez and Illingworth2018). Oehler & Illingworth (Reference Oehler, Illingworth, Lau and Kelso2018) used an estimator to impose a body forcing ![]() $f_b \vert _{y=y_b}$ predicted by sensing

$f_b \vert _{y=y_b}$ predicted by sensing ![]() $u \vert _{y=y_s}$ or

$u \vert _{y=y_s}$ or ![]() $\partial u/\partial y \vert _w$, for the minimization of the magnitude of the velocity fluctuations in a turbulent channel flow at

$\partial u/\partial y \vert _w$, for the minimization of the magnitude of the velocity fluctuations in a turbulent channel flow at ![]() $Re_{\tau }=2000$, and obtained a minimum value when

$Re_{\tau }=2000$, and obtained a minimum value when ![]() $y_s=0.26 \delta$ and

$y_s=0.26 \delta$ and ![]() $y_b = 0.29 \delta$.

$y_b = 0.29 \delta$.

Another approach for predicting ![]() $v_{10}$ with the wall variables is using a neural network. Lee et al. (Reference Lee, Kim, Babcock and Goodman1997) applied a neural network for the first time to perform a control with

$v_{10}$ with the wall variables is using a neural network. Lee et al. (Reference Lee, Kim, Babcock and Goodman1997) applied a neural network for the first time to perform a control with ![]() $v_{10}^{pred}$ (predicted

$v_{10}^{pred}$ (predicted ![]() $v_{10}$) in a turbulent channel flow at

$v_{10}$) in a turbulent channel flow at ![]() $Re_{\tau }=100$. They used the information of

$Re_{\tau }=100$. They used the information of ![]() $\partial w/\partial y \vert _w$ along the spanwise direction to predict

$\partial w/\partial y \vert _w$ along the spanwise direction to predict ![]() $v_{10}$ (i.e.

$v_{10}$ (i.e. ![]() $v_{10}^{pred}(x,z)=f({\left . \partial w/\partial y\right |_w}(x,z\pm n\Delta z)),\ n=0,1,2,\ldots )$, and showed that the spanwise length of at least 90 wall units was required for accurately predicting

$v_{10}^{pred}(x,z)=f({\left . \partial w/\partial y\right |_w}(x,z\pm n\Delta z)),\ n=0,1,2,\ldots )$, and showed that the spanwise length of at least 90 wall units was required for accurately predicting ![]() $v_{10}$ with

$v_{10}$ with ![]() $\partial w/\partial y \vert _w$'s, resulting in

$\partial w/\partial y \vert _w$'s, resulting in ![]() $\rho _{v_{10}}$ of approximately 0.85 and 18 % drag reduction. Lorang, Podvin & Le Quéré (Reference Lorang, Podvin and Le Quéré2008) obtained the first POD mode of

$\rho _{v_{10}}$ of approximately 0.85 and 18 % drag reduction. Lorang, Podvin & Le Quéré (Reference Lorang, Podvin and Le Quéré2008) obtained the first POD mode of ![]() $v_{10}$ with a neural network by sensing whole domain information of

$v_{10}$ with a neural network by sensing whole domain information of ![]() $\partial w/\partial y \vert _w$ in a turbulent channel flow at

$\partial w/\partial y \vert _w$ in a turbulent channel flow at ![]() $Re_{\tau }=140$, and performed a control with it, resulting in a drag reduction of 13 % which was slightly smaller than the amount of drag reduction (14 %) with the method of Lee et al. (Reference Lee, Kim, Babcock and Goodman1997). The difference in the amounts of drag reduction from those two studies may come from the difference in the Reynolds numbers, i.e.

$Re_{\tau }=140$, and performed a control with it, resulting in a drag reduction of 13 % which was slightly smaller than the amount of drag reduction (14 %) with the method of Lee et al. (Reference Lee, Kim, Babcock and Goodman1997). The difference in the amounts of drag reduction from those two studies may come from the difference in the Reynolds numbers, i.e. ![]() $Re_{\tau }=100$ versus

$Re_{\tau }=100$ versus ![]() $140$. Milano & Koumoutsakos (Reference Milano and Koumoutsakos2002) used a neural network to predict high-order terms (

$140$. Milano & Koumoutsakos (Reference Milano and Koumoutsakos2002) used a neural network to predict high-order terms (![]() $O(y^3)$) of the Taylor series expansion of near-wall velocity components by sensing

$O(y^3)$) of the Taylor series expansion of near-wall velocity components by sensing ![]() $p_w$,

$p_w$, ![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$, and the reconstructed streamwise and spanwise velocities had correlations higher than 0.9, but

$\partial w/\partial y \vert _w$, and the reconstructed streamwise and spanwise velocities had correlations higher than 0.9, but ![]() $\rho _{v_{10}}$ (obtained from the continuity) was only approximately 0.6. Recently, Yun & Lee (Reference Yun and Lee2017) used

$\rho _{v_{10}}$ (obtained from the continuity) was only approximately 0.6. Recently, Yun & Lee (Reference Yun and Lee2017) used ![]() $p_w$ to predict

$p_w$ to predict ![]() $v_{10}$ by a neural network with the streamwise and spanwise sensing lengths of 90 and 45 wall units, respectively, and showed

$v_{10}$ by a neural network with the streamwise and spanwise sensing lengths of 90 and 45 wall units, respectively, and showed ![]() $\rho _{v_{10}}=0.85$. These previous studies showed that the neural network is an attractive tool to predict

$\rho _{v_{10}}=0.85$. These previous studies showed that the neural network is an attractive tool to predict ![]() $v_{10}$ with wall-variable sensing, but shallow neural networks (one nonlinear layer in Lee et al. (Reference Lee, Kim, Babcock and Goodman1997) and Lorang et al. (Reference Lorang, Podvin and Le Quéré2008), two nonlinear layers in Milano & Koumoutsakos (Reference Milano and Koumoutsakos2002) and Yun & Lee (Reference Yun and Lee2017)) may not be sufficient to yield a high

$v_{10}$ with wall-variable sensing, but shallow neural networks (one nonlinear layer in Lee et al. (Reference Lee, Kim, Babcock and Goodman1997) and Lorang et al. (Reference Lorang, Podvin and Le Quéré2008), two nonlinear layers in Milano & Koumoutsakos (Reference Milano and Koumoutsakos2002) and Yun & Lee (Reference Yun and Lee2017)) may not be sufficient to yield a high ![]() $\rho _{v_{10}}$.

$\rho _{v_{10}}$.

In recent years, machine learning, especially deep learning (LeCun, Bengio & Hinton Reference LeCun, Bengio and Hinton2015), has shown remarkable performance. Güemes, Discetti & Ianiro (Reference Güemes, Discetti and Ianiro2019) applied an extended POD and convolutional neural networks, respectively, to reconstruct large- and very large-scale motions in a turbulent channel flow based on the wall shear stress measurement, and showed that the convolutional neural networks performed significantly better than the extended POD. Kim & Lee (Reference Kim and Lee2020) used a nine-layer convolutional neural network (CNN) to predict the heat flux at the wall using wall variables (![]() $p_w$,

$p_w$, ![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$), and showed that the CNN outperformed a linear regression. So far, there is no attempt to apply a CNN to the prediction of the near-wall flow (

$\partial w/\partial y \vert _w$), and showed that the CNN outperformed a linear regression. So far, there is no attempt to apply a CNN to the prediction of the near-wall flow (![]() $v_{10}$) from the flow variables at the wall and to the flow control in a feedback manner. Therefore, in the present study we first aim at predicting

$v_{10}$) from the flow variables at the wall and to the flow control in a feedback manner. Therefore, in the present study we first aim at predicting ![]() $v_{10}$ using a CNN which is currently the most successful deep learning method in discovering spatial distributions of a raw input that are closely related to a desired output, where the wall flow variables (

$v_{10}$ using a CNN which is currently the most successful deep learning method in discovering spatial distributions of a raw input that are closely related to a desired output, where the wall flow variables (![]() $p_w$,

$p_w$, ![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$) and

$\partial w/\partial y \vert _w$) and ![]() $v_{10}$ are the input and output, respectively, used in this study. We investigate how high

$v_{10}$ are the input and output, respectively, used in this study. We investigate how high ![]() $\rho _{v_{10}}$ can be achieved from the CNN as compared to fully connected neural networks (FCNN) used in the previous studies (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Milano & Koumoutsakos Reference Milano and Koumoutsakos2002; Lorang et al. Reference Lorang, Podvin and Le Quéré2008; Yun & Lee Reference Yun and Lee2017). We then perform opposition control with

$\rho _{v_{10}}$ can be achieved from the CNN as compared to fully connected neural networks (FCNN) used in the previous studies (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Milano & Koumoutsakos Reference Milano and Koumoutsakos2002; Lorang et al. Reference Lorang, Podvin and Le Quéré2008; Yun & Lee Reference Yun and Lee2017). We then perform opposition control with ![]() $v_{10}^{pred}$ predicted by the CNN. Because the controlled flow is not available in practice, we train our CNN only with the uncontrolled flow. Note that previous studies (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Lorang et al. Reference Lorang, Podvin and Le Quéré2008) used controlled flows to train the neural network. Finally, we apply the CNN to a higher Reynolds number flow to see if the prediction and control capabilities are maintained even if the CNN is trained with a lower Reynolds number flow. Details of the problem setting, CNN, and numerical method are presented in § 2. The prediction performance of the CNN is given in § 3. In § 4 we provide the results of control with

$v_{10}^{pred}$ predicted by the CNN. Because the controlled flow is not available in practice, we train our CNN only with the uncontrolled flow. Note that previous studies (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Lorang et al. Reference Lorang, Podvin and Le Quéré2008) used controlled flows to train the neural network. Finally, we apply the CNN to a higher Reynolds number flow to see if the prediction and control capabilities are maintained even if the CNN is trained with a lower Reynolds number flow. Details of the problem setting, CNN, and numerical method are presented in § 2. The prediction performance of the CNN is given in § 3. In § 4 we provide the results of control with ![]() $v_{10}^{pred}$ from the CNN. An application to a higher Reynolds number flow is given in § 5, followed by conclusions. In the appendices the results from other machine learning techniques such as the random forest and FCNN are given and their results are briefly discussed.

$v_{10}^{pred}$ from the CNN. An application to a higher Reynolds number flow is given in § 5, followed by conclusions. In the appendices the results from other machine learning techniques such as the random forest and FCNN are given and their results are briefly discussed.

2. Methodology

2.1. Problem setting

In the present study we predict ![]() $v_{10}$ from a spatial distribution of wall variables (

$v_{10}$ from a spatial distribution of wall variables (![]() $\chi _w$) in a turbulent channel flow, where a CNN is used to extract hidden features of

$\chi _w$) in a turbulent channel flow, where a CNN is used to extract hidden features of ![]() $\chi _w$ which may closely represent

$\chi _w$ which may closely represent ![]() $v_{10}$. We consider three different wall variables (

$v_{10}$. We consider three different wall variables (![]() $\chi _w=p_w$,

$\chi _w=p_w$, ![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$) that are measurable quantities in real systems (Kasagi, Suzuki & Fukagata Reference Kasagi, Suzuki and Fukagata2008). Each of these wall variables is used to predict

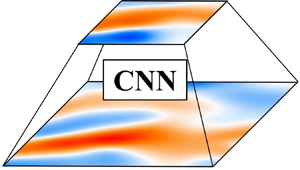

$\partial w/\partial y \vert _w$) that are measurable quantities in real systems (Kasagi, Suzuki & Fukagata Reference Kasagi, Suzuki and Fukagata2008). Each of these wall variables is used to predict ![]() $v_{10}$ (figure 1) and is used for the control. Since Bewley & Protas (Reference Bewley and Protas2004) and Chevalier et al. (Reference Chevalier, Hœpffner, Bewley and Henningson2006) showed that using more wall variables improved the prediction performance, all three wall variables are also used to predict

$v_{10}$ (figure 1) and is used for the control. Since Bewley & Protas (Reference Bewley and Protas2004) and Chevalier et al. (Reference Chevalier, Hœpffner, Bewley and Henningson2006) showed that using more wall variables improved the prediction performance, all three wall variables are also used to predict ![]() $v_{10}$ and the results are given in § 4.3. A region on the wall (coloured in yellow) in figure 1 is an example of the sensing region of the wall variable

$v_{10}$ and the results are given in § 4.3. A region on the wall (coloured in yellow) in figure 1 is an example of the sensing region of the wall variable ![]() $\chi _w$ whose streamwise and spanwise lengths are approximately 90 wall units. The size of each sensing region is selected considering those of previous studies in which at least 90 wall units in the spanwise direction was required for

$\chi _w$ whose streamwise and spanwise lengths are approximately 90 wall units. The size of each sensing region is selected considering those of previous studies in which at least 90 wall units in the spanwise direction was required for ![]() $\partial w/\partial y \vert _w$ (Lee et al. Reference Lee, Kim, Babcock and Goodman1997), and 90 wall units in the streamwise direction was sufficient for

$\partial w/\partial y \vert _w$ (Lee et al. Reference Lee, Kim, Babcock and Goodman1997), and 90 wall units in the streamwise direction was sufficient for ![]() $p_w$ (Yun & Lee Reference Yun and Lee2017). One of the wall variables is the input of the present CNN (see below), and the output is

$p_w$ (Yun & Lee Reference Yun and Lee2017). One of the wall variables is the input of the present CNN (see below), and the output is ![]() $v_{10}^{pred}$ at the centre location of each sensing region. As we show below, this size is not big enough to include the influence of

$v_{10}^{pred}$ at the centre location of each sensing region. As we show below, this size is not big enough to include the influence of ![]() $v_{10}$ on the wall variables, but is still sufficient to have a high correlation between

$v_{10}$ on the wall variables, but is still sufficient to have a high correlation between ![]() $v_{10}$ and

$v_{10}$ and ![]() $v_{10}^{pred}$.

$v_{10}^{pred}$.

Figure 1. Schematic diagram on the relation between the predicted ![]() $v$ at

$v$ at ![]() $y^+=10$ (

$y^+=10$ (![]() $v_{10}^{pred}$) and wall-variable distribution with a CNN in a turbulent channel flow. The input (

$v_{10}^{pred}$) and wall-variable distribution with a CNN in a turbulent channel flow. The input (![]() $\chi _w$) of the CNN is one of

$\chi _w$) of the CNN is one of ![]() $p_w$,

$p_w$, ![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$, and the output is

$\partial w/\partial y \vert _w$, and the output is ![]() $v_{10}^{pred}$.

$v_{10}^{pred}$.

The two-point correlation coefficient ![]() $\rho$ between

$\rho$ between ![]() $v_{10}$ and

$v_{10}$ and ![]() $\chi _w$ in a turbulent channel flow is defined as

$\chi _w$ in a turbulent channel flow is defined as

where ![]() $\langle v_{10} \rangle =0$, and

$\langle v_{10} \rangle =0$, and ![]() $\Delta x$ and

$\Delta x$ and ![]() $\Delta z$ are the separation distances in the streamwise and spanwise directions, respectively. Figure 2 shows the contours of the two-point correlations for three different flows: (

$\Delta z$ are the separation distances in the streamwise and spanwise directions, respectively. Figure 2 shows the contours of the two-point correlations for three different flows: (![]() $a$–

$a$–![]() $c$) uncontrolled flow at

$c$) uncontrolled flow at ![]() $Re_{\tau } = 178$, (

$Re_{\tau } = 178$, (![]() $d$–

$d$–![]() $f$) controlled flow with opposition control (Choi et al. Reference Choi, Moin and Kim1994) at

$f$) controlled flow with opposition control (Choi et al. Reference Choi, Moin and Kim1994) at ![]() $Re_{\tau } = 178$, and (

$Re_{\tau } = 178$, and (![]() $g$–

$g$–![]() $i$) uncontrolled flow at

$i$) uncontrolled flow at ![]() $Re_{\tau } = 578$, where

$Re_{\tau } = 578$, where ![]() $Re_{\tau } = u_{\tau _o} \delta / \nu$ and

$Re_{\tau } = u_{\tau _o} \delta / \nu$ and ![]() $u_{\tau _o}$ is the wall shear velocity of the uncontrolled flow. These correlation contours indicate that there are distinct regions of close relations between

$u_{\tau _o}$ is the wall shear velocity of the uncontrolled flow. These correlation contours indicate that there are distinct regions of close relations between ![]() $v_{10}$ and

$v_{10}$ and ![]() $\chi _w$. For the uncontrolled flow at

$\chi _w$. For the uncontrolled flow at ![]() $Re_{\tau } = 178$ (figure 2

$Re_{\tau } = 178$ (figure 2![]() $a$–

$a$–![]() $c$), the wall pressure has the highest correlation on the downstream of

$c$), the wall pressure has the highest correlation on the downstream of ![]() $v_{10}$, but has the lowest maximum correlation among three wall variables investigated in this study. The streamwise wall shear rate

$v_{10}$, but has the lowest maximum correlation among three wall variables investigated in this study. The streamwise wall shear rate ![]() $\partial u/\partial y \vert _w$ has the highest correlation at the upstream of

$\partial u/\partial y \vert _w$ has the highest correlation at the upstream of ![]() $v_{10}$, whereas the correlation with the spanwise wall shear rate

$v_{10}$, whereas the correlation with the spanwise wall shear rate ![]() $\partial w/\partial y \vert _w$ is highest at slightly downstream but sideways locations. The two-point correlation is highest for the spanwise wall shear rate, but this correlation magnitude (

$\partial w/\partial y \vert _w$ is highest at slightly downstream but sideways locations. The two-point correlation is highest for the spanwise wall shear rate, but this correlation magnitude (![]() $\rho = 0.56$) is not high enough to accurately predict

$\rho = 0.56$) is not high enough to accurately predict ![]() $v_{10}$. Also, these correlation contours themselves do not provide how one can construct

$v_{10}$. Also, these correlation contours themselves do not provide how one can construct ![]() $v_{10}$ from this information. Hence, in the present study, we construct

$v_{10}$ from this information. Hence, in the present study, we construct ![]() $v_{10}$ from the wall-variable information in

$v_{10}$ from the wall-variable information in ![]() $-45 < \Delta x^+ < 45$ and

$-45 < \Delta x^+ < 45$ and ![]() $-45 < \Delta z^+ < 45$ using a CNN, and discuss how high correlations can be obtained from this approach.

$-45 < \Delta z^+ < 45$ using a CNN, and discuss how high correlations can be obtained from this approach.

Figure 2. Contours of the correlation coefficients between ![]() $v_{10}$ and

$v_{10}$ and ![]() $\chi _w$: (

$\chi _w$: (![]() $a$–

$a$–![]() $c$) uncontrolled flow at

$c$) uncontrolled flow at ![]() $Re_{\tau } = 178$; (

$Re_{\tau } = 178$; (![]() $d$–

$d$–![]() $f$) controlled flow at

$f$) controlled flow at ![]() $Re_{\tau } = 178$ by opposition control; (

$Re_{\tau } = 178$ by opposition control; (![]() $g$–

$g$–![]() $i$) uncontrolled flow at

$i$) uncontrolled flow at ![]() $Re_{\tau } = 578$. (

$Re_{\tau } = 578$. (![]() $a{,}d{,}g$)

$a{,}d{,}g$) ![]() $\chi _w = p_w$, (

$\chi _w = p_w$, (![]() $b{,}e{,}h$)

$b{,}e{,}h$) ![]() $\chi _w = \partial u /\partial y \vert _w$ and (

$\chi _w = \partial u /\partial y \vert _w$ and (![]() $c{,}f{,}i$)

$c{,}f{,}i$) ![]() $\chi _w = \partial w / \partial y \vert _w$. Solid circles at the centre denote the location of

$\chi _w = \partial w / \partial y \vert _w$. Solid circles at the centre denote the location of ![]() $v_{10}$ (

$v_{10}$ (![]() $\Delta x = \Delta z = 0$), and cross symbols are the locations of the maximum correlation magnitude. The values of

$\Delta x = \Delta z = 0$), and cross symbols are the locations of the maximum correlation magnitude. The values of ![]() $\rho$ at these locations are given at the bottom of each figure. Here,

$\rho$ at these locations are given at the bottom of each figure. Here, ![]() $\Delta x^+ = \Delta x u_{\tau _o}/\nu$ and

$\Delta x^+ = \Delta x u_{\tau _o}/\nu$ and ![]() $\Delta z^+ = \Delta z u_{\tau _o}/\nu$.

$\Delta z^+ = \Delta z u_{\tau _o}/\nu$.

For the controlled flow at ![]() $Re_{\tau } = 178$ (figure 2

$Re_{\tau } = 178$ (figure 2![]() $d$–

$d$–![]() $f$), the correlations with

$f$), the correlations with ![]() $p_w$ and

$p_w$ and ![]() $\partial w/\partial y \vert _w$ are very similar to those for the uncontrolled flow. This suggests that a CNN trained with the uncontrolled flow can be applied to predict

$\partial w/\partial y \vert _w$ are very similar to those for the uncontrolled flow. This suggests that a CNN trained with the uncontrolled flow can be applied to predict ![]() $v_{10}$ for the controlled flow and also to control the flow in a feedback manner even without requiring training data of the controlled flow. On the other hand, the correlations with

$v_{10}$ for the controlled flow and also to control the flow in a feedback manner even without requiring training data of the controlled flow. On the other hand, the correlations with ![]() $\partial u/\partial y \vert _w$ have opposite signs in many places to those for the uncontrolled flow. This is because the blowing and suction at the wall from opposition control changes

$\partial u/\partial y \vert _w$ have opposite signs in many places to those for the uncontrolled flow. This is because the blowing and suction at the wall from opposition control changes ![]() $\partial u/\partial y \vert _w$ to be approximately

$\partial u/\partial y \vert _w$ to be approximately ![]() $180^{\circ }$ out-of-phase different from

$180^{\circ }$ out-of-phase different from ![]() $v_{10}$. For the uncontrolled flow at

$v_{10}$. For the uncontrolled flow at ![]() $Re_{\tau } = 578$ (figure 2

$Re_{\tau } = 578$ (figure 2![]() $g$–

$g$–![]() $i$), the correlations are very similar to those at

$i$), the correlations are very similar to those at ![]() $Re_{\tau } = 178$, as the near-wall flow is well scaled in wall units, which suggests that the CNN trained at a lower Reynolds number should be applicable to the flow at a higher Reynolds number.

$Re_{\tau } = 178$, as the near-wall flow is well scaled in wall units, which suggests that the CNN trained at a lower Reynolds number should be applicable to the flow at a higher Reynolds number.

Note that near-wall flow structures are significantly changed by opposition control (Choi et al. Reference Choi, Moin and Kim1994; Hammond et al. Reference Hammond, Bewley and Moin1998), and a higher Reynolds number flow contains smaller scales than those at ![]() $Re_{\tau } = 178$. Therefore, the success of the present control based on a CNN trained with uncontrolled flow at

$Re_{\tau } = 178$. Therefore, the success of the present control based on a CNN trained with uncontrolled flow at ![]() $Re_{\tau } = 178$ relies on the proper selection of wall sensing variable that maintains a similar correlation coefficient with

$Re_{\tau } = 178$ relies on the proper selection of wall sensing variable that maintains a similar correlation coefficient with ![]() $v_{10}$ for controlled and higher Reynolds number flows. For the present turbulent channel flow, the wall sensing variables satisfying this requirement are

$v_{10}$ for controlled and higher Reynolds number flows. For the present turbulent channel flow, the wall sensing variables satisfying this requirement are ![]() $p_w$ and

$p_w$ and ![]() $\partial w/\partial y \vert _w$, but

$\partial w/\partial y \vert _w$, but ![]() $\partial u/\partial y \vert _w$ fails to satisfy this requirement. The details of the CNN used are provided in § 2.3. Other machine learning techniques such as the Lasso, random forest and FCNN are also tested, and comparisons of the prediction performance by different machine learning techniques are given in appendix A.

$\partial u/\partial y \vert _w$ fails to satisfy this requirement. The details of the CNN used are provided in § 2.3. Other machine learning techniques such as the Lasso, random forest and FCNN are also tested, and comparisons of the prediction performance by different machine learning techniques are given in appendix A.

2.2. The dataset

The dataset (![]() $v_{10}^{true}$,

$v_{10}^{true}$, ![]() $\chi _w$) for training a CNN is obtained from direct numerical simulation of a turbulent channel flow at

$\chi _w$) for training a CNN is obtained from direct numerical simulation of a turbulent channel flow at ![]() $Re_{\tau } = 178$, where

$Re_{\tau } = 178$, where ![]() $v_{10}^{true}= v_{10}$. The governing equations for the continuity and incompressible Navier–Stokes equations are

$v_{10}^{true}= v_{10}$. The governing equations for the continuity and incompressible Navier–Stokes equations are

where ![]() $x_i\, (= (x,y,z))$ are the Cartesian coordinates,

$x_i\, (= (x,y,z))$ are the Cartesian coordinates, ![]() $u_i\, (= (u,v,w))$ are the corresponding velocity components,

$u_i\, (= (u,v,w))$ are the corresponding velocity components, ![]() $p$ is the pressure fluctuation,

$p$ is the pressure fluctuation, ![]() $-\textrm {d}P/\textrm {d}x_1$ is the mean pressure gradient to maintain a constant mass flow rate in a channel. The Reynolds number is

$-\textrm {d}P/\textrm {d}x_1$ is the mean pressure gradient to maintain a constant mass flow rate in a channel. The Reynolds number is ![]() $Re= 5600$ based on the bulk velocity (

$Re= 5600$ based on the bulk velocity (![]() $u_b$) and channel height (

$u_b$) and channel height (![]() $2 \delta$), and is 178 based on the wall shear velocity of the uncontrolled flow (

$2 \delta$), and is 178 based on the wall shear velocity of the uncontrolled flow (![]() $u_{\tau _o}$) and channel half-height (

$u_{\tau _o}$) and channel half-height (![]() $\delta$). A semi-implicit fractional step method is used to solve (2.2) and (2.3), where a third-order Runge–Kutta and the Crank–Nicolson schemes are used for the convection and diffusion terms, respectively. For spatial derivatives, the second-order central difference scheme is used. The no-slip condition is applied to the upper and lower walls, and periodic boundary conditions are used in the wall-parallel directions. The computational domain size is

$\delta$). A semi-implicit fractional step method is used to solve (2.2) and (2.3), where a third-order Runge–Kutta and the Crank–Nicolson schemes are used for the convection and diffusion terms, respectively. For spatial derivatives, the second-order central difference scheme is used. The no-slip condition is applied to the upper and lower walls, and periodic boundary conditions are used in the wall-parallel directions. The computational domain size is ![]() $3{\rm \pi} \delta (x) \times 2\delta (y) \times {\rm \pi}\delta (z)$ and the number of grid points is

$3{\rm \pi} \delta (x) \times 2\delta (y) \times {\rm \pi}\delta (z)$ and the number of grid points is ![]() $192(x) \times 129(y) \times 128(z)$. In the wall-normal direction a non-uniform grid is used with

$192(x) \times 129(y) \times 128(z)$. In the wall-normal direction a non-uniform grid is used with ![]() ${\Delta }{y^+} \approx 0.2 - 7.0$ (dense grids near the wall). Uniform grids are used in the wall-parallel directions with

${\Delta }{y^+} \approx 0.2 - 7.0$ (dense grids near the wall). Uniform grids are used in the wall-parallel directions with ![]() ${\Delta }{x^+} \approx 8.7$ and

${\Delta }{x^+} \approx 8.7$ and ![]() ${\Delta }{z^+} \approx 4.4$.

${\Delta }{z^+} \approx 4.4$.

The simulation starts with a laminar velocity profile with random perturbations and continues until the flow reaches a fully developed state. Then, 740 instantaneous fields of ![]() $v_{10}^{true}$ and

$v_{10}^{true}$ and ![]() $\chi _w$'s (

$\chi _w$'s (![]() $=p_w$,

$=p_w$, ![]() $\partial u/\partial y \vert _w$, and

$\partial u/\partial y \vert _w$, and ![]() $\partial w/\partial y \vert _w$) are stored during

$\partial w/\partial y \vert _w$) are stored during ![]() $T^+=Tu_{\tau _o}^2/\nu =29\,560$ with an interval of

$T^+=Tu_{\tau _o}^2/\nu =29\,560$ with an interval of ![]() ${\Delta }T^+=40$, where

${\Delta }T^+=40$, where ![]() $v_{10}^{true}$ is the label for output of a CNN (

$v_{10}^{true}$ is the label for output of a CNN (![]() $v_{10}^{pred}$), and

$v_{10}^{pred}$), and ![]() $\chi _w$'s are the input whose domain size is approximately

$\chi _w$'s are the input whose domain size is approximately ![]() $90 (l_x^+) \times 90 (l_z^+)$ in wall units (corresponding to

$90 (l_x^+) \times 90 (l_z^+)$ in wall units (corresponding to ![]() $11 \times 21$ grid points, respectively), as shown in figure 1. Here, one instantaneous field contains the information of

$11 \times 21$ grid points, respectively), as shown in figure 1. Here, one instantaneous field contains the information of ![]() $\chi _w$'s and

$\chi _w$'s and ![]() $v_{10}^{true}$ at both sides of the channel. The

$v_{10}^{true}$ at both sides of the channel. The ![]() $\chi _w$ and

$\chi _w$ and ![]() $v_{10}^{true}$ are normalized with their root-mean-square (subscript

$v_{10}^{true}$ are normalized with their root-mean-square (subscript ![]() $rms$) values as

$rms$) values as

where ![]() $\langle \chi _w \rangle$ denotes the mean value of

$\langle \chi _w \rangle$ denotes the mean value of ![]() $\chi _w$. The dataset of

$\chi _w$. The dataset of ![]() $\chi _w^{\ast }$ and

$\chi _w^{\ast }$ and ![]() $v_{10}^{\ast }$ is divided into three sets of different sizes, i.e. training, validation and test sets. Only the training set is used for optimizing a CNN. The validation set is used for checking the optimization process at each training iteration, and the prediction performance is evaluated with the test set after the whole training procedure is finished. We use 700 instantaneous fields (containing 34 406 400 pairs of

$v_{10}^{\ast }$ is divided into three sets of different sizes, i.e. training, validation and test sets. Only the training set is used for optimizing a CNN. The validation set is used for checking the optimization process at each training iteration, and the prediction performance is evaluated with the test set after the whole training procedure is finished. We use 700 instantaneous fields (containing 34 406 400 pairs of ![]() $\chi _w$'s and

$\chi _w$'s and ![]() $v_{10}^{true}$) for the training, and extract data at every third grid point in the streamwise and spanwise directions (resulting in approximately 3.8 million pairs of

$v_{10}^{true}$) for the training, and extract data at every third grid point in the streamwise and spanwise directions (resulting in approximately 3.8 million pairs of ![]() $\chi _w$'s and

$\chi _w$'s and ![]() $v_{10}^{true}$), respectively, to exclude highly correlated data. Twenty instantaneous fields (containing 983,040 pairs of

$v_{10}^{true}$), respectively, to exclude highly correlated data. Twenty instantaneous fields (containing 983,040 pairs of ![]() $\chi _w$'s and

$\chi _w$'s and ![]() $v_{10}^{true}$) are used for each validation and test set. Here, we use the number of training data of

$v_{10}^{true}$) are used for each validation and test set. Here, we use the number of training data of ![]() $N_{train} \approx 3.8 \times 10^6$ which is approximately three times that used in the ImageNet large-scale visual recognition challenge (ILSVRC) for developing convolutional neural networks (Krizhevsky, Sutskever & Hinton Reference Krizhevsky, Sutskever, Hinton, Pereira, Burges and Bottou2012; Simonyan & Zisserman Reference Simonyan and Zisserman2014; Szegedy et al. Reference Szegedy, Liu, Jia, Sermanet, Reed, Anguelov, Erhan, Vanhoucke and Rabinovich2014; He et al. Reference He, Zhang, Ren and Sun2015; Russakovsky et al. Reference Russakovsky, Deng, Su, Krause, Satheesh, Ma, Huang, Karpathy, Khosla and Bernstein2015). This is because the present training searches for the spatial correlations of

$N_{train} \approx 3.8 \times 10^6$ which is approximately three times that used in the ImageNet large-scale visual recognition challenge (ILSVRC) for developing convolutional neural networks (Krizhevsky, Sutskever & Hinton Reference Krizhevsky, Sutskever, Hinton, Pereira, Burges and Bottou2012; Simonyan & Zisserman Reference Simonyan and Zisserman2014; Szegedy et al. Reference Szegedy, Liu, Jia, Sermanet, Reed, Anguelov, Erhan, Vanhoucke and Rabinovich2014; He et al. Reference He, Zhang, Ren and Sun2015; Russakovsky et al. Reference Russakovsky, Deng, Su, Krause, Satheesh, Ma, Huang, Karpathy, Khosla and Bernstein2015). This is because the present training searches for the spatial correlations of ![]() $\chi _w$'s and

$\chi _w$'s and ![]() $v_{10}^{true}$ and, thus, it possesses some similarity with that of image recognition in the ILSVRC, but it may require more training data due to the unsteady characteristics of the present problem than that used in the ILSVRC. In appendix B we show that

$v_{10}^{true}$ and, thus, it possesses some similarity with that of image recognition in the ILSVRC, but it may require more training data due to the unsteady characteristics of the present problem than that used in the ILSVRC. In appendix B we show that ![]() $N_{train} \approx 3.8 \times 10^6$ is sufficient for the present problem.

$N_{train} \approx 3.8 \times 10^6$ is sufficient for the present problem.

2.3. Convolutional neural network

The CNN is a class of neural network, composed of input, hidden and output layers with artificial neurones. The CNN uses a discrete convolution operation with filters to construct the next layer keeping spatially two-dimensional feature maps. Therefore, unlike a FCNN whose inputs to a neurone are outputs from all neurones in the previous layer, local outputs from the previous layer in the CNN are inputs to a neurone, and neurones share the same weights (LeCun et al. Reference LeCun, Boser, Denker, Henderson, Howard, Hubbard, Jackel and Touretzky1989, Reference LeCun, Bengio and Hinton2015). Figure 3 shows the architecture of the CNN used in the present study. We use 17 hidden layers, one average pooling layer and one linear layer adopting a residual block proposed by He et al. (Reference He, Zhang, Ren and Sun2015). For the hidden layers without downsampling, we use a filter size of ![]() $3\times 3$ or

$3\times 3$ or ![]() $5\times 5$, with a stride of 1 for the convolution, where the stride is the magnitude of movement between applications of the filter to the input feature map (Singh & Manure Reference Singh and Manure2019). After the first and second downsampling layers, the height (

$5\times 5$, with a stride of 1 for the convolution, where the stride is the magnitude of movement between applications of the filter to the input feature map (Singh & Manure Reference Singh and Manure2019). After the first and second downsampling layers, the height (![]() $h_m$) and width (

$h_m$) and width (![]() $w_m$) of the feature maps are reduced by half, and the depth (

$w_m$) of the feature maps are reduced by half, and the depth (![]() $d_m$) is doubled, as in He et al. (Reference He, Zhang, Ren and Sun2015). We use a convolution operation with a stride of 2 and a filter size of

$d_m$) is doubled, as in He et al. (Reference He, Zhang, Ren and Sun2015). We use a convolution operation with a stride of 2 and a filter size of ![]() $2\times 2$ for the first and second downsampling layers. After

$2\times 2$ for the first and second downsampling layers. After ![]() $h_m$ or

$h_m$ or ![]() $w_m$ of the feature map becomes equal to

$w_m$ of the feature map becomes equal to ![]() $h_f$ or

$h_f$ or ![]() $w_f$ of the filter, respectively, we use global average pooling for the last downsampling (average pooling layer in figure 3), where the feature map is averaged while keeping the depth unchanged. After the average pooling layer, the feature map is connected to the linear layer to print out

$w_f$ of the filter, respectively, we use global average pooling for the last downsampling (average pooling layer in figure 3), where the feature map is averaged while keeping the depth unchanged. After the average pooling layer, the feature map is connected to the linear layer to print out ![]() $v_{10}^{pred}$ without an activation function. In the present CNN Relu (Nair & Hinton Reference Nair, Hinton, Fürnkranz and Joachims2010) is used as the activation function, and a batch normalization (Ioffe & Szegedy Reference Ioffe and Szegedy2015) is applied after each convolution operation. All weights (

$v_{10}^{pred}$ without an activation function. In the present CNN Relu (Nair & Hinton Reference Nair, Hinton, Fürnkranz and Joachims2010) is used as the activation function, and a batch normalization (Ioffe & Szegedy Reference Ioffe and Szegedy2015) is applied after each convolution operation. All weights (![]() $w_j$) in the filters are initialized by the Xavier method (Glorot & Bengio Reference Glorot, Bengio, Teh and Titterington2010), and they are optimized to minimize a given loss function defined as

$w_j$) in the filters are initialized by the Xavier method (Glorot & Bengio Reference Glorot, Bengio, Teh and Titterington2010), and they are optimized to minimize a given loss function defined as

\begin{equation} L = \frac{1}{2N}\sum_{i=1}^{N}{{{\left( \frac{v_{10\, i}^{pred}-v^{true}_{10 \,i}}{v^{true}_{10, rms}} \right)}^{2}}} + 0.025\sum_{j}{w_j^2}, \end{equation}

\begin{equation} L = \frac{1}{2N}\sum_{i=1}^{N}{{{\left( \frac{v_{10\, i}^{pred}-v^{true}_{10 \,i}}{v^{true}_{10, rms}} \right)}^{2}}} + 0.025\sum_{j}{w_j^2}, \end{equation}

where ![]() $N$ is the number of mini-batch data (256 in this study following He et al. Reference He, Zhang, Ren and Sun2015). An adaptive moment estimation (Kingma & Ba Reference Kingma and Ba2014), which is a variant of gradient descent, is used for updating the weights, and the gradients of the loss function with respect to the weights are calculated through the back-propagation algorithm (Rumelhart, Hinton & Williams Reference Rumelhart, Hinton and Williams1986). We conduct early stopping to prevent overfitting (Bengio Reference Bengio2012). There are many user-defined parameters in constructing a CNN. A study on these parameters is conducted and its results are given in appendix B.

$N$ is the number of mini-batch data (256 in this study following He et al. Reference He, Zhang, Ren and Sun2015). An adaptive moment estimation (Kingma & Ba Reference Kingma and Ba2014), which is a variant of gradient descent, is used for updating the weights, and the gradients of the loss function with respect to the weights are calculated through the back-propagation algorithm (Rumelhart, Hinton & Williams Reference Rumelhart, Hinton and Williams1986). We conduct early stopping to prevent overfitting (Bengio Reference Bengio2012). There are many user-defined parameters in constructing a CNN. A study on these parameters is conducted and its results are given in appendix B.

Figure 3. Architecture of the CNN used in the present study. Each box and arrow after the input and before the average pooling layer represent a hidden layer and flow of the feature maps, respectively. Dimensions of the feature maps, denoted as [height (![]() $h_m$), width (

$h_m$), width (![]() $w_m$), depth (

$w_m$), depth (![]() $d_m$)], are given next to the arrows, and the size and number of filters (

$d_m$)], are given next to the arrows, and the size and number of filters (![]() $h_f\times w_f\times d_{input}$,

$h_f\times w_f\times d_{input}$, ![]() $d_{output}$, respectively) are given inside each box. The

$d_{output}$, respectively) are given inside each box. The ![]() $h_m$ and

$h_m$ and ![]() $w_m$ are the numbers of grid points of the feature maps in the

$w_m$ are the numbers of grid points of the feature maps in the ![]() $z$ and

$z$ and ![]() $x$ directions, respectively. The

$x$ directions, respectively. The ![]() $h_f$ and

$h_f$ and ![]() $w_f$ are the numbers of filter weights in the

$w_f$ are the numbers of filter weights in the ![]() $z$ and

$z$ and ![]() $x$ directions, respectively. Zero paddings are used to adjust the sizes of

$x$ directions, respectively. Zero paddings are used to adjust the sizes of ![]() $h_m$ and

$h_m$ and ![]() $w_m$ of the feature maps after convolution operations. Grey-coloured boxes are the downsampling layers. A residual block without a downsampling layer (lower left figure) consists of two hidden layers, and its output is the sum of the output from the last hidden layer

$w_m$ of the feature maps after convolution operations. Grey-coloured boxes are the downsampling layers. A residual block without a downsampling layer (lower left figure) consists of two hidden layers, and its output is the sum of the output from the last hidden layer ![]() $f(x)$ and the input of the residual block

$f(x)$ and the input of the residual block ![]() $x$. For a residual block with a downsampling layer (lower right figure), its output is the sum of the output from the last hidden layer

$x$. For a residual block with a downsampling layer (lower right figure), its output is the sum of the output from the last hidden layer ![]() $f(x)$ and the downsampled input

$f(x)$ and the downsampled input ![]() $x^{\ast }$, where downsampling (

$x^{\ast }$, where downsampling (![]() $D^{\ast }$) is carried out with the same filter size and stride as those of the downsampling layer (

$D^{\ast }$) is carried out with the same filter size and stride as those of the downsampling layer (![]() $D$). For downsampling (

$D$). For downsampling (![]() $D$ and

$D$ and ![]() $D^{\ast }$), zero padding is applied on the bottom row or right column of a feature map when

$D^{\ast }$), zero padding is applied on the bottom row or right column of a feature map when ![]() $h_m$ or

$h_m$ or ![]() $w_m$ of the input

$w_m$ of the input ![]() $x$ is an odd number.

$x$ is an odd number.

3. Prediction performance

In this section we estimate the performance of the CNN in predicting ![]() $v_{10}^{true}$ with

$v_{10}^{true}$ with ![]() $\chi _w$'s by analysing the instantaneous and statistical quantities of

$\chi _w$'s by analysing the instantaneous and statistical quantities of ![]() $v_{10}^{pred}$'s.

$v_{10}^{pred}$'s.

3.1. Multiple input (spatial distribution of  $\chi _w$) and single output (

$\chi _w$) and single output ( $v_{10}^{pred}$ at a point)

$v_{10}^{pred}$ at a point)

The correlation coefficients between ![]() $v_{10}^{true}$ and

$v_{10}^{true}$ and ![]() $v_{10}^{pred}$'s by the CNN with

$v_{10}^{pred}$'s by the CNN with ![]() $\chi _w=p_w$,

$\chi _w=p_w$, ![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$ are

$\partial w/\partial y \vert _w$ are ![]() $\rho _{v_{10}} = 0.95$, 0.90 and 0.95, respectively, where

$\rho _{v_{10}} = 0.95$, 0.90 and 0.95, respectively, where ![]() $\rho _{v_{10}} = \langle v_{10}^{true}(x,z,t)v_{10}^{pred}(x,z,t) \rangle / ({v_{10,rms}^{true}}{v_{10,rms}^{pred}})$. These magnitudes are much bigger than the maximum two-point correlations described before (

$\rho _{v_{10}} = \langle v_{10}^{true}(x,z,t)v_{10}^{pred}(x,z,t) \rangle / ({v_{10,rms}^{true}}{v_{10,rms}^{pred}})$. These magnitudes are much bigger than the maximum two-point correlations described before (![]() $\rho = 0.36$, 0.50 and 0.56, respectively) and also those from other machine learning techniques considered (appendix A). Figure 4 shows the instantaneous fields of

$\rho = 0.36$, 0.50 and 0.56, respectively) and also those from other machine learning techniques considered (appendix A). Figure 4 shows the instantaneous fields of ![]() $v_{10}^{true}$ and

$v_{10}^{true}$ and ![]() $v_{10}^{pred}$'s reconstructed by the CNN, together with

$v_{10}^{pred}$'s reconstructed by the CNN, together with ![]() $\chi _w$'s. Although the distributions of

$\chi _w$'s. Although the distributions of ![]() $\chi _w$'s are very different from that of

$\chi _w$'s are very different from that of ![]() $v_{10}^{true}$, the CNN captures most of the

$v_{10}^{true}$, the CNN captures most of the ![]() $v_{10}$ field from all the wall variables investigated, indicating that the CNN is an adequate tool to predict

$v_{10}$ field from all the wall variables investigated, indicating that the CNN is an adequate tool to predict ![]() $v_{10}$. To understand how

$v_{10}$. To understand how ![]() $v_{10}^{pred}$ is correlated with

$v_{10}^{pred}$ is correlated with ![]() $\chi _w$, we compute the saliency map proposed by Simonyan, Vedaldi & Zisserman (Reference Simonyan, Vedaldi and Zisserman2013), and provide the results in appendix C.

$\chi _w$, we compute the saliency map proposed by Simonyan, Vedaldi & Zisserman (Reference Simonyan, Vedaldi and Zisserman2013), and provide the results in appendix C.

Figure 4. Contours of the instantaneous ![]() $v_{10}^{true}$,

$v_{10}^{true}$, ![]() $v_{10}^{pred}$'s and instantaneous

$v_{10}^{pred}$'s and instantaneous ![]() $\chi _w$'s: (

$\chi _w$'s: (![]() $a$)

$a$) ![]() $v_{10}^{true}$ (DNS); (

$v_{10}^{true}$ (DNS); (![]() $b$)

$b$) ![]() $v_{10}^{pred}$ from

$v_{10}^{pred}$ from ![]() $\chi _w=p_w$; (

$\chi _w=p_w$; (![]() $c$)

$c$) ![]() $v_{10}^{pred}$ from

$v_{10}^{pred}$ from ![]() $\chi _w=\partial u/\partial y \vert _w$; (

$\chi _w=\partial u/\partial y \vert _w$; (![]() $d$)

$d$) ![]() $v_{10}^{pred}$ from

$v_{10}^{pred}$ from ![]() $\chi _w=\partial w/\partial y \vert _w$; (

$\chi _w=\partial w/\partial y \vert _w$; (![]() $e$)

$e$) ![]() $p_w$; (

$p_w$; (![]() $f$)

$f$) ![]() $\partial u/\partial y \vert _w$; (

$\partial u/\partial y \vert _w$; (![]() $g$)

$g$) ![]() $\partial w/\partial y \vert _w$.

$\partial w/\partial y \vert _w$.

3.2. Multiple input and multiple output (spatial distributions of  $\chi _w$ and

$\chi _w$ and  $v_{10}^{pred}$)

$v_{10}^{pred}$)

Although the CNN in § 3.1 performed well, the reconstructed flow field ![]() $v_{10}^{pred}$ (figure 4) contained spatial oscillations that might provide numerical instability during feedback control. To understand the source of these oscillations, we (i) try even numbers of grid points

$v_{10}^{pred}$ (figure 4) contained spatial oscillations that might provide numerical instability during feedback control. To understand the source of these oscillations, we (i) try even numbers of grid points ![]() $(24 \times 12)$ for

$(24 \times 12)$ for ![]() $\chi _w$ to see if they came from zero paddings at the downsampling layers owing to the use of odd numbers

$\chi _w$ to see if they came from zero paddings at the downsampling layers owing to the use of odd numbers ![]() $(21 \times 11)$; (ii) use a continuous activation function,

$(21 \times 11)$; (ii) use a continuous activation function, ![]() $y = \tanh (x)$, since we used a discontinuous activation function (Relu),

$y = \tanh (x)$, since we used a discontinuous activation function (Relu), ![]() $y = \max (0, x)$; (iii) apply a linear regression model (Lasso) with

$y = \max (0, x)$; (iii) apply a linear regression model (Lasso) with ![]() $21 \times 11$ grid points for

$21 \times 11$ grid points for ![]() $\chi _w$. The spatial oscillations in

$\chi _w$. The spatial oscillations in ![]() $v_{10}^{pred}$ still exist for (i) and (ii), but disappear for (iii) (not shown in this paper). This may indicate that the spatial oscillations in

$v_{10}^{pred}$ still exist for (i) and (ii), but disappear for (iii) (not shown in this paper). This may indicate that the spatial oscillations in ![]() $v_{10}^{pred}$ occur because it is nonlinearly determined with

$v_{10}^{pred}$ occur because it is nonlinearly determined with ![]() $\chi _w$ by the CNN. Therefore, to obtain a smoother distribution of

$\chi _w$ by the CNN. Therefore, to obtain a smoother distribution of ![]() $v_{10}^{pred}$ in space, we consider another CNN in this section in which multiple output (a spatial distribution of

$v_{10}^{pred}$ in space, we consider another CNN in this section in which multiple output (a spatial distribution of ![]() $v_{10}^{pred}$) is produced from multiple input (a spatial distribution of

$v_{10}^{pred}$) is produced from multiple input (a spatial distribution of ![]() $\chi _w$). We call this CNN an MP-CNN, whereas the CNN in § 3.1 is called 1P-CNN.

$\chi _w$). We call this CNN an MP-CNN, whereas the CNN in § 3.1 is called 1P-CNN.

Figure 5 shows the schematic diagrams of 1P-CNN and MP-CNN. For MP-CNN, we keep the architectures of all hidden layers of 1P-CNN (17 hidden layers), and then add three additional hidden layers. The sizes of the input wall variable ![]() $\chi _w$ and output

$\chi _w$ and output ![]() $v_{10}^{pred}$ are

$v_{10}^{pred}$ are ![]() $l^+_x\times l^+_z\approx 270\times 135$ and

$l^+_x\times l^+_z\approx 270\times 135$ and ![]() $130\times 65$, respectively, and the corresponding numbers of grid points for the input and output are

$130\times 65$, respectively, and the corresponding numbers of grid points for the input and output are ![]() $32\times 32$ and

$32\times 32$ and ![]() $16\times 16$, respectively. The centre positions of the input and output are the same. The input size in space should be taken to be larger than the output size, because

$16\times 16$, respectively. The centre positions of the input and output are the same. The input size in space should be taken to be larger than the output size, because ![]() $v_{10}$ at a point is correlated with the wall variables nearby. As shown in figure 2, the maximum correlations between

$v_{10}$ at a point is correlated with the wall variables nearby. As shown in figure 2, the maximum correlations between ![]() $v_{10}$ and

$v_{10}$ and ![]() $\chi _w$'s occur at

$\chi _w$'s occur at ![]() $|\Delta x^+| \le 45$ and

$|\Delta x^+| \le 45$ and ![]() $|\Delta z^+| \le 15$, and, thus, the input size, which is twice the output size, should be enough to produce high performance of MP-CNN. The choice of the output size,

$|\Delta z^+| \le 15$, and, thus, the input size, which is twice the output size, should be enough to produce high performance of MP-CNN. The choice of the output size, ![]() $l^+_x\times l^+_z \approx 130\times 65$, is rather arbitrary, but this size is at least comparable to the size of a region of rapidly varying

$l^+_x\times l^+_z \approx 130\times 65$, is rather arbitrary, but this size is at least comparable to the size of a region of rapidly varying ![]() $v_{10}$ (see, for example, figure 4). A dataset of

$v_{10}$ (see, for example, figure 4). A dataset of ![]() $\chi _w$ and

$\chi _w$ and ![]() $v_{10}^{true}$ are obtained from direct numerical simulation of a turbulent channel flow as before. We apply the generative adversarial networks (GAN; Goodfellow et al. Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) to optimize MP-CNN, because previous studies (Ledig et al. Reference Ledig, Theis, Huszár, Caballero, Cunningham, Acosta, Aitken, Tejani, Totz and Wang2016; Lee & You Reference Lee and You2019) showed that a CNN trained with GAN produces more realistic images than using only the quadratic error as a loss function. The details about GAN and loss function are described in appendix D.

$v_{10}^{true}$ are obtained from direct numerical simulation of a turbulent channel flow as before. We apply the generative adversarial networks (GAN; Goodfellow et al. Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) to optimize MP-CNN, because previous studies (Ledig et al. Reference Ledig, Theis, Huszár, Caballero, Cunningham, Acosta, Aitken, Tejani, Totz and Wang2016; Lee & You Reference Lee and You2019) showed that a CNN trained with GAN produces more realistic images than using only the quadratic error as a loss function. The details about GAN and loss function are described in appendix D.

Figure 5. Schematics of the present convolutional neural networks: (![]() $a$) 1P-CNN; (

$a$) 1P-CNN; (![]() $b$) MP-CNN. The detail of 1P-CNN is given in figure 3. For MP-CNN, the size and number of filters are given in each box, and the dimensions of the feature maps are given next to each arrow. The grey-coloured box is the deconvolution layer where the height and width of the feature map increase twice.

$b$) MP-CNN. The detail of 1P-CNN is given in figure 3. For MP-CNN, the size and number of filters are given in each box, and the dimensions of the feature maps are given next to each arrow. The grey-coloured box is the deconvolution layer where the height and width of the feature map increase twice.

Figure 6 shows ![]() $v_{10}$,

$v_{10}$, ![]() $\partial v_{10} / \partial x$ and

$\partial v_{10} / \partial x$ and ![]() $\partial v_{10} / \partial z$ from 1P-CNN and MP-CNN with

$\partial v_{10} / \partial z$ from 1P-CNN and MP-CNN with ![]() $\chi _w=\partial u/\partial y \vert _w$, respectively, together with those from DNS. The correlation coefficients between the true (DNS) and predicted values with MP-CNN are

$\chi _w=\partial u/\partial y \vert _w$, respectively, together with those from DNS. The correlation coefficients between the true (DNS) and predicted values with MP-CNN are ![]() $\rho = 0.92$, 0.87 and 0.91 for

$\rho = 0.92$, 0.87 and 0.91 for ![]() $v_{10}$,

$v_{10}$, ![]() $\partial v_{10} / \partial x$ and

$\partial v_{10} / \partial x$ and ![]() $\partial v_{10} / \partial z$, respectively, whereas those with 1P-CNN are

$\partial v_{10} / \partial z$, respectively, whereas those with 1P-CNN are ![]() $\rho = 0.90$, 0.81 and 0.89, respectively. The results of the correlation coefficients and reconstructed fields (figure 6) indicate that the prediction performance is improved both quantitatively and qualitatively with MP-CNN. Note that oscillations observed with 1P-CNN nearly disappear with MP-CNN. For

$\rho = 0.90$, 0.81 and 0.89, respectively. The results of the correlation coefficients and reconstructed fields (figure 6) indicate that the prediction performance is improved both quantitatively and qualitatively with MP-CNN. Note that oscillations observed with 1P-CNN nearly disappear with MP-CNN. For ![]() $\chi _w=p_w$, the correlation coefficients for

$\chi _w=p_w$, the correlation coefficients for ![]() $v_{10}$,

$v_{10}$, ![]() $\partial v_{10} / \partial x$ and

$\partial v_{10} / \partial x$ and ![]() $\partial v_{10} / \partial z$ are

$\partial v_{10} / \partial z$ are ![]() $\rho = 0.96$, 0.92 and 0.96 with MP-CNN, respectively, whereas those with 1P-CNN are

$\rho = 0.96$, 0.92 and 0.96 with MP-CNN, respectively, whereas those with 1P-CNN are ![]() $\rho = 0.95$, 0.89 and 0.95, respectively. For

$\rho = 0.95$, 0.89 and 0.95, respectively. For ![]() $\chi _w=\partial w/\partial y \vert _w$,

$\chi _w=\partial w/\partial y \vert _w$, ![]() $\rho = 0.96$, 0.90 and 0.96 with MP-CNN, whereas

$\rho = 0.96$, 0.90 and 0.96 with MP-CNN, whereas ![]() $\rho = 0.95$, 0.89 and 0.95 with 1P-CNN. The reconstructions with

$\rho = 0.95$, 0.89 and 0.95 with 1P-CNN. The reconstructions with ![]() $\chi _w=p_w$ and

$\chi _w=p_w$ and ![]() $\partial w/\partial y \vert _w$ show results similar to those with

$\partial w/\partial y \vert _w$ show results similar to those with ![]() $\chi _w=\partial u/\partial y \vert _w$.

$\chi _w=\partial u/\partial y \vert _w$.

Figure 6. Contours of the instantaneous ![]() $v_{10}, \partial v_{10} / \partial x$ and

$v_{10}, \partial v_{10} / \partial x$ and ![]() $\partial v_{10} / \partial z$: (

$\partial v_{10} / \partial z$: (![]() $a$–

$a$–![]() $c$)

$c$) ![]() $v_{10}$; (

$v_{10}$; (![]() $d$–

$d$–![]() $f$)

$f$) ![]() $\partial v_{10} / \partial x$; (

$\partial v_{10} / \partial x$; (![]() $g$–

$g$–![]() $i$)

$i$) ![]() $\partial v_{10} / \partial z$. (

$\partial v_{10} / \partial z$. (![]() $a{,}d{,}g$) are from DNS, and (

$a{,}d{,}g$) are from DNS, and (![]() $b{,}e{,}h$) and (

$b{,}e{,}h$) and (![]() $c{,}f{,}i$) are from 1P-CNN and MP-CNN with

$c{,}f{,}i$) are from 1P-CNN and MP-CNN with ![]() $\chi _w =\partial u/\partial y \vert _w$, respectively. Here,

$\chi _w =\partial u/\partial y \vert _w$, respectively. Here, ![]() $\partial v_{10} / \partial x$ and

$\partial v_{10} / \partial x$ and ![]() $\partial v_{10} / \partial z$ are calculated using the second-order central difference. Flow variables are normalized with

$\partial v_{10} / \partial z$ are calculated using the second-order central difference. Flow variables are normalized with ![]() $u_{\tau _o}$ and

$u_{\tau _o}$ and ![]() $\delta$.

$\delta$.

Figure 7 shows the streamwise and spanwise energy spectra of ![]() $v_{10}^{pred}$ from

$v_{10}^{pred}$ from ![]() $\chi _w = p_w,$

$\chi _w = p_w,$![]() $\partial u/\partial y \vert _w$ and

$\partial u/\partial y \vert _w$ and ![]() $\partial w/\partial y \vert _w$, together with those of

$\partial w/\partial y \vert _w$, together with those of ![]() $v_{10}^{true}$. Overall, both 1P-CNN and MP-CNN predict the energy spectra very well. At high wavenumbers, 1P-CNN exhibits severe energy pile up both in the streamwise and spanwise wavenumbers, whereas MP-CNN reduces the energy pile up in the streamwise wavenumber and matches the spanwise energy spectrum nearly perfectly at all wavenumbers. This indicates that small-scale motions of

$v_{10}^{true}$. Overall, both 1P-CNN and MP-CNN predict the energy spectra very well. At high wavenumbers, 1P-CNN exhibits severe energy pile up both in the streamwise and spanwise wavenumbers, whereas MP-CNN reduces the energy pile up in the streamwise wavenumber and matches the spanwise energy spectrum nearly perfectly at all wavenumbers. This indicates that small-scale motions of ![]() $v_{10}$ is better predicted by MP-CNN than by 1P-CNN.

$v_{10}$ is better predicted by MP-CNN than by 1P-CNN.

Figure 7. Energy spectra of ![]() $v_{10}$ from

$v_{10}$ from ![]() $\chi _w = p_w,$

$\chi _w = p_w,$![]() $\partial u/\partial y \vert _w$, and

$\partial u/\partial y \vert _w$, and ![]() $\partial w/\partial y \vert _w$ (uncontrolled flow): (

$\partial w/\partial y \vert _w$ (uncontrolled flow): (![]() $a$–

$a$–![]() $c$) streamwise wavenumber; (

$c$) streamwise wavenumber; (![]() $d$–

$d$–![]() $f$) spanwise wavenumber.

$f$) spanwise wavenumber. ![]() $(a,d)\,\chi _w = p_w$;

$(a,d)\,\chi _w = p_w$; ![]() $(b,e)\,\chi_w=\partial u/\partial y \vert _w; (c, f)\,\chi_w=\partial w/\partial y \vert _w$. Black circle,

$(b,e)\,\chi_w=\partial u/\partial y \vert _w; (c, f)\,\chi_w=\partial w/\partial y \vert _w$. Black circle, ![]() $v_{10}^{true}$ (DNS); black line,

$v_{10}^{true}$ (DNS); black line, ![]() $v_{10}^{pred}$ with 1P-CNN; red line,

$v_{10}^{pred}$ with 1P-CNN; red line, ![]() $v_{10}^{pred}$ with MP-CNN.

$v_{10}^{pred}$ with MP-CNN.

An additional advantage of MP-CNN is a significant reduction of the computational cost. Total of ![]() $192 (x) \times 128 (z)$ prediction processes should be required to reconstruct an entire

$192 (x) \times 128 (z)$ prediction processes should be required to reconstruct an entire ![]() $v_{10}$ field with 1P-CNN, where one prediction process means one operation of the CNN to print out

$v_{10}$ field with 1P-CNN, where one prediction process means one operation of the CNN to print out ![]() $v_{10}^{pred}$ with given

$v_{10}^{pred}$ with given ![]() $\chi _w$. Because the number of the grid points of

$\chi _w$. Because the number of the grid points of ![]() $v_{10}^{pred}$ is

$v_{10}^{pred}$ is ![]() $16(x) \times 16(z)$ for MP-CNN, it requires only

$16(x) \times 16(z)$ for MP-CNN, it requires only ![]() $192/16 (x) \times 128/16 (z)$ prediction processes to reconstruct an entire field. Although the computational cost for one prediction process is greater for MP-CNN due to larger input and output sizes than for 1P-CNN, the computational time required to reconstruct the entire field is approximately 40 times smaller with MP-CNN than that with 1P-CNN.

$192/16 (x) \times 128/16 (z)$ prediction processes to reconstruct an entire field. Although the computational cost for one prediction process is greater for MP-CNN due to larger input and output sizes than for 1P-CNN, the computational time required to reconstruct the entire field is approximately 40 times smaller with MP-CNN than that with 1P-CNN.

4. Application to feedback control

In this section we apply MP-CNN to opposition control (Choi et al. Reference Choi, Moin and Kim1994) for skin-friction drag reduction, ![]() $v_{w}(x, z) = - v_{10}^{pred} (x,z)$, where

$v_{w}(x, z) = - v_{10}^{pred} (x,z)$, where ![]() $v_{10}^{pred}$ is obtained from

$v_{10}^{pred}$ is obtained from ![]() $\chi _w = p_w$,

$\chi _w = p_w$, ![]() $\partial u/\partial y \vert _w$, or

$\partial u/\partial y \vert _w$, or ![]() $\partial w/\partial y \vert _w$. We train our MP-CNN with the uncontrolled turbulent channel flow because the controlled flow data is not available in practical situations, and we conduct an off-line control in which MP-CNN is not trained during the control. This is different from the approaches taken by the previous studies (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Lorang et al. Reference Lorang, Podvin and Le Quéré2008) in which neural networks were trained with the controlled flow data from opposition control. The rationale of using the CNN trained with the uncontrolled flow for the present control was already explained in the discussion related to figure 2. The control input

$\partial w/\partial y \vert _w$. We train our MP-CNN with the uncontrolled turbulent channel flow because the controlled flow data is not available in practical situations, and we conduct an off-line control in which MP-CNN is not trained during the control. This is different from the approaches taken by the previous studies (Lee et al. Reference Lee, Kim, Babcock and Goodman1997; Lorang et al. Reference Lorang, Podvin and Le Quéré2008) in which neural networks were trained with the controlled flow data from opposition control. The rationale of using the CNN trained with the uncontrolled flow for the present control was already explained in the discussion related to figure 2. The control input ![]() $v_{w}$ is updated at every 20 computational time steps

$v_{w}$ is updated at every 20 computational time steps ![]() $\Delta t_c (= 20 \Delta t)$, where

$\Delta t_c (= 20 \Delta t)$, where ![]() $\Delta t$ is the computational time step

$\Delta t$ is the computational time step ![]() $(\Delta {t^+}=\Delta t {u_{\tau _o}^2}/\nu =0.08)$. As observed in the previous study (Lee, Kim & Choi Reference Lee, Kim and Choi1998), the control performance is not degraded even if

$(\Delta {t^+}=\Delta t {u_{\tau _o}^2}/\nu =0.08)$. As observed in the previous study (Lee, Kim & Choi Reference Lee, Kim and Choi1998), the control performance is not degraded even if ![]() $\Delta t_c$ is greater than

$\Delta t_c$ is greater than ![]() $\Delta t$, and drag-reduction rate with

$\Delta t$, and drag-reduction rate with ![]() $\Delta t_c=20 \Delta t$ differs only by 0.5 % compared to that with

$\Delta t_c=20 \Delta t$ differs only by 0.5 % compared to that with ![]() $\Delta t_c= \Delta t$ in our numerical simulation with opposition control.

$\Delta t_c= \Delta t$ in our numerical simulation with opposition control.

4.1. Control with  $v_{10}^{pred}$

$v_{10}^{pred}$

Figure 8 shows the scatter plots of ![]() $v_{10}^{true}$ (from opposition control) and

$v_{10}^{true}$ (from opposition control) and ![]() $v_{10}^{pred}$ by MP-CNN trained with the uncontrolled flow. The MP-CNN trained with the uncontrolled flow completely loses its prediction performance for

$v_{10}^{pred}$ by MP-CNN trained with the uncontrolled flow. The MP-CNN trained with the uncontrolled flow completely loses its prediction performance for ![]() $\chi _w=\partial u / \partial y \vert _w$ (figure 8

$\chi _w=\partial u / \partial y \vert _w$ (figure 8![]() $b$), whereas it still maintains approximately linear relations (but with slopes different from 1) for

$b$), whereas it still maintains approximately linear relations (but with slopes different from 1) for ![]() $\chi _w=p_w$ and

$\chi _w=p_w$ and ![]() $\partial w / \partial y \vert _w$. Therefore, we modify the magnitude of