1. Introduction and main result

Let $(S_k)_{k \in {\mathbb{N}}_0}$ be a not necessarily monotone sequence of nonnegative random variables. Define the counting process $(N(t))_{t \geq 0}$ by

\begin{equation*} N(t)\,:\!=\sum_{k \geq 0}\textbf{1}_{\{S_k \leq t\}}, \qquad t \geq 0,\end{equation*}

where $\textbf{1}_A=1$ if the event $A$ holds and 0 otherwise. Throughout the paper we always assume that $N(t)<\infty$ almost surely (a.s.) for $t \geq 0$.

Denote by $D\,:\!=D[0,\infty)$ the Skorokhod space of right-continuous real-valued functions which are defined on $[0,\infty)$ and have finite limits from the left at each positive point. For a function $h\in D$, the random process $X\,:\!=(X(t))_{t \geq 0}$, which is the main object of our investigation, is given by

\begin{equation*}X(t)\,:\!=\sum_{k \geq0}{h(t-S_k)}\textbf{1}_{\{S_k \leq t\}}= \int_{[0,\,t]}{h(t-y)}\textrm{d}N(y), \qquad t \geq 0.\end{equation*}

We call $X$ a general shot noise process, for no assumptions are imposed apart from $N(t)<\infty$ a.s. Plainly, $X\in D$ a.s.
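
For illustration only, the definitions of $N(t)$ and $X(t)$ can be evaluated directly when finitely many points are given. The following Python sketch is not part of the argument of the paper: the function names, the sample points, and the response function $h(x)=x^2$ are hypothetical choices, and the sums run over the finitely many points supplied rather than over all $k\geq 0$.

```python
def counting_process(points, t):
    """N(t): the number of points S_k with S_k <= t."""
    return sum(1 for s in points if s <= t)


def shot_noise(points, h, t):
    """X(t): the sum of h(t - S_k) over all points with S_k <= t."""
    return sum(h(t - s) for s in points if s <= t)


# Hypothetical input: the sequence need not be monotone.
points = [0.0, 2.5, 1.0, 4.0]


def h(x):
    # Hypothetical response function, chosen only for the example.
    return x ** 2


n_at_3 = counting_process(points, 3.0)
x_at_3 = shot_noise(points, h, 3.0)
```

With the constant response function $h\equiv 1$ the same routine returns $N(t)$, in agreement with the integral representation of $X$.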

Denote by $Y_1\,:\!=(Y_1(t))_{t\geq 0}$, $Y_2\,:\!=(Y_2(t))_{t\geq0},\ldots$ independent and identically distributed (i.i.d.) random processes with paths in $D$. Assume that, for $k\in{\mathbb{N}}_0$, $Y_{k+1}$ is independent of $(S_0,\ldots, S_k)$. In particular, the case of complete independence of $(Y_j)_{j\in{\mathbb{N}}}$ and $(S_k)_{k\in{\mathbb{N}}_0}$ is not excluded. Set

\begin{equation*}Y(t)\,:\!=\sum_{k\geq0}Y_{k+1}(t-S_k)\textbf{1}_{\{S_k\leq t\}},\qquad t\geq 0\end{equation*}

and call $Y\,:\!=(Y(t))_{t\geq 0}$ a random process with immigration at random times. The interpretation is that associated with the $k$th immigrant arriving at the system at time $S_{k-1}$ is the process $Y_k$ which defines a model-dependent ‘characteristic’ of the $k$th immigrant. For instance, $Y_k(t-S_{k-1})$ may be the fitness of the $k$th immigrant at time $t$. The value of $Y(t)$ is then given by the sum of ‘characteristics’ of all immigrants arriving at the system up to and including time $t$. Assume that the function $g(t)\,:\!={\mathrm{E}}[ Y_1(t)]$ is finite for all $t\geq 0$, not identically 0, and that $g\in D$. To investigate weak convergence of the process $Y$, properly normalized and centered, it is natural to use the decomposition

\begin{equation}Y(t)=\sum_{k\geq 0}(Y_{k+1}(t-S_k)-g(t-S_k))\textbf{1}_{\{S_k\leq t\}}+\sum_{k\geq 0}g(t-S_k)\textbf{1}_{\{S_k\leq t\}}, \qquad t\geq 0.\end{equation}

For fixed $t>0$, while the first summand is the terminal value of a martingale, the second is the value at time $t$ of a general shot noise process. The summands should be treated separately, for each of these requires a specific approach. Weak convergence of the first summand in (1) is investigated in [Reference Dong and Iksanov11].
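
The decomposition (1) is a purely algebraic splitting, which the following Python sketch makes concrete. It is an illustration under stated assumptions rather than part of the paper: the linear paths `paths[k]` (playing the role of $Y_{k+1}$), their slopes, the mean function $g(x)=x$, and the arrival times are all hypothetical, and the sums are restricted to the finitely many points supplied.

```python
def immigration_process(points, paths, t):
    """Y(t): the sum of Y_{k+1}(t - S_k) over all k with S_k <= t."""
    return sum(paths[k](t - s) for k, s in enumerate(points) if s <= t)


def decompose(points, paths, g, t):
    """Return the centered summand and the shot noise summand of (1)."""
    centered = sum(
        paths[k](t - s) - g(t - s) for k, s in enumerate(points) if s <= t
    )
    shot = sum(g(t - s) for s in points if s <= t)
    return centered, shot


# Hypothetical data: linear paths x -> c * x whose slopes average to 1,
# so that g(x) = x is used as the (assumed) mean function.
slopes = [0.5, 1.5, 1.0]
paths = [lambda x, c=c: c * x for c in slopes]
points = [0.0, 1.0, 3.0]


def g(x):
    return x


y_at_2 = immigration_process(points, paths, 2.0)
centered_at_2, shot_at_2 = decompose(points, paths, g, 2.0)
```

In this example the two summands add up to $Y(2)$, and the second summand is exactly the shot noise process $X$ with response function $h=g$.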

In the present paper we aim to prove a functional limit theorem for a general shot noise process X under natural assumptions. Besides being of independent interest, our findings pave the way towards controlling the asymptotic behavior of the second summand in (1). These, taken together with the results from [Reference Dong and Iksanov11], should eventually lead to understanding of the asymptotics of processes Y.

A rich source of random processes $Y$ with immigration at random times are queueing systems and various branching processes with or without immigration. For example, particular instances of random variables $Y(t)$ are given by the number of the $k$th-generation individuals ($k\geq 2$) with positions $\leq t$ in a branching random walk; the number of customers served up to and including time $t$; or the number of busy servers at time $t$ in a GEN/G/$\infty$ queueing system, where GEN means that the arrival of customers is regulated by a general point process. Nowadays, rather popular objects of research are queueing systems in which the input process is more complicated than the renewal process, for instance, a Cox process (also known as a doubly stochastic Poisson process) [Reference Chernavskaya8] or a Hawkes process [Reference Daw and Pender10, Reference Koops, Saxena, Boxma and Mandjes28], and branching processes with immigration governed by a process which is more general than the renewal process, for instance, an inhomogeneous Poisson process [Reference Yakovlev and Yanev33] or a Cox process [Reference Butkovsky7]. Note that some authors have investigated the processes $X$, $Y$, or the like from a purely mathematical viewpoint. An incomplete list of relevant publications includes [Reference Daley9, Reference Rice30, Reference Schmidt31, Reference Westcott32] and the recent article [Reference Pang and Zhou29]. On the other hand, we stress that the results obtained in the aforementioned papers do not overlap with ours.

The present work was preceded by several articles [Reference Iksanov16, Reference Iksanov, Kabluchko and Marynych20, Reference Iksanov, Kabluchko, Marynych and Shevchenko21, Reference Iksanov, Marynych and Meiners22, Reference Kabluchko and Marynych27] in which weak convergence of renewal shot noise processes has been investigated. The latter is a particular case of processes $X$ in which the input sequence $(S_k)_{k\in{\mathbb{N}}_0}$ is a standard random walk. Development of elements of the weak convergence theory for renewal shot noise processes was motivated by and effectively used for the asymptotic analysis of various characteristics of several random regenerative structures: the order of random permutations [Reference Gnedin, Iksanov and Marynych12], the number of zero and nonzero blocks of weak random compositions [Reference Alsmeyer, Iksanov and Marynych2, Reference Iksanov, Marynych and Vatutin25], the number of collisions in coalescents with multiple collisions [Reference Gnedin, Iksanov, Marynych and Möhle13], the number of busy servers in a G/G/$\infty$ queueing system [Reference Iksanov, Jedidi and Bouzzefour18], random processes with immigration at the epochs of a renewal process [Reference Iksanov, Marynych and Meiners23, Reference Iksanov, Marynych and Meiners24]. Chapter 3 of [Reference Iksanov17] provides a survey of the results obtained in the aforementioned articles, pointers to relevant literature, and a detailed discussion of possible applications.

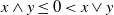

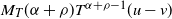

To formulate our main result we need additional notation. Denote by $W_\alpha\,:\!=(W_\alpha(u))_{u\geq 0}$ a centered Gaussian process which is a.s. locally Hölder continuous with exponent $\alpha> 0$ and satisfies $W_\alpha(0)=0$ a.s. In particular, for all $T>0$, all $0\leq x,y\leq T$, and some a.s. finite random variable $M_T$,

\begin{equation}|W_\alpha(x)-W_\alpha (y)| \leq M_T |x-y|^\alpha.\end{equation}

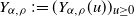

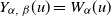

Define the random process $Y_{\alpha,\rho}\,:\!=(Y_{\alpha,\rho}(u))_{u\geq 0}$ by

\begin{equation} Y_{\alpha,\rho}(u)\,:\!= \rho \int_0^u (u-y)^{\rho-1}W_{\alpha}(y)\textrm{d}y, \quad u>0,\qquad Y_{\alpha,\rho}(0)\,:\!=\lim_{u\to +0}Y_{\alpha,\rho}(u),\end{equation}

when $\rho>0$, and by

\begin{equation}\begin{split}Y_{\alpha,\rho}(u)\,:\!=u^\rho W_\alpha(u) + |\rho| \int_0^u(W_{\alpha}(u) - W_{\alpha}(u-y)) y^{\rho-1}\textrm{d}y, \qquad u>0, \\ Y_{\alpha,\rho}(0)\,:\!=\lim_{u\to+0}Y_{\alpha,\rho}(u), \end{split}\end{equation}

when $-\alpha<\rho<0$. Also, put $Y_{\alpha,0}=W_\alpha$. Using (2), we conclude that $Y_{\alpha,\rho}(0)=0$ a.s. whenever $\rho>-\alpha$.

Convergence of the integrals in (3) and (4) and a.s. continuity of the processes $Y_{\alpha,\rho}$ will be proved in Lemma 2.1 below. When $W_\alpha$ is a Brownian motion (so that $\alpha=1/2-\varepsilon$ for any $\varepsilon\in (0,1/2)$), the process $Y_{\alpha,\rho}$ can be represented as a Skorokhod integral,

\begin{equation*} Y_{\alpha,\rho}(u)\,:\!=\int_{[0,\,u]}(u-y)^\rho\textrm{d}W_\alpha(y), \qquad u \geq 0.\end{equation*}

The process so defined is called a Riemann–Liouville process or fractionally integrated Brownian motion with exponent $\rho$ for $\rho>-1/2$. Since these processes appear several times in our presentation we reserve a special notation for them, $R_\rho$. When $W_\alpha$ is a more general Gaussian process satisfying the standing assumptions, the process $Y_{\alpha,\rho}$ may be called a fractionally integrated Gaussian process. Note that, for positive integer $\rho$, the process $Y_{\alpha,\rho}$ is, up to a multiplicative constant, the $\rho$-times integrated process $W_{\alpha}$. This can be easily checked with the help of integration by parts.
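
As a worked instance of the last remark, consider the two smallest integer exponents in (3). The computation below is only an illustration; the integration variable $s$ is introduced here and does not appear elsewhere in the paper. For $\rho=1$, formula (3) reads $Y_{\alpha,1}(u)=\int_0^u W_\alpha(y)\textrm{d}y$. For $\rho=2$, changing the order of integration (equivalently, integrating by parts) gives

\begin{equation*}\int_0^u\int_0^s W_\alpha(y)\textrm{d}y\,\textrm{d}s=\int_0^u (u-y)W_\alpha(y)\textrm{d}y=\frac{1}{2}\,Y_{\alpha,2}(u), \qquad u>0,\end{equation*}

so that $Y_{\alpha,2}$ is twice the twice-integrated process $W_\alpha$.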

Throughout the paper we assume that the spaces $D$ and $D\times D$ are endowed with the $J_1$-topology, and we denote weak convergence in these spaces by $\stackrel{J_{1}}\implies$ and $\stackrel{J_1(D\times D)}\implies$, respectively. Comprehensive information concerning the $J_1$-topology can be found in [Reference Billingsley4, Reference Jacod and Shiryaev26]. In what follows we use the notation ${\mathbb{R}}^+\,:\!=[0,\infty)$.

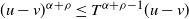

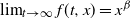

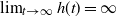

Theorem 1.1. Let  $h \in D$ be an eventually nondecreasing function of bounded variation which is regularly varying at

$h \in D$ be an eventually nondecreasing function of bounded variation which is regularly varying at  $\infty$ of index

$\infty$ of index  $\beta\geq 0$ (i.e.,

$\beta\geq 0$ (i.e.,  $\lim_{t\to\infty}(h(tx)/h(t))=x^\beta$ for all

$\lim_{t\to\infty}(h(tx)/h(t))=x^\beta$ for all  $x>0$). Assume that

$x>0$). Assume that  $\lim_{t\to\infty}h(t)=\infty$ when

$\lim_{t\to\infty}h(t)=\infty$ when  $\beta=0$, and that

$\beta=0$, and that

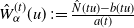

\begin{equation}\frac{N(t\cdot\!)-b(t\cdot\!)}{a(t)} \stackrel{J_{1}} \implies W_{\alpha}(\cdot), \qquad t \to \infty,\end{equation}

\begin{equation}\frac{N(t\cdot\!)-b(t\cdot\!)}{a(t)} \stackrel{J_{1}} \implies W_{\alpha}(\cdot), \qquad t \to \infty,\end{equation}

where $a\,:\, {\mathbb{R}}^+\to{\mathbb{R}}^+$ is regularly varying at $\infty$ of positive index, and $b\,:\,{\mathbb{R}}^+\to{\mathbb{R}}^+$ is a nondecreasing function. Then

\begin{equation*}\frac{X(t\cdot\!)-\int_{[0,\,t \cdot]}h(t \cdot-y){\rm d} b(y)}{a(t) h(t)}\stackrel{J_{1}} \implies Y_{\alpha,\beta}(\cdot), \qquad t \to\infty.\end{equation*}

Remark 1.1. The proof given below reveals that the assumption $\lim_{t\to\infty}h(t)=\infty$ when $\beta=0$ is not needed if $h$ is nondecreasing on ${\mathbb{R}}^+$ rather than eventually nondecreasing.

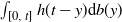

Since $b$ is nondecreasing and $h$ is a locally bounded and almost everywhere continuous function (in view of $h \in D$), the integral $\int_{[0,\,t]}h(t-y){\rm d} b(y)$ exists as a Riemann–Stieltjes integral.

The remainder of this article is organized as follows. In Section 2 we investigate local Hölder continuity of the limit processes in Theorem 1.1. In Section 3 we give five specializations of Theorem 1.1 for particular sequences $(S_k)_{k\in{\mathbb{N}}_0}$. Finally, we prove Theorem 1.1 in Section 4.

2. Hölder continuity of the limit processes

For the subsequent presentation, it is convenient to define the process  $W_\alpha$ on the whole line. To this end, put

$W_\alpha$ on the whole line. To this end, put  $W_\alpha(x)=0$ for

$W_\alpha(x)=0$ for  $x<0$. The right-hand side of (4) can then be given in an equivalent form,

$x<0$. The right-hand side of (4) can then be given in an equivalent form,

\begin{equation*}Y_{\alpha,\rho}(u)= |\rho| \int_0^\infty (W_\alpha (u) -W_\alpha(u-y)) y^{\rho-1}\textrm{d}y,\qquad u>0.\end{equation*}

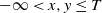

\begin{equation*}Y_{\alpha,\rho}(u)= |\rho| \int_0^\infty (W_\alpha (u) -W_\alpha(u-y)) y^{\rho-1}\textrm{d}y,\qquad u>0.\end{equation*} It is important for us that the formula (2) still holds true for negative x, y. More precisely, we claim that, for all  $T>0$, all

$T>0$, all  $-\infty<x,y\leq T$, and the same random variable

$-\infty<x,y\leq T$, and the same random variable  $M_T$ as in (2),

$M_T$ as in (2),

\begin{equation}|W_\alpha(x)-W_\alpha(y)|\leq M_T|x-y|^\alpha.\end{equation}

\begin{equation}|W_\alpha(x)-W_\alpha(y)|\leq M_T|x-y|^\alpha.\end{equation}

This inequality is trivially satisfied in the case $x\vee y\leq 0$, and follows from (2) in the case $x\wedge y\geq 0$. Assume now that $x\wedge y\leq 0<x\vee y$. Then $|W_\alpha(x)-W_\alpha(y)|=|W_\alpha(x\vee y)|\leq M_T (x\vee y)^\alpha\leq M_T|x-y|^\alpha$. Here, the first inequality is a consequence of (2) with $y=0$, and the second holds because $0<x\vee y\leq x\vee y-x\wedge y=|x-y|$.

Lemma 2.1. Let $\rho>-\alpha$. The following assertions hold:

(i) $|Y_{\alpha,\rho}(u)|<\infty$ a.s. for each fixed $u>0$;

(ii) the process $Y_{\alpha,\rho}$ is a.s. locally Hölder continuous with exponent $\min(1, \, \alpha + \rho)$ if $\alpha+\rho \neq 1$, and with arbitrary positive exponent less than 1 if $\alpha+\rho=1$; more precisely, in the latter situation we have, for any $T^\ast>T$,

\begin{equation*}\sup_{0 \leq u \neq v \leq T}\frac{|Y_{\alpha,\rho}(u)-Y_{\alpha,\rho}(v)|}{|u-v| \log (T^\ast|u-v|^{-1})} < \infty \quad \text{{\rm a.s.}}\end{equation*}

Proof. The case $\rho=0$ is trivial. Fix $T>0$.

(i) Using (2) we obtain, for all $u\in [0,T]$,

\begin{multline*}|Y_{\alpha,\rho}(u)|\leq \rho \int_0^u(u-y)^{\rho-1}|W_\alpha(y)|{\rm d}y \leq M_T\rho \int_0^u(u-y)^{\rho-1}y^\alpha {\rm d}y\\=M_T\rho{\rm B}(\rho,\alpha+1)u^{\rho+\alpha} < \infty\quad \text{a.s.}\end{multline*}

in the case $\rho>0$, and

\begin{multline*}|Y_{\alpha,\rho}(u)|\leq u^\rho |W_\alpha(u)| + |\rho| \int_0^u|W_\alpha (u) - W_\alpha(u-y)| y^{\rho-1}\textrm{d}y \\ \leq M_Tu^{\rho+\alpha} + |\rho| M_T(\rho+\alpha)^{-1}u^{\rho+\alpha}= M_T\alpha (\rho + \alpha)^{-1} u^{\rho+\alpha} < \infty\quad\text{a.s.}\end{multline*}

in the case $-\alpha<\rho<0$.
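
The closed form used in the case $\rho>0$ is the beta integral $\int_0^u(u-y)^{\rho-1}y^\alpha{\rm d}y={\rm B}(\rho,\alpha+1)u^{\rho+\alpha}$. As a sanity check, not as part of the proof, the identity can be verified numerically. The Python sketch below uses a plain midpoint rule; the helper names and the parameter values $\rho=3/2$, $\alpha=1/2$, $u=2$ are hypothetical and were chosen so that the integrand stays bounded.

```python
import math


def beta(a, b):
    """Euler beta function expressed through the gamma function."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)


def midpoint_integral(f, lo, hi, n=20000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    step = (hi - lo) / n
    return step * sum(f(lo + (i + 0.5) * step) for i in range(n))


# Hypothetical parameter values used only for this check.
rho, alpha, u = 1.5, 0.5, 2.0

# Left-hand side: numerical value of the integral of (u - y)^(rho - 1) * y^alpha.
lhs = midpoint_integral(lambda y: (u - y) ** (rho - 1) * y ** alpha, 0.0, u)

# Right-hand side: B(rho, alpha + 1) * u^(rho + alpha).
rhs = beta(rho, alpha + 1) * u ** (rho + alpha)
```

For these parameters the integrand is $\sqrt{(2-y)y}$, so both sides equal the area of a half-disc of radius 1, that is, $\pi/2$.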

(ii) By symmetry it is enough to investigate the case $0\leq v<u\leq T$, and this is tacitly assumed throughout the proof.

Assume first that $-\alpha < \rho < 0$. Appealing to (6) and (2), we conclude that, for $v>0$,

\begin{align*}|\rho|^{-1}\left|Y_{\alpha,\rho}(u) - Y_{\alpha,\rho}(v)\right|& = \left| \int_0^\infty (W_\alpha(u) - W_\alpha(u-y) - W_\alpha(v) + W_\alpha(v-y))y^{\rho-1}\textrm{d}y \right| \\[3pt]& \leq \int_0^{u-v} \left|W_\alpha(u) - W_\alpha(u-y)\right|y^{\rho-1}\textrm{d}y \\[3pt]& \quad + \int_0^{u-v} \left|W_\alpha(v) - W_\alpha(v-y)\right|y^{\rho-1}\textrm{d}y \\[3pt]& \quad + \int_{u-v}^\infty \left|W_\alpha(u) - W_\alpha(v)\right|y^{\rho-1}\textrm{d}y \\[3pt]& \quad + \int_{u-v}^\infty \left|W_\alpha(u-y) - W_\alpha(v-y)\right|y^{\rho-1}\textrm{d}y \\[3pt]& \leq 2M_T \left( \int_0^{u-v} y^{\rho - 1 + \alpha}\textrm{d}y+ (u-v)^\alpha \int_{u-v}^\infty y^{\rho - 1}\textrm{d}y\right) \\[3pt]& = 2M_T \alpha (|\rho|(\rho+\alpha))^{-1}(u-v)^{\rho+\alpha}\quad\text{a.s.}\end{align*}

We already know from the proof of (i) that a similar inequality holds when $v=0$. Thus, the claim of (ii) has been proved in the case $-\alpha<\rho<0$.

Assume now that $\rho \geq 1$. We infer with the help of (2) that

\begin{align*}|Y_{\alpha,\rho}&(u) - Y_{\alpha,\rho}(v)| \\[3pt] & = \left|\rho \int_0^v((u-y)^{\rho-1}-(v-y)^{\rho-1})W_{\alpha}(y)\textrm{d}y+ \rho \int_v^u(u-y)^{\rho-1} W_{\alpha}(y)\textrm{d}y \right| \\[3pt]& \leq \rho \int_0^v((u-y)^{\rho-1}-(v-y)^{\rho-1})\left|W_{\alpha}(y)\right|\textrm{d}y+ \rho \int_v^u(u-y)^{\rho-1} \left|W_{\alpha}(y)\right|\textrm{d}y \\[3pt]& \leq \rho M_T \int_0^v((u-y)^{\rho-1}-(v-y)^{\rho-1}) y^\alpha\textrm{d}y+ \rho M_T \int_v^u(u-y)^{\rho-1} y^\alpha \textrm{d}y \\[3pt]& = \rho M_T \int_0^u (u-y)^{\rho-1} y^\alpha \textrm{d}y- \rho M_T \int_0^v (v-y)^{\rho-1} y^\alpha \textrm{d}y \\[3pt]& = \rho M_T {\rm B}(\rho,\alpha+1) (u^{\rho + \alpha}-v^{\rho +\alpha})\\[3pt]& \leq \rho (\rho+\alpha)M_T {\rm B}(\rho,\alpha+1)T^{\rho+\alpha-1}(u-v) \quad\text{a.s.},\end{align*}

where the last inequality follows from the mean value theorem for differentiable functions.

It remains to investigate the case  $0 < \rho < 1$. We shall use the following decomposition:

$0 < \rho < 1$. We shall use the following decomposition:

\begin{align*}\left|Y_{\alpha,\rho}(u) - Y_{\alpha,\rho}(v)\right| & =\left|\rho \int_0^u(u-y)^{\rho-1} W_{\alpha}(y)\textrm{d}y- \rho \int_0^v(v-y)^{\rho-1} W_{\alpha}(y)\textrm{d}y \right| \\& = \left | \rho \int_0^v (W_{\alpha}(v) - W_{\alpha}(v-y))(y^{\rho-1}-(y+u-v)^{\rho-1})\textrm{d}y \right. \\& \quad - \left. \rho \int_0^{u-v}(W_\alpha(v)-W_{\alpha}(u-y))y^{\rho-1}\textrm{d}y+ W_\alpha(v)(u^\rho - v^\rho) \right| \\& \leq I_1 + I_2+I_3,\end{align*}

\begin{align*}\left|Y_{\alpha,\rho}(u) - Y_{\alpha,\rho}(v)\right| & =\left|\rho \int_0^u(u-y)^{\rho-1} W_{\alpha}(y)\textrm{d}y- \rho \int_0^v(v-y)^{\rho-1} W_{\alpha}(y)\textrm{d}y \right| \\& = \left | \rho \int_0^v (W_{\alpha}(v) - W_{\alpha}(v-y))(y^{\rho-1}-(y+u-v)^{\rho-1})\textrm{d}y \right. \\& \quad - \left. \rho \int_0^{u-v}(W_\alpha(v)-W_{\alpha}(u-y))y^{\rho-1}\textrm{d}y+ W_\alpha(v)(u^\rho - v^\rho) \right| \\& \leq I_1 + I_2+I_3,\end{align*}where

\begin{align*} I_1 & \,:\!= \rho \int_0^v |W_{\alpha}(v) - W_{\alpha}(v-y)|(y^{\rho-1}-(y+u-v)^{\rho-1})\textrm{d}y, \\ I_2 & \,:\!= \rho \int_0^{u-v} |W_\alpha(v)-W_{\alpha}(u-y)|y^{\rho-1}\textrm{d}y, \\ I_3 & \,:\!=|W_\alpha(v)|(u^\rho - v^\rho).\end{align*}

\begin{align*} I_1 & \,:\!= \rho \int_0^v |W_{\alpha}(v) - W_{\alpha}(v-y)|(y^{\rho-1}-(y+u-v)^{\rho-1})\textrm{d}y, \\ I_2 & \,:\!= \rho \int_0^{u-v} |W_\alpha(v)-W_{\alpha}(u-y)|y^{\rho-1}\textrm{d}y, \\ I_3 & \,:\!=|W_\alpha(v)|(u^\rho - v^\rho).\end{align*}

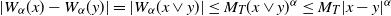

The summand $I_1$ can be estimated as

\begin{align*}I_1 &\leq \rho M_T \int_0^v y^\alpha(y^{\rho-1}-(y+u-v)^{\rho-1})\textrm{d}y \\& = \rho M_T (u-v)^{\alpha + \rho} \int_0^{v/(u-v)}t^\alpha(t^{\rho-1}-(t+1)^{\rho-1})\textrm{d}t.\end{align*}

Using the inequality $x^{\rho-1} - (x+1)^{\rho-1} \leq(1-\rho)x^{\rho-2}$ for $x>0$ gives

\begin{align*} I_1 & \leq \rho (1-\rho)M_T (u-v)^{\alpha + \rho} \int_0^{v/(u-v)} t^{\alpha+\rho-2}{\rm d}t \\ & = \rho (1-\rho)(\alpha+\rho-1)^{-1}M_T v^{\alpha+\rho-1} (u-v)\\ & \leq \rho (1-\rho)(\alpha+\rho-1)^{-1}M_T T^{\alpha+\rho-1} (u-v) \end{align*}

in the case $\alpha+\rho>1$, and

\begin{align*}I_1 & \leq \rho M_T(u-v)^{\alpha+\rho} \left(\int_0^1t^\alpha(t^{\rho-1}-(t+1)^{\rho-1})\textrm{d}t+ \int_1^\infty t^\alpha(t^{\rho-1}-(t+1)^{\rho-1})\textrm{d}t\right) \\& \leq \rho M_T(u-v)^{\alpha+\rho} \left(\int_0^1 t^{\alpha +\rho-1}\textrm{d}t+(1 - \rho) \int_1^\infty t^{\alpha + \rho-2} \textrm{d}t\right) \\& = \rho M_T \left(\frac{1}{\alpha+\rho} +\frac{1-\rho}{1-\alpha-\rho}\right) (u-v)^{\alpha+\rho}\end{align*}

in the case $0<\alpha+\rho<1$. Also,

\begin{align*}I_1 & \leq \rho M_T(u-v) \int_0^{v/(u-v)} \left(1-\left(\frac{t}{t+1}\right)^\alpha \right)\textrm{d}t \\& \leq \rho M_T(u-v) \int_0^{v/(u-v)} \frac{\textrm{d}t}{t+1} \\& = \rho M_T(u-v) \ln \frac{u}{u-v} \\& \leq \rho M_T(u-v) \ln \frac{T}{u-v}\end{align*}

in the case $\alpha+\rho=1$.
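
The elementary inequality $x^{\rho-1} - (x+1)^{\rho-1} \leq(1-\rho)x^{\rho-2}$ used in the estimates of $I_1$ can be justified as follows; this short derivation is added for the reader's convenience, and the intermediate point $\xi$ is notation introduced only here. For $0<\rho<1$ and $x>0$, the mean value theorem applied to $s\mapsto s^{\rho-1}$ on $[x,x+1]$ yields some $\xi\in(x,x+1)$ with

\begin{equation*}x^{\rho-1}-(x+1)^{\rho-1}=(1-\rho)\xi^{\rho-2}\leq (1-\rho)x^{\rho-2},\end{equation*}

where the inequality holds because $\rho-2<0$ and $\xi>x$.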

Further,

\begin{equation*}I_2\leq \rho M_T \int_0^{u-v}(u-v-y)^\alpha y^{\rho-1}{\rm d}y=\rho M_T {\rm B}(\rho,\alpha+1)(u-v)^{\alpha+\rho}.\end{equation*}

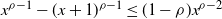

\begin{equation*}I_2\leq \rho M_T \int_0^{u-v}(u-v-y)^\alpha y^{\rho-1}{\rm d}y=\rho M_T {\rm B}(\rho,\alpha+1)(u-v)^{\alpha+\rho}.\end{equation*} In the case  $\alpha+\rho>1$ the inequality

$\alpha+\rho>1$ the inequality  $(u-v)^{\alpha+\rho}\leq T^{\alpha+\rho-1}(u-v)$ has to be additionally used.

$(u-v)^{\alpha+\rho}\leq T^{\alpha+\rho-1}(u-v)$ has to be additionally used.

Finally,

\begin{equation*}I_3\leq M_T v^\alpha(u^\rho-v^\rho)\leq M_T(u^{\alpha+\rho}-v^{\alpha+\rho}).\end{equation*}

The right-hand side does not exceed $M_T(u-v)^{\alpha+\rho}$ in the case $0<\alpha+\rho\leq 1$, in view of the subadditivity of $x\mapsto x^{\alpha+\rho}$ on $[0,\infty)$, and $M_T(\alpha+\rho)T^{\alpha+\rho-1}(u-v)$ in the case $\alpha+\rho>1$, by the mean value theorem.

The proof of Lemma 2.1 is complete.
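The chain of bounds used for $I_3$ can be sanity-checked numerically. The following is a minimal sketch, not part of the proof: it drops the common factor $M_T$ and tests the inequalities on a finite grid $0\leq v\leq u\leq T$. The function name `i3_bounds_hold`, the grid size, and the parameter values in the usage note are illustrative assumptions.

```python
def i3_bounds_hold(alpha, rho, T, n=60, tol=1e-12):
    """Check, on a grid 0 <= v <= u <= T, the two inequalities
    v^alpha (u^rho - v^rho) <= u^(alpha+rho) - v^(alpha+rho) <= bound,
    where bound = (u-v)^(alpha+rho) if alpha+rho <= 1 and
    bound = (alpha+rho) T^(alpha+rho-1) (u-v) otherwise.
    The common factor M_T is omitted; alpha, rho > 0 is assumed."""
    gamma = alpha + rho
    for i in range(n + 1):
        u = T * i / n
        for j in range(i + 1):
            v = T * j / n
            first = v ** alpha * (u ** rho - v ** rho)
            second = u ** gamma - v ** gamma
            if first > second + tol:
                return False
            if gamma <= 1:
                bound = (u - v) ** gamma          # subadditivity case
            else:
                bound = gamma * T ** (gamma - 1) * (u - v)  # mean value theorem case
            if second > bound + tol:
                return False
    return True
```

For instance, `i3_bounds_hold(0.3, 0.5, 2.0)` exercises the case $\alpha+\rho\leq 1$ and `i3_bounds_hold(0.8, 0.7, 3.0)` the case $\alpha+\rho>1$. A grid check of this kind only illustrates the inequalities; it does not replace the argument above.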

3. Applications of Theorem 1.1

In this section we give five examples of particular sequences $(S_k)_{k\in{\mathbb{N}}_0}$ which satisfy the limit relation (5) with four different Gaussian processes $W_\alpha$. Throughout the section we always assume, without further notice, that h satisfies the assumptions of Theorem 1.1.

In the case where the sequence $(S_k)_{k\in{\mathbb{N}}_0}$ is a.s. nondecreasing, the counting process $(N(t))_{t\geq 0}$ is nothing other than a generalized inverse function for $(S_k)$, that is,

\begin{equation}N(t)=\inf\{k\in{\mathbb{N}}\,:\, S_k>t\}\quad\text{a.s.,}\qquad t\geq 0.\end{equation}

In view of this, if a functional limit theorem for $S_{[ut]}$ in the $J_1$-topology on D holds, and the limit process is a.s. continuous, then the corresponding functional limit theorem for N(ut) in the $J_1$-topology on D is a simple consequence. A detailed discussion of this fact can be found, for instance, in [Reference Iglehart and Whitt15]. If the sequence $(S_k)_{k\in{\mathbb{N}}_0}$ is not monotone (as, for instance, in Subsection 3.2 below), then equality (7) is no longer true, and the proof of a functional limit theorem for N(ut) requires an additional specific argument in every particular case.
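The difference between the monotone and the non-monotone case can be seen on a toy example. The sketch below is illustrative only: the helper names `counting_process` and `first_exceedance_index`, the finite lists, and the convention for truncated sequences are assumptions made for the example, not constructions from the paper.

```python
def counting_process(points, t):
    """N(t): the number of indices k >= 0 with S_k <= t."""
    return sum(1 for s in points if s <= t)


def first_exceedance_index(points, t):
    """inf{k >= 1 : S_k > t}, the right-hand side of the generalized-inverse
    identity. Returns len(points) if no listed point exceeds t, which is an
    artifact of truncating the sequence to a finite list."""
    for k in range(1, len(points)):
        if points[k] > t:
            return k
    return len(points)


# A nondecreasing sequence and a rearrangement of the same points.
monotone = [0.0, 0.5, 1.2, 1.2, 3.0]
shuffled = [0.0, 3.0, 0.5, 1.2, 1.2]

# Monotone case: both quantities agree at t = 1.2 (both equal 4).
agree = counting_process(monotone, 1.2) == first_exceedance_index(monotone, 1.2)

# Non-monotone case: N(1.2) is still 4, but the first exceedance index is 1.
differ = counting_process(shuffled, 1.2) != first_exceedance_index(shuffled, 1.2)
```

Both lists have $S_0=0\leq t$, so the identity is tested in the range where it is meaningful. The rearranged list shows why a separate argument is needed once monotonicity is lost: the counting process depends only on the set of points, whereas the first exceedance index depends on their order.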

3.1. Delayed standard random walk

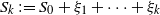

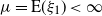

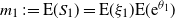

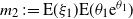

Let  $\xi_1,\xi_2,\ldots$ be i.i.d. nonnegative random variables which are independent of a nonnegative random variable

$\xi_1,\xi_2,\ldots$ be i.i.d. nonnegative random variables which are independent of a nonnegative random variable  $S_0$. The random sequence

$S_0$. The random sequence  $(S_k)_{k\in{\mathbb{N}}_0}$ defined by

$(S_k)_{k\in{\mathbb{N}}_0}$ defined by  $S_k\,:\!=S_0+\xi_1+\cdots+\xi_k$ for

$S_k\,:\!=S_0+\xi_1+\cdots+\xi_k$ for  $k\in{\mathbb{N}}_0$ is called a delayed standard random walk provided that

$k\in{\mathbb{N}}_0$ is called a delayed standard random walk provided that  ${\mathrm{P}}\{S_0=0\}<1$. In the case

${\mathrm{P}}\{S_0=0\}<1$. In the case  $S_0=0$ a.s., the term zero-delayed standard random walk is used. It is well known (see, for instance, [Reference Bingham5, Theorem 1b(i)]) that

$S_0=0$ a.s., the term zero-delayed standard random walk is used. It is well known (see, for instance, [Reference Bingham5, Theorem 1b(i)]) that

(a) if

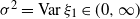

$\sigma^2\,:\!={\rm Var}\,\xi_1\in (0,\infty)$, then

(8)where

$\sigma^2\,:\!={\rm Var}\,\xi_1\in (0,\infty)$, then

(8)where \begin{equation}\frac{N(t\cdot\!)-\mu^{-1}t(\cdot)}{(\sigma^2\mu^{-3}t)^{1/2}}\stackrel{J_{1}} \implies B(\cdot) , \qquad t \to \infty,\end{equation}

\begin{equation}\frac{N(t\cdot\!)-\mu^{-1}t(\cdot)}{(\sigma^2\mu^{-3}t)^{1/2}}\stackrel{J_{1}} \implies B(\cdot) , \qquad t \to \infty,\end{equation} $\mu\,:\!={\mathrm{E}} (\xi_1) < \infty$, and

$\mu\,:\!={\mathrm{E}} (\xi_1) < \infty$, and  $(B(u))_{u\geq 0}$ is a standard Brownian motion (so that relation (5) holds with

$(B(u))_{u\geq 0}$ is a standard Brownian motion (so that relation (5) holds with  $b(t)=\mu^{-1}t$ and

$b(t)=\mu^{-1}t$ and  $a(t)=(\sigma^2\mu^{-3}t)^{1/2}$);

$a(t)=(\sigma^2\mu^{-3}t)^{1/2}$);(b) if

(9)for some L slowly varying at \begin{equation} \sigma^2=\infty \quad \text{and} \quad \int_{[0,\,x]}y^2{\mathrm{P}}\{\xi_1\in {\rm d}y\}\sim L(x),\qquad x\to\infty\end{equation}

\begin{equation} \sigma^2=\infty \quad \text{and} \quad \int_{[0,\,x]}y^2{\mathrm{P}}\{\xi_1\in {\rm d}y\}\sim L(x),\qquad x\to\infty\end{equation} $\infty$, then

(10)where c is a positive measurable function satisfying

$\infty$, then

(10)where c is a positive measurable function satisfying \begin{equation}\frac{N(t\cdot\!)-\mu^{-1}t(\cdot)}{\mu^{-3/2}c(t)} \stackrel{J_{1}}\implies B(\cdot) , \qquad t \to \infty,\end{equation}

\begin{equation}\frac{N(t\cdot\!)-\mu^{-1}t(\cdot)}{\mu^{-3/2}c(t)} \stackrel{J_{1}}\implies B(\cdot) , \qquad t \to \infty,\end{equation} $\lim_{t\to\infty}c(t)^{-2}tL(c(t))=1$ (so that relation (5) holds with

$\lim_{t\to\infty}c(t)^{-2}tL(c(t))=1$ (so that relation (5) holds with  $b(t)=\mu^{-1}t$ and

$b(t)=\mu^{-1}t$ and  $a(t)=\mu^{-3/2}c(t)$; since c is asymptotically inverse for

$a(t)=\mu^{-3/2}c(t)$; since c is asymptotically inverse for  $t\mapsto t^2/L(t)$, an application of [Reference Bingham, Goldie and Teugels6, Proposition 1.5.15] enables us to conclude that c, and hence also a, are regularly varying at

$t\mapsto t^2/L(t)$, an application of [Reference Bingham, Goldie and Teugels6, Proposition 1.5.15] enables us to conclude that c, and hence also a, are regularly varying at  $\infty$ of index

$\infty$ of index  $1/2$).

$1/2$).

Thus, according to Theorem 1.1, we have

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot}{h(y)\textrm{d}y}}{(\sigma^2\mu^{-3}t)^{1/2} h(t)} \stackrel{J_{1}} \implies\beta\int_0^{(\cdot)}(\!\cdot-y)^{\beta-1}B(y)\textrm{d}y=R_\beta(\cdot),\qquad t \to \infty,\end{equation*}

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot}{h(y)\textrm{d}y}}{(\sigma^2\mu^{-3}t)^{1/2} h(t)} \stackrel{J_{1}} \implies\beta\int_0^{(\cdot)}(\!\cdot-y)^{\beta-1}B(y)\textrm{d}y=R_\beta(\cdot),\qquad t \to \infty,\end{equation*}

provided that  $\sigma^2\in (0,\infty)$ (in the case

$\sigma^2\in (0,\infty)$ (in the case  $\beta=0$, the limit process is

$\beta=0$, the limit process is  $R_0=B$), and

$R_0=B$), and

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot}{h(y)\textrm{d}y}}{\mu^{-3/2}c(t)h(t)} \stackrel{J_{1}} \implies R_\beta(\cdot), \qquad t \to \infty\end{equation*}

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot}{h(y)\textrm{d}y}}{\mu^{-3/2}c(t)h(t)} \stackrel{J_{1}} \implies R_\beta(\cdot), \qquad t \to \infty\end{equation*}

provided that conditions (9) hold. In particular, irrespective of whether the variance is finite or not, the limit process is a fractionally integrated Brownian motion with parameter  $\beta$. As far as zero-delayed standard random walks are concerned, the aforementioned results can be found in Theorem 1.1 (A1, A2) of [Reference Iksanov16].

$\beta$. As far as zero-delayed standard random walks are concerned, the aforementioned results can be found in Theorem 1.1 (A1, A2) of [Reference Iksanov16].

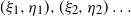

3.2. Perturbed random walks

Let $(\xi_1, \eta_1),(\xi_2,\eta_2),\ldots$ be i.i.d. random vectors with nonnegative coordinates. Put

\begin{equation*}S_1\,:\!=\eta_1,\quad S_n\,:\!=\xi_1+\cdots+\xi_{n-1}+\eta_n,\qquad n\geq 2.\end{equation*}

The so defined sequence $(S_n)_{n\in{\mathbb{N}}}$ is called a perturbed random walk. Various properties of perturbed random walks are discussed in [Reference Iksanov17].
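The definition of a perturbed random walk translates directly into a short loop. The sketch below is only an illustration: the function name `perturbed_random_walk` and the sample values are hypothetical, and the inputs are plain lists standing in for realizations of $(\xi_n)$ and $(\eta_n)$.

```python
def perturbed_random_walk(xis, etas):
    """Return [S_1, ..., S_n] with S_1 = eta_1 and
    S_n = xi_1 + ... + xi_{n-1} + eta_n for n >= 2."""
    points = []
    partial_sum = 0.0  # xi_1 + ... + xi_{n-1}, empty sum for n = 1
    for xi, eta in zip(xis, etas):
        points.append(partial_sum + eta)
        partial_sum += xi
    return points


# Illustrative realization: a large perturbation eta_2 breaks monotonicity.
sample = perturbed_random_walk([1.0, 1.0, 1.0], [0.5, 3.0, 0.2])
```

With these inputs the points are $S_1=0.5$, $S_2=1+3=4$, and $S_3=2+0.2=2.2$, so the sequence is not monotone even though all $\xi_n$ and $\eta_n$ are nonnegative.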

Assume that  $\sigma^2={\rm Var}\,\xi_1\in (0,\infty)$ and

$\sigma^2={\rm Var}\,\xi_1\in (0,\infty)$ and  ${\mathrm{E}}(\eta^a)<\infty$ for some

${\mathrm{E}}(\eta^a)<\infty$ for some  $a>0$. Put

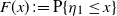

$a>0$. Put  $F(x)\,:\!={\mathrm{P}}\{\eta_1\leq x\}$ for

$F(x)\,:\!={\mathrm{P}}\{\eta_1\leq x\}$ for  $x\in{\mathbb{R}}$. According to [Reference Alsmeyer, Iksanov and Marynych2, Theorem 3.2],

$x\in{\mathbb{R}}$. According to [Reference Alsmeyer, Iksanov and Marynych2, Theorem 3.2],

\begin{equation*}\frac{N(t\cdot\!)-\mu^{-1}\int_0^{t\cdot}F(y){\rm d}y}{\sqrt{\sigma^2\mu^{-3}t}}\stackrel{J_{1}} \implies B(\cdot), \qquad t \to \infty,\end{equation*}

\begin{equation*}\frac{N(t\cdot\!)-\mu^{-1}\int_0^{t\cdot}F(y){\rm d}y}{\sqrt{\sigma^2\mu^{-3}t}}\stackrel{J_{1}} \implies B(\cdot), \qquad t \to \infty,\end{equation*}

where  $\mu={\mathrm{E}}(\xi_1)<\infty$. Therefore, by Theorem 1.1,

$\mu={\mathrm{E}}(\xi_1)<\infty$. Therefore, by Theorem 1.1,

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot} h(y)F(y){\rm d}y}{(\sigma^2\mu^{-3}t)^{1/2} h(t)} \stackrel{J_{1}} \implies R_\beta(\cdot),\qquad t \to \infty.\end{equation*}

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot} h(y)F(y){\rm d}y}{(\sigma^2\mu^{-3}t)^{1/2} h(t)} \stackrel{J_{1}} \implies R_\beta(\cdot),\qquad t \to \infty.\end{equation*}3.3. Random walks with long memory

Let  $\xi_1,\xi_2,\ldots$ be i.i.d. positive random variables with finite mean. Assume that these are independent of random variables

$\xi_1,\xi_2,\ldots$ be i.i.d. positive random variables with finite mean. Assume that these are independent of random variables  $\theta_1, \theta_2, \ldots$ which form a centered stationary Gaussian sequence with

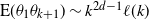

$\theta_1, \theta_2, \ldots$ which form a centered stationary Gaussian sequence with  ${\mathrm{E}} (\theta_1\theta_{k+1})\sim k^{2d-1}\ell(k)$ as

${\mathrm{E}} (\theta_1\theta_{k+1})\sim k^{2d-1}\ell(k)$ as  $k\to\infty$ for some

$k\to\infty$ for some  $d\in (0,1/2)$. Put

$d\in (0,1/2)$. Put  $S_0\,:\!=0$ and

$S_0\,:\!=0$ and

\begin{equation*}S_n-S_{n-1}=\xi_n {\rm e}^{\theta_n},\qquad n\in{\mathbb{N}}.\end{equation*}

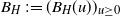

\begin{equation*}S_n-S_{n-1}=\xi_n {\rm e}^{\theta_n},\qquad n\in{\mathbb{N}}.\end{equation*} Recall that a fractional Brownian motion with Hurst index  $H\in(0,1)$ is a centered Gaussian process

$H\in(0,1)$ is a centered Gaussian process  $B_H\,:\!=(B_H(u))_{u\geq 0}$ with covariance

$B_H\,:\!=(B_H(u))_{u\geq 0}$ with covariance  ${\mathrm{E}}(B_H(u)B_H(v))=2^{-1}(u^{2H}+v^{2H}-(u-v)^{2H})$ for

${\mathrm{E}}(B_H(u)B_H(v))=2^{-1}(u^{2H}+v^{2H}-(u-v)^{2H})$ for  $u,v\geq 0$. This process has stationary increments and is self-similar of index H. Therefore, for any

$u,v\geq 0$. This process has stationary increments and is self-similar of index H. Therefore, for any  $p>0$,

$p>0$,

\begin{equation*}{\mathrm{E}}|B_H(u)-B_H(v)|^p=(u-v)^{Hp}{\mathrm{E}} |B_H(1)|^p,\qquad u,v\geq 0.\end{equation*}

\begin{equation*}{\mathrm{E}}|B_H(u)-B_H(v)|^p=(u-v)^{Hp}{\mathrm{E}} |B_H(1)|^p,\qquad u,v\geq 0.\end{equation*}

According to the Kolmogorov–Chentsov sufficient conditions, there exists a version of $B_H$ (which we also denote by $B_H$) which is a.s. locally Hölder continuous with any exponent smaller than $H-1/p$ for any $p>1/H$, and hence with any exponent smaller than H.
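The moment identity for the increments rests on the fact that the covariance of a fractional Brownian motion gives ${\rm Var}(B_H(u)-B_H(v))=|u-v|^{2H}$. The sketch below checks this from the covariance formula alone; the function names `fbm_cov` and `increment_variance` are illustrative assumptions, and the covariance is written with $|u-v|$ so that it is valid for any order of $u$ and $v$.

```python
def fbm_cov(u, v, H):
    """Covariance E[B_H(u) B_H(v)] of a fractional Brownian motion, u, v >= 0."""
    return 0.5 * (u ** (2 * H) + v ** (2 * H) - abs(u - v) ** (2 * H))


def increment_variance(u, v, H):
    """Var(B_H(u) - B_H(v)) computed from the covariance function only."""
    return fbm_cov(u, u, H) + fbm_cov(v, v, H) - 2.0 * fbm_cov(u, v, H)
```

For $H=1/2$ the covariance reduces to $\min(u,v)$, the covariance of a standard Brownian motion; for example `fbm_cov(2.0, 0.5, 0.5)` equals `0.5` up to rounding. For general $H$, `increment_variance(u, v, H)` agrees with `abs(u - v) ** (2 * H)`, which is the case $p=2$ of the displayed identity because ${\mathrm{E}}|B_H(1)|^2=1$.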

According to [Reference Beran, Feng, Ghosh and Kulik3, Example 4.25, p. 357],

\begin{equation*}\frac{N(t\cdot\!)-m_1^{-1}t(\cdot)}{(d(2d+1))^{-1/2}m_1^{-3/2-d}m_2t^{d+1/2}(\ell(t))^{1/2}} \stackrel{J_{1}} \implies B_{d+1/2}(\cdot),\qquad t\to\infty,\end{equation*}

\begin{equation*}\frac{N(t\cdot\!)-m_1^{-1}t(\cdot)}{(d(2d+1))^{-1/2}m_1^{-3/2-d}m_2t^{d+1/2}(\ell(t))^{1/2}} \stackrel{J_{1}} \implies B_{d+1/2}(\cdot),\qquad t\to\infty,\end{equation*}

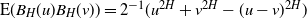

where  $m_1\,:\!={\mathrm{E}} (S_1)={\mathrm{E}}(\xi_1){\mathrm{E}} ({\rm e}^{\theta_1})$ and

$m_1\,:\!={\mathrm{E}} (S_1)={\mathrm{E}}(\xi_1){\mathrm{E}} ({\rm e}^{\theta_1})$ and  $m_2\,:\!={\mathrm{E}}(\xi_1){\mathrm{E}}(\theta_1{\rm e}^{\theta_1})$. An application of Theorem 1.1 yields, as

$m_2\,:\!={\mathrm{E}}(\xi_1){\mathrm{E}}(\theta_1{\rm e}^{\theta_1})$. An application of Theorem 1.1 yields, as  $t\to\infty$,

$t\to\infty$,

\begin{equation*}\frac{X(t\cdot\!)-m_1^{-1}\int_0^{t \cdot}{h(y)\textrm{d}y}}{(d(2d+1))^{-1/2}m_1^{-3/2-d}m_2t^{d+1/2}(\ell(t))^{1/2}h(t)} \stackrel{J_{1}} \implies \beta\int_0^{(\cdot)}(\!\cdot-y)^{\beta-1}B_{d+1/2}(y)\textrm{d}y\end{equation*}

\begin{equation*}\frac{X(t\cdot\!)-m_1^{-1}\int_0^{t \cdot}{h(y)\textrm{d}y}}{(d(2d+1))^{-1/2}m_1^{-3/2-d}m_2t^{d+1/2}(\ell(t))^{1/2}h(t)} \stackrel{J_{1}} \implies \beta\int_0^{(\cdot)}(\!\cdot-y)^{\beta-1}B_{d+1/2}(y)\textrm{d}y\end{equation*}

if  $\beta>0$. If

$\beta>0$. If  $\beta=0$, the limit process is

$\beta=0$, the limit process is  $B_{d+1/2}$.

$B_{d+1/2}$.

3.4. Counting process in a branching random walk

Assume that the random variables $\xi_k$ defined in Subsection 3.1 are a.s. positive. For some integer $k\geq 2$, we take in the role of N(t) the number of the kth-generation individuals with positions $\leq t$ in a branching random walk in which the first-generation individuals are located at the points $S_1=\xi_1$, $S_2=\xi_1+\xi_2$, $\ldots$ (a more precise definition can be found in [Reference Iksanov and Kabluchko19, Section 1.2]).

Assume that $\sigma^2={\rm Var}\,\xi_1 \in (0,\infty)$. Theorem 1.3 in [Reference Iksanov and Kabluchko19] implies that

\begin{equation*}\frac{N(t\cdot\!)-(t\cdot\!)^k/(k!\mu^k)}{((k-1)!)^{-1}\sqrt{\sigma^2\mu^{-2k-1}t^{2k-1}}\,} \stackrel{J_{1}} \implies R_{k-1}(\cdot), \qquad t \to\infty,\end{equation*}

where $\mu={\mathrm{E}}(\xi_1)<\infty$. Of course, for $k=1$ this limit relation is also valid and amounts to (8), as it must be. By Lemma 2.1, the process $R_{k-1}$ is a.s. locally Hölder continuous with any positive exponent smaller than $k-1/2$. Thus, Theorem 1.1 applies and gives

\begin{align*}\frac{X(t\cdot\!)-((k-1)!\mu^k)^{-1}\int_0^{t\cdot}{h(t\cdot-y)y^{k-1}\textrm{d}y}}{((k-1)!)^{-1}\sqrt{\sigma^2\mu^{-2k-1}t^{2k-1}}h(t)} \stackrel{J_{1}} \implies & \beta \int_0^{(\cdot)}(\!\cdot-y)^{\beta-1}R_{k-1}(y)\textrm{d}y\\&=(k-1){\rm B}(k-1,\beta+1) R_{\beta+k-1}(\cdot)\end{align*}

as $t\to\infty$, where ${\rm B}(\!\cdot,\cdot\!)$ is the beta function. The latter equality can be checked as follows: for $u>0$,

\begin{align*}\beta \int_0^u (u-y)^{\beta-1}R_{k-1}(y)\textrm{d}y &= \beta\int_0^u (u-y)^{\beta-1}(k-1) \int_0^y (y-x)^{k-2}B(x)\textrm{d}x\textrm{d}y \\&= \beta(k-1) \int_0^u B(x) \int_x^u (u-y)^{\beta-1}(y-x)^{k-2}\textrm{d}y\textrm{d}x \\&= \beta(k-1) \int_0^u B(x)\int_0^{u-x}(u-x-y)^{\beta-1}y^{k-2}\textrm{d}y\textrm{d}x \\&= \beta(k-1){\rm B}(k-1, \beta) \int_0^u (u-x)^{\beta+k-2} B(x) \textrm{d}x \\&= \frac{\beta(k-1){\rm B}(k-1, \beta)}{\beta+k-1}R_{\beta+k-1}(u)\\&= (k-1){\rm B}(k-1,\beta+1)R_{\beta+k-1}(u).\end{align*}
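The last step of this computation uses the beta-function identity $\beta\,{\rm B}(k-1,\beta)/(\beta+k-1)={\rm B}(k-1,\beta+1)$. A minimal numerical check is sketched below, assuming the standard representation of the beta function through gamma functions; the helper names `beta_fn`, `constant_before` and `constant_after` are illustrative.

```python
import math


def beta_fn(a, b):
    """Beta function B(a, b) = Gamma(a) Gamma(b) / Gamma(a + b), a, b > 0."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)


def constant_before(k, beta):
    """The constant beta (k-1) B(k-1, beta) / (beta + k - 1)."""
    return beta * (k - 1) * beta_fn(k - 1, beta) / (beta + k - 1)


def constant_after(k, beta):
    """The constant (k-1) B(k-1, beta + 1)."""
    return (k - 1) * beta_fn(k - 1, beta + 1)
```

For any integer $k\geq 2$ and any $\beta>0$ the two constants coincide up to rounding, for example `math.isclose(constant_before(3, 0.7), constant_after(3, 0.7))` holds.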

3.5. Inhomogeneous Poisson process

Let $(N(t))_{t\geq 0}$ be an inhomogeneous Poisson process with ${\mathrm{E}}[N(t)]=m(t)$ for a nondecreasing function m(t) satisfying

\begin{equation}m(t) \sim c t^w,\qquad t\to\infty,\end{equation}

where $c,w>0$. Without loss of generality we can identify $(N(t))_{t\geq 0}$ with the process $(N^\ast(m(t)))_{t\geq 0}$, where $(N^\ast(t))_{t\geq 0}$ is a homogeneous Poisson process with ${\mathrm{E}}[ N^\ast(t)]=t$, $t\geq 0$. According to (8),

\begin{equation}\frac{N^\ast (t\cdot\!)-(t \cdot\!)}{t^{1/2}} \stackrel{J_{1}}\implies B(\cdot) , \qquad t \to \infty.\end{equation}

Dini’s theorem in combination with (11) ensures that

\begin{equation}\lim_{t\to\infty}\sup_{u\in[0,\,T]}\Big|\frac{m(tu)}{ct^w}-u^w\Big|=0\end{equation}

for all $T>0$. It is known (see, for instance, [Reference Gut14, Lemma 2.3, p. 159]) that the composition mapping $(x,\varphi)\mapsto (x\circ \varphi)$ is continuous on continuous functions $x\,{:}\,{\mathbb{R}}_+ \to {\mathbb{R}}$ and continuous nondecreasing functions $\varphi\,:\, {\mathbb{R}}_+\to {\mathbb{R}}_+$. Using this fact in conjunction with (12), (13), and the continuous mapping theorem, we infer that

\begin{equation*}\frac{N(t\cdot\!)-m(t\cdot\!)}{(ct^w)^{1/2}}\stackrel{J_{1}} \implies B((\cdot)^w),\qquad t\to\infty.\end{equation*}

Thus, the limit process is a time-changed Brownian motion. An application of Theorem 1.1 yields

\begin{equation*}\frac{X(t\cdot\!)-\mu^{-1}\int_0^{t \cdot} h(y){\rm d}m(y)}{(ct^w)^{1/2}h(t)}\stackrel{J_{1}} \implies\beta\int_0^{(\cdot)}(\!\cdot-y)^{\beta-1}B(y^w){\rm d}y,\qquad t \to\infty\end{equation*}

if $\beta>0$. If $\beta=0$, the limit process is $B((\cdot)^w)$.
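The identification of $N$ with the time-changed process $N^\ast(m(\cdot))$ also gives a simple way to generate the points of $N$. The sketch below is a simplified illustration under a stated assumption: it takes $m(t)=ct^w$ exactly, whereas the text only requires $m(t)\sim ct^w$. The function name `inhomogeneous_poisson_points` and the parameter values in the usage note are hypothetical.

```python
import random


def inhomogeneous_poisson_points(c, w, horizon, rng):
    """Points of N(t) = N*(m(t)) on [0, horizon], assuming m(t) = c * t**w.

    N* is a unit-rate homogeneous Poisson process with arrival times
    Gamma_1 < Gamma_2 < ... (partial sums of standard exponentials).
    Since N*(m(t)) counts the Gamma_k <= c * t**w, the points of N are
    (Gamma_k / c) ** (1 / w)."""
    points = []
    limit = c * horizon ** w           # m(horizon)
    gamma_k = rng.expovariate(1.0)     # first arrival time of N*
    while gamma_k <= limit:
        points.append((gamma_k / c) ** (1.0 / w))
        gamma_k += rng.expovariate(1.0)
    return points
```

A call such as `inhomogeneous_poisson_points(2.0, 1.5, 10.0, random.Random(0))` returns an increasing list of points in $[0,10]$ whose expected number is $m(10)=2\cdot 10^{1.5}$. The seeded generator is used only to make the illustration reproducible.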

4. Proof of Theorem 1.1

To prove weak convergence of finite-dimensional distributions we need an auxiliary lemma.

Lemma 1. Let $f\,{:}\,{\mathbb{R}}^+ \times {\mathbb{R}}^+ \to (0,\infty)$ be a function which is nondecreasing in the second coordinate and satisfies $\lim_{t \to\infty}f(t,x) =x^{\beta}$ for all $x>0$ and some $\beta \geq 0$. For $t,x\geq 0$, set

\begin{align*}Z(t,x) & \,:\!= \int_{[0,\,x]}W_\alpha(x-y) {\rm d}_y f(t,y), \\Z(x) & \,:\!=\int_{[0,\,x]}W_\alpha(x-y) {\rm d}y^\beta \quad \text{for } \beta > 0 , \\ Z(x) & \,:\!= W_\alpha(x) \quad \text{for } \beta =0.\end{align*}

Then, for any $u,v > 0$,

\begin{equation*}\lim_{t \to \infty} {\mathrm{E}}[Z(t,u)Z(t,v)] = {\mathrm{E}} [Z(u)Z(v)].\end{equation*}

Proof. Fix any $u,v>0$. For each $t>0$, denote by $Q_t^{(u)}$ and $Q_t^{(v)}$ independent random variables with the distribution functions

\begin{equation*}{\mathrm{P}}\{Q_t^{(u)} \leq y\} =\begin{cases}0, & \text{if } y<0, \\\frac{f(t,y)}{f(t,u)}, & \text{if } y \in [0,u],\\1, & \text{if } y>u ;\end{cases}\qquad {\mathrm{P}}\{Q_t^{(v)} \leq y\} =\begin{cases}0, & \text{if } y<0, \\\frac{f(t,y)}{f(t,v)}, & \text{if } y \in [0,v],\\1, & \text{if } y>v.\end{cases}\end{equation*}

Also, denote by $Q^{(u)}$ and $Q^{(v)}$ independent random variables with the distribution functions

\begin{equation*}{\mathrm{P}}\{Q^{(u)} \leq y\} =\begin{cases}0, & \text{if } y<0, \\(\frac{y}{u})^{\beta}, & \text{if } y \in [0,u],\\1, & \text{if } y>u ;\end{cases}\qquad {\mathrm{P}}\{Q^{(v)} \leq y\} =\begin{cases}0, & \text{if } y<0, \\(\frac{y}{v})^{\beta}, & \text{if } y \in [0,v],\\1, & \text{if } y>v.\end{cases}\end{equation*}

By assumption,

\begin{equation*}(Q_t^{(u)}, Q_t^{(v)}) \stackrel{{\rm d}} \to(Q^{(u)}, Q^{(v)}), \qquad t \to \infty.\end{equation*}

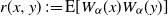

\begin{equation*}(Q_t^{(u)}, Q_t^{(v)}) \stackrel{{\rm d}} \to(Q^{(u)}, Q^{(v)}), \qquad t \to \infty.\end{equation*} Define the function  $r(x,y)\,:\!={\mathrm{E}} [W_\alpha(x)W_\alpha(y)]$ on

$r(x,y)\,:\!={\mathrm{E}} [W_\alpha(x)W_\alpha(y)]$ on  ${\mathbb{R}}^+\times {\mathbb{R}}^+$. Using the a.s. continuity of

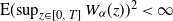

${\mathbb{R}}^+\times {\mathbb{R}}^+$. Using the a.s. continuity of  $W_\alpha$, Lebesgue’s dominated convergence theorem, and the fact that, according to [Reference Adler1, Theorem 3.2, p. 63],

$W_\alpha$, Lebesgue’s dominated convergence theorem, and the fact that, according to [Reference Adler1, Theorem 3.2, p. 63],  ${\mathrm{E}}(\!\sup_{z\in [0,\,T]}W_\alpha(z))^2<\infty$, we conclude that r is continuous, hence also bounded on

${\mathrm{E}}(\!\sup_{z\in [0,\,T]}W_\alpha(z))^2<\infty$, we conclude that r is continuous, hence also bounded on  $[0,T] \times [0,T]$ for all

$[0,T] \times [0,T]$ for all  $T>0$. This entails

$T>0$. This entails

\begin{equation*} r(u-Q_t^{(u)}, v-Q_t^{(v)}) \stackrel{{\rm d}}{\longrightarrow} r(u-Q^{(u)}, v-Q^{(v)}),\qquad t \to \infty,\end{equation*}

\begin{equation*} r(u-Q_t^{(u)}, v-Q_t^{(v)}) \stackrel{{\rm d}}{\longrightarrow} r(u-Q^{(u)}, v-Q^{(v)}),\qquad t \to \infty,\end{equation*}and thus

\begin{equation*}\lim_{t \to \infty} {\mathrm{E}} [r(u-Q_t^{(u)},v-Q_t^{(v)})] = {\mathrm{E}}[ r(u-Q^{(u)}, v-Q^{(v)})]\end{equation*}

\begin{equation*}\lim_{t \to \infty} {\mathrm{E}} [r(u-Q_t^{(u)},v-Q_t^{(v)})] = {\mathrm{E}}[ r(u-Q^{(u)}, v-Q^{(v)})]\end{equation*}by Lebesgue’s dominated convergence theorem. Further,

\begin{align*}{\mathrm{E}}[& Z(t,u)Z(t,v)] \\& = \,f(t,u)f(t,v)\int_{[0,\,u]}\int_{[0,\,v]} {\mathrm{E}}[ W_\alpha(u-y)W_\alpha(v-z)] \textrm{d}_y \left(\frac{f(t,y)}{f(t,u)}\right) \textrm{d}_z \left(\frac{f(t,z)}{f(t,v)}\right) \\& = \,f(t,u)f(t,v){\mathrm{E}}[ r (u-Q_t^{(u)}, v-Q_t^{(v)})]\underset{t \to \infty}{\longrightarrow} (uv)^{\beta} {\mathrm{E}}[ r(u-Q^{(u)}, v-Q^{(v)})].\end{align*}

It remains to note that while in the case $\beta > 0$ we have

\begin{align*}(uv)^{\beta}& {\mathrm{E}}[ r (u-Q^{(u)}, v-Q^{(v)})]\\ & = \int_{[0,\,u]}\int_{[0,\,v]} r(u-y, v-z) \textrm{d}_y (u^{\beta} {\mathrm{P}}\{Q^{(u)} \leq y\} ) \textrm{d}_z (v^{\beta} {\mathrm{P}}\{Q^{(v)} \leq z\}) \\& = \int_{[0,\,u]}\int_{[0,\,v]}r(u-y, v-z) \textrm{d} y^{\beta}\textrm{d} z^{\beta}={\mathrm{E}} \int_{[0,\,u]}W_\alpha(u-y) \textrm{d} y^{\beta} \int_{[0,\,v]}W_\alpha(v-z) \textrm{d} z^{\beta} \\& = {\mathrm{E}} [Z(u)Z(v)],\end{align*}

in the case $\beta = 0$ we have

\begin{equation*}(uv)^{\beta} {\mathrm{E}}[ r (u-Q^{(u)}, v-Q^{(v)})] = r(u,v) = {\mathrm{E}}[ W_\alpha(u)W_\alpha(v)] = {\mathrm{E}}[ Z(u)Z(v)].\end{equation*}

The proof of Lemma 1 is complete.

Proof of Theorem 1.1. Since h is eventually nondecreasing, there exists $t_0>0$ such that h(t) is nondecreasing for $t>t_0$. Being a regularly varying function of nonnegative index, h is eventually positive. Hence, increasing $t_0$ if needed, we can ensure that $h(t)>0$ for $t>t_0$. We first show that the behavior of the function of bounded variation h on $[0,t_0]$ does not affect the weak convergence of the general shot noise process. Once this is done, we can assume, without loss of generality, that $h(0)=0$ and that h is nondecreasing on ${\mathbb{R}}^+$.

Integrating by parts yields

\begin{align}X(tu)-\int_{[0,\,tu]}h(tu-y){\rm d}b(y) & = \int_{[0,\,u]}h(t(u-y)){\rm d}_y(N(ty)-b(ty))\notag\\ & = (h(tu)-h((tu)-))(N(0)-b(0))\notag\\ & \quad + \int_{(0,\,u]}(N(ty)-b(ty)){\rm d}_y(-h(t(u-y))) . \end{align}

For all $T>0$,

\begin{equation*}\frac{\sup_{u\in[0,\,T]}|h(tu)-h((tu)-)||N(0)-b(0)|}{a(t)h(t)}\leq\frac{h(tT)}{h(t)}\frac{|N(0)-b(0)|}{a(t)} {\overset{{\rm P}}{\to}} 0,\qquad t\to\infty,\end{equation*}

because h is regularly varying at $\infty$. Denote by $\mathrm{V}_0^{t_0}(h)$ the total variation of h on $[0,t_0]$. By assumption, $\mathrm{V}_0^{t_0}(h)<\infty$. For all $T>0$,

\begin{multline*}\frac{\sup_{u\in [0,\,T]}\int_{[u-t_0/t,\,u)}(N(ty)-b(ty)){\rm d}_y(-h(t(u-y)))}{a(t)h(t)}\\ \leq \frac{\sup_{u\in[0,\,T]}|N(tu)-b(tu)|}{a(t)}\frac{\mathrm{V}_0^{t_0}(h)}{h(t)}{\overset{{\rm P}}{\to}}0,\qquad t\to\infty.\end{multline*}

The convergence to 0 is justified by the facts that, according to (5), the first factor converges in distribution to $\sup_{u\in [0,\,T]}|W_\alpha(u)|$, whereas the second trivially converges to 0. Recall that, when $\beta=0$, $\lim_{t\to\infty}h(t)=\infty$ holds by assumption, whereas when $\beta>0$, it holds automatically. Thus, as was claimed, while investigating the asymptotic behavior of the second summand on the right-hand side of (14) we can and do assume that $h(0)=0$ and that h is nondecreasing on ${\mathbb{R}}^+$.

Skorokhod’s representation theorem ensures that there exist versions $(\hat N(t))_{t \geq 0}$ and $(\hat W_\alpha(t))_{t \geq0}$ of the processes $(N(t))_{t \geq 0}$ and $(W_\alpha(t))_{t\geq 0}$ such that, for all $T>0$,

\begin{equation}\lim_{t \to \infty} \sup_{u \in [0,\,T]} |\hat W_\alpha^{(t)}(u)-\hat W_\alpha(u)|=0 \quad \text{a.s.},\end{equation}

where $\hat W_\alpha^{(t)}(u)\,:\!=\frac{\hat N(tu)-b(tu)}{a(t)}$ for $t>0$ and $u\geq 0$. For each $t>0$, set

\begin{align*}h_t(x) & \,:\!= h(tx)/h(t), \qquad x\geq 0 , \\X_t(u) & \,:\!= (a(t))^{-1}\int_{(0,\,u]}(N(ty)-b(ty)){\rm d}_y(-h_t(u-y)),\qquad u\geq 0, \\\hat X^\ast_t(u) & \,:\!= \int_{(0,\,u]} \hat W_\alpha^{(t)}(y)\textrm{d}_y (-h_t(u-y)),\qquad u\geq 0.\end{align*}

The distributions of the processes $(X_t(u))_{u\geq 0}$ and $(\hat X_t^\ast(u))_{u\geq 0}$ are the same. Hence, it remains to check that

\begin{equation}\lim_{t \to \infty} \int_{(0,\,u]} (\hat W_\alpha^{(t)}(y)- \hat W_\alpha(y))\textrm{d}_y (-h_t(u-y))= 0\quad \text{a.s.}\end{equation}

in the $J_1$-topology on D, and

\begin{equation} \int_{(0,\,\cdot]} W_\alpha(y)\textrm{d} (-h_t(\!\cdot-y))\stackrel{J_{1}} \implies Y_{\alpha,\beta}(\cdot), \qquad t \to\infty.\end{equation}
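
The roles of (16) and (17) can be made explicit by the following decomposition, given here as a sketch which uses only the definitions above: for $u\geq 0$,

\begin{equation*}\hat X^\ast_t(u)=\int_{(0,\,u]} (\hat W_\alpha^{(t)}(y)- \hat W_\alpha(y))\textrm{d}_y (-h_t(u-y))+\int_{(0,\,u]} \hat W_\alpha(y)\textrm{d}_y (-h_t(u-y)).\end{equation*}

By (16), the first summand is asymptotically negligible, whereas the second summand has the same distribution as the left-hand side of (17), because $\hat W_\alpha$ is a version of $W_\alpha$. A standard Slutsky-type argument then yields the convergence of $\hat X^\ast_t$, hence of $X_t$, to $Y_{\alpha,\beta}$.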


In view of (15) and the monotonicity of $h_t$, we have, for all $T>0$, as $t \to \infty$,

\begin{multline*}\sup_{u \in [0,\,T]} \left|\int_{(0,\,u]} \left(\hat W_\alpha^{(t)}(y)-\hat W_\alpha(y)\right)\textrm{d}_y (-h_t(u-y))\right| \\\leq \sup_{u \in [0,\,T]} \left|\hat W_\alpha^{(t)}(u)-\hat W_\alpha(u)\right| h_t(T) \longrightarrow 0\quad \text{a.s.},\end{multline*}
which proves (16).
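
The inequality in the preceding display rests on an elementary bound for the total mass of the integrating measure. A sketch, assuming (as arranged above) that $h(0)=0$ and that h is nondecreasing, so that $h_t$ shares these properties: for $u\in[0,T]$,

\begin{equation*}\int_{(0,\,u]}\textrm{d}_y (-h_t(u-y))\leq h_t(u)-h_t(0)=h_t(u)\leq h_t(T).\end{equation*}

Since $\lim_{t\to\infty}h_t(T)=T^\beta<\infty$ by the regular variation of h, the factor $h_t(T)$ stays bounded, and the convergence to 0 follows from (15).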

Since $W_\alpha$ is a Gaussian process, the convergence of the finite-dimensional distributions in (17), which is equivalent to the convergence of covariances, follows from Lemma 1. While applying the lemma we use the equalities

\begin{equation*}Y_{\alpha,\,\beta}(u)=\int_{(0,\,u]} W_\alpha(y)\textrm{d}_y(-(u-y)^\beta)\end{equation*}

when $\beta>0$ and $Y_{\alpha,\,\beta}(u)=W_\alpha(u)$ when $\beta=0$. Our next step is to prove the tightness on $D[0,T]$, for all $T>0$, of

\begin{equation*}\hat{X}_t(u)\,:\!=\int_{(0,\,u]} W_\alpha(y) \textrm{d}_y (-h_t(u-y))=\int_{[0,\,u)} W_\alpha(u-y) \textrm{d}_y h_t(y),\qquad u \geq 0.\end{equation*}

\begin{equation*}\hat{X}_t(u)\,:\!=\int_{(0,\,u]} W_\alpha(y) \textrm{d}_y (-h_t(u-y))=\int_{[0,\,u)} W_\alpha(u-y) \textrm{d}_y h_t(y),\qquad u \geq 0.\end{equation*}

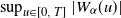

By [4, Theorem 15.5], it is enough to show that, for any $r_1,r_2>0$, there exist $t_0,\delta>0$ such that, for all $t \geq t_0$,

\begin{equation} {\rm{\mathrm{P}}}\Big\{\sup_{0 \leq u,v\leq T, |u-v|\leq \delta}|\hat{X}_t(u)-\hat{X}_t(v)| > r_1\Big\} \leq r_2.\end{equation}

Put $l\,:\!=\max(u,v)$. Recalling that $W_\alpha(s)=0$ for $s<0$ (see the beginning of Section 2), we have, for $0\leq u,v\leq T$ and $|u-v|\leq \delta$,

\begin{align*}|\hat{X}_t(u)-\hat{X}_t(v)|& = \left| \int_{[0,\,l)}( W_\alpha(u-y)-W_\alpha(v-y)) \textrm{d}_y h_t(y) \right| \\& \leq M_T |u-v|^\alpha h_t(T) \leq M_T |u-v|^{\alpha}\lambda\end{align*}

\begin{align*}|\hat{X}_t(u)-\hat{X}_t(v)|& = \left| \int_{[0,\,l)}( W_\alpha(u-y)-W_\alpha(v-y)) \textrm{d}_y h_t(y) \right| \\& \leq M_T |u-v|^\alpha h_t(T) \leq M_T |u-v|^{\alpha}\lambda\end{align*}

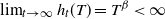

for large enough t and a positive constant $\lambda$. The existence of $\lambda$ is justified by the relation $\lim_{t\to\infty}h_t(T)=T^\beta<\infty$. Decreasing $\delta$ if needed, we ensure that the inequality (18) holds for any positive $r_1$ and $r_2$. The proof of Theorem 1.1 is complete.
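
The choice of $\delta$ in the last step can be unpacked as follows. This is only a sketch, which assumes, as the preceding display suggests, that $M_T$ is an a.s. finite random variable not depending on t and that $\alpha>0$. For large enough t and any $\delta>0$,

\begin{equation*}{\rm P}\Big\{\sup_{0 \leq u,v\leq T, |u-v|\leq \delta}|\hat{X}_t(u)-\hat{X}_t(v)| > r_1\Big\}\leq {\rm P}\{M_T\lambda\delta^\alpha>r_1\}={\rm P}\{M_T>r_1\lambda^{-1}\delta^{-\alpha}\}.\end{equation*}

Under the stated assumptions the right-hand side tends to 0 as $\delta\to 0+$, so it does not exceed $r_2$ once $\delta$ is small enough.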

Acknowledgement

The authors thank the anonymous referee for a useful comment which enabled them to improve the presentation of the delayed standard random walk in Section 3.