1. Introduction

Financial firms are regularly asked to provide reassurance that they have the capital strength to cope with the events of the future. Regulators, shareholders, market counterparties and individual policyholders alike demand assurance that capital buffers are large enough to withstand potential losses. Where a track record of prudent management might have sufficed to engender trust in the past, firms are now asked to report, or are keen to demonstrate, their supposed strength in a quantitative manner. The “1-in-200 year” test in Individual Capital Assessment and Solvency II calculations are examples of quantitative demonstration of capital strength in insurance that echoes the 5% daily value-at-risk (VaR) number monitored by banks. Section 1.1 contains examples of two published cases where the capital calculations based on 1-year VaR of firms proved to be insufficient.

Practitioners involved in these calculations are aware that a purely mechanical approach to a capital or solvency calculation can be unhelpful. This paper looks at the various reasons why a purely mechanical approach may not be possible or justified. It also presents tools and techniques to help address some of these difficulties.

The main reasons for this, which are behind much of the thinking in this paper, can be summarised in terms of breadth and depth:

Too much breadth – although unintuitive at first sight, one of the biggest issues related to the calculation of solvency capital is that a glut of data/information on the numerous potential risks that a firm is exposed to may actually make it difficult to see the wood from the trees. In other words, a good understanding of what risks are important to the business is increasingly necessary, and a simplification or sifting stage is essential to begin to quantify the risks faced by the firm.

Insufficient depth – to adequately calibrate a probability event in the tail of a distribution requires a large amount of data, which is often simply not available. There may not even be enough data to know what model is most suitable for these tail events, never mind what parameters should be chosen for this model.

In both cases, judgement is needed to make sensible choices, but these choices will influence the results. Understanding this influence, and modifying results to allow for it, should be an important aspect of capital calculations. This paper seeks both to offer a guide to the kinds of things that can go wrong and to provide a set of tools that can be used to make capital calculations more robust.

It is tempting to believe that complex analytical and statistical approaches are doomed to fail and that a simple approach is the best way forward. Haldane (Reference Haldane2012) makes an erudite argument for such an approach, and we recommend it as further reading. Our view is that risk models are necessary but have to be carefully built, given that they can at best be an approximate representation of the real world. In particular, practitioners need to be aware of the impact of the multitude of choices they are forced to make, and model results should be treated with a healthy dose of wariness.

1.1. A (Hypothetical) Motivating Example

We consider a set of hypothetical but not implausible events for a financial firm that assesses at the start of the year that €10 bn is sufficient to absorb losses with 99.5% probability. By the end of the year, the firm has lost €20 bn. Many things have gone wrong at once, including the following:

Accounting change

• A clarification in the regulatory calculation of the illiquidity premium meant that the insurer could no longer use the yield on certain illiquid assets to discount the liabilities. The liabilities rose as a result of this change, leading to an accounting loss. At the same time, a change in tax rules meant that a deferred tax asset previously considered recoverable had to be written off.

Unmodelled heterogeneity

• A problem arose with a set of policies that, for calculation convenience, had been modelled together. It turned out that within a group of policies assumed to be homogenous, there was in fact a variety of investment choices; as a result, a large block of these policies had guarantees that were significantly into the money. Policyholders selectively took advantage of these guarantees, producing costs far beyond those projected from the capital model.

Market risks

• New risks emerged that had not previously been modelled explicitly. Specifically, a loss on derivative positions arose from the widening of spreads between swaps based on LIBOR and overnight index swaps. A euro government defaulted on its bonds.

• A change in the shape of the credit spread curve meant that the existing market-consistent ESG could not exactly calibrate the year-end market conditions. Concerned about how this would appear in upcoming meetings with the regulator, a new ESG from a different provider was put in place at short notice. However, for reasons that are still not fully transparent, this led to an increase in the stated time value of liability options.

Non-market risks

• Losses from an earthquake in the Middle East were substantially greater than the maximum possible loss calculated from a third-party expert model. The reasons for this seem to be a combination of a disproportionate number of January policy inceptions (the model assumed uniformity over the year), inadequate coverage of the region in the external catastrophe model, and poor claims management exacerbated by political instability and corruption.

• The annuity portfolio took a one-off hit because of a misestimation of the proportion married and a strengthening of the mortality improvement rates.

Poor returns on new investments

• Early in the year, the firm participated in some securitised AAA investments that exploited market anomalies to provide yields closer to those on junk bonds. The capital model had been based on the portfolio before this participation. The participation turned out to be disastrous, and virtually all the investment had to be written off following an avalanche of unforeseen defaults in the underlying assets.

Legal loophole

• Many life insurance claims became due following an industrial accident. The insurer was confident that a proportion would be recovered from reinsurers as assumed within the capital model. However, the reinsurance contract contained a clause not captured in the capital model, which limited payouts in the event of large losses from a single event.

Although our example in this case is hypothetical, there are indeed real examples of firms who proclaimed that they held economic capital to withstand a loss equivalent to a very extreme event (e.g. a 1-in-2,000 year) before losing a multiple of that amount of that figure.

Table 1 shows the published 1-year VaR figures for two of the well-publicised cases in 2008 that ultimately led to state bailouts – AIG (2008) and Fortis (2008). We have also calculated the confidence level associated with the experienced losses, assuming normal distributions.

Table 1 Published 2008 1-year VaR for AIG and Fortis

1Based on the upper value of $19.5 bn ECAP.

It is of course theoretically possible that such an outcome was a case of exceptionally bad luck, but with hindsight we have seen how risks that ultimately proved to be important were either overlooked or scoped out of the capital models.

1.2. Capital Model Scope

Our hypothetical example in section 1.1 highlights many possible sources of loss. Whereas some of these are avoidable, and others may be captured within a stochastic internal model, many will not be modelled. These losses may be attributed to lack of knowledge and genuine model uncertainty rather than an explicit stochastic element. It is debatable whether probability theory is the right tool to address such risks.

If these risks are simply ignored, then firms are likely to see frequent exceptions, that is, experience losses worse than the previously claimed 1-in-200 event. Mounting evidence of such exceptions could undermine a firm's claims of financial strength, and may even call into question the advertised degree of protection (e.g. 1-in-200) that a supervisory regime claims to offer.

Thus, rather than simply asserting (ex post) that these risks were out of scope, it is therefore desirable that risks are identified and some attempt is made at quantification, even if a variety of techniques are needed to address different elements.

1.3. What's in this Paper?

In the remainder of this paper, we focus on four specific types of error and also examine some broad themes.

Section 2 considers the overall aspect of choice or judgement with respect to modelling, explaining the basic need for judgement, and highlighting several broad areas where judgement/choice is manifested in the context of actuarial modelling. The chapter then considers each area, and introduces some methods of mitigating the need for judgement as well, providing some observations on good practice for cases where judgement is inevitable.

Section 3 looks at the choice of risks to include in a model. Two key aspects are considered. These are the selection of features and extraction of features to be modelled. How to allow for the risks not explicitly covered in the model is also discussed. Some mitigants including grossing-up techniques and stress testing are discussed.

Section 4 looks into model and parameter risk and describes classic statistical approaches to parameter error, showing constructions of confidence and prediction intervals, incorporating the T-effect, for a range of distributions and data sample sizes. It also tackles model error, with a number of examples, before discussing some techniques to assess model errors.

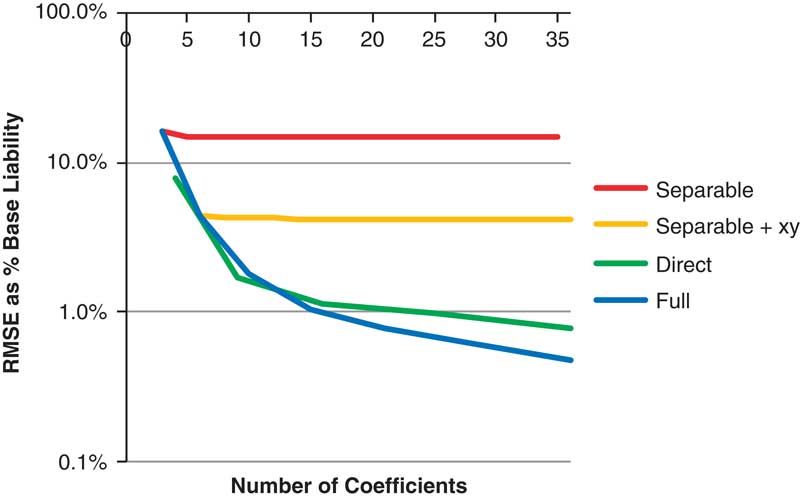

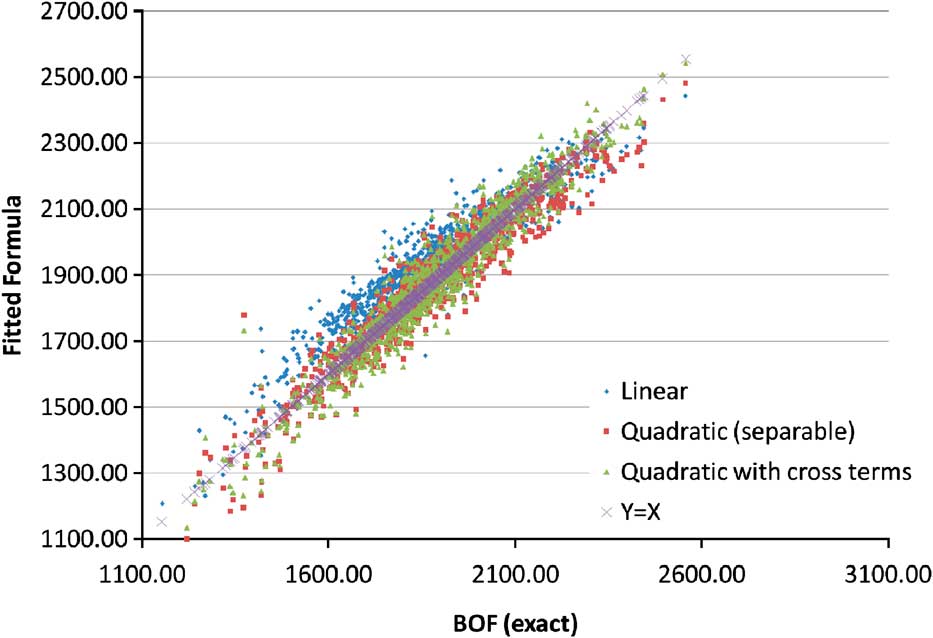

Section 5 looks at several aspects of errors introduced by calculation approximations when, in the interest of faster run times, assets or liabilities are approximated by polynomials. This first looks at proxy models, and the potential errors that they introduce, as well as the errors introduced by Monte Carlo sampling.

Section 6 includes a summary of key conclusions from previous sections, our concluding thoughts on the topic and references.

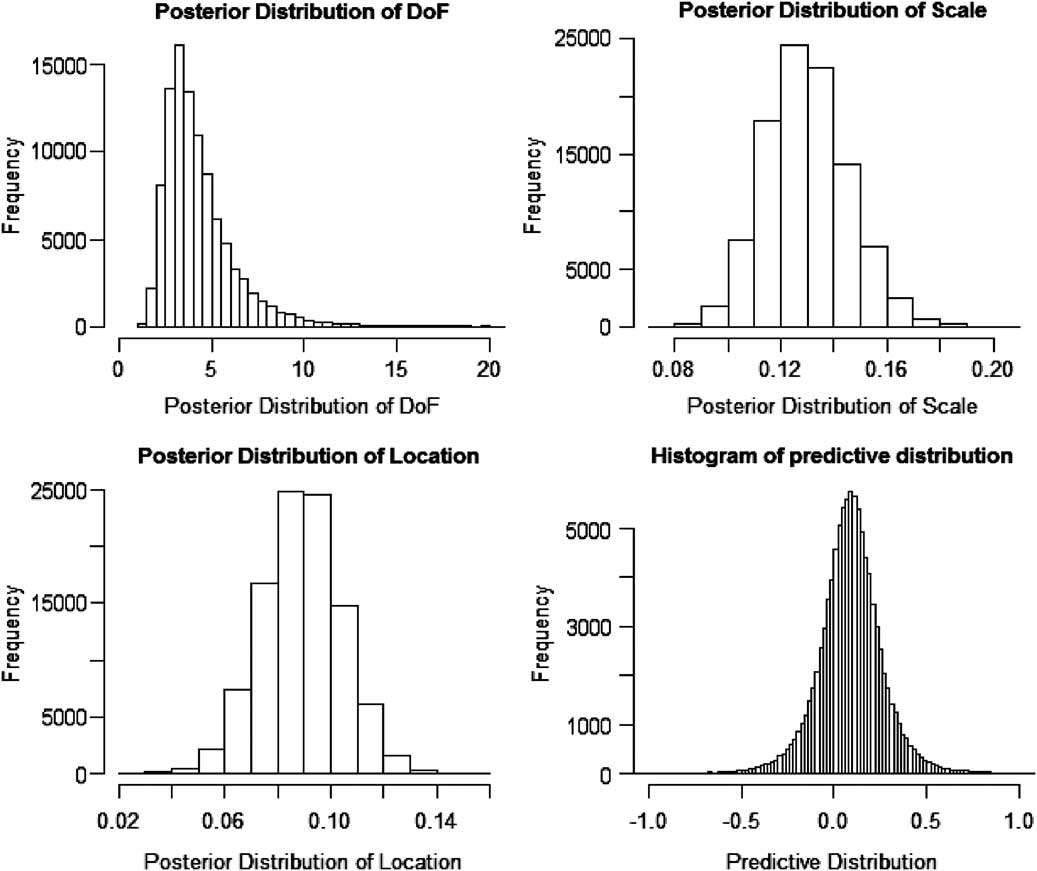

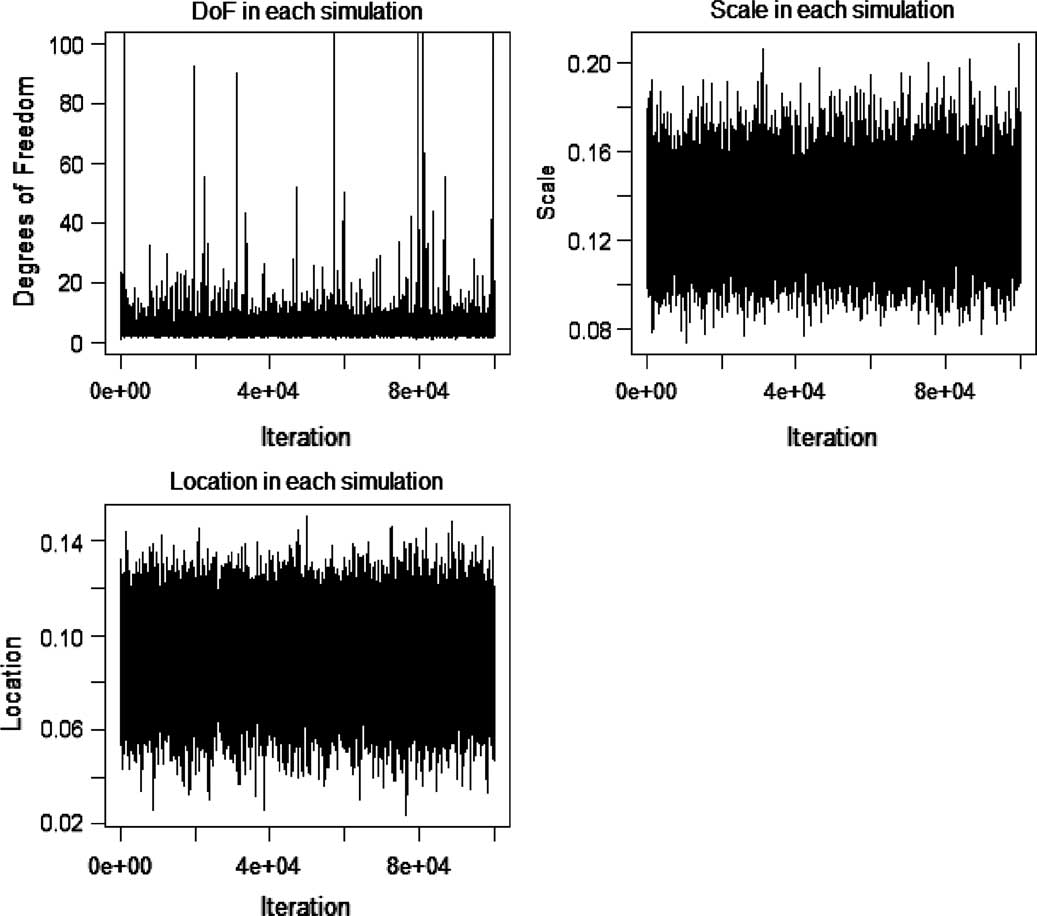

Appendix A draws together the use of Bayesian methods in risk analysis, including the application of judgement, Bayesian networks and the use of Markov chain Monte Carlo methods to compute posterior distributions.

Appendix B gives details of the liability valuation formulas used in the proxy model examples from section 5.

2. Judgement

First, it is important to acknowledge that judgement is a necessary and inescapable part of actuarial modelling, and it is very difficult (in fact, one could argue impossible with the exception of pathological examples) to simply avoid the need to make any choices. Moreover, as we shall explain, judgement permeates almost every aspect of modelling, which means that one may encounter the need to make choices at various levels.

Another aspect is that any judgement, by definition, depends to a certain extent on who is making the judgement, and the framework within which they need to make the judgement. In the United Kingdom, the board is ultimately responsible for all assumptions and judgements, and statutory audits in the United Kingdom assert that a set of accounts provide a “true and fair” view, in accordance with the accounting principles.

A specific element of the audit assesses whether judgements made by management during the production of a set of “true and fair” accounts are reasonable. A reasonable judgement may be interpreted as within the range of conclusions an expert could draw from the available data, and will have regard to common practice in the market. The range for reasonable judgements may be narrower than parameter standard errors in a statistical sense, especially when data are limited.

An audit process also looks for errors that are unintentional human mistakes, such as the use of an incorrect tax formula or omitting an expense provision. Any such mistake is assessed using the concept of a “materiality” limit. This is an amount of error, expressed in currency terms, which is deemed not likely to change the decisions of the users. Material errors should be corrected, but a clean audit can still be granted provided the aggregate effect of mistakes is within the materiality limit.

The exercise of judgement is not a mistake; it is possible for two reasonable judgements to differ by more than the materiality limit. Therefore, decision makers relying on financial statements should be aware that alternative judgements in the account preparations could have led to different decisions. True and fair accounts must be free of material mistakes but cannot be free of material judgement.

Thus, there is a considerable element of judgement embedded in the production of the basic “realistic” or “best estimate” balance sheet, even before extrapolating into the calculation of the capital required to withstand a hypothetical 1-in-200 event.

We try to address this aspect of judgement by looking at two elements:

• Section 2.1 aims to broadly categorise the different manifestation of choice/judgement inherent within modelling, and point the reader to tools that potentially deal with each aspect.

• It is extremely unlikely that we can do away with judgement altogether, and there will be aspects that inevitably require judgement. In section 2.2, we try and summarise some good principles within current industry practices.

2.1. Manifestations of Judgement

Judgements are an integral part of any modelling exercise, because any model is necessarily a simplified representation of the real world and as such needs to be stripped down to its most relevant components. This ensures that the model is a useful analogy of the real world for the specific purpose that a model is required for. The process of stripping down to the bare useful components and “calibrating” the resultant model inevitably has a large amount of judgement associated with it. In the limited context of actuarial modelling, this judgement can broadly be thought to manifest itself in the following ways:

• choosing which risk factors to model;

• choice of overall model framework;

• choosing individual parts of the model;

• choice of calibration methodology;

• judgements inherent within the data itself;

• choice of parameters.

One can imagine that these have (broadly) decreasing levels of significance to the end results. However, the industry appears to have the greatest focus on the final (and perhaps second to last) elements of parameter choice and calibration methodology, often to the detriment of the overall choice of framework and model fitting. For example, companies may focus most of the documentation and rationale of expert judgement in the final two categories, potentially at the expense of reduced oversight and attention paid to the substantial implied judgements involved in the first two categories.

2.1.1. What Risk Factors to Model?

Perhaps the single biggest modelling choice to be made by a company is simply what risks to model. As briefly explained above, a model can only hope to be a simplified representation of the reality it intends to model. A philosophical way of thinking about it is that any model of the universe needs to be at least as large as the universe itself, and in fact in order for us to project it into the future faster than real time, it needs to be even larger.

A key constraint on the number of risk factors to include in a model is availability of resources. Human resources, computer resources and time are required in most modelling exercises and these have to be used in the most efficient manner. To that end, we need to choose the most appropriate aspects to model. Suppose we could simplify the modelling problem considerably, by converting the problem into a simple choice regarding the number of “risk factors” to model. It can be shown that the number of modelling choices faced by a company is simply enormous! For example, section 3.1 details a case study to highlight the enormity of this decision, where over a million inputs to the balance sheet are summarised by as few as 100 risk factors.

Needless to say, choosing the risk factors to model is an extremely important exercise and one that should not be taken lightly. Also important is that once the modelling choices have been made is how to allow for the risk factors that are not modelled. This is addressed in the “grossing-up techniques” commentary in section 3.3.

2.1.2. Choice of Overall Framework

This is quite possibly the second most significant judgement to be made within a (capital) modelling context, although it is not always appreciated as such. Of course, the materiality of the different choices depends on the particular problem at hand. We try to illustrate this in the context of aggregation methodology using a very simple case study with two risks (described as two products of equal size, A and B):

• Each risk akin to a simple product with a guaranteed £100 m liability, in which not all the risks are hedgeable. There is a residual 1-in-200 risk that the assets (and capital) would lose half their value. The extra capital required at time 0 such that the product has a 99.5% probability of meeting its guarantees at time 1 is (an extra) £200 m (£100 m for each product).

• The two products are assumed to be uncorrelated.

Let us now consider two commonly used methods of calculating the aggregate capital requirement:

1. Using an “external correlation matrix” approach, the answer is relatively simple. The aggregate capital requirement can be calculated as

$$$\sqrt {Capital_{A}^{2} + Capital_{B}^{2} } $$$

, which is £141.6 m in total and £70.8 m per product.

$$$\sqrt {Capital_{A}^{2} + Capital_{B}^{2} } $$$

, which is £141.6 m in total and £70.8 m per product.2. Using an alternative approach of undertaking a Monte Carlo simulation of the losses of the two products assuming the distribution of the losses are lognormal (again, a popular choice amongst practitioners) with the same 1-in-200 individual capital requirements. This produces a different aggregate capital requirement of £121 m and £60.5 m post-diversification capital requirement for each product.

This simple example of the impact of the choice of the aggregation framework shows that the aggregate capital requirement can differ by 20% of the liabilities. This example is not special in any sense, in that the two products could represent pretty much any two risk factors.

One can appreciate that there are multiple choices to be made in simply aggregating different risk factors. This is further compounded by choices that need to be made in relation to exotic copula structures, non-linearities, etc.

Finally, this example only touches on a very specific aspect of framework choice; there are many other implicit judgements necessitated by trying to create a simplified representation of the real world. There is a long list of other judgements that need to be made, examples of which include:

• using heavy models versus lite (proxy) models;

• if proxy model, choice of proxy model;

• what measure is used to estimate capital, for example, VaR, tail VaR, etc;

• granularity of assets, model points;

• use of instantaneous stress approximation (time 0, time 1 or other);

• holistic model of the business versus detailed product-specific models aggregated;

• treatment of new business;

• fungibility of capital;

• measure of correlation used.

2.1.3. Choice of Model Components

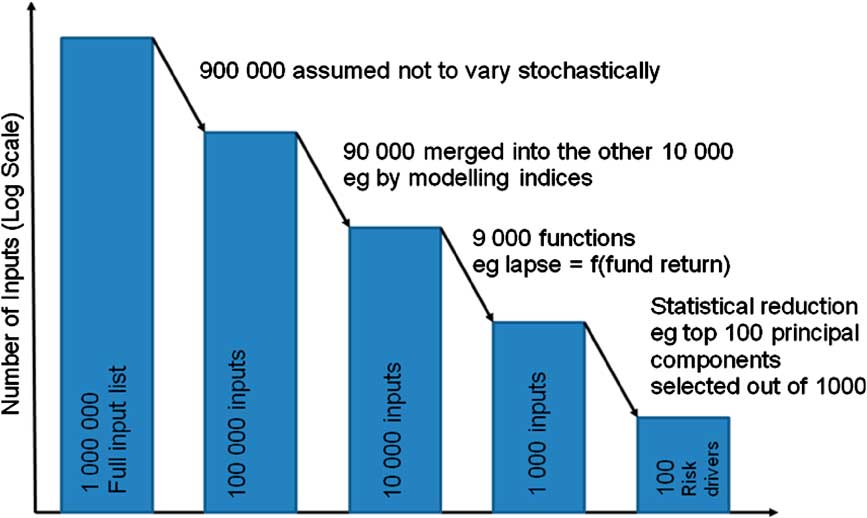

The choice of model for each risk is an important aspect of modelling. A previous paper, an extract of which is showed below, from this working party (Frankland et al., Reference Frankland, Smith, Wilkins, Varnell, Holtham, Bifis, Eshun and Dullaway2009) showed that fitting different models to the same historical data can have a range of different results, even when using relatively large amounts of data (Chart 1).

Chart 1 Quantile-Quantile plot of UK equity returns, 1969–2008, with various fitted models

“Even after settling on a single data set, the fitted curves for U.K. produce a wide range of values for the 1-in-200 fall. The most extreme results are from a Pearson Type IV, applied to simple returns, which implies a fall of 75% at the 1-in-200 probability level. At the other extreme is the lognormal distribution, with a fit implying that even a 35% fall would be more extreme than the 1-in-200 event. Other distributions produce intermediate results”.

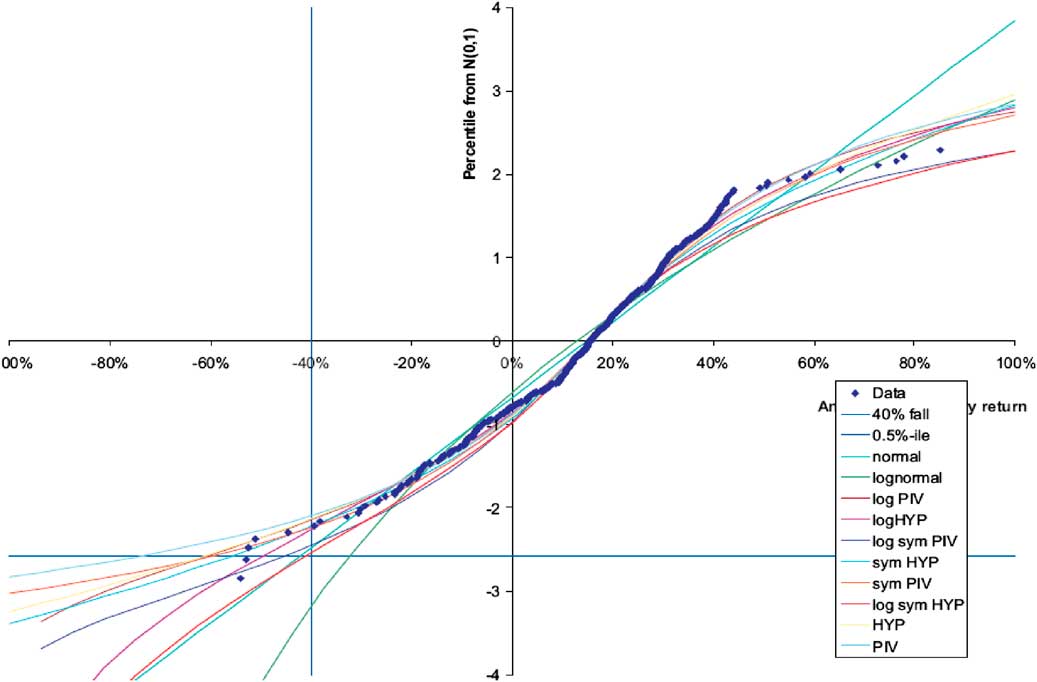

Chart 2 shows the 0.5th percentile estimated using different models for the UK and Denmark. Interestingly, the same extreme potential model error is also true for Denmark (which also has relatively large amounts of historical data), but in this case the models that result in the most and least extreme values are completely different.

Chart 2 0.5th percentile estimated using different models based on UK and Danish equity data

Another example of model risk is described in section 4.2 and the reader is also referred to a recent paper by Richards, Currie and Ritchie, and the subsequent discussion.

2.1.4. Choices Inherent within the Calibration

There are other, more commonly acknowledged calibration choices that should be noted in the same context. For example, calibration of a model is required to fit historical data to a 1-in-200 event, and one of the decisions is the choice of data period to use. Very simply, if we are using x years of data, then the model would accept the worst event within this data window as being a 1 in x event. Thus, the choice of data window makes an important contribution to the results, and it is important to note that even picking any available data set comes with a default assumption regarding the data period.

This context results in an obvious place where one may want to exercise judgement, that is, one may want to impose some views on the extremity of actual events observed. An obvious example can be constructed by using very short data series that included the recent credit crisis, which would naively overestimate the resulting extreme percentile calculated by assuming such an extreme event that would occur regularly within such a short time period. Of course, it goes without saying that the reverse would also have been true when looking at short-term data before the recent financial crisis.

Another example of judgement related to calibration is the choice of method to estimate the parameters of a model. The two approaches that are commonly used are maximum likelihood estimation and the method of moments (described further in section 4.1.4). In calibration exercises where data are limited, these different approaches can lead to very different results.

2.1.5. Judgement Inherent within Underlying Data

Before the application of judgement to the data, it is useful to consider the nature of the raw data used to calibrate the model. The data might not themselves be accurate, being based on estimates, or might contain a systemic effect that would influence our interpretation of the data and perhaps the calibration.

A recent example of data that is based on estimates, which was perhaps not generally appreciated at the time, is the ONS mortality data for older ages in England and Wales. The results of the 2011 Census revealed that there were 30,000 fewer lives aged 90 years and above than expected. In absolute terms, a difference of 30,000 lives is not a large change. However, in relative terms, it represents a reduction of around 15%. Between census years, the exposure to risk is an estimated figure. The funnel of doubt around the estimate increases as the time since the last census increases. The ONS data, being based on a large credible data set, are the source of most actuarial work on longevity improvements, including the projection model developed by the continuous mortality investigation. One implication of the recent census data is that estimates of the rate of improvement of mortality at high ages might have been significantly overstated.

An example of a systematic effect that may be present in data, and should be considered before using any data to calibrate an internal model, would be the derivation process behind a complex market index. As an illustration, Markit publishes a report on its iBoxx EUR Benchmark IndexFootnote 1 that documents multiple changes to the basis of preparation. This index may be considered a suitable starting point to construct a model of future EUR bond spread behaviour, but such an analysis should consider the effect of the various changes. Of course, the report from Markit contains extensive details of the past index changes, but an equivalent level of detail might not be available for other potential data sources.

2.1.6. Choice of Parameters and Expert Overlay

Irrespective of the model we have chosen to use, we would need to supply it with suitable parameters. This can be done by using some statistical methods of choosing the best parameters, acknowledging the parameter uncertainty inherent from fitting to limited data. Section 4.1.1 looks at possible ways of quantifying the risk capital where parameters are uncertain.

In addition to genuine parameter uncertainty (assuming that past data are truly reflective of the future), we may also have uncertainty as the future conditions may be different to historic conditions (taking interest rates, for example). In this case, management still needs to make some judgements on the choice of parameters.

This judgement on model parameters can be explicit or implicit. For example, at the most explicit level, one may override the 1-in-200 stress itself by superimposing the views of investment experts. In many cases, the paucity of data and the changing economic landscape makes this a regular part of the capital calculation exercise. Alternatively, judgement can be more implicit in the structure of the model. For example, conditional on the form of the model, one may have prior views on certain parameters (e.g. we may have a prior view on the volatility parameter of a lognormal distribution).

However, it should be recognised that any judgement (even though necessitated because of poor data and changing environment) is ultimately subjective. One advantage of explicit judgement (over implicit judgement) is that it is extremely transparent and openly recognises that models and data can only go so far in terms of predicting future distributions.

For either explicit or implicit judgement, we need to recognise that, although one may have a better base assumption, parameter uncertainty (section 4.1.1) still needs to be taken into account. In addition, one should aim to follow a good process when coming up with the parameters, and some observations on current practice within the industry are discussed in the next section.

2.2. Expert Judgement: Current Practices

Judgement is by no means limited to actuarial modelling, and it has been the subject of much literature outside of the financial world, for example, engineering and public health. From a purely statistical viewpoint, there is much to learn from Bayesian statistics, and a summary of Bayesian methods is provided in Appendix A, which we hope the readers find useful. In addition, there has been substantial research on this subject in other fields, particularly science and engineering (see Ouchi, Reference Ouchi2004 for a literature review of expert judgement research). We refer to some examples as extremely useful reading,Footnote 2 and perhaps learning good principles on judgement from other fields could be the scope of further actuarial research.

However, perhaps because of the huge range of possible decisions to make within actuarial modelling itself, we limited our scope to discuss aspects of current practices in the UK insurance industry. It quickly becomes evident that judgement has always been a part of risk modelling, even if it has not always been explicit. More recently, as firms started to prepare for Solvency II, there has been a much greater level of codification and documentation of expert judgement, as with other aspects of modelling. In this section, we look at how insurance firms are currently approaching this area and briefly look at research on expert judgement outside financial modelling.

2.2.1. Regulatory Background

The Solvency II Directive does not contain any direct references to expert judgement, but its use is anticipated in the Level 2 technical standards:

Expert judgement

“1. Insurance and reinsurance undertakings shall choose assumptions on the issues covered by Title 1, Chapter VI of the Directive 2009/138/EC based on the expertise of persons with relevant knowledge, experience and understanding of the risks inherent in the insurance or reinsurance business thereof (expert judgement)”.

Article 4, implementing measures, states that:

“Insurance and reinsurance undertakings shall choose assumptions on [the issues covered by Title 1, Chapter VI of the Directive 2009/138/EC] [valuation of assets and liabilities, calculation of capital requirements and assessments of own funds] based on the expertise of persons with relevant knowledge, experience and understanding of the risks inherent in the insurance or reinsurance business thereof (expert judgment).

Insurance and reinsurance undertakings shall, taking due account of the principle of proportionality, ensure that internal users of the relevant assumptions are informed about its relevant content, degree of reliance and its limitations. For this purpose, service providers to whom functions have been outsourced shall be considered as internal users”.

Level 3, Guideline 55 requires that:

“The actuarial function should express an opinion on which risks should be covered by the internal model, in particular with regard to the underwriting risks. This opinion should be based on technical analysis and should reflect the experience and expert judgement of the function”.

CEIOPS issued a consultative paper on internal models (CP56) in 2009 that, inter alia, set out its views at that time on expert judgement, commencing that “CEIOPS recognises that in a great many cases expert judgement comes into play in internal model design, operation and validation”. Further references from the CP are set out in the Appendix to this policy.

It is likely that the use of expert judgement will be subject to Level 3 guidance. EIOPA prepared an early draft guidance paper on expert judgement, which concentrated on the need for proper documentation, validation and governance of assumptions derived by expert judgement. It also identified the need for clear communication between users and providers of expert judgement to avoid misunderstandings. However, with the ongoing delays to the Solvency II project, it is not clear when this particular guidance will be open to public consultation.

In a letter to firms on IMAP progress in July 2012 (Adams, Reference Adams2012), the FSA identified expert judgement as an area for additional commentary. This recognised expert judgement as important and necessary in many aspects of internal models but identified areas for improvement that include the same areas of documentation, validation and governance. This feedback indicates that firms are finding it challenging to integrate expert judgement, with its inevitable uncertainties, into internal model processes where the emphasis is on a high standard of justification and documentation.

Against this background, a wide range of practices have developed. We comment below on a few of these, based on the experience of members of the working party and a survey made by the Solvency & Capital Management Research Group (Michael et al., Reference Michael, Roger and Peter2012) covering 15 UK life firms. Our comments are biased towards risk calibration in internal models, although, as we note below, expert judgement may apply in many other areas.

2.2.2. Scope of Expert Judgement

Expert judgement can be applied in many areas of actuarial work and not limited to deciding on the distribution of risks. Examples of other areas where expert judgement is commonly used include management actions applied in extreme scenarios, asset valuation in illiquid markets and the use of approximations. Expert judgement applies to standard formula calculations and internal models. Firms differ on which areas are within the scope of expert judgement and in fact defining that scope is not straightforward. There is almost no area of a capital model that does not involve some subjective aspects, and thus it is difficult to determine where the boundary should lie.

Defining when expert judgement should be used is also not easy. Sometimes there seems to be an assumption that data analysis and expert judgement are mutually exclusive, usually with the expectation that expert judgement is used only when data are insufficient to be relied on alone. In practice, it seems more likely that decisions will combine both, but with more weight on expert judgement as the quantity or quality of data diminishes.

In the area of risk distributions, expert judgement is commonly applied to the choice of data, calibrated parameters of risk models and correlations. It is also commonly used to adjust the tails of the distribution that are not considered sufficiently extreme, or conversely, are considered too extreme.

A more fundamental divergence between firms is whether the choice of overall risk model is considered to be subject to expert judgement or not. In section 2.1, we have illustrated how model risk can be as significant as other modelling decisions, if not more so; however, as we noted above, it seems that some firms limit the scope of expert judgement to the choices made once the model is selected.

2.2.3. Expert Judgement Policies

Most firms have developed a framework for expert judgement and this is usually embodied in an expert judgement policy. This is commonly a standalone policy covering all applications of expert judgement, but may instead be embedded in the documentation of those parts of the internal model where judgement is applied. Typically, the policies cover the high-level principles and the governance process, such as the various levels of review and signoff needed for the approval of specific expert judgements. Other contents vary widely and there are some significant differences in the scope and application of expert judgement.

2.2.4. Who are the Experts?

Arguably, the single most important aspect of expert judgement is simply identifying suitable and relevant experts. Policies may indicate what criteria are used to establish whether someone qualifies as an expert, but often there is no formal process to identify them in advance. Instead the choice might be left to the modeller's discretion, which allows for some flexibility, but one may need to be careful to ensure that a wide enough range of views is sought. Another important aspect is for the experts to be sufficiently independent of both the calibration process and the capital calculation process. The importance of this is likely underestimated, as are the risks of expert judgement influencing the capital outcomes.

Occasionally more than one expert may express a view, which in turn leads to differences of opinion that need to be merged into a single modelling decision. Thus, in addition to model risk, we now have to consider “expert risk”. Some firms get round this by using a technical committee, and therefore effectively (or explicitly) the committee acts as the “expert” and the committee process resolves diverse views into a single decision. However, committees are only one way to aggregate the opinions of several experts and may not be the most effective, and this aspect has been better researched in other fields. SeeFootnote 3 Ouchi (Reference Ouchi2004) for a literature review of expert judgement research. A large part of this research covers elicitation and aggregation of expert judgement, which so far do not seem to have been widely considered in economic capital applications (other than possibly operational risk).

Elicitation is the process of gathering expert judgement. Experts are human and can be subject to various biases or herding. In a group or committee, the most senior, most confident or simply most biased (e.g. not independent) experts may overrule other opinions. Various structured approaches to elicitation have been developed to reduce the impact of these biases (Cooke & Goossens, Reference Cooke and Goossens1999) give a detailed procedure guide. As an example, one of the first such approaches was the Delphi technique, in which experts give their initial opinions individually and these are then shared anonymously with the group. The experts can then revise their opinions and the process is repeated until a consensus is reached.

To summarise, we need to be conscious about careful choice of experts, ensuring independence of experts, and the aggregation of expert judgement from more than one expert. There is scope for further research in these areas to see whether a more structured approach to expert judgement could address some of these perceived weaknesses.

2.2.5. Validation of Expert Judgement

Expert judgement in an internal model is subject to validation requirements. Expert judgement, by its nature, is not the output of a mechanical process that can be tested in a simple manner. Where expert judgement has been used and is of material consequence to capital requirements, it is good practice to try and validate the judgement.

Some examples of potential methods that can be used to validate judgement are given below:

• The most common approach is a review and challenge process, which involves discussing the judgement with the expert, with the aim of better understanding the rationale and applicability to the current problem.

• Discussion by an independent panel (e.g. a technical committee) may provide a useful avenue for discussion and debate.

• Sensitivity analysis, to establish the importance of the expert judgement.

• Comparison against any relevant external information such as industry benchmarks and other regulatory information (e.g. internal model stress against the standard formula stresses). Care must be taken to avoid systemic risk via “herd behaviour”.

• Backtesting has also been proposed, although this may be problematic as judgement is often used in situations where data are sparse by definition (i.e. opining on an extreme event).

2.2.6. Case Study

We illustrate the importance of the validation of expert judgement and independence of experts by considering the views of different experts (at different points in history) on the maximum possible human lifetime for different countries. In 2002, Jim Oeppen and James W. Vaupel published a paper looking at life expectancy, and how proposed limits on life expectancy have consistently been exceeded by reality (Chart 3).

Chart 3 Male life expectancy at birth for a series of countries as a function of the year of publication

The markers on Chart 3 show male life expectancy at birth for a series of countries, expressed as a function of the year of mortality table publication. The fitted trend is shown as the thick black ascending line. The horizontal bars correspond to expert assertions of biological upper bounds on life expectancy. The left-hand end of each bar represents the date of the assertion. The intersection of each horizontal line with the ascending trend indicates the point at which experience refuted the claimed upper bound. Where there is no intersection, and the horizontal bar lies to the right of the fitted trend, this indicates that the asserted upper bound was already contrary to published mortality tables at the date the assertions were made.

It is not surprising that even experts get life expectancy wrong. Longevity is a subtle and complex area, with its fair share of historic data, data errors, competing models and uncertain impact of future social trends. What is surprising is that experts have appeared to systematically underestimate future lifespans rather than a mixture of underestimating and overestimating, which we would have intuitively expected.

Oeppen and Vaupel give one possible explanation for the consistent underestimates that “They give politicians license to postpone painful adjustments to social security and medical-care systems”. One might say that in the market for theories, there is a demand from politicians for short lifespan theories. There might be a similar demand from insurers or pension funds who write annuities. There is no similar demand for theories of long lifespans, as the pensioners who might benefit from better capitalised annuity writers are seldom sufficiently well organised to commission research to support their case. As pointed out by Smith & Thomas (Reference Smith and Thomas2002), a skewed demand for experts may bias the theories that the market supplies.

2.3. Key Points

The salient point of this chapter is simply that, although the calculation of capital requirements is extremely sensitive to judgements made, judgements are also a necessary and inescapable part of actuarial modelling. To that end, it is important for the companies to recognise where judgement occurs, and we try and broadly categorise different areas where judgements can occur, together with some detailed examples. In particular, the importance of the choice of risk factors to model, choice of framework and choice of model should not be underestimated.

Given that in many cases expert judgement is inescapable, we take a critical look at current practices of expert judgement within the industry. Key aspects considered are the scope of expert judgement (it needs to cover all the categories discussed, in particular choice of risk factors, framework and models) as well as the importance of choosing independent experts. The paper also highlights that we can perhaps learn more about the process of gathering expert judgement from other fields.

3. Choice of Risk Factors to Model

As highlighted in section 2.1.1 previously, it is not always intuitive what risk factors to model or how they should be modelled. To do this, it is essential to identify the features of the phenomenon that are essential and then extract the features to be modelled. Consideration should be given to features that are not considered. We discuss each of these in turn below.

3.1. Selection of Risk Factors

Selection of risk factors reduces the dimensionality of the data set by selecting only a subset of the identified predictive variables or the factors that have the greatest impact on the variable of interest. Although this is perhaps the single most important aspect of risk modelling within a company, it is not always transparent what drives the selection of factors. In theory, the variables discarded are those that have least predictive power in explaining a set of data outcomes.

In practice, the variables ultimately modelled are chosen using judgement accumulated over the years; it is very unlikely for a model to be created entirely from scratch, and as such it often inherits legacy judgements, such as granularity of model points, assets, time-step, etc.

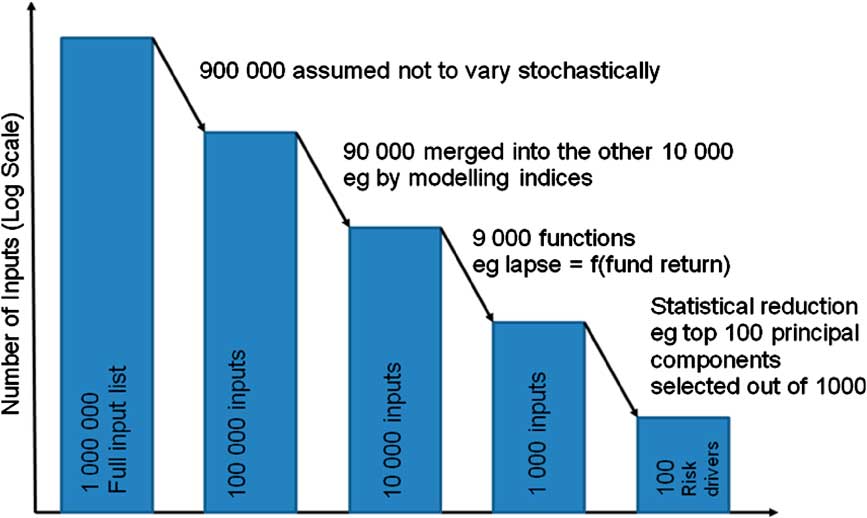

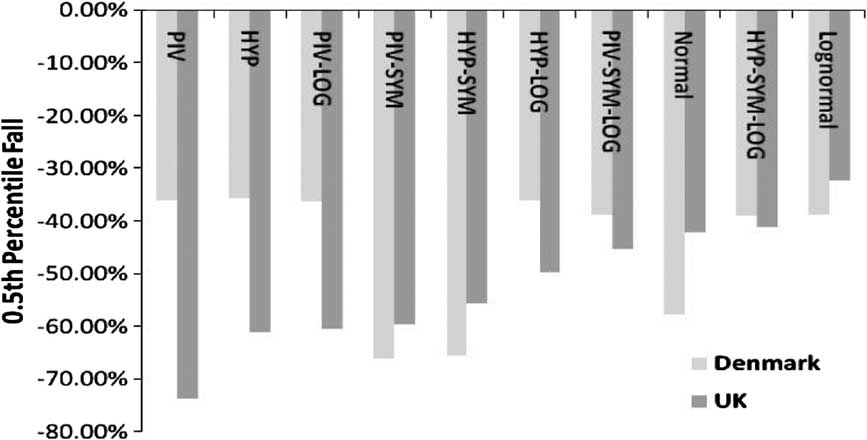

It is perhaps useful to consider the selection of risk factors via a hypothetical example. One can imagine that the number of inputs that affect the balance sheet of a firm that can be modelled stochastically is in excess of a million (this could easily be true assuming you count separately each number in the input mortality tables, have a number of different business units and products, etc.), but that ultimately an internal model is constrained to use as few as 100 risk drivers (Chart 4).

Chart 4 Simple illustration of how the number of risk factors compounds

The processes from reducing the 1,000,000 to 100 could include:

• ignoring some drivers because they are deemed to be deterministic or “insignificant”;

• using some as proxies for others;

• making some risk factors a function of others;

• more quantitative approaches such as principal component analysis (PCA) (Chart 5).

Chart 5 Simple illustration of how risk factors to include in the capital model might be obtained

In addition to the selection methods alluded to above, there are many algorithmic approaches used to identify those variables that minimise the measurement of predictive error subject to constraints of the size of any subset studied. Algorithmic approaches typically carry out a feature selection by including extra variables one at a time when carrying out the process in a forward manner, or exclude variables one at a time when carrying out the process in a backward manner. Of course, one needs to be careful when interpreting p-values associated with adding or removing variables problematic with this approach, as each is conditional on prior inclusion or exclusion of variables. In addition, any R 2 value may be overestimated, if based on the number of degrees of freedom from using the fitted variables, rather than the number of degrees of freedom used up in the entire model fitting process.

3.2. Dimension Reduction

This section focuses on the final step in the diagram overleaf, which considers the reduction in a large number of stochastic variables to a smaller number of important or “principal” factors.

3.2.1. PCA

This is a statistical method that transforms data from a high dimension space into a space of reduced dimension. A subset of new features from the original features in the data is performed by means of functional mapping techniques that aim to keep as many features as possible of the original data set. PCA is one of the most commonly used feature extraction techniques.

PCA uses an orthogonal transformation to convert a set of observations of correlated variables into a set of orthogonal principal components. The number of principal components is less than or equal to the number of original variables. PCA is a heavily researched statistical technique and already has a lot of applications in finance. For detailed examples in the context of interest rate modelling, refer to Lazzari & Wong (Reference Lazzari and Wong2012).

Deciding which factors to retain in the model can be based on the following techniques:

a) The eigenvalue-one criterion: also known as the Kaiser criterion, components are retained that have an eigenvalue greater than or equal to 1, that is, the retained variable is accounting for a greater amount of the variance than that contributed by one variable.

b) Proportion of variance retained: retain a component if it accounts for a threshold percentage, for example, 5% or 10% of the total variance, where the proportion of variance retained is given by: eigenvalue for component of interest/Σ (eigenvalues of correlation matrix).

Non-linear dimension reduction techniques extend these ideas based on non-linear mappings. Again, the principle is the same, maximising the variance in the resulting data set relative to the variance in the original data set.

The single biggest criticism of PCA in finance is that it is a pure statistical technique and ignores any economic or real-world causalities. Thus, it needs to be used and interpreted very carefully to avoid data mining and spurious accuracy. For example, you do not want to inadvertently have one of the important risk factors of your model be the size of Brazilian coffee beans, simply because the time series was included in the set of regression variables and provided an almost perfect fit. Although this is an extreme example that is obviously nonsensical, the lesson is that pure statistical models would be blind to this. More importantly, there may be many other significantly more subtle areas where the models are inadvertently subject to data mining.

Another criticism is that pure PCA factors are simply a linear combination of many variables and would lack intuition, as well as being subject to changes over time that are hard to explain.

The main mitigant of inadvertent data mining is for there to be expert human intervention at key stages of the process, choice of regression inputs through to sense checking and validation of the output results. Some of these are discussed in the next section.

3.2.2. Direct Choice of Principal Factors

This is another technique that is also commonly used alongside the PCA approach. This method directly chooses what are thought to be the principal factors so as to arrive directly at a fitted model, without recourse to any dimension reduction technique being applied. As such, this approach relies hugely on the skill or otherwise of the modeller in picking the appropriate modelling random variables that best satisfy the “eigenvalue-one” criterion and/or the “maximising the proportion of variance retained” criterion discussed above.

Although this approach could be very efficient in the presence of a skilled modeller, it suffers from the same drawbacks as per other aspects of judgement. In addition, the subjective element reduces the transparency of the choice components if not properly documented.

3.2.3. Hybrid Methods

In practice, neither a purely statistical nor a purely judgemental method is used. The method often adopted is a combination of the direct choice of principal factors and statistical techniques. Although this may often be done implicitly, it may be useful to be aware of the judgement and statistical steps explicitly, so as to avoid the worst pitfalls of each method.

Also important is the need to be aware of areas where selection of risk factors can cause potential problems. Some possible pitfalls are:

a) The model may have been subject to too wide a range of explanatory variables, resulting in inadvertent data mining.

b) The most important factors may have been wrongly selected or not selected at all, giving spurious and misleading results.

c) Presence of correlated variables results in a risk of a more intuitive/direct explanatory variable killed for another less intuitive/useful variable.

d) If the factors selected mechanically have not been validated, there is a risk that some inappropriate factors have been inadvertently included.

e) The weighting of the different factors may change over time. Factors that may have had an influence in the past may dissipate over time, whereas the opposite may be true of other factors.

f) Exogenous factors, for example, a change in external regulatory or commercial environment may change the nature of the problem and one needs to remember to revisit the choice of random variables in the selection process (i.e. there may be some “emerging” risk factors).

3.3. Grossing-Up Techniques

Acknowledging that there are practical limitations to the number of risks that can be modelled, some effort needs to be made to “gross up” the capital (i.e. make a capital allowance for the risks that have not been explicitly modelled). Thus, having gone through the selection (and perhaps) reduction of risk factors to come up with a capital model, one should take care not to forget these steps when estimating the total capital.

For example, as a very crude calculation, you might think that reducing 1,000,000 to 100 risk drivers, the resulting SCR should be grossed up by a multiple of 10,000. Most of us would probably say that is too big as there has been some effort to pick the most important of the 1,000,000 within the 100, but it is still likely that some important risks get squeezed out. Moreover, this only considers the risk factors that have been identified but not modelled (and does not delve into the unknown).

In principle, there is a strong relationship between grossing-up methodology and willingness to update models/incorporate new risks. If your initial SCR contains an explicit gross-up factor, then it is reasonable to release some of that factor as modelling becomes more detailed and comprehensive. If there is no grossing up, then every new risk you model causes a higher SCR, and therefore one might struggle to find acceptance within the business.

In addition to the basic grossing-up techniques for risk factors that may have been identified but not modelled described above, the following grossing-up techniques following dimension reduction are suggested below. Where the commonly used PCA approach has been used, it should be possible to apply both grossing-up techniques discussed below.

3.3.1. R 2 Adjustment

Following dimension reduction, a computation of the R 2 value can be compared between the original data set and the data set following dimension reduction. As noted above, the R 2 value in the dimensionally reduced data set will be an overestimate based on number of degrees of freedom used in the fitted variables, rather than the degrees of freedom used in the fitting process. A possible approximate grossing-up factor may be undertaken as follows:

This only allows for the PCA element. A suitable margin for prudence should also be incorporated, to allow for any potential missed features in both the original data set and the dimensionally reduced data set that could affect results significantly in the future.

3.3.2. R Eigenvalue Grossing-up Factor

Compute the eigenvalue correlation matrix for both the components in the dimensionally reduced model and the original model. The grossing-up factor for the model then becomes:

Again a suitable prudence margin should be allowed for risk selection errors in the original model.

3.4. Stress and Scenario Testing

A possible method of estimating the impact of risks not modelled stochastically is to carry out a series of “sensitivity tests” to the model with changes in that deterministic variable. In addition, scenario testing is also useful, both as an addition to the aggregation methods applied and as an explanatory aid to senior management.

It should be noted that scenario and sensitivity testing could quite easily span a number of sections in this document as it is quite a broad method and can be used for a number of purposes. In addition to modelling non-stochastic risks and addressing some aspects of uncertainty on models and parameters, some specific examples of reasons why sensitivity testing is required are listed below.

• Not enough data for calibration of model – model uncertainty. An example of these is in the aggregation of capital owing to lack of data, there may be uncertainty as to the copula to use. For example, use of a Gaussian versus Student T copula. The impact of using an alternative copula can be tested;

• Not enough data for calibration of the parameters of a model – parameter uncertainty. An example of this is assessing the impact using a different loss, given default in deriving the capital for credit risk;

• Changes in conditions – the past may not be a good guide to what may happen in the future such as changes in interest rate regimes;

• Assessing the importance of assumptions made – the assumptions that have the most impact on the results can be determined by changing each assumption by the same proportion;

• Regulatory requirements such as “What if scenarios”, “reverse stress testing”, etc.

• Management information – an example of this is to assess the impact of different volumes of new business or different business mix on the capital strength of a firm in the next 3 years.

• Assessing the impact of different data sources used in the calibration of a model. For a lot of risks, there are alternative data sources. For equities, S&P500 or FTSE 100 or FTSE All Share indices can be used to calibrate the risk. A sensitivity test can be undertaken in which the stresses derived from using different data sources are assessed.

• Assess the impact of prior beliefs. Most actuarial tasks involve a number of prior beliefs. Examples of prior beliefs in actuarial tasks include the distribution that the aggregate capital of a fund or company is assumed to follow. Sensitivity testing allows the impact of these assumptions to be assessed.

A detailed example of scenario and stress testing related to aggregation methodology is provided in the next section.

3.4.1. Stress and Scenario Testing: an Example

In this section, the results of a case study to assess the impact of the use of different copulas models are investigated.

This case study assesses the impact of prior beliefs, especially when there are not sufficient data to be conclusive about the assumption being made. It involves assessing the impact on aggregate capital when different types of copulas are used to aggregate individual capital requirements.

The copulas used in these case studies are the copulas that lend themselves easily to aggregate more than two risks. As such copulas such as Gumbel, Clayton and Frank are not considered.Footnote 4

In the first case, the marginal distributions are all assumed to be normally distributed as shown in Table 2. Another case study is presented later in which different marginal distributions are assumed. We assume that we have the following risks with the distributions as specified in Table 2.

Table 2 Marginal risk factor distribution

The risks are assumed to be correlated as per Table 3.

Table 3 Correlation between risk factors

The aggregate capitals based on the following copulas are assessed:

a. correlation matrix;

b. normal;

c. Student T with different degrees of freedom.

The results of using different copulas are set out in Table 4.

Table 4 Capital required by type of copula

It is worth noting that when the marginals are all elliptically distributed (e.g. normal, Student T) the results of a normal copula and correlation matrix should be very similar, with any difference arising because of an inadequate number of simulations used in the Monte Carlo simulation.

Another sensitivity test was undertaken in which the marginal distributions were not all normally distributed. The distributions used are set out in Table 5. The parameters and the distributions were selected such that the capital of the marginal distributions was the same as that of the normal distributions above.

Table 5 Sensitivity - the effect of some risk factors not being normally distributed

1Note that the mean and standard deviation do not refer to lognormal distribution, but to its logarithm.

The results of this sensitivity are shown in Table 6.

Table 6 Sensitivity - capital required when some risk drivers are not normally distributed

The sensitivity testing as described in the case study above can be used to assess the range of values that the aggregate capital can assume, given the uncertainty about the appropriate dependency approach to use. It is important to note that this covers just one aspect of sensitivity testing, and that this would ideally need to be repeated for all of the key decisions in the capital calculation framework.

3.5. Key Points

The selection of what risk factors to model stochastically is a crucial choice within capital models, and deserves a great deal of attention. We illustrate this by an example where a very large number of potentially stochastic inputs are compressed to merely 100 risk drivers. We discuss the possible range of factor-reduction methods at the final stages of the selection and highlight some potential pitfalls in the process.

We also explain the importance of “grossing up” for all the risk factors that are not modelled stochastically, by considering the link between grossing-up methodology and willingness to update models/incorporate new risks.

Finally, we discuss the idea of scenario and stress testing, together with a worked example on capital aggregation.

4. Model and Parameter Error

4.1. Allowing for Model and Parameter Risk

With the “appropriate” model and “accurate” assumptions, we can justify statements such as “with €100 m of available capital, there is a 99.5% probability of sufficiency one year from now”.

However, given that a model is only intended to be a representation of reality, it is unlikely any model will be ever fully correct, or the assumptions fully accurate. This section considers how such a statement may be modified if a firm has concerns about the correctness of models or accuracy of parameter estimates. There might be several models that adequately explain the data. Even when one model is a better fit than another, we may not be able to reject the worse fitting model as a possible explanation of the data. There is a difference, however, between picking one model as the most credible explanation and picking one model as the only credible explanation. We should not discount the possibility that some initially less plausible model could subsequently turn out to be the most appropriate.

Model error is one of many risks to which financial firms are exposed. We might hope to quantify model error in much the same way as other risks, by examining the potential losses arising from model mis-specification. These might then be incorporated into a risk aggregation process, making appropriate assumptions about the correlation between model risk and other risks.

In this section, we argue that model risk is of an essentially different nature to other modelled risks. To describe interest rate risk, we take as given a model of how interest rates might move, test the model against past data and use this model to explore the likelihood of possible adverse shocks. A probability approach is ideal for such an analysis. The probability framework is less equipped to cope with ambiguity in models. For example, we may struggle to find an empirical basis to express the likelihood of alternative models being correct.

Several different models might account for the historic data, but they might have different implied capital requirements. Percentile-based capital definitions no longer produce a unique number, but rather a scatter of numbers depending on which model is deemed to be correct. If we want the answer to be a single number, then we have to change the question.

We then give concrete examples of model and parameter risk. We consider possible ways of clarifying the 99.5%-ile question in the context of model ambiguity and explore the impact on model output.

It is helpful to consider model ambiguity in three stages:

• Models and parameters are known to be correct. This is the (hypothetical) base case to which we compare other cases.

• Location scale uncertainty (section 4.1.1): past and future observations are samples from a given distribution family, but the model parameters are uncertain. Specifically, we consider situations where candidate distributions are related to each other by shifting or scaling.

• Model and location scale uncertainty (section 4.1.2): both the applicable model and the parameters are uncertain. For example, there may be some dependence between observations and the observations may be drawn from one of a family of fatter-tailed distributions. In each case, limited data are available to test the model or fit the parameters.

4.1.1. Parameter Uncertainty in Location Scale Families

We consider an example where the underlying model is of a known shape, but where the location and scale of the distribution are subject to uncertainty. This is usually the case in practice.

We then consider three possible definitions of a percentile where parameters are uncertain (Table 7).

Table 7 Possible percentile definitions when there is parameter uncertainty

It is not always clear in practice, even for statutory purposes such as computing the solvency capital requirement, which, if any, of these definitions applies.

4.1.2. Comparison of Statistical Definitions to Actuarial Best Estimates

We contrast probability definitions with actuarial concepts of best estimates, as discussed, for example by Jones et al. (Reference Jones, Copeman, Gibson, Line, Lowe, Martin, Matthews and Powell2006):

“best estimates … contain no allowance or margin for prudence or optimism”.

“… they [best estimates] are not deliberately biased upwards or downwards”.

“The estimates given in the report are central estimates in the sense that they represent our best estimate of the liability for outstanding claims, with no deliberate bias towards either over or under-statement”.

The actuarial best estimate definitions refer to a lack of deliberate bias. This suggests that provided the actuary did not intend to introduce bias, a “best estimate” results. If there turns out to be a bias in a statistical sense, this does not disqualify an actuarial best estimate provided the bias is unintentional. This highlights the difference between actuarial best estimates defined in terms of intention and statistically unbiased estimates defined in a mechanical way.

Our statistical definitions refer to probabilities that can in principle be tested in controlled simulation experiments, and indeed we will conduct such experiments in the example that follows. As defined above, any best estimate is a point estimate. However, there is an interval of plausible outcomes around this estimate. There are different statistical definitions for such an interval. The experiment that follows considers two of these intervals (described in section 4.1.1):

• substitution: β-quantile;

• confidence interval with probability γ containing the β-quantile;

• prediction interval containing the unseen observation with probability β.

What the experiment shows is that the properties of a probability distribution do not necessarily determine a unique construction for an interval. There might be (and indeed, there are) several ways to construct intervals satisfying the definitions.

4.1.3. Five Example Distributions

We now show some example calculations of these intervals based on five distribution families. In each case, we define a standard version of a random variable X; the other distributions in the family are the distributions of sX + m where m can take any value and s > 0.

Our five distributions include fatter- and thinner-tailed examples, and include asymmetric distributions (Table 8).

Table 8 The five example distributions and their properties

Our families consistent of cumulative distribution functions

![]() $$$F\left( {\frac{{x\,{\rm{ - }}\,m}}{s}} \right)$$$

and density

$$$F\left( {\frac{{x\,{\rm{ - }}\,m}}{s}} \right)$$$

and density

![]() $$$\frac{1}{s}f\left( {\frac{{x\,{\rm{ - }}\,m}}{s}} \right)$$$

, where F and f are taken from a (known) row of Table 8.

$$$\frac{1}{s}f\left( {\frac{{x\,{\rm{ - }}\,m}}{s}} \right)$$$

, where F and f are taken from a (known) row of Table 8.

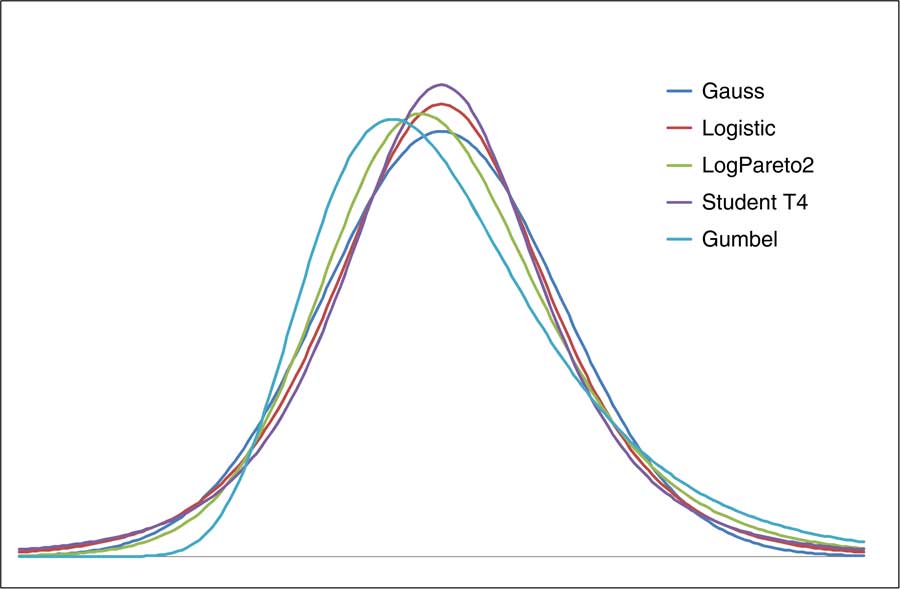

Chart 6 shows these probability density functions. We have chosen values of m and s for each family to make the distributions as close as possible to each other.

Chart 6 Probability density functions of the five example distributions

4.1.4. Methods of Estimating Parameters

We consider four ways of estimating model parameters given a random sample of historic data (Table 9).

Table 9 Four methods of estimating model parameters

4.1.5. Interval Calculation

We now provide algorithms for calculating the different types of intervals (Table 10).

Table 10 Algorithm for calculating the different types of interval

When talking about probabilities, care needs to be taken with respect of the set over which averages are calculated. The frequentist methods (method of moments, probability weighted moments, maximum likelihood) measure probabilities over alternative data sets. The Bayesian statements average over possible alternative parameter sets but not over any data sets, besides the one that actually emerged. A 99.5% interval under a frequentist approach is not the same as a 99.5% Bayesian interval.

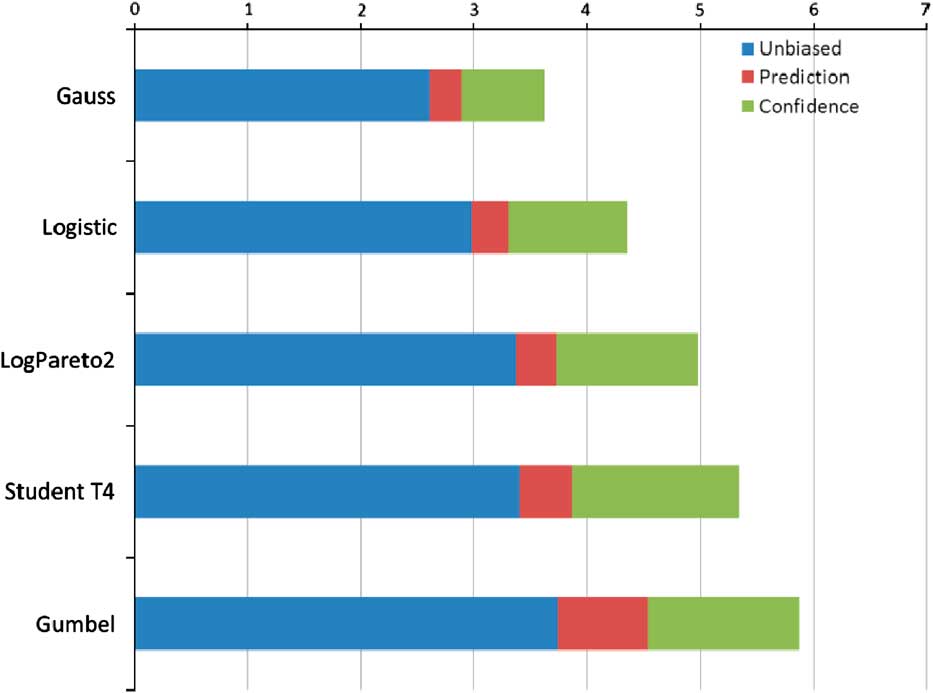

We use Monte Carlo methods to calculate confidence and prediction intervals. We show these in the case of 20 data points, using the method of moments, based on the 99.5%-ile. These measures differ only because the data are limited; as the data increase these are all consistent estimators of the “true” percentile. None of these is *the* right answer; the different numbers simply answer different questions (Chart 7).

Chart 7 Illustration of various interval definitions for the five example distributions

The interval size (expressed as a number of sample standard deviations) varies from one distribution to another. Note that, although the T4 distribution has the fattest tails in an asymptotic sense (it has a power law tail, whereas the others are exponential), the Gumbel produces the largest confidence intervals. This is because the asymptotic point at which the T4 distribution becomes fatter lies way beyond the 99.5%-ile in which we are interested.

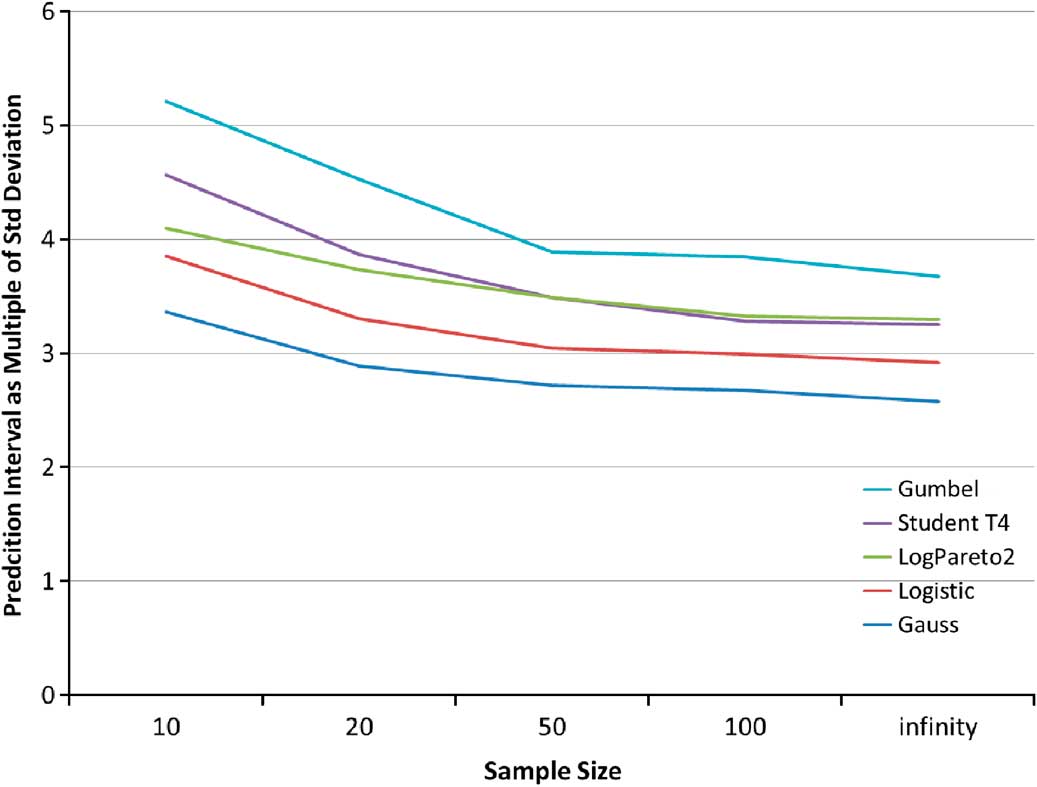

We also consider how the intervals vary by the number of observations. The prediction intervals are shown below. We can see that the prediction interval requires a smaller number of standard deviations as the sample size increases, because a better supply of data reduces the errors in parameter estimates (Chart 8).

Chart 8 Prediction Interval variation by sample size for the five example distribution

4.2. More on Model Risk

We have described ways of calculating 99.5%-iles in the presence of parameter risk, at least in the case of location scale probability distribution families.

Uncertainty about shape parameters or about underlying models is more difficult to address. The difficulty is constructing an interval that covers 99.5% of future outcomes, uniformly across a broad family of models. In general, the best we can hope is an inequality, so that at least 99.5% is covered.

We set out two examples of the impact of using different models to estimate variables of interests. These show that the choice of the models has a very material impact of the estimate of the variable of interest. We then set out some techniques that can be used to assess the impact of model errors. The techniques described later are by no means an exhaustive list, but a list of the techniques commonly used in practice.

4.2.1. Example of Model Risk

A recent paper by Richards, Currie and Ritchie compares seven different models of longevity. They compare the value of a life annuity with a 70-year-old man, limited to 35 years and discounted at 3%. The paper constructs a probability distribution forecast for this annuity in 1 year's time using different models of mortality improvements. We reproduce table 5 from that paper below (Table 11).

Table 11 Comparison of average annuity value and percentile estimate by different longevity models

.

Please refer to the paper by Richards et al. (2014) for more details about the mortality models. We might hope for a degree of consensus between the different modelling approaches, but these figures show the opposite. Indeed, a future annuity value of 12.5 lies below the mean for two of the models, but above the 99.5%-ile for two other models (http://www.actuaries.org.uk/sites/all/files/documents/pdf/value-risk-framework-longevity-risk-printversionupdated20121109.pdf).

4.2.2. Another Example: Gauss and Gumbel Distributions

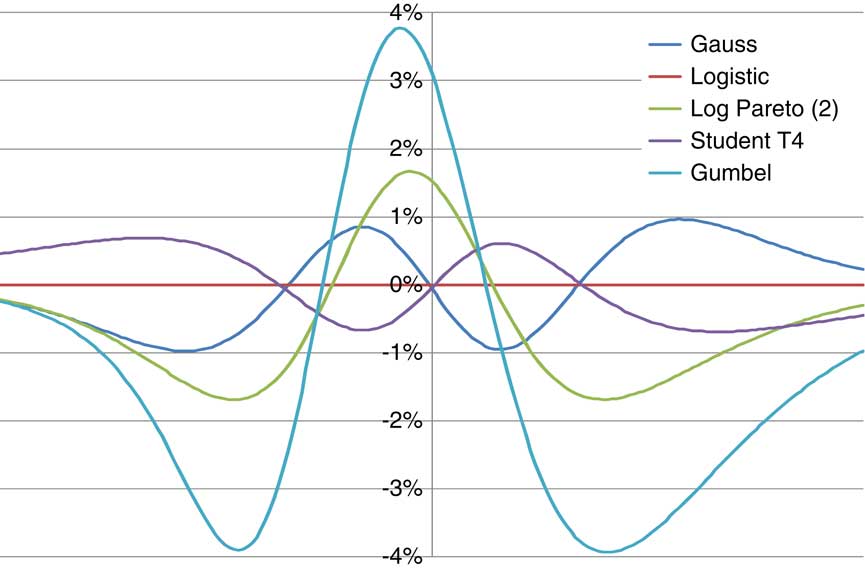

Let us return to the set of five probability distributions described in section 4.1.3. The distribution functions are sufficiently close that the curves are difficult to distinguish by eye. However, we can separate them by subtracting one of the cumulative distribution functions, for example, the logistic. Chart 9 shows the CDF for each distribution minus the logistic CDF.

Chart 9 Illustrating the difference in the CDF of the five example distributions, measured relative to the logistic

Although these distributions have different shapes, the distribution functions differ nowhere by more than 4%. This suggests that it will be difficult to separate the distributions using statistical tests based on small sample sizes.

Goodness-of-fit tests such as Kolmogorov–Smirnov and Anderson–Darling have low power when data are scarce. Table 12 shows the power of KS and AD tests with 20 data points. The chance of rejecting an incorrect model is often only marginally better than the chance of rejecting the correct model, and in a few cases the correct model is more likely rejected than an incorrect one.

Table 12 Probability of Rejecting a Model Fitted Using the Method of Moments Tested Using a Kolmogorov–Smirnov (or Anderson–Darling) Statistic Based on 95% Confidence and 20 Observations

Even with samples of 200 or more, it is common not to reject any of our five models. This implies that model risk remains relevant for applications including scenario generators, longevity forecasts or estimates of reserve variability in general insurance.

4.3. Techniques to Assess Model Errors

The examples described above shows that there are a range of models that can be used to undertake a task. This motivates a quest to understand any error introduced by model choice.

Model risk remains relevant for virtually all aspects of actuarial modelling, from longevity forecasting to capital aggregation. Are we then at risk of channelling too much energy and expense into the “holy grail” route of modelling, justifying each parameter and component of a single model? Does our governance process consider model risk, or does board approval for one model implicitly entail rejection for all others?

Given the inevitable uncertainty in which model is correct, how can we make any progress at all? There are several possible ways to proceed:

• Pick a standard distribution, for example, the Gaussian distribution, on the basis that it is not rejected. The statistical mistake here is to confuse “not rejected” with “accepted”.

• Taking the prudent approach – the highest 99.5%-ile from all the models.

• Build a “hyper-model”, which simulates data from a mixture of the available models, although expert judgement is still needed to assess prior weights. Ian Cook (Reference Cook2011) has described this approach in more details on the context of catastrophe models.

Industry collaboration on validation standards may lead to generally accepted practices. For example, over a period of time practice may converge on a requirement to demonstrate at least 99.5% confidence if the data happen to come from a Gaussian or logistic distribution, but not for Student T or Gumbel distributions. This may not always be a good thing, particularly if it leads to a false sense of security.

A commonly used mitigant against model and parameter error already in use within the industry is to carry out scenario- and stress-testing approaches. These were described in section 3.4. A more statistical technique that may prove useful is the concept of robust statistics and ambiguity sets described below.

4.3.1. Robust Statistics and Ambiguity Sets

Robust statistics is the study of techniques that can be justified across a range of possible models rather than a single model. It can be used to help derive prediction intervals that are robust to the choice of distribution.

Suppose using the method of moments we wanted to construct a prediction interval of the form

![]() $$$\left( {{\rm{ - }}\infty, \hat{\mu }\, + \,y\hat{\sigma }} \right)$$$

valid across a class of distributions (this set is known as the ambiguity set). We cannot achieve exact α-coverage for all distributions. We can, however, achieve at least α-coverage for a class of distributions simply by taking the largest y across that class. For example, we might specify that the methodology should cover at least a proportion α of observations X n + 1 for an ambiguity class, including Gaussian and logistic distributions. In this case, our chart in section 4.1.4 shows that the logistic distribution produces the highest y, and therefore the prediction interval is defined based on the logistic distribution, knowing this is conservative for other distributions in the specification of the ambiguity class.

$$$\left( {{\rm{ - }}\infty, \hat{\mu }\, + \,y\hat{\sigma }} \right)$$$

valid across a class of distributions (this set is known as the ambiguity set). We cannot achieve exact α-coverage for all distributions. We can, however, achieve at least α-coverage for a class of distributions simply by taking the largest y across that class. For example, we might specify that the methodology should cover at least a proportion α of observations X n + 1 for an ambiguity class, including Gaussian and logistic distributions. In this case, our chart in section 4.1.4 shows that the logistic distribution produces the highest y, and therefore the prediction interval is defined based on the logistic distribution, knowing this is conservative for other distributions in the specification of the ambiguity class.

Robustness for a given ambiguity class says nothing about models outside the class. We might construct prediction intervals robust across uniform, normal and logistic distributions, but these intervals would not be valid for Gumbel distributions. They would also not be valid if other assumptions are violated – if the observations are not independent or are drawn from more than one distribution.

The size of the ambiguity set is a key determinant of prediction interval size. A bigger ambiguity set means a harsher test of coverage and larger prediction intervals. It also means more wastage, in the sense that if the true distribution is one of the more benign from the ambiguity set, then the prediction interval will contain a higher than intended proportion of future observations.