1. Introduction

Cognitive modeling plays an increasingly important role in our endeavor to understand the human mind. Building models of people's cognitive strategies and representations is useful for at least three reasons. First, testing our understanding of psychological phenomena by recreating them in computer simulations forces precision and helps to identify gaps in explanations. Second, computational modeling permits the transfer of insights about human intelligence to the creation of artificial intelligence (AI) and vice versa. Third, cognitive modeling of empirical phenomena is a way to infer the underlying psychological mechanisms, which is critical to predicting human behavior in novel situations.

Unfortunately, inferring cognitive mechanisms and representations from limited experimental data is an ill-posed problem, because any behavior could be generated by infinitely many candidate mechanisms (Anderson 1978). Thus, cognitive scientists must have strong inductive biases to infer cognitive mechanisms from limited data. Theoretical frameworks, such as evolutionary psychology (Buss 1995), embodied cognition (Wilson 2002), production systems (e.g., Anderson 1996), dynamical systems theory (Beer 2000), connectionism (Rumelhart & McClelland 1987), Bayesian models of cognition (Griffiths et al. 2010), ecological rationality (Todd & Gigerenzer 2012), and the free-energy principle (Friston 2010), to name just a few, provide researchers guidance in the search for plausible hypotheses. Here, we focus on a particular subset of theoretical frameworks that emphasize developing computational models of cognition: cognitive architectures (Langley et al. 2009), connectionism (Rumelhart & McClelland 1987), computational neuroscience (Dayan & Abbott 2001), and rational analysis (Anderson 1990). These frameworks provide complementary functional or architectural constraints on modeling human cognition. Cognitive architectures, such as ACT-R (Anderson et al. 2004), connectionism, and computational neuroscience constrain the modeler's hypothesis space based on previous findings about the nature, capacities, and limits of the mind's cognitive architecture. These frameworks scaffold explanations of psychological phenomena with assumptions about what the mind can and cannot do. But the space of cognitively feasible mechanisms is so vast that most phenomena can be explained in many different ways, even within the confines of a cognitive architecture.

As psychologists, we are trying to understand a system far more intelligent than anything we have ever created ourselves; it is possible that the ingenious design and sophistication of the mind's cognitive mechanisms are beyond our creative imagination. To address this challenge, rational models of cognition draw inspiration from the best examples of intelligent systems in computer science and statistics. Perhaps the most influential framework for developing rational models of cognition is rational analysis (Anderson 1990). In contrast to traditional cognitive psychology, rational analysis capitalizes on the functional constraints imposed by goals and the structures of the environment rather than the structural constraints imposed by cognitive architectures. Its inductive bias toward rational explanations is often rooted in the assumption that evolution and learning have optimally adapted the human mind to the structure of its environment (Anderson 1990). This assumption is supported by empirical findings that under naturalistic conditions people achieve near-optimal performance in perception (Knill & Pouget 2004; Knill & Richards 1996; Körding & Wolpert 2004), statistical learning (Fiser et al. 2010), and motor control (Todorov 2004; Wolpert & Ghahramani 2000), as well as inductive learning and reasoning (Griffiths & Tenenbaum 2006; 2009). Valid rational modeling provides solid theoretical justifications and enables researchers to translate assumptions about people's goals and the structure of the environment into substantive, detailed, and often surprisingly accurate predictions about human behavior under a wide range of circumstances.

That said, the inductive bias of rational theories can be insufficient to identify the correct explanation and sometimes points modelers in the wrong direction. Canonical rational theories of human behavior have several fundamental problems. First, human judgment and decision-making systematically violate the axioms of rational modeling frameworks such as expected utility theory (Kahneman & Tversky 1979), logic (Wason 1968), and probability theory (Tversky & Kahneman 1973; 1974). Furthermore, standard rational models define optimal behavior without specifying the underlying cognitive and neural mechanisms that psychologists and neuroscientists seek to understand. Rational models of cognition are expressed at what Marr (1982) termed the “computational level,” identifying the abstract computational problems that human minds must solve and their ideal solutions. In contrast, psychological theories have traditionally been expressed at Marr's “algorithmic level,” focusing on representations and the algorithms by which they are transformed.

This suggests that relying on either cognitive architectures or rationality alone might be insufficient to uncover the cognitive mechanisms that give rise to human intelligence. The strengths and weaknesses of these two approaches are complementary: each offers exactly what the other is missing. The inductive constraints of modeling human cognition in terms of cognitive architectures were, at least to some extent, built from the ground up by studying and measuring the mind's elementary operations. In contrast, the inductive constraints of rational modeling are derived from top-down considerations of the requirements of intelligent action. We believe that the architectural constraints of bottom-up approaches to cognitive modeling should be integrated with the functional constraints of rational analysis.

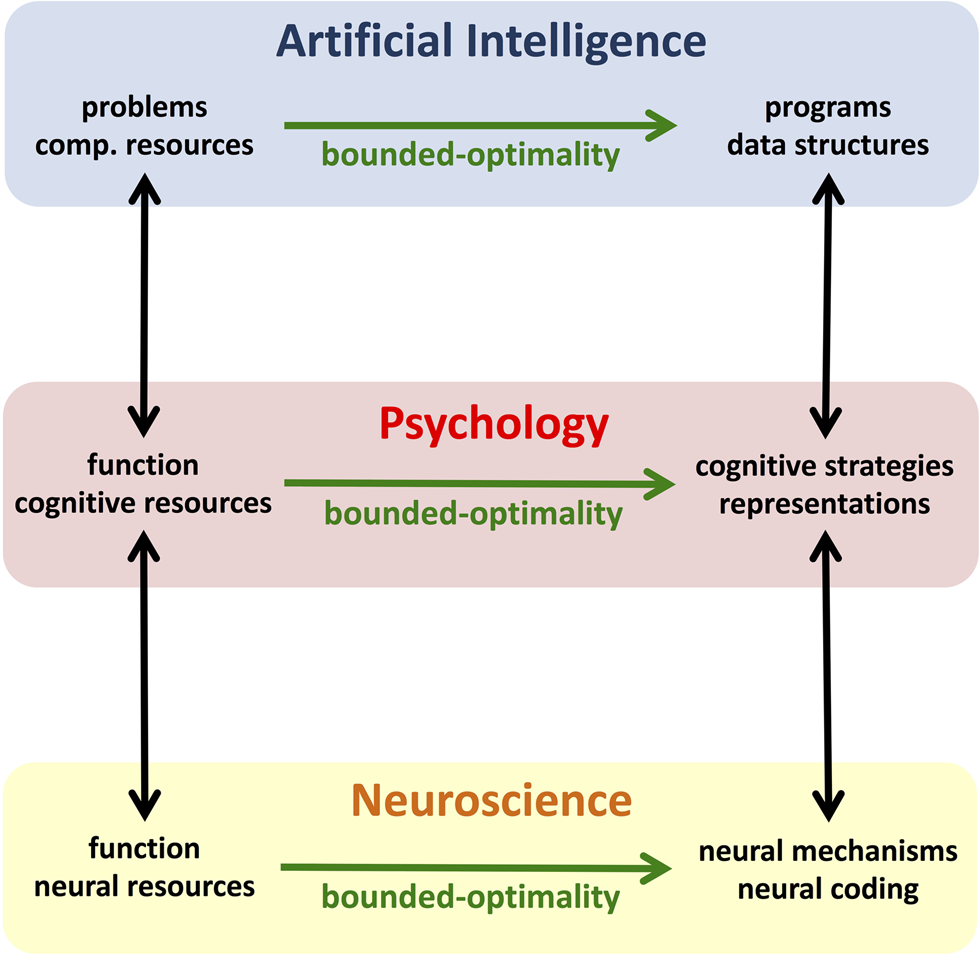

The integration of (bottom-up) cognitive constraints and (top-down) rational principles is an approach that is starting to be used across several disciplines, and initial results suggest that combining the strengths of these approaches results in more powerful models that can account for a wider range of cognitive phenomena. Economists have developed mathematical models of bounded-rational decision-making to accommodate people's violations of classic notions of rationality (e.g., Dickhaut et al. 2009; Gabaix et al. 2006; Simon 1956; C. A. Sims 2003). Neuroscientists are learning how the brain represents the world as a trade-off between accuracy and metabolic cost (e.g., Levy & Baxter 2002; Niven & Laughlin 2008; Sterling & Laughlin 2015). Linguists are explaining language as a system for efficient communication (e.g., Hawkins 2004; Kemp & Regier 2012; Regier et al. 2007; Zaslavsky et al. 2018; Zipf 1949), and more recently, psychologists have also begun to incorporate cognitive constraints into rational models (e.g., Griffiths et al. 2015).

In this article, we identify the rational use of limited resources as a common theme connecting these developments and providing a unifying framework for explaining the corresponding phenomena. We review recent multidisciplinary progress in integrating rational models with cognitive constraints and outline future directions and opportunities. We start by reviewing the historical role of classic notions of rationality in explaining human behavior and some cognitive biases that have challenged this role. We present our integrative modeling paradigm, resource rationality, as a solution to the problems faced by previous approaches, illustrating how its central idea can reconcile rational principles with numerous cognitive biases. We then outline how future work might leverage resource-rational analysis to answer classic questions of cognitive psychology, revisit the debate about human rationality, and build bridges from cognitive modeling to computational neuroscience and AI.

2. A brief history of rationality

Notions of rationality have a long history and have been influential across multiple scientific disciplines, including philosophy (Harman 2013; Mill 1882), economics (Friedman & Savage 1948; 1952), psychology (Braine 1978; Chater et al. 2006; Griffiths et al. 2010; Newell et al. 1958; Oaksford & Chater 2007), neuroscience (Knill & Pouget 2004), sociology (Hedström & Stern 2008), linguistics (Frank & Goodman 2012), and political science (Lohmann 2008). Most rational models of the human mind are premised on the classic notion of rationality (Sosis & Bishop 2014), according to which people act to maximize their expected utility, reason based on the laws of logic, and handle uncertainty according to probability theory. For instance, rational actor models (Friedman & Savage 1948; 1952) predict that decision-makers select the action $a^{\star}$ that maximizes their expected utility (Von Neumann & Morgenstern 1944), that is

$$a^{\star} = \arg\max_{a} \int u(o) \cdot p(o \mid a) \, do, \tag{1}$$

where the utility function $u$ measures how good the outcome $o$ is from the decision-maker's perspective and $p(o \mid a)$ is the conditional probability of its occurrence if action $a$ is taken.
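To make the expected-utility rule concrete, the following minimal Python sketch evaluates a discrete analogue of the integral above, in which the integral over outcomes becomes a sum. The actions, outcomes, utilities, and probabilities are hypothetical numbers chosen purely for illustration; they are not taken from any study cited here.

```python
from typing import Dict

# Hypothetical toy problem: the utilities u(o) and the conditional
# probabilities p(o | a) below are invented for illustration only.
UTILITY: Dict[str, float] = {"win": 10.0, "draw": 2.0, "lose": -5.0}
OUTCOME_PROBS: Dict[str, Dict[str, float]] = {
    "risky": {"win": 0.5, "draw": 0.0, "lose": 0.5},
    "safe": {"win": 0.1, "draw": 0.8, "lose": 0.1},
}


def expected_utility(action: str) -> float:
    """Discrete analogue of the integral: sum over outcomes of u(o) * p(o | a)."""
    return sum(UTILITY[o] * p for o, p in OUTCOME_PROBS[action].items())


def best_action() -> str:
    """Return the action a* with the highest expected utility."""
    return max(OUTCOME_PROBS, key=expected_utility)


if __name__ == "__main__":
    for action in OUTCOME_PROBS:
        print(action, expected_utility(action))
    print("a* =", best_action())
```

Note that the rule requires the decision-maker to know every outcome, its utility, and its probability under each action, which is the kind of demand that the notions of bounded rationality discussed below call into question.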

Psychologists soon began to interpret the classic notions of rationality as hypotheses about human thinking and decision-making (e.g., Edwards 1954; Newell et al. 1958), and other disciplines also adopted rational principles to predict human behavior. The foundation of these models was shaken when a series of experiments suggested that people's judgment and decision-making systematically violate the laws of logic (Wason 1968), probability theory (Tversky & Kahneman 1974), and expected utility theory (Kahneman & Tversky 1979). These systematic deviations are known as cognitive biases. The well-known anchoring bias (Tversky & Kahneman 1974), base-rate neglect and the conjunction fallacy (Kahneman & Tversky 1972), people's tendency to systematically overestimate the frequency of extreme events (Lichtenstein et al. 1978), and overconfidence (Moore & Healy 2008) are just a few examples of the dozens of biases that have been reported over the last four decades (Gilovich et al. 2002). In many cases the interpretation of these empirical phenomena as irrational errors has been challenged by subsequent analyses (e.g., Dawes & Mulford 1996; Fawcett et al. 2014; Gigerenzer 2015; Gigerenzer et al. 2012; Hahn & Warren 2009; Hertwig et al. 2005). But as reviewed below, cognitive limitations also appear to play a role in at least some of the reported biases. While some of these biases can be described by models such as prospect theory (Kahneman & Tversky 1979; Tversky & Kahneman 1992), such descriptions do not reveal the underlying causes and mechanisms. According to Tversky and Kahneman (1974), cognitive biases result from people's use of fast but fallible cognitive strategies known as heuristics. Unfortunately, the number of heuristics that have been proposed is so high that it is often difficult to predict which heuristic people will use in a novel situation and what the results will be.

The undoing of expected utility theory, logic, and probability theory as principles of human reasoning and decision-making has not only challenged the idealized concept of “man as rational animal” but also taken away mathematically precise, overarching theoretical principles for modeling human behavior and cognition. These principles have been replaced by different concepts of “bounded rationality” according to which cognitive constraints limit people's performance so that classical notions of rationality become unattainable (Simon 1955; Tversky & Kahneman 1974). While research in the tradition of Simon (1955) has developed notions of rationality that take people's limited cognitive resources into account (e.g., Gigerenzer & Selten 2002), research in the tradition of Tversky and Kahneman (1974) has sought to characterize bounded rationality in terms of cognitive biases. In the latter line of work and its applications, the explanatory principle of bounded rationality has often been used rather loosely, that is, without precisely specifying the underlying cognitive limitations and exactly how they constrain cognitive performance (Gilovich et al. 2002). As illustrated in Figure 1, infinitely many cognitive mechanisms are consistent with this rather vague use of the term “bounded rationality.” This raises questions about which of those mechanisms people use, which of them they should use, and how these two sets of mechanisms are related to each other. Answering these questions requires a more precise theory of bounded rationality.

Figure 1. Resource rationality and its relationship to optimality and Tversky and Kahneman's concept of bounded rationality. The horizontal dimension corresponds to alternative cognitive mechanisms that achieve the same level of performance. Each dot represents a possible mind. The gray dots are minds with bounded cognitive resources and the blue dots are minds with unlimited computational resources. The thick black line symbolizes the bounds entailed by people's limited cognitive resources. Resource limitations reflect anatomical, physiological, and metabolic constraints on neural information processing (discussed below) as well as time constraints, but they can be modelled at a higher level of abstraction (e.g., in terms of processing speed or multi-tasking capacity). For the purpose of deriving a resource-rational mechanism, these constraints are assumed to be fixed. (Some cognitive constraints may change as a consequence of brain development, exhaustion, and many other factors. Sufficiently large changes may warrant redoing the resource-rational analysis.)

Simon (1955; 1956) famously argued that rational decision strategies must be adapted to both the structure of the environment and the mind's cognitive limitations. He suggested that the pressure for adaptation makes it rational to use a heuristic that selects the first option that is good enough instead of trying to find the ideal option: satisficing. Simon's ideas inspired the theory of ecological rationality, which maintains that people make adaptive use of simple heuristics that exploit the structure of natural environments (Gigerenzer & Goldstein 1996; Gigerenzer & Selten 2002; Hertwig & Hoffrage 2013; Todd & Brighton 2016; Todd & Gigerenzer 2012). A number of candidate heuristics have been identified over the years (Gigerenzer & Gaissmaier 2011; Gigerenzer & Goldstein 1996; Gigerenzer et al. 1999; Hertwig & Hoffrage 2013; Todd & Gigerenzer 2012) that typically use only a small subset of available information and perform much less computation than would be required to compute expected utilities (Gigerenzer & Gaissmaier 2011; Gigerenzer & Goldstein 1996).
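The contrast between satisficing and exhaustive maximization can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not Simon's formal model: the function names `satisfice` and `maximize`, the fixed aspiration level, and the example values are all hypothetical. Each function returns the chosen option together with the number of options it had to evaluate, which serves here as a crude proxy for computational effort.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

T = TypeVar("T")


def satisfice(
    options: Iterable[T], value: Callable[[T], float], aspiration: float
) -> Tuple[Optional[T], int]:
    """Pick the first option that is good enough.

    Returns (chosen option or None, number of options evaluated).
    The fixed aspiration level is an assumption made for this sketch.
    """
    evaluated = 0
    for option in options:
        evaluated += 1
        if value(option) >= aspiration:
            return option, evaluated
    return None, evaluated


def maximize(options: Iterable[T], value: Callable[[T], float]) -> Tuple[T, int]:
    """Evaluate every option and return (best option, number evaluated)."""
    option_list = list(options)
    return max(option_list, key=value), len(option_list)


if __name__ == "__main__":
    offers = [3, 7, 5, 9, 8]  # hypothetical option values
    print(satisfice(offers, lambda x: x, aspiration=6))
    print(maximize(offers, lambda x: x))
```

In this toy case the satisficer stops after two evaluations with an acceptable but suboptimal option, whereas the maximizer finds the best option only after evaluating all five.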

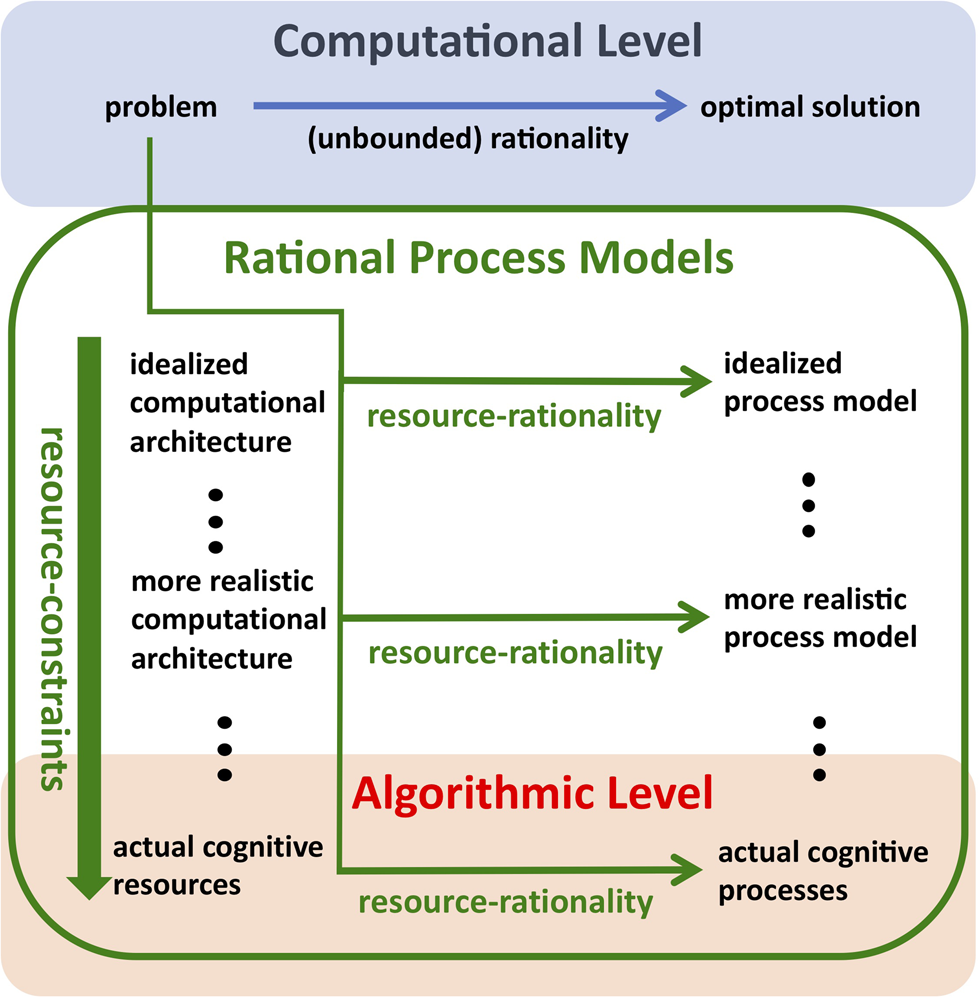

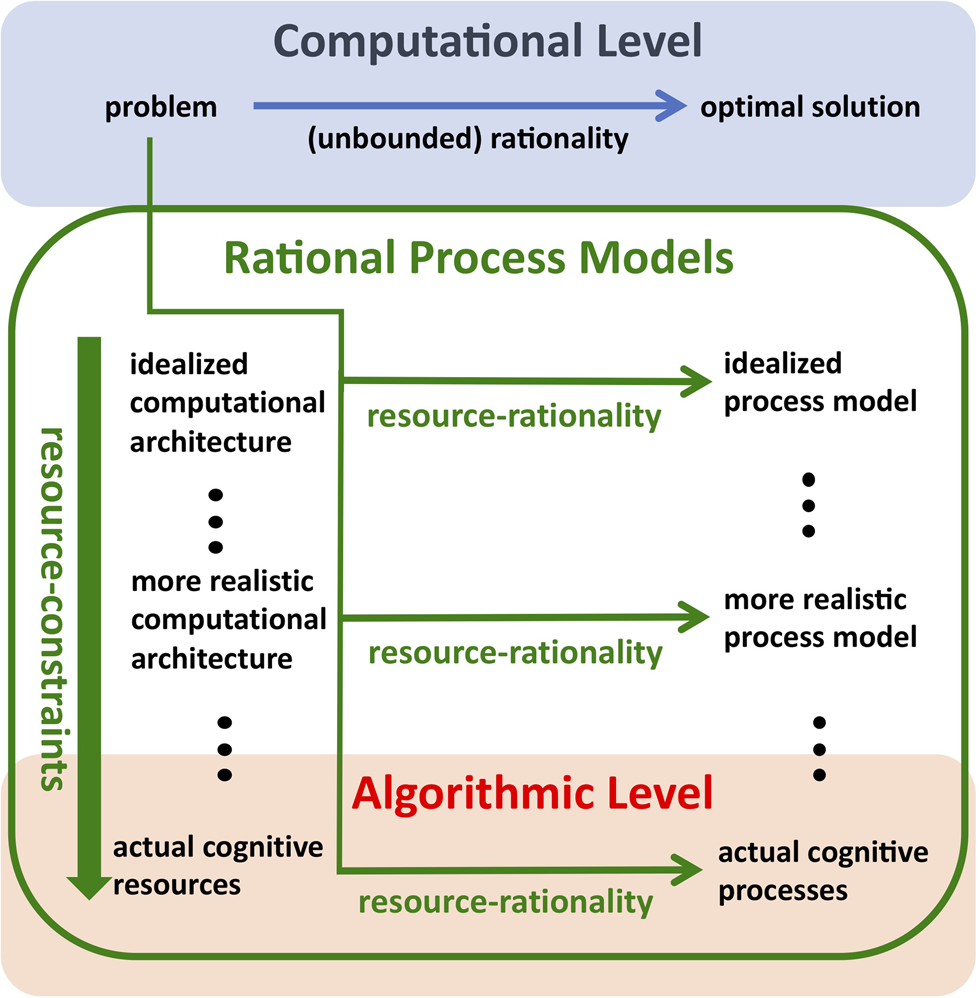

In parallel work, Anderson (1990) developed the idea of understanding human cognition as a rational adaptation to environmental structure and goals pursued within it, creating a cognitive modeling paradigm known as rational analysis (Chater & Oaksford 1999) that derives models of human behavior from structural environmental assumptions according to the six steps summarized in Box 1.

Box 1. The six steps of rational analysis.

1. Precisely specify what are the goals of the cognitive system.

2. Develop a formal model of the environment to which the system is adapted.

3. Make the minimal assumptions about computational limitations.

4. Derive the optimal behavioral function given items 1 through 3.

5. Examine the empirical literature to see if the predictions of the behavioral function are confirmed.

6. If the predictions are off, then iterate.

Figure 2. Rational process models can be used to connect the computational level of analysis to the algorithmic level of analysis. The principle of resource rationality allows us to derive rational process models from assumptions about a system's function and its cognitive constraints.

Rational models developed in this way have provided surprisingly good explanations of cognitive biases by identifying how the environment that people's strategies are adapted to differs from the tasks participants are given in the laboratory and how people's goals often differ from what the experimenter intended them to be; examples include the confirmation bias (Austerweil & Griffiths 2011; Oaksford & Chater 1994), people's apparent misconceptions of randomness (Griffiths & Tenenbaum 2001; Tenenbaum & Griffiths 2001), the gambler's fallacy (Hahn & Warren 2009), and several common logical fallacies in argument construction (Hahn & Oaksford 2007). The theoretical frameworks of ecological rationality and rational analysis are founded on the assumption that evolution has adapted the human mind to the structure of our evolutionary environment (Buss 1995).

Paralleling rational analysis, some evolutionary ecologists seek to explain animals’ behavior and cognition as an optimal adaptation to their environments (Houston & McNamara 1999; McNamara & Weissing 2010). This approach predicts the outcome of evolution from optimality principles, but research on how animals forage for food has identified several cognitive biases in their decisions (e.g., Bateson et al. 2002; Latty & Beekman 2010; Shafir et al. 2002). Subsequent work has sought to reconcile these biases with evolutionary fitness maximization by incorporating constraints on animals’ information processing capacity and by moving from optimal behavior to optimal decision mechanisms that work well across multiple environments (Dukas 2004; Johnstone et al. 2002).

Research on human cognition faces similar challenges. While it is a central tenet of rational analysis to assume only minimal computational limitations (step 3), the computational constraints imposed by people's limited resources are often substantial (Newell & Simon 1972; Simon 1982), and computing exact solutions to the problems people purportedly solve is often computationally intractable (Van Rooij 2008). For this reason, rational analysis cannot account for cognitive biases resulting from limited resources. A complete theory of bounded rationality must go further in accounting for people's cognitive constraints and limited time.

Fortunately, AI researchers have already developed a theory of rationality that accounts for limited computational resources (Horvitz 1987; Horvitz et al. 1989; Horvitz 1990; Russell 1997; Russell & Subramanian 1995). Bounded optimality is a theory for designing optimal programs for agents with performance-limited hardware that must interact with their environments in real time. A program is bounded-optimal for a given architecture if it enables that architecture to perform as well as or better than any other program the architecture could execute instead. This standard is attainable by its very definition. Recently, this idea that bounded rationality can be defined as the solution to a constrained optimization problem has been applied to a particular class of resource-bounded agents: people (Griffiths et al. 2015; Lewis et al. 2014). This leads to a precise theory that uniquely identifies how people should think and decide to make optimal use of their finite time and bounded cognitive resources (see Fig. 1). In the next section, we synthesize and refine these approaches into a paradigm for modeling cognitive mechanisms and representations that we refer to as resource-rational analysis.

3. Resource-rational analysis

While bounded optimality was originally developed as a theoretical foundation for designing intelligent agents, it has been successfully adopted for cognitive modeling (Gershman et al. 2015; Griffiths et al. 2015; Lewis et al. 2014). When combined with reasonable assumptions about human cognitive capacities and limitations, bounded optimality provides a realistic normative standard for cognitive strategies and representations (Griffiths et al. 2015), thereby allowing psychologists to derive realistic models of cognitive mechanisms based on the assumption that the human mind makes rational use of its limited cognitive resources. Variations of this principle are known by various names, including computational rationality (Lewis et al. 2014), algorithmic rationality (Halpern & Pass 2015), bounded rational agents (Vul et al. 2014), boundedly rational analysis (Icard 2014), the rational minimalist program (Nobandegani 2017), and the idea of rational models with limited processing capacity developed in economics (Caplin & Dean 2015; Fudenberg et al. 2018; Gabaix et al. 2006; C. A. Sims 2003; Woodford 2014) reviewed below. Here, we will refer to this principle as resource rationality (Griffiths et al. 2015; Lieder et al. 2012) and advocate its use in a cognitive modeling paradigm called resource-rational analysis (Griffiths et al. 2015).

Figure 1 illustrates that resource rationality identifies the best biologically feasible mind out of the infinite set of bounded-rational minds. To make the notion of resource rationality precise, we apply the principle of bounded optimality to define a resource-rational mind $m^{\star}$ for the brain $B$ interacting with the environment $E$ as

$$m^{\star} = \arg\max_{m \in M_B} \mathbb{E}_{P(T,\, l_T \mid E,\, A_t = m(l_t))}\big[ u(l_T) \big], \tag{2}$$

where $M_B$ is the set of biologically feasible minds, $T$ is the agent's (unknown) lifetime, its life history $l_t = (S_0, S_1, \cdots, S_t)$ is the sequence of world states the agent has experienced until time $t$, $A_t = m(l_t)$ is the action that the mind $m$ will choose based on that experience, and the agent's utility function $u$ assigns values to life histories.

Our theory assumes that the cognitive limitations inherent in the biologically feasible minds $M_B$ include a limited set of elementary operations (e.g., counting and memory recall are available but applying Bayes’ theorem is not), limited processing speed (each operation takes a certain amount of time), and potentially other constraints, such as limited working memory. Critically, the world state $S_t$ is constantly changing as the mind $m$ deliberates. Thus, performing well requires the bounded optimal mind $m^{\star}$ to not only generate good decisions, but to do so quickly. Since each cognitive operation takes time, bounded optimality often requires computational frugality.

Identifying the resource-rational mind defined by Equation 2 would require optimizing over an entire lifetime, but if we assume that life can be partitioned into a sequence of episodes, we can use this definition to derive the optimal heuristic $h^{\star}$ that a person should use to make a single decision or inference in a particular situation. To achieve this, we decompose the value of having applied a heuristic into the utility of the judgment, decision, or belief update that results from it (i.e., $u(\mathrm{result})$) and the computational cost of its execution. The latter is critical because the time and cognitive resources expended on any decision or inference (current episode) take away from a person's budget for later ones (future episodes). To capture this, let the random variable $\mathrm{cost}(t_h, \rho, \lambda)$ denote the total opportunity cost of investing the cognitive resources $\rho$ used or blocked by the heuristic $h$ for the duration $t_h$ of its execution, when the agent's cognitive opportunity cost per quantum of cognitive resources and unit time is $\lambda$. The resource-rational heuristic $h^{\star}$ for a brain $B$ to use in the belief state $b_0$ is then

$$h^{\star}(s_0, B, E) = \arg\max_{h \in H_B} \; \mathbb{E}_{P(\mathrm{result} \mid s_0, h, E, B)}\big[ u(\mathrm{result}) \big] - \mathbb{E}_{t_h, \rho, \lambda \mid h, s_0, B, E}\big[ \mathrm{cost}(t_h, \rho, \lambda) \big], \tag{3}$$

where $H_B$ is the set of heuristics that brain $B$ can execute and $s_0 = (o, b_0)$ comprises observed information about the initial state of the external world ($o$) and the person's initial belief state $b_0$. As described below, this formulation makes it possible to develop automatic methods for deriving simple heuristics, like the ones people use, from first principles.
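As a purely illustrative sketch of the trade-off expressed in Equation 3 (not a model from this article), the Python code below selects among a small hypothetical family of heuristics $h_k$: draw $k$ independent samples, each pointing to the correct answer with probability `q`, and go with the majority. All modeling choices here are assumptions made for the example: the utility of a result is 1 if correct and 0 otherwise, execution time is proportional to `k`, and the opportunity cost is linear, `cost_per_sample * k`. The names `p_correct` and `resource_rational_k` are likewise hypothetical.

```python
from math import comb
from typing import Sequence


def p_correct(k: int, q: float) -> float:
    """Probability that the majority of k (odd) independent samples is correct,
    when each sample is correct with probability q."""
    return sum(
        comb(k, j) * q**j * (1 - q) ** (k - j) for j in range(k // 2 + 1, k + 1)
    )


def resource_rational_k(
    q: float, cost_per_sample: float, candidates: Sequence[int] = (1, 3, 5, 7)
) -> int:
    """Choose the sample size k that maximizes expected utility minus expected cost.

    Assumptions of this sketch: u(result) = 1 if correct else 0, so the expected
    utility equals p_correct(k, q); the cost is linear in the number of samples.
    """

    def net_value(k: int) -> float:
        return p_correct(k, q) - cost_per_sample * k

    return max(candidates, key=net_value)


if __name__ == "__main__":
    for cost in (0.0, 0.03, 0.05):
        print(cost, resource_rational_k(q=0.7, cost_per_sample=cost))
```

Under these assumptions, the selected heuristic becomes more frugal as computation becomes more costly: with `q = 0.7`, the largest candidate is chosen when sampling is free, three samples are chosen at a cost of 0.03 per sample, and a single sample is chosen at a cost of 0.05.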

Resource-rational cognitive mechanisms trade off accuracy against effort in an adaptive, nearly optimal manner. This is reminiscent of the proposal that people optimally trade off the time it takes to gather information about prices against its financial benefits (Stigler 1961), but there are two critical differences. The most important difference is that while Stigler (1961) defined a problem to be solved by the decision-maker, Equation 3 defines a problem to be solved by evolution, cognitive development, and life-long learning. That is, we propose that people never have to directly solve the constrained optimization problem defined in Equation 3. Rather, we believe that for most of our decisions the problem of finding a good decision mechanism has already been solved by evolution (Dukas 1998a; McNamara & Weissing 2010), learning, and cognitive development (Siegler & Jenkins 1989; Shrager & Siegler 1998). In many cases the solution $h^{\star}$ may be a simple heuristic. Thus, when people confront a decision they can usually rely on a simple decision rule without having to discover it on the spot. The second critical difference is that while resource rationality is a principle for modeling internal cognitive mechanisms (i.e., heuristics), Stigler's information economics defined models of optimal behavior. Identifying the optimal behavior (subject to the cost of collecting information) would, in general, require people to perform optimization under constraints in their heads. By contrast, resource-rational analysis will almost invariably favor a simple heuristic over optimization under constraints because it penalizes decision mechanisms by the cost of the mental effort required to execute them and only considers decision mechanisms that are biologically feasible. That is, while Stigler's information economics focused on the cost of collecting information (e.g., how long it takes to visit different shops to find out how much they charge for a product), resource rationality additionally accounts for the cost of thinking according to one strategy (e.g., evaluating each product's utility in all possible scenarios in which it might be used) versus another (e.g., just comparing the prices).

Equation 3 assumes that all possible outcomes and their probabilities and consequences are known. But the real world is very complex and highly uncertain, and limited experience constrains how well people can be adapted to it. Being equipped with a different heuristic for each and every situation would be prohibitively expensive (Houston & McNamara 1999) − not least because of the difficulty of selecting between them (Milli et al. 2017; 2019). To accommodate these bounds on human rationality, we relax the optimality criterion in Equation 3 from optimality with respect to the true environment $E$ to optimality with respect to the information $i$ that has been obtained about the environment through direct experience, indirect experience, and evolutionary adaptation. We can therefore define the boundedly resource-rational heuristic given the limited information $i$ as

$$h^{\star}(s_0, B, i) = \mathop{\mathrm{argmax}}\limits_{h \in H_B} \; \mathbb{E}_{E \mid i}\Big[ \mathbb{E}_{P(\mathrm{result} \mid s_0, h, E, B)}\big[u(\mathrm{result})\big] - \mathbb{E}_{t_h, \rho, \lambda \mid h, s_0, B, E}\big[\mathrm{cost}(t_h, \rho, \lambda)\big] \Big].$$

Since the mechanisms of adaptation are also bounded, we should not expect people's heuristics to be perfectly resource-rational. Instead, even a resource-rational mind might have to rely on heuristics for choosing heuristics to approximate the prescriptions of Equation 4. Recent work is beginning to illuminate what the mechanisms of strategy selection and adaptation might be (Lieder & Griffiths 2017), but more research is needed to identify how and how closely the mind approximates resource-rational thinking and decision-making.

It is too early to know how resource-rational people really are, but we are optimistic that resource-rational analysis can be a useful methodology for answering interesting questions about cognitive mechanisms − in the same way in which Bayesian modeling is a useful methodology for elucidating what the mind does and why it does what it does (Griffiths et al. 2008; Griffiths et al. 2010). In other words, resource rationality is not a fully fleshed out theory of cognition, designed as a new standard of normativity against which human judgments can be assessed, but a methodological device that allows researchers to translate their assumptions about cognitive constraints and functional requirements into precise mathematical models of cognitive processes and representations.

Resource rationality serves as a unifying theme for many recent models and theories of perception, decision-making, memory, reasoning, attention, and cognitive control that we will review below. While rational analysis makes only minimal assumptions about cognitive constraints, it has been argued that there are many cases where cognitive limitations impose substantial constraints (Simon 1956; 1982). Resource-rational analysis (Griffiths et al. 2015) thus extends rational analysis to also consider which cognitive operations are available to people and how costly those operations are in terms of time and cognitive resources. This means including the structure and resources of the mind itself in the definition of the environment to which cognitive mechanisms are supposedly adapted. Resource-rational analysis thereby follows Simon's advice that “we must be prepared to accept the possibility that what we call ‘the environment’ may lie, in part, within the skin of the biological organism” (Simon 1955).

Resource-rational analysis is a five-step process (see Box 2) that leverages the formal theory of bounded optimality introduced above to derive rational process models of cognitive abilities from formal definitions of their function and abstract assumptions about the mind's computational architecture. This function-first approach starts at the computational level of analysis (Marr 1982). When the function of the studied cognitive capacity has been formalized, step 2 of resource-rational analysis is to postulate an abstract computational architecture, that is, a set of elementary operations and their costs, with which the mind might realize this function. Next, resource-rational analysis derives the optimal algorithm for solving the problem identified at the computational level with the abstract computational architecture defined in step 2 (Equation 3), thereby pushing the principles of rational analysis toward Marr's algorithmic level (see Fig. 2). The resulting process model can be used to simulate people's responses and reaction times in an experiment. The model's predictions are then tested against empirical data. The results can be used to refine the theory's assumptions about the computational architecture and the problem to be solved. The process of resource-rational analysis can then be repeated under these refined assumptions to derive a more accurate process model. Refining the model's assumptions may include moving from an abstract computational architecture to increasingly realistic models of the mind's cognitive architecture or the brain's biophysical limits. As the assumptions about the computational architecture become more realistic and the model's predictions become more accurate, the corresponding rational process model should become increasingly similar to the psychological/neurocomputational mechanisms that generate people's responses (see Fig. 2). The process of resource-rational analysis ends when either the model's predictions are accurate enough or all relevant cognitive constraints have been incorporated sensibly. This process makes resource-rational analysis a methodology for reverse-engineering cognitive mechanisms (Griffiths et al. 2015).

Box 2 The five steps of resource-rational analysis. Note that a resource-rational analysis may stop in step 5 even when human performance substantially deviates from the resource-rational predictions as long as reasonable attempts have been made to model the constraints accurately based on the available empirical evidence. Furthermore, refining the assumed computational architecture can also include modeling how the brain might approximate the postulated algorithm.

1. Start with a computational-level (i.e., functional) description of an aspect of cognition formulated as a problem and its solution.

2. Posit which class of algorithms the mind's computational architecture might use to approximately solve this problem, the cost of the computational resources used by these algorithms, and the utility of more accurately approximating the correct solution.

3. Find the algorithm in this class that optimally trades off resources and approximation accuracy (Equation 3 or 4).

4. Evaluate the predictions of the resulting rational process model against empirical data.

5. Refine the computational-level theory (step 1) or assumed computational architecture and its constraints (step 2) to address significant discrepancies, derive a refined resource-rational model, and then reiterate or stop if the model's assumptions are already sufficiently realistic.

Resource-rational analysis can be seen as an extension of rational analysis from predicting behavior from the structure of the external environment to predicting cognitive mechanisms from internal cognitive resources and the external environment. These advances allow us to translate our growing understanding of the brain's computational architecture into more realistic models of psychological processes and mental representations. Fundamentally, it provides a tool for replacing the traditional method of developing cognitive process models − in which a theorist imagines ways in which different processes might combine to capture behavior − with a means of automatically deriving hypotheses about cognitive processes from the problem people have to solve and the resources they have available to do so.

Deriving resource-rational models of cognitive mechanisms from assumptions about their function and the cognitive architecture available to realize them (step 3) is the centerpiece of resource-rational analysis (Griffiths et al. 2015). This process often involves manual derivations (e.g., Lieder et al. 2012; 2014), but it is also possible to develop computational methods that discover complex resource-rational cognitive strategies automatically (Callaway et al. 2018a; 2018b; Lieder et al. 2017).

Resource-rational analysis combines the strengths of rational approaches to cognitive modeling with insights from the literature on cognitive biases and capacity limitations. We argue below that this enables resource-rational analysis to leverage mathematically precise unifying principles to develop psychologically realistic process models that explain and predict a wide range of seemingly unrelated cognitive and behavioral phenomena.

4. Modeling capacity limits to explain cognitive biases: case studies in decision-making

In this section, we review research suggesting that the principle of resource rationality can explain many of the biases in decision-making that led to the downfall of expected utility theory. Later, we will argue that the same conclusion also holds for other areas of human cognition. Extant work has augmented rational models with different kinds of cognitive limitations and costs, including costly information acquisition and limited attention, limited representational capacity, neural noise, finite time, and limited computational resources. The following sections review resource-rational analyses of the implications of each of these cognitive limitations in turn, showing that each can account for a number of cognitive biases that expected utility cannot. This brief review illustrates that resource rationality is an integrative framework for connecting theories from economics, psychology, and neuroscience.

4.1. Costly information acquisition and limited attention

People tend to have inconsistent preferences and often fail to choose the best available option even when all of the necessary information is available (Kahneman & Tversky 1979). Previous research has found that many of these violations of expected utility theory might result from the fact that acquiring information is costly (Bogacz et al. 2006; Gabaix et al. 2006; Lieder et al. 2017; Sanjurjo 2017; C. A. Sims 2003; Verrecchia 1982). This cost could include an explicit price that people must pay to purchase information (e.g., Verrecchia 1982), the opportunity cost of the decision-maker's time (e.g., Bogacz et al. 2006; Gabaix et al. 2006) and cognitive resources (Shenhav et al. 2017), the mental effort of paying attention (C. A. Sims 2003), and the cost of overriding one's automatic response tendencies (Kool & Botvinick 2013). Regardless of the source of the cost, we can define resource-rational decision-making as using a mechanism achieving the best possible tradeoff between the expected utility and cost of the resulting decision (see Equation 4).

Rather than trying to evaluate all of their options, people tend to select the first alternative they encounter that they consider good enough. For instance, when given the choice between seven different gambles, a person striving to win at least $5 may choose the second one without even looking at gambles 3−7 because all of its payoffs range from $5.50 to $9.75. This heuristic is known as Satisficing (Simon 1956). Satisficing can be interpreted as the solution to an optimal stopping problem, and Caplin et al. (2011) showed that satisficing with an adaptive aspiration level is a bounded-optimal decision strategy for certain decision problems where information is costly. This analysis can be cast in exactly the form of Equation 3, where the utility of the final outcome trades off against the cost of gathering additional information.
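
The gamble example above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions: the function name `satisfice` is hypothetical, a gamble counts as "good enough" when its worst payoff meets a fixed aspiration level, and every payoff other than the $5.50 to $9.75 range of the second gamble is invented for illustration. It does not implement the adaptive aspiration level analyzed by Caplin et al. (2011).

```python
from typing import Optional, Sequence, Tuple


def satisfice(gambles: Sequence[Sequence[float]],
              aspiration: float) -> Tuple[Optional[int], int]:
    """Inspect gambles in order and stop at the first one that is good enough.

    Assumption: a gamble is good enough if its worst payoff is at least
    `aspiration`. Returns (index of the chosen gamble or None, number inspected).
    """
    for index, payoffs in enumerate(gambles):
        if min(payoffs) >= aspiration:
            return index, index + 1
    return None, len(gambles)


# Seven gambles; only the second one's range ($5.50 to $9.75) comes from the text.
gambles = [
    [0.50, 12.00],
    [5.50, 7.25, 9.75],
    [1.00, 20.00],
    [6.00, 6.50],
    [0.00, 30.00],
    [4.00, 8.00],
    [7.00, 7.50],
]

chosen, n_inspected = satisfice(gambles, aspiration=5.0)
print(chosen, n_inspected)  # 1 2: the second gamble is chosen after inspecting two
```

Note that the fourth and seventh gambles would also satisfy the aspiration level, but they are never inspected. This is exactly the saving in information cost that satisficing buys.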

Curiously, people also fail to consider all alternatives even when information can be gathered free of charge. This might be because people's attentional resources are limited. The theory of rational inattention (C. A. Sims 2003; 2006) explains several biases in economic decisions, including the inertia, randomness, and abruptness of people's reactions to new financial information, by postulating that people allocate their limited attention optimally. For instance, the limited attention of consumers may prevent them from becoming more frugal as the balance of their bank account drops, even though that information is freely available to them. Furthermore, the rational inattention model can also explain the seemingly irrational phenomenon that adding an additional alternative can increase the probability that the decision-maker will choose one of the already available options (Matějka & McKay 2015).

The rational inattention model discounts all information equally, but people tend to focus on a small number of relevant variables while neglecting others completely. To capture this, Gabaix (2014) derived which of the thousands of potentially relevant variables a bounded-optimal decision-maker should attend to depending on their variability, their effect on the utilities of alternative choices, and the cost of attention. The resulting sparse max model generally attends only to a small subset of the variables, specifies how much attention each of them should receive, replaces unobserved variables by their default values, adjusts the default values of partially attended variables toward their true values, and then chooses the action that is best according to its simplified model of the world. The sparse max model can be interpreted as an instantiation of Equation 4, and Gabaix (2014) and Gabaix et al. (2006) showed that the model's predictions capture how people gather information and predict their choices better than expected utility theory. In subsequent work, Gabaix extended the sparse max model to sequential decision problems (Gabaix 2016) to provide a unifying explanation for many seemingly unrelated biases and economic phenomena (Gabaix 2017).

People tend to consider only a small number of possible outcomes − often focusing on the worst-case and the best-case scenarios. This can skew their decisions towards irrational risk aversion (e.g., fear of air travel) or irrational risk seeking (e.g., playing the lottery). This may be a consequence of people rationally allocating their limited attention to the most important eventualities (Lieder et al. 2018a).

4.1.1 Noisy evidence and limited time

Noisy information processing is believed to be the root cause of many biases in decision-making (Hilbert 2012). Making good decisions often requires integrating many pieces of weak or noisy evidence over time. However, time is limited and valuable, which creates pressure to decide quickly. The principle of resource rationality has been applied to understand how people trade off speed against accuracy to make the best possible use of their limited time in the face of noisy evidence. Speed-accuracy trade-offs have been most thoroughly explored in perceptual decision-making experiments where people are incentivized to maximize their reward rate (points/second) across a series of self-paced perceptual judgments (e.g., “Are there more dots moving to the right or to the left?”). Such decisions are commonly modelled using variants of the drift-diffusion model (Ratcliff 1978), which has three components: evidence generation, evidence accumulation, and choice. The principle of resource rationality (Equation 3) has been applied to derive optimal mechanisms for generating evidence and deciding when to stop accumulating it.

4.1.2 Deciding when to stop

Research on judgment and decision-making has often concluded that people think too little and decide too quickly, but a quantitative evaluation of human performance in perceptual decision-making against a bounded optimal model suggests the opposite (Holmes & Cohen 2014). Bogacz et al. (2006) showed that the drift-diffusion model achieves the best possible accuracy at a required speed and achieves a required accuracy as quickly as possible. The drift-diffusion model sums the difference between the evidence in favor of option A and the evidence in favor of option B over time, stopping evidence accumulation when the strength of the accumulated evidence exceeds a threshold. Bogacz et al. (2006) derived the decision threshold that maximizes the decision-maker's reward rate. Compared with this optimal speed-accuracy trade-off, people gather too much information before committing to a decision (Holmes & Cohen 2014). While Bogacz et al. (2006) focused on perceptual decision-making, subsequent work has derived optimal decision thresholds for value-based choice (Fudenberg et al. 2018; Gabaix & Laibson 2005; Tajima et al. 2016).
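
The accumulate-to-threshold mechanism and the resulting speed-accuracy trade-off can be illustrated with a small simulation. This is a sketch under assumptions, not the analytical derivation of Bogacz et al. (2006): it uses a discrete-time approximation of the diffusion process, defines reward rate simply as accuracy divided by mean decision time plus a fixed inter-trial delay, and searches a coarse grid of thresholds. All function names and parameter values are hypothetical.

```python
import random


def simulate_ddm(drift, threshold, rng, noise_sd=1.0, dt=0.01, max_steps=100_000):
    """Accumulate noisy evidence until it reaches +threshold or -threshold.

    Returns (choice, decision_time); choice is +1 for option A, -1 for option B.
    """
    evidence = 0.0
    steps = 0
    while abs(evidence) < threshold and steps < max_steps:
        # Euler step: deterministic drift plus Gaussian noise scaled by sqrt(dt).
        evidence += drift * dt + noise_sd * dt ** 0.5 * rng.gauss(0.0, 1.0)
        steps += 1
    return (1 if evidence > 0 else -1), steps * dt


def summarize(drift, threshold, n_trials=500, seed=0):
    """Estimate accuracy and mean decision time, assuming option A (+1) is correct."""
    rng = random.Random(seed)
    n_correct = 0
    total_time = 0.0
    for _ in range(n_trials):
        choice, decision_time = simulate_ddm(drift, threshold, rng)
        n_correct += choice == 1
        total_time += decision_time
    return n_correct / n_trials, total_time / n_trials


def reward_rate(drift, threshold, inter_trial_delay=1.0, **kwargs):
    """Simplified reward rate: correct responses per unit of total time."""
    accuracy, mean_time = summarize(drift, threshold, **kwargs)
    return accuracy / (mean_time + inter_trial_delay)


# Coarse grid search for the threshold with the highest simulated reward rate.
thresholds = [0.1, 0.5, 1.0, 1.5, 2.0]
best_threshold = max(thresholds, key=lambda a: reward_rate(drift=1.0, threshold=a))
print(best_threshold)
```

Raising the threshold buys accuracy at the price of time, so the reward rate peaks at an intermediate value that depends on the drift rate and the delay between trials.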

When repeatedly choosing between two stochastically rewarded actions, people (and other animals) usually fail to learn to always choose the option that is more likely to be rewarded; instead, they randomly select each option with a frequency that is roughly equal to the probability that it will be rewarded (Herrnstein 1961). To make sense of this, Vul et al. (2014) derived how many mental simulations a bounded agent should perform for each of its decisions to maximize its reward rate across the entirety of its choices. The optimal number of mental simulations turned out to be very small and depends on the ratio of the time needed to execute an action over the time required to simulate it. Concretely, it is bounded-optimal to decide based on only a single sample, which is equivalent to probability matching, when it takes at most three times as long to execute the action as to simulate it. But when the stakes of the decision increase relative to the agent's opportunity cost, then the optimal number of simulations increases as well. This prediction is qualitatively consistent with studies finding that choice behavior gradually changes from probability matching to maximization as monetary incentives increase (Shanks et al. 2002; Vulkan 2000).
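
The logic of this argument can be sketched with a deliberately simplified model. Assume that exactly one of two options is rewarded, that the first is rewarded with probability p, that each mental simulation independently favors the first option with probability p, and that the agent chooses the option favored by the majority of k simulations. The function names and the cost model (a fixed time per simulation plus a fixed time per action) are assumptions made for illustration; the sketch is not meant to reproduce the specific thresholds reported by Vul et al. (2014).

```python
from math import comb


def p_choose_first(p: float, k: int) -> float:
    """Probability that a majority of k samples favors the first option.

    Ties (possible only for even k) are broken by a fair coin flip.
    """
    total = 0.0
    for wins in range(k + 1):
        prob = comb(k, wins) * p ** wins * (1 - p) ** (k - wins)
        if 2 * wins > k:
            total += prob
        elif 2 * wins == k:
            total += 0.5 * prob
    return total


def reward_rate(p: float, k: int, action_time: float, sample_time: float) -> float:
    """Expected reward per unit time when deciding on the basis of k samples."""
    q = p_choose_first(p, k)
    expected_reward = q * p + (1 - q) * (1 - p)
    return expected_reward / (action_time + k * sample_time)


def optimal_k(p: float, action_time: float, sample_time: float, max_k: int = 50) -> int:
    """Number of samples (from 1 to max_k) that maximizes the reward rate."""
    return max(range(1, max_k + 1),
               key=lambda k: reward_rate(p, k, action_time, sample_time))


# With a single sample the agent chooses the first option with probability p,
# which is exactly probability matching.
print(p_choose_first(0.7, 1))
print(optimal_k(0.7, action_time=1.0, sample_time=1.0))    # cheap actions: 1 sample
print(optimal_k(0.7, action_time=100.0, sample_time=1.0))  # costly actions: more samples
```

When acting is cheap relative to simulating, the extra time spent on additional samples outweighs the gain in accuracy, so a single sample is best. When each action is expensive, it pays to think longer before acting.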

4.1.3 Effortful evidence generation

In everyday life, people often must actively generate the evidence for and against each alternative. Resource-rational models postulating that people optimally trade off the quality of their decisions against the cost of evidence generation can accurately capture how much effort decision-makers invest under various circumstances (Dickhaut et al. 2009) and the inverted-U-shaped relationship between decision time and decision quality (Woodford 2014; 2016).

4.2. Computational complexity and limited computational resources

Many models assume that human decision-making is approximately resource-rational subject to the constraints imposed by unreliable evidence and neural noise (e.g., Howes et al. 2016; Khaw et al. 2017; Stocker et al. 2006). However, Beck et al. (2012) argued that the relatively small levels of neural noise measured neurophysiologically cannot account for the much greater levels of variability and suboptimality in human performance. They propose that instead of making optimal use of noisy representations, the brain uses approximations that entail systematic biases (Beck et al. 2012). From the perspective of bounded optimality, approximations are necessary because the computational complexity of decision-making in the real world far exceeds cognitive capacity (Bossaerts & Murawski 2017; Bossaerts et al. 2018). People cope with this computational complexity through efficient heuristics and habits. In the next section, we argue that resource rationality can provide a unifying explanation for each of these phenomena.

4.3. Resource-rational heuristics

More reasoning and more information do not automatically lead to better decisions. To the contrary, simple heuristics that make clever use of the most important information can outperform complex decision procedures that use large amounts of data and computation less cleverly (Gigerenzer & Gaissmaier 2011). This highlights that resource rationality critically depends on which information is considered and how it is used.

To solve complex decision problems, people generally take multiple steps in reasoning. Choosing those cognitive operations well is challenging because the benefit of each operation depends on which operations will follow: In principle, choosing the best first cognitive operation requires planning multiple cognitive operations ahead. Gabaix and Laibson (2005) proposed that people simplify this intractable meta-decision-making problem by choosing each cognitive operation according to a myopic cost−benefit analysis that pits the immediate improvement in decision quality expected from each decision operation against its cognitive cost (see Equation 3). Gabaix et al. (2006) found that this model correctly predicted people's suboptimal information search behavior in a simple bandit task and explained how people choose between many alternatives with multiple attributes better than previous models.

Recent work has developed a non-myopic approach to deriving resource-rational heuristics (Callaway et al. 2018a; Lieder et al. 2017) and compared the derived strategies with previously proposed heuristic models of planning. This work found that people's planning operations achieved about 86% of the best possible trade-off between decision quality and time cost and agreed with the bounded-optimal strategy about 55% of the time. This quantitative analysis offers a more nuanced and presumably more accurate assessment of human rationality than qualitative assessments according to which people are either “rational” or “irrational.” Furthermore, this resource-rational analysis correctly predicted how people's planning strategies differ across environments and that their aspiration levels decrease as people gather more information.

This line of work led to a new computational method that can automatically derive resource-rational cognitive strategies from a mathematical model of their function and assumptions about available cognitive resources and their costs. This method is very general and can be applied across different cognitive domains. In an application to multi-alternative risky choice (Lieder et al. 2017), the method rediscovered previously proposed heuristics and elucidated the conditions under which they are bounded-optimal. Furthermore, it also led to the discovery of a previously unknown heuristic that combines elements of satisficing and Take-The-Best (SAT-TTB; see Figure 3). A follow-up experiment confirmed that people do use that strategy specifically for the kinds of decision problems for which it is bounded-optimal. These examples illustrate that bounded-optimal mechanisms for complex decision problems generally involve approximations that introduce systematic biases, supporting the view that many cognitive biases could reflect people's rational use of limited cognitive resources.

Figure 3. Illustration of the resource-rational SAT-TTB heuristic for multi-alternative risky choice in the Mouselab paradigm where participants choose between bets (red boxes) based on their initially concealed payoffs (gray boxes) for different events (rows) that occur with known probabilities (leftmost column). These payoffs can be uncovered by clicking on corresponding cells of the payoff matrix. The SAT-TTB strategy collects information about the alternatives’ payoffs for the most probable outcome (here a brown ball being drawn from the urn) until it encounters a payoff that is high enough (here $0.22). As soon as it finds a single payoff that exceeds its aspiration level, it stops collecting information and chooses the corresponding alternative. The automatic strategy discovery method by Lieder et al. (2017) derived this strategy as the resource-rational heuristic for low-stakes decisions where one outcome is much more probable than all others.
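
The SAT-TTB strategy described in the caption can be sketched as follows. The function name `sat_ttb`, the layout of the payoff matrix (one row per outcome, one column per alternative), the aspiration level, and all payoffs except the $0.22 from the caption are assumptions made for illustration. The caption does not say what happens when no payoff exceeds the aspiration level; this sketch assumes that the strategy then chooses the best alternative it has seen.

```python
from typing import Sequence, Tuple


def sat_ttb(probabilities: Sequence[float],
            payoffs: Sequence[Sequence[float]],
            aspiration: float) -> Tuple[int, int]:
    """Satisficing restricted to the most probable outcome.

    payoffs[outcome][alternative] is the payoff of an alternative under an outcome.
    Returns (index of the chosen alternative, number of cells inspected).
    """
    # Take-The-Best element: look only at the row of the most probable outcome.
    most_probable = max(range(len(probabilities)), key=lambda o: probabilities[o])

    best_index, best_payoff = 0, float("-inf")
    clicks = 0
    for alternative, payoff in enumerate(payoffs[most_probable]):
        clicks += 1  # uncovering one cell of the payoff matrix
        if payoff > best_payoff:
            best_index, best_payoff = alternative, payoff
        # Satisficing element: stop as soon as one payoff exceeds the aspiration level.
        if payoff > aspiration:
            return alternative, clicks

    # Assumed fallback: nothing was good enough, so choose the best payoff seen.
    return best_index, clicks


probabilities = [0.10, 0.75, 0.15]   # the second outcome is by far the most probable
payoffs = [
    [0.30, 0.02, 0.11, 0.25],
    [0.05, 0.10, 0.22, 0.03],
    [0.01, 0.40, 0.07, 0.18],
]

choice, clicks = sat_ttb(probabilities, payoffs, aspiration=0.20)
print(choice, clicks)  # 2 3: the third bet is chosen after three clicks
```

Only three of the twelve cells are inspected in this example, which illustrates why the strategy is cheap enough to be worthwhile when the stakes are low.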

4.4. Habits

In sharp contrast to the prescription of expected utility theory that actions should be chosen based on their expected consequences, people often act habitually without deliberating about consequences (Dolan & Dayan 2013). The contrast between the enormous computational complexity of expected utility maximization (Bossaerts & Murawski 2017; Bossaerts et al. 2018) and people's limited computational resources and finite time suggests that habits may be necessary for bounded-optimal decision-making. Reusing previously successful action sequences allows people to save substantial amounts of time-consuming and error-prone computation; therefore, the principle of resource rationality in Equation 3 can be applied to determine under which circumstances it is rational to rely on habits.

When habits and goal-directed decision-making compete for behavioral control, the brain appears to arbitrate between them in a manner consistent with a rational cost−benefit analysis (Daw et al. 2005; Keramati et al. 2011). More recent work has applied the idea of bounded optimality to derive how the habitual and goal-directed decision systems might collaborate (Huys et al. 2015; Keramati et al. 2016). Keramati et al. (2016) found that people adaptively integrate planning and habits according to how much time is available. Similarly, Huys et al. (2015) postulated that people decompose sequential decision problems into sub-problems to optimally trade off planning cost savings attained by reusing previous action sequences against the resulting decrease in decision quality.

Overall, the examples reviewed in this section highlight that the principle of resource rationality (Equation 3) provides a unifying framework for a wide range of successful models of seemingly unrelated phenomena and cognitive biases. Resource rationality might thus be able to fill the theoretical vacuum that was left behind by the undoing of expected utility theory. While this section focused on decision-making, the following sections illustrate that the resource-rational framework applies across all domains of cognition and perception.

5. Revisiting classic questions of cognitive psychology

The standard methodology for developing computational models of cognition is to start with a set of component cognitive processes − similarity, attention, and activation − and consider how to assemble them into a structure reproducing human behavior. Resource rationality represents a different approach to cognitive modeling: while the components may be the same, they are put together by finding the optimal solution to a computational problem. This brings advances in AI and ideas from computational-level theories of cognition to bear on cognitive psychology's classic questions about mental representations, cognitive strategies, capacity limits, and the mind's cognitive architecture.

Resource rationality complements the traditional bottom-up approach driven by empirical phenomena with a top-down approach that starts from the computational level of analysis. It leverages computational-level theories to address the problem that cognitive strategies and representations are rarely identifiable from the available behavioral data alone (Anderson 1978) by considering only those mechanisms and representations that realize their function in a resource-rational manner. In addition to helping us uncover cognitive mechanisms, resource-rational analysis also explains why they exist and why they work the way they do. Rational analysis forges a valuable connection between computer science and psychology. Resource-rational analysis strengthens this connection while establishing an additional bridge from psychological constructs to the neural mechanisms implementing them. This connection allows psychological theories to be constrained by our rapidly expanding understanding of the brain.

Below we discuss how resource-rational analysis can shed light on cognitive mechanisms, mental representations, and cognitive architectures, how it links cognitive psychology to other disciplines, and how it contributes to the debate about human rationality.

5.1. Reverse-engineering cognitive mechanisms and mental representations

Resource-rational analysis is a methodology for reverse-engineering the mechanisms and representations of human cognition. This section illustrates the potential of this approach with examples from modeling memory, attention, reasoning, and cognitive control.

5.1.1. Memory

Anderson and Milson's (Reference Anderson and Milson1989) highly influential rational analysis of memory can be interpreted as the first application of the principle of bounded optimality in cognitive psychology. Their model combines an optimal memory storage mechanism with a resource-rational stopping rule that trades off the cost of continued memory search against its expected benefits (see Equation 3). The storage mechanism presorts memories optimally by exploiting how the probability that a previously encountered piece of information will be needed again depends on the frequency, recency, and pattern of its previous occurrences (Anderson & Schooler Reference Anderson and Schooler1991). The resulting model correctly predicted the effects of frequency, recency, and spacing of practice on the accuracy and speed of memory recall. While Anderson's rational analysis of memory made only minimal assumptions about its computational constraints, it can be seen as the first iteration of a resource-rational analysis to be continued by future work.
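The logic of such a stopping rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Anderson and Milson's implementation: the function name `search_memory`, its parameters, and the numbers are hypothetical, and the rule is simplified to "keep searching while the need probability of the next memory times the gain from retrieving it exceeds the cost of checking it."

```python
def search_memory(need_probs, gain, cost):
    """Return the indices of the memories that are inspected, in search order.

    need_probs : estimated probability that each memory is needed now
    gain       : benefit of retrieving the needed memory (hypothetical units)
    cost       : cost of inspecting one more memory (same units)
    """
    # Presort memories so that the most probably needed ones come first.
    order = sorted(range(len(need_probs)),
                   key=lambda i: need_probs[i], reverse=True)
    inspected = []
    for i in order:
        # Stop as soon as the expected benefit of continuing no longer
        # exceeds the cost of continuing.
        if need_probs[i] * gain <= cost:
            break
        inspected.append(i)
    return inspected


if __name__ == "__main__":
    probs = [0.05, 0.6, 0.2, 0.01]
    print(search_memory(probs, gain=10.0, cost=1.0))  # prints [1, 2]
```

In this toy setting, lowering the cost of search (or raising the gain) extends the search to less probable memories, whereas rarely and remotely encountered items are never inspected.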

More recent research has applied resource-rational analysis to working memory, where computational constraints play a significantly larger role than in long-term memory. For instance, Howes et al. (Reference Howes, Warren, Farmer, El-Deredy and Lewis2016) found that bounded optimality can predict how many items a person chooses to commit to memory from the cost of misremembering, their working memory capacity, and how long it takes to look up forgotten information. Furthermore, resource rationality predicts that working memory should encode information in representations that optimally trade off efficiency with the cost of error (C. R. Sims Reference Sims2016; C. R. Sims et al. Reference Sims, Jacobs and Knill2012). This optimal encoding, in turn, depends on the statistics of the input distribution and the nature of the task. This allows the model to correctly predict how the precision of working memory representations depends on the number of items to be remembered and the variability of their features. Over time, working memory also has to dynamically reallocate its limited capacity across multiple memory traces depending on their current strength and importance (Suchow Reference Suchow2014). Suchow and Griffiths (Reference Suchow and Griffiths2016) found that the optimal solution to this problem captured three directed remembering phenomena from the literature on visual working memory.

5.1.2. Attention

The allocation of attention allows us to cope with a world filled with vastly more information than we can possibly process. Applying resource-rational analysis to problems where the amount of incoming data exceeds the cognitive system's processing capacity might thus be a promising approach to discovering candidate mechanisms of attention. Above we have reviewed a number of bounded optimal models of the effect of limited attention on decision-making (Caplin & Dean Reference Caplin and Dean2015; Caplin et al. Reference Caplin, Dean and Leahy2017; Gabaix Reference Gabaix2014; Reference Gabaix2016; Reference Gabaix2017; Lieder Reference Lieder2018; C. A. Sims Reference Sims2003; Reference Sims2006), so this section briefly reviews resource-rational models of visual attention.

The function of visual attention can be formalized as a decision problem in the framework of partially observable Markov decision processes (POMDPs; Gottlieb et al. Reference Gottlieb, Oudeyer, Lopes and Baranes2013) or meta-level Markov decision processes (Lieder et al. Reference Lieder, Krueger and Griffiths2017). Such decision-theoretic models make it possible to derive optimal attentional mechanisms. For instance, Lewis et al. (Reference Lewis, Howes and Singh2014) and Butko and Movellan (Reference Butko and Movellan2008) developed bounded optimal models of how long people look at a given stimulus and where they will look next, respectively, and the resource-rational model by Lieder et al. (Reference Lieder, Shenhav, Musslick and Griffiths2018e) captures how visual attention is shaped by learning.

Finally, resource-rational analysis can also elucidate how people distribute their limited attentional resources among multiple internal representations and how much attention they invest in total (van den Berg & Ma Reference van den Berg and Ma2018). Among other phenomena, the rational deployment of limited attentional resources can explain how people's visual search performance deteriorates with the number of items they must inspect in parallel. To explain such phenomena, the model by van den Berg and Ma (Reference van den Berg and Ma2018) assumes that the total amount of attentional resources people invest is chosen according to a rational cost-benefit analysis that weighs the expected benefits of allocating more attentional resources against their neural costs (see Equation 3).
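A toy version of this kind of cost-benefit analysis illustrates why performance can decline with set size even when the total investment is chosen optimally. The sketch below is not van den Berg and Ma's model. The saturating `accuracy` function, the linear resource cost, the equal split of resources across items, and all names and numbers are assumptions made purely for illustration.

```python
import math


def accuracy(per_item_resource):
    """Hypothetical saturating link between resource per item and accuracy."""
    return 1.0 - 0.5 * math.exp(-per_item_resource)


def optimal_total_resource(n_items, unit_cost, grid_step=0.01, max_total=50.0):
    """Grid search for the total resource that maximizes accuracy minus cost.

    The total is assumed to be split equally among n_items, and each unit
    of resource is assumed to cost unit_cost (in units of accuracy).
    """
    best_total, best_value = 0.0, -math.inf
    for k in range(int(max_total / grid_step) + 1):
        total = k * grid_step
        value = accuracy(total / n_items) - unit_cost * total
        if value > best_value:
            best_total, best_value = total, value
    return best_total


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        total = optimal_total_resource(n, unit_cost=0.01)
        print(n, round(total, 2), round(accuracy(total / n), 3))
```

Under these assumptions, the optimal total investment grows with the number of items, but more slowly than the number of items itself, so the resource per item and hence the accuracy on any single item fall as set size increases. When resources are expensive enough, the best choice is to invest nothing at all.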

5.1.3. Reasoning

Studies reporting that people appear to make systematic errors in simple reasoning tasks (e.g., Tversky & Kahneman Reference Tversky and Kahneman1974; Wason Reference Wason1968) have painted a bleak picture of the human mind that is in stark contrast to impressive human performance in complex problems of vision, intuitive physics, and social cognition. Taking into account the cognitive constraints that require people to approximate Bayesian reasoning might resolve this apparent contradiction (Sanborn & Chater Reference Sanborn and Chater2016), and resource-rational analyses of how people overcome the computational challenges of reasoning might uncover their heuristics (e.g., Lieder et al. Reference Lieder, Callaway, Krueger, Das, Griffiths and Gul2018a; Reference Lieder, Griffiths and Hsu2018b).

One fundamental reasoning challenge is the frame problem (Fodor Reference Fodor and Pylyshyn1987; Glymour Reference Glymour and Pylyshyn1987): Given that everything could be related to everything, how do people decide which subset of their knowledge to take into account for reasoning about a question of interest? The resource-rational framework can be applied to derive which variables should be considered and which should be ignored depending on the problem to be solved, the resources available, and their costs. In an analysis of this problem, Icard and Goodman (Reference Icard and Goodman2015) showed that it is often resource-rational to ignore all but the one to three most relevant variables. Their analysis explained why people neglect alternative causes more frequently in predictive reasoning (“What will happen if …”) than in diagnostic reasoning (“Why did this happen?”). Nobandegani and Psaromiligkos (Reference Nobandegani and Psaromiligkos2017) extended Icard and Goodman's analysis of the frame problem toward a process model of how people simultaneously retrieve relevant causal factors from memory and reason over the mental model constructed thus far. Future work should extend this approach to studying alternative ways in which people simplify the mental model they use for reasoning and how they select this simplification depending on the inference they are trying to draw and their reasoning strategy.

Recently, the frame problem has also been studied in the context of decision-making (Gabaix Reference Gabaix2014; Reference Gabaix2016). Gabaix's characterization of a resource-rational solution to this problem predicts many systematic errors in human reasoning, including base-rate neglect, insensitivity to sample size, overconfidence, projection bias (the tendency to underappreciate how different the future will be from the present), and misconceptions of regression to the mean (Gabaix Reference Gabaix2017).

Resource-rational analysis has also shed light on two additional questions about human reasoning: “How do we decide how much to think?” and “From where do hypotheses come?” Previous research on reasoning suggested that people generally think too little, a view that emerged from findings such as the anchoring bias (Tversky & Kahneman Reference Tversky and Kahneman1974), according to which people's numerical estimates are biased toward their initial guesses (Epley & Gilovich Reference Epley and Gilovich2004). Contrary to the traditional interpretation that people think too little, a resource-rational analysis of numerical estimation suggested that many anchoring biases are consistent with people choosing the number of adjustments they make to their initial guess in accordance with the optimal speed-accuracy trade-off defined in Equation 3 (Lieder et al. Reference Lieder, Griffiths, Huys and Goodman2018c; Reference Lieder, Griffiths, Huys and Goodman2018d). Drawing inspiration from computer science and statistics, this resource-rational analysis yielded a general reasoning mechanism that iteratively proposes adjustments to an initial idea; the proposed adjustments are probabilistically accepted or rejected in such a way that the resulting train of thought eventually converges to the Bayes-optimal inference.
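The adjustment mechanism described above can be illustrated with a standard sampling algorithm. The following Python sketch uses Metropolis-Hastings with a symmetric Gaussian proposal, which is one algorithm that matches the verbal description; treating it as the mechanism is an assumption of this sketch, not a reproduction of the cited models. The Gaussian posterior, the linear time cost, the candidate numbers of adjustments, and all names are hypothetical.

```python
import math
import random

# Hypothetical posterior over the quantity being estimated (assumed Gaussian).
TARGET_MEAN = 10.0
TARGET_SD = 2.0


def log_target(x):
    """Unnormalized log-density of the assumed Gaussian posterior."""
    return -0.5 * ((x - TARGET_MEAN) / TARGET_SD) ** 2


def adjust_from_anchor(anchor, n_adjustments, rng, proposal_sd=1.0):
    """Start at the anchor and apply n_adjustments Metropolis-Hastings steps."""
    x = anchor
    for _ in range(n_adjustments):
        proposal = x + rng.gauss(0.0, proposal_sd)  # propose an adjustment
        log_ratio = log_target(proposal) - log_target(x)
        # Accept with probability min(1, p(proposal) / p(x)).
        if log_ratio >= 0 or rng.random() < math.exp(log_ratio):
            x = proposal
    return x


def mean_estimate(anchor, n_adjustments, n_chains=500, seed=0):
    """Average final estimate over many simulated trains of thought."""
    rng = random.Random(seed)
    total = sum(adjust_from_anchor(anchor, n_adjustments, rng)
                for _ in range(n_chains))
    return total / n_chains


def expected_cost(anchor, n_adjustments, time_cost, n_chains=500, seed=0):
    """Mean absolute distance from the posterior mean plus a linear time cost."""
    rng = random.Random(seed)
    error = sum(abs(adjust_from_anchor(anchor, n_adjustments, rng) - TARGET_MEAN)
                for _ in range(n_chains)) / n_chains
    return error + time_cost * n_adjustments


def best_number_of_adjustments(anchor, time_cost,
                               candidates=(0, 5, 25, 50, 100, 200)):
    """Pick the candidate number of adjustments with the lowest expected cost."""
    return min(candidates, key=lambda k: expected_cost(anchor, k, time_cost))


if __name__ == "__main__":
    for k in (0, 5, 25, 200):
        print(k, round(mean_estimate(0.0, k), 2))
    print(best_number_of_adjustments(0.0, time_cost=0.05))
```

In this toy setting, estimates produced after only a few adjustments remain close to the anchor, and the bias shrinks as more adjustments are made. When each adjustment is costly, the cost-minimizing number of adjustments is small, so a residual bias toward the anchor is what the trade-off itself prescribes rather than a sign of thinking too little.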

The idea that people generate hypotheses in this way can explain a wide range of biases in probabilistic reasoning (Dasgupta et al. Reference Dasgupta, Schulz and Gershman2017) and has since been successfully applied to model how people reason about causal structures (Bramley et al. Reference Bramley, Dayan, Griffiths and Lagnado2017), medical diagnoses, and natural scenes (Dasgupta et al. Reference Dasgupta, Schulz and Gershman2017; Reference Dasgupta, Schulz, Goodman and Gershman2018). A subsequent resource-rational analysis revealed that once people have generated a hypothesis in this way they memorize it and later retrieve it to more efficiently reason about related questions in the future (Dasgupta et al. Reference Dasgupta, Schulz, Goodman and Gershman2018).

5.1.4. Goals, executive functions, and mental effort

Goals and goal-directed behavior and cognition are essential features of the human mind (Carver & Scheier Reference Carver and Scheier2001). Yet, from the perspective of expected utility theory (Equation 1), there is no reason why people should have goals in the first place. An unboundedly optimal agent would simply maximize its expected utility by scoring all outcomes its actions might have according to its graded utility function. In contrast, people often think only about which subgoal to pursue next and how to achieve it (Newell & Simon Reference Newell and Simon1972). This is suboptimal from the perspective of expected utility theory, even though it seems intuitively rational for people to be goal-directed, and empirical studies have found that setting goals and planning how to achieve them is highly beneficial (Locke & Latham Reference Locke and Latham2002). The resource rationality framework can reconcile this tension by pointing out that goal-directed planning affords many computational simplifications that make good decision-making tractable. For instance, planning backward from the goal, as in means-ends analysis (Newell & Simon Reference Newell and Simon1972), allows decision-makers to save substantial amounts of computation by ignoring the vast majority of all possible states and action sequences. Future work will apply resource rationality to provide a normative justification for the existence of goals and develop an optimal theory of goal-setting.