1. Introduction and main results

One of the most widely used models in population dynamics is the Galton–Watson branching process, which has numerous applications in different areas, including physics, biology, medicine, and economics. We refer the reader to the books of Harris [17] and Athreya and Ney [6] for an introduction. Branching processes in random environments were first considered by Smith and Wilkinson [24] and by Athreya and Karlin [4, 5]. The subject has been further studied by Kozlov [20, 21], Dekking [7], Liu [22], D’Souza and Hambly [8], Geiger and Kersting [9], Guivarc’h and Liu [16], Geiger, Kersting and Vatutin [10], Afanasyev [1], and Kersting and Vatutin [19], to name only a few. Recently, exact asymptotic results for the survival probability of branching processes in Markovian environment (BPMEs) were obtained in [13], based on new conditioned limit theorems for sums of functions defined on Markov chains in [11, 12, 14, 15]. In this paper we complement these by new results, such as a limit theorem for the normalized number of particles and a Yaglom-type theorem for BPMEs.

We start by introducing the Markovian environment, which is given on the probability space $\left( \Omega, \mathscr{F}, \mathbb{P} \right)$ by a homogeneous Markov chain $\left( X_n \right)_{n\geq 0}$ with values in the finite state space $\mathbb{X}$ and with the matrix of transition probabilities $\textbf{P} = (\textbf{P} (i,j))_{i,j\in \mathbb{X}}$. We suppose the following.

Condition 1. The Markov chain $\left( X_n \right)_{n\geq 0}$ is irreducible and aperiodic.

Condition 1 implies a spectral gap property for the transition operator $\textbf{P}$ of $\left( X_n \right)_{n\geq 0}$, defined by the relation $\textbf{P} g (i) = \sum_{j\in \mathbb{X}} g(\,j) \textbf{P}(i,j)$ for any g in the space $\mathscr{C} (\mathbb X)$ of complex functions g on $\mathbb{X}$ endowed with the norm $\left\lVert{g}\right\rVert_{\infty} = \sup_{x\in \mathbb{X}} \left\lvert{g(x)}\right\rvert$. Indeed, Condition 1 is necessary and sufficient for the matrix $(\textbf{P} (i,j))_{i,j\in \mathbb X}$ to be primitive (all entries of $\textbf{P}^{k_0}$ are positive for some $k_0 \geq 1$). By the Perron–Frobenius theorem, there exist positive constants $c_1$, $c_2$, a unique positive $\textbf{P}$-invariant probability $\boldsymbol{\nu}$ on $\mathbb{X}$ ($\boldsymbol{\nu}(\textbf{P})=\boldsymbol{\nu}$), and an operator Q on $\mathscr{C}(\mathbb X)$ such that, for any $g \in \mathscr{C}(\mathbb X)$ and $n \geq 1$, $i \in \mathbb{X}$,

where $Q \left(1 \right) = 0$ and $\boldsymbol{\nu} \left(Q(g) \right) = 0$ with $\boldsymbol{\nu}(g) \,{:\!=}\, \sum_{i \in \mathbb{X}} g(i) \boldsymbol{\nu}(i)$. In particular, from (1.1), it follows that, for any $(i,j) \in \mathbb{X}^2$,

Set $\mathbb N \,{:\!=}\, \left\{0,1,2,\ldots\right\}$. For any $i\in \mathbb X$, let $\mathbb{P}_i$ be the probability law on $\mathbb X^{\mathbb N}$ and $\mathbb{E}_i$ the associated expectation generated by the finite-dimensional distributions of the Markov chain $\left( X_n \right)_{n\geq 0}$ starting at $X_0 = i$. Note that $\textbf{P}^ng(i) = \mathbb{E}_i \left( g(X_n) \right)$, for any $g \in \mathscr{C}(\mathbb X)$, $i \in \mathbb{X}$, and $n\geq 1.$
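As a numerical illustration of the spectral gap property, the following minimal sketch approximates the invariant probability $\boldsymbol{\nu}$ by power iteration and tracks $\max_{i,j} \lvert \textbf{P}^n(i,j) - \boldsymbol{\nu}(j) \rvert$, which decays geometrically for a primitive matrix. The two-state matrix `P` and the helper names `stationary`, `mat_power`, and `max_deviation` are illustrative assumptions, not objects from the paper.

```python
def stationary(P, tol=1e-14, max_iter=100_000):
    """Approximate the invariant probability nu (nu P = nu) by power iteration."""
    n = len(P)
    nu = [1.0 / n] * n
    for _ in range(max_iter):
        new = [sum(nu[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new, nu)) < tol:
            return new
        nu = new
    return nu


def mat_power(P, n):
    """Return the n-th power of the square matrix P (n >= 1)."""
    size = len(P)
    result = [row[:] for row in P]
    for _ in range(n - 1):
        result = [[sum(result[i][k] * P[k][j] for k in range(size))
                   for j in range(size)] for i in range(size)]
    return result


def max_deviation(P, nu, n):
    """Return the maximum over (i, j) of |P^n(i, j) - nu(j)|."""
    Pn = mat_power(P, n)
    size = len(P)
    return max(abs(Pn[i][j] - nu[j]) for i in range(size) for j in range(size))


# Hypothetical two-state environment; all entries are positive, so P is primitive.
P = [[0.9, 0.1],
     [0.4, 0.6]]
nu = stationary(P)
deviations = [max_deviation(P, nu, n) for n in (1, 5, 10)]
```

For this particular matrix the second eigenvalue is 0.5, so the deviation should shrink by roughly a factor of two per step.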

Assume that on the same probability space $\left( \Omega, \mathscr{F}, \mathbb{P} \right)$, for any $i \in \mathbb{X}$, we are given a random variable $\xi_i$ with the probability generating function

Consider a collection of independent and identically distributed random variables $( \xi_i^{n,j} )_{j,n \geq 1}$ having the same law as the generic variable $\xi_i$. The variable $\xi_{i}^{n,j}$ represents the number of children generated by the parent $j\in \{1, 2, \dots\}$ at time n when the environment is i. Throughout the paper, the sequences $( \xi_i^{n,j} )_{j,n \geq 1}$, $i\in \mathbb{X}$, and the Markov chain $\left( X_n \right)_{n\geq 0}$ are supposed to be independent.

Denote by $\mathbb E$ the expectation associated to $\mathbb P.$ We assume that the variables $\xi_i$ have positive means and finite second moments.

Condition 2. For any $i \in \mathbb{X}$, the random variable $\xi_i$ satisfies the following: $\mathbb{E} \left( \xi_i \right)>0$ and $\mathbb{E} ( \xi_i^2 ) < +\infty.$

From Condition 2 it follows that $0< f_i^{\prime}(1) < +\infty$ and $f_i^{\prime\prime}(1) < +\infty.$

We are now prepared to introduce the branching process $\left( Z_n \right)_{n\geq 0}$ in the Markovian environment $\left( X_n \right)_{n\geq 0}$. The initial population size is $Z_0 = z \in \mathbb N$. For $n\geq 1$, we let $Z_{n-1}$ be the population size at time $n-1$ and assume that at time n the parent $j\in \{1, \dots, Z_{n-1} \}$ generates $\xi_{X_n}^{n,j}$ children. Then the population size at time n is given by

\begin{equation*}Z_{n} = \sum_{j=1}^{Z_{n-1}} \xi_{X_{n}}^{n,j}, \end{equation*}

where the empty sum is equal to 0. In particular, when $Z_0=0$, it follows that $Z_n=0$ for any $n\geq 1$. We note that for any $n\geq 1$ the variables $\xi_{i}^{n,j}$, $j\geq 1$, $i\in \mathbb X$, are independent of $Z_{0},\ldots, Z_{n-1}$.
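The recursion for $Z_n$ can be made concrete with a short simulation. The sketch below is only an illustration under stated assumptions: the transition matrix `P`, the two offspring laws, and the function name `simulate_bpme` are hypothetical and chosen so that each $\xi_i$ is bounded with positive mean, in line with Condition 2.

```python
import random


def simulate_bpme(P, offspring, i0, z0, n_steps, rng):
    """Simulate (X_k, Z_k) for k = 0..n_steps, starting from X_0 = i0, Z_0 = z0.

    P         -- transition matrix of the environment, as a list of rows
    offspring -- offspring[i](rng) draws one independent copy of xi_i
    """
    states = list(range(len(P)))
    x, z = i0, z0
    xs, zs = [x], [z]
    for _ in range(n_steps):
        # Environment step: X_n is drawn from row X_{n-1} of P.
        x = rng.choices(states, weights=P[x])[0]
        # Reproduction step: each of the Z_{n-1} parents has xi_{X_n} children.
        # The sum over an empty range is 0, so extinction is absorbing.
        z = sum(offspring[x](rng) for _ in range(z))
        xs.append(x)
        zs.append(z)
    return xs, zs


# Hypothetical example with two environments and bounded offspring laws.
P = [[0.9, 0.1],
     [0.4, 0.6]]
offspring = [
    lambda rng: rng.choice([0, 1, 2]),  # mean 1 in environment 0
    lambda rng: rng.choice([0, 0, 3]),  # mean 1 in environment 1
]
xs, zs = simulate_bpme(P, offspring, i0=0, z0=5, n_steps=30, rng=random.Random(0))
```

Passing an explicit `random.Random` instance keeps runs reproducible; the environment is updated before reproduction because the offspring law at time n is indexed by $X_n$.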

Introduce the function $\rho: \mathbb X \mapsto \mathbb R$ satisfying

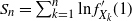

Along with $\left( Z_n \right)_{n\geq 0}$ consider the Markov walk $\left( S_n \right)_{n\geq 0}$ such that $S_0 = 0$ and, for $n\geq 1$,

The couple $\left( X_n, Z_n\right)_{n\geq 0}$ is a Markov chain with values in $\mathbb X \times \mathbb N$, whose transition operator $\widetilde{\textbf{P}}$ is defined by the following relation: for any $i\in \mathbb X$, $z\in \mathbb N$, $s\in [0,1]$, and $h: \mathbb X \mapsto \mathbb R$ bounded measurable,

where $h_s(i,z)= h(i) s^z$. Let $\mathbb{P}_{i,z}$ be the probability law on $(\mathbb X \times \mathbb N )^{\mathbb N}$ and $\mathbb{E}_{i,z}$ the associated expectation generated by the finite-dimensional distributions of the Markov chain $\left( X_n, Z_n \right)_{n\geq 0}$ starting at $X_0 = i$ and $Z_0=z$. By straightforward calculations, for any $i\in \mathbb X$, $z\in \mathbb N$,

The following non-lattice condition is used indirectly in the proofs in the present paper; it is needed to ensure that the local limit theorem for the Markov walk (1.4) holds true.

Condition 3. For any $\theta, a \in \mathbb{R}$, there exist $m\geq 0$ and a path $x_0, \dots, x_m$ in $\mathbb{X}$ such that $\textbf{P}(x_0,x_1) \cdots \textbf{P}(x_{m-1},x_m) {\textbf{P}}(x_m,x_0) > 0$ and

Condition 3 is an extension of the corresponding non-lattice condition for independent and identically distributed random variables $X_0, X_1, \ldots$, which can be stated as follows: there exists $m\geq 0$ such that $X_0 + \cdots + X_m$ does not take values in the lattice $(m+1)\theta + a\mathbb{Z}$ with some positive probability, whatever $\theta, a \in \mathbb{R}$. Usually, the latter is formulated in an equivalent way with $m=0$. For Markov chains, Condition 3 is equivalent to the condition that the Fourier transform operator

has a spectral radius strictly less than 1 for $t\not= 0$; see Lemma 4.1 of [14]. Non-latticity for Markov chains with not necessarily finite state spaces is considered, for instance, in Shurenkov [23] and Alsmeyer [3].

For the following facts and definitions we refer to [13]. Under Condition 1, from the spectral gap property of the operator $\textbf{P}$ it follows that, for any $\lambda \in \mathbb{R}$ and any $i \in \mathbb{X}$, the limit

exists and does not depend on the initial state of the Markov chain $X_0=i$. Moreover, the number $k(\lambda)$ is the spectral radius of the transfer operator $\textbf{P}_{\lambda}$:

In particular, under Conditions 1 and 3, $k(\lambda)$ is a simple eigenvalue of the operator $\textbf{P}_{\lambda}$ and there is no other eigenvalue of modulus $k(\lambda)$. In addition, the function $k(\lambda)$ is analytic on $\mathbb R.$

The BPME is said to be subcritical if $k'(0)<0$, critical if $k'(0)=0$, and supercritical if $k'(0)>0$. The following identity has been established in [13]:

where $\mathbb E_{\boldsymbol{\nu}}$ is the expectation generated by the finite-dimensional distributions of the Markov chain $\left( X_n \right)_{n\geq 0}$ in the stationary regime, and $\phi (\lambda)=\mathbb{E}_{\boldsymbol{\nu}} ( \exp\{\lambda\ln f_{X_1}^{\prime}(1)\} )$, $\lambda \in \mathbb{R}.$ The relation (1.9) shows that the classification of BPMEs is consistent with the classification for an independent and identically distributed environment: when the random variables $\left( X_n \right)_{n\geq 1}$ are independent and identically distributed with common law $\boldsymbol{\nu}$, from (1.9) it follows that the two classifications coincide.
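The classification can be checked numerically once the invariant probability and the offspring means are known. The sketch below rests on two assumptions that are not displayed in the text above: that $\rho(i) = \ln f_i^{\prime}(1)$, and that the identity referred to as (1.9) gives $k'(0) = \boldsymbol{\nu}(\rho)$. The function names `drift` and `classify`, the tolerance, and the numerical inputs are hypothetical.

```python
import math


def drift(nu, means):
    """Return nu(rho) = sum_i nu(i) * ln(m_i), where m_i = f_i'(1) > 0."""
    return sum(p * math.log(m) for p, m in zip(nu, means))


def classify(nu, means, tol=1e-12):
    """Classify a BPME by the sign of nu(rho), assumed here to equal k'(0)."""
    d = drift(nu, means)
    if abs(d) <= tol:
        return "critical"
    return "subcritical" if d < 0 else "supercritical"


# Hypothetical inputs: invariant probabilities and offspring means per state.
balanced = classify([0.5, 0.5], [0.5, 2.0])   # ln(0.5) and ln(2) cancel
shrinking = classify([0.8, 0.2], [0.9, 1.2])  # negative logarithmic drift
growing = classify([0.5, 0.5], [0.5, 3.0])    # positive logarithmic drift
```

Note that the criterion averages the logarithms of the means, not the means themselves, so an environment whose arithmetic mean offspring number exceeds 1 can still be critical or subcritical.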

In the present paper we will focus on the critical case: $k'(0)=0$. Our first result establishes the exact asymptotic of the survival probability of $Z_n$ jointly with the event $\{X_n=j\}$ when the branching process starts with z particles.

Theorem 1.1. Assume Conditions 1–3 and $k'(0) = 0.$ Then there exists a positive function $u(i,z): \mathbb{X} \times \mathbb{N} \mapsto \mathbb R_{+}^{*}$ such that for any $(i,j) \in \mathbb{X}^2$ and $z \in \mathbb{N}$, $z\not=0,$

An explicit formula for u(i,z) is given in Proposition 3.4. In the case $z=1$, Theorem 1.1 has been proved in [13, Theorem 1.1]. The proof for the case $z>1$, which is not a direct consequence of the case $z=1$, will be given in Proposition 2.3.

We shall complement the previous statement by studying the asymptotic behavior of $Z_n$ given $Z_n>0$ under the following condition.

Condition 4. The random variables $\xi_i$, $i\in \mathbb X$, satisfy

Condition 4 is quite natural: it says that each parent can generate more than one child with positive probability. In the present paper it is used to prove the nondegeneracy of the limit of the martingale

in the key result Lemma 4.1.

The next result concerns the nondegeneracy of the limit law of the properly normalized number of particles $Z_n$ at time n jointly with the event $\{X_n=j\}$.

Theorem 1.2. Assume Conditions 1–4 and $k'(0) = 0.$ Then, for any $i \in \mathbb{X}$, $z \in \mathbb{N}$, $z\not=0,$ there exists a probability measure $\mu_{i,z}$ on $\mathbb{R}_{+}$ such that, for any continuity point $t\geq0$ of the distribution function $\mu_{i,z} ([0,\cdot])$ and $j \in \mathbb{X}$, it holds that

and

Moreover, it holds that $\mu_{i,z} (\{0\})=0$.

From [14, Lemma 10.3] it follows that, under Conditions 1 and 3, the quantity

\begin{equation} \sigma^2 \,{:\!=}\, \boldsymbol{\nu} \left( \rho^2 \right) - \boldsymbol{\nu} \left( \rho \right)^2 + 2 \sum_{n=1}^{+\infty} \left[ \boldsymbol{\nu} \left( \rho \textbf{P}^n \rho \right) - \boldsymbol{\nu} \left( \rho \right)^2 \right] \end{equation}

is finite and positive, i.e. $0< \sigma <\infty$. Let

be the Rayleigh distribution function. The following assertion gives the asymptotic behavior of the normalized Markov walk $S_n$ jointly with $X_n$ provided $Z_n>0$.
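The series defining $\sigma^2$ converges geometrically under Condition 1, so it can be approximated by truncation. The following sketch, in which the function name `sigma_squared`, the truncation level `n_terms`, and the numerical inputs are illustrative assumptions, evaluates $\boldsymbol{\nu}(\rho \textbf{P}^n \rho)$ by repeatedly applying $\textbf{P}$ to $\rho$.

```python
def sigma_squared(P, nu, rho, n_terms=200):
    """Approximate sigma^2 by truncating its defining series after n_terms terms."""
    size = len(P)
    mean = sum(nu[i] * rho[i] for i in range(size))          # nu(rho)
    total = sum(nu[i] * rho[i] ** 2 for i in range(size)) - mean ** 2
    g = list(rho)                                            # g = P^0 rho
    for _ in range(n_terms):
        # After this update g holds P^n rho for the current n.
        g = [sum(P[i][j] * g[j] for j in range(size)) for i in range(size)]
        cov = sum(nu[i] * rho[i] * g[i] for i in range(size)) - mean ** 2
        total += 2.0 * cov
    return total


# Hypothetical two-state chain with invariant law (0.8, 0.2) and nu(rho) = 0.
s2_markov = sigma_squared([[0.9, 0.1], [0.4, 0.6]], [0.8, 0.2], [1.0, -4.0])

# Independent environment: all rows of P equal nu, so only the variance remains.
s2_iid = sigma_squared([[0.5, 0.5], [0.5, 0.5]], [0.5, 0.5], [-1.0, 1.0])
```

In the first example $\rho$ is an eigenvector of $\textbf{P}$ with eigenvalue 0.5, so the covariance terms form a geometric series and the value can be checked by hand.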

Theorem 1.3. Assume Conditions 1–4 and $k'(0) = 0.$ Then, for any $i,j \in \mathbb{X}$, $z \in \mathbb{N}$, $z\not=0$, and $t \in \mathbb R$,

and

The following assertion is the Yaglom-type limit theorem for $\log Z_n$ jointly with $X_n$.

Theorem 1.4. Assume Conditions 1–4 and $k'(0) = 0.$ Then, for any $i, j \in \mathbb{X}$, $z \in \mathbb{N}$, $z\not=0$, and $t \geq 0$,

and

As mentioned before, in the proofs of the stated results we make use of the previous developments in the papers [13, 14]. These studies rely heavily on the existence of the harmonic function and on the asymptotic of the exit-time probability for Markov chains, which were established recently in [12, 15]; these are recalled in the next section. For recurrent Markov chains, alternative approaches based on renewal arguments are possible. The advantage of the harmonic function approach proposed here is that it could be extended to more general Markov environments which are not recurrent. In particular, with these methods one could treat multi-type branching processes in random environments.

The outline of the paper is as follows. In Section 2 we give a series of assertions for walks on Markov chains conditioned to stay positive and prove Theorem 1.1 for $z>1$. In Section 3 we state some preparatory results for branching processes. The proofs of Theorems 1.2, 1.3, and 1.4 are given in Sections 4 and 5.

We end this section by fixing some notation. As usual the symbol c will denote a positive constant depending on all previously introduced constants. In the same way the symbol c equipped with subscripts will denote a positive constant depending only on the indices and all previously introduced constants. All these constants will change in value at every occurrence. By $f \circ g$ we mean the composition of two functions f and g: $f \circ g (\cdot)=f(g(\cdot))$. The indicator of an event A is denoted by $\unicode{x1D7D9}_A$. For any bounded measurable function f on $\mathbb{X}$, random variable X in some measurable space $\mathbb{X}$, and event A, we define

2. Facts about Markov walks conditioned to stay positive

2.1. Conditioned limit theorems

We start by formulating two propositions which are consequences of the results in [12], [13], and [14].

We introduce the first time when the Markov walk $\left(y+ S_n \right)_{n\geq 0}$ becomes nonpositive: for any $y \in \mathbb{R}$, set

where $\inf \emptyset = 0.$ Conditions 1 and 3 together with $\boldsymbol{\nu}(\rho) = 0$ ensure that the stopping time $\tau_y$ is well defined and finite $\mathbb{P}_i$-almost surely ($\mathbb{P}_i$-a.s.), for any $i \in \mathbb{X}$.
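For small n, the joint probabilities $\mathbb{P}_i(X_n = j, \tau_y > n)$ studied below can be computed exactly by enumerating surviving paths. The sketch assumes, since the displayed definitions are not reproduced above, that $S_n = \sum_{k=1}^{n} \rho(X_k)$ and that $\tau_y$ is the first index $k \geq 1$ with $y + S_k \leq 0$. The function name `survival_law` and the test chain are hypothetical; the symmetric $\pm 1$ walk used in the example is lattice, so it does not satisfy Condition 3 and serves only as a hand-checkable case.

```python
def survival_law(P, rho, i, y, n):
    """Return [P_i(X_n = j, tau_y > n) for each state j] by exact enumeration.

    The dictionary maps (state, current value of y + S_k) to its probability,
    keeping only paths for which y + S_k has stayed strictly positive.
    """
    size = len(P)
    alive = {(i, y): 1.0}
    for _ in range(n):
        nxt = {}
        for (x, s), p in alive.items():
            for x2 in range(size):
                s2 = s + rho[x2]              # S_k = S_{k-1} + rho(X_k)
                if P[x][x2] > 0 and s2 > 0:   # the path survives step k
                    key = (x2, s2)
                    nxt[key] = nxt.get(key, 0.0) + p * P[x][x2]
        alive = nxt
    out = [0.0] * size
    for (x, _), p in alive.items():
        out[x] += p
    return out


# Hypothetical example: independent fair environment with rho = (-1, +1).
P = [[0.5, 0.5],
     [0.5, 0.5]]
rho = [-1, 1]
law_1 = survival_law(P, rho, i=0, y=1, n=1)
law_2 = survival_law(P, rho, i=0, y=1, n=2)
law_3 = survival_law(P, rho, i=0, y=1, n=3)
```

When $\rho$ takes non-lattice values the number of distinct positions grows quickly with n, so this enumeration is practical only for short horizons; Monte Carlo sampling would be the natural alternative for larger n.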

The following important proposition is a direct consequence of the results in [12] adapted to the case of a finite Markov chain. It proves the existence of the harmonic function related to the Markov walk $\left(y+ S_n \right)_{n\geq 0}$ and states some of its properties that will be used in the proofs of the main results of the paper.

Proposition 2.1. Assume Conditions 1 and 3 and $k'(0) = 0$. There exists a nonnegative function V on $\mathbb{X} \times \mathbb{R}$ such that the following hold:

1. For any $(i,y) \in \mathbb{X} \times \mathbb{R}$ and $n \geq 1$,
\begin{equation*}\mathbb{E}_i \left( V \left( X_n, y+S_n \right) \,;\, \tau_y > n \right) = V(i,y).\end{equation*}

2. For any $i\in \mathbb{X}$, the function $V(i,\cdot)$ is nondecreasing, and for any $(i,y) \in \mathbb{X} \times \mathbb{R}$,
\begin{equation*}V(i,y) \leq c \left( 1+\max(y,0) \right).\end{equation*}

3. For any $i \in \mathbb{X}$, $y > 0$, and $\delta \in (0,1)$,
\begin{equation*}\left( 1- \delta \right)y - c_{\delta} \leq V(i,y) \leq \left(1+\delta \right)y + c_{\delta}.\end{equation*}

We need the asymptotic of the probability of the event $\{ \tau_y > n\}$ jointly with the state of the Markov chain $(X_n)_{n\geq 1}$.

Proposition 2.2. Assume Conditions 1 and 3 and $k'(0)=0$.

1. For any $(i,y) \in \mathbb{X} \times \mathbb{R}$ and $j \in \mathbb{X}$, we have
\begin{align*}\lim_{n\to +\infty} \sqrt{n} \mathbb{P}_{i} \left( X_n = j \,,\, \tau_y > n \right) = \frac{2V(i,y) \boldsymbol{\nu} (\,j)}{\sqrt{2\pi} \sigma}.\end{align*}

2. For any $(i,y) \in \mathbb{X} \times \mathbb{R}$, $j\in \mathbb{X}$, and $n\geq 1$,
\begin{equation*}\mathbb{P}_i \left( X_n = j, \tau_y > n \right) \leq c \frac{1 + \max (y,0)}{\sqrt{n}}.\end{equation*}

For a proof of the first assertion of Proposition 2.2, see Lemma 2.11 in [13]. The second is deduced from point (b) of Theorem 2.3 of [12].

Denote by $\textrm{supp}(V) = \left\{ (i,y) \in \mathbb{X} \times \mathbb{R} : V(i,y) > 0 \right\}$ the support of the function V. By point 3 of Proposition 2.1, the harmonic function V satisfies the following property: for any $i\in \mathbb X$ there exists $y_i\geq 0$ such that $(i,y)\in \textrm{supp}(V)$ for any $y>y_i$.

In addition to the previous two propositions we need the following result, which gives the asymptotic behavior of the conditioned limit law of the Markov walk $\left(y+ S_n \right)_{n\geq 0}$ jointly with the Markov chain $\left(X_n \right)_{n\geq 0}$. It extends Theorem 2.5 of [12], which considers the asymptotic of

given the event $\{\tau_y >n\}$.

Proposition 2.3. Assume Conditions 1 and 3 and $k'(0)=0$.

1. For any $(i,y) \in \textrm{supp}(V)$, $j\in \mathbb{X}$, and $t\geq 0$,
\begin{align*}\mathbb{P}_i \left( \left.{\frac{y+S_n}{\sigma \sqrt{n}} \leq t, X_n=j } \,\middle|\,{\tau_y >n}\right. \right) \underset{n\to+\infty}{\longrightarrow} \Phi^+(t) \boldsymbol{\nu} (\,j).\end{align*}

2. There exists $\varepsilon_0 >0$ such that, for any $\varepsilon \in (0,\varepsilon_0)$, $n\geq 1$, $t_0 > 0$, $t\in[0,t_0]$, $(i,y) \in \mathbb{X} \times \mathbb{R}$, and $j \in \mathbb{X}$,
\begin{align*}&\left\lvert{ \mathbb{P}_i \left(\frac{y+S_n}{\sqrt{n} \sigma} \leq t, X_n=j, \tau_y > n \right) - \frac{2V(i,y)}{\sqrt{2\pi n}\sigma} \Phi^+(t) \boldsymbol{\nu} (\,j) }\right\rvert \\[3pt] &\hskip6cm \leq c_{\varepsilon,t_0} \frac{\left( 1+\max(y,0)^2 \right)}{n^{1/2+\varepsilon}}.\end{align*}

Proof. It is enough to prove point 2 of the proposition. This will be derived from the corresponding result in Theorem 2.5 of [Reference Grama, Lauvergnat and Le Page12]. We first establish an upper bound. Let $k=\big[n^{1/4}\big]$ and $\| \rho \|_{\infty}=\max_{i\in \mathbb X } | \rho(i) |$. Since

we have

\begin{align} &\mathbb{P}_i \left(\frac{y+S_n}{\sqrt{n} \sigma} \leq t, X_n=j, \tau_y > n \right) \nonumber \\[3pt]&\leq\mathbb{P}_i \left(\frac{y+S_{n-k}}{\sqrt{n} \sigma} \leq t + \frac{k}{\sigma\sqrt{n}} \| \rho \|_{\infty}, X_n=j, \tau_y > n-k \right) \nonumber \\[2pt] &\,{:\!=}\,I (k,n).\end{align}

By the Markov property

Now, using (1.2) and setting

we have

By Theorem 2.5 and Remark 2.10 of [Reference Grama, Lauvergnat and Le Page12], there exists $\varepsilon_0>0$ such that, for any $\varepsilon \in (0, \varepsilon_0)$, $t_0>0$, and $n\geq1$, we have, for $t_{n,k}\leq t_0$,

\begin{align} &\mathbb{P}_i \left(\frac{y+S_{n-k}}{\sqrt{n-k} \sigma} \leq t_{n,k}, \tau_y > n-k \right) \nonumber \\[3pt] &\leq \frac{2V(i,y)}{\sqrt{2\pi (n-k)}\sigma} \Phi^{+}(t_{n,k}) + c_{\varepsilon, t_0}\frac{(1+\max\{0,y\})^2 }{ (n-k)^{1/2+\varepsilon/16}}. \end{align}

Since $| t_{n,k} - t| \leq c_{t_0}\frac{1}{n^{1/4}}$ and $\Phi^+$ is smooth, we obtain

From (2.2), (2.3), (2.4), and (2.5) it follows that

\begin{align} \mathbb{P}_i &\left(\frac{y+S_n}{\sqrt{n} \sigma} \leq t, X_n=j, \tau_y > n \right) \nonumber \\[3pt]&\leq \boldsymbol \nu(j) \frac{2V(i,y)}{\sqrt{2\pi n}\sigma} \Phi^+(t)+ c_{\varepsilon, t_0}\frac{(1+\max\{0,y\})^2 }{ n^{1/2+\varepsilon/16}}. \end{align}

Now we shall establish a lower bound. With the notation introduced above, we have

\begin{align} &\mathbb{P}_i \left(\frac{y+S_n}{\sqrt{n} \sigma} \leq t, X_n=j, \tau_y > n \right) \nonumber \\[3pt] &\geq \mathbb{P}_i \left(\frac{y+S_{n-k}}{\sqrt{n} \sigma} \leq t - \frac{k}{\sigma\sqrt{n}} \| \rho \|_{\infty}, X_n=j, \tau_y > n-k \right) \nonumber \\[3pt] & -\mathbb{P}_i \left( n-k < \tau_y \leq n \right) \nonumber \\[3pt] &\,{:\!=}\,I_1 (k,n) - I_2(k,n). \end{align}

As in the proof of (2.6), we establish the lower bound

Note that $0 \geq \min_{n-k < i \leq n} \{ y+S_i \} \geq y+S_{n-k} - k \| \rho \|_{\infty}$ on the set $\{ n-k < \tau_y \leq n \}$. Set

Then

\begin{align} I_2(k,n) &= \mathbb{P}_i \left( n-k < \tau_y \leq n \right) \nonumber\\[3pt]&\leq \mathbb P_i \left( \frac{y+S_{n-k}}{\sigma\sqrt{n-k}} \leq t_{n,k}, n-k < \tau_y \leq n \right) \nonumber\\[3pt]&\leq \mathbb P_i \left( \frac{y+S_{n-k}}{\sigma\sqrt{n-k}} \leq t_{n,k}, \tau_y \geq n -k \right). \end{align}

Again by Theorem 2.5 and Remark 2.10 of [Reference Grama, Lauvergnat and Le Page12], there exists $\varepsilon_0>0$ such that, for any $\varepsilon \in (0, \varepsilon_0)$, $t_0>0$, and $n\geq1$, we have, for $t_{n,k}\leq t_0$,

\begin{align} &\mathbb{P}_i \left(\frac{y+S_{n-k}}{\sqrt{n-k} \sigma} \leq t_{n,k}, \tau_y > n-k \right) \nonumber \\&\leq \frac{2V(i,y)}{\sqrt{2\pi (n-k)}\sigma} \Phi^+(t_{n,k}) + c_{\varepsilon, t_0}\frac{(1+\max\{0,y\})^2 }{ (n-k)^{1/2+\varepsilon/16}}. \end{align}

Since $| t_{n,k} | \leq c_{t_0}\frac{1}{n^{1/4}}$ and $\Phi^+(0)=0$, we obtain

\begin{align} \frac{2V(i,y)}{\sqrt{2\pi (n-k)}\sigma} \Phi^+(t_{n,k}) &\leq \frac{2V(i,y)}{\sqrt{2\pi n}\sigma} \Phi^+(0) + c_{t_0}\frac{(1+\max\{0,y\})^2}{n^{1/2 +1/4}} \nonumber \\[3pt] &=c_{t_0}\frac{(1+\max\{0,y\})^2}{n^{1/2 +1/4}}. \end{align}

From (2.9), (2.10), and (2.11), we deduce that

Using (2.7), (2.8), and (2.12), one gets

\begin{align*} \mathbb P_i &\left(\frac{y+S_n}{\sqrt{n} \sigma} \leq t, X_n=j, \tau_y > n \right) \\[3pt]&\geq \boldsymbol \nu(j) \frac{2V(i,y)}{\sqrt{2\pi n}\sigma} \Phi^+(t)- c_{\varepsilon, t_0}\frac{(1+\max\{0,y\})^2 }{ n^{1/2+\varepsilon/16}}, \end{align*}

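which, together with (2.6), completes the proof of point 2 of the proposition. Point 1 follows from point 2 upon dividing by $\mathbb{P}_i \left( \tau_y > n \right)$, whose asymptotic is given by point 1 of Proposition 2.2.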

We need the following estimate, whose proof can be found in [Reference Grama, Lauvergnat and Le Page14].

Proposition 2.4. Assume Conditions 1 and 4 and $k'(0)=0$. Then there exists $c > 0$ such that for any $a>0$, nonnegative function $\psi \in \mathscr{C}(\mathbb X)$, $y \in \mathbb{R}$, $t \geq 0$, and $n \geq 1$,

\begin{align*} \sup_{i\in \mathbb{X}} \mathbb{E}_{i} &\left( \psi \left( X_{n} \right) \,;\, y+S_{n} \in [t,t+a] \,,\, \tau_y > n \right) \\ &\leq \frac{c \left\lVert{\psi}\right\rVert_{\infty}}{n^{3/2}} \left( 1+a^3 \right)\left( 1+t \right)\left( 1+\max(y,0) \right). \end{align*}

2.2. Change of probability measure

Fix any $(i,y) \in \textrm{supp}(V)$ and $z\in \mathbb N$. The harmonic function $V$ from Proposition 2.1 allows us to introduce the probability measure $\mathbb{P}_{i,y,z}^+$ on $(\mathbb X \times \mathbb N)^{\mathbb{N}}$ and the corresponding expectation $\mathbb{E}_{i,y,z}^+$ by the following relation: for any $n\geq 1$ and any bounded measurable $g\colon (\mathbb X \times \mathbb N )^{n} \to \mathbb{R}$,

\begin{align} {} {}&\mathbb{E}_{i,y,z}^+ \left( g \left( X_1,Z_1, \dots, X_n,Z_n \right) \right) \nonumber\\ {}&\,{:\!=}\, \frac{1}{V(i,y)} \mathbb{E}_{i,z} \big( g\left( X_1,Z_1, \dots, X_n,Z_n \right) \nonumber\\ {}&\qquad\qquad\qquad \times V\left( X_n, y+S_n \right) \,;\, \tau_y > n \big). \end{align}

The fact that the function $V$ is harmonic (by point 1 of Proposition 2.1) ensures the applicability of the Kolmogorov extension theorem and shows that $\mathbb{P}_{i,y,z}^+$ is a probability measure. In the same way we define the probability measure $\mathbb{P}_{i,y}^+$ and the corresponding expectation $\mathbb{E}_{i,y}^+$: for any $(i,y) \in \textrm{supp}(V)$, $n \geq 1$, and any bounded measurable $g\colon \mathbb{X}^n \to \mathbb{R}$,

\begin{align} &\mathbb{E}_{i,y}^+ \left( g \left( X_1, \dots, X_n \right) \right) \nonumber\\ &\,{:\!=}\, \frac{1}{V(i,y)} \mathbb{E}_{i} \left( g\left( X_1, \dots, X_n \right) V\left( X_n, y+S_n \right) \,;\, \tau_y > n \right). \end{align}
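To illustrate why harmonicity of $V$ makes the right-hand side of the change-of-measure formula a probability, the following sketch treats a toy case that is an assumption of this example, not the setting of the paper: a one-state environment with i.i.d. steps $\pm 1$ and integer starting point $y \geq 1$, for which $V(y)=y$ is harmonic for the walk killed when it becomes nonpositive. The function names are hypothetical. Taking $g \equiv 1$, the total mass $\mathbb{E}\left( V(y+S_n) \,;\, \tau_y > n \right)/V(y)$ is computed in exact rational arithmetic and should equal 1 for every $n$.

```python
from fractions import Fraction


def killed_walk_law(y, n):
    """Exact law of y + S_n on {tau_y > n} for i.i.d. +/-1 steps."""
    law = {y: Fraction(1)}
    for _ in range(n):
        nxt = {}
        for pos, p in law.items():
            for step in (-1, 1):
                new = pos + step
                if new > 0:  # the walk is killed once it is nonpositive
                    nxt[new] = nxt.get(new, Fraction(0)) + p / 2
        law = nxt
    return law


def V(pos):
    """Harmonic function of the toy killed walk (assumption: V(y) = y)."""
    return Fraction(pos)


def total_mass(y, n):
    """E(V(y + S_n); tau_y > n) / V(y), i.e. the transformed mass of g = 1."""
    law = killed_walk_law(y, n)
    return sum(p * V(pos) for pos, p in law.items()) / V(y)


if __name__ == "__main__":
    for y in (1, 2, 5):
        print(y, [total_mass(y, n) for n in (1, 2, 7)])
```

Without the weight $V$, the mass $\mathbb{P}(\tau_y > n)$ decreases in $n$, so the unweighted restrictions to $\{\tau_y > n\}$ do not form a consistent family of probability measures.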

The relation between the expectations $\mathbb{E}_{i,y,z}^+$ and $\mathbb{E}_{i,y}^+$ is given by the following identity: for any $n \geq 1$ and any bounded measurable $g\colon \mathbb{X}^n \to \mathbb{R}$,

With the help of Proposition 2.4, we have the following bounds.

Lemma 2.5. Assume Conditions 1 and 3 and $k'(0)=0$. For any $(i,y) \in \textrm{supp}(V)$, we have, for any $k \geq 1$,

In particular,

\begin{equation*} \mathbb{E}_{i,y}^+ \left( \sum_{k=0}^{+\infty} e^{-S_k} \right) \leq \frac{c \left( 1+\max(y,0) \right)e^{y}}{V(i,y)}. \end{equation*}

The proof, being similar to that in [Reference Grama, Lauvergnat and Le Page13], is left to the reader.

We need the following statements. Let $\mathscr F_n = \sigma \{ X_0,Z_0, \ldots, X_n, Z_n \}$ and let $(Y_n)_{n\geq 0}$ be a bounded $(\mathscr F_n)_{n\geq 0}$-adapted sequence.

Lemma 2.6. Assume Conditions 1–3 and $k'(0)=0$. For any $k \geq 1$, $(i,y) \in \textrm{supp}(V)$, $z\in \mathbb N$, and $j \in \mathbb{X}$,

Proof. For the sake of brevity, for any $(i,j) \in \mathbb{X}^2$, $y \in \mathbb{R}$, and $n \geq 1$, we set

Fix $k \geq 1$. By point 1 of Proposition 2.2, it is clear that for any $(i,y) \in \textrm{supp}(V)$ and $n$ large enough, $\mathbb{P}_i \left( \tau_y > n \right) > 0$. By the Markov property, for any $j \in \mathbb{X}$ and $n \geq k+1$ large enough,

\begin{align*} I_0 &\,{:\!=}\, \mathbb{E}_{i,z} \left( \left.{ Y_k \,;\, X_n = j} \,\middle|\,{ \tau_y > n }\right. \right)\\[3pt] &= \mathbb{E}_{i,z} \left( Y_k \frac{P_{n-k} \left( X_k,y+S_k, j \right)}{\mathbb{P}_i \left( \tau_y > n \right)} \,;\, \tau_y > k \right). \end{align*}

Using point 1 of Proposition 2.2, by the Lebesgue dominated convergence theorem,

\begin{align*} {}\lim_{n\to+\infty} I_0 &= {}\mathbb{E}_{i,z} \left( Y_k \frac{V \left( X_k,y+S_k \right)}{V(i,y)} \,;\, \tau_y > k \right) \boldsymbol{\nu}(\,j) \\[3pt] {}&= \mathbb{E}_{i,y,z}^+ \left( Y_k \right) \boldsymbol{\nu} (\,j). \end{align*}

Lemma 2.7. Assume that $(i,y) \in \text{supp}\ V$ and $z\in \mathbb N$. For any bounded $(\mathscr F_n)_{n\geq 0}$-adapted sequence $(Y_n)_{n\geq 0}$ such that $Y_n\to Y_{\infty}$ $\mathbb P_{i,y,z}^{+}$-a.s.,

Proof. Let $k\geq1$ and $\theta>1$. Then

\begin{align} \mathbb E_{i,z} \big( \big| Y_n-Y_k \big|; \tau_y > n \big) &= \mathbb E_{i,z} \big( \big|Y_n-Y_k \big|; \tau_y > \theta n \big) \nonumber \\[3pt] & + \mathbb E_{i,z} \big( \big|Y_n-Y_k \big|; n< \tau_y \leq \theta n \big). \end{align}

We bound the second term in the right-hand side of (2.16):

By the point 1 of Proposition 2.2, we have

\begin{align} &\lim_{n\to \infty} \sqrt{n} \mathbb P_{i,z} \big( n< \tau_y \leq \theta n \big) \nonumber \\[3pt] &= \lim_{n\to \infty} \sqrt{n} \mathbb P_{i,z} \big( \tau_y >n \big) - \lim_{n\to \infty} \sqrt{n} \mathbb P_{i,z} \big( \tau_y > \theta n \big) \nonumber\\[3pt] &= \frac{2V(i,y)}{\sqrt{2\pi}\sigma} \Big(1-\frac{1}{\sqrt{\theta}}\Big). \end{align}

Now we shall prove that

Recall that $\theta >1$. By the Markov property (conditioning on $\mathscr F_n$),

\begin{align} &\mathbb E_{i,z} \big( \big|Y_n-Y_k \big|; \tau_y > \theta n \big)\nonumber \\[3pt] &\qquad = \mathbb E_{i,z} \big( \left\lvert{Y_n-Y_k}\right\rvert P_{[(\theta-1)n]}(X_n,y+S_n) ; \tau_y > n \big), \end{align}

where we use the notation $P_{n'}(i',y') \,{:\!=}\, \mathbb P_{i',y'} \big( \tau_y^{\prime} > n' \big)$. By point 2 of Proposition 2.2 and point 3 of Proposition 2.1, there exists $y_0>0$ such that for $i' \in \mathbb X$, $y' > y_0$, and $n'\in\mathbb N$,

Representing the right-hand side of (2.20) as a sum of two terms and using (2.21) gives

\begin{align} &\sqrt{n}\mathbb E_{i,z} \big( \big|Y_n-Y_k \big|;\ \tau_y > \theta n \big)\nonumber \\[3pt] & = \sqrt{n}\mathbb E_{i,z} \big( \left\lvert{Y_n-Y_k}\right\rvert P_{[(\theta-1)n]}(X_n,y+S_n) ;\ y+S_n \leq y_0, \tau_y > n \big) \nonumber \\[3pt] & \quad + \sqrt{n}\mathbb E_{i,z} \big( \left\lvert{Y_n-Y_k}\right\rvert P_{[(\theta-1)n]}(X_n,y+S_n) ;\ y+S_n > y_0, \tau_y > n \big) \nonumber \\[3pt] &\leq c \sqrt{n}\mathbb P_{i} \big( y+S_n \leq y_0, \tau_y > n \big) \nonumber \\[3pt] &\quad + \frac{c}{\sqrt{\theta-1}} \mathbb E_{i,z} \big( \left\lvert{Y_n-Y_k}\right\rvert V(X_n, y+S_n);\ \tau_y > n \big). \end{align}

Using the point 1 of Proposition 2.3 and the point 1 of Proposition 2.2, we have

By the change-of-measure formula (2.13),

Letting first $n\to\infty$ and then $k\to\infty$, by the Lebesgue dominated convergence theorem,

From (2.22)–(2.25) we deduce (2.19). Now (2.16)–(2.19) imply that, for any $\theta>1$,

Since $\theta$ can be taken arbitrarily close to 1, we conclude the claim of the lemma.

The next assertion is an easy consequence of Lemmata 2.6 and 2.7.

Lemma 2.8. Assume that $(i,y) \in \text{supp}\ V$, $j\in \mathbb{X}$, and $z\in \mathbb N$. For any bounded $(\mathscr F_n)_{n\geq 0}$-adapted sequence $(Y_n)_{n\geq 0}$ such that $Y_n\to Y_{\infty}$ $\mathbb P_{i,y,z}^{+}$-a.s.,

Proof. For any $n > k \geq1$, we have

\begin{align} &\sqrt{n} \mathbb E_{i,z} \big( Y_n;\ X_n=j, \tau_y > n \big) \nonumber \\[3pt] &= \sqrt{n} \mathbb E_{i,z} \big( Y_k;\ X_n=j, \tau_y > n \big) + \sqrt{n} \mathbb E_{i,z} \big( Y_n-Y_k;\ X_n=j, \tau_y > n \big). \end{align}

By Lemma 2.6, the first term in the right-hand side of (2.26) converges to

as $n\to\infty$, where $\lim_{k\to \infty} \mathbb E_{i,y,z}^{+} Y_{k} = \mathbb E_{i,y,z}^{+} Y_{\infty}$. By Lemma 2.7, the second term on the right-hand side of (2.26) vanishes, which completes the proof.

2.3. The dual Markov chain

Note that the invariant measure $\boldsymbol{\nu}$ is positive on $\mathbb{X}$. Therefore the dual Markov kernel

is well defined. On an extension of the probability space $(\Omega, \mathscr{F}, \mathbb{P})$ we consider the dual Markov chain $\left( X_n^* \right)_{n\geq 0}$ with values in $\mathbb{X}$ and with transition probability $\textbf{P}^*$. The dual chain $\left( X_n^* \right)_{n\geq 0}$ can be chosen to be independent of the chain $\left( X_n \right)_{n\geq 0}$. Accordingly, the dual Markov walk $\left(S_n^* \right)_{n\geq 0}$ is defined by setting

For any $y \in \mathbb{R}$ define the first time when the Markov walk $\left(y+ S_n^* \right)_{n\geq 0}$ becomes nonpositive:

For any $i\in \mathbb{X}$, denote by $\mathbb{P}_i^*$ and $\mathbb{E}_i^*$ the probability and the associated expectation generated by the finite-dimensional distributions of the Markov chain $( X_n^* )_{n\geq 0}$ starting at $X_0^* = i$.
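For concreteness, here is a small numerical sketch of a dual kernel on a three-state space. It assumes the usual time-reversal formula $\textbf{P}^*(i,j) = \boldsymbol{\nu}(j)\textbf{P}(j,i)/\boldsymbol{\nu}(i)$; the transition matrix and the helper names are hypothetical and chosen only for illustration. The code computes $\boldsymbol{\nu}$ exactly, builds $\textbf{P}^*$, and checks that $\textbf{P}^*$ is stochastic and leaves $\boldsymbol{\nu}$ invariant.

```python
from fractions import Fraction


def stationary(P):
    """Invariant probability of an irreducible stochastic matrix P.

    Solves nu (P - I) = 0 with sum(nu) = 1 by Gauss-Jordan elimination
    in exact rational arithmetic.
    """
    n = len(P)
    # Rows of A are the equations of (P^T - I) x = 0; the last equation
    # is replaced by the normalisation x_1 + ... + x_n = 1.
    A = [[P[j][i] - (1 if i == j else 0) for j in range(n)] for i in range(n)]
    A[-1] = [Fraction(1)] * n
    b = [Fraction(0)] * (n - 1) + [Fraction(1)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        inv = 1 / A[col][col]
        A[col] = [a * inv for a in A[col]]
        b[col] *= inv
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [ar - factor * ac for ar, ac in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return b


def dual_kernel(P, nu):
    """Assumed definition: P*(i, j) = nu(j) P(j, i) / nu(i)."""
    n = len(P)
    return [[nu[j] * P[j][i] / nu[i] for j in range(n)] for i in range(n)]


# Hypothetical irreducible, aperiodic, non-reversible chain on {0, 1, 2}.
half = Fraction(1, 2)
P = [
    [Fraction(0), Fraction(1), Fraction(0)],
    [Fraction(0), half, half],
    [half, Fraction(0), half],
]
nu = stationary(P)
P_star = dual_kernel(P, nu)

if __name__ == "__main__":
    print("nu =", nu)
    for row in P_star:
        print(row)
```

Positivity of $\boldsymbol{\nu}$ is what makes the division by $\boldsymbol{\nu}(i)$ legitimate; in this example $\textbf{P}^* \neq \textbf{P}$, so the chain is not reversible.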

It is easy to verify (see [Reference Grama, Lauvergnat and Le Page13]) that $\boldsymbol{\nu}$ is also $\textbf{P}^*$-invariant and that Conditions 1 and 3 are satisfied for $\textbf{P}^*$. This implies that Propositions 2.1–2.4, formulated in Subsection 2.1, hold also for the dual Markov chain $(X_n^*)_{n\geq 0}$ and the Markov walk $\left(y+ S_n^* \right)_{n\geq 0}$, with the harmonic function $V^*$ such that, for any $(i,y) \in \mathbb{X} \times \mathbb{R}$ and $n \geq 1$,

The following duality property is obvious (see [Reference Grama, Lauvergnat and Le Page13]).

Lemma 2.9. (Duality.) For any $n\geq 1$ and any function $g\colon \mathbb{X}^n \to \mathbb{C}$,

3. Preparatory results for branching processes

Throughout the remainder of the paper we will use the following notation. For $s \in [0,1)$, let

and, by continuity,

In addition, for $s \in [0,1),\ z\in \mathbb{N},\ z\neq 0$, let $g_{z}(s)=s^z$ and

and, by continuity,

For any $n\geq 1$, $z\in \mathbb{N},\ z\not= 0$, and $s\in [0,1]$, the following quantity will play an important role in our study:

Under Condition 2, for any $i\in \mathbb X$ and $s\in [0,1]$ we have $f_i(s)\in [0,1]$ and $f_{X_1}\circ \cdots \circ f_{X_n} (s) \in [0,1]$. This implies that, for any $s\in [0,1]$,

For any $n\geq 1$ and $z\in \mathbb{N},\ z\neq 0$, the function $s\mapsto q_{n,z} (s)$ is convex on $[0,1]$. Since the sequence $( \xi_i^{n,j} )_{j,n \geq 1}$ is independent of the Markov chain $\left( X_n \right)_{n\geq 0}$, with $s=0$, we have, $\mathbb P_{i,y,z}^{+}$-a.s.,

Note also that $\{ Z_{n} >0\} \supset \{ Z_{n+1} >0\}$ and therefore, for $n\geq 1$,

Taking the limit as $n\to\infty$, $\mathbb P_{i,y,z}^{+}$-a.s.,

\begin{align} \lim_{n\to \infty} q_{n,z} (0) &= \lim_{n\to \infty} \mathbb P_{i,y,z}^{+} (Z_n >0 \big| (X_k)_{k\geq 0} ) \nonumber \\[3pt] &= \mathbb P_{i,y,z}^{+} ( \cap_{n\geq 1} \{ Z_n >0\} \big| (X_k)_{k\geq 0} ). \end{align}

Moreover, by convexity of the function $q_{n,z}(s)$ we have

The following formula (whose proof is left to the reader) is similar to the well-known statements from the papers by Agresti [Reference Agresti2] and Geiger and Kersting [Reference Geiger and Kersting9]: for any $s \in [0,1)$ and $n \geq 1$,

\begin{align} \frac{1}{q_{n,z} (s)} &= \frac{1}{z f^{\prime}_{X_1}(1) \cdots f^{\prime}_{X_n}(1) (1-s) } \nonumber \\[3pt] &+ \frac{1}{z}\sum_{k=1}^{n} \frac{\varphi_{X_{k}} \circ f_{X_{k+1}} \circ \cdots \circ f_{X_n} (s)}{f^{\prime}_{X_1}(1) \cdots f^{\prime}_{X_{k-1}}(1) } \nonumber\\[3pt] &+ \psi_{z} \circ f_{X_{1}} \circ \cdots \circ f_{X_n} (s). \end{align}
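Since the proof of (3.6) is left to the reader, a quick exact check on a fixed environment may be helpful. The sketch below rests on assumptions about definitions that are not reproduced in this section: $q_{n,z}(s) = 1 - \left( f_{X_1}\circ \cdots \circ f_{X_n}(s) \right)^z$, $\varphi_i(s) = \frac{1}{1-f_i(s)} - \frac{1}{f_i'(1)(1-s)}$, and $\psi_z(s) = \frac{1}{1-s^z} - \frac{1}{z(1-s)}$. The offspring laws and all names in the code are hypothetical. Under these assumptions, both sides of (3.6) are evaluated in rational arithmetic and compared.

```python
from fractions import Fraction


class OffspringLaw:
    """Offspring law with probabilities p_0, ..., p_K (hypothetical data)."""

    def __init__(self, probs):
        self.probs = [Fraction(p) for p in probs]
        assert sum(self.probs) == 1

    def f(self, s):
        """Generating function f(s) = sum_k p_k s^k."""
        return sum(p * s**k for k, p in enumerate(self.probs))

    def mean(self):
        """f'(1), the mean number of offspring."""
        return sum(k * p for k, p in enumerate(self.probs))

    def phi(self, s):
        """Assumed definition of phi: 1/(1 - f(s)) - 1/(f'(1)(1 - s))."""
        return 1 / (1 - self.f(s)) - 1 / (self.mean() * (1 - s))


def compose(laws, s):
    """f_1 o f_2 o ... o f_n (s); the innermost function is the last law."""
    for law in reversed(laws):
        s = law.f(s)
    return s


def psi(z, s):
    """Assumed definition of psi_z: 1/(1 - s^z) - 1/(z(1 - s))."""
    return 1 / (1 - s**z) - 1 / (z * (1 - s))


def inverse_q_direct(laws, z, s):
    """1 / q_{n,z}(s) under the assumed definition of q_{n,z}."""
    return 1 / (1 - compose(laws, s) ** z)


def inverse_q_formula(laws, z, s):
    """Right-hand side of (3.6) for the fixed environment `laws`."""
    product_of_means = Fraction(1)
    for law in laws:
        product_of_means *= law.mean()
    total = 1 / (z * product_of_means * (1 - s))
    prefix = Fraction(1)  # f'_1(1) ... f'_{k-1}(1), empty product for k = 1
    for k, law in enumerate(laws):
        inner = compose(laws[k + 1:], s)
        total += law.phi(inner) / (z * prefix)
        prefix *= law.mean()
    return total + psi(z, compose(laws, s))


if __name__ == "__main__":
    env = [OffspringLaw(["1/4", "1/2", "1/4"]),
           OffspringLaw(["1/2", "0", "1/2"]),
           OffspringLaw(["1/10", "3/5", "3/10"])]
    s = Fraction(1, 3)
    print(inverse_q_direct(env, 2, s) == inverse_q_formula(env, 2, s))
```

The identity is a telescoping consequence of the assumed definitions, so exact equality (not just numerical closeness) is expected for every environment with positive means and every $s \in [0,1)$.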

We can rewrite (3.6) in the following more convenient form: for any $s \in [0,1)$ and $n \geq 1$,

\begin{align} q_{n,z} (s)^{-1} &= \frac{1}{z}\bigg( \frac{e^{-S_n}}{1-s} + \sum_{k=0}^{n-1} e^{-S_{k}} \eta_{k+1,n}(s) \bigg) \nonumber\\[3pt] {}&+ \psi_{z} \circ f_{X_{1}} \circ \cdots \circ f_{X_n} (s), \end{align}

where

Since $\frac{1}{2}\varphi(0) \leq \varphi(s) \leq 2 \varphi(1)$, for any $k \in \{ 1, \dots, m \}$,

By Theorem 5 of [Reference Athreya and Karlin4], for any $(i,y) \in \textrm{supp}(V)$, $s \in[0,1)$, $m\geq 1$, and $k \in \{ 1, \dots, m \}$, there exists a random variable $\eta_{k,\infty}(s)$ such that

everywhere, and by (3.8), for any $s \in[0,1)$ and $k \geq 1$,

In the same way,

everywhere. For any $s \in [0,1)$, define $q_{\infty,z}(s)$ by setting

\begin{equation} {} {}q_{\infty,z}(s)^{-1} \,{:\!=}\, \frac{1}{z}\left[ \sum_{k=0}^{+\infty} e^{-S_k} \eta_{k+1,\infty}(s) \right] + \psi_{z,\infty}(s). \end{equation}

By Lemma 2.5, we have that

Lemma 3.1. Assume Conditions 1 and 3 and $k'(0)=0$. For any $(i,y) \in \text{supp}\ V$, $z\in \mathbb{N},\ z\neq 0$, and $s\in [0,1)$,

and

Proof. We give a sketch only. Following the proof of Lemma 3.2 in [Reference Grama, Lauvergnat and Le Page13], for any $(i,y) \in \text{supp}\ V$, by (3.7), (3.8), and (3.10), we obtain

\begin{align} \mathbb{E}_{i,y}^+ \left( \left\lvert{q_{n,z}^{-1}(s) - q_{\infty,z}^{-1}(s)}\right\rvert \right) &\leq \frac{1}{z(1-s)}\mathbb{E}_{i,y}^+ \left( e^{-S_n} \right) \nonumber\\[3pt] &+ \frac{1}{z} \mathbb{E}_{i,y}^+ \left( \sum_{k=0}^{l} e^{-S_k} \left\lvert{\eta_{k+1,n}(s) - \eta_{k+1,\infty}(s) }\right\rvert \right) \nonumber\\[3pt] &+ \frac{2\eta}{z} \mathbb{E}_{i,y}^+ \left( \sum_{k=l+1}^{+\infty} e^{-S_k} \right) \nonumber\\[3pt] {}&+ \mathbb{E}_{i,y}^+ \big|\psi_{z}\circ f_{X_1} \circ\dots \circ f_{X_n}(s) - \psi_{z,\infty}(s)\big|. \end{align}

The last term on the right-hand side of (3.14) converges to 0 as $n\to \infty$ by (3.11). By Lemma 2.5 and the Lebesgue dominated convergence theorem, we have

\begin{align*} {}\limsup_{n\to\infty} \mathbb{E}_{i,y}^+ \left( \left\lvert{q_{n,z}^{-1}(s) - q_{\infty,z}^{-1}(s)}\right\rvert \right) {}\leq \frac{2\eta}{z} \mathbb{E}_{i,y}^+ \left( \sum_{k=l+1}^{+\infty} e^{-S_k} \right). \end{align*}

Taking the limit as $l\to \infty$, again by Lemma 2.5, we conclude the first assertion of the lemma. The second assertion follows from the first one, since $q_{n,z}(s) \leq 1$ and $q_{\infty,z}(s)\leq 1$.
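
To make this last step explicit, here is a short sketch. It assumes that the second assertion concerns $\lvert q_{n,z}(s) - q_{\infty,z}(s)\rvert$ and that both quantities are strictly positive, so that their inverses are well defined. For any $a,b\in(0,1]$ we have $\lvert a-b\rvert = ab\,\lvert a^{-1}-b^{-1}\rvert \leq \lvert a^{-1}-b^{-1}\rvert$, hence

\begin{align*} \left\lvert q_{n,z}(s) - q_{\infty,z}(s) \right\rvert = q_{n,z}(s)\, q_{\infty,z}(s) \left\lvert q_{n,z}^{-1}(s) - q_{\infty,z}^{-1}(s) \right\rvert \leq \left\lvert q_{n,z}^{-1}(s) - q_{\infty,z}^{-1}(s) \right\rvert. \end{align*}

Taking the expectation $\mathbb{E}_{i,y}^+$ on both sides transfers the convergence of the inverses to the quantities themselves.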

Lemma 3.2. Assume Conditions 1 and 3 and $k'(0)=0$. For any $(i,y)\in \text{supp}\ V$ and $z\in \mathbb N$, $z\not=0$, we have, for any $k \geq 1$, $\mathbb P_{i,y,z}^{+}$-a.s.,

Proof. By (3.4) we have, $\mathbb P_{i,y,z}^{+}$-a.s.,

By Lemma 2.5 and a monotone convergence argument,

Thus, $\mathbb P_{i,y}^{+}$-a.s.,

which ends the proof of the lemma.

We will make use of the following lemma.

Lemma 3.3. There exists a constant c such that, for any $z\in \mathbb N$, $z\not=0$, and $y\geq 0$ sufficiently large,

Proof. We follow the same line as the proof of Theorem 1.1 in [Reference Grama, Lauvergnat and Le Page13]. First, we have

Using (3.5) and the fact that $q_{n,z}(0)$ is nonincreasing in n, we have

Setting $B_{n,j}=\{ -(j+1) < \min_{1\leq k\leq n} (y+S_k) \leq -j \}$, this implies

\begin{align*} &\mathbb P_{i,z}(Z_n>0, \tau_y \leq n) \\[3pt]& \leq z \mathbb E_{i,0}(e^{\min_{1\leq k\leq n} S_k};\ \tau_y \leq n) \\[3pt]&\leq z e^{-y}\sum_{j=1}^{\infty} \mathbb E_{i,0}(e^{\min_{1\leq k\leq n} (y+S_k)};\ B_{n,j}, \tau_y \leq n)\\[3pt]&\leq z e^{-y}\sum_{j=1}^{\infty} e^{-j} \mathbb P_{i,0}( \tau_{y+j+1} > n). \end{align*}
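
The last two inequalities in the chain above can be unpacked as follows. This is a sketch only, and it assumes (the definition is not restated in this section) that $\tau_y$ is the first time $k\geq 1$ at which $y+S_k$ leaves the positive half-line. On $B_{n,j}$ the running minimum of $y+S_k$ is at most $-j$ and strictly larger than $-(j+1)$, so that

\begin{align*} e^{\min_{1\leq k\leq n} S_k} = e^{-y}\, e^{\min_{1\leq k\leq n} (y+S_k)} \leq e^{-y} e^{-j} \quad \text{on } B_{n,j}, \qquad B_{n,j} \subset \Big\{ \min_{1\leq k\leq n} (y+j+1+S_k) > 0 \Big\} \subset \{ \tau_{y+j+1} > n \}. \end{align*}

The first relation produces the factor $e^{-y}e^{-j}$, and the second replaces the event $B_{n,j}$ by the survival event of the walk started from the higher level $y+j+1$.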

Using point 2 of Proposition 2.2, we obtain the assertion of the lemma.

It is known from the results in [Reference Grama, Lauvergnat and Le Page12] that when y is sufficiently large, $(i,y) \in \text{supp}\ V$. For $(i,y) \in \text{supp}\ V$, set

Theorem 1.1 is a direct consequence of the following proposition, which extends Theorem 1.1 in [Reference Grama, Lauvergnat and Le Page13] to the case $z>1$.

Proposition 3.4. Assume Conditions 1–4. Suppose that $i\in \mathbb X$ and $z\in \mathbb N, z \neq 0$. Then for any $i \in \mathbb X$ the limit as $y\to \infty$ of $V(i,y) U(i,y,z)$ exists and satisfies

Moreover,

Proof. By Lemma 2.8 and (3.17), for $(i,y)\in \text{supp}\ V$ and $j \in \mathbb X$,

\begin{align} &\lim_{n\to\infty}\sqrt{n} \mathbb P_{i,z} \left( Z_n > 0 \,,\, X_n = j \,,\, \tau_y > n \right) \nonumber \\[3pt]&= \frac{2V(i,y)}{\sqrt{2\pi}\sigma} \boldsymbol \nu (j)\mathbb P_{i,y,z}^{+} (\cap_{n\geq1} \{ Z_n>0\}) \nonumber \\[3pt]&= \frac{2V(i,y)}{\sqrt{2\pi}\sigma} U(i,y,z) \boldsymbol \nu (j), \end{align}

and

\begin{align} \sqrt{n} \mathbb P_{i,z} (Z_n>0, X_n=j)& = \sqrt{n} \mathbb P_{i,z} (Z_n>0, X_n=j, \tau_y > n) \nonumber \\[3pt] & +\sqrt{n} \mathbb P_{i,z} (Z_n>0, X_n=j, \tau_y \leq n) \nonumber \\[3pt] &= J_1(n,y) + J_2(n,y). \end{align}

By Lemma 3.3,

From (3.18), (3.19), and (3.20), when y is sufficiently large,

\begin{align} \limsup_{n\to\infty} \sqrt{n} \mathbb P_{i,z} (Z_n>0, X_n=j)&\leq\frac{2V(i,y)}{\sqrt{2\pi}\sigma} U(i,y,z) \boldsymbol \nu (j) \nonumber \\[3pt]&+ c z e^{-y} (1+ \max\{y,0 \}) <\infty. \end{align}
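
As a quick check on the correction term in the bound above, assume only that the constant c is nonnegative. For $y\geq 0$ the exponential decay dominates the linear growth:

\begin{align*} 0 \leq c z e^{-y} (1+ \max\{y,0 \}) = c z (1+y) e^{-y} \to 0 \quad \text{as } y\to\infty. \end{align*}

So, for fixed $z$, this term becomes negligible for large $y$, and the behaviour of the bound is then governed by the term containing $V(i,y) U(i,y,z)$.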

Similarly, when y is sufficiently large,

Since

![]() $\mathbb{P}_{i,z} \left( Z_n > 0 \,,\, X_n = j \,,\, \tau_y > n \right)$

is nondecreasing in y, from (3.18) it follows that the function