Video game music is often sonically similar to film music, particularly when games use musical styles that draw on precedent in cinema. Yet there are distinct factors in play that are specific to creating and producing music for games. These factors include:

technical considerations arising from the video game technology,

interactive qualities of the medium, and

aesthetic traditions of game music.

Apart from books and manuals that teach readers how to use particular game technologies (such as Ciarán Robinson’s Game Audio with FMOD and Unity),Footnote 1 some composers and audio directors have written about their processes in more general terms. Rob Bridgett,Footnote 2 Winifred Phillips,Footnote 3 George Sanger,Footnote 4 Michael Sweet,Footnote 5 Chance Thomas,Footnote 6 and Gina Zdanowicz and Spencer Bambrick,Footnote 7 amongst others, have written instructive guides that help to convey their approaches and philosophies to music in games. Each of these volumes has a slightly different approach and focus. Yet all discussions of creating and producing game music deal with the three interlinked factors named above.

Music is one element of the video game; as such it is affected by technical aspects of the game as a whole. The first part of this book considered how sound chip technology defined particular parameters for chiptune composers. Even if modern games do not use sound-producing chips like earlier consoles, technical properties of the hardware and software still have implications for the music. These might include memory and processing power, output hardware, or other factors determined by the programming. In most cases, musical options available to the composer/audio director are determined by the (negotiated) allocation of time, budget and computational resources to audio by the game directors. This complexity, as well as the variety of audio elements of a game, is part of the reason why large game productions typically have an ‘audio director’. The role of the audio director is to supervise all sound in the game, managing the creation of sound materials (music, sound effects, dialogue), while co-ordinating with the teams programming other aspects of the game.

For smaller productions, such as mobile games, indie games or games that simply do not use much music, the tasks of an audio director are outsourced and/or co-ordinated by a game producer. Additionally, in-house composers are rather uncommon; most composers work for hire on a specific project, and are therefore often not permanent team members.Footnote 8

Composers must consider how their music will interact with the other elements of the game. Perhaps chief amongst these concerns is the question of how the music will respond to the player and gameplay. There are a variety of ways that music might do so. A game might simply feature a repeating loop that begins when the game round starts, and repeats until the player wins or loses. Or a game might involve more complicated interactive systems. Sometimes the music programming is handled by specialist ‘middleware’ software, like FMOD and Wwise, which are specifically designed to allow advanced audio options. In any case, the composer and audio directors are tasked with ensuring that music fits with the way the material will be deployed in the context of the game.

Karen Collins has defined a set of terms for describing this music. She uses ‘dynamic music’, as a generic term for ‘changeable’ music; ‘adaptive’ for music that changes in reaction to the game state, not in direct response to the player’s actions (such as music that increases in tempo once an in-game countdown timer reaches a certain value); and ‘interactive’ for music that does change directly as a result of the player’s actions, such as when music begins when the player’s avatar moves into a new location.Footnote 9

Unlike the fixed timings of a film, games often have to deal with uncertainty about precisely when particular events will occur, as this depends on the player’s actions. Composers frequently have to consider whether and how music should respond to events in the game. Musical reactions have to be both prompt and musically coherent. There are a great variety of approaches to the temporal indeterminacy of games, but three of the most common are loops, sections and layers.Footnote 10 Guy Michelmore, in Chapter 4 of this volume, outlines some of the challenges and considerations of writing using loops, sections and layers.

A less common technique is the use of generative music, where musical materials are generated on the fly. As Zdanowicz and Bambrick put it, ‘Instead of using pre-composed modules of music’, music is ‘triggered at the level of individual notes’.Footnote 11 Games like Spore, Proteus, No Man’s Sky and Mini Metro have used generative techniques. This approach seems best suited to games where procedural generation is also evident in other aspects of the game. Generative music would not be an obvious choice for game genres that expect highly thematic scores with traditional methods of musical development.

As the example of generative music implies, video games have strong traditions of musical aesthetics, which also play an important part in how game music is created and produced. Perhaps chief amongst such concerns is genre. In the context of video games, the word ‘genre’ is usually used to refer to the type of game (strategy game, stealth game, first-person shooter), rather than the setting of the game (Wild West, science fiction, etc.). Different game genres have particular conventions of how music is implemented. For instance, it is typical for a stealth game to use music that reacts when the player’s avatar is discovered, while gamers can expect strategy games to change music based on the progress of the battles, and Japanese role-playing game (RPG) players are likely to expect a highly thematic score with character and location themes.

K. J. Donnelly has emphasized that, while dynamic music systems are important, we should be mindful that in a great many games, music does not react to the ongoing gameplay.Footnote 12 Interactivity may be an essential quality of games, but this does not necessarily mean that music has to respond closely to the gameplay, nor that a more reactive score is intrinsically better than non-reactive music. Music that jumps rapidly between different sections, reacting to every single occurrence in a game, can be annoying or even ridiculous. Abrupt and awkward musical transitions can draw unwanted attention to the implementation. While a well-made composition is, of course, fundamental, good implementation into the game is also mandatory for a successful score.

The genre of the game will also determine the cues required in the game. Most games will require some kind of menu music, but loading cues, win/lose cues, boss music, interface sounds and so on, will be highly dependent on the genre, as well as the particular game. When discussing game music, it is easy to focus exclusively on music heard during the main gameplay, though we should recognize the huge number of musical elements in a game. Even loading and menu music can be important parts of the experience of playing the game.Footnote 13

The highly collaborative and interlinked nature of game production means that there are many agents and agendas that affect the music beyond the composer and audio director. These can include marketing requirements, broader corporate strategy of the game publisher and developer, and technical factors. Many people who are not musicians or directly involved with audio make decisions that affect the music of a game. The process of composing and producing music for games balances the technical and financial resources available to creators with the demands of the medium and the creative aspirations of the producers.

Even more than writing music for film, composing for video games is founded on the principle of interactive relationships. Of course, interactivity is particularly obvious when games use dynamic music systems to allow music to respond to the players. But it is also reflected more generally in the collaborative nature of game music production and the way that composers produce music to involve players as active participants, rather than simply as passive audience members. This chapter will outline the process of creating video game music from the perspective of the composer. The aim is not to provide a definitive model of game music production that applies for all possible situations. Instead, this chapter will characterize the processes and phases of production that a game composer will likely encounter while working on a project, and highlight some of the factors in play at each stage.

Beginning the Project

Though some games companies have permanent in-house audio staff, most game composers work as freelancers. As with most freelance artists, game composers typically find themselves involved with a project through some form of personal connection. This might occur through established connections or through newly forged links. For the latter, composers might pitch directly to developers for projects that are in development, or they might network at professional events like industry conferences (such as Develop in the UK). As well as cultivating connections with developers, networking with other audio professionals is important, since many composers are given opportunities for work by their peers.

Because of the technical complexity of game production, and the fact that games often require more music than a typical film or television episode, video games frequently demand more collaborative working patterns than non-interactive media. It is not uncommon for games to involve teams of composers. One of the main challenges of artistic collaborations is for each party to have a good understanding of the other’s creative and technical processes, and to find an effective way to communicate. Unsurprisingly, one positive experience of a professional relationship often leads to another. As well as the multiple potential opportunities within one game, composers may find work as a result of previous fruitful collaborations with other designers, composers or audio directors. Since most composers find work through existing relationships, networking is crucial for any composer seeking a career in writing music for games.

Devising the Musical Strategy

The first task facing the composer is to understand, or help devise, the musical strategy for the game. This is a process that fuses practical issues with technical and artistic aspirations for the game. The game developers may already have a well-defined concept for the music of their game, or the composer might shape this strategy in collaboration with the developers.

Many factors influence a game’s musical strategy. The scale and budget of the project are likely well outside the composer’s control. The demands of a mobile game that only requires a few minutes of music will be very different from those of a high-budget title from a major studio that might represent years of work. Composers should understand how the game is assembled and whether they are expected to be involved in the implementation/integration of music into the game, or simply delivering the music (either as finished cues or as stems/elements of cues). It should also become clear early in the process whether there is sufficient budget to hire live performers. If the budget will not stretch to live performance, the composer must rely on synthesized instruments, and/or their own performing abilities.

Many technical decisions are intimately bound up with the game’s interactive and creative ethos. Perhaps the single biggest influence on the musical strategy of a game is the interactive genre or type of game (whether it is a first-person shooter, strategy game, or racing game, and so on). The interactive mechanics of the game will heavily direct the approach to the game’s music, partly as a result of precedent from earlier games, and partly because of the music’s engagement with the player’s interactivity.Footnote 1 These kinds of broad-level decisions will affect how much music is required for the game, and how any dynamic music should be deployed. For instance, does the game have a main character? Should the game adopt a thematic approach? Should it aim to respond to the diversity of virtual environments in the game? Should it respond to player action? How is the game structured, and does musical development align with this structure?

Part of the creative process will involve the composer investigating these questions in tandem with the developers, though some of the answers may change as the project develops. Nevertheless, having a clear idea of the music’s integration into the game and of the available computational/financial resources is essential for the composer to effectively begin creating the music for the game.

The film composer may typically be found writing music to a preliminary edit of the film. In comparison, the game composer is likely to be working with materials much further away from the final form of the product.Footnote 2 It is common for game composers to begin writing based on incomplete prototypes, design specifications and concept art/mood boards supplied by the developers. From these materials, composers will work in dialogue with the developers to refine a style and approach for the game. For games that are part of a series or franchise, the musical direction will often iterate on the approach from previous instalments, even if the compositional staff are not retained from one game to the sequel.

If a number of composers are working on a game, the issue of consistency must be considered carefully. It might be that the musical style should be homogenous, and so a strong precedent or model must be established for the other composers to follow (normally by the lead composer or audio director). In other cases, multiple composers might be utilized precisely because of the variety they can bring to a project. Perhaps musical material by one composer could be developed in different ways by other composers, which might be heard in contrasting areas of the game.

Unlike a film or television episode, where the composer works primarily with one individual (the director or producer), in a game, musical discussions are typically held between a number of partners. The composer may receive feedback from the audio director, the main creative director or even executives at the publishers.Footnote 3 This allows for a multiplicity of potential opinions or possibilities (which might be liberating or frustrating, depending on the collaboration). The nature of the collaboration may also be affected by the musical knowledge of the stakeholders who have input into the audio. Once the aesthetic direction has been established, and composition is underway, the composer co-ordinates with the audio director and/or technical staff to ensure that the music fits with the implementation plans and technical resources of the game.Footnote 4 Of course, the collaboration will vary depending on the scale of the project and company – a composer writing for a small indie game produced by a handful of creators will use a different workflow compared to a high-budget game with a large audio staff.

Methods of Dynamic Composition

One of the fundamental decisions facing the composer and developers is how the music should react to the player and gameplay. This might simply consist of beginning a loop of music when the game round begins, and silencing the loop when it ends, or it might be that the game includes more substantial musical interactivity.

If producers decide to deploy more advanced musical systems, this has consequences for the finite technical resources available for the game as it runs. Complex interactive music systems will require greater system resources such as processing power and memory. The resources at the composer’s disposal will have to be negotiated with the rest of the game’s architecture. Complex dynamic music may also involve the use of middleware systems for handling the interactive music (such as FMOD, Wwise or a custom system), which would need to be integrated into the programming architecture of the game.Footnote 5 If the composer is not implementing the interactive music themselves, further energies must be dedicated to integrating the music into the game. In all of these cases, because of the implications for time, resources and budget, as well as the aesthetic result, the decisions concerning dynamic music must be made in dialogue with the game development team, and are not solely the concern of the composer.

The opportunity to compose for dynamic music systems is one of the reasons why composers are attracted to writing for games. Yet a composer’s enthusiasm for a dynamic system may outstrip that of the producers or even the players. And, as noted elsewhere in this book, we should be wary of equating more music, or more dynamic music, with a better musical experience.

Even the most extensive dynamic systems are normally created from relatively straightforward principles. Either the selection and order of musical passages is affected by the gameplay (‘horizontal’ changes), or the game affects the combinations of musical elements heard simultaneously (‘vertical’ changes). Of course, these two systems can be blended, and both can be used to manipulate small or large units of music. Most often, looped musical passages will play some part in the musical design, in order to account for the indeterminacy of timing in this interactive medium.

Music in games can be designed to loop until an event occurs, at which point the loop will end, or another piece will play. Writing in loops is tricky, not least when repetition might prompt annoyance. When writing looped cues, composers have to consider several musical aspects including:

Harmonic structure, to avoid awkward harmonic shifts when the loop repeats. Many looped cues use a cadence to connect the end of the loop back to the beginning.

Timbres and textures, so that musical statements and reverb are not noticeably cut off when the loop repeats.

Melodic material, which must avoid listener fatigue. Winifred Phillips suggests using continual variation to mitigate this issue.Footnote 6

Dynamic and rhythmic progression during the cue, so that when the loop returns to the start, it does not sound like a lowering of musical tension, which may not match with in-game action.

Ending the loop or transitioning to another musical section. How will the loop end in a way that is musically satisfying? Should the loop be interrupted, or will the reaction have to wait until the loop concludes? Will a transition passage or crossfade be required?
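The question of whether a loop should be interrupted or allowed to finish can be made concrete with a little arithmetic. The following is a minimal sketch, not drawn from any particular game or middleware: the function name, its parameters and the idea of quantizing to a bar boundary are all assumptions made for illustration. It computes the earliest moment at which the music may leave the loop, either at the next bar line or only when the loop has completed.

```python
import math


def next_transition_time(event_time, bar_length, loop_length,
                         wait_for_loop_end=False):
    """Return the playback time (in seconds) at which the loop may hand over.

    event_time        -- seconds since loop playback began when the game event fired
    bar_length        -- duration of one bar in seconds (hypothetical parameter)
    loop_length       -- duration of the whole loop in seconds
    wait_for_loop_end -- if True, the reaction waits until the loop concludes;
                         if False, the loop may be interrupted at the next bar line
    """
    unit = loop_length if wait_for_loop_end else bar_length
    # Round the event time up to the next multiple of the chosen unit.
    return math.ceil(event_time / unit) * unit


# An event 5.3 seconds into a 16-second loop made of 2-second bars:
interrupt_at = next_transition_time(5.3, bar_length=2.0, loop_length=16.0)
finish_at = next_transition_time(5.3, bar_length=2.0, loop_length=16.0,
                                 wait_for_loop_end=True)
print(interrupt_at, finish_at)
```

The gap between the two results shows the trade-off described above: interrupting at a bar line gives a prompter reaction, while waiting for the loop to end preserves the musical phrase at the cost of a longer delay.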

A game might involve just one loop for the whole game round (as in Tetris, 1989) or several: in the stealth game Splinter Cell (2002), loops are triggered depending on the attention attracted by the player’s avatar.Footnote 7

Sometimes, rather than writing a complete cue as a whole entity, composers may write cues in sections or fragments (stems). Stems can be written to sound one after another, or simultaneously.

In a technique sometimes called ‘horizontal sequencing’Footnote 8 or ‘branching’,Footnote 9 sections of a composition are heard in turn as the game is played. This allows the music to respond to the game action, when musical sections and variations can be chosen to suit the action. For instance, the ‘Hyrule Field’ cue of Legend of Zelda: Ocarina of Time (1998) consists of twenty-three sections. The order of the sections is partly randomized to avoid direct repetition, but the set is subdivided into different categories, so the music can suit the action. When the hero is under attack, battle variations play; when he stands still, sections without percussion play. Even if the individual sections do not loop, writing music this way still has some of the same challenges as writing loops, particularly concerning transition between sections (see, for example, the complex transition matrix developed for The Operative: No One Lives Forever (2000)).Footnote 10
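The general principle of horizontal sequencing, in which sections are grouped by game state and chosen at random without immediate repetition, can be sketched in a few lines. This is an illustrative assumption about how such a selector might be written, not a reconstruction of how Ocarina of Time or any other game implements it; the class, the state names and the section names are all hypothetical.

```python
import random


class SectionSequencer:
    """Pick the next musical section for the current game state.

    Sections are grouped into categories (one pool per game state), and the
    section that has just played is excluded so that no section is heard
    twice in a row.
    """

    def __init__(self, sections_by_state, rng=None):
        self.sections_by_state = sections_by_state
        self.rng = rng if rng is not None else random.Random()
        self.last = None

    def next_section(self, state):
        pool = self.sections_by_state[state]
        # Exclude the previous section; fall back to the full pool if the
        # category contains only that one section.
        candidates = [s for s in pool if s != self.last] or pool
        self.last = self.rng.choice(candidates)
        return self.last


# Hypothetical categories: calm sections without percussion, battle variations.
sequencer = SectionSequencer({
    "calm": ["calm_a", "calm_b", "calm_c"],
    "battle": ["battle_a", "battle_b"],
})
for state in ["calm", "calm", "battle", "battle", "calm"]:
    print(state, "->", sequencer.next_section(state))
```

The sketch deliberately leaves out the harder musical problem noted above, namely how one section moves into the next; a fuller system would also need transition material for each pairing.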

Stems can also be programmed to sound simultaneously. Musical layers can be added, removed or substituted, in response to the action. Composers have to think carefully about how musical registers and timbres will interact when different combinations of layers are used, but this allows music to respond quickly, adjusting texture, instrumentation, dynamics and rhythm along with the game action. These layers may be synchronized to the same tempo and with beginnings and endings aligned, or they may be unsynchronized, which, provided the musical style allows this, is a neat way to provide further variation. Shorter musical fragments designed to be heard on top of other cues are often termed ‘stingers’.
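Vertical layering can be illustrated in the same spirit. The sketch below assumes that the game exposes a single "intensity" value between 0 and 1 and that each layer has a threshold at which it enters; both the intensity parameter and the layer names are hypothetical, and real systems typically fade layers in and out rather than switching them abruptly.

```python
def active_layers(intensity, layer_thresholds):
    """Return the names of the layers that should sound at this intensity.

    intensity        -- a game-driven value, assumed here to range from 0.0 to 1.0
    layer_thresholds -- list of (layer_name, threshold) pairs; a layer sounds
                        once the intensity reaches its threshold
    """
    return [name for name, threshold in layer_thresholds
            if intensity >= threshold]


# Hypothetical layers for a single synchronized cue.
layers = [("pads", 0.0), ("percussion", 0.4), ("brass", 0.8)]

print(active_layers(0.1, layers))  # ['pads']
print(active_layers(0.5, layers))  # ['pads', 'percussion']
print(active_layers(0.9, layers))  # ['pads', 'percussion', 'brass']
```

Because every combination that the thresholds allow may be heard, the composer has to check that each subset of layers works musically on its own, which is the register and timbre problem described above.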

These three techniques are not mutually exclusive, and can often be found working together. This is partly due to the different advantages and disadvantages of each approach. An oft-cited example, Monkey Island 2 (1991), uses the iMUSE music system, and deploys loops, layers, branching sections and stingers. Halo: Combat Evolved (2001), too, uses loops, branching sections, randomization and stingers.Footnote 11 Like the hexagons of a beehive, the musical elements of dynamic systems use fundamental organizational processes to assemble individual units into large complex structures.

Even more than the technical and musical questions, composers for games must ask themselves which elements of the game construct their music responds to, and reinforces. Musical responses inevitably highlight certain aspects of the gameplay, whether that be the avatar’s health, success or failure, the narrative conceit, the plot, the environment, or any other aspect to which music is tied. Unlike in non-interactive media, composers for games must predict and imagine how the player will engage with the game, and create music to reinforce and amplify the emotional journeys they undertake. Amid exciting discussions of technical possibilities, composers must not lose sight of the player’s emotional and cognitive engagement with the game, which should be uppermost in the composer’s mind. Increased technical complexity, challenges for the composer and demands on resources all need to be balanced with the end result for the player. It is perhaps for this reason that generative and algorithmic music, as impressive as such systems are, has found limited use in games – the enhancement in the player’s experience is not always matched by the investment required to make successful musical outcomes.

Game Music as Media Music

As much as we might highlight the peculiar challenges of writing for games, it is important not to ignore game music’s continuity with previous media music. In many senses, game composers are continuing the tradition of media music that stretches back into the early days of film music in the late nineteenth century – that is, they are starting and developing a conversation between the screen and the viewer. For most players, the musical experience is more important than the technical complexities or systems that lie behind it. They hear the music as it sounds in relation to the screen and gameplay, not primarily the systematic and technical underpinnings. (Indeed, one of the points where players are most likely to become aware of the technology is when the system malfunctions or is somehow deficient, such as in glitches, disjunct transitions or incidences of too much repetition.) The fundamental question facing game composers is the same as for film composers: ‘What can the music bring to this project that will enhance the player/viewer’s experience?’ The overall job of encapsulating and enhancing the game on an aesthetic level is more important than any single technical concern.

Of course, where games and films/television differ is in the relationship with the viewer/listener. We are not dealing with a passive viewer, or homogenous audience, but a singular participant, addressed, and responded to, by the music. This is not music contemplated as an ‘other’ entity, but a soundtrack to the player’s actions. Over the course of the time taken to play through a game, players spend significantly longer with the music of any one game than with a single film or television episode. As players invest their time with the music of a game, they build a partnership with the score.

Players are well aware of the artifice of games and look for clues in the environment and game materials to indicate what might happen as the gameplay develops. Music is part of this architecture of communication, so players learn to attend to even tiny musical changes and development. For that reason, glitches or unintentional musical artefacts are particularly liable to cause a negative experience for players.

The connection of the music with the player’s actions and experiences (whether through dynamic music or more generally), forges the relationship between gamer and score. Little wonder that players feel so passionately and emotionally tied to game music – it is the musical soundtrack to their personal victories and defeats.

Delivering the Music

During the process of writing the music for the game, the composer will remain in contact with the developers. The audio director may need to request changes or revisions to materials for technical or creative reasons, and the requirement for new music might appear, while the music that was initially ordered might become redundant. Indeed, on larger projects in particular, it is not uncommon for drafts to go unused in the final project.

Composers may deliver their music as purely synthesized materials, or the score might involve some aspect of live performance. A relatively recent trend has seen composers remotely collaborating with networks of soloist musicians. Composers send cues and demos to specific instrumentalists or vocalists, who then record parts in live performance, which are then integrated into the composition. This blended approach partly reflects a wider move in game scoring towards smaller ensembles and unusual combinations of instruments (often requiring specialist performers). The approach is also well suited to scores that blend together sound-design and musical elements. Such hybrid approaches can continue throughout the compositional process, and the contributed materials can inform the ongoing development of the musical compositions.

Of course, some scores still demand a large-scale orchestral session. While the composer is ultimately responsible for such sessions, composers rely on a larger team of collaborators to help arrange and record orchestras. An orchestrator will adapt the composer’s materials into written notation readable by human performers, while the composer will also require the assistance of engineers, mixers, editors and orchestra contractors to enable the session to run smoothly. Orchestral recording sessions typically have to be organized far in advance of the recording date, which necessitates that composers and producers establish the amount of music to be recorded and the system of implementation early on, in case this has implications for the way the music should be recorded. For example, if musical elements need to be manipulated independently of each other, the sessions need to be organized so they are recorded separately.

Promotion and Afterlife

Some game trailers may use music from the game they advertise, but in many cases, entirely separate music is used. There are several reasons for this phenomenon. On a practical level, if a game is advertised early in the development cycle, the music may not yet be ready, and/or the composer may be too busy writing the game’s music to score a trailer. More conceptually, trailers are a different medium to the games they advertise, with different aesthetic considerations. The game music, though perfect for the game, may not fit with the trailer’s structure or overall style. Unsurprisingly, then, game trailers often use pre-existing music.

Trailers also serve as one of the situations where game music may achieve an afterlife. Since trailers rely heavily on licensed pre-existing music, and trailers often draw on more than one source, game music may easily reappear in another trailer (irrespective of the similarity of the original game to the one being advertised).

Beyond a soundtrack album or other game-specific promotion, the music of a game may also find an afterlife in online music cultures (including YouTube uploads and fan remixes), or even in live performance. Game music concerts are telling microcosms of the significance of game music. On the one hand, they may seem paradoxical – if the appeal of games is founded on interactivity, then why should a format that removes such engagement be popular? Yet, considered more broadly, the significance of these concerts is obvious: they speak to the connection between players and music in games. This music is the soundtrack to what players feel is their own life. Why wouldn’t players be enthralled at the idea of a monumental staging of music personally connected to them? Here, on a huge scale in a public event, they can relive the highlights of their marvellous virtual lives.

* * *

This brief overview of the production of music for games has aimed to provide a broad-strokes characterization of the process of creating such music. Part of the challenge and excitement of music for games comes from the negotiation of technical and aesthetic demands. Ultimately, however, composers aim to create deeply satisfying experiences for players. Game music does so by building personal relationships with gamers, enriching their lives and experiences, in-game and beyond.

Within narrative-based video games the integration of storytelling, where experiences are necessarily directed, and of gameplay, where the player has a degree of autonomy, continues to be one of the most significant challenges that developers face. In order to mitigate this potential dichotomy, a common approach is to rely upon cutscenes to progress the narrative. Within these passive episodes where interaction is not possible, or within other episodes of constrained outcome where the temporality of the episode is fixed, it is possible to score a game in exactly the same way as one might score a film or television episode. It could therefore be argued that the music in these sections of a video game is the least idiomatic of the medium. This chapter will instead focus on active gameplay episodes, and interactive music, where the unique challenges lie. When music accompanies active gameplay a number of conflicts, tensions and paradoxes arise. In this chapter, these will be articulated and interrogated through three key questions:

Do we score a player’s experience, or do we direct it?

How do we distil our aesthetic choices into a computer algorithm?

How do we reconcile the players’ freedom to instigate events at indeterminate times with musical forms that are time-based?

In the following discussion there are few certainties, and many more questions. The intention is to highlight the issues, to provoke discussion and to forewarn.

Scoring and Directing

Gordon Calleja argues that in addition to any scripted narrative in games, the player’s interpretation of events during their interaction with a game generates stories, what he describes as an ‘alterbiography’.Footnote 1 Similarly, Dominic Arsenault refers to the ‘emergent narrative that arises out of the interactions of its rules, objects, and player decisions’Footnote 2 in a video game. Music in video games is viewed by many as being critical in engaging players with storytelling,Footnote 3 but in addition to underscoring any wider narrative arc music also accompanies this active gameplay, and therefore narrativizes a player’s actions. In Claudia Gorbman’s influential book Unheard Melodies: Narrative Film Music she identifies that when watching images and hearing music the viewer will form mental associations between the two, bringing the connotations of the music to bear on their understanding of a scene.Footnote 4 This idea is supported by empirical research undertaken by Annabel Cohen who notes that, when interpreting visuals, participants appeared ‘unable to resist the systematic influence of music on their interpretation of the image’.Footnote 5 Her subsequent congruence-associationist model helps us to understand some of the mechanisms behind this, and the consequent impact of music on the player’s alterbiography.Footnote 6

Cohen’s model suggests that our interpretation of music in film is a negotiation between two processes.Footnote 7 Stimuli from a film will trigger a ‘bottom-up’ structural and associative analysis which both primes ‘top-down’ expectations from long-term memory through rapid pre-processing, and informs the working narrative (the interpretation of the ongoing film) through a slower, more detailed analysis. At the same time, the congruence (or incongruence) between the music and visuals will affect visual attention and therefore also influence the meaning derived from the film. In other words, the structures of the music and how they interact with visual structures will affect our interpretation of events. For example, ‘the film character whose actions were most congruent with the musical pattern would be most attended and, consequently, the primary recipient of associations of the music’.Footnote 8

In video games the music is often responding to actions instigated by the player, therefore it is these actions that will appear most congruent with the resulting musical pattern. In applying Cohen’s model to video games, the implication is that the player themselves will often be the primary recipient of the musical associations formed through experience of cultural, cinematic and video game codes. In responding to events instigated by the player, these musical associations will likely be ascribed to their actions. You (the player) act, superhero music plays, you are the superhero.

Of course, there are also events within games that are not instigated by the player, and so the music will attach its qualities to, and narrativize, these also. Several scholars have attempted to distinguish between two musical positions,Footnote 9 defining interactive music as that which responds directly to player input, and adaptive music that ‘reacts appropriately to – and even anticipates – gameplay rather than responding directly to the user’.Footnote 10 Whether things happen because the ‘game’ instigates them or the ‘player’ instigates them is up for much debate, and it is likely that the perceived congruence of the music will oscillate between game events and player actions, but what is critical is the degree to which the player perceives a causal relationship between these events or actions and the music.Footnote 11 These relationships are important for the player to understand, since while music is narrativizing events it is often simultaneously playing a ludic role – supplying information to support the player’s engagement with the mechanics of the game.

The piano glissandi in Dishonored (2012) draw the player’s attention to the enemy NPC (Non-Player Character) who has spotted them, the four-note motif in Left 4 Dead 2 (2009) played on the piano informs the player that a ‘Spitter’ enemy type has spawned nearby,Footnote 12 and if the music ramps up in Skyrim (2011), Watch Dogs (2014), Far Cry 5 (2018) or countless other games, the player knows that enemies are aware of their presence and are now in active pursuit. The music dramatizes the situation while also providing information that the player will interpret and use. In an extension to Chion’s causal mode of listening,Footnote 13 where viewers listen to a sound in order to gather information about its cause or sources, we could say that in video games players engage in ludic listening, interpreting the audio’s system-related meaning in order to inform their actions. Sometimes this ludic function of music is explicitly and deliberately part of the game design in order to avoid an overloading of the visual channel while compensating for a lack of peripheral vision and spatial information,Footnote 14 but whether deliberate or inadvertent, the player will always be trying to interpret music’s meaning in order to gain advantage.

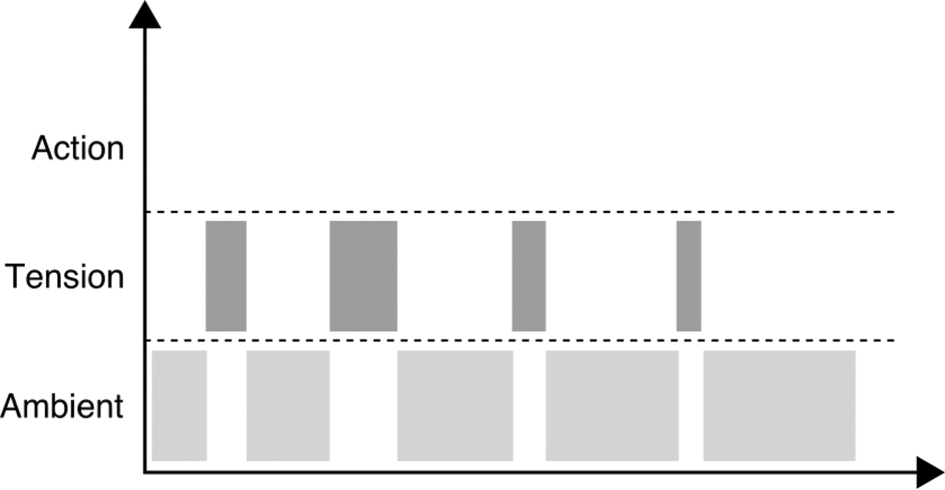

Awareness of the causal links between game events, game variables and music will likely differ from player to player. Some players may note the change in musical texture when crouching under a table in Sly 3: Honor Among Thieves (2005) as confirmation that they are hidden from view; some will actively listen out for the rising drums that indicate the proximity of an attacking wolf pack in Rise of the Tomb Raider (2015);Footnote 15 while others may remain blissfully unaware of the music’s usefulness (or may even play with the music switched off).Footnote 16 Feedback is sometimes used as a generic term for all audio that takes on an informative role for the player,Footnote 17 but it is important to note that the identification of causality, with musical changes being perceived as either adaptive (game-instigated) or interactive (player-instigated), is likely to induce different emotional responses. Adaptive music that provides information to the player about game states and variables can be viewed as providing a notification or feed-forward function – enabling the player. Interactive music that corresponds more directly to player input will likewise have an enabling function, but it carries a different emotional weight since this feedback also comments on the actions of the player, providing positive or negative reinforcement (see Figure 5.1).

Figure 5.1 Notification and feedback: Enabling and commenting functions

The percussive stingers accompanying a successful punch in The Adventures of Tintin: The Secret of the Unicorn (2011), or the layers that begin to play upon the successful completion of a puzzle in Vessel (2012) provide positive feedback, while the duff-note sounds that respond to a mistimed input in Guitar Hero (2005) provide negative feedback. Whether enabling or commenting, music often performs these ludic functions. It is simultaneously narrating and informing; it is ludonarrative. The implications of this are twofold. Firstly, in order to remain congruent with the game events and player actions, music will be inclined towards a Mickey-Mousing type approach,Footnote 18 and secondly, in order to fulfil its ludic functions, it will tend towards a consistent response, and therefore will be inclined towards repetition.

When the player is web-slinging their way across the city in Spider-Man (2018) the music scores the experience of being a superhero, but when they stop atop a building to survey the landscape the music must logically also stop. When the music strikes up upon entering a fort in Assassin’s Creed: Origins (2017), we are informed that danger may be present. We may turn around and leave, and the music fades out. Moving between these states in either game in quick succession highlights the causal link between action and music; it draws attention to the system, to the artifice. Herein lies a fundamental conflict of interactive music – in its attempt to be narratively congruent, and ludically effective, music can reveal the systems within the game, but in this revealing of the constructed nature of our experience it disrupts our immersion in the narrative world. While playing a game we do not want to be reminded of the architecture and artificial mechanics of that game. These already difficult issues around congruence and causality are further exacerbated when we consider that music is often not just scoring the game experience, it is directing it.

Film music has sometimes been criticized for a tendency to impose meaning, for telling a viewer how to feel,Footnote 19 but in games music frequently tells a player how to act. In the ‘Medusa’s Call’ chapter of Far Cry 3 (2012) the player must ‘Avoid detection. Use stealth to kill the patrolling radio operators and get their intel’, and the quiet tension of the synthesizer and percussion score supports this preferred stealth strategy. In contrast, the ‘Kick the Hornet’s Nest’ episode (‘Burn all the remaining drug crops’) encourages the player to wield their flamethrower to spectacular destructive effect through the electro-dubstep-reggae mashup of ‘Make it Bun Dem’ by Skrillex and Damian ‘Jr. Gong’ Marley. Likewise, a stealth approach is encouraged by the James-Bond-like motif of Rayman Legends (2013) ‘Mysterious Inflatable Island’, in contrast to the hell-for-leather sprint implied by the scurrying strings of ‘The Great Lava Pursuit’. In these examples, and many others, we can see that rather than scoring a player’s actual experience, the music is written in order to match an imagined ideal experience – where the intentions of the music are enacted by the player. To say that we are scoring a player experience implies that somehow music is inert, that it does not impact on the player’s behaviour. But when the player is the protagonist it may be the case that, rather than identifying what is congruent with the music’s meaning, the player acts in order for image and music to become congruent. When music plays, we are compelled to play along; the music directs us. Both Ernest Adams and Tulia-Maria Cășvean refer to the idea of the player’s contract,Footnote 20 that in order for games to work there has to be a tacit agreement between the player and the game maker; that they both have a degree of responsibility for the experience. If designers promise to provide a credible, coherent world then the player agrees to behave according to a given set of predefined rules, usually determined by game genre, in order to maintain this coherence. Playing along with the meanings implicit in music forms part of this contract. But the player also has the agency to decide not to play along with the music, and so it will appear incongruent with their actions, and again the artifice of the game is revealed.Footnote 21

When game composer Marty O’Donnell states ‘When [the players] look back on their experience, they should feel like their experience was scored, but they should never be aware of what they did to cause it to be scored’Footnote 22 he is demonstrating an awareness of the paradoxes that arise when writing music for active game episodes. Music is often simultaneously performing ludic and narrative roles, and it is simultaneously following (scoring) and leading (directing). As a consequence, there is a constant tension between congruence, causality and abstraction. Interactive music represents a catch-22 situation. If music seeks to be narratively congruent and ludically effective, this compels it towards a Mickey-Mousing approach, because of the explicit, consistent and close matching of music to the action required. This leads to repetition and a highlighting of the artifice. If music is directing the player or abstracted from the action, then it runs the risk of incongruence. Within video games, we rely heavily upon the player’s contract and the inclination to act in congruence with the behaviour implied by the music, but the player will almost always have the ability to make our music sound inappropriate should they choose to.

Many composers who are familiar with video games recognize that music should not necessarily always act in the same way throughout a game. Reflecting on his work in Journey (2012), composer Austin Wintory states, ‘An important part of being adaptive game music/audio people is not [to ask] “Should we be interactive or should we not be?” It’s “To what extent?” It’s not a binary system, because storytelling entails a certain ebb and flow’.Footnote 23 Furthermore, he notes that if any relationship between the music and game, from Mickey-Mousing to counterpoint, becomes predictable then the impact can be lost, recommending that ‘the extent to which you are interactive should have an arc’.Footnote 24 This bespoke approach to writing and implementing music for games, where the degree of interactivity might vary between different active gameplay episodes, is undoubtedly part of the solution to the issues outlined but faces two main challenges. Firstly, most games are very large, making a tailored approach to each episode or level unrealistic, and secondly, that unlike in other art forms or audiovisual media, the decisions about how music will act within a game are made in absentia; we must hand these to a system that serves as a proxy for our intent. These decisions in the moment are not made by a human being, but are the result of a system of events, conditions, states and variables.

Algorithms and Aesthetics

Given the size of most games, and an increasingly generative or systemic approach to their development, the complex choices that composers and game designers might want to make about the use of music during active gameplay episodes must be distilled into a programmatic system of events, states and conditions derived from discrete (True/False) or continuous (0.0–1.0) variables. This can easily lead to situations where what might seem programmatically correct does not translate appropriately to the player’s experience. In video games there is frequently a conflict between our aesthetic aims and the need to codify the complexity of human judgement we might want to apply.

One common use of music in games is to indicate the state of the artificial intelligence (AI), with music either starting or increasing in intensity when the NPCs are in active pursuit of the player, and ending or decreasing in intensity when they end the pursuit or ‘stand down’.Footnote 25 This provides the player with ludic information about the NPC state, while at the same time heightening tension to reflect the narrative situation of being pursued. This could be seen as a good example of ludonarrative consonance or congruence. However, when analysed more closely we can see that simply relying on the ludic logic of gameplay events to determine the music system, which has narrative implications, is not effective. In terms of the game’s logic, when an NPC stands down or when they are killed the outcome is the same; they are no longer actively seeking the player (Active pursuit = False). In many games, the musical result of these two different events is the same – the music fades out. But if as a player I have run away to hide and waited until the NPC stopped looking for me, or if I have confronted the NPC and killed them, these events should feel very different. The common practice of musically responding to both these events in the same way is an example of ludonarrative dissonance.Footnote 26 In terms of providing ludic information to the player it is perfectly effective, but in terms of narrativizing the player’s actions, it is not.
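The distinction drawn here can be made concrete with a minimal Python sketch. It assumes a hypothetical game that reports why pursuit ended rather than only that it ended; the enum members and cue labels are illustrative inventions and are not taken from any game or middleware discussed in this chapter.

```python
from enum import Enum, auto


class PursuitEnd(Enum):
    """Hypothetical reasons why 'Active pursuit' became False."""
    NPC_STOOD_DOWN = auto()  # the player hid until the search was abandoned
    NPC_KILLED = auto()      # the player confronted and defeated the NPC


def naive_response(active_pursuit: bool) -> str:
    """Ludic logic only: both outcomes collapse into the same fade-out."""
    return "action_loop" if active_pursuit else "fade_out"


def narrative_response(reason: PursuitEnd) -> str:
    """Keep the reason, so each outcome can receive its own musical ending."""
    endings = {
        PursuitEnd.NPC_STOOD_DOWN: "slow_release_of_tension",
        PursuitEnd.NPC_KILLED: "victory_stinger_then_coda",
    }
    return endings[reason]
```

In the first function the information that would let the music narrativize the player's action has already been discarded by the time the music system is consulted; the second simply preserves it.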

The perils of directly translating game variables into musical states are comically apparent in the ramp into epic battle music when confronting a small rat in The Elder Scrolls III: Morrowind (2002) (Enemy within a given proximity = True). More recent games such as Middle Earth: Shadow of Mordor (2014) and The Witcher 3: Wild Hunt (2015) treat such encounters with more sophistication, reserving additional layers of music, or additional stingers, for confrontations with enemies of greater power or higher ranking than the player, but the translation of sophisticated human judgements into mechanistic responses to a given set of conditions is always challenging. In both Thief (2014) and Sniper Elite V2 (2012) the music intensifies upon detection, but the lackadaisical attitudes of the NPCs in Thief, and the fact that you can swiftly dispatch enemies via a judicious headshot in Sniper Elite V2, means that the music is endlessly ramping up and down in a Mickey-Mousing fashion. In I Am Alive (2012), a percussive layer mirrors the player character’s stamina when climbing. This is both ludically and narratively effective, since it directly signifies the depleting reserves while escalating the tension of the situation. Yet its literal representation of this variable means that the music immediately and unnaturally drops to silence should you ‘hook-on’ or step onto a horizontal ledge. In all these instances, the challenging catch-22 of scoring the player’s actions discussed above is laid bare, but better consideration of the player’s alterbiography might have led to a different approach. Variables are not feelings, and music needs to interpolate between conditions. Just because the player is now ‘safe’ it does not mean that their emotions are reset to 0.0 like a variable: they need some time to recover or wind down. The literal translation of variables into musical responses means that very often there is no coda, no time for reflection.

There are of course many instances where the consideration of the experiential nature of play can lead to a more sophisticated consideration of how to translate variables into musical meaning. In his presentation on the music of Final Fantasy XV (2016), Sho Iwamoto discussed the music that accompanies the player while they are riding on a Chocobo, the large flightless bird used to more quickly traverse the world. Noting that the player is able to transition quickly between running and walking, he decided on a parallel approach to scoring whereby synchronized musical layers are brought in and out.Footnote 27 This approach is more suitable when quick bidirectional changes in game states are possible, as opposed to the more wholesale musical changes brought about by transitioning between musical segments.Footnote 28 A logical approach would be to simply align the ‘walk’ state with specific layers, and a ‘run’ state with others, and to crossfade between these two synchronized stems. The intention of the player to run is clear (they press the run button), but Iwamoto noted that players would often be forced unintentionally into a ‘walk’ state through collisions with trees or other objects. As a consequence, he implemented a system that responds quickly to the intentional choice, transitioning from the walk to run music over 1.5 beats, but chose to set the musical transition time from the ‘run’ to ‘walk’ state to 4 bars, thereby smoothing out the ‘noise’ of brief unintentional interruptions and avoiding an overly reactive response.Footnote 29
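The effect of these asymmetric transition times can be illustrated with a short Python sketch. Only the two durations (1.5 beats and 4 bars) come from the account above; the tempo, the 4/4 metre, the linear crossfade and the function names are assumptions made for illustration and do not describe the actual implementation.

```python
def transition_seconds(target_state: str, bpm: float, beats_per_bar: int = 4) -> float:
    """Length of the crossfade into target_state, in seconds.

    Uses the durations quoted in the text: 1.5 beats into 'run',
    4 bars into 'walk'. Tempo and metre are assumed values.
    """
    seconds_per_beat = 60.0 / bpm
    if target_state == "run":
        return 1.5 * seconds_per_beat
    if target_state == "walk":
        return 4 * beats_per_bar * seconds_per_beat
    raise ValueError(f"unknown state: {target_state!r}")


def crossfade_progress(elapsed_seconds: float, target_state: str, bpm: float) -> float:
    """Fraction (0.0 to 1.0) of an assumed linear crossfade completed so far."""
    return min(1.0, elapsed_seconds / transition_seconds(target_state, bpm))
```

At an assumed 120 bpm, the move into the 'run' layers takes 0.75 seconds while the move into the 'walk' layers takes 8 seconds, so a half-second collision with a tree shifts the mix only about 6 per cent of the way towards 'walk' before the player is running again.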

These conflicts between the desire for nuanced aesthetic results and the need for a systematic approach can be addressed, at least in part, through a greater engagement by composers with the systems that govern their music and by a greater understanding of music by game developers. Although the situation continues to improve it is still the case in many instances that composers are brought on board towards the end of development, and are sometimes not involved at all in the integration process. What may ultimately present a greater challenge is that players play games in different ways.

A fixed approach to the micro level of action within games does not always account for how they might be experienced on a more macro level. For many people their gaming is opportunity driven, snatching a valued 30–40 minutes here and there, while others may carve out an entire weekend to play the latest release non-stop. The sporadic nature of many people’s engagement with games not only militates against the kind of large-scale musico-dramatic arc of films, but also likely makes music a problem for the more dedicated player. If music needs to be ‘epic’ for the sporadic player, then being ‘epic’ all the time for 10+ hours is going to get a little exhausting. This is starting to be recognized, with players given the option in the recent release of Assassin’s Creed: Odyssey (2018) to choose the frequency at which the exploration music occurs.

Another way in which a fixed-system approach to active music falters is that, as a game character, I am not the same at the end of the game as I was at the start, and I may have significant choice over how my character develops. Many games retain the archetypal narrative of the Hero’s Journey,Footnote 30 and characters go through personal development and change – yet interactive music very rarely reflects this: the active music heard in 20 hours is the same that was heard in the first 10 minutes. In a game such as Silent Hill: Shattered Memories (2009) the clothing and look of the character, the dialogue, the set dressing, voicemail messages and cutscenes all change depending on your actions in the game and the resulting personality profile, but the music in active scenes remains similar throughout. Many games, particularly RPGs (Role-Playing Games) enable significant choices in terms of character development, but it typically remains the case that interactive music responds more to geography than it does to character development. Epic Mickey (2010) is a notable exception; when the player acts ‘good’ by following missions and helping people, the music becomes more magical and heroic, but when acting more mischievous and destructive, ‘You’ll hear a lot of bass clarinets, bassoons, essentially like the wrong notes … ’.Footnote 31 The 2016 game Stories: The Path of Destinies takes this idea further, offering moments of choice where six potential personalities or paths are reflected musically through changes in instrumentation and themes. Reflecting the development of the player character through music, given the potential number of variables involved, would, of course, present a huge challenge, but it is worthy of note that so few games have even made a modest attempt to do this.

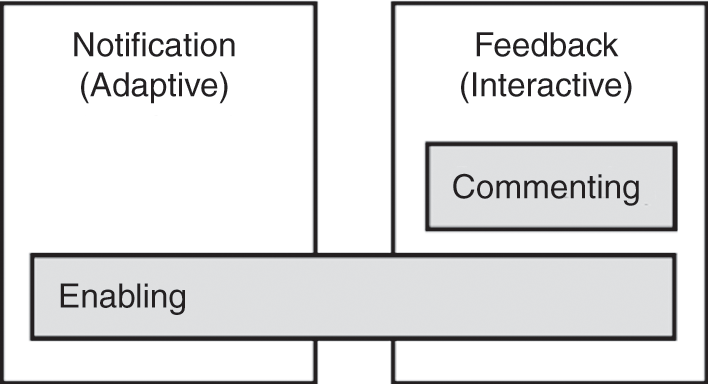

Recognition that players have different preferences, and therefore have different experiences of the same game, began with Bartle’s identification of common characteristics of groups of players within text-based MUD (Multi-User Dungeon) games.Footnote 32 More recently the capture and analysis of gameplay metrics has allowed game-user researchers to refine this understanding through the concept of player segmentation.Footnote 33 To some extent, gamers are self-selecting in terms of matching their preferred gaming style with the genre of games they play, but games want to appeal to as wide an audience as possible and so attempt to appeal to different types of player. Making explicit reference to Bartle’s player types, game designer Chris McEntee discusses how Rayman Origins (2011) has a co-operative play mode for ‘Socializers’, while ‘Explorers’ are rewarded through costumes that can be unlocked, and ‘Killers’ are appealed to through the ability to strike your fellow player’s character and push them into danger.Footnote 34 One of the conflicts between musical interactivity and the gaming experience is that the approach to music, the systems and thresholds chosen are developed for the experience of an average player, but we know that approaches may differ markedly.Footnote 35 A player who approaches Dishonored 2 (2016) with an aggressive playstyle will hear an awful lot of the high-intensity ‘fight’ music; however a player who achieves a very stealthy or ‘ghost’ playthrough will never hear it.Footnote 36 A representation of the potential experiences of different player types with typical ‘Ambient’, ‘Tension’ and ‘Action’ music tracks is shown below (Figures 5.2–5.4).

Figure 5.2 Musical experience of an average approach

Figure 5.3 Musical experience of an aggressive approach

Figure 5.4 Musical experience of a stealthy approach

A single fixed-system approach to music that fails to adapt to playstyles will potentially result in a vastly different, and potentially unfulfilling, musical experience. A more sophisticated method, where the thresholds are scaled or recalibrated around the range of the player’s ‘mean’ approach, could lead to a more personalized and more varied musical experience. This could be as simple as raising the threshold at which a reward stinger is played for the good player, or as complex as introducing new micro tension elements for a stealth player’s close call.
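As a rough illustration of what recalibrating a threshold around the player's 'mean' approach might involve, the following Python sketch scales a designer-chosen threshold by the ratio of the player's recent average to the average the designer assumed. The function name, its parameters and the simple proportional scaling are all hypothetical; a production system would need to decide which metric to track and how quickly to adapt.

```python
from statistics import mean


def recalibrated_threshold(base_threshold: float,
                           reference_mean: float,
                           recent_values: list[float]) -> float:
    """Scale a threshold by how the player's mean compares with the designer's.

    base_threshold: value tuned for the assumed 'average' player.
    reference_mean: the average performance that tuning assumed.
    recent_values:  this player's recent measurements of the same metric.
    """
    if not recent_values or reference_mean <= 0:
        return base_threshold  # nothing to adapt to yet
    return base_threshold * (mean(recent_values) / reference_mean)
```

For example, if a reward stinger was tuned to fire at a combo of 5 for a player averaging 4, a player whose recent combos average 8 would have the threshold raised to 10, so that the stinger continues to mark an achievement that is notable for that player.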

Having to codify aesthetic decisions represents for many composers a challenge to their usual practice outside of games, and indeed perhaps a conflict with how we might feel these decisions should be made. Greater understanding by composers of the underlying systems that govern their music’s use is undoubtedly part of the answer, as is a greater effort to track and understand an individual’s behaviour within games, so that we can provide a good experience for the ‘sporadic explorer’, as well as the ‘dedicated killer’, but there is a final conflict between interactivity and music that is seemingly irreconcilable – that of player agency and the language of music itself.

Agency and Structure

As discussed above, one of the defining features of active gameplay episodes within video games, and indeed a defining feature of interactivity itself, is that the player is granted agency; the ability to instigate actions and events of their own choosing, and crucially for music – at a time of their own choosing. Parallel, vertical or layer-based approaches to musical form within games can respond to events rapidly and continuously without impacting negatively on musical structures, since the temporal progression of the music is not interrupted. However, other gaming events often necessitate a transitional approach to music, where the change from one musical cue to an alternate cue mirrors a more significant change in the dramatic action.Footnote 37 In regard to film music, K. J. Donnelly highlights the importance of synchronization, that films are structured through what he terms ‘audiovisual cadences’ – nodal points of narrative or emotional impact.Footnote 38 In games these nodal points, in particular at the end of action-based episodes, also have great significance, but the agency of the player to instigate these events at any time represents an inherent conflict with musical structures.

There is good evidence that an awareness of musical, especially rhythmic, structures is innate. Young babies will indicate negative brainwave patterns when there is a change to an otherwise consistent sequence of rhythmic cycles, and even when listening to a monotone metronomic pulse we will perceive some of these sounds as accented.Footnote 39 Even without melody, harmonic sequences or phrasing, most music sets up temporal expectations, and the confirmation of, or violation of expectation in music is what is most closely associated with strong or ‘peak’ emotions.Footnote 40 To borrow Chion’s terminology we might say that peak emotions are a product of music’s vectorization,Footnote 41 the way it orients towards the future, and that musical expectation has a magnitude and direction. The challenges of interaction, the conflict between the temporal determinacy of vectorization and the temporal indeterminacy of the player’s actions, have stylistic consequences for music composed for active gaming episodes.

In order to avoid jarring transitions and to enable smoothness,Footnote 42 interactive music is inclined towards harmonic stasis and metrical ambiguity, and to avoiding melody and vectorization. The fact that such music should have little structure in and of itself is perhaps unsurprising – since the musical structure is a product of interaction. If musical gestures are too strong then this will not only make transitions difficult, but they will potentially be interpreted as having ludic meaning where none was intended. In order to enable smooth transitions, and to avoid the combinatorial explosion that results from potentially transitioning between different pieces with different harmonic sequences, all the interactive music in Red Dead Redemption (2010) was written in A minor at 160 bpm, and most music for The Witcher 3: Wild Hunt in D minor. Numerous other games echo this tendency towards repeated ostinatos around a static tonal centre. The music of Doom (2016) undermines rhythmic expectancy through the use of unusual and constantly fluctuating time signatures, and the 6/8 polyrhythms in the combat music of Batman: Arkham Knight (2015) also serve to unlock our perception from the usual 4/4 metrical divisions that might otherwise dominate our experience of the end-state transition. Another notable trend is the increasingly blurred border between music and sound effects in games. In Limbo (2010) and Little Nightmares (2017), there is often little delineation between game-world sounds and music, and the audio team of Shadow of the Tomb Raider (2018) talk about a deliberate attempt to make the player ‘unsure that what they are hearing is score’.Footnote 43 All of these approaches can be seen as stylistic responses to the challenge of interactivity.Footnote 44

The methods outlined above can be effective in mitigating the interruption of expectation-based structures during musical transitions, but the de facto approach to solving this has been for the music to not respond immediately, but instead to wait and transition at the next appropriate musical juncture. Current game audio middleware enables the system to be aware of musical divisions, and we can instruct transitions to happen at the next beat, next bar or at an arbitrary but musically appropriate point through the use of custom cues.Footnote 45 Although more musically pleasing,Footnote 46 such metrical transitions are problematic since the audiovisual cadence is lost – music always responds after the event – and they provide an opportunity for incongruence, for if the music has to wait too long after the event to transition then the player may be engaging in some other kind of trivial activity at odds with the dramatic intent of the music. Figure 5.5 illustrates the issue. The gameplay action begins at the moment of pursuit, but the music waits until the next juncture (bar) to transition to the ‘Action’ cue. The player is highly skilled and so quickly triumphs. Again, the music holds on the ‘Action’ cue until the next bar line in order to transition musically back to the ‘Ambient’ cue.

Figure 5.5 Potential periods of incongruence due to metrical transitions are indicated by the hatched lines
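The length of each period of incongruence follows directly from where the event falls within the musical grid. The Python sketch below computes the delay between a game event and the next beat or bar; it is a generic calculation rather than the API of any middleware, and the tempo and metre in the example are assumed values.

```python
import math


def seconds_until_next_boundary(event_time: float, bpm: float,
                                beats_per_unit: int = 4) -> float:
    """Delay between a game event and the next musical boundary.

    event_time is in seconds from the start of the cue. beats_per_unit is
    1 for beat quantization, or the bar length in beats for bar quantization.
    An event that lands exactly on a boundary has a delay of zero.
    """
    unit = beats_per_unit * 60.0 / bpm
    next_boundary = math.ceil(event_time / unit) * unit
    return next_boundary - event_time
```

At 120 bpm in 4/4 a bar lasts 2 seconds, so an event 4.5 seconds into the cue waits 1.5 seconds for a bar-quantized transition, which is ample time for a skilled player to have moved on to something else.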

The resulting periods of incongruence are unfortunate, but the lack of audiovisual cadence is particularly problematic when one considers that these transitions are typically happening at the end of action-based episodes, where music is both narratively characterizing the player and fulfilling the ludic role of feeding-back on their competence.Footnote 47 Without synchronization, the player’s sense of accomplishment and catharsis can be undermined. The concept of repetition, of repeating the same episode or repeatedly encountering similar scenarios, features in most games as a core mechanic, so these ‘end-events’ will be experienced multiple times.Footnote 48 Given that game developers want players to enjoy the gaming experience it would seem that there is a clear rationale for attempting to optimize these moments. This rationale is further supported by the suggestion that the end of an experience, particularly in a goal-oriented context, may play a disproportionate role in people’s overall evaluation of the experience and their subsequent future behaviour.Footnote 49

The delay between the event and the musical response can be alleviated in some instances by moving to a ‘pre-end’ musical segment, one that has an increased density of possible exit points, but the ideal would be to have vectorized music that leads up to and enhances these moments of triumph, something which is conceptually impossible unless we suspend the agency of the player. Some games do this already. When an end-event is approached in Spider-Man the player’s input and agency are often suspended, and the climactic conclusion is played out in a cutscene, or within the constrained outcome of a quick-time event that enables music to be more closely synchronized. However, it would also be possible to maintain a greater impression of agency and to achieve musical synchronization if musical structures were able to input into the game’s decision-making processes. That is, to allow musical processes to dictate the timing of game events. Thus far we have been using the term ‘interactive’ to describe music during active episodes, but most music in games is not truly interactive, in that it lacks a reciprocal relationship with the game’s systems. In the vast majority of games, the music system is simply a receiver of instruction.Footnote 50 If the music were truly interactive then this would raise the possibility of thresholds and triggers being altered, or game events waiting, in order to enable the synchronization of game events to music.

Manipulating game events in order to synchronize to music might seem anathema to many game developers, but some are starting to experiment with this concept. In the Blood and Wine expansion pack for The Witcher 3: Wild Hunt the senior audio programmer, Colin Walder, describes how the main character Geralt was ‘so accomplished at combat that he is balletic, that he is dancing almost’.Footnote 51 With this in mind they programmed the NPCs to attack according to musical timings, with big attacks syncing to a grid, and smaller attacks syncing to beats or bars. He notes ‘I think the feeling that you get is almost like we’ve responded somehow with the music to what was happening in the game, when actually it’s the other way round’.Footnote 52 He points out that ‘Whenever you have a random element then you have an opportunity to try and sync it, because if there is going to be a sync point happen [sic] within the random amount of time that you were already prepared to wait, you have a chance to make a sync happen’.Footnote 53 The composer Olivier Derivière is also notable for his innovations in the area of music and synchronization. In Get Even (2017) events, animations and even environmental sounds are synced to the musical pulse. 
The danger in using music as an input to game state changes and timings is that players may sense this loss of agency, but it should be recognized that many games artificially manipulate the player all the time through what is termed dynamic difficulty adjustment or dynamic game balancing.Footnote 54 The 2001 game Max Payne dynamically adjusts the amount of aiming assistance given to the player based on their performance,Footnote 55 in Half-Life 2 (2004) the content of crates adjusts to supply more health when the player’s health is low,Footnote 56 and in BioShock (2007) the player is rendered invulnerable for 1–2 seconds when at their last health point in order to generate more ‘barely survived’ moments.Footnote 57 In this context, the manipulation of game variables and timings in order for events to hit musically predetermined points or quantization divisions seems less radical than the idea might at first appear.
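
The principle in Walder’s remark, that an event with a random element can be moved onto a sync point if one falls within the time the game was already prepared to wait, can be sketched in a few lines. The following Python example is a hypothetical illustration only. It is not the implementation used in The Witcher 3 or any other title, and the function name `schedule_event`, its parameters and the timing values are all assumptions.

```python
import math
import random

def schedule_event(now, min_wait, max_wait, grid_interval, rng=random):
    """Choose a firing time for a game event with a randomized delay.

    The game is prepared to wait between min_wait and max_wait seconds.
    If a musical grid point (a multiple of grid_interval, with the music
    assumed to start at time 0) falls inside that window, the event is
    placed on it. Otherwise an ordinary random delay is used.
    Returns (fire_time, synced).
    """
    earliest = now + min_wait
    latest = now + max_wait
    # First grid point at or after the earliest permitted time.
    sync_time = math.ceil(earliest / grid_interval) * grid_interval
    if sync_time <= latest:
        return sync_time, True
    return rng.uniform(earliest, latest), False

rng = random.Random(0)  # seeded only so that the example is repeatable

# Assumed values: 120 bpm in 4/4, so a beat lasts 0.5 s and a bar lasts 2 s.
beat_result = schedule_event(10.1, 0.2, 1.0, grid_interval=0.5, rng=rng)
bar_result = schedule_event(10.1, 0.2, 1.0, grid_interval=2.0, rng=rng)
print(beat_result)  # a beat falls inside the window, so the event is synced
print(bar_result)   # no bar line falls inside the window, so it is not
```

In this sketch the player never waits longer than the game would have made them wait in any case. The music only determines where, within an existing window of indeterminacy, the event lands.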

The reconciliation of player agency and musical structure remains a significant challenge for video game music within active gaming episodes, and it has been argued that the use of metrical or synchronized transitions is only a partial solution to the problem of temporal indeterminacy. The importance of the ‘end-event’ in the player’s narrative, and the opportunity for musical synchronization to provide a greater sense of ludic reward implies that the greater co-influence of a more truly interactive approach is worthy of further investigation.

Conclusion

Video game music is amazing. Thrilling and informative, for many it is a key component of what makes games so compelling and enjoyable. This chapter is not intended to suggest any criticism of specific games, composers or approaches. Composers, audio personnel and game designers wrestle with the issues outlined above on a daily basis, producing great music and great games despite the conflicts and tensions within musical interactivity.

Perhaps one common thread in response to the questions posed and conflicts identified is the appreciation that different people play different games, in different ways, for different reasons. Some may play along with the action implied by the music, happy for their actions to be directed, while others may rail against this – taking an unexpected approach that needs to be more closely scored. Some players will ‘run and gun’ their way through a game, while for others the experience of the same gaming episode will be totally different. Some players may be happy to accept a temporary loss of autonomy in exchange for the ludonarrative reward of game/music synchronization, while for others this would be an intolerable breach of the gaming contract.

As composers are increasingly educated about the tools of game development and approaches to interactive music, they will no doubt continue to become a more integrated part of the game design process, engaged with not only writing the music, but with designing the algorithms that govern how that music works in game. In doing so there is an opportunity for composers to think not about designing an interactive music system for a game, but about designing interactive music systems, ones that are aware of player types and ones that attempt to resolve the conflicts inherent in musical interactivity in a more bespoke way. Being aware of how a player approaches a game could, and should, inform how the music works for that player.

Contemporary audiovisual objects unify sound and moving image in our heads via the screen and speakers/headphones. The synchronization of these two channels remains one of the defining aspects of contemporary culture. Video games follow their own particular form of synchronization, where not only sound and image, but also player input form a close unity.Footnote 1 This synchronization unifies the illusion of movement in time and space, and cements it to the crucial interactive dimension of gaming. In most cases, the game software’s ‘music engine’ assembles the whole, fastening sound to the rest of the game, allowing skilled players to synchronize themselves and become ‘in tune’ with the game’s merged audio and video. This constitutes the critical ‘triple lock’ of player input with audio and video that defines much gameplay in digital games.

This chapter will discuss the way that video games are premised upon a crucial link-up between image, sound and player, engaging with a succession of different games as examples to illustrate differences in relations of sound, image and player psychology. There has been surprisingly little interest in synchronization, not only in video games but also in other audiovisual culture.Footnote 2 In many video games, it is imperative that precise synchronization is achieved or else the unity of the gameworld and the player’s interaction with it will be degraded and the illusion of immersion and the effectiveness of the game dissipated. Synchronization can be precise and momentary, geared around a so-called ‘synch point’; or it might be less precise and more continuous but evincing matched dynamics between music and image actions; or the connections can be altogether less clear. Four types of synchronization in video games exist. The first division, precise synchronization, appears most evidently in interactive sounds where the game player delivers some sort of input that immediately has an effect on audiovisual output in the game. Clearest where diegetic sounds emanate directly from player activity, it also occurs in musical accompaniment that develops constantly in parallel to the image activity and mood. The second division, plesiochrony, involves the use of ambient sound or music which fits vaguely with the action, making a ‘whole’ of sound and image, and thus a unified and immersive environment as an important part of gameplay. The third strain would be music-led asynchrony, where the music dominates and sets time for the player. Finally, in parallel-path asynchrony, music accompanies action but evinces no direct weaving of its material with the on-screen activity or other sounds.

Synching It All Up

It is important to note that synchronization is both the technological fact of the gaming hardware pulling together sound, image and gamer, and simultaneously a critically important psychological process for the gamer. This is central to immersion, merging sensory stimuli and completing a sense of surrounding ambience that takes in coherently matched sound and image. Now, this may clearly be evident in the synchronization of sound effects with action, matching the world depicted on screen as well as the game player’s activities. For instance, if we see a soldier fire a gun on screen we expect to hear the crack of the gunshot, and if the player (or the player’s avatar) fires a gun in the game, we expect to hear a gunshot at the precise moment the action takes place. Sound effects may appear more directly synched than music in the majority of cases, yet accompanying music can also be an integrated part of such events, also matching and directing action, both emotionally and aesthetically. Synchronization holds together a unity of audio and visual, and their combination is added to player input. This is absolutely crucial to the process of immersion through holding together the illusion of sound and vision unity, as well as the player’s connection with that amalgamation.

Sound provides a more concrete dimension of space for video games than image, serving a crucial function in expanding the surface of its flat images. The keystones of this illusion are synch points, which provide a structural relationship between sound, image and player input. Synch points unify the game experience as a perceptual unity and aesthetic encounter. Writing primarily about film but with relevance to all audiovisual culture, Michel Chion coined the term ‘synchresis’ to describe the spontaneous appearance of synchronized connection between sound and image.Footnote 3 This is a perceptual lock that magnetically draws together sound and image, as we expect the two to be attached. The illusory and immersive effect in gameplay is particularly strong when sound and image are perceived as a unity. While we, the audience, assume a strong bond between sounds and images occupying the same or similar space, the keystones of this process are moments of precise synchronization between sound and image events.Footnote 4 This illusion of sonic and visual unity is the heart of audiovisual culture. Being perceived as an utter unity disavows the basis in artifice and cements a sense of audiovisual culture as on some level being a ‘reality’.Footnote 5

The Gestalt psychology principle of isomorphism suggests that we understand objects, including cultural objects, as having a particular character, as a consequence of their structural features.Footnote 6 Certain structural features elicit an experience of expressive qualities, and these features recur across objects in different combinations. This notion of ‘shared essential structure’ accounts for the common pairing of certain things: small, fast-moving objects with high-pitched sounds; slow-moving music with static or slow-moving camerawork with nothing moving quickly within the frame, and so on. Isomorphism within Gestalt psychology emphasizes a sense of cohesion and unity of elements into a distinct whole, which in video games is premised upon a sense of synchronization, or at least ‘fitting’ together unremarkably; matching, if not obviously, then perhaps on some deeper level of unity. According to Rudolf Arnheim, such a ‘structural kinship’ works essentially on a psychological level,Footnote 7 as an indispensable part of perceiving expressive similarity across forms, as we encounter similar features in different contexts and formulations. While this is, of course, bolstered by convention, it appears to have a basis in primary human perception.Footnote 8

One might make an argument that many video games are based on a form of spatial exploration, concatenating the illusory visual screen space with that of stereo sound, and engaging a constant dynamic of both audio and visual movement and stasis. This works through isomorphism and dynamic relationships between sound and image that can remain in a broad synchronization, although not matching each other pleonastically blow for blow.Footnote 9 A good example here would be the first-person shooter Quake (1996), where the player sees their avatar’s gun in the centre of the screen and has to move and shoot grotesque cyborg and organic enemies. Trent Reznor and Nine Inch Nails’ incidental soundtrack consists of austere electronic music, dominated by ambient drones and treated electronic sounds. It is remarkable in itself but is also matched well to the visual aspects of the gameworld. The player moves in 3-D through a dark and grim setting that mixes an antiquated castle with futuristic high-tech architecture, corridors and underwater shafts and channels. This sound and image environment is an amalgam, often of angular, dark-coloured surfaces and low-pitched notes that sustain and are filtered to add and subtract overtones. It is not simply that sound and image fit together well, but that the tone of both is in accord on a deep level. Broad synchronization consists not simply of a mimetic copy of the world outside the game (where we hear the gunshot when we fire our avatar’s gun) but also of a general cohesion of sound and image worlds which is derived from perceptual and cognitive horizons as well as cultural traditions. In other words, the cohesion of the sound with the image in the vast majority of cases is due to structural and tonal similarities between what are perhaps too often approached as utterly separate channels. 
However, apart from these deep- (rather than surface-) level similarities, coherence of sound and image can also vary due to the degree and mode of synchronization between the two.

Finger on the Trigger

While synchronization may be an aesthetic strategy or foundation, a principle that produces a particular psychological engagement, it is also essentially a technological process. Broadly speaking, a computer CPU (central processing unit) has its own internal clock that synchronizes and controls all its operations. There is also a system clock which controls things for the whole system (outside of the CPU). These clocks also need to be in synchronization.Footnote 10 While matters initially rest on CPU and console/computer architecture – the hardware – they also depend crucially on software.Footnote 11