Introduction

Machine learning, including deep learning with convolutional neural networks, is a form of artificial intelligence (AI) that allows computers to learn from data by recognising patterns and making predictions.Reference Jiang, Jiang, Zhi, Dong, Li and Ma1 Advances in computational speed, power and accessibility have allowed AI to grow in popularity and to be applied across many specialties in medicine and surgery.Reference Jiang, Jiang, Zhi, Dong, Li and Ma1 The expansion of data capture, storage and availability has transformed the capacity to interpret large volumes of examination, radiographic and laboratory results and to produce prediction probabilities. Applications of AI in medicine and surgery are diverse, including image classification, prediction of diagnosis, clinical decision-making and identification of patients at high risk of complications.Reference McBee, Awan, Colucci, Ghobadi, Kadom and Kansagra2,Reference Liu, Sinha, Unberath, Ishii, Hager and Taylor3

Computer-vision algorithms, such as image classification and object detection, represent clinically relevant applications of AI in medicine and surgery. Supervised learning models can be constructed using image datasets previously graded by experts. Examples of image classification algorithms include those that: differentiate skin lesions such as benign seborrheic keratosis from keratinocytic carcinomas and malignant melanomas;Reference Esteva, Kuprel, Novoa, Ko, Swetter and Blau4 categorise chest radiographs for pleural effusion, cardiomegaly or mediastinal enlargement;Reference Swaminathan, Qirko, Smith, Corcoran, Wysham and Bazaz5 distinguish tuberculosis from pulmonary nodules on chest radiographs;Reference Lakhani and Sundaram6 determine breast density on mammograms and classify tumours;Reference Wang and Khosla7 and evaluate retinal fundus photographs to detect diabetic retinopathy and macular degeneration.Reference Kapoor, Walters and Al-Aswad8

In otolaryngology, head and neck surgery, image classification algorithms have been constructed to: determine osteomeatal complex inflammation on computed tomography scans;Reference Chowdhury, Smith, Chandra and Turner9 identify squamous cell carcinoma and thyroid cancer in head and neck tissue samples;Reference Halicek, Little, Wang, Griffith, El-Deiry and Chen10 and detect endolymphatic hydrops in mouse cochleae using optical coherence tomography.Reference Liu, Zhu, Kim, Raphael, Applegate and Oghalai11 These experiments demonstrate the feasibility of constructing convolutional neural networks to address clinical questions, and provide a preliminary step towards implementing computer-vision algorithms in otolaryngology, head and neck surgery practice. Various imaging modalities are frequently used during clinical examination and out-patient follow up, providing an ideal opportunity to investigate the application of AI in otolaryngology, head and neck surgery.

Artificial intelligence has the potential to automate routine clinical tasks, improving efficiency, reducing expenditure and providing interpretations with accuracy comparable to that of specialists in under-resourced settings. Otoscopy is a routine procedure performed by trained practitioners to evaluate the tympanic membrane.Reference Rosenfeld, Shin, Schwartz, Coggins, Gagnon and Hackell12 In Australia, Indigenous children living in rural and remote areas have the highest rates of ear disease in the world.Reference Dart13 In Northern and Central Australia, 91 per cent of Indigenous children have some form of otitis media, including: suppurative otitis media (chronic squamous otitis media and chronic mucosal otitis media, 48 per cent); otitis media with effusion (31 per cent); acute otitis media without perforation (26 per cent); and acute otitis media with perforation (24 per cent).Reference Morris, Leach, Silberberg, Mellon, Wilson and Hamilton14

The key determinant of an accurate diagnosis is a trained clinician who can synthesise examination findings and history. Specialist otolaryngologists provide the most accurate diagnoses, but access to such specialists is limited in rural and remote communities, which may result in missed opportunities for diagnosis and treatment.Reference Gibney, Morris, Carapetis, Skull and Leach15,Reference Gunasekera, Miller, Burgess, Chando, Sheriff and Tsembis16 In rural and remote areas, most ear disease screening is performed by local nurses or Indigenous healthcare workers, who have less clinical experience and formal training than specialists, and limited access to specialist ear services (i.e. audiology, otolaryngology, hearing aid services).Reference Gibney, Morris, Carapetis, Skull and Leach15,17,Reference Gunasekera, Morris, Daniels, Couzos and Craig18 Undiagnosed tympanic membrane perforations increase the likelihood of middle-ear infection, and contribute to impaired hearing and language development.Reference Morris, Leach, Halpin, Mellon, Gadil and Wigger19 Following careful history-taking, otoscopy is the primary modality for identifying perforations.Reference Oyewumi, Brandt, Carrillo, Atkinson, Iglar and Forte20

Whilst otoscopic images can be readily gathered by healthcare workers with limited training and equipment,Reference Elliott, Smith, Bensink, Brown, Stewart and Perry21 subsequent interpretation of these images by specialist otolaryngologists may be delayed, impairing timely decision-making. Therefore, a computer-vision algorithm capable of identifying pathology has the potential to improve patient care in areas with limited access to otolaryngologists. As a preliminary step towards establishing a comprehensive tympanic membrane computer-vision tool, this study aimed to explore the feasibility of creating a proof-of-concept AI convolutional neural network computer-vision algorithm to identify tympanic membrane perforations of various sizes.

Materials and methods

Ethics

All patient data in this study were obtained from de-identified publicly accessible online databases. Ethical approval was obtained from the Menzies School of Health Research, Northern Territory, Australia (health research ethics committee number: 2019-3410), and the study was undertaken in compliance with the Helsinki Declaration of 1975.22

Image library

Otoscopic images of human tympanic membranes were retrospectively collected manually using de-identified online image repositories (Google Images; Google, Mountain View, California, USA). Search terms included ‘tympanic membrane’, ‘ear drum’, ‘otoscope’, ‘otoscopic’ and ‘perforation’. The images included in the otoscopic image dataset were required to have an unobstructed view of the entire tympanic membrane and illumination from a speculum light source. Prior to analysis, images were screened by the primary investigator (ARH). Ear canals obstructed with cerumen, grommets or foreign bodies, as well as cases of significant reconstruction or total perforation, were excluded. Images with over- or under-saturation from the light source that distorted interpretation were excluded. Images were cropped to remove unnecessary borders.

Presence and size of perforation

The ‘gold standard’ classification was defined by trained otolaryngologists, who independently classified each otoscopic image as with or without a tympanic membrane perforation.

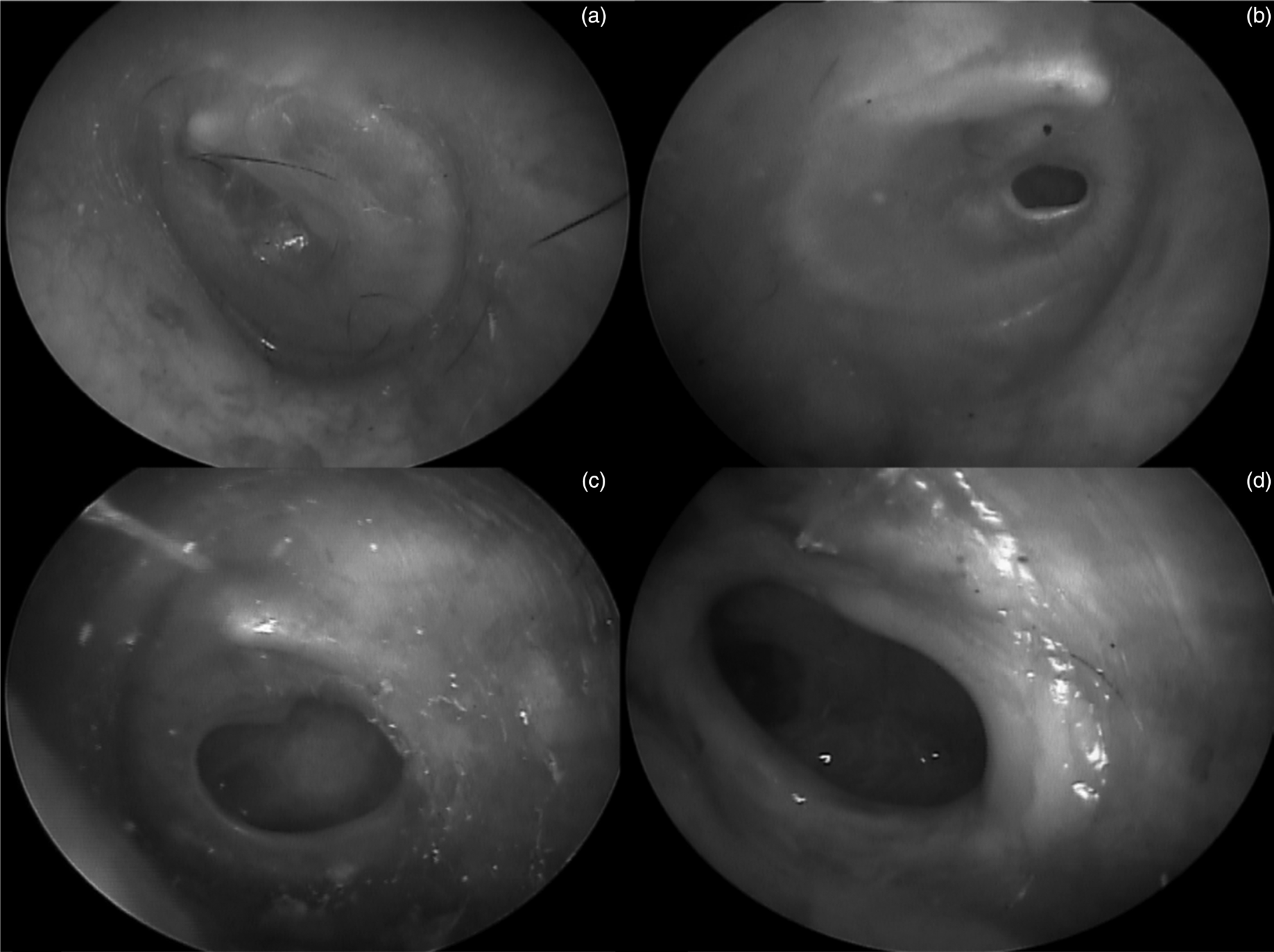

Two investigators independently estimated the size of each perforation. Perforation size was quantified as the ratio of the largest cross-sectional diameter of the perforation to the largest cross-sectional diameter of the entire tympanic membrane, with both diameters measured in pixels. Perforation size was categorised into three groups: (a) small – less than one-third, (b) medium – one-third to two-thirds, or (c) large – more than two-thirds of the entire tympanic membrane diameter (Figure 1). Measurements were performed using the GNU Image Manipulation Program, version 2.8.22 (GIMP Development Team, 2017).23
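The size rule above can be expressed as a short function. This is a minimal sketch, assuming both diameters have already been measured in pixels (for example with a measuring tool in GIMP) and entered by hand; the function name is hypothetical, and values of exactly one-third or two-thirds are treated as medium, following the 'one-third to two-thirds' wording. Integer cross-multiplication avoids floating-point rounding at the category boundaries.

```python
def classify_perforation(perforation_px: int, membrane_px: int) -> str:
    """Categorise a perforation by the ratio of its largest diameter to the
    largest diameter of the whole tympanic membrane (both in pixels).

    Hypothetical helper: small < 1/3, medium 1/3 to 2/3 inclusive, large > 2/3.
    """
    if membrane_px <= 0:
        raise ValueError("tympanic membrane diameter must be positive")
    if not 0 < perforation_px <= membrane_px:
        raise ValueError("perforation diameter must lie in (0, membrane diameter]")
    # Compare perforation/membrane with 1/3 and 2/3 using integers only.
    if 3 * perforation_px < membrane_px:
        return "small"
    if 3 * perforation_px <= 2 * membrane_px:
        return "medium"
    return "large"


if __name__ == "__main__":
    # Illustrative measurements only, not study data.
    for perf, tm in [(80, 300), (100, 300), (150, 300), (250, 300)]:
        print(perf, tm, classify_perforation(perf, tm))
```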

Fig. 1. Examples of tympanic membrane classifications from otoscopic images: (a) no perforation, (b) small perforation, (c) medium perforation and (d) large perforation.

Training and testing

The convolutional neural network architecture Inception-V3 (Google) was used. The methodology of convolutional neural networks and transfer learning has been described previously.Reference Krizhevsky, Sutskever and Hinton24 Transfer learning was implemented in the Python programming language using the TensorFlow deep learning library (Google).

Training images were used to train the convolutional neural network during the transfer learning process, and test images were used to evaluate the performance of the algorithm. Fifty test images were retrospectively gathered from tele-otoscopy outreach campaigns involving children in Wadeye, Northern Territory, Australia, between January 2018 and September 2019. As reported previously, the neural network was trained for 100 000 steps (training iterations, in each of which the model's predictions are compared with the true labels and the node weights are adjusted to reduce the error) at a learning rate of 0.05 (the size of the weight adjustment made at each step).Reference Chowdhury, Smith, Chandra and Turner9
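To make these training parameters concrete, the following is a minimal, dependency-free sketch of the final-layer retraining at the heart of transfer learning: the pretrained layers are treated as frozen, each image is represented by a fixed feature vector, and only a new output layer is fitted by gradient descent for a set number of steps at a set learning rate. It is not the authors' TensorFlow code. Assumptions: a single sigmoid output (perforated versus intact), batches of up to 10 images, and invented two-dimensional feature vectors standing in for Inception-V3 outputs; all function names are hypothetical.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_final_layer(features, labels, steps=100_000, learning_rate=0.05,
                      batch_size=10, seed=0):
    """Fit a new sigmoid output layer on frozen feature vectors using
    mini-batch gradient descent on the cross-entropy loss.

    features: equal-length feature vectors (stand-ins for the outputs of a
    frozen pretrained network); labels: 1 = perforated, 0 = intact.
    """
    rng = random.Random(seed)
    dim = len(features[0])
    weights = [0.0] * dim
    bias = 0.0
    indices = list(range(len(features)))
    for _ in range(steps):
        batch = rng.sample(indices, min(batch_size, len(indices)))
        grad_w = [0.0] * dim
        grad_b = 0.0
        for i in batch:
            x = features[i]
            p = sigmoid(sum(w * xj for w, xj in zip(weights, x)) + bias)
            error = p - labels[i]  # derivative of cross-entropy w.r.t. the logit
            for j in range(dim):
                grad_w[j] += error * x[j]
            grad_b += error
        n = len(batch)
        # One step: move each weight against its averaged gradient,
        # scaled by the learning rate.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias


def predict_proba(weights, bias, x):
    """Probability that feature vector x shows a perforation."""
    return sigmoid(sum(w * xj for w, xj in zip(weights, x)) + bias)


if __name__ == "__main__":
    # Toy 2-D "bottleneck" features, invented for illustration.
    X = [[2.0, 1.5], [1.8, 2.2], [2.5, 1.9],
         [-2.0, -1.0], [-1.5, -2.2], [-2.3, -1.7]]
    y = [1, 1, 1, 0, 0, 0]
    w, b = train_final_layer(X, y, steps=2_000)
    for x, label in zip(X, y):
        print(label, round(predict_proba(w, b, x), 3))
```

In a real pipeline the feature vectors would come from the frozen Inception-V3 network, and a softmax output would generalise this to more than two classes; the toy run uses 2 000 steps only to keep it fast.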

Performance evaluation

Model performance was assessed using accuracy and the receiver operating characteristic curve. Accuracy was defined as the percentage of test images correctly classified by the algorithm. The output probabilities for each test image were used to construct a receiver operating characteristic curve, and the area under the curve, with its 95 per cent confidence interval (CI), was used to evaluate the predictive capacity of the algorithm. Statistical analysis was performed using SPSS software (version 20, 2011; IBM, Armonk, New York, USA).
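The relationship between output probabilities, the receiver operating characteristic curve and the area under it can be sketched in plain Python. This illustrates the standard definitions only; it is not the SPSS procedure used in the study and does not compute a confidence interval. The scores and labels are invented, with 1 denoting a perforated and 0 an intact tympanic membrane, and the function names are hypothetical.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) pairs obtained by sweeping the
    decision threshold from the highest score down to the lowest."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def auc_pairwise(scores, labels):
    """Equivalent rank form: probability that a random perforated image scores
    higher than a random intact one, counting ties as one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]  # invented output probabilities
    labels = [1, 1, 0, 1, 0, 0]
    print(round(auc_trapezoid(roc_points(scores, labels)), 3))  # 0.889
    print(round(auc_pairwise(scores, labels), 3))               # 0.889
```

The trapezoidal area and the pairwise (Mann–Whitney) form agree, which is why the area under the curve can be read as the probability that a randomly chosen perforated image receives a higher score than a randomly chosen intact one.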

Results

The image library comprised 233 images (138 left and 95 right ears), consisting of 105 intact and 128 perforated tympanic membranes; 183 images were used for training and 50 for testing. There was no disagreement between the two otolaryngologists who classified the images manually. The test images (n = 50; 27 left and 23 right ears) consisted of 25 intact and 25 perforated tympanic membranes (7 small, 7 medium and 11 large perforations).

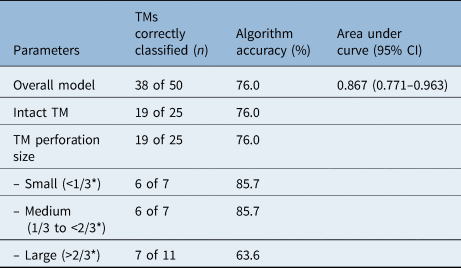

A total of 38 out of 50 test images were correctly classified, yielding an accuracy of 76.0 per cent (95 per cent CI = 62.1–86.0 per cent) and an area under the curve of 0.867 (95 per cent CI = 0.771–0.963) (Table 1). Nineteen of 25 intact tympanic membranes (76.0 per cent) were correctly classified as ‘intact’. Nineteen of 25 perforated tympanic membranes (76.0 per cent) were correctly classified as ‘perforated’.

Table 1. Comparison of accuracy and performance for algorithms to classify TM perforations by size

*Of the total tympanic membrane diameter. TM = tympanic membrane; CI = confidence interval

Stratifying algorithm responses by size of perforation, six of seven small perforations (85.7 per cent) and six of seven medium perforations (85.7 per cent) were correctly classified as ‘perforated’. Seven of 11 large perforations (63.6 per cent) were correctly classified as ‘perforated’.

Twelve of 50 images (24.0 per cent) were classified incorrectly, consisting of 6 false positives (i.e. tympanic membranes were incorrectly classified as perforated when intact) and 6 false negatives (i.e. tympanic membranes were incorrectly classified as intact when perforated). The false negative classifications (n = 6) were for images of one small, one medium and four large tympanic membrane perforations.
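The results above can be cross-checked from the reported counts. Treating 'perforated' as the positive class, the 19 correctly classified perforated membranes correspond to sensitivity and the 19 correctly classified intact membranes to specificity. The sketch below (class and variable names are illustrative) recomputes these figures and confirms that the per-size misses sum to the six false negatives.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # perforated, classified 'perforated'
    fn: int  # perforated, classified 'intact'
    tn: int  # intact, classified 'intact'
    fp: int  # intact, classified 'perforated'

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fn + self.tn + self.fp)

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


# Counts reported for the 50 test images.
test_set = ConfusionMatrix(tp=19, fn=6, tn=19, fp=6)

# Perforations correctly detected / total, by size category.
by_size = {"small": (6, 7), "medium": (6, 7), "large": (7, 11)}

if __name__ == "__main__":
    print(f"accuracy    {100 * test_set.accuracy():.1f}%")     # 76.0%
    print(f"sensitivity {100 * test_set.sensitivity():.1f}%")  # 76.0%
    print(f"specificity {100 * test_set.specificity():.1f}%")  # 76.0%
    for size, (correct, total) in by_size.items():
        print(f"{size:<7} {correct}/{total} = {100 * correct / total:.1f}%")
    # The per-size misses should add up to the six false negatives.
    assert sum(t - c for c, t in by_size.values()) == test_set.fn
```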

Discussion

The key step in diagnosing ear diseases such as perforation, acute otitis media or otitis media with effusion, following careful history-taking and before audiometry and tympanometry, is visual inspection of the tympanic membrane using an otoscope.Reference Rosenfeld, Shin, Schwartz, Coggins, Gagnon and Hackell12 The accuracy of otoscopy in diagnosing ear disease depends on the experience of the practitioner.Reference Oyewumi, Brandt, Carrillo, Atkinson, Iglar and Forte20,Reference Asher, Leibovitz, Press, Greenberg, Bilenko and Reuveni25,Reference Buchanan and Pothier26 Although audiologists can recognise some tympanic membrane conditions with accuracy comparable to that of otolaryngologists, healthcare workers with less training have significantly lower accuracy.Reference Gunasekera, Miller, Burgess, Chando, Sheriff and Tsembis16 The consequences of inaccurate diagnosis are considerably more severe in settings with limited access to otolaryngologists and audiologists.Reference Myburgh, van Zijl, Swanepoel, Hellström and Laurent27

This study demonstrates the feasibility of using a publicly available convolutional neural network architecture and images from online repositories to develop a classification algorithm that identifies tympanic membrane perforations, and of testing such an algorithm on otoscopic images collected in a rural setting. The algorithm identified intact and perforated tympanic membranes with similar accuracy. It detected small and medium perforations more reliably than large ones: most false negative classifications (i.e. classified as intact when a perforation was present) were of large perforations (greater than two-thirds of the tympanic membrane diameter).

The differences in accuracy between perforation sizes may reflect how the algorithm processes the image. This image classifier was constructed to view the entire otoscopic image, rather than focus on key components (e.g. malleus, annulus). It is plausible that, as perforation size increased, the algorithm was unable to recognise that the deeper structures exposed to view represented an abnormal rather than an intact tympanic membrane. For smaller perforations, the algorithm likely recognised discrete aberrations in the consistency of the otoscopic image and considered these defects to represent perforations. This finding may seem counter-intuitive, as in clinical practice larger perforations are often easier to detect than smaller ones.

To circumvent this limitation, object detection and segmentation techniques may be used in future iterations of the algorithm. These would allow the algorithm to be trained to search for specific defects in the tympanic membrane, rather than reviewing the entire otoscopic image. A previous study using object detection and segmentation achieved an accuracy of 81.4 per cent in detecting suspicious tympanic membrane perforations.Reference Seok, Song, Koo, Kin and Choi28 The authors did not measure or report perforation size. Object detection and segmentation may be promising techniques for improving the detection of tympanic membrane perforations, but perforation size warrants consideration, as the extent to which it affects performance is unclear.

Image classification algorithms may be most useful for detecting global pathology of the tympanic membrane, such as acute otitis media. For example, previous image classification applications using Inception-V3 and MobileNets yielded accuracies of 82.2 per cent and 80 per cent, respectively, for detecting acute otitis media, based on 108 images.Reference Kasher29 Another image classification application for acute otitis media, using decision tree analysis, achieved a diagnostic accuracy of 80.0–82.35 per cent, based on 74 images.Reference Myburgh, van Zijl, Swanepoel, Hellström and Laurent27

As this was a proof-of-concept study, there are several limitations, which will be addressed in future large-scale work. Firstly, images gathered from online repositories may not be comparable to images gathered in primary care settings. Nevertheless, publicly available images were used for preliminary development and testing, and future work aims to expand the image library prospectively, which may yield greater accuracy. The images were optimised and did not include features often seen in the clinical setting, such as wax. The images also did not represent the ultimate target group of rural and remote Indigenous children. More images, standardised collection methods and a greater variety of perforations could improve the algorithm's ability to identify true perforations.

In addition, accuracy varied by perforation size, which suggests that the algorithm may require more training images before testing. Accuracy may be improved in future experiments through segmentation, image augmentation techniques and hyperparameter tuning.

Additionally, this study used still images rather than a series of images or a video. In practice, clinicians can evaluate the tympanic membrane from several angles, for a longer duration, and with pneumatic otoscopy. It is plausible that the diagnostic accuracy of the algorithm could be improved by using a series of images or a video.

Otoscopy was performed by multiple practitioners, so the angle, depth, brightness and clarity of the images may have been inconsistent. Images captured in a standardised manner might yield greater diagnostic accuracy. However, the intended clinical value of this algorithm is to improve the diagnoses made by practitioners without specialist training in a variety of settings. Therefore, training on images collected from numerous sites and practitioners could improve performance by making the algorithm more robust to image heterogeneity.

Finally, the gold standard was based on otolaryngologists’ assessment of images. Ideally, independent test results, such as tympanometry or findings recorded at the time of examination, would have been used to validate the otolaryngologists’ assessment of the images.

• This study applied artificial intelligence (AI) methods, including machine learning and convolutional neural networks, to develop an image classification algorithm to detect tympanic membrane perforations

• A total of 233 otoscopic images (183 training, 50 testing) were used, and the ‘gold standard’ (‘ground truth’) classification of perforations (small, medium or large) was defined by otolaryngologists

• The algorithm identified intact and perforated tympanic membranes with equal accuracy (76.0 per cent each; overall accuracy 76.0 per cent, 95 per cent confidence interval (CI) = 62.1–86.0 per cent)

• The model showed good discriminative performance (area under the curve = 0.867, 95 per cent CI = 0.771–0.963)

• This study represents a preliminary step towards applying AI to ear disease screening

• This may be most applicable for healthcare workers in rural and remote communities distant from otolaryngology services

This study applied a machine learning convolutional neural network algorithm to identify tympanic membrane perforations on otoscopic images. The use of AI in medicine and surgery is expanding rapidly, with emphasis on computer vision, predictive outbreak models, natural language processing algorithms, and automated planning and scheduling applications.Reference Wahl, Cossy-Gantner, Germann and Schwalbe30 In otolaryngology, AI has the potential to be directed towards public health problems that persist in under-resourced communities. For example, significant government funding has been directed to ear disease in Aboriginal Australians through various grants, outreach campaigns and public awareness initiatives, without a significant improvement in disease incidence.Reference Kenyon31

Telemedicine has been shown to improve screening rates and community engagement, and to reduce expenditure.Reference Nguyen, Smith, Armfield, Bensink and Scuffham32 However, telemedicine requires experienced otolaryngologists to invest time in reviewing otoscopic findings. An alternative may be to incorporate AI into these strategies by automating otoscopic image review in real time. An accurate and reliable AI algorithm could review otoscopic images quickly and efficiently, with accuracy comparable to that of experienced otolaryngologists, as a form of rapid screening to triage patients requiring further review. An extension of this could be a smartphone application that provides non-specialist healthcare workers in rural settings with instantaneous diagnoses, potentially enabling early treatment and specialist referral.

To reach this goal, significantly more rigorous and comprehensive investigation is required for computer-vision algorithms. This would include a much larger image library, co-ordination across urban and rural settings, training in multiple otological conditions, in addition to tympanic membrane perforation, and resolution of discrepancies in the current algorithm. Future endeavours are underway to prospectively collect photographs taken by otolaryngologists using endoscopes and handheld otoscopes, in order to expand the image library, refine model performance and fine-tune the algorithm for application using various instruments.

Conclusion

This study demonstrates the feasibility of constructing a proof-of-concept AI convolutional neural network algorithm to identify tympanic membranes with or without perforation. However, accuracy is affected by perforation size. Future endeavours are warranted to establish a comprehensive library of tympanic membrane pathology and construct a robust algorithm for use in daily practice. The application of an image classification algorithm may be useful to screen for ear disease in under-resourced communities distant from otolaryngology services.

Competing interests

Raymond Sacks is a consultant for Medtronic.

Acknowledgments

We sincerely appreciate and acknowledge Dr Graeme Crossland, Dr Hemi Patel, Dr Robyn Aitken, Christian Aguilar, Royal Darwin Hospital, Top End Health Service and the Department of Health for the Northern Territory, Australia. We thank the community councils and traditional owners for allowing this research in their communities. We acknowledge the assistance of many clinical and administrative staff in the Top End Health Service for their efforts collecting and managing clinical data used for this study.