I would not want all social scientists to adopt Richard Fenno’s techniques for open-ended participant observation—and neither would Fenno. We would lose much if this were done. But we also lose much when we abide by disciplinary norms that discourage scholars from sticking a toe in the water of qualitative participant observation…

—John R. Hibbing (2003, xii)

As long as political scientists continue to study politicians, some of us certainly will want to collect data through repeated interactions with these politicians in their natural habitats. If that is so, we should be as self-conscious as we can be about this kind of political science activity and about the relationship between political scientists and politicians it entails. And not just because people doing this kind of research can benefit but also because, through their lack of understanding, political scientists who do not do this kind of research can unintentionally impede the work of those who do.

—Richard Fenno (2003, 250)

At one time, in-depth qualitative research was central to the study of American political institutions. This type of research, commonly called “ethnography” in other disciplines and famously dubbed “soaking and poking” by Fenno (2003), typically combines participant observation with in-depth interviews of political actors. Many of the foundational studies of American political institutions drew on this approach. For instance, studies by Matthews (1960), Huitt (1961), and Fenno (1966, 1973, 2003), among others, were based heavily on these scholars’ repeated interactions with lawmakers and formed much of the foundation for later studies of the House and the Senate. Neustadt’s (1991) theory of presidential power, which continues to shape how political scientists study the presidency, grew out of his time working in the Truman (and later Kennedy) White House. In the states, the voluminous work of Rosenthal (1981), who often took an in-depth qualitative approach to understanding state legislatures, shaped how scholars study state institutions.

Since then, however, the American politics subfield has become more quantitative. To be clear, the increased use of quantitative methods, and their growing sophistication, is not a negative. We should, however, be concerned about a parallel decline in the use of qualitative approaches. Scholars have not abandoned them altogether. Recent decades have produced important and insightful studies of Congress (Baker 2000; Beckmann 2010; Curry 2015; Hall 1996; Hansen 2014; Sellers 2009), campaigns (Glaser 1996a; Parker 2014), local politics (Gillespie 2012), welfare policies (Soss 2000), and the Supreme Court (Miller 2009; Perry 1991) that draw on in-depth qualitative research. Yet such studies are increasingly rare in research on American political institutions.

In this essay I discuss the declining use of in-depth qualitative approaches, such as elite interviewing and participant observation, to study our political institutions. My argument is simple: methodological pluralism is good, and the declining use of these approaches is bad. Different approaches can yield different insights, and a decreased use of any approach may bias us away from certain findings, theories, and discussions. As a field, we should be cognizant of this and devise ways to protect methodological pluralism.

DECLINING METHODOLOGICAL PLURALISM

Elite interviewing and participant observation are the two methods most commonly used for in-depth qualitative research, although they are not always used in an in-depth (i.e., ethnographic) way. This section assesses the general decline of these methods in scholarship on American political institutions, but it does not distinguish between more and less in-depth uses and discussions of them. Presumably, the overall decline discussed here holds for all approaches to using these methods.

Figure 1 illustrates one way to measure the decline of these methods. Using JSTOR searches, I counted the articles in select journals that regularly publish research on American political institutions. The searches focused on five institutions (Congress, the presidency, the bureaucracy, the courts, and state legislatures), and the results are compiled by decade beginning with the 1960s (the “2010s” cover 2010–2015). First, I searched for variations of each institution’s name in article titles. Second, I repeated each search, adding qualifiers for articles mentioning “interviews” or “participant observation” in the text. Dividing the second count by the first produces the percentages shown. (The specific journals and search language are listed in the notes for figure 1.) This approach identifies not only articles that use these methods but also those that mention them at any point, whether in a literature review, as a critique, or as a methodological approach. It therefore measures not only the use of these methods but also how frequently scholars discuss research that uses interviews and participant observation. In this way, the results approximate how prominent these methods are in academic discussions of these topics, at least in journal articles.

Figure 1 “Interviews” and “Participant Observation” in Articles on American Political Institutions Published in Select Peer-Reviewed Journals

Notes: The full list of journals searched includes American Journal of Political Science, American Political Science Review, British Journal of Political Science, Journal of Politics, Journal of Public Administration Research and Theory, Law and Society Review, Legislative Studies Quarterly, Perspectives on Politics, Political Research Quarterly, Political Science Quarterly, Polity, Presidential Studies Quarterly, PS: Political Science & Politics, Public Choice, Publius, Social Science Quarterly, State and Local Government Review, State Politics & Policy Quarterly, and The Supreme Court Review (as well as any predecessor journals). Each search looked for the name of the institution in the article title and then for the words “interview,” “interviews,” “participant observation,” or “participant-observation” in the full text. For Congress, the specific words searched for in article titles were “Congress,” “House,” or “Senate.” For Presidency, the specific words searched for in article titles were “President” or “Presidency.” For Bureaucracy, the specific words searched for in article titles were “Bureaucracy,” “Bureaucratic,” “Agency,” or “Executive.” For State Legislatures, the specific words searched for in article titles were “State Legislature,” “State Legislators,” or “State Legislative.” For Courts, the specific words searched for in article titles were “Court,” “Courts,” “Judiciary,” or “Judicial.”
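To make the two-step counting procedure concrete, here is a minimal Python sketch. It assumes the article metadata (year, title, full text) has already been exported into memory; the `Article` class and `mention_shares` function are hypothetical illustrations, not JSTOR's interface or the author's actual tooling, and plain regular-expression matching only approximates JSTOR's search engine. In this sketch a title can count toward more than one institution (for example, one containing both "Senate" and "Court").

```python
import re
from collections import defaultdict
from dataclasses import dataclass

# Title terms per institution, as listed in the notes to figure 1.
TITLE_TERMS = {
    "Congress": ["Congress", "House", "Senate"],
    "Presidency": ["President", "Presidency"],
    "Bureaucracy": ["Bureaucracy", "Bureaucratic", "Agency", "Executive"],
    "State Legislatures": ["State Legislature", "State Legislators", "State Legislative"],
    "Courts": ["Court", "Courts", "Judiciary", "Judicial"],
}

# Full-text terms, also from the notes to figure 1.
METHOD_TERMS = ["interview", "interviews", "participant observation", "participant-observation"]


def whole_word_pattern(terms):
    """Compile a case-insensitive regex matching any term as a whole word or phrase."""
    alternatives = "|".join(re.escape(term) for term in terms)
    return re.compile(r"\b(?:" + alternatives + r")\b", re.IGNORECASE)


TITLE_PATTERNS = {inst: whole_word_pattern(terms) for inst, terms in TITLE_TERMS.items()}
METHOD_PATTERN = whole_word_pattern(METHOD_TERMS)


@dataclass
class Article:  # hypothetical record for one exported article
    year: int
    title: str
    full_text: str


def mention_shares(articles):
    """Map (institution, decade) to the percentage of title-matched articles
    whose full text mentions interviews or participant observation."""
    totals = defaultdict(int)    # step 1: articles whose title names the institution
    mentions = defaultdict(int)  # step 2: the subset that also mentions the methods
    for article in articles:
        decade = article.year // 10 * 10  # e.g., 2013 -> 2010
        mentions_method = METHOD_PATTERN.search(article.full_text) is not None
        for institution, pattern in TITLE_PATTERNS.items():
            if pattern.search(article.title):
                totals[(institution, decade)] += 1
                if mentions_method:
                    mentions[(institution, decade)] += 1
    return {key: 100.0 * mentions[key] / count for key, count in totals.items()}
```

Because the denominator counts only title matches, each percentage is conditional on an article being about that institution, mirroring the two searches described in the text.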

The results confirm what is already known about the decline of these approaches, but they are striking nonetheless. The mere mention of these methods has declined since the 1970s. Across all of the institutions, academic discussion peaked in that decade, with 33% of articles mentioning either interviews or participant observation. By the 2010s, that proportion had reached an all-time low—only 19%. Other than in the study of the courts, the decline is sharp. For instance, 42% of articles about Congress mentioned these methods in the 1970s, compared to only 15% since 2010. Almost 50% of all published articles about the bureaucracy mentioned these approaches in the 1960s. So far in the 2010s, that proportion is only 17%. Clearly, these methods have become a far less prominent part of academic discussions and research programs in the American politics subfield.

EXPLAINING THE DECLINE

There are numerous reasons for the decline in in-depth qualitative research on American political institutions. Increased computing power, the rise of the Internet, and the availability of quantitative data on various political phenomena (e.g., congressional roll-call voting records) have made quantitative approaches more practicable. Moreover, gaining access to political elites clearly has become more difficult as American lawmakers and politicians have grown less willing to participate in interviews and other forms of direct study (Baker 2011; Beckmann and Hall 2013; Goldstein 2002; Parker 2015). This article focuses on an arguably misguided reason: the perception that in-depth qualitative research is too difficult and time consuming to conduct and, even when done well, is less systematic than other approaches and likely to produce biased and unrepresentative findings. As a consequence, engaging in these methods is viewed as hazardous to academic success, especially for young scholars who must complete dissertations and/or obtain tenure.

Scholars of American politics who write about participant-observation approaches, even those who support them, often reflect this view of in-depth qualitative research as too difficult or unsystematic. Footnote 1 Perhaps because of its prominence, Fenno’s (2003) book, Home Style: House Members in Their Districts, is often the focal point of these discussions. In this pioneering study, Fenno sought to understand how members of Congress represent their constituents by conducting in-depth participant observation with 18 representatives in their congressional districts. His book was universally praised for its insights into the representational behavior of lawmakers; however, its methodological approach, by its very nature, often is presented as unsystematic and nonreplicable. For instance, in his foreword to the 2003 edition of Fenno’s book, Hibbing called Fenno’s method “immensely time-consuming and difficult” and stated that it is “almost impossible to work extended participant observation even into the somewhat flexible schedules of academics” (Hibbing 2003, xi). Furthermore, Hibbing suggested that the skills needed to do it well are idiosyncratic and beyond most political scientists’ abilities:

And to be done really well it requires great personal skills. Rereading Home Style only deepened my long-held belief that Dick Fenno is amazingly good with people. He has an uncanny ability to read them and the situations they are in, to know when to talk and when to be quiet, to know when to be patient and when to make his move, to know when to be supportive and when to be distant. These are skills that cannot be learned from a 50-page appendix, no matter how insightful it may be (Hibbing 2003, xi).

Concerns about difficulty are merely a precursor to an even more troubling attitude: that these methods are, by their very nature, unsystematic and will produce biased and unrepresentative findings. In discussing his more quantitative approach to studying the representational behavior of lawmakers, Grimmer (2013) provided a typical example of this view. He argued that qualitative approaches are flawed because we cannot interview or observe every lawmaker, or even a representative sample of lawmakers, and that as a result such studies cannot produce systematic knowledge:

Some members of Congress (if not most) are unreceptive to requests to be observed. Even for those representatives included in the sample, we can only observe their activities on a small subset of days. Other problems with systematic participant observation imply that even when executed well, this methodology is unable to provide systematic and valid measurements…the observer might distort the legislator’s behavior…[and] interviews rely on the imperfect memory of humans that may fail to recall actions of particular importance (Grimmer 2013, 28).

To be sure, these attitudes are held not only about Fenno’s work, or even only about participant-observation research, but also about interview research and other in-depth qualitative approaches to understanding American political institutions. For instance, a review of Miller’s (2009) study of the interactions between Congress and the courts criticizes it for drawing solely on 44 interviews as evidence, stating that his results may be “simply a function of the small sample he interviewed for the book” (Frederick 2009, 450). Similarly, Perry has recounted senior colleagues dismissing the in-depth interview research for Deciding to Decide (1991) with statements such as “Well, if you want to spend your time doing this that’s fine, but it’s not social science” and “This is mindless gossip mongering, and ipse dixit inside dopesterism” (quoted in Kritzer 1994).

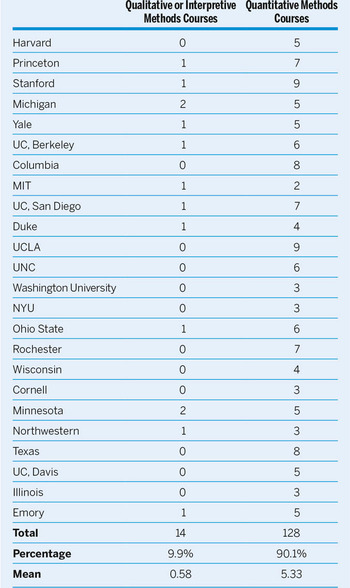

The supposed superiority of quantitative analysis is well ingrained in our disciplinary culture, and this is reflected in graduate-school curricula. Table 1 lists the number of graduate courses focused on either quantitative or qualitative/interpretive methods that 25 prominent political science departments currently offer or recently offered. Footnote 2 Across these programs, more than five courses on average were offered in quantitative methods and fewer than one in qualitative or interpretive methods. Furthermore, about half of the programs offered no course dedicated to qualitative or interpretive methods.

Table 1 Graduate Courses Focusing Entirely on Quantitative or Qualitative/Interpretive Methods in Select Political Science PhD Programs

Notes: Counts were obtained by going to each department’s website and accessing either a course catalog or available information on courses from recent years and by reading course titles and descriptions. Courses that seemed to focus entirely on either quantitative or qualitative methodological approaches were counted in the appropriate column. The specific courses counted for each department can be obtained from the author.

A MISTAKEN CRITIQUE

Of course, these criticisms of in-depth qualitative research are largely mistaken. Studies using these approaches are not unsystematic, and there is a stunning lack of evidence that they lead to biased findings.

For instance, critiques such as Grimmer’s (2013) never offer citations or detailed discussion to support the claim that participant-observation or interview evidence leads to biased findings when representative samples of political elites are not drawn. This criticism is treated as self-evident, but it is far from it. For a “sample” to produce biased findings, there must be important and theoretically relevant differences between what is and is not studied, and the researcher would have to ignore those differences when drawing inferences. Suggesting that knowledge gained from studying a small set of lawmakers might be biased, because those willing to be studied might differ from those who are not, is not a refutation. It is certainly a claim worthy of reflection, but merely raising it is not an indictment. To date, no one has demonstrated that the results of Fenno (2003), Perry (1991), Huitt (1961), and many others are biased because of whom these scholars were able to observe. This should lead us to question such claims against participant-observation and interview research.

Yet these claims gain credence because their proponents often apply a positivist methodological standard to research that is not necessarily positivist. Footnote 3 Because most PhD programs provide methodological training that is overwhelmingly positivist and quantitative, many political scientists are unaware of the various philosophical approaches to empirical research. Positivist and interpretivist approaches draw on different orientations and standards (see Schwartz-Shea and Yanow 2012, 91–114, for a more thorough overview). Positivist research emphasizes front-loaded research designs and values the validity, reliability, replicability, objectivity, and falsifiability of tests conducted using data representative of a larger universe of phenomena. Footnote 4 For in-depth interpretive qualitative research, these standards typically cannot apply and, in many ways, do not make sense. Interpretive approaches emphasize meaning-making processes and value flexible research designs that allow researchers to explore unforeseen twists and turns and to develop knowledge as the research process unfolds. The goal of interpretive qualitative research is thus to develop an “ethnographic sensibility” (Schatz 2009), that is, for the researcher to learn how to think and feel like those being studied. Researchers using this approach have various methods for ensuring that this process is rigorous and that the conclusions drawn are defensible. These include extensive field notetaking and reflexivity, that is, continual consideration of how researchers’ own circumstances and sense making influence the conclusions they draw.

Both positivist and interpretive approaches have strengths and weaknesses, benefits and limitations. However, because critics of in-depth qualitative research often are unaware of the rigor and standard practices of interpretive approaches, such research is viewed as less scientific and more prone to human error. It is true that interviewing and participant observation require a researcher to make judgment calls, and that human error is a factor. But that also is true of positivist and quantitative approaches, in which human error may enter decisions about data collection, choice of measures, coding, inclusion or exclusion of variables, and interpretation of findings. Neither approach is immune to human error.

Whereas the rigor of in-depth qualitative approaches is generally underappreciated in the study of American institutions, the difficulty of using these methods is overstated. As Beckmann and Hall (2013, 201) stated, “[d]ifficult is not impossible.” Scholars using these approaches have developed various strategies to gain access to institutions and political elites, including beginning with background interviews or with an acquaintance who can serve as a reference and open doors to elites (Beckmann and Hall 2013; Curry 2015); developing relationships and building trust with politicians before the period of study begins (Parker 2015); forming mutually beneficial arrangements between researcher and participant (Curry 2015; Gillespie and Michelson 2011); and staying alert and keeping schedules flexible to take advantage of opportunities in the field as they arise (Glaser 1996b). Interviewing and participant observation in American political institutions can still be time consuming, but with these and other strategies, they are less daunting than is typically assumed.

THE DECLINE IS DOING HARM

Different approaches to studying a subject can produce different insights. In-depth qualitative approaches using interviews or participant observation are well suited to illuminating important political processes and patterns that are neither publicly observable nor part of the public record. They also can provide insights into the motivations of elites that are difficult to ascertain from quantitative data alone.

It is interesting, and perhaps paradoxical in light of their declining use, that scholars of American political institutions seem to recognize the value of in-depth qualitative research. Table 2 provides data on book awards presented by the American Political Science Association’s (APSA’s) organized sections since 2000, as well as the percentage awarded to studies that use elite interviews or participant observation as a form of evidence. Books that use these methods continue to be recognized at meaningful rates: since 2000, more than 25% of award-winning books drew on these qualitative methods of inquiry. This may not seem a large share, given that almost 75% of the awarded books used other methods. However, at a time when discussion of these methods is declining in journals, the fact that committees of scholars continue to honor books using in-depth qualitative approaches says something positive about the value they find in these studies, despite the methodological critiques.

Table 2 Elite Interviews, Participant Observation, and APSA Section Book Awards Since 2000

Notes: This table counts all studies receiving the respective awards that were related to the topic of American politics. Each awarded study was coded as including or not including interview or participant-observation evidence.

Awards notwithstanding, the field’s turn away from in-depth qualitative research risks several detrimental developments. Certain topics are difficult to study using quantitative data alone because much elite behavior in our institutions is neither publicly observable nor quantifiable. Recent studies, for instance, have shown how interview and participant-observation approaches can improve our understanding of congressional communications and messaging strategies (Lee 2016; Sellers 2009), party and leadership influence in Congress (Curry 2015; Hansen 2014), the representational behavior of lawmakers on the campaign trail (Parker 2014), the relationship between Congress and the courts (Miller 2009), presidential management of the federal bureaucracy (West 2006), and more. These studies provide insight into the motivations of political actors, decisions made outside public view, and interactions among key officeholders that otherwise are unobservable. If the use of these methods continues to decline, we may stop studying certain topics altogether, and we may also lose insights and information that are difficult to uncover using purely quantitative approaches.

Perhaps as important, in-depth qualitative approaches enhance our ability to speak to political practitioners about our research. These forms of research occur “on the ground” among the elites and practitioners we study, helping us gain a sensibility about their world and its cultural particularities, its rhythms and jargon, and, ultimately, what is important to them. If “pracademics” Footnote 5 is a goal for many in the field, then incorporating knowledge gained from in-depth qualitative studies can help develop insights that relate closely to the worlds of political elites and may even inform their decisions (Gillespie and Michelson 2011). Moreover, in-depth qualitative approaches can build goodwill between academics and practitioners through direct and repeated interactions, which can make each group more attentive to the messages of the other.

SUGGESTIONS FOR THE FUTURE

How can we ensure that the future study of American political institutions continues to involve in-depth qualitative approaches? Because limited training in and familiarity with these methods are a key problem, efforts should be made to expand access to courses and mentorship. Indeed, scholars hoping to conduct interview or participant-observation research often must rely on informal mentorship to learn these methods (Glaser 1996b). Informal mentorship is crucial, but formal mentorship and instruction also are important. Currently, opportunities are provided for graduate students and junior faculty through the Institute for Qualitative and Multi-Method Research, held annually at Syracuse University, Footnote 6 and through the Methods Café (Yanow and Schwartz-Shea 2007) at the APSA and Western Political Science Association annual meetings. Yet more can be done.

Methods training need no longer be restricted by geography. With a webcam and an Internet connection, scholars can host classes, discussions, and symposia online, providing training to professors and students in departments and programs thousands of miles away. The Qualitative and Multi-Method Research section of APSA, for example, could coordinate these activities and give researchers who lack the time or funding to attend programs at Syracuse University or annual conferences the opportunity to learn about in-depth qualitative and interpretive approaches.

In addition to training, the use of these methods can be expanded by improving political scientists’ access to governmental institutions and political elites. Currently, some programs, such as the APSA Congressional Fellowship Program (CFP), place political scientists with members of Congress to both work in and observe the inner workings of their offices. The CFP provides an immersive experience, enabling scholars to spend at least nine months in a congressional office; however, the program has been available to only five to eight (or fewer) political scientists a year. Other fellowship programs exist in Washington, but most are intended for people outside political science or are open to applicants from many fields, which limits opportunities for political scientists. As a field, we should consider bolstering existing programs and creating new ones.

Access can be improved in other ways as well. Those of us who engage directly with political practitioners should remember to be good citizens. How we act and interact with politicians will influence their opinion of political science and political scientists. To preserve or even improve the opportunities that others will have to gain access, we should make a good impression. This does not mean changing what we find or write in order to flatter politicians; rather, we should be cordial, polite, and respectful in our interactions.

The purpose of this article is not to advocate in-depth qualitative approaches as a superior method for studying American political institutions. Rather, I want to communicate that we should strive for diversity in our approaches in order to yield different insights. Interviewing and observing political elites is no longer a common way to seek these insights, which is a detrimental development. It is my sincere hope that future generations of scholars will reverse this trend.