Enclosure, Continuity

The ceiling and walls are crisp white. Fluorescent lighting, no windows. There’s a puzzle-pieced black mat laid down. Static charge everywhere. No sprung floor. This is not a dance studio. One light switch on either side, two desktops, four robots, and many, many buttons. It is Monday, 5 June 2017, my first day in residence at the lab.[1] I have another four weeks before I fly back to my home in Brooklyn. I am a dancer and a choreographer, and today I begin making works with robots. To me, a robot is an entity that senses its environment, computes conclusions, and actuates through space. It is not something else first, like a smartphone, a toy, a car.

Prior to this summer, I had surprisingly few fictional references and little theoretical knowledge about robots. This naïveté about robots as characters or threats, combined with my Bay Area “techno-utopianism”[2] and intermediate programming experience, led me to approach this residency as a curious neophyte seeking new choreographic methods and performance partners. I was instinctively drawn to robots because I could make them move.

Amy LaViers (the lab director), Ishaan Pakrasi (a lab member), and I stand in an open triangle.[3] The three of us prototype the first “movement hour” together. I will be teaching movement hour four days a week to the dozen members of the lab, all engineering students. We each perform an exaggerated walk across the room and describe each other’s gait. LaViers skitters quickly, hair wisping behind her ears, highlighting her unswerving horizontal focus. Pakrasi bows his knees out, sending his center forward and down, counterbalanced by his dangling arms behind. I swing my legs and arms in a circular, gathering motion, collecting air in my limbs and redirecting it to my meridian. This first task of traveling across a room feels immediately symbolic of my foreignness: arriving in a new domain and selecting distinctive motions to take me there.

I acknowledge all of the robots that first day. I jostle the SoftBank Nao robot out of a Styrofoam box. Nao is primarily an educational and research robot, used, for example, to teach children to program. It was released to the public in 2008, and thousands of Nao robots are in use across dozens of countries today (Saenz 2010; Dent 2017; Guizzo 2011; Aldebaran 2014). There are six versions of the Nao, and I use the fifth.

I hold this amalgamation of plastic, motors, cables, and circuit boards in my hands. There’s a baby-like weight to it, maybe 12 pounds, 24 inches tall. The places where you expect it to move, it does. The “shoulder” joint rotates the “arm” in a 250-degree arc. A “knee” connects to a “calf.” I hold it under the “armpits,” supporting the “torso” and letting the “legs” dangle out of the “hip sockets.” It has the shape of a human, so I use human nomenclature to describe it. I lack other words for these parts. The robot doesn’t have sitz bones, yet it will certainly be balanced when I place it on its equivalent rear end on the floor. Other words could describe the physical joints: I name the robot’s hips “piba” and the ankles “loune.” I generate these nonsensical words as a means of divorcing the robot’s form from my own, to name it as the manufactured and inorganic body it is.

Nao is smooth. Its carapace is scratchless, pristine, in opaque white and red. I can wrap my hand around the forearm. The foot platforms are wide and raised. I’m reminded of shortbread cookies, the fixed slice height all the way around. There’s a semblance of two irises on either side of its face. Multicolored lights surrounding these irises flash in varied bands. I’m unsure what the colors mean. I wade into a state of suspended discomfort. I have never needed to read the signals of a humanoid form in laborious detail like this.

Nov Chakraborty (another student) joins us after LaViers goes to her office. We three are soon crouched around a single laptop on the black mat floor. Chakraborty programs Nao to say “Hello, Catie” and wave to me. When the high-pitched greeting breaks our awkward silence, Pakrasi, Chakraborty, and I quiver. Our shyness is acute.

Next, they ask me what to do with it. We compose a short solo for the robot together. I create a few gestures and positions; we manipulate the robot in Animation Mode to shape them, placing its baby limbs into formation and pausing to hit “record” on the laptop each time. We add time stamps to designate when it should arrive at each shape. The solo is now a series of robot positions and orientations, recorded in software called Choregraphe, where they appear as illustrated boxes with interconnected lines. When I press “upload,” the solo takes four seconds to compile and transfer to the robot over the network cable.
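The solo described here is, in effect, a list of keyframes: joint positions paired with time stamps, with the robot filling in the motion between them. A minimal sketch of that idea follows, assuming straight-line (linear) interpolation between consecutive keyframes. Choregraphe's own interpolation may well differ, and the joint names, angles, and times below are hypothetical, not the poses from this solo.

```python
from bisect import bisect_right

# Hypothetical keyframes: (time in seconds, {joint name: angle in degrees}).
# These values are illustrative only; they are not the poses from the solo.
KEYFRAMES = [
    (0.0, {"RShoulderPitch": 90.0, "LShoulderPitch": 90.0}),
    (1.0, {"RShoulderPitch": 0.0, "LShoulderPitch": 90.0}),
    (2.5, {"RShoulderPitch": -45.0, "LShoulderPitch": 30.0}),
]


def pose_at(t, keyframes=KEYFRAMES):
    """Return the pose at time t by linearly interpolating between keyframes."""
    times = [time for time, _ in keyframes]
    # Before the first keyframe or after the last one, hold the nearest pose.
    if t <= times[0]:
        return dict(keyframes[0][1])
    if t >= times[-1]:
        return dict(keyframes[-1][1])
    # Find the pair of keyframes that surrounds t: times[i - 1] <= t < times[i].
    i = bisect_right(times, t)
    (t0, p0), (t1, p1) = keyframes[i - 1], keyframes[i]
    u = (t - t0) / (t1 - t0)  # fraction of the way from pose p0 to pose p1
    return {joint: p0[joint] + u * (p1[joint] - p0[joint]) for joint in p0}


# Sample the in-between poses every quarter second.
for step in range(11):
    print(step * 0.25, pose_at(step * 0.25))
```

Sampling `pose_at` at regular intervals yields the in-between positions a performer marking the solo would pass through on the way from one recorded shape to the next.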

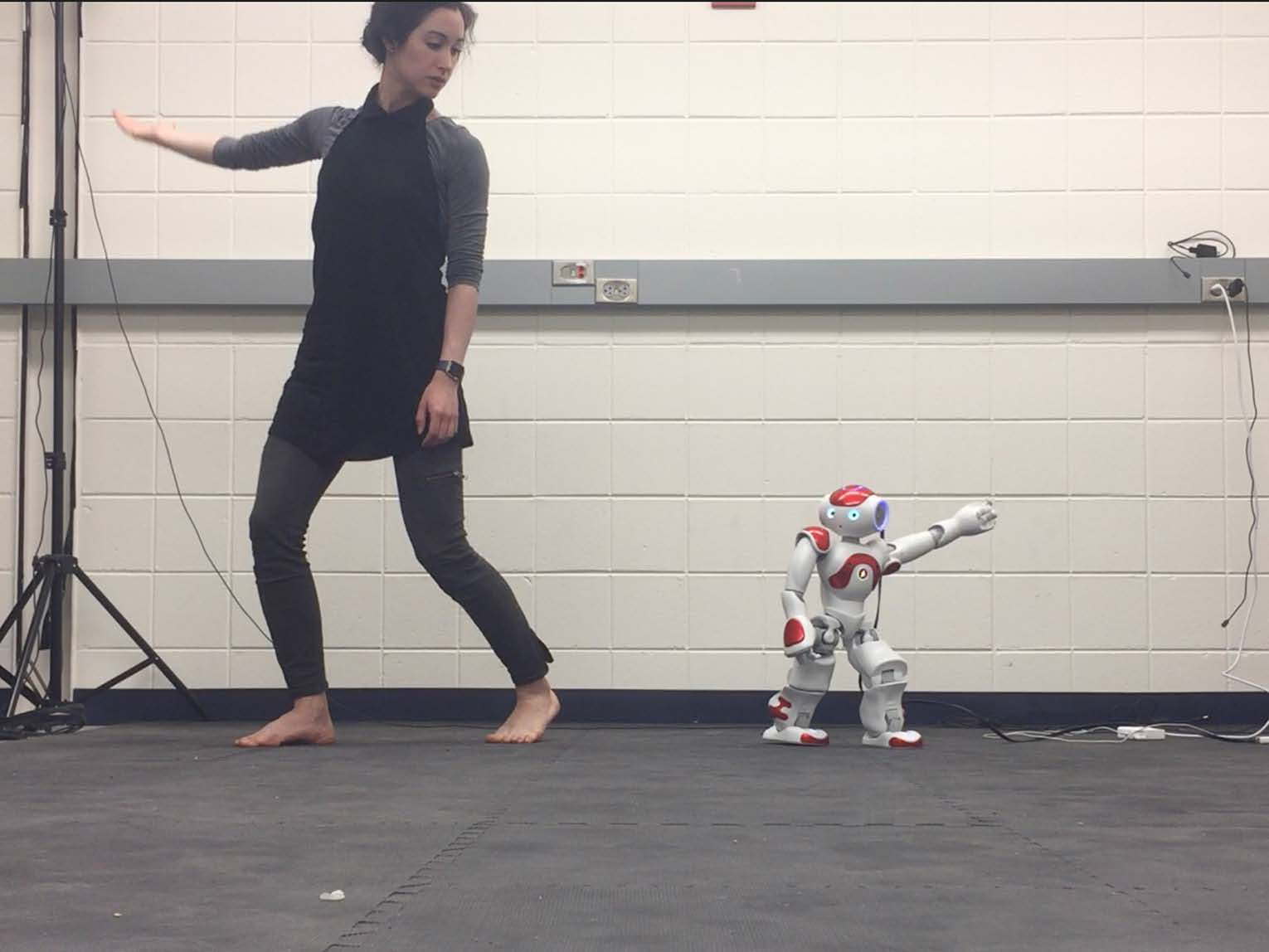

Strangely, I’ve designed and observed these poses without physicalizing them. I feel compelled to learn the solo, implementing direct interpolations between the poses to fill the duration from one pose to the next. My hands, wrists, and toes feel textural and superfluous as I mark each stance. Stepping from pose to pose is an alien form of choreographing and dancing for me. My choreographed phrases for human dancers were sustained motions, incorporating incidental pauses for affective contrast. Stoppages were not compositional elements, as they are when I choreograph the Nao. My phrases for people are captured by names like “raising flag manège” or pictographs with directional side scribbles, brought back into realization through muscle memory and collective recall. But glancing sideways at the laptop, I see the saved file, “dayone.crg,” fully generated, waiting to be executed. I orient the robot to stand parallel with its back to the wall and press “play.” Suddenly the robot and I are moving together, oriented toward Chakraborty and Pakrasi (fig. 2).

Figure 1 Catie Cuan and the Wen in Step Change, a film from OUTPUT, created during the ThoughtWorks Arts Residency, 2018. (Photo by Kevin Barry)

Figure 2 Catie Cuan and the Nao robot rehearsing a duet in the RAD Lab at the UIUC campus on 5 June 2017. (Photo by Ishaan Pakrasi)

They watch us from seated positions on the black mat floor. We four are in the proscenium, abiding by the formality of performance in a space so removed from it. Our faces are over-illuminated by the fluorescence bouncing off the robot’s limbs. I steady my nerves. I have danced on far grander stages than this one, yet I am anxious. I don’t have the protections of wings, lights, a costume. I don’t have the warmth and energy of other bodies to guide me. I have the black mat, the white walls, the shrunken facsimile of a human body. We stop dancing and Pakrasi and Chakraborty hesitantly clap. I turn towards the robot to see it paused in its final posture: head bowed and arms at its side.

Hours pass until we pack up for the evening. Pakrasi remarks that he will show me how to use the Rethink Robotics Baxter tomorrow. I turn to it: the Baxter is six feet high, with two arms, a screen for a face, and two grippers as fingered hands (fig. 3). The Baxter was designed for industrial jobs on a production line and widely adopted by university research groups (Simon 2018). Pakrasi gestures to the computer we will use to direct the robot over the network. I nod and approach the robot, then place my cheek to the screen and close my eyes. The darkness behind my lids is calming after this white box of a room. I feel porous, buoyant, and full of anticipation. I feel powerful and complex. I put one hand on each gripper and breathe. Seconds flicker by and I open my eyes to see Pakrasi and Chakraborty teetering on an inhalation. Hold, hold the space for this, I murmur to myself. Moments linger before we exit the lab.

Figure 3 Catie Cuan and the Baxter robot in front of a rigged white sheet, holding iPhones and flashlights to craft shadowed images. The resulting film is titled Partitions from the project Time to Compile (Cuan 2017). (Photo courtesy of Catie Cuan)

The days of these four weeks in Urbana-Champaign become routine, and permutations of the mirror game keep appearing in my work. In the canonical mirror game, two movers stand across from each other and attempt to match each other’s movements. The leader and follower roles may be explicit or emergent. I repeatedly craft the mirror game with a variety of moving bodies. In my second week, a human mirrors the Baxter. In my third week, a human mirrors a virtual reality (VR) avatar using an HTC VIVE.[4] These robot and VR bodies are all reshaped by shadow and light to vary their size, dimensions, and appearance, diffusing their humanoid similitude. The glaring reflectivity of these robot surfaces is another quality I aim to soften. I envision these mirror dances with robots occurring in tiny silos across long distances, much like my evening routine of hunching over my laptop in a hotel room to say goodnight to my fiancé. Moving bodies reflecting one another with lags and delays due to our devices and our muscles.

One afternoon I am seated on the black mat, feeling urgency for no clear reason. My notes occupy several square feet around me and I am hurriedly glancing and scratching at them. Out of this fervor, a breakthrough: What if I run these paired, mirror game dances like a game of movement telephone, fully intertwined? The moving human plays the mirror game with one robot, alone (reminiscent of the isolated hunching laptop silo); then that moving person is detected by a camera or motion capture system. Moving person 1’s data is sent to a second moving robot or machine body and the data drives its motion. Machine body 2 stands across from human 2 in a separate dark room. And on and on. All the humans believe they are in isolation, mirroring an unfamiliar moving machine body. Instead, their data is traveling. These humans are dancing with other humans. At the end, the humans each see their experience replayed via a 360-camera that captures the partitioned rooms simultaneously. Continuity is revealed. The 360-camera view shows these bodies in an elaborate, unchoreographed unison group dance where they are willing objects and exploited subjects in relation to proximal machines.
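The movement-telephone structure is essentially a relay, and its hidden continuity can be simulated in a few lines. The sketch below rests on loudly simplifying assumptions: each body's motion is reduced to a single hypothetical sideways position sampled over time, mirroring is modeled as flipping left and right (negation), the lags from muscles and devices are fixed numbers of samples, and the first machine is driven by a made-up seed phrase. The function names and parameters are illustrative only.

```python
def delay(signal, steps, fill=0):
    """Shift a sampled signal later in time by `steps` samples, keeping its length."""
    n = len(signal)
    k = min(steps, n)
    return [fill] * k + list(signal[: n - k])


def movement_telephone(seed, rooms, human_lag=1, network_lag=1):
    """Simulate a chain of rooms in which human k mirrors machine k,
    and human k's captured motion drives machine k + 1.

    seed: hypothetical sideways positions that drive the first machine.
    Returns (machines, humans), one motion list per room.
    """
    machines, humans = [], []
    drive = list(seed)
    for _ in range(rooms):
        machines.append(drive)
        # The human mirrors the machine (left/right flipped) after a reaction lag.
        human = [-x for x in delay(drive, human_lag)]
        humans.append(human)
        # Motion capture and network transfer add more lag before the next machine.
        drive = delay(human, network_lag)
    return machines, humans


machines, humans = movement_telephone([1, 2, 3, 4, 5, 6], rooms=2)
print(humans[0])  # mirrored relative to the seed and one sample late
print(humans[1])  # mirrored twice, so same handedness as the seed, three samples late
```

In this toy model, adjacent humans end up as mirror images of one another, offset by the accumulated lag, which is a crude stand-in for the unison that the 360-camera replay would reveal.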

There has never been more data about the body. This data is used for fitness step counts and genealogy, surveillance and selfie filters, and is fed into algorithms and collected in databases. Our bodies are sent online to others — some strangers, some friends. The distance between people has been collapsed by video calls and emails. And yet, it is humans who generate these media, and humans who control them. Beneath the magic of every screen, every moving robot body, is a series of small physical parts and minute decisions made by people for how those robots (and data) will function and what the robots will do. I wonder if there is similar attention to how these robots elicit emotions. What do robots mean to people? How do we feel around them? Do they perform for us or do we perform for them?

Snippets of this work are shown in the lab, under the title Time to Compile by “Catie Cuan x RAD Lab” on 30 June 2017. We grapple over a descriptive term: an installation? A performance? A research process? I play the role of certainty with very little rehearsal. I instinctively hang sheets and buy work lights from Home Depot to make it a theatre. In the moments before that first showing, one of the robots fails completely and I fervently and unjustly snap at Pakrasi. It foreshadows countless vexations to come.

In mid-December 2017, I return to Urbana-Champaign for three weeks. There, I craft a defining moment by placing a smartphone and a flashlight in each of the Baxter’s grippers. Grasping these items in front of a sheet, the robot becomes its own documentarian. Encountering new robots, like the Baxter, causes me to ask: how do they see me? Where is the camera? Am I zoomed in to an intimate shot? Where do these images of me go exactly? These questions arise when I film and edit a duet between us from three viewpoints: the smartphone in hand, a camera in front (see fig. 3), and a camera behind a diaphanous sheet.

At a public showing of one version of Time to Compile (fig. 4), LaViers, Pakrasi, Erin Berl (another lab member), and I perform with three robots in separate partitioned quarters created by joining two pipes in an “X” with drapes hanging from them. The audience circulates around the edges. During the 15-minute transition from performance to installation, the Baxter virtual reality teleoperation link fails.[5] Without this core element, the installation continuity disappears completely. I am shocked to realize for the first time how much the whole piece hinges on technological success. The postshow artist talkback includes vitriol and praise but mostly puzzlement. These moving machines have no virtuosity; why should they be on a stage? Why not choreograph a dog or an air conditioner?[6]

Figure 4 Catie Cuan and the Baxter robot in Time to Compile at the 2018 Conference for Research on Choreographic Interfaces (CRCI) at the Granoff Center for the Creative Arts, Brown University, 10 March 2018. The Baxter holds a sheet to hide and reveal itself. Catie then slowly wraps herself in the sheet by turning from one side to the other. (Photo by Keira Heu-Jwyn Chang)

Travel takes us to performances in Atlanta, Genoa, San Francisco, and New York City. I struggle deeply when shifting between my full body awareness and technological minutiae. I feel this harshly in Genoa, during a last-minute tech rehearsal where the projections keep disappearing and reappearing on the screen upstage of us. Pakrasi and I attempt to debug this as the audience filters in. Rebuffed, I stand on the stage with the resolve and quietness of a seasoned performer, ready for the piece to begin regardless of where it will go. And, as if by body-based magic, the projector works.

Extension

In March of 2018 I embark on a different residency.[7] When the time comes to give resident talks about our work, I propose beginning mine with a new premiere dance duet between the Nao robot and me, titled Lucid. I sketch each of its poses and timestamps in advance on printer paper, the pages laid out in a conference room at my residency. I choreograph my motions with a practiced understanding of how the robot moves, marking through my dance and imagining the Nao in the space with me. The duet begins with the Nao and me in close proximity as we trade gestures and points in space (e.g., my hand occupies negative space under the robot’s lifted foot in one moment). It then transitions into a traveling, varied solo at a faster overall tempo, without the robot.

The loaner Nao arrives in a UPS box from SoftBank Robotics. My enthrallment from the summer of 2017 has turned to impatience. I must send the first robot back for replacement due to a faulty right toe button; the software crashes repeatedly. I try to assess how worn out the motors are on the second robot they send. A worn motor will limit the number of times I can run the piece without it suddenly shutting down.

Pakrasi is in town and offers to help me on the day of the show. Right before the performance on 31 May 2018, I walk into the low house lights to preset the robot under a prop. It malfunctions and turns off a few seconds later while I am in the green room. Darting from backstage to the green room, Pakrasi notifies me the robot is no longer pinging the Wi-Fi network. These errors feel familiar. There are whispers between us: Should we wait? Cancel the performance? Perform as the finale rather than the opener? Change the piece completely by subtracting the robot? Improvise a waltz with the limp, powered-off machine? I decide to venture onto the stage in my dance costume of black leggings and a black tank top and collect the hidden robot, taking it to the green room for debugging. The audience fidgets.

When I reset it for the second time, the robot reappears on the network. Back to the stage, music cue, and the robot works for exactly three and a half minutes of the performance before it collapses and tilts flat to the ground from overheating. What is more, it is exactly at this 3.5-minute mark when I had planned to kick it over, as a symbol of technological rejection prior to dancing a rapid solo. But the fatal, timely mechanical error transforms the robot into a vulnerable and flawed character. I am, as in Genoa, in awe of this uncanny coincidence of malfunction and storyline. When the end arrives, I pick up the Nao to bow and it is scaldingly hot, arms dangling loosely, eyes a monochromatic white (fig. 5). I feel a rigid, tense relief at finishing the performance. I switch the robot into my left arm and in the wings blow on my burning right hand.

Figure 5 Catie Cuan and the Nao in rehearsal for her duet, Lucid, as part of her TED Residency Talk at the TED Headquarters in New York City, 29 May 2018. (Photo by Mary Stapleton)

Construction

The laboratory space itself is an enormous loft, its brick floor uneven, with an occasional protruding nail driven into the glazed surface. It was once a shoe factory. In this warehouse loft, “production” now signifies economic value and, of course, a show. Fluorescent lights again, two desktop computers, shelves stacked with architectural models and various power tools. Once again, I am in a place foreign to human dancers.

It is 11 May 2018, and I am beginning another new work.[8] I stand on the third floor of a warehouse in the Brooklyn Navy Yard across from the city’s largest industrial robot, an 11-foot-high, 3,000-pound ABB IRB 6700, the type used to manufacture cars or crush metal objects. I clear away folding chairs for the “dancing” area, an empty space between the robot and the windows. The robot is named Wen. It has six joints, each revolute, meaning each joint is constrained to rotate about a single axis. It moves along a 40-foot track. It is the first robot I’ve encountered that could kill me (figs. 6 and 7).

Figure 6 Catie Cuan in rehearsal with the ABB IRB 6700 robot, Wen, for the filming of her ThoughtWorks Arts Residency OUTPUT project. Consortium for Research and Robotics (CRR), Brooklyn Navy Yard, 10 August 2018. (Photo by Andrew McWilliams)

Figure 7 The Wen robot in a “resting pose” at the CRR. (Photo by Catie Cuan)

Killer robots are a well-known fictional trope,[9] but the bodily sensation of choreographing one is like learning to drive a truck. Trucks are an everyday fixture, but as you become acquainted with one, you recognize how dangerous and unwieldy it is. A carefully programmed, rehearsed set of motions and specifications is necessary to ensure you do not drive into the side of a building. We become inured to their threat. The same is true of massive robots. Programmed and rehearsed specifications and movements ensure you do not direct the robot to swing into your right temple when you are looking away. Precautions are taken (no one stands near the robot when it is actually moving), and the robot’s speed is tamped down to an unintimidating velocity.

Gina Nikbin and Nour Sabs (two roboticists) breeze me through how to program the Wen robot. This choreographic programming is a labored translation. First, my scribbles, trajectories, and bits of text are translated into motions for myself. The second step involves taking the motion from my own body and mapping it in some way onto the robot’s six joints. Sometimes I do this by mapping only a subset of my joints (the shoulder through the fingertips, for example) onto the robot. Other times I do this second step by gathering the overall trajectory of my whole body in space (for instance, when I locomote from one region to another six feet to the right) and extracting only that spatial translation to move the Wen six feet up the track. The third step is conveying my goals to Nikbin and Sabs so that we can calculate or select joint angles to program into the robot’s native software.
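The second kind of mapping, keeping only the body's sideways travel and reusing it as travel along Wen's track, can be sketched in a few lines. This sketch assumes a one-to-one scale (six feet across the floor becomes six feet along the track, as in the example above) and clamps the result to the 40-foot track. The function name, coordinate convention, and starting positions are hypothetical; the actual programming happened in the robot's native software, not in code like this.

```python
TRACK_LENGTH_FT = 40.0  # Wen's track length, as given in the text


def track_target(carriage_ft, body_start, body_end, scale=1.0):
    """Map a dancer's floor travel onto a position along a linear track.

    carriage_ft: current position of the robot's base along the track, in feet.
    body_start, body_end: hypothetical (x, y) floor positions of the dancer's
    center, in feet, with x chosen to run parallel to the track.
    Only the x-component of the displacement is kept; forward/backward
    travel and all limb motion are discarded.
    """
    dx = (body_end[0] - body_start[0]) * scale
    # Clamp so the commanded position never runs off either end of the track.
    return min(max(carriage_ft + dx, 0.0), TRACK_LENGTH_FT)


# Six feet to the right, plus some forward drift that the mapping ignores.
print(track_target(10.0, (0.0, 0.0), (6.0, 1.5)))
```

Keeping a `scale` parameter leaves room for a non-literal mapping, for instance shrinking a large traveling phrase so that it fits on the track.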

These joint angles are akin to selecting degrees for the hands of a clock, but the center pin of the clock is a motor whose torque you can command, thus changing the clock hand’s position. If midnight is 0 degrees, 3 o’clock would be 90 degrees. The clock hand is the robot’s attached link. The implication is that a choreographer can change the angle of a particular joint by commanding its motor and, by doing this for several joints simultaneously or in sequence, make the robot move. Everyone is impatient with this process. It is painstaking. Four hours tick by, producing only three minutes of movement for Wen. Corrections are tremendous undertakings, because we must scroll to the exact second of the robot’s performance, insert a modification, and hope that all is not lost: that the robot will be able to move continuously from the modification into the next configuration. We tussle over specifics, and the best method I can find to bridge our linguistic barrier is to physically impersonate the robot. Weeks like this go by.
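The clock analogy can be made concrete with a little arithmetic: each hour on the clock face spans 360 / 12 = 30 degrees, so 3 o'clock is 3 × 30 = 90 degrees. The sketch below also shows why commanding several joints moves the robot as a whole, using a simplified flat arm in which each joint angle is measured relative to the previous link, so the angles add up along the chain. This is an illustration only: Wen's six joints rotate about different axes in three dimensions, and the link lengths here are hypothetical.

```python
import math


def clock_to_degrees(hour):
    """Angle of a clock hand, measured clockwise from 12 o'clock (midnight = 0 degrees)."""
    return (hour % 12) * 30.0  # 360 degrees / 12 hours


def chain_tip(link_lengths, joint_degrees):
    """Planar forward kinematics for a chain of 'clock hands'.

    Each joint angle is relative to the previous link and measured clockwise
    from straight up, so angles accumulate along the chain.
    Returns the (x, y) position of the last link's tip, with the base at (0, 0).
    """
    x = y = 0.0
    heading = 0.0
    for length, degrees in zip(link_lengths, joint_degrees):
        heading += degrees
        theta = math.radians(heading)
        x += length * math.sin(theta)  # clockwise from 12 o'clock: sine gives x
        y += length * math.cos(theta)  # and cosine gives y
    return x, y


print(clock_to_degrees(3))
# Two 1-meter links: the first points straight up, the second turns to 3 o'clock.
print(chain_tip([1.0, 1.0], [0.0, clock_to_degrees(3)]))
```

Changing only the second angle swings only the outer link, while changing the first swings everything beyond it, which is one reason a small change near the base can produce a large movement at the tip.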

But at the end of each session, we pause to play back our work on Wen. And the logical constancy of the robot is absolute: it can only follow the program. When it begins to move, it does so in a satisfyingly continuous path, without breaks or pauses, at a perfectly constant velocity. The quality of the motion is foreign in an alluring way. It does not vibrate, deviate, or trip. Once the movement trajectory is set, the robot sequences through it so predictably that it becomes appealing. I know that the motion is scripted, but when I observe the robot’s performance, the exactness of the placement gives the impression that the robot has agency enough to recalibrate its balance and direct its own motors, as if conveying intention and purpose in the motion. The Wen, in contrast with the other robots, makes no errors once it successfully compiles the sequence. In a way, it becomes the ideal performer, executing this discrete trajectory as desired each time. If the audience knew how unerring this robot was, perhaps they would find the predictable program boring. However, the robot’s bulk and novelty, along with the audience’s suspension of disbelief, make for a successfully theatrical performance. There is beauty in the laboriousness of my process and the consistency of the outcome.

Unlike the Nao or the Baxter, this colossus Wen is purposely nonanthropomorphic. No eyeballs, no two grippers, no baby-weight limbs for me to cradle. Its height and force are affecting, yet they are wielded by my keyboard inputs. “Choreography” is dance writing, just as programming is often defined as action scripting, or task instruction writing. The manifestation of these practices can be wholly different, but in this moment my program results in dance at runtime. Programming is choreography. My body is every body that I can inhabit and control.

Will my job as a dancer be taken by a robot? In “Automation and the Future of Work,” Aaron Benanav discusses the fear surrounding robots taking human jobs. Though it is not clear, he says, whether

a qualitative break with the past has taken place. “By one count, 57 percent of the jobs workers did in the 1960s no longer exist today” [quoting Jerry Kaplan]. Automation is a constant feature in the history of capitalism. By contrast, the discourse around automation, which extrapolates from instances of technological change to a broader social theory, is not constant; it periodically recurs in modern history. (2019:10)

I navigate the possibility of being automated by choreographing solos and duets alongside the Wen that employ its constancy. My limbs move in sequential isolation, frequently in a single plane. One inward creasing of the wrist. One arcing drag of a straight right leg, smearing my foot along the floor. This constancy allows me to explore what automation feels like: how a single speed carries from one limb to the next, resulting in my prolonged muscle contractions; how the repetitious progress through a chain of required actions becomes automatic and unconscious, so that I can close my eyes while doing it. This feels easy. Being an industrial robot seems doable and exhausting.

After weeks of planning, programming, and testing, our crew of eight spends 14 hours on 22 August 2018 filming the robot and me dancing side by side. The windows are blacked out with trash bags to aid the rented studio lights. During the afternoon, the damp summer heat, the warmth coming from my body, and the robot’s motors coalesce to exhaust me. I feel the fatigue of rehearsing and filming. I glance at my performing robot partner. Wen is under no such duress. I focus on the notion that both bodies are my body as I initiate a new sequence of camera shots designed to mask my fatigue. This robot doesn’t replace me, I know, but I am augmented by it. My work changes as a result.

I name the entire project OUTPUT (see Cuan 2020:1–2). “I am inside the machine while I construct it around me” is the concept driving the OUTPUT performance. There are dances. There is the sequential process of taking features from my human dance and translating them into motions for the robot. There are hours of film edited into a short narrative (Cuan 2018). (I consider the uncut film, where we rehearse sequences and mill about the robot, a separate work.) Our team builds two new custom software programs for this residency. CONCAT and MOSAIC are portable tools comprising cameras and software. The first, CONCAT,[10] allows me to see my moving body, sensed by a Kinect[11] depth camera, juxtaposed with the ABB robot in a two-dimensional, scaled-down animation. The second, MOSAIC,[12] was created so I could “dance with myself” via short captured videos stitched together into collages (fig. 8). I started to conceptualize MOSAIC after waking one morning from a dream about the airstream of my body’s motion, recorded by all these motion-capture modalities and combined into a single film.
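CONCAT's internals are not described here, but one plausible way to show a depth camera's skeleton beside a robot in a flat, scaled-down animation is to drop the depth coordinate and scale the remaining two into screen pixels (an orthographic projection). The sketch below assumes joint positions in meters with x to the right, y up, and z as distance from the camera; the joint names, canvas size, scale, and offset are hypothetical, and this is not CONCAT's actual code.

```python
def to_screen(joints, width=640, height=480, scale=100.0, offset_x=0.0):
    """Flatten 3-D joint positions (meters) into 2-D screen pixels.

    joints: dict mapping a joint name to (x, y, z), assumed x right, y up,
    z = depth away from the camera. Depth is simply dropped (orthographic
    projection). Screen rows grow downward, so y is flipped, and the origin
    is moved to the canvas center plus a horizontal offset, which leaves room
    to draw a robot figure beside the body.
    """
    cx, cy = width / 2 + offset_x, height / 2
    return {name: (cx + scale * x, cy - scale * y) for name, (x, y, z) in joints.items()}


# Hypothetical skeleton, drawn on the left half of the canvas.
skeleton = {"head": (0.0, 1.0, 2.5), "right_hand": (0.5, 0.25, 2.3)}
print(to_screen(skeleton, offset_x=-160.0))
```

A smaller `scale` shrinks the figure, and a positive `offset_x` for the robot's own joint positions would place it on the right half, so body and machine appear side by side.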

Figure 8 During the OUTPUT premiere performance, CONCAT (upper left) and MOSAIC (upper right) run in real time on laptops and are projected onto two screens upstage. Triskelion Arts, Brooklyn, New York, 14 September 2018. (Photo by Kevin Barry)

I devise an improvisational structure to incorporate these tools and my choreography while onstage. I see that these versions of my body, represented and re-represented by the various tools capturing my movement, become a multitude of performers that I can play with and modulate in two-dimensional spaces (like screens) and three-dimensional spaces (like a stage and my live body).

The premiere performance begins with me dancing solo far upstage (see ThoughtWorks Arts 2018); the Wen robot cannot be transported from the Navy Yard, so it appears in the performance on film and in animation. Over the next 14 minutes, I load the software and issue commands using wireless mice and keyboards onstage, a practice akin to an algo-rave.[13] These software tools produce a collage of videos and moving animated skeletons on two screens upstage. The unfolding of it all is an improvisation. The improvisation turns the work from a series of pre-scripted figurations that may be evaluated on the basis of their correctness (correct choreography with the correct timing, a feature of jobs well done) into an unfurling of my intuition.

Feelings of boredom, curiosity, weariness; the sense of dragging my palm across my stomach, the tug of my hair against my forehead, the warm sock around my ankle, all of these and more dictate the content.

The deep introspection I experienced onstage while integrating the various tools and my body into a visual landscape is a sensation I want to share. I take these components of OUTPUT into open rooms for individuals to experiment with.[14] The passing participants tell me they feel complicated next to such a “simple” being as this Wen robot. The systems invite the participants to move, and they perform with these captured versions of themselves and the programmed animation of Wen for other passersby, but their focus on the improvisation causes them to forget the casual audience. One individual noticed himself arriving at unexpected shapes and exclaimed, “Look at me!” (fig. 9).

Figure 9 Ryan Rockmore interacting with the CONCAT software from OUTPUT during Second Sundays at Pioneer Works, Brooklyn, New York, 14 April 2019. (Photo captured by the MOSAIC software; courtesy of Catie Cuan)

Engineering

There are fluorescent lights, white scholastic tiles, gray workbenches, tools, and a robot. It is the first robot I’ve encountered that I do not initially approach as a container for choreography. It is also a very different kind of robot. The Vine robot (fig. 10) is soft, pliant, without joints. There is only a single motor at the robot’s base. Its body is a long tube of plastic folded inside itself, such that the robot grows forward by eversion, driven by compressed air. The robot often carries a payload such as a camera or a small gripper at its tip. I am midway through my first year of graduate school in mechanical engineering and commencing my second research rotation. My project is to take this robot into the underground squirrel burrows of Lake Lagunita on the Stanford University campus.

Figure 10 The Vine robot, created by members of Allison Okamura’s Collaborative Haptics and Robotics in Medicine (CHARM) Lab. (Photo by Catie Cuan)

The robot’s simplicity makes it effective at a narrow set of tasks, such as exploring confined spaces or monitoring temperature. As with other robots, I marvel at Vine’s constant, unwavering motion.

The robot is not a performing body, but it could be. I imagine groups of Vine robots, their bodies stretching farther than any single entity with joints, motors, and a base could reach. Translating my body’s motions to the ABB robot, the Baxter, or the Nao was limited yet logical. How might I do this with a Vine? Perhaps by choreographing shape, determining direction, regulating start and stop times. When we take the Vine into the burrow for the first time, it becomes stuck on a thick branch. Behind closed eyes, I picture myself like it: a column without limbs, angled into a vague curvature with only limited mechanisms to release myself. Exhale, go backwards, twist. When I open my eyes, I see the other research assistants paused in silence, perplexed by my twisting torso. I’m not sure I can approach creating motion for this robot from any stance other than my self-referential dance choreography. I’m now grappling with the notion that motion for robots is a generic demand made of a tool, rather than choreography.

For the next quarter in school, I design an experiment with the KUKA LBR iiwa[15] robot arm, a manipulator with seven degrees of freedom, used in research laboratories and in industry (fig. 11). I investigate how individuals might give the robot nonverbal cues like “stop” or “begin traveling in a circle.” This experiment is heavily influenced by contact improvisation jams and the translation of intention through touch. Late on a Wednesday evening, I am reviewing the videos of the participants, who variously contact the robot, avoid it, rotate it, engage in a focused, unintentional duet with it… and I see a performance that requires no conventions of the stage, just bodies, a scripted set of tasks, and one observer: me.

Figure 11 Cameron Scoggins during a test experiment with the KUKA robot in the Stanford Robotics Lab (SRL), on 28 July 2019. Professor Oussama Khatib directs SRL. (Photo by Catie Cuan)

In my second-year haptics course at Stanford, we build mechatronic devices that stimulate our sense of touch, both cutaneous (through the skin) and kinesthetic (through the joints and muscles).[16] The study of haptics necessitates the study of motion and human perception. My professor and thesis advisor, Allison M. Okamura, describes an argument from Bruce Hood, an experimental psychologist and neuroscientist, that humans have brains in order to move meaningfully through the world. He wrote, “Of the estimated 86–100 billion neurons in the brain, around 80 percent are to be found in the cerebellum, the base of the brain at the back that controls our movements” (2012). I recall the story of the sea squirt: once it settles at a permanent stationary location, it eats its own brain because it no longer has to move (Beilock 2012).

My Body

This practice of choreographing humans and robots is becoming clearer and more muddled all the time. Both my control and the trust involved differ when choreographing each group. With humans, trust manifests in rehearsal: my human dancers trust me enough to decline an undesired movement, to make suggestions that enrich the piece, to feel free to rest when they are fatigued. We trust each other to execute the learned choreography as well as to ensure one another’s safety should any unexpected physical or theatrical errors occur. Performers are responsible and well schooled; I have never been part of a performance where a person deliberately deviated from the rehearsed events during showtime. Dancers may falter or slip, but rarely are they rendered immediately and permanently inoperable. While robots’ motions are scripted and satisfying in their predictability, errors are omnipresent. As with the Nao at TED, the Baxter at CRCI, and the projector in Genoa, there are many unpredictable errors that are challenging to fix in the narrow window before the audience notices something is wrong. On several occasions when performing with robots, I found that fixing errors unilaterally eroded my trust in the robot’s robustness. This raises the question of free will: while robots have none, their black box of continuous errors makes me, as a performer and choreographer, feel that I have less control over them than I assume. Robots are simultaneously perfect performers and frustrating paperweights. They will not deviate from the planned script in movement quality or configuration, but on some occasions their total, immediate failure destabilizes my understanding of them. This violation of trust can be jarring in the moment of performance, and semi-alluring when considering the mystic possibility that robots are not as inert or predictable as many of us might believe.

These errors lead to two observations. The first and more obvious: fictional or single-demo video representations of robots show them only succeeding, creating expectations in viewers that may differ from reality. The second: because the performance setting already heightens my awareness and focus as a performer, the dread of robot failure intensifies the sense of risk and chance encounter, launching me into an internal experience that I have found only in this neoteric genre of dancing with robots. It creates a distinctive anticipation and acuity that I rarely feel when dancing with humans. When I perform with, rather than use, technology, I come into conflict with the idea that technology disembodies me or removes me from lived experience. Instead, my “liveness” seems potent and all-consuming.

And a third consideration: these robot errors are emergent behaviors, byproducts of combining subsystems. Rarely is the output the perfect sum of its inputs, so modeling the final, comprehensive entity is a challenge (statistician George Box asserted, “All models are wrong, but some are useful” [in Remington Reference Remington2017:E28]). There are similar examples in nature and in dance: photosynthesis is not merely sunlight, water, carbon dioxide, and chlorophyll, but the process that intermingles them; and a live dance performance is not solely repeated steps, but an unrepeatable, vital act that extends beyond its constituent parts.

When I dance alongside a robot I have programmed, it feels like I am dancing with a version of myself, like I am blurring time, space, and identity. The first time I choreographed the Baxter robot, Pakrasi sat behind a desktop at the command line, and I proceeded to move the robot’s arms around until I was satisfied. We saved the file as “puppet1.”Footnote 17 We had 12 or 15 “puppet” files by the end of that first session. When I played back the sequence on the Baxter days later to dance alongside it, I felt myself recalling the prior grip of my hands on the robot in addition to my own body in the present. I was spiraling around the real robot and the specter of me as a puppeteer as I stepped through my choreography.

Humans are unequivocally agential when I dance with them. Onstage, our mutual trust is grounding. If I employ the linguistic fixture of the first-person/third-person point of view, I am decidedly in the former. When I dance with robots, they are generally unaware of me. This is because some robots, like the Wen or the Nao, have no sensors to detect my movements or determine if they physically contact me. Again, their frequent failings mean I monitor them continuously during the dance to know if such an error has occurred. This causes me to supplant my first-person perspective with a simultaneous first- and third-person amalgamation. I am the robot watching Catie the live performer, while at the same time performing myself, and encountering the robot as if it is a version of me from a previous time. This first-person/third-person framework fails me; the language isn’t adequate to describe this feeling of distribution.

Dancing with each of these robots is different, just as dancing with human partners varies from one to the next. I danced with the real Wen in the CRR warehouse and with a programmed, two-dimensional animation of the Wen on a stage. Next to the real Wen robot, my focus is hazy. The robot’s enormity confuses my visual sightlines, even when I am at a safe distance from it. I feel like it engulfs the space and me. Between my body and the robot’s, the warehouse is saturated with motion; the edges of the room are filled with the reach and velocity of our bodies. The Wen’s motors and its base traveling along the ramp make high-pitched grinding noises that drown out the frequency range of human speech. I feel like we create an enormous system, trading movement phrases back and forth until the system reaches a swift pause, like a mash of instruments clanging out in different keys before a piercing trumpet knocks the sound into silence. I notice the connections between my limbs and how to move each of them in isolation: the forearm swinging as a pendulum or the last knuckle of my thumb flexed backward.

When dancing with the Wen as a projected animation, my focus is tapered. As my skeleton is captured by the Kinect camera and represented next to the Wen animation, I feel an awareness of each point in my body, as though I’m standing in front of a mirror and occupying a series of shapes drawn on the mirror. The animation highlights the imbalance in joint numbers between myself and the robot. This reinforces the feeling of numbering and moving my limbs in isolation. The robot is now two-dimensional and silent. I feel a collapsing of the whole robot entity and resist the urge to make my motion exceedingly frontal or two-dimensional. I may orient my skeleton over the robot animation so my real body, my skeleton, and the robot animation are aligned in a single vertical column from front to back, facing the audience. Stacking my body and the robot body in this way makes the process of movement capture and translation immediately known to the viewer. It is also a visual representation of my internal feelings about being catapulted through time, agency, and hierarchy, when I am concomitantly creator, subject, and participant of the events onstage.

The Nao contrasts distinctly with the Wen. I danced with four different Nao robots: two red and two blue. It is odd to accept that four different bodies are the same performer. They all share shape, quality, and expression with exactitude. Dancing with the Nao, I feel drawn to its level, employing seated or lying-down transitions to sequence past the robot’s 24-inch stature. Its humanoid proportions and speaker (purposely located where a mouth would be) cause me to approach it as a character, and my choreography with the Nao tends to venture into narrative. If the space with the Wen was filled, this is pointillism. In performance, my gut concern about the robot failing arises often. This concern, married with the robot’s stature, makes me feel overbearing and responsible. I am like the springy padded floor of a bouncy house, ready to right the robot’s orientation and insulate it from obstacles. This sentiment is infantilizing. I daydream about being this robot, “born” in 2008, yet preserved in the same body ad infinitum.

My choreographic process differs between humans and robots. Take, for example, the process of manifesting any motion. With humans, I often rely on the training and unique improvisational styles of each dancer to smooth over gaps in the phrasing, or I spin a text prompt into a realized movement phrase. To manifest motion in the robot, I arrive at rehearsal with motions specified as end effector trajectories, poses, or joint angles. (An end effector is the last link of a serial manipulator robot, the part that contacts the environment; usually it is a gripper.) There is a possibility that I haven’t done the math correctly, that the trajectory I designed violates any number of rules: the robot will hit itself; the robot will reach a singularity (a configuration where it loses the ability to move in one or more directions, leaving it effectively locked); the desired end effector point is outside the robot’s workspace; the motors cannot support the intended torque command at that speed… The list is endless.
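Two of the rules in that list, the workspace and the singularity, can be made concrete with a deliberately tiny example. The sketch below assumes a hypothetical planar arm with two links of lengths `l1` and `l2`, far simpler than the seven-jointed KUKA or the Baxter; the function names `two_link_ik` and `two_link_fk` are illustrative and are not taken from any robot’s software. Given a desired end effector point, it computes joint angles with the textbook law-of-cosines solution, and it refuses points outside the reachable ring around the base (the workspace) as well as points where the arm would be fully stretched or fully folded, which is this arm’s version of a singularity.

```python
import math


def two_link_ik(x, y, l1=1.0, l2=1.0, singular_tol=1e-6):
    """Inverse kinematics for a hypothetical planar two-link arm.

    Returns (theta1, theta2) in radians, choosing the solution with a
    positive elbow angle. Raises ValueError if the target is outside the
    workspace or the arm would sit at (or extremely near) a singularity.
    """
    r2 = x * x + y * y
    r = math.sqrt(r2)
    # Workspace: reachable points lie in the ring |l1 - l2| <= r <= l1 + l2.
    if r > l1 + l2 or r < abs(l1 - l2):
        raise ValueError("target outside workspace")
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (r2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    c2 = max(-1.0, min(1.0, c2))  # guard against rounding just past +/-1
    s2 = math.sqrt(1.0 - c2 * c2)
    # The Jacobian determinant of this arm is l1 * l2 * sin(theta2);
    # near zero, the arm is fully stretched or folded and loses a direction of motion.
    if abs(l1 * l2 * s2) < singular_tol:
        raise ValueError("singular configuration")
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def two_link_fk(theta1, theta2, l1=1.0, l2=1.0):
    """Forward kinematics: joint angles -> end effector (x, y)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


print(two_link_ik(1.0, 1.0))  # approximately (0.0, 1.5708)
```

With unit links, reaching for the point (1, 1) leaves the upper arm along the x-axis and bends the forearm straight up. Asking for (3, 0) raises "target outside workspace," and asking for (2, 0), the arm at full stretch, raises "singular configuration." A real manipulator would also need self-collision and torque checks, which this sketch omits.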

All the robots I have danced with exist for primary purposes other than performing. This hard materiality places the robots in a context where choreography must adhere to the rules of the existing system. The same is true with human dancers, who can only do what humans are capable of doing. Choreographing robots requires reconfiguring yourself into the size, shape, and quality of a body very unlike your own. The Baxter, Nao, and Wen are fundamentally controlled by motor torques sent to their joints, which twist the links into new positions. This produces one particularity: the lack of muscle contraction and relaxation. In humans, the contracting and relaxing of muscles dictates the bending and rotating of joints. I lost the notion of initiation and reactive transition when choreographing robots. Recall a person shifting weight from right to left: the spring action of the right quadriceps and calf begins an arc about their center of mass, landing on the left leg. None of the robots I’ve worked with have this spring-like quality or contraction. This is one contrasting attribute that might underlie the constancy I was so drawn to with the Wen. A second particularity of inhabiting another body is determining its modes of expression. For example, the Baxter robot cannot locomote or change levels independently, eliminating the prospect of certain connotative motions like a duck for obfuscation or a leap for jubilation.

Because of robots’ specific modes of expression, such as their constancy, there may be styles of dance that organically emerge and can only be performed by robots: styles that leverage the swarm nature of robot systems (sharing a single networked brain) and involve elemental components like poses or trajectories that only robots can achieve. Making performances in these styles may be choreography, programming, or both.

For robots, there is no awareness of the difference between performance and rehearsal, and I yearned for this distinction. In the minutes leading up to a scientific experiment with the KUKA, a “performance” of sorts for the experiment participant, I would repeatedly toggle my focus between the track pad, the computer, and the robot to make sure all was functional when the participant began the task. Again, despite these best efforts, I once witnessed the robot in freefall hit the mounting table below. A robot demo can be as exhilarating as a performance in the lead-up to the event, but the “after” is not nearly as satisfying. After a performance with other humans, I often felt invigorated and refreshed. After a robot demo or performance, the pained relief that nothing failed is temporary; the tension builds again before the next experiment or performance.

My own choreography with robots began as scripting for dance performances, then explorations of humans in the loop with robots (as with the Vine and the KUKA). I now explore ways to choreograph a robot that are not specific preset phrases and trajectories, but instead utilize learning, randomization, and sensed information (about where the robot is contacted, for example) to generate the robot’s performing motion. I build devices that humans can wear or use to cooperate with and control robots. I wonder if all of these current projects will appear on stages, or if they belong elsewhere.

Proximity

I believe robot prevalence will accelerate. Just as computers became cheaper and easier for nonexperts to use, so will robots. Some of these robots will be used for dirty, dull, and dangerous jobs like mining or bomb defusal. Acceptance of robots for tasks like cleaning or delivering has increased significantly during the Covid-19 pandemic (Tucker Reference Tucker2020). In parallel, our science fiction fantasies linger, picturing the arrival of a personal, in-home robot assistant or butler. Not Siri, the Toto Washlet, or Roomba. But a dynamic, roving manipulator. A robot that generalizes well to a variety of tasks and affects its environment on a daily basis.

As computers proliferated, boundary lines among disciplines ruptured. Beginning in the 1960s, fields like semiotics, linguistics, and graphics became increasingly relevant to computing (Lawler and Aristar Dry Reference Lawler and Dry1998:1–4; Liu Reference Liu2000:18; Carlson Reference Carlson2017:24–39). In the decades since, we have witnessed the mass proliferation of information via the internet necessitate the intersection of computing with medicine, economics, and politics. I maintain this will be the case with robots. Because robots are three-dimensional and act autonomously, we will see fields concerned with body, space, and time, like choreography, theatre, architecture, and animation, not solely collaborate with roboticists (as many have done for decades; see Takayama, Dooley, and Ju Reference Takayama, Dooley and Ju2011; Gemeinboeck and Saunders Reference Gemeinboeck and Saunders2017; Apostolos Reference Apostolos1991) but conceive of entirely new ways of probing exactly what a nonhuman body can emote, convey, and achieve, and then weigh the implications of those new bodies for people. When I choreograph a robot to dance with me on a stage, we are in a near exchange of space and contact. I consider where else I may soon encounter a moving robot body in such proximity and who will have choreographed it.

Jean-Claude Latombe, a leading academic in robotics and motion planning, described motion planning as “eminently necessary since, by definition, a robot accomplishes tasks by moving in the real world” (2012:ix). The problem of motion planning is often reduced to a start position, a goal position, and an obstacle-avoidance constraint. Contained within this problem is configuration space: the set of all configurations the robot can take, encompassing all the manners in which it can change shape to accommodate the particular constraints. Think of yourself wiggling through a narrow cave, turning to the side in order to pass through.
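The reduced problem of a start, a goal, and obstacles to avoid maps onto a standard toy exercise. The sketch below assumes the configuration space has already been discretized into a small grid of free and blocked cells; this is a simplification, since the arms in this essay move through continuous joint spaces and are usually planned with more sophisticated methods. It runs breadth-first search from a start cell to a goal cell, never entering an obstacle, and returns a shortest such path measured in grid steps. The grid, the "cave" layout, and the name `plan_path` are hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search over a 4-connected occupancy grid.

    grid: list of equal-length strings; '#' marks an obstacle cell.
    start, goal: (row, col) tuples.
    Returns the list of cells from start to goal (inclusive) along a
    shortest collision-free path, or None if no such path exists.
    """
    rows, cols = len(grid), len(grid[0])

    def free(cell):
        r, c = cell
        return 0 <= r < rows and 0 <= c < cols and grid[r][c] != "#"

    if not (free(start) and free(goal)):
        return None
    parent = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if free(nxt) and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


# A hypothetical "cave": the only shortest route hugs the top wall,
# then squeezes down the right side past the obstacles.
cave = [
    "....#",
    ".##.#",
    ".#...",
    "...#.",
]
print(plan_path(cave, (0, 0), (3, 4)))
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 4), (3, 4)]
```

Breadth-first search is chosen here only because it is short and guarantees a fewest-steps path on an unweighted grid; it says nothing about how natural, legible, or expressive the resulting motion looks.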

And yet, robot motion can be incidental. In one example, a reinforcement learning model is trained to maximize a reward, and the actual motion that the robot performs to earn that reward is a byproduct of the trained model (Sutton and Barto Reference Sutton and Barto2018:7–10). In another, a contact point is desired at the end effector, and the joint angles are calculated using an inverse kinematics algorithm. These methods may optimize for variables like power-efficiency, task success, or speed, and the resulting motion may be innocuous in certain settings but illegible in others, such as when a robot is meant to be interactive, social, or acceptable. This question of motion becomes particularly acute as robots touch more facets of our everyday lives. Heather Knight notes, “Robots are uniquely capable of leveraging their embodiment to communicate on human terms” (2011:44).

Because of this, researchers continue to develop control schemes and learning approaches that improve robots’ task success alongside their communicability. Work in autonomous vehicle algorithms and human-robot interaction explores how robots are perceived by people and how to leverage those perceptions for increased legibility and human-robot coordination (Sadigh et al. Reference Sadigh, Landolfi, Sastry, Seshia and Dragan2018; Breazeal et al. Reference Breazeal, Kidd, Thomaz, Hoffman and Berlin2005). Other research draws on the evolutionary intelligence of animal and human bodies for more natural motion, including a method to learn agile locomotion skills from animals (Peng et al. Reference Peng, Coumans, Zhang, Lee, Tan and Levine2020), self-supervised control layered on top of human teleoperation data to improve general robot skill learning (Lynch et al. Reference Lynch, Khansari, Xiao, Kumar, Tompson, Levine, Sermanet, Kaelbling, Kragic and Suguira2020), and replicating the process rather than the product of natural evolution (Bongard Reference Bongard2013).

My friend and scholar Hank GerbaFootnote 18 contended recently that the debate around whether robots can actually feel masks the obverse question of how much like a machine a person can actually be treated. As I press the buttons of this keyboard, reducing the full extension of my movement expression into a series of digit compressions, I cannot help but accept this as one underlying thesis of personal technology. Reducibility benefits usability. We demand simplicity rather than richness. Psychologist Barbara Tversky notes that “Humans have been moving and feeling much longer than they have been thinking, talking, and writing” (2020). I wonder whether our average individual movement vocabularies have broadened or collapsed over time, as we specialize our bodies in an effort to maximize those same robot motion variables like power-efficiency, task success, or speed. In the case of the robot, the stakes of that resulting motion are how the robot will be perceived and how well it will perform. In the case of the human, the stakes are our fundamental sensory experience of the world.

Current computers and phones (and ABB Wen robot trackpads) leave much to be desired in terms of leveraging the full range of human action; as a result, expanding interface inputs and outputs is a large research area in human-computer interaction. Individuals working in fields like augmented reality (AR) and virtual reality (VR) create interfaces that transition from the 2D (screen) to the 3D (Carmigniani et al. Reference Carmigniani, Furht, Anisetti, Ceravolo, Damiani and Ivkovic2011; Berg and Vance Reference Berg and Vance2017). Audio assistants like Amazon’s Alexa dispense with the screen, and controllers like the UltraLeap allow broader free-space hand motion (Amazon 2020; Foster Reference Foster2013).

Holding these two ideas side by side — robot motion, behavior, and appearance as critical design choices at a prevalence inflection point, while humans break away from stationary or two-dimensional tools to interact more richly with our technologies — leads us to question how our bodies share space. The whole robot is an interface: How else can I move, touch, speak to, and use it? Perform with it? Translate writing into dance, words into action? Are the models of how we exist in the world sufficient? When I perform with a robot and feel as though my body extends into it, does the definition of human as a standalone entity still apply? How is this definition challenged by the new relational experiences robots provide? My own self-conception has changed; might others’ change too as robots become more numerous among us?

Dancing with robots provokes my imagination of how we could live in the world with our technologies. Suppose every embodied thing we interact with could be animate and responsive, creating a ubiquitous improvisation that enlivens our exteroception and prompts movement exploration. If we foreground the body, if we initiate by dancing, in what way does this counteract reducibility? In what way does it centralize experience over productivity? Feeling over thinking? Expansion over contraction? A softening of egoism. A slackening of boundaries. The way we live with robots may offer a beginning, a growing possibility. The way we control and interact with robots could feel like being on a stage, inside the machine, folding ourselves in and out of these other moving bodies, feeling vivid, suspended, and vast.

This Room

The ceiling and walls are white. Purple graphic carpet and the low whirring of the fridge remind me of an airport. Beyond the floor-to-ceiling glass doors are evergreen trees, reaching to the edge of my high balcony. The afternoon sky is perennially clear blue in Palo Alto.

I am venturing into my next work during a new artistic residency. There are two giant screens at my desk connected to my laptop. As I gaze long into another room rendered on one of them, I see my torso reflected in the screen glass, staring back at me. The other room: a three-dimensional display of a black void, with gray markings delineating directions and distance. Centered in this simulated room is a new robot. I have yet to see this robot “in person,” but as I type lines of code into the cloud-based simulator, the robot begins to move.

I rise and place a camera several feet behind my desk chair so I can film myself dancing a phrase. The carpet against my calloused feet causes a faint tickle, the breeze sifts my hair. I repeat the phrase with eyes closed and notice a warm pulse in my ears. The dark room behind my eyelids, my constant space. Sitting back down, I type more lines, and play the recorded video of myself next to the moving robot in the simulator. I save final changes to the Python notebook, then upload the programmed sequence to the robot fleet. Several miles down the street, inside a warehouse, on the third floor, in an open space, under the fluorescent white lights, a real robot starts to dance.

To view supplemental materials related to this article, please visit: http://doi.org/10.1017/S105420432000012X