INTERRUPTED TIME SERIES DESIGNS IN HEALTH TECHNOLOGY ASSESSMENT: LESSONS FROM TWO SYSTEMATIC REVIEWS OF BEHAVIOR CHANGE STRATEGIES

Published online by Cambridge University Press: 21 April 2004

Abstract

Objectives: In an interrupted time series (ITS) design, data are collected at multiple instances over time before and after an intervention to detect whether the intervention has an effect significantly greater than the underlying secular trend. We critically reviewed the methodological quality of ITS designs using studies included in two systematic reviews (a review of mass media interventions and a review of guideline dissemination and implementation strategies).

Methods: Quality criteria were developed, and data were abstracted from each study. If the primary study analyzed the ITS design inappropriately, we reanalyzed the results by using time series regression.

Results: Twenty mass media studies and thirty-eight guideline studies were included. A total of 66% of ITS studies did not rule out the threat that another event could have occurred at the point of intervention. Thirty-three studies were reanalyzed, of which eight had significant preintervention trends. All of the studies were considered “effective” in the original report, but approximately half of the reanalyzed studies showed no statistically significant differences.

Conclusions: We demonstrated that ITS designs are often analyzed inappropriately, underpowered, and poorly reported in implementation research. We have illustrated a framework for appraising ITS designs, and more widespread adoption of this framework would strengthen reviews that use ITS designs.

- Type: GENERAL ESSAYS

- Information: International Journal of Technology Assessment in Health Care, Volume 19, Issue 4, December 2003, pp. 613–623

- Copyright: © 2004 Cambridge University Press

Reliable and valid information on the effectiveness and cost-effectiveness of different interventions is required if policy makers are to make decisions based on these interventions. Rigorous research can help provide such information for policy makers; however, many existing studies are of poor quality (6).

The randomized controlled trial (RCT) is seen as the criterion standard methodology for the evaluation of health care interventions (17). However, there are many interventions for which it is impossible or impractical to use RCTs. Examples include national interventions such as mass media campaigns (35) and local contexts in which policy makers wish to assess the impact of specific policies such as the dissemination of clinical guidelines (49). Thus, there is increasing interest in the use of quasi-experimental designs (18).

A common quasi-experimental design used to evaluate such interventions is a before-and-after study design. That is, some measure of compliance or outcome is taken before the intervention and then the same measure is taken after the intervention has occurred. Any changes are then inferred to have occurred because of the intervention. For example, the Working Party of the Royal College of Radiologists undertook a before-and-after study evaluating the impact on general practice of disseminating the Royal College of Radiologists booklet Making the Best Use of a Department of Radiology to general practitioners (58). The booklet provided guidelines for radiological investigations and was introduced on January 1, 1990. In the study, the number and type of referrals were measured for the year before and the year after the introduction of the guidelines. There was a reduction in radiological requests after the guidelines were introduced, and it was concluded that the guidelines had changed practice. Several questions remained unanswered. Was the number of referrals already decreasing before the intervention? Did the major National Health Service reforms introduced during 1989–90 impact upon the number of referrals (23;60)? Did the referrals stay at this lower level throughout 1990, or did they begin to return to the original level? These questions demonstrate the potential weaknesses of the before-and-after design (49).

Interrupted time series (ITS) designs can be used to strengthen before-and-after designs (15). In an ITS design, data are collected at multiple instances over time before and after an intervention (interruption) is introduced to detect whether the intervention has an effect significantly greater than the underlying secular trend. There are many examples of the use of this design for the evaluation of health care interventions (16;22;45;67).

An advantage of an ITS design is that it allows for the statistical investigation of potential biases in the estimate of the effect of the intervention. These potential biases include:

- Secular trend – the outcome may be increasing or decreasing with time. For example, the observations might be increasing before the intervention; hence, one could have wrongly attributed the observed effect to the intervention if a before-and-after study was performed.

- Cyclical or seasonal effects – there may be cyclical patterns in the outcome that occur over time.

- Duration of the intervention – the intervention might have an effect only for the first three months after it was introduced; data collected yearly would not have identified this effect.

- Random fluctuations – these are short fluctuations with no discernible pattern that can bias intervention effect estimates.

- Autocorrelation – this is the extent to which data collected close together in time are correlated with each other. The autocorrelation ranges between −1 and 1. A negative autocorrelation suggests that outcomes taken close together in time are likely to be dissimilar. For example, a high outcome is followed by a low outcome that is then followed by a high outcome and so on. In contrast, a positive autocorrelation suggests that outcomes measured close together in time are similar to each other. For example, a high outcome is followed by another high outcome. Ignoring autocorrelation can lead to spuriously significant effects (18).
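The two autocorrelation patterns described above can be illustrated with a small sketch. The function name `lag1_autocorrelation` and the two toy series are assumptions made for illustration; they are not data from the reviewed studies.

```python
import numpy as np

def lag1_autocorrelation(y):
    """Sample lag-1 autocorrelation: correlation of each value with the next one."""
    y = np.asarray(y, dtype=float)
    d = y - y.mean()                      # deviations from the series mean
    return float(np.sum(d[1:] * d[:-1]) / np.sum(d * d))

# Hypothetical series: high/low alternation versus runs of similar values.
alternating = [10, 2, 10, 2, 10, 2, 10, 2]
persistent = [2, 2, 2, 2, 10, 10, 10, 10]

r_alt = lag1_autocorrelation(alternating)   # negative: neighbours are dissimilar
r_per = lag1_autocorrelation(persistent)    # positive: neighbours are similar
print(r_alt, r_per)
```

The alternating series gives a negative value and the persistent series a positive one, matching the verbal description of negative and positive autocorrelation.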

To investigate such biases, researchers need to use appropriate statistical methods, such as time series regression techniques (25) or autoregressive integrated moving average (ARIMA) models (10).

Studies using ITS designs are increasingly being considered for inclusion in systematic reviews (7); however, there has been no published research to assess the methodological quality of ITS studies. We, therefore, undertook a critical review of the methodological quality of ITS studies included in two systematic reviews. The first review evaluated the effectiveness of mass media interventions targeted at improving the utilization of health services (35) and the second review evaluated the effectiveness of different clinical guideline dissemination and implementation strategies (36).

METHODS

Inclusion Criteria

All ITS studies in the mass media review were included. One study from the guideline review was excluded, because it was also in the mass media review (48). Both reviews used the Effective Practice and Organisation of Care (EPOC) Cochrane Group definition of an ITS design (7): (i) there were at least three time points before and after the intervention, irrespective of the statistical analysis used; (ii) the intervention occurred at a clearly defined point in time; (iii) the study measured provider performance or patient outcome objectively.

Data Abstraction

Quality criteria were developed from two sources: the EPOC data collection checklist (5) and the classification of threats to validity identified by Campbell and Stanley (15). These quality criteria were agreed by consensus among all the authors of the study and are displayed in Box 1. At least two reviewers independently abstracted the data from each study.

Data Analysis

Descriptive statistics for each review were tabulated. If studies had not performed any statistical analysis or had used inappropriate statistical methods, we reanalyzed the results (where possible). For the purpose of reanalysis, data on individual observations over time were derived from tables of results or graphs presented in the original study. Data from graphs were obtained by scanning the graph as a digital image and reading the corresponding values from the image (35).
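Reading values from a scanned graph amounts to converting pixel positions into axis values. The sketch below shows one way this could be done, assuming linear axes and two known reference marks per axis. The helper `make_axis_mapper` and all pixel coordinates are hypothetical; the source does not describe the exact procedure used.

```python
def make_axis_mapper(pixel_a, value_a, pixel_b, value_b):
    """Return a function mapping a pixel coordinate to an axis value.

    Assumes a linear axis calibrated from two reference marks:
    pixel_a corresponds to value_a and pixel_b to value_b.
    """
    if pixel_a == pixel_b:
        raise ValueError("reference marks must be at different pixel positions")
    scale = (value_b - value_a) / (pixel_b - pixel_a)

    def to_value(pixel):
        return value_a + (pixel - pixel_a) * scale

    return to_value

# Hypothetical calibration: pixel row 400 is 0 on the y axis and row 100 is 60
# (image rows increase downward, so the scale is negative).
y_of = make_axis_mapper(400, 0.0, 100, 60.0)

# Hypothetical pixel rows of three plotted points read off the scanned image.
points_px = [310, 295, 250]
values = [y_of(p) for p in points_px]
print(values)
```

Values recovered this way carry reading error that depends on image resolution, so they are approximations of the original data.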

Statistical Method for Reanalysis of Primary Studies

Time series regression was used to reanalyze the results from each study. The best fit preintervention and postintervention lines were estimated by using linear regression, and autocorrelation was adjusted for by using the maximum likelihood methods where appropriate (25). First-order autocorrelation was tested for statistically by using the Durbin-Watson statistic, and higher-order autocorrelations were investigated by using the autocorrelation and partial autocorrelation function.
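As an illustration of the first-order test, the Durbin-Watson statistic can be computed directly from regression residuals. It ranges from 0 to 4: values near 2 suggest little first-order autocorrelation, values toward 0 suggest positive autocorrelation, and values toward 4 suggest negative autocorrelation. The sketch below is a minimal version using a single straight-line fit and an invented series; it is not the reanalysis code used in this study.

```python
import numpy as np

def ols_residuals(t, y):
    """Residuals from an ordinary least squares fit of y on an intercept and t."""
    X = np.column_stack([np.ones(len(t)), t])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def durbin_watson(resid):
    """Durbin-Watson statistic: sum of squared successive differences
    of the residuals divided by their sum of squares."""
    resid = np.asarray(resid, dtype=float)
    return float(np.sum(np.diff(resid) ** 2) / np.sum(resid ** 2))

# Hypothetical series: linear trend plus an alternating high/low disturbance,
# which should push the statistic well above 2 (negative autocorrelation).
t = np.arange(12, dtype=float)
y = 5.0 + 0.5 * t + np.where(t % 2 == 0, 1.0, -1.0)

dw = durbin_watson(ols_residuals(t, y))
print(dw)
```

Formal testing compares the statistic with tabulated bounds that depend on the number of observations and regressors; those tables are not reproduced here.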

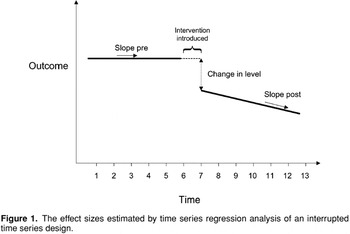

Two effect sizes were estimated (see Figure 1). First, a change in the level of outcome at the first point after the introduction of the intervention was estimated. This was done by extrapolating the preintervention regression line to the first point postintervention. The difference between this extrapolated point and the postintervention regression estimate for the same point gave the change in level. Further mathematical details are available from the authors on request. Second, a change in the slopes of the regression lines was estimated (calculated as postintervention minus preintervention slope). We classified each study intervention as “significant” if either of these effect sizes was statistically significant.

Figure 1. The effect sizes estimated by time series regression analysis of an ITS design.
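The two effect sizes can be sketched as follows: fit separate straight lines to the preintervention and postintervention points, extrapolate the preintervention line to the first postintervention point, and take differences. This is a minimal sketch under simplifying assumptions: it uses ordinary least squares, makes no adjustment for autocorrelation, and returns no standard errors. The function name `its_effect_sizes` and the data are hypothetical.

```python
import numpy as np

def its_effect_sizes(t, y, t_intervention):
    """Change in level and change in slope from separate pre/post line fits.

    Points with t < t_intervention are treated as preintervention.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    pre = t < t_intervention
    post = ~pre

    # np.polyfit with degree 1 returns (slope, intercept).
    pre_slope, pre_intercept = np.polyfit(t[pre], y[pre], 1)
    post_slope, post_intercept = np.polyfit(t[post], y[post], 1)

    t_first = t[post].min()                            # first postintervention point
    extrapolated = pre_intercept + pre_slope * t_first  # pre line carried forward
    fitted_post = post_intercept + post_slope * t_first

    return {
        "change_in_level": float(fitted_post - extrapolated),
        "change_in_slope": float(post_slope - pre_slope),
    }

# Hypothetical noise-free series: the intervention at t = 10 adds a step of 5
# and increases the slope from 1.0 to 1.5.
t = np.arange(20, dtype=float)
y = np.where(t < 10, 10.0 + t, 25.0 + 1.5 * (t - 10))

effects = its_effect_sizes(t, y, t_intervention=10)
print(effects)
```

On this constructed series the sketch recovers a change in level of 5 and a change in slope of 0.5, because the data contain no noise.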

RESULTS

Twenty ITS studies were included from the mass media review (8;9;11;18;24;27;39–42;46–48;51;53;55;61;64;69;71), and thirty-eight ITS studies were included from the guidelines review (1–4;12–14;16;22;26;28–33;37;38;43–45;50;52;54;56;57;59;62;63;65–68;70;72–75). Eighteen studies were published before 1990 and forty studies after 1990. The characteristics of the ITS studies are displayed in Table 1. Both reviews had similar numbers of preintervention data points; however, the average ratio of postintervention points to preintervention points indicated that the guideline studies tended to collect more postintervention points than preintervention points within each study. The time interval between data points varied, with “monthly” the most common in both reviews.

The quality criteria for the ITS studies are shown in Table 2. Thirty-eight (66%) studies did not rule out the threat that another event could have occurred at the same time as the intervention. Factors related to data collection, the primary outcome, and completeness of the data set were generally reported in both reviews. No study provided a justification for the number of data points used or a rationale for the shape of the intervention effect.

Thirty-seven of the ITS studies in the reviews were analyzed inappropriately, of which thirty-three could be reanalyzed. Four “inappropriately analyzed” studies could not be reanalyzed because of the poor quality of the graphs in the primary papers. The results of the reanalyzed studies are shown in Table 3. Eight of the studies had statistically significant preintervention trends (3;11;21;30;52;57;61;75). The mean autocorrelations in the reanalyzed ITS studies were small. All of the interventions in each of the reanalyzed studies were termed “effective” in their original reports, but approximately half of these studies showed no statistically significant differences in slope and level when reanalyzed.

DISCUSSION

We have demonstrated that ITS designs are being increasingly used to evaluate health care behavior change interventions. Many of these studies had apparent threats to their internal validity that made interpretation of the results unreliable. The studies were often poorly reported and inappropriately analyzed, leading to misleading conclusions in the published reports.

There were no substantial differences between the characteristics of the studies in the two reviews. The mass media studies tended to have shorter postintervention phases. Most of the studies in both reviews had short time series. With short time series, models are fitted with less confidence: standard errors are increased, type I error is increased, and power is reduced, so autocorrelation or secular trends may go undetected.

Over 65% of the studies were analyzed inappropriately. Most of these studies performed a t-test of the preintervention points versus postintervention points. The t-test would give incorrect effect sizes if preintervention trends were present and would underestimate the standard error if positive autocorrelation were present (19;49). The t-test, therefore, should not be used to analyze results from an ITS design.
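The effect of a preintervention trend on the t-test can be seen in a constructed example. The series below follows a steady upward trend with no intervention effect at all, yet a comparison of pre and post means yields a large t statistic, whereas the change in level and change in slope from separate line fits are both zero. The data are invented and noise-free, and the example covers only the trend problem, not the autocorrelation problem.

```python
import numpy as np

# Hypothetical series: a pure linear trend, with a notional "intervention"
# at t = 10 that has no effect whatsoever.
t = np.arange(20, dtype=float)
y = 2.0 * t
pre, post = y[:10], y[10:]

# Two-sample (Welch) t statistic comparing post and pre means.
mean_diff = post.mean() - pre.mean()
se = np.sqrt(pre.var(ddof=1) / len(pre) + post.var(ddof=1) / len(post))
t_stat = mean_diff / se

# Separate line fits, with the pre line extrapolated to the first post point.
pre_slope, pre_int = np.polyfit(t[:10], pre, 1)
post_slope, post_int = np.polyfit(t[10:], post, 1)
level_change = (post_int + post_slope * t[10]) - (pre_int + pre_slope * t[10])
slope_change = post_slope - pre_slope

print(t_stat, level_change, slope_change)
```

The difference in means simply reflects the underlying trend, which is why a before-and-after comparison of means cannot separate the intervention effect from the secular trend.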

Many of the studies were underpowered. It is difficult to derive a formula for the sample size (34). As a rule of thumb, if one collects ten pre- and ten post-data points, then the study would have at least 80% power to detect a change in level of five standard deviations (of the pre-data) only if the autocorrelation is greater than 0.4 (20). This is a large effect, and the results from the two reviews suggested that the autocorrelation was of the order of 0.1; therefore, a study with ten pre- and post-data points was likely to be underpowered. In addition, it is beneficial to have a long preintervention phase, thereby increasing power to detect secular trends.
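Because a closed-form sample size formula is hard to derive, power can instead be explored by simulation. The sketch below generates series with first-order autoregressive errors of unit variance, adds a step change in level, and counts how often a segmented ordinary least squares regression detects it. It is an illustration under stated assumptions, not the method behind the cited rule of thumb: the test uses naive standard errors that ignore autocorrelation, the critical value applies only to 20 points with 4 parameters, and all function names are hypothetical.

```python
import numpy as np

T_CRIT_DF16 = 2.120  # two-sided 5% critical value of Student's t with 16 df

def simulate_series(n_pre, n_post, level_change, rho, rng):
    """AR(1) errors with marginal variance 1, plus a step after n_pre points."""
    n = n_pre + n_post
    y = np.empty(n)
    y[0] = rng.normal()
    innovation_sd = np.sqrt(1.0 - rho ** 2)
    for i in range(1, n):
        y[i] = rho * y[i - 1] + innovation_sd * rng.normal()
    y[n_pre:] += level_change
    return y

def detects_level_change(y, n_pre, t_crit):
    """Segmented OLS; tests the coefficient for change in level at the
    first postintervention point (naive standard error)."""
    n = len(y)
    t = np.arange(n, dtype=float)
    post = (t >= n_pre).astype(float)
    X = np.column_stack([np.ones(n), t, post, post * (t - n_pre)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - X.shape[1])
    se = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[2, 2])
    return bool(abs(beta[2] / se) > t_crit)

def estimated_power(level_change, rho, n_pre=10, n_post=10, reps=500, seed=0):
    """Proportion of simulated series in which the level change is detected.
    The default critical value is valid only for n_pre + n_post = 20."""
    rng = np.random.default_rng(seed)
    hits = sum(
        detects_level_change(
            simulate_series(n_pre, n_post, level_change, rho, rng),
            n_pre, T_CRIT_DF16)
        for _ in range(reps)
    )
    return hits / reps

print(estimated_power(level_change=5.0, rho=0.0))
print(estimated_power(level_change=0.0, rho=0.0))
```

Varying `level_change`, `rho`, and the number of points shows how sensitive power is to each. A fuller analysis would replace the naive test with one that adjusts for autocorrelation.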

This study showed that many ITS studies had analytical shortfalls. In such instances, reviewers may need to consider reanalyzing. The data may be obtained directly from the authors, but if this is not possible, the reviewers can often scan the graphs from the original paper onto a computer as described in the methods section. We recommend reviewers use time series regression techniques for reanalyzing ITS studies. The main advantages of this technique are, first, that it can be performed using most standard statistical packages and, second, that it can estimate the effect sizes more precisely than other time series techniques when the series are short (20;34).

The lack of a randomized control group in an ITS design requires the investigators to be critical of the internal validity of the study. With this in mind, we developed a checklist for reviewers of such designs. This checklist encompassed the major threats to validity of ITS designs as proposed by Campbell and Stanley (15). Generally, the greatest threat to validity is that an event outside the control of the researchers occurred at the same time as the intervention, thereby making causal inferences impossible. It was disappointing, therefore, to note that this threat was not ruled out explicitly in 66% of the studies.

Implications for Researchers

ITS designs may appear superficially simple; however, we have demonstrated that they are often analyzed inappropriately, underpowered, and poorly reported in the implementation research field. To improve the quality of analysis and reporting, we suggest that researchers consider the list of quality criteria described in Box 1.

Implications for Systematic Reviewers

ITS designs are a useful and pragmatic evaluative tool when RCTs are not feasible. It is possible to consider this often neglected study design in systematic reviews and in so doing draw both qualitative and quantitative results on intervention effects. In this study, we have illustrated the usefulness of a framework for appraising ITS designs, and more widespread adoption of such a framework would strengthen reviews that use ITS designs.

Implications for Policy Makers

Policy makers may find ITS designs a useful way to assess the impact of specific policies that could remain unassessable otherwise. In addition, these designs can often be performed inexpensively from routine data collection.

The Health Services Research Unit is funded by the Chief Scientist Office of the Scottish Executive Health Department. The guidelines dissemination and implementation review was funded by the UK NHS R&D Health Technology Assessment Programme. However, the views expressed are those of the authors and not necessarily those of the funding body.

References

Box 1. Quality Criteria for ITS Designs

Characteristics of Included Interrupted Time Series Studies

Quality Criteria for ITS Studies

Characteristics of Reanalyzed Studies
