1 Introduction

In many environments, the determinants of outcomes are not observable. Decision makers make unobserved choices under risk and uncertainty, and outcomes are determined by a combination of their choices and luck. For instance, a firm’s profits are determined by both the business strategies taken by its managers and the macroeconomic factors that are beyond their control. How are outcomes evaluated in such situations? Are there systematic biases in the attribution of outcomes to the decision makers’ choices versus luck? Do they receive too little or too much credit?

We explore these questions in a leadership context where the choices decision makers make under risk affect their own payoffs as well as those of other individuals. Leadership is often about decision making for others and inherently involves assuming responsibility for the outcomes of others (Ertac & Gurdal, Reference Ertac and Gurdal2012; Edelson et al., Reference Edelson, Polania, Ruff, Fehr and Hare2018). In many cases, decision makers face a trade-off between maximizing their own payoff and those of other individuals. For example, society’s growing demand for corporate social responsibility, defined as sacrificing firm profits for social interest, demonstrates how decision makers in positions of power are expected to engage in prosocial activity (Bénabou & Tirole, Reference Bénabou and Tirole2010).

We report findings from two experiments designed to examine how individuals who are affected by the choices of the decision makers form inferences about the decision makers. Our experimental design emphasizes the role social preferences play in leadership and aims to analyze the inferences formed about this important personality trait of decision makers. Uncovering biases in the attribution of outcomes is important for understanding the attitudes towards decision makers and the decision-making environment.Footnote 1

In Experiment 1, individuals in their role as decision makers make an investment choice on behalf of their group. They choose between two investment options with binary outcomes. The outcome to the group depends on both the decision maker’s choice, which is unobservable to the other group members, and luck. A high investment leads to a higher probability of the good outcome for the group but comes at a higher private cost to the decision maker. Hence, one can also think of the high investment decision as a costly effort choice made by the decision maker that increases the group’s surplus at a personal cost. Consequently, decision makers’ choices are affected by their social preferences. Using this design, we examine the group members’ initial beliefs about the decision maker’s type, and how these beliefs are updated after observing the outcome of the choice made by the decision maker.

In our analysis of belief updating, we examine two issues. First, we study biases in the way prior beliefs are treated in the updating process when group members form inferences about decision makers’ prosocial preferences. More precisely, taking Bayes’ rule as the benchmark, we ask whether group members suffer from base-rate neglect (i.e., put too little weight on their prior beliefs) or confirmatory bias (i.e., put too much weight on their prior beliefs) relative to a Bayesian.Footnote 2 Second, we examine whether group members respond too little or too much to new information about the choice made by the decision maker, and whether there is an asymmetry in the way good and bad outcomes are treated. Responding too little to a good (bad) outcome, for example, would imply that they believe decision makers act selfishly (prosocially) and luck plays a bigger role in determining outcomes. Hence, if members exhibit a bigger response to bad outcomes (as compared to good outcomes), then this implies that they are more likely to blame the decision maker for acting selfishly when they see a bad outcome, but they do not attribute a good outcome to the decision maker’s prosociality.

In Experiment 1, we find that group members consistently suffer from base-rate neglect. This indicates, for example, that members who are initially more optimistic about the likelihood that the decision maker made a high investment decision tend to over-update their beliefs about the decision maker’s behavior when they observe a bad outcome. After accounting for base-rate neglect, we find that on average, members under-respond to good outcomes and attribute them more to luck as compared to a Bayesian. In contrast, their response to bad outcomes is similar to a Bayesian. This asymmetry implies that members on average attribute good outcomes more to luck and bad outcomes more to the decision maker’s selfish choice. As a result, decision makers get too little credit for their successes.

We also consider whether members’ belief-updating behavior depends on the process by which the decision maker is selected. For instance, it may be the case that members are more likely to blame decision makers for bad outcomes if they are not appointed by the group. Consistent with our theoretical framework, we find that the appointment mechanism affects the initial beliefs formed about the decision maker’s type. For example, members believe that a group-appointed decision maker is more likely to act in the group’s interest as compared to a randomly appointed decision maker. However, once we control for the impact of the appointment mechanism on the initial beliefs, we find that the appointment mechanism has no additional impact on the updated beliefs.

A feature of our design in Experiment 1 is that the decision makers’ choices and members’ beliefs are elicited using the strategy method, where all individuals first make choices as decision makers before reporting their beliefs as group members. This allows us to examine the relationship between individuals’ choices as decision makers and their attribution of the decision makers’ outcomes as members. Using this design, we uncover that the asymmetry we identify in the evaluation of good and bad outcomes is driven by those individuals who make the less prosocial choice for the group. That is, those who make lower investment choices as decision makers are more likely to attribute others’ good outcomes to luck. This suggests that a consensus effect may be at play as individuals use their own behavior as the basis for updating their beliefs about others (Ross et al., Reference Ross, Greene and House1977; Marks & Miller, Reference Marks and Miller1987; Dawes, Reference Dawes1989).Footnote 3

We explore this result further in Experiment 2, where participants no longer play both roles in the experiment. We are interested in investigating whether the biases we observe in Experiment 1 still exist when group members do not have experience making choices as decision makers.Footnote 4 Participants are informed at the beginning of the experiment whether they have been assigned as a decision maker or a group member. These roles do not change throughout the experiment.

To investigate whether different types (i.e., prosocial versus selfish individuals) form and update their beliefs differently, we ask group members to report their beliefs before asking them to indicate, hypothetically, what their investment decision would have been if they were the decision maker. This allows us to test whether there exists a correlation between each group member’s type and their belief irrespective of whether they play both roles or just one. That is, by eliciting these hypothetical decisions after the belief-elicitation stage, we are still able to examine the relationship between individuals’ effort choices as decision makers and their beliefs as members.

Interestingly, a correlation between the attribution of good outcomes to luck and what members would have chosen as decision makers also emerges in Experiment 2. That is, those members who are more likely to attribute good outcomes to luck are also more likely to state afterwards that they would have chosen low effort if they were placed in the position of the decision maker. This leads us to conclude that with and without the experience of acting as a decision maker, the same biases exist and seem to be driven by a consensus effect.

Our paper is related to three strands of the literature. First, our study substantially advances the research on attribution biases in beliefs in both economics and psychology. Studies in experimental economics have analyzed biases in beliefs and information processing by focusing mainly on ego-related beliefs, i.e., beliefs about one’s own ability or physical attributes where one’s ego can play a big role in shaping their beliefs (Eil & Rao, Reference Eil and Rao2011; Ertac, Reference Ertac2011; Grossman & Owens, Reference Grossman and Owens2012; Möbius et al., Reference Möbius, Niederle, Niehaus and Rosenblat2014; Coutts, Reference Coutts2019). Both Eil and Rao (Reference Eil and Rao2011) and Möbius et al. (Reference Möbius, Niederle, Niehaus and Rosenblat2014) find evidence of asymmetric updating, where agents are more responsive to good news than to bad news about their own performance in an IQ test or a beauty task. While Grossman and Owens (Reference Grossman and Owens2012) find no evidence of asymmetry, Ertac (Reference Ertac2011) and Coutts (Reference Coutts2019) find that individuals tend to overweigh bad news.Footnote 5

The related literature in psychology has mainly focused on self-serving biases in the attribution of own versus others’ outcomes (see, e.g., Miller and Ross, Reference Miller and Ross1975). Consistent with our findings, individuals tend to attribute others’ good outcomes to exogenous factors (such as luck). In comparison, they are more likely to attribute their own good outcomes to endogenous factors (such as ability). Similarly, Pettigrew (Reference Pettigrew1979) finds that good outcomes of out-group members are attributed to luck, but the opposite pattern emerges for in-group members.

Our novelty in relation to this strand of the literature in both economics and psychology is that we focus on the evaluation of others’ outcomes in a context where decision making is shaped by social preferences. We show that good and bad outcomes are treated asymmetrically in this case also, and attribution biases exist in the case of good outcomes only. Moreover, our findings reveal that individuals’ evaluation of others’ prosociality tend to be correlated with their own behavior.

Second, our work is related to the literature on outcome biases, where researchers also find asymmetric evaluation of others’ good and bad outcomes. However, it is assumed in this literature that all determinants of outcomes are fully observable. Despite this, good outcomes are treated more favorably than bad outcomes, suggesting that evaluators are biased by luck (see, e.g., Charness and Levine, Reference Charness and Levine2007; Gurdal et al., Reference Gurdal, Miller and Rustichini2013; Brownback and Kuhn, Reference Brownback and Kuhn2019). Our research extends this literature by considering the (arguably more common) setup where determinants of outcomes are not observable.

Finally, our paper is related to the literature which investigates how individuals respond to others’ favorable and unfavorable outcomes under uncertainty in contexts such as CEO compensation (Bertrand and Mullainathan, Reference Bertrand and Mullainathan2001; Leone et al., Reference Leone, Wu and Zimmerman2006), political elections (Wolfers, Reference Wolfers2007; Cole et al., Reference Cole, Healy and Werker2012), medical referrals (Sarsons, Reference Sarsons2019), and soccer (Gauriot & Page, Reference Gauriot and Page2019).Footnote 6 In addition, a significant amount of attention has been devoted to preferences for redistribution under uncertainty. While some papers investigate the link between redistribution and beliefs about the determinants of inequality (Fong, Reference Fong2001; Linos & West, Reference Linos and West2003; Aarøe & Petersen, Reference Aarøe and Petersen2014; Alesina et al., Reference Alesina, Stantcheva and Teso2018; Rey-Biel et al., Reference Rey-Biel, Sheremeta, Uler, Isaac and Norton2018), others aim to identify the causal effect of varying the source of inequality (such as merit versus luck) on the level of redistribution (Cappelen et al., Reference Cappelen, Hole, Sørensen and Tungodden2007; Almås et al., Reference Almås, Cappelen, Sørensen and Tungodden2010; Durante et al., Reference Durante, Putterman and van der Weele2014; Almås et al., Reference Almås, Cappelen and Tungodden2020). A key objective of all these studies is to examine how performance is evaluated under uncertainty. In contrast, our aim is to focus on the belief formation and updating process, and to study specifically the biases which may characterize it. Our experimental setting gives us the opportunity to examine attribution biases in a controlled environment with an objective signal generating process.

2 Experiment 1

2.1 Experimental design

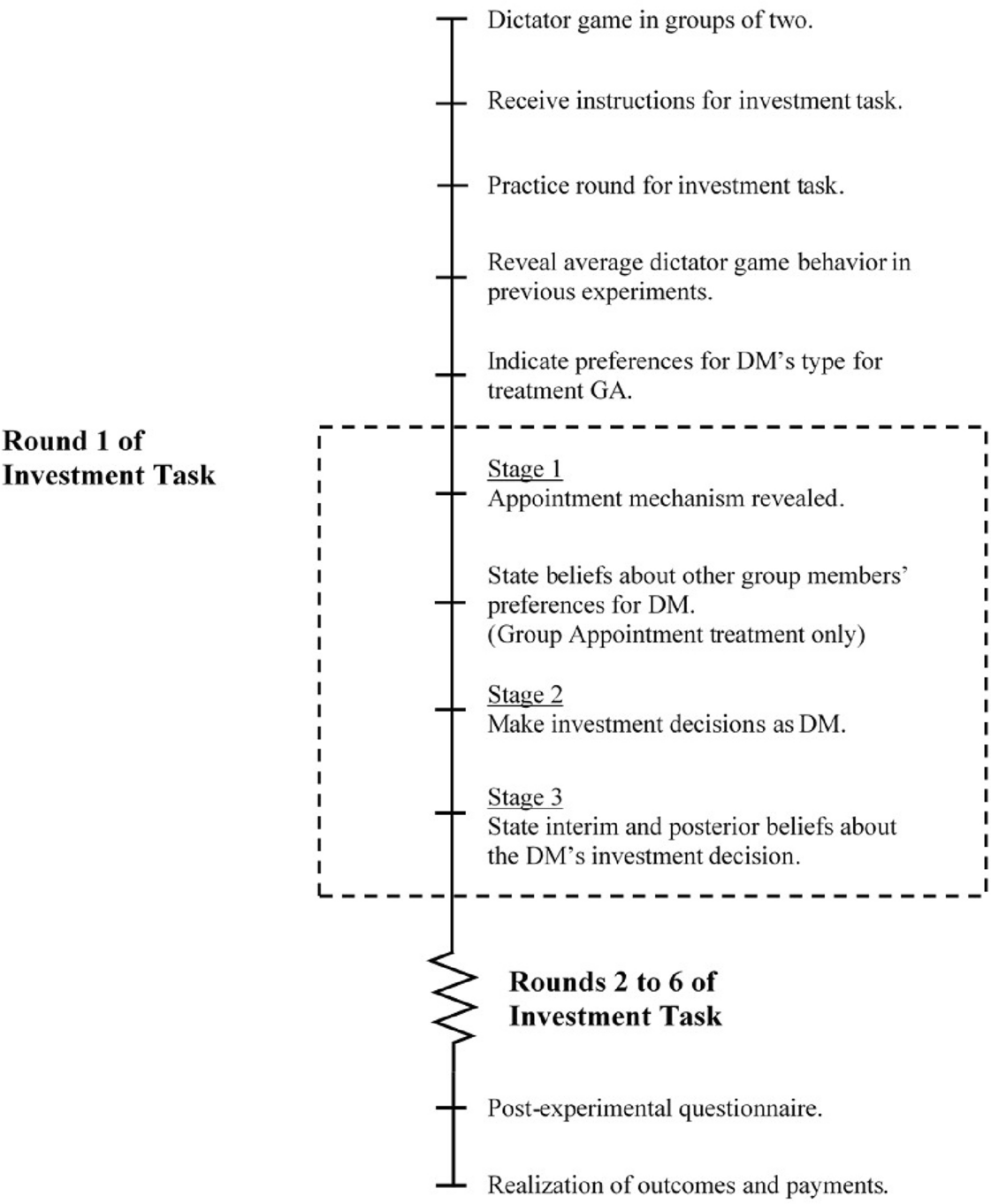

Figure 1 presents an overview of the experiment. The main task in the experiment is the investment task, which we explain in Sect. 2.1.1. According to our theoretical framework, decisions in the investment task are shaped by the subjects’ social preferences. Hence, to elicit their social preferences, subjects also play the dictator game in groups of two. Each subject is given 300 Experimental Currency Units (ECU) and asked to allocate this endowment between themselves and their matched partner. Both subjects within the pair make allocation decisions as the dictator. They are told that one of the decisions will be randomly chosen at the end of the experiment to determine the final allocation of the given endowment within each pair. Once subjects play the dictator game, they receive instructions for the investment task.Footnote 7

2.1.1 Investment task

The experiment features a within-subject treatment design, where subjects play six repeated rounds of the investment task. In each round, subjects are re-matched to a new group with two other individuals (perfect stranger matching). Within each group, there is a decision maker (referred to as the DM in the rest of the paper) who makes an unobservable investment decision on behalf of the group. In the experiment, we label the DM as the leader.

Decisions are elicited using a strategy method. In each round, all subjects make their investment decisions assuming that they have been assigned to be the DM, and then state their beliefs about their DM’s investment decision assuming that someone else in the group has been assigned to be the DM. This allows us to analyze whether beliefs are correlated with individuals’ own decisions.Footnote 8 No feedback is given during the entire experiment. Subjects are informed whether they were assigned the role of the DM at the end of the experiment.

As shown in Fig. 1, each round of the investment task consists of three stages, which we now explain in detail.

Fig. 1 Overview of Experiment 1

Stage 1: Appointment of DM. Group members’ unconditional beliefs about their DM’s investment decision can potentially depend on how the DM is appointed. We consider four mechanisms of appointing the DM. The appointment mechanism changes across the rounds, varying the initial beliefs members hold. This allows us to examine whether members’ updating behavior depends on the distribution of their initial beliefs. For example, it may be the case that members are more likely to blame DMs for their failures if the DM is not appointed by the group.

At the beginning of each round, subjects are informed which mechanism will be employed in that round. In three of the appointment mechanisms, the DM is appointed exogenously. In the random assignment mechanism (treatment RA), each individual has an equal chance of being appointed as the DM. In the low and high assignment mechanisms (treatments LA and HA), subjects are informed that the group member who allocated the least and the highest amount to their matched partner in the dictator game, respectively, would be appointed as the DM.Footnote 9 Ties are broken randomly.

The fourth mechanism is the group appointment mechanism (treatment GA). Before beginning the first round of the investment task, each group member is asked to indicate whether they prefer: (i) to appoint the member who allocated the lowest amount to their matched partner in the dictator game; (ii) to appoint the member who allocated the highest amount to their matched partner in the dictator game; or (iii) to randomly select one member to be the DM. In addition, the subjects are asked to state their beliefs about the other two group members’ preferences on which appointment mechanism to use.Footnote 10 To appoint the DM, the computer randomly picks one group member. This member’s decision is used to determine which of the other two members will be the DM. This ensures that there is no scope for strategic behavior in that subjects are unable to influence their probability of being the DM through their decisions.Footnote 11 This is especially important in our set-up because, as explained later, there is a clear advantage to being the DM.

Stage 2: DM’s investment decision. In the second stage of the investment task, each subject is asked to make an investment decision on behalf of the group.

The DM is given an individual endowment of 300 ECU and chooses between two investment options that will affect the payoffs of all the group members. The two investment options, given in Fig. 2, are: (i) Investment X, which corresponds to a high effort level; and (ii) Investment Y, which corresponds to a low effort level. Both investment options yield the same high return if they succeed and the same low return if they fail. However, they differ in their probability of success/failure, and in their cost to the DM. Investment X succeeds with a probability of 0.75 and costs the DM 250 ECU, while Investment Y succeeds with a probability of 0.25 and costs the DM 50 ECU. Subjects are informed that the DM’s investment decision will not be revealed to the group members. They only learn the outcome of the investment in the round randomly chosen for payment at the end of the experiment.

Fig. 2 Investment task

The returns from the two investment options are assumed to take the following values. In Game 1, the investment provides a return of 750 ECU for the group if it succeeds and 150 ECU if it fails. Note that the subjects’ investment decisions as the DM and their beliefs as members about the DM’s investment decision may be sensitive to the returns associated with the investment options. For instance, some subjects may be averse to choosing Investment Y if the members will receive a payoff of zero in case of failure. For this reason, we also consider Game 0, where the investment provides a return of 600 ECU if it succeeds and 0 ECU if it fails.

The return from the investment is distributed evenly between the DM and the two group members. The amount determines each group member’s final payoff, except for the DM’s. The DM’s final payoff is equal to the sum of the endowment and the share of the return from the investment minus the cost of investment. These final payoffs are given at the bottom of Fig. 2.

Stage 3: Elicitation of beliefs of group members. In the third stage of the investment task, subjects are asked to assume the role of group members and state their beliefs on the likelihood that the DM (i.e., one of the other two players in their group) has chosen Investment X. We elicit beliefs in the form of frequencies rather than probabilities.Footnote 12 When stating their beliefs, subjects are required to enter an integer number between 0 and 100.

We elicit two sets of beliefs from each subject.Footnote 13 First, each subject is asked to state their unconditional belief that the DM has chosen Investment X. Given that the subjects form these beliefs after being informed of the appointment mechanism, we refer to these unconditional beliefs as the members’ interim beliefs. Second, each subject is asked to state their beliefs conditional on observing whether the investment chosen by the DM has succeeded or failed. We refer to these beliefs as the members’ posterior beliefs. We do not impose any restrictions on their posterior beliefs. The group members can state any belief they want, regardless of what their interim beliefs are.

Subjects are paid for either their interim belief or their posterior beliefs. Beliefs are incentivized using the binarized scoring rule (BSR). We use the BSR because it incentives truth-telling independent of the subjects’ risk preferences (Hossain & Okui, Reference Hossain and Okui2013; Erkal et al., Reference Erkal, Gangadharan and Koh2020). It is a modified version of the quadratic scoring rule with a binary lottery procedure, where the distance between a subject’s belief report and the DM’s investment decision determines the probability of receiving a fixed amount (10 ECU in this case). As the subject’s reported belief gets further away from the DM’s investment decision, the probability of receiving the fixed payment gets lower.Footnote 14

2.1.2 Procedures and payment

The experiments were conducted in the Experimental Economics Laboratory at the University of Melbourne (E 2 MU) and programmed using z-Tree (Fischbacher, Reference Fischbacher2007). We ran 10 sessions with 24 to 30 subjects in each session. A total of 282 participants, mostly students from the University of Melbourne, were recruited using ORSEE (Greiner, Reference Greiner2015).Footnote 15 Each session lasted between 90 and 120 min.

To ensure that the subjects fully understood the tasks, the experimenter verbally summarized the instructions after the subjects finished reading the printed instructions. Subjects completed a set of control questions and participated in a practice round using treatment GA and Game 0 before beginning the actual investment task. For Game 0, we implemented treatments LA and HA only since, as explained in the next section, theory suggests that the difference in interim beliefs should be the greatest between these two treatments. We implemented all four appointment mechanisms for Game 1, which allows us to study the subjects’ behavior across different mechanisms using the same set of parameters.

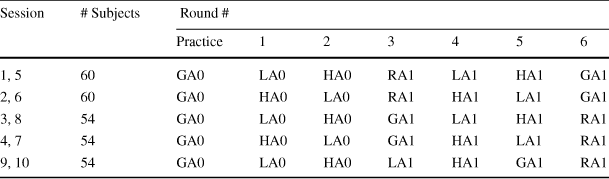

The order between treatments was changed to control for potential order effects. However, since our main focus is the treatments associated with Game 1, Game 0 was always implemented in Rounds 1 and 2 while Game 1 was always implemented in Rounds 3 to 6. Table 1 summarizes the order of the treatments in each session. In each cell of the table, the first two letters denote the appointment mechanism, while the Arabic numeral at the end denotes the game faced by the subjects in the corresponding round within the session.Footnote 16

Table 1 Order of treatments for each experiment session

|

Session |

# Subjects |

Round # |

||||||

|---|---|---|---|---|---|---|---|---|

|

Practice |

1 |

2 |

3 |

4 |

5 |

6 |

||

|

1, 5 |

60 |

GA0 |

LA0 |

HA0 |

RA1 |

LA1 |

HA1 |

GA1 |

|

2, 6 |

60 |

GA0 |

HA0 |

LA0 |

RA1 |

HA1 |

LA1 |

GA1 |

|

3, 8 |

54 |

GA0 |

LA0 |

HA0 |

GA1 |

LA1 |

HA1 |

RA1 |

|

4, 7 |

54 |

GA0 |

HA0 |

LA0 |

GA1 |

HA1 |

LA1 |

RA1 |

|

9, 10 |

54 |

GA0 |

LA0 |

HA0 |

LA1 |

HA1 |

GA1 |

RA1 |

At the end of the experiment, subjects were invited to complete a brief questionnaire which included demographic questions, questions about their decisions during the experiment, and an incentivized one-shot risk task (Gneezy & Potters Reference Gneezy and Potters1997) to elicit their risk preferences. Subjects were paid for either the dictator game or the investment task. If they were paid for the investment task, then we paid them for their decisions in one of the six rounds. For the chosen round, a DM was appointed according to the corresponding treatment and the DM was paid only for their investment decision. The other two members were paid for their DM’s decision as well as their stated beliefs. Earnings were converted to cash at the conclusion of the session at the rate 10 ECU = 1 AUD. Overall, subjects earned between $10 and $76, with the mean earnings being $ 34.07. Subjects’ earnings also included a show-up fee of $10.

2.2 Theoretical framework

In this section, we provide a simple theoretical framework to evaluate how beliefs will be formed under the different appointment mechanisms.

2.2.1 DM’s effort choice

Players maximize expected utility and are differentiated based on their other-regarding preferences. Let

![]() denote the type of player i. It is a private draw from a distribution

denote the type of player i. It is a private draw from a distribution

![]() with density

with density

![]() .

.

![]() is common knowledge.Footnote 17

is common knowledge.Footnote 17

Players are randomly assigned to groups of size

![]() . The DM in each group makes an effort choice

. The DM in each group makes an effort choice

![]() at cost

at cost

![]() which is deducted from an initial endowment

which is deducted from an initial endowment

![]() that the DM receives. Assume that

that the DM receives. Assume that

![]() . There are two possible team outputs,

. There are two possible team outputs,

![]() , where

, where

![]() , and the DM’s effort choice determines the probability with which each output level will be realized. A high effort choice leads to the high output level with a higher probability, but it costs more to the DM. Specifically, a high effort choice

, and the DM’s effort choice determines the probability with which each output level will be realized. A high effort choice leads to the high output level with a higher probability, but it costs more to the DM. Specifically, a high effort choice

![]() leads to an output

leads to an output

![]() with probability p, where

with probability p, where

![]() , while a low effort choice

, while a low effort choice

![]() leads to an output

leads to an output

![]() with probability

with probability

![]() .

.

For a given outcome Q, each member in the group receives

![]() and the utility of the DM is given by

and the utility of the DM is given by

where

![]() is the direct utility the DM receives from their own monetary payoff and

is the direct utility the DM receives from their own monetary payoff and

![]() represents the utility member j receives from their own monetary payoff. We assume

represents the utility member j receives from their own monetary payoff. We assume

![]() ,

,

![]() , and

, and

![]() denotes the weight the DM puts on the utilities of the other group members.Footnote 18

denotes the weight the DM puts on the utilities of the other group members.Footnote 18

DMs maximize their expected utility and choose

![]() over

over

![]() if

if

![]() . In the experimental design, we refer to

. In the experimental design, we refer to

![]() and

and

![]() as Investment X and Investment Y, respectively. The choice of parameters in Game 0 and Game 1 are

as Investment X and Investment Y, respectively. The choice of parameters in Game 0 and Game 1 are

![]() ,

,

![]() ,

,

![]() ,

,

![]() 750 (Game 1) or 600 (Game 0),

750 (Game 1) or 600 (Game 0),

![]() 150 (Game 1) or 0 (Game 0),

150 (Game 1) or 0 (Game 0),

![]() , and

, and

![]() . Given these parameter choices, if

. Given these parameter choices, if

![]() , then the DMs only care about their own payoff and choose

, then the DMs only care about their own payoff and choose

![]() since

since

![]() .Footnote 19 For

.Footnote 19 For

![]() ,

,

![]() holds if

holds if

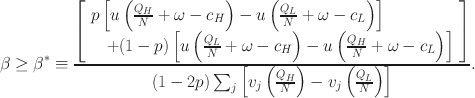

Intuitively, DMs choose high effort if they care sufficiently about the payoffs of the other group members.Footnote 20 In the experiment, subjects’ decisions in the dictator game provide a proxy for their types (

![]() ). We use the dictator game since it is widely used in the literature to measure social preferences.

). We use the dictator game since it is widely used in the literature to measure social preferences.

2.2.2 Information and beliefs

Members’ interim beliefs. We first consider the members’ interim beliefs about their DM’s type after observing the appointment mechanism. Specifically, we are interested in each member’s belief that the DM is of type

![]() , which corresponds to the likelihood that the DM chooses

, which corresponds to the likelihood that the DM chooses

![]() over

over

![]() . We denote member i’s interim belief after observing appointment mechanism

. We denote member i’s interim belief after observing appointment mechanism

![]() as

as

![]() .

.

Our first testable prediction is about the ranking of the members’ interim beliefs under the different appointment mechanisms:

Hypothesis 1

![]() .

.

The proof is in Appendix C. In treatment GA, all players prefer to have the highest type appointed as the DM. This is because all group members want the DM to choose

![]() which maximizes their expected payoffs. Although this implies that the beliefs under treatments GA and HA should be the same, the difference stated in the hypothesis is due to the implementation strategy we follow in treatment GA. Specifically, the highest type in the group will not necessarily be appointed as the DM under treatment GA if his/her appointment decision is randomly picked to be implemented. Hence,

which maximizes their expected payoffs. Although this implies that the beliefs under treatments GA and HA should be the same, the difference stated in the hypothesis is due to the implementation strategy we follow in treatment GA. Specifically, the highest type in the group will not necessarily be appointed as the DM under treatment GA if his/her appointment decision is randomly picked to be implemented. Hence,

![]() .

.

Members’ posterior beliefs. We next consider how members update their beliefs about their DM’s type after observing the outcome. The outcome

![]() is a signal that members receive about the DM’s type. Note that

is a signal that members receive about the DM’s type. Note that

![]() and

and

![]() .

.

We denote group member i’s unbiased posterior belief of the DM’s type, given a signal Q, as

![]() . Specifically, suppose the members receive a signal

. Specifically, suppose the members receive a signal

![]() . Using Bayes’ rule, member i’s posterior belief is given by

. Using Bayes’ rule, member i’s posterior belief is given by

![]() is defined in a similar way.

is defined in a similar way.

We test the null hypothesis that the members will be unbiased (i.e., Bayesian) when they update their beliefs:

Hypothesis 2

Group members behave like Bayesian agents when updating their beliefs about the DM under all of the appointment mechanisms.

We explain the econometric framework that we use to test Hypothesis Footnote 2 empirically in Sect. 2.3.3. If we detect deviations from the Bayesian benchmark (e.g., Tversky & Kahneman, Reference Tversky and Kahneman1974), our design allows us to investigate these deviations further in two ways. First, we can test whether the deviations vary across the different appointment mechanisms. That is, we can observe, for example, whether being appointed by the group (treatment GA) has an impact on the way members update their beliefs about the DM. Second, since we employ a strategy method, we can examine whether there is a correlation between subjects’ decisions as DMs and their beliefs (initial and updated) about the DM. Such a correlation can be explained by a consensus effect. For example, a subject who chooses

![]() may be more likely to believe that their DM has also chosen

may be more likely to believe that their DM has also chosen

![]() .

.

2.3 Results

Since there were no interactions between the group members during the experiment and no feedback was given to the subjects from the previous rounds, our unit of observation is at the subject level. For the main analyses in this paper, we pool data from the Game 0 and Game 1 treatments.Footnote 21 For robustness, we show in Appendix D.1 that the main conclusions do not change when we consider the Game 1 treatments only.

2.3.1 The dictator game as a proxy for an individual’s type

We conjecture in Sect. 2.2 that subjects’ behavior in the dictator game is a proxy for their type. That is, subjects who transfer more of their endowment to their matched partner in the dictator game are more likely to choose

![]() when they are in the role of the DM. As our testable hypotheses depend on this relationship between the DM’s type and their effort choice, we first examine if it holds.

when they are in the role of the DM. As our testable hypotheses depend on this relationship between the DM’s type and their effort choice, we first examine if it holds.

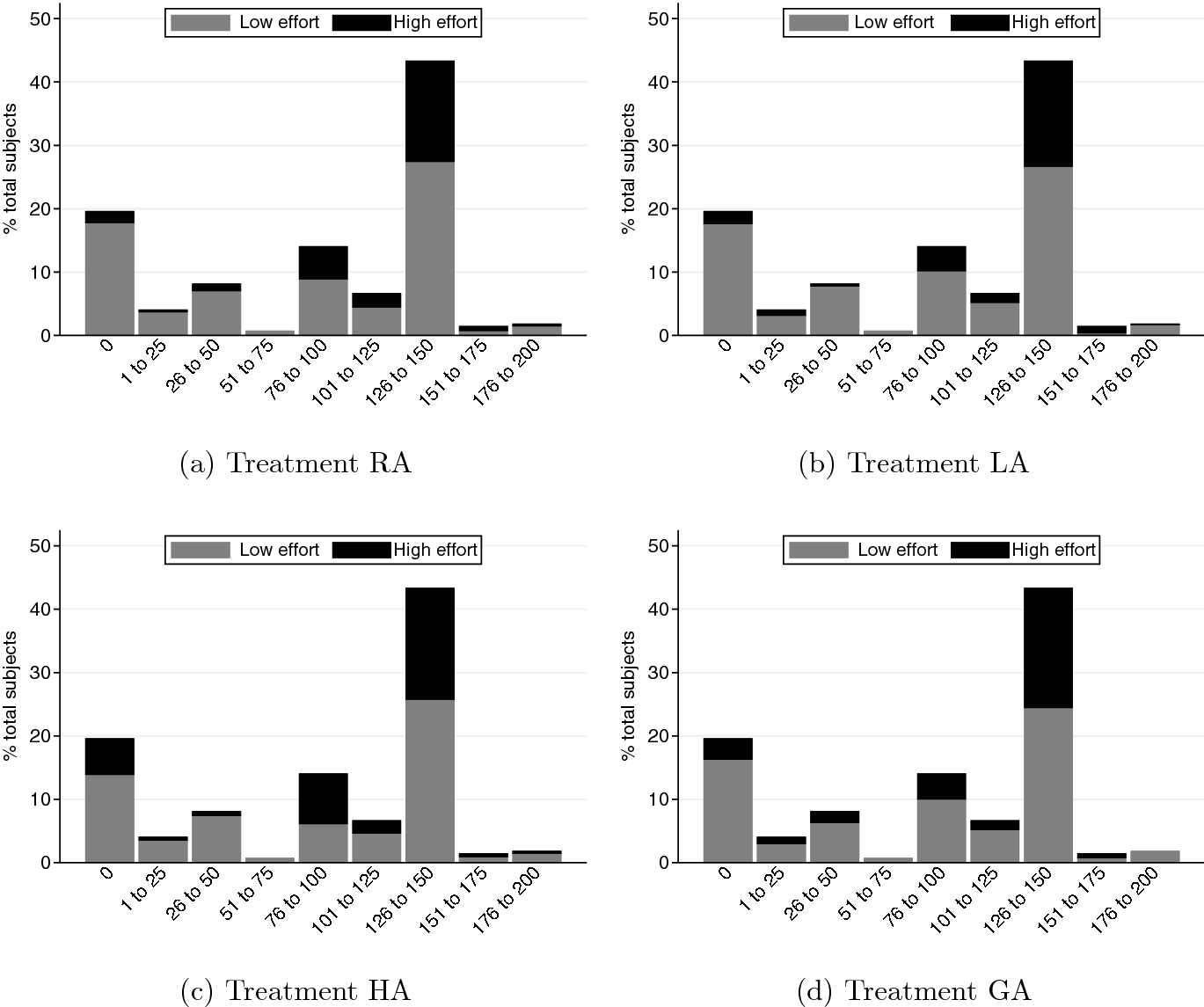

Figure 3 presents the distribution of subjects’ decisions in the dictator game against their effort choices across different appointment mechanisms. Because the subjects only participate in the dictator game once, the distribution of transfers are the same across the different treatments. Within each panel in Fig. 3, the black bars represent the proportion of DMs who choose high effort (

![]() ) while the gray bars represent the DMs who choose low effort (

) while the gray bars represent the DMs who choose low effort (

![]() ).

).

Fig. 3 DM’s effort choice in Game 1 against dictator game behavior

A clear pattern that emerges is that DMs who are more prosocial in the dictator game are also the ones who are more likely to choose the investment option that is in the interest of the group (i.e., high effort). This pattern is consistent across the different appointment mechanisms.Footnote 22 The correlation between the DM’s behavior in the dictator game and their effort choice are statistically significantly positive in all treatments. The Spearman’s rank correlation coefficients and corresponding p values are: (i) RA: 0.233, p value < 0.001; (ii) LA: 0.262, p value < 0.001; (iii) HA: 0.096, p value = 0.025; and (iv) GA: 0.183, p value = 0.003.

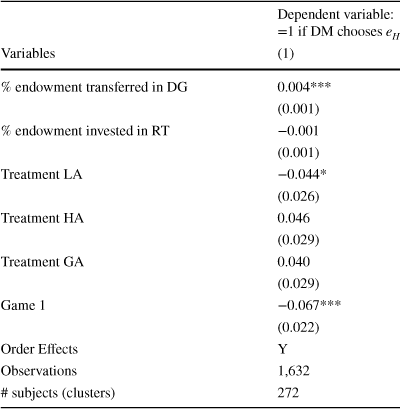

Table 2 presents marginal-effects estimates from a probit model for the relationship between the subjects’ decisions as DMs in the investment task and their dictator game behavior. In the regression analysis, we control for order effects, the subjects’ behavior in the risk task, the appointment mechanisms, and Game 1. We find a statistically significant and positive relationship between the DM’s decision in the dictator game and their decision to choose high effort in the investment task (p value < 0.001). A DM who transfers 1% more of their endowment to their matched partner in the dictator game is 0.4% more likely to choose

![]() in the investment task on average. In addition, consistent with our expectations about the DM’s behavior between the Game 0 and Game 1 treatments, we observe that subjects are 6.7% less likely to choose

in the investment task on average. In addition, consistent with our expectations about the DM’s behavior between the Game 0 and Game 1 treatments, we observe that subjects are 6.7% less likely to choose

![]() in Game 1 on average, and this effect is statistically significant (p value = 0.003).Footnote 23

in Game 1 on average, and this effect is statistically significant (p value = 0.003).Footnote 23

Table 2 Regression of DM’s effort choice

|

Dependent variable: =1 if DM chooses

|

|

|---|---|

|

Variables |

(1) |

|

% endowment transferred in DG |

0.004*** |

|

(0.001) |

|

|

% endowment invested in RT |

−0.001 |

|

(0.001) |

|

|

Treatment LA |

−0.044* |

|

(0.026) |

|

|

Treatment HA |

0.046 |

|

(0.029) |

|

|

Treatment GA |

0.040 |

|

(0.029) |

|

|

Game 1 |

−0.067*** |

|

(0.022) |

|

|

Order Effects |

Y |

|

Observations |

1,632 |

|

# subjects (clusters) |

272 |

Marginal effects of probit model reported. Robust standard errors in parentheses. Standard errors are clustered at the subject level.

DG: Dictator Game; RT: Risk Task.

*** p<0.01, ** p<0.05, * p<0.10.

Fig. 4 Preferences for DM under Treatment GA

The established link between dictator game behavior and effort choices implies that subjects’ preferences in treatment GA should be for the highest type to be appointed as the DM. Figure 4 presents the subjects’ preferences for their DM’s type under treatment GA (panel a) and their beliefs about the preferences of the other group members (panel b). The majority of the subjects (77.6%) prefer to have the individual who made the highest transfer in the dictator game to be the DM of their group. Moreover, the majority of the subjects (76.1%) believe that the other members of their group prefer to appoint the individual who made the highest transfer as the DM.

2.3.2 Analysis of interim beliefs

We next examine the members’ interim beliefs after they observe the appointment mechanism but prior to observing the DM’s outcomes. In all of our analyses, belief is a variable that takes an integer value in [0, 100], where a higher belief implies that the member thinks the DM is more likely to have chosen high effort (

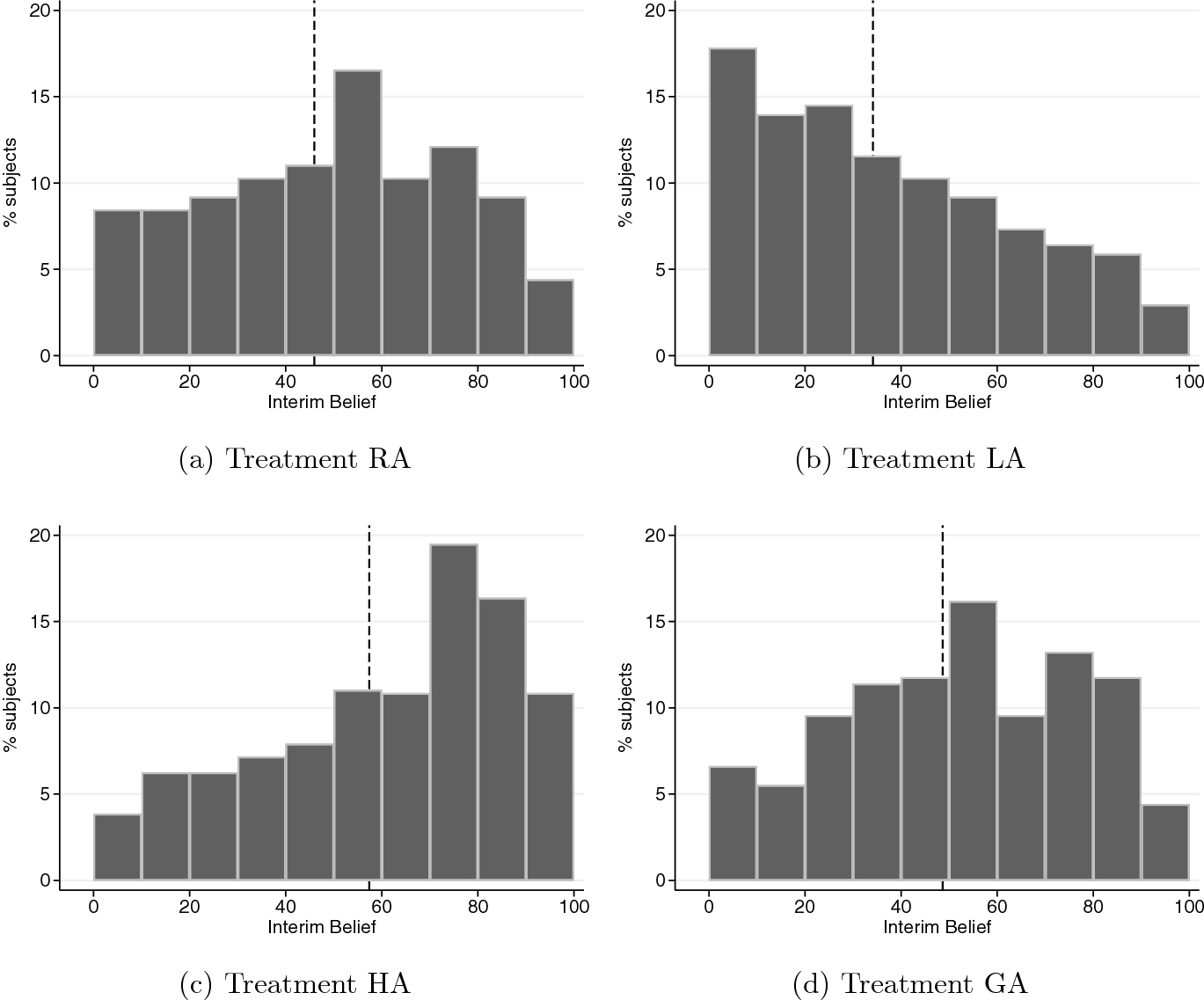

![]() ). Figure 5 presents the distributions of the members’ interim beliefs by treatment. In each panel, the dashed line represents the mean interim belief.

). Figure 5 presents the distributions of the members’ interim beliefs by treatment. In each panel, the dashed line represents the mean interim belief.

Fig. 5 Distributions of group members’ interim beliefs

The histograms in Fig 5 suggest that group members respond to the mechanism used to appoint the DM, as stated in Hypothesis Footnote 1. In treatment RA, the DM is randomly assigned and the members’ beliefs are approximately centered on 50%, with a mean of 45.94% (panel a). In contrast, the distribution of interim beliefs is highly skewed to the right in treatment LA with a mean of 34.15% (panel b), and to the left in treatment HA with a mean of 57.40% (panel c). When the DM is appointed based on the preferences of the group in treatment GA (panel d), the distribution of interim beliefs shifts slightly to the right relative to that in treatment RA, and the average interim belief increases to 48.65% which is lower than that in treatment HA.Footnote 24

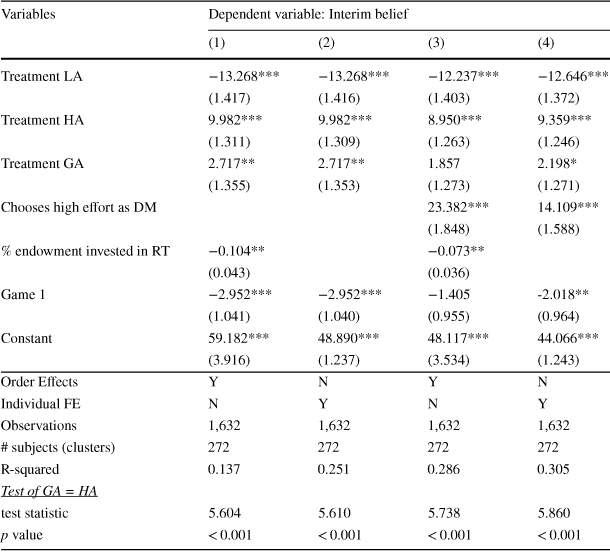

Table 3 presents OLS estimates for the regressions of interim beliefs against treatment variables, controlling for Game 1, order effects (in columns 1 and 3), and individual fixed effects (in columns 2 and 4). In all the specifications, treatment RA is the comparison group. The last row presents the results of a Wald test of equality between treatments GA and HA. The coefficient estimates in columns (1) and (2) support our conclusions from the non-parametric analysis. We also find that the members’ beliefs are on average lower in Game 1 treatments than in Game 0 treatments. This difference is statistically significant in both columns (1) and (2) (p value = 0.005 in both columns).

Table 3 Regression of members’ interim belief

|

Variables |

Dependent variable: Interim belief |

|||

|---|---|---|---|---|

|

(1) |

(2) |

(3) |

(4) |

|

|

Treatment LA |

−13.268*** |

−13.268*** |

−12.237*** |

−12.646*** |

|

(1.417) |

(1.416) |

(1.403) |

(1.372) |

|

|

Treatment HA |

9.982*** |

9.982*** |

8.950*** |

9.359*** |

|

(1.311) |

(1.309) |

(1.263) |

(1.246) |

|

|

Treatment GA |

2.717** |

2.717** |

1.857 |

2.198* |

|

(1.355) |

(1.353) |

(1.273) |

(1.271) |

|

|

Chooses high effort as DM |

23.382*** |

14.109*** |

||

|

(1.848) |

(1.588) |

|||

|

% endowment invested in RT |

−0.104** |

−0.073** |

||

|

(0.043) |

(0.036) |

|||

|

Game 1 |

−2.952*** |

−2.952*** |

−1.405 |

-2.018** |

|

(1.041) |

(1.040) |

(0.955) |

(0.964) |

|

|

Constant |

59.182*** |

48.890*** |

48.117*** |

44.066*** |

|

(3.916) |

(1.237) |

(3.534) |

(1.243) |

|

|

Order Effects |

Y |

N |

Y |

N |

|

Individual FE |

N |

Y |

N |

Y |

|

Observations |

1,632 |

1,632 |

1,632 |

1,632 |

|

# subjects (clusters) |

272 |

272 |

272 |

272 |

|

R-squared |

0.137 |

0.251 |

0.286 |

0.305 |

|

Test of GA = HA |

||||

|

test statistic |

5.604 |

5.610 |

5.738 |

5.860 |

|

p value |

|

|

|

|

Robust standard errors clustered at the subject level in parentheses. For all regressions, treatment RA is the reference treatment.

RT: Risk Task.

*** p<0.01, ** p<0.05, * p<0.10.

In columns (3) and (4), we control for the subjects’ own decision as a DM. A subject who chooses to exert high effort when placed in the position of the DM under a specific appointment mechanism is also more likely, as a group member, to expect the DM to choose high effort under the same appointment mechanism. This effect is statistically significant (p value < 0.001 in both columns).Footnote 25 The treatment effects remain similar in both direction and magnitude after controlling for the consensus effect, although the estimates for treatment GA are now statistically insignificant in column (3) and marginally statistically significant in column (4) (p values = 0.146 and 0.085, respectively).

We summarize our results in support for Hypothesis Footnote 1 as follows:

Result 1

Group members respond to the appointment mechanism in their interim beliefs. The interim beliefs are the lowest in treatment LA and the highest in treatment HA. The interim beliefs in treatment RA are lower than those in treatment GA.

2.3.3 Analysis of posterior beliefs

Estimation strategy for posterior beliefs. To test Hypothesis Footnote 2 and analyze updating behavior, we estimate the following equation:

where

![]() and

and

![]() captures non-systematic errors. This specification allows us to determine the weights members place on their interim beliefs and the signals they receive.Footnote 26 Note that

captures non-systematic errors. This specification allows us to determine the weights members place on their interim beliefs and the signals they receive.Footnote 26 Note that

![]() corresponds to the Bayesian benchmark. Hypothesis Footnote 2 states that

corresponds to the Bayesian benchmark. Hypothesis Footnote 2 states that

![]() for each appointment mechanism.

for each appointment mechanism.

Any deviation in the parameters from 1 is interpreted as non-Bayesian updating behavior. Appendix E provides detailed explanations of the interpretations of these parameters. First,

![]() captures the weight that group members place on their interim belief in the updating process. If

captures the weight that group members place on their interim belief in the updating process. If

![]() (

(

![]() ), then members suffer from base-rate neglect (confirmatory bias) in that they place too little (too much) weight on their interim belief.

), then members suffer from base-rate neglect (confirmatory bias) in that they place too little (too much) weight on their interim belief.

Next,

![]() and

and

![]() capture the extent to which members respond to signals of good outcome and bad outcome from the DM, respectively.

capture the extent to which members respond to signals of good outcome and bad outcome from the DM, respectively.

![]() or

or

![]() implies that members are, on average, over-responsive to a good or a bad signal, respectively, relative to a Bayesian. Specifically, biased members attribute the corresponding outcome more to the DM’s decision as compared to unbiased Bayesian members. On the other hand,

implies that members are, on average, over-responsive to a good or a bad signal, respectively, relative to a Bayesian. Specifically, biased members attribute the corresponding outcome more to the DM’s decision as compared to unbiased Bayesian members. On the other hand,

![]() or

or

![]() implies that members are conservative in their response to a good or a bad signal, respectively, and attribute the corresponding outcome more to the DM’s luck as compared to unbiased Bayesian members.Footnote 27

implies that members are conservative in their response to a good or a bad signal, respectively, and attribute the corresponding outcome more to the DM’s luck as compared to unbiased Bayesian members.Footnote 27

Finally, we can also capture asymmetric updating of beliefs, i.e., asymmetric attribution of outcomes to the DM’s decision and luck. If

![]() (

(

![]() ), then members are more likely to attribute a good (bad) outcome to the DM’s decision and a bad (good) outcome to luck.

), then members are more likely to attribute a good (bad) outcome to the DM’s decision and a bad (good) outcome to luck.

Estimating deviations from Bayes’ rule. We now estimate Eq. (2) using ordinary least squares (OLS) to analyze the biases that members suffer from when updating their beliefs.Footnote 28 Figure D1 of Appendix D.3 shows the distribution of subjects who update their beliefs inconsistently (i.e., in the opposite direction to that predicted by Bayes’ rule) or not at all. The inclusion of these observations in the analysis may result in biased or incorrect conclusions, particularly if these subjects are reporting beliefs that do not genuinely reflect their true posterior beliefs. Hence, for the remainder of the analysis, we exclude a subject if 25% or more of their posterior beliefs are inconsistent (44 out of 272 subjects in total) or if they report a posterior belief equal to the interim belief across all six rounds of the experiment (23 subjects in total). These two groups jointly constitute 24.6% of the sample. Note that these numbers are largely in line with what is found in the literature (see, e.g.,Möbius et al. Reference Möbius, Niederle, Niehaus and Rosenblat2014; Coutts Reference Coutts2019; Barron Reference Barron2020).Footnote 29

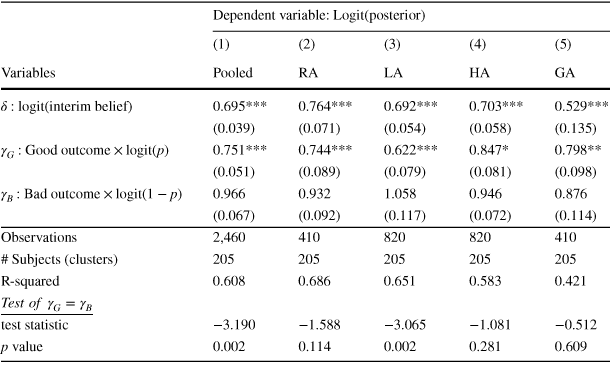

Table 4 presents the regression results of members both at the pooled level (column 1) and at the treatment level (columns 2 to 5). As a test of Hypothesis Footnote 2, our primary interest is to examine whether the coefficients are different from 1. Hence, asterisks are used in the table to indicate whether a coefficient is statistically significantly different from 1.

Table 4 Regression of members’ posterior beliefs

|

Dependent variable:

|

|||||

|---|---|---|---|---|---|

|

(1) |

(2) |

(3) |

(4) |

(5) |

|

|

Variables |

Pooled |

RA |

LA |

HA |

GA |

|

|

0.695*** |

0.764*** |

0.692*** |

0.703*** |

0.529*** |

|

(0.039) |

(0.071) |

(0.054) |

(0.058) |

(0.135) |

|

|

|

0.751*** |

0.744*** |

0.622*** |

0.847* |

0.798** |

|

(0.051) |

(0.089) |

(0.079) |

(0.081) |

(0.098) |

|

|

|

0.966 |

0.932 |

1.058 |

0.946 |

0.876 |

|

(0.067) |

(0.092) |

(0.117) |

(0.072) |

(0.114) |

|

|

Observations |

2,460 |

410 |

820 |

820 |

410 |

|

# Subjects (clusters) |

205 |

205 |

205 |

205 |

205 |

|

R-squared |

0.608 |

0.686 |

0.651 |

0.583 |

0.421 |

|

|

|||||

|

test statistic |

−3.190 |

−1.588 |

−3.065 |

−1.081 |

−0.512 |

|

p value |

0.002 |

0.114 |

0.002 |

0.281 |

0.609 |

Robust standard errors clustered at the subject level in parentheses. This analysis excludes subjects classified as inconsistent or non-updaters.

*** p < 0.01, ** p < 0.05, * p < 0.10. Null hypothesis is coefficient = 1.

Column (1) shows that group members are biased in their belief-updating process. The estimate for

![]() suggests that they suffer from base-rate neglect on average (test of

suggests that they suffer from base-rate neglect on average (test of

![]() : p value < 0.001).Footnote 30 The estimate for

: p value < 0.001).Footnote 30 The estimate for

![]() suggests that after controlling for the weight members place on their interim beliefs, members are conservative in their responses to good outcomes. That is, they attribute good outcomes to luck more than a Bayesian would and this effect is statistically significant (test of

suggests that after controlling for the weight members place on their interim beliefs, members are conservative in their responses to good outcomes. That is, they attribute good outcomes to luck more than a Bayesian would and this effect is statistically significant (test of

![]() : p value < 0.001). However, there is no statistically significant evidence that members respond to bad outcomes differently from a Bayesian (test of

: p value < 0.001). However, there is no statistically significant evidence that members respond to bad outcomes differently from a Bayesian (test of

![]() : p value = 0.608). Hence, relative to the Bayesian benchmark, group members give too little credit for the DM’s success but the right amount of blame for the DM’s failure.

: p value = 0.608). Hence, relative to the Bayesian benchmark, group members give too little credit for the DM’s success but the right amount of blame for the DM’s failure.

The last two rows of Table 4 present the results of a Wald test of equality between

![]() and

and

![]() , giving us a test of the presence of an asymmetric attribution bias. Overall, members update their beliefs about the DM asymmetrically (i.e.,

, giving us a test of the presence of an asymmetric attribution bias. Overall, members update their beliefs about the DM asymmetrically (i.e.,

![]() ). They tend to attribute good outcomes more to luck and, relatively, bad outcomes more to the DM’s decision. This effect is statistically significant (p value = 0.002).Footnote 31

). They tend to attribute good outcomes more to luck and, relatively, bad outcomes more to the DM’s decision. This effect is statistically significant (p value = 0.002).Footnote 31

We next analyze the members’ updating behavior across the different appointment mechanisms. The coefficient estimates in columns (2)–(5) of Table 4 reveal that biases similar to the ones observed at the pooled level exist at the treatment level. Under each appointment mechanism, members consistently suffer from base-rate neglect, attribute good outcomes more to luck, and treat bad outcomes no differently from a Bayesian.Footnote 32,

Footnote 33 The asymmetry observed in the attribution of outcomes is statistically significant in treatment LA only (Wald tests of

![]() : p values = 0.002, 0.114, 0.281 and 0.609, respectively, for treatments LA, RA, HA and GA).Footnote 34

: p values = 0.002, 0.114, 0.281 and 0.609, respectively, for treatments LA, RA, HA and GA).Footnote 34

In summary, we do not find support for Hypothesis Footnote 2. Members are not Bayesian when updating their beliefs after observing their DM’s outcomes.

Result 2

On average, group members exhibit similar biases in their updating behavior under all the appointment mechanisms. They suffer from base-rate neglect in their updating behavior. Compared to the Bayesian benchmark, members attribute good outcomes more to luck, but their average response to bad outcomes is not different from Bayesian.

We next consider the relationship between subjects’ effort choices (which are determined by their types) and their updating behavior. The results in Sect. 2.3.2 reveal that subjects’ effort choices are correlated with their interim beliefs. Our aim here is to test whether subjects who exert high effort as DMs update their beliefs differently to those who exert low effort after controlling for their interim beliefs.

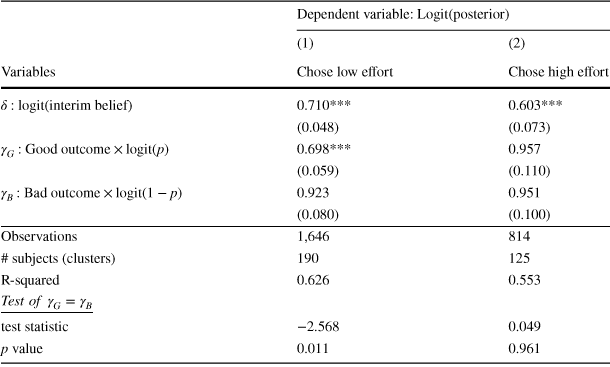

Table 5 reports separate parameter estimates of Eq. (2) based on whether the subjects have chosen low effort (column 1) or high effort (column 2) as DMs within a given round in the investment task. The estimates of

![]() and

and

![]() are not statistically significantly different between columns (1) and (2) (p values = 0.222 and 0.818, respectively). However, the estimate of

are not statistically significantly different between columns (1) and (2) (p values = 0.222 and 0.818, respectively). However, the estimate of

![]() is statistically significantly different between the two columns (p value = 0.035). While the estimate for

is statistically significantly different between the two columns (p value = 0.035). While the estimate for

![]() is statistically significantly less than 1 in column (1) (p value < 0.001), it is not different from 1 in column (2) (p value = 0.697). Hence, regardless of their effort choices as DMs in a given round of the task, subjects suffer from base-rate neglect (

is statistically significantly less than 1 in column (1) (p value < 0.001), it is not different from 1 in column (2) (p value = 0.697). Hence, regardless of their effort choices as DMs in a given round of the task, subjects suffer from base-rate neglect (

![]() ) and are no different from a Bayesian in their response to bad outcomes (

) and are no different from a Bayesian in their response to bad outcomes (

![]() ) on average. However, in a given round of the investment task, those individuals who choose low effort as DMs are more likely to attribute good outcomes to luck when they make decisions as group members.Footnote 35 This suggests that the consensus effect that we find to be driving members’ interim beliefs also appears to be shaping their updating behavior.

) on average. However, in a given round of the investment task, those individuals who choose low effort as DMs are more likely to attribute good outcomes to luck when they make decisions as group members.Footnote 35 This suggests that the consensus effect that we find to be driving members’ interim beliefs also appears to be shaping their updating behavior.

Table 5 Regression of members’ posterior beliefs based on effort choice as DMs

|

Dependent variable:

|

||

|---|---|---|

|

(1) |

(2) |

|

|

Variables |

Chose low effort |

Chose high effort |

|

|

0.710*** |

0.603*** |

|

(0.048) |

(0.073) |

|

|

|

0.698*** |

0.957 |

|

(0.059) |

(0.110) |

|

|

|

0.923 |

0.951 |

|

(0.080) |

(0.100) |

|

|

Observations |

1,646 |

814 |

|

# subjects (clusters) |

190 |

125 |

|

R-squared |

0.626 |

0.553 |

|

|

||

|

test statistic |

−2.568 |

0.049 |

|

p value |

0.011 |

0.961 |

Robust standard errors clustered at the subject level in parentheses. This analysis excludes subjects classified as inconsistent or non-updaters.

*** p < 0.01, ** p < 0.05, * p < 0.10. Null hypothesis is coefficient = 1.

3 Experiment 2

3.1 Motivation and design

The results from Experiment 1 suggest that subjects use their own behavior as the basis for forming and updating their beliefs about others. We design Experiment 2 to investigate this further.

Experiment 2 consists of two treatments. In treatment S (single role), subjects are informed at the beginning of the experiment whether they have been assigned as the DM or as a group member. They make incentivized decisions only in their assigned roles. Specifically, they are asked to make their investment decisions (only if they are the DM) or to report their beliefs about the DM’s decisions (only if they are the member). After reporting their beliefs at the end of each round, members are asked to indicate, hypothetically, what their investment decision would have been if they were the DM. This structure allows us to examine the relationship between individuals’ effort choices as DMs and their beliefs as members, while mitigating the impact that experience may have on their beliefs.

The key question we ask in Experiment 2 is whether we continue to observe the consensus effect in members’ attribution of outcomes in treatment S. Given the well-documented evidence on the consensus effect in driving behavior (e.g., Ross et al., Reference Ross, Greene and House1977; Marks & Miller, Reference Marks and Miller1987; Engelmann & Strobel, Reference Engelmann and Strobel2000, Reference Engelmann and Strobel2012), we expect the relationship between subjects’ behavior and their attribution of outcomes to persist even if they do not make choices as decision makers prior to stating their beliefs.Footnote 36 Consequently, we expect to observe similar biases in the overall attribution of outcomes when decisions are no longer elicited using the strategy method.Footnote 37

The second treatment that we implement in Experiment 2 is treatment D (dual role), where subjects make decisions both in the roles of the DM and a group member (as in Experiment 1). Treatment D is designed to provide a comparable benchmark against which to evaluate subjects’ behavior in treatment S. This is important given the differences in format between Experiment 1 and Experiment 2, which we outline below. A total of 297 subjects (99 DMs and 198 group members) participated in treatment S, and 206 subjects (making decisions in both roles) participated in treatment D.

Unlike Experiment 1, which was conducted in a physical laboratory, the sessions for Experiment 2 were conducted online during a lockdown amid the COVID-19 pandemic.Footnote 38 Subjects were recruited from a similar subject pool at the University of Melbourne, with between 18 and 24 subjects in each virtual session.Footnote 39 The experiments were programmed using oTree (Chen et al., Reference Chen, Schonger and Wickens2016). For each session, all the subjects were admitted into a Zoom meeting with their videos and microphones turned off. They were provided with separate links for the instructions to each part of the experiment, and the experimenter read out the instructions in the Zoom meeting. Each session lasted about 60 minutes on average.

The implementation of Experiment 2 was different on two other dimensions to accommodate the different and shorter nature of the online sessions. First, since we do not find any significant evidence in Experiment 1 that members’ updating behavior depends on the appointment mechanism (Result Footnote 2), we removed this treatment variation by using only the random-appointment mechanism (RA) to determine the DM of each group. Second, each group participated in only three rounds of the investment task (instead of six), remaining in the same group and role for all three rounds. In two of the rounds, we used the same parameters as in Game 0 and Game 1 from Experiment 1. We introduced Game 2 as the third set of parameters. Relative to Game 1, both investments in Game 2 provide a higher return of 900 ECU if they succeed and the same low return of 150 ECU if they fail.Footnote 40 We randomized the order of these parameters across the groups within each session.

3.2 Results

Table D9 in Appendix D.5 reveals that there are significant differences in the subject pools between Experiment 1 and Experiment 2. Subjects in Experiment 2 are on average slightly older, less likely to be Australian or majoring in economics, more likely to be a postgraduate student, and more experienced with economics experiments. However, with respect to the behavioral variables, the subjects do not differ in their decisions in the dictator game or risk task between Experiment 1 and Experiment 2.

We are mainly interested in analyzing members’ posterior beliefs in treatment S, to see whether the same type of biases as in Experiment 1 exist and whether we find evidence of a consensus effect.Footnote 41 To test for the consensus effect, we use the hypothetical choices that members make when they are asked what their investment decision would have been if they were the DM. Table D10 in Appendix D.5 reveals that there is a statistically significant and positive relationship between members’ hypothetical choices as DMs and their incentivized decisions in the dictator game. Hence, the hypothetical answers given by the members seem to be indicative of their underlying preferences.

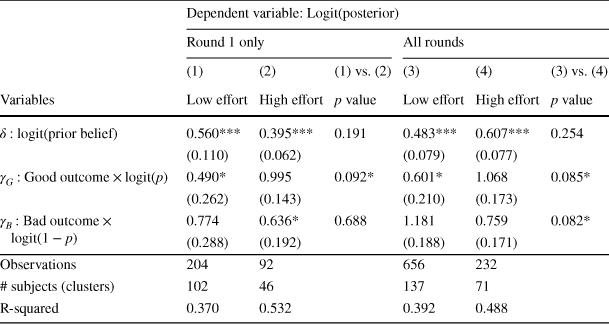

We start by analyzing the posterior beliefs elicited in Round 1 of treatment S, which provides the cleanest examination of members’ biases absent any experience in the decision-making process as DMs.Footnote 42 Columns (1) and (2) of Table 6 present parameter estimates of Eq. (2) based on whether members indicate, hypothetically, that they would have chosen low effort or high effort, respectively, if they were the DM of the group. Columns (3) and (4) report parameter estimates including members’ belief updates in all rounds of the investment task. In the table, we also present p values of pairwise comparisons of parameter estimates between columns (1) and (2), and between columns (3) and (4).

Table 6 Regression of members’ posterior beliefs based on hypothetical effort choice as DMs (treatment S)

|

Dependent variable:

|

||||||

|---|---|---|---|---|---|---|

|

Round 1 only |

All rounds |

|||||

|

(1) |

(2) |

(1) vs. (2) |

(3) |

(4) |

(3) vs. (4) |

|

|

Variables |

Low effort |

High effort |

p value |

Low effort |

High effort |

p value |

|

|

0.560*** |

0.395*** |

0.191 |

0.483*** |

0.607*** |

0.254 |

|

(0.110) |

(0.062) |

(0.079) |

(0.077) |

|||

|

|

0.490* |

0.995 |

0.092* |

0.601* |

1.068 |

0.085* |

|

(0.262) |

(0.143) |

(0.210) |

(0.173) |

|||

|

|

0.774 |

0.636* |

0.688 |

1.181 |

0.759 |

0.082* |

|

(0.288) |

(0.192) |

(0.188) |

(0.171) |

|||

|

Observations |

204 |

92 |

656 |

232 |

||

|

# subjects (clusters) |

102 |

46 |

137 |

71 |

||

|

R-squared |

0.370 |

0.532 |

0.392 |

0.488 |

||

Robust standard errors clustered at the subject level in parentheses. This analysis excludes subjects classified as inconsistent or non-updaters.

All columns control for members’ hypothetical effort choices in treatment S. Columns (1) and (2) restrict the analysis to the first round of updates only.

***p < 0.01, ** p < 0.05, * p < 0.10. Null hypothesis is coefficient = 1.

Columns (1) and (2) reveal that members who would have chosen low effort as DMs are more likely to attribute the DM’s good outcomes to luck relative to a Bayesian (p value = 0.054), while those who would have chosen high effort are no different from a Bayesian in their attribution of good outcomes (p value = 0.972). Overall, members who would have chosen low effort are more likely to attribute good outcomes to luck than those who would have chosen high effort (p value = 0.092).Footnote 43 These findings are consistent with those from Experiment 1 (Table 5). Interestingly, we now find that members who would have chosen high effort as DMs are more likely to attribute bad outcomes to luck relative to a Bayesian (p value = 0.064). However, there is no statistically significant difference in the attribution of bad outcomes between members who would have chosen high effort and those who would have chosen low effort (p value = 0.688).

Columns (3) and (4) of Table 6 reveal that, when we consider all rounds of the task, members who would have chosen low effort as DMs attribute good outcomes more to luck both relative to a Bayesian (p value = 0.060) and relative to those who would have chosen high effort as DMs (p value = 0.085). These results are consistent with those observed in columns (1) and (2), as well as Experiment 1. Moreover, we now find that members who would have chosen high effort as DMs are more likely to attribute bad outcomes to luck as compared to members who would have chosen low effort as DMs (p value = 0.082).Footnote 44

Finally, we compare members’ updating behavior across treatment S, treatment D, and Experiment 1. Tables D11 and D12 in Appendix D.5 present comparisons of members’ updating behavior between treatments S and D, separately by members’ effort choices and at the pooled level, respectively. The last column of Table D12 also provides p values from tests of differences in parameter estimates between Experiment 1 and Experiment 2. The tables provide two main insights. First, we do not find any systematic differences between treatments S and D in members’ attribution of outcomes, both when we compare subjects separately based on their effort choices as DMs, and at the pooled level. Second, despite the differences in the experimental design, format, and subject pool, we do not find any statistically significant differences in members’ attribution of outcomes between Experiment 1 and Experiment 2.

Hence, we conclude that there is no evidence to suggest that members’ biases in their attribution of the DM’s outcomes are driven by whether or not they have experience in the decision-making process as DMs. With and without the experience of acting as a decision maker, the same attribution biases exist and seem to be driven by a consensus effect.

4 Conclusion

In many environments, the determinants of outcomes are not observable. What beliefs do individuals hold in such circumstances about the determinants of others’ outcomes? Do they attribute the outcomes to luck or to the decisions made? Do the beliefs depend on the outcome, i.e., whether the outcome is good or bad? These are the questions we address in this paper.

Our results reveal that members suffer from biases in the way they attribute outcomes to luck versus the choices made. Moreover, members treat good and bad outcomes differently in the sense that while they attribute good outcomes more to luck as compared to a Bayesian, their response to bad outcomes is no different from a Bayesian. This asymmetry implies that the credit decision makers receive for good outcomes is less than the blame they get for bad outcomes.

In Experiment 1, we find that group members exhibit similar biases under all the mechanisms used to appoint the decision maker. However, we find that biases in updating behavior tend to be driven by those subjects who choose low effort as decision makers. Interestingly, the consensus effect we detect affects both initial beliefs and updating behavior. In Experiment 2, we show that the same type of biases exist even if the subjects do not have experience as decision makers.

Determining the systematic biases that individuals may have in the way they process new information and update their beliefs about the decisions of others is critical in a wide range of economic and social interactions. One general implication of our study is that the biases we identify may affect the generosity of decision makers in environments where social preferences matter. For example, they may act less generously if they know that they will not receive sufficient credit for good outcomes. The biases may also affect decision makers’ willingness to take risk. For instance, if business or political leaders are aware that they are given relatively more blame for their failures than credit for their successes, then this may perpetuate a culture of failure avoidance. Such a ‘fear of failure’ culture may reduce their incentives to exert costly effort or their tolerance towards risk.

Our study identifies the biases which exist in the evaluation of others’ decisions specifically in contexts where prosocial preferences play a key role in decision making. In future research, it would be interesting to understand whether the same type of biases exist in other contexts. For example, if performance in a skill-based task is important for leadership, do we observe that the same type of biases emerge in evaluation? Or, if we remove the anonymity of the decision maker, to what extent do in-group versus out-group considerations affect leadership evaluation? Answering these questions would broaden our understanding of biases in performance evaluation.