INTRODUCTION

Many political decisions happen in a context in which individuals face incentives to acquire information and use it to make an inference about a future event. For example, voters have an incentive to select the candidate that best represents their interests and desired policies, which might be affected by knowledge about foreign trade, immigration, or international conflict. Voters would therefore have an incentive to acquire information about these topics and use it to help determine which candidate to support. In this simple example, which occurs regularly in politics, there are incentives for accurate knowledge of the world, and yet few experiments have investigated how incentives affect political knowledge. If incentives matter in these situations and we fail to study their effects, then we may misunderstand behavior in many political contexts.

Prior research about political knowledge and behavior demonstrates that incentives can systematically affect people's knowledge about domestic politics (Krupnikov et al. 2006; Prior and Lupia 2008). We extend research about incentives and knowledge by examining how uncertainty in incentives affects knowledge. To do so, we examine knowledge questions for which the outcomes have not yet occurred; answering these questions correctly therefore requires a combination of information about the current state of the world and the ability to infer how things will change 5 weeks into the future. We show that incentives increase respondents' effort and accuracy in answering knowledge questions, and that subjects behave similarly whether the incentives are guaranteed or uncertain.

This paper contributes to the methodological literature focused on experimental design (Morton and Williams 2010). Good experimental design captures the essential elements of the theory being tested, and if the underlying theory involves individuals having an incentive for accurate knowledge or information acquisition, then our experiments need to include similar incentives. In their absence, an experiment will not be a good match to the behavior being studied.

INCENTIVES, EFFORT, AND KNOWLEDGE ACCURACY

In this paper, we examine the ability of voters to identify the correct answer to a question where the outcome will not be known until a future, but not very distant, date. The ability to predict the outcome of an event in which initial conditions are knowable but there is some uncertainty about the future is a common, politically relevant task (Lupia and McCubbins 1998). Research demonstrates that incentives for accuracy improve the ability of people to correctly answer political knowledge questions (Feldman, Huddy, and Marcus 2015; Prior and Lupia 2008), and financial incentives encourage subjects to update their beliefs about political facts (Hill 2017).

We contribute to the literature on incentives and knowledge by studying both certain and uncertain incentives. Research has generally used incentives where the payoffs for correct knowledge occur with certainty; however, Hill (2017) does examine how probabilistic incentives affect learning. In many political contexts, incentives are uncertain and therefore understanding their effects is important to learning about behavior. For example, acquiring accurate knowledge may not change one's vote with certainty, and even if it does affect a vote, the election outcome and its benefits are uncertain. In either case, the benefit of correct knowledge is uncertain and this uncertainty may affect behavior.Footnote 1 As elaborated below, we expect both certain and uncertain incentives to improve knowledge accuracy because they both affect the expected benefits of knowledge, which should lead to more correct answers.

Prior research has also focused on situations in which the correct answer to a question can be identified relatively easily online or in a book. However, the questions we use in our experiment (described in the next section) ask respondents to identify the right answer to a question when the outcome will not be known for about 5 weeks. Furthermore, we focus on knowledge in the realm of foreign affairs. Prior research suggests that while it is generally difficult to identify future outcomes in international affairs (Tetlock 1998, 1999, 2006), some people appear able to make accurate predictions (Mellers et al. 2015; Tetlock 1992, 1998; Tetlock and Gardner 2016). We do not examine individual-level correlates of prediction ability; instead, we focus on whether incentives for accuracy improve people's ability to make accurate judgments about future outcomes.

In many ways, this is a hard test of responses to financial incentives, given that people's knowledge of and interest in international affairs typically lag behind their knowledge of and interest in domestic politics (Converse 1964; Delli Carpini and Keeter 1996; Holsti 1992; Kinder and Sears 1985; Lupia 2015). However, foreign policies can have significant effects on people's lives and well-being. For example, military conflict, international agreements, international trade policies, immigration policies, membership in international organizations, and economic integration have widespread implications for the economic prosperity and security of domestic populations. The importance of international affairs was highlighted in the 2016 US Presidential election, in which immigration and globalization played a significant role in the campaign.

Our basic model of how incentives affect knowledge accuracy is displayed in Figure 1. In the rest of this section, we elaborate on both the direct path by which incentives improve accuracy and the indirect path in which increased effort leads to an increase in knowledge accuracy.

Figure 1 A Theory of Incentives, Effort, and Knowledge Accuracy.

Effect of Incentives on Effort

Answering questions correctly requires that respondents pay attention to the task and expend cognitive effort to acquire and use knowledge in making predictions about future events (Lupia and McCubbins 1998). Individuals will be more likely to incur these costs if there are benefits for doing so, and we expect individuals to expend greater effort to make accurate judgments when the expected benefit of effort increases.

Hypothesis 1 Incentives and Effort Hypothesis.

If there are incentives for accuracy, then individuals are more likely to expend effort than in the absence of incentives.

In our experiment, we provide a financial incentive for correct answers, but outside of the experimental context incentives could be any factors that increase the value of accurate knowledge.

Effect of Incentives on Knowledge Accuracy

Prior studies show that people respond to financial incentives with improved political knowledge (Prior and Lupia 2008); people update their beliefs in response to incentives, even if they are not perfect Bayesians (Hill 2017); and incentives for accuracy reduce partisan bias (Prior, Sood, and Khanna 2015). We expect that incentives lead to improved knowledge accuracy through both the direct effect of incentives and an indirect effect of greater effort. This leads to the second set of hypotheses:

Hypothesis 2 Incentives and Knowledge Hypothesis.

If there are incentives for accuracy, then individuals are more likely to correctly answer questions than if such incentives are absent.

Hypothesis 3 Incentives and Knowledge Mediation Hypothesis.

If there are incentives for accuracy, then individuals are more likely to expend effort than when there is no incentive for accuracy, and this effort will lead to improved accuracy.

Incentives should cause participants to engage in greater effort and therefore improve knowledge accuracy compared to participants in the control condition.

EXPERIMENTAL DESIGN

We recruited 1,016 subjects using Amazon MTurk for the experiment, which we designed to isolate the effects of incentives on effort and accuracy of answers to political knowledge questions. Previous research suggests that the MTurk platform produces acceptable samples for social science research (Berinsky, Huber, and Lenz 2012; Casler, Bickel, and Hackett 2013; Hauser and Schwarz 2016; Huff and Tingley 2015; Levay, Freese, and Druckman 2016; Mullinix et al. 2015).Footnote 2 We expect our theory about incentives, effort, and knowledge accuracy to apply to all people, and therefore there is no reason to expect MTurk respondents to be theoretically inappropriate for our purposes.

An additional advantage of MTurk is that we know subjects are using an internet-connected device and can search for information; the platform also records how long respondents take to complete the task, which we use as a proxy measure for effort. Appendix C in the Supplemental Material presents descriptive statistics of the sample.

After being recruited via MTurk, but prior to treatment assignment, participants received background information about the experiment and answered a series of political information questions. We used 10 political information questions that covered a mix of US domestic issues and foreign affairs; the questions are displayed in Section 1.2.2 in Appendix B in the Supplemental Material. After answering these questions, respondents were randomly assigned to the control group or one of the three treatment groups.

In Table 1, we report the average number of correct answers to the pre-treatment political information questions for respondents assigned to each treatment condition. As expected, the averages do not vary across treatment conditions. We measured pre-existing political information so that we can use it to contextualize the magnitude of estimated treatment effects by comparing them to the relationship between prior knowledge and the questions we use as our dependent variable.

Table 1 Descriptive Statistics of Pre-treatment Political Information, by Treatment

The control group received no incentives for accurate answers. We used three different incentive schemes in the experiment and held constant the expected value of a right answer to an outcome question at $0.50. We varied the benefit of a correct answer and the uncertainty associated with receiving the benefit if a question was answered correctly.

In the bonus treatment, participants received a $0.50 guaranteed payment for every correct answer. In the random bonus treatment, we randomly selected one of the five questions and paid respondents $2.50 if they got that particular question correct. In the lottery treatment, each participant earned a ticket for a $50 lottery for each correct answer, and one $50 prize was awarded for every 100 correct answers.
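The claim that all three schemes hold the expected value of a correct answer at $0.50 can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the lottery calculation assumes that prizes are drawn from the pooled tickets, so that every ticket has the same 1-in-100 chance of winning.

```python
from fractions import Fraction

N_QUESTIONS = 5  # number of outcome questions in the experiment


def bonus_ev() -> Fraction:
    # Bonus: a guaranteed $0.50 for every correct answer.
    return Fraction(1, 2)


def random_bonus_ev(n_questions: int = N_QUESTIONS,
                    prize: Fraction = Fraction(5, 2)) -> Fraction:
    # Random bonus: one question is drawn uniformly at random, and a correct
    # answer pays $2.50 only if it is the drawn question.
    return Fraction(1, n_questions) * prize


def lottery_ev(prize: Fraction = Fraction(50),
               answers_per_prize: int = 100) -> Fraction:
    # Lottery: each correct answer is one ticket, and one $50 prize is awarded
    # per 100 correct answers. Assumption: prizes are drawn from the pooled
    # tickets, so each ticket wins with probability 1/100.
    return Fraction(1, answers_per_prize) * prize


for name, ev in [("bonus", bonus_ev()),
                 ("random bonus", random_bonus_ev()),
                 ("lottery", lottery_ev())]:
    print(f"{name:>12}: ${float(ev):.2f} per correct answer")
```

Exact fractions rather than floats keep the three values strictly equal, which makes the equivalence of the schemes easy to check; only the variance of the payoff differs across treatments.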

We asked comprehension questions about the incentives immediately after exposure to the treatment but prior to our outcome questions. This helped ensure that participants understood the bonuses and their likelihood of receiving a bonus given a certain score (Kane and Barabas 2019).

To identify the effect of incentives on effort and knowledge accuracy, we examined whether respondents correctly answered five questions about outcomes that would not be officially known until about 5 weeks after respondents were asked to predict them.Footnote 3 The underlying values for all five questions changed over the 5 weeks, but because the response categories were coarse, the correct answer changed for only three questions over the time period of the study.

All questions were multiple choice, and the survey required participants to provide a response to each question before continuing. The number of response options ranged from 6 to 11, depending on the range of plausible answers to each question. Table 2 presents the full text of each question, including the number of possible responses; the complete question wording and response options are also available in Appendix B in the Supplemental Material.

Table 2 Knowledge Accuracy Questions and Correct Answers

In all of the conditions, participants were allowed and encouraged to search for information to answer the questions. Keeping this instruction constant across conditions minimizes the possibility that subjects in the treatment conditions, but not those in the control condition, would infer that we wanted them to search for information, which would confound our treatment effects with subjects' perceptions of what was expected of them.

These outcome questions require a combination of correct information and the ability to use it to answer a question about the near-term future. We refer to the ability to answer such questions as knowledge, following the distinction between information as data and knowledge as the ability to make accurate predictions (Lupia and McCubbins 1998).

Because the correct answer would not be known for 5 weeks, respondents cannot simply look up the answers, but the outcomes also do not occur as far into the future as many of the predictions used in the Good Judgment Project or other forecasting examples.

For Question 1, a participant could search for information about the number of Jihadist terrorist attacks in the USA committed by people who were US citizens or permanent residents at the time of the experiment, and this information would help them answer the question correctly. Some of the possible answers were either impossible or highly unlikely given the prior number of terrorist attacks, but a correct answer still requires making a judgment about the number of events in the 5 weeks before correct answers were determined. An accurate response may reflect a combination of information search and the ability to make an inference about how the values will change over the course of 5 weeks.Footnote 4

The short time between asking the outcome questions and paying subjects means that the correct answers to our questions will necessarily be relatively close to the state of the world when taking the survey. However, these questions are not wholly different from some used in other studies of prediction. For instance, Tetlock and Gardner (2016, pp. 125–126) discuss the following example from their work:

As the Syrian civil war raged, displacing civilians in vast numbers, the IARPA tournament asked forecasters whether the number of registered Syrian refugees reported by the United Nations Refugee Agency as of 1 April 2014 would be under 2.6 million. That question was asked in the first week of January 2014, so forecasters had to look three months in the future.

Like our questions, this one requires some information about current conditions and the ability to predict into the short-term future, which requires consideration of the trends underlying the current state of the world and projecting them forward. The questions we use are neither as easy as straightforward information questions nor as difficult to answer correctly as predictions with an 18-month time frame.

After the knowledge questions, participants were asked a series of questions relating to effort and information search.Footnote 5 The full design, including the treatments, questions, and coding of variables, is presented in Appendix B in the Supplemental Material.

We used two attention checks during the experiment to ensure that our subjects were attentive. Almost 90% of the sample answered both questions correctly.Footnote 6

The experiment was launched on October 26, 2018, and participants were paid bonuses on December 1, 2018.

RESULTS

In this section, we discuss the results of our experiment. Overall, we find that incentives increase respondent effort and improve the accuracy of answers to the questions. The effect of incentives does not appear to vary with their uncertainty.

Incentives and Effort

Incentives increase the amount of effort expended. Pooled across all treatments, incentives for accuracy increased effort, measured as time spent on the survey, from a baseline of 10.83 minutes in the control group to 11.77 minutes across all treatments, an 8.71% increase in time spent. In both the control and treatment groups, subjects were told that they could use the internet to help them answer the questions correctly, so this increase in effort cannot be attributed to differences in permission to search and instead reflects the incentives for accuracy.

Figure 2 illustrates the effect of each individual treatment on effort. The point estimates for the three treatments are statistically indistinguishable from one another, although the effect of the lottery treatment does not reach conventional levels of statistical significance (p = 0.125). These results are consistent with our expectations, showing that even small incentives increase effort. Furthermore, as expected, the increase in effort does not vary with uncertainty, consistent with the expected value of effort being equivalent across treatments.

Figure 2 Determinants of Effort. Dependent Variable: Time Spent on Survey (Minutes).

Incentives and Knowledge Accuracy

The experimental results also demonstrate that incentives for accuracy increase the number of knowledge questions answered correctly. Table 3 presents descriptive statistics for knowledge accuracy across the experimental conditions. Pooled across all treatments, incentives for accuracy increased the number of correct answers by 0.28. On average, subjects in the control condition answered fewer than one of the five knowledge questions correctly. The modal number of correct answers was zero in the control group and one in the treatment groups. Thus, although some information relevant to the right answers was available online, subjects clearly still found the questions hard to answer correctly.

Table 3 Descriptive Statistics of Predictive Accuracy, by Treatment

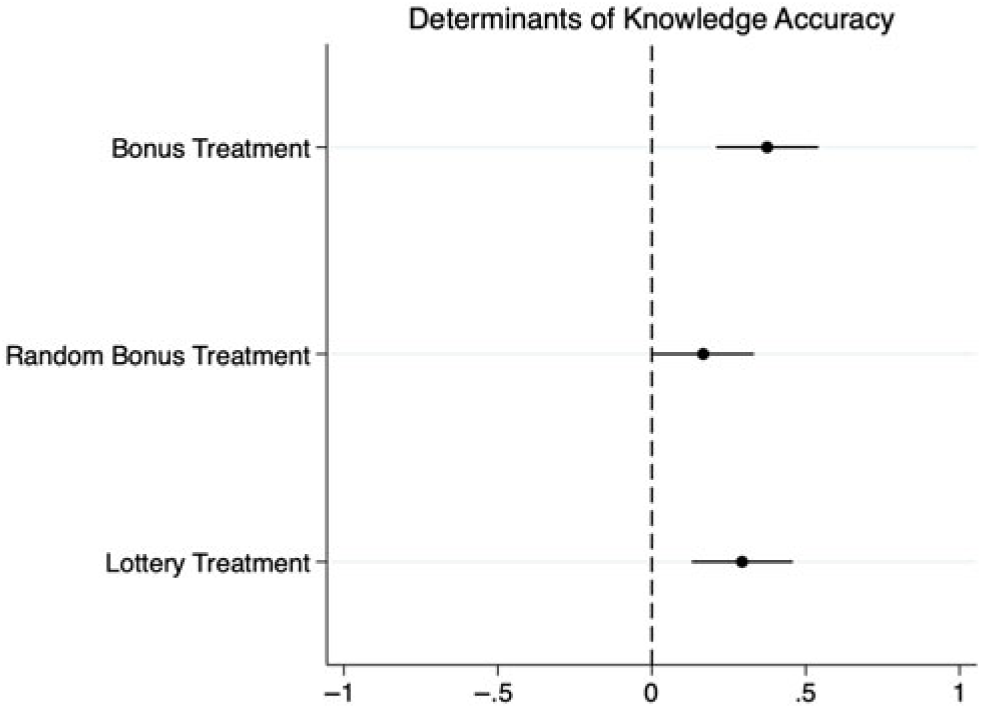

All three incentive conditions led to increased accuracy compared to the control condition. The bonus treatment increased accuracy by 0.375 correct answers, the random bonus treatment increased accuracy by 0.167 correct answers, and the lottery treatment increased accuracy by 0.29 correct answers.

Furthermore, the treatment effects are statistically indistinguishable from each other, which is consistent with the three incentives having similar average effects on behavior. Figure 3 illustrates the absence of distinguishable differences between treatments in knowledge accuracy: all three incentives led to improved performance.

Figure 3 Determinants of Knowledge Accuracy. Dependent Variable: Number of Correct Answers.

To put these effect sizes in perspective, we compare the magnitude of the average treatment effect across all three conditions to the estimated relationship between pre-treatment political information and knowledge accuracy in the control group. Our regression estimates indicate that each additional pre-treatment political information question answered correctly is associated with an increase of 0.07 in the number of knowledge questions answered correctly. Recall that the average treatment effect across incentive conditions is an increase of 0.28 in the number of correct answers, which is equivalent to moving from a respondent with an average level of baseline information to a respondent with the highest observed level of baseline political information in our control group.
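The comparison amounts to dividing the pooled treatment effect by the per-item association between prior information and accuracy. A minimal sketch, assuming that association is linear across the 10-item pre-treatment battery (the helper name is hypothetical):

```python
# Figures reported in the text; the comparison itself is simple arithmetic.
ATE_POOLED = 0.28        # pooled increase in correct answers (out of 5 questions)
SLOPE_PRIOR_INFO = 0.07  # control-group association per extra pre-treatment item correct


def equivalent_info_items(ate: float, slope: float) -> float:
    """Number of additional pre-treatment information items (out of 10) whose
    estimated association with accuracy matches the treatment effect,
    assuming a linear relationship."""
    return ate / slope


gap = equivalent_info_items(ATE_POOLED, SLOPE_PRIOR_INFO)
print(f"Treatment effect is about {gap:.1f} extra pre-treatment items answered correctly")
```

The ratio is about four pre-treatment items; whether that spans the distance from the average to the highest observed score depends on the group means reported in Table 1.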

The results indicate that we may understate both respondents’ willingness to expend effort on a task and their ability to correctly answer knowledge questions if we fail to implement the incentive conditions that best match the theoretical or real-world context being studied.

Incentives, Effort, and Knowledge Accuracy

To determine whether effort mediates the effects of the treatments on the participants' knowledge accuracy, we use causal mediation analysis (Imai et al. 2011; Imai, Keele, and Yamamoto 2010).Footnote 7

Table 4 presents the results of the causal mediation analysis. Across all treatments, effort appears to be a meaningful partial mediator of correct answers. Effort accounts for almost 14% of the average treatment effect of the bonus treatment, nearly 20% of that of the random bonus treatment, and about 13% of that of the lottery treatment on knowledge accuracy. All three of these estimates are of similar magnitude, suggesting that the effort induced by the incentives has a similar mediating effect on knowledge accuracy.
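The mediated share is the average causal mediation effect (ACME) divided by the total treatment effect. Our estimates come from the simulation-based mediation routine for Stata (Hicks and Tingley 2011); the sketch below only illustrates the quantity in the simplest case, linear mediator and outcome models without a treatment-by-effort interaction, where the ACME reduces to the product of the treatment's effect on effort and effort's effect on accuracy. It uses NumPy and simulated data with hypothetical parameters, not our data, and omits confidence intervals.

```python
import numpy as np


def ols(y: np.ndarray, *regressors: np.ndarray) -> np.ndarray:
    """Least-squares coefficients; position 0 is the intercept."""
    X = np.column_stack([np.ones_like(y), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def mediation_linear(treat: np.ndarray, mediator: np.ndarray, outcome: np.ndarray) -> dict:
    """Product-of-coefficients decomposition, valid only for linear models
    without a treatment-by-mediator interaction."""
    a = ols(mediator, treat)[1]                    # treatment -> effort
    _, direct, b = ols(outcome, treat, mediator)   # direct effect; effort -> accuracy
    acme = a * b
    total = direct + acme
    return {"acme": acme, "direct": direct, "total": total,
            "prop_mediated": acme / total}


# Simulated data with hypothetical parameters (NOT the study's data).
rng = np.random.default_rng(0)
n = 200_000
treat = rng.integers(0, 2, size=n).astype(float)
effort = 10.8 + 1.0 * treat + rng.normal(0.0, 3.0, size=n)   # minutes on survey
correct = 0.2 + 0.24 * treat + 0.04 * effort + rng.normal(0.0, 1.0, size=n)

res = mediation_linear(treat, effort, correct)
print({k: round(float(v), 3) for k, v in res.items()})
```

With these hypothetical parameters the true ACME is 1.0 × 0.04 = 0.04 and the true total effect is 0.28, so the simulated mediated share should land near 14%. The simulation-based approach generalizes this to nonlinear models and interactions.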

Table 4 Direct Treatment Effects and Mediation Effects of Incentives on Knowledge Accuracy. Mediating Variable: Time Spent on Survey (Minutes)

Note: The results were calculated using 1,000 simulations with 95% confidence intervals in brackets using mediation in Stata (Hicks and Tingley 2011).

Text analysis

To further explore the mechanisms influencing knowledge accuracy, we use the structural topic model package stm in R developed by Roberts et al. (2014). Similar to Mildenberger and Tingley (2019), we examine respondents' responses to an open-ended question about their thoughts as they answered the knowledge questions.Footnote 8 We determined that seven topics were appropriate for modeling subjects' responses in the experiment.

We then estimated the difference in the prevalence of each topic between the treatment conditions and the control condition. Comparing each treatment to the control, we found that only one topic was consistently more common in the treatment than in the control, and this topic was associated with words related to answering questions correctly.

This result is presented visually in Figure 4, in which we plot the seven topics and the estimated difference for each topic between the lottery condition and the control condition; the topic labels are based on the most commonly appearing words for each of the seven topics. The results are substantively similar for the other two treatments.

Figure 4 Effect of Lottery on Free Responses by Respondents.

The text analysis provides further evidence that incentives affect behavior and that the different incentives are broadly similar in their effects.

DISCUSSION

In this paper, we demonstrate that incentives for accuracy increase both effort and the number of correct answers to knowledge questions about international affairs. Furthermore, our experiment shows that behavior does not vary with the uncertainty of the accuracy incentive. The results provide evidence for the importance of understanding the context in which political decisions are made and have both substantive and methodological importance for political science.

Substantively, individuals may be more capable of understanding and reaching accurate answers about politics than previous research based on non-incentivized measures often suggests. Our results show that measures and estimates of citizen knowledge may be affected by context. People increase their effort and knowledge about international affairs when given incentives for being correct. Given the stakes of political decisions (Edlin, Gelman, and Kaplan 2007), there can be quite large incentives to correctly understand political outcomes. Even though incentives exist in real political decisions, incentives for accuracy are often absent from experiments and survey settings. The results suggest that when looking at political behavior, we should consider the extent to which there were incentives for accuracy and how their absence or presence affects our interpretation of the observed behavior.

The possible mismatch between experimental/survey design and our theories is important for political behavior scholarship. Beyond the risk of cheating on knowledge batteries (Barabas et al. 2014; Clifford and Jerit 2016), the absence of explicit incentives means our surveys/experiments may not offer appropriate insight into situations in which there is utility associated with making the correct decision. For example, if an experiment focuses on how knowledge affects voting decisions, then we need to ensure that the experimental context captures the incentives for accurate knowledge at the ballot box.

Our experiment and results also suggest directions for future studies. First, future research could introduce explicit costs to searching for information, to better understand how the cost of effort affects behavior, or could measure effort directly through unobtrusive observation of individuals' online search behavior and attention to international affairs. Second, the relatively small incentives in this study could mean that we underestimate the effect of incentives. Future studies could test the effects of larger incentives on individual behavior. Third, incentives to make accurate judgments may be framed negatively as costs for making mistakes, which could produce systematic differences in individual behavior (Tversky and Kahneman 1981). The general point is that we still have much to learn about how costs and incentives affect effort, knowledge acquisition, and knowledge accuracy.

Supplementary Material

To view supplementary material for this article, please visit https://doi.org/10.1017/XPS.2019.27.