1. Introduction

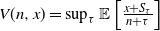

We repeatedly toss a fair coin. After each toss we can take the proportion of heads up to now as our reward, or continue. This is known as the Chow–Robbins game, first presented in 1965 [Reference Chow and Robbins1]. As a stopping problem this can be formulated as

where S is a random walk. In the classical version S has symmetric Bernoulli increments but it is possible to take different random walks as well. Chow and Robbins showed that an optimal stopping time exists in the Bernoulli case, and later [Reference Dvoretzky3] proved this for general centered independent and identically distributed (i.i.d.) increments with finite variance. But it was (and to some extent still is) difficult to see how that solution looks. Asymptotic results were given in [Reference Shepp9], which showed that the boundary of the continuation set

![]() $\partial C$

can be written as a function

$\partial C$

can be written as a function

![]() $b \colon \mathbb{R}^{+}\to \mathbb{R}$

with

$b \colon \mathbb{R}^{+}\to \mathbb{R}$

with

![]() $\lim_{t\to\infty}\frac{b(t)}{\alpha \sqrt{t}\,} = 1$

. Here,

$\lim_{t\to\infty}\frac{b(t)}{\alpha \sqrt{t}\,} = 1$

. Here,

![]() $\alpha\sqrt{t}$

is the boundary of the analogous stopping problem for a standard Brownian motion W (see Lemma 1),

$\alpha\sqrt{t}$

is the boundary of the analogous stopping problem for a standard Brownian motion W (see Lemma 1),

In 2007, [Reference Leung Lai, Yao and Aitsahlia6] gave a second-order approximation for the limit of b,

![]() $\lim_{t\to\infty}\!\big(\alpha \sqrt{t}-b(t) \big)= \frac{1}{2}$

. Somes approximation for values of b were also calculated by using the value function (2) [Reference Leung Lai and Yao5], without constructing it as an upper bound; also given were some calculations for a random walk with standard normal increments.

$\lim_{t\to\infty}\!\big(\alpha \sqrt{t}-b(t) \big)= \frac{1}{2}$

. Somes approximation for values of b were also calculated by using the value function (2) [Reference Leung Lai and Yao5], without constructing it as an upper bound; also given were some calculations for a random walk with standard normal increments.

A more rigorous computer analysis was given in [Reference Häggström and Wästlund4]. Using backward induction from a time horizon

![]() $T=10^{7}$

, lower and upper bounds for

$T=10^{7}$

, lower and upper bounds for

![]() $V^{S}$

were calculated. For points reachable from (0, 0), it was calculated whether they belong to the stopping or to the continuation set for all but seven points (n, x) with

$V^{S}$

were calculated. For points reachable from (0, 0), it was calculated whether they belong to the stopping or to the continuation set for all but seven points (n, x) with

![]() $n\leq 1000$

.

$n\leq 1000$

.

In this paper we give much sharper upper and lower bounds for

![]() $V^{S}$

. Using backward induction with these bounds, we are able to calculate all stopping and continuation points

$V^{S}$

. Using backward induction with these bounds, we are able to calculate all stopping and continuation points

![]() $(n,x)\in \mathbb{N}\times \mathbb{Z}$

with

$(n,x)\in \mathbb{N}\times \mathbb{Z}$

with

![]() $n\leq 10^{5}$

. We show that all seven points (n, x) with

$n\leq 10^{5}$

. We show that all seven points (n, x) with

![]() $n\leq 1000$

that were left open in [Reference Häggström and Wästlund4] belong to the stopping set. In Section 2 we construct an upper bound for the value function (1) for random walks with sub-Gaussian increments. The main observation is that the value function (2) is an upper bound for

$n\leq 1000$

that were left open in [Reference Häggström and Wästlund4] belong to the stopping set. In Section 2 we construct an upper bound for the value function (1) for random walks with sub-Gaussian increments. The main observation is that the value function (2) is an upper bound for

![]() $V^{S}$

. This is carried out in Section 2.1 on the Chow–Robbins game. In Section 2.2 we discuss how this kind of upper bound can be constructed for more general gain functions g and

$V^{S}$

. This is carried out in Section 2.1 on the Chow–Robbins game. In Section 2.2 we discuss how this kind of upper bound can be constructed for more general gain functions g and

![]() $V^{S}(t,x) = \sup_{\tau }\mathbb{E} \left [ g(t+\tau,x + S_\tau)\right]$

. In Section 3 we construct a lower bound for

$V^{S}(t,x) = \sup_{\tau }\mathbb{E} \left [ g(t+\tau,x + S_\tau)\right]$

. In Section 3 we construct a lower bound for

![]() $V^{S}(T,x)$

in the Bernoulli case for a given time horizon T. We show that there exist

$V^{S}(T,x)$

in the Bernoulli case for a given time horizon T. We show that there exist

![]() $0<c<1$

and

$0<c<1$

and

![]() $K>0$

such that

$K>0$

such that

![]() $K \int_{0}^{\infty}\exp\!\Big[ax-\frac{c}{2}a^2T\Big] \mathop{}\!\mathrm{d} a\leq V^{S}(T,x)$

for all

$K \int_{0}^{\infty}\exp\!\Big[ax-\frac{c}{2}a^2T\Big] \mathop{}\!\mathrm{d} a\leq V^{S}(T,x)$

for all

![]() $x\leq b(T)$

. We then show that the relative error of the bounds is of order

$x\leq b(T)$

. We then show that the relative error of the bounds is of order

![]() $\mathcal{O}\big(\frac{1}{T}\big)$

for

$\mathcal{O}\big(\frac{1}{T}\big)$

for

![]() $x\geq0$

. In Section 5 we give computational results for the Chow–Robbins game in the Bernoulli case and give a detailed description of our methods. We calculate all integer-valued points in the stopping and continuation sets for

$x\geq0$

. In Section 5 we give computational results for the Chow–Robbins game in the Bernoulli case and give a detailed description of our methods. We calculate all integer-valued points in the stopping and continuation sets for

![]() $n\leq 10^{5}$

, and give some examples of how

$n\leq 10^{5}$

, and give some examples of how

![]() $V^{S}(t,x)$

and b(t) look for continuous t close to zero.

$V^{S}(t,x)$

and b(t) look for continuous t close to zero.

1.1. Notation

We introduce some notation that we will use. V denotes a value function,

![]() $D = \{V=g\} = \{(t,x)\mid V(t,x) = g(t,x)\}$

the corresponding stopping set, and

$D = \{V=g\} = \{(t,x)\mid V(t,x) = g(t,x)\}$

the corresponding stopping set, and

![]() $C = \{V>g\}$

the continuation set. With (t, x) we denote real variables, with n positive integers. With a superscript we denote which driving process is used, e.g.

$C = \{V>g\}$

the continuation set. With (t, x) we denote real variables, with n positive integers. With a superscript we denote which driving process is used, e.g.

![]() $C^{S} = \big\{V^{S} = g\big\}$

,

$C^{S} = \big\{V^{S} = g\big\}$

,

![]() $C^{W} = \big\{V^{W} = g\big\}$

, etc.

$C^{W} = \big\{V^{W} = g\big\}$

, etc.

![]() $V_\mathrm{u}$

and

$V_\mathrm{u}$

and

![]() $V_\mathrm{l}$

denote upper and lower bounds respectively.

$V_\mathrm{l}$

denote upper and lower bounds respectively.

2. An upper bound for the value function

$\boldsymbol{{V}}^{\boldsymbol{{S}}}$

$\boldsymbol{{V}}^{\boldsymbol{{S}}}$

We construct an upper bound for the value function

![]() $V^{S}$

of the

$V^{S}$

of the

![]() $\frac{S_n}{n}$

problem, where S can be any random walk with sub-Gaussian increments. The classical Chow–Robbins game is a special case of these stopping problems.

$\frac{S_n}{n}$

problem, where S can be any random walk with sub-Gaussian increments. The classical Chow–Robbins game is a special case of these stopping problems.

Definition 1. (Sub-Gaussian random variable) Let

![]() $\sigma^2>0$

. A real, centered random variable

$\sigma^2>0$

. A real, centered random variable

![]() $\xi$

is called

$\xi$

is called

![]() $\sigma^2$

-sub-Gaussian

$\sigma^2$

-sub-Gaussian

![]() $\big($

or sub-Gaussian with parameter

$\big($

or sub-Gaussian with parameter

![]() $\sigma^2\big)$

if

$\sigma^2\big)$

if

![]() $\mathbb{E}[\mathrm{e}^{a\xi}] \leq \exp\Big[\frac{\sigma^2 a^2}{2}\Big]$

for all

$\mathbb{E}[\mathrm{e}^{a\xi}] \leq \exp\Big[\frac{\sigma^2 a^2}{2}\Big]$

for all

![]() $a\in \mathbb{R}$

.

$a\in \mathbb{R}$

.

Some examples of sub-Gaussian random variables are:

X with

$P(X_i =-1) = P(X_i =1) = \frac{1}{2} $

is 1-sub-Gaussian.

$P(X_i =-1) = P(X_i =1) = \frac{1}{2} $

is 1-sub-Gaussian.The normal distribution

$\mathcal{N}(0,\sigma^2)$

is

$\mathcal{N}(0,\sigma^2)$

is

$\sigma^2$

-sub-Gaussian.

$\sigma^2$

-sub-Gaussian.The uniform distribution on

$[-a,a]$

is

$[-a,a]$

is

$a^2$

-sub-Gaussian.

$a^2$

-sub-Gaussian.Any random variable Y with values in a compact interval [a, b] is

$\frac{1}{4}(b-a)^2$

-sub-Gaussian.

$\frac{1}{4}(b-a)^2$

-sub-Gaussian.

In the following we show that the value function

![]() $V^{W}$

of the continuous-time problem (2) is an upper bound for the value function

$V^{W}$

of the continuous-time problem (2) is an upper bound for the value function

![]() $V^{S}$

whenever S is a random walk with 1-sub-Gaussian increments. We first state the solution of (2).

$V^{S}$

whenever S is a random walk with 1-sub-Gaussian increments. We first state the solution of (2).

Let

where

![]() $\Phi_t(x) = \Phi\big(x/\sqrt{t}\big)$

denotes the cummulative distribution function of a centered normal distribution with variance

$\Phi_t(x) = \Phi\big(x/\sqrt{t}\big)$

denotes the cummulative distribution function of a centered normal distribution with variance

![]() $\sigma^2 = t$

,

$\sigma^2 = t$

,

![]() $\varphi_t$

is the corresponding density function, and

$\varphi_t$

is the corresponding density function, and

![]() $\alpha \approx 0.839\,923\,675\,692\,373$

is the unique solution to

$\alpha \approx 0.839\,923\,675\,692\,373$

is the unique solution to

![]() $\alpha \varphi(\alpha) = \big(1-\alpha^2\big)\Phi(\alpha)$

.

$\alpha \varphi(\alpha) = \big(1-\alpha^2\big)\Phi(\alpha)$

.

Lemma 1. The stopping problem (2) is solved by

![]() $\tau_{\ast} = \inf\{s\mid x+W_s\geq \alpha\sqrt{s+t}\}$

with value function

$\tau_{\ast} = \inf\{s\mid x+W_s\geq \alpha\sqrt{s+t}\}$

with value function

\begin{equation*}V^W(t,x) = \begin{cases} h(t,x) & {if } x \leq \alpha \sqrt{t},\\[5pt] \frac{x}{t} & {otherwise}. \end{cases}\end{equation*}

\begin{equation*}V^W(t,x) = \begin{cases} h(t,x) & {if } x \leq \alpha \sqrt{t},\\[5pt] \frac{x}{t} & {otherwise}. \end{cases}\end{equation*}

![]() $V^{W}(t,x)$

is differentiable (smooth fit), and

$V^{W}(t,x)$

is differentiable (smooth fit), and

![]() $h(t,x)\geq g(t,x) = \frac{x}{t}$

for all

$h(t,x)\geq g(t,x) = \frac{x}{t}$

for all

![]() $t>0$

and

$t>0$

and

![]() $x\in \mathbb{R}$

.

$x\in \mathbb{R}$

.

This result was proved independently in [Reference Shepp9, Reference Walker10].

We now prove that

![]() $V^{W}$

is an upper bound for

$V^{W}$

is an upper bound for

![]() $V^{S}$

. We know from general theory that

$V^{S}$

. We know from general theory that

![]() $V^{S}$

is the smallest superharmonic function dominating the gain function g (see, e.g., [Reference Peskir and Shiryaev8]). If we find a superharmonic function dominating g we have an upper bound for

$V^{S}$

is the smallest superharmonic function dominating the gain function g (see, e.g., [Reference Peskir and Shiryaev8]). If we find a superharmonic function dominating g we have an upper bound for

![]() $V^{S}$

.

$V^{S}$

.

Lemma 2. Let

![]() $X_i$

be i.i.d. 1-sub-Gaussian random variables, and

$X_i$

be i.i.d. 1-sub-Gaussian random variables, and

![]() $S_n = \sum_{i=1}^{n}X_i$

. The function

$S_n = \sum_{i=1}^{n}X_i$

. The function

![]() $h \colon \mathbb{R}^{+}\times \mathbb{R} \to \mathbb{R}^{+}$

such that

$h \colon \mathbb{R}^{+}\times \mathbb{R} \to \mathbb{R}^{+}$

such that

![]() $(t,x)\mapsto \big(1-\alpha^{2}\big)\int_{0}^{\infty} \exp\big[ax - \frac{1}{2}a^2t\big]\! \mathop{}\!\mathrm{d} a$

is S-superharmonic.

$(t,x)\mapsto \big(1-\alpha^{2}\big)\int_{0}^{\infty} \exp\big[ax - \frac{1}{2}a^2t\big]\! \mathop{}\!\mathrm{d} a$

is S-superharmonic.

Proof.We first show the claim for fixed

![]() $a\in \mathbb{R}$

and

$a\in \mathbb{R}$

and

![]() $f(t,x) = \mathrm{e}^{ax - \frac{1}{2}a^2t}$

. We need to show that

$f(t,x) = \mathrm{e}^{ax - \frac{1}{2}a^2t}$

. We need to show that

![]() $\mathbb{E} [f(t+1,x+X_1)]\leq f(t,x)$

for all

$\mathbb{E} [f(t+1,x+X_1)]\leq f(t,x)$

for all

![]() $t>0$

and

$t>0$

and

![]() $x\in \mathbb{R}$

, and calculate

$x\in \mathbb{R}$

, and calculate

\begin{alignat}{2} & & \mathbb{E}\bigg[\exp\!\bigg\{a(x+X_{1}) - \frac{a^2}{2}(t+1)\bigg\}\bigg] & \leq \exp\!\bigg\{ax - \frac{a^2}{2}t\bigg\} \nonumber \\ \Longleftrightarrow & \quad & \exp\!\bigg\{ax - \frac{a^2}{2}(t+1)\bigg\} \mathbb{E}\big[\mathrm{e}^{aX_{1}}\big] & \leq \exp\!\bigg\{ax - \frac{a^2}{2}t\bigg\} \nonumber \\ \Longleftrightarrow & & \mathrm{e}^{-\frac{a^2}{2}} \mathbb{E}\big[\mathrm{e}^{aX_{1}}\big] & \leq 1 \nonumber \\ \Longleftrightarrow & & \mathbb{E}\big[\mathrm{e}^{aX_{1}}\big] & \leq \mathrm{e}^{\frac{a^2}{2}}. \end{alignat}

\begin{alignat}{2} & & \mathbb{E}\bigg[\exp\!\bigg\{a(x+X_{1}) - \frac{a^2}{2}(t+1)\bigg\}\bigg] & \leq \exp\!\bigg\{ax - \frac{a^2}{2}t\bigg\} \nonumber \\ \Longleftrightarrow & \quad & \exp\!\bigg\{ax - \frac{a^2}{2}(t+1)\bigg\} \mathbb{E}\big[\mathrm{e}^{aX_{1}}\big] & \leq \exp\!\bigg\{ax - \frac{a^2}{2}t\bigg\} \nonumber \\ \Longleftrightarrow & & \mathrm{e}^{-\frac{a^2}{2}} \mathbb{E}\big[\mathrm{e}^{aX_{1}}\big] & \leq 1 \nonumber \\ \Longleftrightarrow & & \mathbb{E}\big[\mathrm{e}^{aX_{1}}\big] & \leq \mathrm{e}^{\frac{a^2}{2}}. \end{alignat}

The last inequality (3) is just the defining property of a 1-sub-Gaussian random variable. By integration over a and multiplication by

![]() $(1-\alpha)$

, the result follows.

$(1-\alpha)$

, the result follows.

Theorem 1. (An upper bound for

![]() $V^{S}$

) Let W be a standard Brownian motion, S a random walk with 1-sub-Gaussian increments, and

$V^{S}$

) Let W be a standard Brownian motion, S a random walk with 1-sub-Gaussian increments, and

Then

![]() $V^{W}(t,x)\geq V^{S}(t,x)$

for all

$V^{W}(t,x)\geq V^{S}(t,x)$

for all

![]() $t>0$

,

$t>0$

,

![]() $x\in \mathbb{R}$

.

$x\in \mathbb{R}$

.

Proof.Let

![]() $h(t,x)=\big(1-\alpha^{2}\big)\int_0^{\infty}\exp\Big[ax - \frac{a^{2}}{2}t\Big]\mathop{}\!\mathrm{d} a$

as in Lemma 1. We know that

$h(t,x)=\big(1-\alpha^{2}\big)\int_0^{\infty}\exp\Big[ax - \frac{a^{2}}{2}t\Big]\mathop{}\!\mathrm{d} a$

as in Lemma 1. We know that

![]() $h\geq g$

(Lemma 1), h is S-superharmonic (Lemma 2), and

$h\geq g$

(Lemma 1), h is S-superharmonic (Lemma 2), and

![]() $V^{W} = h \textbf{1}_{C^{W}} + g \textbf{1}_{D^{W}}$

.

$V^{W} = h \textbf{1}_{C^{W}} + g \textbf{1}_{D^{W}}$

.

![]() $V^{S}$

is the smallest superharmonic function dominating g, therefore

$V^{S}$

is the smallest superharmonic function dominating g, therefore

![]() $V^{S}\leq h$

. We know from Lemma 1 that

$V^{S}\leq h$

. We know from Lemma 1 that

![]() $V^{W}\big(t,\alpha\sqrt{t}\big) = h\big(t,\alpha\sqrt{t}\big) = g\big(t,\alpha\sqrt{t}\big)$

, and therefore

$V^{W}\big(t,\alpha\sqrt{t}\big) = h\big(t,\alpha\sqrt{t}\big) = g\big(t,\alpha\sqrt{t}\big)$

, and therefore

![]() $g\big(t,\alpha\sqrt{t}\big)\geq V^{S}\big(t,\alpha\sqrt{t}\big) \leq h\big(t,\alpha\sqrt{t}\big) = g\big(t,\alpha\sqrt{t}\big)$

. Hence,

$g\big(t,\alpha\sqrt{t}\big)\geq V^{S}\big(t,\alpha\sqrt{t}\big) \leq h\big(t,\alpha\sqrt{t}\big) = g\big(t,\alpha\sqrt{t}\big)$

. Hence,

![]() $V^{S}\big(t,\alpha\sqrt{t}\big) = g\big(t,\alpha\sqrt{t}\big)$

and

$V^{S}\big(t,\alpha\sqrt{t}\big) = g\big(t,\alpha\sqrt{t}\big)$

and

![]() $\big(t,\alpha\sqrt{t}\big)\in D^{S}$

. The boundary

$\big(t,\alpha\sqrt{t}\big)\in D^{S}$

. The boundary

![]() $\partial C^{S}$

is the graph of a function, therefore

$\partial C^{S}$

is the graph of a function, therefore

![]() $(t,x)\in D^{S}$

for all

$(t,x)\in D^{S}$

for all

![]() $x\geq \alpha\sqrt{t}$

. It follows that

$x\geq \alpha\sqrt{t}$

. It follows that

![]() $C^{S}\subset C^{W}$

and that

$C^{S}\subset C^{W}$

and that

![]() $V^{S}(t,x)\leq V^{W}(t,x)$

for all

$V^{S}(t,x)\leq V^{W}(t,x)$

for all

![]() $t>0$

,

$t>0$

,

![]() $x\in \mathbb{R}$

.

$x\in \mathbb{R}$

.

Corollary 1. From the proof, we see that

![]() $C^{S}\subset C^{W}$

, and

$C^{S}\subset C^{W}$

, and

![]() $b(t)\leq \alpha\sqrt{t}$

for all

$b(t)\leq \alpha\sqrt{t}$

for all

![]() $t>0$

.

$t>0$

.

2.1. The Chow–Robbins game

Let

![]() $X_1, X_2, \dots$

be i.i.d. random variables with

$X_1, X_2, \dots$

be i.i.d. random variables with

![]() $P(X_i =-1) =P(X_i =1) = \frac{1}{2} $

and

$P(X_i =-1) =P(X_i =1) = \frac{1}{2} $

and

![]() $S_n = \sum_{i=1}^{n}X_i$

. The classical Chow–Robbins problem is given by

$S_n = \sum_{i=1}^{n}X_i$

. The classical Chow–Robbins problem is given by

The

![]() $X_i$

are 1-sub-Gaussian with variance 1. An almost surely (a.s.) finite stopping time

$X_i$

are 1-sub-Gaussian with variance 1. An almost surely (a.s.) finite stopping time

![]() $\tau_{\ast}$

exists that solves (4) (see [Reference Dvoretzky3]). By Theorem 1 we get that

$\tau_{\ast}$

exists that solves (4) (see [Reference Dvoretzky3]). By Theorem 1 we get that

![]() $V^S(t,x)\leq V^{W}(t,x) = h(x,t)\textbf{1}_{\{x \leq \alpha \sqrt{t}\}} + \frac{x}{t}\textbf{1}_{\{x > \alpha\sqrt{t}\}}$

. We will see later that this upper bound is very tight. We will construct a lower bound for

$V^S(t,x)\leq V^{W}(t,x) = h(x,t)\textbf{1}_{\{x \leq \alpha \sqrt{t}\}} + \frac{x}{t}\textbf{1}_{\{x > \alpha\sqrt{t}\}}$

. We will see later that this upper bound is very tight. We will construct a lower bound for

![]() $V^S$

in the next section and give a rigorous computer analysis of the problem in Section 5.

$V^S$

in the next section and give a rigorous computer analysis of the problem in Section 5.

Example 1. For some time it was unclear whether it is optimal to stop in (8, 2) or not. It was first shown in [Reference Medina and Zeilberger7] and later confirmed in [Reference Häggström and Wästlund4] that

![]() $(8,2)\in D^{S}$

(in [Reference Häggström and Wästlund4] this is written as 5–3, 5 heads–3 tails). We show here how to immediately prove this with our upper bound. We choose the time horizon

$(8,2)\in D^{S}$

(in [Reference Häggström and Wästlund4] this is written as 5–3, 5 heads–3 tails). We show here how to immediately prove this with our upper bound. We choose the time horizon

![]() $T=9$

, set

$T=9$

, set

![]() $V_\mathrm{u}^{S}(T,x) = V^{W}(T,x)$

, and calculate with one-step backward induction

$V_\mathrm{u}^{S}(T,x) = V^{W}(T,x)$

, and calculate with one-step backward induction

![]() $V_\mathrm{u}^{S}(8,2)$

as an upper bound for

$V_\mathrm{u}^{S}(8,2)$

as an upper bound for

![]() $V^{S}(8,2)$

:

$V^{S}(8,2)$

:

![]() $V^{S}_\mathrm{u}(9,3) = \frac{3}{9} = \frac{1}{3}$

(since

$V^{S}_\mathrm{u}(9,3) = \frac{3}{9} = \frac{1}{3}$

(since

![]() $3> \alpha\sqrt{9}$

),

$3> \alpha\sqrt{9}$

),

![]() $V^{S}_\mathrm{u}(9,1) = h(9,1) \approx 0.1642$

, and we get

$V^{S}_\mathrm{u}(9,1) = h(9,1) \approx 0.1642$

, and we get

\begin{align*} V^{S}(8,2) \leq V_\mathrm{u}^{S}(8,2) & = \max\left\{\frac{2}{8}, \frac{V^{S}_\mathrm{u}(9,3) + V^{S}_\mathrm{u}(9,1)}{2}\right\} , \\ \frac{V^{S}_\mathrm{u}(9,3) + V^{S}_\mathrm{u}(9,1)}{2} & = \frac{1}{6} + \frac{0.1642}{2}=0.2488 < \frac{2}{8}. \end{align*}

\begin{align*} V^{S}(8,2) \leq V_\mathrm{u}^{S}(8,2) & = \max\left\{\frac{2}{8}, \frac{V^{S}_\mathrm{u}(9,3) + V^{S}_\mathrm{u}(9,1)}{2}\right\} , \\ \frac{V^{S}_\mathrm{u}(9,3) + V^{S}_\mathrm{u}(9,1)}{2} & = \frac{1}{6} + \frac{0.1642}{2}=0.2488 < \frac{2}{8}. \end{align*}

Hence, we have

![]() $V^{S}(8,2)\leq g(8,2) = \frac{2}{8}$

, and it follows that (8, 2) is in the stopping set. For a detailed description of the method see Section 5.

$V^{S}(8,2)\leq g(8,2) = \frac{2}{8}$

, and it follows that (8, 2) is in the stopping set. For a detailed description of the method see Section 5.

2.2. Generalizations

In the proof of Theorem 1 we did not use the specific form of the gain function

![]() $g(t,x) = \frac{x}{t}$

. All we needed was that:

$g(t,x) = \frac{x}{t}$

. All we needed was that:

The value function of the stopping problem

(5)is on C of the form \begin{equation} V^{W}(t,x) = \sup_{\tau }\mathbb{E} \left [ g(t+\tau,x + W_\tau)\right] \end{equation}

\begin{equation} V^{W}(t,x) = \sup_{\tau }\mathbb{E} \left [ g(t+\tau,x + W_\tau)\right] \end{equation}

$V^{W}\big|_{C}(t,x) = \int_{\mathbb{R}}\exp\Big[ax - \frac{1}{2}a^2t\Big]\mathop{}\!\mathrm{d} \mu(a)$

for a measure

$V^{W}\big|_{C}(t,x) = \int_{\mathbb{R}}\exp\Big[ax - \frac{1}{2}a^2t\Big]\mathop{}\!\mathrm{d} \mu(a)$

for a measure

$\mu$

.

$\mu$

.

The function

$h(t,x) = \int_{\mathbb{R}}\exp\Big[ax - \frac{1}{2}a^2t\Big] \mathop{}\!\mathrm{d} \mu(a)$

dominates g on

$h(t,x) = \int_{\mathbb{R}}\exp\Big[ax - \frac{1}{2}a^2t\Big] \mathop{}\!\mathrm{d} \mu(a)$

dominates g on

$\mathbb{R}^{+}\times\mathbb{R}$

.

$\mathbb{R}^{+}\times\mathbb{R}$

.The boundary of the continuation set

$\partial C^{S}$

of the stopping problem

$\partial C^{S}$

of the stopping problem

$V^{S}(t,x) = \sup_{\tau }\mathbb{E} \left [ g(t+\tau,x + S_\tau)\right]$

is the graph of a function

$V^{S}(t,x) = \sup_{\tau }\mathbb{E} \left [ g(t+\tau,x + S_\tau)\right]$

is the graph of a function

$b \colon \mathbb{R}^{+}\to \mathbb{R}$

. (This requirement can easily be relaxed to the symmetric case, where

$b \colon \mathbb{R}^{+}\to \mathbb{R}$

. (This requirement can easily be relaxed to the symmetric case, where

$\partial C^{S} = \mathrm{Graph}(b) \cup \mathrm{Graph}(-b)$

.)

$\partial C^{S} = \mathrm{Graph}(b) \cup \mathrm{Graph}(-b)$

.)

These requirements are not very restrictive, and there are many other gain functions and associated stopping problems for which this kind of upper bound can be constructed. A set of examples which fulfill these requirements and for which (5) is explicitly solvable can be found in [Reference Peskir and Shiryaev8]. Some of these are:

\begin{alignat*}{2} g(t,x) & = \frac{x^{2d-1}}{t^{q}} & & \text{with } d\in \mathbb{N} \text{ and } q>d-\frac{1}{2}, \\ g(t,x) & = |x|-\beta\sqrt{t} \qquad & & \text{for some } \beta \geq 0, \\ g(t,x) & = \frac{|x|}{t}. & &\end{alignat*}

\begin{alignat*}{2} g(t,x) & = \frac{x^{2d-1}}{t^{q}} & & \text{with } d\in \mathbb{N} \text{ and } q>d-\frac{1}{2}, \\ g(t,x) & = |x|-\beta\sqrt{t} \qquad & & \text{for some } \beta \geq 0, \\ g(t,x) & = \frac{|x|}{t}. & &\end{alignat*}

3. A lower bound for

$\boldsymbol{{V}}^{\boldsymbol{{S}}}$

$\boldsymbol{{V}}^{\boldsymbol{{S}}}$

In this section we want to give a lower bound for the value function of the Chow–Robbins game (4). Here, S will always be a symmetric Bernoulli random walk. The basis of our construction is the following lemma.

Lemma 3. (A lower bound for V) Let X be a random walk, g a gain function,

![]() $V(t,x) = \sup_{\tau }\mathbb{E} [g(\tau+t,X_\tau+x)]$

, and

$V(t,x) = \sup_{\tau }\mathbb{E} [g(\tau+t,X_\tau+x)]$

, and

![]() $h \colon \mathbb{R}^{+}\times \mathbb{R} \to \mathbb{R}$

measurable. For a given point

$h \colon \mathbb{R}^{+}\times \mathbb{R} \to \mathbb{R}$

measurable. For a given point

![]() $(t_0,x_0)$

let

$(t_0,x_0)$

let

![]() $\tau$

be a stopping time such that the stopped process

$\tau$

be a stopping time such that the stopped process

![]() $(h(t\wedge \tau+ t_0,X_{t\wedge \tau}+ x_0))_{t\geq 0}$

is a submartingale and

$(h(t\wedge \tau+ t_0,X_{t\wedge \tau}+ x_0))_{t\geq 0}$

is a submartingale and

![]() $h(\tau + t_0,X_\tau + x_0)\leq g(\tau + t_0,X_\tau + x_0)$

a.s. Then

$h(\tau + t_0,X_\tau + x_0)\leq g(\tau + t_0,X_\tau + x_0)$

a.s. Then

![]() $h(t_0,x_0)\leq V(t_0,x_0)$

.

$h(t_0,x_0)\leq V(t_0,x_0)$

.

Proof.Since

![]() $h(\tau,X_\tau)\leq g(\tau,X_\tau)$

we can use the optional sampling theorem and obtain

$h(\tau,X_\tau)\leq g(\tau,X_\tau)$

we can use the optional sampling theorem and obtain

![]() $h(t_0,x_0) \leq \mathbb{E} [h(\tau+t_0,X_\tau+x_0)] \leq \mathbb{E} [g(\tau+t_0,X_\tau+x_0)] \leq V(t_0,x_0)$

.

$h(t_0,x_0) \leq \mathbb{E} [h(\tau+t_0,X_\tau+x_0)] \leq \mathbb{E} [g(\tau+t_0,X_\tau+x_0)] \leq V(t_0,x_0)$

.

We modify the function

![]() $h(t,x)=\big(1-\alpha^2\big)\int_{0}^{\infty}\exp\Big[ax-\frac{a^2}{2}t\Big] \mathop{}\!\mathrm{d} a$

from Lemma 1 slightly to

$h(t,x)=\big(1-\alpha^2\big)\int_{0}^{\infty}\exp\Big[ax-\frac{a^2}{2}t\Big] \mathop{}\!\mathrm{d} a$

from Lemma 1 slightly to

for some

![]() $0 < c < 1$

and

$0 < c < 1$

and

![]() $K>0$

to match the assumptions of Lemma 3. As a stopping time we choose

$K>0$

to match the assumptions of Lemma 3. As a stopping time we choose

![]() $\tau_0 = \inf \big\{n\geq 0\mid x+S_n \geq \alpha \sqrt{t+n}-1\big\}$

. Unfortunately there is no c such that (6) is globally S-subharmonic, and hence we have to choose c depending on the time horizon T. This makes the following result a bit technical.

$\tau_0 = \inf \big\{n\geq 0\mid x+S_n \geq \alpha \sqrt{t+n}-1\big\}$

. Unfortunately there is no c such that (6) is globally S-subharmonic, and hence we have to choose c depending on the time horizon T. This makes the following result a bit technical.

Theorem 2. (A lower bound for

![]() $V^{S}$

) Let

$V^{S}$

) Let

Given a time horizon

![]() $T>0$

, let

$T>0$

, let

![]() $c_1$

be the biggest solution smaller than 1 to

$c_1$

be the biggest solution smaller than 1 to

![]() $\frac{1}{2}\big(h_{c}\big(T+1, \alpha\sqrt{T}-1\big)+h_{c}\big(T+1,\alpha\sqrt{T}+1\big)\big) = h_{c}\big(T,\alpha\sqrt{T}\big)$

,

$\frac{1}{2}\big(h_{c}\big(T+1, \alpha\sqrt{T}-1\big)+h_{c}\big(T+1,\alpha\sqrt{T}+1\big)\big) = h_{c}\big(T,\alpha\sqrt{T}\big)$

,

![]() $c_2$

the unique positive solution of

$c_2$

the unique positive solution of

and

![]() $c=\min\{c_1,c_2\}$

. Let

$c=\min\{c_1,c_2\}$

. Let

![]() $a_0$

be the unique positive solution (in a) to

$a_0$

be the unique positive solution (in a) to

![]() $\frac{1}{2}\left(e^{a}+e^{-a}\right)e^{-\frac{c}{2} a^2}=1$

. If

$\frac{1}{2}\left(e^{a}+e^{-a}\right)e^{-\frac{c}{2} a^2}=1$

. If

then

![]() $h_c(t,x)$

is a lower bound for

$h_c(t,x)$

is a lower bound for

![]() $V^{S}(t,x)$

for all

$V^{S}(t,x)$

for all

![]() $t\geq T$

and

$t\geq T$

and

![]() $x\leq \alpha \sqrt{t}$

.

$x\leq \alpha \sqrt{t}$

.

Remark 1. Our numerical evaluations suggest that for

![]() $T\geq 4$

(8) is always satisfied, and for

$T\geq 4$

(8) is always satisfied, and for

![]() $T\geq 200$

we always have

$T\geq 200$

we always have

![]() $c=c_1$

.

$c=c_1$

.

Figure 1. The upper bound

![]() $V^{W}$

and the lower bound

$V^{W}$

and the lower bound

![]() $h_c$

for a fixed T. For a better illustration

$h_c$

for a fixed T. For a better illustration

![]() $c=0.6$

is chosen very small.

$c=0.6$

is chosen very small.

Proof. We divide the proof into three parts. First, we show that the stopped process

![]() $h_c\big(t\wedge\tau_0,x+S_{t\wedge\tau_0}\big)_{t\geq T}$

is a submartingale; second, we calculate K; and third, we show that

$h_c\big(t\wedge\tau_0,x+S_{t\wedge\tau_0}\big)_{t\geq T}$

is a submartingale; second, we calculate K; and third, we show that

![]() $h\big(\tau_0,x+S_{\tau_0}\big)\leq g\big(\tau_0,x+S_{\tau_0}\big)$

$h\big(\tau_0,x+S_{\tau_0}\big)\leq g\big(\tau_0,x+S_{\tau_0}\big)$

![]() $P_{T,x}$

-a.s. and use Lemma 3 to prove the statement. An illustration of the setting is given in Figure 1.

$P_{T,x}$

-a.s. and use Lemma 3 to prove the statement. An illustration of the setting is given in Figure 1.

First, we have to show that

![]() $\frac{1}{2}\!\left(h_{c}(t+1,x-1)+h_{c}(t+1,x+1)\right) \geq h_{c}(t,x)$

for every

$\frac{1}{2}\!\left(h_{c}(t+1,x-1)+h_{c}(t+1,x+1)\right) \geq h_{c}(t,x)$

for every

![]() $t\geq T$

and

$t\geq T$

and

![]() $x\leq \alpha \sqrt{t}-1$

; we will even show that it applies for all

$x\leq \alpha \sqrt{t}-1$

; we will even show that it applies for all

![]() $x\leq \alpha \sqrt{t}$

. The constant K has no influence on this inequality, so we set it to 1 for now. We have

$x\leq \alpha \sqrt{t}$

. The constant K has no influence on this inequality, so we set it to 1 for now. We have

\begin{align} f_c(t,x)& \,:\!=\, \frac{1}{2}\big(h_{c}(t+1,x-1)+h_{c}(t+1,x+1)\big) - h_{c}(t,x) \nonumber \\ & = \int_{0}^{\infty}\frac{1}{2} \exp\bigg[a(x+1)-\frac{c}{2}a^2(t+1)\bigg]\mathop{}\!\mathrm{d} a + \int_{0}^{\infty}\frac{1}{2} \exp\bigg[a(x-1)-\frac{c}{2}a^2(t+1)\bigg] \mathop{}\!\mathrm{d} a \nonumber \\ & \quad - \int_{0}^{\infty}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \mathop{}\!\mathrm{d} a \nonumber \\ & = \int^{\infty}_{0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg]\left[\frac{1}{2}\big(\mathrm{e}^a+\mathrm{e}^{-a}\big)\mathrm{e}^{-\frac{c}{2}a^2}-1 \right ]\mathop{}\!\mathrm{d} a. \end{align}

\begin{align} f_c(t,x)& \,:\!=\, \frac{1}{2}\big(h_{c}(t+1,x-1)+h_{c}(t+1,x+1)\big) - h_{c}(t,x) \nonumber \\ & = \int_{0}^{\infty}\frac{1}{2} \exp\bigg[a(x+1)-\frac{c}{2}a^2(t+1)\bigg]\mathop{}\!\mathrm{d} a + \int_{0}^{\infty}\frac{1}{2} \exp\bigg[a(x-1)-\frac{c}{2}a^2(t+1)\bigg] \mathop{}\!\mathrm{d} a \nonumber \\ & \quad - \int_{0}^{\infty}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \mathop{}\!\mathrm{d} a \nonumber \\ & = \int^{\infty}_{0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg]\left[\frac{1}{2}\big(\mathrm{e}^a+\mathrm{e}^{-a}\big)\mathrm{e}^{-\frac{c}{2}a^2}-1 \right ]\mathop{}\!\mathrm{d} a. \end{align}

The function

![]() $\lambda(a)\,:\!=\,\frac{1}{2}\big(\mathrm{e}^a+\mathrm{e}^{-a}\big)e^{-\frac{c}{2}a^2}-1$

has a unique positive root

$\lambda(a)\,:\!=\,\frac{1}{2}\big(\mathrm{e}^a+\mathrm{e}^{-a}\big)e^{-\frac{c}{2}a^2}-1$

has a unique positive root

![]() $a_0$

; for

$a_0$

; for

![]() $a\in[0,a_0]$

we have

$a\in[0,a_0]$

we have

![]() $\lambda(a)\geq 0$

, and for

$\lambda(a)\geq 0$

, and for

![]() $a\geq a_0$

,

$a\geq a_0$

,

![]() $\lambda(a)\leq 0$

. Suppose, for given (t, x), we have

$\lambda(a)\leq 0$

. Suppose, for given (t, x), we have

![]() $f_c(t,x)\geq 0$

. Let

$f_c(t,x)\geq 0$

. Let

![]() $\delta \geq 0$

,

$\delta \geq 0$

,

![]() $\varepsilon \in \mathbb{R}$

; we have

$\varepsilon \in \mathbb{R}$

; we have

\begin{align*} f_c(t+\delta,x+\varepsilon) & = \int^{a_0}_{0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a - \delta \frac{c}{2} a^2\bigg] \mathop{}\!\mathrm{d} a \\ & \quad + \int^{\infty}_{a_0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a - \delta \frac{c}{2} a^2\bigg] \mathop{}\!\mathrm{d} a \\ & \overset{(\ast)}\geq \int^{a_0}_{0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a_0 - \delta \frac{c}{2} a_0^2\bigg] \mathop{}\!\mathrm{d} a \\ & \quad + \int^{\infty}_{a_0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a_{0} - \delta \frac{c}{2} a_0^2\bigg] \mathop{}\!\mathrm{d} a \\ & = \exp\bigg[\varepsilon a_{0} - \delta \frac{c}{2} a_0^2\bigg]\, f_c(t,x) \geq 0. \end{align*}

\begin{align*} f_c(t+\delta,x+\varepsilon) & = \int^{a_0}_{0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a - \delta \frac{c}{2} a^2\bigg] \mathop{}\!\mathrm{d} a \\ & \quad + \int^{\infty}_{a_0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a - \delta \frac{c}{2} a^2\bigg] \mathop{}\!\mathrm{d} a \\ & \overset{(\ast)}\geq \int^{a_0}_{0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a_0 - \delta \frac{c}{2} a_0^2\bigg] \mathop{}\!\mathrm{d} a \\ & \quad + \int^{\infty}_{a_0}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \lambda(a) \exp\bigg[\varepsilon a_{0} - \delta \frac{c}{2} a_0^2\bigg] \mathop{}\!\mathrm{d} a \\ & = \exp\bigg[\varepsilon a_{0} - \delta \frac{c}{2} a_0^2\bigg]\, f_c(t,x) \geq 0. \end{align*}

Here,

![]() $(\ast)$

is true if

$(\ast)$

is true if

![]() $\varepsilon a - \delta \frac{c}{2} a^2 \geq \varepsilon a_{0} - \delta \frac{c}{2} a_0^2$

for

$\varepsilon a - \delta \frac{c}{2} a^2 \geq \varepsilon a_{0} - \delta \frac{c}{2} a_0^2$

for

![]() $a\leq a_{0}$

and

$a\leq a_{0}$

and

![]() $\varepsilon a - \delta \frac{c}{2} a^2 \leq \varepsilon a_{0} - \delta \frac{c}{2} a_0^2$

for

$\varepsilon a - \delta \frac{c}{2} a^2 \leq \varepsilon a_{0} - \delta \frac{c}{2} a_0^2$

for

![]() $a\geq a_{0}$

, which is the case if

$a\geq a_{0}$

, which is the case if

By assumption, we have

![]() $f_c(T,\alpha \sqrt{T})\geq 0$

. (If

$f_c(T,\alpha \sqrt{T})\geq 0$

. (If

![]() $c=c_1 $

as in all our computational examples, this is clear. If

$c=c_1 $

as in all our computational examples, this is clear. If

![]() $c=c_2<c_1$

, inspection of

$c=c_2<c_1$

, inspection of

![]() $f_c$

in (9) shows that

$f_c$

in (9) shows that

![]() $f_c>f_{c_1}$

.) The function

$f_c>f_{c_1}$

.) The function

![]() $\alpha \sqrt{t}$

is concave and

$\alpha \sqrt{t}$

is concave and

so for

![]() $t\geq T$

and

$t\geq T$

and

![]() $x\leq \alpha\sqrt{t}$

with

$x\leq \alpha\sqrt{t}$

with

![]() $(t,x)=\big(T+\delta,\alpha \sqrt{T}+\varepsilon\big)$

we have

$(t,x)=\big(T+\delta,\alpha \sqrt{T}+\varepsilon\big)$

we have

Putting this into (10) we get the condition

which is true by assumption. That concludes the first part of the proof.

Second, we want to choose K such that

![]() $h_c\big(t,\alpha\sqrt{t}\big)=g\big(t,\alpha\sqrt{t}\big) = \frac{\alpha}{\sqrt{t}\,}$

. We first show that this is possible, and then calculate K. We have

$h_c\big(t,\alpha\sqrt{t}\big)=g\big(t,\alpha\sqrt{t}\big) = \frac{\alpha}{\sqrt{t}\,}$

. We first show that this is possible, and then calculate K. We have

\begin{align*} h_c(t,x ) & = K \int_{0}^{\infty}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \mathop{}\!\mathrm{d} a = K\frac{1}{ct}\frac{\Phi_{ct}(x)}{\varphi_{ct}(x)} , \\ h_c\big(t,\alpha\sqrt{t}\big) & = K\frac{1}{ct}\frac{\Phi_{ct}\big(\alpha\sqrt{t}\big)}{\varphi_{ct}\big(\alpha\sqrt{t}\big)} = K\frac{1}{c\sqrt{t}\,}\frac{\Phi_{c}(\alpha)}{\varphi_{c}(\alpha)} , \end{align*}

\begin{align*} h_c(t,x ) & = K \int_{0}^{\infty}\exp\bigg[ax-\frac{c}{2}a^2t\bigg] \mathop{}\!\mathrm{d} a = K\frac{1}{ct}\frac{\Phi_{ct}(x)}{\varphi_{ct}(x)} , \\ h_c\big(t,\alpha\sqrt{t}\big) & = K\frac{1}{ct}\frac{\Phi_{ct}\big(\alpha\sqrt{t}\big)}{\varphi_{ct}\big(\alpha\sqrt{t}\big)} = K\frac{1}{c\sqrt{t}\,}\frac{\Phi_{c}(\alpha)}{\varphi_{c}(\alpha)} , \end{align*}

which depends only on

![]() $\frac{1}{\sqrt{t}\,}$

. Solving

$\frac{1}{\sqrt{t}\,}$

. Solving

![]() $h_c\big(t,\alpha\sqrt{t}\big) = \frac{\alpha}{\sqrt{t}\,}$

we get

$h_c\big(t,\alpha\sqrt{t}\big) = \frac{\alpha}{\sqrt{t}\,}$

we get

Third, we chose

![]() $\tau_0 = \inf \big\{n\geq 0\mid x+S_n \geq \alpha \sqrt{t+n}-1\big\}$

and need to show that

$\tau_0 = \inf \big\{n\geq 0\mid x+S_n \geq \alpha \sqrt{t+n}-1\big\}$

and need to show that

![]() $h_c(\tau_0,S_{\tau_0})\leq g(\tau_0,S_{\tau_0})$

. It is clear that

$h_c(\tau_0,S_{\tau_0})\leq g(\tau_0,S_{\tau_0})$

. It is clear that

![]() $S_{\tau_0}\in \big[\alpha\sqrt{\tau_0}-1,\alpha\sqrt{\tau_0}\big]$

. By the construction of K in the second part, we know that

$S_{\tau_0}\in \big[\alpha\sqrt{\tau_0}-1,\alpha\sqrt{\tau_0}\big]$

. By the construction of K in the second part, we know that

![]() $h_c\big(t,\alpha\sqrt{t}\big)= g\big(t,\alpha\sqrt{t}\big)$

. Since

$h_c\big(t,\alpha\sqrt{t}\big)= g\big(t,\alpha\sqrt{t}\big)$

. Since

![]() $h_c$

has strictly positive curvature, we know that

$h_c$

has strictly positive curvature, we know that

![]() $h(t, \cdot)$

has exactly one more intersection with

$h(t, \cdot)$

has exactly one more intersection with

![]() $g(t,\cdot)$

, which we denote by

$g(t,\cdot)$

, which we denote by

![]() $\alpha \sqrt{t}-d(t)$

. We will see that

$\alpha \sqrt{t}-d(t)$

. We will see that

![]() $d(t) \geq 1$

for

$d(t) \geq 1$

for

![]() $t\geq T$

and hence

$t\geq T$

and hence

![]() $h_c(t,x)\leq g(t,x)$

for

$h_c(t,x)\leq g(t,x)$

for

![]() $x\in \big[\alpha\sqrt{t}-d(t),\alpha\sqrt{t}\big]$

. We saw in the second part that

$x\in \big[\alpha\sqrt{t}-d(t),\alpha\sqrt{t}\big]$

. We saw in the second part that

![]() $\sqrt{t}\cdot h_c\big(t,\beta\sqrt{t}\big)$

is constant for any

$\sqrt{t}\cdot h_c\big(t,\beta\sqrt{t}\big)$

is constant for any

![]() $\beta>0$

. If, for some

$\beta>0$

. If, for some

![]() $x_0$

,

$x_0$

,

![]() $h_c(T,x_0)\leq g(T,x_0)=\frac{x_0}{T}$

, we set

$h_c(T,x_0)\leq g(T,x_0)=\frac{x_0}{T}$

, we set

![]() $\beta \,:\!=\,\frac{x_0}{\sqrt{T}\,}$

and see that, for all

$\beta \,:\!=\,\frac{x_0}{\sqrt{T}\,}$

and see that, for all

![]() $t>T$

,

$t>T$

,

If

![]() $x_0\leq \alpha\sqrt{T}-1$

, then

$x_0\leq \alpha\sqrt{T}-1$

, then

![]() $ \frac{x_0}{\sqrt{T}\,}\sqrt{t}\leq \alpha\sqrt{t}-1$

. If

$ \frac{x_0}{\sqrt{T}\,}\sqrt{t}\leq \alpha\sqrt{t}-1$

. If

![]() $d(T) \geq 1 $

then we set

$d(T) \geq 1 $

then we set

![]() $x_0 \,:\!=\, \alpha \sqrt{T}-d(T)$

. We can now conclude that for

$x_0 \,:\!=\, \alpha \sqrt{T}-d(T)$

. We can now conclude that for

![]() $t \geq T$

we have

$t \geq T$

we have

![]() $d(t) \geq 1$

, hence it is enough to show that

$d(t) \geq 1$

, hence it is enough to show that

![]() $d(T)\geq 1$

. For

$d(T)\geq 1$

. For

![]() $c=c_2$

this is true by assumption. In general

$c=c_2$

this is true by assumption. In general

![]() $c\leq c_2$

, and we have

$c\leq c_2$

, and we have

![]() $\frac{\partial }{\partial x}h_c(t,x) \leq \frac{\partial }{\partial x}h_{c_2}(t,x)$

. Since

$\frac{\partial }{\partial x}h_c(t,x) \leq \frac{\partial }{\partial x}h_{c_2}(t,x)$

. Since

![]() $h_{c_2}\big(T,\alpha \sqrt{T}\big)= h_{c_2}\big(T,\alpha \sqrt{T}\big)$

we have

$h_{c_2}\big(T,\alpha \sqrt{T}\big)= h_{c_2}\big(T,\alpha \sqrt{T}\big)$

we have

and the statement follows. Now

![]() $h_c$

and

$h_c$

and

![]() $\tau_0$

fulfill the conditions of Lemma 3. This completes the proof.

$\tau_0$

fulfill the conditions of Lemma 3. This completes the proof.

Remark 2. The only properties of S we used in the proof are that S has limited jump sizes upwards and that

![]() $m_{S_1}(a)=\mathbb{E} \big[\mathrm{e}^{a S_1}\big]$

has only one positive intersection with

$m_{S_1}(a)=\mathbb{E} \big[\mathrm{e}^{a S_1}\big]$

has only one positive intersection with

![]() $\mathrm{e}^{\frac{c}{2}a^2}$

(i.e.

$\mathrm{e}^{\frac{c}{2}a^2}$

(i.e.

![]() $\frac{1}{2}( \mathrm{e}^{a} + \mathrm{e}^{-a}) \mathrm{e}^{-\frac{c}{2}a^2}-1$

has only one positive root). This kind of lower bound can be constructed for any random walk with increments that fulfill these two conditions. This would of course result in different values for c.

$\frac{1}{2}( \mathrm{e}^{a} + \mathrm{e}^{-a}) \mathrm{e}^{-\frac{c}{2}a^2}-1$

has only one positive root). This kind of lower bound can be constructed for any random walk with increments that fulfill these two conditions. This would of course result in different values for c.

Some values for c are given in Table 1.

Table 1. Values of c for various T.

4. Error of the bounds

We want to show that the relative error of the constructed bounds is of order

![]() $\mathcal{O}\big(\frac{1}{T}\big)$

for

$\mathcal{O}\big(\frac{1}{T}\big)$

for

![]() $x\geq 0$

. First, we show that

$x\geq 0$

. First, we show that

![]() $c = c(T) \geq \frac{T-1}{T}$

for T large enough. Indeed, for

$c = c(T) \geq \frac{T-1}{T}$

for T large enough. Indeed, for

![]() $c_1$

, evaluating (9) for

$c_1$

, evaluating (9) for

![]() $c = \frac{T}{T+1}$

yields

$c = \frac{T}{T+1}$

yields

which can be seen to be positive for T large enough, yielding

![]() $c_1\geq \frac{T}{T+1}\geq \frac{T-1}{T}$

. We evaluate (7) for

$c_1\geq \frac{T}{T+1}\geq \frac{T-1}{T}$

. We evaluate (7) for

![]() $c = \frac{T-1}{T}$

and get, with elementary estimates,

$c = \frac{T-1}{T}$

and get, with elementary estimates,

\begin{align*} h_{\frac{T-1}{T}}\Big(T,\alpha\sqrt{T}-1\Big) & = \frac{K}{\sqrt{T-1}\,}\frac{\Phi\left(\frac{\alpha \sqrt{T}-1}{\sqrt{T}}\right)}{\varphi\left(\frac{\alpha \sqrt{T}-1}{\sqrt{T}}\right)}\\[2pt] & \leq \frac{K}{\sqrt{T-1}\,}\left(\frac{\Phi\left(\frac{\alpha \sqrt{T}}{\sqrt{T}}\right) - \frac{1}{\sqrt{T-1}\,}\varphi\left(\frac{\alpha \sqrt{T}}{\sqrt{T}}\right)}{\varphi\left(\frac{\alpha \sqrt{T}}{\sqrt{T}}\right)\exp\left[\frac{\alpha\sqrt{T}-\frac{1}{2}}{T-1}\right]}\right) \\[2pt] & = \exp\bigg[{-}\frac{\alpha\sqrt{T}-\frac{1}{2}}{T-1}\bigg]\left(\frac{\alpha }{\sqrt{T}\,} - \frac{K}{T-1}\right) \leq \frac{\alpha \sqrt{T}-1}{T}\end{align*}

\begin{align*} h_{\frac{T-1}{T}}\Big(T,\alpha\sqrt{T}-1\Big) & = \frac{K}{\sqrt{T-1}\,}\frac{\Phi\left(\frac{\alpha \sqrt{T}-1}{\sqrt{T}}\right)}{\varphi\left(\frac{\alpha \sqrt{T}-1}{\sqrt{T}}\right)}\\[2pt] & \leq \frac{K}{\sqrt{T-1}\,}\left(\frac{\Phi\left(\frac{\alpha \sqrt{T}}{\sqrt{T}}\right) - \frac{1}{\sqrt{T-1}\,}\varphi\left(\frac{\alpha \sqrt{T}}{\sqrt{T}}\right)}{\varphi\left(\frac{\alpha \sqrt{T}}{\sqrt{T}}\right)\exp\left[\frac{\alpha\sqrt{T}-\frac{1}{2}}{T-1}\right]}\right) \\[2pt] & = \exp\bigg[{-}\frac{\alpha\sqrt{T}-\frac{1}{2}}{T-1}\bigg]\left(\frac{\alpha }{\sqrt{T}\,} - \frac{K}{T-1}\right) \leq \frac{\alpha \sqrt{T}-1}{T}\end{align*}

for T large enough, so that

![]() $c_2 \geq \frac{T-1}{T}$

. We obtain

$c_2 \geq \frac{T-1}{T}$

. We obtain

![]() $c = \min \{c_1,c_2\}\geq \frac{T-1}{T}$

. We now calculate the asymptotic relative error between

$c = \min \{c_1,c_2\}\geq \frac{T-1}{T}$

. We now calculate the asymptotic relative error between

![]() $V_\mathrm{u}$

and

$V_\mathrm{u}$

and

![]() $V_\mathrm{l}$

in

$V_\mathrm{l}$

in

![]() $x = 0$

:

$x = 0$

:

\begin{align*} & \frac{V_\mathrm{u}(T,0)}{V_\mathrm{l}(T,0)}-1 = \frac{h(T,0)}{h_c(T,0)}-1 = \frac{1-\alpha^2}{K}\sqrt{c}-1 =\frac{1-\alpha^2}{\alpha}\frac{\Phi\left(\frac{\alpha}{\sqrt{c}\,}\right)}{\varphi\left(\frac{\alpha}{\sqrt{c}\,}\right)}-1 .\end{align*}

\begin{align*} & \frac{V_\mathrm{u}(T,0)}{V_\mathrm{l}(T,0)}-1 = \frac{h(T,0)}{h_c(T,0)}-1 = \frac{1-\alpha^2}{K}\sqrt{c}-1 =\frac{1-\alpha^2}{\alpha}\frac{\Phi\left(\frac{\alpha}{\sqrt{c}\,}\right)}{\varphi\left(\frac{\alpha}{\sqrt{c}\,}\right)}-1 .\end{align*}

We use the approximation

\begin{align*} \frac{\Phi\left(\frac{\alpha}{\sqrt{c}\,}\right)}{\varphi\left(\frac{\alpha}{\sqrt{c}\,}\right)} & \approx \exp\bigg[{-}\frac{\alpha^2(c-1)}{2c}\bigg]\left(\frac{\Phi(\alpha)}{\varphi(\alpha)} + \frac{\alpha\big(1-\sqrt{c}\big)}{\sqrt{c}}\right) \\ & = \exp\bigg[{-}\frac{\alpha^2(c-1)}{2c}\bigg]\left(\frac{\alpha}{1-\alpha^2} + \frac{\alpha\big(1-\sqrt{c}\big)}{\sqrt{c}}\right)\end{align*}

\begin{align*} \frac{\Phi\left(\frac{\alpha}{\sqrt{c}\,}\right)}{\varphi\left(\frac{\alpha}{\sqrt{c}\,}\right)} & \approx \exp\bigg[{-}\frac{\alpha^2(c-1)}{2c}\bigg]\left(\frac{\Phi(\alpha)}{\varphi(\alpha)} + \frac{\alpha\big(1-\sqrt{c}\big)}{\sqrt{c}}\right) \\ & = \exp\bigg[{-}\frac{\alpha^2(c-1)}{2c}\bigg]\left(\frac{\alpha}{1-\alpha^2} + \frac{\alpha\big(1-\sqrt{c}\big)}{\sqrt{c}}\right)\end{align*}

and get, with

![]() $c = \frac{T-1}{T}$

,

$c = \frac{T-1}{T}$

,

\begin{align*} \frac{h(T,0)}{h_c(T,0)}-1 & \approx \exp\bigg[-\frac{\alpha^2(c-1)}{2c}\bigg]\left(1+\frac{\alpha\big(1-\sqrt{c}\big)}{\sqrt{c}}\right) -1\\ & = \exp\bigg[\frac{\alpha^2}{2T-2}\bigg]\left(1+\alpha\left(\sqrt{\frac{T-1}{T}}-1\right)\right)-1 = \mathcal{O}\bigg(\frac{1}{T}\bigg).\end{align*}

\begin{align*} \frac{h(T,0)}{h_c(T,0)}-1 & \approx \exp\bigg[-\frac{\alpha^2(c-1)}{2c}\bigg]\left(1+\frac{\alpha\big(1-\sqrt{c}\big)}{\sqrt{c}}\right) -1\\ & = \exp\bigg[\frac{\alpha^2}{2T-2}\bigg]\left(1+\alpha\left(\sqrt{\frac{T-1}{T}}-1\right)\right)-1 = \mathcal{O}\bigg(\frac{1}{T}\bigg).\end{align*}

It is now straightforward to check that, for

![]() $\alpha \sqrt{T} \geq x\geq 0$

,

$\alpha \sqrt{T} \geq x\geq 0$

,

This yields

for all

![]() $\alpha \sqrt{T} \geq x\geq 0$

.

$\alpha \sqrt{T} \geq x\geq 0$

.

5. Computational results

In this section we show how to compute the continuation and stopping set for the Chow–Robbins game. In 2013, Häggström and Wästlund [Reference Häggström and Wästlund4] computed stopping and continuation points starting from (0, 0). They used another unsymmetric notation for the problem. We give their bounds transformed into our setting. They chose a, rather large, time horizon of

![]() $T=10^{7}$

, and set

$T=10^{7}$

, and set

![]() $V^S_\mathrm{l}(T,x)= \max\left\{\frac{x}{T},0\right\}$

as a lower bound and

$V^S_\mathrm{l}(T,x)= \max\left\{\frac{x}{T},0\right\}$

as a lower bound and

as an upper bound. Then they used backward induction to calculate

![]() $V^S(n,x)$

for

$V^S(n,x)$

for

![]() $n<T$

and

$n<T$

and

![]() $i\in\{\mathrm{u},\mathrm{l}\}$

with

$i\in\{\mathrm{u},\mathrm{l}\}$

with

![]() $V_{i}^S(n,x) = \max \left \{ \frac{x}{n}, \mathbb{E}\big[V_i^{S}(n+1,x+X_i)\big] \right \}$

. If

$V_{i}^S(n,x) = \max \left \{ \frac{x}{n}, \mathbb{E}\big[V_i^{S}(n+1,x+X_i)\big] \right \}$

. If

![]() $V_\mathrm{u}(n,x)=\frac{x}{n}$

then

$V_\mathrm{u}(n,x)=\frac{x}{n}$

then

![]() $(n,x)\in D$

; if

$(n,x)\in D$

; if

![]() $V_\mathrm{l}(n,x)>\frac{x}{n}$

then

$V_\mathrm{l}(n,x)>\frac{x}{n}$

then

![]() $(n,x)\in C$

. In this way they were able to decide for all but seven points

$(n,x)\in C$

. In this way they were able to decide for all but seven points

![]() $(n,x)\in\mathbb{N}\times\mathbb{Z}$

with

$(n,x)\in\mathbb{N}\times\mathbb{Z}$

with

![]() $n\leq 1000$

whether they belong to C or D.

$n\leq 1000$

whether they belong to C or D.

We use backward induction from a finite time horizon as well, but use the much sharper bounds given in Sections 2 and 3. For our upper bound this has a nice intuition. We play the Chow–Robbins game up to the time horizon T, then we change the game to the favorable

![]() $\frac{W_t}{t}$

game, which slightly rises our expectation.

$\frac{W_t}{t}$

game, which slightly rises our expectation.

With a time horizon of

![]() $T=10^{6}$

we are able to calculate all the stopping and continuation points

$T=10^{6}$

we are able to calculate all the stopping and continuation points

![]() $(n,x)\in\mathbb{N}\times\mathbb{Z}$

with

$(n,x)\in\mathbb{N}\times\mathbb{Z}$

with

![]() $n\leq 489\,241$

. We show that all open points in [Reference Häggström and Wästlund4] belong to D.

$n\leq 489\,241$

. We show that all open points in [Reference Häggström and Wästlund4] belong to D.

Table 2. Exceptions for Theorem 3.

Unlike [Reference Häggström and Wästlund4] we use the symmetric notation. Let

![]() $X_i$

be i.i.d. random variables with

$X_i$

be i.i.d. random variables with

![]() $P(X_i =-1) =P(X_i =1) = \frac{1}{2} $

and

$P(X_i =-1) =P(X_i =1) = \frac{1}{2} $

and

![]() $S_n=\sum^{n}_{i=1} X_i$

. We choose a time horizon T and use

$S_n=\sum^{n}_{i=1} X_i$

. We choose a time horizon T and use

![]() $V^{W}$

given in Lemma 1 as an upper bound,

$V^{W}$

given in Lemma 1 as an upper bound,

![]() $V^S_\mathrm{u}(T,x)= V^{W}(T,x)$

, and

$V^S_\mathrm{u}(T,x)= V^{W}(T,x)$

, and

![]() $h_c$

given in Theorem 2 as a lower bound,

$h_c$

given in Theorem 2 as a lower bound,

![]() $V^S_\mathrm{l}(T,x)= h_c(T,x)$

, for

$V^S_\mathrm{l}(T,x)= h_c(T,x)$

, for

![]() $x\in \mathbb{Z}$

with

$x\in \mathbb{Z}$

with

![]() $x\leq \alpha \sqrt{T}$

. For

$x\leq \alpha \sqrt{T}$

. For

![]() $i\in\{\mathrm{u},\mathrm{l}\}$

we now recursively calculate

$i\in\{\mathrm{u},\mathrm{l}\}$

we now recursively calculate

![]() $V_i^S(n,x) = \max \left \{ \frac{x}{n}, \frac{1}{2}\big(V_i^S(n+1,x+1) + V_i^S(n+1,x-1)\big) \right \}$

. If

$V_i^S(n,x) = \max \left \{ \frac{x}{n}, \frac{1}{2}\big(V_i^S(n+1,x+1) + V_i^S(n+1,x-1)\big) \right \}$

. If

![]() $V_\mathrm{l}(n,x)>\frac{x}{n}$

, then

$V_\mathrm{l}(n,x)>\frac{x}{n}$

, then

![]() $(n,x)\in C$

. To check if

$(n,x)\in C$

. To check if

![]() $(n,x)\in D$

we use, instead of

$(n,x)\in D$

we use, instead of

![]() $V_\mathrm{u}(n,x)=\frac{x}{n}$

, the slightly stronger, but numerically easier to evaluate, condition

$V_\mathrm{u}(n,x)=\frac{x}{n}$

, the slightly stronger, but numerically easier to evaluate, condition

![]() $(n,x)\in D$

if

$(n,x)\in D$

if

![]() $\frac{1}{2}\big(V_\mathrm{u}^S(n+1,x+1) + V_\mathrm{u}^S(n+1,x-1)\big)< \frac{x}{n}$

. We use

$\frac{1}{2}\big(V_\mathrm{u}^S(n+1,x+1) + V_\mathrm{u}^S(n+1,x-1)\big)< \frac{x}{n}$

. We use

![]() $T=10^{6}$

to calculate V(0, 0) and the integer thresholds

$T=10^{6}$

to calculate V(0, 0) and the integer thresholds

![]() $\hat b(n) \,:\!=\, \lceil b(n) \rceil$

. For 34 values of

$\hat b(n) \,:\!=\, \lceil b(n) \rceil$

. For 34 values of

![]() $n\leq 10^{6}$

the exact value

$n\leq 10^{6}$

the exact value

![]() $\hat b(n)$

cannot be determined this way; the smallest such value is

$\hat b(n)$

cannot be determined this way; the smallest such value is

![]() $n = 489\,242$

.

$n = 489\,242$

.

Theorem 3. For the stopping problem (4) starting in

![]() $(0,0)$

, the stopping boundary

$(0,0)$

, the stopping boundary

![]() $\hat b$

for

$\hat b$

for

![]() $n\leq 489\,241$

is given by

$n\leq 489\,241$

is given by

with the eight exceptions shown in Table 2. For the value function we have

Häggström and Wästlund used a different notation (denoted with a ′ here), with

![]() $P\big(X^{\prime}_i =0\big)$

$P\big(X^{\prime}_i =0\big)$

![]() $=P\big(X^{\prime}_i =1\big) = \frac{1}{2}$

. Our functions and values translate to

$=P\big(X^{\prime}_i =1\big) = \frac{1}{2}$

. Our functions and values translate to

![]() $ V(n,x) = 2V^{\prime}\big(n,\frac{x+n}{2}\big)-1$

,

$ V(n,x) = 2V^{\prime}\big(n,\frac{x+n}{2}\big)-1$

,

![]() $b^{\prime}(n)=\left \lceil \frac{\alpha\sqrt{n}+n}{2} -\rho^{\prime}(n) \right \rceil$

with

$b^{\prime}(n)=\left \lceil \frac{\alpha\sqrt{n}+n}{2} -\rho^{\prime}(n) \right \rceil$

with

![]() $\rho^{\prime}(n) = \frac{1}{4} - \frac{1}{15.8+9\sqrt[4]{n}\,}$

, and

$\rho^{\prime}(n) = \frac{1}{4} - \frac{1}{15.8+9\sqrt[4]{n}\,}$

, and

The value of V(0, 0) is calculated with

![]() $T=2\cdot10^{6}$

.

$T=2\cdot10^{6}$

.

Remark 3. The function

![]() $\big(7.9+4.54\sqrt[4]{n}\big)^{-1} $

is constructed from our computed data. It is an interesting question whether it is indeed possible to show that

$\big(7.9+4.54\sqrt[4]{n}\big)^{-1} $

is constructed from our computed data. It is an interesting question whether it is indeed possible to show that

![]() $\alpha \sqrt{t} - b(t) = \frac{1}{2} -\mathcal{O}\big(t^{-\frac{1}{4}}\big)$

. A method to show that

$\alpha \sqrt{t} - b(t) = \frac{1}{2} -\mathcal{O}\big(t^{-\frac{1}{4}}\big)$

. A method to show that

![]() $\lim_{t\to \infty} \alpha \sqrt{t} - b(t) = \frac{1}{2}$

was introduced in [Reference Leung Lai, Yao and Aitsahlia6]. This is reflected nicely in our calculations.

$\lim_{t\to \infty} \alpha \sqrt{t} - b(t) = \frac{1}{2}$

was introduced in [Reference Leung Lai, Yao and Aitsahlia6]. This is reflected nicely in our calculations.

We also calculated

![]() $V^{S}$

and b for non-integer values. We did this by choosing an integer D and then calculating

$V^{S}$

and b for non-integer values. We did this by choosing an integer D and then calculating

![]() $V^{S}$

on

$V^{S}$

on

![]() $\frac{1}{D}\mathbb{N}\times\frac{1}{D}\mathbb{Z}$

with the method described above; for most plots we used

$\frac{1}{D}\mathbb{N}\times\frac{1}{D}\mathbb{Z}$

with the method described above; for most plots we used

![]() $D=300$

. Some plots of b and

$D=300$

. Some plots of b and

![]() $V^{S}$

are given in Figures 2–4. The method enables us to get very detailed impressions of b and

$V^{S}$

are given in Figures 2–4. The method enables us to get very detailed impressions of b and

![]() $V^{S}$

that inspire further analytical research. In [Reference Christensen and Fischer2] the authors showed that

$V^{S}$

that inspire further analytical research. In [Reference Christensen and Fischer2] the authors showed that

![]() $V^S$

is not differentiable on a dense subset of

$V^S$

is not differentiable on a dense subset of

![]() $C^S$

, and that

$C^S$

, and that

![]() $C^S$

is not convex.

$C^S$

is not convex.

Figure 2. The boundaries of the continuation sets. While asymptotically similar, they behave differently close to 0.

Figure 3. The value functions

![]() $V^{W}$

and

$V^{W}$

and

![]() $V^{S}$

in

$V^{S}$

in

![]() $t=1$

.

$t=1$

.

![]() $V^{S}$

does not follow the smooth fit principle and is not everywhere smooth on C.

$V^{S}$

does not follow the smooth fit principle and is not everywhere smooth on C.

Figure 4. The value functions

![]() $V^{W}$

and

$V^{W}$

and

![]() $V^{S}$

in

$V^{S}$

in

![]() $t=10$

.

$t=10$

.

Funding information

There are no funding bodies to thank relating to the creation of this article.

Competing interests

There were no competing interests to declare which arose during the preparation or publication process of this article.