1. Introduction

1.1 The original version of this paper was written for the UK Actuarial Profession's Financial Insurance Risk Management (FIRM) conference of June 2009. However, we believe it will be of interest to other actuarial practice areas such as non-life insurance and life assurance or to anyone involved in economic capital modelling, and interested in the use of economic capital output in pricing, business planning, capital allocation decisions and other activities.

1.2 This paper is relatively wide in its scope. We originally started off with a brief that had a large technical bias, nudging towards the more complex areas of dependency modelling such as copulas, but as we progressed in our writing we found ourselves addressing more fundamental questions such as:

– What do we mean by dependency?

– Is correlation coefficient a good measure of dependency?

– Are correlations stable over time and how do they vary?

– Are diversification benefits realistic?

– How often do we confuse spurious relationships for dependency?

– What do people mean when they talk about ‘tail correlation’?

– How does one communicate to the Board the impact of dependency modelling on economic capital results?

1.3 Diversification modelling is an important topic. Diversification benefits can amount to anything in the region of 25–50% of an insurance company's undiversified total economic capital, assuming a ground-up approach. It is therefore of great importance that this number is realistic and that any modelling underpinning the result is analytically robust and well documented. Analytical robustness, documentation and other criteria is becoming important for those organisations seeking internal model approval under Solvency II.

1.4 The words dependency and correlation have recently suffered a rather negative press in the wake of the current financial crisis within the banking industry. Typical comments along the lines of “it was the fault of the Gaussian copula – it doesn't capture tail dependency” or “the correlations were underestimated” or even “anything that relies on correlation is charlatanism” were seen in respect of structured credit securities and similarly complex financial products. Often a result of mathematical models undone by their weakest link: their assumptions or their statistical properties, such as the presence of absence of tail dependency. There is a clear need for a greater understanding of dependency and correlation and their limitations if we are to avoid a repeat of the experience of the structured credit world in the wider financial community.

1.5 This paper is a practical one, and not a theoretical one, and we attempt to illustrate theoretical concepts and ideas with as many numerical examples as possible whilst also highlighting many practical considerations in measuring, implementing and communicating the impact of correlations and dependency structures on economic capital results.

2. Executive Summary

Before going into detail within each of the ten main sections of this paper it is useful to provide an overview of the topics discussed herein.

2.1 Why Diversification is Important

We begin by defining economic capital and then go on to describe the more recent regulatory developments occurring under Basel II, Solvency II and the UK's current ICA regime. Particular attention is also played to the diversification aspects of the internal model approval process under Solvency II and the challenging requirements thereof.

2.2 Correlation as the Simplest Type of Dependency

Section 2 focuses on a particular type of dependency, the correlation coefficient. Correlation and dependency are often used interchangeably and yet they mean quite distinctly different things. We discuss the differences between them, and describe various types of correlation coefficients such as the linear (Pearson) correlation, the Spearman correlation coefficient and the Kendall Tau correlation. We also discuss, in some detail, the problems of using correlation as a sole measure of dependency.

2.3 Risk Aggregation

There are different ways to aggregate risks for an insurance company in its economic capital modelling efforts. A brief description is given of the main risk aggregation methods, namely variance-covariance matrix, copulas and causal models. Later on in the paper, separate sections are devoted to each of these methods, where their advantages and disadvantages are listed, taking into account considerations such as model accuracy, model consistency and ease of communication.

2.4 Data and Model Uncertainties

When looking at empirical evidence for dependency relationships, it is important not to mistake spurious relationships for dependency relationships between risks. There ideally should be some economic rationale behind hypotheses put forward. We take a look at Ancombe's quartet which illustrates how both statistics and visual data plots are necessary when drawing conclusions on dependency. Later on in the section we discuss the issues and considerations arising in model selection and model calibration.

2.5. Variance-Covariance Matrix Methods

2.5.1 The variance-covariance matrix is the simplest of the aggregation methods that are discussed. Following a discussion of the advantages and disadvantages of this method, other topics are discussed, from parameterisation to challenges such as the specification of variance-covariance matrix cross-terms, so common in large insurance groups, and the sometimes ignored topic of positive semi-definitive matrices.

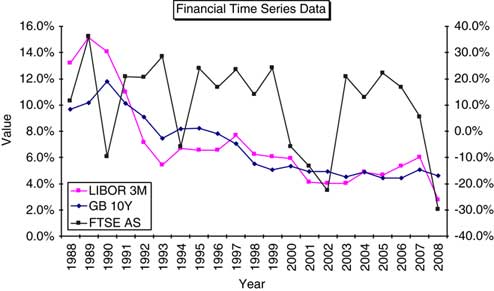

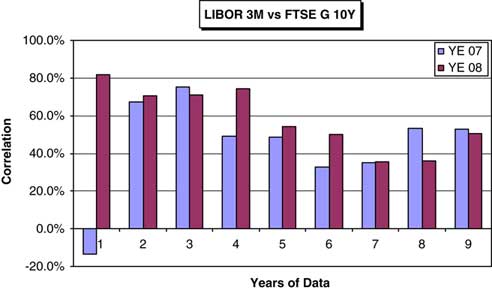

2.5.2 Despite the method being relatively straightforward parameterisation remains an issue, as it indeed it does with the other methods. Correlations between financial time series data can show marked variation according to the time period investigated. Such marked variation is illustrated in the numerical examples shown.

2.6 Copula Modelling Methods

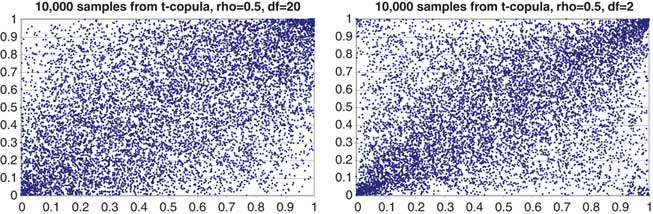

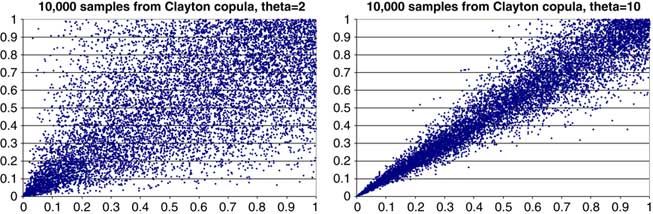

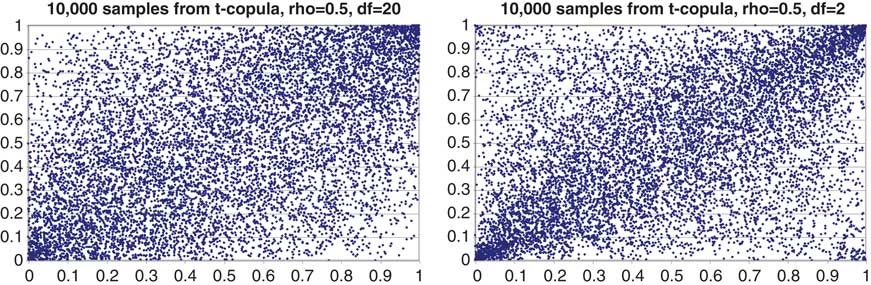

2.6.1 Copula modelling relies on more sophisticated modelling approaches using Monte Carlo simulation together with marginal risk distributions. The additional flexibility comes at the expense of complexities caused by copula selection, parameterisation and an increase in communication issues. Different copulas are discussed, from the more popular copulas such as the Gaussian and t copulas to those of the Archimedean family such as the Gumbel and Clayton copulas.

2.6.2 We have also constructed a hypothetical insurance company, ABC Insurance Company, to compare and contrast the economic capital modelling results from use of the variance-covariance matrix to those arising through use of either the Gaussian or t copulas.

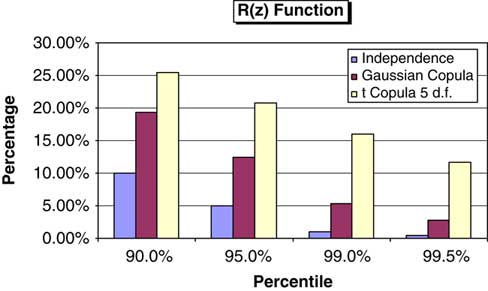

2.7 Tail Dependency

2.7.1 The concept of Tail dependency has arisen, in more recent times, as a description of the observation that large losses for different risks tend to occur more often at the same time than otherwise would be predicted by correlations estimated during ‘benign’ market conditions.

2.7.2 This section discusses tail dependency and so-called ‘tail correlations’ proposed by regulators and the issues arising from their use. Lastly, we continue with the ABC Insurance Company example to show that under certain conditions such ‘tail correlations’ need not be as large as would otherwise have first been thought.

2.8 Causal Modelling Methods

Finally the most sophisticated, intuitively appealing and potentially the most accurate of the risk aggregation methods is causal modelling i.e. dependency modelling with common risk drivers. However, their use raises other issues such as transparency, parameterisation, and the possible inducement of a false sense of accuracy for what can often be viewed as ‘black box’ models.

2.9 Communication of Economic Capital Modelling Dependency Impacts

2.9.1 This section covers the issues relating to the communication of dependency modelling approaches and results to the board and senior management within an organisation. How does one describe say a copula, what it does and how it impacts the company's overall economic capital to an often non-technical audience.

2.9.2 We present a wide range of methods that could be used, discussing their advantages and disadvantages. Some of the methods may also be of use for determination copulas and their parameters if similar calculations are made from empirical data.

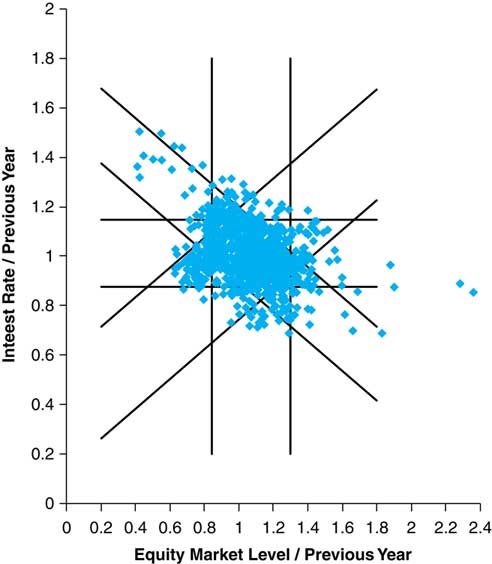

2.10 Using Half-Space Probabilities to Capture Dependencies

The last section of the paper investigates combined stress tests and two-way correlations, the latter of which has appeared in recent CEIOPS papers on risk calibration. Finally, there is a discussion of half-spaces as an alternative to the more common quadrant approaches used to consider extreme events.

3. Why Diversification Is Important

3.1 Economic Capital

3.1.1 A financial institution, be it an insurance company or a bank, faces a multitude of risks that could cause a financial loss. Economic capital is the realistic amount of capital that is needed to cover losses at a certain risk tolerance level. It captures a wide spectrum of risks such as insurance risk, market risk, credit risk and operational risk, as well as dependencies between them and various other complexities like transferability of capital, and expresses all of this as a single number.

3.1.2 There are three main components of an economic capital definition:

(i) risk measure

(ii) probability threshold and

(iii) time horizon

A company may do an economic capital calculations according to an external criteria laid down by the regulators for regulatory capital purposes or other criteria e.g. to satisfy specific standards prescribed by a rating agency.

3.1.3 Currently, the most popular risk measure that is used in banking and insurance is the one-year 99.5% Value at Risk (VaR). For example, under the UK's Individual Capital Assessment (ICA) regime and Solvency II, an insurance company needs to hold enough capital such that there is a probability of 99.5% of survival over a one-year time horizon, or in other words the probability of insolvency over 12-months is no more than 0.5%.

3.1.4 However, not all risks the company is facing will cause losses at the same time. Some areas of business may experience extremely high financial losses whilst others average losses, or even profits – an effect known as ‘diversification’. Many firms calculate the capital requirement for each risk in isolation, ignoring the effect of other risks. The effect of diversification is visible when the overall capital required for an insurance company at the 99.5% level is less than the sum of the 99.5% individual capital amounts for each risk.

3.1.5 The extent to which the aggregate 99.5% capital differs from a straight sum of the 99.5% individual capital amounts is a measure of the level of diversification between risks. The lower the degree of dependency between risks the greater the diversification benefit.

3.1.6 Diversification is not the only balancing item to reconcile single risk capital calculations to a total company level. Interaction effects may also play a part, where a development in one risk may amplify another. For example, an insured loss may be recoverable after some delay from reinsurance cover, creating an exposure to the risk of reinsurer default in the meantime.

3.1.7 The diversification benefit depends, among other things, on the level from which aggregation starts. For example, if we started aggregating at the “equities, property, fixed interest” level we are going to get a different diversification benefit than if we start aggregating at the UK equities, US equities, Asian equities, UK fixed interest, US fixed interest, Asian fixed interest level.

3.1.8 The extent of the level of diversification between risks varies from company to company. In fact, the recent CRO Forum QIS 4 benchmarking study of 2008 suggested that diversification reduces economic capital by around 40% on average.

3.2 Regulatory Developments

The use of economic capital models is far greater today compared with a few years ago. One of the key drivers to their more regular use within financial institutions has been the evolution of legislation for insurance companies and banks. Two of the more influential pieces of legislation have been the developments arising under Basel II (Banking) and Solvency II (Insurance).Footnote 1

3.2.1 Basel II

3.2.1.1 Basel II is the second of the Basel Accords issued by the Basel Committee on Banking Supervision. Its purpose being to create an international standard that banking regulators can use when creating regulations about how much capital banks need to hold against financial and operational risks.

3.2.1.2 Basel II uses the three pillars approach, where the first pillar specifies a minimum capital amount, the second pillar is a supervisory review and the third pillar is a market discipline. From an economic capital modelling perspective the three major components of risk that are covered are credit risk, operational risk and market risk. Furthermore within each major risk there are different permissible approaches to the quantification of risk.Footnote 2

3.2.2 Solvency II

3.2.2.1 Solvency II is a risk-based approach to insurance company supervision based on a three pillar approach similar to the banking industry.

3.2.2.2 The first pillar contains the quantitative requirements for valuing assets and liabilities and capital requirements and capital resources. There are two separate capital requirements, the Solvency Capital Requirement (‘SCR’) and the Minimum Capital Requirement (MCR). The SCR is a risk-based requirement and the key solvency control level. Solvency II sets out two possible methods for the calculation of the SCR:

(i) European Standard Formula; or

(ii) Firms’ own Internal Model for calculating Economic Capital.

The SCR will cover all the quantifiable risks an insurer or reinsurer faces and will take into account any risk mitigation techniques such as reinsurance. For details of the current CEIOPS advice on the internal model and standard formula standards and calibrations, refer to the CEIOPS website: www.ceiops.eu.

3.2.2.3 The second pillar contains qualitative requirements on undertakings such as risk management as well as supervisory activities. The third pillar covers supervisory reporting and disclosure.

3.3 Solvency II – Internal Model Approval

3.3.1 The modelling of dependencies and calculation of the overall diversification benefits goes beyond a pure bottom line insurance company impact. With the advent of the Internal Model Approval Process (‘IMAP’) under Solvency II, and company desires to gain approvalFootnote 3 of their economic capital models for computing the SCR, various other criteria, often otherwise ignored, suddenly become important.

3.3.2 In particular with reference to CEIOPS (2010d).

3.3.2.1 Use Test (Section 3 of the CEIOPS Paper)

1) Senior management, including the administrative or management body shall be able to demonstrate understanding of the internal model and how this fits with their business model and risk-management framework.

2) Senior management, including the administrative or management body shall be able to demonstrate understanding of the limitations of the internal model and that they take account of these limitations in their decision-making.

3) The timely calculation of results is essential. The administrative, management or supervisory body will need to ensure that the undertaking avoids significant time lags between the calculation of model output and the actual use of the model output for decision making purposes.

3.3.2.2 One approach is to require board members to become familiar with say copula methodologies, at least on a very high level, and whatever dependencies are buried inside third party economic scenario generators. An alternative approach is to simplify the models until management can understand how they work.

3.3.2.3 Simplification has some significant advantages, including easier calibration and maintenance as well as a better chance of mistakes being detected as results are open to wider scrutiny. Furthermore with regards to point 3, a simpler model is less likely to suffer from the time lags associated with a more complex model with multiple outputs. Having said that, an overly-simplified model may not be able to cope with such important features of tail dependency and asymmetry. A balance needs to be struck between simplicity and functionality.

3.3.2.4 Statistical Quality Standards (Section 5 of the CEIOPS Paper)

Another important section is that of the Statistical Quality Standards. Here there are many onerous requirements. We have focused on the “Adequate system for measuring diversification effects” and in particular on paragraphs 5.245 and 5.246 of the paper:

4) There should be meaningful support for claiming diversification effects that includes:

a) Empirical/Statistical analyses

b) Expert judgement of causal relationships

c) or a combination of both.

3.3.2.5 Regarding expert judgements, it is important to note that these should be explained and documented in detail and in a well-reasoned manner, including how expert judgement is challenged and reviewed/monitored against actual experience wherever possible.

3.3.2.6 From paragraph 5.246 of the CEIOPS paper:

5) Whatever technique is used for modelling diversification effects, undertakings shall ensure that diversification effects hold not only on average but also in extreme scenarios and scenarios for those quantiles which are used for risk management purposes.

3.3.2.7 Given that correlation coefficients between risks are often set by reference to external studies, e.g. SCR Standard Formula correlations, point 4 above would seem to imply a lot more work needs to be done within insurance organisations to justify their parameter selections. Justification of diversification parameters is likely to prove quite difficult, but it is a necessary and positive development for the insurance industry.

3.3.2.8 External Models and Data (Section 10 of the CEIOPS Paper)

Many insurance companies that use third-party Economic Scenario Generators (ESG) as a part of the economic capital modelling process will need to be able to demonstrate an understanding of the dependency modelling within and the calibration thereof. Furthermore they will need to demonstrate a knowledge of any limitations of such processes.

3.4 Diversifiers and Hedgers and the Importance of Perspective

3.4.1 One of the key roles of insurance risk management is a careful selection of risks to be accepted. Thereafter there are two principle secondary techniques to manage risks:

(i) Diversification.

(ii) Hedging.

3.4.2 Diversification and hedging, whilst both being used to mitigate risk, rely on different levels of correlation between risks to be their most effective in the minimisation of the overall level of the risk exposure that an organisation faces.

3.4.3 Insurance companies would like to minimise their overall capital needs for a given set of risk exposures and so are interested in the concept of diversification, or in other words, spreading their risks across many different categories. Diversifiers want to avoid high dependence between risks and so are interested in correlations between risks being either small positive numbers, zero or even negative.

3.4.4 In contrast, hedgers are interested in a high level of positive correlation between the gross underlying risk exposure and the relevant insurance/capital market instrument used to mitigate such risk.

3.4.5 A simple example of an insurance risk management instrument would be to use reinsurance to reduce the gross insurance loss exposure. The risk mitigating impacts of the two principle types of reinsurance, namely quote share and excess of loss, are a function of the overall level of gross exposure and the detailed specifics of the reinsurance contracts in question. The relationship (or ‘correlation’) between the gross and net losses will thus be a function of the overall level of gross insurance losses.

3.4.6 Another example from capital markets is where available derivatives from banks might be on a standard index portfolio, and available only for terms shorter than the underlying insurance policy. To hedge, the insurer buys the guarantees that are available, hoping for a sufficiently high correlation between risks.

4. Correlation As The Simplest Type Of Dependency

4.1 Dependency Structures

4.1.1 It is helpful to make a distinction between the economic drivers of risks facing an insurer and the monetary balance sheet effect. The economic drivers might include interest rates, mortality, natural catastrophes and credit indices, most of which can be observed external to the insurer. The monetary effect is a function of these drivers, sometimes a very complicated one, and is specific to the insurer.

4.1.2 Within a single period value-at-risk model, the drivers are described by a multivariate joint probability distribution. Given that multivariate distribution, we can calculate the marginal distribution of each risk, that is, the distribution of each risk in isolation. The marginal distributions constrain but do not determine the joint distribution. The ‘dependency structure’ represents the information contained in the joint distribution but not in the marginal distributions.

4.1.3 There are enough issues alone in estimating the form of the distribution and parameters for the marginal risk distributions before we even consider how they might be linked in a dependency structure. The realistic measurement and modelling of dependencies is one of the most difficult aspects of economic capital modelling facing the insurance and banking industries today.

4.1.4 An unrealistic model of a dependency structure could result in an unrealistic optimistic or pessimistic view of an enterprise, despite the fact that the individual capital components themselves may be quite reasonable.

4.1.5 Dependencies and Causation

4.1.5.1 Dependencies are a statistical feature, in which information about one risk can provide information about another. In rare cases, there is a clear causal influence of one risk on another. For example, a natural catastrophe damaging a property could cause a fall in the property owner's share price. Dependencies may arise because of shared dependence on external factors that are not individually modelled. For example, an solvency model may describe both motor insurance claim frequency and wage inflation, with both affected by unemployment rates.

4.1.5.2 More usually, dependence reflects complex effects of macroeconomic conditions on many risks. For example, inflation rates, interest rates, exchange rates and equity values are not only interrelated but they also influence both sides of the balance sheet.

4.1.5.3 The impact of these risk factors on asset values is obvious e.g. interest rates on bond values or inflation on equity values, but there is also a direct link to the liabilities. The level of inflation rates will influence the loss payments for underwriting losses and reserve development whilst interest rates will directly impact discounted cash value calculations or act as a risk factor for variation in the underwriting cycle. Where dependencies are assessed using expert judgement, a consideration of such causal relationships is a key consideration in forming the judgement.

4.1.5.4 Purely statistical approaches to dependency modelling can measure simultaneous dependency without expressing a view on causality.

4.1.5.5 For example, common statistical models of inflation and interest rates can reflect a positive correlation without expressing a view on whether falling interest rates are caused by falling inflation, falling inflation is caused by falling interest rates or falls in both variables are caused by other unmodelled factors.

4.1.6 Dependency as a Mathematical Representation

4.1.6.1 A statistical dependency between two risks is most often described by a single number, the linear (Pearson) correlation coefficient. But for many situations this one statistic is not sufficient to capture the range of possible relationships between risks and one needs information on the nature of the dependency structure. For example, if one variable depends on another, but in a non-linear way, then a correlation does not capture this. If the conditional variance of one variable depends on another, then the Pearson correlation will not pick this up either. The term “dependency structure” includes these possibilities in addition to the linear dependence which Pearson correlation captures so well.

4.1.6.2 In the real world then we have to make do with the tools available which involves measuring the observed risk correlations and then determining the parameters of the model structure that is being used to reflect such observations. Many of these themes will be explored in more detail in the following sections.

4.2 What Do We Mean by Dependency

4.2.1 In everyday language a lot of us use the words ‘correlation’ and ‘dependency’ interchangeably. Quite often a correlation coefficient is used in situations when we need to measure the strength of dependency between two random variables. In fact, it is very important to remember that correlation is just a special case of dependency. It quantifies a linear relationship between two random variables whilst dependency deals with any kind of relationship.

4.2.2 Dependency between two random variables (e.g. risk factors) means that there is some link between them, i.e. information about one random variable tells you something about the value of the other random variable. One extreme is perfect dependence; if you know the value of one random variable, you know exactly what the value of the other random variable is. The other extreme is independence; the value of one random variable does not enable you to make any predictions about the value of the other random variable.

4.2.3 Dependence between two random variables can be strong but such a relationship does not need to follow a linear pattern. Consider the simple example in Figure 1 of two random variables, X and Y. Let us suppose that U is uniformly distributed on [0,1] and, for α = 0.4:

Then Y = 1/X so these distributions are dependent.

Figure 1 Dependent Variables with Low Correlation.

Although Y = 1/X, a decreasing function of X, the correlation between X and Y is not −100% but only −29%. This is because the relationship between X and Y is non-linear. Taking different values of α, we can generate examples with correlations between −100% (for α close to zero), rising to zero as α approaches 0.5.

This is easy to investigate by simulation, or by the analytical formula for the correlation:

4.2.4 One of the reasons for the popularity of correlation in finance is that it is used in variance-covariance matrices as part of Modern Portfolio Theory, which is based on the normal (or more correctly, elliptical) distribution. However, in reality, a lot of financial risks that are dealt with in economic capital modelling and other actuarial work are not adequately described by the normal distribution, see Shaw (Reference Shaw2007), or indeed by an elliptical distribution. Many of these risks exhibit asymmetry and ‘fatter’ tails than described by the normal distribution, especially in non-life insurance, and so relying solely on correlation as a measure of dependency between risks can be very misleading. Moreover, by definition, correlation is a constant scalar coefficient.

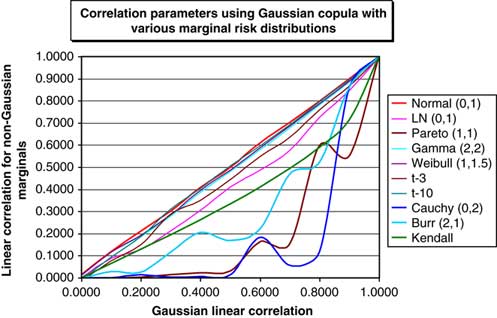

4.2.5 Market experience over the last year has led many firms to re-estimate the parameters underlying their models. In some cases, particularly for credit markets this has led to dramatic parameter revisions. This applies both to marginal distributions and to dependency structures. One possible interpretation, asserted by CEIOPS in CEIOPS (2010c) is that the linear correlations between various assets classes turned out to be significantly higher than have been observed historically. Embrechts et al. (Reference Embrechts, McNeil and Straumann1999) provide the following good summary of the deficiencies of using correlation solely as a measure of dependency:

D1. Correlation is simply a scalar measure of dependency. It cannot tell us everything we would like to know about the dependency structure of risks.

D2. Possible values of correlation depend on the marginal distribution of the risks. All values between −1 and 1 are not necessarily attainable. This means, a model might be impossible to calibrate to certain correlation values.

D3. Perfectly positively dependent risks do not necessarily have a correlation of 1. Perfectly negatively dependent risks do not necessarily have a correlation of −1.

D4. A correlation of zero does not imply independence between risks.

D5. Correlation is not invariant under monotonic transformations. For example, log(X) and log(Y) generally do not have the same correlation as X and Y.

D6. Correlation is only defined when the variances of the risks are finite. It is not an appropriate dependency measure for very heavy-tailed risks where variances appear infinite.

4.2.6 Sections 4.3 to 4.5 describe the different types of correlation.

4.3 Pearson Correlation Coefficient

We begin with by considering a pair of random variables X, Y with finite variances.

4.3.1 Two Variables

4.3.1.1 The Pearson correlation coefficient between X and Y is:

4.3.1.2 If we have a sample of n observations xi and yi where i = 1, 2, …, n, then the sample correlation coefficient, also know as Pearson product-moment correlation coefficient can be used to estimate the correlation between X and Y:

4.3.1.3 Pearson correlation has the following important properties:

– The correlation is 1 in the case of an increasing linear relationship, −1 in the case of a decreasing linear relationship, and some value in between in all other cases.

– The closer the coefficient is to either −1 or 1, the stronger the correlation between the variables. ||ρL[X,Y]|| = 1 if and only if there exist a, b ≠ 0 such that Y = a + bX.

– If the variables are independent then the correlation is 0, but the converse is not true because the correlation coefficient detects only linear dependencies between two variables. For example, suppose the random variable X is uniformly distributed on the interval from −1 to 1, and Y = X2. Then Y is completely determined by X, so that X and Y are dependent, but their correlation is zero; they are uncorrelated.

– However, in the special case when X and Y are jointly normally distributed, zero correlation is equivalent to independence.

– Linear correlation is invariant under a linear transformation: ρL[a 1 + b 1X, a 2 + b 2Y] = sign(b 1b 2) × ρL[X,Y] for all real a1, a2 and b 1, b 2 ≠ 0

– Linear correlation is not invariant under an arbitrary non-linear monotonic transformation T: ρL[T(X),T(Y)] ≠ ρL[X,Y].

4.3.1.4 There are many practical reasons for using of the Pearson correlation coefficient:

– The Pearson correlation is easy to calculate.

– The Pearson correlation is covered in many elementary statistical courses, so is likely to be familiar to a broader range of professionals making communication easier.

– Given standard deviations and correlations of a vector of variables, it is simple to calculate standard deviations for sums and differences of those vector elements, as well as correlations between different linear combinations.

– In the context of elliptically contoured distributions, the correlation matrix uniquely determines the dependence structure.

– Where many risks are correlated, the correlations form a correlation matrix. The necessary and sufficient conditions for a correlation matrix to be feasible are well understood, and rapid tests exist even for high dimensional distributions.

4.3.2 Correlation Matrix

4.3.2.1 Consider vectors of random variables X = (X 1,…,Xn)t and Y = (Y 1,…,Yn)t. Then, given pairwise covariances Cov[X,Y] and correlations ρ(X,Y) for an n × n correlation matrix we define:

Such nxn matrices have to be symmetric and Positive Semi-Definite (‘PSD’). (See Appendix A for a definition of PSD).

4.3.2.2 Rank correlation is an alternative to the use of linear correlation as a measure of dependency. The two most common types of rank correlation are:

(i) Spearman coefficient; and

(ii) Kendall Tau correlation.

Both of them are commonly used.

4.4 Spearman Coefficient

4.4.1 Definition of Spearman Correlation

4.4.1.1 Spearman Coefficient is: ρ S[X,Y] = ρ[FX(X),FY(Y)] where: FX(X) and FY(Y) are cumulative density functions of X and Y, i.e. their ranks.

4.4.1.2 In practice, a simple procedure is normally used to calculate ρS. If we are given two vectors X = (X1, …, Xn) and Y = (Y1, …, Yn) that represent observations of the random variables X and Y, then ρS between X and Y is simply a linear correlation between the vectors of ranks of Xi and Yi.

4.4.1.3 Rank correlation, and this refers to both Spearman Coefficient and Kendall Tau (see the next section), does not have the limitations of conditions D2, D3, D5 and D6.

4.4.1.4 The following property holds for rank correlation: ρrank[T(X),T(Y)] = ρrank[X,Y] for any non-linear monotonic transformation T. Rank correlation assesses how well an arbitrary monotonic function could describe the relationship between two variables without making any assumptions about their underlying distribution frequencies.

4.4.1.5 So we only need to know the ordering of the sample for each variable, not the actual values themselves. Therefore, rank correlation does not depend on marginal distributions of both variables. For this reason it can be used to calibrate copulas from empirical data. Having said this, the limitations identified in D1 and D4 still hold. It is possible to construct examples of random variables which are highly dependent on each other but have either a low or zero rank correlation coefficient.

4.5 Kendall Tau Correlation

4.5.1 The Kendall Tau correlation measures dependency as the tendency of two variables, X and Y, to move in the same (opposite) direction. Let (Xi,Yi) and (Xj,Yj) be a pair of observations of X and Y.

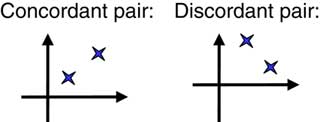

4.5.2 If (Xj−Xi) and (Yj−Yi) have the same sign, then we say that the pair is concordant, if they have opposite signs, then we say that the pair is discordant. Figure 2 illustrates concordant and discordant pairs in the (x, y)-plane.

Figure 2 Example of Concordant and Discordant pairs.

4.5.3 Definition of Kendall Tau Correlation

4.5.3.1 Suppose, we have a sample of n pairs of observations. Let C stand for the number of concordant pairs and D stand for the number of discordant pairs. A simple intuitive way to measure the strength of a relationship is to compute S = C−D, a quantity known as Kendall S.

4.5.3.2 The normalised value of S, namely ![]() is known as the Kendall Tau correlation coefficient, or Kendall Tau.

is known as the Kendall Tau correlation coefficient, or Kendall Tau.

4.5.3.3 In section 4.6 we demonstrate the differences in the values arising from use of these different measures of correlation in the case of a simple example involving 10 joint data observations for two risks A and B.

4.6 Numerical Example

4.6.1 Let us consider Table 1 with 10 joint observations from two risk factors, A and B:

Table 1 Example of calculations for risk factors A and B.

4.6.2 The Linear correlation coefficient is equal to 0.21 whereas the Spearman correlation is equal to −0.19. This latter calculation involving the correlation of the ranks between the two risks. We note that the Spearman correlation is very different from the linear correlation. This is because the linear correlation is heavily affected by one outlier (the last observation).

4.6.3 Kendall's Tau is equal to −0.16 and is calculated as (19–26)/45. It is close to the Spearman correlation, as they are both rank correlations and not affected by the outlier.

5. Risk Aggregation

5.1 Risk Aggregation Framework

5.1.1 A prima facie reason for the consideration of different dependency modelling structures is risk aggregation in computing overall economic capital levels for insurance companies and banks.

5.1.2 A common approach for deriving economic capital is by first assessing the individual risk components and then considering possible techniques to aggregate these components to derive an overall capital number. This approach is a feature of the first four methods that we discuss in sections 5.3 to 5.6.

5.1.3 One of the natural ways to model a joint behaviour of multiple risks is to come up with their multivariate distribution function. This leads to the use of copulas (see sections 5.6 and 8) which provide a way of combining stand-alone marginal risk distributions into a multivariate distribution.

5.2 Risk Aggregation Methodologies

Insurance companies and banks differ in their approaches to economic capital risk aggregation, some techniques being more sophisticated than others. The most common broad categories of methods used in financial modelling are:

– Simple Summation (no allowance for diversification benefits).

– Fixed Diversification percentage.

– Variance-covariance matrix (quite often called the ‘Correlation Matrix’ or ‘Sum of Squares’ approach).

– Copulas.

– Causal Modelling (an abstract model that uses cause and effect logic to describe the behavior of an insurance organisation; often referred to as an ‘integrated model’ using CEIOPS Solvency II nomenclature). This method is often used in combination with the above methods, such as variance-covariance matrix or copulas.

5.2.1 The Best Approach

There are various trade-offs to consider within each method:

– Model accuracy (such as the ability to model heavy tailed risks).

– Methodology consistency.

– Numerical accuracy.

– Availability of data to perform a realistic calibration.

– Intuitiveness and ease of communication.

– Flexibility.

– Resources.

5.2.2 Solvency II

5.2.2.1 As was stated in section 3.3, the advent of the Internal Model Approval Process (‘IMAP’) within Solvency II, and the desirability of many companies to gain approval, has increased the importance of some of the criteria stated in 5.2.1.

5.2.2.2 For example the “Intuitiveness and ease of communication” has particular relevance in the context of the use of the internal model for decision making purposes and management's ability to understand the methodological framework and its limitations.

5.2.2.3 For each of the possible methods, we have not rigorously discussed their merits or demerits in light of all of the criteria listed in section 5.2.1, but rather have used examples from these for the comments that we make in section 7, 8 and 10.

5.3 Simple Summation

This involves adding together the stand alone marginal risk capital amounts. It ignores potential diversification benefits and produces an upper bound for the economic capital number. Mathematically this is equivalent to assuming a perfect dependency between risks, e.g. 100% correlation.

5.4 Fixed Diversification Percentage

This method is very similar to the straight summation as described in 5.3 above, however it assumes a fixed percentage deduction from the overall capital figure.

5.5 Variance-Covariance Matrix

5.5.1 The correlation matrix approach is where capital is first calculated on a stand alone basis for each risk and then aggregated using a correlation matrix. In this calculation there is no prerequisite for the stand alone marginal risk distribution to be known, however such stand alone capital for many risks is often calculated from prior determined marginal risk in the economic capital modelling process.

5.5.2 The correlations may be estimated using conventional techniques. An alternative is to back-solve parameters to reproduce the answers to specified aggregation tests. The resulting parameters are sometimes called tail correlations or quadrant correlations.

5.6 Copulas

5.6.1 The copula approach is different, in that it involves a Monte Carlo simulation with the full marginal risk distribution of each risk and a copula function to produce a meaningful aggregate risk distribution. The simplest copulas from the calibration point of view are the Gaussian and t copula, more of which will be said later.

5.6.2 This section gives a brief intuitive introduction to the concept of copulas, whereas section 8 goes into more technical details on this topic.

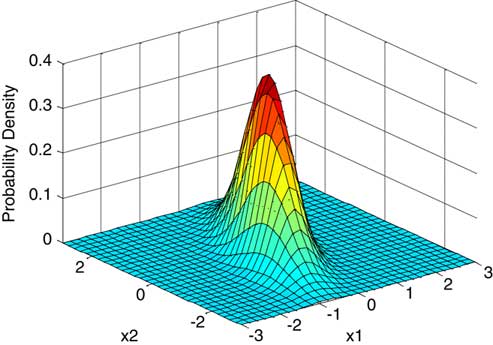

5.6.3 Let us consider two random variables X and Y. A range of outcomes for each variable on a stand alone basis can be represented by a marginal risk distribution function, given by its two-dimensional Probability Density Function (‘PDF’) or Cumulative Density Function (‘CDF’).

5.6.4 In addition, we might happen to know the distribution law which describes the joint distribution of any pair of values (X,Y), i.e. the three-dimensional surface. Visually one can think of loss amounts on the x-axis and y-axis for X and Y respectively with the z-axis representing the value of either the joint PDF or CDF. If the joint distribution is known, it gives us the best possible information about the behaviour of both variables in aggregate. However, in practice, if we have a large number of risk variables, such as equity, fixed interest, property, non-life underwriting risk, non-life reserving risk etc. it is very difficult to specify a multivariate joint distribution between all risks.

5.6.5 A way of dealing with this difficulty is to split the problem into two parts as illustrated by Figure 3:

Figure 3 Simple representation of a Copula.

– The first part describes the individual behaviour of each risk in isolation, i.e. the stand alone marginal risk distribution (‘MRD’) and

– The second part (which in itself is a distribution function) the dependency structure between the risk variables. This second part is known as a copula of the distribution:

5.7 Causal Modelling

5.7.1 Causal modelling is best explained as common risk drivers which impact risks often in a non-linear fashion. This is the usual method for capturing dependencies within economic scenario generators. A typical example would be inflation being derived from simulated nominal and real yield curves, which in turn is linked to the simulation of insurance losses. This method is often used in combination with others, for example even in causal modelling it is not possible to formulate all potential risk relationships credibly and so techniques such as correlation matrices or copulas are often used to deal with the residual dependency risk relationships.

5.7.2 Causal Loops

5.7.2.1 A causal loop diagram (‘CLD’) is a diagram that can be used to understand how interrelated variables affect one another. The CLD consists of a set of nodes representing connections between variables. The relationships can be described as either positive or negative feedback according to their effect:

– A Positive feedback (causal link) means that two nodes change in the same direction, i.e. if the node in which the link starts increases, the other node also increases, and vice versa.

– A Negative feedback (causal link) means that the two nodes change in opposite directions, i.e. if the node in which the link starts increases, then the other node decreases, and vice versa.

5.7.2.2 Furthermore, from a system dynamics perspective, a system can be classified as either ‘Open’ or ‘Closed’. Open systems have outputs that respond to but have no influence on their inputs, whilst Closed systems have outputs that both respond to and influence their inputs.

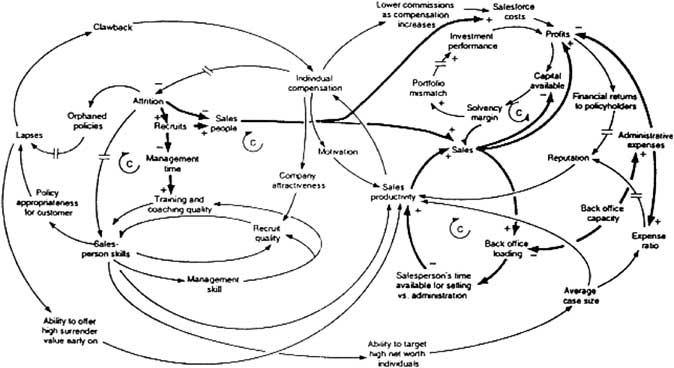

5.7.3 Causal Loop Diagram for the Growth or Decline of UK Life Insurance Companies

5.7.3.1 These models can get quite complex, as is illustrated by the causal loop diagram in Figure 4 to explain the growth or decline of UK life insurance organisations.

Figure 4 Casual Loop Diagram for Growth or Decline of UK insurance companies.

Source: Robert A Taylor (2008).“Feedback”. U.S. Department of Energy's Introduction to System Dynamics (2008).

5.7.3.2 There are various features from Figure 4 worth mentioning:

– The model's negative feedback loops are identified by ‘Cs’ which stand for ‘Counteracting loops’.

– The symbols ‘//’ are used to indicate places where there is a significant delay between cause and effect.

– The thicker lines are used to identify the feedback loops and links that the reader should focus on.

5.7.3.3 It is clear that a decision maker would not find it easy to think through the model dynamics based on such diagrams alone. As with the copula approach to economic capital calculation, the causal modeling approach would involve Monte Carlo simulation.

5.8 Natural Catastrophe vs. Reinsurance Credit Risk Aggregation

5.8.1 Companies often use a combination of different methods that we have described so far. For example, each insurance company within an insurance group may have models that operate in sufficient detail for its own purposes, but all companies within the group use common economic model output and disaster scenarios that imply dependency when risk is viewed at a group level.

5.8.2 The modelling of reinsurance credit risk, i.e. the loss associated with the failure of reinsurance counterparties is a good example of the different levels of modelling granularity.

5.8.3 One of the key dependencies for non-life insurance companies is between Catastrophe underwriting risk (‘Cat UW risk’) and Reinsurance credit risk (‘RI credit risk’). If a low frequency, high severity natural catastrophe loss event were to occur, then a non-life company writing property related business may see a large increase in reinsurance credit risk, due to both an increased likelihood of default by its reinsurers and an increased exposure i.e. larger reinsurance recoveries.

5.8.4 Different methods are possible to reflect this dependency structure between Cat UW Risk and Reinsurance credit risk. These are described as follows:

1) The simplest of the approaches would involve Catastrophe Underwriting risk (“Cat UW risk”) and Reinsurance credit risk (‘RI credit risk’) as separate entries within a variance-covariance matrix.

– The marginal risk capital calculation for RI credit risk would be based on the expected level of reinsurance recoveries and other risk factors. The exposure would include the run-off of the opening balance sheet values and the expected incremental exposure from one year's new business.

– The selected correlation coefficient for use within the correlation matrix would reflect:

(i) dependency relationships between the levels of exposure i.e. gross insurance losses (‘Cat UW risk’) and reinsurance recoveries via the reinsurance arrangements; and

(ii) more complex relationships involving reinsurance default rates and loss given default being functions of the level of gross insurance loss events.

2) An alternative slightly more complex approach would involve the Cat UW risk marginal risk distribution also capturing the associated RI Credit risk associated with Cat UW risk. The Cat UW risk capital now being larger than the value of CAT UW risk calculated in 1.

– Given that it is common for Cat UW risk modelling to involve the simulation of gross losses and reinsurance recoveries, such methods would enable the modeller to capture the varying reinsurance loss exposure more accurately via the direct structural relationship between the exposures.

– In this eventuality the RI Credit risk marginal capital calculation would only be in respect of reinsurance recoveries associated with the prior year's business.

– Despite there now being a direct causal link between the simulated gross insurance losses and the reinsurance recoveries for new business, there is still left the potential residual risk dependency relationship between a large natural catastrophe and the indirect impact on reinsurance credit risk variables like default probabilities. This residual dependency risk could be handled either though a selected correlation within a variance-covariance matrix calculation or by copula simulation.

3) More complex causal modelling methods could involve:

–

(i) Reinsurance Default Probabilities (PD) and Loss Given Default (LGD) being a function of insurance losses and/or asset values and /or:

(ii) stochastic interest rates in the discounting of the reinsurance loss payments in the RI Credit risk calculation given that economic capital calculations are on a present value basis.

5.8.5 The last method, whilst being more intuitive, than the earlier methods, does at the same time introduce more uncertainly in terms of both model risk and parameter risk.

6. Data and Model Uncertainties

6.1 Spurious Relationships

6.1.1 It is important when looking at empirical results that spurious relationships are not mistaken for dependency relationships between risks.

6.1.2 In statistics a spurious relationshipFootnote 4 is a mathematical relationship in which two occurrences have no causal connection, yet it may be inferred that there is one. “Correlation does not imply causation” is often used to point out that correlation does not imply that one variable causes the other. However, the presence of a non-zero correlation may hint that a relationship does exist.

6.1.3 Edward Tufte (Reference Tufte2006) puts it succinctly:

“Empirically observed covariation is a necessary but not sufficient condition for causality”.

6.1.4 Correlation does not imply Causation

1) A occurs in correlation with B.

2) Therefore, A causes B.

6.1.4.1 In this type of logical fallacy, a conclusion about causality is made after observing only a correlation between two or more factors. When A is observed to be correlated with B, it is sometimes taken for granted that A is causing B, even when no evidence supports this. This is a logical fallacy as four other possibilities exist:

(a) B may be the cause of A.

(b) An unknown factor C may be causing both A and B.

(c) The ‘relationship’ is coincidence or so complex or indirect that it is more effectively called coincidence.

(d) B may be the cause of A at the same time as A is the cause of B.

6.1.4.2 Determining a cause and effect relationship requires further study even when the result is statistically significant.

6.1.4.3 Examples of each, drawn from everyday life as analogies, are:

(a) “The more firemen fighting a fire (A), the bigger the fire is going to be (B). Therefore firemen cause fire”. In reality, it is (B) the fire severity influencing how many firemen are sent (A).

(b) “Sleeping with one's shoes on (A) is strongly correlated with waking up with a headache (B)”. This ignores the fact that there is a more plausible lurking variable: excessive alcohol, (C) giving rise to the observed correlation.

(c) “With a decrease in the number of pirates, there has been an increase in global warming, therefore global warming is caused by a lack of pirates.”

(d) “According to the ideal gas law: PV = nRT, given a fixed mass, increased temperature (A), results in increased pressure (B), however an increase in pressure (B) will result in an increase in temperature (A). The two variables are directly proportional to each other.

6.1.4.4 With regards economic capital modelling, simple examples of (a) and (b) are:

(a) Increasing domestic demand and inflation (A) often leads to the Government having to increase short-term interest rates (B), to counter potential over-heating in the economy, evidence of positive correlation. Conversely, falling short-term interest rates (B) is likely to lead increased demand, which once spare capacity is utilised, leads to increasing inflation (A), however in this case, there is evidence of negative correlation.

(b) The large negative correlation between equity returns (A), and credit spreads (B), during 2008 could be viewed as a consequence of the financial crisis (C).

6.1.4.5 Some observed correlation relationships are one way A → B. For example, a very severe natural catastrophe could lead to a large decrease in equity markets, but a large fall in equity markets is not likely to result in a natural catastrophe.

6.1.5 Economic Logic

6.1.5.1 When determining correlations between risks, one should consider the questions:

– Is the relationship logical (rather than spurious)?

– Is there statistical evidence for the hypothesised relationship?

6.1.5.2 An example of a relationship that satisfies both of these questions is in the simple example of a yield curve. The three year bond yield is closely related to the two year and four year bonds yields, which is intuitive given that yield curve movements are often thought of as a combination of:

(i) parallel shift; and

(ii) slope changes.

Empirical studies support positive correlations between adjacent points of the yield curve.

6.1.6 Spurious Regression Example

6.1.6.1 Consider 2 random walk time series, Xt and Yt as follows:

where:

εt and δt are N(0,1) distributed

X0 = Y0 = 5.

6.1.6.2 Given this, a random sample of 100 values for each of X and Y for t = 0 to 99 has been generated. Using this output the linear correlation and R2 have been calculated.

6.1.6.3 X and Y are not related and yet it is common, to observe high correlations.

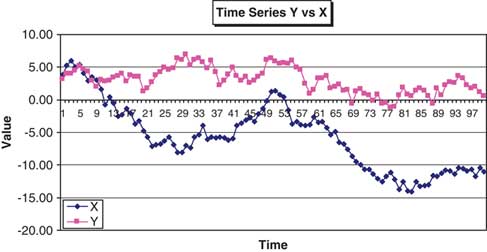

6.1.3.4 Figures 5, 6 and 7 show:

Figure 5 Linear Regression of Y vs X.

Figure 6 Time Series of Y vs X.

Figure 7 Residuals from the linear regression.

(i) Linear regression between X and Y. (Figure 5)

(ii) Time Series of X and Y (Figure 6)

(iii) Residuals from the linear regression (Figure 7).

6.1.3.5 In this random example, the observed correlation between X and Y is 56.5% and 5.9% between the residuals (any difference from zero due to simulation error). The time series plots in Figure 6 for X and Y look plausible for typical financial variables and yet we conclude they have a large positive correlation when in fact there no relationship between them at all. Moreover, there is significant autocorrelation in the residuals leading one to reject the linear regression model as a measure of the relationship. In fact, this is illustrative of the fact that trending variables, which are often a feature of economic and financial time series data, are likely to lead to a regression with high values of R2, regardless of whether they are related or not. Differencing variables (changes) eliminates trends and thus avoids spurious regression, so it is important to consider the nature of the variables being used to determine the correlation of interest.

6.2 Correlation and Linearity (Anscombe's Quartet)

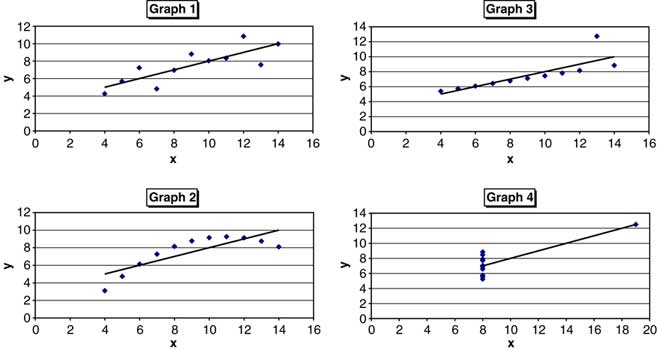

The Pearson correlation coefficient indicates the strength of a linear relationship between two risks, but it alone is often not sufficient to evaluate the strength of this relationship. This is illustrated with a study of sets of scatter plots of what is known as Anscombe's quartet, created by Francis Anscombe (Reference Anscombe1973). The y variables have the same mean, standard deviation, linear correlation and regression line and yet in all four cases the distribution of the variables is markedly different.

6.2.1 Anscombe's Quartet

Anscombe's quartet consists of four data sets which have the same identical statistical properties but which are very different to each other when viewed graphically. The different graphs are labelled 1 through to 4. The linear regression line for each set of points is given by y = 3 + 0.5x. The statistics for all four data sets are shown in Table 2.

Table 2 Anscombe's Quartet Statistics.

6.2.2 Comments on Figure 8

Graph 1 – What one would expect when considering two correlated variables that follow the assumption of normality.

Graph 2 – The relationship is not linear but an obvious non-linear relationship exists.

Graph 3 – The linear relationship is perfect except for one outlier.

Graph 4 – The relationship between variables is not linear but one outlier is enough to give a correlation of 0.81 and make it appear as though there is one.

The numerical examples in Figure 8 demonstrate that the correlation coefficient, as a statistic cannot replace a more detailed examination of the data patterns that may exist.

Figure 8 The four different graphs of Anscombe's Quartet.

6.3 Biases introduced by the way we look at Data

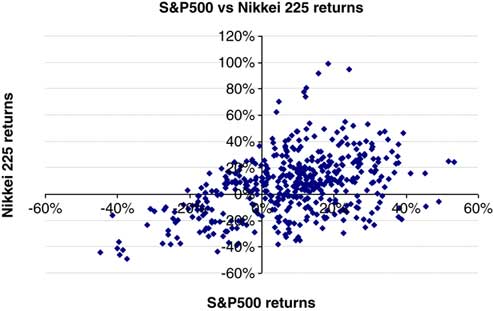

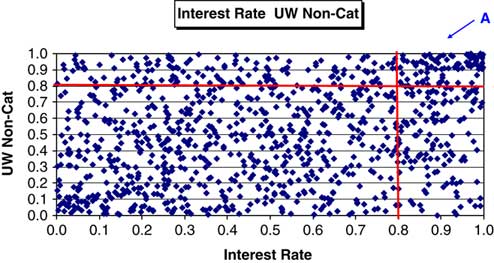

6.3.1 Following on from section 6.2, we address the potential issues arising from the different ways that we may investigate the same data set. Ideally data visualisation needs to take place in more than one framework. For example, a typical visualisation of the dependency structure between two risks is through the use of a scatter plot, as in Figure 9. Here the Pearson correlation coefficient between the two risks X and Y is 55.1%.

Figure 9 Linear Regression of Y vs. X.

6.3.2 However, if we plot risks X and Y as time series (Figure 10) we see quite a marked pattern in the differences in their relationship over time. The dependency between the two risks is time-varying, the nature of which will not be picked up in graphical representations such as a scatter plot. The scatter plot in essence disregarding any historical trends that may be present in the data.

Figure 10 Time Series of Y vs X.

6.3.3 This is not to say that a scatter plot is not useful but that it should perhaps not be the only visual aid used in the computation of correlation parameters.

6.4 Wrong-Way Risk

6.4.1 Sections 6.4 to 6.7 look at miscellaneous topics that each in their own right and illustrate some of the potential issues arising when parameterisation and modelling dependency structures within economic capital models. We begin with a discussion of the so-called ‘wrong-way risk’.

6.4.2 The way that risks are aggregated relates to the scope of inter-risk diversification, i.e. to the idea that an aggregation of risks will not be larger that the sum of its components.

6.4.3 With risks aggregation across different portfolios or business units, some of the assumptions may fail to hold. One possible reason for the aggregate to require more capital than the sum of the capital for each component is the nature of the risk measure. For example, the VaR measure can be shown to fail the subadditivity property of a coherent risk measure. It is possible to construct examples where the VaR of a combination of risks is greater than the sum of the VaR of the individual constituent parts.

6.4.4 Another reason is more complex and subtle, the so-called ‘wrong-way risk’. This is also sometimes called ‘interaction’ or ‘nonlinearity’.

6.4.5 With the ‘wrong-way risk’ the reason why the aggregate risk may be greater than the sum of the parts is not related to the choice of risk metric, but because one risk may amplify the effect of another. Measurement of risks one at a time may not detect this amplification. This issue is more prevalent with the variance-covariance matrix approach to risk aggregation than the other approaches we have identified.

6.4.6 For example, measuring separately the market and credit risk components in a portfolio of foreign currency denominated loans with a currency hedge can underestimate risk as a default may result in an over-hedge of subsequent currency movements.

6.4.7 Another example is a follow on from section 5.8 and the discussion of different types of modelling of Natural Catastrophe and Reinsurance Credit Risk. The two risks types are often modelled separately before being combined. A situation where a catastrophe increases reinsurance due as probability of default is the ‘wrong-way risk’. The standard formula makes an assumption that loss in any scenario can be expressed as a simple sum of risks from different sources, but in this example there is an arbitrariness in assessing whether a reinsurance bad debt on a larger than expected recovery is classified as default risk or natural catastrophe risk. The answer is that it depends on the order in which the risks are analysed – an effect familiar to actuaries through analysis of change. As the result is no longer a sum of marginal risks, the correlation formula based on the variance of a sum, no longer applies.

6.4.8 Modelling tail dependency in underlying risk drivers is no remedy for wrong-way risk. Instead, it is necessary to model carefully the way in which a firms net assets depends on risk drivers, including a consideration of effects when more than risk materialises at once. One method of capturing this is to use the combined scenario approach outlined in Solvency II, whose mathematical basis is covered in McNeil & Smith (Reference Mcneil and Smith2009).

6.5 Multivariate Distributions: The Curse of Dimensionality

6.5.1 There are times when one may want to calibrate dependency relationships by looking simultaneously at all risks together, rather than the more common approach of two risks at a time, whether estimating correlation coefficients between a pair of risks, or determining parameters for specific copulas. For example, if there are three risks: A,B,C of interest, this would involve looking at the joint distribution of all three (A,B,C), rather than the separate analysis of the three pair combinations (A,B), (A,C) and (B,C).

6.5.2 However, the estimation of multivariate distributions suffers from the so-called ‘curse of dimensionality’.

6.5.3 Here is an example of the problem. An insurer itemises the risks to which it is exposed, resulting in a short list of 200 risks. Let us suppose that 90 years of clean and relevant historical data is available with annual observations, for each risk. For some risks, far fewer than 90 years relevant data is available. Having 100 years data provides more statistical input that is usually available. With such a data set, we may be able to estimate not only location and dispersion but perhaps also some measures of asymmetry and tail fatness.

6.5.4 However, in the multivariate case, the number of parameters proliferates relative to the number of observations. For example, suppose we are to estimate a correlation matrix. A 200 × 200 correlation matrix contains 19,900 distinct elements. Out of 40,000 elements, the 200 diagonal elements are all 1, and as the matrix is symmetric the remaining 19,900 elements each appear twice, once below and once above the diagonal.

6.5.5 The number of data points is only 18,000, this being 200 risks multiplied by 90 annual observations. No continuous calibration methodology can map the possible data sets onto the set of possible correlation matrices. There will always be some correlation matrices that are mathematically valid but inaccessible, that is, which cannot arise for any input data set. The more limited the data, the greater the extent of inaccessible matrices.

6.5.6 It may be argued that it is desirable to capture aspects of distribution beyond mean and variance. For example, it may be desirable to capture skewness and tail fatness. It may be desirable to capture aspects of dependency beyond correlations, such as quadrant correlations or tail dependency. All of these introduce additional parameters, inevitably increasing the extent of inaccessible correlation matrices.

6.6 Multivariate Distributions: Multivariate Model Selection

Given the wide range of possible multivariate models, and the impossibility of distinguishing between them on data alone, other supplementary criteria come into play.

These might include:

– Computational ease of calibration, validation or simulation

– Results-driven model selection

– Consensus – using a similar approach to other firms

– Mathematically ‘natural’ models.

We examine each of these criteria in turn.

6.6.1 Computational Ease of Calibration, Validation or Simulation

6.6.1.1 Calculations for calibrating models can be computationally intensive and are prone to malfunction. The malfunctions occur because model fitting usually involves difficult numerical procedures, such as optimisation of goodness of fit, or solution of multiple simultaneous equations to capture chosen calibration statistics.

6.6.1.2 There is no infallible general algorithm for optimisation or simultaneous equations solution. Reliability can be improved by choosing models for which the required estimation equations are well behaved; for example when functions to be minimised are convex, or when simultaneous equations are linear.

6.6.1.3 Some mathematical models lend themselves more easily to such methods, and in the absence of a firm steer from data, it is natural that analysts prefer models that are easy to handle.

6.6.2 Results-Driven Model Selection

6.6.2.1 Results-driven criteria choose a model whose conclusions are acceptable. This could mean, for example, avoiding model families whose estimation results in large sensitivities to individual data points, thus reducing the chance of widely fluctuating model conclusions.

6.6.2.2 Results-driven selection may also describe a technique where an analyst seeks to understand the range of possible outcome from different models, and seek output at a chosen point within that range. It is desirable to select a modelling approach whose output ranks consistently among the range of possible model. However, results-driven selection has a downside, and indeed many references to the technique use the pejorative term ‘cherry picking’. The fear is that firms may fail to communicate fully the extent of cherry picking, or that users may overestimate the influence of data versus manual selection in reported numbers.

6.6.3 Consensus – Using a Similar Approach to Other Firms

6.6.3.1 Many specialist consultants and model developers have built software and methodologies for capital calculations, aimed at the insurance market. Some insurers may perceive cost savings or reduced project risk from buying a pre-packaged solution rather than building from scratch.

6.6.3.2 Even where firms well understand a modelling approach internally, communication to the outside world of regulators, rating agencies and analysts remains a formidable challenge.

6.6.3.3 External parties seldom have a particular methodology in mind, but instead compare approaches from different insurers, commenting on relative strengths and weaknesses. These commercial influences result in a substantial consensus dividend, where insurers who travel with their peers are likely to experience better project success, lower build costs and easier dialogue with external parties.

6.6.3.4 The use of consensus methodologies may be characterised as ‘best practice’ or ‘herd instinct’ depending on whether they are considered to be a good thing or not. However there is a systemic risk if everyone is using the same models.

6.6.4 Mathematically ‘Natural’ Models

6.6.4.1 The final criterion for model selection is the mathematical concept of a ‘natural’ model. For example, there are many ways to construct bivariate distributions with normal marginals, but any mathematician would regard the bivariate normal as the ‘natural’ extension of the univariate normal. Models that are not natural may be called ‘arbitrary’.

6.6.4.2 Given mathematics’ usual requirement for precise definitions, the meaning of ‘natural’ is surprisingly subjective. A paper criticising the use of copulas by Mikosch (Reference Mikosch2005) spawned a heated debate as to what models could be considered natural or arbitrary. In the absence of agreed criteria for natural models, the discussion reduces to a matter of personal opinion with little hope at present of objective resolution.

6.6.4.3 Mathematical notions of natural models are not empirical assertions; the goodness-of-fit or otherwise to a particular data set is irrelevant. In the physical sciences, this has proved no obstacle because it turns out that many accurate physical theories are also mathematically elegant, with laws of motion, optics, relativity and quantum mechanics all mapping into neat mathematical formulations.

6.6.4.4 Unfortunately, such success stories are rare in applied finance. Indeed, users’ uncritical faith in elegant mathematical concepts such as normality, or a failure to include messy concepts such as tail dependence, have contributed to more financial problems than to solutions. However, in these examples it appears that mathematical elegance has been allowed to override empirical evidence.

6.7 Ten Properties of Good Calibration

6.7.1 A realistic economic capital model should ideally have robust calibration. There remains the question therefore of what constitutes a ‘good calibration’. A natural statistical approach is to select a family of distributions and to estimate these using a combination of historic data and expert guesswork. Several generic approaches exist for distribution estimation, including the method of maximum likelihood, as well as methods based on setting fitted statistics such as means, correlations, rank correlations or kurtosis to their empirically estimated counterparts.

6.7.2 There are good and bad ways to go about calibrations. Choices to adopt a particular approach usually involve some sort of trade-off between different criteria. Some attributes of a good calibration process are as follows:

– Statistical power – This means that estimated parameters are likely to be close to the true parameters.

– Robust Process – The estimation process converges to a unique solution (does not get stuck in an endless loop nor iterate between solutions), estimated models can be computed quickly and the fitted model varies continuously as a function of the data and responds logically to new data

– Symmetries – The estimation process is preserved under various transformations of the data, e.g. if we rotate the historic risk vectors then fitted distribution is the corresponding rotation of the unrotated fit.

– Parsimony – The number of parameters used is minimised in order to produce the simplest possible model. Parameters are included only if justified by the data.

– Surjection – Any underlying distribution is capable of arising as a fit given sufficient data; the calibration process does not exclude significant model types.

– Parallel threading – Individual parameters each follow a transparent process from data to parameter value, which can be verified manually, communicated graphically, explained to non-experts and consequences understood. The alternative is an estimation process where all parameters emerge simultaneously from a single complicated calculation.

– Consistency – Input conflicts can easily be avoided or at least remedied. An example of input conflicts is a correlation matrix that is not positive definite; each individual assumption is valid but together they are not.

– Inclusion – Different methodologies can be combined for different parameters. For example some parameters may be estimated from historic data, others taken from published analysis, others from expert views and yet others from peer group surveys.

– Evolution – Existing software can be re-used, or cheap software licensed. The need for technical re-training, bespoke development or explaining unfamiliar concepts to regulators, is minimised.

– Surplus suitability – The construction of any model of surplus (in short available capital) should lend itself to fast and accurate calculations of key percentiles of the surplus function, as well as to supplementary calculations such as marginal attribution of capital requirements.

7. Variance-Covariance Matrix Methods

7.1 Methodology

7.1.1 Introduction

7.1.1.1 This method allows for a full pattern of risk interactions with the assumption of differing pairwise correlations between risks. The overall level of diversification between risks is dependent on the levels of these correlations.

7.1.1.2 The capital for an insurance company with n risks is calculated by use of the following variance-covariance matrix formula:

Where:

– Ci is the stand alone capital amount for the ith risk

– Correlation coefficient ρij for risks i and j allows for the diversification between risks.

7.1.1.3 When correlations are all equal to 1, the calculation is equivalent to the sum of stand-alone capital amounts. If the risks are all independent i.e. the correlation coefficients between risks i and j are all equal to zero then the total capital is equal to:

7.1.2 Risk Dimensions – Economic Nature vs Organisational

7.1.2.1 Many financial institutions, in particular large insurance groups, consist of various subsidiaries, business units (BU) or similar organisations. When faced with this situation, there are two important dimensions of risk classification:

– Economic nature of risk – insurance, market, credit, operational risk etc.

– Organisational structure – business lines or legal entities.

7.1.2.2 The economic nature of the risk considers aggregating risks into silos by risk-type across the whole group e.g. equity risk by consideration of the aggregate risk at a group balance sheet level. By contrast, the organisational risk grouping would consider organisation silos before the aggregation to a group capital total. This approach deals with inter-risk relationships earlier on in the process and takes advantage of known corporate structures. An organisational classification presents far less difficulty than a classification by risk where definitions of risk may be imprecise across different organisations.

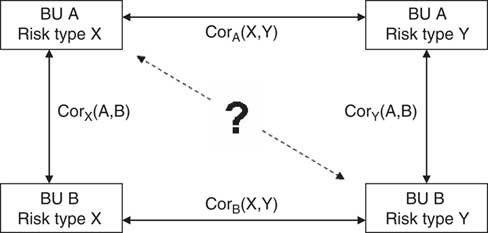

7.1.2.3 A third approach features aspects of both and operates at a lower level of risk granularity. In this situation the unit of risk that is worked with is of the form ‘Organisation/Risk’ e.g. UK/Equity, France/Fixed Interest etc, the aggregation process thereafter working from this base level. However, whereas at face value this would seem to be conceptually a more accurate approach, there are other issues to consider like smaller volumes of data at this finer level of granularity and the difficulties of estimating “cross-terms” in the enlarged correlation matrix. For example what would be the correlation coefficient between ‘UK/Equity’ and ‘France/Fixed Interest’ given the most likely scenario that correlation assumptions would only have been determined between the Equity and Fixed Interest risks within each business units, such as the UK or France.

7.1.2.4 Section 7.6 discusses the issues arising in trying to determine these cross-terms.

7.2 Advantages and Disadvantages of Variance-covariance Matrix Approach

Advantages:

– Relatively simple, intuitive and transparent.

– Facilitates a consensus of typical correlations for use by companies.

– The use of a cascade of correlation matrices permits the easy addition of further risks, from a new business unit, subsidiary or risk category.

– Correlation is the only form of dependency that a lot of non-specialists are familiar with. This makes communication easier than some of the more sophisticated methods described in sections 8 and 10.

Disadvantages:

– Risks where we have empirical evidence of correlations (mainly reliable market data) are very few and so there is a heavy reliance on a subjective ‘expert opinion’ to determine correlations.

– The variance-covariance matrix approach implies that the underlying risks are normally (or elliptically) distributed.

– Underestimates the effects of skewed distributions and does not allow for potential heavier dependency in the tail.

– The value of correlations is sensitive to the underlying marginal risk distributions.

– A correlation matrix has to satisfy certain conditions (e.g. is Positive Semi-Definite). These are often ignored in practice.

– All cause-effect structures cannot be properly modelled.

– Does not capture non-linearities.

7.3 Risk Granularity

7.3.1 The finer the level of risk classification (i.e. a more granular subdivision of risk) within a variance-covariance matrix, the lower the intra-risk diversification (i.e. diversification within a risk category) and the greater the inter-risk diversification (i.e. the diversification between risk categories). Differences in approaches will generally lead to differences in the economic capital number given the complexity of re-working all of the various risk dependency relationships.

7.3.2 Sometimes the economic capital calculation will feature a series of ‘nested’ variance-covariance matrices. An example of this is the method adopted within the standard formula approach to the Solvency II Solvency Capital requirement (‘SCR’) for QIS 4 and as described in CEIOPS (2008).

7.3.3 Table 3 details the correlation matrix that is used to aggregate the individual capital amounts for each of the five main risk categories to derive the Basic SCR. Market Risk capital (SCR Market) is one of the major risk capital categories within this process.

Table 3 QIS 4 SCR Correlation Matrix.

7.3.4 Table 4 shows the nested Market Risk correlation matrix, i.e. the matrix that is used to aggregate the individual capital amounts in respect of different types of market risk e.g. interest rates, equities etc to derive an overall Market Risk capital number for use in Table 3.

Table 4 QIS 4 Market Risk Correlation Matrix.

7.3.5 It is a common practice for many life insurance companies to aggregate individual stress tests results by using the variance-covariance matrix approach. Some non-life insurance companiesFootnote 5 use this approach as well. However, more and more companies (at the moment mostly in the non-life area, although with life offices also gradually moving in the same direction), use more sophisticated mathematical models involving copulas and causal modelling.

7.4 Simpler Variants

7.4.1 Simple Summation

As noted in section 5.3, this involves adding together the stand alone marginal risk capital amounts. Mathematically this is equivalent to assuming a perfect dependency between risks, e.g. 100% correlation.

Advantages

– No data is required to calibrate the model correlations.

– Computational simplicity.

– Ease of communication of method and results.

– It is conservative.

Disadvantages

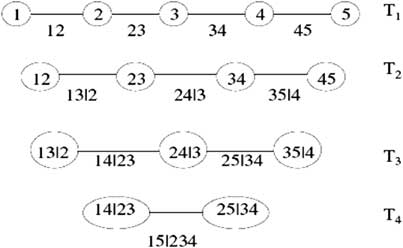

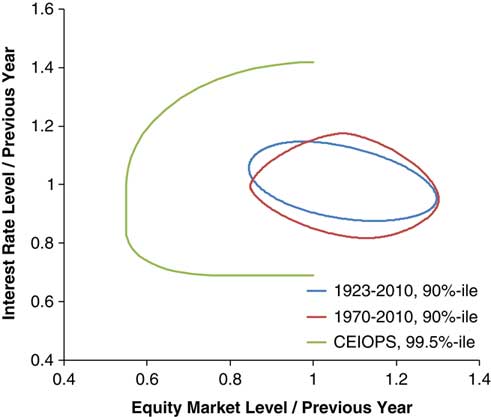

– This method overestimates the amount of required capital, and therefore incurs a cost of holding extra capital