1. Introduction

Generating reduced-order representations of turbulent flows is a major challenge underpinning several tasks and goals in fluid dynamics. They are essential both for modelling, as when writing reduced-order models, and for analysis and theory, as, for example, in the quest to determine the existence, dimensions and characteristics of inertial manifolds. Thus, the list of techniques for dimensionality reduction and their associated history is long, going from Fourier decompositions and principal component analysis (PCA), to wavelets, and lately to neural network-based autoencoders (Brunton & Kutz Reference Brunton and Kutz2019). While the former employ linear techniques to derive orthogonal bases to best represent the data, the latter perform nonlinear decompositions and have been shown to be significantly more effective at generating compact representations of different datasets (Hinton & Salakhutdinov Reference Hinton and Salakhutdinov2006; Goodfellow, Bengio & Courville Reference Goodfellow, Bengio and Courville2016).

Fuelled by their achievements in various machine learning tasks, autoencoders have been applied to various problems in fluid mechanics. In the context of the Kuramoto–Shivashinky equation, Linot & Graham (2020, 2022) employed a combination of PCA and autoencoders to parameterize the inertial manifold of the equation, where the dynamics on the parametrized space were later modelled with neural ordinary differential equations (ODEs) (Chen et al. Reference Chen, Rubanova, Bettencourt and Duvenaud2019). These processes served to estimate the dimension of the inertial manifold, matching the estimates obtained via analysis of unstable periodic orbits (UPOs) (Ding et al. Reference Ding, Chate, Cvitanovic, Siminos and Takeuchi2016). Expanding on these methods, Floryan & Graham (Reference Floryan and Graham2022) incorporated elements of manifold theory into their analysis of the latent space. Working with the Kolmogorov flow, Page et al. (2021, 2023) used autoencoders, along with a Fourier decomposition of the latent space, to find and characterize UPOs and relate them to bursting events. Similarly, Linot & Graham (Reference Linot and Graham2023), De Jesús & Graham (Reference De, Pérez and Graham2023) and Constante-Amores, Linot & Graham (Reference Constante-Amores, Linot and Graham2024) used models of the latent space dynamics to search for UPOs in the flow in Kolmogorov, channel and pipe flows, respectively. Fukami, Nakamura & Fukagata (Reference Fukami, Nakamura and Fukagata2020) and Eivazi et al. (Reference Eivazi, Le Clainche, Hoyas and Vinuesa2022) were able to determine a hierarchy of modes within latent space and facilitated the proposal of nonlinear mode decomposition of flows in fewer modes than their linear counterparts. In the realm of turbulent stratified flows, Foldes, Camporeale & Marino (Reference Foldes, Camporeale and Marino2024) were able to improve the autoencoders capabilities to capture high-order statistics by adding extra penalizations to the loss functions.

In order to avoid the costly parameter sweeps involved in determining the smallest dimension needed for the autoencoder to represent the data properly, different regularized autoencoder variants have been proposed. Zeng & Graham (Reference Zeng and Graham2023) utilized the implicit regularization method, first introduced by Jing, Zbontar & lecun (Reference Jing, Zbontar and Lecun2020), to Kolmogorov flow, showing that, in their examples, the regularized variant was able to give the same estimate for the minimal numbers of modes as the non-regularized version. Sondak & Protopapas (Reference Sondak and Protopapas2021) introduced

![]() $L_1$

regularization to the autoencoder model so as to create a sparse version of the latent vector. They successfully identified the optimal reduced latent space for the Kuramoto–Shivashinkyy equation while demonstrating the absence of a corresponding reduced latent space for the Korteweg--de Vries equation, reflecting its inherently infinite-dimensional dynamics. These regularized variants have not yet been applied to flows with large-scale separation.

$L_1$

regularization to the autoencoder model so as to create a sparse version of the latent vector. They successfully identified the optimal reduced latent space for the Kuramoto–Shivashinkyy equation while demonstrating the absence of a corresponding reduced latent space for the Korteweg--de Vries equation, reflecting its inherently infinite-dimensional dynamics. These regularized variants have not yet been applied to flows with large-scale separation.

As was mentioned previously and as many of the examples above show, autoencoders serve as a powerful tool to generate parametrizations of inertial manifolds (or, at the very least, parametrizations of submanifolds that capture the relevant dynamics) and estimate their dimensions. Inertial manifolds are finite-dimensional manifolds that contain the global attractor of nonlinear dissipative systems (Hopf Reference Hopf1948; Foias, Sell & Temam Reference Foias, Sell and Temam1988), offering a simplified description through a finite set of ODEs (Temam Reference Temam1990). This concept has been successfully applied to systems such as the Kuramoto--Sivashinsky equation, where the existence of such manifolds has been rigorously established (Hyman & Nicolaenko Reference Hyman and Nicolaenko1986). While this is not the case for Navier--Stokes flows (Temam Reference Temam1990), several studies provide evidence of its possible existence (Chandler & Kerswell Reference Chandler and Kerswell2013; Willis, Short & Cvitanović Reference Willis, Short and Cvitanović2016; Crowley et al. Reference Crowley, Pughe-Sanford, Toler, Krygier, Grigoriev and Schatz2022; De Jesús & Graham Reference De, Pérez and Graham2023).

The main aim of this paper is to generate low-order representations of the temperature field of Rayleigh--Bénard flows and use them to estimate the dimensionality of the flow as it transitions to turbulence, with an added emphasis on understanding the performance of different autoencoders when dealing with strongly turbulent and multiscale data. Heat-induced currents play a crucial role in various geophysical and industrial activities. For instance, in the geophysical context, these currents are essential for the circulation of the Earth’s atmosphere (Hartmann, Moy & Fu Reference Hartmann, Moy and Fu2001) and oceans (Våge et al. Reference Våge, Papritz, Håvik, Spall and Moore2018). The presence of buoyancy enables the generation of convective rolls, large-scale structures that transport heat and matter from hot to cold regions of the fluid. The balance between buoyant and viscous forces weighted by the Prandtl number, which is the ratio of viscosity to thermal diffusivity, is measured by the Rayleigh number. As this balance changes, so does the behaviour of the flow. We focus on Rayleigh numbers ranging from

![]() $10^6$

to

$10^6$

to

![]() $10^8$

, where turbulence starts to develop and the system experiences a sudden change in its properties, such as the emergence of multi-stability and long transient states (van der Poel et al. Reference van der, Erwin, Stevens, Sugiyama and Lohse2012), the reversal of large-scale structures (Sugiyama et al. Reference Sugiyama, Ni, Stevens, Chan, Zhou, Xi, Sun, Grossmann, Xia and Lohse2010; Castillo-Castellanos et al. Reference Castillo-Castellanos, Sergent, Podvin and Rossi2019), the appearance of new optimal steady states (Kooloth, Sondak & Smith Reference Kooloth, Sondak and Smith2021) and a loss of synchronization capabilities (Agasthya et al. Reference Agasthya, Clark Di Leoni and Biferale2022). These changes are associated with an increase in the number of structures the flow exhibits, which, in turn, indicate that the dimension of the inertial manifold of the system is increasing during the transition. The use of convolutional autoencoders to generate reduced-order models of Rayleigh--Bénard flows has been explored for

$10^8$

, where turbulence starts to develop and the system experiences a sudden change in its properties, such as the emergence of multi-stability and long transient states (van der Poel et al. Reference van der, Erwin, Stevens, Sugiyama and Lohse2012), the reversal of large-scale structures (Sugiyama et al. Reference Sugiyama, Ni, Stevens, Chan, Zhou, Xi, Sun, Grossmann, Xia and Lohse2010; Castillo-Castellanos et al. Reference Castillo-Castellanos, Sergent, Podvin and Rossi2019), the appearance of new optimal steady states (Kooloth, Sondak & Smith Reference Kooloth, Sondak and Smith2021) and a loss of synchronization capabilities (Agasthya et al. Reference Agasthya, Clark Di Leoni and Biferale2022). These changes are associated with an increase in the number of structures the flow exhibits, which, in turn, indicate that the dimension of the inertial manifold of the system is increasing during the transition. The use of convolutional autoencoders to generate reduced-order models of Rayleigh--Bénard flows has been explored for

![]() $Ra=10^7$

in Pandey et al. (Reference Pandey, Teutsch, Mäder and Schumacher2022), where the local convective heat flux, a central measure for characterizing mean turbulent heat transfer, was forecasted by reducing the datasets to a dimension of 40.

$Ra=10^7$

in Pandey et al. (Reference Pandey, Teutsch, Mäder and Schumacher2022), where the local convective heat flux, a central measure for characterizing mean turbulent heat transfer, was forecasted by reducing the datasets to a dimension of 40.

This paper is organized as follows. Section 2 details the theoretical background and the analytical methods employed, first introducing important aspects of Rayleigh–Bénard flows in § 2.1. This is followed by a detailed explanation of the different autoencoder architectures used in our study, § 2.2 outlines the design and functionality of the fixed-

![]() $d$

autoencoder (F

$d$

autoencoder (F

![]() $d$

AE) networks with a fixed latent space dimension, and § 2.3 introduces two modifications to the network to improve training times: implicit rank minimizing autoencoders (IRMAEs) and sparsity-inducing autoencoders (SIAEs). In § 2.4 we outline the empirical framework used to test our theories, the details of the numerical simulations and training of the neural networks. Results and conclusions are presented in §§ 3 and 4, respectively.

$d$

AE) networks with a fixed latent space dimension, and § 2.3 introduces two modifications to the network to improve training times: implicit rank minimizing autoencoders (IRMAEs) and sparsity-inducing autoencoders (SIAEs). In § 2.4 we outline the empirical framework used to test our theories, the details of the numerical simulations and training of the neural networks. Results and conclusions are presented in §§ 3 and 4, respectively.

2. Problem set-up and methodology

2.1. Rayleigh–Bénard flow

Rayleigh–Bénard convection describes the motion of a planar cell of fluid flow that is heated from below. Under the Boussinesq approximation, density variations are assumed to be small and the velocity and temperature fields are coupled through the buoyancy term. For a rectangular domain of horizontal length

![]() $L$

and height

$L$

and height

![]() $h$

, the equations take the form

$h$

, the equations take the form

where

![]() $\boldsymbol {v}$

is the velocity field,

$\boldsymbol {v}$

is the velocity field,

![]() $p$

the pressure,

$p$

the pressure,

![]() $T$

the temperature,

$T$

the temperature,

![]() $\nu$

the kinematic viscosity,

$\nu$

the kinematic viscosity,

![]() $\kappa$

the thermal conductivity,

$\kappa$

the thermal conductivity,

![]() $\hat {\boldsymbol {z}}$

the direction parallel to gravity and

$\hat {\boldsymbol {z}}$

the direction parallel to gravity and

![]() $g$

the acceleration of gravity. For reasons outlined below, we work with the rescaled temperature fluctuations

$g$

the acceleration of gravity. For reasons outlined below, we work with the rescaled temperature fluctuations

![]() $\theta = (T - T_0) \sqrt {\alpha g h / \Delta T}$

, with

$\theta = (T - T_0) \sqrt {\alpha g h / \Delta T}$

, with

![]() $T_0 = T_b - z \Delta T/h$

the linear background profile and

$T_0 = T_b - z \Delta T/h$

the linear background profile and

![]() $T_b$

the temperature of the bottom plate. We assume periodic boundary conditions in the horizontal direction and non-slip boundary conditions in the vertical direction throughout the whole work.

$T_b$

the temperature of the bottom plate. We assume periodic boundary conditions in the horizontal direction and non-slip boundary conditions in the vertical direction throughout the whole work.

The dimensionless Rayleigh number,

![]() $Ra$

, quantifies the balance between buoyancy forces and the combined effects of thermal diffusion and viscous dissipation and is given by

$Ra$

, quantifies the balance between buoyancy forces and the combined effects of thermal diffusion and viscous dissipation and is given by

As

![]() $Ra$

increases, the fluid undergoes several transitions: from stable stratification, characterized by conductive heat transfer, to the emergence of convective rolls or cells (Bénard cells), and eventually to chaotic and turbulent motion. At

$Ra$

increases, the fluid undergoes several transitions: from stable stratification, characterized by conductive heat transfer, to the emergence of convective rolls or cells (Bénard cells), and eventually to chaotic and turbulent motion. At

![]() $Ra \approx 1700$

the fluid transitions from a conductive to convective behaviour. This occurs as linear instability mechanisms break the translational invariance of the flow leading to the appearance of convective rolls. We focus on

$Ra \approx 1700$

the fluid transitions from a conductive to convective behaviour. This occurs as linear instability mechanisms break the translational invariance of the flow leading to the appearance of convective rolls. We focus on

![]() $Ra$

values ranging from

$Ra$

values ranging from

![]() $10^6$

to

$10^6$

to

![]() $10^8$

. At these levels, the dynamics is highly convective, with rolls starting to break down and the flow showing clear signs of turbulence. A subsequent regime, not covered in this work, occurring when

$10^8$

. At these levels, the dynamics is highly convective, with rolls starting to break down and the flow showing clear signs of turbulence. A subsequent regime, not covered in this work, occurring when

![]() $Ra$

reaches

$Ra$

reaches

![]() $10^{13}$

, is the so-called ultimate regime. Here, small-scale structures dominate, and rolls are no longer evident (Zhu et al. Reference Zhu, Mathai, Stevens, Verzicco and Lohse2018).

$10^{13}$

, is the so-called ultimate regime. Here, small-scale structures dominate, and rolls are no longer evident (Zhu et al. Reference Zhu, Mathai, Stevens, Verzicco and Lohse2018).

The two other dimensionless numbers that characterize Rayleigh–Bénard convection are the Prandtl number

![]() $Pr = \nu / \kappa$

, which we set to

$Pr = \nu / \kappa$

, which we set to

![]() $1$

in this study, and the Nusselt number

$1$

in this study, and the Nusselt number

![]() $Nu$

,

$Nu$

,

where

![]() $\langle \cdot \rangle {x,t}$

is the time and spatial average at a height

$\langle \cdot \rangle {x,t}$

is the time and spatial average at a height

![]() $z$

. The Nusselt number compares the ratio of vertical heat transfer due to convection to the vertical heat transfer due to conduction. On account of energy conservation, the Nusselt number is constant at each height

$z$

. The Nusselt number compares the ratio of vertical heat transfer due to convection to the vertical heat transfer due to conduction. On account of energy conservation, the Nusselt number is constant at each height

![]() $z$

when using periodic or adiabatic boundary conditions on the sides of the domain.

$z$

when using periodic or adiabatic boundary conditions on the sides of the domain.

In terms of length scales, there are two important small-scale quantities. One is the thermal boundary layer

![]() $\delta _\theta$

, which is the thin region adjacent to the walls where most of the temperature gradient of the fluid is concentrated. In this layer, temperature changes significantly, while beyond it, the fluid temperature becomes comparatively uniform. Understanding how

$\delta _\theta$

, which is the thin region adjacent to the walls where most of the temperature gradient of the fluid is concentrated. In this layer, temperature changes significantly, while beyond it, the fluid temperature becomes comparatively uniform. Understanding how

![]() $\delta _\theta$

scales with varying Rayleigh and Nusselt numbers gives insight into the dynamics of convective heat transfer. The thermal boundary layer thickness can be estimated by identifying the height at which the root mean square (r.m.s.) of the temperature deviates from linear behaviour near the plates.

$\delta _\theta$

scales with varying Rayleigh and Nusselt numbers gives insight into the dynamics of convective heat transfer. The thermal boundary layer thickness can be estimated by identifying the height at which the root mean square (r.m.s.) of the temperature deviates from linear behaviour near the plates.

The other length scale is the Kolmogorov scale

![]() $\eta _K(\boldsymbol {x},t)$

, which represents the size at which viscous forces damp out the smallest turbulent eddies and is given by (Scheel, Emran & Schumacher Reference Scheel, Emran and Schumacher2013)

$\eta _K(\boldsymbol {x},t)$

, which represents the size at which viscous forces damp out the smallest turbulent eddies and is given by (Scheel, Emran & Schumacher Reference Scheel, Emran and Schumacher2013)

where

![]() $\epsilon (\boldsymbol {x}, t)$

is the kinetic energy dissipation rate that is defined as

$\epsilon (\boldsymbol {x}, t)$

is the kinetic energy dissipation rate that is defined as

Due to the symmetries of the problem,

![]() $\eta _K$

should be homogeneous in time and the horizontal direction but not in the vertical direction. Further details on how this behaves are given below when the datasets used are described.

$\eta _K$

should be homogeneous in time and the horizontal direction but not in the vertical direction. Further details on how this behaves are given below when the datasets used are described.

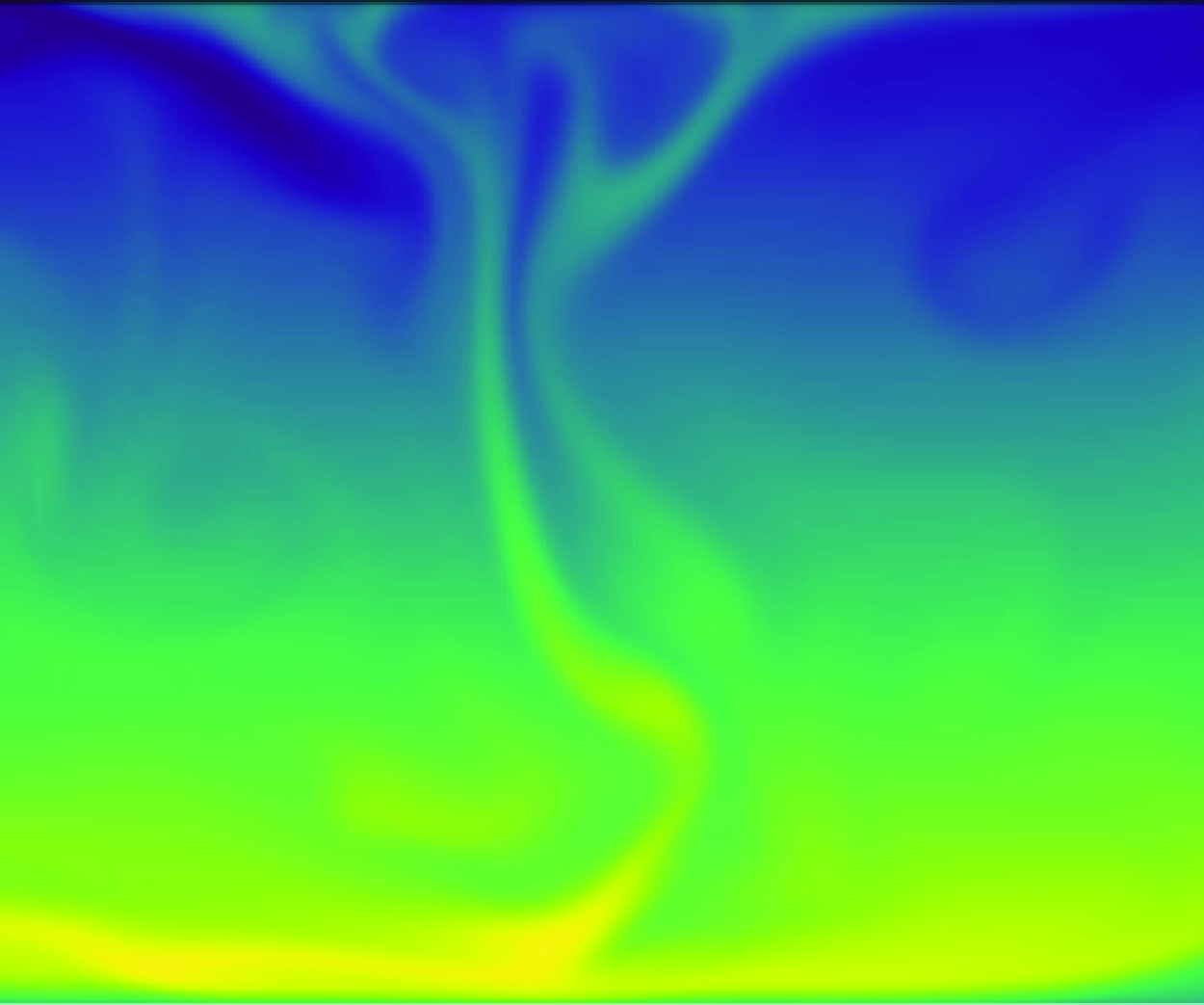

Figure 1. Two-dimensional convolutional neural network-based autoencoder. The central layer

![]() $z$

in the middle is the bottleneck layer or latent vector. The image on the left-hand side represents an example of the input to the network for

$z$

in the middle is the bottleneck layer or latent vector. The image on the left-hand side represents an example of the input to the network for

![]() $Ra=10^8$

, corresponding to the temperature fields of a Rayleigh–Bénard numerical simulation. The image on the right is the output of the network for the same input, with a latent space dimension of

$Ra=10^8$

, corresponding to the temperature fields of a Rayleigh–Bénard numerical simulation. The image on the right is the output of the network for the same input, with a latent space dimension of

![]() $d=2250$

.

$d=2250$

.

Finally, the characteristic time scale of the system is the large eddy turnover time, denoted by

![]() $\tau$

. This is calculated as the time required for a large-scale eddy to complete one full rotation over the height of the domain

$\tau$

. This is calculated as the time required for a large-scale eddy to complete one full rotation over the height of the domain

![]() $\tau = 2h / w$

, with

$\tau = 2h / w$

, with

![]() $w$

the r.m.s. of the vertical velocity component,

$w$

the r.m.s. of the vertical velocity component,

![]() $w \equiv v_{z, {rms}}$

.

$w \equiv v_{z, {rms}}$

.

2.2.

Fixed-

$d$

autoencoders

$d$

autoencoders

Autoencoders are a type of dimensionality reduction algorithm that seeks to construct an encoding function

![]() $\mathcal {E}$

that can map an input field

$\mathcal {E}$

that can map an input field

![]() $u \in \mathbb {R}^{N_x \times N_z}$

into a smaller-sized latent vector

$u \in \mathbb {R}^{N_x \times N_z}$

into a smaller-sized latent vector

![]() $\boldsymbol {z}$

and a decoding function

$\boldsymbol {z}$

and a decoding function

![]() $\mathcal {D}$

that can perform the inverse operation. This is done by approximating

$\mathcal {D}$

that can perform the inverse operation. This is done by approximating

![]() $\mathcal {E}$

and

$\mathcal {E}$

and

![]() $\mathcal {D}$

with neural networks and training the resulting model

$\mathcal {D}$

with neural networks and training the resulting model

![]() $\mathcal {F}(u) = \mathcal {D}(\mathcal {E}(u))$

to approximate the identity operation

$\mathcal {F}(u) = \mathcal {D}(\mathcal {E}(u))$

to approximate the identity operation

![]() $u = \mathcal {F}(u)$

, where the latent vector

$u = \mathcal {F}(u)$

, where the latent vector

![]() $\boldsymbol {z}=\mathcal {E}(u)$

acts as a bottleneck layer. The training process involves minimizing a prescribed loss function

$\boldsymbol {z}=\mathcal {E}(u)$

acts as a bottleneck layer. The training process involves minimizing a prescribed loss function

![]() $\mathcal {L}(u, \mathcal {F}(u))$

with respect to the parameters of the neural networks. The most common choice of loss function (and the one used in the majority of this work) is the

$\mathcal {L}(u, \mathcal {F}(u))$

with respect to the parameters of the neural networks. The most common choice of loss function (and the one used in the majority of this work) is the

![]() $L_2$

norm

$L_2$

norm

\begin{equation} \mathcal {L}(u, \tilde {u}) = \frac {1}{B} \sum _{b=1}^{B} L_2(u^{(b)} - \tilde {u}^{(b)}) = \frac {1}{B} \sum _{b=1}^{B} \sum _{i=1}^{N_x} \sum _{j=1}^{N_z} \left ( u_{ij}^{(b)} - \tilde {u}_{ij}^{(b)} \right )^2, \end{equation}

\begin{equation} \mathcal {L}(u, \tilde {u}) = \frac {1}{B} \sum _{b=1}^{B} L_2(u^{(b)} - \tilde {u}^{(b)}) = \frac {1}{B} \sum _{b=1}^{B} \sum _{i=1}^{N_x} \sum _{j=1}^{N_z} \left ( u_{ij}^{(b)} - \tilde {u}_{ij}^{(b)} \right )^2, \end{equation}

where

![]() $\tilde {u}^{(b)} = \mathcal {F}(u^{(b)})$

, and

$\tilde {u}^{(b)} = \mathcal {F}(u^{(b)})$

, and

![]() $u_{ij}^{(b)}$

and

$u_{ij}^{(b)}$

and

![]() $\tilde {u}_{ij}^{(b)}$

are the individual grid elements of each field and

$\tilde {u}_{ij}^{(b)}$

are the individual grid elements of each field and

![]() $B$

denotes the batch size, representing the number of training samples processed simultaneously during each iteration of the training.

$B$

denotes the batch size, representing the number of training samples processed simultaneously during each iteration of the training.

A diagram of the autoencoders used is shown in figure 1. Our input data are snapshots of the rescaled temperature field

![]() $\theta (x, z, t_i)$

and the output is denoted as

$\theta (x, z, t_i)$

and the output is denoted as

![]() $\tilde {\theta }$

. For simplicity, we also define

$\tilde {\theta }$

. For simplicity, we also define

![]() $\Delta \theta = \theta - \tilde {\theta }$

. We used convolutional neural networks for both the encoder and decoder as is standard in image-processing tasks. A schematic of the encoder architecture is shown in figure 2. The input fields of size

$\Delta \theta = \theta - \tilde {\theta }$

. We used convolutional neural networks for both the encoder and decoder as is standard in image-processing tasks. A schematic of the encoder architecture is shown in figure 2. The input fields of size

![]() $\mathcal {R}^{512 \times 512}$

undergo transformations through four convolutional layers, each employing a

$\mathcal {R}^{512 \times 512}$

undergo transformations through four convolutional layers, each employing a

![]() $5 \times 5$

kernel (highlighted in green in the figure). The configuration of each subsequent layer is characterized by its shape, which includes the number of channels and the new dimension size. The reduction in size at each layer is achieved through the application of specific strides and padding. After the final convolutional layer, the output is flattened to maintain its structure , which is a vector of dimension

$5 \times 5$

kernel (highlighted in green in the figure). The configuration of each subsequent layer is characterized by its shape, which includes the number of channels and the new dimension size. The reduction in size at each layer is achieved through the application of specific strides and padding. After the final convolutional layer, the output is flattened to maintain its structure , which is a vector of dimension

![]() $d'$

, followed by the introduction of a dense layer with a smaller dimension

$d'$

, followed by the introduction of a dense layer with a smaller dimension

![]() $d$

. This additional layer serves to form the compact encoded representation of the input, known as the bottleneck layer. The different hyperparameters used are listed in table 1. Note that under this set-up the dimension of the latent vector

$d$

. This additional layer serves to form the compact encoded representation of the input, known as the bottleneck layer. The different hyperparameters used are listed in table 1. Note that under this set-up the dimension of the latent vector

![]() $d$

is a hyperparameter that has to be fixed prior to training. The primary goal of this work is to evaluate the performance of the autoencoder as we vary

$d$

is a hyperparameter that has to be fixed prior to training. The primary goal of this work is to evaluate the performance of the autoencoder as we vary

![]() $d$

in order to assess what is the minimum

$d$

in order to assess what is the minimum

![]() $d$

needed by the autoencoder to properly represent the data, in this case the temperature field. We refer to this architecture as F

$d$

needed by the autoencoder to properly represent the data, in this case the temperature field. We refer to this architecture as F

![]() $d$

AE, variations are discussed below. Details about the training protocol and datasets used are given below. Note that, for the value of

$d$

AE, variations are discussed below. Details about the training protocol and datasets used are given below. Note that, for the value of

![]() $d'$

, we estimated the minimum number of degrees of freedom necessary to represent the flow by determining the number of Fourier and Chebyshev modes needed to reconstruct the relevant small-scale dynamics. In the periodic xdirection, we need at least

$d'$

, we estimated the minimum number of degrees of freedom necessary to represent the flow by determining the number of Fourier and Chebyshev modes needed to reconstruct the relevant small-scale dynamics. In the periodic xdirection, we need at least

![]() $2 k_\eta$

modes. For the non-periodic zdirection, we can calculate the number of interpolation Chebyshev points needed to resolve the boundary layer. This estimation indicates that at

$2 k_\eta$

modes. For the non-periodic zdirection, we can calculate the number of interpolation Chebyshev points needed to resolve the boundary layer. This estimation indicates that at

![]() $Ra \approx 10^8$

approximately 4096 modes are required (or degrees of freedom), thus determining the largest size (biggest bottleneck layer) to which we compressed our data using the networks.

$Ra \approx 10^8$

approximately 4096 modes are required (or degrees of freedom), thus determining the largest size (biggest bottleneck layer) to which we compressed our data using the networks.

Figure 2. Schematic of the convolutional encoder

![]() $\mathcal {E}$

. The first layers are convolutional while the last layer after the flattening operation is a fully connected layer. Details are listed in table 1.

$\mathcal {E}$

. The first layers are convolutional while the last layer after the flattening operation is a fully connected layer. Details are listed in table 1.

2.3.

Variable-

$d$

autoencoders

$d$

autoencoders

In the F

![]() $d$

AE outlined above, the dimension of the latent space

$d$

AE outlined above, the dimension of the latent space

![]() $d$

has to be chosen prior to training and a new neural network has to be trained for each latent space

$d$

has to be chosen prior to training and a new neural network has to be trained for each latent space

![]() $d$

. We now discuss two regularized variations where

$d$

. We now discuss two regularized variations where

![]() $d$

can be adjusted after training. In both variations, the networks are trained using an over-parametrized bottleneck layer of size

$d$

can be adjusted after training. In both variations, the networks are trained using an over-parametrized bottleneck layer of size

![]() $d'\gg d$

of which only

$d'\gg d$

of which only

![]() $d$

parameters are used for inference. We then always use

$d$

parameters are used for inference. We then always use

![]() $d$

to refer to the effective dimension of the encoding.

$d$

to refer to the effective dimension of the encoding.

2.3.1. Sparsity-inducing autoencoders

The first approach involves applying a

![]() $L_1$

regularization term, acting over

$L_1$

regularization term, acting over

![]() $\boldsymbol {z}$

, to the loss function (2.8), resulting in

$\boldsymbol {z}$

, to the loss function (2.8), resulting in

\begin{equation} \mathcal {L}_S(u, \tilde {u}, \boldsymbol {z}) = \mathcal {L} + \frac {1}{B} \sum _{b=1}^{B} \lambda L_1(\boldsymbol {z}^{(b)}), \end{equation}

\begin{equation} \mathcal {L}_S(u, \tilde {u}, \boldsymbol {z}) = \mathcal {L} + \frac {1}{B} \sum _{b=1}^{B} \lambda L_1(\boldsymbol {z}^{(b)}), \end{equation}

where

![]() $\mathcal {L}$

is defined in (2.8) and

$\mathcal {L}$

is defined in (2.8) and

![]() $B$

denotes the batch size. The regularization term is defined as

$B$

denotes the batch size. The regularization term is defined as

\begin{equation} L_1(\boldsymbol {z})=\frac {1}{d'} \sum _{j=1}^{d'} \left | z_j \right |, \end{equation}

\begin{equation} L_1(\boldsymbol {z})=\frac {1}{d'} \sum _{j=1}^{d'} \left | z_j \right |, \end{equation}

![]() $\lambda$

is an extra hyperparameter that controls the intensity of the regularization, with

$\lambda$

is an extra hyperparameter that controls the intensity of the regularization, with

![]() $\lambda =0$

recovering the previous case.

$\lambda =0$

recovering the previous case.

Table 1. The CNN autoencoder parameters. The encoder and decoder structure specify the number of filters per convolutional layer.

By introducing this regularization, the model is encouraged to maintain only the most essential connections and promote sparsity in the middle layer. This approach was explored in Sondak & Protopapas (Reference Sondak and Protopapas2021), where the authors employ an autoencoder with latent space penalization. We refer to this variation as a SIAE.

As stated above, a SIAE is trained using a bottleneck layer of size

![]() $d'$

. After training, the respective latent representation

$d'$

. After training, the respective latent representation

![]() $\boldsymbol {z}'$

of each element of the training dataset is calculated, forming a matrix

$\boldsymbol {z}'$

of each element of the training dataset is calculated, forming a matrix

![]() $Z'$

, from which then the interquartile range (IQR) of each dimension is obtained. Finally, a sparse version of the latent vector

$Z'$

, from which then the interquartile range (IQR) of each dimension is obtained. Finally, a sparse version of the latent vector

![]() $\boldsymbol {z}'$

is constructed where the

$\boldsymbol {z}'$

is constructed where the

![]() $d'-d$

dimensions with the lowest IQR values are set to zero (and, thus, only

$d'-d$

dimensions with the lowest IQR values are set to zero (and, thus, only

![]() $d$

non-zero dimensions remain). This whole operation can be viewed as replacing the encoder

$d$

non-zero dimensions remain). This whole operation can be viewed as replacing the encoder

![]() $\mathcal {E}$

by

$\mathcal {E}$

by

where

![]() $\Theta _d$

is the operator that sets the least import dimensions to zero, during inference, meaning, too, that the latent vector takes the form

$\Theta _d$

is the operator that sets the least import dimensions to zero, during inference, meaning, too, that the latent vector takes the form

![]() $\boldsymbol {z} = \Theta _d (\boldsymbol {z}')$

. The decoder is unchanged in this version. The structure of

$\boldsymbol {z} = \Theta _d (\boldsymbol {z}')$

. The decoder is unchanged in this version. The structure of

![]() $\mathcal {E}$

and

$\mathcal {E}$

and

![]() $\mathcal {D}$

themselves is the same as in the F

$\mathcal {D}$

themselves is the same as in the F

![]() $d$

AE, except for the fact that the bottleneck layer has size

$d$

AE, except for the fact that the bottleneck layer has size

![]() $d'$

, of course, this is true in the next version as well. The values of

$d'$

, of course, this is true in the next version as well. The values of

![]() $d'$

and

$d'$

and

![]() $\lambda$

are presented in table 1.

$\lambda$

are presented in table 1.

2.3.2. Implicit rank minimizing autoencoders

The second approach is known as implicit regularization (IRMAE, Zeng & Graham Reference Zeng and Graham2023). This method uses the fact that adding depth to a matrix factorization enhances an implicit tendency towards low-rank solutions to construct low-rank versions of the latent representations (Jing et al. Reference Jing, Zbontar and Lecun2020). It works by adding

![]() $n$

linear layers,

$n$

linear layers,

![]() $\mathcal {W}=\mathcal {W}_1, \mathcal {W}_2, \ldots , \mathcal {W}_n$

, between the encoder and the bottleneck layer.

$\mathcal {W}=\mathcal {W}_1, \mathcal {W}_2, \ldots , \mathcal {W}_n$

, between the encoder and the bottleneck layer.

Similar to the SIAE, an IRMAE is trained with bottleneck layers of size

![]() $d'$

. After training, the covariance matrix of the encodings

$d'$

. After training, the covariance matrix of the encodings

![]() $Z'$

is formed, but now instead of calculating the IQR values, a singular value decomposition,

$Z'$

is formed, but now instead of calculating the IQR values, a singular value decomposition,

![]() $Z'=U\Sigma U^*$

, is performed. The final latent vector is obtained by projecting into the first

$Z'=U\Sigma U^*$

, is performed. The final latent vector is obtained by projecting into the first

![]() $d$

dimensions. The resulting encoder used during inference has the form

$d$

dimensions. The resulting encoder used during inference has the form

where

![]() $\Theta _d$

truncates the singular values to those that are different from zero so that

$\Theta _d$

truncates the singular values to those that are different from zero so that

![]() $U^* \boldsymbol {z}' \approx \Theta _d (U^* \boldsymbol {z}')$

holds. The decoder, which now needs to project back into

$U^* \boldsymbol {z}' \approx \Theta _d (U^* \boldsymbol {z}')$

holds. The decoder, which now needs to project back into

![]() $Z'$

, takes the form

$Z'$

, takes the form

Contrary to Zeng & Graham (Reference Zeng and Graham2023), we keep the loss function the same as in the F

![]() $d$

AE (2.8) and do not add extra terms as we did not find that they changed the final results.

$d$

AE (2.8) and do not add extra terms as we did not find that they changed the final results.

Figure 3. Visualizations of

![]() $\theta (x,z,t)$

and

$\theta (x,z,t)$

and

![]() $\theta (x,z,t+\tau )$

for three different values of

$\theta (x,z,t+\tau )$

for three different values of

![]() $Ra$

.

$Ra$

.

2.4. Numerical simulations, datasets and training protocol

Equations (2.1)–(2.3) where solved using SPECTER (Fontana et al. Reference Fontana, Bruno, Mininni and Dmitruk2020), a highly parallel pseudo-spectral solver that utilizes a Fourier-continuation method to account for the non-periodic boundary conditions in the vertical direction. For implementation reasons, the code solves for the rescaled temperature

![]() $\theta$

, instead of the temperature

$\theta$

, instead of the temperature

![]() $T$

. So, as explained above, we present our results in terms of

$T$

. So, as explained above, we present our results in terms of

![]() $\theta$

, this does not pose a problem for our analysis as the temperature fluctuations and the rescaled temperature fluctuations only differ by the scaling factor. We solved the equations at seven different Rayleigh numbers, ranging from

$\theta$

, this does not pose a problem for our analysis as the temperature fluctuations and the rescaled temperature fluctuations only differ by the scaling factor. We solved the equations at seven different Rayleigh numbers, ranging from

![]() $10^6$

to

$10^6$

to

![]() $10^8$

. In all cases, the Prandtl number was set to

$10^8$

. In all cases, the Prandtl number was set to

![]() $Pr = 1$

, and the domain sizes were

$Pr = 1$

, and the domain sizes were

![]() $[L_x, L_z] = [2 \pi , \pi ]$

, with

$[L_x, L_z] = [2 \pi , \pi ]$

, with

![]() $N_x = N_z = 512$

grid points for spatial resolution in each direction. This spatial domain ensured that the smallest scales were resolved for all values of

$N_x = N_z = 512$

grid points for spatial resolution in each direction. This spatial domain ensured that the smallest scales were resolved for all values of

![]() $Ra$

. The values of the viscosity used at each

$Ra$

. The values of the viscosity used at each

![]() $Ra=\{10^6,\; 5\times 10^6,\; 7.5\times 10^6; 10^7,\; 1.75\times 10^7,\; 2.5\times 10^7,\; 5\times 10^7,\; 10^8 \}$

were

$Ra=\{10^6,\; 5\times 10^6,\; 7.5\times 10^6; 10^7,\; 1.75\times 10^7,\; 2.5\times 10^7,\; 5\times 10^7,\; 10^8 \}$

were

![]() $\nu =\{9.86 \times 10^{-3},\; 4.41 \times 10^{-3},\; 3.60 \times 10^{-3},\; 3.12 \times 10^{-3},\; 2.36 \times 10^{-3},\; 1.97 \times 10^{-3},\; 1.39 \times 10^{-3},\; 9.68 \times 10^{-4} \}$

, respectively. Simulations were started from random initial conditions for the temperature field, allowed to reach a statistically stationary state and then run for over 700 turnover times. For each

$\nu =\{9.86 \times 10^{-3},\; 4.41 \times 10^{-3},\; 3.60 \times 10^{-3},\; 3.12 \times 10^{-3},\; 2.36 \times 10^{-3},\; 1.97 \times 10^{-3},\; 1.39 \times 10^{-3},\; 9.68 \times 10^{-4} \}$

, respectively. Simulations were started from random initial conditions for the temperature field, allowed to reach a statistically stationary state and then run for over 700 turnover times. For each

![]() $Ra$

value, we conducted two simulations with different initial conditions.

$Ra$

value, we conducted two simulations with different initial conditions.

Figure 4. Temporal evolution at a fixed location

![]() $(x_p, z_p)$

of the flow for three different

$(x_p, z_p)$

of the flow for three different

![]() $Ra$

.

$Ra$

.

Figure 5. Relevant scales of the fluid for three different

![]() $Ra$

. The top plot illustrates the profile of

$Ra$

. The top plot illustrates the profile of

![]() $\langle \theta ^2 \rangle ^{1/2}_{x,t}$

. The lower plot shows the Kolmogorov scale,

$\langle \theta ^2 \rangle ^{1/2}_{x,t}$

. The lower plot shows the Kolmogorov scale,

![]() $\langle \eta _K \rangle _{x,t} (z)$

. For each

$\langle \eta _K \rangle _{x,t} (z)$

. For each

![]() $Ra$

,

$Ra$

,

![]() $z_p$

is marked.

$z_p$

is marked.

We now take a moment to describe three paradigmatic cases,

![]() $Ra = 10^6$

,

$Ra = 10^6$

,

![]() $10^7$

and

$10^7$

and

![]() $10^8$

. Figure 3 shows snapshots of

$10^8$

. Figure 3 shows snapshots of

![]() $\theta (x,y)$

at a time

$\theta (x,y)$

at a time

![]() $t$

and one turnover time later, at a time

$t$

and one turnover time later, at a time

![]() $t+\tau$

. As the Rayleigh number increases, the flow becomes more complex with smaller and smaller structures popping up. New time scales appear in the flow too, as can be in seen in figure 4, where we show the temporal evolution of

$t+\tau$

. As the Rayleigh number increases, the flow becomes more complex with smaller and smaller structures popping up. New time scales appear in the flow too, as can be in seen in figure 4, where we show the temporal evolution of

![]() $\theta$

at one single point

$\theta$

at one single point

![]() $(x_p, z_p)$

throughout several turnover times (while the location

$(x_p, z_p)$

throughout several turnover times (while the location

![]() $x_p$

is indistinct due to the translational symmetry of the flow, the significance of

$x_p$

is indistinct due to the translational symmetry of the flow, the significance of

![]() $z_p$

will be discussed shortly). The turnover time

$z_p$

will be discussed shortly). The turnover time

![]() $\tau$

is defined for each Ra. Even at

$\tau$

is defined for each Ra. Even at

![]() $Ra=10^7$

the flow exhibits multi-time-scale behaviour and intricate dynamics. The shift observed at

$Ra=10^7$

the flow exhibits multi-time-scale behaviour and intricate dynamics. The shift observed at

![]() $t=7.5\tau$

in the

$t=7.5\tau$

in the

![]() $Ra=10^8$

case corresponds to a horizontal displacement of the plume. Finally, we show profiles of the horizontally averaged and time-averaged r.m.s. fluctuations,

$Ra=10^8$

case corresponds to a horizontal displacement of the plume. Finally, we show profiles of the horizontally averaged and time-averaged r.m.s. fluctuations,

![]() $\langle \theta ^2 \rangle _{x,t}^{1/2}(z)$

, and Kolmogorov lengths scale,

$\langle \theta ^2 \rangle _{x,t}^{1/2}(z)$

, and Kolmogorov lengths scale,

![]() $\langle \eta _K \rangle _{x,t} (z)$

, in figure 5. As the Rayleigh number increases, the thermal boundary layer becomes steeper, as expected. As the dissipation is not uniform in the vertical direction, the Kolmogorov length scale increases when approaching the middle of the domain. We are interested in checking how well the autoencoders represent each scale of the flow, for this matter we define

$\langle \eta _K \rangle _{x,t} (z)$

, in figure 5. As the Rayleigh number increases, the thermal boundary layer becomes steeper, as expected. As the dissipation is not uniform in the vertical direction, the Kolmogorov length scale increases when approaching the middle of the domain. We are interested in checking how well the autoencoders represent each scale of the flow, for this matter we define

![]() $z_p(Ra)$

as the the height where the temperature perturbation fluctuations are highest. Below

$z_p(Ra)$

as the the height where the temperature perturbation fluctuations are highest. Below

![]() $z_p$

the dynamics is dominated by the boundary layer, while above the Kolmogorov length scale decreases when approaching the centre of the domain. The corresponding

$z_p$

the dynamics is dominated by the boundary layer, while above the Kolmogorov length scale decreases when approaching the centre of the domain. The corresponding

![]() $z_p$

are marked in figure 5. As we will explain below, corroborating if an autoencoder is properly representing this scale will be the benchmark used to test its capabilities and choose the minimal number of dimensions

$z_p$

are marked in figure 5. As we will explain below, corroborating if an autoencoder is properly representing this scale will be the benchmark used to test its capabilities and choose the minimal number of dimensions

![]() $d^*$

needed for the latent space dimension. As a summary, we list the values of

$d^*$

needed for the latent space dimension. As a summary, we list the values of

![]() $\eta _K (z_p)$

,

$\eta _K (z_p)$

,

![]() $Re$

,

$Re$

,

![]() $Nu$

and

$Nu$

and

![]() $\delta _\theta$

for the three Rayleigh numbers cases showcased in table 2.

$\delta _\theta$

for the three Rayleigh numbers cases showcased in table 2.

Table 2. Parameters used for the reference Rayleigh–Bénard flows, all in non-dimensional simulation units. Here

![]() $Re = u_{rms} h / \nu$

denotes the Reynolds number, where

$Re = u_{rms} h / \nu$

denotes the Reynolds number, where

![]() $u_{rms}$

is the r.m.s. velocity.

$u_{rms}$

is the r.m.s. velocity.

As input for the networks, we utilized the two-dimensional

![]() $\theta$

fields from the numerical simulations described above, selecting specific snapshots for each dataset. We excluded the transient regime of the flow, as we are interested in the statistically steady states. The cutoff point for the transient regime was defined as the point when the volume-averaged kinetic energy began to stabilize, oscillating around a constant value. To address the continuous spatial symmetry in the horizontal direction, we augmented the data by applying 10 random shifts over the

$\theta$

fields from the numerical simulations described above, selecting specific snapshots for each dataset. We excluded the transient regime of the flow, as we are interested in the statistically steady states. The cutoff point for the transient regime was defined as the point when the volume-averaged kinetic energy began to stabilize, oscillating around a constant value. To address the continuous spatial symmetry in the horizontal direction, we augmented the data by applying 10 random shifts over the

![]() $x$

axis at each time step. This augmentation increased the number of temperature fields under consideration from

$x$

axis at each time step. This augmentation increased the number of temperature fields under consideration from

![]() $7000$

to

$7000$

to

![]() $70 \, 000$

, spanning over 700 turnover times. Note that it would also have been possible to deal with this symmetry by performing phase shifts, as described in Linot & Graham (Reference Linot and Graham2020) and formulated in Budanur et al. (Reference Budanur, Cvitanović, Davidchack and Siminos2015), instead of data augmentation, we chose to explicitly retain the symmetry as we are interested in exploiting it in future analyses (Page et al. 2021, 2023). The datasets were normalized with respect to the global maximum and minimum, in order to feed

$70 \, 000$

, spanning over 700 turnover times. Note that it would also have been possible to deal with this symmetry by performing phase shifts, as described in Linot & Graham (Reference Linot and Graham2020) and formulated in Budanur et al. (Reference Budanur, Cvitanović, Davidchack and Siminos2015), instead of data augmentation, we chose to explicitly retain the symmetry as we are interested in exploiting it in future analyses (Page et al. 2021, 2023). The datasets were normalized with respect to the global maximum and minimum, in order to feed

![]() $\theta / \theta _0 \in [-1,1]$

to the neural networks. Additionally, a separate, longer dataset was created for testing to enable the integration of phenomena over extended periods, facilitating a more comprehensive evaluation of the performance of the model over longer time scales for certain physical metrics, such as the estimation of the Nusselt number.

$\theta / \theta _0 \in [-1,1]$

to the neural networks. Additionally, a separate, longer dataset was created for testing to enable the integration of phenomena over extended periods, facilitating a more comprehensive evaluation of the performance of the model over longer time scales for certain physical metrics, such as the estimation of the Nusselt number.

Figure 6. The output fields

![]() $\tilde {\theta }$

of the F

$\tilde {\theta }$

of the F

![]() $d$

AE for a snapshot of the temperature field, corresponding to latent space dimensions

$d$

AE for a snapshot of the temperature field, corresponding to latent space dimensions

![]() $d = 100$

, 500 and 2250, with respective MSEs of

$d = 100$

, 500 and 2250, with respective MSEs of

![]() $1.6 \times 10^{-3}$

,

$1.6 \times 10^{-3}$

,

![]() $3.1 \times 10^{-4}$

and

$3.1 \times 10^{-4}$

and

![]() $4.0 \times 10^{-5}$

, are shown. For comparison, the normalized ground truth field

$4.0 \times 10^{-5}$

, are shown. For comparison, the normalized ground truth field

![]() $\theta / \theta _0$

, used as input, is also displayed. Additionally, an inset highlights a region where small-scale structures are present.

$\theta / \theta _0$

, used as input, is also displayed. Additionally, an inset highlights a region where small-scale structures are present.

Figure 7. Profile of the original

![]() $\theta _{rms}$

along with reconstructed profiles for F

$\theta _{rms}$

along with reconstructed profiles for F

![]() $d$

AE with

$d$

AE with

![]() $d=100$

and

$d=100$

and

![]() $d=500$

. The inset zooms in on the near-wall region, showing the boundary layer thickness

$d=500$

. The inset zooms in on the near-wall region, showing the boundary layer thickness

![]() $\delta _\theta$

, defined as the height

$\delta _\theta$

, defined as the height

![]() $z$

where the

$z$

where the

![]() $\theta _{rms}$

profile transitions away from a linear profile. While the reconstruction with

$\theta _{rms}$

profile transitions away from a linear profile. While the reconstruction with

![]() $d=100$

does not capture

$d=100$

does not capture

![]() $\theta _{rms}$

accurately in the bulk, the reconstruction with

$\theta _{rms}$

accurately in the bulk, the reconstruction with

![]() $d=500$

successfully reproduces the boundary layer behaviour.

$d=500$

successfully reproduces the boundary layer behaviour.

Figure 8. Difference between the Nusselt number

![]() $Nu$

and the reconstructed Nusselt number

$Nu$

and the reconstructed Nusselt number

![]() $\tilde {Nu}$

with F

$\tilde {Nu}$

with F

![]() $d$

AE as a function of the latent dimension

$d$

AE as a function of the latent dimension

![]() $d$

. The error stabilizes at

$d$

. The error stabilizes at

![]() $d=1500$

, indicating that the model reliably captures

$d=1500$

, indicating that the model reliably captures

![]() $Nu$

at this dimension. For reference, the true value of

$Nu$

at this dimension. For reference, the true value of

![]() $Nu$

is provided in table 1.

$Nu$

is provided in table 1.

During the training process, we employed the Adam optimizer with its default configurations to minimize the

![]() $L_2$

norm (2.8), which was also monitored on the validation set. Each network was trained over 500 epochs, by which point all networks had already stabilized. We monitored the validation metrics, and the batch size was set to 32. To ensure consistency, three different models were trained for each variation of the latent space dimension

$L_2$

norm (2.8), which was also monitored on the validation set. Each network was trained over 500 epochs, by which point all networks had already stabilized. We monitored the validation metrics, and the batch size was set to 32. To ensure consistency, three different models were trained for each variation of the latent space dimension

![]() $d$

. Each model was trained using different snapshots from the same extensive simulation, with both the training and test datasets maintained at the same size. Specifically, for each model, we performed three independent runs: (a) using a random 80–20 train-test split, (b) reinitializing the network with different learning rates and initialization seeds but keeping the same data split, and (c) using the same initialization but applying a different random 80–20 train-test split. In this last case, we also introduced new random shifts to the data. The results shown in the next section reflect the averaged values from these three variations.

$d$

. Each model was trained using different snapshots from the same extensive simulation, with both the training and test datasets maintained at the same size. Specifically, for each model, we performed three independent runs: (a) using a random 80–20 train-test split, (b) reinitializing the network with different learning rates and initialization seeds but keeping the same data split, and (c) using the same initialization but applying a different random 80–20 train-test split. In this last case, we also introduced new random shifts to the data. The results shown in the next section reflect the averaged values from these three variations.

3. Results

All results shown here are calculated over elements of the test datasets and averaged over them when appropriate.

3.1.

Fixed-

$d$

autoencoders

$d$

autoencoders

We focus our analysis on the performance of the F

![]() $d$

AE on the

$d$

AE on the

![]() $Ra = 10^8$

case, as this is the highest Rayleigh number used in our study, and thus, the most complex case. We reference results at other Rayleigh numbers when appropriate. At this particular value of

$Ra = 10^8$

case, as this is the highest Rayleigh number used in our study, and thus, the most complex case. We reference results at other Rayleigh numbers when appropriate. At this particular value of

![]() $Ra$

the flow is characterized by a rising hot plume and falling cold plume, which generate two large counter-rotating vortices that are accompanied by a rich variety of small-scale structures, the F

$Ra$

the flow is characterized by a rising hot plume and falling cold plume, which generate two large counter-rotating vortices that are accompanied by a rich variety of small-scale structures, the F

![]() $d$

AE has to be able to capture the whole range of scales. In figure 6 we present a snapshot of the original temperature field

$d$

AE has to be able to capture the whole range of scales. In figure 6 we present a snapshot of the original temperature field

![]() $\theta$

(chosen out of the test dataset) together with its respective output

$\theta$

(chosen out of the test dataset) together with its respective output

![]() $\tilde {\theta }$

obtained for selected values of latent space dimension

$\tilde {\theta }$

obtained for selected values of latent space dimension

![]() $d$

, along with zoomed-in views of regions with small-scale structures. These values (100, 500 and 2250) are noteworthy as they represent critical thresholds in performance and mark the transition points in the ability of the model to go from just reconstructing the large convective rolls to reproducing the small-scale details.

$d$

, along with zoomed-in views of regions with small-scale structures. These values (100, 500 and 2250) are noteworthy as they represent critical thresholds in performance and mark the transition points in the ability of the model to go from just reconstructing the large convective rolls to reproducing the small-scale details.

When

![]() $d=100$

, the F

$d=100$

, the F

![]() $d$

AE mainly reconstructs the broader flow patterns and larger convective rolls. Increasing the latent space dimension to

$d$

AE mainly reconstructs the broader flow patterns and larger convective rolls. Increasing the latent space dimension to

![]() $d=500$

enhances the performance of the autoencoder, which can now capture mid-range structures. At this value of

$d=500$

enhances the performance of the autoencoder, which can now capture mid-range structures. At this value of

![]() $d$

, the model begins to accurately reproduce physically relevant quantities, such as the boundary layer thickness

$d$

, the model begins to accurately reproduce physically relevant quantities, such as the boundary layer thickness

![]() $\delta _\theta$

. In figure 7 we show the profile of

$\delta _\theta$

. In figure 7 we show the profile of

![]() $\theta _{{rms}}$

along with its reconstructions for

$\theta _{{rms}}$

along with its reconstructions for

![]() $d=100$

and

$d=100$

and

![]() $d=500$

. The inset highlights the near-wall region where the boundary layer thickness

$d=500$

. The inset highlights the near-wall region where the boundary layer thickness

![]() $\delta _\theta$

is defined as the height

$\delta _\theta$

is defined as the height

![]() $z$

at which the

$z$

at which the

![]() $\theta _{rms}$

profile departs from a linear profile. While the

$\theta _{rms}$

profile departs from a linear profile. While the

![]() $d=100$

reconstruction does not accurately capture

$d=100$

reconstruction does not accurately capture

![]() $\theta _{rms}$

in the bulk, the

$\theta _{rms}$

in the bulk, the

![]() $d=500$

model successfully reproduces the boundary layer structure. Finally, at

$d=500$

model successfully reproduces the boundary layer structure. Finally, at

![]() $d=2250$

, the autoencoder is visually able to express the finer details of the flow. To further quantify this, we examine the accuracy of

$d=2250$

, the autoencoder is visually able to express the finer details of the flow. To further quantify this, we examine the accuracy of

![]() $Nu$

as a function of latent dimension

$Nu$

as a function of latent dimension

![]() $d$

. Figure 8 shows the difference between the true Nusselt number

$d$

. Figure 8 shows the difference between the true Nusselt number

![]() $Nu$

and the reconstructed Nusselt number

$Nu$

and the reconstructed Nusselt number

![]() $\widetilde {Nu}$

across varying

$\widetilde {Nu}$

across varying

![]() $d$

. This difference stabilizes around

$d$

. This difference stabilizes around

![]() $d=1500$

, indicating that the model reliably captures

$d=1500$

, indicating that the model reliably captures

![]() $Nu$

at this dimension. These figures collectively serve as a visual testament to the power of the F

$Nu$

at this dimension. These figures collectively serve as a visual testament to the power of the F

![]() $d$

AE in identifying and reconstructing features across various scales, highlighting the importance of latent space dimensionality in the performance of the model. One of the main goals of this paper is to introduce a clear metric to determine the specific value of

$d$

AE in identifying and reconstructing features across various scales, highlighting the importance of latent space dimensionality in the performance of the model. One of the main goals of this paper is to introduce a clear metric to determine the specific value of

![]() $d$

at which all relevant scales are accurately reconstructed, as discussed in detail below.

$d$

at which all relevant scales are accurately reconstructed, as discussed in detail below.

Figure 9 shows the mean squared errors (MSEs) between input and output, MSE

![]() $(\Delta \theta )$

, as a function of latent space dimension,

$(\Delta \theta )$

, as a function of latent space dimension,

![]() $d$

. Additionally, the plot includes comparative lines representing the results of PCA and a decomposition combining Fourier and the discrete sine transform (DST) in the

$d$

. Additionally, the plot includes comparative lines representing the results of PCA and a decomposition combining Fourier and the discrete sine transform (DST) in the

![]() $z$

(non-periodic) direction for different latent space dimensions, i.e. the number of modes used in each case. Such comparisons are instrumental in benchmarking the performance of the F

$z$

(non-periodic) direction for different latent space dimensions, i.e. the number of modes used in each case. Such comparisons are instrumental in benchmarking the performance of the F

![]() $d$

AE against these established linear techniques. To ensure a fair comparison, when doing PCA, we projected the training dataset and then evaluated the test data using the modes obtained from the initial decomposition. It is important to note that due to the large size of the training dataset matrix, which stacks all the flattened images, performing PCA directly is too computationally intensive. Consequently, we resorted to using incremental PCA from Scikit-learn (Pedregosa et al. Reference Pedregosa2011), which is a suitable approximation method for large datasets that computes PCA over small batches.

$d$

AE against these established linear techniques. To ensure a fair comparison, when doing PCA, we projected the training dataset and then evaluated the test data using the modes obtained from the initial decomposition. It is important to note that due to the large size of the training dataset matrix, which stacks all the flattened images, performing PCA directly is too computationally intensive. Consequently, we resorted to using incremental PCA from Scikit-learn (Pedregosa et al. Reference Pedregosa2011), which is a suitable approximation method for large datasets that computes PCA over small batches.

In neither case does the MSE exhibit a sharp drop-off that would indicate the minimum number of dimensions needed to accurately represent the flow. This is to be expected in systems with this degree of scale separation, for example, when studying the Kuramoto–Sivashinsky equation, Linot & Graham (Reference Linot and Graham2022) found sharp decreases in the MSE at the

![]() $d$

coinciding with that estimated for the inertial manifold only when using small domain sizes, when they increased the domain the drop-off became noticeably smoother. Similar results were observed in studies of turbulent pipe flows (Constante-Amores et al. Reference Constante-Amores, Linot and Graham2024). This effect is only greater in our case. The F

$d$

coinciding with that estimated for the inertial manifold only when using small domain sizes, when they increased the domain the drop-off became noticeably smoother. Similar results were observed in studies of turbulent pipe flows (Constante-Amores et al. Reference Constante-Amores, Linot and Graham2024). This effect is only greater in our case. The F

![]() $d$

AE outperforms the linear methods in terms of the MSE within the test dataset, demonstrating its capacity to capture the nonlinearity attributes present in the dataset. Furthermore, it is important to acknowledge that the MSE might not be the most fitting metric to gauge the fidelity of reconstructions, particularly for smaller scales, as it predominantly captures the high energy modes, which are effectively resolved even at lower latent space dimensions as demonstrated in figure 6.

$d$

AE outperforms the linear methods in terms of the MSE within the test dataset, demonstrating its capacity to capture the nonlinearity attributes present in the dataset. Furthermore, it is important to acknowledge that the MSE might not be the most fitting metric to gauge the fidelity of reconstructions, particularly for smaller scales, as it predominantly captures the high energy modes, which are effectively resolved even at lower latent space dimensions as demonstrated in figure 6.

Figure 9. Comparison of the MSE of the temperature field (

![]() $\theta$

) reconstructions against the latent space dimension

$\theta$

) reconstructions against the latent space dimension

![]() $d$

for the F

$d$

for the F

![]() $d$

AE (represented by circles), PCA (illustrated with squares) and Fourier-DST decomposition (denoted by triangles) using the same dataset. For the autoencoder, each data point corresponds to the average of three runs with minor variations.

$d$

AE (represented by circles), PCA (illustrated with squares) and Fourier-DST decomposition (denoted by triangles) using the same dataset. For the autoencoder, each data point corresponds to the average of three runs with minor variations.

Figure 10. Error spectra

![]() $E_{\Delta \theta }$

for various reconstructions, alongside the reference energy spectra

$E_{\Delta \theta }$

for various reconstructions, alongside the reference energy spectra

![]() $E_\theta$

for comparison. The spectra were calculated at

$E_\theta$

for comparison. The spectra were calculated at

![]() $z_p$

. The dotted vertical line indicates the wavenumber corresponding to the Kolmogorov scale

$z_p$

. The dotted vertical line indicates the wavenumber corresponding to the Kolmogorov scale

![]() $k_\eta$

also at

$k_\eta$

also at

![]() $z_p$

, and the dots represent the maximum resolved

$z_p$

, and the dots represent the maximum resolved

![]() $k_x$

for each

$k_x$

for each

![]() $d$

.

$d$

.

Figure 11. Maximum

![]() $k_x^*$

resolved by each F

$k_x^*$

resolved by each F

![]() $d$

AE for different heights. The horizontal line shows the Kolmogorov scale

$d$

AE for different heights. The horizontal line shows the Kolmogorov scale

![]() $k_\eta$

of the flow at

$k_\eta$

of the flow at

![]() $z_p$

. At

$z_p$

. At

![]() $d^*=2250$

the network reconstructs scales up to

$d^*=2250$

the network reconstructs scales up to

![]() $k_\eta$

for

$k_\eta$

for

![]() $z_p$

.

$z_p$

.

To quantify the ability of the F

![]() $d$

AE to represent different scales in the flow, we compute the energy spectrum of the difference between input and output:

$d$

AE to represent different scales in the flow, we compute the energy spectrum of the difference between input and output:

Here

![]() $\Delta \hat {\theta } (k_x, z_p, t )$

are the Fourier coefficients of

$\Delta \hat {\theta } (k_x, z_p, t )$

are the Fourier coefficients of

![]() $\Delta \theta$

in the horizontal direction at height

$\Delta \theta$

in the horizontal direction at height

![]() $z_p$

and

$z_p$

and

![]() $\langle , \rangle _t$

is a time average. The resulting spectra, calculated for

$\langle , \rangle _t$

is a time average. The resulting spectra, calculated for

![]() $d=100$

,

$d=100$

,

![]() $500$

,

$500$

,

![]() $2250$

and

$2250$

and

![]() $4096$

, are shown in figure 10, alongside the spectrum of the original test dataset as reference. For

$4096$

, are shown in figure 10, alongside the spectrum of the original test dataset as reference. For

![]() $d=100$

, the error spectrum has large values (compared with the original spectrum) across almost all scales, indicating the inability of the F

$d=100$

, the error spectrum has large values (compared with the original spectrum) across almost all scales, indicating the inability of the F

![]() $d$

AE to accurately represent the flow. As the latent space dimension increases, the F

$d$

AE to accurately represent the flow. As the latent space dimension increases, the F

![]() $d$

AE progressively improves at capturing features corresponding to smaller wavenumbers

$d$

AE progressively improves at capturing features corresponding to smaller wavenumbers

![]() $k_x$

. At

$k_x$

. At

![]() $d=2250$

, the F

$d=2250$

, the F

![]() $d$

AE can faithfully represent scales up to the Kolmogorov scale

$d$

AE can faithfully represent scales up to the Kolmogorov scale

![]() $k_\eta (z_p)$

, therefore, completely describing the flow, as expected from the results above. The significance of

$k_\eta (z_p)$

, therefore, completely describing the flow, as expected from the results above. The significance of

![]() $z_p$

and the justification for choosing it as the relevant height is given below.

$z_p$

and the justification for choosing it as the relevant height is given below.

By inspecting figure 10, we can define the resolved wavenumber

![]() $k_r$

for each

$k_r$

for each

![]() $d$

as the first wavenumber

$d$

as the first wavenumber

![]() $k_x$

at which the corresponding

$k_x$

at which the corresponding

![]() $E_{\Delta \theta }$

intersects with

$E_{\Delta \theta }$

intersects with

![]() $E_\theta$

(the spectrum of the input). This intersection represents the smallest scale at which the output of the networks fails to accurately reproduce the input, effectively indicating the maximum

$E_\theta$

(the spectrum of the input). This intersection represents the smallest scale at which the output of the networks fails to accurately reproduce the input, effectively indicating the maximum

![]() $k_x$

that is resolved for each

$k_x$

that is resolved for each

![]() $d$

. This is shown in figure 11, where we observe that the F

$d$

. This is shown in figure 11, where we observe that the F

![]() $d$

AE networks start to reproduce scales up to

$d$

AE networks start to reproduce scales up to

![]() $k_\eta (z_p)$

for

$k_\eta (z_p)$

for

![]() $d=2250$

. Note that in this figure we also repeat the analysis at heights closer to the centre of the channel. At these higher heights not only is the Kolmogorov length scale larger, as seen in figure 5, but the autoencoder is also able to resolve smaller scales at lower

$d=2250$

. Note that in this figure we also repeat the analysis at heights closer to the centre of the channel. At these higher heights not only is the Kolmogorov length scale larger, as seen in figure 5, but the autoencoder is also able to resolve smaller scales at lower

![]() $d$

. We recall too that at lower heights the dynamics is dominated by the boundary layer that is also resolved at lower values of

$d$

. We recall too that at lower heights the dynamics is dominated by the boundary layer that is also resolved at lower values of

![]() $d$

, as shown in figure 7. Therefore, when the autoencoder is able to resolve

$d$

, as shown in figure 7. Therefore, when the autoencoder is able to resolve

![]() $\eta _K(z_p)$

, it is also able to resolve the smallest scale at every height. We then define the minimum dimension of the latent space needed by the autoencoder to properly resolve all scales of the temperature field,

$\eta _K(z_p)$

, it is also able to resolve the smallest scale at every height. We then define the minimum dimension of the latent space needed by the autoencoder to properly resolve all scales of the temperature field,

![]() $d^*$

, as that for which

$d^*$

, as that for which

![]() $k_r \approx k_\eta$

at

$k_r \approx k_\eta$

at

![]() $z_p$

. This definition acknowledges the multiscale nature of the flow and does not rely on finding a sharp drop-off in a given metric, a task that is not always possible. Note that if the autoencoder was handling other physical fields besides the temperature, this criteria would need to be expanded such that every scale of every field is accounted for. Therefore, at

$z_p$

. This definition acknowledges the multiscale nature of the flow and does not rely on finding a sharp drop-off in a given metric, a task that is not always possible. Note that if the autoencoder was handling other physical fields besides the temperature, this criteria would need to be expanded such that every scale of every field is accounted for. Therefore, at

![]() $Ra=10^8$

we obtain

$Ra=10^8$

we obtain

![]() $d^*=2250$

. We extend this analysis to other Rayleigh numbers below.

$d^*=2250$

. We extend this analysis to other Rayleigh numbers below.

Figure 12. Distribution of the normalized IQR for the SIAE with different regularization values

![]() $\lambda$

, where the normalization is done using the maximum IQR value. (a) The IQR values for the network with a regularization strength of

$\lambda$

, where the normalization is done using the maximum IQR value. (a) The IQR values for the network with a regularization strength of

![]() $\lambda =10^{-1}$

, with the line marking a threshold that differentiates the two observed distributions. (b) The IQR distributions for various

$\lambda =10^{-1}$

, with the line marking a threshold that differentiates the two observed distributions. (b) The IQR distributions for various

![]() $\lambda$

values, including a comparison with the F

$\lambda$

values, including a comparison with the F

![]() $d$

AE (

$d$

AE (

![]() $\lambda =0$

, shown in darker shading). The line in this figure represents the same threshold as identified in (a).

$\lambda =0$

, shown in darker shading). The line in this figure represents the same threshold as identified in (a).

3.2. Sparsity-inducing autoencoders

We now turn our attention to the SIAE, while focusing again on the

![]() $Ra = 10^8$

case. We repeat the same experiments as above using a fixed bottleneck size of

$Ra = 10^8$

case. We repeat the same experiments as above using a fixed bottleneck size of

![]() $d'=4096$

and then proceed to analyse the structure of the latent vector. To identify which dimensions of

$d'=4096$

and then proceed to analyse the structure of the latent vector. To identify which dimensions of

![]() $Z'$

are relevant, we process each snapshot of the test dataset through the trained network, extract

$Z'$

are relevant, we process each snapshot of the test dataset through the trained network, extract

![]() $z'$

for each instance, and then calculate the IQR of each dimension of the vector as a measure of their variability. A large IQR indicates significant activity in that dimension during the reconstruction of the test dataset.

$z'$

for each instance, and then calculate the IQR of each dimension of the vector as a measure of their variability. A large IQR indicates significant activity in that dimension during the reconstruction of the test dataset.

We tested different values of the regularization parameter

![]() $\lambda$

. This led to varying distributions of the IQR of the bottleneck layer activations as seen in figure 12(b). We employed two criteria for selecting the regularization parameter. First, we evaluated the MSE of the reconstructions for different values of

$\lambda$