1. INTRODUCTION

[J]oin[ing] the mechanical power of the machine to the nuances and ‘subjectivizing’ control of the human performer … is itself an aesthetic value for our new age. This ultimately will be the way music will need to move until the next big issues arise. (Garnett 2001: 32)

Human–computer interaction (HCI) in music is a subfield of the sonic arts whose myriad manifestations occupy widely varying social contexts. It emerged initially from the field of experimental/academic electroacoustic music, where its development was facilitated ‘in large part due to the melodic/harmonic, rhythmic and timbral/textural freedom associated with the genre’ (Meikle 2016: 235), as exemplified by The Hands (Waisvisz 2006; Waisvisz et al. 1984–2006) and The Hub (Bischoff, Perkins, Brown, Gresham-Lancaster, Trayle and Stone 1987–present; Brown nd; Early Computer Network Ensembles nd). The subsequent evolution of HCI in music has engendered a menagerie of contemporary interactive computer music systems (ICMSs), all of which fall into one of three overarching categories: sequenced, transformative and generative (Rowe 1994; Drummond 2009).

Sequenced systems (e.g., Incredibox (So Far So Good 2011–present), Patatap (Brandel 2012–present; Brandel 2015) and Adventure Machine (Madeon 2015b)) are usually tailored towards a single user and allow for the orchestration and arrangement of musically complex, system-specific or pre-existing compositions, the constituent parts of which are broken up into short loops. However, they rarely afford users the opportunity to create original music by recording their own loops/phrases, and they are often devoid of computer influence over the musical output of the system. While their stringent musical restrictions make sequenced systems ideal for novice users and musicians, the absence of a synergistic approach to musical creation involving both user and computer means it could be argued that such systems are predominantly reactive as opposed to interactive. As the aforementioned examples indicate, sequenced systems are ordinarily designed as touchscreen or web-based applications, with the odd exception such as STEPSEQUENCER (Timpernagel et al. 2013–14; STEPSEQUENCER nd), a mixed reality interactive installation.

Transformative and generative systems rely upon underlying algorithmic frameworks, and examples of the former are generally classified as ‘score-followers’. ‘Score-followers’ work in tandem with trained musicians playing traditional or augmented instruments when performing pre-composed works and ordinarily demonstrate direct, instantaneous audio/Musical Instrument Digital Interface (MIDI) manipulation of user input. As with sequenced systems, the results can be musically complex; but while the user has far greater depth-in-control (i.e., the level of precision with which they are able to deliberately influence the musical output of the system) than is the case with sequenced systems, the requisite level of instrumental proficiency is an inhibiting factor for novice users. Examples of transformative systems include Maritime (Rowe 1992/99, 1999; Drummond 2009), Voyager (Lewis 1993, 2000; Drummond 2009), Music for Clarinet and ISPW (Lippe 1992/97, 1993) and Pluton (Manoury 1988; Puckette and Lippe 1992).

Generative systems generate appropriate musical responses to user input. Because they are often stylistically ambient, and therefore simpler in terms of form and structure, they are better suited than sequenced and transformative systems to facilitating multi-user interaction. The frequently ambient nature of generative systems is conducive to supporting novice user interaction but also means that users are often afforded limited depth-in-control. Examples of generative systems are more varied than those of sequenced and transformative systems, encompassing touchscreen-based applications (NodeBeat (Sandler, Windle and Muller 2011–present), Bloom (Eno and Chilvers 2008), Bloom 10: Worlds (Eno and Chilvers 2018)), multichannel audiovisual installations (Gestation (Paine 1999–2001, 2013), Bystander (Gibson and Richards 2004–06, 2008)), open-world musical exploration games (FRACT OSC (Flanagan, Nguyen and Boom 2011–present), PolyFauna (Yorke, Godrich and Donwood 2014)) and mixed reality performances (DÖKK (fuse* 2017)).

While there is notable crossover between the various types of ICMS and the social contexts they inhabit, from conferences and gallery installations/exhibitions to classrooms, live stage performances and electronic music production studios, the vast majority of ICMSs are designed to function solely within the confines of their own contextual realm. ScreenPlay, the system presented in this article, encapsulates the fundamental concepts underpinning all three approaches to ICMS design and, in doing so, transcends these socially contextual boundaries of implementation by capitalising on the respective strengths of each approach and negating their weaknesses.

2. DESIGN AND DEVELOPMENT METHODOLOGY

2.1. Design overview

At its core, ScreenPlay takes a sequenced approach to interaction, affording the ability to intuitively orchestrate complex musical works by combining individual loops. However, it is the ability to record these constituent loops from scratch, resulting in the composition/performance of entirely original works, that sets ScreenPlay apart from other sequenced systems. The inherent lack of computer influence over the musical output of sequenced systems is addressed through the inclusion of topic-theory-inspired transformative and Markovian generative algorithms, both of which alter the notes of musical lines/phrases recorded/inputted by the user(s) and which together furnish novel ways of breaking routine in collaborative, improvisatory performance and of generating new musical ideas in composition. When triggered, the Markovian generative algorithm continuously generates notes in real time in direct response to melodic/harmonic phrases played/recorded into the system by the user(s). The topic-theory-inspired transformative algorithm alters the notes of melodies recorded into the system as clips/loops, whilst topic theory also provides the conceptual framework for the textural/timbral manipulation of sound, thus introducing additional dimensions of expressivity.
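The internals of the Markovian generative algorithm are not specified here. As a minimal sketch, assuming a first-order chain over MIDI pitches that learns transitions from each phrase the user plays (the chain order, the class and method names and the dead-end handling are all assumptions, not ScreenPlay’s implementation), the generative behaviour might look like this:

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional


class MarkovMelody:
    """Hypothetical first-order Markov chain over MIDI pitch numbers."""

    def __init__(self, seed: Optional[int] = None) -> None:
        # Each pitch maps to the list of pitches observed to follow it;
        # duplicates in the list encode how often a transition occurred.
        self.transitions: Dict[int, List[int]] = defaultdict(list)
        self.rng = random.Random(seed)

    def learn(self, phrase: List[int]) -> None:
        """Add the pitch-to-pitch transitions of a user phrase to the model."""
        for current, following in zip(phrase, phrase[1:]):
            self.transitions[current].append(following)

    def generate(self, start: int, length: int) -> List[int]:
        """Random walk through the learned transitions, starting from `start`."""
        notes = [start]
        for _ in range(length - 1):
            options = self.transitions.get(notes[-1])
            if not options:
                # Dead end (assumption): jump to any learned state instead.
                options = list(self.transitions) or [start]
            notes.append(self.rng.choice(options))
        return notes


model = MarkovMelody(seed=1)
model.learn([60, 62, 64, 62, 60])  # a phrase played by the user
print(model.generate(60, 8))       # eight pitches drawn from learned transitions
```

In a real-time setting, `generate` could be called one note at a time on each quantised step, with `learn` updated whenever the user records a new phrase; rhythm, velocity and harmonic context, which a fuller system would need, are deliberately omitted.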

ScreenPlay’s potential for musical complexity departs from the prevailing approach to generative ICMS design, overcoming one of that approach’s major weaknesses: its limited capacity to captivate users, both novice and experienced, beyond the initial point of intrigue. The other weakness of the generative approach, often shared by sequenced systems, is the lack of depth-in-control afforded to users over the musical output of the system; this is combatted by drawing upon the strength of transformative, ‘score-following’ systems, namely the potential for virtuosic performance. The grid-based playing surface, inspired by that of Ableton Push (2013) (Figure 2), affords the opportunity to increase one’s proficiency in interacting with the system and to perform with freedom of expression through practice, in the manner commonly associated with traditional musicianship, via increased dexterity, muscle memory training and an improved sense of rhythm and timing, thus cementing ScreenPlay’s ability to engage experienced users/musicians. To offset the constraints that this approach imposes on accessibility/usability for novice users/musicians, it is possible to ‘lock’ the pitch intervals between the individual pads comprising the button matrix to those of a specific scale/mode and to apply quantisation to recorded notes and the triggering of clips.

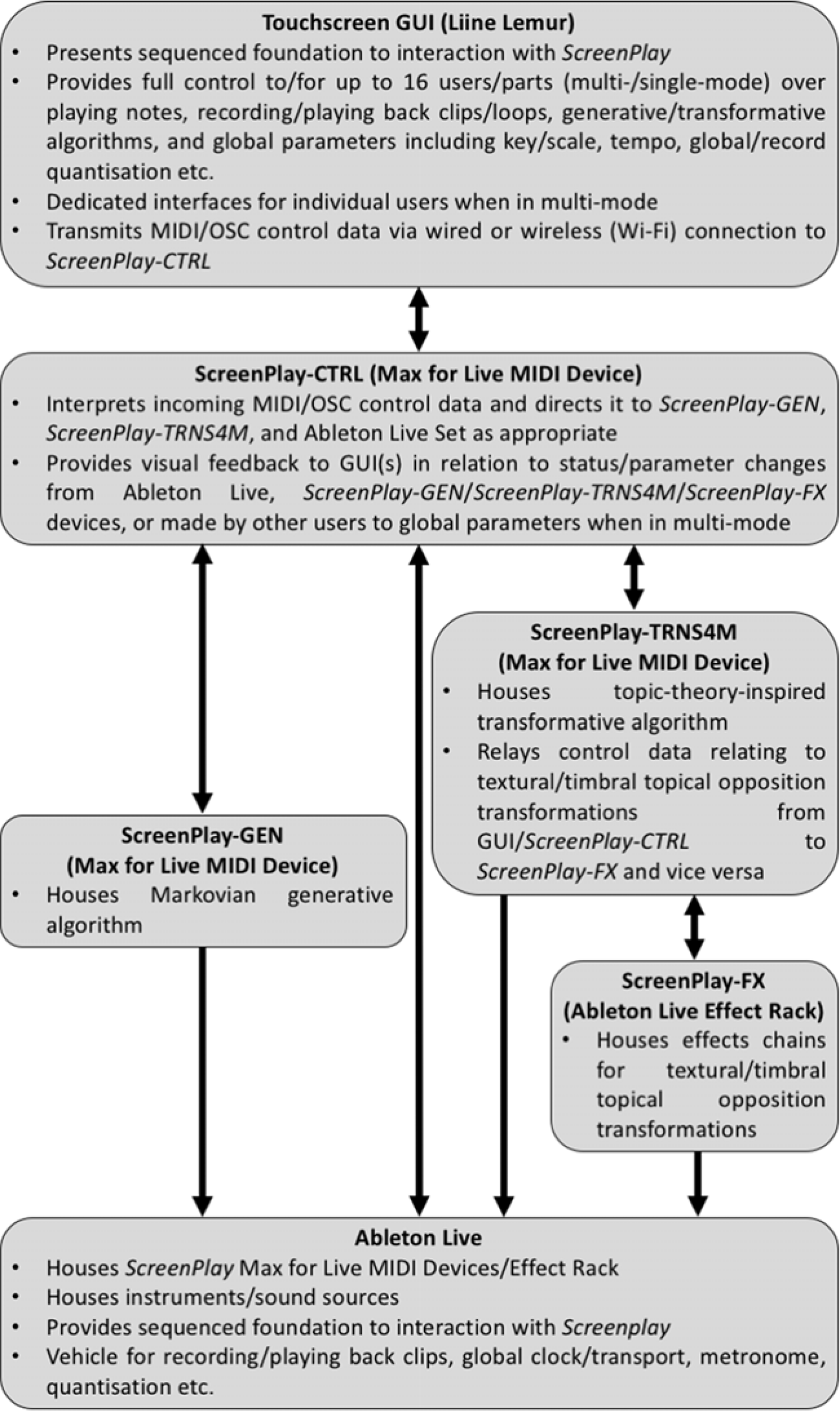

Figure 1. System overview diagram.

Figure 2. ScreenPlay GUI playing surface.

Through its inclusive design, ScreenPlay manifests a duality in function that is rare within the field of HCI in music: the capability of operating both as a multi-user-and-computer collaborative, improvisatory interactive performance system and as a single-user-and-computer studio compositional tool for Ableton Live. In multi-mode, each user is afforded control over one of up to 16 individual instruments/parts within the musical output of the system via a dedicated graphical user interface (GUI). In this configuration, ScreenPlay is ideally suited to function as a gallery installation, a scenario in which the ability to set the system up using pre-recorded clips/loops helps facilitate intuitive and engaging interactive musical experiences between numerous novice users and the computer. Alternatively, in single-mode, ScreenPlay affords the user direct control over up to 16 individual parts from a single GUI, which updates in real time to accurately reflect the status of the currently selected part; this mode is ideal for studio composition/live performance scenarios. Furthermore, the suite of Max for Live MIDI Devices and the Ableton Live Audio Effect Rack that constitute the computational framework of ScreenPlay (ScreenPlay-CTRL, ScreenPlay-GEN, ScreenPlay-TRNS4M and ScreenPlay-FX) each include their own GUI controls (Figure 3). These are accessible without the touchscreen-based GUI, but when the two are used together, changes made in one are reflected in the other, which further improves ScreenPlay’s integrability with the existing composition/production/performance workflows/setups of practising electronic/computer musicians. A schematic of ScreenPlay and the lines of communication between its constituent elements can be seen in Figure 1.

Figure 3. ScreenPlay Max for Live MIDI Devices and Ableton Live Audio Effect Rack. From left to right: ScreenPlay-CTRL, ScreenPlay-GEN, ScreenPlay-TRNS4M, ScreenPlay-FX.

ScreenPlay’s reliance on Ableton Live also provides a framework to build upon the surge of interactive music releases that has, in recent years, paved the way for HCI in music to emerge into mainstream popular culture. Due in large part to the concurrent rise in popularity of electronic dance music, the loop-based structure of which is ideally suited to enabling interactive musical experiences for non-musicians, and to the advent of powerful, portable, affordable touchscreen devices (Meikle 2016), many contemporary popular musicians have chosen to release their music as interactive/audiovisual applications, the majority of which are sequenced in nature. Examples include Biophilia (Björk 2011), Reactable Gui Boratto (Boratto, Jordà, Kaltenbrunner, Geiger and Alonso 2012), Reactable Oliver Huntemann (Huntemann, Jordà, Kaltenbrunner, Geiger and Alonso 2012), ARTPOP (Lady Gaga 2013), Doompy Poomp (Division Paris, Skrillex and Creators Project 2014; Skrillex 2014), THERMAL (Teengirl Fantasy 2014; Teengirl Fantasy and 4real 2014) and Adventure/Adventure Machine (Madeon 2015a, 2015b). More recently still, the widespread success of the interactive music game BEAT FEVER – Music Planet (WRKSHP 2016–present) for iOS and Android has seen many mainstream/pop artists release music licensed for use in the game, including Steve Aoki’s Neon Future III (2018), Getter’s Visceral (2018) and R3HAB’s Trouble (2017).

At the same time, more ‘underground’ electronic music producers have been actively distributing their music in the form of Ableton Live Packs (ALPs), with artists such as Mad Zach and ill.gates leading the way in this regard (examples include ‘Noth’ (Mad Zach 2018a), ‘Brootle’ (Mad Zach 2018b), ‘Hiroglifix’ (Mad Zach and Barclay Crenshaw 2017), ‘The Demon Slayer PT 1’ and ‘The Demon Slayer PT 2’ (ill.gates 2012a, 2012b) and ‘Otoro’ (ill.gates 2011)). By virtue of its primarily sequenced approach to musical interaction, ScreenPlay lends itself to, but is not limited to, the interactive composition and performance of popular electronic music. Allied with its incorporation of Markovian generative and topic-theory-inspired transformative algorithms, this presents a plethora of new possibilities for interacting with musical releases in this format, which ordinarily take a more standardised approach to sequenced interaction.

2.2. Key design concepts

ScreenPlay’s ability to transcend the socially contextual categorisation inherent to ICMS design and implementation can be attributed, in part, to its assimilation of various key principles and conceptual frameworks for ICMS and interface design, including Blaine and Fels’s 11 criteria for collaborative musical interface design (Blaine and Fels 2003), Norman’s theoretical development of Gibson’s concept of affordances (Norman 1994, 2002, 2008; Gibson 1979 [1986]) and Benford’s approach to the classification of ‘high level design strategies for spectator interfaces’ (Benford 2010: 55), which together allow for multifunctionality in both its creative workflow and GUI.

2.2.1. Affordances

ScreenPlay implements touchscreen-based interfacing because of its potential to afford a high level of depth-in-control over the musical output of the system. In relation to screen-based interfacing, Norman (2002, 2008) distinguishes between real affordances (those expressed by the physical attributes of an object) and perceived affordances (those which convey the effects of a given control object/gesture through the metaphorical/symbolic representation of its function using language and/or imagery), and also lays out four principles for the design of screen-based interfaces (2008):

1. ‘Follow conventional usage, both in the choice of images and the allowable interactions’.

2. ‘Use words to describe the desired action’.

3. ‘Use metaphor’.

4. ‘Follow a coherent conceptual model so that once part of the interface is learned, the same principles apply to other parts’.

An advantage of using touchscreen-based interfacing (Figure 2; also see Figure 4) as the means of control for ScreenPlay is the ubiquity of touchscreen devices in modern-day society and the resulting widespread learned cultural codes and conventions (Saussure 1959: 65–70) that furnish users with an immediate sense of the real affordances of the interface and of how to discern its perceived affordances. For most touchscreens, real affordances are limited to tapping and pressing/holding (including vertical/lateral movement). ‘In graphical, screen-based interfaces, all that the designer has available is control over perceived affordances’ (Norman 2008). These constraints bring to bear another of Benford’s concepts, closely linked to his ‘high level design strategies’ (2010): that there are three primary gesture types to be considered in ICMS design, namely those which are expected (by the user), sensed (by the system) and desired (by the system designer) (Benford et al. 2005; Benford 2010). In developing ScreenPlay, the design of an intuitive and engaging GUI, and of the resulting interactive musical experience for both novice and experienced users and musicians, focused on shaping users’ expectations of meaningful gestures and interactions by conveying the desired control gestures through the perceived affordances of the system.

Figure 4. ScreenPlay GUI algorithm controls.

ScreenPlay’s GUI makes use of fairly universal symbols and control objects for basic functionality, such as green/red play/record buttons, +/- signs to transpose the range of the grid-based playing surface by octaves, sliders for velocity and tempo control, and drop-down menus for key/scale selection, global/record quantisation settings, loop length and instrument/part selection in single-mode. Beyond this, it was necessary to take a strategic and creative approach to communicating desired control gestures and system status information, while remaining mindful of the constraints imposed upon design by the protocols and hardware being used, of accessibility for a broad range of potential users and of overarching aesthetic values. Chief among these considerations was devising a suitable means of communicating to users the real-time status of any given part in the overall musical output of the system in relation to the global transport position in Ableton Live. ScreenPlay’s GUI has been developed using Liine Lemur (2011–present), which allows for both wired and wireless communication of MIDI and Open Sound Control (OSC) data between the GUI(s) and the central computer system. This attribute is in itself paramount to ScreenPlay’s ability to transcend the socially contextual boundaries that the design of many ICMSs imposes on their implementation: a wireless setup is more practical in a multi-user installation environment, whereas the reduced latency and increased reliability of a wired setup are conducive to the successful integration of ScreenPlay into studio composition and live performance setups/workflows. To communicate transport position, ScreenPlay’s GUI displays the passage of time via a horizontal ‘timebar’ that moves from left to right across the top of the display over the course of a single bar. Testing showed the continuous motion of the timebar to be a more effective temporal indicator than a metronomic light synchronised with the musical pulse, because fluctuations in Wi-Fi signal strength caused timing inaccuracies in the latter when the system was set up in a wireless configuration. Furthermore, the colour of the timebar updates to reflect the playback status of the corresponding part: it turns green if a clip is playing, red if recording is enabled and blue if there is no active clip. The currently selected clip is highlighted by a series of indicator lights at the top of each clip slot.
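The timebar behaviour can be illustrated with a minimal sketch that derives the bar’s position and colour from an absolute transport position. The function names, the 4/4 default and the precedence of recording over playback when both apply are assumptions, not details of ScreenPlay’s Lemur implementation.

```python
from enum import Enum


class PartStatus(Enum):
    """Timebar colours for a part's playback state, as described in the text."""
    PLAYING = "green"
    RECORDING = "red"
    NO_CLIP = "blue"


def timebar_fraction(transport_beats: float, beats_per_bar: int = 4) -> float:
    """Fraction of the current bar elapsed (0.0 up to, but not including, 1.0)."""
    return (transport_beats % beats_per_bar) / beats_per_bar


def timebar_x(transport_beats: float, width_px: int, beats_per_bar: int = 4) -> int:
    """Horizontal pixel position of the timebar on a display of the given width."""
    return int(timebar_fraction(transport_beats, beats_per_bar) * width_px)


def timebar_colour(clip_playing: bool, record_enabled: bool) -> str:
    # Assumption: recording takes precedence over playback when both are true.
    if record_enabled:
        return PartStatus.RECORDING.value
    if clip_playing:
        return PartStatus.PLAYING.value
    return PartStatus.NO_CLIP.value


print(timebar_fraction(5.0))        # 0.25: one beat into the second bar
print(timebar_x(6.0, 1024))         # 512: halfway across a 1024-pixel display
print(timebar_colour(True, False))  # green
```

Because each update in this sketch carries the absolute transport position rather than a pulse, a late message would only momentarily misplace the bar instead of shifting a beat flash; this is one plausible reading of why the continuous display tested better over Wi-Fi.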

Although ScreenPlay has been designed to be controlled via a tablet rather than a smartphone, variable screen size and resolution were another important consideration when developing the GUI. The most fundamental design choice made with this in mind was the aspect ratio, chosen to maximise the compatibility of the GUI with different devices and thus further expand its potential for implementation within a variety of social contexts without being subject to hardware limitations. While Apple’s iPad and some premium Android tablets use a 4:3 aspect ratio, 16:9 is common among mid-range and budget Android tablets. ScreenPlay uses a 16:9 aspect ratio because the relative sizing of the interface translates better from 16:9 to 4:3, where it is presented in full size with black bars at the top and bottom of the display, than from 4:3 to 16:9, where it must be decreased in size to compensate for the reduced height of a landscape-oriented display. Various measures have also been taken to reduce clutter on the GUI, which both has an aesthetic benefit and helps clarify perceived affordances and desired control gestures. One of these is ‘hold-to-delete’ functionality for the 16 clip slots in place of dedicated ‘clip clear’ buttons. Tapping a clip slot, meanwhile, either creates a new clip, if the slot is empty and either record or fixed loop length is enabled (pre-determined loop lengths of one, two or four bars are available), or begins playback of the clip contained within the slot at the next available opportunity as dictated by the global quantisation value. ScreenPlay’s GUI is also restricted to a single display page: a switch in the bottom left corner of the interface alternates the centre of the screen between the grid-based playing surface and the generative/transformative algorithm controls, while the surrounding controls remain the same. This ensures that controls for functionality such as playback, key/scale selection and clip triggering are always at hand.
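The tap and hold behaviour of the clip slots amounts to a small state machine. The sketch below models it under stated assumptions: the class and function names are hypothetical, a clip created while recording without a fixed length is given an open-ended length, and ‘next available opportunity’ is read as the next multiple of the global quantisation value (1.0 beat for crotchets, 4.0 beats for one bar in 4/4).

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ClipSlot:
    has_clip: bool = False
    length_bars: Optional[int] = None  # None: length fixed when recording stops


@dataclass
class Part:
    slots: List[ClipSlot] = field(default_factory=lambda: [ClipSlot() for _ in range(16)])
    record_enabled: bool = False
    fixed_length_bars: Optional[int] = None  # 1, 2 or 4 when fixed length is on
    queued_slot: Optional[int] = None
    queued_beat: Optional[float] = None


def next_launch_beat(now_beats: float, quant_beats: Optional[float]) -> float:
    """Next quantisation boundary; None means quantisation is disabled."""
    if quant_beats is None:
        return now_beats
    return math.ceil(now_beats / quant_beats) * quant_beats


def tap(part: Part, index: int, now_beats: float, quant_beats: Optional[float]) -> str:
    """Tap: create a clip in an empty slot, or queue an existing clip to play."""
    slot = part.slots[index]
    if not slot.has_clip:
        if part.record_enabled or part.fixed_length_bars is not None:
            slot.has_clip = True
            slot.length_bars = part.fixed_length_bars
            return "created"
        return "ignored"  # empty slot with neither record nor fixed length on
    part.queued_slot = index
    part.queued_beat = next_launch_beat(now_beats, quant_beats)
    return "queued"


def hold(part: Part, index: int) -> None:
    """Hold-to-delete: clear the slot instead of using a 'clip clear' button."""
    part.slots[index] = ClipSlot()


part = Part(fixed_length_bars=2)
print(tap(part, 0, 0.0, 4.0))  # created
print(tap(part, 0, 5.5, 4.0))  # queued, to start at beat 8.0 (next bar)
```

Folding creation, launching and deletion into gestures on the slot itself is what allows the GUI to drop separate buttons, which is the clutter reduction the paragraph describes.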

Balancing the size and number of pads that comprise the playing surface was another concern when accounting for different screen sizes. The decision to use 40 pads in an eight by five configuration affords a sufficiently large musical range (almost three full octaves when using a diatonic scale and slightly more than two when using a chromatic scale) while ensuring that the individual pads are large enough to be used comfortably on a screen as small as eight inches. The scales currently supported by ScreenPlay are Ionian, Dorian, Phrygian, Lydian, Mixolydian, Aeolian, Locrian and chromatic. When a diatonic scale is used, pitches ascend from left to right along each row, and the ascent continues vertically so that each pad sits three scale degrees above the pad directly below it, allowing a standard triad to be formed anywhere on the grid with the same hand shape. Additionally, the pads to which root notes are assigned are coloured differently from the other pads to aid orientation.
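The scale-locked pad layout can be sketched as a mapping from (row, column) to MIDI note. This is an illustration only: the function names are hypothetical, and the five-semitone row offset used for the chromatic scale is an assumption, chosen because it reproduces the ‘slightly more than two octaves’ range stated above, whereas the text only specifies the three-degree offset for diatonic scales.

```python
from typing import Dict, List

COLS, ROWS = 8, 5

# Semitone steps of the scales ScreenPlay supports.
MODES: Dict[str, List[int]] = {
    "ionian":     [0, 2, 4, 5, 7, 9, 11],
    "dorian":     [0, 2, 3, 5, 7, 9, 10],
    "phrygian":   [0, 1, 3, 5, 7, 8, 10],
    "lydian":     [0, 2, 4, 6, 7, 9, 11],
    "mixolydian": [0, 2, 4, 5, 7, 9, 10],
    "aeolian":    [0, 2, 3, 5, 7, 8, 10],
    "locrian":    [0, 1, 3, 5, 6, 8, 10],
    "chromatic":  list(range(12)),
}


def row_offset(mode: str) -> int:
    """Scale steps between vertically adjacent pads (chromatic value assumed)."""
    return 5 if mode == "chromatic" else 3


def scale_degree(row: int, col: int, mode: str) -> int:
    return row * row_offset(mode) + col  # row 0 is the bottom row


def pad_note(row: int, col: int, root_midi: int, mode: str) -> int:
    """MIDI note of a pad, locked to the chosen scale."""
    steps = MODES[mode]
    octave, index = divmod(scale_degree(row, col, mode), len(steps))
    return root_midi + 12 * octave + steps[index]


def is_root_pad(row: int, col: int, mode: str) -> bool:
    """Root-note pads, which the GUI colours differently."""
    return scale_degree(row, col, mode) % len(MODES[mode]) == 0


def layout(root_midi: int, mode: str) -> List[List[int]]:
    """Grid of notes as seen on screen (top row first)."""
    return [[pad_note(r, c, root_midi, mode) for c in range(COLS)]
            for r in reversed(range(ROWS))]


# Same hand shape everywhere: pads (r, c), (r, c + 2) and (r + 1, c + 1).
print([pad_note(0, 0, 48, "ionian"), pad_note(0, 2, 48, "ionian"),
       pad_note(1, 1, 48, "ionian")])  # [48, 52, 55]: C major triad
```

With C3 (MIDI 48) as the root, the Ionian grid spans 48 to 81 (two octaves and a major sixth) and the chromatic grid 48 to 75 (two octaves and a minor third), matching the ranges quoted above.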

Locking the playing surface to a scale in this way is particularly well suited to novice/multi-user installation scenarios and is supported in this regard by the ability to quantise recorded notes, the launching/playback of loops/clips and the Markovian generative algorithm. Real-time record quantisation can be set to crotchets, quavers or semiquavers, while global clip trigger quantisation can be set to either crotchets or one bar. Both forms of quantisation can also be disabled entirely. These accessibility features are complemented by the ability to increase one’s proficiency in playing the system, to use chromatic scales, or to bypass the GUI entirely by inputting MIDI notes via alternative means and using the dedicated controls on the Ableton Live Audio Effect Rack and Max for Live MIDI Devices themselves to access ScreenPlay’s tools for textural/timbral manipulation and to control the Markovian generative and topic-theory-inspired transformative algorithms. These controls can in turn be MIDI-mapped to any compatible MIDI controller via Ableton Live’s MIDI mapping protocol (Figure 3). Together, this functionality is conducive to the seamless integration of ScreenPlay into studio composition and live performance scenarios, and these design features constitute a duality in support and application for both non-expert and expert users/musicians that satisfies Blaine and Fels’s recommendations with regard to learning curve, musical range and pathway to expert performance (Blaine and Fels 2003: 417–19).
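Record quantisation can be illustrated by snapping note onsets, measured in beats, to the chosen grid. This is a minimal sketch assuming round-to-nearest snapping and that a note snapped onto the loop’s end wraps to its start; the text does not state how ScreenPlay resolves either case, and the names are hypothetical.

```python
import math
from typing import Dict, Optional

# Grid sizes in beats (crotchet = one beat); None disables quantisation.
RECORD_GRID: Dict[str, Optional[float]] = {
    "crotchet": 1.0,
    "quaver": 0.5,
    "semiquaver": 0.25,
    "off": None,
}


def quantise_onset(onset_beats: float, grid: Optional[float], loop_beats: float) -> float:
    """Snap a recorded note onset to the nearest grid line within the loop."""
    if grid is None:
        return onset_beats
    # floor(x + 0.5) rounds halves upwards (Python's round() rounds halves to even).
    snapped = math.floor(onset_beats / grid + 0.5) * grid
    return snapped % loop_beats  # an onset snapped to the loop end wraps to beat 0


print(quantise_onset(1.1, RECORD_GRID["quaver"], 4.0))    # 1.0
print(quantise_onset(3.9, RECORD_GRID["crotchet"], 4.0))  # 0.0 (wrapped)
```

Quantising onsets as they are recorded lets a novice’s loosely timed input loop cleanly, while the `"off"` setting leaves an experienced player’s timing untouched.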

2.2.2. Focus, scalability, media and player interaction

ScreenPlay’s multifunctionality is further illustrated when it is examined through the lens of Blaine and Fels’s focus, scalability, media and player interaction criteria. Focus relates to the efforts made to increase audience ‘transparency’ (Fels, Gadd and Mulder 2002): the ease with which audience members can discern the connection between the actions of the user(s)/performer(s) when interacting with the system and the resulting musical output. The concept of audience transparency is intrinsically linked with Benford’s ‘high level design strategies for spectator interfaces’ (2010: 55), and particularly the expressive strategy (clearly displaying the effects of specific manipulations). ScreenPlay’s reliance on standardised musical control objects and gestures means that the connection between input gestures and musical output is easily recognisable to both the user(s) and the audience. These clearly perceptible connections also relate to Smalley’s theory of spectromorphology (‘the interaction between sound spectra … and the ways they change and are shaped through time’ (Smalley 1997: 107)), particularly with respect to source-cause interaction: the listener’s ability to identify both the sounding body and the gesture type used to create any given sound by way of the spectromorphological referral process, that is, the reversal of the source-cause interaction chain (111). As Smalley observes, ‘in traditional music, sound-making and the perception of sound are interwoven, in electroacoustic music they are often not connected’ (109), and source-cause interaction analysis can therefore be more difficult. The same is often true of transformative and generative interactive systems, which are generally more experimental in nature than sequenced systems. Despite ScreenPlay’s integration of transformative and generative approaches to musical interaction alongside sequenced elements, the source-cause interaction remains clear by virtue of its interface design. The system thus affords both a high level of audience transparency, conducive to effective functionality in novice/multi-user installation setups, and a high level of depth-in-control, requisite to increasing proficiency/technical ability through practice: a key aspect of ScreenPlay’s design in terms of its applicability to studio composition and live performance scenarios.

Blaine and Fels’s scalability, media and player interaction criteria are intrinsically linked in ScreenPlay, whilst the implementation of the last of these also exhibits a connection with Norman’s first and fourth principles for screen-based interfaces. Scalability refers to the constraints imposed upon the depth-in-control afforded to users/performers by the GUI relative to the number of simultaneous users: ‘An interface built for two people is generally quite different from one built for tens, hundreds or thousands of players’ (Blaine and Fels 2003: 417). While the depth-in-control afforded to users when ScreenPlay is in multi-mode is somewhat diminished compared to single-mode, given that each user controls a single instrument/part rather than all of them, the control afforded to users over these individual parts in collaborative, improvisatory performance is not curtailed as the number of participants increases, owing to the provision of individual, dedicated GUIs. This is often not the case with collaborative interactive music installations aimed at novice users, such as the aforementioned STEPSEQUENCER (Timpernagel et al. 2013–14), and is therefore a major strength of ScreenPlay. STEPSEQUENCER is controlled using physical and digitally projected control objects located on the floor of the performance space, in a manner reminiscent of the classic arcade game Dance Dance Revolution (Konami 1998–present); both the size constraints of the installation environment and the limited number of control objects mean that the depth-in-control afforded to each user decreases as the number of participants increases.

Media refers to the use of audiovisual elements ‘as a way of enhancing communication and creating more meaningful experiences … by reinforcing the responsiveness of the system to players’ actions’ (Blaine and Fels 2003: 417) and is linked with the concept of feedthrough, which involves providing users in a collaborative interactive environment with visual feedback relating to the actions of their fellow participants (Dix 1997: 147–8; Benford 2010). Feedthrough is implemented in ScreenPlay in a rudimentary capacity when in multi-mode, enhancing user experience in collaborative installation environments by providing users, via their individual GUIs, with visual feedback on the actions of the other participants with respect to global parameters, such as changes to tempo, key, scale and quantisation values and the activation of playback or the metronome. As previously discussed, the provision of real-time visual feedback is further enhanced when ScreenPlay is configured in single-mode, both by updating the GUI to reflect the status of the currently selected instrument/part and through two-way visual communication between the GUI and the dedicated Max for Live MIDI Device controls, which is aimed at better integration into the compositional/performative workflows of practising electronic/computer musicians.
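The rudimentary feedthrough described above can be sketched as a hub that echoes changes to global parameters to every other connected GUI while leaving part-specific edits local. Everything here is hypothetical: the class names, the parameter set and the in-memory inboxes stand in for whatever MIDI/OSC messaging ScreenPlay actually uses between Lemur and Ableton Live.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical global parameters whose changes every participant should see.
GLOBAL_PARAMS = {"tempo", "key", "scale", "quantisation", "playback", "metronome"}


@dataclass
class GuiEndpoint:
    part: int  # the instrument/part this user controls in multi-mode
    inbox: List[Tuple[str, object]] = field(default_factory=list)


class FeedthroughHub:
    def __init__(self) -> None:
        self.guis: Dict[str, GuiEndpoint] = {}

    def connect(self, gui_id: str, part: int) -> None:
        self.guis[gui_id] = GuiEndpoint(part)

    def change(self, sender_id: str, param: str, value: object) -> List[str]:
        """Apply a change from one GUI; return the ids of the GUIs notified."""
        if param not in GLOBAL_PARAMS:
            return []  # part-specific edits stay local in this rudimentary sketch
        notified = []
        for gui_id, gui in self.guis.items():
            if gui_id != sender_id:  # the sender's GUI already shows the change
                gui.inbox.append((param, value))
                notified.append(gui_id)
        return notified


hub = FeedthroughHub()
for user, part in (("a", 0), ("b", 1), ("c", 2)):
    hub.connect(user, part)
print(hub.change("a", "tempo", 120))    # ['b', 'c']
print(hub.change("b", "velocity", 90))  # []
```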

Finally, player interaction relates to the effects of providing each participant in a collaborative ICMS with the same, similar or different user interfaces (UIs), whereby an increased presence of identifiable similarities between the interfaces of all performers can ‘lead to a more relaxed environment and more spontaneous group behaviours’ (Blaine and Fels 2003: 417). ScreenPlay’s single GUI layout, provided to all performers when in multi-mode, is conducive to this and also ties in with Norman’s first and fourth principles for screen-based interfaces through its implementation of standardised control objects and associative symbols.

Norman’s second and third principles for screen-based interfaces manifest in the controls for the topic-theory-inspired transformative algorithm (Figure 4). Here, ‘topical oppositions’ are presented to the user(s) as contradictory metaphorical descriptors assigned to opposite ends of numerous sliders, intended to convey the tonal/textural/timbral impact of the various transformations in relation to the position of the sliders between the two extremes. Drummond also highlights the importance of metaphor in ICMS design:

The challenge facing designers of interactive instruments and sound installations is to create convincing mapping metaphors, balancing responsiveness, control and repeatability with variability, complexity and the serendipitous. (Drummond 2009: 132)

3. TRANSFORMATIVE AND GENERATIVE ELEMENTS OF DESIGN

3.1. Topic theory and ScreenPlay

Topic theory, which is concerned particularly with Classical and Romantic music, is based upon the invocation of specific musical identifiers (topics) to manipulate emotional responses and evoke cultural/contextual associations in the minds of the audience, these connections being exemplary of metaphorical binary oppositions. In ScreenPlay, these metaphorical binary oppositions are used in reverse: they communicate to the user(s) the audible effects (both tonal and textural/timbral) of various topical opposition transformations, which serve to break routines of form, structure and style in collaborative, improvisatory performance, aid compositional problem-solving and provide new dimensions of expressivity. The graphical representation of these topical oppositions, as previously described, is a fundamental component of a design that challenges the restrictions upon social context associated with ScreenPlay’s constituent computational processes, which are derived from the three main approaches to ICMS design. Using metaphor to convey the audible effects of the various transformations provides novices with an accessible means of musical manipulation, ideally suited to collaborative environments such as a gallery installation, whilst simultaneously posing a unique and interesting conceptual paradigm for experienced composers and performers. Furthermore, this novel manifestation of topic theory within HCI in music represents a conjoining of even more disparate concepts that span both time and genre.

The four topical opposition transformations afforded by ScreenPlay are ‘joy–lament’, ‘light–dark’, ‘open–close’ and ‘stability–destruction’, each of which affects the musical output of the system in a unique way. The impact of both the ‘joy–lament’ and ‘light–dark’ transformations is directly influenced by specific topics: ‘joy’ is based on the fanfare topic, ‘lament’ on the pianto, ‘light’ on the hunt and ‘dark’ on nocturnal music. The ‘open–close’ transformation mimics the effects of increased and decreased proximity to a sound source and of the size of the space in which it is sounding; a combination of reverb, delay, compression, filtering and equalisation is used to achieve this. The ‘stability–destruction’ transformation uses real-time granulation of the audio signal, with distortion to amplify the effect.

3.1.1. Joy–lament

The ‘joy–lament’ topical opposition is a probability-based, evolutionary algorithm designed to reshape the melodic and rhythmic contours of monophonic musical lines and make them sound either ‘happier’ or ‘sadder’. These transformations are enacted by modifying the pitch intervals of notes in relation to the transformed pitches of the notes immediately preceding them, as opposed to treating each note in isolation, and inserting passing notes or removing notes where applicable, thus allowing for melody lines to be transmuted in accordance with the position of the ‘joy–lament’ slider on the GUI.
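The sequential dependency described above, in which each new pitch is derived from the previously transformed pitch rather than from the corresponding original note, can be sketched in Python. This is a minimal illustration under stated assumptions, not ScreenPlay’s implementation: the function name, the probability parameters (p_up, p_passing, p_remove), the pluggable interval sampler and the chromatic midpoint passing note are all hypothetical.

```python
import random
from typing import Callable, List


def transform_melody(
    pitches: List[int],
    sample_interval: Callable[[random.Random], int],
    p_up: float,
    p_passing: float,
    p_remove: float,
    rng: random.Random,
) -> List[int]:
    """Hypothetical sketch of a 'joy-lament'-style pass over a monophonic line.

    Each new pitch is computed from the previously *transformed* pitch, so
    changes accumulate along the line instead of being applied note by note.
    """
    if not pitches:
        return []
    out = [pitches[0]]  # assumption: the first note is kept as an anchor
    for _ in pitches[1:]:
        if rng.random() < p_remove:
            continue  # remove this note entirely
        step = sample_interval(rng)  # interval size in semitones
        direction = 1 if rng.random() < p_up else -1  # contour bias
        prev = out[-1]
        # Optionally insert a (chromatic) passing note midway through a leap.
        if step > 2 and rng.random() < p_passing:
            out.append(prev + direction * (step // 2))
        out.append(prev + direction * step)
    return out
```

For example, transform_melody([64, 67, 66, 64], lambda r: r.choice([5, 7]), 0.8, 0.3, 0.1, random.Random(0)) produces a mostly rising line whose every step is measured from the note it has just generated; in this sketch only the number of note slots is taken from the original melody.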

At the ‘joy’ end of the transformational spectrum the algorithmic probabilities are weighted towards producing results that exhibit an upward melodic contour, greater speed of movement between notes, greater/more rapid pitch variation, reduced repetition of notes and an increased prevalence of passing notes. Conversely, ‘lament’ results in a downward melodic contour, reduced speed of movement between notes, reduced variation in pitch, increased repetition of notes, a reduced likelihood of generating passing notes and an increased likelihood that notes will be muted as those preceding them increase in duration. The algorithmic probabilities of the transformation gradually shift as the slider is moved from one end of the scale to the other, whilst the overall range of the transformation is confined between the root note of the chosen key below the lowest note and the root note above the highest note originally present in a given melody; this constraint helps preserve the original musical character of the phrase.
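The range constraint, together with one possible way of shifting the interval probabilities as the slider moves, can be sketched as follows. Everything here is an assumption for illustration: the function names are hypothetical, the slider is taken to run from 0.0 (‘joy’) through 0.5 (neutral, equal probabilities, consistent with the neutral transformations described later in this section) to 1.0 (‘lament’) with linear interpolation in between, the root notes are taken to lie strictly below/above the lowest/highest original pitches, and out-of-range pitches are folded back by octaves.

```python
from typing import Dict, List, Tuple


def pitch_bounds(original: List[int], tonic_pc: int) -> Tuple[int, int]:
    """Tonic notes strictly below the lowest and strictly above the highest pitch.

    Assumption: 'strictly' below/above, which guarantees a span of at least an octave.
    """
    lower = min(original) - 1
    while lower % 12 != tonic_pc:
        lower -= 1
    upper = max(original) + 1
    while upper % 12 != tonic_pc:
        upper += 1
    return lower, upper


def fold_into_range(pitch: int, lower: int, upper: int) -> int:
    """Move a pitch by whole octaves (keeping its pitch class) into [lower, upper]."""
    while pitch > upper:
        pitch -= 12
    while pitch < lower:
        pitch += 12
    return pitch


def blend_weights(slider: float, joy: Dict[int, float],
                  lament: Dict[int, float]) -> Dict[int, float]:
    """Hypothetical slider mapping: 0.0 = 'joy', 0.5 = uniform, 1.0 = 'lament'."""
    slider = min(max(slider, 0.0), 1.0)
    sizes = sorted(set(joy) | set(lament))
    uniform = {s: 1.0 / len(sizes) for s in sizes}

    def normalise(table: Dict[int, float]) -> Dict[int, float]:
        total = sum(table.values())
        return {s: table.get(s, 0.0) / total for s in sizes}

    joy_n, lam_n = normalise(joy), normalise(lament)
    if slider <= 0.5:
        t = slider / 0.5
        return {s: (1 - t) * joy_n[s] + t * uniform[s] for s in sizes}
    t = (slider - 0.5) / 0.5
    return {s: (1 - t) * uniform[s] + t * lam_n[s] for s in sizes}
```

With an E-based key (pitch class 4) and a melody spanning E4 to B4 (MIDI 64 to 71), pitch_bounds returns the E an octave below and the E above, so any generated pitch outside that span is moved back by octaves without changing its pitch class.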

The fanfare topic, which forms the basis of the ‘joy’ transformation, is a rising triadic figure (Monelle 2000: 35) most commonly used in the eighteenth century (30) to represent ‘traditional heroism … with a slightly theatrical and unreal flavor proper to the age’ (19). Monelle also cites examples of the fanfare being used in contexts other than that of the overarching military topic, including softer/gentler pieces such as Mozart’s Piano Concerto in B♭, K. 595 (1788–91) and ‘Non più andrai’ from Figaro (1786) (Monelle 2000: 35–6). This ‘helps to explain the diminutiveness, the toy-like quality, of many manifestations of fanfarism’ (38) and is supported by Ernst Toch, who distinguishes between two different kinds of fanfare: the ‘masculine type’ and the ‘feminine type’ (Toch 1948: 106–7). It is this cross-compatibility of the fanfare that makes it suitable for use within the context of the modern electronic style of ScreenPlay. Perhaps even more important in this regard is Monelle’s assertion that:

the military fanfare may function associatively for a modern audience, who are sensitive [to] the slightly strutting pomp of the figure’s character without realizing that it is conveyed by its origin as a military trumpet call. In this case, the topic is functioning, in the first place, through the indexicality of its original signification; the latter has been forgotten, and the signification has become arbitrary. (2000: 66)

At the ‘joy’ end of the transformational spectrum, a diatonic transformation (as dictated by the currently selected scale/mode) has a 45 per cent chance each of generating a fourth (perfect, augmented or diminished, depending on the chosen scale) or a perfect fifth, and a 10 per cent chance of an octave. A chromatic transformation yields a 22.5 per cent chance each of a perfect fourth, augmented fourth/diminished fifth, perfect fifth or minor sixth, and a 5 per cent chance each of a major seventh or an octave. The decision to utilise fourths and fifths, as opposed to the thirds and fifths suggested by the triadic nature of the fanfare, is intended to increase average interval size, given that the sentiment of joy/triumph is chiefly evoked by larger intervals and greater pitch variation (Gabrielsson and Lindström 2010: 240–1). The increased interval size also helps maximise the contrast between results yielded by the ‘joy’ and ‘lament’ transformations, while the small chance of generating sevenths/octaves increases variation in the transformational results.
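The ‘joy’ probabilities quoted above can be encoded as weighted interval tables and sampled with Python’s random.choices. In this sketch, which is an assumption rather than ScreenPlay’s code, chromatic intervals are counted in semitones and diatonic intervals in scale steps within a hypothetical E Aeolian pitch-class set; counting in scale steps means that the exact quality of a generated fourth or fifth depends on the starting degree, which the sketch does not attempt to control.

```python
import random
from typing import Dict, List, Set

# 'joy' weights from the text (per cent). Diatonic keys are scale steps
# (4th = 3 steps, 5th = 4 steps, octave = 7 steps); chromatic keys are semitones.
JOY_DIATONIC: Dict[int, float] = {3: 45.0, 4: 45.0, 7: 10.0}
JOY_CHROMATIC: Dict[int, float] = {
    5: 22.5,   # perfect fourth
    6: 22.5,   # augmented fourth / diminished fifth
    7: 22.5,   # perfect fifth
    8: 22.5,   # minor sixth
    11: 5.0,   # major seventh
    12: 5.0,   # octave
}

E_AEOLIAN: Set[int] = {4, 6, 7, 9, 11, 0, 2}  # hypothetical scale: E F# G A B C D


def sample_interval(table: Dict[int, float], rng: random.Random) -> int:
    """Draw one interval size according to the table's weights."""
    sizes = list(table)
    return rng.choices(sizes, weights=[table[s] for s in sizes], k=1)[0]


def up_by_scale_steps(pitch: int, steps: int, scale: Set[int]) -> int:
    """Move a MIDI pitch that belongs to `scale` up by `steps` scale degrees."""
    members: List[int] = [p for p in range(128) if p % 12 in scale]
    idx = min(members.index(pitch) + steps, len(members) - 1)  # clamp at the top
    return members[idx]
```

Drawing a step with sample_interval(JOY_DIATONIC, rng) and applying it with up_by_scale_steps(64, step, E_AEOLIAN) moves E4 to A4, B4 or E5. Computed from the figures above, the chromatic ‘joy’ table has a mean interval of 7.0 semitones.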

In contrast to ‘joy’, the ‘lament’ transformation is founded upon the pianto, which ‘signifies distress, sorrow and lament’ (Monelle 2000: 11). Defined as ‘the motive of a falling minor second’ (17), the pianto ‘[overarches] our entire history[,] from the sixteenth to the twenty-first centuries’ (Monelle 2006: 8), is equally applicable in both vocal and instrumental music (Monelle 2000: 17), and is commonplace in popular music (Monelle 2006: 4), all of which makes it ideally suited to application within the context of modern electronic music and ScreenPlay in particular. Despite originally signifying the specific act of weeping, and later sighing, the pianto has come merely to represent the emotions of ‘grief, pain, regret[, and] loss’ associated with such actions (Monelle 2006: 17). As Monelle observes:

It is very doubtful that modern listeners recall the association of the pianto with actual weeping; indeed, the later assumption that this figure signified sighing, not weeping, suggests that its origin was forgotten. It is now heard with all the force of an arbitrary symbol, which in culture is the greatest force of all. (2006: 73)

Much like ‘joy’, the ‘lament’ transformation does not use minor second intervals exclusively. Instead, a diatonic transformation has a 45 per cent chance each of generating a second or a third and a 10 per cent chance of a seventh (each minor or major depending on the scale), while a chromatic transformation has a 22.5 per cent chance each of a minor second, major second, minor third or major third and a 5 per cent chance each of a major sixth or minor seventh. This is necessary to ensure that generated pitch values remain in key when performing diatonic transformations while, again, the small chance of generating larger intervals furnishes unpredictability. ScreenPlay’s transformative algorithmic framework is only inspired by topic theory, and there is a balance to be struck between supporting a wide range of user experience levels/musical proficiency and remaining true to the musical characteristics traditionally associated with the topics that have influenced its design.
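The ‘lament’ tables can be encoded in the same way. The sketch below is illustrative only: the names are hypothetical, diatonic intervals are again counted in scale steps, and lament_step always descends, whereas the text describes a probabilistic bias towards a downward contour. Computed from the figures above, the chromatic ‘lament’ table has a mean interval of 3.2 semitones, against 7.0 for the chromatic ‘joy’ table, which quantifies the contrast the two transformations are designed to maximise.

```python
import random
from typing import Dict, Optional, Set

# 'lament' weights from the text (per cent). Diatonic keys are scale steps
# (2nd = 1, 3rd = 2, 7th = 6); chromatic keys are semitones.
LAMENT_DIATONIC: Dict[int, float] = {1: 45.0, 2: 45.0, 6: 10.0}
LAMENT_CHROMATIC: Dict[int, float] = {
    1: 22.5, 2: 22.5,   # minor / major second
    3: 22.5, 4: 22.5,   # minor / major third
    9: 5.0, 10: 5.0,    # major sixth / minor seventh
}


def mean_interval(table: Dict[int, float]) -> float:
    """Expected interval size implied by a weight table."""
    return sum(size * w for size, w in table.items()) / sum(table.values())


def lament_step(pitch: int, table: Dict[int, float], rng: random.Random,
                scale: Optional[Set[int]] = None) -> int:
    """Descend from the previous transformed pitch by a sampled interval.

    Simplification: always descends rather than merely favouring descent.
    """
    sizes = list(table)
    size = rng.choices(sizes, weights=[table[s] for s in sizes], k=1)[0]
    if scale is None:                       # chromatic: semitones
        return max(0, pitch - size)
    members = [p for p in range(128) if p % 12 in scale]
    return members[max(0, members.index(pitch) - size)]  # diatonic: scale steps


print(mean_interval(LAMENT_CHROMATIC))  # → 3.2
```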

The theoretical justification for the inclusion of thirds can be found in the evolution of the pianto, specifically in the topic of Empfindsamkeit (Monelle 2000: 69), which introduced inversions of the descending minor second, the major second and the descending chromatic fourth (68–9); the inclusion of all of these is described by Monelle as ‘the true style of Empfindsamkeit’ (70). Both major and minor thirds are used in the ‘lament’ transformation instead of chromatic fourths to ensure generated intervals remain in key when conducting diatonic transformations. Furthermore, smaller intervals and reduced pitch variation are generally associated with the broad evocation of sadness, and larger intervals and increased pitch variation with joy (Moore 2012); this is also why ‘lament’ favours the repetition of notes. Despite the creative licence taken in implementing the pianto in ScreenPlay’s ‘joy–lament’ transformation, its suitability is not in question, as summarised by Monelle in stating that ‘The pianto … [is] seemingly so thoroughly appropriate to its evocation – somehow, the moan of the dissonant falling second expresses perfectly the idea of lament’ (2000: 72).

Both note duration and speed of movement between notes are also highly important when evoking happiness/sadness through music, since ‘The feeling that music is progressing or moving forward in time is doubtless one of the most fundamental characteristics of musical experience’ (Lippman 1984: 121). When discussing characteristic traits of Romantic music in relation to the representation of lament/tragedy, in reference to Poulet, Monelle states:

Time-in-a-moment and progressive time respectively evoke lostness and struggle; the extended present of lyric time becomes a space where the remembered and imagined past is reflected, while the mobility of progressive time is a forum for individual choice and action that is ultimately doomed.

Lyric time is the present, a present that is always in the present. And for the Romantic, the present is a void. ‘To feel that one’s existence is an abyss is to feel the infinite deficiency of the present moment’ [(Poulet 1956: 26, cited in Monelle 2000: 115)]; in the present people felt a sense of lack tinged with ‘desire and regret’. (2000: 115)

With progressive time being the overall sense of forward motion in music and lyric time being the internal temporality of the melody, Monelle is suggesting that the extension of lyric time – that is, increased note duration in the melody and reduced speed of movement between notes – is inherent in signifying a sense of doom and regret. As such, these melodic traits are applied in the ‘lament’ transformation to convey an increased sense of sorrow, while the inverse is true of ‘joy’, which coheres with the fact that the ‘fanfare is essentially a rhythmicized arpeggio’ (Agawu 1991: 48).

The implementation of topic theory as described in signifying joy/lament is paramount to the design and functionality of ScreenPlay because the traditional correlation between minor/major scales and the cultural opposition of tragic/nontragic (Hatten 1994: 11–12) cannot be relied upon when the key/scale of the music produced by the system is left to the discretion of the user(s). Figures 5–11 (with Sound examples 1–7) present transcriptions of the main melody line from Video example 1 in its original form and under diatonic and chromatic ‘joy’, ‘lament’ and ‘neutral’ transformations; for the neutral transformations, the ‘joy–lament’ slider was positioned centrally, providing equal probability for the generation of all available intervals. Both ‘joy’ and ‘lament’ transformations can also be seen/heard in Video example 1 at 4:10.

Figure 5. Transcription of lead melody (E Aeolian scale) from Video example 1 (Sound example 1).

Figure 6. Transcription of diatonic (E Aeolian scale) ‘joy’ transformation of lead melody from Video example 1 (Sound example 2).

Figure 7. Transcription of diatonic (E Aeolian scale) ‘lament’ transformation of lead melody from Video example 1 (Sound example 3).

Figure 8. Transcription of diatonic (E Aeolian scale) neutral transformation of lead melody from Video example 1 (Sound example 4).

Figure 9. Transcription of chromatic ‘joy’ transformation of lead melody from Video example 1 (Sound example 5).

Figure 10. Transcription of chromatic ‘lament’ transformation of lead melody from Video example 1 (Sound example 6).

Figure 11. Transcription of chromatic neutral transformation of lead melody from Video example 1 (Sound example 7).

3.1.2. Light–dark

Closely related to the fanfare topic is the topic of the hunt, which serves as the inspiration for the ‘light’ transformation because it was often used to signify the morning during the Classical era and beyond, ‘the courtly hunt of the period [taking] place during the morning’ (Monelle 2006: 3). Although the hunt is itself a type of fanfare, predominantly in 6/8 metre (82), it is not the melodic/rhythmic contour or organisation of notes inherent to the topic that informs the ‘light’ transformation, but rather its associated textural/timbral characteristics. Like those of many brass instruments, the tonal qualities of the French and German hunting horns and of the traditional calls/fanfares played on both, which shaped the topic of the hunt (Monelle 2006), are characterised by a bright, loud sound with a large amount of presence throughout the mid-range of the frequency spectrum and the ability to reach higher when played at increased registers. Accordingly, the ‘light’ transformation emphasises the mid-range through to the high end of the frequency spectrum through the application of equalisation (EQ), high-pass filtering and the addition of a small amount of white noise. The high-pass filter is of the second order (12 dB/octave), with a cut-off frequency of 5 kHz and a resonance value of 50 per cent, thus providing a significant boost to the frequencies around the cut-off, while the EQ applies a 6 dB shelving boost, again at 5 kHz. White noise is used as the carrier signal for a vocoder placed at the beginning of the effects chain, so that the noise mimics the dynamics of the dry signal and is subject to the same filtering and EQ (Figure 12).
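The filter settings described above can be expressed as biquad coefficients. The sketch below uses the widely documented formulas from Robert Bristow-Johnson’s Audio EQ Cookbook as a stand-in; ScreenPlay’s actual filter and EQ implementations are not specified, the 44.1 kHz sample rate is assumed, and the mapping of ‘50 per cent resonance’ to Q = 2.0 is purely hypothetical. The vocoder and white-noise stages are omitted.

```python
import cmath
import math
from typing import Tuple

Biquad = Tuple[float, float, float, float, float, float]  # b0, b1, b2, a0, a1, a2


def highpass(f0: float, q: float, fs: float) -> Biquad:
    """Second-order (12 dB/octave) high-pass, Audio EQ Cookbook form."""
    w0 = 2 * math.pi * f0 / fs
    cw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return ((1 + cw) / 2, -(1 + cw), (1 + cw) / 2, 1 + alpha, -2 * cw, 1 - alpha)


def high_shelf(f0: float, gain_db: float, fs: float) -> Biquad:
    """High-shelving boost/cut with shelf slope S = 1 (Audio EQ Cookbook)."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cw = math.cos(w0)
    alpha = math.sin(w0) / 2 * math.sqrt(2)  # S = 1
    k = 2 * math.sqrt(a) * alpha
    return (
        a * ((a + 1) + (a - 1) * cw + k),
        -2 * a * ((a - 1) + (a + 1) * cw),
        a * ((a + 1) + (a - 1) * cw - k),
        (a + 1) - (a - 1) * cw + k,
        2 * ((a - 1) - (a + 1) * cw),
        (a + 1) - (a - 1) * cw - k,
    )


def magnitude(c: Biquad, freq: float, fs: float) -> float:
    """Linear magnitude |H(e^{jw})| of a biquad at `freq` Hz."""
    b0, b1, b2, a0, a1, a2 = c
    z1 = cmath.exp(-2j * math.pi * freq / fs)  # z^-1 on the unit circle
    return abs((b0 + b1 * z1 + b2 * z1 ** 2) / (a0 + a1 * z1 + a2 * z1 ** 2))


FS = 44_100.0          # assumed sample rate
Q_AT_50_PERCENT = 2.0  # hypothetical mapping of '50 per cent resonance' to Q
hp = highpass(5_000.0, Q_AT_50_PERCENT, FS)
shelf = high_shelf(5_000.0, 6.0, FS)
```

With these values the high-pass response equals Q (here 2.0, about +6 dB) exactly at 5 kHz, which corresponds to the boost around the cut-off mentioned above, while the shelf adds 6 dB towards the top of the spectrum and leaves low frequencies at unity gain.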

Figure 12. ‘Light’ transformation effect chain.

Conversely, the textural/timbral impact of the ‘dark’ transformation is founded in the qualities of nocturnal music, which originated in the horn of nocturnal mystery topic. ‘[Taking] on some of the associations of the hunt, especially the mysterious depth of the woodland, but [abandoning] others’ (Monelle 2006: 91), the horn of nocturnal mystery was a ‘solo horn in the nineteenth century, playing a fragrant cantilena with a soft accompaniment’ (106). Contemporary examples of the link between nocturnal music and woodland can still be found, even in popular electronic music, such as the song ‘Night Falls’ by Booka Shade (2006), which highlights the suitability of nocturnal characteristics in the context of ScreenPlay.

Despite the significations of nocturnal woodland in ‘Night Falls’, these do not figure in the impact of the ‘dark’ transformation. Instead, as with ‘light’, the focus is on the textural/timbral characteristics of nocturnal music and the horn of nocturnal mystery. Most sounds used throughout ‘Night Falls’ lack high-frequency content and predominantly play notes, lines and progressions that are low in register. The same textural/timbral and registral characteristics are inherent to nocturnal music and the horn of nocturnal mystery. The timbral impact of playing a horn softly is almost akin to applying a low-pass filter to the sound, effectively rolling off a large amount of the high-frequency energy. Likewise, the accompaniment to the solo horn was not only texturally soft but also generally low in register. These primary characteristics are translated to the ‘dark’ transformation by way of low-pass filtering, which is again of the second order and has a cut-off frequency of 250 Hz, and by the enhancement of low resonant frequencies present within the signal via EQ, using a 6 dB low shelving boost at 250 Hz and increasingly high resonance values (up to 50 per cent). Boosting the lower mid-range in this way shifts the tonal balance towards the low end, thus reducing definition/clarity in the signal; an idea that resonates with visual impairment in the dark. This lack of definition is further emphasised by blending a small amount of white noise with the signal (Figure 13). The ‘light–dark’ transformation can be seen/heard affecting the pad sound throughout Video example 1.
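A comparable sketch of the ‘dark’ chain runs a signal through a low-pass biquad, a low-shelf biquad and a white-noise blend. As before, the Audio EQ Cookbook formulas are a stand-in for ScreenPlay’s unspecified processing; the stage order, the 44.1 kHz sample rate, the default Q of 0.707 (the text’s resonance values have no stated mapping to Q) and the noise level are all assumptions, and the function names are hypothetical.

```python
import math
import random
from typing import List, Optional, Tuple

Biquad = Tuple[float, float, float, float, float, float]  # b0, b1, b2, a0, a1, a2


def lowpass(f0: float, q: float, fs: float) -> Biquad:
    """Second-order (12 dB/octave) low-pass, Audio EQ Cookbook form."""
    w0 = 2 * math.pi * f0 / fs
    cw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    return ((1 - cw) / 2, 1 - cw, (1 - cw) / 2, 1 + alpha, -2 * cw, 1 - alpha)


def low_shelf(f0: float, gain_db: float, fs: float) -> Biquad:
    """Low-shelving boost/cut with shelf slope S = 1 (Audio EQ Cookbook)."""
    a = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cw = math.cos(w0)
    k = 2 * math.sqrt(a) * (math.sin(w0) / 2 * math.sqrt(2))  # S = 1
    return (
        a * ((a + 1) - (a - 1) * cw + k),
        2 * a * ((a - 1) - (a + 1) * cw),
        a * ((a + 1) - (a - 1) * cw - k),
        (a + 1) + (a - 1) * cw + k,
        -2 * ((a - 1) + (a + 1) * cw),
        (a + 1) + (a - 1) * cw - k,
    )


def process(x: List[float], c: Biquad) -> List[float]:
    """Run a signal through one biquad (Direct Form I), normalised by a0."""
    b0, b1, b2, _, a1, a2 = (v / c[3] for v in c)
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for s in x:
        out = b0 * s + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x1, x2 = s, x1
        y1, y2 = out, y1
        y.append(out)
    return y


def dark(x: List[float], fs: float = 44_100.0, q: float = 0.707,
         noise_level: float = 0.01, rng: Optional[random.Random] = None) -> List[float]:
    """Hypothetical 'dark' chain: low-pass -> low shelf -> blended white noise."""
    rng = rng or random.Random()
    y = process(process(x, lowpass(250.0, q, fs)), low_shelf(250.0, 6.0, fs))
    return [s + noise_level * rng.uniform(-1.0, 1.0) for s in y]
```

A constant (DC) input settles at roughly twice its level (the 6 dB shelf), whereas a signal alternating at the Nyquist frequency is removed almost entirely by the low-pass stage.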

Figure 13. ‘Dark’ transformation effect chain.

3.1.3. Open–close and stability–destruction

As previously mentioned, the ‘open–close’ and ‘stability–destruction’ transformations are interpretive expressions of the textural/timbral qualities of their respective significations. The occupancy of sound within space, upon which ‘open–close’ is founded, is of great importance in all music and can be described in terms of Smalley’s four qualifiers of spectral space. Of these, both emptiness–plentitude and diffuseness–concentration are of particular importance with respect to the ‘open–close’ transformation and can respectively be defined as ‘whether the space is extensively covered and filled, or whether spectromorphologies occupy smaller areas, creating larger gaps, giving an impression of emptiness and perhaps spectral isolation’, and ‘whether sound is spread or dispersed throughout spectral space or whether it is concentrated or fused in regions’ (Smalley 1997: 121).

A combination of reverb, delay, compression and EQ is used to produce the contrasting effects of openness and closeness (Figures 14 and 15). Openness is achieved through increased delay level, time and feedback; increased reverb level, density, diffusion scale, room size, pre-delay and decay times; reduced early reflections; and a mid-range cut in the frequency spectrum. At its most extreme, the result is an almost imperceptible dry signal with an overpowering reverb tail lacking in clarity and definition, giving the impression that the sound source is emanating from deep within a vast, cavernous space. By contrast, the ‘close’ transformation is achieved by applying the inverse of many of these settings, including a mid-range boost to give the signal greater presence, together with compression to reduce its dynamic range. The result is a claustrophobic effect indicative of the sound source being heard at close range within a confined space. The ‘open–close’ transformation can be seen/heard applied to the lead melody at 5:10 in Video example 1.
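The direction of each parameter change described above can be summarised as a single slider-to-parameter mapping. In the sketch below, only the direction of each change is taken from the text; every numeric range, the parameter names, the linear interpolation and the convention that 0.0 means fully ‘close’ and 1.0 fully ‘open’ are placeholders invented for illustration.

```python
from typing import Dict, Tuple

# Hypothetical parameter ranges: (fully 'close', fully 'open').
# Only the direction of each change comes from the text; the numbers are placeholders.
RANGES: Dict[str, Tuple[float, float]] = {
    "delay_mix": (0.0, 0.5),
    "delay_time_ms": (50.0, 600.0),
    "delay_feedback": (0.0, 0.7),
    "reverb_mix": (0.05, 0.95),
    "reverb_density": (0.2, 1.0),
    "reverb_diffusion": (0.2, 1.0),
    "room_size": (0.1, 1.0),
    "predelay_ms": (0.0, 80.0),
    "decay_s": (0.3, 12.0),
    "early_reflections": (0.8, 0.1),   # reduced as the sound 'opens'
    "mid_gain_db": (6.0, -6.0),        # boost for 'close', cut for 'open'
    "compression_ratio": (4.0, 1.0),   # compression only towards 'close'
}


def open_close(position: float) -> Dict[str, float]:
    """Map a slider position (0.0 = 'close', 1.0 = 'open') to effect settings."""
    t = min(max(position, 0.0), 1.0)
    return {name: lo + (hi - lo) * t for name, (lo, hi) in RANGES.items()}
```

Keeping every parameter on one interpolated axis means that a single slider movement changes the perceived distance and room size together, which is the kind of coupled, metaphor-driven control the GUI presents.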

Figure 14. ‘Open’ transformation effect chain.

Figure 15. ‘Close’ transformation effect chain.

Unlike the other topical opposition transformations, ‘stability–destruction’ has no effect upon the musical output of the system at one end (‘stability’) and an increasingly acute effect as the transformation slider is moved towards the other (‘destruction’). The destructive effect upon the sound is achieved through real-time granulation and distortion, with decreased grain size, increased grain spray and increased distortion level, drive and tone applied to the signal (Figure 16). This can be seen/heard applied to the lead melody at 3:40 in Video example 1.
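A minimal granular-plus-distortion pass illustrating the parameter directions described above might look like this. The grain-size, spray and drive ranges, the Hann-windowed overlap-add granulator and the tanh waveshaper are all assumptions standing in for ScreenPlay’s unspecified processing, and the distortion ‘tone’ control is not modelled; only the bypass at ‘stability’ and the direction of each parameter change come from the text.

```python
import math
import random
from typing import List


def destroy(signal: List[float], amount: float, rng: random.Random,
            sr: int = 44_100) -> List[float]:
    """Hypothetical 'stability-destruction' pass; amount 0.0 = stability (bypass)."""
    if amount <= 0.0:
        return list(signal)
    amount = min(amount, 1.0)
    grain_ms = 100.0 - 90.0 * amount   # grains shrink from 100 ms to 10 ms
    spray_ms = 50.0 * amount           # random read-position offset grows
    drive = 1.0 + 19.0 * amount        # distortion drive grows
    grain = max(2, int(sr * grain_ms / 1000.0))
    spray = int(sr * spray_ms / 1000.0)
    hop = max(1, grain // 2)           # roughly 50 per cent grain overlap
    # Periodic Hann window: grains overlapping at half-grain hops sum to ~1.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / grain) for i in range(grain)]
    out = [0.0] * len(signal)
    last_start = max(len(signal) - grain, 0)
    for start in range(0, len(signal), hop):
        offset = rng.randint(-spray, spray) if spray else 0
        src = min(max(start + offset, 0), last_start)
        for i in range(grain):
            if start + i >= len(out) or src + i >= len(signal):
                break
            out[start + i] += window[i] * signal[src + i]
    # Soft-clipping waveshaper, normalised so that an input of 1.0 maps to 1.0.
    return [math.tanh(drive * s) / math.tanh(drive) for s in out]
```

At amount 0.0 the signal passes through untouched, matching the ‘stability’ end of the slider; as the amount rises, shorter and more scattered grains combine with heavier soft clipping.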

Figure 16. ‘Stability–destruction’ transformation effect chain.

3.2. Markovian generative algorithm

ScreenPlay’s generative algorithm comprises both first- and second-order Markov chains to generate MIDI notes in response to those recorded/inputted by the user(s). Values generated by first-order Markov chains depend only on the value immediately preceding them. Second-order Markov chains account for the previous two values when calculating the next, meaning that their results more closely resemble the source material than do those generated by first-order Markov chains (Dodge and Jerse 1997: 165–72). Accordingly, first-order Markov chains are used in ScreenPlay for the generation of velocity, duration and note-on time, with a second-order Markov chain responsible for generating pitch values. This combination propagates variation through greater discrepancy between the secondary parameters of the source material and the generated results, whilst retaining commonality in pitch interval relationships, the means by which listeners most directly perceive melodic similarity.
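The division of labour between first- and second-order chains described above can be sketched in Python. This is an illustrative sketch, not ScreenPlay’s implementation: the function names, the representation of notes as parallel lists, the treatment of each parameter as an independent chain and the dead-end fallback are all assumptions. The transition matrix is held implicitly as lists of observed followers, so sampling uniformly from a list reproduces the transition probabilities found in the source.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def build_chain(seq: Sequence, order: int) -> Dict[Tuple, List]:
    """Map each context of `order` consecutive values to the values that followed it."""
    if len(seq) <= order:
        raise ValueError("source must be longer than the chain order")
    table: Dict[Tuple, List] = defaultdict(list)
    for i in range(order, len(seq)):
        table[tuple(seq[i - order:i])].append(seq[i])
    return dict(table)


def generate(seq: Sequence, order: int, length: int, rng: random.Random) -> List:
    """Generate `length` values with an order-`order` Markov chain trained on `seq`."""
    table = build_chain(seq, order)
    out = list(seq[:order])  # seed with the source's opening context
    while len(out) < length:
        followers = table.get(tuple(out[-order:]))
        if followers is None:  # dead end: borrow a random known context's followers
            followers = table[rng.choice(list(table))]
        out.append(rng.choice(followers))
    return out[:length]


def generate_notes(pitches, velocities, durations, onsets, length, rng):
    """Hypothetical note generator: 2nd-order pitch, 1st-order other parameters."""
    return list(zip(
        generate(pitches, 2, length, rng),
        generate(velocities, 1, length, rng),
        generate(durations, 1, length, rng),
        generate(onsets, 1, length, rng),
    ))
```

Because pitch is conditioned on two preceding values, generated melodies tend to reuse the source’s interval patterns, while the first-order chains for velocity, duration and note-on time recombine those parameters more freely.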

By striking the correct balance between randomness and predictability in this way, ScreenPlay’s Markovian generative algorithm strengthens its ability to support musical interaction for a wide range of user experience levels and thus to function within a broad range of social contexts. The divergent yet musically coherent results of the algorithm are conducive to engaging and satisfying musical experiences for novices in collaborative, improvisatory performance, as would be common within a gallery installation/exhibition scenario. Likewise, the thread of similarity shared by the generated results and the source material is important in studio composition/live performance scenarios, given the propensity for the creation of popular electronic music with ScreenPlay and the fundamental importance of development through repetition within the genre. The results of the Markovian generative algorithm can be seen/heard in Figure 17, Sound example 8 and in both the lead and the bass at 3:00 and 3:30 in Video example 1.

Figure 17. Transcription of melody excerpt generated by Markovian generative algorithm using lead melody from Video example 1 as source material for transition matrix.

4. CONCLUSION

‘[M]usical meaning is “expressive” [and] related to the “emotions”’ (Monelle 2000: 11), but its primary signification is not subject to the individual perception of listeners. Monelle believes that ‘the musical topic … clearly signifies by ratio facilis … [Umberto Eco’s theory, 1976] in which signification is governed by conventional codes and items of expression are referred to items of content according to learned rules’ (2000: 16). Of course, as with any signifier, all topics are also subject to secondary signification (Barthes [1957] 1991), meaning that each topic ‘carries a “literal” meaning, together with a cluster of associative meanings’ (Monelle 2006: 3). ‘Topics … are points of departure, but never “topical identities” … even their most explicit presentation remains on the allusive level. They are therefore suggestive, but not exhaustive’ (Agawu 1991: 34).

This blend of collective identifiability of primary topical signification and connotative pliability is what makes topic theory such an effective vehicle for ScreenPlay’s multifunctionality and ability to transcend the boundaries of social context inextricably linked with ICMS design and implementation. The relatively rigid conformity of topics to their defined primary signification is an excellent means of engaging novice users in collaborative installation environments by virtue of their shared understanding as to the audible effects of the various topical opposition transformations, thus providing an accessible means of tonal and textural/timbral musical manipulation. Conversely, the allusive and functional flexibility of topic theory, in that ‘a given topic may assume a variety of forms, depending on the context of its exposition, without losing its identity’ (Agawu 1991: 35), presents an intriguing conceptual paradigm for composers and performers across a broad range of styles/genres. Furthermore, ScreenPlay’s implementation of topic theory is, in effect, a reversal of the roles of music and meaning traditionally associated with it, whereby the impact of the individual transformations is communicated to the user(s) via the GUI. This approach is supported by Agawu’s assertion that the kernel of topic theory lies in how topics function within/affect music, rather than in their explicit signification: ‘not “what does this piece mean?” but, rather, “how does this piece mean?”’ (1991: 5).

While of fundamental importance in ScreenPlay’s multifunctional design, the topic-theory-inspired transformative algorithm alone is not enough to enable ScreenPlay to transcend the socially contextual confines of implementation associated with ICMS design. Both the Markovian generative algorithm and foundational sequenced approach to interaction, as well as the considered approach to GUI design, work together to strengthen ScreenPlay’s flexibility in this regard by increasing its applicability in both installation and studio composition/live performance scenarios. It is the combination of all these elements that distinguishes ScreenPlay within HCI in music by virtue of its ability to simultaneously challenge the internal and external aesthetic and sociohistorical frontiers between/within Classical/Romantic era music, contemporary academic/experimental and popular electronic musics and HCI in music. Furthermore, ScreenPlay’s realisation is representative of the potentialities for expanding the application of topic theory within contemporary electronic music and the sonic arts.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S1355771819000499