Data access and transparency are a growing concern in the political science discipline. Professional associations, including the American Political Science Association (APSA) and the European Consortium for Political Research (ECPR), are either introducing or discussing data-access and transparency policies. Academic articles (Gherghina and Katsanidou 2013; Key 2016) have begun to discuss the practices, merits, and problems (both logistical and ethical) of sound data-access and replication policies. In addition, a growing number of journals are adopting binding data-access and replication policies, especially for articles that use quantitative data.Footnote 1 For example, 27 political science journals committed to implementing the guidelines described in the Journal Editors’ Transparency Statement (JETS) by January 15, 2016. Among other things, these guidelines require authors to (1) make all data available at the time of publication in a trusted digital repository or on the journal’s website; and (2) clearly delineate the analytical procedures used to analyze the data.

How many authors of articles published in journals without a mandatory data-access policy make their dataset and analytical code publicly available? If they do, how often can we replicate the results? If we can replicate them, do we obtain the same results as reported in the respective article? We answer these questions based on all quantitative articles published in 2015 in three political behavior journals (Electoral Studies, Party Politics, and Journal of Elections, Public Opinion & Parties), none of which had a binding data-access or replication policy as of 2015. We found that few researchers make their data accessible online and that only slightly more than half of the contacted authors sent their data on request. Our results further indicate that, for those who make their data available, the replication confirms the results reported in the original article (apart from minor differences) in roughly 70% of cases. More concerning, in nearly 5% of articles the replication results are fundamentally different from those presented in the article. Moreover, in 25% of cases, replication is impossible due to poor organization of the data and/or code. This article provides a snapshot of where the discipline stands in terms of data access and transparency. First, it introduces our study and discusses our analytical strategy. Second, it presents the results of our analysis. Finally, it discusses the implications of our study for more comprehensive data-access and transparency policies.

DATA ACCESS AND TRANSPARENCY: THE STATE OF AFFAIRS

Calls for data transparency are not new, but 20 or 30 years ago few scholars voiced them. A prominent advocate of data transparency over the past 20 years is Harvard professor Gary King. In several articles (King 1995; 2003; 2011), he urged researchers to think about guidelines and rules to increase data access and transparency in the social sciences. However, until the 2010s, these calls remained largely unanswered. With few exceptions, political science associations, academic journals, and researchers did not discuss the challenges of sharing and replicating published work in a sustained and serious manner. Yet, spurred by early initiatives to establish data-transparency guidelines at Political Analysis, American Journal of Political Science, and State Politics & Policy Quarterly, the discipline has, during the past five years, suddenly become interested in how to prepare, present, and share published work and data in a scientifically sound manner. Several developments have fostered this interest.

First, the logistical and monetary costs of sharing data decreased tremendously in the 2010s. At no cost, researchers can make their data publicly available on their personal website, in an online appendix of the journal in which they publish their article, or in an online repository (e.g., Harvard Dataverse). Second, replication studies in neighboring disciplines highlight serious problems with the state of scientific conduct. For example, Freese (2007) in sociology and Evanschitzky et al. (2007) in marketing research illustrated two worrisome developments: (1) replication studies are disturbingly scarce; and (2) many studies do not adhere to the standards of rigorous scientific work, which makes replication even more important. To underline these developments, Evanschitzky et al. (2007) claimed that “[t]eachers are advised to ignore the findings until they have been replicated, and researchers should put little stock in the outcomes of one-shot studies.” (For a similar and more recent study, see Open Science Collaboration 2015.) Third, and partly as a result of these first two developments, researchers are now widely aware that the lack of access to data is a major impediment to progress in science. Accompanying this awareness is a growing willingness among researchers to share their data. To illustrate, Tenopir et al. (2011) found that nearly 75% of 1,361 scientists polled were willing to share their data. However, few actually did so; the reasons given for not sharing included “insufficient time” (54%), “lack of funding” (40%), “no rights to make data public” (24%), “no place to put data” (24%), and “a lack of standards” (20%).

Together, these developments made researchers and journal editors in political science (as in other disciplines) realize that journals and political science associations must take the lead in the quest for more rigorous data-transparency norms (Ishiyama 2014). Normatively, this pressure has increased with the publication of several manifestos expressing the need for clear guidelines in favor of an open research culture and transparency in social science research (Miguel et al. 2014). APSA responded to these calls more vigorously than other associations. The APSA Council developed the so-called Data Access and Research Transparency Statement (DA-RT), which is based on the premise that researchers have an ethical obligation to “facilitate the evaluation of their evidence-based knowledge claims through data access, production transparency, and analytic transparency so that their work can be tested or replicated” (Lupia and Elman 2014, 20). Two further initiatives emanated from DA-RT: (1) the Journal Editors’ Transparency Statement (JETS), and (2) the Transparency and Openness Promotion Statement (TOPS). First, in the JETS, 27 leading political science journals committed to greater data access and research transparency and to implementing policies requiring authors to make the empirical foundation and logic of inquiry of evidence-based research as accessible as possible.Footnote 2 Second, the TOPS guidelines list eight transparency standards that journal editors might adopt: citation standards, data transparency, analytic-methods (i.e., code) transparency, research-materials transparency, design and analysis transparency, study preregistration, analysis-plan registration, and replication.Footnote 3

These developments are changing what we generally consider “good research practice.” To illustrate, Gherghina and Katsanidou (2013) identified 120 political science journals that publish quantitative research and reported that only 18 had any type of data-sharing policy listed on their website. Only three years later, we found that more than 80% of political science journals publishing quantitative work had a transparency statement on their website. More importantly, the JETS signatories appear to implement the JETS guidelines reasonably well. A recent study by Key (2016) revealed that International Organization, American Journal of Political Science, and Political Analysis, all of which are JETS signatories, have replication materials available for more than 80% of their articles. By contrast, the same study reported that replication material is available for only about 35% of the articles published in the British Journal of Political Science, the Journal of Politics, and the American Political Science Review, none of which had a binding data-sharing policy.

This evidence suggests that adopting a stringent data-sharing policy “forces” authors to make their data available. But what do authors do when they publish in a journal that does not require them to make their data publicly available? Do they post the data in the journal’s online repository anyway? If not, do they share the data with colleagues who ask for it? If they do share it, is it sufficiently well prepared to allow for replication? Finally, do the replications yield results identical to those published in the respective journal article? This article attempts to answer these questions. To do so, we selected the 2015 volumes of three prominent peer-reviewed journals in the field of political behavior: Electoral Studies,Footnote 4 Party Politics,Footnote 5 and Journal of Elections, Public Opinion & Parties (JEPOP).Footnote 6 We deemed these three journals a good fit for our study because they mostly publish quantitative articles and none of them had a binding data-sharing policy as of 2015.Footnote 7 Although based in Europe, all three journals are international and publish articles mostly by authors from institutions in Europe, the United States, and Canada. They provide a good cross section of the political behavior subfield as a whole. This is also reflected in the composition of the journals’ editorial boards, which include prominent experts in the field.

RESEARCH DESIGN

To determine whether researchers make data from their published articles publicly available (and, if so, whether their results can be replicated), we engaged in a multi-step research process. First, we selected all articles that used quantitative methods from among those published in the three journals. This resulted in 145 articles (i.e., 73 published in Electoral Studies, 52 in Party Politics, and 20 in JEPOP). Second, we checked whether the journals published replication data on their website or whether the articles contained a link that allows other researchers and the interested public to access these data. We also consulted the authors’ personal websites to ascertain whether the data and/or code were published there. Third, in all cases in which we did not find the data and/or the code readily available, we emailed the authors to ask them to share their data, codebook, and code. The email clearly stated that we wanted to conduct a replication study of their published article, and we assured them that we would not distribute the data to any third party. When authors did not answer, we sent three reminders at two-week intervals. Fourth, for those articles for which we obtained the data, we attempted to replicate the results.Footnote 8
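The four-step access check described above amounts to a simple decision procedure. The Python sketch below is a hypothetical illustration of that logic, not the coding instrument used in the study: the `AccessCheck` record, the route labels, and the assumption that the three reminders fell two, four, and six weeks after the first email are all illustrative.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

MAX_REMINDERS = 3        # three reminders, as described in the text
REMINDER_GAP_DAYS = 14   # assumed: each reminder two weeks after the previous contact


@dataclass
class AccessCheck:
    """Hypothetical record of what was found for one article."""
    on_journal_site: bool = False
    linked_in_article: bool = False
    on_author_site: bool = False
    reminders_sent: int = 0        # reminders sent after the first email
    reply: Optional[str] = None    # "shared", "declined", or None if no reply


def access_route(check: AccessCheck) -> str:
    """Return how the data were (or were not) obtained, checking public sources first."""
    if check.on_journal_site:
        return "journal website"
    if check.linked_in_article:
        return "link in article"
    if check.on_author_site:
        return "author website"
    if check.reply == "shared":
        return "email request"
    if check.reply == "declined":
        return "declined"
    if check.reminders_sent >= MAX_REMINDERS:
        return "no response"
    return "pending"  # still inside the reminder schedule


def reminder_dates(first_email: date) -> List[date]:
    """Dates of the reminders under the assumed two-week spacing."""
    return [first_email + timedelta(days=REMINDER_GAP_DAYS * k)
            for k in range(1, MAX_REMINDERS + 1)]


# Example: an author who sent the data after one reminder
print(access_route(AccessCheck(reminders_sent=1, reply="shared")))
print(reminder_dates(date(2016, 1, 1)))
```

Checking public sources before emailing mirrors the order of the steps; under this sketch an article counts as a nonresponse only once all three reminders have gone unanswered.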

Table 1 Frequency/Percentage of Articles for Which We Could Access Data

RESULTS

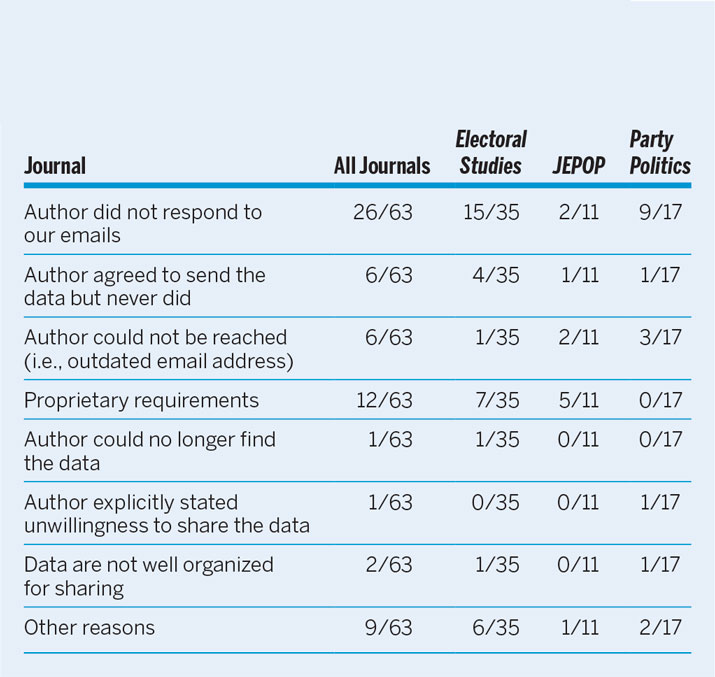

Regarding data availability, our results suggest that slightly more than half of the authors were willing to share their data (i.e., data, code, or both) (table 1). However, few shared it without being specifically asked. Specifically, of the 82 articles for which we obtained data, the data were publicly available for only 13, or slightly more than 15% (table 2). In the remaining 69 cases, we received all or part of the data by email. The primary reasons for not sharing the data were nonresponse to our emails and (alleged) proprietary restrictions, which we could not verify (table 3).
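The shares in this paragraph can be recomputed from the raw counts it reports. A minimal Python sketch, taking the 145, 82, and 13 directly from the text; the helper name `share` is illustrative.

```python
# Counts reported in the text: 145 quantitative articles, data obtained for 82,
# of which 13 were publicly available; the rest were received by email.
TOTAL_ARTICLES = 145
DATA_OBTAINED = 82
PUBLICLY_AVAILABLE = 13


def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


received_by_email = DATA_OBTAINED - PUBLICLY_AVAILABLE

print(f"Data obtained: {share(DATA_OBTAINED, TOTAL_ARTICLES)}% of all articles")
print(f"Publicly available: {share(PUBLICLY_AVAILABLE, DATA_OBTAINED)}% of articles with data")
print(f"Received by email: {received_by_email} articles")
```

The first share (about 57%) corresponds to "slightly more than half," and the second (about 16%) to "slightly more than 15%."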

Table 2 Locations of Where Data Are Available

Table 3 Reasons for Not Providing Data

Regarding replication, we had 71 articles (of the 145 in total) for which we received both data and code files,Footnote 9 the quality of which varied considerably. Most authors prepared their code specifically for our request, which simplified the replication of their findings. However, this made it nearly impossible to trace their workflow from the original data to the reported results (only in rare cases did we also receive the raw data). Another problem was that authors did not present their data and code uniformly. In some cases, we received one data file and one code file, which not only listed the code but also explained the various analytical steps; in other cases, the code was in disarray. That is, it was scattered across several documents and did not indicate which model, table, or graph it referred to, nor did it explain the workflow or the type of analysis conducted.

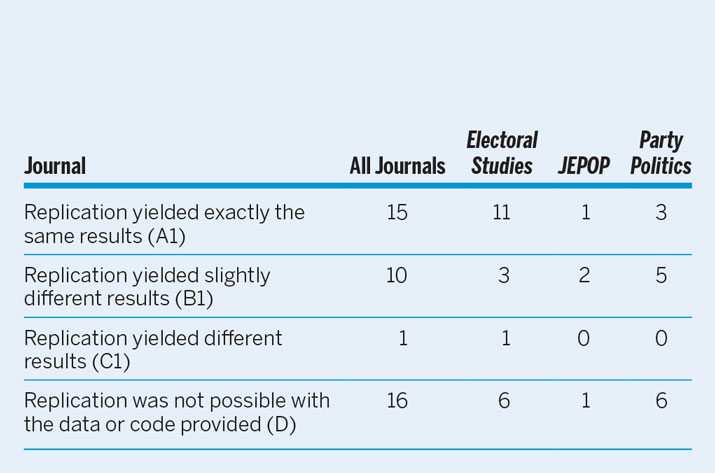

This lack of organization in the code and data was the main reason that we were unable to replicate some results. In other cases, the code simply did not work; we classified such cases as “replication was not possible with the data or code provided” (table 4). This category applied to slightly more than 20% of cases. Among the cases that we could replicate, we distinguished between full replications (table 4) and partial replications (table 5).Footnote 10 For the articles that we could either fully or partially replicate, our replication analyses yielded exactly the same results as reported in the article in slightly less than half (i.e., 32 of 70 cases). In another 19 cases (or slightly more than 25% overall), we detected minor errors, mainly typos, including an incorrectly reported coefficient, a wrong significance marker, and a misplaced comma in a reported regression coefficient. The results differed substantively from those reported in the respective articles in only three cases, or less than 5% overall.
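As a check on the arithmetic, the shares in this paragraph, and the roughly 70% confirmation rate reported in the introduction and conclusion, can be recomputed from the stated counts. The sketch assumes, as the paragraph's own percentages imply, that all shares are computed over 70 cases; the category labels are illustrative.

```python
from collections import Counter

# Outcome counts as stated in the text; 70 is the base the text uses for these shares.
outcomes = Counter({
    "identical results": 32,
    "minor reporting errors": 19,
    "substantively different": 3,
})
BASE = 70


def pct(n: int, base: int = BASE) -> float:
    """Share of the base, in percent, rounded to one decimal place."""
    return round(100 * n / base, 1)


# "Confirmed" pools identical results with those differing only by minor reporting errors.
confirmed = outcomes["identical results"] + outcomes["minor reporting errors"]

for label, n in outcomes.items():
    print(f"{label}: {n} of {BASE} ({pct(n)}%)")
print(f"confirmed: {confirmed} of {BASE} ({pct(confirmed)}%)")
```

Pooling exact matches with minor-error cases gives about 73%, which is the "roughly 70%" figure; the three substantively different cases come to about 4%, consistent with "less than 5%."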

Table 4 Results of the Replication StudiesFootnote 11

Table 5 Results of the Replication Studies with Incomplete Code

CONCLUSION

This article provides new insights into the practices of data access, research transparency, and replication. First, we found that when authors are not required to share their data, they are rather reluctant to do so. That is, few researchers make their data and code freely available, and only about half of the scholars we contacted were willing to share their data. In fact, we received the data, the code, or both in about 55% of cases, and both data and code in less than 50% of cases. Second, for those articles for which we obtained the data, we confirmed the reported results in roughly 70% of cases (disregarding the minor reporting errors, which we found in roughly one in four articles). Of greater concern, however, is the way some researchers store their replication files: unorganized, to say the least. The files were sometimes so disorganized or incomplete that replication simply was not possible with the material provided, even after several attempts.

Several lessons can be drawn from this study. First, if we as a discipline want to abide by the principle of research and data transparency, then mandatory data sharing and replication are necessary, because many authors are still unwilling to share their data voluntarily or provide only unusable replication material. It is of great concern that we could achieve full or partial replication in less than 40% of cases. Ideally, journals also should replicate the results before publishing them, a policy already adopted by American Journal of Political Science and Political Analysis. This would bring to light the few cases in which the results of the replication do not correspond to those reported in an article. It also would eliminate minor inconsistencies and force authors to prepare their data and code such that their analytical steps can be traced. Such preparation would include providing the data in a suitable format, written instructions on how to run the analysis, and mandatory submission of properly annotated syntax files that make the steps traceable. We suspect that many of the nonresponses in our study occurred because the analyses had been conducted without syntax files. To simplify data sharing and replication, journals and political science associations should offer guidelines on how to organize data and code so that they are suitable for replication. Common standards across political science associations and journals certainly would facilitate data sharing and replication.
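The closing recommendation, that journals and associations offer guidelines on organizing data and code, could be made checkable before submission. The Python sketch below assumes a hypothetical package layout (a README, a codebook, the raw data, and numbered scripts each mapped to the output it produces); these file names are illustrative and are not a standard that any journal prescribes.

```python
from pathlib import Path
from typing import Dict, List

# Hypothetical layout: documentation and raw data every package should contain.
REQUIRED_FILES = ["README.txt", "codebook.txt", "data/raw_data.csv"]

# Hypothetical manifest: each script, run in numeric order, mapped to what it produces.
SCRIPT_OUTPUTS: Dict[str, str] = {
    "code/01_clean_data.py": "analysis dataset built from the raw data",
    "code/02_models.py": "regression tables",
    "code/03_figures.py": "figures",
}


def missing_files(root: Path) -> List[str]:
    """Return the required files and scripts absent from a replication package."""
    expected = REQUIRED_FILES + list(SCRIPT_OUTPUTS)
    return [rel for rel in expected if not (root / rel).is_file()]


def run_order() -> List[str]:
    """Describe the workflow for the README: scripts sorted by numeric prefix."""
    return [f"{script} -> {output}" for script, output in sorted(SCRIPT_OUTPUTS.items())]


if __name__ == "__main__":
    package = Path(".")  # assumed: run from the package's top-level folder
    for line in run_order():
        print(line)
    gaps = missing_files(package)
    print("missing:", ", ".join(gaps) if gaps else "none")
```

Numbering the scripts and recording which output each produces addresses two problems noted in the results: code scattered across several files, and code that does not indicate which model, table, or graph it refers to.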