1. Introduction

A general issue in cognitive linguistics has been the extent to which language influences perception and conceptualization in more general cognitive domains that need not always be linguistically instantiated. Many empirical studies have provided wide-ranging evidence of how perceptual behavior is influenced by language experience in spoken languages (Best, Mathur, Miranda, & Lillo-Martin, 2010; Eimas, 1963; Thierry, Athanasopoulos, Wiggett, Dering, & Kuipers, 2009; Werker & Tees, 1983). Although sign language offers unique experiences in the visual–manual domain, we still have limited insight into how sign language knowledge affects visual perception and processing. This study investigates how sign language experience influences the perception of handshapes in partially lexicalized constructions in British Sign Language (BSL). We examine how variability in handling handshapes is managed during handshape categorization and discrimination. This research centers on two theoretical questions: how language experience in the visual domain affects the perceptual capacities of deaf signers, and how handshapes in partially lexicalized sign language constructions are internally structured. Before we describe partially lexicalized constructions, it is necessary to explain the structure of lexical signs (i.e., the equivalents of words in spoken languages).

1.1. Sign language structure

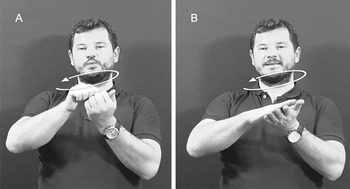

Handshape is one of the main formational parameters in sign languages (along with location, movement, and orientation) and is the primary focus of the present study. In phonological/phonetic analysis, handshape as a feature class refers to the specific configuration of the fingers and the palm. For example, in the BSL sign CHEW,¹ all fingers are completely closed (flexed) to form the /fist/ handshape (Figure 1A). Although a large number of hand configurations can be produced, each sign language tends to use a limited inventory of handshapes (Brennan, 1992; Schembri, 1996). Location refers to the position of the hand on the signer's face or body or in the signing space; e.g., in the BSL signs THINK and AFTERNOON, the signer's dominant hand is placed on the temple and the chin, respectively. Movement refers to the action that the hand/arm performs; it can be, for example, arced or straight, as in the BSL sign ASK, or from left to right across the signer's chest, as in the BSL sign MORNING. Orientation (Battison, 1978) refers to the direction of the palm in relation to the signer's body.

Fig. 1. Phonemic variation of handshape in BSL signs: (A) BSL CHEW, produced with the /fist/ handshape and a circular movement; and (B) BSL MEAN, produced with the /B/ handshape and a circular movement.

The formational parameters of lexical signs consist of phonetic feature classes and function similarly to phonetic features in spoken languages. In sign language phonology, each feature class (e.g., handshape or movement) contains a finite number of features (Hulst, 1995). Brentari (1998) proposed that handshape consists of the selected fingers, i.e., the fingers that move or contact the body as a group during sign production, and the joint configuration, which represents the flexion, extension, spread, or stacking of the selected fingers during articulation of a sign (Brentari, 1998, 2005; Eccarius & Brentari, 2010). For example, the BSL signs CHEW and MEAN (Figure 1) use the same selected fingers but differ in joint configuration: the fingers are flexed in CHEW and extended in MEAN. The formational features serve as an organizational basis for minimal phonological contrasts (Stokoe, 1960): lexical signs can be minimally distinguished by handshape; e.g., the /fist/ and /B/ phonemes create a minimal contrast between CHEW and MEAN.

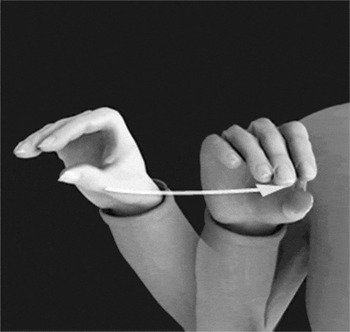

In addition to lexical signs, sign languages contain a set of semantically rich, partially lexicalized constructions that can analogically depict spatial–visual information, called depicting constructions (DCs) (Liddell, 2003a). DCs differ from lexical signs in that they blend discrete and gradient properties and may express complex meanings about the spatial properties of referents. However, it remains unclear precisely which properties of DCs are categorical and which are gradient, or how they are represented in the lexicon. In sign languages such as BSL, DCs tend to be less conventionalized in form and meaning than lexical signs. DCs can express how referents or referent parts are handled or manipulated, called handling constructions (Figure 2A), or they can express the whole or a part of an entity and its movement, location, and orientation in space, called entity constructions (Figure 2B).

Fig. 2. Depicting constructions in BSL: (A) handling construction representing the movement of an object from location x to location y, with a handshape depicting a flat rectangular object being handled; and (B) entity construction representing an upright stick-shaped entity moving from location x to location y, with the handshape depicting the whole entity.

The handshape in handling constructions (Figure 2A), the handling handshape, represents how the referent's hand is configured when handling an object or part of an object, and it can vary gradiently according to the object's properties or to how the referent's hand was configured during handling. It remains unclear whether gradient object properties are conventionally conveyed in a categorical manner by handling handshapes in any sign language. In contrast, entity handshapes (Figure 2B) categorically express entities or parts of entities and do not appear to exhibit the same degree of gradient modification as handling handshapes. For example, the extended index finger, referred to as the /index/ handshape, represents stick-like entities, such as a person, a toothbrush, or a pen, in BSL and many other sign languages. The number of handling handshapes could be very large because handling handshapes can express analogue information about object properties (e.g., object thickness) more directly, and on a real-life scale. This might lead to different conventionalization patterns for handling handshapes than for entity handshapes, which tend to represent objects discretely on a reduced scale.

Handling handshapes tend to be highly analogue and less conventionalized, and they pose a problem for linguistic analysis: how can forms that may not be completely discrete be described (van der Kooij, 2002)? Despite the apparently productive and analogue character of handling constructions, some researchers have asserted that DCs contain discrete handshapes that function as morphemes (Eccarius & Brentari, 2010; McDonald, 1982; Slobin et al., 2003; Supalla, 1986, 2003; Zwitserlood, 2003). Others have argued against such an analysis because depicting handshapes appear to vary in a non-discrete, analogue fashion (de Matteo, 1977; Mandel, 1977; for further discussion see Schembri, 2003). The notion that DCs blend discrete and analogue mappings is now widely accepted in the literature (Liddell, 2003b). However, it remains to be empirically determined which components of DCs are discrete and which are analogue, how such gradient and discrete mappings conventionally combine, and how such hybrid structures are perceived and represented in the mind, particularly for handling handshapes, which have been less thoroughly studied than entity constructions.
Furthermore, handling constructions used by deaf signers look remarkably similar to handling gestures used by non-signers (e.g., the viewpoint gestures described by McNeill, 1992), although some articulatory differences between signers' and non-signers' handling handshapes have been observed (Brentari, Coppola, Mazzoni, & Goldin-Meadow, 2012; Brentari & Eccarius, 2010). Insights into the perceptual signatures of deaf sign language users and sign-naive perceivers will deepen our knowledge of the nature and representation of these constructions.

1.2. Gradient versus categorical variation in language

The issue of the internal representation of linguistic structures has been central to many competing theories of human categorization. Traditional views of category structure hold that category members are perceptually more or less equivalent. In phonological processing, variability in the auditory signal is ignored when listeners perceive idealized tokens of intended types, often resulting in a categorical perception (CP) effect. CP occurs when stimuli are perceived categorically rather than continuously despite continuous variation in form: two members of the same category are harder to discriminate than two members of different categories, even when they are separated by an equal distance along the stimulus continuum (Harnad, 1987; Pisoni & Tash, 1974; Studdert-Kennedy, Liberman, Harris, & Cooper, 1970). In contrast, exemplar and prototype theories maintain that categories are graded. Members of a category are not perceived as equivalent, and signal variability is not ignored; rather, it is used to shape perceptual processing (Rosch, 1975; Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976). Boundaries between categories can thus be fuzzy and the status of category members inconsistent (Barsalou, 1993; Rosch, 1973; Rosch & Mervis, 1975; Tversky, 1977). Linguistic categories have also been argued to have a graded structure, with some members perceived as better exemplars of a category than others (Bybee, 2001; Bybee & Hopper, 2001). Graded category structure can be determined by the perceived familiarity of exemplars or their frequency of instantiation (Barsalou, 1999).

One of the fundamental problems for theories of perception is how to characterize the perceiver’s ability to extract consistent phonetic percepts from a highly variable visual or acoustic signal. Language involves routine mapping between form and meaning, where many variations of form are captured by a discrete category. We are specifically interested in whether and how these routine mappings between form and meaning in the visual modality of sign language constrain perceptual systems.

The consequences of category bias for perceptual patterns have been extensively studied in language and other domains using the categorical perception paradigm. CP is an important phenomenon in cognitive science because it involves the interplay between higher-level and lower-level perceptual systems and offers a potential account of how the apparently symbolic activity of high-level cognition can be grounded in perception and action (Goldstone & Hendrickson, 2009). CP is typically assessed with forced choice identification (categorization) and discrimination tasks. In an identification task, participants assign each stimulus from a continuum (e.g., of sounds or handshapes) to one of the two endpoint categories, which allows a perceptual boundary to be located. In a discrimination task under CP, discrimination performance is predicted by category membership rather than by the physical characteristics of the stimuli. For example, in an ABX paradigm, perceivers judge whether a stimulus X is identical to A or to B, where A and B are two stimuli drawn from the continuum. CP is assumed if discrimination accuracy peaks at the category boundary obtained from the identification task and if within-category discrimination accuracy is at or near chance.
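Why a boundary peak and near-chance within-category accuracy are the signature of CP can be illustrated with the classic Haskins prediction, which derives expected ABX accuracy from identification probabilities under the assumption that perceivers can discriminate only through covert category labels: P(correct) = 0.5[1 + (pA − pB)²]. The sketch below is that textbook model, not the analysis used in this study; the function name and the identification values are hypothetical.

```python
def predicted_abx_accuracy(p_a: float, p_b: float) -> float:
    """Haskins-style prediction of ABX proportion correct.

    p_a, p_b: probability that stimulus A / B is labeled as category 1
    in the identification task. Assumes discrimination relies only on
    covert category labels, so identical labels force a 50% guess.
    """
    for p in (p_a, p_b):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return 0.5 * (1.0 + (p_a - p_b) ** 2)


# Hypothetical identification function for an 11-step continuum
# (proportion of 'category 1' responses at each step); not study data.
ident = [1.0, 1.0, 0.98, 0.95, 0.8, 0.5, 0.2, 0.05, 0.02, 0.0, 0.0]

# Two-step pairs (1-3, 2-4, ..., 9-11), as in an ABX design with step size 2.
for i in range(len(ident) - 2):
    acc = predicted_abx_accuracy(ident[i], ident[i + 2])
    print(f"pair {i + 1}-{i + 3}: predicted accuracy {acc:.3f}")
```

Under this model, pairs whose members share a label are predicted at 0.5, and the predicted peak falls on the pair that straddles the steepest part of the identification function (here items 5–7).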

In language, for example, variation in speech sounds along acoustic dimensions such as voice onset time is perceived in terms of categories that coincide with the phonemes of the perceiver's language (Liberman, Harris, Hoffman, & Griffith, 1957; Liberman, Harris, Kinney, & Lane, 1961; Schouten & van Hessen, 1992), suggesting that linguistic experience mediates the perception of certain types of auditory stimuli. However, not all speech sounds are perceived categorically. For instance, the perception of certain properties, such as vowel duration (Bastian, Eimas, & Liberman, 1961), intonation (Abramson, 1961), and the affricate/fricative consonant distinction (Ferrero, Pelamatti, & Vagges, 1982; Rosen & Howell, 1987), has been shown to be continuous rather than categorical.

Although CP has been widely attested in speech perception, the nature of CP for speech and what it reveals about linguistic representations have been debated (Schouten, Gerrits, & van Hessen, 2003). CP has been found in other domains, e.g., color perception (Bornstein & Korda, 1984; Özgen & Davies, 1998), face perception (Beale & Keil, 1995; Campbell, Woll, Benson, & Wallace, 1999), and non-speech sound perception (Jusczyk, Rosner, Cutting, Foard, & Smith, 1977), and it is by no means limited to human perceivers (Diehl, Lotto, & Holt, 2004; Kluender & Kiefte, 2006). CP is thus understood as a general characteristic of how perceptual systems respond to experience with regularities in the environment (Damper & Harnad, 2002), and it may arise from natural sensitivities to specific types of stimuli (Aslin & Pisoni, 1980; Emmorey, McCullough, & Brentari, 2003; Jusczyk et al., 1977).

Reaction times (RTs) in CP tasks have yielded robust and reliable patterns of stimulus identification and discrimination sensitivity (Campbell et al., 1999), but they have rarely been studied in the sign language domain. In speech perception, analyses of identification times revealed increased processing latencies when participants made judgments about stimuli near phonetic boundaries (Pisoni & Tash, 1974; Studdert-Kennedy, Liberman, & Stevens, 1963). RTs are a positive function of uncertainty: they increase at the boundary, where labeling is inconsistent, and decrease where identification is most consistent, i.e., within category. In contrast, discrimination judgments should be faster where discrimination is easy, that is, at the boundary rather than within category (Pisoni & Tash, 1974). RTs thus provide an important quantitative measure of the ways in which handshape perception might involve specialized mechanisms for perceptual judgments.

Although absolute CP is rarely found for speech sounds, since not all speech sounds are perceived categorically or as invariant perceptual targets, the CP paradigm can be useful for obtaining perceptual profiles of individual perceivers or groups of perceivers, which can help determine the extent to which sensitivity to stimuli may have been attenuated by linguistic experience. The perceptual profiles of deaf signers can reveal whether handling handshapes are perceived as equivalent or non-equivalent variants and whether signal variability is managed in terms of category boundaries or central tendencies. Further, if categorical perception of handling handshapes is refined by sign language use, do handshape categories have causal effects on perceptual behavior, as has been demonstrated for other sign and speech stimuli? Because of their apparent gestural influence and their similarity to non-signers' gestures, such as viewpoint gestures (McNeill, 1992), handling handshapes provide a unique opportunity to investigate how linguistic bias constrains the perception of handshapes that occur in face-to-face communication. The perceptual patterns of perceivers whose languages are in different modalities will provide insight into the specialized mechanisms for perceiving the visual properties of signs (e.g., handshapes, movements, locations) and into how variation in the visual signal is managed.

1.3. Effects of linguistic experience on categorical perception in sign language

Effects of linguistic experience on perception have been demonstrated for phonemic handshapes in American Sign Language (ASL), i.e., handshapes that contribute to lexical contrast (Baker, Idsardi, Golinkoff, & Petitto, 2005; Best et al., 2010; Emmorey et al., 2003; Morford, Grieve-Smith, MacFarlane, Staley, & Waters, 2008). Emmorey et al. (2003) showed that gradient variations in handshape aperture and finger selection in certain ASL signs were perceived categorically by deaf native ASL signers. Handshape continua varying in equal steps between the lexical signs PLEASE and SORRY, which differ in the use of the /B/ versus /A/ handshape, and between MOTHER and POSH, which differ in the number of selected fingers (five versus three), yielded a CP effect, but only in deaf ASL signers. Unlike hearing non-signers, deaf ASL signers were better at discriminating handshape pairs that fell across a category boundary, while handshape pairs from within a category were not judged to be different. Deaf signers' perceptual abilities were mediated by linguistic categorization, which could be demonstrated only for phonemic, not allophonic, contrasts: native sign language experience can give rise to CP for certain handshapes, but only for those in phonemic opposition (Baker et al., 2005; Emmorey et al., 2003). Additionally, perceptual sensitivity to boundaries between phoneme categories has been shown to vary with the age of sign language acquisition (Best et al., 2010; Morford et al., 2008).
From a theoretical perspective, showing that CP arises for phonological parameters in sign languages (e.g., handshape or location) was crucial for demonstrating that lexical signs are composed of discrete linguistic units akin to spoken language phonemes.

In partially lexicalized constructions such as DCs, it is less clear how gradient handshape variation is categorized. Effects of linguistic categorization on the perception of depicting handshapes have been examined only for size-and-shape specifying handshapes (SASS). Using a paradigm in which gradient versus categorical expression is assessed by determining how descriptions of objects varying in size are interpreted by another group of deaf or hearing judges, Emmorey and Herzig (2003) showed that deaf ASL signers, unlike hearing non-signers, systematically organized handshapes depicting the size and shape of objects (specifically, a set of medallions of varying sizes) into categories that corresponded to morpheme categories in ASL. For example, deaf signers used /F/ handshapes for small medallions and /baby-C/ for larger medallions, suggesting that continuous variation in size is encoded categorically by a set of distinct ASL handshapes. In this study, however, hearing judges were not included in the interpretation of handshape productions by signers or non-signers. Thus, it remains unclear whether handshape categorization is shaped exclusively by linguistic experience. Furthermore, the SASS handshapes in the Emmorey and Herzig study depict objects that vary in two-dimensional characteristics, unlike handling handshapes, which often depict how a three-dimensional object is manipulated in space. Handling handshapes tend to be more dynamic and often involve a handshape change associated with grasping and manipulating objects. It remains to be seen whether CP findings based on SASS handshapes can be extended to handling handshapes.

The ability to categorize sign language stimuli might not always be a consequence of linguistic categorization. Regardless of perceivers' linguistic experience, certain visual stimuli can be perceived categorically because they represent a salient visual or perceptual contrast. McCullough and Emmorey (2009) examined the perception of facial expressions, such as furrowed eyebrows and raised eyebrows with eyes wide open, which function as conventional question markers in ASL, marking wh-questions and yes/no questions, respectively. The continuum between furrowed and raised eyebrows was perceived categorically by both deaf ASL signers and hearing non-signers. The authors suggested that the contrast coincided with categories of affect shared by deaf and hearing perceivers, consistent with the categorical perception of facial affect found in previous studies. Categories of affect are learned through social interaction, and perceivers may form discrete representations of them. A parallel example might be handshapes associated with precision handling, which come to signify the preciseness of a concept in co-speech gestures (Kendon, 2004). Perceivers without sign language expertise might form discrete handshape representations based on visual semiosis or on visual–motor experience deriving from object grasping or manipulation. The question is whether categorization processes specific to sign language, or other general perceptual or cognitive processes (such as those in place for categorizing human hand shaping for object handling/manipulation, or for size/magnitude processing), differentially influence perceptual behavior.

In the current study, we compare performance in a categorical perception experiment between deaf native BSL signers, whose language imposes unique constraints on the visual–spatial processing of handshapes, and hearing non-signers with no sign language experience. The empirical goals of the study are to investigate (a) whether handling handshapes are perceived categorically or gradiently by deaf BSL signers and hearing non-signers, and (b) whether the linguistic use of handling handshapes biases perceptual processes in deaf BSL signers. We make the following specific predictions. If handling handshape continua are perceived categorically by BSL signers but continuously by hearing non-signers, this will suggest that handshape perception is mediated by linguistic categorization. If, however, both deaf BSL signers and hearing non-signers display gradient patterning, this will suggest that handling handshape variability is not ignored and influences perceptual processing at a more general level. Alternatively, if both groups exhibit CP for handling handshapes, this will suggest that handling handshape perception is mediated by other general perceptual or cognitive processes and not solely by linguistic experience. In addition, we included RT as a measure of perceivers' sensitivity to perceptual boundaries and to the membership status of the exemplars. RTs in categorization and discrimination have been argued to yield robust and reliable patterns of discrimination sensitivity (Campbell et al., 1999), yet CP studies of sign language stimuli have not traditionally measured RTs. We examine differences in RTs and accuracy between deaf and hearing perceivers to reveal whether and how linguistic experience affects visual processing and leads to processing advantages. The results will be discussed in light of existing studies on sign language perception and theories of gradient category structure.
Our findings have implications for current theories of DCs, sign language structure, and visual processing.

2. Methodology

2.1. Participants

Participants in this study included 14 deaf BSL signers (age range 18–38 years; 8 female) and 14 hearing native English speakers matched to BSL signers on age and gender. All deaf participants acquired BSL before age 6, reported BSL as their preferred method of communication, and were born and lived in southeast England. Deaf participants were recruited through an on-line participation pool website administered by the Deafness Cognition and Language (DCAL) Research Centre (UCL) or through personal contacts in the deaf community. Hearing participants were recruited through the UCL Psychology on-line participation pool website. The experiment took place in a computer laboratory at DCAL.

2.2. Stimuli

We created two handling handshape continua using a key frame animation technique in the software package Poser 6.0™ (Curious Labs, 2006). This technique takes the joint or body positions of the starting and ending poses and calculates equal increments between these endpoints. The result is a naturalistic and carefully controlled animated exemplar. The exemplars were handshapes used in BSL to depict the handling of flattish rectangular objects (e.g., books) and cylindrical objects (e.g., jars) (Brennan, 1992). Figure 3A shows a continuum of handshapes used to manipulate flattish rectangular objects, progressing in aperture from the most closed /flat-O/ handshape to the most open /flat-C/ handshape. Figure 3B shows a continuum of handshapes used to manipulate cylindrical objects, progressing from the most closed /S/ handshape to the most open /C/ handshape.
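The idea of "equal increments between endpoints" can be sketched as linear interpolation of each joint angle across an 11-step continuum. This is an illustration under stated assumptions, not Poser's internal keyframe algorithm: the joint names and angle values below are hypothetical, and real animation software may use non-linear (e.g., spline) interpolation.

```python
from typing import Dict, List

# Hypothetical pose representation: joint name -> flexion angle in degrees.
Pose = Dict[str, float]


def interpolate_poses(start: Pose, end: Pose, n_steps: int = 11) -> List[Pose]:
    """Return n_steps poses spaced in equal increments from start to end.

    Assumes simple linear interpolation of every joint angle, so each
    step changes every joint by the same fixed amount.
    """
    if n_steps < 2:
        raise ValueError("need at least two steps (the two endpoints)")
    if start.keys() != end.keys():
        raise ValueError("start and end poses must define the same joints")
    poses = []
    for k in range(n_steps):
        t = k / (n_steps - 1)  # 0.0 at item 1, 1.0 at the last item
        poses.append({j: start[j] + t * (end[j] - start[j]) for j in start})
    return poses


# Hypothetical angles for a closed (item 1) and an open (item 11) handshape.
closed = {"index_mcp": 80.0, "index_pip": 90.0, "thumb_cmc": 40.0}
open_ = {"index_mcp": 20.0, "index_pip": 30.0, "thumb_cmc": 10.0}

continuum = interpolate_poses(closed, open_)
print(len(continuum), continuum[5])  # the midpoint is item 6
```

Keeping every joint on a linear path means only the aperture-related angles need to differ between the endpoint poses for the continuum to vary in aperture alone.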

Fig. 3. Handling handshape continua presented as video clips: (A) a continuum of eleven handshapes depicting handling of flattish rectangular objects in BSL varying in equidistant steps from most closed /flat-O/ handshape (item 1) to most open /flat-C/ handshape (item 11); and (B) a continuum of eleven handshapes depicting handling of cylindrical objects in BSL varying in equidistant steps from most closed /S/ handshape (item 1) to most open /C/ handshape (item 11).

The 11-item continua were designed to create a visual homologue of typical CP experiments for speech (Liberman et al., 1957) and ASL (Emmorey et al., 2003). Exemplars were presented as dynamic video clips showing a straight movement of the right arm from left to right (from the viewer's perspective) in neutral space in front of the signer's torso. The arm was anchored at the shoulder and bent at an angle of 45 degrees (Figure 4).

Fig. 4. A still image representing movement shown in actual stimuli consisting of 500 ms animated videos.

In the handling exemplars used in this study, the movement, location, and orientation remained constant across all steps. We aimed to keep the movement as neutral as possible. The starting location was neutral, to the right of the signer's torso. The palm and fingers faced away from the signer, similar to the handshape orientation used in previous CP experiments (e.g., Baker et al., 2005). The handshapes were articulated by the right hand and consisted of four selected fingers with the thumb opposed, for all exemplars and both handshape types. Handshapes depicting the handling of flattish rectangular objects (Figure 3A) had angled finger/thumb joints, whereas handshapes depicting the handling of cylindrical objects (Figure 3B) had curved finger/thumb joints with the fingers together (i.e., not spread). This finger-bending feature distinguishes the handling of a flattish rectangular object from the handling of a cylindrical object. Within each continuum, the handshapes varied continuously in one parameter only: the distance between the thumb and the fingers (aperture). The aperture changed from [closed] (as in constructions depicting the handling of a piece of paper or a thin rod) to [open] (as in handshapes depicting the handling of a wider rectangular object, such as a book, or a cylindrical object, such as a large jar). A block of practice trials with an unrelated handling handshape continuum preceded each task. The practice continuum consisted of eleven handshape exemplars, with the /intl-T/ handshape used to depict the handling of a long thin object (e.g., a stick) as one endpoint, and a handshape depicting the handling of a thick object (e.g., a remote control), in which the thumb and bent index finger are several inches apart, as the other endpoint.

2.3. Procedure

Before each task, participants viewed images of a person moving rectangular or cylindrical objects from a shelf, with the person's handshape blurred. The pre-task images were intended to prime handling, as we wanted participants to focus on handshapes that occur in the depiction of handling rather than on handshapes in lexicalized signs, such as the initialized BSL sign COMMUNICATION, where discrete patterning is expected, or on size-and-shape specifying handshapes used to trace the outline of an object in space. The handshape continua were blocked, and a practice block always preceded the first test block.

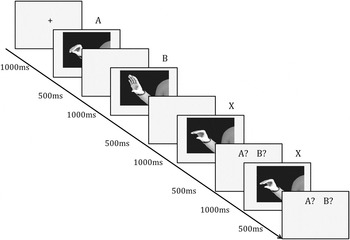

CP was examined using a forced choice identification task and an ABX discrimination task, with accuracy and reaction times recorded for both tasks. The trial structure of the binary forced choice identification task is schematized in Figure 5. Each of the two blocks of trials, separated by short rest periods, began by displaying the two endpoint items on opposite sides of the screen for 500 ms, followed by a 1000 ms blank screen. The items of the continuum, including the endpoints, then appeared one at a time in random order in the middle of the screen, each followed by a blank response screen during which participants responded as quickly as possible. During the blank response screen, participants pressed the left arrow key if the handshape was similar to the endpoint handshape they had previously viewed on the left of the screen, or the right arrow key if it was similar to the endpoint handshape previously viewed on the right. Each item appeared twice in each test block, and there were two test blocks for each handshape continuum. The order of items was randomized across test blocks and participants. Endpoint handshapes were visible only at the start of each block, not during the trials, and their positions on the screen were always reversed for the second block. Each participant thus saw each handshape variant four times, resulting in forty-four trials for each handshape continuum. Deaf participants viewed the task instructions in BSL, and hearing participants received instructions in written English.
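The identification design described above (11 items, each twice per block, two blocks, endpoint sides reversed in the second block) can be expressed as a trial-list generator. This is a hypothetical sketch: the function name, the tuple layout, and the choice to show endpoint 1 on the left in the first block are assumptions; the study does not say which side came first or how randomization was implemented.

```python
import random
from typing import List, Tuple


def identification_trials(n_items: int = 11, reps_per_block: int = 2,
                          n_blocks: int = 2, seed: int = 0
                          ) -> List[Tuple[int, int, str]]:
    """Build (block, item, endpoint_1_side) tuples for one continuum.

    Each item appears reps_per_block times per block in random order.
    Assumption: endpoint item 1 is shown on the left in odd-numbered
    blocks and on the right in even-numbered blocks.
    """
    rng = random.Random(seed)
    trials = []
    for block in range(1, n_blocks + 1):
        items = [i for i in range(1, n_items + 1) for _ in range(reps_per_block)]
        rng.shuffle(items)  # fresh random order for every block
        side = "left" if block % 2 == 1 else "right"
        trials.extend((block, item, side) for item in items)
    return trials


trials = identification_trials(seed=1)
print(len(trials), trials[:3])
```

With the default arguments the generator yields 11 × 2 × 2 = 44 trials, matching the four presentations per handshape variant reported above.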

Fig. 5. Trial structure in the identification task: items A and B are the endpoints and X is randomly selected from anywhere on the continuum.

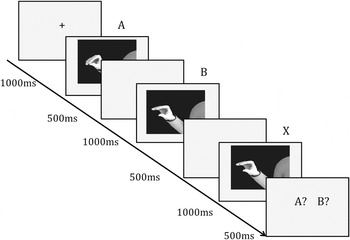

The discrimination task used the same stimuli as the identification task and was based on previous studies using the ABX matching-to-sample paradigm, e.g., Beale and Keil (1995), Emmorey et al. (2003), and McCullough and Emmorey (2009). Thirty-six trials were divided into three test blocks, and a practice block preceded the first test block. Each trial consisted of three sequentially presented handshapes (a triad), where the first two handshapes (A and B) were always two steps apart on the continuum (item pairs 1–3, 2–4, 3–5, etc.). One handshape was presented on the left side of the screen and the other on the right, and the third handshape (X), presented in the center of the screen, was always an identical match to one of the previous two. Each item appeared on the screen for 500 ms, followed by a blank screen for 1000 ms. A blank response screen followed each triad, during which participants pressed the left arrow key if they thought the third handshape matched the handshape previously presented on the left (A), or the right arrow key if they thought it matched the handshape previously presented on the right (B). The screen positions of the two handshapes were reversed for the second half of the trials. The order of items (X) was randomized across trials and participants. The trial structure of the discrimination task is schematized in Figure 6.
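A minimal Python sketch of one way to build the 36 ABX trials: the nine two-step pairs (1–3 through 9–11) are crossed with X matching A or B, giving 18 trials, and the same 18 are repeated with the left and right positions reversed for the second half. This crossing is an assumption that happens to reproduce the stated trial count; the section does not spell it out. Trials are shuffled within each half and cut into three blocks of 12; the names and trial format are hypothetical.

```python
import itertools
import random

N_ITEMS = 11
STEP = 2  # A and B are always two steps apart on the continuum


def abx_trials(seed=None):
    """Return 36 trials as dicts with keys A (left), B (right), X, correct."""
    rng = random.Random(seed)
    pairs = [(i, i + STEP) for i in range(1, N_ITEMS - STEP + 1)]  # (1, 3) ... (9, 11)
    halves = []
    for reversed_sides in (False, True):
        half = []
        for (lo, hi), x_is_left in itertools.product(pairs, (True, False)):
            # Second half: the more open handshape is shown on the left.
            left, right = (hi, lo) if reversed_sides else (lo, hi)
            x = left if x_is_left else right
            half.append({"A": left, "B": right, "X": x,
                         "correct": "left" if x_is_left else "right"})
        rng.shuffle(half)  # randomize X order within each half
        halves.append(half)
    return halves[0] + halves[1]


def split_blocks(trials, n_blocks=3):
    """Cut the trial list into equal consecutive blocks."""
    size = len(trials) // n_blocks
    return [trials[i * size:(i + 1) * size] for i in range(n_blocks)]
```

Note that with three blocks of 12, the position reversal falls in the middle of the second block under this scheme.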

Fig. 6. Trial structure in the discrimination task: an example of an ABX triad where A and B are always two steps apart on the continuum and X is always identical to either A or B.

In the identification task, the dependent measure was the proportion of items identified as item 1. Based on the binary responses, we fitted a logistic regression to each participant’s data to obtain an individual slope coefficient. Slope coefficients were averaged across participants to estimate the strength of the endpoint category bias in each group. A steep slope would indicate a strong influence of the binary categories and a clearly defined boundary, while a more gradual slope would suggest more continuous categorization and a weaker category influence. Next, to determine the category membership of handshapes, we computed individual category boundaries (see ‘Appendix’), defined as the point on the continuum at which an individual participant’s responses were split 50/50 between the two categories, i.e., the negative ratio of the fitted intercept to the slope coefficient. The group average intercept on the y-axis, slope coefficient, and category boundary for each handshape continuum are provided in Table 1. We also examined identification RTs for items that straddled the participant’s category boundary, items within category, and endpoint items. In the discrimination task, the dependent variables were discrimination accuracy and discrimination RTs. Discrimination accuracy was measured as the average proportion of correctly discriminated items. RTs more than 2 SD from the mean were removed (1.3% of the data), as RTs outside this range might indicate reliance on long-term memory processes, or additional processing effort or strategies arising from general difficulty with the task.
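A minimal sketch of the per-participant fitting and trimming described above, written in Python with NumPy. It assumes continuum items coded 1 to 11, responses coded 1 when the handshape was identified as item 1 and 0 otherwise, and the model P(item 1 | x) = 1 / (1 + exp(−(b0 + b1·x))). Setting P = 0.5 gives b0 + b1·x = 0, so the 50% boundary is x = −b0/b1. The Newton-Raphson fitter, the function names, and the trimming rule (sample SD over whatever set of RTs is passed in) are illustrative assumptions, not the authors’ analysis code.

```python
import numpy as np


def fit_logistic(x, y, n_iter=50, tol=1e-10):
    """Fit P(y = 1 | x) = 1 / (1 + exp(-(b0 + b1 * x))) by Newton-Raphson.

    x: continuum positions (assumed coded 1..11), y: 1 = 'identified as item 1'.
    Returns (b0, b1). Assumes the responses are not perfectly separable along x.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones_like(x), x])  # columns: intercept, slope
    beta = np.zeros(2)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(design @ beta)))
        gradient = design.T @ (y - p)
        # Information matrix X' W X with W = diag(p * (1 - p)).
        information = design.T @ (design * (p * (1.0 - p))[:, None])
        step = np.linalg.solve(information, gradient)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return float(beta[0]), float(beta[1])


def category_boundary(b0, b1):
    """Continuum position where the fitted curve crosses 50%: b0 + b1 * x = 0."""
    return -b0 / b1


def trim_rts(rts, n_sd=2.0):
    """Keep RTs within n_sd sample standard deviations of the mean."""
    rts = np.asarray(rts, dtype=float)
    keep = np.abs(rts - rts.mean()) <= n_sd * rts.std(ddof=1)
    return rts[keep]
```

Because ‘item 1’ responses fall toward 0 along the continuum, b1 comes out negative, and a steeper (more negative) b1 corresponds to a sharper boundary. A participant whose responses are perfectly separated along the continuum has no finite maximum-likelihood estimate, so a real analysis would need a penalized fit or another fallback for such cases.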

table 1. Mean intercept on the y-axis, slope coefficient, and category boundaries obtained in handshape identification averaged across groups and continua (standard deviation)

3. Results

Deaf signers and hearing non-signers placed category boundaries at similar locations on the /flat-O/–/flat-C/ continuum (t(26) = 1.06, p = .30) and the /S/–/C/ continuum (t(26) = 0.23, p = .82). The average category boundary for each group is provided in Table 1. The two groups also showed similar binary categorization of handshapes, as there were no group differences in slope for either continuum (/flat-O/–/flat-C/: t(26) = 0.31, p = .76; /S/–/C/: t(26) = 0.22, p = .83).
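The group comparisons above are independent-samples t-tests with df = n1 + n2 − 2. A minimal pooled-variance version using only the Python standard library is sketched below. The inputs would be per-participant boundaries or slopes, the function name is hypothetical, and whether the authors assumed equal variances is an assumption here. The p-value, which requires the t distribution, could be obtained with `scipy.stats.ttest_ind`, whose default also assumes equal variances.

```python
import math
from statistics import mean, variance


def student_t(a, b):
    """Independent-samples t statistic with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # statistics.variance uses the sample (n - 1) denominator.
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    se = math.sqrt(pooled * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / se, df
```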

In the identification task, deaf BSL signers and hearing non-signers sorted the handshapes along each continuum into two categories. The identification functions plotted in Figures 7A and 7B show that both deaf signers and hearing non-signers displayed a sigmoidal shift in category assignment. Figure 7A plots the average proportion of handshapes identified as item 1 (y-axis) for the /flat-O/–/flat-C/ continuum, and Figure 7B plots the same measure for the /S/–/C/ continuum.

Fig. 7. Handshape identification function: (A) average percentage of handshapes identified as item 1 on the /flat-O/–/flat-C/ continuum; and (B) average percentage of handshapes identified as item 1 on the /S/–/C/ continuum.

RTs in the identification task (Figure 8) were analyzed with a 2 (Group: deaf signers vs. hearing non-signers) × 4 (Handshape Membership: left-endpoint items, within-category, boundary, and right-endpoint items) repeated measures ANOVA, with Handshape Type (rectangular vs. cylindrical) entered as a covariate. There was no effect of Handshape Type (F(1,50) = 0.10, p = .5, ηp² = .01); identification RTs were therefore collapsed across the two continua to preserve power. Binary handshape categorization slowed significantly at the boundary compared with elsewhere on the continuum (main effect of Handshape Membership: F(1.7,89) = 27.94, p < .001, ηp² = .35; because the assumption of sphericity was not met, Greenhouse–Geisser corrected values are reported). Deaf and hearing participants did not differ in identification RTs overall (F(1,51) = 0.02, p = .89, ηp² = .01). There was an interaction between Group and Handshape Membership (F(1.7,88) = 7.34, p = .002, ηp² = .13). The interaction appeared to be driven by a marginally significant difference between signers and non-signers for handshapes straddling the boundary (t(52) = 1.79, p = .08); the groups did not differ in RTs at the endpoints or within category. We followed up the interaction with separate analyses for each group.
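The Greenhouse–Geisser correction multiplies both degrees of freedom of a repeated-measures F test by an estimate ε of how far the data depart from sphericity. The sketch below, in Python with NumPy, computes ε for a single within-subjects factor from an n × k matrix of per-participant condition means, using ε = (tr M)² / ((k − 1)·tr M²), where M is the covariance matrix projected onto orthonormal contrasts. It ignores the between-subjects Group factor and the Handshape Type covariate of the design above, so it illustrates the correction rather than reproducing the reported df; as a rough check, the reported numerator df of 1.7 for a four-level factor corresponds to ε ≈ 1.7/3 ≈ 0.57. Function names are hypothetical.

```python
import numpy as np


def orthonormal_contrasts(k):
    """k x (k - 1) matrix with orthonormal columns orthogonal to the constant vector."""
    centering = np.eye(k) - np.ones((k, k)) / k
    q, _ = np.linalg.qr(centering)
    # The first k - 1 columns of Q span the column space of the centering matrix.
    return q[:, : k - 1]


def gg_epsilon(scores):
    """Greenhouse-Geisser epsilon for one within-subjects factor.

    scores: n_subjects x k_conditions array of per-participant condition means.
    """
    x = np.asarray(scores, dtype=float)
    k = x.shape[1]
    c = orthonormal_contrasts(k)
    m = c.T @ np.cov(x, rowvar=False) @ c
    return float(np.trace(m) ** 2 / ((k - 1) * np.trace(m @ m)))


def gg_corrected_df(eps, k, n):
    """Scale the uncorrected (k - 1, (k - 1)(n - 1)) degrees of freedom by epsilon."""
    return eps * (k - 1), eps * (k - 1) * (n - 1)
```

ε ranges from 1/(k − 1), the maximal departure from sphericity, to 1, where sphericity holds and the correction leaves the df unchanged.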

Fig. 8. Mean identification reaction times (RTs) for items positioned on the left endpoint (most closed), on the boundary, within category and right endpoint (most open) collapsed for both handshape continua; bars represent standard error.

In the deaf signer group, within-subjects contrasts indicated a significant quadratic trend in identification RTs (F(1,27) = 42.10, p < .001, ηp² = .61), with the fastest RTs at the endpoints and within category and the slowest RTs at the category boundary. Post-hoc pairwise comparisons of mean RTs across these four regions (Bonferroni-adjusted) confirmed that RTs were significantly slower at the boundary than at the endpoints (ps < .001) and slower at the boundary than within category (p < .001). Deaf signers were only marginally slower to identify handshapes at the left endpoint than at the right endpoint (p = .05). As in the deaf signer group, we found a quadratic trend in the hearing non-signer group (F(1,25) = 31.61, p < .001, ηp² = .56). Hearing non-signers were slower to categorize handshapes at the boundary than at the endpoints (ps < .001) and slower at the boundary than within category (p < .001). Hearing non-signers, however, categorized handshapes at the right endpoint significantly faster than at the left endpoint (p < .001). This suggests that the perceived difference between fingers touching vs. minimally apart slowed identification, and that handshapes with a larger perceived distance between the thumb and fingers (at the right endpoint) were easier for hearing non-signers to categorize than handshapes with smaller thumb–finger distances. No other contrasts were significant.
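The quadratic (and, for accuracy, cubic) trends reported here are single-degree-of-freedom within-subjects contrasts. In a minimal standard-library sketch, each participant’s four regional means are combined with orthogonal polynomial weights, and the contrast F(1, n − 1) equals the squared one-sample t of those scores. The weights assume four ordered, equally spaced levels, and the order of the regions along the continuum is an assumption of the sketch. The helper for partial eta squared, ηp² = F·df_effect / (F·df_effect + df_error), reproduces several reported values, e.g., F(1,27) = 42.10 gives .61 and F(3,78) = 7.48 gives .22. Function names are hypothetical.

```python
import math
from statistics import mean, stdev

# Orthogonal polynomial weights for four ordered, equally spaced levels.
TREND_WEIGHTS = {
    "linear": (-3, -1, 1, 3),
    "quadratic": (1, -1, -1, 1),
    "cubic": (-1, 3, -3, 1),
}


def trend_F(rows, trend):
    """rows: one list of four regional means per participant.
    Returns (F, df_error) for the single-df within-subjects contrast."""
    weights = TREND_WEIGHTS[trend]
    scores = [sum(w * v for w, v in zip(weights, row)) for row in rows]
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))  # one-sample t on contrast scores
    return t * t, n - 1


def partial_eta_sq(F, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return F * df_effect / (F * df_effect + df_error)
```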

Handshape discrimination accuracy was analyzed with a 2 (Group: deaf signers vs. hearing non-signers) × 4 (Handshape Membership: left-endpoint items, within-category, boundary, and right-endpoint items) repeated measures ANOVA, with Handshape Type (rectangular vs. cylindrical) entered as a covariate. Handshape Type as a covariate did not influence accuracy (F(1,53) = 0.41, p = .53, ηp² = .01); we therefore report accuracy collapsed across both handshape continua to preserve power (Figure 9). The position of the handshape pair on the continuum significantly affected discrimination accuracy (main effect of Handshape Membership: F(3,159) = 46.16, p < .001, ηp² = .47). Deaf signers were more accurate than hearing non-signers (main effect of Group: F(1,53) = 10.01, p = .003, ηp² = .16). There was no interaction between Group and Handshape Membership (F(3,159) = 0.46, p = .71, ηp² = .01), suggesting that the position of the handshape pair on the continuum influenced discrimination accuracy similarly in both groups.
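The section does not spell out how each discrimination pair is assigned to the four Handshape Membership regions. The Python sketch below uses one plausible, hypothetical rule: pair 1–3 is the left endpoint, pair 9–11 the right endpoint, a pair straddles the boundary when the participant’s individual boundary falls strictly between its two items, and all remaining pairs count as within category. Accuracy is then averaged per region for one participant. The rule, the function names, and the trial format are assumptions for illustration.

```python
from statistics import mean

N_ITEMS = 11


def pair_region(lo, hi, boundary, n_items=N_ITEMS):
    """Hypothetical rule: endpoint pairs take priority over the boundary test."""
    if lo == 1:
        return "left endpoint"
    if hi == n_items:
        return "right endpoint"
    if lo < boundary < hi:
        return "boundary"
    return "within category"


def accuracy_by_region(trials, boundary):
    """trials: iterable of (A, B, was_correct) tuples for one participant."""
    scores = {}
    for a, b, ok in trials:
        lo, hi = min(a, b), max(a, b)  # side of presentation does not matter
        scores.setdefault(pair_region(lo, hi, boundary), []).append(1.0 if ok else 0.0)
    return {region: mean(vals) for region, vals in scores.items()}
```

With a boundary of, say, 6.2, both pair 5–7 and pair 6–8 count as boundary pairs under this rule.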

Fig. 9. Mean discrimination accuracy for item pairs positioned on the left endpoint (most closed), on the boundary, within category and right endpoint (most open) collapsed for both handshape continua; bars represent standard error.

In the deaf group, within-subjects contrasts indicated significant quadratic (F(1,27) = 9.41, p = .005, ηp² = .26) and cubic trends (F(1,27) = 19.62, p < .001, ηp² = .42). Pairwise comparisons (Bonferroni) showed that handshape discrimination was significantly more accurate at the boundary than within category (p < .001) and than at the right endpoint (pair 9–11) (p = .006). Accuracy at the boundary was only marginally better than at the left endpoint (pair 1–3) (p = .08), and the difference in discrimination accuracy between the two endpoints did not reach significance (p = 1). Hearing non-signers showed a significant quadratic trend in discrimination accuracy across the four regions (F(1,27) = 13.69, p = .001, ηp² = .38). They showed no difference in discrimination accuracy between boundary and left-endpoint pairs (p = .63) but were more accurate on boundary pairs than on within-category pairs (p = .02) and right-endpoint pairs (p = .002). The difference in discrimination accuracy between the endpoints did not reach significance (p = .96). Although discrimination accuracy peaked at the category boundary relative to within-category pairs in both groups, the relatively high accuracy for the left-endpoint pair (pair 1–3), where the fingers were touching vs. not touching, together with the overall high accuracy, weakened the CP effect.
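Bonferroni adjustment multiplies each unadjusted p-value by the number of comparisons and caps the result at 1, which is why an adjusted value of exactly p = 1 can be reported. The sketch below assumes six comparisons, i.e., all pairs among the four regions, so any unadjusted p-value of 1/6 or more becomes 1. Names are hypothetical, and no data from this study are used.

```python
from itertools import combinations

REGIONS = ("left endpoint", "within category", "boundary", "right endpoint")
PAIRS = list(combinations(REGIONS, 2))  # 6 pairwise comparisons among 4 regions


def bonferroni(raw_p):
    """Multiply each unadjusted p-value by the number of comparisons, capped at 1."""
    m = len(raw_p)
    return {pair: min(1.0, p * m) for pair, p in raw_p.items()}
```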

We present the full discrimination function for all handshape pairs on both continua in Figures 10A and 10B. The graphs suggest that a psychophysical effect occurred on the /flat-O/–/flat-C/ continuum: deaf and hearing perceivers displayed high discrimination accuracy for the endpoint pair in which the fingers were touching vs. minimally apart (pair 1–3) (Figure 10A). Accuracy in both groups declined towards the more open endpoint, where the thumb and fingers were farther apart. Despite this psychophysical effect, the discrimination peak was preserved when discrimination was contrasted across the four regions. No such pattern emerged for the /S/–/C/ continuum (Figure 10B). The vertical dotted line marks the approximate boundary on each continuum; it coincided with the overall discrimination peak only for non-signers and only on the /S/–/C/ continuum.

Fig. 10. Full discrimination function for all handshape pairs: (A) mean discrimination accuracy for item pairs on the /flat-O/–/flat-C/ continuum; and (B) mean discrimination accuracy for item pairs on the /S/–/C/ continuum. Vertical dotted lines represent the perceptual boundary.

Finally, discrimination RTs were analyzed with a 2 (Group: deaf signers vs. hearing non-signers) × 4 (Handshape Membership: left-endpoint items, within-category, boundary, and right-endpoint items) repeated measures ANOVA, with Handshape Type (rectangular vs. cylindrical) entered as a covariate. Handshape Type as a covariate did not affect overall discrimination RTs (F(1,52) = 0.002, p = .96, ηp² < .001); RTs for both handshape continua were therefore collapsed to increase power (Figure 11). Discrimination RTs were influenced by the location of handshape pairs on the continuum and slowed as finger distance increased (main effect of Handshape Membership: F(3,156) = 6.34, p < .001, ηp² = .11). Deaf signers were significantly faster than hearing non-signers in discrimination (main effect of Group: F(1,52) = 4.28, p = .04, ηp² = .08). There was an interaction between Group and Handshape Membership (F(3,156) = 3.54, p = .02, ηp² = .06). Main effects and interactions were followed up separately for each group.

Fig. 11. Mean discrimination reaction times (RTs) for item pairs positioned on the left endpoint (most closed), on the boundary, within category and right endpoint (most open) collapsed for both handshape continua; bars represent standard error.

Deaf signers’ RTs were influenced by the position of the handshape pair on the continuum (main effect of Handshape Membership: F(3,78) = 7.48, p < .001, ηp² = .22). There was a significant linear trend in discrimination RTs (F(1,26) = 12.25, p = .002, ηp² = .32), suggesting that deaf signers were fastest at discriminating the left-endpoint pair (pair 1–3) and progressively slowed as the distance between the selected fingers increased. Pairwise comparisons (Bonferroni) revealed that deaf signers discriminated significantly faster at the left endpoint (pair 1–3) than on within-category pairs (p = .04) and faster at the left endpoint than at the right endpoint (pair 9–11) (p = .05); no other contrasts were significant. In contrast, hearing non-signers’ discrimination speed was unaffected by Handshape Membership (F(3,81) = 1.31, p = .27, ηp² = .05), and no interactions were found, so no further pairwise comparisons were conducted for this group. To sum up, discrimination was more accurate for pairs straddling the boundary than for within-category pairs in both groups. However, deaf signers’ RTs reflect varying levels of sensitivity to handshape changes, perhaps due to sign language experience, unlike those of hearing non-signers, whose RTs remained unaffected.

4. Discussion

The results showed that deaf signers and hearing non-signers both exhibit similar binary categorization of handling handshapes and display similar perceptual boundary placement. The outcome of the handshape identification task was consistent with earlier studies that found a similar sigmoidal function in deaf ASL signers and hearing non-signers (Baker et al., 2005; Emmorey et al., 2003; Lane, Boyes-Braem, & Bellugi, 1976). In line with previous results, our findings suggest that linguistic experience does not mediate identification of visual features for the purpose of binary handshape categorization. Our results extend this finding to handling handshapes in BSL, as deaf and hearing perceivers both employed visual perceptual strategies to assign handling handshapes to binary categories.

Assignment to handshape endpoints was hardest at the perceptual boundaries, where reaction times for handshape categorization increased significantly in both groups compared with categorization elsewhere on the continua. However, deaf perceivers exhibited a stronger category bias than hearing perceivers, as they were slower than hearing non-signers to categorize handshapes at the perceptual boundary (a marginally significant difference that appeared to drive the Group × Handshape Membership interaction). The identification RTs are in line with our predictions and with previous results on the perception of voice onset time (Pisoni, 1973; Studdert-Kennedy et al., 1970), where perceivers were slowest at the phonetic boundary but faster for other within-category stimuli. In support of our hypothesis and previous studies, we found that decisions about handshape similarity were faster for same-category handshapes than for handshapes straddling a category boundary. Hearing non-signers also showed this effect, but to a lesser extent. This suggests that sign language experience places additional demands on perceptual processing, as evident from longer processing times in cross-boundary conflict resolution in handshape identification.

Discrimination accuracy results indicate that deaf signers and hearing non-signers were more accurate at the category boundary than within categories for both handshape continua. Within-category discrimination accuracy remained relatively high in both groups, suggesting that despite their perceptual sensitivity to category boundaries, deaf and hearing perceivers attended to the gradient aperture changes. Thus, contrary to our expectation that only deaf signers might exhibit categorical perception due to linguistic categorization, we found that both groups, regardless of linguistic experience, perceived the handshape continua somewhat categorically. This finding suggests that perceptual handshape discrimination was not influenced by linguistic representations in the BSL group, and that linguistic experience alone is neither necessary nor sufficient to give rise to traditional CP (Beale & Keil, 1995; Bornstein & Korda, 1984; Emmorey et al., 2003; Gerrits & Schouten, 2004; Özgen & Davies, 1998). Although this study did not demonstrate the traditional CP effect previously reported for other visual or auditory stimuli, the discrimination data fit broadly with previous sign language CP studies reporting overall good discrimination of handshapes (Baker et al., 2005; Best et al., 2010; Emmorey et al., 2003).

The increased discrimination accuracy for handshapes straddling category boundaries has previously been attributed to the perceived contrast between phoneme categories in speech (Liberman et al., 1957, 1961) or in lexical signs in ASL (Baker et al., 2005; Emmorey & Herzig, 2003). Baker et al. (2005) observed improved accuracy at the boundary relative to within category for the [5]–[flat-O] and [B]–[A] handshape continua, but only in deaf ASL signers; hearing non-signers discriminated equally well between all handshape pairs. However, the authors did not find this pattern for the [5]–[S] handshape continuum, where the discrimination peak was diminished, possibly by a third phonemic handshape with a medium distance between the thumb and fingers, e.g., the [claw] handshape. As in Baker et al., the above-chance discrimination in the current study may have been due to a third handshape, or several handshapes, depicting the handling of medium-size objects. This warrants further investigation.

In the ASL sign say-no-to, /N/ handshape variation is allophonic: the thumb, index, and middle finger are selected, and the metacarpal joint is specified. Handshapes along the open /N/ to closed /N/ continuum were not perceived categorically, as discrimination was fairly stable across this continuum (Emmorey et al., 2003). The flattish /O/ and /C/ handling handshapes in the present study were similar to the handshape continuum in ASL say-no-to, except that in the flattish /C/ handshape examined here, four fingers, instead of two, are selected. In contrast with Emmorey et al., we found that these handshapes were not perceived as equally good variants, as the discrimination function declined as handshape aperture increased. However, non-categorical perception need not be strictly continuous. Perception is continuous when discrimination rates remain more or less constant across the continuum, as observed for allophonic handshapes in the ASL sign say-no-to. In non-categorical perception, discrimination ability may increase or decrease at various points along the continuum without a peak at the boundary, or it may be better at one endpoint than at the other, as was the case for handling handshapes in the present study. At this point, it cannot be assumed that the aperture variation in the handling handshapes examined here is allophonic, as more psycholinguistic evidence is needed to support such a claim.

Furthermore, ideal CP has rarely been observed in sign languages, because overall discrimination tends to be more accurate than for speech sounds, which could be an important modality effect. Good within-category discrimination has been observed in the perception of sonorant vowels, perhaps due to greater variation in the articulation of vowels than of consonants (Macmillan, Kaplan, & Creelman, 1977; Massaro, 1987). Thus, Massaro’s (1987) term ‘categorical partition’, rather than ‘categorical perception’, more appropriately describes the above-chance within-category performance found with vowel sounds, and we suggest that ‘partition’ may also be a better way of thinking about categoricity in handling handshapes. In addition, the perceptual partitioning of thumb–finger distance in handling handshapes in the present study is similar to the perceptual patterns found for vowel durations (Bastian et al., 1961) or the affricate/fricative consonant distinction (Ferrero et al., 1982; Rosen & Howell, 1987). Although we do not wish to claim that handling handshapes are akin to vowels, or that handling handshape properties are akin to certain vowel properties, we suggest that the observed similarities in perceptual patterns for handshapes in lexical signs, vowels, and the handling handshapes examined here demonstrate that gradience is an essential characteristic of language. Signers’ visual processing faculties develop to manage gradience as a unique aspect of less lexicalized constructions in sign language, such as handling constructions.

The perceptual features of handling handshapes influenced their discriminability, suggesting that features such as contact vs. no contact between the thumb and fingers (items 1–3) are salient visual characteristics that both deaf and hearing perceivers use to guide discrimination. Examining the variation in perceptual patterns and processing latencies, rather than whether or not handshapes are perceived categorically, might be a more appropriate way to determine how language experience influences visual processing; we leave this for future research. To further explore the extent to which sign language experience influences comprehension and production of depicting forms, a follow-up study will examine how graspable object size is expressed and interpreted in handling constructions in BSL and in gesture (Sevcikova, 2013).

Discrimination RTs further revealed important group differences in sensitivity to handshape membership and category boundaries. Deaf BSL signers’ RTs were influenced by the position of the handshape pair on the continuum, whereas non-signers’ RTs were unaffected on both handshape continua. Deaf signers discriminated the left-endpoint pair (fingers touching vs. minimally apart) faster than within-category pairs and right-endpoint pairs, perhaps because /flat-O/ with fingers touching vs. /flat-C/ with fingers apart might mark a meaningful distinction in BSL between handling very thin objects, such as a credit card or paper, and thicker objects, such as books. RTs slowed as handshape aperture increased, suggesting that handshapes with larger apertures were more difficult to tell apart, but only for deaf signers. Variability in the aperture of handling handshapes affected RT performance in the identification and discrimination tasks in a way that suggests a gradient structure, with a handshape prototype grounding the category, because other handshape variants within a category were not perceptually discarded.

The current findings provide insights into how variability in the linguistic signal is managed during comprehension of signs. We showed that in addition to the apparent binary perceptual bias (fingers touching vs. fingers apart), gradient handshape variations were not readily discarded in perceptual judgments by deaf perceivers and thus influenced handshape processing. Perceivers did not treat same-category members as equivalent candidates. Processing times further revealed that the deaf signers’ perceptual systems transformed the relatively linear visual signals into non-linear internal representations. The continuous difference in handshape aperture was slightly de-emphasized for within-category pairs but accentuated for across-category pairs. Thus, we have demonstrated that native exposure to sign language offers visual categorization expertise that can alter perceptual sensitivities compared to those without any sign language experience.

The present results fit with theories of graded category structure, such as exemplar theories, in which categories are built around a central prototype or exemplar and categorization reflects central tendencies (Rosch, 1975). The perceptual patterns found in the present study do not support the argument that categories are represented by their boundaries and that all category members are perceived as equal. Cognitive/usage-based linguists have long debated the idea that linguistic categories are internally structured, with best-example referents as central exemplars and other members on the periphery (Lakoff, 1987; Rosch, 1973; Rosch & Mervis, 1975). Similar structures have been proposed at the sublexical level in exemplar-based theories of phonology (Johnson, 1997; Pierrehumbert, 2001). If signers store best examples of a handshape category, then handshape variation is not ignored but is used to shape perceptual processing. Other approaches, e.g., traditional generative approaches or feature detection theories, do not readily account for the patterns observed in the current study, as perceivers did not discard variability to uniquely identify an idealized handshape token. Thus, the accuracy and RT results support the idea that the architecture of handling handshape categories is graded (i.e., not all members within a category are perceived as identical).

The current findings suggest that direct comparisons between the perception of handshapes in lexical signs (Baker et al., 2005; Emmorey et al., 2003) and in less lexicalized handling constructions need to be made with caution. In less lexicalized and less conventionalized constructions, greater variation of form is permitted, and subtle changes in form are more noticeable because of the semantic values associated with each handshape token. Handling handshapes also appear to differ from handshapes in size/shape DCs (Emmorey & Herzig, 2003), where deaf ASL signers, unlike hearing non-signers, interpreted gradient handshape variations depicting object sizes as categorical expressions. The similar discrimination patterns for handling handshapes in signers and non-signers could indicate a perceived relationship between handshape size and magnitude. This raises the question of whether the hearing non-signing group’s experience with co-speech gesture, for example, is sufficient to shape handshape categorization in a manner similar to sign language experience. Experience with gesture develops visual categorization skills, as demonstrated by deaf children with limited language input who introduce discrete handshape systems into their homesign communication (Goldin-Meadow, Mylander, & Butcher, 1995). It is likely that this kind of experience extends beyond the lexicon and generalizes to handling constructions. Nevertheless, the robust variation, weaker CP effect, and response latencies observed for handling handshapes in deaf BSL signers suggest that handling handshapes are somewhat conventionalized in BSL.

5. Summary

The findings of the current study suggest that linguistic experience alone is not sufficient to mediate handshape feature identification for the purpose of binary handshape categorization, as deaf signers and hearing non-signers both categorized handshapes on the basis of salient properties, such as finger distance or finger contact. Handling handshapes occur in co-speech gestures and represent familiar visual percepts even for perceivers with no sign language experience. We suggest that, particularly in the case of hearing non-signers, general conceptual processes, e.g., magnitude or object size processing or grasping judgments, could bias handshape perception. More crucially, the sign language system places more specific demands on perceptual processing, as evidenced by longer processing times in cross-boundary conflict resolution in handshape categorization. The perceptual discontinuities and differences in response latencies between deaf and hearing perceivers together suggest that handling handshapes are, to some extent, entrenched and conventionalized in BSL. However, deaf signers remained attuned to fine aperture changes in handling handshapes, suggesting that gradience is a unique and perhaps necessary aspect of less lexicalized depicting constructions. This makes handling handshapes rather different from handshapes in lexical signs or entity handshapes, which tend to be discrete in nature. Furthermore, handshape variability affected processing latencies in a way that suggests a gradient structure, with a handshape exemplar grounding the category. Perceptual categorization of handling handshapes cannot be attributed solely to linguistic processing. However, exposure to sign language appears, at least to some extent, to modulate processing effort when perceiving handshapes or hand actions.
Language structure is dynamic because the representation of meaning from linguistic input includes flexible perceptual representations rather than rigid, mechanical combinations of discrete components of meaning (Barsalou, 1999; Glenberg, 1997; Langacker, 1987). In summary, the study has shown that the perceptual capacities of deaf BSL signers can be refined by sign language experience, and these findings contribute to an understanding of the extent to which sign language affects visual perception. These capacities provide the basis for the perception and processing of other aspects of sign language. Overall, the study provides important insights into the closely knit relationship between natural, sensorimotor experience and experience originating in linguistic conventions and practices.

APPENDIX

Fig. 12. Illustration of handshapes and their codes used in the text.

table 2. Individual category boundaries obtained in the identification task