Introduction

Music has an ability to elicit emotions and to activate emotion-related brain regions (Juslin & Laukka, 2003; Escoffier et al. 2013). Darwin (1871) proposed that this function of music probably arises because of its ability to tap into an innate, pre-existing ‘musical protolanguage’ system of the brain that arose in the course of primate evolution. As expanded upon by later researchers (Fitch, 2006; Masataka, 2009), this protolanguage in turn permitted communication of basic social information, for example establishing social structure or infant–mother relationships, even prior to the evolution of formal linguistic taxonomies. Musical protolanguage theory further proposes that language and music developed formally and tonally first (Jespersen, 1922; Wray, 1998; Arbib, 2005, 2008), with semantics (word meaning) coming later, suggesting that deficits in perception of language form (thought form) and protolanguage (music and emotion) perception would be inter-related.

Recently, it has been demonstrated that individuals can have isolated deficits in musical ability, a condition termed ‘congenital amusia’ (or ‘tone deafness’), and defined as an impairment in melody perception and production that cannot be explained by hearing loss, brain damage, intellectual deficiencies or lack of music exposure (Peretz, 2013). Amusia is commonly measured by the Montreal Battery for Evaluation of Amusia (MBEA; Peretz et al. 2003), a battery that assesses processing of complex musical phrases. The MBEA assesses perceptual musical disorders; amusia is operationalized both narrowly, as melodic impairment only (Thompson et al. 2012; Peretz, 2013), and broadly/globally, also incorporating deficits in rhythm and working memory (Peretz et al. 2003). We are aware of one previous report (Hatada et al. 2013) of the MBEA in schizophrenia, finding a significant deficit in Japanese-speaking patients, highlighted by a 62% rate of amusia.

Although impairments in thought form, such as derailment, tangentiality, incoherence, illogicality and circumstantiality (Rodriguez-Ferrera et al. 2001; Sans-Sansa et al. 2013), have been documented in schizophrenia since at least the time of Bleuler (1950), impairments in auditory emotion recognition (AER) and broader aspects of protolanguage perception have only been appreciated more recently (Ross et al. 2001). Moreover, prominent sensory contributions to these deficits have been increasingly recognized over the past few years (Leitman et al. 2010; Gold et al. 2012; Kantrowitz et al. 2013, 2014).

One of the best-established and most pernicious aspects of schizophrenia is an impairment of social cognition (Green & Leitman, 2008), including the ability to detect emotion from vocal information (Leentjens et al. 1998; Edwards et al. 2002; Leitman et al. 2005, 2010; Bozikas et al. 2006; Hoekert et al. 2007; Bach et al. 2009a, b; Gold et al. 2012; Yang et al. 2012; Pinheiro et al. 2013). For example, it has been proposed that deficits in social cognition mediate effects of neurocognitive impairments on ability to engage in competitive employment (Combs et al. 2011; Luedtke et al. 2012).

Over the past several years, the role of auditory dysfunction as a contributor to social cognitive impairment has become increasingly appreciated. Schizophrenia patients show impairments not only in tone matching (Strous et al. 1995) but also in generation of early auditory event-related potentials (ERPs) such as mismatch negativity (MMN) (Umbricht & Krljes, 2005; Light et al. 2007; Wynn et al. 2010; Friedman et al. 2012). Deficits in both tone matching (Gold et al. 2012) and MMN (Jahshan et al. 2013) contribute strongly to impaired AER ability, consistent with sensory-based contributions to impaired AER.

Given the hypothesized relationship between the perception of complex musical phrases, language form and AER, the MBEA may also be useful for investigations of protolinguistic competence. For example, one recent study evaluated individuals with congenital amusia who were otherwise without psychiatric or neurological disorder (Thompson et al. 2012). In that study, amusic individuals showed highly significant AER deficits, despite having otherwise intact neurocognitive function.

The concept of amusia, as assessed by the MBEA, goes beyond simple tone matching by using complex musical phrases that we hypothesize are better matches of complex emotional speech than simple tone matching. To assess this hypothesis, we have incorporated measures of both tone matching and general neurocognitive impairment, along with the MBEA, to evaluate relative contribution of these measures to the well-described impairments of AER in schizophrenia.

Musical protolanguage theory also predicts shared processing of music and language form, as supported by recent neuroimaging studies (Sammler et al. 2009, 2013; Asaridou & McQueen, 2013). We used the Disorganization (Cognition) factor (Lindenmayer et al. 1994) of the Positive and Negative Syndrome Scale (PANSS; Kay et al. 1987) to obtain an operationalized measure of formal language dysfunction. This factor was chosen because of its inclusion of the language-specific items conceptual disorganization (P2) and abstract thinking (N5). We predicted that correlations between the MBEA and language would remain significant after control for cognitive ability.

Finally, we have previously demonstrated (Leitman et al. 2010) that, when identifying emotion based upon tone of voice, patients tend to misidentify not only the intended emotion but also the intended strength of characterization, which we term auditory emotion intensity recognition (AEIR). In particular, patients differentiate less between weak and strong portrayals of emotion, primarily because of overestimation of the intended strength of weakly portrayed emotions. As with impairment in emotion recognition itself, AEIR deficits correlate with impaired early auditory processing ability, although their relationship with musical processing has not been evaluated. Therefore, as with AER, the present study evaluated substrates of impaired AEIR in schizophrenia.

Method

Subjects

Subjects consisted of 31 medicated patients recruited from long-term in-patient (n = 12) and supervised residential sites (n = 19) and 44 healthy volunteers, of whom 16 performed the MBEA only. All subjects gave their signed informed consent to participate in the study, following a full description of the study procedures.

Patients met DSM-IV-TR criteria for a diagnosis of schizophrenia (n = 23) or schizo-affective disorder (n = 8), with no significant between-diagnosis differences on auditory tasks (p = 0.8). We excluded controls with a history of an Axis I psychiatric disorder, as defined by the SCID (First et al. 1997). Patients and controls were excluded if they had any neurological or auditory disorders noted on their medical history or in prior records, or for alcohol or substance dependence within the past 6 months and/or abuse within the past month (First et al. 1997).

In addition, a subsample of subjects completed the AER task and the PANSS (Kay et al. 1987; Lindenmayer et al. 1994), with the Disorganization (Cognition) factor used as a proxy for language ability. The Measurement and Treatment Research to Improve Cognition in Schizophrenia (MATRICS) Consensus Cognitive Battery (MCCB; Nuechterlein & Green, 2006) was used to assess general cognition. The Social Cognition domain was not included. Data in the text are given as means ± standard deviation (s.d.). Patients were receiving a mean antipsychotic dose of 774 ± 565 chlorpromazine equivalents (Woods, 2003). Demographics, behavioral ratings and scores for individual tasks are presented in Table 1.

Table 1. Study demographics

SES, Socio-economic status (measured by the four-factor Hollingshead scale); MCCB, MATRICS Consensus Cognitive Battery; PANSS, Positive and Negative Syndrome Scale (Lindenmayer et al. 1994).

Values given as mean ± standard deviation (with number in parentheses).

a p < 0.05 on Mann–Whitney for categorical values and independent-sample t tests for continuous values.

Auditory tasks

All auditory tasks were presented on a CD player at a sound level that was comfortable for each listener in a sound-attenuated room. Subjects worked 1:1 with an experienced tester, and were encouraged to take breaks when needed.

MBEA

The MBEA is described fully in Peretz et al. (2003). In brief, it contains six subtests across three domains: Melody (three tests: scale, contour, interval) and Rhythm (two tests: rhythm, meter) organization and Memory (one test: incidental music memory recognition). Two to four examples were provided preceding each task to ensure that the subject clearly understood the task instructions (see online Supplementary Material). These examples were repeated if necessary.

All six tests use the same pool of 30 novel musical phrases that were composed according to the rules of the Western tonal system and written with sufficient complexity to guarantee their processing as meaningful structures rather than as simple sequences of tones. The selections last a mean of 5.1 s in all but the metric test, in which the stimuli last about twice as long (mean = 11 s).

Melody and rhythm tests require subjects to perform a same–different classification task in which pairs are either the same or have one note that differs in pitch or duration. The memory task is an incidental-memory recognition task in which subjects are asked whether they have previously heard the selection during the task. Amusia was operationalized using published norms (Peretz, 2013) as a score of < 73.3% correct on the scale subtest.
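The cut-off rule can be written out as a short sketch. This is illustrative only: the function names are hypothetical, and the default of 30 trials per subtest is an assumption made for the example, not a value stated in this report.

```python
# Cut-off quoted in the text: a scale-subtest score below 73.3% counts as amusia.
AMUSIA_CUTOFF_PERCENT = 73.3


def percent_correct(n_correct: int, n_trials: int) -> float:
    """Convert a raw subtest score to percentage correct."""
    if n_trials <= 0 or not 0 <= n_correct <= n_trials:
        raise ValueError("need n_trials > 0 and 0 <= n_correct <= n_trials")
    return 100.0 * n_correct / n_trials


def is_amusic(n_correct: int, n_trials: int = 30) -> bool:
    """True when the scale-subtest score falls below the cut-off.

    n_trials = 30 is an illustrative default (assumption), not taken from the paper.
    """
    return percent_correct(n_correct, n_trials) < AMUSIA_CUTOFF_PERCENT
```

Under the assumed 30-trial subtest, 21 correct (70.0%) falls below the cut-off, whereas 22 correct (73.3̅%) does not.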

AER and AEIR

AER was assessed using a new battery of 248 stimuli (Laukka & Elfenbein, 2012). To refine the task for future use, analysis focused on the subset (n = 137) of items in which both groups scored above 35% on emotional identification [1.5 s.d. above chance performance (20%)]. Unlike the previous battery, which used actors who spoke with British-accented English (Gold et al. 2012), stimuli in the new battery were spoken by native American-English speakers and were scored based upon the speaker's intended emotion (happy, sad, angry, fear or neutral) and intensity level (high, medium and low). The verbal material consisted of short emotionally neutral phrases (e.g. ‘Let me tell you something’).
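The item-selection rule can be sketched as a simple filter. The data structure, item identifiers and accuracy values below are hypothetical and serve only to illustrate the criterion that both groups must exceed 35% correct on an item, where chance with five response options is 20%.

```python
CHANCE = 1 / 5     # five response options: happy, sad, angry, fear, neutral
THRESHOLD = 0.35   # retention criterion quoted in the text


def retained_items(accuracy_by_item):
    """Return ids of items on which every group scored above THRESHOLD.

    accuracy_by_item maps item id -> {group name: proportion correct}.
    """
    return [
        item
        for item, by_group in accuracy_by_item.items()
        if all(acc > THRESHOLD for acc in by_group.values())
    ]


# Hypothetical accuracies, for illustration only.
example = {
    "item_001": {"patients": 0.52, "controls": 0.81},
    "item_002": {"patients": 0.30, "controls": 0.66},  # dropped: patients not above 0.35
    "item_003": {"patients": 0.36, "controls": 0.35},  # dropped: controls not above 0.35
}
print(retained_items(example))
```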

AEIR was rated on a scale from 1 (very low intensity) to 10 (very high intensity) for weak, medium and strong emotional portrayals independently. AEIR discrimination was defined as the mean rating for high-intensity items minus the mean rating for low-intensity items.
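As a minimal sketch of this definition, the discrimination score for one subject can be computed from a list of (intended intensity, rating) pairs. The input format and function name are assumptions made for illustration.

```python
from statistics import mean


def aeir_discrimination(ratings):
    """Mean rating for high-intensity items minus mean rating for low-intensity items.

    ratings: iterable of (intended_intensity, rating) pairs, where
    intended_intensity is "low", "medium" or "high" and rating is 1..10.
    Medium-intensity items do not enter the score.
    """
    ratings = list(ratings)
    high = [r for level, r in ratings if level == "high"]
    low = [r for level, r in ratings if level == "low"]
    if not high or not low:
        raise ValueError("need at least one high- and one low-intensity item")
    return mean(high) - mean(low)
```

A larger score indicates that the listener separates strong from weak portrayals more clearly; a score near zero indicates little differentiation.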

Tone-matching task

Pitch processing was assessed using a simple tone-matching task (Leitman et al. 2010). This task consists of pairs of 100-ms tones in series, with 500-ms intertone intervals. Within each pair, tones are either identical or differ in frequency (Hz) by a specified amount in each block (2.5, 5, 10, 20 or 50%). In each block, 12 of the pairs are identical and 14 are dissimilar. Tones are derived from three reference frequencies (500, 1000 and 2000 Hz) to avoid learning effects. In all, the test consists of five blocks of 26 pairs of tones.
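The block structure can be sketched as follows. This is not the task software: the random assignment of reference frequencies, the shuffling, and the choice to raise (rather than lower) the second tone on dissimilar pairs are assumptions made for the example.

```python
import random

REFERENCE_HZ = (500, 1000, 2000)
DIFF_PERCENT = (2.5, 5, 10, 20, 50)  # one frequency difference per block
N_SAME, N_DIFF = 12, 14              # pairs per block


def build_block(diff_percent, rng):
    """Return 26 (first_hz, second_hz, is_same) pairs in shuffled order."""
    pairs = []
    for i in range(N_SAME + N_DIFF):
        base = rng.choice(REFERENCE_HZ)
        same = i < N_SAME
        # Assumption: the second tone is raised; the text does not give the direction.
        second = base if same else base * (1 + diff_percent / 100)
        pairs.append((base, second, same))
    rng.shuffle(pairs)
    return pairs


def build_task(seed=0):
    """Return five blocks, one per frequency difference."""
    rng = random.Random(seed)
    return [build_block(p, rng) for p in DIFF_PERCENT]
```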

Statistical analyses

Demographics for the two groups were compared by a Mann–Whitney test or Fisher's exact test for categorical values and by independent-sample t tests for continuous values. Between-group effects were assessed using a multivariate analysis of variance (MANOVA) or a repeated-measure ANOVA (rmANOVA), with stimulus type as a within-subject factor and diagnostic group as a between-subject factor, and follow-up univariate ANOVAs or independent-sample t tests were conducted.

Relationships among measures were determined by Pearson correlations and multivariate linear regression. Within the linear regression, partial correlations were used to assess significance of association. Measures of sensitivity (d′) and bias (criterion c) (Stanislaw & Todorov, 1999) were calculated for same–different trials of the MBEA. Two-tailed statistics were used throughout with preset α level of significance of p < 0.05.
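For readers unfamiliar with these measures, the sketch below uses the standard yes/no signal detection formulas, d′ = z(H) − z(F) and c = −[z(H) + z(F)]/2, treating a ‘different’ response on a ‘different’ trial as a hit and a ‘different’ response on a ‘same’ trial as a false alarm. The mapping of responses to hits and false alarms, the log-linear correction for extreme rates and all function names are assumptions; the exact computation used in the study may differ.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def corrected_rates(hits, misses, false_alarms, correct_rejections):
    """Hit and false-alarm rates with a log-linear correction.

    Adding 0.5 to each count (assumption) keeps the rates away from 0 and 1,
    where the z-transform is undefined.
    """
    h = (hits + 0.5) / (hits + misses + 1)
    f = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    return h, f


def d_prime(h, f):
    """Sensitivity: distance between the signal and noise distributions."""
    return _z(h) - _z(f)


def criterion_c(h, f):
    """Bias: positive values indicate a tendency to respond 'same'."""
    return -0.5 * (_z(h) + _z(f))
```

With this convention, a listener who rarely responds ‘different’ has low hit and false-alarm rates and therefore a positive c, which corresponds to a bias toward responding ‘same’.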

Results

Between-group analysis

MBEA

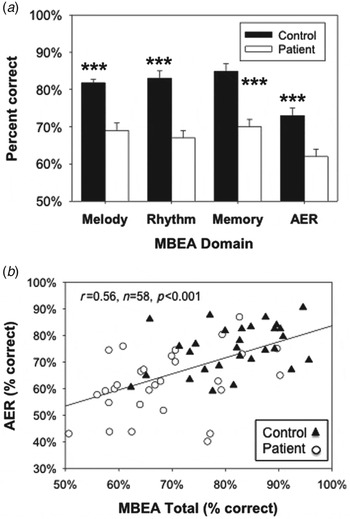

Patients showed a highly significant reduction in MBEA total score relative to all controls (F 1,77 = 49.5, p < 0.001), which remained highly significant after controlling for years of education, gender and age (F 1,70 = 12.6, p = 0.001). Equivalent deficits were seen across the individual melody, rhythm and memory domains (Fig. 1 a), corresponding to a large effect-size impairment (d = 1.30).

Fig. 1. (a) Bar graph (mean ± s.e.m.) of overall percentage correct scores for Montreal Battery for Evaluation of Amusia (MBEA) domains and auditory emotion recognition (AER). (b) Scatter plot of MBEA versus AER. Correlations remained significant after controlling for group status (partial r = 0.37, p = 0.004). *** p < 0.001 on independent-samples t test.

Given this large difference, patterns of impairment were further analyzed by comparing accuracy on ‘same’ and ‘different’ trials. The groups had relatively high rates of correct response on ‘same’ trials (controls: 83.9 ± 11.1%; patients: 80.3 ± 15.0%) but significantly lower accuracy in ‘different’ trials (controls: 80.4 ± 13.5%; patients: 58.3 ± 20.6%), yielding a highly significant group × error type interaction (F 1,72 = 34.8, p < 0.001). This pattern suggests that, like controls, patients adopted a strategy of saying ‘same’ unless they accumulated evidence of a difference. This was confirmed by signal detection analyses showing highly significant between-group differences in sensitivity (controls: d′ = 2.9 ± 0.7; patients: d′ = 1.9 ± 0.7; t 72 = 5.3, p < 0.0001) and bias (t 72 = 2.9, p = 0.004), also yielding a highly significant group × signal interaction (F 1,72 = 29.8, p < 0.001), suggesting that patients were actively engaged in the task but mainly had reduced sensitivity to between-stimulus differences in the dimensions tested by the MBEA.

MBEA and AER

As in previous studies, patients showed highly significant impairment in detecting intended emotion (F 1,60 = 27.1, p < 0.001; Fig. 1 a), with no significant group × emotion interaction (F 4,53 = 0.7, p = 0.58). Patients also showed significant deficits in neurocognitive function, as reflected in the MCCB composite score (F 1,58 = 56.6, p < 0.001), and in early auditory function, as reflected in tone-matching (F 1,60 = 18.9, p < 0.001) (Table 1).

As predicted, a highly significant correlation was observed between AER and MBEA total scores (r = 0.56, n = 58, p < 0.001; Fig. 1 b). Significant independent correlations were also observed between the melody (r = 0.55, n = 58, p < 0.001), rhythm (r = 0.48, n = 58, p < 0.001) and memory (r = 0.44, n = 58, p = 0.001) domains and AER.

Correlations with total scores (partial r = 0.37, p = 0.004) and melody (partial r = 0.37, p = 0.005), but not rhythm (partial r = 0.06, p = 0.67), remained significant after control for group status. Similarly, correlations remained significant after covariation for MCCB (total: partial r = 0.31, p = 0.023; melody: partial r = 0.32, p = 0.019) or tone-matching ability (total: partial r = 0.32, p = 0.018; melody: partial r = 0.31, p = 0.022), suggesting that correlations were also independent of general cognitive and sensory processing deficits. Moreover, when group status, total MBEA and MCCB composite scores were entered simultaneously into a regression versus AER, a significant correlation was observed with MBEA (partial r = 0.28, p = 0.046) but not MCCB (partial r = 0.20, p = 0.16).
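The logic of controlling for a covariate such as group status can be illustrated with the first-order partial correlation, r(xy·z) = (r_xy − r_xz r_yz) / √[(1 − r_xz²)(1 − r_yz²)]. The sketch below is illustrative only: the function names and toy data are hypothetical, and it handles a single covariate, whereas models with several covariates require regression residuals.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_r(x, y, z):
    """Correlation of x and y after removing the linear effect of z."""
    rxy, rxz, ryz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (rxy - rxz * ryz) / sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


# Toy data (hypothetical): two scores that differ between groups
# but are unrelated within each group.
group = [0, 0, 0, 0, 1, 1, 1, 1]
score_a = [1, 2, 1, 2, 5, 6, 5, 6]
score_b = [2, 1, 1, 2, 6, 5, 5, 6]
```

In the toy data the raw correlation between the two scores is high because both differ between groups, whereas the partial correlation controlling for group is essentially zero. A correlation that survives such control, as reported above, therefore cannot be attributed to the group difference alone.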

AEIR

Because the battery included weak, medium and strong portrayals of emotion, it was also possible to evaluate the degree to which patients experienced and differentiated emotion intensity. Patients' overall ratings for stimulus intensity were similar to those of controls across all levels (F 1,56 = 1.9, p = 0.17). Nevertheless, patients differentiated between weak and strong portrayals to a significantly smaller degree than controls (Fig. 2 a), leading to a highly significant group × portrayal strength interaction (F 2,55 = 15.8, p < 0.0001).

Fig. 2. Bar graph (mean ± s.e.m.) of (a) auditory emotion intensity recognition (AEIR) ratings for high and low intended intensity items and (b) AEIR discrimination (mean rating for high-intensity items minus mean rating for low-intensity items). A highly significant group × portrayal strength interaction (F 2,63 = 19.8, p < 0.0001) and a between-group difference in discrimination (t 64 = 4.7, p < 0.001) were seen. (c) Scatter plot of Montreal Battery for Evaluation of Amusia (MBEA) versus AEIR discrimination. Black shading indicates amusia. Correlations remained significant after controlling for group status (partial r = 0.30, p = 0.023). *** p < 0.001 on independent-samples t test.

AEIR discrimination scores, defined as the difference between ratings for strong and weak portrayal by subject, differed significantly between groups (Fig. 2 b; F 1,56 = 17.7, p < 0.001). This difference remained significant when controlling for correct response (F 2,55 = 10.9, p < 0.001), further demonstrating reduced ability to discriminate intended emotional strength in patients.

As with AER, impaired AEIR discrimination correlated significantly with MBEA total score (r = 0.49, n = 58, p < 0.001; Fig. 2 c). The correlation also remained significant after control for group status (partial r = 0.31, p = 0.023). However, unlike AER, AEIR correlated primarily with scores on the rhythm domain of the MBEA (partial r = 0.46, p < 0.001) rather than on the melody domain (partial r = 0.12, p = 0.39) in a stepwise regression. Correlations with the rhythm domain remained significant after controlling for group status (partial r = 0.28, p = 0.037).

Protolanguage

To assess the hypothesis that language form and protolanguage were related over and above general cognition, we performed a stepwise, multivariate regression with the PANSS disorganization factor as the dependent variable, MCCB entered in the first step and MBEA domains in the second step. The melody subscale (partial r = −0.38, p = 0.044) correlated significantly with the disorganization factor, with poor melody recognition scores predicting increased disorganization. By contrast, MCCB scores (overall cognition) correlated only at trend level (partial r = −0.34, p = 0.073), as did musical memory (p = 0.09).

To further test the relationship between language form and protolanguage, we extracted the language-specific items of the PANSS disorganization factor (P2 conceptual disorganization; N5 abstract thinking) to create a more specific language/thought disorder PANSS subscale. When the analysis was repeated using this ‘language’ subscale, melody remained a significant predictor (partial r = −0.43, p = 0.025) whereas the MCCB (partial r = −0.16, p = 0.41) was not predictive (Fig. 3 a). By contrast, neither positive nor negative symptoms correlated significantly with any of the MBEA domains. Correlations between the PANSS language subscale and the melody domain remained significant even using non-parametric (Spearman) correlations (r s = −0.45, n = 29, p = 0.014), suggesting that the relationship was not due to outliers.
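The rationale for the non-parametric check is that Spearman's coefficient is a Pearson correlation computed on ranks, so a single extreme value cannot dominate it. A minimal sketch, with hypothetical function names and tied values given the mean of their ranks, is:

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rs(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))
```

If a Pearson correlation were driven by one or two outlying subjects, the rank-based coefficient would be expected to shrink markedly, which is not what was observed here.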

Fig. 3. Scatter plot of (a) Melody domain versus exploratory Positive and Negative Syndrome Scale (PANSS) language subscale (P2 conceptual disorganization and N5 abstract thinking). (b) Bar graph (mean ± s.e.m.) for auditory emotion recognition (AER) divided by group and amusia classification. *** p < 0.0001, which remained significant after controlling for group status and the MATRICS Consensus Cognitive Battery (MCCB).

Groups by amusia

Based on published cut-offs (Peretz, 2013), 45% of patients and 9% of controls met criteria for amusia. As predicted, even after controlling for education, significant main effects on AER ability were seen for both amusia (F 1,49 = 7.2, p = 0.01) and group (F 1,49 = 7.4, p = 0.009; Fig. 3 b).

Memory domain

Although patients showed reduced musical memory ability versus controls (Fig. 1 a), correlations with AER (partial r = 0.11, p = 0.43) did not remain significant after controlling for group status and the melody domain. Similarly, correlations with AEIR (partial r = 0.35, p = 0.057) did not remain significant after controlling for group status and the rhythm domain. By contrast, impaired memory on the MBEA correlated significantly with overall cognitive ability across groups (r = 0.51, p < 0.001). The correlation, moreover, remained highly significant even after controlling for group status in a stepwise regression (partial r = 0.38, p = 0.003).

Discussion

Although Bleuler (1950) considered sensory processing as an ‘intact simple function’, increasing evidence suggests that sensory processing in schizophrenia is neither simple nor intact. The present study used a test battery, the MBEA, developed specifically for the assessment of musical perceptual deficits, and therefore also useful to probe the underlying musical protolanguage that is known to convey social emotion during human interaction.

As predicted, patients showed deficits across MBEA domains, and these deficits correlated significantly with impaired AER ability. In addition, patients showed a significantly reduced ability to discriminate between intended strengths of emotion portrayal, with a tendency to overestimate the intended strength of weak emotions. Finally, as predicted, patients showed a significant correlation between impairments in melodic processing and impairments in clinician-rated spontaneous language generation, as assessed using the PANSS. These correlations remained significant even after controlling for contributions of general cognitive ability using the MCCB, suggesting a significant association between prosodic and structural aspects of language generation in schizophrenia.

Impaired AER function in schizophrenia patients was most highly correlated with impairments in the processing of melody, similar to recent results in individuals with amusia (Thompson et al. 2012). Furthermore, consistent with these recent findings, we found specific contributions for both ‘amusia’ and patient status to impairments in AER function (Fig. 3 b), even after controlling for education. Nevertheless, a far higher percentage of schizophrenia patients than controls met criteria for amusia, and amusic controls were otherwise neurocognitively normal, suggesting that controls may be more able than schizophrenia patients to compensate for primary deficits in musical ability through the general use of cognitive, problem-solving abilities.

AEIR

Unlike AER correlations with melody impairment, impaired AEIR correlated more specifically with deficits in rhythm. Of note, others have also recently observed that aspects of rhythm are associated with the arousal level of musically expressed emotions (Laukka et al. 2013). As we have found previously (Leitman et al. 2010), patients tended to overestimate the intensity of the intended weak emotions (Fig. 2 b), leading to reduced differentiation of weak and strong portrayals. The majority of emotion batteries do not incorporate items with different intended strength of portrayal, so the substrates of emotion intensity discrimination have been less studied than those of AER itself.

One of the primary ways in which individuals communicate calmness in social situations is by speaking softly and slowly, leading others to understand that a low level of emotionality is being communicated. The correlation between MBEA rhythm domain scores and AEIR discrimination suggests that, in schizophrenia patients, and also in other amusic individuals, such communication may be misperceived, leading to patients feeling that others are speaking with more intensity than is truly intended.

Protolanguage

We also assessed whether deficits in music discrimination would be correlated with other aspects of language processing. In general, thought disorder in schizophrenia (Barrera et al. 2005, 2009) is considered to reflect dysfunction within both frontal and temporal brain regions. Recently, however, imaging studies have suggested that fluent disorganization in patients is most related to left temporal lobe deficits involving Broca and Wernicke's regions, whereas poverty in content of speech is more related to frontal lobe changes and dysfunction (Sans-Sansa et al. 2013). Melody and rhythm, by contrast, are thought to be processed in the Wernicke homologue region of the right temporal cortex (Albouy et al. 2013). The specific correlation we observed between fluent thought disorder and music perception (i.e. between the PANSS language subscale and melodic processing) is thus consistent with correlated bi-temporal involvement in schizophrenia. Given the exploratory nature of the extracted language subscale, this correlation should be verified using a validated scale of language form.

Prevalence of deficit

The rate of amusia is commonly cited as around 4% (Cuddy et al. 2005; Sloboda et al. 2005), although absolute rates of amusia differ based upon cut-off. For example, the online MBEA database (www.brams.umontreal.ca/plab/publications/article/57) reports an amusia rate of 5.2% using the same criteria we used in this report. Others have argued for d′-based scoring (Henry & McAuley, 2010). Regardless of definition used, however, schizophrenia patients showed significantly higher amusia rates than the matched controls, similar to previously published cohorts (Hatada et al. 2013).

Treatment implications

Music therapy was widely used in the 1950s and 1960s for treatment of schizophrenia (Skelly & Haslerud, 1952; Gillis et al. 1958; Weintraub, 1961), but was largely abandoned with budget cuts and increased reliance on medication during the 1970s to 1980s. Despite the current disuse of music therapy in most clinical settings, more recent clinical trials (Bloch et al. 2010; Mossler et al. 2011) continue to show benefit despite a lack of understanding of the mediating effects. Our results suggest that development of schizophrenia is associated with loss of musical ability in general, rather than just loss of specific sensory functions such as tone-matching ability or MMN generation, and that these deficits may contribute not only to AER impairments but also to disturbances in thought and communication.

Given the importance of musical function to perception of emotion, and of emotion perception to social outcome (Kee et al. 2003; Leitman et al. 2005, 2007; Grant & Beck, 2010), our findings provide a potential mechanistic basis for reported efficacy of musical therapy approaches in schizophrenia. The present study suggests that impaired musical ability, over and above impairments in general cognitive ability, underlies impaired social interactional abilities in schizophrenia and thus may represent a crucial target for music-based remediation approaches (Herholz & Zatorre, 2012). Furthermore, the MBEA may be useful in schizophrenia both for identifying subjects who would be likely to benefit from intervention and as a way to monitor response. Compared with medication, music therapy is associated with limited side-effects, and potentially weight loss, and so should be reconsidered as a potential tool for remediation of AER/AEIR and social cognitive impairments in schizophrenia.

In conclusion, this study demonstrates the importance of protolanguage, as measured by music perception, along with language deficits in communicatory and social cognitive disturbances in both schizophrenia and congenital amusia. Furthermore, our results argue for greater screening of patients for amusia and, potentially, for the revival of the study of music therapy in schizophrenia, particularly the study of neuroscientifically based music remediation approaches (Herholz & Zatorre, 2012) that have been shown to improve brain plasticity, including cognition, in older adults and mobility after stroke. Protolanguage dysfunction, including impairments in melody and rhythm perception, remains strongly significant even following covariation for general cognitive ability, and should therefore be considered a separate domain of neurocognitive impairment that is not captured within present cognitive assessment approaches for schizophrenia.

Supplementary material

For supplementary material accompanying this paper, please visit http://dx.doi.org/10.1017/S0033291714000373.

Acknowledgments

Preparation of this manuscript was supported in part by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through grant number UL1 RR024156 and the Dr Joseph E. and Lillian Pisetsky Young Investigator Award for Clinical Research in Serious Mental Illness to J.T.K., and R01 DA03383, P50 MH086385 and R37 MH49334 to D.C.J. We thank N. Gaine for administrative support.

Declaration of Interest

None.