Women also know stuff, but we might not know that from reviewing political science bibliographies. Work by women is far less likely to be cited than similar work by men (Maliniak, Powers, and Walter 2013), and men are particularly unlikely to cite women (Mitchell, Lange, and Brus 2013).

Women are under-cited as well as under-assigned. For example, among the 200 most frequently assigned works in the Open Syllabus Project’s “Politics” section,Footnote 1 only 15 works are authored by at least one woman, whereas 20 are authored by at least one man named Robert. Of the 219 total authors on that list, 204 are men and only 15 (6.8%) are women, a far smaller share than in the discipline as a whole. More rigorous analyses have found that women authors may appear as often in international relations syllabi as they do in the field. However, this may be driven by women being more likely to assign other women, which is indicative of under-assignment by men (Colgan 2015).Footnote 2

Because researchers tend to cite, at least initially, the works assigned to them in coursework (Nexon 2013), the gender gap in citations is exacerbated by under-assignment in syllabi. Repercussions of the citation gap exceed a normative desire for descriptive diversity: decisions about hiring, promotion, tenure, and raises often are informed by citation counts.

Many other explanations are offered for why women are not cited as frequently as men. For example, women are less likely to cite themselves in their own research (Colgan 2015; Maliniak, Powers, and Walter 2013; Mitchell, Lange, and Brus 2013). Another explanation is that scholars tend to be most familiar with work by people within their social networks, which tend to be gendered (Mansbridge 2013).

A third explanation is that assessing gender balance in bibliographies and syllabi can be difficult and tedious.Footnote 3 Determining the percentage of the 200 most-assigned works from the Open Syllabus Project that were by women authors involved researching many unfamiliar names, determining which identified as women, and summing the total number of authors. The process took 20 to 30 minutes. Although this may not seem excessive, those who otherwise might be inclined to assess their gender balance may view this process as an impediment.

This article introduces a web-based tool that I created to help scholars assess the gender balance of their bibliographies and syllabi.Footnote 4 Whereas many scholars have long assessed the gender balance manually, far more have not. This tool makes the process fast and easy for people not already predisposed to manual assessment. It uses RShiny to implement an algorithm that identifies author names, probabilistically codes each author’s gender, and then provides the user with an estimate of the percentage of authors who are women. This process is less accurate than hand-coding, but it is much faster and easier and provides users with a fairly reliable and accurate estimate. For instance, when applied to the 200 most frequently assigned politics texts, the tool identified 211 names and determined that 9.68% were women. This compares with 219 names and 6.8% when hand-coding. Although the tool found fewer authors and a larger percentage of women than was produced by hand-coding, the result was similar, much faster, and a huge improvement over not assessing gender balance at all. The sources of this inaccuracy are discussed in more detail herein.

The next section explains how the tool works and describes in detail how it identifies names and probabilistically codes gender. The section that follows briefly discusses the two most frequently asked questions: (1) What proportion of women should be the goal?; and (2) How should scholars aim to balance their bibliographies and syllabi?

THE GBAT

The GBAT makes estimating diversity quick and easy, to help those who would like to undertake such an assessment but otherwise would not. It works by identifying author names in a document and then estimating the probability that each author is a woman. The tool then aggregates these probabilities to approximate the percentage of women authors in the list. The entire process, from uploading to final estimate, typically takes less than a minute.

The following sections explain the two primary components of the algorithm: identifying likely author names and computing gender probabilities.

IDENTIFYING NAMES

Whereas computers excel at implementing written directions faster and more consistently than humans, many tasks that can be done easily by humans are difficult for computers.Footnote 5 Identifying names as distinct from other non-name words is one such task. For example, consider the last three entries on the top 200 list, which resemble entries in a bibliography or a syllabus:

Power Shift. Matthews, Jessica T. Foreign Affairs.

Counteractive Lobbying. Austen-Smith, David, Wright, John R. American Journal of Political Science.

The True Clash of Civilizations. Inglehart, Ronald, Norris, Pippa. Foreign Policy.

Most humans can quickly identify that this list contains five authors, two of whom are women. Computers do not recognize the nuanced human idea that some words “just look like names” and therefore this intuition does not translate easily into code. Computers can be programmed to identify patterns that resemble names but are seldom as precise as humans.Footnote 6 For that reason, enlisting a computer would not be sensible if a syllabus or a bibliography were actually as short as the previous example. However, if the list of citations were much longer, the computer’s speed and untiring repetition would become useful, even at a slight cost in accuracy relative to human coding. The key is telling a computer what it means that some words “just look like names.” This algorithm works by removing character strings that are unlikely to be names and then by identifying strings of characters that follow patterns that “look like” names.

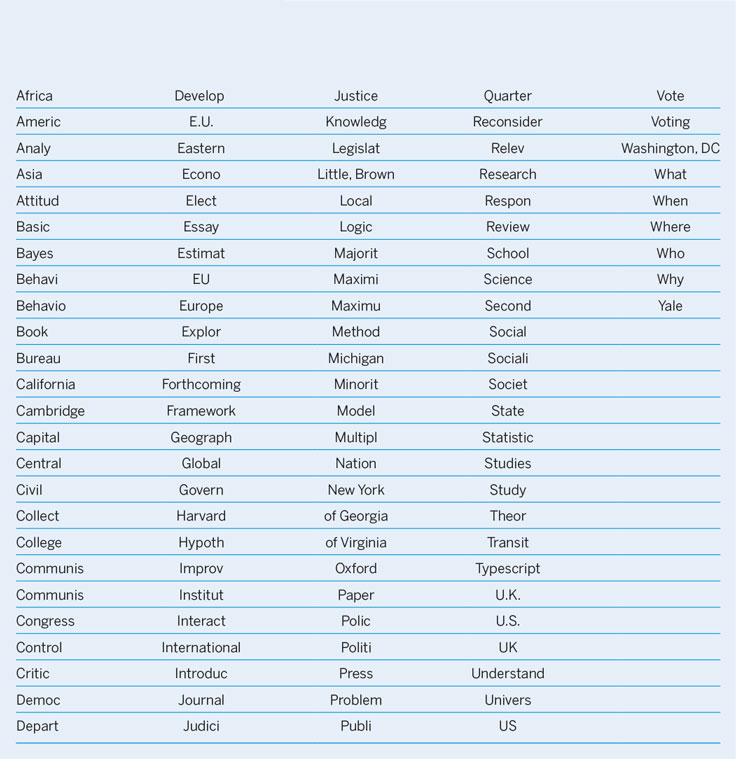

Before identifying names, the algorithm removes words, letters, and symbols that are unlikely to appear in names: most conjunctions and stop words (leaving in “I,” “a,” and “and”); numbers; and words and word stems from a list of common title, journal, and publisher names (table 1). The algorithm replaces these characters with spaces. The following example of a resulting text has fewer words and more empty spaces:

“. Power Shift. Matthews, Jessica T. Affairs. . active ying. Austen-Smith, David, Wright, John R. an cal . . True Clash izations. Inglehart, Ronald, Norris, Pippa. y.”
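The blanking step can be sketched in a few lines. The following Python fragment is an illustration only, not the tool's R code: the lists `STOP_WORDS` and `TITLE_STEMS` and the function name `blank_out_non_names` are hypothetical and heavily abbreviated, standing in for the fuller lists summarized in table 1. What it shows is the one design choice described above: unlikely strings are replaced with spaces rather than deleted, so the surrounding punctuation and spacing survive.

```python
import re

# Hypothetical, heavily abbreviated lists (assumptions for illustration only).
# "I", "a", and "and" are deliberately absent, as described in the text.
STOP_WORDS = ["the", "of", "in", "on", "for"]
TITLE_STEMS = ["Foreign", "Journal", "Politi", "Polic", "Science", "Ameri", "Civil"]


def blank_out_non_names(text: str) -> str:
    """Replace digits, stop words, and title stems with spaces instead of deleting them."""
    # Each digit becomes a space.
    text = re.sub(r"\d", " ", text)
    # Stop words match as whole words only, in any letter case.
    stop_pattern = r"\b(?:" + "|".join(map(re.escape, STOP_WORDS)) + r")\b"
    text = re.sub(stop_pattern, " ", text, flags=re.IGNORECASE)
    # Title stems match as substrings, so "Civilizations" becomes " izations".
    stem_pattern = "|".join(map(re.escape, TITLE_STEMS))
    return re.sub(stem_pattern, " ", text)


entry = "The True Clash of Civilizations. Inglehart, Ronald, Norris, Pippa. Foreign Policy."
cleaned = blank_out_non_names(entry)
print(cleaned)
```

With these abbreviated lists, the author strings "Inglehart, Ronald" and "Norris, Pippa" pass through untouched, while most of the title and journal words are reduced to fragments and extra spaces.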

Table 1 Common Title Words

Stray punctuation marks and repeated spaces are deliberately retained because they make it easier to identify names. This is important because some of the characteristics that allow us to identify that a string of characters “looks like” a name—two or three words in a row all beginning with capital letters, for instance—also are shared by other words in titles and journal names. Removing words, inserting spaces, and retaining punctuation prevents many titles and journal names from falsely being identified as names. From the resulting text, regular expressions and the R package openNLPFootnote 7 can be used to extract a list of probable names.

In the previous example, the tool identifies “Power Shift,” “Matthews, Jessica,” “Austen-Smith, David,” “Wright, John,” “True Clash,” “Inglehart, Ronald,” and “Norris, Pippa” as probable author names. This is good for a first pass; it correctly identifies all five author names and contains only two false positives. With this list of probable author names, the tool moves on to the next stage, probabilistically predicting gender, which also will eliminate many of the false-positive names.
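To make the idea of a pattern that “looks like” a name concrete, here is a minimal Python sketch. It is an assumption-laden stand-in, not the tool's method: the tool relies on regular expressions together with openNLP in R, whereas `NAME_PATTERN` and `probable_names` below are hypothetical names for a single simplified rule (two capitalized words separated by a space or by a comma and a space).

```python
import re

# Assumed pattern: a capitalized (optionally hyphenated) word, then either ", " or a
# single space, then another capitalized word. Single initials such as "T." do not match.
NAME_PATTERN = re.compile(r"\b[A-Z][a-z]+(?:-[A-Z][a-z]+)*,? [A-Z][a-z]+\b")


def probable_names(cleaned_text: str) -> list:
    """Return every non-overlapping substring that matches the name-like pattern."""
    return [match.group(0) for match in NAME_PATTERN.finditer(cleaned_text)]


cleaned = (
    ". Power Shift. Matthews, Jessica T. Affairs. . active ying. "
    "Austen-Smith, David, Wright, John R. an cal . . "
    "True Clash izations. Inglehart, Ronald, Norris, Pippa. y."
)
for candidate in probable_names(cleaned):
    print(candidate)
```

Applied to the cleaned text shown earlier, this rule returns the five authors plus the two false positives “Power Shift” and “True Clash.” A rule this simple would miss names with internal capitals, accents, or particles, which is one reason a sketch like this should not be read as the tool's actual pattern.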

PROBABILISTIC PREDICTION OF GENDER

Most academics likely can quickly identify in this list of probable names that three first names are common among men (i.e., David, John, and Ronald); two first names are typically associated with women (i.e., Jessica and Pippa); and two phrases are not names (i.e., Power Shift and True Clash).Footnote 8 Computers lack the inherent human ability to make these same contextual judgments; fortunately, algorithms have been written to help them do so.

The GBAT predicts an author’s gender from the author’s given name using the genderize.io algorithm, as implemented in the genderizeR package for R (Wais 2015). Unlike other data sources, such as US Social Security Administration data, which include only names that are common in the United States, genderize.io and genderizeR use social-media data. Therefore, they can predict gender for many more names, allowing for greater inclusion. An additional benefit of this approach is that it often screens out the non-names included in the probable-names list. If the algorithm determines that the first word of the non-name term (e.g., “Power” or “True”) is probably not a name because there is insufficient data to predict the gender, the term is omitted from the gender prediction.Footnote 9 The tool then aggregates the name-specific probabilities, producing an overall percentage of authors that are likely to be women.Footnote 10
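The aggregation step can be illustrated with a short Python sketch. Several things here are assumptions rather than facts about the tool: the aggregation is taken to be a simple mean of the name-specific probabilities, the dictionary `P_WOMAN` is a hypothetical stand-in for a name-to-gender lookup, and its values are placeholders chosen for illustration, not output from genderize.io.

```python
from typing import Dict, List, Optional

# Hypothetical lookup of P(woman) by given name. The values are illustrative
# placeholders, not results returned by genderize.io or genderizeR.
P_WOMAN: Dict[str, float] = {
    "Jessica": 0.98, "David": 0.01, "John": 0.01, "Ronald": 0.01, "Pippa": 0.97,
}


def given_name(candidate: str) -> str:
    """Take the word after the comma in 'Surname, Given', else the first word."""
    if "," in candidate:
        return candidate.split(",", 1)[1].split()[0]
    return candidate.split()[0]


def percent_women(candidates: List[str], lookup: Dict[str, float]) -> Optional[float]:
    """Average P(woman) over candidates with data; candidates without data are dropped."""
    probs = [lookup[name] for name in map(given_name, candidates) if name in lookup]
    if not probs:
        return None  # nothing could be scored
    return 100 * sum(probs) / len(probs)


candidates = [
    "Power Shift", "Matthews, Jessica", "Austen-Smith, David", "Wright, John",
    "True Clash", "Inglehart, Ronald", "Norris, Pippa",
]
print(round(percent_women(candidates, P_WOMAN), 1))
```

Because “Power” and “True” have no entry in the lookup, the two false positives fall out of the calculation, and the estimate is computed over the five real authors only. With these placeholder values the result lands just under the hand-coded 40% (two women among five authors).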

However, there are shortcomings to this approach. First, authors identified only by initials cannot be categorized using this method and are dropped from the estimation process. Second, because the tool aggregates probabilities rather than dichotomous designations of “man” and “woman,” names that are common among both genders can throw off the estimate. Third, the algorithm cannot predict gender for names that are particularly uncommon worldwide due to lack of data; these names also are dropped. Estimates for bibliographies and syllabi in which any of these three issues is prevalent will be less accurate. For instance, in the Open Syllabus Project example referred to previously, W. W. Rostow, V. O. Key, and four other names with initials only are dropped; Mancur Olson’s gender cannot be predicted due to insufficient data; works by Dani Rodrik (probability = 0.61) and Alexis de Tocqueville (probability = 0.48) incorrectly inflate the proportion of probable women; and work by Lee Epstein (probability = 0.25) incorrectly decreases it. The result is fewer total authors identified and an inflated estimate of the percentage of women. Overall, the tool usually provides an estimate that closely resembles reality, but users must be aware that it is only an estimate; particular characteristics of their documents may lead to more or less accuracy. This highlights a key tradeoff of the tool: it will never be as accurate as thorough hand-coding and should not be used as a replacement for it. However, it is a quick and easy estimation tool for those not already predisposed to hand-coding.
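The effect of the second shortcoming can be worked through with the three probabilities reported above. The Python sketch below is a back-of-the-envelope illustration under one assumption: that the estimate sums name-specific probabilities, so each author contributes the predicted probability rather than the 0 or 1 that hand-coding would assign. The names `cases` and `net_shift` are hypothetical.

```python
# (author, P(woman) reported in the text, hand-coded value: 1 = woman, 0 = man)
cases = [
    ("Dani Rodrik", 0.61, 0),
    ("Alexis de Tocqueville", 0.48, 0),
    ("Lee Epstein", 0.25, 1),
]


def net_shift(rows):
    """Sum of (predicted probability - hand-coded value) across the listed authors."""
    return sum(prob - truth for _, prob, truth in rows)


for author, prob, truth in cases:
    print(f"{author}: contributes {prob:.2f} instead of {truth}")
print(f"Net shift in the expected number of women: {net_shift(cases):+.2f}")
```

Under that assumption, the two men add 0.61 and 0.48 to the expected count of women, while the one woman adds only 0.25 instead of 1. The net effect of these three names is an upward shift of about 0.34, which is consistent with the inflated estimate described above, although the dropped names also contribute to the gap.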

DISCUSSION

This tool was developed with modest intentions. Rather than produce the most accurate estimate of diversity within a syllabus, the aim is to make assessing gender diversity so easy and quick that more scholars will do it. It is hoped that this will lead to (1) a gradual decrease in the gender gap as scholars realize the degree of their under-citation of women; and (2) a rethinking of what is being cited, why, and what is being overlooked. A low percentage of women should be an invitation to explore what other material exists and may be unintentionally excluded.Footnote 11 However, this raises two important questions, the first of which pertains to best practices and the second to how the descriptive diversity of our bibliographies and syllabi can be improved.

The modal question asked in response to this tool is: “My bibliography/syllabus was N% women; is that good?” Reasonable minds differ on this point; however, whether a particular percentage is normatively desirable depends, at a minimum, on the topic at hand. Some subfields (e.g., political methodology) have far less diversity than others, including Race, Ethnicity, and Politics. Imposing uniform standards across subfields may not be sensible: a quantitative political methodology syllabus that has 20% women authors may be representative of the diversity in the subfield, whereas the same 20% on a syllabus about women in politics would be extremely problematic.Footnote 12 If scholars are unsure about the diversity of their subfield, reports such as the American Political Science Association (APSA) Status of Women in the Profession provide details about diversity in the field as a whole. Other professional organizations, such as the International Studies Association (ISA), publish similar information. Organized sections within APSA, the Midwest Political Science Association (MPSA), and ISA also may want to publish descriptive data about their membership so that scholars can gauge how diverse their bibliographies and syllabi are relative to the subfield.

The second most frequently asked question concerns how to make bibliographies and syllabi more diverse: How and where do we find relevant articles by women? Fortunately, the answer in many cases is simple: the Internet is replete with information about women and their research interests. Notably, the website WomenAlsoKnowStuffFootnote 13 exists to address this problem and maintains a list of women scholars who create profiles on its website (Beaulieu et al. 2016). The website is organized by subject area, providing a list of women scholars in many subfields. Subfield-specific women’s groups also maintain public membership lists, including Visions in MethodologyFootnote 14 (political methodology), Women in Conflict StudiesFootnote 15 (conflict), and Journeys in World PoliticsFootnote 16 (international relations).

Although this article focuses on gender, scholars of color likely face many of the same problems as women regarding citation and assignment gaps, and scholars should be equally mindful of racial diversity when assessing bibliographies and syllabi. There are many resources on the Internet for locating scholars of color. The Twitter account @PoCAlsoKnow amplifies accomplishments by people of color in academia (@PoCAlsoKnow 2016).Footnote 17 The APSA Latino Caucus maintains a public membership list that is organized by subfield.Footnote 18 Similarly, the APSA Asian Pacific American Caucus maintains a public list that, although not organized by subfield, includes scholars’ research interests.Footnote 19 The National Conference of Black Political Scientists does not maintain a public membership list but allows the public to search for members. Using the Advanced Search feature,Footnote 20 users can select from a list of member types and search by field and subfield on the next page.

CONCLUSION

Although women actively contribute research in political science, their work is cited less frequently than that of their male counterparts. Their work also tends to be underrepresented on syllabi, which may exacerbate the citation gap. This gender gap has deleterious effects for women scholars because citation counts affect decisions about hiring, tenure, promotion, and raises.

Yet, even though the disadvantages of the citation gap are well known, this knowledge is only part of the battle. Scholars must keep these issues in mind when citing research or constructing syllabi. Although many scholars hand-code their bibliographies and syllabi, many more do not. For those not already predisposed to assessing descriptive diversity in their citations, determining the gender balance of a particular bibliography or syllabus may be viewed as tedious, time-consuming, and difficult. To make the task faster and easier, this article describes a web-based tool that allows users to upload a bibliography or syllabus and, within a minute, receive a probabilistic estimate of its gender balance.

My goal in presenting this tool is to remove many of the practical roadblocks that deter scholars from assessing the gender balance of their syllabi and bibliographies. My hope is that this will encourage more people to assess the gender balance and then use this information to understand why they are citing particular authors and articles and whether they can make their syllabi and bibliographies more representative of the diversity of the field as a whole. To that end, this article also highlights resources to easily find women scholars and scholars of color who are conducting research in their field.

ACKNOWLEDGMENTS

For their help in developing the tool and their feedback on this article, the author thanks Ray Block, Justin Esarey, Rebecca Kreitzer, Michael Leo Owens, and Eric Reinhardt.