Introduction

The human brain can transform arbitrary symbols such as words into meaningful concepts within milliseconds and, even more incredibly, we can learn new vocabulary by making new connections between these arbitrary symbols and their meanings throughout our lives. One goal of research focused on second language (L2) acquisition and processing in adults has been to shed light on how new words are learned as well as how new language systems are built and stored. Several investigators have used the temporal precision of event-related potentials (ERPs) to measure the adult brain's response to L2 words in proficient bilinguals and learners who have not yet attained L2 proficiency (e.g., Alvarez, Holcomb & Grainger, 2003; Hoshino, Midgley, Holcomb & Grainger, 2010; McLaughlin, Osterhout & Kim, 2004; Midgley, Holcomb & Grainger, 2009a; Pu, Holcomb & Midgley, 2016; Soskey, Holcomb & Midgley, 2016; Yum, Midgley, Holcomb & Grainger, 2014). In most studies, the authors have reported the N400 to be the ERP component most sensitive to manipulations of target language and L2 proficiency. The N400 is a negative-going wave that peaks about 400 ms after stimulus onset and is thought to reflect lexico-semantic processing (Grainger & Holcomb, 2009). The majority of ERP studies of L2 processing have found that the amplitude, time-course, and/or scalp distribution of the N400 varies as a function of proficiency (Midgley et al., 2009a; Midgley, Holcomb & Grainger, 2009b; Midgley, Holcomb & Grainger, 2011; Moreno & Kutas, 2005).

Notably, several studies have shown that the N400 is sensitive enough to measure changes in the brain within the first few hours of L2 instruction (McLaughlin et al., 2004; Soskey et al., 2016; Yum et al., 2014). For example, McLaughlin et al. (2004) found that the N400 response in native English speakers learning French could be used to discriminate L2 words from L2 pseudowords within the first 14 hours of instruction. Similarly, Pu et al. (2016) found that native English speakers learning Spanish as an L2 showed backward translation priming (Spanish L2 – English L1) on the N400 after only two learning sessions in the laboratory. Because these N400 ERP effects likely reflect underlying language processing and are also sensitive to very early changes that occur with learning, they can provide important insights into how a second language begins to be integrated into an existing L1 language system.

Most of the research on L2 acquisition focuses on unimodal bilinguals who know two spoken languages. Recently, research on second language learning has extended beyond the focus on spoken languages and has included investigations of bimodal bilinguals who have acquired a spoken and a signed language. Someone with an L1 spoken language who learns a sign language later in life is sometimes considered an “M2-L2” learner because not only are they learning a second language but they are also acquiring a second modality (M2) (see Chen Pichler & Koulidobrova, 2015). Sign languages and spoken languages use distinct articulators (the hands versus the vocal tract), and thus learning a sign language necessarily includes the integration of new phonological and articulatory systems for L1 spoken-language users.

One important result coming out of this research is that L2 sign acquisition is affected by the iconicity of signs (e.g., Lieberth & Gamble, 1991; Campbell, Martin & White, 1992; Morett, 2015; Ortega, Özyürek & Peeters, 2019). Iconicity is a pervasive feature of many sign languages and is most often defined as the extent to which a sign resembles its meaning. For example, the sign for “cry” in American Sign Language (ASL) involves tracing the index fingers down the cheeks, mimicking a stream of tears. Because tears are a salient visual feature of the concept “cry,” this is a highly iconic sign and the meaning can be easily guessed (Sehyr & Emmorey, 2019). On the other hand, the sign for “farm” involves rubbing the side of the thumb under the chin with all fingers extended (a 5 handshape), which does not visually map onto any salient features of the concept of “farm” and so FARM is a relatively non-iconic sign. M2-L2 learners tend to learn (Lieberth & Gamble, 1991) and translate (Baus, Carreiras & Emmorey, 2013) iconic signs faster and more accurately than less iconic signs. However, there is also growing evidence to suggest that gesture knowledge may interfere with learning the form of ASL signs (Chen Pichler & Koulidobrova, 2015; Ortega & Morgan, 2015) and that hearing non-signers are less accurate when producing highly iconic signs compared to arbitrary signs, presumably because their familiarity with the form of a related gesture may lead to superficial processing of the sign's form (Ortega, 2013; Ortega & Morgan, 2015).

In addition, how speakers gesture can impact their perception of the meaning of signs (Ortega, Schiefner & Özyürek, 2017; Sehyr & Emmorey, 2019). For example, signs that overlap with gestures (i.e., gestures that are used to convey the concept denoted by the sign) are guessed more accurately and given higher iconicity ratings than signs that have no form overlap with gestures (Ortega et al., 2017). The meanings of signs may also be guessed incorrectly if their form overlaps with a symbolic gesture, e.g., the ASL sign LONELY is often incorrectly guessed to mean “be quiet” because this sign resembles a “shh” gesture (the index finger touches the lips; Sehyr & Emmorey, 2019). This symbolic gesture could be considered a type of “false friend” gesture for the sign (e.g., Brenders, van Hell & Dijkstra, 2011). Such effects of gesture knowledge on the perception of sign meaning might be comparable to transfer effects that occur from L1 to L2 (e.g., MacWhinney, 1992). In fact, Ortega et al. (2019) suggest that these iconic gestures function as “manual cognates” for hearing L2 learners of a sign language.

Despite the motoric and perceptual differences between signed and spoken languages, previous studies examining the electrophysiology of language processing have shown that the brain processes signed and spoken languages similarly. For example, in their seminal study, Kutas, Neville, and Holcomb (1987) found that the N400 effect for sentences with semantically congruent versus anomalous completions could be found to visually presented English words, spoken English words, or ASL signs. Further, Neville, Coffey, Lawson, Fischer, Emmorey, and Bellugi (1997) and Capek, Grossi, Newman, McBurney, Corina, Roeder, and Neville (2009) found N400 effects to semantic anomalies when deaf signers comprehended ASL sentences. What is not yet clear is whether similar N400 effects can be seen to ASL signs in hearing L1 speakers within the first few hours of sign language learning, whether those N400 effects would be modulated by iconicity, or how those N400 effects might compare to N400 effects seen in proficient deaf ASL signers.

The present study

The main goals of the present study were to assess the neural correlates of cross-modal translation priming in hearing adults who are in the earliest stages of learning American Sign Language (ASL), to determine how those priming effects may be modulated by a sign's iconicity, and to determine how these effects compare to the effects seen in deaf adults who learned ASL natively or early in childhood. To accomplish this, hearing adults with no significant previous exposure to any sign languages were taught a small vocabulary of ASL signs over two lab sessions within one week. These hearing learners came into the lab for a final session later in the same week, during which ERPs were recorded as they completed an English – ASL translation recognition task. A group of deaf signers who were bilingual in ASL and (written) English also completed the English – ASL translation recognition task while ERPs were recorded but did not complete the ASL lab learning sessions.

Previous research showing cross-language N400 priming early during learning of a new second spoken language (e.g., Pu et al., 2016) suggests that we may find a similar reduction in the N400 component for the ASL signs which follow their English translations compared to the ASL signs which follow unrelated English words in hearing ASL learners. We also expect to observe a reduced N400 negativity for the target ASL signs with translation prime words compared to unrelated prime words in the deaf ASL signers. However, L2 proficiency has been shown to affect the timing of ERP components. For example, Midgley et al. (2009a) showed that the N400 shifted later in time for less proficient bilinguals when processing their L2. Thus, we also predicted that the time-course of the N400 may shift later in the ERP of hearing L2 learners compared to proficient deaf signers.

Also of interest in this study was how iconicity of a sign might differentially affect translation priming for hearing learners compared to deaf signers. As noted above, previous research indicates that iconicity is a salient feature of sign language, especially for beginning learners (Lieberth & Gamble, 1991; Campbell et al., 1992; Morett, 2015; Ortega & Morgan, 2015). However, the role of iconicity in sign comprehension is less clear for proficient signers. For example, Bosworth and Emmorey (2010) found that iconic signs were not recognized faster than non-iconic signs by deaf native signers in a lexical decision task, and Baus et al. (2013) found that proficient hearing ASL-English bilinguals exhibited slower recognition and translation times for iconic than non-iconic signs in a translation task. On the other hand, evidence from picture-naming tasks suggests that during production, iconic signs are retrieved more quickly by deaf skilled signers (Vinson, Thompson, Skinner & Vigliocco, 2015; Navarrete, Peressotti, Lerose & Miozzo, 2017). Here we investigated whether the amplitude and/or time-course of the N400 translation priming effect is modulated by sign iconicity and whether such effects are more pronounced in hearing learners than in deaf signers.

Methods

Participants

Participants in the hearing learner group were 24 monolingual native English speakers (12 males) who ranged in age from 19 to 29 years old (mean age = 22.4, SD = 2.8). No hearing participant had any significant exposure to a sign language prior to participating in this study. Participants in the deaf signer group were 20 native or early-exposed ASL signers (10 males) who were fluent in (written) English and ranged in age from 22 to 49 years old (mean age = 29.8, SD = 6.3). All of the early-exposed deaf signers (N = 6) acquired ASL before the age of 7 years.

All participants were right-handed, had normal or corrected-to-normal vision, and had normal neurological profiles. Participants were recruited from San Diego State University and the city of San Diego, and they all gave informed consent to participate in accordance with San Diego State University Institutional Review Board protocols.

Stimuli

Stimuli were short video clips of a native deaf ASL signer producing individual signs. Clips were obtained from the ASL-LEX database (Caselli, Sevcikova Sehyr, Cohen-Goldberg & Emmorey, 2017) and were centred on an LCD monitor, with a black background at a viewing distance of 150 cm. The videos measured 10 cm x 13.5 cm. These viewing parameters were selected so participants would not have to make eye movements to fully perceive each sign. The average duration of the clips was 1793 ms for the iconic signs (SD = 282) and 1874 ms (SD = 325) for the non-iconic signs. The video clip included the transitional movement of the hand(s) from rest position (the model's lap) to the sign onset, as well as the transitional movement of the hand(s) back to rest position. The average sign onset was 516 ms after video onset for the iconic signs (SD = 124) and 498 ms (SD = 132) for the non-iconic signs. Sign onset measures were taken from the ASL-LEX database where sign onset was defined as the first video frame in which the fully formed handshape contacted the body or was held in space before initiating the sign's movement (see Caselli et al., 2017).
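As a rough check on these viewing parameters, the visual angle subtended by each side of the video can be computed from its size and the viewing distance. The sketch below is illustrative only: the function name is hypothetical, and it assumes the standard formula for a stimulus centred on the line of sight.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by a stimulus centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Video dimensions (10 cm x 13.5 cm) and viewing distance (150 cm) from the text.
shorter_side_deg = visual_angle_deg(10.0, 150.0)   # roughly 3.8 degrees
longer_side_deg = visual_angle_deg(13.5, 150.0)    # roughly 5.2 degrees
```

Under these assumptions the clips subtend only a few degrees of visual angle, which is consistent with the stated aim of avoiding eye movements.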

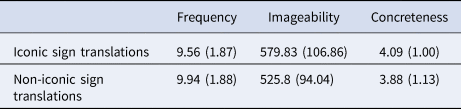

Signs were designated “iconic” or “non-iconic” based on their iconicity ratings listed in the ASL-LEX database (Caselli et al., 2017). Iconicity ratings in the ASL-LEX database were obtained from 21–37 hearing non-signers who were presented a video of the ASL sign and its English translation, and were asked to rate how much each sign “looks like what it means” using a 7-point Likert scale (1 = not iconic at all, 7 = very iconic; Caselli et al., 2017). All 40 signs included in the iconic condition had iconicity ratings of 4.0 or higher (M = 5.2, SD = 0.9), and signs included in the non-iconic condition had iconicity ratings of 2.5 or lower (M = 1.5; SD = 0.4). None of the signs were “false friends” with symbolic gestures (i.e., they did not resemble common gestures with a different meaning from the sign). The majority of iconic signs (80%; 32/40) were not manual cognates; that is, they did not match transparent, symbolic gestures (e.g., the signs PUSH and CRY are very similar to common pantomimic gestures, but most of the iconic signs were not associated with a conventional gesture). The ASL sign glosses (English translations) included in the iconic and non-iconic conditions are presented in Table 1. The English translations for the iconic signs did not differ significantly from the translations of the non-iconic signs with respect to English word frequency (Balota, Yap, Cortese, Hutchison, Kessler, Loftis, Neely, Nelson, Simpson & Treiman, 2007), imageability (Wilson, 1988), or concreteness (Brysbaert, Warriner & Kuperman, 2014), as shown in Table 2. None of the English translations were homophones with multiple translations in ASL (e.g., words like “fall” which could be translated as AUTUMN or PERSON-FALL were not included).
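The rating cut-offs described above can be expressed as a simple classification rule. This is a minimal sketch under stated assumptions, not the selection procedure actually used: the function name and the placeholder glosses and ratings are hypothetical, and it assumes that signs with intermediate ratings were simply not candidates for either condition.

```python
def classify_iconicity(rating, iconic_min=4.0, non_iconic_max=2.5):
    """Map a 1-7 iconicity rating to a condition label, or None if intermediate."""
    if rating >= iconic_min:
        return "iconic"
    if rating <= non_iconic_max:
        return "non-iconic"
    return None  # assumed: ratings between the cut-offs fall in neither condition

# Placeholder ratings for illustration only; these are NOT values from ASL-LEX.
toy_ratings = {"SIGN_A": 5.2, "SIGN_B": 1.5, "SIGN_C": 3.1}
conditions = {gloss: classify_iconicity(r) for gloss, r in toy_ratings.items()}
```

Leaving a gap between the two cut-offs keeps the conditions well separated on the rating scale, at the cost of excluding moderately iconic signs.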

Table 1. List of ASL sign glosses included in the Iconic and Non-iconic conditions. Videos of all signs can be found in the ASL-LEX database (asl-lex.org).

Table 2. Means for the learned English translations of iconic and non-iconic signs. Standard deviations are in parentheses.

Procedure

Hearing learners came into the laboratory for three sessions within the same week (each session was 24 to 48 hours apart), and they were instructed not to practice or have any additional exposure to ASL outside of the lab. These participants completed the series of learning protocols in the first two lab sessions, described below, with the goal of learning the meaning of 105 ASL signs (the 80 critical signs described above and 25 signs that were used as fillers and practice items). After completing the learning protocols, hearing learners completed a cross-modal translation recognition task during their third session in the lab while EEG was recorded. The group of deaf signers completed only the cross-modal translation recognition task while EEG was recorded and did not complete any of the learning protocols. See Table 3 for an overview of sessions.

Table 3. Tasks performed by hearing learners across three sessions. Only the cross-modal translation recognition task in session three was performed by the deaf signers.

Learning protocols (hearing learners only)

During their first session in the lab, hearing learners completed an associative learning task followed by a forced-choice translation task as described below. During their second session in the lab, participants first completed the forced-choice translation task to measure how well they recalled the signs from the previous session, then the associative learning task, and finally the forced-choice translation task a second time. To ensure that any observed effects were not list-order effects, lists were pseudo-randomized and counterbalanced. No learning protocols were completed on the participants’ third session.

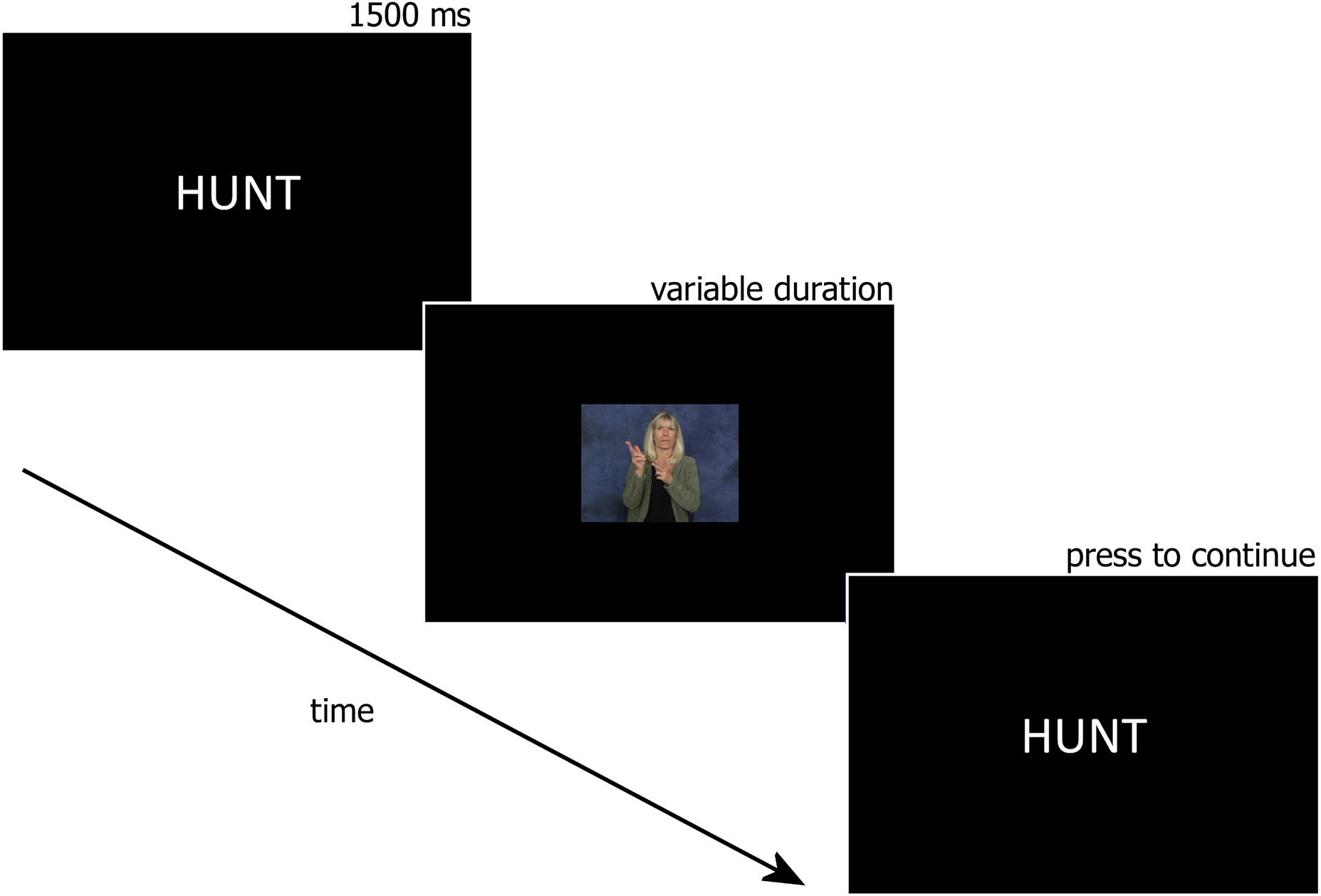

Associative learning task.

Printed English words were presented on the computer monitor followed by the video of the ASL translation of the word (see Figure 1). After the video played, the English translation would appear on the monitor again until the participant pressed a button indicating they were ready to learn the next sign. Participants were instructed to practice reproducing the sign at least once during each trial and were told to take as long as they needed to process each English – ASL pair before pressing the button to move on. All 105 word-sign pairs were included in the associative learning task.

Fig. 1. Sample trial from the associative learning task.

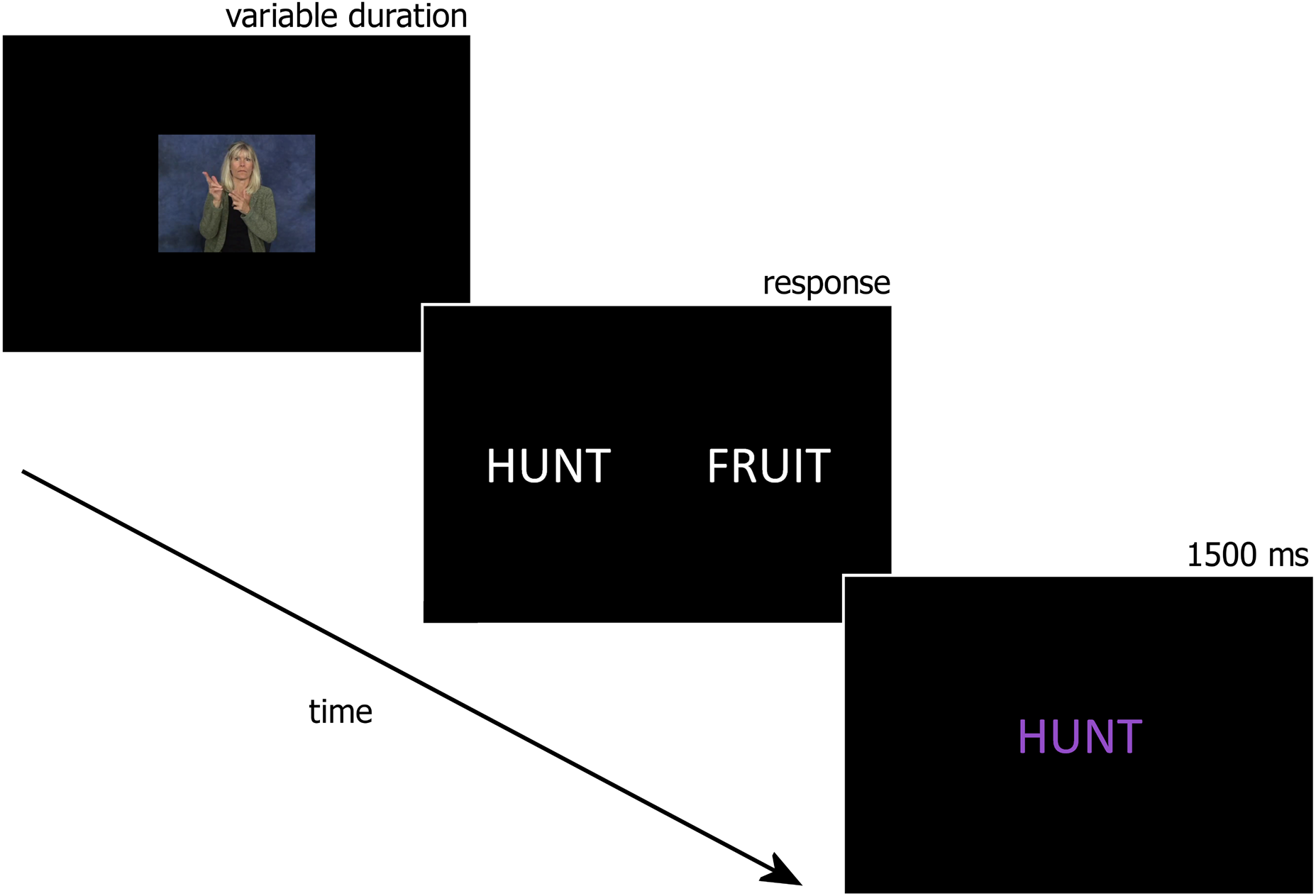

Forced-choice translation task.

In this task, ASL sign videos were presented on the computer monitor followed by two printed English words. One of the word choices was the correct translation, and the other was a translation for a different ASL sign in the learning block. Participants used buttons on a gamepad to indicate whether the correct translation of the ASL sign was presented on the left or right side of the screen. Once the participant responded, they were given feedback in the form of the correct translation printed in the center of the screen (see Figure 2). All word-sign pairs included in the associative learning tasks were included in the forced-choice translation task.

Fig. 2. Sample trial from the forced-choice translation task.

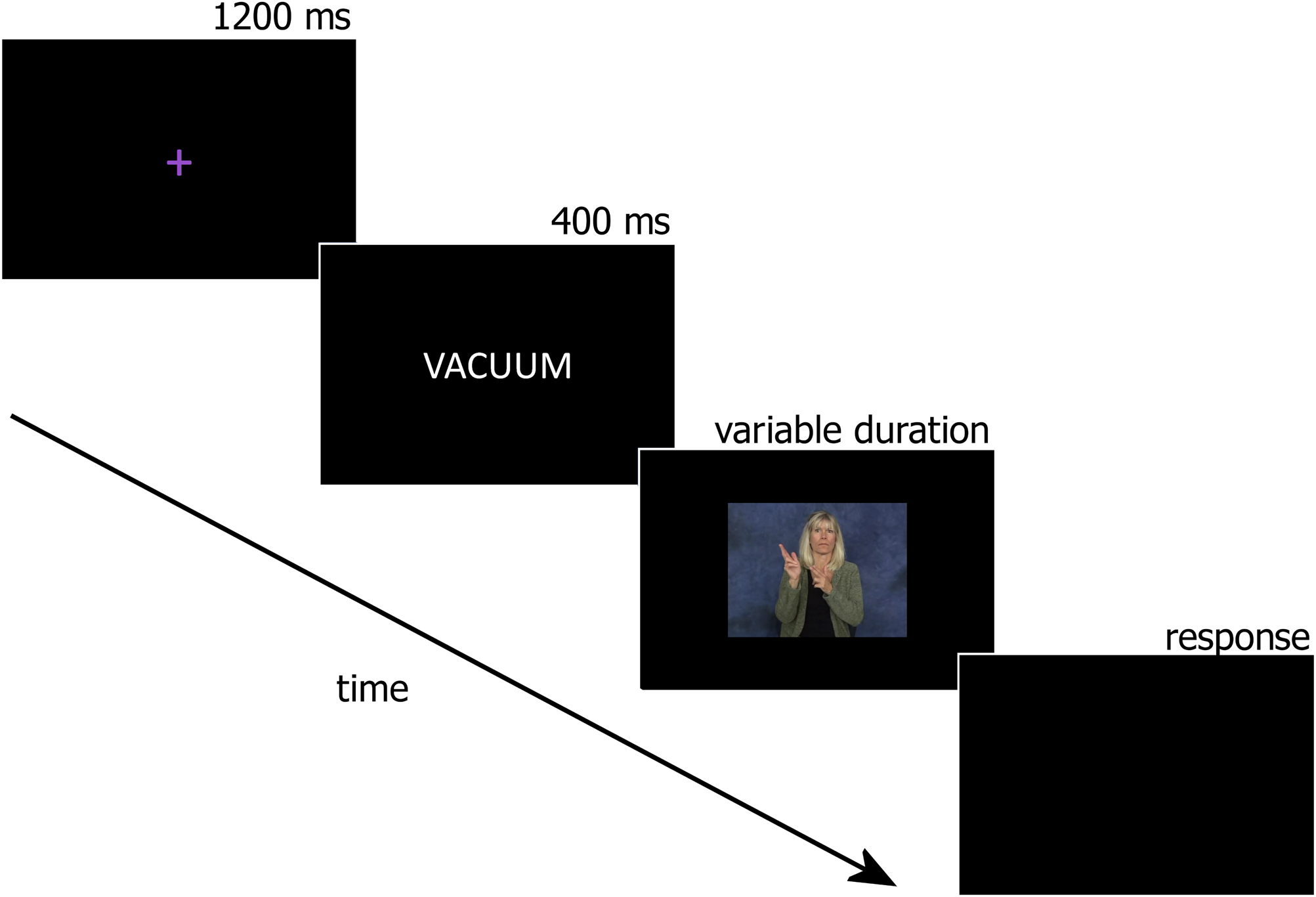

Cross-modal translation recognition task (hearing learners and deaf signers)

During their third session in the lab, hearing learners, along with deaf signers, completed a cross-modal translation recognition task while EEG was recorded. Each trial in this task began with a purple fixation cross “+” centered on the screen (1200 ms), followed by a 400 ms printed English prime word and finally a target ASL sign video. We chose to use the ASL signs as targets instead of the English words for two reasons. First, we were particularly interested in elucidating the ERP signatures of ASL sign processing considering sign language is under-represented in the literature compared to spoken (written) languages. Second, L2-L1 priming tends to be much weaker than L1-L2 priming in beginning L2 learners, and therefore we chose L1-L2 priming to maximize our chances of finding priming effects.

Participants were instructed to answer as quickly and as accurately as possible whether the English prime word was an acceptable translation of a target ASL sign by pressing one of two buttons on a gamepad. If the participants did not respond by the time the ASL video finished playing, a blank screen appeared until a response was made (see Figure 3). All 105 items from the learning protocols were included. For each participant, approximately half of the ASL videos were immediately preceded by their correct English translations (i.e., “YES” responses) and the remaining ASL videos were preceded by an unrelated English prime word (i.e., “NO” responses). Two lists were used so that all signs were presented to half of the participants in the translation condition, and to the other half of participants in the unrelated condition.

Fig. 3. Sample trial (unrelated/iconic) from the cross-modal translation recognition task.
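The two-list counterbalancing described above can be sketched as follows. This is an illustrative assumption about how such lists might be built, not the authors' procedure: the function name and the alternating assignment are hypothetical, and the sketch does not model how unrelated prime words were chosen or whether iconicity was balanced within each list.

```python
def build_counterbalanced_lists(signs):
    """Assign each sign to opposite priming conditions in two lists."""
    list_a, list_b = {}, {}
    for index, sign in enumerate(signs):
        # Alternate conditions so each list is about half translation trials.
        in_a = "translation" if index % 2 == 0 else "unrelated"
        in_b = "unrelated" if in_a == "translation" else "translation"
        list_a[sign] = in_a
        list_b[sign] = in_b
    return list_a, list_b

# Placeholder glosses standing in for the 80 critical signs.
critical_signs = [f"SIGN_{i:02d}" for i in range(80)]
list_a, list_b = build_counterbalanced_lists(critical_signs)
```

Giving half of the participants each list means that, across the sample, every sign contributes equally to the translation and unrelated conditions.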

EEG procedure

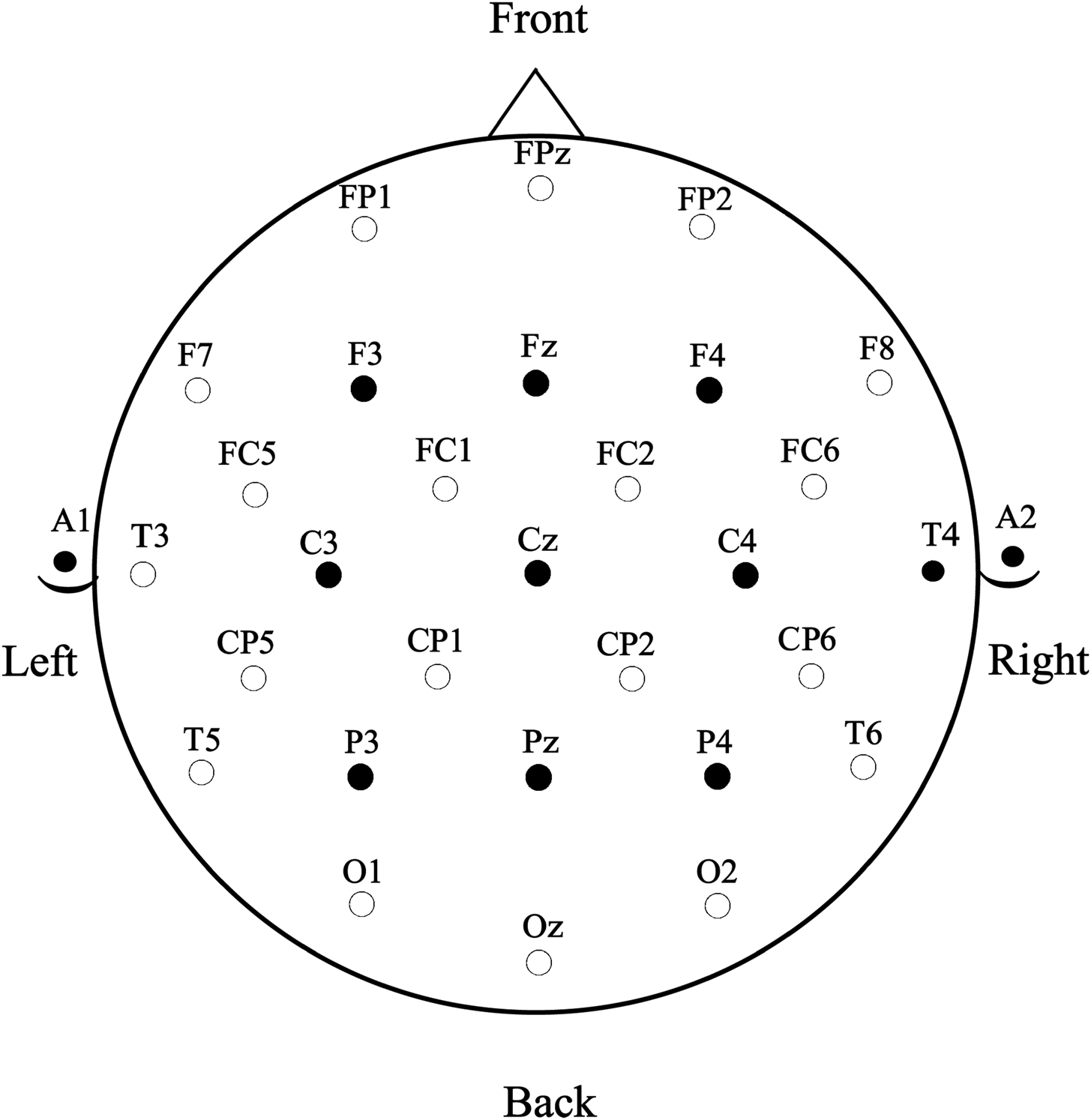

EEG from 29 scalp sites (see Figure 4) and eye-related activity from two additional sites (below the left eye to detect blinks and vertical eye movements, and to the right of the right eye to detect horizontal eye movements), along with an electrode over the right mastoid (to detect potential experimental effects at the right mastoid; none were seen), were all referenced to the left mastoid. Electrode impedances were maintained below 2.5 kΩ and the EEG was digitized continuously online at 500 Hz between DC and 100 Hz (NeuroScan SynAmps RT). Offline, separate ERPs were formed time-locked to the onset of ASL video clips using a 100 ms pre-sign baseline for each condition of the cross-modal translation recognition task. Averaged ERPs were digitally filtered between .01 and 15 Hz (3 dB cutoff) prior to analysis. Trials with eye movements or muscle artifact were excluded from the averages. After rejection for incorrect responses and EEG artifact, 18.2 trials were retained for analysis, on average, for the iconicity comparisons. Even though the Ns for these comparisons are smaller than ideal, we observed statistically reliable effects, suggesting that the effects are robust (SNR for hearing = 42.2; SNR for deaf = 37.7).

Fig. 4. Electrode montage with the nine sites (filled circles) used in analyses circled.
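The baseline step described in this section can be illustrated for a single channel. The sketch below is a simplified illustration, not the recording software's implementation: the function name is hypothetical, and it assumes that each epoch begins 100 ms before the time-locking point, so that at 500 Hz the first 50 samples form the baseline.

```python
from statistics import fmean

def baseline_correct(samples, sfreq_hz=500, baseline_ms=100):
    """Subtract the mean of the pre-stimulus interval from every sample."""
    n_baseline = round(baseline_ms * sfreq_hz / 1000)  # 50 samples at 500 Hz
    offset = fmean(samples[:n_baseline])
    return [value - offset for value in samples]

# Toy epoch (microvolts): flat 2.0 baseline followed by a 5.0 deflection.
toy_epoch = [2.0] * 50 + [5.0] * 10
corrected = baseline_correct(toy_epoch)
```

After correction, the pre-stimulus interval averages zero and later amplitudes are expressed relative to it.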

Data analysis

Response times (RTs) and percent correct responses for the forced-choice translation tasks from the learning protocol were measured as the time between presentation of the English word choices and the button press indicating the participant's response (hearing learners only). RTs for the cross-modal translation recognition task were measured from the onset of the ASL video in both hearing learners and deaf signers.

A subset of nine representative electrode sites (see Figure 4) was included in the analyses in order to give adequate coverage of the scalp and allow for a single ANOVA (including scalp distribution) per epoch. We have used this strategy successfully in a previous word learning study (Pu et al., 2016). The timing of the N400 may be affected by language proficiency, and the stimulus videos included transitional movements before sign onset; prior work in our lab has shown that a broader range of latencies is necessary to capture priming effects to such dynamic stimuli (e.g., Holcomb, Midgley, Grainger & Emmorey, 2015). Therefore, we measured mean amplitude (baselined to the 100 ms pre-sign epoch) in four contiguous time-windows: 400 – 600 ms, 600 – 800 ms, 800 – 1000 ms, and 1000 – 1400 ms. The resulting values were entered into four separate mixed design ANOVAs including one between-subject variable of Group (hearing learners vs. deaf signers) and four within-subject variables of Priming (translations vs. unrelated), Iconicity (iconic vs. non-iconic), Anteriority of electrode site (frontal vs. central vs. parietal), and Laterality of electrode site (left vs. midline vs. right). Because the two iconicity conditions involved physically different sign stimuli (which can have large effects on ERPs irrespective of linguistic differences), we only report iconicity effects that resulted in an interaction with the priming variable. The logic here is that because the same signs were used in both priming conditions, any item variability would be controlled for in any observed priming effects. All reported p-values with degrees of freedom higher than one in the numerator reflect the Greenhouse-Geisser correction (Geisser & Greenhouse, 1959).
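The mean-amplitude measure over the four analysis windows can be sketched for one channel of an averaged ERP. This is a minimal illustration under stated assumptions: the function name is hypothetical, the epoch is assumed to start 100 ms before the time-locking point, and each window is treated as half-open (start included, end excluded).

```python
from statistics import fmean

# Analysis windows in ms relative to the time-locking point (from the text).
WINDOWS_MS = [(400, 600), (600, 800), (800, 1000), (1000, 1400)]

def window_mean(samples, start_ms, end_ms, sfreq_hz=500, epoch_start_ms=-100):
    """Mean amplitude of one channel's averaged ERP in [start_ms, end_ms)."""
    first = round((start_ms - epoch_start_ms) * sfreq_hz / 1000)
    last = round((end_ms - epoch_start_ms) * sfreq_hz / 1000)
    return fmean(samples[first:last])

# Toy ERP whose "amplitude" equals its latency in ms (-100 to 1398 ms, 2 ms steps).
toy_erp = [-100 + 2 * i for i in range(750)]
window_means = [window_mean(toy_erp, lo, hi) for lo, hi in WINDOWS_MS]
```

In an analysis of this kind, one such value per participant, condition, electrode, and window would then be entered into the ANOVA for that window.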

Results

Behavioral results

Forced-choice translation tasks (hearing learners only)

To evaluate the hearing participants’ success in learning ASL signs, accuracy and response time data were gathered from the three forced-choice translation tasks which participants completed during their first two lab sessions (see Table 3). Hearing learners performed very well on this task overall, scoring near ceiling for the iconic signs and only slightly worse for the non-iconic signs (see Table 4). Thus, by this measure, participants were quite successful in learning the meaning of the 80 critical ASL signs during the laboratory learning sessions.

Table 4. Mean reaction times (ms) and percent correct from the forced-choice translation tasks for hearing learners. Standard deviations are in parentheses.

ANOVAs were performed on the RTs and on percent correct (arcsine-transformed). For RT there were significant main effects of test (F(2,46) = 47.78, p < .001) and iconicity (F(1,23) = 112.51, p < .001), as well as a significant test x iconicity interaction (F(2,46) = 46.19, p < .001). As can be seen in Table 4, the effect of iconicity (faster RTs to iconic than non-iconic sign translations) was strongest in the first test (a mean difference of 592 ms) and weakest in the third test (a difference of 249 ms). There was also a significant interaction between test and iconicity for percent correct (F(2,46) = 3.37, p = .05), with larger accuracy differences on test 1 (4.4%) and test 2 (5.1%) than on test 3 (1.1%).
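The text does not specify which arcsine transformation was applied to the accuracy data. The sketch below shows one common variant, the arcsine square-root transform of a proportion, purely as an assumption for illustration; the function name is hypothetical.

```python
import math

def arcsine_sqrt(proportion):
    """Arcsine square-root transform of a proportion in [0, 1] (radians)."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(proportion))

# Near-ceiling accuracies are spread out more than mid-range ones.
transformed_quarter = arcsine_sqrt(0.25)  # asin(0.5), i.e. pi / 6
transformed_ceiling = arcsine_sqrt(1.0)   # asin(1.0), i.e. pi / 2
```

Transforms of this kind are typically used to stabilize the variance of proportions that cluster near 0 or 1, as the near-ceiling accuracies here do.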

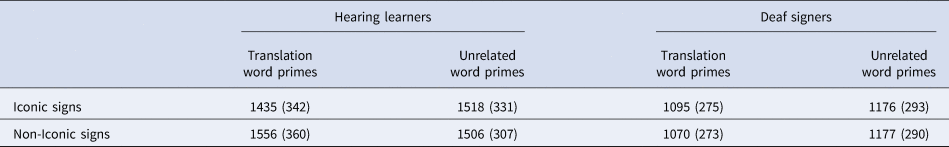

Cross-modal translation recognition task: hearing learners and deaf signers

During the cross-modal translation recognition experiment, both hearing learners and deaf signers performed near ceiling for the 80 critical items in the translation task (M = 78/80 and 79/80 correct for the hearing learners and deaf signers, respectively). RTs for each group are given in Table 5 (only RTs from correct responses within 2 SDs of participants’ mean RT were included). An ANOVA on these RTs revealed a main effect of group (F(1,42) = 16.39, p < .001), indicating that deaf signers, not surprisingly, made faster responses overall (1130 vs. 1504 ms). Importantly, there was also a three-way interaction of group, iconicity, and priming (F(1,42) = 9.05, p = .004), suggesting a different pattern of priming as a function of iconicity in the two groups. We therefore followed up with separate ANOVAs in the two groups.

Table 5. Mean reaction times (ms) for the cross-modal translation recognition task. Standard deviations are given in parentheses.
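The RT trimming rule mentioned above (keeping correct-response RTs within 2 SDs of a participant's mean) can be sketched as follows. This is an illustration under stated assumptions: the function name is hypothetical, the sample standard deviation is used, and the mean and SD are computed once per participant over all correct trials, none of which the text specifies. The RT values are invented for the example.

```python
from statistics import mean, stdev

def trim_rts(correct_rts, n_sd=2.0):
    """Keep RTs within n_sd sample standard deviations of the mean."""
    centre = mean(correct_rts)
    spread = stdev(correct_rts)
    return [rt for rt in correct_rts if abs(rt - centre) <= n_sd * spread]

# Invented RTs (ms) for one participant; the last value is an obvious outlier.
toy_rts = [1000, 1100, 1050, 950, 1020, 980, 1070, 1010, 990, 3000]
trimmed_rts = trim_rts(toy_rts)
```

Whether trimming is applied per participant overall or per condition can change which trials are dropped, so the grouping is worth stating explicitly in any reanalysis.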

In hearing learners there was a significant interaction of Iconicity x Priming (F(1,23) = 8.1, p = .009). Follow-up analyses revealed the interaction was due to a significant effect of Priming for the iconic signs (F(1,23) = 10.29, p = .004), but not for the non-iconic signs (p = .229). Hearing learners were faster to recognize iconic signs when they were preceded by their English translations (i.e., “YES” responses) than when they were preceded by unrelated English words (i.e., “NO” responses), but no significant effects were found for the non-iconic signs (see Table 5).

For deaf signers there was a significant main effect of Priming (F(1,19) = 31.36, p < .001), but no main effect of iconicity or interaction of iconicity and priming (ps > .09). As can be seen in Table 5, deaf signers were faster to respond to ASL signs presented after their English translations (i.e., “YES” responses) than after unrelated English words (i.e., “NO” responses) in both the iconic and non-iconic conditions, whereas hearing learners only showed a translation facilitation effect to iconic signs. It is important to note that RTs are typically faster for “YES” responses compared to “NO” responses in studies of visual word recognition, and so the behavioral data should be interpreted carefully and in conjunction with the ERP data.

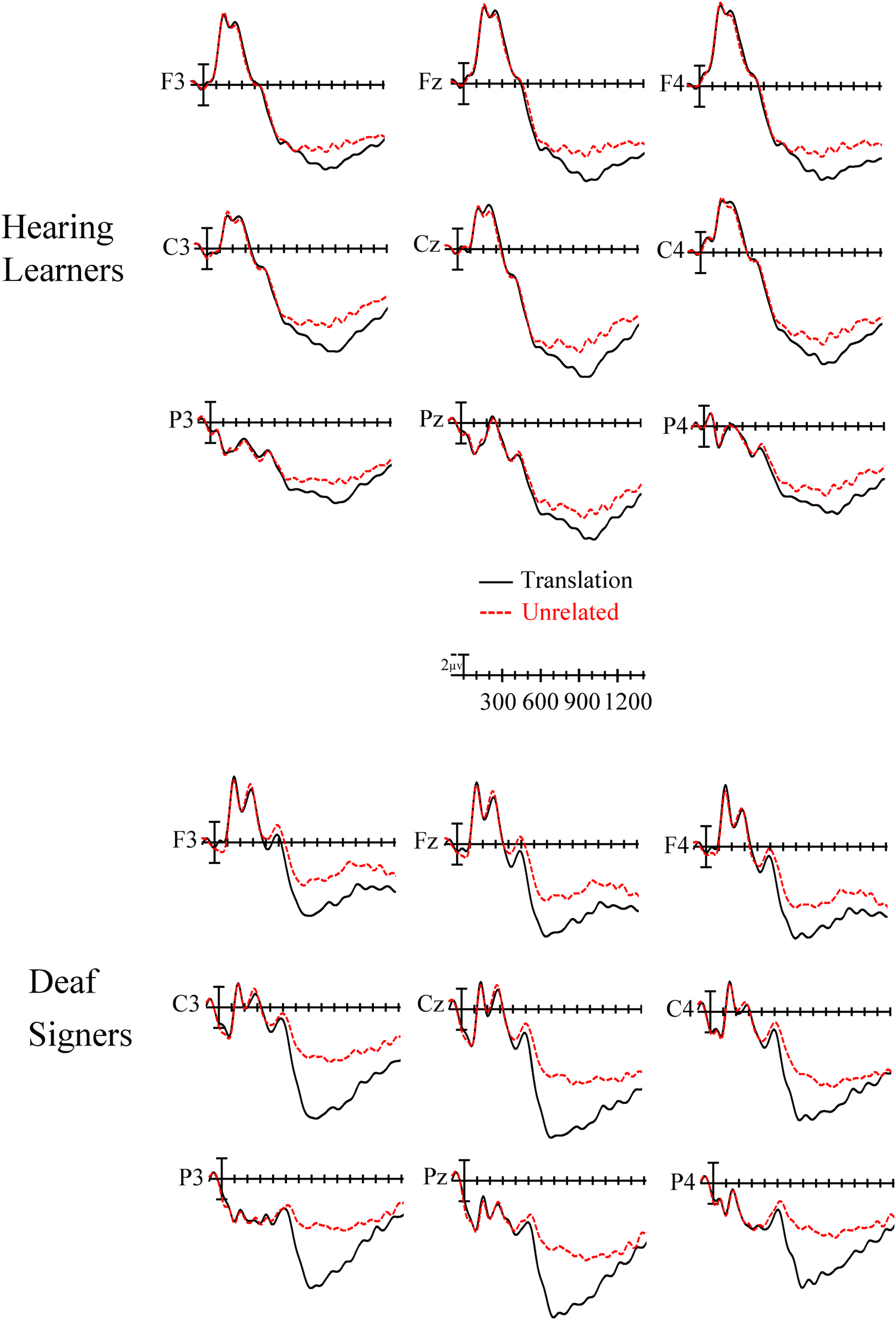

ERP results – Cross-modal translation recognition task

Plotted in Figure 5 are the ERPs from the translation priming task for the hearing learners (top panel) and deaf signers (bottom panel). ERPs were time-locked to the onset of ASL videos, with the waves from videos unrelated to the prior English prime word over-plotted with those to videos that were translations of the prime word. As can be seen, the sign stimuli generated a series of ERP components which would be expected for dynamic visual stimuli, including a number of relatively large (>3 microvolt) early (before 400 ms) and later (after 400 ms) deflections in the waves.

Fig. 5. ERPs to all target ASL videos that were translations of the English prime word (solid black) and target videos that were unrelated to the prime word (red dashed) from nine representative electrode sites used in the analyses. Video onset is the vertical calibration bar, and negative is plotted up.

400 – 600 ms time epoch.

In the overall ANOVA comparing deaf signers and hearing learners there was a main effect of priming (F(1,42) = 5.55, p = .023) with target signs preceded by unrelated primes producing greater negativity than targets preceded by related primes. There were no interactions between priming and iconicity although there was a marginally significant interaction between group and priming in this epoch (F(1,42) = 3.80, p = .058). While marginal interactions need to be interpreted cautiously, and do not fully justify follow-up analyses, one of the stated goals for this research was to determine how the effects seen in beginning learners compared to the effects seen in proficient deaf signers. Because a group comparison was planned a priori, we followed up this marginal interaction with separate analyses for each group.

Hearing learners did not show significant effects of translation priming (all p-values > .234 – see Figures 5 and 6), nor an interaction between priming and iconicity (all p-values > .133). However, deaf signers did show a significant main effect of translation priming (F(1,19) = 6.71, p = .018), such that unrelated targets produced more negativity compared to the translation targets (see Figures 5 and 6). Deaf signers did not show any significant interactions of priming and iconicity (all p-values > .474).

Fig. 6. Priming effects calculated by subtracting translation ERPs from unrelated ERPs for hearing learners (solid black) and deaf signers (dashed red). Video onset is the vertical calibration bar, and negative is plotted up. Note that priming effects start around 400 ms in deaf signers at anterior sites.
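The difference waves plotted in Figure 6 follow a simple point-by-point subtraction (unrelated minus translation). The sketch below illustrates that computation for one channel; the function name and the amplitude values are hypothetical.

```python
def difference_wave(unrelated, translation):
    """Point-by-point priming effect: unrelated ERP minus translation ERP."""
    if len(unrelated) != len(translation):
        raise ValueError("ERPs must have the same number of samples")
    return [u - t for u, t in zip(unrelated, translation)]

# Invented amplitudes (microvolts) for one channel at four time points.
toy_unrelated = [1.0, -2.0, -4.0, -1.0]
toy_translation = [1.0, -1.0, -1.5, -0.5]
priming_effect = difference_wave(toy_unrelated, toy_translation)
```

With this subtraction order, negative values indicate that unrelated targets were more negative than translation targets, which is the direction expected for an N400 priming effect.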

600 – 800 ms time epoch.

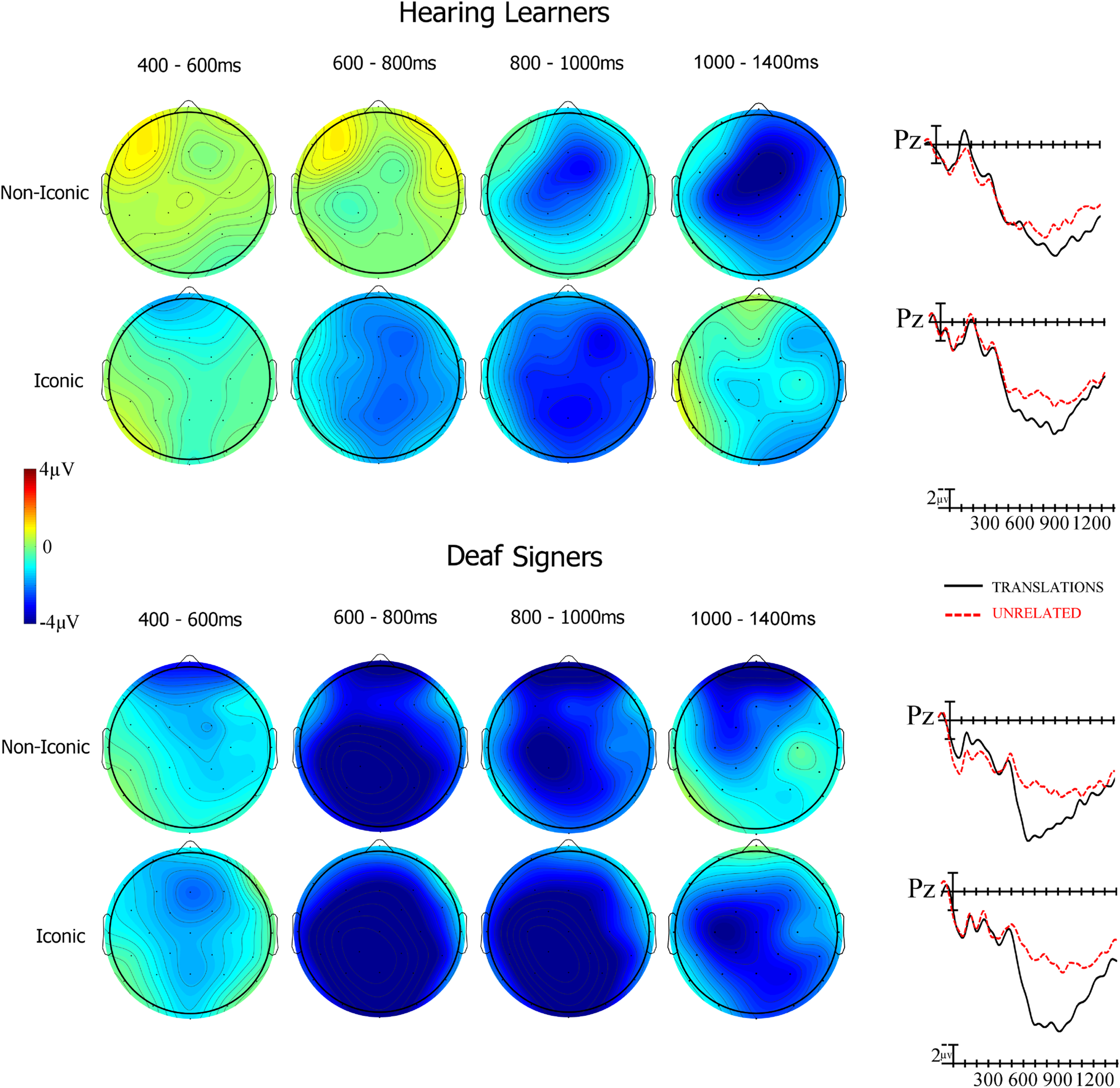

In this epoch the omnibus ANOVA revealed a main effect of priming (F(1,42) = 51.18, p < .001), and an iconicity by priming interaction (F(1,42) = 7.43, p = .009). The interaction indicated that the priming effect was larger for iconic than non-iconic signs. There was also a group by priming interaction (F(1,42) = 20.9, p < .001) which we followed up with separate analyses for hearing learners and deaf signers.

Hearing learners showed a significant main effect of priming (F(1,23) = 6.94, p = .015) as well as a priming by iconicity interaction (F(1,23) = 7.2, p = .013). To understand the priming by iconicity interaction, follow-up ANOVAs were performed contrasting the priming effect (Unrelated – Translation) for iconic and non-iconic signs. In this analysis there was a main effect of iconicity on the priming effect (F(1,23) = 7.2, p = .013), with iconic signs producing a large priming effect and non-iconic signs producing a very small effect (see Figure 8 left).
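The priming effect used in these follow-up contrasts is a difference score (Unrelated – Translation) averaged over an analysis epoch. The following is a minimal sketch of that computation for a single electrode. The function names, sampling times, and voltage values are hypothetical illustrations, not the study's data or analysis pipeline.

```python
from statistics import mean


def mean_amplitude(erp, times_ms, start_ms, end_ms):
    """Average voltage of samples whose time falls in [start_ms, end_ms)."""
    window = [v for v, t in zip(erp, times_ms) if start_ms <= t < end_ms]
    if not window:
        raise ValueError("no samples in the requested epoch")
    return mean(window)


def priming_effect(unrelated, translation, times_ms, start_ms, end_ms):
    """Mean of the Unrelated - Translation difference wave in an epoch.

    Negative values mean unrelated targets were more negative-going.
    """
    diff_wave = [u - t for u, t in zip(unrelated, translation)]
    return mean_amplitude(diff_wave, times_ms, start_ms, end_ms)


# Hypothetical toy data: one electrode, one sample every 100 ms (microvolts).
times_ms = [400, 500, 600, 700, 800, 900]
unrelated = [-1.0, -1.5, -3.0, -3.5, -2.0, -1.0]
translation = [-1.0, -1.0, -1.0, -1.5, -1.0, -0.5]

effect_400_600 = priming_effect(unrelated, translation, times_ms, 400, 600)
effect_600_800 = priming_effect(unrelated, translation, times_ms, 600, 800)
print(effect_400_600, effect_600_800)
```

In this toy example the difference score is small in the 400–600 ms window and larger (more negative) in the 600–800 ms window, which is the kind of pattern the epoch-wise analyses are designed to detect.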

Deaf signers showed significant interactions for translation priming and laterality (F(2,38) = 11.98, p < .001) and between priming and anteriority (F(2,38) = 16.12, p < .001), such that unrelated targets generated stronger negativity than translation targets especially in midline posterior sites (see Figures 7 and 8 bottom). Deaf signers did not show significant translation priming by iconicity interactions in this time window (all p-values > .242).

Fig. 7. Voltage maps (left) showing the scalp distribution and time course of translation priming effects (Unrelated – Translation) in the four analysis epochs for non-iconic and iconic ASL target signs. ERPs (right) at the Pz electrode site time-locked to target signs that were translations of the prior English word (black solid line) and signs that were unrelated to the prior English word.

Fig. 8. Priming effect difference waves computed by subtracting translation target ERPs from unrelated target ERPs for iconic signs (solid black) and non-iconic signs (red dashed) in hearing learners and deaf signers.

800 – 1000 ms time epoch

In the third epoch the omnibus ANOVA revealed a main effect of translation priming (F(1,42) = 41.35, p < .001) and an interaction between priming and iconicity (F(1,42) = 5.46, p = .024). There were also interactions involving the group variable including a group x priming x laterality interaction (F(2,84) = 7.07, p = .003) and a group x priming x iconicity x laterality x anteriority interaction (F(4,168) = 1.83, p = .047).

Because of the interactions involving the group variable we ran follow-up analyses separately for the two groups. In the hearing learners we found a significant main effect of priming (F(1,23) = 20.7, p = .0001) with unrelated targets producing more negative-going waves than translation targets. There was also a significant interaction of priming x laterality x anteriority (F(4,92) = 4.92, p = .003), indicating that the translation effect tended to be largest over more posterior midline sites (see Figures 5 and 7). None of the interactions involving priming and iconicity reached significance (all ps > .06).

Deaf signers showed a significant priming x iconicity interaction (F(1,19) = 5.45, p = .031). A follow-up ANOVA comparing the priming effect (Unrelated – Translation) for iconic and non-iconic signs showed that the priming effect was larger in this epoch for iconic compared to non-iconic signs (F(1,19) = 5.45, p = .031 – see Figures 7 and 8).
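The follow-up contrast returns the same F value as the interaction itself. When both within-subject factors have two levels, the priming x iconicity interaction is equivalent to a one-sample test on each participant's difference of priming effects (iconic minus non-iconic), and F(1, n-1) equals the squared t statistic. The sketch below illustrates this identity. It assumes amplitudes have already been averaged over electrode sites, and the function name and toy values are hypothetical rather than taken from the study.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_f(unrel_iconic, trans_iconic, unrel_non, trans_non):
    """F and degrees of freedom for a 2x2 within-subject interaction.

    Each argument holds one mean amplitude per participant. The statistic
    is the squared one-sample t on the per-participant difference between
    the iconic and non-iconic priming effects (Unrelated - Translation).
    """
    d = [
        (ui - ti) - (un - tn)
        for ui, ti, un, tn in zip(unrel_iconic, trans_iconic, unrel_non, trans_non)
    ]
    n = len(d)
    t = mean(d) / (stdev(d) / sqrt(n))
    return t * t, (1, n - 1)


# Hypothetical mean amplitudes (microvolts) for four participants.
unrel_iconic = [-3, -4, -5, -4]
trans_iconic = [-1, -1, -1, -1]
unrel_non = [-2, -2, -2, -2]
trans_non = [-1, -1, -1, -1]

f_value, df = interaction_f(unrel_iconic, trans_iconic, unrel_non, trans_non)
print(round(f_value, 2), df)
```

With real data the same function would take one value per participant and condition, so 20 deaf signers would yield degrees of freedom of (1, 19), matching the form of the statistics reported above.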

1000 – 1400 ms time epoch

In this final epoch the omnibus ANOVA revealed a main effect of translation priming (F(1,42) = 36.28, p < .0001), as well as several interactions including priming x laterality x anteriority (F(4,168) = 10.85, p < .001) and group x iconicity x priming (F(1,42) = 5.86, p = .02).

Because of the interactions involving the group variable we ran follow-up analyses separately for the two groups. In the hearing learners we found a significant main effect of priming (F(1,23) = 25.79, p < .001) with unrelated targets producing more negative-going waves than translation targets. There was also a significant interaction of priming x laterality x anteriority (F(4,92) = 4.96, p = .005), indicating that the translation effect was now larger over more anterior midline sites (see Figure 5). There was also an interaction between priming and iconicity (F(1,23) = 7.24, p = .013) and between priming x iconicity x laterality x anteriority (F(4,92) = 3.09, p = .02). To understand these latter interactions, we directly compared the priming effect (Unrelated – Translation) for iconic and non-iconic target signs. In this analysis there was a main effect of iconicity (F(1,23) = 7.24, p = .013) and an interaction of iconicity x laterality x anteriority (F(4,92) = 3.09, p = .045) indicating that in hearing learners the ERP priming was significantly larger for non-iconic signs than iconic signs especially over more anterior midline sites (see Figures 7 and 8).

In the deaf signers there was also a main effect of priming (F(1,19) = 13.73, p = .0015) and a priming x laterality interaction (F(2,38) = 4.55, p = .028) indicating that unrelated signs produced more negative-going ERPs than translation signs especially over left hemisphere and midline sites. However, there were no interactions between priming and iconicity in this epoch (all ps > .1 – see Figures 7 and 8).

Discussion

To examine neurocognitive processing during the earliest stages of learning a new sign language, we taught hearing adults who were initially naïve to ASL a small vocabulary of ASL signs over two laboratory sessions. Included in the target signs were subsets of 40 highly iconic signs and 40 non-iconic signs. The hearing learners then completed a cross-modal translation recognition task (English – ASL) in a subsequent laboratory session, during which ERPs were recorded. Deaf signers who learned ASL natively or early in childhood (before age 7) also performed the cross-modal translation recognition task in order to identify how ERP effects of priming and iconicity are modulated by language proficiency.

Behavioral data from the learning protocols showed that hearing learners’ accuracy was quite high and that reaction times decreased across learning sessions for the forced-choice translation task, indicating that participants were successful in learning the meaning of the ASL signs. Hearing learners were faster and more accurate to respond to iconic signs than non-iconic signs in all sessions, which is consistent with previous studies showing that highly iconic signs are more easily recognized by new learners of a sign language than non-iconic signs (e.g., Baus et al., 2013; Lieberth & Gamble, 1991). The difference in reaction times between iconic and non-iconic signs narrowed across testing sessions, indicating that the advantage hearing learners have in recognizing iconic signs diminishes relatively quickly as learning progresses.

Behavioral data from the cross-modal translation recognition task revealed that hearing learners displayed faster response times to target signs when the prime word was the translation of the target than when the prime word was unrelated to the target, but only when the sign was iconic. Non-iconic signs elicited similar (slower) response times whether the prime was the translation or an unrelated word. Translation priming may not have occurred for non-iconic signs because recognition of these signs was so slow that any priming effect was swamped by protracted sign recognition. Not surprisingly, the deaf signers were much faster than hearing learners (by an average of 374 ms) when making translation decisions, and, in contrast to the hearing learners, they showed translation facilitation effects for both iconic and non-iconic signs. For deaf signers, iconicity did not modulate behavioral priming effects, consistent with Bosworth and Emmorey (2010), who reported that iconicity did not alter semantic priming for deaf signers in a lexical decision task.

The ERP results from the cross-modal translation task generally complemented the behavioral results. For hearing learners, no translation priming effects were observed in the earliest time window (400–600 ms). Recall that sign onsets occurred roughly 500 ms after the video onset, and thus the lack of translation priming in this early epoch may have been due to slow sign recognition by the hearing learners. Previous research indicates that the N400 response may be delayed in less proficient language users compared to highly proficient users (e.g., Midgley et al., 2009a). In the next time window (600–800 ms), hearing learners showed a translation priming effect for the iconic signs (greater negativity for targets preceded by unrelated than translation prime words), but this effect was not significant for the non-iconic signs. This pattern is consistent with the response time data, i.e., facilitation was only observed for iconic signs. In the last two epochs (800–1000 ms and 1000–1400 ms), hearing learners exhibited translation priming effects for both iconic and non-iconic target signs (see Figure 7) with a larger priming effect in the final epoch for non-iconic signs. This pattern suggests that in the earliest stages of sign learning, semantic access for non-iconic signs is delayed compared to iconic signs.

Overall, these results suggest that for beginning learners of ASL, iconicity is a particularly salient feature of signs. Highly iconic signs are recognized earlier and may be more easily integrated into an emerging ASL lexicon. However, an alternative possibility is that the translation priming effects we observed for iconic signs are unrelated to language learning and simply reflect a type of congruency effect for iconic gestures and English words. For example, Wu and Coulson (2005; 2011) found reduced negativity (N450) to iconic gestures that were preceded by a congruent context (a cartoon event that matched the gesture depiction) compared to an incongruent context (an unrelated cartoon event). Wu and Coulson (2005) argued that the semantic congruency effect for the cartoon events and gestures belongs to the N400 class of negativities which are responsive to manipulations of semantic relatedness across a range of stimulus types (e.g., words, pictures). It is possible that such an N400-like priming effect might be observed for the iconic signs in the present experiment without any prior ASL instruction. However, we view this possibility as unlikely because written words are not visually depictive (unlike cartoons), and the meaning of most of the iconic signs was not readily apparent (i.e., transparent; Sehyr & Emmorey, 2019). Thus, ASL-naïve participants might not be able to easily (or quickly) recognize the relationship between the meaning of the English word and the related iconic sign. Furthermore, the fact that we observed ERP priming effects for non-iconic signs (whose meanings are not guessable) indicates that the beginning learners were indeed learning the ASL signs and developing a small ASL lexicon.

In the latest two time windows (800–1000 ms and 1000–1400 ms), the scalp distribution of the translation priming effect in the hearing learners differed somewhat for iconic and non-iconic signs. The priming effect was more anterior for the non-iconic signs (see Figures 7 and 8), and overall, the non-iconic signs elicited a stronger frontal negativity and posterior positivity than the iconic signs. Although the iconic and non-iconic signs were matched for ASL frequency, imageability, and concreteness, it is possible that some other factor associated with the different sign types might have affected the distribution of the neural response during sign recognition, such as semantic differences or distinct strategies for translation decisions in this late time window.

Because the hearing learners in the present study all performed very well and had very high accuracy scores during the training sessions, it was not possible to create groups of “fast” and “slow” learners (e.g., Yum et al., 2014), or otherwise separate the hearing learners based on ability or proficiency of ASL L2 acquisition. Thus, future studies with a similar focus may want to increase the difficulty of the learning sessions in order to induce more variability in performance, which might reveal how iconicity and interactions between priming and iconicity are modulated by aptitude for learning a sign language. It is also worth noting that the associative learning tasks in the present study were self-paced, which allowed participants to spend as much time as they needed to process each sign before progressing to the next sign. This self-paced sign production learning likely contributed to participants’ high scores, so future studies may consider placing a time limit on the associative learning tasks to increase task difficulty.

In contrast to hearing learners, deaf signers exhibited translation priming (greater negativity to unrelated targets compared to translation targets) in the earliest time window (400–600 ms), and the priming effect was not modulated by sign iconicity. The priming effect continued into the 600–800 ms time window, and again similar priming was observed for both iconic and non-iconic signs. The third epoch (800–1000 ms) was the only time window in which an interaction between priming and iconicity was observed in the deaf signers. In this late time window, priming effects were larger for iconic than non-iconic signs. One possible explanation for this late effect (which was approximately 400 ms later than the earliest effects of translation priming) is that it reflects some kind of post-lexical processing whereby the deaf signers recognize the iconicity manipulation.

In sum, hearing learners within the first few hours of L2 ASL instruction are quicker and more accurate at recognizing iconic signs than non-iconic signs, but this advantage diminishes as learning progresses. ERP priming effects occurred earlier for iconic than non-iconic signs, suggesting faster lexical access for iconic signs. Thus, iconic signs may be more easily integrated into an emerging lexicon for sign language learners. Deaf signers showed translation facilitation effects in the RTs for both iconic and non-iconic signs and exhibited earlier ERP translation priming effects compared to hearing learners. ERP translation priming effects were unaffected by iconicity until the 800 – 1000 ms time window. Within this time window, the effects of priming were larger for iconic signs. Because deaf signers showed main effects of translation priming before any effects of iconicity were seen, we suggest that deaf signers did not use a sign's iconicity to facilitate lexical access, but were able to recognize sign iconicity later in processing. Overall, the results indicate that iconicity speeds lexical access for beginning sign language learners, but not for proficient deaf signers.

Acknowledgements

This work was supported by grants from the National Institutes of Health (R01 DC010997 and HD25889). We thank Lucinda O'Grady Farnady and Allison Bassett for help carrying out this study. We also thank all of the study participants, without whom this research would not be possible.