Recent considerations of aptitude for second language (L2) research include implicit language aptitude (e.g., Granena, 2013; Suzuki & DeKeyser, 2017), a multifaceted construct defined as “cognitive abilities that facilitate implicit learning and processing of an L2” (Granena, 2020, p. 7). Among the various implicit learning and memory abilities (Reber, 2013; Squire & Dede, 2015), theoretical perspectives of L2 acquisition have posited a role for procedural memory and knowledge (DeKeyser, 2020; Paradis, 2009; Ullman, 2020). Given the implicit nature of procedural memory, understanding its role in L2 should be informative to perspectives on implicit language aptitude. Indeed, empirical research that has specifically investigated the role of procedural memory in L2 grammar suggests that individual differences in procedural memory learning ability are associated with L2 development at least at later stages of learning (see Buffington & Morgan-Short, 2019; Hamrick et al., 2018). This body of research has used various assessments of procedural memory learning ability but has yet to establish the reliability and validity of these assessments, which is critical for the internal validity of each study as well as for the robustness of conclusions that can be drawn across studies. Accordingly, in the present study we examine the reliability and validity of assessments commonly used in L2 studies that explicitly aimed to examine the role of procedural memory in L2.
Although we conceptualized this study within a framework focused on procedural memory learning ability, the findings may also have important implications for implicit language aptitude (Granena, 2020) and implicit learning more generally (Kalra et al., 2019).

PROCEDURAL MEMORY AND ITS RELATION TO L2 ACQUISITION

Extensive behavioral, neurophysiological, and neuroimaging work has provided evidence for dissociable learning and memory systems (Eichenbaum, 2012; Gabrieli, 1998; Reber, 2013; Squire, 1994; Squire et al., 1994; Ullman, 2004, 2016; Ullman et al., 2020; Willingham et al., 2002). Here, we focus on procedural memory and use a general conceptualization of procedural memory gleaned from several perspectives (e.g., Eichenbaum, 2012; Gabrieli, 1998; Squire & Dede, 2015; Ullman et al., 2020). Specifically, procedural memory is considered to be one type of implicit learning and memory system that supports the acquisition of cognitive and motor skills and habits.Footnote 1 Procedural memory may be contrasted with other memory systems such as declarative memory, which supports the acquisition of facts and personal experiences (Eichenbaum, 2012; Gabrieli, 1998; Squire & Dede, 2015; Ullman et al., 2020).

Learning supported by procedural memory shows several cognitive and neuroanatomical characteristics. For one, learning in procedural memory is believed to be implicit, in that it does not involve conscious awareness (Ullman et al., 2020). Relatedly, learning in procedural memory is not supported by attention, at least for cognitive skills, and indeed attention may interfere with learning in procedural memory for cognitive skills (Foerde et al., 2006). The development of knowledge in procedural memory occurs gradually as opposed to the relatively fast acquisition of knowledge in declarative memory (Ullman et al., 2020). Additionally, knowledge in procedural memory is typically encapsulated, meaning that it is generally inflexible with respect to the contexts in which it can be applied (Squire & Dede, 2015). Finally, neuroanatomically, procedural memory relies on a fronto-striatal circuit (including frontal cortex, the basal ganglia, and the thalamus) as well as other neural substrates such as the cerebellum, as compared to declarative memory, which relies on the medial temporal lobe and connected cortical regions (Eichenbaum, 2012; Ullman et al., 2020).

Three theoretical perspectives predict a role for procedural memory and knowledge in L2 acquisition: Skill Acquisition Theory (DeKeyser, 2020), Ullman’s Declarative/Procedural Model (Ullman, 2020), and Paradis’ Neurolinguistic Theory of Bilingualism (Paradis, 2009). Although there are important differences among these perspectives (Buffington & Morgan-Short, 2019; Morgan-Short & Ullman, in press), they agree in viewing procedural memory and knowledge as involved in the fluent production and comprehension of grammar in a second language, at least at higher levels of proficiency. Empirical research has begun to examine the association between procedural memory learning ability and L2 grammatical development (Antoniou et al., 2016; Ettlinger et al., 2014; Faretta-Stutenberg & Morgan-Short, 2018; Hamrick, 2015; Morgan-Short et al., 2014; Morgan-Short et al., 2015; Pili-Moss et al., 2020; Suzuki, 2018). Indeed, a recent meta-analysis has shown that procedural memory learning ability is associated with L2 grammar abilities at higher, but not lower, levels of proficiency (Hamrick et al., 2018).

As this research expands to provide a more fine-grained understanding of the role of procedural memory in L2, the robustness and the interpretability of the findings will naturally depend on the validity and reliability of the tasks and measures used to assess procedural memory learning ability, among other factors. In examining the empirical research that has the explicitly stated purpose of testing the role of procedural memory in L2, we find a number of different tasks and measures (Table 1),Footnote 2 including different tasks that have been used by our research team.Footnote 3 To date, four tasks have been used in L2 procedural memory research: the Alternating Serial Reaction Time task (ASRT; J. H. Howard & D. V. Howard, 1997), the dual-task version of the Weather Prediction Task (DT-WPT; Foerde et al., 2006; based on the single-task version, Knowlton et al., 1994; Knowlton et al., 1996), the Tower of London (TOL; Kaller et al., 2012; Shallice, 1982), and the Serial Reaction Time task (Hamrick, 2015; Lum et al., 2012; based on the task originally reported in Nissen & Bullemer, 1987). For some tasks, researchers have also used different measures of learning. For example, for the TOL, Ettlinger et al. (2014) quantified improvement on the task as the overall trial solution time on a second administration of the task, whereas Morgan-Short et al. (2014) measured the change in initial think time between the first and last trials of each block on one administration of the task (and combined this measure with the measure from the DT-WPT to create a composite score).
The use of different tasks and measures is not problematic in and of itself. To the extent that each task is a valid measure of the construct of interest, results that are reproduced across these tasks suggest generalizability of the findings. However, if a field of inquiry is built upon different tasks that have not been validated, then neither the internal validity of each study nor the overall validity of the conclusions drawn across studies can be known. In addition to validity, it is vital that the reliability of each task be established so that we know that the construct of interest is measured consistently. As inquiry about the role of procedural memory in L2 expands, it is important to consider evidence for the validity and reliability of the tasks being used to assess procedural memory learning ability.

TABLE 1. Studies examining the relationship between L2 acquisition and procedural memory

Note: Shows previous studies that examined the role of procedural memory in L2. TOL = Tower of London; DT-WPT = Dual-Task Weather Prediction Task; ASRT = Alternating Serial Reaction Time; SRT = Serial Reaction Time.

a Pili-Moss et al. (2020) analyzed unreported data from the Morgan-Short et al. (2014) study.

b For technical reasons, some participants in Tagarelli et al. (2016) completed the ASRT, and others completed the SRT. Scores for both tasks were standardized for analysis.


EVIDENCE SUPPORTING THE RELIABILITY AND VALIDITY OF PROCEDURAL MEMORY TASKS

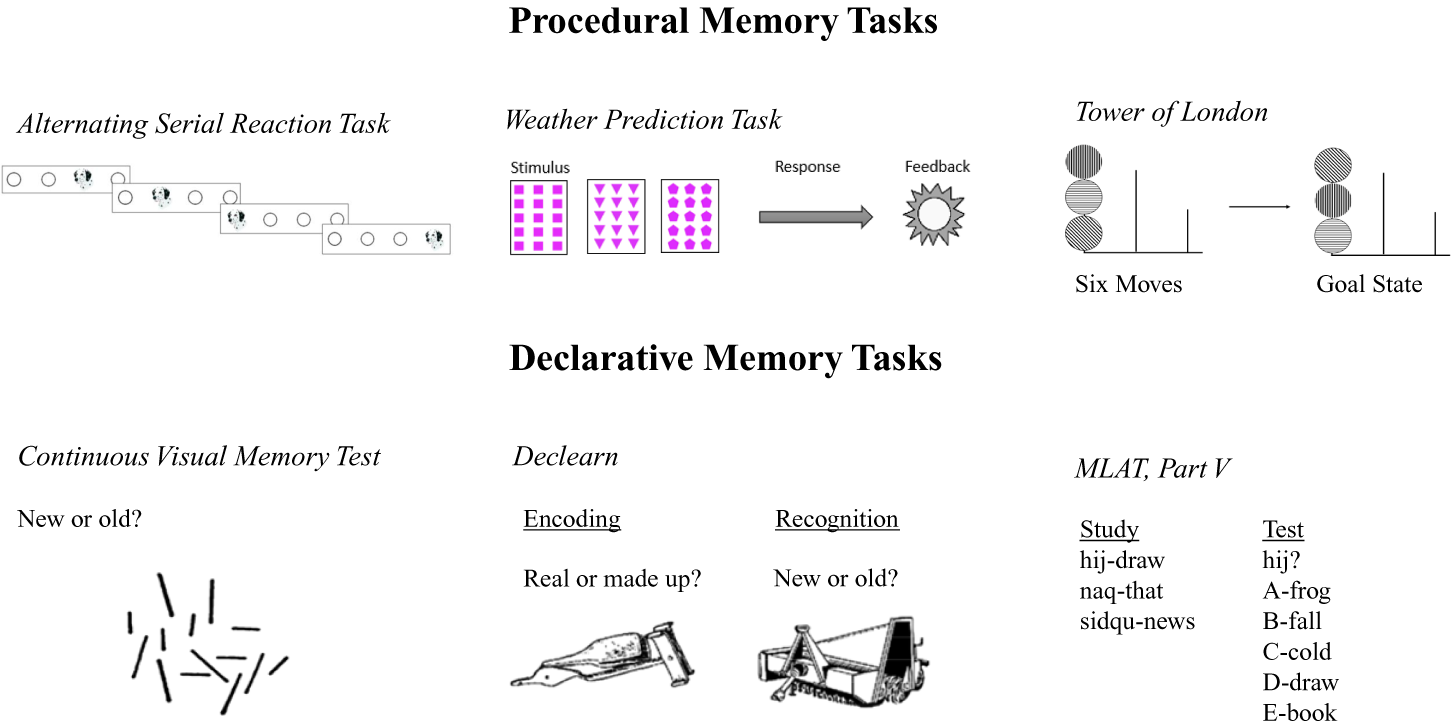

What is the evidence for the validity and reliability of the tasks that have been used to assess procedural memory learning ability in L2 research? Here we consider the most commonly used tasks: the ASRT, the DT-WPT, and the TOL (see Figure 1 for images of these tasks).Footnote 4 Currently, there is limited evidence for the reliability of these tasks, especially for the specific measures that have been used in the L2 literature. Support for validity is largely based on (a) the design of the tasks, such as the instructions provided to participants, the design of the stimuli, and/or dual-task procedures (described in the following text and detailed in the “Methods” section), and (b) previous research that shows how performance on these tasks reflects the characteristics of procedural memory and may involve the engagement of the particular neural substrates tied to procedural memory. In the text that follows we review the characteristics of the task design and typical patterns of task performance for the ASRT, DT-WPT, and TOL as well as research findings indicating that neural substrates associated with procedural memory may underpin performance on these tasks.

Figure 1. Procedural and declarative tasks.

Note: Shows sample images from each of the procedural and declarative memory learning ability tasks used in the present study.

ALTERNATING SERIAL REACTION TIME TASK

The ASRT is a sequence learning task in which an item of the sequence consists of a filled-in circle in a row of four circles (see Figure 1 for examples of circles filled in with dog heads). The sequence in which the circles are filled in follows a second-order pattern where patterned trials alternate with random trials. As such, participants see a repeating sequence such as 3r1r4r2r, where the numbers correspond to the location of the filled-in circle and “r” represents a random location. Participants are instructed to press a key corresponding to the location of the filled-in circle as quickly and accurately as possible. Learning is typically assessed by the difference in reaction times to target versus nontarget trials. For example, J. H. Howard and D. V. Howard (1997) examined reaction times on pattern versus random trials. Song, Howard, & Howard (2007) examined reaction times to highly frequent triplets of trials versus low-frequency triplets, and Nemeth et al. (2013) examined reaction times to high versus low frequency random triplets, among other measures. Regarding the reliability of the ASRT, a previous study demonstrated test-retest reliability (r = .46, p = .04) based on high- versus low-frequency triplets (Stark-Inbar et al., 2017). Within L2 research on procedural memory (Table 1), Faretta-Stutenberg and Morgan-Short (2018) reported that internal consistency reliability was acceptable (split-half reliability based on Spearman-Brown correlation = .921) based on all items (i.e., pattern and random items combined) split between the first and second halves of the task. However, additional analysis of the reliability of the specific learning measure based on the pattern versus random difference score is warranted.
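The alternating structure described above can be illustrated with a short sketch. This is not the task software used in any of the cited studies; the function name `make_asrt_sequence`, the number of cycles, and the fixed random seed are assumptions made only for illustration. Pattern trials follow the repeating order 3, 1, 4, 2, and each is followed by a trial at a randomly chosen location.

```python
import random


def make_asrt_sequence(pattern, n_cycles, n_locations=4, seed=0):
    """Build a trial list in which pattern trials alternate with random trials.

    Returns a list of (location, trial_type) tuples. The seed is fixed only so
    that the illustration is repeatable (an assumption, not part of the task).
    """
    rng = random.Random(seed)
    trials = []
    for _ in range(n_cycles):
        for location in pattern:
            # Pattern trial: location is dictated by the repeating sequence.
            trials.append((location, "pattern"))
            # Random trial: any of the locations, chosen at random.
            trials.append((rng.randint(1, n_locations), "random"))
    return trials


# Two cycles of the example sequence 3r1r4r2r from the text.
trials = make_asrt_sequence(pattern=[3, 1, 4, 2], n_cycles=2)
for location, trial_type in trials[:8]:
    print(location, trial_type)
```

Because every other trial is random, the regularity spans nonadjacent trials, which is what makes the pattern a second-order one.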

Regarding the ASRT’s validity as a measure of procedural memory learning ability, previous research provides evidence for the use of procedural memory in acquiring the sequence in this task. In J. H. Howard and D. V. Howard (1997) learning was characterized by reaction times that gradually became faster on patterned trials than on random trials, despite the fact that participants were unable to accurately describe the regularity in the sequence, suggesting that their knowledge may have been implicit. Regarding the engagement of neural substrates on the ASRT, evidence appears to be consistent with the use of procedural memory (for review see J. H. Howard & D. V. Howard, 2013), although research has focused on triplet measures with the ASRT along with a related Triplet-Learning Task (J. H. Howard et al., 2008), perhaps in part because of the lack of clear neuroimaging contrasts with the pattern versus random items on this task. J. H. Howard and D. V. Howard (2013) review neuroimaging and neuropsychological studies on the ASRT, among other lines of research (e.g., genetic factors). Concerning neuroimaging, connections between the caudate nucleus, part of the basal ganglia, and the dorsolateral prefrontal cortex have been implicated in ASRT learning. Relatedly, neuropsychological research points to ASRT learning deficits in patients who suffer from corticobasal syndrome, which involves degeneration in the basal ganglia. Overall, behavioral and neural research on the ASRT is suggestive of the use of procedural memory due to gradual, implicit learning that appears to be supported by the basal ganglia.

WEATHER PREDICTION TASK

In the WPT, participants are instructed to predict the “weather” (which is a choice between fictional “sunshine” or “rain”) based on a combination of cues (see Figure 1 for example cards with geometric shapes serving as cues). Each combination of cues is associated with a certain probability of sunshine or rain. For example, a combination of a card with circles and a card with squares may be associated with an 80% chance of sunshine. As a secondary distractor task, participants are also tasked with keeping track of the number of high tones that occur during each trial (Foerde et al., 2006). Learning is typically assessed by examining whether participants’ predictions of “sunshine” or “rain” are optimal based on the probabilistic cue-outcome associations. For example, Foerde et al. counted optimal responses as accurate on a probe block administered after the task. Kalra et al. (2019) calculated the optimal response rate versus chance on each block during the task. Test-retest reliability on the learning measure used in the first and second administrations of the task (i.e., optimal response rate vs. chance on the third block of a four-block version of this task) showed that the two administrations were significantly correlated: r = .39, p = .002 (Kalra et al., 2019). Within L2 research on procedural memory (Table 1), Faretta-Stutenberg and Morgan-Short (2018) reported that the internal consistency of the WPT was acceptable (Cronbach’s alpha = .746) for performance on the final dual-task block. However, additional evidence for the internal consistency for this specific measure would further inform our understanding of its reliability.
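Scoring a response as optimal can be sketched as follows. This is a minimal illustration rather than the scoring code of any cited study: the function name `score_optimal`, the data layout, and the example probabilities are assumptions. A response counts as optimal when it matches the more probable outcome for the cue combination shown, and trials with a 50% probability have no optimal answer, so they are skipped here (the Analysis section of this article reports excluding such trials).

```python
def score_optimal(trials):
    """Return the proportion of optimal responses in a block.

    `trials` is a list of (p_sunshine, response) tuples, where p_sunshine is
    the probability of sunshine for the cue combination shown and response is
    "sunshine" or "rain". The layout is hypothetical, chosen for illustration.
    """
    scored = []
    for p_sunshine, response in trials:
        if p_sunshine == 0.5:
            # Neither outcome is more likely, so no response is optimal.
            continue
        optimal = "sunshine" if p_sunshine > 0.5 else "rain"
        scored.append(response == optimal)
    if not scored:
        raise ValueError("no scorable trials in this block")
    return sum(scored) / len(scored)


block = [
    (0.80, "sunshine"),  # optimal
    (0.80, "rain"),      # not optimal, even if rain happened to occur
    (0.20, "rain"),      # optimal
    (0.50, "sunshine"),  # skipped
    (0.35, "rain"),      # optimal
]
accuracy = score_optimal(block)
print(accuracy)
```

Note that optimality is defined against the cue probabilities, not against the outcome that actually occurred on a given trial.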

Evidence from previous research suggests that the DT-WPT may be a valid assessment of procedural memory learning ability. Foerde and colleagues examined cognitive learning characteristics and neural substrates of performance on the DT-WPT as compared to a single-task WPT. Regarding cognitive characteristics, the dual-task version reduced the amount of declarative, explicit knowledge of cue-outcome associations compared to the single-task version (Foerde et al., 2006; Foerde et al., 2007), even as implicit knowledge of cue-outcome associations was evidenced. Neuroimaging results (Foerde et al., 2006) suggested that performance on the dual-task, but not the single-task, version was positively associated with activity in the striatum, whereas the single-task, but not the dual-task, version showed a positive relationship with activity in the medial temporal lobe. Neuropsychological evidence also suggests that WPT performance (for a single-task version) depends on neural substrates associated with procedural memory. Knowlton et al. (1996) showed that patients with Parkinson’s disease, which involves basal ganglia degeneration, were impaired relative to healthy controls on this task, whereas amnesic patients, who had damage to the medial temporal lobe, were unimpaired on the task despite a lack of memory for the training sessions.Footnote 5 A role for procedural memory neural substrates in learning on the WPT is also supported by other studies (see Batterink et al., 2019 for review). Overall, these patterns of results suggest the use of procedural memory on the WPT, especially for the dual-task version.

TOWER OF LONDON

In the TOL, participants are instructed to match a goal configuration of colored circles that rest on pegs (Figure 1). In producing the goal configuration, participants are constrained by being able to move only the topmost circle on each peg, and, when moved, the circle will fall to the lowest possible peg position. Participants are instructed to plan their sequence of moves before beginning the first move, and to do their best in matching the goal configuration in the stated number of moves. Performance on the TOL can be quantified by accuracy and various reaction time measures. For example, Unterrainer et al. (2019) examined accuracy as the percentage of the correctly solved problems in the stated number of moves, whereas Unterrainer et al. (2003) examined reaction time measures, including the preplanning time (time from presentation of problem to first move, which is also described as “initial think time”; Morgan-Short et al., 2014) and movement execution time (time from first move to solution of the problem). Psychometric work with versions of the TOL that are similar to the one administered in the present study has demonstrated acceptable levels of reliability specific to internal consistency for accuracy on a 32-item TOL (reliability estimates ranged from .691 to .828; Kaller et al., 2012) and on a 24-item TOL (overall reliability estimates ranged from .715 to .757; Unterrainer et al., 2019). In L2 research, analysis of the TOL has primarily been based on reaction time measures from the task. Thus, reliability should be examined for these measures.

Some research with the TOL suggests that it can be used as a valid measure of procedural memory learning ability, even as it has also been commonly used to examine processes in other cognitive areas, such as problem solving (i.e., planning ability; see, for example, Kaller et al., 2011). In research examining the cognitive characteristics of learning on the TOL, Ouellet et al. (2004) demonstrated that participants gradually improve in both accuracy and the time to complete this task over blocks of trials. They also demonstrated an improvement on goal configurations that were repeated compared to nonrepeated goal configurations, despite the fact that participants were unable to describe specific information about the repeated sequences, suggesting the use of implicit knowledge to solve these problems. As such, behavioral data indicate that participants may rely on procedural memory when acquiring the TOL due to the gradual improvement over time and use of implicit knowledge to solve the problems. In a neuroimaging study, Beauchamp et al. (2003) provided evidence that improved performance on the TOL is associated with activity in the fronto-striatal network, among other brain regions. The use of the fronto-striatal network on the TOL, along with early activation of other brain structures involved in procedural memory (e.g., cerebellum), is consistent with the use of procedural memory on this task. Lastly, neuropsychological evidence indicates that patients with Parkinson’s disease, which affects procedural memory neural circuits, show an impairment on the TOL that is associated with the severity of the disease (Owen et al., 1992).
Also, whereas healthy controls rely on a fronto-striatal network when acquiring the TOL, patients with Parkinson’s disease show reduced activity in the fronto-striatal network and elevated activity in brain regions associated with declarative memory (Beauchamp et al., 2008; Dagher et al., 2001). A similar pattern of findings has also been evidenced in patients with obsessive compulsive disorder, which is associated with frontostriatal abnormality (van den Heuvel et al., 2005). Taken together, the pattern of results across cognitive and neuroimaging evidence suggests that procedural memory may underlie learning on the TOL.

MOTIVATION AND RESEARCH QUESTIONS

In sum, previous research has provided some evidence for the reliability and validity of the ASRT, the DT-WPT, and the TOL as measures of procedural memory learning ability. However, regarding reliability, further examination is needed as the measures examined in previous research are largely not the same measures typically used in L2 research about procedural memory. Regarding validity, the overall pattern of evidence is consistent with the use of these tasks as measures of procedural memory learning ability. For one, the tasks show reasonable face validity (a subjective evaluation of whether the task captures the construct; Morling, 2015) in that all three tasks seem to involve gradual, implicit learning, which is characteristic of learning supported by procedural memory. In addition, some evidence for construct validity (how well the task measures the construct it claims to measure; Morling, 2015) is provided with neuroimaging and neuropsychological research that shows the engagement of neural substrates associated with procedural memory during task performance (although these neural substrates may also be engaged by other cognitive processes). However, very little evidence exists regarding the convergent and discriminant validity of these tasks, that is, whether the tasks are related to each other (as one might expect if they all measure a broad procedural memory learning ability construct) and whether they are unrelated to tasks that measure other constructs (such as declarative memory learning ability). Consequently, the current study directly examines the reliability and the convergent and discriminant validity of these tasks to increase our understanding of their overall reliability and validity in L2 research. Evidence of convergent validity of the tasks would establish that the tasks, though different, each reflect a broad construct of procedural memory learning ability.
Interestingly, a recent study provided preliminary evidence that these tasks may not correlate positively with each other, suggesting that they may not measure the same cognitive ability, although a relatively low number of participants precludes strong conclusions (Buffington & Morgan-Short, 2018). Evidence of discriminant validity would establish that the tasks are not associated with other constructs such as declarative memory. Accordingly, we examined the following research questions:

RQ1 (reliability): Do assessments of procedural memory learning ability demonstrate internal consistency?

RQ2 (convergent validity): Do the assessments of procedural memory learning ability pattern positively together?

RQ3 (discriminant validity): Do assessments of procedural memory learning ability not pattern positively with assessments of declarative memory learning ability?

To test these research questions, we administered all three procedural memory learning ability assessments to participants in a within-subjects design. We also included three assessments of declarative memory learning ability to investigate discriminant validity.

METHOD

PARTICIPANTS

Participants (N = 119) received course credit for participation through the subject pool at the University of Illinois at Chicago. Twenty participants were excluded for reasons such as not following task instructions or extreme performance on a task (see Supplemental Methods for additional information). This left N = 99 participants (58 women, 41 men; average age = 19.30 years; age range = 17–29 years).

MATERIALS

PROCEDURAL MEMORY LEARNING ABILITY

Participants completed three assessments of procedural memory learning ability (Figure 1). For details on all tasks used in the present study, see Supplementary Materials. The first assessment, the ASRT (Csabi et al., 2016), was based on the original task from J. H. Howard and D. V. Howard (1997) and involved responding to the serial location of a target that followed an alternating pattern, such that every other trial was part of the pattern. Learning was measured by subtracting the reaction time on pattern trials from the reaction time on random trials. The second assessment of procedural memory was the dual-task version of the WPT (Foerde et al., 2006; based on the single-task version; Knowlton et al., 1994; Knowlton et al., 1996). Participants predicted a weather outcome (sunshine or rain) using cue cards that were probabilistically associated with the weather outcomes. The dual-task component was a tone-counting task, which has been shown to impede the use of declarative memory on the weather prediction component of the task (Foerde et al., 2006). Performance was measured by accuracy on the final dual-task block. Responses were scored as accurate if they matched the optimal, or expected, response (Gluck et al., 2002). After completing the WPT, participants completed a debriefing measure of explicit knowledge (Cue Select). The final assessment of procedural memory was the TOL (Kaller et al., 2012). In this task, participants were presented with a configuration of colored circles and instructed to move the circles to match a goal configuration within a specified number of moves. To measure improvement, participants repeated the task.
Two dependent measures for this task have been used in previous L2 empirical work. The first measure (TOL ImpBlock) examines the average percent change in initial think time, or planning time, with a higher percent change representing a greater decrease in initial think time and presumably more procedural learning (used in Morgan-Short et al., 2014). The second measure (TOL ImpNormed) assesses the average total time to match a goal configuration on the second administration of the task, normalized relative to the other participants, as a measure of overall improvement on the task, which may or may not be specific to procedural memory (used in Antoniou et al., 2016; Ettlinger et al., 2014).
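The two TOL measures can be sketched in a few lines. The exact formulas are not given in this section, so the sketch rests on stated assumptions: ImpBlock is taken as the percent decrease in initial think time from the first to the last trial of each block, averaged over blocks, and ImpNormed is taken as a z-score of each participant's mean total solution time on the second administration. The function names and the sample values are hypothetical.

```python
from statistics import mean, stdev


def tol_imp_block(blocks):
    """Average percent decrease in initial think time across blocks.

    `blocks` is a list of lists of initial think times (ms) in trial order.
    Assumption: change is computed from the first to the last trial of each
    block, so larger positive values mean a larger decrease in think time.
    """
    changes = []
    for think_times in blocks:
        first, last = think_times[0], think_times[-1]
        changes.append((first - last) / first * 100.0)
    return mean(changes)


def tol_imp_normed(mean_times):
    """Z-score each participant's mean total time on the second administration.

    `mean_times` maps participant id to mean total solution time. Assumption:
    "normalized relative to the other participants" is read as a z-score
    using the sample standard deviation.
    """
    values = list(mean_times.values())
    group_mean, group_sd = mean(values), stdev(values)
    return {pid: (t - group_mean) / group_sd for pid, t in mean_times.items()}


imp_block = tol_imp_block([[8000, 6000, 4000], [5000, 5000, 4000]])
imp_normed = tol_imp_normed({"p1": 10.0, "p2": 20.0, "p3": 30.0})
print(imp_block, imp_normed)
```

Under this reading, ImpBlock is a within-person change score from a single administration, whereas ImpNormed is a between-person standing on absolute speed, which is one reason the two measures need not behave alike.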

DECLARATIVE MEMORY TASKS

Participants completed three assessments of declarative memory (Figure 1). The first assessment, Part V of the Modern Language Aptitude Test (MLAT-V; Carroll & Sapon, 1959), examined participants’ memory for English translations of novel pseudo-Kurdish words following a brief study phase. The dependent measure was accuracy on a multiple-choice assessment. The second assessment of declarative memory was the Continuous Visual Memory Test (CVMT; Trahan & Larrabee, 1988). Participants were presented with a series of abstract images, some of which were repeated, and tasked with answering whether they had previously seen the image. Performance was measured with the dʹ score. The last assessment of declarative memory, the Declearn task (Hedenius et al., 2013), involved an incidental encoding phase in which participants made real/made-up judgments for images. Following this, they were tested on their recognition memory of the shapes. Recognition performance was measured with the dʹ score.
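For readers less familiar with the dʹ score, it is the difference between the z-transformed hit rate and the z-transformed false-alarm rate. The sketch below uses Python's standard library; the function name `d_prime` and the log-linear correction (adding 0.5 to each count so that rates of exactly 0 or 1 remain finite) are assumptions for illustration, as this section does not state how extreme rates were handled.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Compute d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction is applied to both rates (an assumption made
    here for illustration) so that the inverse normal CDF is always defined.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# A participant who recognizes most old items and rarely endorses new ones.
good = d_prime(hits=18, misses=2, false_alarms=3, correct_rejections=17)
# A participant responding at chance.
chance = d_prime(hits=10, misses=10, false_alarms=10, correct_rejections=10)
print(good, chance)
```

A dʹ near zero indicates that old and new items were not discriminated, and larger positive values indicate better recognition memory independent of response bias.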

PROCEDURE

Participants completed the study over two testing sessions scheduled on separate days. Each session lasted approximately 1.5–2 hours. To avoid fatigue effects of the cognitive tasks, the order of assessments was partially counterbalanced such that assessments in the same session were counterbalanced and no two procedural or declarative memory assessments occurred back to back (see Supplementary Materials for additional details).Footnote 6

ANALYSIS

All data and analyses, including an R script that reproduces the results in the present study, are available through the Open Science Framework at the following webpage: https://osf.io/ux4qs/?view_only=ddb9ed28ad7046f18d55201a087d6e46. Before analyses were run, data were cleaned by items and participants. For the ASRT, reaction times shorter than 100 ms or longer than three standard deviations above the participant’s average reaction time were removed, as were inaccurate trials (9.97% of the data were removed in total). For the DT-WPT, all trials in Block 8 were included except for those that had a 50% probability of sunshine or rain (7.5% of trials). For the CVMT, two participants were identified as outliers, with performance more than three standard deviations below the group mean. We removed those cases entirely from subsequent analyses, as factor analysis with missing data is not recommended (Tabachnick & Fidell, 2013). No data cleaning was required for any other tasks.
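The ASRT cleaning rules and the learning score can be sketched as follows. This is not the authors' R script, which is available at the link above; it is a Python illustration in which the function names, the trial layout, and the sample data are hypothetical. One further assumption is labeled in the code: the three-standard-deviation cutoff is computed from all of a participant's trials before any are removed, since the order of the cleaning steps is not fully specified here.

```python
from statistics import mean, stdev


def clean_trials(trials, min_rt=100.0, sd_cutoff=3.0):
    """Drop inaccurate trials and reaction-time outliers for one participant.

    `trials` is a list of dicts with keys "rt" (ms), "correct" (bool), and
    "kind" ("pattern" or "random"). Assumption: the upper cutoff is based on
    the mean and sample SD of all of the participant's trials.
    """
    rts = [t["rt"] for t in trials]
    upper = mean(rts) + sd_cutoff * stdev(rts)
    return [t for t in trials if t["correct"] and min_rt <= t["rt"] <= upper]


def learning_score(trials):
    """Mean RT on random trials minus mean RT on pattern trials."""
    pattern = [t["rt"] for t in trials if t["kind"] == "pattern"]
    rand = [t["rt"] for t in trials if t["kind"] == "random"]
    return mean(rand) - mean(pattern)


# Hypothetical participant: 20 ordinary trials plus three that should go.
raw = []
for _ in range(10):
    raw.append({"rt": 400.0, "correct": True, "kind": "pattern"})
    raw.append({"rt": 450.0, "correct": True, "kind": "random"})
raw.append({"rt": 5000.0, "correct": True, "kind": "random"})   # too slow
raw.append({"rt": 50.0, "correct": True, "kind": "pattern"})    # too fast
raw.append({"rt": 420.0, "correct": False, "kind": "pattern"})  # inaccurate

cleaned = clean_trials(raw)
score = learning_score(cleaned)
print(len(cleaned), score)
```

A positive score means faster responses on pattern trials than on random trials, which is the direction expected if the sequence was learned.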

Details of all analyses are reported in the “Results,” but here an overview and rationale for the “Results” section is provided. To examine our first research question, we calculated reliability for each dependent measure, with acceptable reliability defined at .70 or greater (Lance et al., Reference Lance, Butts and Michels2006; Nunally & Bernstein, Reference Nunally and Bernstein1978). Generally, if the reliability for a dependent measure was below this threshold, we excluded it from analysis for RQs 2 and 3, although we made an exception for ASRT (see “Results,” “RQ1: Reliability Analysis” subsection). Following the insightful critiques of two reviewers, we calculated reliability differently depending on whether the measure of learning ability was taken over the whole task (i.e., ASRT, TOL, and CVMT) versus at the end of the task (i.e., DT-WPT, MLAT, and Declearn). The reason for this is that learning should occur in each task, which leads to dependence among the test items and may degrade interpretations of reliability when it is calculated over the whole task. Thus, for measures of learning taken over the whole task we calculated Spearman–Brown split-half reliability based on every other item in serial order. This approach controls for the serial dependence among items, as each half involves items across the whole task. For measures of learning taken at the end of the task, we calculated Cronbach’s alpha. To provide initial evidence regarding our second and third research questions about convergent and discriminant validity, respectively, a correlation matrix was computed using Spearman correlations among all assessments in the study. For our main analysis, we conducted an exploratory factor analysis (EFA)Footnote 7 on the Spearman correlation matrix to test if the tasks factor into the expected procedural and declarative memory factors.
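The two reliability coefficients named above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the input is assumed to be a participants-by-items score table in serial item order, each half is scored as a simple sum (for a difference-score measure such as the ASRT, each half would instead be scored as its own difference score), and all function names are hypothetical.

```python
from statistics import mean, variance


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman_brown_split_half(scores):
    """Split-half reliability from every other item, stepped up to full length.

    scores: one row per participant, one column per item in serial order.
    """
    first_half = [sum(row[0::2]) for row in scores]   # items 1, 3, 5, ...
    second_half = [sum(row[1::2]) for row in scores]  # items 2, 4, 6, ...
    r = pearson(first_half, second_half)
    return 2 * r / (1 + r)  # Spearman-Brown correction


def cronbach_alpha(scores):
    """Cronbach's alpha from item variances and total-score variance."""
    k = len(scores[0])
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Because the alternating split draws both halves from the full length of the task, any gradual change in performance over time contributes roughly equally to each half.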

To perform the EFA, assumptions including multivariate normality and sampling adequacy (i.e., sufficiently large relationships among variables in the correlation matrix, examined with the Kaiser–Meyer–Olkin Test) were evaluated. Principal Axis Factoring extraction was chosen because this extraction method does not depend on multivariate normality (M. C. Howard, Reference Howard2016). Following this, factor analysis was performed with a scree plot analysis with converging evidence from parallel analysis, optimal coordinates, and the acceleration factor (Horn, Reference Horn1965; Raiche et al., Reference Raiche, Riopel and Blais2006; Raiche & Magis, Reference Raiche and Magis2020). Factors were then rotated with an oblique rotation (quartimin), which allows the factors to correlate with each other (M. C. Howard, Reference Howard2016; Yong & Pearce, Reference Yong and Pearce2013). To conduct the analyses, we used R version 3.6.0 (R Core Team, 2019; see Supplementary Materials for citations of packages used).

RESULTS

RQ 1: RELIABILITY ANALYSIS

The first step in the analysis was to evaluate RQ 1: Do assessments of procedural memory learning ability demonstrate internal consistency? Results of the reliability analysis are displayed in Table 2 and described in the following text. For thoroughness, descriptive statistics, including means, standard deviations, normality, and learning effects are also displayed in Table 2. Normality was evaluated with histograms, Q-Q plots, and the Shapiro–Wilk test. If the distribution of a measure was skewed based on visual inspection or the Shapiro–Wilk test was significant (indicating a departure from normality), then the measure received an “X” for normality, otherwise the measure received a check, indicating that we considered the measure to be normally distributed. Learning effects were evaluated with 95% confidence intervals on the average performance for each measure. If these intervals do not overlap with chance performance, this is taken as evidence that there was learning on the task. Learning effects are important in order to analyze learning at the group level. If group-level learning is not observed, this raises questions about the task’s validity as a measure of learning (e.g., there may be design issues with the task that prevent participants from learning). For procedural memory learning ability tasks, we also include plots of learning over time in Supplementary Materials.
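The learning-effect check can be illustrated with a short sketch. The text does not state how the 95% confidence intervals were constructed, so the normal-approximation interval used here is an assumption, as are the function names and the convention that higher scores indicate more learning.

```python
from statistics import NormalDist, mean, stdev


def mean_ci(values, level=0.95):
    """Normal-approximation confidence interval for a mean (assumed method)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    m = mean(values)
    half_width = z * stdev(values) / len(values) ** 0.5
    return m - half_width, m + half_width


def shows_learning(values, chance, level=0.95):
    """True when the whole interval lies above chance performance.

    Assumption: higher values mean more learning, so only an interval
    entirely above the chance value counts as a learning effect.
    """
    lower, _ = mean_ci(values, level)
    return lower > chance
```

For example, `shows_learning(scores, chance=0.5)` would correspond to the DT-WPT criterion of 50% correct, and `chance=0` to a pattern-versus-random difference score or to dʹ.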

TABLE 2. Selection of measures to include in final analysis

Note: The units of the dependent measure for each task are shown in parentheses; NA = We note that the CI for TOL ImpNormed does not conceptually reflect a learning effect as scores on this measure do not have a baseline from which to evaluate learning (see main text); SB = Spearman–Brown split-half coefficient; CA = Cronbach’s alpha; CI = confidence interval; *above-chance learning effect.

a The observed split-half reliability for ASRT was negative. As such, instead of the Spearman–Brown correction we applied a correction suggested by Krus and Helmstadter (Reference Krus and Helmstadter1993, eq. 15) for cases in which observed reliability is negative. We also investigated the source of this low reliability. Specifically, we looked at reliability separately for pattern and random items and then compared these reliabilities to the reliability of their difference score. We found that pattern and random items both have acceptable reliability, with values of .96 and .99, respectively. Thus, we suggest that the source of low reliability for ASRT may lie in taking the difference score between pattern and random items.

b To investigate if performance on DT-WPT Block8 might be associated with explicit knowledge developed during the task, we correlated the Block8 measure with the explicit debriefing task, Cue Select. There was a strong, positive correlation between these two tasks, r(97) = .50, p < .001. Thus, performance on the Block8 measure may rely, at least in part, on explicit knowledge, which would not be consistent with the exclusive use of procedural memory on this task.

c For Declearn, the dependent measure for reliability was accuracy, not dʹ, as using an accuracy measure allowed us to calculate Cronbach’s alpha, which is preferred over the Spearman–Brown split-half reliability because Cronbach’s alpha includes all possible split-half reliabilities, which is not done when calculating the Spearman–Brown split-half reliability.

d The Shapiro–Wilk normality test was significant for Declearn (p = .04), but visually the data appear to follow a normal distribution based on a histogram and Q-Q plot. So, the Declearn data could potentially be considered normally distributed.

ASRT evidenced normality and a learning effect that was significantly above chance performance. Chance performance was operationalized as no difference between the reaction times on pattern versus random trials, as measured by a confidence interval overlapping with 0. The reliability score of .42 for the ASRT did not meet our .70 threshold for acceptable reliability based on the pattern versus random difference score, although reliability for the pattern and random trials was acceptable (see Table 2, note a). Thus, overall, the ASRT appears to be an acceptable measure to include in the EFA.

The DT-WPT exhibited normality and a significant learning effect (with chance performance equal to 50% correct when guessing sunshine/rain). Reliability was acceptable according to the .70 threshold. Thus, this measure appears to be valid for use in the EFA.

For the TOL, we examined two dependent measures from previous L2 research. For the first measure, TOL ImpBlock, we included all trials at the beginning or end of a block from the first administration of the task (8 trials). This measure did not suggest a normal distribution and did not evidence a significant learning effect because the 95% confidence interval overlapped with chance performance (0% improvement). Additionally, TOL ImpBlock did not show acceptable reliability (< .70). The second measure, TOL ImpNormed, involved performance on all trials of the second administration (28 trials). Descriptively, TOL ImpNormed does not appear to be normally distributed. Further, because there is no baseline to evaluate performance, there does not appear to be a reasonable way to measure a learning effect on TOL ImpNormed. In other words, the measure assesses learning on the second administration of the task, and thus no value represents chance learning, as would a value of zero if performance were compared between the first and second administrations. However, TOL ImpNormed did exhibit acceptable reliability. For this reason, TOL ImpNormed, but not TOL ImpBlock, was included in the EFA. Hereafter, TOL ImpNormed is referred to simply as TOL.

Next, we evaluated the measures for declarative memory. The first declarative memory measure is MLAT. This measure includes the 24 multiple-choice test items. Descriptively, the MLAT was not normally distributed but did evidence a learning effect (chance performance is 20% correct because each question has five options; expected number of correct answers by chance = 24 questions × 20% = 4.8 questions). Reliability for the MLAT was above the acceptable threshold. Second, scores on the CVMT were based on 96 recognition trials. Descriptively, the CVMT showed both a learning effect (chance dʹ = 0) and normal distribution. Reliability was also acceptable. Third, the Declearn score includes the 128 trials on the recognition task. Descriptively, Declearn may not be normally distributed but participants did exhibit a learning effect (chance dʹ = 0). Reliability for Declearn was based on accuracy (see note c in Table 2) and was well above the minimum threshold. In sum, based on their reliability, each of the declarative memory measures appears to be valid for use in the EFA.

RQS 2 AND 3: CONVERGENT AND DISCRIMINANT VALIDITY

Correlations

As a preliminary investigation of our second and third research questions—convergent validity: do the assessments of procedural memory learning ability pattern positively together? and discriminant validity: do assessments of procedural memory learning ability not pattern positively with assessments of declarative memory learning ability?—we first examined a correlation matrix for all tasks. Results of this correlation matrix are displayed in Figure 2, which shows Spearman correlations among all our tasks. Spearman correlations, which measure the rank order or ordinal relationships among scores on the tasks, were used instead of Pearson correlations because the Spearman correlation can work with ordinal data, does not depend as strongly on normality, and can correct some deviations from linearity because it is based on ranks.
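The rank-based logic of the Spearman correlation can be made concrete with a small sketch: scores are converted to ranks (tied scores share the average rank), and a Pearson correlation is then computed on those ranks. The helper names are hypothetical, and this is an illustration rather than the routine used in the authors' R script.

```python
from statistics import mean


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed scores."""
    return pearson(average_ranks(x), average_ranks(y))
```

A monotonic but nonlinear relationship, such as scores paired with their squares, yields a Spearman coefficient of 1 even though the Pearson coefficient on the raw scores would be lower.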

Figure 2. Intercorrelations for declarative and procedural memory tasks.

Note: Spearman correlations.

.p < .10; *p < .05; **p < .01; ***p < .001

The results suggest a lack of correlations among procedural memory assessments, with no positive correlations and most of the correlations small to negligible in size. As such, the correlation matrix suggests that there may be a lack of convergent validity among the procedural memory learning ability assessments. Regarding discriminant validity, there appear to be three patterns of relevant preliminary results: (a) TOL showed a negative correlation with two of the declarative memory tasks (CVMT and Declearn) that was small-to-medium in size (Cohen, Reference Cohen1992); (b) ASRT did not correlate with any of the declarative memory tasks; and (c) DT-WPT showed positive correlations with all three declarative memory tasks, with effect sizes ranging from small-to-medium to medium in size. These data suggest that TOL and ASRT may show discriminant validity with the declarative memory tasks, but DT-WPT could be patterning with declarative memory, which would be inconsistent with discriminant validity. Finally, we note that, as expected, all three declarative memory tasks (MLAT, CVMT, and Declearn) correlated significantly and positively with each other, with effect sizes ranging from small-to-medium to almost large. Overall, this preliminary analysis suggests that the three procedural memory learning ability assessments may not show convergent validity and that DT-WPT may not show discriminant validity, but in fact may pattern more strongly with the declarative memory learning ability tasks.

Exploratory Factor Analysis

For the final analysis of our second and third research questions we conducted an EFA. The overall Kaiser–Meyer–Olkin (KMO) score was .68 (Table 3), which is above the minimum recommended threshold. Individual tasks also had acceptable KMO scores (>.60) except for ASRT. Some researchers recommend dropping tasks with low KMO scores prior to conducting the EFA (M. C. Howard, Reference Howard2016). However, as all tasks are motivated based on previous literature, and because the number of tasks in the EFA is low to begin with, we retained all the tasks for the EFA.

TABLE 3. Kaiser–Meyer–Olkin scores for the exploratory factor analysis

Next, we created a scree plot that includes supporting evidence from parallel analysis, optimal coordinates, and the acceleration factor to decide how many factors to include in the EFA (Figure 3). Most of the results suggest two factors, and as this is consistent with two factors representing procedural and declarative memory, we conducted the EFA with two group factors.

FIGURE 3. Scree plot for EFA.

Note: Shows scree plot along with converging evidence from parallel analysis, optimal coordinates, and the acceleration factor (Raiche & Magis, Reference Raiche and Magis2020).

Results from this EFA are reported in Table 4. The two factors accounted for 31% cumulative variance, with 23% and 8% for Factors 1 and 2, respectively. These factors correlated weakly at .24. Factor loadings were interpreted according to the following criteria from M. C. Howard (Reference Howard2016): (a) satisfactory factor loadings should load onto a primary factor at a value of .40 or greater; (b) any alternate loadings for a task should not exceed .30; and (c) the difference between the primary and alternate factor loadings for a task should be at least .20. Three tasks clearly loaded onto Factor 1: CVMT, Declearn, and DT-WPT. DT-WPT was not expected to load with CVMT and Declearn, but this is consistent with the correlation matrix. One task clearly loaded onto Factor 2: ASRT. TOL did not meet the threshold for inclusion in Factor 2, but it was trending to a negative loading on Factor 1. MLAT did not load clearly onto either factor.
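The three loading criteria can be expressed as a simple decision rule. The sketch below is illustrative only: the function name is hypothetical, the criteria are applied to absolute loadings (an assumption, as the text does not say how negative loadings were handled), and the example values in the usage note are invented rather than taken from Table 4.

```python
def primary_factor(loadings, primary_min=0.40, alternate_max=0.30, min_gap=0.20):
    """Return the factor a task clearly loads onto, or None if no clear loading.

    loadings: dict mapping factor name -> loading for ONE task.
    Assumption: criteria are applied to absolute values of the loadings.
    """
    ranked = sorted(loadings.items(), key=lambda kv: abs(kv[1]), reverse=True)
    (name, top), *alternates = ranked
    if abs(top) < primary_min:            # criterion (a): primary loading >= .40
        return None
    for _, alt in alternates:
        if abs(alt) > alternate_max:      # criterion (b): alternate loading <= .30
            return None
        if abs(top) - abs(alt) < min_gap:  # criterion (c): gap of at least .20
            return None
    return name
```

With invented loadings, `primary_factor({"F1": 0.55, "F2": 0.10})` returns `"F1"`, whereas `primary_factor({"F1": 0.35, "F2": 0.05})` returns `None` because the primary loading falls short of .40.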

TABLE 4. Factor loadings for exploratory factor analysis

Note: Shows results of EFA with percent variance explained by the model. F1 = first factor; F2 = second factor; h2 = communality (sum of squared factor loadings), or total variance in each task that is accounted for by F1 and F2; u2 = variance in each task not accounted for by the factors, or the task’s uniqueness; bolding indicates loadings (>.40); % variance = proportion of total variance accounted for by the factor.

Given the loadings, Factor 1 suggests a declarative memory learning ability factor, as both Declearn and CVMT loaded onto this factor. Factor 2 had only one task loading with it, ASRT, but none of the other procedural memory learning ability tasks loaded onto this factor and as such it does not clearly suggest a factor of procedural memory learning ability. Overall, the EFA does not appear to suggest two factors of procedural and declarative memory, although it clearly yields one interpretable factor that may represent declarative memory learning ability.

DISCUSSION

Regarding our first research question—do assessments of procedural memory learning ability demonstrate internal consistency?—our results suggest that measures used for the DT-WPT and TOL tasks showed acceptable levels of internal consistency reliability (>.70). The ASRT pattern versus random measure fell below this threshold, although responses to the pattern and random trials themselves were reliable. For our second research question—do the assessments of procedural memory learning ability pattern positively together?—results from our correlation matrix did not provide evidence that these tasks correlate with each other, and the EFA did not show evidence that the tasks loaded onto the same factor. The ASRT showed an acceptable loading on the second factor, but none of the other procedural memory learning ability tasks also loaded onto this factor. Thus, our results suggest that the procedural memory learning ability tasks in the present study do not seem to measure a broad procedural memory construct. Finally, regarding our third research question—do assessments of procedural memory learning ability not pattern positively with assessments of declarative memory learning ability?—the correlation matrix showed no positive correlations with declarative memory learning ability tasks for ASRT and TOL. The DT-WPT correlated positively with all three declarative memory learning ability tasks. Also, the DT-WPT, but not the ASRT or the TOL, loaded onto the first factor in the EFA that also included Declearn and CVMT. This factor seems to be indicative of a declarative memory learning ability factor, as both Declearn and CVMT showed acceptable loadings onto this factor with no cross-loadings onto the second factor. 
Overall, then, for the procedural memory learning ability tasks we found (a) evidence of reliability, except for the ASRT pattern versus random difference measure; (b) a lack of evidence for convergent validity among the tasks; and (c) evidence consistent with discriminant validity as compared to declarative memory learning ability for two of the tasks (ASRT and TOL, but not for DT-WPT).

Although previous L2 research has not systematically addressed these reliability and validity questions about procedural memory learning ability tasks, our findings can be interpreted in light of some findings from L2 research and from related research in cognitive psychology. First, regarding reliability, our results complement previous DT-WPT and TOL results (see literature review in the preceding text) in that their findings of acceptable internal consistency and test-retest reliability are extended to internal consistency for the specific measures used in prior L2 procedural memory work. However, the pattern versus random difference measure of ASRT used in the present study appears to have low internal consistency reliability even as internal consistency for the pattern and random trials was high (see Table 2, note a). This finding is not consistent with previous reliability results for this task, although those findings were either based on the trials themselves or on another ASRT measure (Faretta-Stutenberg & Morgan-Short, Reference Faretta-Stutenberg and Morgan-Short2018; Stark-Inbar et al., Reference Stark-Inbar, Raza, Taylor and Ivry2017). Interestingly, Trafimow (Reference Trafimow2015) proposes that high true correlations between tests and low deviation ratios, or ratios of the variances between tests, likely lower the reliability of the difference score. Results for the ASRT are consistent with this claim: The true correlation between pattern and random scores was essentially perfect, at 1.02 (raw r = .99), and the deviation ratio was also low, based on the values supplied by Trafimow, at .99. This observation may account for why the pattern and random scores are separately reliable although their difference score has low reliability. Overall, then, our reliability results encourage confidence in the DT-WPT and TOL, insofar as these tasks appear consistent in their measurement, but reliability for the ASRT pattern versus random measure used here is low.
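The true (disattenuated) correlation reported above can be reproduced from the rounded values given in the text, assuming the standard correction for attenuation. The second function is an additional illustration under a stronger assumption: it uses the classical test theory formula for the reliability of a difference score when the two components have equal variances, which is a simplification and not Trafimow's more general expression or the authors' computation. Function names are hypothetical.

```python
def disattenuated_r(r_xy, rel_x, rel_y):
    """Correction for attenuation: observed r divided by the root of the reliabilities."""
    return r_xy / (rel_x * rel_y) ** 0.5


def difference_score_reliability(rel_x, rel_y, r_xy):
    """Reliability of X - Y under classical test theory.

    Assumption: X and Y have equal variances (a simplification).
    """
    return ((rel_x + rel_y) / 2 - r_xy) / (1 - r_xy)


# Rounded values from the text: pattern = .96, random = .99, raw r = .99.
true_r = disattenuated_r(0.99, 0.96, 0.99)
print(round(true_r, 2))  # 1.02
```

Under the equal-variance simplification, the same rounded inputs give a negative difference-score reliability, which illustrates how two highly reliable and almost perfectly correlated components can still produce an unreliable difference. Because the inputs are rounded and the denominator (1 − r) is close to zero, the exact value is highly sensitive to rounding and should not be read as an estimate from the data.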

Second, regarding convergent validity, no patterns of association were found among the DT-WPT, ASRT, and TOL tasks. This finding was somewhat surprising given the patterns of evidence reviewed in the preceding text suggesting that performance on these tasks reflects characteristics of procedural memory, including the gradual acquisition of knowledge on the tasks, the implicit nature of this knowledge, and the engagement of neural substrates associated with procedural memory. However, the results seem consistent with research within the broader domain of implicit learning and memory. For example, Godfroid and Kim (Reference Godfroid and Kim2021) also did not find a positive relationship between ASRT and TOL when using different dependent measures than those in the current study. Further, Godfroid and Kim found that performance on these tasks loaded onto different factors in an EFA. Studies within cognitive psychology have also generally not found evidence of convergent validity among various implicit and statistical learning tasks (Gebauer & Mackintosh, Reference Gebauer and Mackintosh2007; Kalra et al., Reference Kalra, Gabrieli and Finn2019; Siegelman & Frost, Reference Siegelman and Frost2015) with some claims that implicit learning is not a unitary ability or mechanism. However, Kalra et al. found a good fit for a one-factor model for three specific tasks (probabilistic classification, serial reaction time, and implicit category learning, but not for artificial grammar learning) for which learning seems to depend on the basal ganglia rather than medial temporal-lobe structures. Arguably, these tasks might represent the more specific construct of procedural memory given their reliance on the basal ganglia. Interestingly, two of our tasks are similar to those used in Kalra et al.: Our DT-WPT is also a probabilistic classification task, and our ASRT is a variant of the serial reaction time task. 
Why then do we not find convergent validity for our three procedural memory learning tasks, which also seem to rely on the basal ganglia? The answer may partly lie in the specific task characteristics and dependent measures. For example, Kalra et al.’s probabilistic classification task comprised 262 single-task trials across four blocks with the dependent measure of learning being taken on the third block, whereas our DT-WPT comprised 320 dual-task trials across eight blocks with the dependent measure of learning being taken on the eighth block. Future research will be needed to determine whether convergent validity can be evidenced across procedural memory learning ability tasks with further consideration of their specific characteristics and dependent measures of learning. However, convergent validity might be somewhat difficult to find for procedural memory tasks as the neurocognitive substrates of procedural memory seem to be somewhat segregated into discrete circuits that may be involved in separate aspects of behavior (Middleton & Strick, Reference Middleton and Strick2000).

Finally, regarding discriminant validity, our results for the ASRT and TOL are consistent with these tasks showing discriminant validity as neither task patterned with assessments of declarative memory learning abilities. However, the DT-WPT appears to pattern with the declarative memory learning ability tasks, which generally seems inconsistent with evidence from previous research that the DT-WPT engages procedural memory (Foerde et al., Reference Foerde, Knowlton and Poldrack2006). Although procedural memory may always be involved at some level for this task (as evidenced by striatal activity that did not differ during training in single- versus dual-task conditions; Foerde et al., Reference Foerde, Knowlton and Poldrack2006, p. 11780), the task conditions seem to modulate the “relative contribution” of the declarative and procedural memory systems (p. 11781). If participants in the current study did not fully engage in the tone-counting task that created the dual-task condition, their reliance on declarative memory may not have been effectively modulated. Unfortunately, we do not have a measure of their performance on the tone counting task and are not able to assess how well they engaged in the dual-task condition. Overall, then, the present results suggest that the DT-WPT may, at least in some cases, engage declarative memory learning abilities, which we interpret to mean that the task should not be considered a process-pure measure of procedural memory learning ability unless researchers can ensure that recourse to declarative memory is effectively blocked. For the ASRT and TOL, our results suggest that they do not recruit declarative memory learning abilities in a detectable manner and can be used as individual differences measures independent of declarative memory.Footnote 8

IMPLICATIONS FOR FUTURE L2 RESEARCH

The present results have implications for how researchers examine the role of procedural memory learning abilities in L2 acquisition. Although we do not have evidence that the ASRT, DT-WPT, and TOL tasks jointly capture a broad construct of procedural memory, given the overall pattern of evidence presented in the literature suggesting their reliance on procedural memory, we consider the case for each task as a measure of procedural memory learning ability, depending on the measure used and the theoretical motivation for the task.

The case for the DT-WPT may be the weakest given that, in our results, it loaded on a factor that we interpret as a declarative memory learning ability factor. However, the stimuli represent probabilistic cue-outcome associations, which may be subserved by prediction-based learning in procedural memory (Ullman et al., Reference Ullman, Earle, Walenski and Janacsek2020). Also, the task has been shown to engage procedural memory substrates, and a different version of the probabilistic classification task has been associated with other tasks that engage the basal ganglia (Kalra et al., Reference Kalra, Gabrieli and Finn2019). Thus, we do not interpret our results as negating the engagement of procedural memory on this task. However, in future research, we suggest that the DT-WPT not be considered a process-pure measure of procedural memory learning abilities, unless researchers can ensure that declarative memory learning abilities are not recruited in the task. Perhaps using a dependent measure earlier in the task, as in Kalra et al., when declarative memory processes may not have begun to support learning (see note 5) would provide a purer measure of procedural memory learning ability.

Although the TOL did not show associations with declarative memory learning ability in our study, the task may also not be ideal as a process-pure measure of procedural memory learning ability. The “change in initial think time” TOL measure used in previous L2 research may arguably exhibit face validity regarding reduced reliance on declarative strategies before initiating a move, but this measure showed very low levels of reliability in the current study. The more general measure of overall improvement showed acceptable levels of reliability but may not show high face validity as it is an overall measure of a task that has generally been associated with planning abilities (e.g., Kaller et al., Reference Kaller, Unterrainer and Stahl2012; Unterrainer et al., Reference Unterrainer, Rahm, Kaller, Wild, Münzel, Blettner, Lackner, Pfeiffer and Beutel2019) and skill acquisition (e.g., Beauchamp et al., Reference Beauchamp, Dagher, Aston and Doyon2003; Ouellet et al., Reference Ouellet, Beauchamp, Owen and Doyon2004). Although the task shows engagement of fronto-striatal networks tied to procedural memory, neural areas thought to reflect other processes involved in skill acquisition also seem to be engaged, at least early on during the task (Beauchamp et al., Reference Beauchamp, Dagher, Aston and Doyon2003). Thus, if research is theoretically motivated to examine a skill acquisition perspective of L2 (DeKeyser, Reference DeKeyser, VanPatten, Keating and Wulff2020), this might be considered a valid task (depending on the measure being used). 
In this case, researchers might want to consider the version of the TOL used in previous skill acquisition work (e.g., Beauchamp et al., Reference Beauchamp, Dagher, Aston and Doyon2003; Beauchamp et al., Reference Beauchamp, Dagher, Panisset and Doyon2008; Ouellet et al., Reference Ouellet, Beauchamp, Owen and Doyon2004) or the updated TOL-F used in planning research, for which psychometric examinations have been conducted (e.g., Kaller et al., Reference Kaller, Unterrainer and Stahl2012; Unterrainer et al., Reference Unterrainer, Rahm, Kaller, Wild, Münzel, Blettner, Lackner, Pfeiffer and Beutel2019). However, if a researcher is examining procedural memory from a neurobiologically based perspective (Ullman, Reference Ullman, VanPatten, Keating and Wulff2020), then the TOL may not reflect a process-pure measure of procedural memory learning ability.

The ASRT seems to be a likely candidate as a valid and relatively process-pure measure of procedural memory learning ability. Learning on the ASRT is gradual, seemingly implicit, and has been shown to involve striatal activity (J. H. Howard & D. V. Howard, Reference Howard and Howard2013). In the current study, the ASRT was not associated with declarative memory learning ability and was not correlated with the TOL, which may not be a process-pure task. Furthermore, the pattern versus random measure has been shown to reflect second-order statistical dependencies (J. H. Howard & D. V. Howard, Reference Howard and Howard1997), which are likely to rely on procedural memory (Ullman et al., Reference Ullman, Earle, Walenski and Janacsek2020). Thus, out of the tasks examined in the current study, the ASRT might be interpreted as the most valid, process-pure task of procedural memory learning ability. However, future research should carefully consider other ASRT measures that may demonstrate higher reliability than the pattern versus random difference score examined here. Interestingly, measures based on triplets of items have been argued to specifically represent probabilistic sequence learning (i.e., high- vs. low-frequency triplets; Song et al., Reference Song, Howard and Howard2007) and statistical learning (i.e., high- vs. low-frequency random triplets; Nemeth et al., Reference Nemeth, Janacsek and Fiser2013). Researchers may also consider different versions of this task, such as the Triplet-Learning Task (J. H. Howard et al., Reference Howard, Howard, Dennis and Kelly2008), for which motor learning aspects are removed, and the Serial Reaction Time task (Nissen & Bullemer, Reference Nissen and Bullemer1987), which has been widely used in cognitive psychology and for which learning relies on the basal ganglia (Janacsek et al., Reference Janacsek, Shattuck, Tagarelli, Lum, Turkeltaub and Ullman2020). (See “Limitations” and Supplementary Materials for more information.) 
In all cases, researchers (including our own research group) should provide a clear theoretical motivation as well as validity and reliability considerations for the choice of task and dependent measure.

Because procedural memory learning ability is a domain-general implicit learning ability, the results of our study also have implications for growing and important considerations of implicit language aptitude in L2 acquisition (e.g., Granena, Reference Granena2013; Suzuki & DeKeyser, Reference Suzuki and DeKeyser2017). Granena (Reference Granena2020) states that implicit aptitude is not a unitary construct and considers the distinctions between implicit learning and implicit memory as well as between declarative and nondeclarative memory systems. Regarding implicit learning and memory, the measures used in the current study arguably can be considered measures of learning, as the measures were based on continuous data throughout the learning task for the ASRT, the second administration of the TOL, and on the final learning block for the DT-WPT. The results of our EFA are generally consistent with findings that measures of implicit learning do not seem to reflect a broad construct (e.g., Gebauer & Mackintosh, Reference Gebauer and Mackintosh2007; Godfroid & Kim, Reference Godfroid and Kim2021; Kalra et al., Reference Kalra, Gabrieli and Finn2019; Siegelman & Frost, Reference Siegelman and Frost2015; cf. Kalra et al. when artificial grammar learning was excluded from analysis). As pointed out by others (e.g., Godfroid & Kim, Reference Godfroid and Kim2021; Granena, Reference Granena2020; Siegelman & Frost, Reference Siegelman and Frost2015), differences among tasks such as modality of the stimuli (e.g., auditory or visual), specificities of the stimuli (e.g., being based on letters or figures), the type of relationship among the stimuli (e.g., deterministic, probabilistic), whether motor learning is involved, and the point at which the dependent measure is taken (during learning or after) may all play a role in the lack of convergence of tasks onto a single construct.

Regarding declarative and nondeclarative memory systems, all nondeclarative memory systems, including the procedural memory system (Ullman et al., Reference Ullman, Earle, Walenski and Janacsek2020), are characterized by implicit learning and memory (Squire & Dede, Reference Squire and Dede2015). Although there are patterns of evidence for implicit learning and the engagement of procedural memory for all the tasks considered in the current study (see literature review), the ASRT may arguably be the task that provides the most process-pure measure of procedural memory learning ability and nondeclarative memory more generally. However, as emphasized by Granena (Reference Granena2020, p. 39), it is vitally important for future research to continue to examine the reliability and construct validity of nondeclarative, implicit learning and memory tasks. To the extent that research establishes the reliability and validity of procedural memory learning ability tasks, as in the current study, the findings will be informative to L2 implicit learning aptitude research (as procedural memory learning ability is one type of implicit learning ability). Similarly, to the extent that the tasks validated in the implicit aptitude research are specific to the procedural memory and learning system (Ullman et al., Reference Ullman, Earle, Walenski and Janacsek2020), implicit learning research that uses those specific tasks will be relevant to research on procedural memory learning ability (see note 3). However, it is important to emphasize that not all tasks used to assess implicit learning and memory will be relevant to procedural memory learning ability, as other types of implicit learning and memory, for example, priming, depend on separate neural systems.

We offer a brief note regarding the implications of our results for assessing declarative memory learning ability, which was not the focus of the current study. Although all three declarative memory learning ability measures correlated positively with each other, in the EFA, only the Declearn and CVMT tasks showed satisfactory factor loadings onto the factor that we interpreted as declarative memory learning ability. Thus, researchers examining the role of declarative memory in future research may consider using one of these two tasks rather than the MLAT-V. An advantage of using the Declearn or CVMT is that they are nonverbal tasks, so associations between performance on these tasks and L2 can be more strongly attributed to domain-general abilities in the declarative memory and learning system.

LIMITATIONS

The strength of our conclusions regarding the validity of these procedural memory learning ability tasks should be considered in light of the study’s limitations. First, the current study did not include the Serial Reaction Time task (SRT), which was used to study the role of procedural memory learning abilities in L2 in Hamrick (Reference Hamrick2015) and partially in Tagarelli et al. (Reference Tagarelli, Ruiz, Vega and Rebuschat2016). We chose not to include this task in the main study because (a) it has not been as commonly used in studies that examine the role of procedural memory in L2 (see Table 1), and (b) given our within-subjects design, there may have been transfer effects between the SRT and the ASRT, as participants learn sequences in both these tasks and learning one sequence may have effects on learning a subsequent sequence. However, in a smaller follow-up study, we administered the SRT to a subset of participants (N = 33) who came in for a third session that was not originally planned as part of the main study (see Supplementary Materials for a description of the follow-up study, analyses, and results regarding the SRT). Results from the follow-up study indicated that the reliability of the SRT was acceptable (Spearman-Brown reliability was .76). The correlational analyses between the SRT, the three procedural memory learning ability tasks, and the three declarative memory learning ability tasks administered in the main study revealed a significant relationship between the SRT and the ASRT (Spearman’s ρ = .38, p = .03). No other correlations were statistically significant. These findings serve as preliminary evidence for convergent validity between the ASRT and the SRT and discriminant validity between the SRT and the tasks of declarative memory learning ability. Thus, we tentatively suggest that procedural memory learning ability may drive the relationship between the SRT and ASRT, although we acknowledge the existence of alternative interpretations. More research into the reliability and validity of the SRT as a task of procedural memory learning ability is needed. Such research will also be quite informative to work with implicit learning, as the SRT is a task that is commonly used in that line of research as well.
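For readers less familiar with the Spearman-Brown reliability reported for the SRT, the following is a minimal sketch of how a split-half estimate with the Spearman-Brown correction can be computed. It is an illustration only and not the analysis code used in the study: the odd/even split of trials, the function names, and the toy data are all assumptions introduced here.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman_brown(r_half):
    """Step a half-test correlation up to a full-length reliability estimate."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(scores_by_participant):
    """Split-half reliability with the Spearman-Brown correction.

    scores_by_participant: one list of trial-level scores per participant.
    Assumption (hypothetical): halves are formed from alternating trials.
    """
    first_half = [sum(t[0::2]) / len(t[0::2]) for t in scores_by_participant]
    second_half = [sum(t[1::2]) / len(t[1::2]) for t in scores_by_participant]
    return spearman_brown(pearson(first_half, second_half))


# Toy data (invented for illustration): four participants, six trials each.
toy_scores = [
    [10, 12, 11, 13, 12, 14],
    [20, 19, 22, 21, 23, 22],
    [15, 17, 14, 16, 15, 18],
    [30, 28, 31, 29, 32, 30],
]
reliability = split_half_reliability(toy_scores)
```

Under this formula, a corrected reliability of .76 would correspond to a correlation of roughly .61 between the two halves (.76 / (2 − .76)), assuming two halves of equal length.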

A second limitation of our study concerns the lack of L2 data in the present work. In future research, it will be important to examine the relative predictive validity of these tasks, including the SRT, for L2. Such work is important because it would be useful to know if some of these procedural memory learning ability tasks are more strongly associated with L2 learning than others. Future work should also consider test-retest reliability for the tasks to establish the stability of the measures over time. Finally, a further limitation of our study is that we examined the procedural (and declarative) memory learning ability construct using an EFA with a relatively small cognitive battery of tests, although the tests that were included were specifically motivated by the L2 literature. More correlated tests within each domain might have allowed for the constructs to emerge if the underlying connection to the construct exists but is weak.

CONCLUSION

In conclusion, we investigated the reliability and validity of procedural memory learning ability tasks that have been used to study L2 acquisition. We found evidence of reliability, except for the ASRT pattern versus random difference measure. Convergent validity was not evidenced in our results, suggesting that these measures do not jointly capture a broad construct of procedural memory. Discriminant validity was evidenced for the ASRT and TOL, but not for the DT-WPT, which may engage declarative memory learning abilities. In interpreting our results in light of prior research, we suggest that, although each task seems to recruit procedural memory learning abilities to some extent, the ASRT may provide a relatively process-pure measure, although different measures of the ASRT and other variants of sequence-learning tasks should be considered. Indeed, in a post-hoc follow-up study, we examined the SRT and found acceptable reliability for the task and a positive relationship between the ASRT and SRT. These results have implications for future research that examines the role of procedural memory and may also have implications for research about implicit language aptitude and implicit learning more generally. However, future research on reliability and validity for the tasks used in these lines of research is needed.

SUPPLEMENTARY MATERIALS

To view supplementary material for this article, please visit http://dx.doi.org/10.1017/S0272263121000127.