Experimental research on emotion often involves the use of affective stimuli. Emotional materials have been used as stimuli in a wide range of research fields, such as cognitive and social psychology and neuroscience (e.g., Bar-Haim, Lamy, Pergamin, Bakermans-Kranenburg, & van Ijzendoorn, 2007; Greenwald, Poehlman, Uhlman, & Banaji, 2009; Li, Chan, McAlonan, & Gong, 2010).

Studies conducted to validate emotional stimuli have often followed Lang’s model of emotion (Bradley, Codispoti, Cuthbert, & Lang, 2001), in which emotion is organized around affective dimensions including (a) hedonic valence (i.e., pleasantness or unpleasantness) and (b) arousal (i.e., degree of motivational activation). Following this dimensional approach, several databases now provide normative data for lexical stimuli in different languages (Bradley & Lang, 1999a; Redondo, Fraga, Comesaña, & Perea, 2005), emotional scenes (Lang, Bradley, & Cuthbert, 1999), film clips (Gross & Levenson, 1995), and sounds (Bradley & Lang, 1999b). Yet other, non-dimensional models (e.g., recognition of discrete emotions) have also been used to validate other emotional stimuli, such as faces (e.g., Ekman & Friesen, 1976).

Emotional faces are a particularly interesting type of affective stimuli. Faces are considered to be a core element of the perception and experience of emotions (Ekman, 1993). Moreover, their accurate recognition and interpretation provide us with important social information for adapting to our physical and interpersonal environment. Validation studies of emotional faces have typically asked participants to identify the discrete emotion expressed by each face and then to judge its level of arousal or emotional intensity. Emotion recognition is thought to reflect the extent to which a given expression adequately represents the emotion depicted according to the prototype for that emotion category (e.g., anger). Prototypes are defined as configurations of facial-feature displacements, produced by well-defined combinations of muscle contractions (Bimler & Kirkland, 2001), corresponding to a small number of discrete basic-emotion categories. According to this approach, perceptual processing of emotional faces is assumed to be essentially organized in a categorical manner, with some categories of basic emotions (e.g., anger, happiness, sadness) suggested to be universal in humans. For instance, Etcoff and Magee (1992) reported data from a discrimination task showing that faces within the same emotional category (e.g., happiness) were discriminated more poorly than faces from different categories (e.g., happiness-sadness) with similar physical features. Thus, emotional faces are thought to be perceived categorically, suggesting a perceptual mechanism tuned to the facial configurations displayed for each emotion.

In this sense, recognition of discrete emotions in facial expressions is thought to reflect how well a given face fits the prototype for the emotion it is supposed to represent. Using this strategy, different sets of emotional face pictures have been studied and validated, such as the Pictures of Facial Affect (PFA; Ekman & Friesen, 1976), the Japanese and Caucasian Facial Expressions of Emotion (JACFEE; Matsumoto & Ekman, 1988), the Montreal Set of Facial Displays of Emotion (MSFDE; Beaupré, Cheung, & Hess, 2000), the NimStim Face Stimulus Set (Tottenham et al., 2009), and the Karolinska Directed Emotional Faces (KDEF; Lundqvist, Flykt, & Öhman, 1998), among others.

Despite the rigorous methodology typically used in these standard recognition methods, the accuracy in identifying the emotional prototype corresponding to a facial expression may be affected by several factors. First, when participants are given the opportunity to indicate whether a facial expression may represent different basic emotions, they tend to choose several options, not only the emotion that the model was supposed to represent (e.g., Yrizarry, Matsumoto, & Wilson-Cohn, 1998). This result shows that observers may identify more than one category when judging emotional expressions (e.g., Matsumoto & Ekman, 1989). Therefore, asking observers to judge how representative an expression is of a specific emotion may be more informative than simply recording whether a given emotion category is present or absent. This strategy may provide a more sensitive measure of the degree of prototypicality of emotional faces.

Second, judgments of prototypicality are not completely stable; to some extent, they may depend upon features such as the time and place of the evaluation or the observers’ condition (Halberstadt & Niedenthal, 2001). Classic studies by Russell and colleagues (e.g., Carroll & Russell, 1996; Russell & Fehr, 1987) showed that context influences the perception of emotions in facial expressions. For instance, Russell and Fehr (1987) showed that the category assigned to an emotional face can depend upon the type of faces previously presented (e.g., participants are more likely to categorize an angry face as representing ‘fear’ or ‘disgust’ when they have just seen a face of ‘fear’ or ‘disgust’, respectively). Thus, although emotional faces may be perceived categorically (Etcoff & Magee, 1992), the stimulus context seems to provide a frame of reference that anchors the scale of judgment (e.g., Russell, 1991). According to this contextual effect, judgments of facial expressions might be modulated by the type of emotional expressions accompanying the target face to be judged.

To address these two general issues, this study was designed to evaluate a set of emotional faces by asking participants to rate the degree to which each emotional face fit the prototype of a particular emotion (i.e., angry, sad, happy), which provides a more sensitive measure (i.e., a dimension) than a yes/no approach. Furthermore, to control for random categorization effects, each emotional face was always accompanied by its corresponding neutral face from the same model. Thus, a constant anchor point was available when rating the characteristics of each emotional face.

The use of a constant neutral face to rate emotional faces may have some advantages. Classic studies have shown that emotional faces presented in a neutral context are categorized into their proper emotional prototypes more accurately than when they are presented in emotional contexts (e.g., Watson, 1972). Furthermore, emotional faces have been found to be more expressively salient when paired with neutral faces (Fernández-Dols, Carrera, & Russell, 2002). Taken together, these findings suggested that neutral faces could serve as an adequate point of comparison for evaluating the parameters of emotional faces, such as their degree of adjustment to the emotional prototype and their emotional intensity.

In sum, in the present study we report values of emotional prototypicality and intensity for three different emotions (i.e., angry, happy, sad) using an anchor-point method of comparison.

Method

Participants

A sample of 117 undergraduate students (100 females, 17 males) volunteered to participate in the rating procedure. The mean age was 21.79 years (SD: 2.68; range 20-37 years).

Materials

Faces were selected from the A series of the Karolinska Directed Emotional Faces (KDEF), a set of pictures of facial expressions displaying seven different emotional expressions (neutral, happy, angry, sad, disgusted, surprised, and fearful). The KDEF database was created at the Karolinska Institutet in Stockholm by Lundqvist et al. (1998; see Footnote 1), and subsets of it have previously been validated using emotion recognition paradigms (Calvo & Lundqvist, 2008; Goeleven, De Raedt, Leyman, & Verschuere, 2008).

In our study, a subset of sixty-six models (half men, half women) was selected, each of them expressing three different types of emotions commonly used in experimental research on emotional states (i.e., angry, happy, and sad). Thus, the stimuli comprised 198 emotional pictures plus a set of 66 neutral expressions of the same models, all of them frontal-view pictures.

This subset of 264 original KDEF pictures was edited and cropped using Adobe Photoshop CS2 (see Figure 1). Each face was fitted within an oval window, and the hair, neck, and surrounding parts of the image were darkened to remove aspects that are not informative about the emotional expression. This procedure has been employed in previous validation studies of emotional faces (e.g., Calvo & Lundqvist, 2008) in order to maximize the emotional salience of facial expressions.

Figure 1. Example of the editing applied to the KDEF emotional expressions for the study.

Pictures were further converted from their original color format to grayscale. With these image conversion procedures, we intended to reduce distracting elements (e.g., color, brightness, body signs).
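The editing steps described above (oval window, darkened surround, grayscale conversion) were carried out by the authors in Adobe Photoshop CS2. Purely as an illustration of the same idea, the following sketch applies an elliptical mask and a luminance conversion to an image held as nested lists of RGB tuples. The function names and the luminance weights (the common 0.299/0.587/0.114 weighting) are assumptions of this sketch, not details reported in the study.

```python
# Illustrative sketch only: the study used Adobe Photoshop CS2.
# Function names and luminance weights are assumptions of this example.

def to_gray(rgb):
    """Convert one (R, G, B) pixel to a single gray level (0-255)."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def inside_oval(x, y, width, height):
    """Return True if pixel (x, y) lies inside the ellipse inscribed in the image."""
    cx, cy = (width - 1) / 2, (height - 1) / 2   # ellipse center
    rx, ry = width / 2, height / 2               # horizontal and vertical radii
    return ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0


def crop_face(image):
    """Keep grayscale pixels inside the oval window and darken everything else."""
    height, width = len(image), len(image[0])
    return [
        [
            to_gray(image[y][x]) if inside_oval(x, y, width, height) else 0
            for x in range(width)
        ]
        for y in range(height)
    ]


# A tiny all-white 5 x 5 "image" used only to exercise the functions.
white = [[(255, 255, 255)] * 5 for _ in range(5)]
masked = crop_face(white)
```

In this toy input the center pixel stays white while the corners, which fall outside the oval, are set to black.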

Procedure

In order to simplify the rating procedure and to avoid cognitive overload, two groups of participants separately rated two different subsets of pictures. The first group was composed of 55 participants (47 females, 8 males; mean age: 21.75 years), who evaluated ninety-nine female faces. The second group, composed of 62 participants (53 females, 9 males; mean age: 21.82 years), evaluated ninety-nine male faces. The experiment was run in group sessions. During each group session, participants viewed the corresponding subset of faces divided into three consecutive blocks (i.e., one for each type of emotional face).
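The group sizes, stimulus counts, and ages reported above can be cross-checked against the overall sample description with a few lines of arithmetic. The sketch below uses only values stated in the text; the variable and function names are hypothetical.

```python
# Rater groups as reported in the Procedure section.
groups = {
    "female_faces": {"n": 55, "females": 47, "males": 8, "mean_age": 21.75},
    "male_faces": {"n": 62, "females": 53, "males": 9, "mean_age": 21.82},
}

MODELS_PER_GENDER = 33                 # 66 models, half men and half women
EMOTIONS = ("angry", "happy", "sad")


def faces_per_group():
    """Number of emotional faces rated by each group of participants."""
    return MODELS_PER_GENDER * len(EMOTIONS)


def pooled_mean_age(groups):
    """Sample-size-weighted mean age across the rater groups."""
    total_n = sum(g["n"] for g in groups.values())
    return sum(g["n"] * g["mean_age"] for g in groups.values()) / total_n


total_n = sum(g["n"] for g in groups.values())
total_females = sum(g["females"] for g in groups.values())
total_males = sum(g["males"] for g in groups.values())

print(faces_per_group(), total_n, total_females, total_males)
print(round(pooled_mean_age(groups), 2))
```

The totals agree with the Participants section (117 raters, 100 females, 17 males), and the weighted mean age rounds to the reported 21.79 years.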

Each block was composed of 33 trials. Each trial consisted of the presentation of an emotional face paired with its corresponding neutral expression (see Figure 2). Before each block, a member of the team gave participants instructions about the type of emotional faces that they were going to see during that block (e.g., happy faces) and about how to rate the parameters of the emotional faces (i.e., by comparing the emotional face with the neutral one).

Figure 2. Example of a dual presentation trial used in the study (in this case, a neutral vs. angry face).

Each pair of faces was displayed with a beam projector on a 147 cm (width) x 110 cm (height) screen. Presentation of the pairs was controlled using Microsoft PowerPoint 2007. Each image measured 31 cm (width) x 41.2 cm (height), and the two images were centered on the screen with a horizontal distance of 36.75 cm between them.

For each trial, the pair of stimuli was displayed on the screen for 8 s. Each presentation was followed by a black-screen inter-trial period of 4 s to allow participants to write down their ratings. Participants were asked to complete the measures of prototypicality and intensity in each trial. First, they judged to what extent the emotional face corresponded to the prototypical expression of that emotion (i.e., “In comparison to the neutral face, to what extent does the emotional face adequately represent the emotion depicted?”). Second, they rated the emotional intensity of the emotional face (i.e., “In comparison to the neutral face, what level of emotional intensity is expressed by the emotional face?”). Both dimensions were measured on a 0–10 Likert scale, where 0 indicated “Not at all”, 5 “Some”, and 10 “Extremely”. Each emotional block lasted 6.6 min, and the complete session (including three 5-min preparation periods, one for each block) lasted 34.8 min.
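The block duration follows directly from the trial structure described above. As a minimal sketch (constant names are hypothetical; all values are taken from the text), the timing can be derived as follows, assuming the session consists only of the three blocks and their preparation periods:

```python
# Timing parameters as described in the Procedure section.
TRIALS_PER_BLOCK = 33
STIMULUS_S = 8            # display time of each face pair, in seconds
INTER_TRIAL_S = 4         # black-screen interval for writing down ratings
BLOCKS = 3                # one block per emotion
PREP_MIN_PER_BLOCK = 5    # preparation period before each block, in minutes


def block_duration_min():
    """Duration of one emotional block in minutes."""
    return TRIALS_PER_BLOCK * (STIMULUS_S + INTER_TRIAL_S) / 60


def session_duration_min():
    """Total session time: all blocks plus their preparation periods."""
    return BLOCKS * (block_duration_min() + PREP_MIN_PER_BLOCK)


print(f"block: {block_duration_min():.1f} min")
print(f"session: {session_duration_min():.1f} min")
```

Each block of 33 trials at 12 s per trial takes 396 s, that is, 6.6 min; three such blocks plus three 5-min preparation periods sum to 34.8 min.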

Results

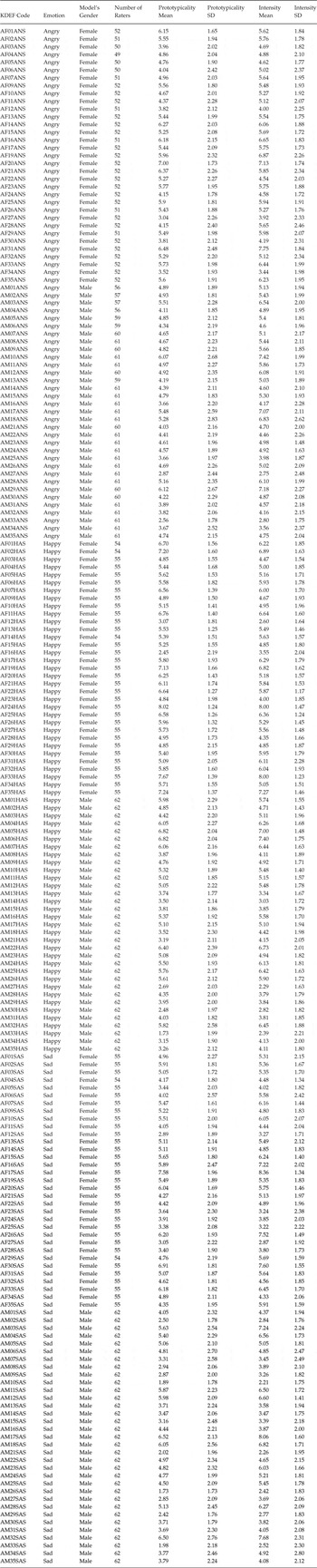

Scores on both parameters (i.e., prototypicality and intensity) were calculated for the 198 emotional faces selected. Mean scores for the three types of emotions are summarized in Table 1 (see also Appendix 1 for a comprehensive list of means and standard deviations for each male and female expression; see Footnote 2).

Table 1. Means (M) and standard deviations (SD) for Prototypicality and Intensity as a function of Emotional face and Model’s gender. Values range from 0 to 10

Table 1 reveals that mean scores for prototypicality (range: 4.07–5.77) and intensity (range: 4.85–5.60) fell around the midpoint of the 0–10 Likert scale. Correlation analyses between both indices showed that prototypicality and intensity were positively related for every emotion evaluated (angry expressions: r = .65, p < .001; happy expressions: r = .59, p < .001; sad expressions: r = .63, p < .001).
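For readers who wish to run a comparable analysis on their own ratings, the following sketch computes a Pearson correlation between two lists of scores. The text does not specify the unit of analysis for the correlations above, and the data in the example are invented solely for illustration; they are not values from this study.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / sqrt(s_xx * s_yy)


# Hypothetical mean ratings for five faces (NOT data from the study).
prototypicality = [5.8, 4.1, 6.3, 3.9, 5.2]
intensity = [5.5, 4.6, 6.0, 4.4, 4.9]

r = pearson_r(prototypicality, intensity)
print(f"r = {r:.2f}")
```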

A series of analyses of variance (ANOVAs) on prototypicality and intensity was conducted to compare the ratings of the three emotions investigated in this study.

Emotional Prototypicality

A 2 (model’s gender: female, male) x 3 (emotion: angry, happy, sad) mixed-design ANOVA on prototypicality ratings was conducted, with model’s gender as the between-subjects variable and emotion as the within-subjects variable (see Footnote 3).

The main effect of emotion was significant, F(2, 111) = 45.53, p < .001, η2 = .45. Bonferroni post-hoc comparisons showed that, across all participants, happy expressions were judged to be more prototypical than angry and sad expressions. Angry expressions were also judged to be significantly more prototypical than sad expressions. There was also a significant main effect of model’s gender, F(1, 111) = 19.83, p < .001, η2 = .152. Bonferroni post-hoc tests revealed that judgments of prototypicality were significantly higher for female expressions than for male ones for each of the three emotions depicted in the pictures. These effects were partially qualified by a marginally significant model’s gender x emotion interaction, F(2, 111) = 2.96, p = .056, η2 = .05. Bonferroni post-hoc tests showed that females’ happy expressions were rated as more prototypical than females’ angry and sad expressions. Furthermore, males’ happy and angry expressions were rated as more prototypical than males’ expressions of sadness.

Emotional Intensity

A 2 (model’s gender: female, male) x 3 (emotion: angry, happy, sad) mixed-design ANOVA on emotional intensity ratings was carried out, with model’s gender as the between-subjects variable and emotion as the within-subjects variable (see Footnote 4).

The main effect of emotion was significant, F(2, 111) = 19.23, p < .001, η2 = .26. Bonferroni-corrected post-hoc comparisons showed that, across all participants, intensity ratings for both happy and angry expressions were significantly higher than for sad expressions. With regard to gender differences, there was also a significant main effect of model’s gender, F(1, 111) = 13.47, p < .001, η2 = .108. Bonferroni post-hoc tests indicated that intensity ratings of female expressions were significantly higher than those of male expressions for happy and sad expressions, but not for angry expressions.

These effects were qualified by a significant model’s gender x emotion interaction, F(2, 111) = 3.39, p < .05, η2 = .06. Bonferroni post-hoc tests showed that females’ happy and angry faces were judged to be significantly more intense than females’ sad expressions. For males’ expressions, Bonferroni post-hoc comparisons indicated that angry expressions were judged to be significantly more intense than sad expressions.
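The effect sizes reported for the two ANOVAs can be recovered from the F statistics and their degrees of freedom, assuming that the reported η2 values are partial eta squared (the text does not state this explicitly). Under that assumption, partial η2 = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this relation to the reported values; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (effect label, F, df_effect, df_error, reported eta squared)
reported = [
    ("prototypicality: emotion", 45.53, 2, 111, 0.45),
    ("prototypicality: model's gender", 19.83, 1, 111, 0.152),
    ("prototypicality: gender x emotion", 2.96, 2, 111, 0.05),
    ("intensity: emotion", 19.23, 2, 111, 0.26),
    ("intensity: model's gender", 13.47, 1, 111, 0.108),
    ("intensity: gender x emotion", 3.39, 2, 111, 0.06),
]

for label, f_value, df_effect, df_error, eta in reported:
    computed = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: computed = {computed:.3f}, reported = {eta}")
```

All six computed values match the reported effect sizes to the reported precision, which is consistent with the assumption that partial eta squared was used.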

Inter-rater Reliability

Furthermore, inter-rater reliability analyses were performed using intra-class correlations.

For female expressions, intra-class correlation coefficients for prototypicality and intensity were 0.96 (95% confidence interval = 0.94–0.97) and 0.96 (95% confidence interval = 0.95–0.98), respectively. For male expressions, the coefficients for both prototypicality and intensity were 0.96 (95% confidence interval = 0.94–0.97).

Taken together, analyses showed a high degree of agreement between raters for both parameters in both subsets of facial expressions.
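The text does not state which form of the intra-class correlation was computed. As one possible illustration, the sketch below implements the two-way, consistency, average-measures coefficient, often labeled ICC(3,k), from the mean squares of a stimuli-by-raters table. Both the choice of this form and the small ratings matrix are assumptions made for the example; the numbers are invented and are not data from the study.

```python
def icc_consistency_average(ratings):
    """ICC(3,k): two-way model, consistency, average of k raters.

    ratings: list of rows, one row per stimulus and one column per rater.
    """
    n = len(ratings)         # number of stimuli (targets)
    k = len(ratings[0])      # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for stimuli, raters, and the residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / ms_rows


# Hypothetical 0-10 ratings: 4 faces (rows) x 3 raters (columns).
example = [
    [6, 7, 5],
    [4, 5, 4],
    [8, 9, 8],
    [3, 3, 2],
]
icc = icc_consistency_average(example)
print(f"ICC(3,k) = {icc:.2f}")
```

Under a consistency definition, raters who differ only by a constant offset still yield a coefficient of 1; an absolute-agreement form would penalize such offsets and could therefore give lower values.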

Discussion

The present study reports data for 198 pictures of three different types of emotional expressions (i.e., angry, happy, and sad) from a subset of the Karolinska Directed Emotional Faces database (KDEF; Lundqvist et al., 1998).

Two different emotional parameters were evaluated. First, participants rated the closeness or adjustment of each emotional face to its emotional prototype (i.e., angry, happy, or sad). Second, participants rated the emotional intensity of each emotional face. Both parameters were assessed in a paradigm that simultaneously presented the emotional face to be evaluated and the corresponding neutral face. This design allowed participants to use a comparison face (i.e., the neutral one) as a constant non-emotional anchor point when evaluating the parameters of each emotional expression.

Besides the mean values provided in the Appendix, some results deserve further explanation. Significant correlations were found between prototypicality and intensity for all emotional conditions, which is consistent with previous findings showing that prototypicality is linearly related to intensity of expression. For instance, Horstmann (2002) showed that when participants were asked to choose prototypical exemplars of facial emotion expressions out of several exemplars with different expression intensities, they chose the most intense versions. Taken together, these results indicate that intense exemplars are associated with prototypical expressions. This is consistent with the assumption that, if the main function of facial expressions of emotion is communicative, then their perceived intensity may maximize distinctiveness between different emotional expressions.

Happy faces were judged, in general, to be better adjusted to their emotional prototype than angry and sad ones, and angry faces were judged to be better adjusted than sad ones. This pattern of findings is similar to that reported by other studies using different validation measures. For instance, Calvo and Lundqvist (2008) found that happy faces were recognized faster than other emotional faces, even with subliminal presentations. Using visual search methods, Juth, Lundqvist, Karlsson, and Öhman (1995) found that happy expressions were detected more quickly and accurately than other faces. As for the emotional intensity dimension, happy and angry faces were, in general, judged to be more intense than sad faces. These differences could be the result of subtle differences in emotional expression (Aguado, García-Gutierrez, & Serrano-Pedraza, 2009), which could be related to the degree of familiarity or the level of ambiguity of different emotional expressions. Furthermore, facial expressions of emotions may differ with regard to the number of muscles involved. It has been argued that, in terms of muscle complexity, happy and angry expressions involve less muscle coordination than other expressions (Ekman, Friesen, & Hager, 2002) and, therefore, they could be more easily judged in terms of their emotional prototypicality and intensity.

Moreover, other interpretations of these results are possible. Unlike categorical models of facial processing, which assume a categorical perception of emotional faces, alternative dimensional approaches predict that facial expressions, like other emotional stimuli, convey values of valence and arousal, which are subsequently used to attribute an emotion to the face (Russell, 1997). Thus, the affective content of facial expressions would be evaluated along continuously varying attributes, such as valence and arousal (Bradley et al., 2001), that can be treated as dimensions of a spatial model (e.g., Nummenmaa, 1992). Based on these assumptions, facial morphing studies have found that the distance between basic emotion prototypes in this dimensional space may differ, with some facial prototypes less spatially distant than others (e.g., Bimler & Kirkland, 2001). This is particularly the case for neutral and sad prototypes, which tend to show low dissimilarity. In our study, neutral faces were employed as comparative anchor points for the evaluation of emotional faces. Given previous results, a low dissimilarity in valence and arousal between neutral and sad faces may account for the lower levels in emotional parameters found for sad faces, compared with those found for other emotional faces that are more dissimilar to neutral faces (i.e., angry and happy faces; see Bimler & Kirkland, 2001).

Finally, our results suggest that female facial expressions may represent emotional prototypes better than male expressions do. Our results also revealed significant differences in judgments of emotional intensity for female and male faces. With the exception of angry faces, where gender differences were not found, female expressions were judged to be more intense than male ones. These results highlight the importance of controlling for gender differences when selecting adequate sets of emotional stimuli (Aguado et al., 2009). However, these gender differences should be interpreted cautiously, as our design (i.e., separate groups of raters for female and male faces) did not allow a direct comparison of model’s gender differences within the same raters. Using separate groups of raters for subsets of stimuli is a procedure that has been used in previous studies (e.g., Langer et al., 2011) to simplify the rating procedure and to avoid raters’ cognitive overload. Yet, the cost of this procedure is that it limits the interpretability of between-group differences. Another limitation concerns raters’ gender, as there were more female than male raters for both groups of faces (see Footnote 5). Although this is a common characteristic of validation studies of emotional faces (e.g., Langner et al., 2010; Tottenham et al., 2009), it may limit the generalizability of the results.

Given the exploratory nature of the present study, it only included three emotions. Although these three emotions have been extensively used in emotion research and are increasingly being employed in clinical research (e.g., LeMoult, Joormann, Sherdell, Wright, & Gotlib, 2009), further studies should be conducted to evaluate other basic emotions (e.g., disgust, surprise, fear) using this procedure. Moreover, beyond the emotional parameters rated in the present study (i.e., emotional prototypicality and intensity), it could be relevant to evaluate other parameters in the perception of emotional faces, such as their clarity and genuineness (see, for instance, Langner et al., 2010). Finally, we employed a picture-editing procedure in order to maximize the emotional salience of faces and to control for other possible distractor variables (i.e., color, brightness, non-informative aspects of emotional expressions). Although this procedure has several methodological advantages for controlling non-emotional factors in the pictures, it may limit the generalizability of the data provided and reduce the ecological validity of the pictures employed. Thus, further studies should also evaluate emotional parameters in pictures in their original format.

Despite these limitations, the method presented in this study may offer some potential advantages. In terms of the procedure, pairing a neutral face with the emotional face being rated (see Figure 2) has the advantage of keeping a term of comparison (i.e., the neutral face of that particular model) as a constant anchor point for evaluating a given emotional expression. This procedure may minimize random categorization effects. Further research is warranted to specifically test these potential advantages by directly contrasting alternative face recognition methods. Along this line, studies comparing the effects of using a constant neutral anchor point versus emotional anchor points would help to determine the role of non-emotional comparative anchor points in controlling potential contextual effects in categorization (Russell & Fehr, 1987).

The method of evaluation of emotional parameters presented here can be especially valuable for cognitive research on emotion processing employing competition approaches (e.g., Mathews & Mackintosh, 1998), in which emotional faces are paired with neutral faces. For instance, selective attention studies aimed at analyzing the processing of emotional faces competing with neutral faces, such as dot-probe studies (e.g., Joormann & Gotlib, 2007) or eye-tracking studies (e.g., Provencio, Vázquez, Valiente, & Hervás, 2012), could benefit from the use of stimuli whose emotional parameters have been validated using a paradigm similar to that employed in these tasks. The data reported could also be useful for visual search paradigms and methods involving presentations of competing stimuli (Williams, Moss, Bradshaw, & Mattingley, 2005), and for others designed to evaluate the distinctiveness of emotional faces from neutral faces by using picture-morphing methods (e.g., Bimler & Kirkland, 2001). Thus, besides describing a new procedure to evaluate the emotional parameters of emotional faces, our study also provides mean values of emotional prototypicality and intensity, which can be useful for a number of current paradigms in emotion research (see Appendix).

In sum, the procedure reported in this study can be a helpful approach for researchers who want to select facial stimuli based on procedures different from those that rely on classical emotion recognition rates. We hope that this study makes a contribution to the emerging literature in this direction.

APPENDIX

KDEF Expressions: Prototypicality and Intensity Scores