Government exists, in part, to provide public goods that otherwise would not be generated by the market (Taylor 1987). It does so by making laws and allocating resources that ostensibly better the lives of citizens. In so doing, legislators and other government officials can draw on any information or input they prefer; nothing requires them to turn to science or even to citizens. Yet, it is clear that both science and citizens play a role. The former is apparent from the investment that governments around the world put into science. For example, as is the case in many countries, the United States Government supports a National Science Foundation (NSF): “an independent federal agency created by Congress in 1950 ‘to promote the progress of science; to advance the national health, prosperity, and welfare; to secure the national defense…’” In 2012, the NSF annual budget was roughly $7 billion, and it funded approximately 20% of all federally conducted research at universities (see www.nsf.gov/about). Even more money is allocated for research by the National Institutes of Health (NIH), which invests about $31 billion annually for medical research (see http://nih.gov/about). Furthermore, in 1863, the government established the National Academy of Sciences (NAS), the mission of which is to provide “independent, objective advice to the nation on matters related to science and technology” (see www.nasonline.org/about-nas/mission). The latter (that is, that citizens affect public policy) has been demonstrated by an extensive body of scholarship that reveals policy shifts in direct response to changing citizens’ preferences (for a detailed review, see Shapiro 2011).

What remains unclear, however, is how scientific research influences policy, whether by directly affecting legislative decisions, by indirectly shaping citizens’ preferences to which legislators respond, or both. To what extent does “science inform the policy-making process?” (Uhlenbrock, Landau, and Hankin 2014, 94). This article does not explore the direct impact of science on policy. Instead, the focus is on a prior question of how science can best be communicated to policy makers and citizens. This is a challenging task; as Lupia states: “[s]ocial scientists often fail to communicate how such work benefits society…Social scientists are not routinely trained to effectively communicate the value of their technical findings” (Lupia 2014b, 2). The same is true of physical scientists who often are “fearful of treading into the contested terrain at all” (Uhlenbrock, Landau, and Hankin 2014, 96, citing Oppenheimer 2010). These apparent failures, in turn, have caused lawmakers to question the value of social science funding (Lupia 2014b, 1).

The approach is twofold. First, I discuss basic realities of how individuals form attitudes and make decisions. I do not delve into the details of information processing; however, I highlight key factors that are critical to understand if one hopes to effectively communicate science. Second, given how humans form opinions and make decisions, I discuss ways that science can be communicated more effectively to lawmakers and the public. Footnote 1

INDIVIDUAL OPINION FORMATION AND DECISION MAKING

This section highlights four common features of information processing that are necessary to acknowledge if one hopes to use science to shape opinions and decisions. Footnote 2

Values and Information

When individuals form opinions and/or make decisions, two key factors are involved: values and information (Dietz 2013, 14081; Lupia 2014a). “Values (1) are concepts or beliefs, (2) pertain to desirable end states or behaviors, (3) transcend specific situations, (4) guide selection or evaluation of behavior and events, and (5) are ordered by relative importance” (Schwartz 1992, 4). More concretely, values comprise stable, ordered belief systems that reflect a worldview (Feldman 2003). Footnote 3 Individuals differ in their values; therefore, communicators and scholars must recognize these differences and not apply uniform criteria to what constitutes a “good decision”: what is good depends on the values that people hold. Dietz (2013, 14082) states that a good decision “must be value-competent. We know values differ substantially across individuals and vary to some degree within an individual over time…Science can help us achieve value competence by informing us about what values people bring to a decision and how the decision process itself facilitates or impedes cooperation or conflict.” Footnote 4

Science, then, enters into play when it comes to the second basis on which individuals form opinions and make decisions: science is information or facts that people use to arrive at attitudes and behaviors. In forming opinions and making decisions, citizens use a set of facts or information that always can be expanded. Unfortunately, this often has led social scientists to criticize citizens for not being sufficiently informed, labeling them cognitive misers and satisficers or claiming that they rely on “shortcuts and heuristics” instead of a large store of information. However, the reality is that failure to be “fully informed” should not be perceived as a shortfall but rather as a basic reality. This is true for citizens (Lupia and McCubbins 1998; Sniderman, Chubb, and Hagen 1991) and lawmakers (e.g., Kingdon 1977). As Schattschneider (1960, 131–2; italics in the original) aptly explained:

[n]obody knows enough to run government. Presidents, senators, governors, judges, professors, doctors of philosophy, editors, and the like are only a little less ignorant than the rest of us…The whole theory of knowledge underlying these concepts of democracy is false—it proves too much. It proves not only that democracy is impossible; it proves equally that life itself is impossible. Everybody has to accommodate himself to the fact that he deals daily with an incredible number of matters about which he knows very little. This is true of all aspects of life, not merely politics. The compulsion to know everything is the road to insanity.

Indeed, it is far from clear that increasing the quantity of information or facts per se generates “better” decisions. This is true because the identification of what constitutes “more information” often is beyond the grasp of analysts. Political scientists have long bemoaned that most citizens fail to answer correctly a set of factual questions about political institutions or figures. However, why this information matters for decisions and why citizens should be expected to know these facts is unclear (Althaus 2006; Lupia 2006, 2014a). Lupia and McCubbins (1998, 27) stated: “[m]ore information is not necessarily better.” Footnote 5

Moreover, scholars have produced substantial evidence that those who seemingly “know more” by answering general factual questions on a topic tend to engage in “biased” reasoning such that they become overconfident in their standing opinions, thereby leading them to reject information that actually is accurate and could be helpful (Druckman 2012; Taber and Lodge 2006). These points lead to the conclusion that it is not the amount of information or facts that matters but rather which information decision makers use: information that facilitates their ability to (1) identify which values are most relevant for a given decision (e.g., is the decision framed in terms of the most relevant values?), and (2) connect the given value to the decision. Footnote 6

This is exactly where science comes into play. The hope is that the information used proves useful and that science, in many if not almost all cases, is beneficial. Dietz (2013, 14082) explains that “a good decision must be factually competent. The beliefs used in making decisions should accurately reflect our understanding of how the world works. Here, the role of science is obvious: Science is our best guide to developing factual understandings.” This echoes Kahneman’s (2011, 4) point that “Most of us are healthy most of the time, and most of our judgments and actions are appropriate most of the time. As we navigate our lives, we normally allow ourselves to be guided by impressions and feelings, and the confidence we have in our intuitive beliefs and preferences is usually justified. But not always…[and] an objective observer is more likely to detect our errors than we are.” This “objective observer” can be thought of as science.

For example, consider the energy technology called fracking, which involves a type of drilling that fundamentally differs from conventional drilling. Relative to the question of whether fracking should be supported as a method of energy recovery, concern over the environment is a common relevant value. In the case of fracking, many people are concerned that the process results in the release of substantial amounts of methane that are harmful to the environment. In forming an opinion about fracking, an individual whose primary value concerns the environment would want to know which information is helpful and/or relevant. It probably would not be necessary for the individual to know every detail about how fracking works and where it has been applied, much less other general scientific facts that often are asked on general science surveys as measures of “scientific literacy” (e.g., “Is it true or false that lasers work by focusing sound waves?”; “Which travels faster: light or sound?”; “True or False: Most of the oil imported by the United States comes from the Middle East”) (see, e.g., Miller 1998). Footnote 7 What might be helpful, however, is scientific information from a recent study published in the Proceedings of the National Academy of Sciences that shows that, with fracking, 99% of methane leakages are captured (Allen et al. 2013). In reference to the study, a senior vice president at the Environmental Defense Fund referred to recent developments as “good news” (http://www.nytimes.com/2013/09/17/us/gas-leaks-in-fracking-less-than-estimated.html?_r=0). This is not to say that the individual then should support fracking given this one study and statement; however, this scientific information could be helpful as the person considers fracking versus alternative approaches. In contrast, general scientific questions have no apparent relevance.

The point for science communication is how to ensure that “good” and pertinent science effectively reaches decision makers who use it. However, accomplishing this incurs several challenges, including assurance that individuals (i.e., citizens and/or lawmakers) attend to scientific information. As previously mentioned, people attend to a small amount of possible information in the world; therefore, one hurdle becomes how to grab their attention. One way to stimulate attention involves creating anxiety about a topic; anxiety causes people to seek information (Marcus, Neuman, and MacKuen 2000). This becomes pertinent not because anxiety should be created artificially but rather because, as discussed later, uncertainty is not something that science should disguise; at times, uncertainty about outcomes can stimulate anxiety. A second method (also discussed later) to arouse attention entails emphasizing the personal importance of an issue (Visser, Bizer, and Krosnick 2006, 30). Footnote 8 In many cases, public policies indeed have direct consequences that citizens otherwise may not recognize and legislators may not appreciate in advance; that is, they may not realize how different policies can later affect their reelectoral chances (Krehbiel 1992). Footnote 9

A second challenge concerns the credibility of information. Even when they are exposed to relevant information, individuals will use it only if they believe it to be credible (Lupia and McCubbins 1998). Ensuring trust in scientific information is not straightforward: general trust in science has not increased in the past 35 years despite clear scientific advances (Dietz 2013, 14085; Gauchat 2012). Various steps can be taken to increase trust, such as ensuring consensus evidence that differing political sides endorse to minimize perceived bias (O’Keefe 2002, 187), ensuring transparent evidence in terms of how the results were derived (Dietz 2013, 14086), and avoiding conflating scientific information with values that may vary among the population (Dietz 2013, 14086).

In summary, to understand how scientists can influence and improve citizen and legislator decision making, we must understand how information is processed. Thus far, this article highlights three key components of opinion formation and decision making: (1) the quality and not quantity of information is what matters, (2) stimulating attention is a challenge, and (3) information must be perceived as credible to have an effect. The discussion now turns to another hurdle in the science-communication process: motivated reasoning.

Motivated Reasoning

Motivated reasoning refers to the tendency to seek information that confirms prior beliefs (i.e., a confirmation bias); to view evidence consistent with prior opinions as stronger or more effective (i.e., a prior-attitude effect); and to spend more time counter-arguing and dismissing evidence inconsistent with prior opinions, regardless of objective accuracy (i.e., a disconfirmation bias). Footnote 10 These biases influence the reception of new information and may lead individuals to “reason” their way to a desired conclusion, which typically is whatever their prior opinion suggested (Kunda 1990; Taber and Lodge 2006).

For example, consider Druckman and Bolsen’s (2011) two-wave study of new technologies. At one point in time, the authors measured respondents’ support for genetically modified (GM) foods. They told respondents that they would be asked later to state but not explain their opinions, thereby promoting attitude strength, which, in turn, increases the likelihood of motivated reasoning (Lodge and Taber 2000; Redlawsk 2002). Presumably, respondents had little motivation to form accurate preferences given the relative distance of GM foods to their daily life and the lack of inducements to be accurate (e.g., being required to justify their reasoning later).

After about 10 days, respondents received three types of information about GM foods: positive information about how they combat diseases, negative information about their possible long-term health consequences, and neutral information about their economic consequences. On its face, all of this information is potentially relevant. Indeed, when asked to assess the information, a distinct group of respondents, who were encouraged to consider all possible perspectives regarding GM foods and were told they would be required to justify their assessments, judged all three to be relevant and valid. Yet, Druckman and Bolsen (2011) report that among the main set of respondents, the prior wave 1 opinions strongly conditioned treatment of the new information. Those who were previously supportive of GM foods dismissed the negative information as invalid, rated the positive information as highly valid, and viewed the neutral information as positive. Those opposed to GM foods did the opposite: invalidated the positive information, praised the negative, and perceived the neutral as negative (see also Kahan et al. 2009). The authors found virtually identical dynamics using the same design on the topic of carbon nanotubes.

Furthermore, motivated reasoning often has a partisan slant. For example, consider a George W. Bush supporter who receives information suggesting that the president misled voters about the Iraq war. Given these biases, this supporter is likely to interpret the information as either false or as evidence of strong leadership in times of crisis. Motivated reasoning likely will lead this supporter, and others with similar views, to become even more supportive of Bush (e.g., Jacobson 2008). This same behavior also occurs in the presence of partisan cues that anchor reasoning (e.g., Bartels 2002; Goren, Federico, and Kittilson 2009). For instance, individuals interpret a policy in light of existing opinions concerning the policy’s sponsor. Thus, Democrats might view a Democratic policy as effective (e.g., a new economic-stimulus plan) and support it, whereas they would see the same policy as less effective and perhaps even oppose it if sponsored by Republicans or not endorsed by Democrats (e.g., Druckman and Bolsen 2011). Similarly, Democrats (or Republicans) may view economic conditions favorably during a Democratic (or Republican) administration even if they would view the same conditions negatively if Republicans (or Democrats) ruled (e.g., Bartels 2002; Lavine, Johnston, and Steenbergen 2012). Footnote 11

Even before these types of biased-information evaluations, individuals will seek information supportive of their prior opinions (e.g., pro-Bush or pro-Democratic information) and evade contrary information (Hart et al. 2009). Lodge and Taber (2008, 35–6) explained that motivated reasoning entails “systematic biasing of judgments in favor of one’s immediately accessible beliefs and feelings… [It is] built into the basic architecture of information-processing mechanisms of the brain.” A further irony, given the value often granted to strongly constrained attitudes, is that motivated reasoning occurs with increasing likelihood as attitudes become stronger (Redlawsk 2002; Taber and Lodge 2006). Houston and Fazio (1989, 64) explained that those with weaker attitudes, particularly when motivated, “are processing information more ‘objectively’ than those with [stronger] attitudes.”

At an aggregate level, the result can be a polarization of opinions. If, for example, Democrats always seek liberal-oriented information and view Democratic policies as stronger, and Republicans behave analogously, partisans may diverge further. Briefly, instead of seeking and incorporating information that may be relevant and helpful in ensuring more accurate later opinions, individuals ignore and/or dismiss information—not on the basis of prospective use and relevance or “objective” credibility but rather because their goal, in fact, is to find and evaluate information to reinforce their prior opinions. Footnote 12

Whereas this type of reasoning raises serious normative questions about democratic representation and opinion formation (Lavine et al. 2012), for current purposes, the central point is that relaying even ostensibly credible scientific information faces a serious hurdle if individuals reject any evidence that seems to contradict their prior opinions. Recall the example of fracking: if individuals are initially opposed to the idea, motivated reasoning would lead them to dismiss the Proceedings of the National Academy of Sciences article as noncredible or not useful, or they may avoid paying any attention to it in the first place. In reference to scientific information, Dietz (2013, 14083) states: “Once an initial impression is formed, people then tend to accumulate more and more evidence that is consistent with their prior beliefs. They may be skeptical or unaware of information incongruent with prior beliefs and values. Over time, this process of biased assimilation of information can lead to a set of beliefs that are strongly held, elaborate, and quite divergent from scientific consensus” (italics added). Footnote 13

As discussed herein, there are ways to overcome motivated-reasoning biases. Specifically, when individuals are motivated to form “accurate” opinions such that they need to justify them later, when the information comes from a mix of typically disagreeable groups (e.g., Democrats and Republicans), or when individuals are ambivalent about the source of information, then they tend to view the information in a more “objective” manner (Druckman 2012).

Politicization

Few trends in science and public policy have received as much recent attention as politicization. To be clear, politicization occurs when an actor exploits “the inevitable uncertainties about aspects of science to cast doubt on the science overall…thereby magnifying doubts in the public mind” (Steketee 2010, 2; see also Jasanoff 1987, 195; Oreskes and Conway 2010; Pielke 2007). The consequence is that “even when virtually all relevant observers have ultimately concluded that the accumulated evidence could be taken as sufficient to issue a solid scientific conclusion…arguments [continue] that the findings [are] not definitive” (Freudenburg, Gramling, and Davidson 2008, 28; italics in original). Thus, politicization is distinct from framing (e.g., emphasizing distinct values such as the environment or the economy) or even misinformation: it involves introducing doubt and/or challenging scientific findings with a political purpose in mind (i.e., it does not entail offering false information but rather raising doubt about extant scientific work). The actor who politicizes need not be a political actor per se but there typically is a political agenda being pursued. Horgan (2005) noted that this undermines the scientific basis of decision making; that is, opinions could contradict a scientific consensus because groups conduct campaigns in defiance of scientific consensus with the goal of altering public policy (see also Lupia 2013).

To cite one example, the recently released report, “Climate Change Impacts in the United States,” suggests that a scientific consensus exists that global climate change stems “primarily” from human activities. Indeed, the report reflected the views of more than 300 experts and was reviewed by numerous agencies including representatives from oil companies. Yet, the report was immediately politicized with Florida Senator Marco Rubio stating, “The climate is always changing. The question is, is manmade activity what’s contributing most to it? I’ve seen reasonable debate on that principle” (Davenport 2014, A15). He further stated: “I think the scientific certainty that some claimed isn’t necessarily there.”

Of course, climate change is the paradigmatic example of politicization (see Akerlof et al. 2012; McCright and Dunlap 2011; Oreskes and Conway 2010; Schuldt, Konrath, and Schwarz 2011; Von Storch and Bray 2010). However, many other areas of science have been politicized, including education (Cochran-Smith, Piazza, and Power 2012), biomedical research (Emanuel 2013), social security (Cook and Moskowitz 2013), aspects of health care (Joffe 2013), and so on (for a general discussion, see Lupia 2014a, chap. 8). The consequences and potential implications were captured by a recent editorial in Nature (2010, 133) that states: “[t]here is a growing anti-science streak…that could have tangible societal and political impacts.”

In one of the few studies that examine the effects of politicization on the processing of scientific information, Bolsen, Druckman, and Cook (2014a) investigated what happens when respondents were told: “…[m]any have pointed to research that suggests alternative energy sources (e.g., nuclear energy) can dramatically improve the environment, relative to fossil fuels like coal and oil that release greenhouse gases and cause pollution. For example, unlike fossil fuels, wastes from nuclear energy are not released into the environment. A recent National Academy of Sciences (NAS) publication states, ‘A general scientific and technical consensus exists that deep geologic disposal can provide predictable and effective long-term isolation of nuclear wastes.’” When respondents received only this information (which did in fact come from an NAS report), support for nuclear energy increased.

However, when the information was preceded by a politicization prime that stated “…[i]t is increasingly difficult for non-experts to evaluate science—politicians and others often color scientific work and advocate selective science to favor their agendas,” support not only failed to increase but, in fact, marginally decreased. The authors also present evidence that the decreased support stemmed from increased anxiety about using nuclear energy. The results suggest that politicization has the potential, if not the likelihood, of causing individuals to not know what to believe, thereby dismissing any evidence and clinging to a status quo bias (i.e., new technologies are not adopted and novel scientific evidence is ignored). This raises the question of how politicization can be overcome, which is discussed later in this article.

Competence

Thus far, this article makes clear the role science can and perhaps should play in citizen and/or legislator decision making, but it has not clarified exactly why science necessarily leads to better decisions per se. Defining what makes an opinion, preference, or action “better” is a matter of great debate among social scientists. Footnote 14 For example, political scientists offer various criteria including the statement that better decisions are those arrived at with ideological constraint (e.g., Converse 1964, 2000); based on deliberation (e.g., Bohman 1998); and, as previously noted, based on “full” or the “best available” information (e.g., Althaus 2006, 84; Bartels 1996; Lau and Redlawsk 2006; Page and Shapiro 1992, 356; Zaller 1992, 313). Similar to the previous argument that using full information as a standard is foolhardy, I further argue that any substantive basis is bound to be problematic as an expectation for the evaluation of preferences (see Druckman 2014). Researchers who assert that a preference based on certain criteria reflects greater competence than a preference based on other criteria run the risk of ascribing their values and expectations to others, which, in turn, strips citizens of autonomy by imputing a researcher’s own preferences onto the citizen (Warren 2001, 63).

This also can lead to a confusing array of contradictory criteria. Footnote 15 As Althaus (2006, 93) explained: “[t]he various traits often held to be required of citizens [are] usually ascribed to no particular strand of democratic theory…‘there is scarcely a trait imaginable that cannot, in some form or fashion, be speculatively deemed part of democratic competence.’”

Thus, a standard for competence must avoid relying on “traits” asserted as necessary by an observer (e.g., a researcher), if for no other reason than to avoid Lupia’s “elitist move” of imputing preferences and to ensure that citizens maintain autonomy to determine what is best for them (Lupia 2006). This implies consideration of a relevant counterfactual, which I call the prospective counterfactual. Specifically, we assume a single decision maker and a particular choice set over which he or she must form a preference (e.g., a set of candidates or policy proposals; I ignore questions about the origins of the choice set). Furthermore, we set a time frame that covers the time at which a preference is formed (t1) to a later point in time when the preference is no longer relevant (e.g., the end of a candidate’s term or a point at which a policy is updated or is terminated) (t2). If the decision maker formed the same preference at t1 that he or she would form at t2, then the preference can be nothing but competent (i.e., a “capable” preference given the decision maker’s interests). This is similar to Mansbridge’s “enlightened preferences,” which refer to preferences that people would have if “their information were perfect, including the knowledge they would have in retrospect if they had had a chance to live out the consequences of each choice before actually making a decision” (Mansbridge 1983, 25). This is an inaccessible state given that it relies on an assessment of a future state: Mansbridge explained that it “is not an ‘operational’ definition, for it can never be put into practice…No one can ever live out two or more choices in such a way as to experience the full effects of each and then choose between them” (Mansbridge 1983, 25). Footnote 16 In practice, one way to think about this is to posit that the decision maker’s goal should be to minimize the probability of subsequent retrospective regret about a preference formed at t1.
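
The prospective counterfactual can be illustrated with a minimal sketch. It assumes, purely for illustration, that each decision can be recorded as a pair of labels: the option preferred at t1 and the option that would be preferred at t2. The t2 label is hypothetical by construction, because (as Mansbridge notes) it cannot be observed in practice; the function names `is_competent` and `regret_rate` are likewise illustrative and do not come from the article.

```python
from typing import Iterable, Tuple


def is_competent(choice_t1: str, choice_t2: str) -> bool:
    """A preference counts as competent when the option chosen at t1
    matches the option the decision maker would choose at t2."""
    return choice_t1 == choice_t2


def regret_rate(decisions: Iterable[Tuple[str, str]]) -> float:
    """Share of (t1, t2) pairs in which the t1 preference differs from
    the hypothetical t2 preference, i.e., the share that ends in regret."""
    pairs = list(decisions)
    if not pairs:
        raise ValueError("at least one decision is required")
    regrets = sum(1 for t1, t2 in pairs if not is_competent(t1, t2))
    return regrets / len(pairs)


# Four hypothetical decisions over policies "A" and "B"; only the
# second one would be regretted at t2.
example = [("A", "A"), ("A", "B"), ("B", "B"), ("B", "B")]
print(regret_rate(example))
```

Under these assumptions, minimizing the probability of retrospective regret amounts to minimizing the quantity that `regret_rate` estimates over the decisions a person faces.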

I suggest that there are two aspects of t1 opinions that increase the likelihood of forming a competent opinion. First, as argued previously, basing a decision on scientific information, all else being constant, should enhance the quality of the decision. This is true because science, when transparent, provides a unique basis for anticipating the outcomes of distinct policies (Dietz 2013, 14082; Lupia 2014a; Uhlenbrock, Landau, and Hankin 2014). It is important to note that this is true only if the science is done “well”; that is, the methods and results are transparent, uncertainty is noted as a reality, and values are minimized in the factual presentation of science (Dietz, Leshko, and McCright 2013; Lupia 2014a). Footnote 17 This type of science increases the odds of a “competent” decision because it facilitates the connection between an individual’s personal values and the probable outcome of a policy.

The second desirable trait that increases the likelihood of a good decision is that the decision maker is motivated to form “accurate decisions.” Without a motivation to form accurate opinions, individuals could fall victim to motivated processing in which, even if provided with and attentive to relevant scientific information, they may dismiss or misread it so that it coheres with their prior opinions. Accuracy motivation can stem from an individual’s disposition and interest (Stanovich and West 1998) or various features of a decision-making context (Hart et al. 2009). Alternatively, it can be induced from contextual factors, such as a demand that the individual justify or rationalize a decision (Ford and Kruglanski 1995; Stapel, Koomen, and Zeelenberg 1998; Tetlock 1983); an instruction to consider opposing perspectives (Lord, Lepper, and Preston 1984); the realization that a decision may not be reversible (Hart et al. 2009); or the presence of competing information that prompts more thorough deliberation (Chong and Druckman 2007; Hsee 1996).

Having identified various challenges to opinion formation and decisions and defined key features of what I argue are relatively “competent” decisions, the discussion now considers how science communication can increase the likelihood of making such decisions.

MAKING SCIENCE COMMUNICATION MORE EFFECTIVE

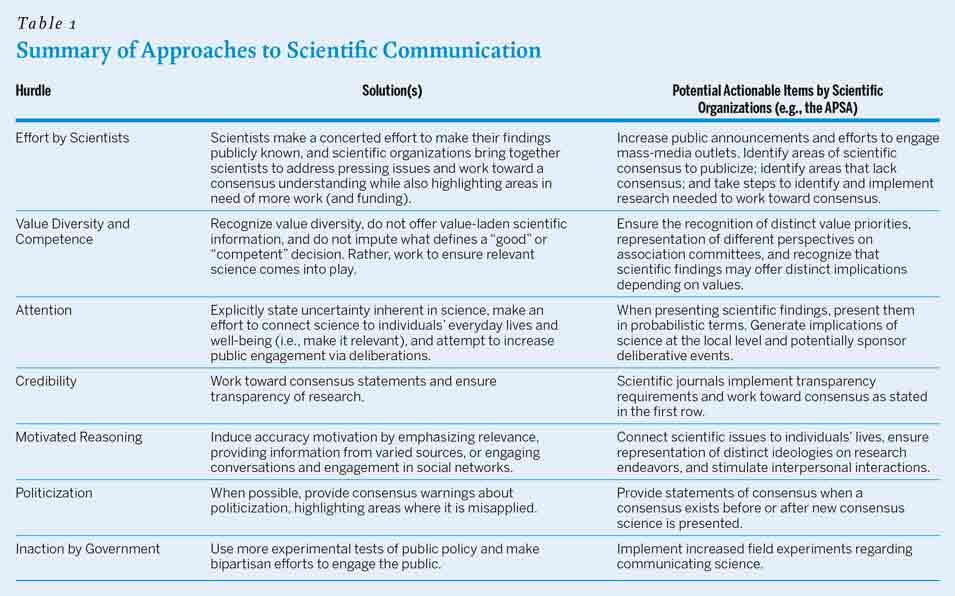

That science plays a role in public policy, either directly via legislators’ decisions or indirectly via citizens to whom legislators respond, cannot be assumed or assured. Yet, given the previous discussion, several steps can be taken to increase the likelihood that “science matters.” These steps should not be seen as exhaustive, but they suggest ways to overcome the hurdles discussed in the previous section. Table 1 lists each hurdle, possible solutions, and potential actionable items that can be taken by scientific organizations such as the American Political Science Association (APSA). The table is a guide and a summary for the remainder of the article.

Table 1 Summary of Approaches to Scientific Communication

Scientists Must Become Involved

To date, scientists generally have not been active in policy debates, and they often hesitate to present what they know, even when it is clearly policy-relevant. Uhlenbrock, Landau, and Hankin (2014, 95) state that “scientists shy away from being part of this dialogue because some science policy issues have become polarized…[yet] [h]owever ominous it may seem for scientists to venture into the communication world, there is a great need not only for dissemination of the information but also for science to have a regular voice in the conversation” (see also Lupia 2014b). Given that academic scientists may lack professional incentives for such involvement per se, it is critical that academic associations and organizations (e.g., the APSA and the American Association for the Advancement of Science) work to ensure that science is communicated. The NAS has attempted to fulfill this role and serves as an example; however, given the challenge of the current and changing media landscape, even it can develop techniques that reach a broader segment of the population. Indeed, although the NAS undoubtedly identifies consensual scientific information, it is less clear that it has mastered the challenges facing science communicators (see the following discussion).

Moving forward, scientific organizations can engage in sustained efforts to identify areas of scientific consensus; when these areas do not exist, they can organize groups of researchers to assess the work that may be needed to move toward a consensus. When a consensus does exist, organizations should not hesitate about engaging mass-media outlets to publicize research.

Recognize Value Diversity and Avoid Imputing Values in the Presentation of Evidence

As a starting point, scientists and analysts must recognize the reality that people differ in their values, which often leads to distinct opinions in light of the same factual information: “[f]or values, there is no correct position on which we can converge…we make a serious mistake if we assume such fact-based [science] processes will resolve conflicts based on value differences” (Dietz 2013, 14085). Lupia (2014a, 87, 115) explains that an “important step in managing value diversity is to recognize that it is present…In many situations, there are no criteria that will be accepted as ‘best’ by everyone.” In other words, science does not ensure a single attitude because the scientific information will have distinct meanings to people with varying values, and this must be recognized: singular scientific findings may have distinct implications for individuals that are contingent on their values.

Relatedly, it is critical that those who present scientific information attempt to focus on the science and minimize the value commentary; failure to do so damages their own credibility and ignores the reality of value diversity. Dietz (2013, 14086) clarifies this in stating that “trust is not well served when scientists confuse competencies. Scientists are experts on the facts…a scientific body is neither authorized nor particularly well constituted to make value judgments for the larger society.” Similarly, Uhlenbrock, Landau, and Hankin (2014, 95) explain that “the research must adhere to the scientific process and be free of value judgments.” That being said, the reality is that, on some level, science is imbued with value choices (Douglas 2009; Hicks 2014). Consequently, what scientists can do (when appropriate) is recognize value choices made through the scientific process (e.g., what problems to study and how to study them), and perhaps even note the values of individual scientists involved, and then emphasize aspects of the scientific process that make it relatively credible. Organizations also can ensure the value diversity of individuals involved in joint organizational projects.

How to Get Attention

A central challenge to any communication effort is how to capture the attention of the potential audience (e.g., citizens or legislatures). As Lupia (2013, 14048) points out, “individuals forget almost everything that any scientist ever says to them…when a scientist attempts to convey a particular piece of information to another person at a particular moment, that piece of information is involved in a competition for one of the person’s few available chunks [of memory] with all other phenomena to which that person can potentially pay attention.” This hurdle becomes more acute with each passing day as the media landscape increases in complexity and available information concomitantly becomes overwhelming (Lupia 2014a, 2014b). Science communication can attempt to gain citizens’ attention through three approaches. First, scientists should not evade the reality that all science involves uncertainty; that is, science should “take explicit account of uncertainty” (Dietz 2013, 14082). This can be done, when appropriate, by presenting findings in probabilistic terms. Not only does this potentially enhance the credibility of science given that science is never certain; it also may have the unintended by-product of causing individual-level anxiety, because uncertainty about facts can generate anxiety about outcomes (Bolsen, Druckman, and Cook 2014a). Anxiety, in turn, has been shown to stimulate individuals to seek information and, in this case, scientific information (Marcus, Neuman, and MacKuen 2000). That being said, there are two caveats to using uncertainty to generate anxiety. First, if a scientist or scientific body is perceived as purposely provoking anxiety to stimulate attention, it may compromise credibility, thereby making any communication ineffective. Second, whereas recognizing uncertainty may be advisable given the reality of science, overemphasizing it can facilitate politicization whereby other (often non-scientific) actors capitalize on inevitable uncertainty to challenge sound scientific consensus (see the following discussion). For these reasons, overstating uncertainty invites a slippery slope.

A second approach, which may be more straightforward, is to make clear how a given policy can affect individuals’ lives. Government policy often seems distant, especially when it is on a national scale and the connection to day-to-day life may not be transparent. Yet, in many cases, a policy will affect citizens in terms of what they can and cannot do and, more generally, how their tax dollars are spent. The more relevant a policy and the relevant science are to individuals, the more likely they will attend to the science (Visser, Bizer, and Krosnick 2006). Moreover, recalling the prior discussion, making science less abstract and more applicable also means increasing anxiety in many cases. Lupia (2013, 14049) notes that affective triggers (e.g., anxiety) increase attention, and he provides the following example: rather than discussing rising seas due to global warming as an abstract global phenomenon, “scientists can also use models to estimate the effect of sea level rise on specific neighborhoods and communities. Attempts to highlight these local climate change implications have gained new attention for scientific information in a number of high-traffic communicative environments.” In other words, in many cases in which new science is needed to reduce a threat (e.g., climate change), localizing consequences likely generates attention due to both increased anxiety and increased relevance.

The third approach, emphasized by Dietz (2013) and in a recent National Research Council (NRC) report (Dietz, Rosa, and York 2008), is to increase public engagement by having more deliberative-type interactions. This would be ideal, especially if that engagement followed the principles established in the NRC report, because it ensures attention and also enables scientists to obtain feedback on which science is relevant given the diversity of values: two-way exchanges can be exceptionally valuable (in the form of public-information programs, workshops, town meetings, and so forth). Indeed, public engagement often is defined in terms of deliberative interactions (see, e.g., http://en.wikipedia.org/wiki/Public_engagement). Although this suggests that scientific organizations (including the APSA) may seek to sponsor such events, we must recognize that inducing several individuals to participate is not likely to be realistic. Moreover, participation does not ensure attention or careful processing given the findings that deliberation often is dominated not by those most informed but rather by individuals with other demographic features based on gender and race (e.g., Karpowitz, Mendelberg, and Shaker 2012; Lupia 2002). Thus, although efforts to engage the public in two-way dialogues have great potential, they should be undertaken with caution.

Credibility

Even after it has been received, information generates belief change only if it is perceived as credible. Lupia (2013, 14048) explains that “listeners evaluate a speaker’s credibility in particular ways.” Whereas an extensive literature in social psychology identifies approaches to enhance credibility (see, e.g., O’Keefe 2002), two key approaches seem to be particularly relevant for communicating science. First, it is critical that science be transparent so that the methods used to arrive at a given conclusion are not only clear but also replicable and honest. Lupia (2014b, 3) states: “[w]hat gives social science [but also science in general] its distinctive potential to expand our capacity for honesty is its norm of procedural transparency” (for a more general discussion on transparency, see Lupia and Elman 2014). Similarly, Dietz (2013, 14086) explains: “What can we do to enhance trust in science? One step, fully in line with the norms of science, is to encourage open and transparent processes for reporting scientific results” (see also Nyhan 2015 for a discussion on steps that can be taken concerning publication). This means that scientists from all fields must work toward a set of procedures that ensures work is transparent, data are made public, and steps to reach a conclusion are obvious (these could be requirements for publication in association journals). Moreover, when communicating scientific results, priming the attributes of science (as a transparent, replicable, and systematic process) can enhance credibility and persuasion. Bolsen, Druckman, and Cook (2014a) found that such a prime increases the likelihood that individuals believe the science to which they are exposed.

A second step, when possible, is to have scientists who bring distinctive and varying values and ideological leanings work together toward a consensus view: “[i]f scientists don’t agree, then trust in science is irrelevant” (Dietz, Leshko, and McCright 2013, 9191). Of course, at times, consensus is not possible but, in these cases, areas of consensus should be clearly distinguished from those in which consensus is lacking. These latter areas then should inform funding organizations about what future science needs to address. When consensus can be reached, it helps to vitiate the impact of politicization, especially when different ideological sides are included. Bolsen, Druckman, and Cook (2014b) found that when information comes from a combination of Democrats and Republicans, all individuals tend to believe it and act accordingly. This contrasts with the situation in which the same information is attributed to only one party or the other (this finding also is relevant to minimizing motivated reasoning). Smith’s proposal that scientific organizations create a speaker’s bureau is in line with this idea, particularly if speakers can be paired based on differing ideological perspectives, thereby clarifying points of agreement and disagreement (Smith 2014).

The importance of consensus has long been recognized; indeed, a purpose of the NAS is to document consensus about contemporary scientific issues when it exists (see www.nationalacademies.org/about/whatwedo/index.html). Although it may not be directly expressed in the mission statements of other academic organizations, such groups (e.g., disciplinary groups mentioned previously) can work to generate task forces aimed at identifying consensus knowledge, when it exists (while also recognizing the realities of uncertainty) (Uhlenbrock, Landau, and Hankin 2014, 98–9). Footnote 18

The focus of this article is to emphasize transparent and scientific methods and to strive for consensus statements. In essence, these two foci cohere with Lupia’s (2013) point that persuasion occurs only when there exists perceived knowledge, expertise, and common interests. The critical point is that perceptions are what matter; increasingly, the perception of shared interests and expertise can take various forms. However, it seems that accentuating science and consensual viewpoints may capture the broadest audience.

Motivation

Another challenge is to minimize motivated reasoning, which, as explained, refers to people’s tendency to downgrade the credibility of any information if it disagrees with their prior opinions or comes from a source perceived to be on the opposite side of the individual (e.g., a Democrat does not believe a Republican source). There are methods to minimize motivated reasoning, thereby ensuring that individuals assess evidence at face value. To the extent possible, those passing on the information must try to stimulate their audience to be motivated to form accurate opinions; when this occurs, motivated reasoning disappears and people spend more time elaborating on and accepting the information (Bolsen, Druckman, and Cook 2014b). At least three distinct approaches can be used to generate accuracy motivation: (1) making it clear that the issue and/or information is directly relevant to an individual’s life (i.e., the same technique used to generate attention) (Leeper 2012); (2) having the information come from varying sources with ostensibly different agendas (e.g., a mix of Democrats and Republicans) (Bolsen, Druckman, and Cook 2014b); and (3) inducing individuals to anticipate being required to explain their opinions to others. The third method can be pursued by increased usage of participatory engagement and deliberations in which people must explain themselves. Although I previously noted the various challenges to these exercises, motivated reasoning can be overcome even in the absence of formalized deliberations if people simply expect to be required to explain themselves in informal social situations (Sinclair 2012). The likelihood of this occurring can be enhanced as the relevance of science is emphasized, leading to more discussion of opposing views. That being said, an unanswered question is the extent to which temporary increases in motivation (e.g., anticipation of interpersonal interactions) sustain over time as novel information continues to be encountered.

Overcoming Politicization

The problem of politicization is linked directly to the credibility of information and motivated reasoning: when science is politicized, people become unclear about what to believe; therefore, scientific credibility declines and people tend to turn only to sources that confirm their prior opinions. It is for this reason that the ostensible increase in politicization led to tremendous concern among scientists: “[p]oliticization does not bode well for public decision making on issues with substantial scientific content. We have not been very successful in efforts to counter ideological frames applied to science” (Dietz 2013, 14085).

Bolsen and Druckman (forthcoming) explore techniques to counteract politicization related to the use of carbon nanotechnology and fracking. They study two possible approaches to vitiating the effects of politicization: (1) a warning, issued prior to a politicized communication, stating that the given technology should not be politicized because a scientific consensus exists; and (2) a correction that offers the same information as the warning but comes after a politicized communication. The authors find that warnings are very effective and minimize politicization effects, whereas corrections are less effective but do work when individuals are motivated to process information. The more significant point is that when a scientific consensus exists, organizations can work to counteract politicization by issuing direct messages that challenge the politicization either before or after it occurs.

The Role of Scientific Bodies and Funding

Why should scientists care about communicating their findings? The answer is not straightforward, given the previously mentioned hesitation to engage in public policy, which is perhaps due to fear that their findings will be misconstrued. However, there are two answers. First, for reasons that are emphasized herein, science has a critical role in making public policy. Second, even if scientists do not appreciate that role, relaying the benefits of their endeavors is necessary if the government is to continue funding scientific research. As Lupia makes clear, “Congress is not obligated to spend a single cent on scientific research” (2014b, 5; italics in the original). He further states that “Honest, empirically informed and technically precise analyses of the past provide the strongest foundation for knowledge and can significantly clarify the future implications of current actions.” Stated differently, scientists must clarify how and when they improve what I call “competent decision making.”

Scientists cannot do this alone; therefore, some of the responsibility falls on scientific organizations: “Scientific societies and organizations can play a central role in science policy discourse in addition to an individual scientist’s voice” (Uhlenbrock, Landau, and Hankin 2014, 98; see also Newport et al. 2013). This is critical if scientific disciplines hope to present consensus or near-consensus statements about varying issues. As mentioned previously, the NAS serves this role as part of its mission, but other disciplinary organizations must complement the NAS. In addition to organizing committees to issue scientific reports, they can make a more concerted effort to identify areas in need of future work. Indeed, the NAS sometimes does exactly this; for example, an NAS committee that was partially funded by the NSF issued a 1992 report on global environmental change. The report also recommended areas in need of more funding: “Recommendation 1: The National Science Foundation should increase substantially its support for investigator-initiated or unsolicited research on the human dimensions of global change. This program should include a category of small grants subject to a simplified review procedure” (Stern, Young, and Druckman 1992, 238). That being said, even the NAS is not empowered to take the necessary steps to generate a scientific consensus when one does not exist. That is, it does not have the inherent infrastructure to identify areas that lack consensus, to isolate the work that may be helpful in reaching a consensus, and to acquire funding needed to achieve consensus. Indeed, the previously mentioned report recommends NSF funding but, ideally, there would be a mechanism such that scientists committed to conducting research to move toward a consensus would be funded (e.g., the NSF could make this a criterion when considering funding).

A final point concerns the role of government. Ideally, government agencies will move toward endorsing more experimentation with varying social programs, thereby fulfilling Campbell’s plea for an experimenting society (Campbell 1969, 1991). There are obvious ethical and logistical challenges to experimenting with social programs (Cook 2002). However, if scientists can make a stronger case for the benefits of doing so, it may lead to an increase in these evaluations, thereby allowing scientists and policy makers to identify more clearly what works and what does not (see, e.g., Bloom 2005). In summary, this would be a movement toward “evidence-based government.” If nothing else, field experiments can be launched to assess the relative success of the types of communication strategies discussed in this article.

Government also can play a role via the Office of Public Engagement (see www.whitehouse.gov/engage/office), the mission of which is to allow the views of citizens to be heard within the administration by staging town hall meetings and other events. The Office of Public Engagement can make a more concerted effort to incorporate scientific perspectives in these conversations and, although perhaps unlikely for political purposes, ideally would be moved outside of the Executive Office of the President to become a nonpartisan independent commission, thereby avoiding partisan biases and building bipartisan perspectives.

CONCLUSION

The goal of this article is to identify the hurdles to effective scientific communication in the public policy-making process. It highlights several challenges but also suggests possible methods to make science play a larger role in opinion formation (for both legislators and citizens). This includes acknowledging the uncertainty inherent in science, making the relevance of science clear to citizens’ everyday life, ensuring that science proceeds with transparency and seeks to build consensus by incorporating value and ideological diversity, and seeking increased social engagement.

None of the recommended steps is easy and none ensures success. Moreover, the article focuses on direct scientific communication, ignoring obvious questions about information flows through social networks (Southwell 2013), the media (Kedrowski and Sarow 2007), and interest groups (Claasen and Nicholson 2013), all of which have been shown to generate possible uneven information distribution related to science. That being said, I offer a starting point. If scientists hope to play a role in policy making or even to ensure continued federal support of their work, it is essential that identifying effective communication strategies becomes a paramount goal. In the current politicized and polarized environment, a failure to demonstrate why and how science matters ultimately could render moot much of science.

ACKNOWLEDGMENTS

I thank Adam Howat, Heather Madonia, and especially Skip Lupia for comments and insights.