1. Introduction

The gestural controller can be made sufficiently complex in its control diversity, and therefore can optimally deal with expressive timbral control. And with this I mean it can provide the translation of physical intentions of the composer/performer, ranging form [sic] utmost fragility to outstanding trance, into a set of related timbral trajectories. (Waisvisz 2000: 425)

Few digital instruments introduced in 1984, the year Waisvisz introduced The Hands, moved beyond traditional models for live performance; most commercial products of the time offered only a keyboard or step sequencer as the performance interface (Roland 1984; Korg 1985; Yamaha 1985). While many synthesiser companies offered multiple potentiometers and push buttons for fine control over sonic parameters, few challenged the traditional performance interfaces (e.g. keyboard, sequencer) in their designs, and thus companies offered few new ways for playing electronic music. Waisvisz recognised this gap in performative possibility: ‘Even the most recently developed electronic music keyboards still provide rather poor translation of the rich information generated by hand/arm gestures and finger movements/pressures’ (Waisvisz 1985: 313). Fed up with commercial developments and inspired by the increased musical reproducibility that the Musical Instrument Digital Interface (MIDI) technology afforded, Michel Waisvisz and engineers at Studio for Electro-Instrumental Music (STEIM) created The Hands, a digital musical instrument centred on arm and hand movements for live performance (Waisvisz 1985). The design comprised sensors strapped to the performer’s hands in order to give the performer, Waisvisz, more muscular control over his music (see section 2.1.). With an array of push buttons for fingers, mercury tilt switches for wrists/arms and an ultrasonic sensor that gave gesticulations of the arms control over amplitude, note events and timbre during performance, Waisvisz and The Hands amplified the potential of the human body as a musical instrument.

Michel Waisvisz (1949–2008), composer and artistic director at STEIM, had been involved in the exploration of timbre and live electronic music since the 1960s. Waisvisz developed instruments such as TapeLoopSwing (1967–69), a live performance sampler operated by stretching and pulling audio tape, and Cracklebox (1975), a synthesiser requiring human conductivity to complete electronic circuits, that nurtured his notions of effort and touch through the physical embodiment of human connection to musical systems (STEIM 2008a). ‘I couldn’t avoid discovering, some 20 years ago, the notion of effort as a crucial musical ingredient in what we then called “live electronic music.” Putting my fingers through the back of a Putney VCS3 synthesiser and into the open leads of an analog print-board allowed to me [sic] control electronic sound in an immediate and sensitive way’ (Waisvisz 1999: 119, emphasis in original). For Waisvisz, the links between physical action and musical result were tied to the human body. In electronic music, which had the potential to obfuscate cause and effect in the production of sound, Waisvisz saw the translation of physical effort to sound as important: ‘“I like big things that require a lot of physical effort. I don’t like little pitch wheels that can make a huge orchestral glissando. I want to bring body information to musical systems”’ (quoted in Lehrman 1986: 22). Waisvisz carried his notions of effort and nuanced control over electronic music into the 1980s, building The Hands and working on software that included the Lick Machine and LiSa (Live Sampling), both of which extended how the human performer enacted and controlled sounds in real time (Dykstra-Erickson and Arnowitz 2005). Later, Waisvisz became involved with STEIM’s Touch Festival (1998) and the Children in Touch exhibition (2001), which offered many new interfaces and instruments for musical play (Otto 2008).

Even though Waisvisz worked on many other projects, he dedicated years to improving, composing for and performing on The Hands. Throughout its evolution, Waisvisz developed three hardware versions of The Hands (Torre, Andersen and Baldé 2016), and he made a conscious effort to struggle with and to learn to play his instrument: ‘The only solution that worked for me is to freeze tech development for a period of sometimes nearly two years, and than [sic] exclusively compose, perform and explore/exploit its limits’ (Waisvisz 2000: 423). Waisvisz continued to play The Hands throughout his career until his untimely death in 2008.

Reviews of Waisvisz’s performances cite him as a ‘virtuosic’ performer (Roads 1986: 46) who uses ‘calculated theatricality’ (Keane 1986: 67). Some semantically link Waisvisz’s body movements to musical movements (Lehrman 1986), and others applaud the instrument’s ‘maturity’ (Blum 1989: 89). The Hands played an important role in shaping live electroacoustic music, but Waisvisz’s music remains under-recognised. Underscoring the importance of Michel Waisvisz and his innovative digital musical instrument, this analysis focuses on one of the earliest known published recordings of The Hands, The Hands (Movement 1) (1986), released on the Wergo label (Waisvisz 1987). The piece documents the successful beginnings of a musical process (instrument building, composing, performing) that reinforced Waisvisz’s ideas about human effort in electronic music. Waisvisz created The Hands, in part, to explore music through the active effort of the performer. His interests and aesthetics intertwine in the movement’s explorations of timbre and sonic manifestations of human touch and effort. The piece stands as an aural icon of Waisvisz’s methodology and art practice.

A programme from the 1986 North American tour reveals how concerned Waisvisz was with the human body in electronic music: ‘Hands is a physical and visual approach to electronic music. It’s live electronic music. No tapes. No video. No Computer Composition. No Artificial Intelligence’ (Vasulka 1986: 10). Waisvisz brands the performance as one driven by the human performer. He sells the idea that his music comes from the physical transmission of ‘an arm, hand, or finger’, and he argues for this connection to his music (ibid.).

The following musical analysis will consider the physical ties to Waisvisz’s musical sound in The Hands (Movement 1). Through an investigation of the recording and the instrument’s technology, the analysis will pair instrument controls (device affordances and performative actions) with sonic results, with the aim of developing an aural understanding of this alternative digital musical instrument. Because there is no score, and because Waisvisz believes that composition is ‘the performance itself’ (Krefeld and Waisvisz 1990: 32), my analysis is guided by his particular concern for timbre and physicality. Technical descriptions of The Hands help document its musical performance capabilities and limits, as well as offer clues towards performance decisions. I also use software analysis tools TimbreID (Brent 2011) and EAnalysis (Couprie 2014) in order to highlight performance events and expose the ‘translation of physical intentions’ (Waisvisz 2000: 425) through spectral analyses.

I specifically focus on the first version of The Hands to bring attention to the developmental process of Waisvisz’s early compositions and performance practice. To assist with this endeavour, I interviewed Maurits Rubinstein, Waisvisz’s sound engineer, who worked with Waisvisz extensively for a number of years. I also review a 1987 video recording of Touch Monkeys (1986) to underscore performative actions that may have been used in The Hands (Movement 1) (STEIM 1987). I acknowledge that assembly code and performance software for Touch Monkeys may be different from those used in The Hands (Movement 1); yet Touch Monkeys was made with the same hardware version as The Hands (Movement 1) and, as will be discussed, shares similar sound material. The shared timbres and musical phrasing between these two works warrant a comparison.

Lastly, many use the term ‘The Hands’ to reference the instrument as a singular entity, often without any clear delineation between hardware versions, let alone changes in software (Rubine and McAvinney 1990; Birnbaum, Fiebrink, Malloch and Wanderley 2005). Mindful that scattered and limited sources on Waisvisz’s first version of The Hands may misdirect listeners to musical controls and techniques that apply to a later version of the hardware, my analysis will highlight controls used in The Hands (Movement 1), and the term ‘The Hands’ used herein will refer to the instrument version circa 1984–89 (STEIM 2008b).

2. The Hands (Movement 1)

I’m a composer using electronic means because of their differentiated and refined control over timbre … The way a sound is created and controlled has such an influence on its musical character that one can say that the method of translating the performer’s gesture into sound is part of the compositional method. (Krefeld and Waisvisz 1990: 28)

The Hands (Movement 1) was recorded during a concert on 21 April 1986 in the Auditorium at First Church, in Cambridge, MA. The concert was part of a North American tour; the tour lasted five to six weeks and included performances in Houston, Santa Fe, Cambridge, New York and Montreal (Rubinstein 2016). Maurits Rubinstein, a STEIM engineer and close friend travelling with Waisvisz, engineered the live sound, and Curtis Roads engineered the concert recording. The Hands (Movement 1) along with The Hands (excerpt of Movement 2), also from this April 1986 concert, were later compiled and released as part of New Computer Music on the Wergo label (Waisvisz 1987).

My analysis will centre on the recording of the first movement. Concert reviews and interviews around this time period indicate that many of Waisvisz’s performances were 20 to 30 minutes in length (Lehrman 1986: 21; Blum 1989: 88), and interviews with Maurits Rubinstein revealed that performances during this tour went as long as 50 minutes. Thus, while this analysis presents an investigation of the first movement, I acknowledge that further studies of Waisvisz’s music, a complete concert say, would provide opportunities for a more exhaustive analysis. Still, The Hands (Movement 1) represents one of the earliest published recordings of Waisvisz performing on The Hands, and while Ferguson (2016) analyses Waisvisz’s music of later versions of The Hands, unpacking the sounds of Waisvisz’s first version of the instrument will document the development of works during this time period and reveal threads in both his compositional process and performance practice.

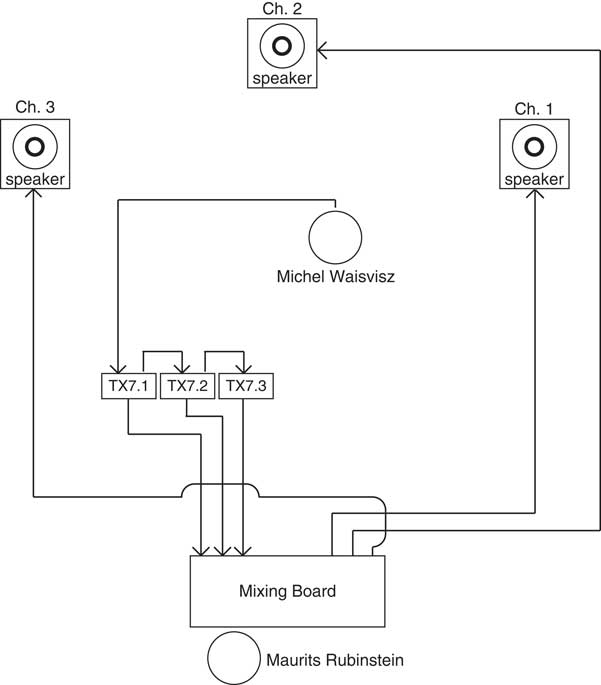

Waisvisz performed The Hands (Movement 1) using three Yamaha TX7s, each mapped respectively to the three speakers of the concert space (Lehrman 1986; Vasulka 1986; Rubinstein 2016). A reconstruction of the stage setup, based upon interviews, period concert reviews and a technical data sheet from the tour, is shown in Figure 1 (Lehrman 1986; Roads 1986; Vasulka 1986).

Figure 1 Reconstruction of the stage setup for The Hands (Movement 1). TX7s were located off stage (Vasulka 1986).

2.1. Instrument controls

Waisvisz invests in the act of performance by designing The Hands to make his effort and his intentions plain to the audience. As Waisvisz confesses, ‘“I don’t like equipment that can outrule [sic] the humans involved”’ (quoted in Lehrman 1986: 21). Physical movements that translate into musical trajectories place responsibility on the performer and turn the human into a necessary agent. In other words, Waisvisz designed The Hands to treat the human as the musical solution, not just a performance tool.

A breakdown of the controls on the first version of The Hands is well documented by Torre et al. (2016). The authors discuss five assembly code print-outs that document The Hands software (1985–88). An interview with Lehrman (1986), which occurred before the October 1986 performance of Touch Monkeys at IRCAM, effectively describes The Hands controls. For example, Lehrman’s (1986: 21) description of pitch, octave transposition, left-hand channel assignment keys and right-hand thumb control keys accurately matches descriptions of version 2.x of the assembly code (Torre et al. 2016) and Waisvisz’s 1985 ICMC report (Waisvisz 1985). While assembly code versions 3.4, 4 and 5 contain a 1986 year label, there is no clear indicator that effectively pairs The Hands (Movement 1) to one of the 1986 software version accounts described by Torre et al. (2016). These version update descriptions do not report changes to key-velocity, ‘scratch’ mode, or octave transposition, controls widely used throughout The Hands (Movement 1) (discussed below). The rest of this section highlights two physical-sound relationships of the controls that help correlate performative actions to sounds heard in The Hands (Movement 1). Additional controls related to the analysis will appear in their appropriate analysis section.

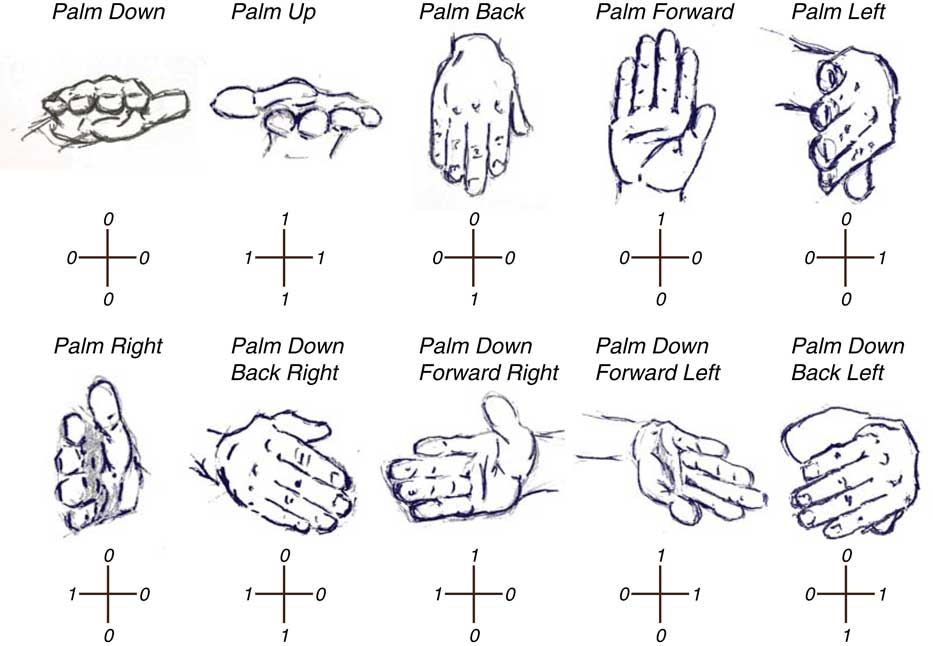

The first physical-sound relationship afforded by the controls involves hand tilt. ‘Like caressing the upperhalf [sic] of a globe’ (Waisvisz 1985: 314), physical movement of the wrist and lower arm muscles that tilt and rotate the hand activates four mercury switches. These switches control octave transposition. Even though ten modes are possible (Torre et al. 2016), eight of these hand positions activate a different octave transposition (Waisvisz 1985; Lehrman 1986; Torre et al. 2016). For example, no transposition occurs when ‘the palm of the hand was horizontal and parallel to the ground’ (Torre et al. 2016: 27). Each hand has control of an eight-octave range. Given the placement of the mercury switches on The Hands, Figure 2 depicts the ten switch modes possible with corresponding hand tilt. A video recording of Touch Monkeys (1986) shows Waisvisz moving his hands into many of the various hand poses depicted (STEIM 1987).

Figure 2 Mercury switches on The Hands allowed for ten possible modes, although eight were used to activate octave transposition (Torre et al. 2016: 27). 0/1 values indicate the various off/on configurations of the switches. (Original drawings by the author.)

The second physical-sound relationship of the controls involves arm movement. Physical movement of the arms moving the hands away from and towards each other, a measure of distance, is read by an ultrasonic sonar transmitter-receiver. This sensor controls key-velocity. The ‘close and distant positions of The Hands were scaled to velocity values between 0 and 127’ (Torre et al. 2016: 27) so that notes played with the hands close together are quiet and, as Waisvisz moves his hands farther apart, the notes played grow louder.
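
As an illustration only, the distance-to-velocity scaling described above can be sketched in Python. The linear mapping and the near and far limits (`near_cm`, `far_cm`) are assumptions made for the sketch; the sources report only that close and distant positions were scaled to velocity values between 0 and 127.

```python
def distance_to_velocity(distance_cm, near_cm=10.0, far_cm=150.0):
    """Map hand separation to a MIDI key-velocity between 0 and 127.

    near_cm and far_cm are hypothetical sensing limits, and the linear
    scaling is an assumption; neither is documented for The Hands.
    """
    # Clamp the reading to the assumed sensing range.
    clamped = max(near_cm, min(far_cm, distance_cm))
    # Normalise to 0..1, then scale to the 7-bit MIDI velocity range.
    fraction = (clamped - near_cm) / (far_cm - near_cm)
    return round(fraction * 127)
```

Under these assumptions, hands held at the near limit yield the quietest value and hands at the far limit the loudest, with readings outside the range clamped.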

A special function to key-velocity was ‘scratch’ mode, which was activated with a right-thumb button toggle. Perhaps the instrument’s most striking feature, ‘scratch’ mode was initially discovered by accident (Bongers 2007). With ‘scratch’ mode activated, each new sonar sensor distance value creates ‘a copy of the Note On event currently active’ (Torre et al. 2016: 27–8) with the ‘appropriate velocity’ at that distance (Lehrman 1986: 22). ‘Scratch’ mode operates at control rate and incoming data generates the numerous, fast Note On messages, although the speed of moving the hands apart and together could impact the ‘rate of note repetition’ (Torre et al. 2016: 28).
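
The behaviour of ‘scratch’ mode described above can be approximated with a short Python sketch. It is not a reconstruction of the original assembly code: the function names, the reading of a ‘new’ value as one that differs from the previous reading, and the scaling limits are all assumptions. Only the three-byte Note On layout (status `0x90` plus channel, note number, velocity) comes from the MIDI standard.

```python
NOTE_ON = 0x90  # MIDI Note On status byte for channel 0


def scale_velocity(distance, near=10.0, far=150.0):
    """Hypothetical linear scaling of hand distance to velocity 0..127."""
    clamped = max(near, min(far, distance))
    return round((clamped - near) / (far - near) * 127)


def scratch_messages(held_notes, distance_readings, channel=0):
    """Return Note On messages retriggered by changing distance readings.

    Assumption: a reading counts as 'new' only when it differs from the
    previous one, so a stationary pair of hands produces no repetitions.
    """
    messages = []
    previous = None
    for distance in distance_readings:
        if distance == previous:
            continue  # unchanged reading: no retrigger
        previous = distance
        velocity = scale_velocity(distance)
        for note in held_notes:
            # Copy the currently active note with the new velocity.
            messages.append(bytes([NOTE_ON | channel, note, velocity]))
    return messages
```

In this sketch, faster arm movement produces more distinct readings per unit of time and therefore denser note repetitions, which is one plausible reading of the reported link between movement speed and repetition rate.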

To better understand the musical implications of ‘scratch’ mode, I used existing documentation to create a software model of The Hands ‘scratch’ mode that controlled a Yamaha TX816 synthesiser and a Yamaha DX7 VST software modeller, Dexed. With both, increasing velocity and lengthening time between Note On messages caused an expansion of spectral harmonics, especially with certain FM synthesis algorithms. Indeed, spectral analyses of sounds in The Hands (Movement 1) where repetitions of notes space out in time and become louder also show an expansion of spectral content (see section 2.6.). Thus, since the controls of key-velocity and Note On messages are tied to the sonar sensor in ‘scratch’ mode, Waisvisz may only have had to move his hands apart to increase spectral richness of a sound.

2.2. Parametric kinesphere

Initially, one may not understand how the controls and resulting sounds of a new digital musical instrument like The Hands may work. Indeed, The Hands does not look or sound like a conventional synthesiser. However, we can understand and appreciate physical effort, having experienced effort while using our own bodies. The Hands emphasises the performer’s input into its musical system by making apparent the performer’s parametric kinesphere, the spatial area of and around the body that controls sonic parameters.

The concept of kinesphere is borrowed from movement theory and refers to ‘an imaginary space we are able to outline with our feet and our hands’, the spatial area that the body moves within (Salazar Sutil 2015: 20). A parametric kinesphere follows the physical limitations of the body and the instrument controls. The body defines one spatial boundary and the controls further limit the spatial area of the body linked to sound controls.

With The Hands, Waisvisz’s arms define his parametric kinesphere. The physical movements of the arms directly control amplitude (distance values reported by the ultrasonic sonar sensor). Thus, Waisvisz’s parametric kinesphere couples sonic and visual effect, marrying physical to musical action. Figure 3 depicts the range of Waisvisz’s parametric kinesphere with The Hands.

Figure 3 Ultrasonic sensors expand Waisvisz’s parametric kinesphere for controlling sound space. Arm movements (distance readings from the sensors) control amplitude and in ‘scratch’ mode, arm movements also control note-events. (Original image ©STEIM. Used with permission.)

The Hands also offers something novel in its digital design: the idea that controls on the instrument can alter how the instrument responds, changing the musical relationship between the performer and instrument midway through a piece. Waisvisz designed the instrument to toggle ‘scratch’ mode on and off, which altered ‘the algorithm for the translation of sensor data into music control data’ (Waisvisz 2000: 425). By activating ‘scratch’ mode, Waisvisz changes the ultrasonic sensor mapping, which allows his arm movements to additionally control Note On messages. The mode offers new possibilities in sound: ‘Holding down the button and moving the hands produces a scratching or ripping effect. If the sustain button is engaged at the same time, the effect is more like a bowed string which constantly changes in volume’ (Lehrman 1986: 22). ‘Scratch’ mode links arm movements to new sonic actions (bowing, scratching) that extend existing sound–movement relationships of amplitude. By adding the translation of physical arm movements into note events at the flip of a switch, Waisvisz expands the focus of the effective physical movements that manifest musical relationships, and his parametric kinesphere now contains these new controls as a result.

2.3. Formal structure (MIDI program changes)

Waisvisz composed The Hands (Movement 1) without a score; instead, he developed synthesis patches to help formulate the work’s design. As Waisvisz explains, ‘“I know where I start and the trajectory of where I want to go in each performance, but I will sometimes leave things out, or add, or repeat things. Actually, I find that compacting is usually best”’ (Lehrman 1986: 21). The construction and ordering of synthesis patches provided Waisvisz with a way to explore and react to sounds during performance. The software patches facilitated a type of open musical form that provided access to different timbres. Waisvisz has discussed his compositional reasons for open form: ‘I think that a composer has to be able to make immediate compositional decisions based on actual perception of sound rather than making decisions derived from a formal structure’ (Krefeld and Waisvisz 1990: 28). Waisvisz could cycle forward or backward to different sonic timbres based upon his knowledge of preset patches; these presets served as a performance road map of timbre.

There are 32 MIDI program changes that may be recalled on the Yamaha TX7, accessed by stepwise motion on The Hands (Waisvisz 1985: 314). Two right-hand buttons near the thumb control step-up/down of MIDI programs (Lehrman 1986; Torre et al. 2016). Since MIDI program changes alter synthesis algorithms, discrete changes in MIDI programs can translate to sudden shifts in spectral content. By applying spectral analysis tools that reveal sudden spectral shifts, one may calculate when a program change most likely occurred. Since The Hands (Movement 1) is 4 minutes and 26 seconds in length, and Waisvisz performed for up to 50 minutes during the North American tour (Rubinstein 2016), the overall rate of program changes would then be presumed to be low. When applied to The Hands (Movement 1), Mel-frequency cepstrum coefficients (MFCC) in TimbreID (Brent 2011) and sonograms in EAnalysis software (Couprie 2014) reveal abrupt shifts in spectral content (one indicator of a possible MIDI program change). Figure 4 overlays these proposed MIDI program changes, four in total, on top of the recording’s audio waveform.

Figure 4 Proposed MIDI program changes in The Hands (Movement 1). Number labels represent TX7 synthesis programs. (Figure created with EAnalysis software.)
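
The general idea of locating candidate program changes from abrupt spectral shifts can be sketched as follows. This is a generic novelty-detection sketch, not the procedure implemented in TimbreID or EAnalysis. It assumes that per-frame feature vectors (for example MFCCs) have already been extracted, and the `window` and `threshold` values are hypothetical.

```python
import math


def mean_vector(frames):
    """Average a list of equal-length feature vectors, column by column."""
    count = len(frames)
    return [sum(column) / count for column in zip(*frames)]


def abrupt_shifts(frames, window=4, threshold=1.0):
    """Return frame indices where the average timbre changes sharply.

    For each frame, the mean of the preceding `window` frames is compared
    with the mean of the following `window` frames; local peaks of that
    distance above `threshold` are reported as candidate shift points.
    """
    curve = [0.0] * len(frames)
    for i in range(window, len(frames) - window + 1):
        before = mean_vector(frames[i - window:i])
        after = mean_vector(frames[i:i + window])
        curve[i] = math.dist(before, after)
    return [
        i for i in range(1, len(curve) - 1)
        if curve[i] > threshold
        and curve[i] > curve[i - 1]
        and curve[i] >= curve[i + 1]
    ]
```

A peak in this curve marks only a sudden change in average timbre; as in the analysis above, it remains one indicator of a possible program change and still has to be weighed against listening and the known controls.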

Since MIDI program changes represent a shift in timbre, these changes may point towards shifts in musical idea. Beyond changes to MIDI programs, shifts in performance controls (i.e. ‘scratch’ mode) also alter musical material. I considered all these alterations collectively to help define musical sections for The Hands (Movement 1) as shown in Table 1.

Table 1 Breakdown of The Hands (Movement 1)

The sonic development of The Hands (Movement 1) unfolds in an expository fashion, slowly introducing performative movements and controller functions like pitch control, panning and ‘scratch’ mode. A MIDI program change follows the quiet introduction of Section I to indicate the start of Section II. Section II distinctively employs ‘scratch’ mode to explore the material, a sonic manifestation of the expansion and contraction of performative arm movements. The shift in density and amplitude suggests the section’s end, demarcated with the release of sustained notes. Section III crescendos out of musical rest into the piece’s climax, which immediately shifts timbre at 2:37. In continuing the musical idea through the exploration of this new timbre, Waisvisz develops two similar phrase endings, at 2:57 and 3:37 respectively. After repeating the second ending, Waisvisz moves into the sustained chaotic noise of the movement’s finale (Section IV). The final motif in this section holds until Waisvisz (or Rubinstein) abruptly cuts the sound off to end the movement.

2.4. Musical actions

Waisvisz calculatedly maps his physical movement (finger, hands, arms) to a sound’s creation so that sounds are dependent upon human action. Twelve buttons on each hand (three rows of four) control pitch (Note On/Off messages) (Lehrman 1986; Torre et al. 2016). All pitches are within one chromatic octave, but may be transposed by octave with hand tilt (see section 2.1.). Each hand has polyphonic capabilities.
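
A minimal sketch of how a button press and a hand-tilt transposition could combine into a MIDI note number is given below. The button ordering, the `base_note` of 60 and the signed `octave_shift` parameter are assumptions for illustration; the sources state only that twelve buttons cover one chromatic octave and that hand tilt transposes by octave.

```python
def button_to_midi_note(button_index, octave_shift=0, base_note=60):
    """Combine a pitch button (0..11) and an octave shift into a MIDI note.

    base_note and the ordering of buttons are hypothetical; octave_shift
    stands in for the transposition selected by hand tilt.
    """
    if not 0 <= button_index < 12:
        raise ValueError("button_index must be between 0 and 11")
    note = base_note + button_index + 12 * octave_shift
    if not 0 <= note <= 127:
        raise ValueError("resulting note is outside the MIDI range 0..127")
    return note
```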

Additional actions on The Hands, in particular ‘scratch’ mode, bear the digital translation of kinespheric space: the instrumental mapping of hand distance to key-velocity and Note On messages. Sounds created with The Hands carry the parametric kinesphere of Waisvisz’s moving arms, the relative physical location of the performer’s hands in physical space. The sounds of The Hands, then, contain prosodic traces of Michel Waisvisz’s body, having encoded the physical resistance of his playing with the musical object.

The technology demonstrates how Waisvisz embeds his actions into his sound. Instrumental mappings, designed to optimise the encoding of sound events with performative movements, show Waisvisz’s concern for the translation of the physical domain. In an attempt to outline performative movements, I listened with an audio editor to generate a list of musical events. After event identification, I examined all events using a combination of listening, spectrograms (Max/MSP) and audio features (TimbreID) in order to fill out event details (Puckette et al. 1990; Brent 2011). Lastly, I measured listening observations and analyses against The Hands technology in order to hone down possible control choices. The multi-step, multiple listen approach aided qualitative cohesion between events and their labels. Figure 5 shows musical events as they occur within The Hands (Movement 1).

Figure 5 Musical events in The Hands (Movement 1). The 75 events are numbered in chronological order. Labels indicate event type. For a full, annotated chart of musical events/controls, see http://jpbellona.com/public/writing/the-hands/Bellona_TheHandsMovement1_events.pdf (Waveform and amplitude envelope created with EAnalysis software).

The 75 sonic events manifest themselves in a myriad of combinations throughout the recording. A chart of events (see link in Figure 5) was created to help provide a basic understanding of Waisvisz’s instrumental and musical choices. The chart outlines general performance activity. For example, instrumental clicks refer to the sound of button clicks from The Hands that may be identified in the recording. Due to the dynamic level of the piece, we can hear these clicks more in the beginning than in the rest of the work.

The chart also includes an analysis of peak frequencies, although these frequencies are often between pitches. As a first attempt to codify a pitch set that might lead towards a harmonic structure, these frequencies were assigned pitch labels, skewed towards harmonic relationships. No harmonic analysis was completed due to the nature of Waisvisz’s improvisatory performances (see section 2.3.). However, frequency analysis did help decode strong spectral shifts as possible indicators of MIDI program changes. The following sections (panning, amplitude/timbre and effort) will describe several of these musical actions and events in more detail.
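
To show what it means for a peak frequency to fall between pitches, the following sketch converts a frequency to the nearest equal-tempered pitch and its deviation in cents, using the standard relation n = 69 + 12·log2(f/440). It is an illustration only: the labels in the chart were skewed towards harmonic relationships, whereas this sketch simply rounds to the nearest semitone and assumes A4 = 440 Hz.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_pitch(frequency_hz, a4_hz=440.0):
    """Return (pitch label, cents offset) for a frequency in hertz.

    Assumes twelve-tone equal temperament with A4 = a4_hz; a positive
    offset means the frequency is sharp of the labelled pitch.
    """
    midi_float = 69 + 12 * math.log2(frequency_hz / a4_hz)
    midi_note = round(midi_float)
    cents = round((midi_float - midi_note) * 100)
    label = NOTE_NAMES[midi_note % 12] + str(midi_note // 12 - 1)
    return label, cents
```

A peak at 452 Hz, for instance, is labelled A4 but sits almost half a semitone sharp, which is the kind of in-between value that makes a strict pitch-set reading uncertain.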

2.5. Panning

The Hands (Movement 1) uses three Yamaha TX7 synthesisers. The TX7s output to different speakers: left, centre and right respectively, so that MIDI channel affords control over sound source location (Lehrman 1986: 21; Rubinstein 2016). Buttons controlled by the left thumb on The Hands operate MIDI channel selection. Various possible software versions (2.x, 3.4 and 4) affected MIDI channel routing differently, but all versions gave Waisvisz effective control over MIDI channel selection (Torre et al. 2016). Thus, while it is unclear which software version was used in The Hands (Movement 1), due to the nature of the audio routing, Waisvisz had some control of sound source location through control of MIDI channel selection.

Waisvisz did not solely control all aspects of the sound, however: ‘Michel made the sounds, but … we had an agreement, an understanding’ (Rubinstein 2016) – that Rubinstein could control the sound from the mixing console. This control included ‘swapping around back and forth between speakers’ (ibid.). So, while Waisvisz could spatialise the work through the selection of MIDI channel, Rubinstein could spatialise the work from the mixing console. Panning changes are an indication of either Waisvisz selecting MIDI channels or Rubinstein making mixing choices. By listening to panning of sounds within the piece, one may infer how Waisvisz and Rubinstein develop the piece spatially. Figure 6 depicts panning of events heard throughout the work, revealing how the piece expands from discrete channels to the full spatial field.

Figure 6 Panning events in The Hands (Movement 1). Labels represent the four panning positions The Hands may control (MIDI channels 1, 2, 3, all). L=left; R=right; C=centre (Waveform and amplitude envelope created with EAnalysis software).

2.6. Amplitude and timbre

The Hands enables Waisvisz to use physical arm movements to control amplitude and timbre. Holding the hands at a constant distance maintains a fixed dynamic because the ultrasonic sonar sensor feeds similar values to key-velocity. Increasing the distance between the arms increases the overall volume and, depending on the synthesis algorithm, also adds harmonic content to the sound.

Rowe, in his review of The Hands (Movement 1), speaks to Waisvisz’s control over amplitude: ‘What is most striking about the recording is how well the interface works: just having the modest amount of expressive information coming out of The Hands to effect amplitude gives the music a life that too many computer music compositions simply do not have’ (1990: 84). Sonic gestures of amplitude are bound by Waisvisz’s physical arm movements, and create a tight musical relationship. Figure 7 shows three dynamic levels, sonically depicting the distance between Waisvisz’s hands (amplitude and harmonic content).

Figure 7 EAnalysis sonogram showing music events #70, 71, 72 (3:54.8–4:00.8) with increasing dynamics (p, mf, ff).

Of course, Rubinstein had his fingers on the faders, which not only kept Waisvisz’s sound ‘“under control”’ (Lehrman 1986: 22) but also suggests an additional musical relationship. As Rubinstein explains further,

[There was a] thin line between Michel and myself, sometimes he wished it was louder. Even when I thought it was loud, he wanted it louder. I had a direct line with the audience and feeling, and if I thought it was too loud, I made it lower. The whole show had a kind of scheme, a line, not note-by-note, sometimes I made it small and quiet, and he had to fight his way back to being loud. (Rubinstein 2016)

This collaborative effort between Waisvisz and Rubinstein helps outline Rubinstein’s role during these performances. Rubinstein could extend the dynamic range of performances. Thus, shifts in amplitude were effectively impacted by two agents, not just one. Still, the faders of the console come after the TX7 synthesisers, so while Rubinstein could compress or expand the overall volume during performances, fader volume could not impact spectral content of the synthesis algorithms.

Marked by fast shifts in amplitude, much of the second section of The Hands (Movement 1), 1:06–2:15, explores sound through the activation of ‘scratch’ mode. What is striking within this musical section is the sound of rips or tears that involve the increase and decrease of note spacing. The alteration of note spacing is a manifestation of the arms in motion within ‘scratch’ mode – the shifting between physical distances that create different response times from the sonar sensor (Lehrman Reference Lehrman1986; Torre et al. Reference Torre, Andersen and Baldé2016). While the ripping sound suggests sustain is turned off (Lehrman Reference Lehrman1986: 22), the musical phrasing of this section implies the act of moving arms in a choreographic fashion, where crescendos of sound and spectra signal the physical act of expanding and contracting arm movements. Figure 8 shows a sonogram of several of these ‘scratch’-mode arm movements within a musical passage.

Figure 8 EAnalysis sonogram showing music event #29 (1:37–1:49); ‘scratch’ mode with expanding and contracting arm movements.

The sound and the controls suggest these arm movements, and a 1987 video recording of Touch Monkeys (1986) supports this conjecture (STEIM 1987). The section 0:54–1:02 of Touch Monkeys is a clear example of ‘scratch’ mode as explained by Torre et al. (Reference Torre, Andersen and Baldé2016), where one can hear (and see) the rate of note repetitions changing as the arms slowly move apart (STEIM 1987). A forceful expansion–contraction of the arms creates a ripping sound (1:17–1:23), and this move articulates phrases found in The Hands (Movement 1). For example, 1:46–1:52 of Touch Monkeys contains multiple repeats of this ripping sound, which may be found with an extremely similar timbre in The Hands (Movement 1) from 1:39–1:47, as depicted in Figure 8.

Other similarities may be found between the two works: 2:04–2:09 in Touch Monkeys contains timbres similar to those at 1:48–1:58 in The Hands (Movement 1), and 1:30–1:40 in Touch Monkeys points to the crescendo in The Hands (Movement 1), from 2:15–2:25. And, while this article does not focus upon an analysis of The Hands (excerpt of Movement 2), it is interesting to note that the section from 14:00–16:50 of Touch Monkeys bears striking similarities to sounds and phrases heard in The Hands (excerpt of Movement 2). The section 14:18–14:37 of Touch Monkeys is especially similar to the main theme from The Hands (excerpt of Movement 2).

The sonic similarities between The Hands (1986) and Touch Monkeys (1986) suggest that part of Waisvisz’s musical development on the instrument involved revisiting sound material. Rubinstein, when asked about the reincorporation of sound and movement material into new works, commented, ‘Absolutely! Sometimes even [sic] sounds really came up again. He recycled sounds. He made new ones, but he always kept sounds’ (Rubinstein Reference Rubinstein2016). Acknowledging sonic continuity as a possible evolutionary factor, Table 2 highlights three works from 1985 to 1986 that surround The Hands (Movement 1) to help outline the instrument’s evolution within its first few years.

Table 2 Works for The Hands (1985–86)

The striking similarity between all three works is their performative ending, as documented in a concert review (Keane Reference Keane1986: 67) and archival video (STEIM 1987). The recurrence of the ending was corroborated by Rubinstein, who added, ‘This was the end for quite a while … he just put them on the stand and stepped back and at a certain point he looked at me, and it was up to me to decide. He was telling me ok, as far I’m concerned this is where we stop’ (Rubinstein Reference Rubinstein2016). The continuity of endings between the three works further suggests the honing of a musical set for The Hands, a set complete with both old and new sound (as well as performative) material.

2.7. Effort

The CD liner notes depict the music of The Hands (Movement 1) as a physical enterprise: ‘[I]f music … was about meditation on the cyclical activities of rowers at sunset … then The Hands is about rowing itself’ (Waisvisz 1987). The link between sound and effort for Waisvisz is critical, and physical and mental effort must be discernible to the listener. As Waisvisz would later state, ‘The physical effort you make is what is perceived by listeners as the cause and manifestation of the musical tension of the work’ (Krefeld and Waisvisz Reference Krefeld and Waisvisz1990: 29). Because distance between the arms controls amplitude, hearing extreme changes in dynamics helps depict Waisvisz’s physical arm motion and effort. Throughout The Hands (Movement 1), large swells of noise are often immediately followed by quiet, sustaining notes, as heard in events #26–7 (1:31.7–1:35.5), #49–50 (3:02.3–3:16.4) and #52–3 (3:20–3:24.3) (see Figure 5). With movement and sonic phrasing similar to those of The Hands (Movement 1), Waisvisz freezes his hands and his body while sustaining chords in Touch Monkeys, from 3:36–3:48 (STEIM 1987). The juxtaposition of sonic density (mapped from active arm/hand movement) against musical sustain (body stillness) tethers the sound to the body. Barry Truax writes about the effectiveness of mapping effort to sound in this way from a 1985 Waisvisz performance: ‘the points of greatest interest and contrast were those at the transition points; one in particular was very effective where a large sound mass was transformed to a quiet sustained chord’ (Keane Reference Keane1986: 67).

Physical effort can also build musical tension, as heard during the long crescendo from 2:15 to 2:37. Here, the slow crescendo and expansion of overtones result from a slow widening of the performer’s arms. Note triggers in the left channel accent the expansion, and the loudest and most complex sound of the crescendo seems to suggest the physical limitation of Waisvisz’s outstretched arms. Several moments in Touch Monkeys, 3:43–3:55 and 5:00–5:10, support this idea (STEIM 1987). Even though Rubinstein could bring the faders up, we know Waisvisz cannot push his own sound louder than the furthest distance between his arms allows (a velocity value of 127). At the sonic climax, perhaps just as his arms become fully outstretched, Waisvisz triggers a change in timbre (a new TX7 program selection) that allows him to break free from his physical limit. The increased density and amplitude of this new timbre also break the musical tension and let Waisvisz move past what was once perceived as physically impossible.

Some, however, have described the coupling of movement to sound as merely theatrics: ‘What impressed here was the illusion of the performance, its theatrical aspects, not the actual technique of controlling the TX7 synthesisers’ (Honing Reference Honing1987: 14). Honing is critical of Waisvisz by implying that technical command of an instrument is its performative value, and that The Hands are not interactive, or at least, not interactive enough. Yet, the music of The Hands (Movement 1) – the physical actions and the sounds – stands for more than just theatrical entertainment; the connections between sound and movement carry personal meaning for Waisvisz.

Michel always got excited. There is a time in the piece in the middle, the sound of airplanes. He got really excited and he got into an emotional state of mind. It was dealing with his father who was an RAF pilot. [He was] making turns like a pilot; we always had a lot of eye contact. He wanted the sound in that part to give pressure to the audience. I wanted to keep it low. Battle is a big word, but there was tension between him and me. After concerts we had discussion whether he was happy or not. (Rubinstein Reference Rubinstein2016)

Waisvisz infused himself into his musical movements. He created physical links to his own narrative, so that particular sounds allowed Waisvisz to tap into his own experiences. Waisvisz moves through sound physically, enabling a choreographic interactivity between performer and sound, and, as Rubinstein suggests, between performer and sound engineer. The translation of his effort in the performance is evident, supported by the ‘uninhibited enthusiasm by both critics and auditors’ (Honing Reference Honing1987: 14).

Of course, meeting the physical demands of performing alone for up to 50 minutes was no small feat. Waisvisz would finish even a 30-minute performance drenched in sweat (Lehrman Reference Lehrman1986). Many audience members write about Waisvisz’s effort in these early works for The Hands. As Denis Smalley attests in a 1986 performance review: ‘Here the audience instinctively hailed a direct link between the performer’s gestures, his movements, and their effect both on the shaping of sound-contours and the passage through the musical structure’ (Kendall et al. Reference Kendall, Ashley, Barrière, Dannenberg, Moore and Risset1987: 40). By mapping hand distance and hand tilt into sonic activity, Waisvisz sets up the body as a recognisable limitation to his musical system. The rise and fall of sound follows the trajectory of Waisvisz’s movements, and the trajectory of sonic action provides a contextual frame for understanding Waisvisz’s ideas about human effort in digital electronic music. The physical efforts resonate within the sounds – physical actions help shape their envelopes after all – and these kinesphere-encoded sounds offer listeners, who live in the physical world, a gateway into hearing this alternative way of playing music.

3. Conclusion

The Hands (Movement 1) documents the beginnings of The Hands. The work captures an early sound world and reveals sonic connections to later works. Waisvisz reused sound and performance material throughout the early years on The Hands, suggesting a musical practice that was incremental and ongoing. The early success of The Hands and touring works, like The Hands (Movement 1), helped garner commissions for the instrument (i.e. Touch Monkeys and Archaic Symphony), which allowed Waisvisz to develop his craft and expose audiences to his performance practice. The use of arm movements to control amplitude and note events was a novel musical choice, and by listening to the number of sounds generated by ‘scratch’ mode in The Hands (Movement 1), one can hear how this feature became a signature mark of his early work on the instrument. Further listening to The Hands (Movement 1) may even conjure up images of Waisvisz’s arms moving about on stage: pulling, twisting, contracting, swiping. The sounds serve as choreographic memories, an imprint that after a few listens becomes inseparable from the body.

Today, the field of live electronic music continues to grapple with the balance between the human body and technological systems, between human choice and algorithmic choice, and between physical effort and technical ease. Often these scales are tipped away from the flesh. Here, in The Hands (Movement 1), Waisvisz argues the case for the human in electronic music in unequivocal terms by making human effort audible, almost palpable. Waisvisz stands his ground against the mechanisation of live music and gestures for us to consider the performer once more. Thirty years later, his message remains poignant and powerful.

The success of live performances of The Hands (1986) and others that followed, Touch Monkeys (1986) and Archaic Symphony (1987), helped broadcast Waisvisz’s message of human effort in electronic music to audiences worldwide, and in the context of Waisvisz’s active artistic director role at STEIM, spurred a new physical model for engaging with and performing electronic music. The Hands concepts and controls were streamlined for new artists and their instruments, including Ray Edgar’s Sweatstick, Walter Fabeck’s SonoGloves, Laetitia Sonami’s Lady’s Glove and Edwin van der Heide’s MIDI-Conductor (all instruments created at STEIM and with the assistance of Bert Bongers) (Bongers Reference Bongers2007; Otto Reference Otto2008: 51–2). Recounting the influence of physicality on her music, Laetitia Sonami says, ‘Gestures are what I became attached with … the idea of communicating through some type of physical action … In the 90s we were all interested in … making sure that we controlled things and that it made sense and that everybody understood what we were controlling’ (Sonami Reference Sonami2016). As musicians continue to explore how to interface with electronic sounds, The Hands and their legacy live on in new instrument designs that seek to translate the physical body into sound. For example, Imogen Heap’s glove controller is the latest in a line popularising the desire to translate the hand’s movements into sound and sound controls.

Waisvisz offered a new way of thinking about the body in performing electronic music: how to incorporate physical effort into digital electronic sound. The Hands endowed the spatial movements of the arms and hands with sound control, unprecedented for its time, and in the process, interwove the moving body with musical interface. Waisvisz mapped his arm movements to amplitude, which literally amplified his effort. By doing so, The Hands fused sound shape with body shape, and through that, Waisvisz showed us how to turn our bodies into instruments of digital electronic sound.

Acknowledgments

I am indebted to Kristina Andersen, who manages the Michel Waisvisz Archive, for her help with overseas access to its materials, and to Maurits Rubinstein for his wealth of knowledge and his openness in sharing his stories.