1. Description

The manual actions expressive system (MAES) described below addresses the problem of enabling music creation and performance using natural hand actions (e.g. hitting virtual objects, or shaking them). The motivation for this project is to exploit the potential of hand gestures to generate, shape and manipulate sounds as if these were physical entities within a larger structured environment of independent sonic material (i.e. other entities that act independently from the manipulated sounds). In order to achieve this, the actions of the hands must be convincing causes of the sounds produced and visible effectors of their evolution in time, which is a well-known requisite for expression in digital devices and interfaces. Specifically for MAES, the intention is to preserve strong links between the causality of gestures and everyday experience of the world, yielding sound that is a believable result of the performer's natural actions and enabling intimate control of that sound. This can be achieved by mimicking and/or adapting the mechanics of physical phenomena; for instance, gripping grains to bind them together into a smooth continuous sound and loosening the grip to release them as a sparser jumbled texture, shaking particles to produce collisions and so on. Furthermore, causality can still be maintained in hyper-real situations: for example, a particle container that changes size from the proportions of a hand-held receptacle to the dimensions of a room.

An important aim in this project concerns the achievement of expressive content and sonic sophistication with simple hand gestures (Wanderley 2001), so that MAES can be used by individuals who do not have formal musical training, because the performing gestures are already ingrained in their neuromuscular system. Yet, MAES aims to enable virtuosity in the compositional structuring and articulation of sonic material at a level comparable to outputs produced in the electroacoustic studio: while the gestures remain simple, the mappings associated with these can be sophisticated. This is achievable by subsuming complexity within the technology, thus reducing the specialised dexterity required to produce sophisticated sound. In fact, simple gestures in the real world often set in motion complex processes: for instance, when we throw an object, its trajectory is governed by the combined effects of gravity, air friction, the momentum transferred to the object by the hand and so on. This is also true for sonic processes, such as the mechanisms at work when hitting a bell, rubbing a roughened surface with a rod and so forth. Furthermore, subsuming complexity also enables sonic manipulation within a larger sonic environment in which, similarly to real life, we act within our independent surroundings, which our actions modify but do not totally control. Ideally, structure design and implementation of the environment, its mechanics and its functions can be created beforehand, while still allowing individual expression and performance freedom in a manner analogous to the design of videogames.Footnote 1 Thus, the participant is able to realise an individual instance of a performance within the constraints and affordances resulting from such structural design.

To a significant extent, the considerations above differ from the concept of instrument, which requires learning conventions specific to a particular device that are often outside common bodily experience (e.g. it is necessary to learn how to use a bow to produce sound on a violin, or to develop an embouchure to play a wind instrument). Instead, MAES aims to maintain gestural affinity with manual actions rather than engaging with specially built mechanisms. This also minimises the necessity for timbral consistency in comparison to instrument-driven metaphors, enabling use of a wide variety of spectromorphologies, only limited by the current capabilities of the processing engine and the imagination of the user. Therefore, the device is treated as a transducer of existing bodily skills that becomes as transparent as possible and, as technology develops, will disappear altogether. Furthermore, it is envisaged that future developments of systems of this type will shape and manipulate audiovisual and haptic objects, bringing the model even closer to the mechanics of videogame play. Nevertheless, although such objects are not yet implemented in MAES, the physicality of the metaphors employed implies tacit tactility and vision: hopefully, this should become apparent in the mapping examples and musical work discussed below (sections 4.4 and 5).

The conceptual approach in MAES focuses on mapping strategies and spectromorphological content: rather than investing time and effort in the creation of new devices, this research emphasises the adaptation of existing technology for creative compositional use in gesture design, sound design and the causal match of gesture with sound. Therefore, in addition to the development of the software tools required for adaptation, most of the work has gravitated around the design and implementation of a sufficiently versatile mapping strategy underpinned by a corresponding synthesis and processing audio engine, and a viable compositional approach which is embodied and demonstrated in the resulting musical work: the mapping of gestures is as important as the corresponding selection of spectromorphologies and sound processes. In other words, the main concern shifts from technological development to actual content, ultimately embodied in the musical output. The use of buttons or additional devices (pedals, keyboards, etc.) to perform a work is avoided in order to prevent disruption of the sound shaping/manipulation metaphor. This aids the smoothness of a performance and, since the captured data is generic, simultaneously reduces dependency on a specific device. The result is an interactive environment that facilitates the composition of works in which the performer is responsible for the articulation of part of the sonic material within a larger sonic field supported by the technology. Moreover, we can already observe the incipient mechanics of a videogame, in which the actions of the user prompt a response (or lack of response) from the technology.

2. Background and Context

The quest for intuitive interfaces appropriate for music performance is inextricably linked with issues concerning gesture and expression.Footnote 2 Recent research has led to the discovery of important insights and essential concepts in this area, which have facilitated practice-led developments.

2.1. Gesture and expression

Decoupling of sound control from sound production (Sapir 2000; Wanderley 2001) facilitated the implementation of a new breed of digital performance devices. However, it also highlighted the potential loss of causal logic and lack of expression when performers’ gestures cannot be associated with sonic outputs (Cadoz, Luciani and Florens 1984; Cadoz 1988; Cadoz and Ramstein 1990; Mulder 1994; Roads 1996; Goto 1999, 2005).

Gesture has been defined as all multisensory physical behaviour, excluding vocal transmission, used by humans to inform or transform their immediate environment (Cadoz 1988). It fulfils a double role as ‘symbolic function of sound’, and ‘object of composition’ whose validity can only be proven by the necessities of the creative process; often requiring trial and error development through its realisation in musical compositions (Krefeld 1990).

2.2. Mapping and metaphor

Causal logic is dependent on mapping – in other words, the correspondence between gestures or control parameters and the sounds produced (Levitin, McAdams and Adams 2002). Correspondence can be one-to-one, when one control parameter is mapped to one sound parameter; convergent, when many control parameters are mapped to a single sound parameter; divergent, when one control parameter is mapped to many sound parameters (Rovan, Wanderley, Dubnov and Depalle 1997) or a combination of these.Footnote 3 Mappings may be modal, when internal modes choose appropriate algorithms and sound outputs for a gesture depending on the circumstances, or non-modal, when mechanisms and outputs are always the same for each gesture (Fels, Gadd and Mulder 2002). Furthermore, gestures are most effective when mappings implement higher levels of abstraction instead of raw synthesis variables, such as brightness instead of relative amplitudes of partials (Hunt, Paradis and Wanderley 2003). This is achieved by adding modal mapping layers, which can be time-varying (Momeni and Henry 2006).
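The three basic correspondences can be illustrated with a short Python sketch. This is not code from MAES or from the cited authors: the function names, the target parameter names and the combining rules are hypothetical choices made for illustration, and all values are assumed to be normalised to the range 0 to 1.

```python
from typing import Dict, Sequence


def one_to_one(control: float) -> float:
    """One control parameter drives one sound parameter."""
    return control


def convergent(controls: Sequence[float]) -> float:
    """Many control parameters are combined into a single sound parameter.

    The plain average is an arbitrary illustrative combining rule.
    """
    return sum(controls) / len(controls)


def divergent(control: float) -> Dict[str, float]:
    """One control parameter drives several sound parameters at once.

    The target names below are hypothetical.
    """
    return {
        "brightness": control,          # direct response
        "grain_density": control ** 2,  # curved response
        "reverb_mix": 1.0 - control,    # inverse response
    }
```

A combined (many-to-many) mapping would simply feed several control parameters through more than one of these functions at the same time.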

Mappings should be intuitive (Choi, Bargar and Goudeseune 1995; Mulder 1996; Mulder, Fels and Mase 1997; Wessel, Wright and Schott 2002; Momeni and Wessel 2003), exploiting intrinsic properties of our cognitive map that enable tight coupling of physical gestures with musical intentions (Levitin et al. 2002). Successful gestures can incorporate expressive actions from other domains. This is desirable because spontaneous associations of gestures with sounds are the results of lifelong experience (Jensenius, Godoy and Wanderley 2005). This leads to the concept of metaphor (Sapir 2000), whereby electronic interfaces emulate existing gestural paradigms which may originate in acoustic instruments – such as the eviolin (Goudeseune, Garnett and Johnson 2001) or the SqueezeVox (Cook and Leider 2000) – or in generic sources – such as MetaMuse's falling rain metaphor (Gadd and Fels 2002).

Metaphor facilitates transparency, an attribute of mappings that indicates the psychophysiological distance between the intent to produce an output and its fulfilment through some action. Transparency enables designers, performers and audiences to link gestures with corresponding sounds by referring to common knowledge understood and accepted as part of a culture (Gadd and Fels 2002): spontaneous associations of gestures with sounds and cognitive mappings are crucial components of this common knowledge.

Therefore, MAES aimed to develop strong metaphors through hand gestures embedded in common knowledge belonging to the cognitive map of daily human activity: as long as these are linked to appropriate spectromorphologies they have the potential to produce convincing mappings for gesture design.Footnote 4 Performers do not have to consider parameters and mapping mechanisms, but rather conceive natural actions akin to human manual activity (e.g. throwing and shaking objects), which are reinforced by their multimodal nature.

2.3. Learnability versus virtuosity

Gestural interfaces should balance between a potential for virtuosic expression and learnability (Hunt et al. 2002).Footnote 5 Technologies requiring little training for basic use but allowing skill development through practice strike this balance, offering gentle learning curves and ongoing challenges (Levitin et al. 2002).

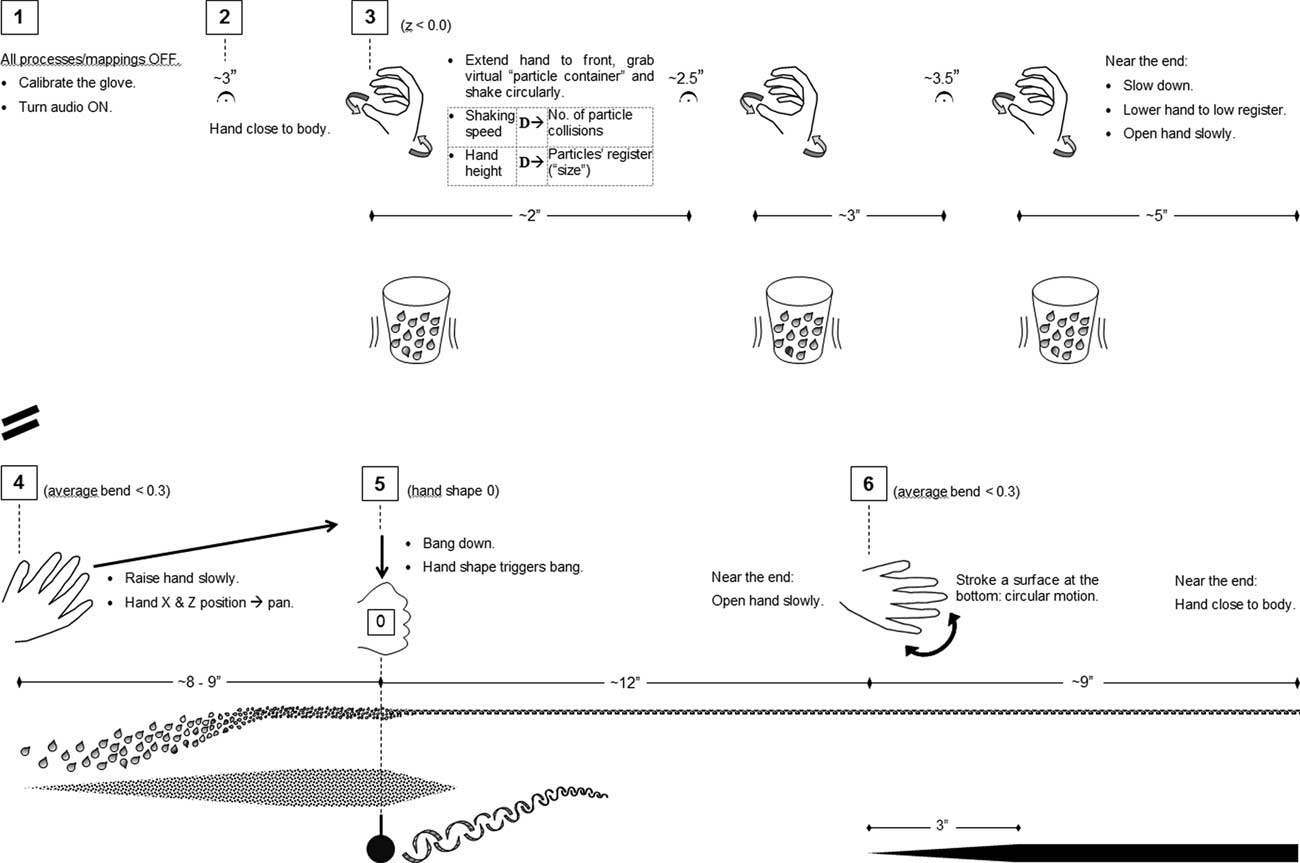

MAES enhances learnability by enabling the design of gestures that are already ingrained in the human cognitive map that constitutes a manual repertoire. For instance, a set of instructions for the performance of a musical passage (i.e. a score) may consist of the following sequence of manual actions:

• Extend hand to front, grab virtual particle container and shake circularly for 3 seconds.

• Pause for 3.5 seconds.

• Shake for 5 seconds.

• Near the end: slow down, lower hand and open it slowly.

These indications are performable by musicians and non-musicians alike. Of course, it is important to ensure that the sonic results correspond to these actions: for instance, shaking could be mapped to audio grains being articulated according to the velocity of the hand, and so on.

MAES also enables progression towards virtuosity. However, it is important to stress that, since one of the aims is to enable music performance using simple gestures, virtuosity in the achievement of timbral variety through mapping and sound processing is prioritised over instrumental manual dexterity: in other words, the emphasis is on the development of compositional sophistication. For this purpose the software allows the user to configure complex mappings in order to design gestures and change these configurations as the performance progresses, rather than implementing a ‘hard-wired’ setting; offering a large number of possible available combinations of mapping primitives and interchangeability of the spectromorphologies controlled by these mappings. This enables the expansion of the inventory of actions available (see section 4.3).

2.4. Effort

The perception of physical effort enhances expressivity (Vertegaal, Ungvary and Kieslinger 1996; Krefeld 1990; Mulder 1994). Regardless of whether actual effort is real or virtual, it enlarges motion by projecting it and expresses musical tension through the musician's body language (Vertegaal et al. 1996). Although MAES does not implement actual effort into the gestures, this is already implied in our cognitive map as a result of daily experience (e.g. the muscular activity involved in throwing an object).

3. Existing Interfaces

It is impossible to list all existing interfaces in this article.Footnote 6 We will therefore concentrate on systems that are relevant to the development of MAES. Following the latter's aims and approach, meaningful contextualisation focuses on guiding metaphors, corresponding mapping strategies, spectromorphological content, timbral control and their realisation in composition.

Historically, the work of Michel Waisvisz and Laetitia Sonami has remained influential to this day. In Waisvisz's The Hands, control of MIDI signals favours triggering (e.g. recorded samples) rather than continuous control of sonic attributes (Krefeld 1990; Torre 2013). Although there is little documentation on their functioning, the structure of the actual devices and Waisvisz's performances indicate an instrumental approach rather than hand-action sound-shaping (e.g. Waisvisz 2003). While also controlling MIDI, Sonami employs the Lady Glove (which includes a foot sensor) differently (Bongers 2007; Sonami 2010, 2013; Torre 2013): together with the use of filtering processes, she achieves expression by controlling large numbers of short snippets by means of concurrent mechanisms (mutual distance between the hands, hand–foot distance, orientation, finger bend, etc.) which, in addition to rhythmic control, allow her to shape sounds timbrally.Footnote 7 This is strengthened by the theatricality of her gestures.

MAES was influenced by Sound Sculpting's ‘human movement primitives’ metaphorsFootnote 8 (Mulder 1996; Mulder, Fels and Mase 1999), and the use of gestures for multidimensional timbral control. Both reinforce the manual dexterity innate in humans; the premise that, within limits, audio feedback can replace force-feedback; and an implicit sensorial multimodality (Mulder et al. 1997). However, Sound Sculpting's timbral control focused on FM parameters, providing narrower scope for variety and differing from MAES's regard for spectromorphologies as surrogate to hand actions’ physical effects.

Rovan (2010) uses a right-hand glove comprising force-sensitive-resistors, bend sensors and an accelerometer, together with an infrared sensor manipulated by the left hand. Music structure articulation shares common ground with MAES, whereby the user can control durations of subsections within a predetermined order (Rovan advances using left-hand actions whilst MAES generally subsumes preset advancement within sound-producing gestures). On the other hand, Rovan's metaphors are different from MAES's manual actions, suggesting an instrumental approach.Footnote 9

Essl and O'Modhrain's tangible interfaces implement hand action metaphors (e.g. friction). Similar to MAES, these address ‘knowledge gained through experiences with such phenomena in the real world’ (2006: 285). However, because of their reliance on tactile feedback, metaphor expansion depends on the implementation of additional hardware controllers. Also, sonic output relies on microphone capture and a limited amount of processing. MAES avoids these constraints through the virtualisation of sensorimotor actions supported by ingrained cognitive maps, and mapping flexibility coupled with synthesis/processing variety (e.g. interchangeable outputs that maintain surrogacy links to gestures): this compensates to an extent for the lack of haptic feedback.

SoundGrasp (Mitchell and Heap 2011; Mitchell, Madgwick and Heap 2012) uses neural nets to recognise postures (hand shapes) reliably, implementing a set of modes which are optimised for sound capture and post-production effects, and are complemented by a synthesiser and drum modes. In comparison, MAES enables more flexible mode change through programmable gesture-driven presets that are adapted to the flow of the composition, but hand-shape recognition is more rudimentary.Footnote 10 This reflects a significant difference in approaches and main metaphors: SoundGrasp's sound-grabbing metaphor is inspired by the sonic extension of Imogen Heap's vocals, which could be conceptualised as an extended instrument paradigm that may also trigger computer behaviours. This differs from MAES's focus on the control of spectromorphological content within an independent environment. Finally, SoundGrasp's mapping is more liberal than MAES's regarding common knowledge causality of gestures: while some of the mappings correspond to established cognitive maps (e.g. sound-grabbing gesture to catch a vocal sample, angles controlling rotary motion), others are inconsistent with MAES's metaphor (e.g. angle controlling reverberation). SoundCatcher's metaphor (Vigliensoni and Wanderley 2009) works similarly to SoundGrasp, but without posture recognition. It implements looping and/or spectral freezing of the voice: hand positions control loop start/end points. A vibrating motor provides tactile feedback for the distance from the sensors and microphone. Comparisons between MAES and SoundGrasp are also applicable to SoundCatcher.

Pointing-At (Torre, Torres and Fernstrom 2008; Torre 2013) measures 3D orientation (attitude) with high accuracy. A bending sensor on the index finger behaves as a three-state switch (as opposed to MAES's continuous bending data in all five fingers). MAES and Pointing-At share a compositional approach that combines the computer's environmental role with sound controlled directly by the performer's gestures; subsuming complexity in the technology but being careful not to affect the transparency of the interface. The approach to software design is also similar, using MAXFootnote 11 in combination with external objects purposely designed to obtain and interpret data from the controller. This enables a flexible mapping strategy, as evidenced in different pieces.Footnote 12 However, such changes require the implementation of purpose-built patches, as opposed to MAES's inbuilt connectable mappings between any data and sound processing parameters within a single patch. This also accounts for a difference in the approach to the integration of gesture design within a composition: Torre's design normally consists of a sequence of subgestures which form a higher-level complex throughout macrolevel sections in the piece, while in MAES mappings change from preset to preset, using gestures in parallel or in rapid succession, normally resulting in a larger number of modes.Footnote 13 This has an obvious impact on the structuring of musical works.Footnote 14 Also, Pointing-At allows for a liberal choice of metaphors which, similarly to SoundGrasp, combine mappings corresponding to hand-action cognitive maps (e.g. sweeping sound snippets like dust in Agorá) with more arbitrary ones (e.g. hand roll controlling delay feedback in Molitva). Technical differences between Pointing-At and MAES also influence gesture design and composition. For instance, Pointing-At facilitates spatialisation within a spherical shell controlled by rotation movement thanks to accuracy and a full 360-degree range of attitude data. On the other hand, position tracking motion inside the sphere is less intuitive.Footnote 15 In contrast, better accuracy and ranges for position tracking than orientation favour mapping of the former in MAES, therefore allowing coverage of points inside the speaker circle, including changes in proximity to the listener. Also, the use of continuous bend values for each finger in MAES enables different mappingsFootnote 16 from those generated by bending used as a switch in Pointing-At (e.g. crooking and straightening the finger to advance through loop lists in Mani). Finally, theatrical elements and conceptual plots that aid gesture transparency are common to compositional approaches developed for both Pointing-AtFootnote 17 and MAES.

The P5 GloveFootnote 18 has been used for music on several occasions,Footnote 19 mainly controlling MIDIFootnote 20 and/or sample loops.Footnote 21 An exception is Matthew Ostrowski's approach, which shares similarities with MAES: he focuses on creating gestalts using a P5 driven by MAX (DuBois 2012), achieving tight control of continuous parameters and discrete gestures.Footnote 22 The metaphors also manipulate virtual objects in a multidimensional parameter space, employing physical modelling principles (Ostrowski 2010). On the other hand, Ostrowski focuses on abstract attributes rather than seeking causality within a physical environment. Malte Steiner controls CSound parameters and graphics: documentation of a performance excerpt (Steiner 2006) suggests control of sonic textural material in an instrumental manner, while the control of graphics responds to spatial position and orientation.

Nuvolet tracks hand gestures via KinectFootnote 23 as ‘an interface paradigm … between the archetypes of a musical instrument and an interactive installation’ (Comajuncosas, Barrachina, O'Connell and Guaus 2011: 254). It addresses sound shaping, realised as navigation through a concatenative synthesis (mosaicking) source database. Although it differs significantly from MAES by adopting a path metaphor and use of a single audio technique, it shares two important concerns: higher abstraction level via intuitive mappings (e.g. control of spectral centroid) and a game-like structuring of a performance through pre-composed paths that the user can follow and explore, avoiding known issues related to the sparseness of particular areas of the attribute space. Similarly to Nuvolet, The Enlightened Hands (Vigliensoni Martin 2010) maps position to concatenative sound synthesis. Axes are mapped to spectral centroid and loudness. Visuals are also controlled manually. While the issue of sparseness is identified and prioritised for future research, there is no indication that this has already been addressed in the project. Mano (Oliver 2010) provides an inexpensive yet effective method of tracking hand shapes using a lamp on a dark surface, offering detailed continuous parameter control. Its approach follows theories of embodied cognition, promoting simple mappings that arise from interrelated complex inputs.

The Thummer Interface (Paine 2009) benefits from the versatility and abundant data provided by the Nintendo Wii Remote.Footnote 24 Although it uses an instrument metaphor, conceptual premises leading to its development shed light on wider issues related to the design of digital controllers, such as transparency and high-level mapping abstraction: Thummer uses four predominant physical measurements (pressure, speed, angle and position) to control five spectromorphological parameters (dynamics, pitch, vibrato, articulation and attack/release) considered to be fundamental in the design of musical instruments. Therefore, it subsumes complex data mappings in the technology in order to achieve more tangible metaphors; an approach followed in MAES. Both Thummer and MAES implement configurable mappings and groupings between controller data and the sound production engine according to user needs. Finally, the ‘comprovisation’Footnote 25 approach described by Paine shares the concept of a (relatively liberal) structured individual trajectory, akin to videogame play in MAES's approach.

Beyer and Meier's system (2011) subsumes complexity in the technology in order to allow user focus on simple actions, similarly to MAES. Users with no musical training compose according to their preferences within a known set of note-based musical genres and styles. However, this project differs in its note-based metaphor and its emphasis on learnability by novice users over development of virtuosity, requiring hard-wired mappings – as opposed to MAES's configurable mappings.

Other interfaces share less common ground with MAES but are listed here for completeness: Powerglove (Goto 2005) and GloveTalkII (Fels and Hinton 1998) use glove devices. GRIP MAESTRO (Berger 2010) is a sensor-augmented hand-exerciser measuring gripping force and 3D motion. Digito (Gillian and Paradiso 2012) implements a note-based modified keyboard paradigm. Phalanger (Kiefer, Collins and Fitzpatrick 2009) tracks hand motion optically, controlling MIDI.Footnote 26 Couacs (Berthaut, Katayose, Wakama, Totani and Sato 2011) relies on first-person shooter videogame techniques for musical interaction.

4. Implementation

Figure 1 provides a convenient way of conceptualising MAES, consisting of a tracking device controlled by specialised software for the creation of musical gestures.

Figure 1 MAES block diagram.

4.1. Tracking device

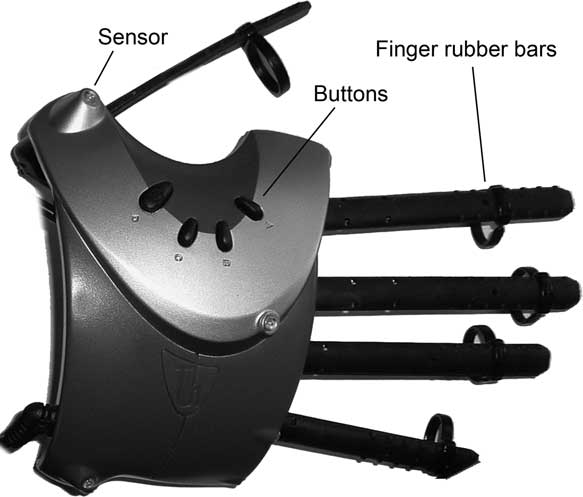

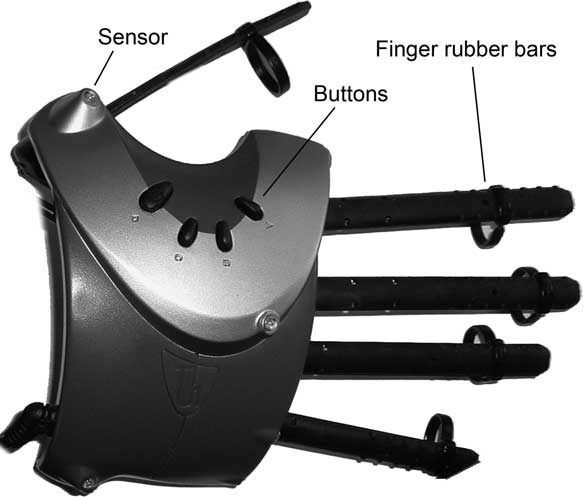

Since the project focused on content, it was important to choose a device that would minimise the technical effort invested in its adaptation. The P5 Glove (Figure 2) captures the data required to devise convincing gestures. It is affordableFootnote 27 and therefore within reach of the widest possible public, supporting reasonable expectations of affordability in future technology. Despite being an old device,Footnote 28 it provides:

• tracking of three-dimensional translation and rotation, and finger bend,

• sufficient sensitivity and speed,Footnote 29

• detection within a wide spatial range calibrated by each user.

Figure 2 P5 Glove.

However, the original manufacturer's software library did not exploit its capabilities, working within a narrow spatial range and reacting sluggishly. Fortunately, McMullan (2003, 2008) and Bencina (2006) developed C libraries that access the glove's raw data: these were used to implement alternative tracking functions within an external MAX object. Also, looseness of the plastic rings used to couple the rubber bands to the fingers resulted in slippages that affected the reliability and repeatability of finger bend measurements. This was significantly remedied with adjustable Velcro attachments placed between the base of the finger and the original rings.

4.2. Software

In addition to a user interface implemented in MAX, the software consists of:

1. the processing package,

2. the external object P5GloveRF.mxe (Fischman 2013), and

3. the mapping mechanism.

4.2.1. Synthesis and processing

Synthesis and processing modules are interconnectable by means of a patchbay-emulating matrix, and consist of the following:

1. Sound sources

1.1. Three synthesisers (including microphone capture)

1.2. Two audio file players (1–8 channels)

2. Spectral processesFootnote 30

2.1. Two spectral shifters (Fischman 1997: 134–5)

2.2. Two spectral stretchers (Fischman 1997: 134–5)

2.3. Two time stretchers (Fischman 1997: 134–5)

2.4. Two spectral blur units (Charles 2008: 92–4)

2.5. A bank of four time-varying formantsFootnote 31

3. Asynchronous granulationFootnote 32

• This includes the control of sample read position, wander and speed (time-stretch); grain density, duration, transposition and spatial scatter; and cloud envelope.

4. QList Automation

• MAX QLists allow smooth variation of parameters in time according to breakpoint tables.

5. Spatialisation

• A proprietary algorithm implements spatialisation in stereo, surround 5.1 and two octophonic formats, including optional Doppler shift. The matrix patchbay enables the connection of the outputs of any of the synthesisers, file players and processes to ten independent spatialisers. The granulator features six additional spatialisers and the file players can be routed directly to the audio outputs, which is useful in the case of multichannel files that are already distributed in space.Footnote 33
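To make the asynchronous granulation controls listed under item 3 more concrete, the following Python sketch schedules a grain cloud from a subset of those parameters. It is an illustration under stated assumptions, not the MAES granulator: the `Grain` and `make_cloud` names are hypothetical, grain onsets are assumed to follow a Poisson process (a common choice for asynchronous clouds), spatial scatter is reduced to a stereo pan value, and read speed and the cloud envelope are omitted.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Grain:
    onset: float          # seconds from the start of the cloud
    duration: float       # seconds
    read_position: float  # seconds into the source sample
    transposition: float  # semitones
    pan: float            # 0.0 (left) to 1.0 (right)


def make_cloud(length: float, density: float, duration: float,
               read_position: float, wander: float,
               transposition: float, scatter: float,
               seed: int = 0) -> List[Grain]:
    """Schedule grains at irregular onsets averaging `density` per second."""
    if density <= 0.0:
        return []  # no grains: silence
    rng = random.Random(seed)
    grains: List[Grain] = []
    t = rng.expovariate(density)  # exponential gaps give a Poisson process
    while t < length:
        # wander: random deviation around the nominal read position
        position = max(0.0, read_position + rng.uniform(-wander, wander))
        # scatter (0 to 1): random spread around the centre of the field
        pan = min(1.0, max(0.0, 0.5 + rng.uniform(-scatter, scatter) / 2))
        grains.append(Grain(t, duration, position, transposition, pan))
        t += rng.expovariate(density)
    return grains
```

For example, `make_cloud(2.0, 50.0, 0.03, 1.0, 0.1, 0.0, 1.0)` describes a two-second cloud averaging 50 grains per second; a synthesis engine would then read each grain from the source sample and apply a window to it.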

4.2.2. MAX external object

P5GloveRF.mxe fulfils the following functions:

1. Communication between the glove and MAX

2. Conversion of raw data into position, rotation, velocity, acceleration and finger bend

3. Utilities, such as glove calibration, storing shapes, tracking display
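The text does not specify how the external object derives velocity and acceleration from the tracked data. One common approach, shown here purely as an assumption, is to take backward finite differences over successive position samples; the `differentiate` helper and the fixed sampling interval `dt` are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def differentiate(samples: Sequence[Vec3], dt: float) -> List[Vec3]:
    """Backward finite difference between consecutive 3D samples.

    Returns one fewer element than the input; dt is the time in seconds
    between samples (assumed constant).
    """
    return [
        tuple((b - a) / dt for a, b in zip(prev, curr))
        for prev, curr in zip(samples, samples[1:])
    ]


# Position -> velocity -> acceleration, sampled every 0.5 s.
positions = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.5, 0.0, 0.0)]
velocities = differentiate(positions, 0.5)
accelerations = differentiate(velocities, 0.5)
```

Differencing amplifies measurement noise, which is one reason why smoothing of the derived values can be useful.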

The MAX patch includes optional low-pass data filters that smooth discontinuities and spikes. So far, these have only been used for orientation and, more seldom, to smooth velocities.
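The design of these filters is not described. As a minimal sketch, assuming a first-order (one-pole) low-pass, smoothing a stream of tracked values could look as follows; the `smooth` function and its `alpha` coefficient are hypothetical names.

```python
from typing import Iterable, List


def smooth(values: Iterable[float], alpha: float) -> List[float]:
    """One-pole low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1]).

    alpha lies in (0, 1]; smaller values smooth more but respond more
    slowly. The first output equals the first input.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    out: List[float] = []
    y = None
    for x in values:
        y = x if y is None else y + alpha * (x - y)
        out.append(y)
    return out
```

With `alpha = 0.5`, a single spike of 1.0 in an otherwise zero stream is halved and then decays gradually, which is the trade-off between suppressing spikes and adding lag that any such filter has to balance.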

4.3. Mapping approach

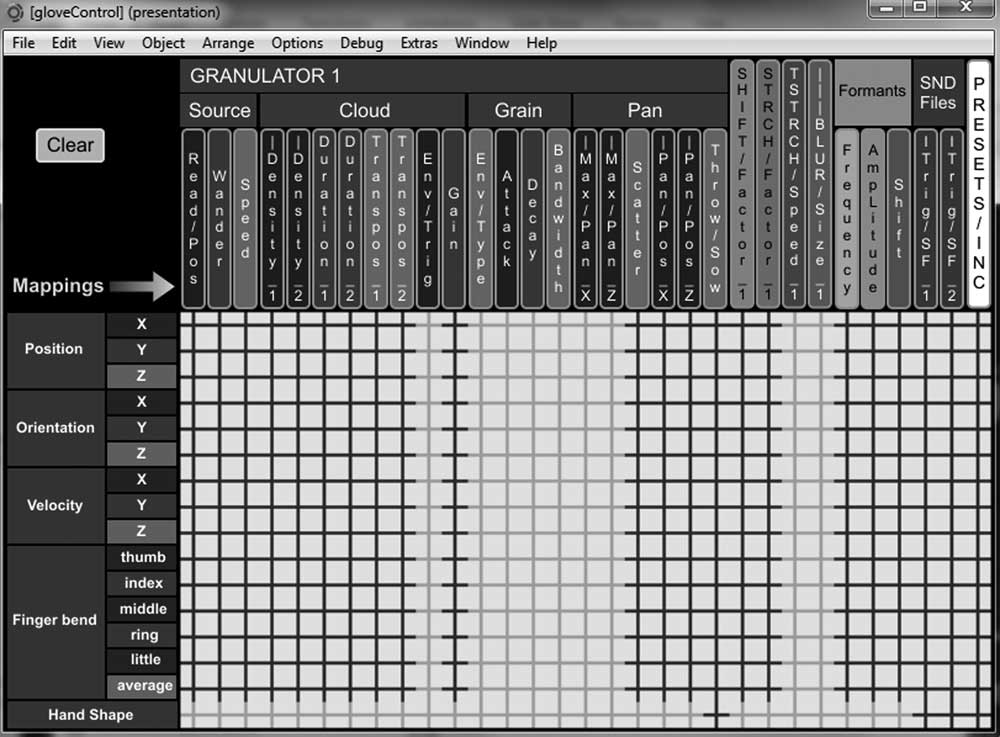

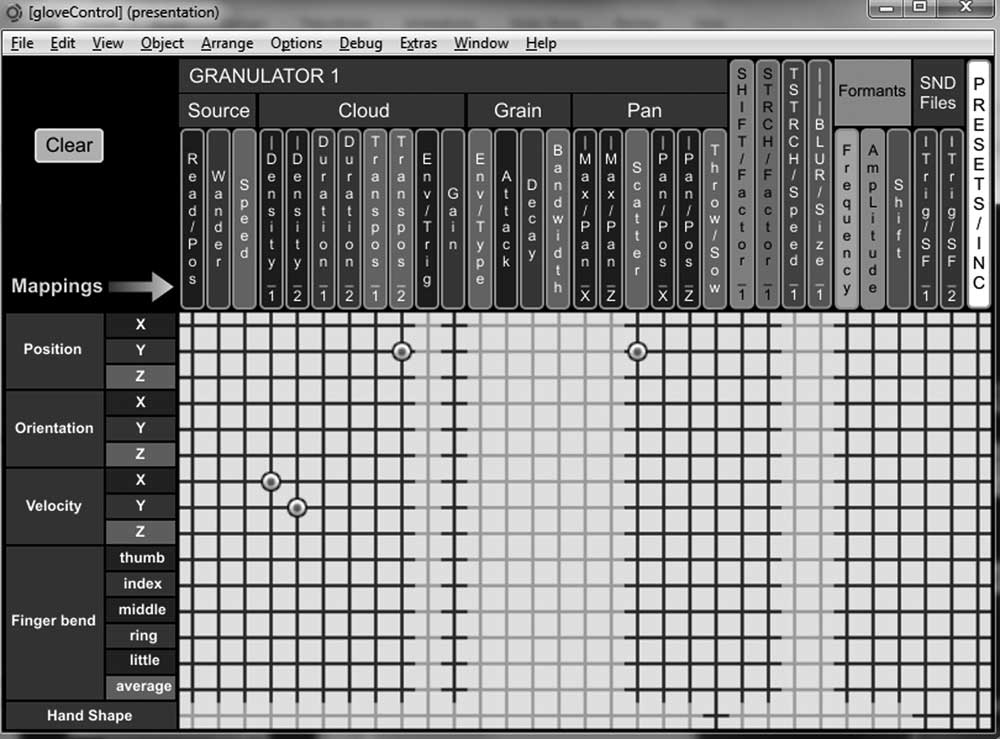

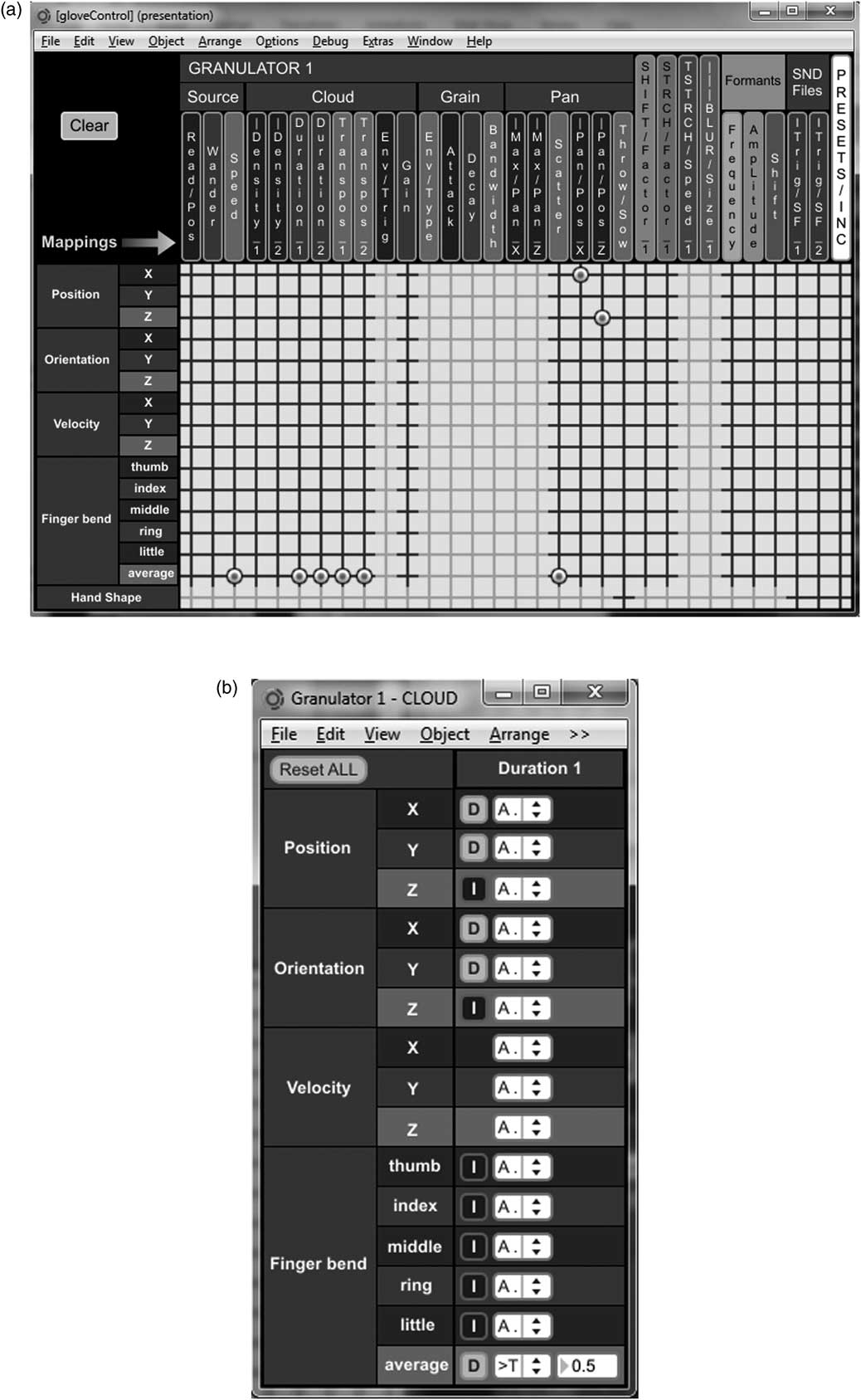

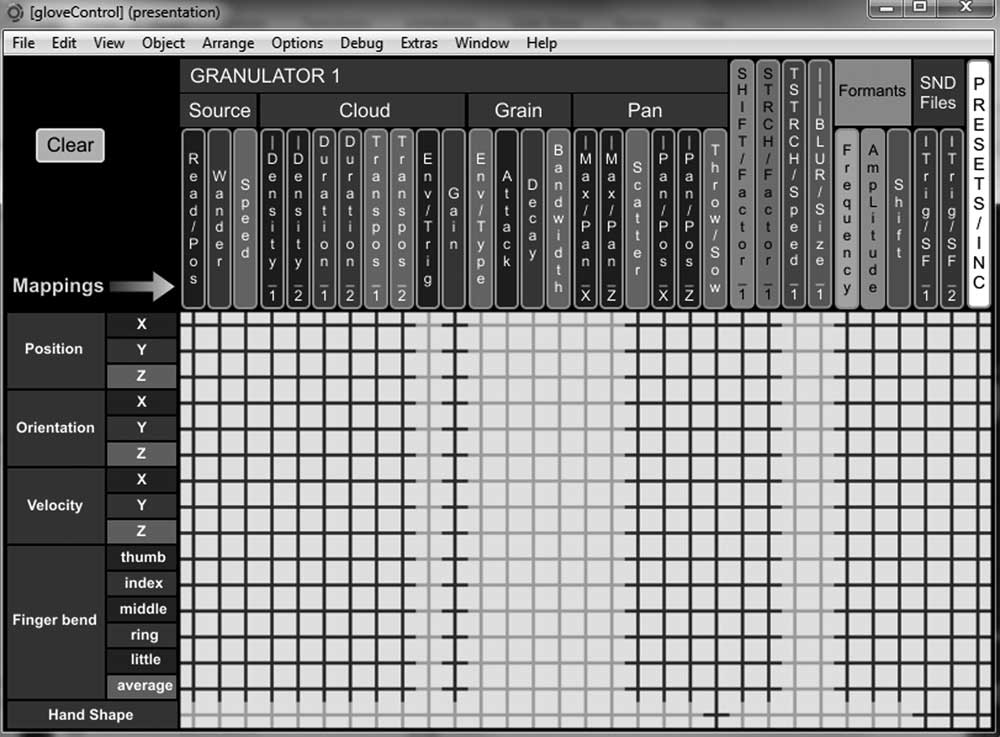

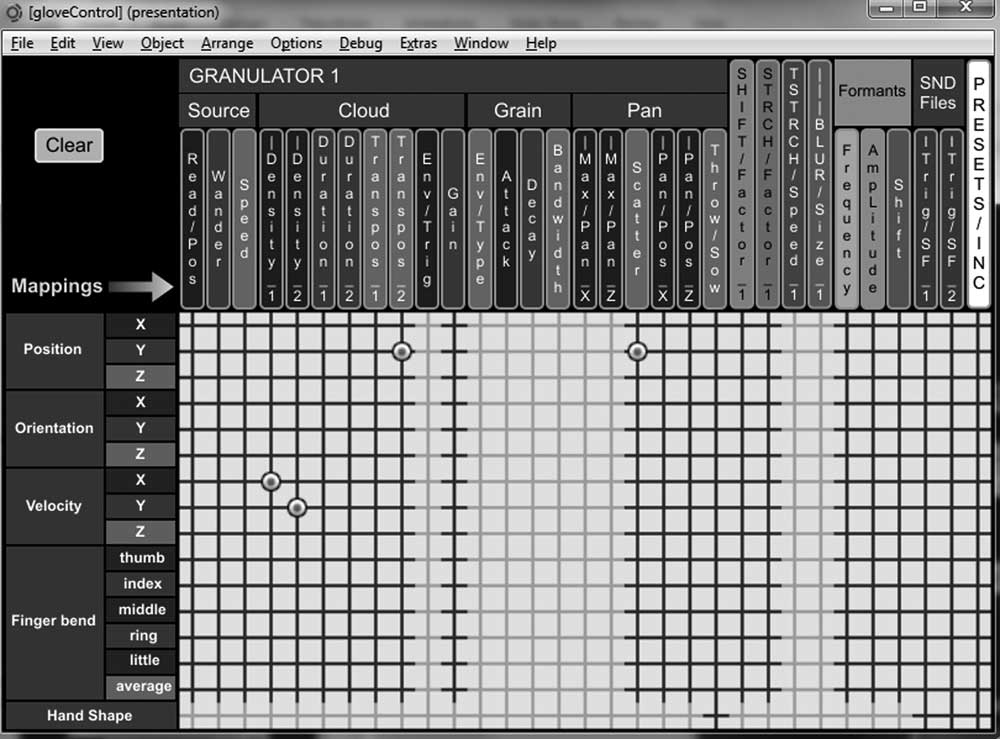

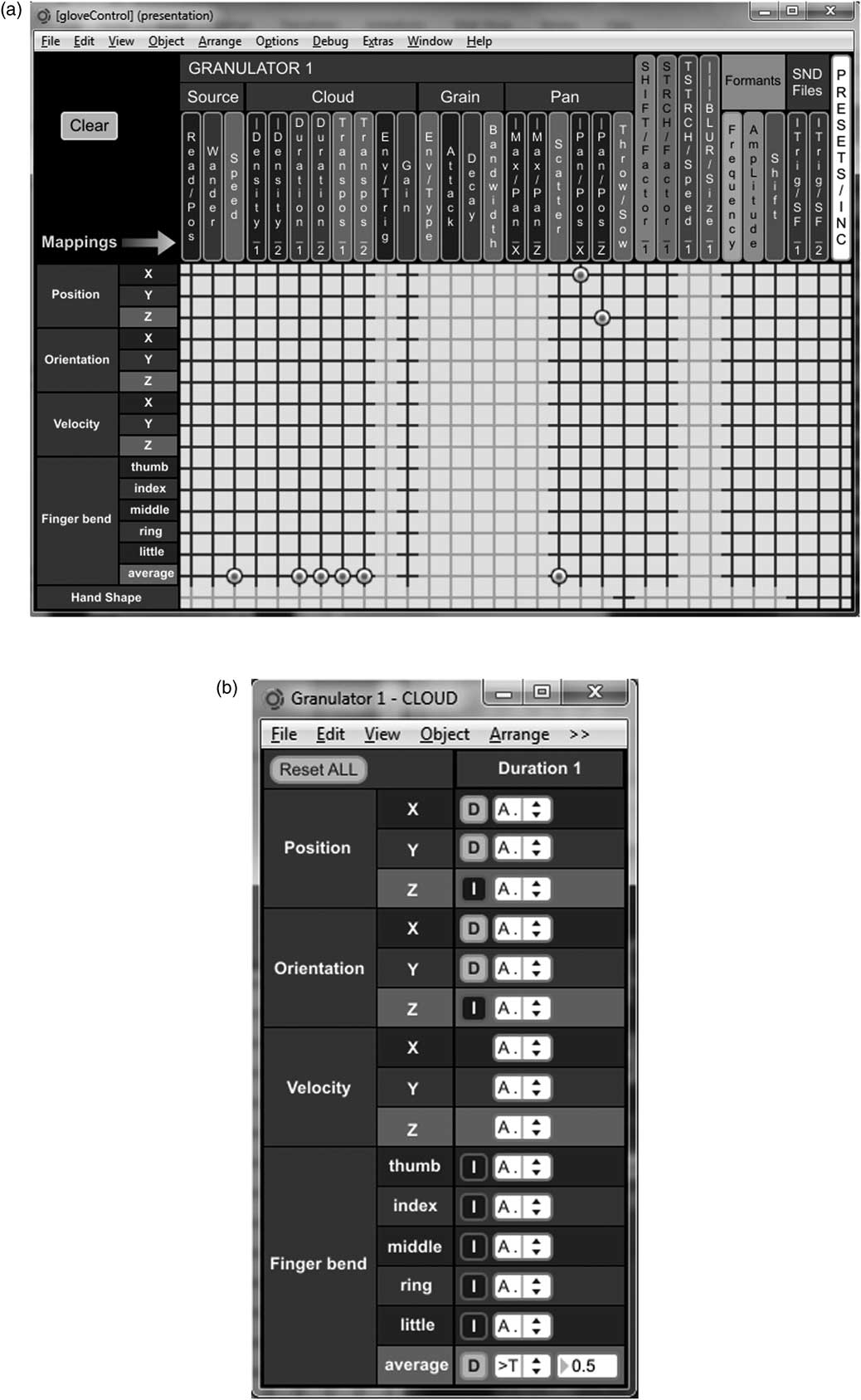

The patch provides configurable mappings via a matrix instead of implementing a hard-wired approach.Footnote 34 It is possible to establish correspondences between 15 streams of tracked data and 18 continuous processing parameters, a physical model for throwing/sowing particles, two soundfile triggers, and a preset increment (Figure 3). This one-to-one mapping produces a set of primitives that can be used independently to establish basic correspondences and also combined simultaneously into divergent and many-to-many mappings to generate more complex metaphors, offering a large number of possibilities.Footnote 35

Figure 3 Mapping matrix. Tracked parameters appear in the left column. Sound parameters, audio file triggers and preset increments appear on the top row. Fuzzy columns indicate that mappings are not available (due to 32 bit limitations). Fuzzy entries in the bottom row disable hand shape mappings that do not make sense.
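The idea of a switchable matrix of primitives can be sketched in Python as follows. This is an illustrative data structure rather than the MAX patch itself: the `MappingMatrix` class and the stream and parameter names are hypothetical. Because the text does not say how MAES combines several sources arriving at the same target, the sketch simply returns all arriving values.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class MappingMatrix:
    """Switchable connections between tracked data and sound parameters."""

    def __init__(self, sources: List[str], targets: List[str]) -> None:
        self.sources = set(sources)
        self.targets = set(targets)
        self.links: Set[Tuple[str, str]] = set()

    def connect(self, source: str, target: str) -> None:
        """Switch on one cell of the matrix (one mapping primitive)."""
        if source not in self.sources or target not in self.targets:
            raise KeyError((source, target))
        self.links.add((source, target))

    def route(self, tracked: Dict[str, float]) -> Dict[str, List[float]]:
        """Return, per target, the values arriving from connected sources."""
        out: Dict[str, List[float]] = defaultdict(list)
        for source, target in sorted(self.links):
            out[target].append(tracked[source])
        return dict(out)


# Hypothetical subset of streams and parameters.
matrix = MappingMatrix(["position_x", "position_y", "velocity"],
                       ["density_1", "transposition_1"])
matrix.connect("velocity", "density_1")         # one-to-one primitive
matrix.connect("velocity", "transposition_1")   # makes velocity divergent
matrix.connect("position_y", "transposition_1") # makes the target convergent
routed = matrix.route({"position_x": 0.1, "position_y": 0.8, "velocity": 0.4})
```

Here `routed` holds one value for `density_1` and two for `transposition_1`, showing how independent one-to-one cells add up to divergent and many-to-many mappings.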

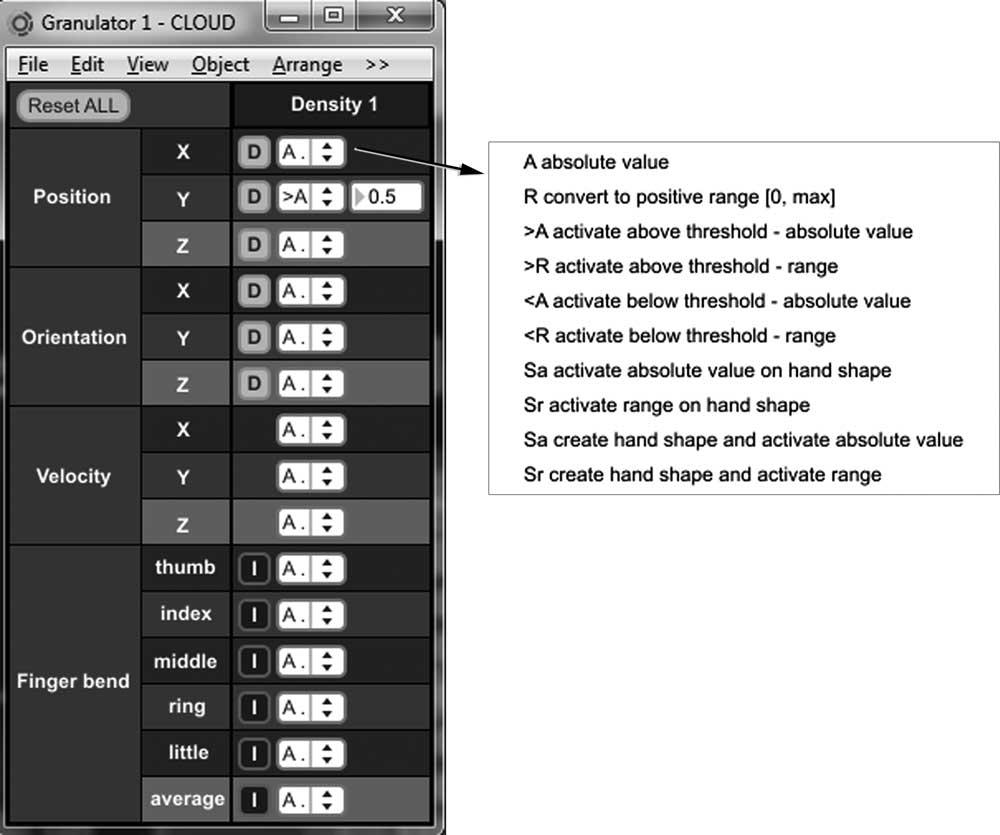

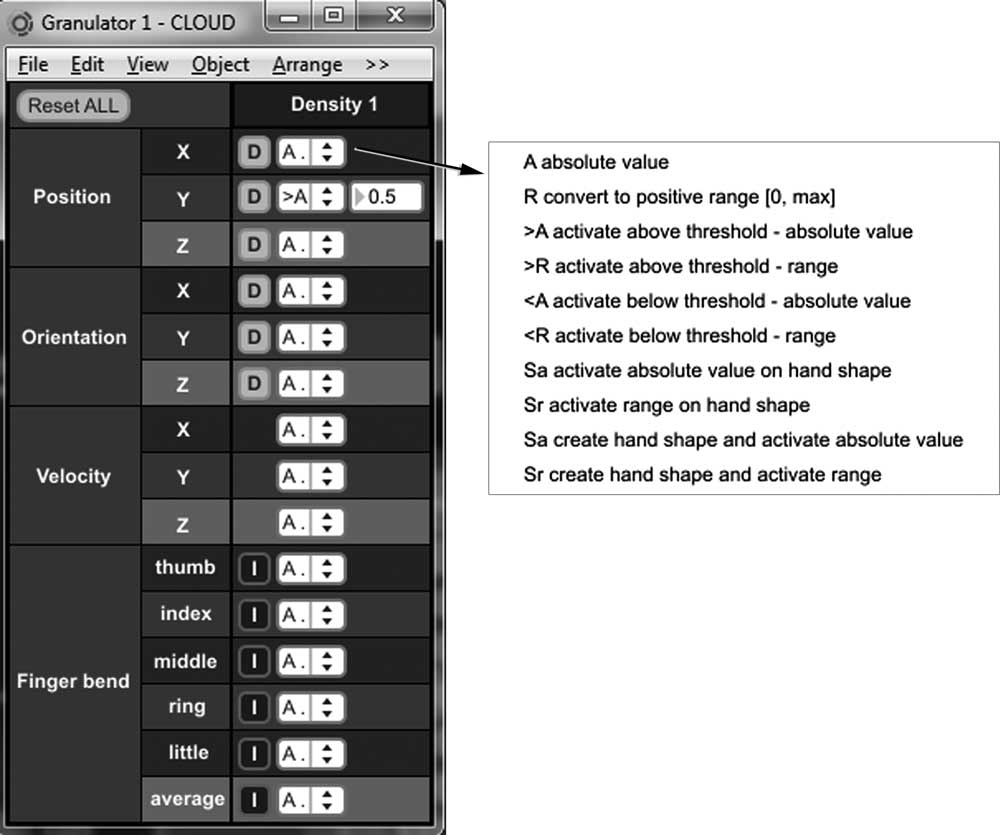

Furthermore, correspondences can include a number of conditions: for example, Figure 4 displays conditions for mappings between position X and density 1. Also, mappings of continuous parameters can be direct – when increments (decrements) in the source parameter cause corresponding increments (decrements) in the target parameter – or inverse – when increments (decrements) cause corresponding decrements (increments). Continuous parameters can have more than one condition: for instance, in Figure 4 the mapping between position X and density 1 is also conditional on the value of position Y being greater than 0.5.

Figure 4 Mapping conditions for correspondences between position X and density 1.
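A conditional mapping of this kind might be sketched as follows. The function name `apply_mapping`, the triple format used for conditions and the normalisation of the source stream to the range 0 to 1 (so that the inverse of a value v is 1 − v) are all assumptions made for illustration. Returning `None` stands for "the mapping does not take effect", leaving the target parameter untouched.

```python
import operator

# Comparison symbols accepted in conditions (hypothetical notation).
COMPARE = {">": operator.gt, "<": operator.lt}


def apply_mapping(frame, source, inverse=False, conditions=()):
    """Return the value to send to the target, or None if a condition fails.

    frame      -- dict of tracked values for the current instant
    inverse    -- False for a direct mapping, True for an inverse one
    conditions -- iterable of (stream, comparison, threshold) triples that
                  must all hold for the mapping to take effect
    """
    for stream, comparison, threshold in conditions:
        if not COMPARE[comparison](frame[stream], threshold):
            return None
    value = frame[source]
    return 1.0 - value if inverse else value


# Position X drives density 1 only while position Y is greater than 0.5.
rule = dict(source="pos_x", conditions=[("pos_y", ">", 0.5)])

active = apply_mapping({"pos_x": 0.25, "pos_y": 0.7}, **rule)
blocked = apply_mapping({"pos_x": 0.25, "pos_y": 0.4}, **rule)
inverted = apply_mapping({"pos_x": 0.25, "pos_y": 0.7}, inverse=True, **rule)
```

Because conditions are plain data, several can be attached to the same mapping and stored alongside it.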

Finally, all these mappings can be stored in the patch's presets. Therefore, it is possible to change mapping modes and gestures used from preset to preset as often as required. Moreover, since it is possible to map tracked data and set conditions for preset increments, there is no need to use other means to increment presets and change mappings; this avoids disruption to the metaphor of direct shaping of sounds through manual actions, aids the transparency of the technology and enables an organic interaction between it and the performer.

The following section illustrates the flexibility of the mapping mechanisms in the generation of more complex metaphors by combining primitives and setting up conditions. The generation of such metaphors is at the heart of this project's aim to achieve expression through simple gestures while subsuming technological complexity.

4.4. Mapping examples

The examples will be described in terms of metaphors: the reader is encouraged to use these to form a mental image of the equivalent physical actions and resulting sound.

4.4.1. Shaking particles (Movie example 1)

The performer holds and shakes a receptacle containing particles that produce sound when they collide with each other and with the edges of the container, as they are being shaken: the faster the shaking speed, the larger the number of collisions and corresponding sounds. When shaking stops there is silence. This metaphor is extended into hyper-reality by creating a correspondence between the particles’ register (and perhaps their size) and the height at which the container is held: when the hand is lowered, frequency content is correspondingly low, and it goes up as the hand is raised.

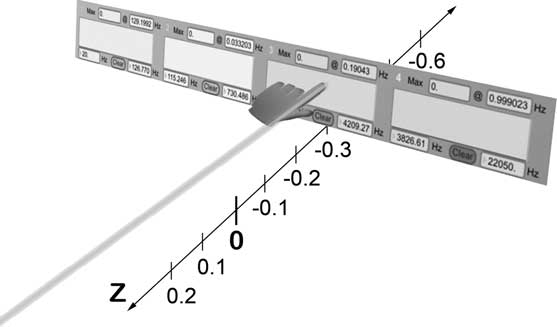

Figure 5 shows the mappings used in this example:

Figure 5 Shaking particles: mapping.

1. X and Y velocities control the granulation density limits: when the hand moves rapidly between left and right (X), and between top and bottom (Y), the density is higher and we hear more collisions. As the hand slows down we gradually hear fewer collisions. When the hand is still (velocity = 0) the grain densities are 0 and there is no sound.

2. Y position is mapped divergently onto the second transposition limit and the grain scatter: the higher the hand is held the higher the transposition (and the corresponding top register of the grains) and the wider their spatial scatter.
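The two mappings above can be summarised in a short Python sketch. The scaling constants, the normalisation of the inputs, and the assignment of X speed to the first density limit and Y speed to the second are assumptions for illustration; the ranges and routing in the patch may differ.

```python
def shaking_particles(vel_x, vel_y, pos_y,
                      max_density=100.0, max_transposition=24.0):
    """Map one frame of glove data to granulator settings.

    vel_x, vel_y -- hand velocities; only their magnitude (speed) is used,
                    assumed normalised so that 1.0 is the fastest movement
    pos_y        -- hand height, assumed normalised to 0..1
    max_density and max_transposition are hypothetical scaling constants
    (grains per second and semitones respectively).
    """
    speed_x = min(abs(vel_x), 1.0)
    speed_y = min(abs(vel_y), 1.0)
    height = min(max(pos_y, 0.0), 1.0)
    return {
        "density_1": speed_x * max_density,              # X speed -> density limit
        "density_2": speed_y * max_density,              # Y speed -> density limit
        "transposition_2": height * max_transposition,   # divergent mapping of Y:
        "scatter": height,                               # register and scatter
    }


still = shaking_particles(0.0, 0.0, 0.5)    # no movement: both densities are 0
fast = shaking_particles(-1.0, 0.5, 1.0)    # rapid shaking with the hand held high
```

Using the magnitude of the velocity reflects the fact that shaking in either direction produces collisions.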

4.4.2. Gripping/scattering a vocal passage (Movie example 2)

The performer traps grains dispersed in space, condensing these into a voice that becomes intelligible and hovers around the position of the fist that holds it together. As the grip loosens, grains begin to escape and spread in space, and the speech slows down, disintegrating and becoming unintelligible.

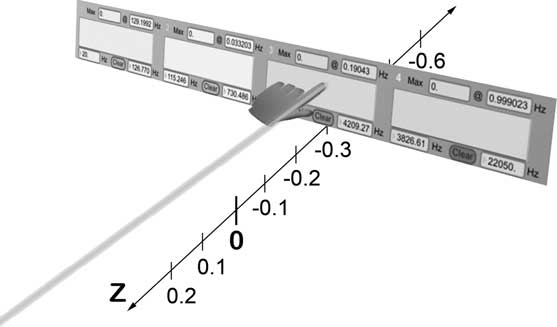

Figures 6(a) and 6(b) show the mapping and conditions used in this example. Finger-bend average is mapped divergently onto the sample's reading speed, and the grains’ duration limits, transposition limits and scatter. The following conditions apply:

Figure 6 Gripping/scattering a vocal passage: (a) mapping; (b) conditions.

1. Mappings onto the duration limits are direct (Figure 6(b)) so that maximum finger bending corresponds to the longest grains: in this case, maximum duration limits of 90 and 110 milliseconds were set elsewhere in the patch (not shown in the figure) in order to obtain good grain overlap and a smooth sound.

2. Finger bend only takes effect when it is greater than 0.5 (finger bend range is 0 to 1). This avoids grain durations that are too short: in this case, the minimum durations will correspond to a bend of 0.5, yielding half of the maximum values above; in other words, 45 to 55 milliseconds. Nevertheless, we will hear shorter grains due to transposition by resampling.

3. Mappings onto transposition limits are inverse: full-range finger bend is mapped without conditions onto corresponding ranges of 0 to 25 and 0 to 43 semitones. As the fist tightens, finger bend increases and transposition decreases towards 0; when the latter value is reached, the voice is reproduced in its natural register.
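The arithmetic behind these three conditions can be checked with a small Python sketch. The numerical limits are those quoted above; the linear scaling, the function names and the use of `None` to mean "the mapping does not take effect" are assumptions for illustration.

```python
MAX_DURATIONS_MS = (90.0, 110.0)       # duration limits at full finger bend
MAX_TRANSPOSITIONS_ST = (25.0, 43.0)   # transposition limits at zero finger bend
BEND_THRESHOLD = 0.5                   # durations respond only above this bend


def duration_limits(bend):
    """Direct, conditional mapping: finger bend (0..1) -> grain durations in ms.

    Returns None when the bend is not above the threshold, i.e. when the
    mapping does not take effect.
    """
    if not bend > BEND_THRESHOLD:
        return None
    return tuple(bend * d for d in MAX_DURATIONS_MS)


def transposition_limits(bend):
    """Inverse, unconditional mapping: finger bend (0..1) -> semitones."""
    return tuple((1.0 - bend) * t for t in MAX_TRANSPOSITIONS_ST)


closed_fist = duration_limits(1.0), transposition_limits(1.0)
half_open = duration_limits(0.75), transposition_limits(0.5)
open_hand = duration_limits(0.4), transposition_limits(0.0)
```

A fully closed fist gives the longest grains with no transposition, whereas an open hand leaves the durations unaffected and applies the full transposition ranges.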

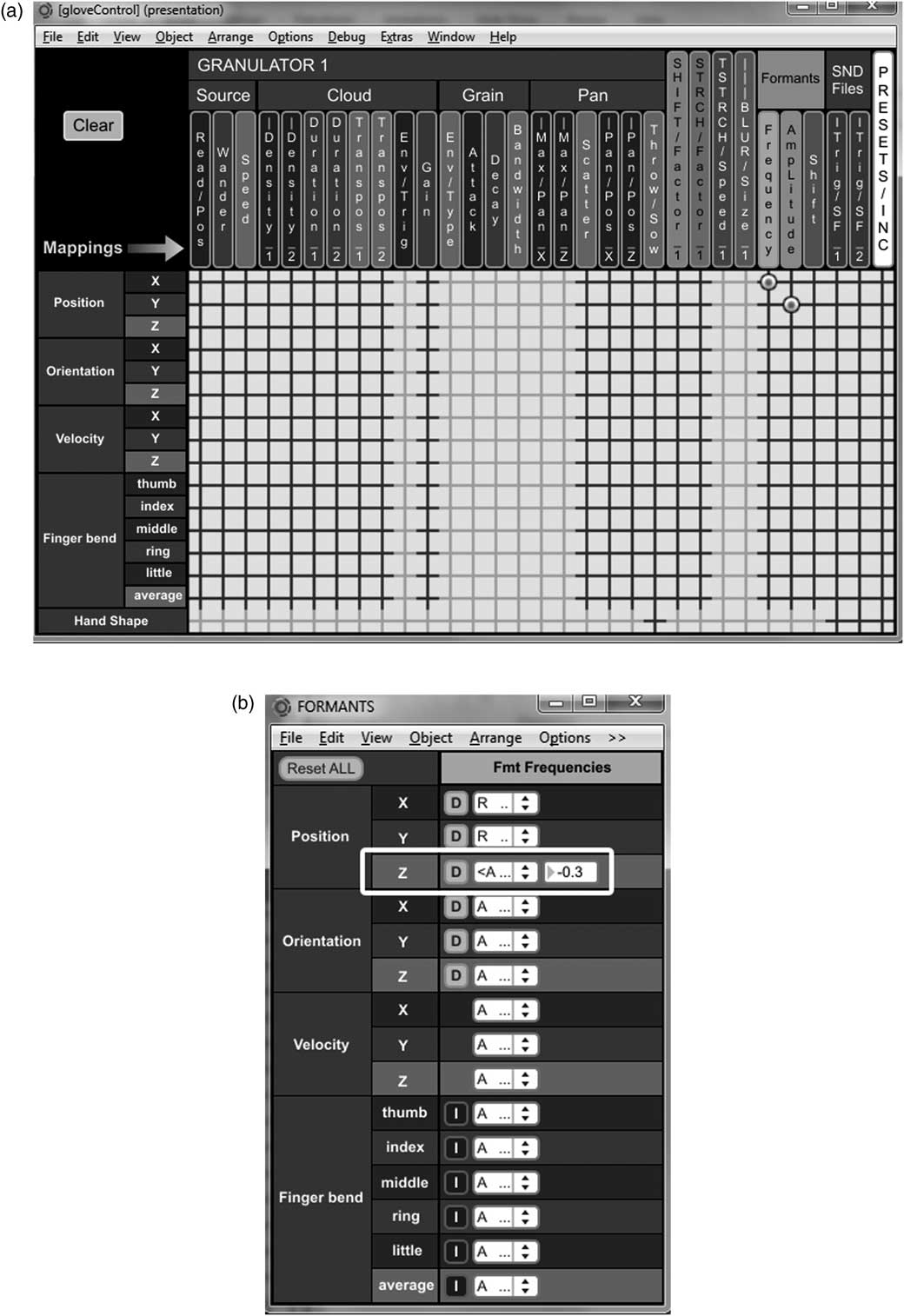

4.4.3. Perforating a veil (Movie example 3)

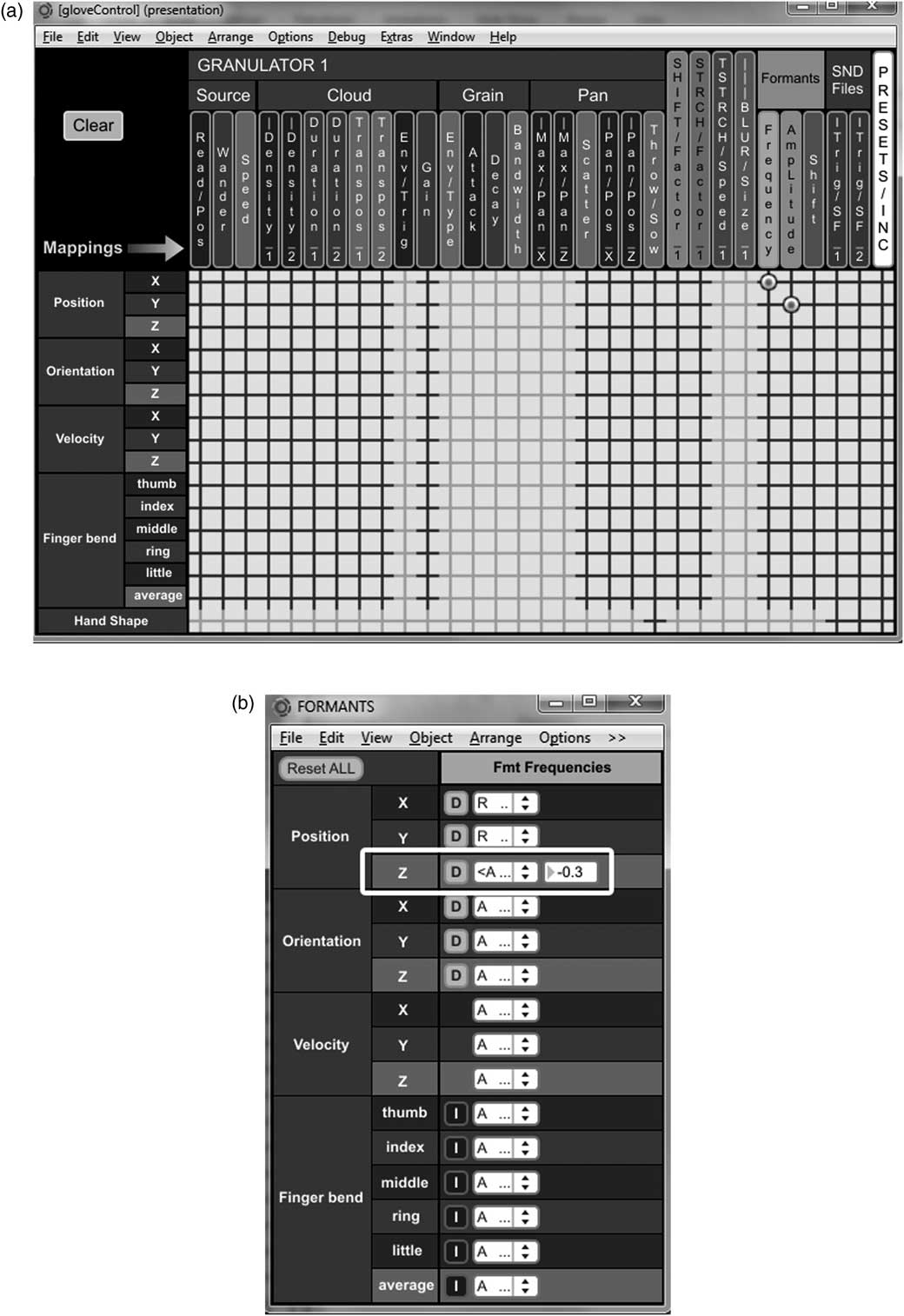

The metaphor here is an imaginary soft barrier that prevents sound from breaking through, analogous to a fragile veil that blocks the passage of light. The barrier consists of a bank of formants that stop all frequencies. But in the same way that a hand perforates a light-blocking veil, the performer punches holes in the formant filters, allowing frequencies corresponding to the horizontal position of the holes to break through (Figure 7). Frequencies ascend from left to right, and the height determines the amplitude of each corresponding frequency. Finally, the veil has a depth position specified by the Z axis: the hand penetrates the veil when its position is deeper than that of the veil,Footnote 36 which in this case is −0.3. Therefore, if the Z position of the hand is greater than −0.3, there will be no perforation and no new frequencies will be heard. Conversely, if the hand's depth is less than −0.3, a frequency proportional to its X position, with amplitude proportional to its Y position, will be heard.

Figure 7 Perforating a formant veil: the Z axis determines the position of the veil (z = −0.3).

Figure 8(a) shows the mappings for this example, connecting the X position to frequency and the Y position to amplitude. Figure 8(b) shows the condition for the mapping of position X, which will only take effect when the Z position is less than −0.3 (highlighted with a white rectangle). The same condition applies to mapping the Y position to amplitude.

Figure 8 Perforating a veil: (a) mapping; (b) conditions.
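The logic of this example can be sketched in Python as follows. The veil depth of −0.3 is taken from the text; the normalisation of X and Y to the range 0 to 1, the frequency band (`f_low`, `f_high`) and the linear frequency law are hypothetical choices made only to keep the sketch self-contained.

```python
VEIL_Z = -0.3  # depth of the veil along the Z axis, as in the example


def perforate(x, y, z, f_low=100.0, f_high=5000.0):
    """Return (frequency_hz, amplitude) for a hole, or None if no perforation.

    x -- horizontal position, assumed normalised to 0..1 (left to right)
    y -- height, assumed normalised to 0..1 and used directly as amplitude
    z -- depth; the hand pierces the veil only when z is less than VEIL_Z
    """
    if not z < VEIL_Z:
        return None  # the hand has not passed through the veil
    frequency = f_low + x * (f_high - f_low)  # frequencies ascend left to right
    return frequency, y


in_front = perforate(0.5, 0.8, -0.2)       # hand in front of the veil
low_hole = perforate(0.0, 0.5, -0.4)       # far left, moderate height
high_hole = perforate(1.0, 1.0, -0.5)      # far right, hand held high
```

The same depth condition gates both mappings, so frequency and amplitude always appear and disappear together.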

5. Musical Work: Ruraq Maki

Gesture is an essential component of music composition and is inextricably linked to the latter's intrinsic processes and necessities (Cadoz 1988). Thus the validity of a system for musical expression can only be corroborated by its effectiveness in the creation and performance of musical works. For this reason, the last stage of this project consisted of the creation and performance of a composition entitled Ruraq Maki (Fischman 2012).Footnote 37 MAES facilitated the construction of manual gestures by means of combinations of its mapping primitives, including the examples above. The implementation of tangible gestures allows the performer to generate and manipulate sounds, shaping the latter and interacting with MAES in a manner similar to that of videogame play, in which the technology is driven by the user according to rules that vary depending on the current state of the game – in other words, the musical work.

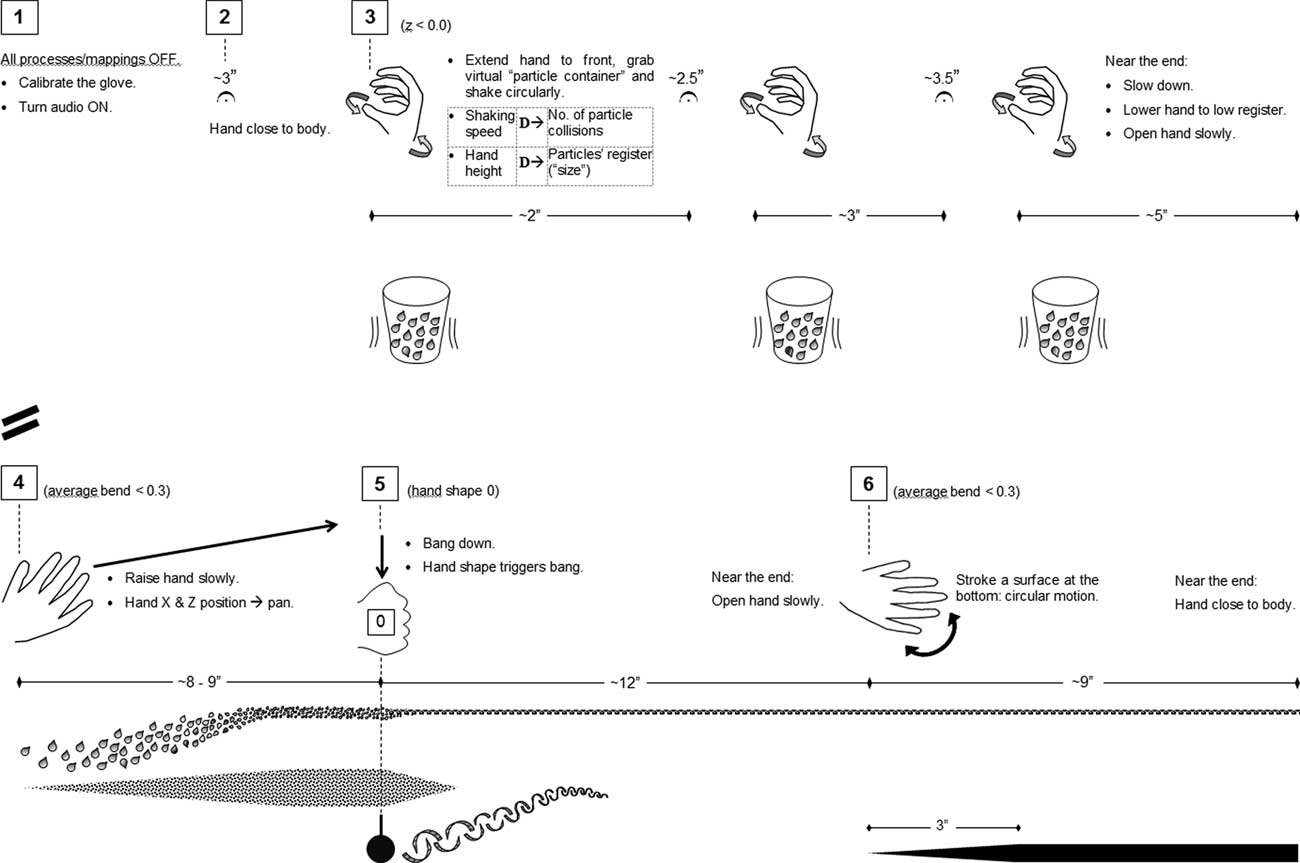

Ruraq Maki is pre-composed and performed according to a score (Figure 9). It is therefore repeatable and recognisable as the same composition from performance to performance in the same way scored music is, while allowing ample interpretative variety and including short sections in which the performer can improvise. Movie example 4 consists of a video recording and stereo mixdown of an eight-channel performance: the timings of the illustrative passages described below refer to this recording.

Figure 9 Ruraq Maki score, page 1.

Following this project's approach, the performing gestures remain simple: conscious attempts were made to keep these strongly rooted in cognitive maps of daily human activity and to link them to appropriate spectromorphologies, in order to preserve a connection between their causality and everyday experience of the world.Footnote 38 This concerns the establishment of relatively close levels of surrogacy between common-knowledge hand actions and their aural effect. Furthermore, such links are also extrapolated onto the hyper-real. For example, in the beginning of the piece (0′07′′–0′24′′, rehearsal mark 3 in Figure 9) a particle container is shaken using the same mappings as movie example 1 (section 4.4.1.). While the particles are shaken, the performer can make them exceed a humanly sized container to the proportions of the speaker system, increasing their spatial scatter by adding vertical movement to the shaking (i.e. shaking diagonally): the higher the hand the more scattered the particles. As a result, the perspective of the audience is shifted from a situation in which the particle container is viewed from a distance to being inside the container.

During the process of learning the work, it was discovered that the approach described above aided memorisation: the metaphors became a mnemonic device similar to those used by memorisation experts, providing a sequence of actions similar to a plot for the realisation of the work (Figure 9).

5.1. Performer control versus sonic environment

The paradigm of sound manipulation within a larger structured environment of independent sonic material was realised through three main mechanisms:

1. direct control of continuous processing parameters through performer gestures,

2. gestures triggering pre-composed materials, and

3. fully automated time-varying processes assuming an environmental role.

Also, ancillary gestures were scored in order to aid metaphor identification by audiences. Unlike functional gestures, these do not affect the mechanics of sound production. However, they enhance performance expression, fulfilling similar functions to gestures in other genres; for instance, rock guitarists’ exaggerated circular arm movements on downbeats, pianists’ use of the whole upper body to emphasise cadences, undulating movements when playing cantabile and so on.

5.1.1. Direct control of processing parameters

This is the most intimate level of control, directly implementing the metaphor of sound shaping and manipulation as a physical entity. Examples are found throughout the piece, including the case of shaking particles illustrated above. At 1′05′′–1′23′′ and 8′04′′–8′10′′ the fingers are used as if they were a metallic flap sweeping through a curved row of bars, producing a corresponding sound in which the loudness increases as the index is straightened, the speed of the sweep is controlled by the hand's left–right velocity and panning follows the position of the hand in the horizontal plane. At 2′51′′–3′14′′ the performer unfolds particles tentatively until they weld seamlessly into a single surface by stretching/bending the fingers (the surface becomes more welded as fingers stretch). This is accompanied by two ancillary gestures consisting of raising/lowering the hand and smooth motion in the horizontal plane leading eventually to a low palm-down position, as if stroking the welded surface. To realise this metaphor, average finger bend is mapped directly to granular transposition and scatter, and panning follows the position of the hand in the horizontal plane.

A structured improvisation section at 4′43′′–5′50′′ implements a gripping/scattering mechanism similar to that depicted in movie example 2 (section 4.4.2.), but applied to a processed quasi-vocal sample. Panning follows the position of the hand in the horizontal plane, which is clearly noticeable when there is minimum grain scatter (hand fully closed). The structure of the improvisation includes a plot through which a number of ancillary gestures are enacted: for instance, when attempting to grab the particles at the beginning a pitched buzz begins to form, at which point the performer releases the grip quickly as if reacting to a small electric shock; while panning the sound when it is firmly gripped, he follows the trajectory with the gaze as if displaying it to an audience, and so on.

A formant veil is pierced at 3′47′′–3′56′′, as in movie example 3 (section 4.4.3.); however, in this case, the veil is limited to the left half of the horizontal space by applying the additional condition x < −0.1 to the mappings of formant amplitude and frequency. At 4′20′′–4′37′′, wiggling the fingers controls the articulation of a rubbery granular texture through inverse mapping of finger-bend average to grain density: changing finger bend by wiggling changes the density and, because this is an inverse mapping, fully bending the fingers produces zero density, resulting in silence.

At 9′53′′–10′15′′ the performer gradually grabs a canopied granular texture, releasing fewer and fewer grains as the hand closes its grip until a single grain is released in isolation, concluding the piece. Here, average finger bend is mapped inversely to density, similarly to the case of the rubbery texture controlled by wiggling. However, because of the spectromorphology used (highly transposed, shortened duration grains) the resulting metaphor is different.

5.1.2. Triggering pre-composed materials

Triggered materials consist of pre-composed audio samples and/or processes generated via QLists. They bridge between directly controllable and fully automated processes, depending on the specific gestures and associated spectromorphologies chosen during the mapping process. For example, hitting gestures at 6′22′′–7′19′′ are easily bound into cause/effect relationships with percussive instrumental sounds, becoming direct manipulation of sonic material by the performer.Footnote 39 Conversely, the accompanying percussive rhythm beginning at 6′56′′ is fully environmental, since it is not associated with gestures that trigger a shaker (quijada) and, at 7′09′′, a cowbell (campana). However, there are no clear boundaries between fully automated and triggered situations. For instance, at 6′41′′ the performer hits an imaginary object above him, resulting in a strong attack continued by a texture that slowly settles into a steady environmental rhythm.Footnote 40 At 0′32′′ we encounter an intermediate situation between full controllability and environment: the simultaneous closure of the fist (functional gesture) and a downwards hit (ancillary gesture) trigger one of the main cadences used throughout the piece, consisting of a low-frequency bang with long resonance mixed with a metallic texture. The bang has a causal link to the performer's gesture but the texture does not, fulfilling an environmental role.

There is one type of mapping which is neither a trigger nor a continuous control paradigm: throw/sow implements momentum transfer from the user to audio grains, affecting their direction and velocity in the speaker space and simulating a throw. This happens at 7′37′′, 7′44′′ and 7′51′′: in the original surround performance grains are launched towards the audience, traversing the space towards the back until they fade in the distance.

The examples above illustrate compositional rather than technological design, emphasising gesture's role as object of composition. For instance, compare the previous example with the triggering of a high-pitched chime by means of a flicker at 3′19′′. If this flicker had triggered a low bang, or if a closed fist hit had triggered a chime, this would not only have affected musical meaning but also called for a different interpretation of the causality of the triggering gestures.Footnote 41

5.1.3. Fully automated processes

These sustain the interactive role of the technology reacting to the performer's actions: when a gesture advances a preset, the technology can initiate QLists and/or play pre-composed soundfiles. The accompanying percussive rhythm at 6′56′′ is an example of the latter, while an emerging smooth texture leading to a local climactic build-up at 0′24′′–0′32′′ is an example of a QList controlling both spectral stretchers. At 9′41′′, a QList controls the granulator to produce a texture that changes from longer mid–low-frequency grains to the canopied granular texture which is subsequently grabbed by the performer at 9′53′′.

6. Discussion

The realisation of Ruraq Maki suggests that MAES provides a reasonably robust approach, a necessary and sufficient range of tools and techniques, and varied mapping possibilities in order to create expressive gestures.

As expected from any system, MAES has shortcomings; specifically:

1. Tracking of orientation angles is inaccurate. Therefore, orientation mappings are only useful when they do not require precision; for instance, when we are more interested in a rough fluctuation of a parameter.

2. Slippage of the finger rubber bands: although using Velcro is reasonable, this could be improved significantly; for instance, using gloves made of stretching fabric that fit snugly on the hand. Nevertheless, it is worth remembering that the P5 was used in order to demonstrate that it is possible to implement an expressive system employing existing technology, with the expectation that future technological development will yield more accurate controllers, wireless communication and, eventually, more accurate tracking without bodily attached devices.

These shortcomings do not prevent MAES's usage by other composers and performers. The software is available under a public licence both as executable and as a source for further adaptation, modification and development. Although MAES is optimised for use with a digital controller, the synthesis and processing implementation can fully function without it. Furthermore, the patch allows straightforward replacement of the external object P5GloveRF controlling the glove by other MAX objects, such as objects that capture the data of other controllers. It is hoped that this will offer a wide range of possibilities which enable music-making within a wide spectrum of aesthetic positions, hopefully contributing to the blurring of popular/art boundaries and challenging this traditional schism.

7. Future Developments

MAES is the first stage of a longer-term research strategy for the realisation of Structured interactive immersive Musical experiences (SiiMe), in which users advance at their own pace, choosing their own trajectory through a musical work but having to act within its rules and constraints towards a final goal: the realisation of the work. A detailed description of the strategy is given in Fischman (2011: 58–60), but its main premises follow below.

Firstly, in order to enable non-musically trained individuals to perform with MAES, it will be useful to implement a videogame score that provides performance instructions through the rules and physics of videogames. For instance, it is not difficult to imagine an interactive graphical environment that prompts the actions required to perform the beginning of Ruraq Maki (Figure 9), requiring the user to reach a virtual container and shake it in a similar manner to movie example 1 (section 4.4.1.). This would also provide visual feedback which, although less crucial in the case of continuous control (Gillian and Paradiso 2012), would be extremely valuable in the case of discrete parameters and events, including thresholds and triggers.

Further stages of development include:

• increasing the number and sophistication of the audio processes and control parameters available,

• expanding to other time-based media (e.g. visuals, haptics),

• enabling the system to behave according to the particular circumstances of a performance (e.g. using rule-based behaviour or neural networks),

• implementing a generative system that instigates musical actions without being prompted (e.g. using genetic algorithms),

• implementing a multi-performer system incorporating improved controllers and devices,Footnote 42 and

• evolving the mechanics of musical performance by blurring the boundaries between audiences and performer, including music-making between participants in virtual spaces, remote locations,Footnote 43 and so on.

Acknowledgements

This project was made possible thanks to a Research Fellowship from the Arts and Humanities Research Council (AHRC), UK; grant No. AH/J001562/1. I am also grateful to the reviewers for their insightful comments, without which the discussion above would lack adequate depth and detail, and the contextualisation of this project would have suffered.