1. Introduction

From the eyes of a child, each day is an adventure with a million things to discover and learn. For example, during language learning, children soon discover that the speech stream consists of phonologically distinct units that we call words. Children typically learn words in settings in which a multitude of sensorimotor information co-occurs with the spoken word. For instance, when a child hears the word cat, information from various senses is available: the child sees the cat and maybe also sees a parent who is pointing at the cat, the child might bend down to touch the cat, and the cat might meow. Such vivid examples are often brought forward in the literature on embodied language understanding, and the underlying ideas have been specified, for example, in the Experiential-Trace-Model (Zwaan & Madden, 2005). According to this model, sensorimotor experiences that co-occur with a word become closely associated with it and are later reactivated whenever the respective word is processed, even in contexts where no referent is present. From this perspective, sensorimotor experiences build the core representation underlying language comprehension. To our knowledge, there is currently no evidence that the connection between words and word-specific sensorimotor experiences is already established during initial word learning. If associations between words and sensorimotor processes are crucial for language comprehension in general, these associations should not just be a by-product of years of co-occurrence but should also arise during early word learning. In the current study we investigate the assumption that sensorimotor processes become associated with words from the start of the process of word learning. Such evidence would have direct implications for the cognitive processes underlying word learning during language development.

In the framework of embodied language understanding, many studies have investigated the role of sensorimotor processes during language comprehension. In one of the classical studies, Stanfield and Zwaan (2001) presented participants with sentences that were followed by a picture depicting an object. Participants were faster in deciding whether an object had been mentioned in the previous sentence when the orientation of the object in the picture and the orientation of the object implied by the sentence were congruent. Thus, after reading a sentence such as He hammered a nail into the wall, participants were faster at identifying a picture of a nail in horizontal orientation compared to a nail in vertical orientation. One explanation for this result is that experiential traces became reactivated during language comprehension (Zwaan & Madden, 2005). More specifically, while constructing a meaning representation for the sentence, participants retrieved sensorimotor (visual) experiences associated with the described object (i.e., a nail in horizontal orientation). If this was consistent with the object in the picture, the decision process was facilitated and response times were fast. If the retrieved experiences were inconsistent with the picture, then the decision process was hindered and response times were slow. Many studies (mostly investigating L1 language comprehension in adults) support the conclusion by Stanfield and Zwaan (2001) (for reviews, see Fischer & Zwaan, 2008; Willems & Casasanto, 2011).
Further, it could be shown that experiential traces are reactivated automatically during language comprehension rather than under specific task conditions only (e.g., Boulenger, Silber, Roy, Paulignan, Jeannerod, & Nazir, 2008; Dunn, Kamide, & Scheepers, 2014; but see Bub, Masson, & Cree, 2008; Lebois, Wilson-Mendenhall, & Barsalou, 2015). However, this does not exclude the possibility that these traces become associated with specific words only after years of co-occurrence. In other words, it is possible that we initially learn words in a fully amodal manner, but as the words typically co-occur with sensorimotor experiences over many years of our life, these experiences finally become interconnected with the respective words at a rather late stage of language development. There is evidence indicating that children at the age of seven to thirteen years already show associations between words and sensorimotor experiences (Engelen, Bouwmeester, de Bruin, & Zwaan, 2011). However, at that age children are already advanced language users, and it therefore remains unclear at what point during the language learning process these associations were formed.

Recently, several studies have investigated the association between sensorimotor experiences and words in a second language (L2) (e.g., Bergen, Lau, Narayan, Stojanovic, & Wheeler, 2010). For example, Jared, Pei Yun Poh, and Paivio (2013) presented highly proficient bilinguals with objects that looked different in the L1 and the L2 country, such as a postbox in China and a postbox in Canada. Participants had to name the object in either their L1 or their L2. Objects were named faster when the language in which the object was named matched the picture (e.g., English language and Canadian postbox) compared to when language and picture mismatched (e.g., English language and Chinese postbox). This finding suggests that experiential traces also become reactivated during L2 comprehension. Participants in this study had been living in the L2 country for several years and had thus acquired their second language in a context where sensorimotor information was available. Thus, even when participants do not learn a language from childhood, it seems that words become associated with specific sensorimotor traces. Additional evidence for an involvement of experiential traces in L2 comprehension was provided by de Grauwe, Willems, Rueschemeyer, Lemhöfer, and Schriefers (2014), who showed that sensorimotor areas in the brain were activated during both L1 and L2 processing. Finally, Dudschig, de la Vega, and Kaup (2014) showed that spatial experiences with a word’s referent also become automatically activated during L2 comprehension. It can thus be concluded that experiential traces appear to be automatically involved in both L1 and L2 comprehension.
These findings, however, leave open the question of whether the experiential traces involved in L2 comprehension were newly formed during L2 acquisition or whether they were transferred from L1. In addition, the reported studies again leave open whether sensorimotor experiences become associated with words early on during language learning, because participants were rather advanced comprehenders who had many years of L2 experience. To tease apart these two possibilities, one would need to investigate the reactivation of experiential traces during comprehension of linguistic stimuli referring to novel entities, since experiential traces cannot be transferred in this case.

A first step towards a better understanding of the role of sensorimotor information in initial word learning was provided by a study by Richter, Zwaan, and Hoever (2009). Adult participants learned artificial words referring to novel objects in a computer-learning task. After that, participants were presented with familiar and new artificial words and had to respond to the words from the learning phase only. Crucially, artificial words were preceded either by the corresponding object, by a novel object that looked very similar to the corresponding object, or by a neutral object. Results showed that only the corresponding object facilitated the recognition of the artificial word. The authors interpreted this finding as evidence for associations between words and experiential traces at an early stage during word learning. It should be noted, however, that the effect obtained in this study could also be explained by response facilitation due to an internal labeling of the objects. The reason is that participants always first saw the object and then the word. In order to allow conclusions about the involvement of experiential traces in initial word learning, the word should automatically activate sensorimotor traces, rather than the object facilitating the activation of the word. Such a finding can be considered crucial for any model of language comprehension that is based on the assumption that experiential sensorimotor information is involved in language comprehension.

The current study investigates whether acquiring new words in a natural, multisensory fashion gives rise to associations between these words and sensorimotor experiences that are automatically retrieved during language comprehension. For abstract concepts, it has already been shown that a short session of training in the lab is sufficient for reversing lifelong established associations between valence and space (Casasanto & Chrysikou, 2011). We taught participants labels of eight novel objects in an interactive word learning task. Participants experienced half of the objects in the upper and the other half in the lower visual field. In a learning phase, participants learned the words in an interactive manner, by looking upward or downward and pointing upward or downward. In the test phase, participants performed a Stroop-like task which was similar to the task applied in previous studies (Borghi, Glenberg, & Kaschak, 2004; Dudschig et al., 2014; Lachmair, Dudschig, De Filippis, de la Vega, & Kaup, 2011). The newly learned words were presented in a color, and participants had to respond to the color of the words by performing either an upward or a downward arm movement. Thus, the word itself was not relevant for the experimental task. If sensorimotor information is indeed associated with the newly learned words during an early learning phase, then participants should be faster in responding to the word’s color in the Stroop-like test phase when the color demands a response movement compatible with the typical location of the word’s referent. This would provide direct evidence for the central assumption underlying embodied models of language comprehension that sensorimotor experiences become associated with linguistic expressions during initial language learning.

2. Methods

2.1. Participants

Twenty-five right-handed participants took part in the experiment for course credit or a financial reimbursement of 8 euro/hour. One participant had to be excluded due to computer malfunction. The remaining twenty-four participants (20 female, 4 male) were on average 21.33 years old (range: 18–32).

2.2. Stimulus material

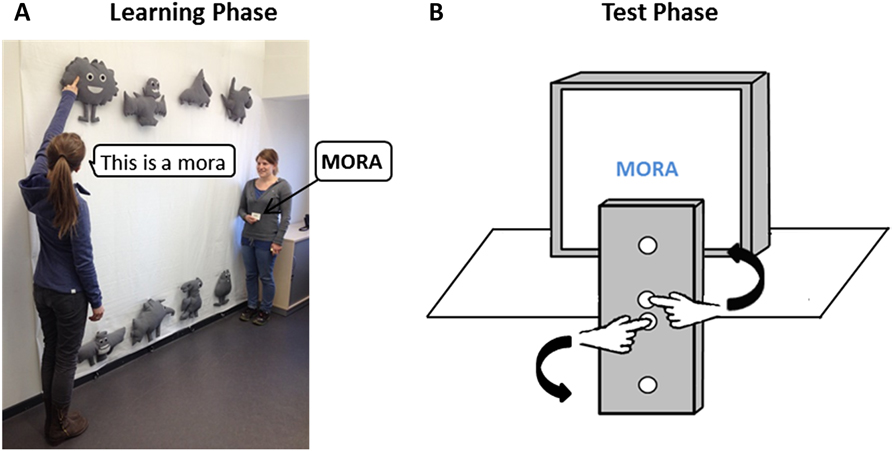

Eight artificial 2-syllable words were used in this study (see Table 1). To ensure that these words did not have any association with a spatial location, a control experiment was conducted prior to the experiment. A group of participants who did not take part in the actual experiment (N = 24) performed the Stroop-like task of the test phase without learning the meaning of the words by means of the interactive word learning task in the learning phase (see below). Results confirmed that, without prior learning, participants did not associate the artificial words with a spatial location (interaction: F(1,23) = 1.20, p = .29; both main effects: F < 1; means for down words: M = 595 ms for downward responses, M = 601 ms for upward responses; means for up words: M = 599 ms for downward responses, M = 594 ms for upward responses). The artificial words were presented in capital letters on paper cards in the learning phase and on the computer screen in the test phase. Each word was randomly assigned to an object which was realized as a stuffed animal. Stuffed animals were made of grey fabric with black and white faces and were approximately 25–38 cm wide and 24–50 cm high (see Table 1). Care was taken that the objects did not correspond to a lexical concept in the native language of the participants. For that reason, objects could not be associated with a spatial location prior to the experiment. Each object was randomly assigned to a spatial location on a wall. Four of the objects were attached to a wall in a spatially upper location (2.07–2.15 m from the floor), the other four objects were attached to the same wall in a spatially lower location (0.35–0.45 m from the floor) (see Figure 1A). All participants acquired the same four objects in the upper plane and the same four objects in the lower plane on the wall. Participants could reach the objects in both locations by bending down or stretching.

Table 1. Stimulus material of the study

Fig. 1. Experimental set-up of the learning phase (A) and the test phase (B).

2.3. Procedure

The experiment contained a learning phase and a test phase. The procedure of the two phases will be described separately in what follows.

2.3.1. Learning phase

In the learning phase, the experimenter first introduced all objects to the participant by presenting a card with the corresponding name with one hand while pointing at and touching the object with the other hand. Simultaneously, the experimenter named the respective object by saying “This is a x”, where x corresponds to the respective object name (see Figure 1A). The order of introducing the objects was randomized for both the introductory round and each of the following four learning cycles. The third and the fourth learning cycle were separated by another task (adjective generation). In the adjective generation task, participants were asked to write down adjectives they associate with studying at the university, either with their dominant hand or with their non-dominant hand. This was done to separate the word learning phase and the test phase in time and to extend word learning over task-irrelevant content. In addition, we aimed at distracting participants in order to conceal the purpose of the study. In each learning cycle, the same four objects were placed in the upper location and the other four objects in the lower location. Over all learning cycles, each of the objects occurred once in each of the four possible locations (in the upper or in the lower plane). In each learning cycle, the experimenter showed one name card after another to the participant, who was asked to imitate the behavior of the experimenter from the introductory phase (i.e., pointing at and touching the object while saying “This is a x”; see Figure 1A). Participants received performance feedback: in the case of a wrong or no response, the experimenter touched the correct object and named it correctly by saying “This is a x”. This procedure was repeated three times within each learning cycle, and then the participant was asked to name the objects in free order without first seeing a card. The experimenter gave verbal feedback as to whether or not the answer was correct.
After that, the participant was asked to briefly leave the room, and the position of the objects on the wall was changed for the next learning cycle. The learning phase took approximately 20–30 minutes. Throughout the whole experiment, the experimenter did not use any words associated with spatial positions, in order to prevent the newly learned words from becoming lexically associated with words referring to spatial locations (Goodhew, McGaw, & Kidd, 2014).

2.3.2. Test phase

In the test phase, the words from the learning phase were presented in a Stroop-like paradigm. Words were presented in the center of the screen in one of four colors (blue, red, green, orange), and participants responded to the color of the word on a self-constructed response box which was vertically mounted on the table (see Figure 1B). Participants were instructed that two colors (e.g., red and green) required an upward arm movement; the other two colors (e.g., orange and blue) required a downward arm movement. Thus, the font color was the stimulus attribute that indicated the direction of the response. Importantly, unlike in the classic Stroop task, in which participants name the font color of a color word (Stroop, 1935), the font color was not in conflict with the meaning of the word. The assignment of colors to upward or downward responses was balanced between participants. To start a trial, participants kept the two middle buttons pressed with the right and the left hand, respectively (see Figure 1B). After 1000 ms, a fixation cross was presented for 750 ms, which was followed by the central presentation of a word in one color until the participant initiated a response. An upward movement required participants to release the upper middle button and press the upper button; a downward movement required them to release the lower middle button and press the lower button. Response times were measured as the time participants took to release one of the middle buttons (see Lachmair et al., 2011). If no response occurred within 1500 ms, the trial was aborted and participants received the message “too slow”. If the wrong key was released, participants received the feedback “Error”. Each word occurred eight times in each color and was thus presented thirty-two times to the participant. The experiment was divided into eight blocks; in each block, all eight words were presented four times.
To familiarize participants with the task and the response device, each participant completed sixteen practice trials. Here participants acquired the color-to-response mapping by responding to letter strings (xxxx vs. yyyy) that appeared in one of the four colors. Performance feedback was provided. Participants completed the test phase within approximately 20 minutes. To obtain information on whether participants still knew the names of the objects after the test phase, they were asked one more time to freely name all objects.
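The trial structure described above (eight words, four colors, eight blocks) can be sketched as follows. This is an illustrative reconstruction, not the authors' experiment script: the placeholder word labels, the `build_blocks` function, and the assumption that each word appears once in each color within a block are hypothetical; the text states only that each word occurred four times per block and eight times in each color overall.

```python
import random
from collections import Counter

# Placeholder labels; the actual artificial words are listed in Table 1.
WORDS = [f"word{i}" for i in range(1, 9)]
COLORS = ["blue", "red", "green", "orange"]
N_BLOCKS = 8


def build_blocks(seed=0):
    """Return 8 blocks of 32 (word, color) trials in random order.

    Assumption: within a block every word is shown once in each color,
    which yields 4 presentations per word per block.
    """
    rng = random.Random(seed)
    blocks = []
    for _ in range(N_BLOCKS):
        block = [(word, color) for word in WORDS for color in COLORS]
        rng.shuffle(block)
        blocks.append(block)
    return blocks


blocks = build_blocks()
all_trials = [trial for block in blocks for trial in block]
per_word = Counter(word for word, _ in all_trials)  # presentations per word
per_word_color = Counter(all_trials)  # presentations per word-color pair
```

Under these assumptions each word is presented 32 times in total and eight times in each color, matching the counts reported in the text.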

2.4. Design

A 2-by-2 within-subjects design with the factors referent location (up vs. down) and response direction (upward vs. downward) and the dependent variable reaction time was deployed in this study.
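In a 2-by-2 design of this kind, the interaction can be summarized as a compatibility effect: the mean reaction time on incompatible trials minus the mean reaction time on compatible trials. The sketch below illustrates this with the cell means of the control experiment reported in Section 2.2. The function name and the dictionary layout are illustrative assumptions, and the computation is the generic contrast for a 2-by-2 design rather than the authors' analysis code.

```python
# Cell means (ms) from the control experiment in Section 2.2,
# keyed by (referent location, response direction).
control_means = {
    ("down", "down"): 595.0,
    ("down", "up"): 601.0,
    ("up", "down"): 599.0,
    ("up", "up"): 594.0,
}


def compatibility_effect(cell_means):
    """Mean RT of incompatible cells minus mean RT of compatible cells."""
    compatible = [rt for (loc, resp), rt in cell_means.items() if loc == resp]
    incompatible = [rt for (loc, resp), rt in cell_means.items() if loc != resp]
    return sum(incompatible) / len(incompatible) - sum(compatible) / len(compatible)


effect = compatibility_effect(control_means)
```

For the control data this yields a difference of 5.5 ms, which the reported interaction test (F(1,23) = 1.20, p = .29) did not find to be significant.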

3. Results

In the learning phase, learning performance was quantified by the number of correctly named objects in each learning cycle (see Table 2). A Wilcoxon test indicated that participants performed significantly better in Learning Cycle 4 compared to Learning Cycle 1 (Z = –3.74, p < .001, r = –0.54). This suggests that participants acquired the names of the objects prior to the test phase.
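The reported effect size is consistent with the common convention r = Z / √N, where N is the total number of observations (here assumed to be 24 participants × 2 learning cycles = 48). The text does not state which formula was used, so the following is only a plausibility check under that assumption; the function name is illustrative.

```python
import math


def wilcoxon_effect_size(z, n_observations):
    """Effect size r = Z / sqrt(N) for a Wilcoxon signed-rank test."""
    return z / math.sqrt(n_observations)


# Assumption: N counts both paired samples (24 participants x 2 cycles).
r = wilcoxon_effect_size(-3.74, 24 * 2)
```

Rounded to two decimals, this gives r = –0.54, in line with the value reported above.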

Table 2. Results of the learning phase

In the test phase, reaction times were analyzed using a repeated measures Analysis of Variance (ANOVA) with the factors referent location (up vs. down) and response direction (upward vs. downward) (see Figure 2). Incorrect trials (< 3%) were excluded from the analysis. The analysis showed no main effect of referent location (F < 1), nor of response direction (F < 1). However, the interaction between referent location and response direction was significant (F(1,23) = 7.00, p < .05, partial η² = 0.23), indicating that the reaction times for upward or downward responses were influenced by the location of the referent (see Figure 2). Planned follow-up one-tailed paired t-tests indicated a significant difference between an upward and a downward directed response for the referent location up (t(23) = 1.82, p < .05, r = 0.36), but not for referent location down (t(23) = –0.62, p = .27).
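The effect sizes reported here can be approximately recovered from the test statistics and their degrees of freedom. The sketch below uses the standard conversion formulas; the function names are illustrative, and it is an assumption that the authors used these particular formulas.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def r_from_t(t_value, df):
    """Effect size r = sqrt(t^2 / (t^2 + df)) for a t-test."""
    return math.sqrt(t_value**2 / (t_value**2 + df))


# Interaction of referent location and response direction in reaction times.
eta_interaction = partial_eta_squared(7.00, 1, 23)
# Follow-up test for referent location up.
r_up = r_from_t(1.82, 23)
```

The first value rounds to 0.23, as reported. The second comes out at roughly 0.35, slightly below the reported 0.36; the difference presumably reflects rounding of the published t value.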

Fig. 2. Results from the Stroop-like task in the test phase. Mean reaction times and mean error rate for upward responses (white) and downward responses (grey) after a down word or an up word. Error bars represent the 95% confidence intervals for within-subject comparisons after Loftus and Masson (1994).

Additionally, arcsine-transformed error percentages were analyzed in a 2-by-2 ANOVA with the factors referent location (up vs. down) and response direction (upward vs. downward). The analysis revealed a significant main effect of response direction (F(1,23) = 8.16, p < .01, partial η² = 0.26). Participants made more errors in trials that required a downward movement than in trials that required an upward movement. There was a trend towards a main effect of referent location (F(1,23) = 3.35, p = .08), but importantly no significant interaction between response direction and referent location (F(1,23) = 1.21, p = .28). Thus, we can exclude an explanation based on a speed–accuracy trade-off.
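Error rates are bounded and their variance depends on their mean, which is why they are often transformed before being entered into an ANOVA. A common variant is the arcsine square-root transform; the text does not specify the exact variant used, so the following is a sketch under that assumption, with an illustrative function name.

```python
import math


def arcsine_transform(error_percentage):
    """Arcsine square-root transform of an error rate given in percent."""
    proportion = error_percentage / 100.0
    return math.asin(math.sqrt(proportion))


# Example inputs: 0%, 25% and 100% errors map onto 0, pi/6 and pi/2 radians.
transformed = [arcsine_transform(p) for p in (0.0, 25.0, 100.0)]
```

The transform stretches values near 0% and 100%, where raw percentages are most compressed, while leaving the ordering of the error rates unchanged.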

4. General discussion

One central assumption underlying embodied models of language comprehension is that words become associated with sensorimotor experience during language learning. This study aimed at providing evidence for this hypothesis by investigating whether the presence of sensorimotor information during word learning results in associations between words and sensorimotor experience early in the learning process. Adult participants acquired artificial words referring to novel objects in a multimodal manner. Results showed that participants’ response to the color of a newly learned word in a Stroop-like task was influenced by the sensorimotor experience participants had made with the referent in the learning phase of the experiment. More specifically, the spatial location in which the referents were experienced during word learning influenced the time participants needed to initiate an upward or a downward directed response to the color of the corresponding words in the Stroop-like task. Thus, we observed a small but significant compatibility effect between referent location and response direction. Follow-up tests showed that up-words led to faster upward responses as compared to downward responses; for the down-words no difference between upward and downward responses became evident. The observation that the upper referent location but not the lower referent location significantly affects response direction is in line with previous findings by Dudschig, Souman, Lachmair, de la Vega, and Kaup (2013) and Dunn and colleagues (2014). The main result, that is, the interaction between the location of a word’s referent and response direction, suggests that sensorimotor experience becomes associated with words not only after years of co-occurrence but already after initial word learning.
In studies investigating adult language comprehension, such language–space interactions are typically interpreted as evidence for rather automatic associations between language and sensorimotor experience (Lachmair et al., 2011). Our finding fits nicely with evidence from cognitive neuroscience showing that motor areas in the brain are active during the processing of words which have previously been associated with motion events (Fargier, Paulignan, Boulenger, Monaghan, Reboul, & Nazir, 2012). It is further compatible with Casasanto and Chrysikou (2011) in demonstrating that a short training session can alter associations between abstract concepts, such as valence, and space. Our study suggests that sensorimotor experiences become associated with the meaning representation already during initial word learning in expert language users. Remarkably, associations between words and sensorimotor experiences showed after 20–30 minutes of word learning in a Stroop-like task that did not even require participants to actively read, evaluate, or categorize the words. This finding is of importance for any model of language comprehension that proposes that sensorimotor information builds the basis for language comprehension. Nevertheless, we would like to stress that this does not ultimately exclude the possibility that abstract processes are (additionally) involved in the process of language comprehension (e.g., Mahon, 2014; Mahon & Caramazza, 2008).

In the current study, participants acquired novel words in the absence of contextual sentence information. That means that participants could not build associations between the novel words and co-occurring words that referred to an upper or lower spatial location during word learning. For that reason, our finding provides evidence for the idea that sensorimotor information affects word processing beyond the influence of language statistics (Louwerse, 2008). However, we cannot exclude the possibility that participants internally used spatial terms while learning the novel words. What we can exclude is that our results are due to years-long co-occurrence of these words with spatial words in the language input (Goodhew, McGaw, & Kidd, 2014; Hutchinson & Louwerse, 2013; Louwerse, 2008; Louwerse & Jeuniaux, 2010). An interesting line of research for future studies would be to investigate whether novel words embedded in sentences (e.g., mora lives in trees) are learned by associating them with sensorimotor experiences from the surrounding words. In addition, it would be interesting to see whether our effects would replicate in a study in which participants are hindered from using spatial terms during encoding, possibly by having them constantly verbalize a particular phrase (e.g., “This is mora”).

However, there are open questions regarding our study and, more generally, compatibility effects in the literature on embodied cognition which we would like to address. With respect to our study, one issue that needs to be discussed is participants’ awareness of the critical manipulation in this experiment. Since we included a distractor task in the learning phase of our study, and since previous studies speak against the idea that participants are aware of the association between response direction and referent location in Stroop tasks (e.g., Lachmair et al., 2011), we think that participants’ awareness is a rather unlikely explanation for the obtained results. A more general problem with regard to all studies implementing these sorts of Stroop-like tasks concerns the type and format of the processes that are involved in these tasks, and that are potentially measured. In our experiment, several aspects of the sensorimotor experience with the referents in the learning phase might have become reactivated during the processing of the respective words later on: visual spatial information, the action of looking up or down, the action of moving the arm to touch the object, an image schema, or possibly even an abstract amodal representation of spatial location. Thus, the nature of the reactivated spatial information remains unclear, and future studies are clearly needed to better understand the exact type of sensorimotor information that becomes reactivated in Stroop tasks. A related question for further investigation is whether the spatial information only becomes associated with the (newly created) meaning representation of the word or whether it actually becomes incorporated into the representation.
In any case, findings of the current study demonstrate that sensorimotor experience influences the processing of words after initial word learning in an automatic manner.

Another issue that is often ignored in the literature reporting compatibility effects is the relevance of these language–space associations for language comprehension. Our study simply shows that sensorimotor information becomes activated during word reading. However, this does not directly imply that this activated information helps or is even needed for the comprehension process itself. In this context, it would be of great interest to investigate whether a certain amount of sensorimotor information is required for stable word learning. Findings for vocabulary learning by Mayer, Yildiz, Macedonia, and von Kriegstein (2015), along with other evidence (for a review, see Macedonia, 2014), suggest that learning with gestures and an active involvement of the learner’s body leads to superior learning and retention performance compared to learning with, for example, pictures only. It would be interesting to see whether similar effects can be obtained for the acquisition of new word–object relationships. Specifically, it would be necessary to investigate whether, in situations where participants are hindered in their reactivation of these sensorimotor associations, word comprehension would be worse than in situations where they are allowed to reactivate these associations. Related to this idea, future research should address the time course of the observed effect. Our study showed that sensorimotor information was associated with a word right after the embodied learning of the word–object relationship. It would be interesting to see whether these associations are stable over time and also play a role after longer periods of time (e.g., 2 months after the initial word learning phase).

Our main finding directly leads to several intriguing questions in the broader context of language learning and the theoretical framework of embodied cognition. For example, it would be interesting to investigate whether some very specific aspects of the sensorimotor information that was available to the participants in our learning situation were crucial for this early association between words and sensorimotor experiences. One potentially important factor is the pointing participants had to perform in the learning phase. Pointing can be conceptualized as a deictic gesture, which has been shown to aid L1 acquisition (e.g., Cartmill, Hunsicker, & Goldin-Meadow, 2014; Iverson & Goldin-Meadow, 2005), and the role of gestures during language processing is emphasized within the framework of embodied cognition (Hostetter & Alibali, 2008; Macedonia, 2014; Pouw, De Nooijer, van Gog, Zwaan, & Paas, 2014). As mentioned above, evidence for the idea that iconic gestures support word learning in adults has been recently provided by Mayer and colleagues (2015). In this study, vocabulary learning along with performing iconic or symbolic gestures not only resulted in robust learning and retention effects but also recruited brain areas related to motor activity. Based on these findings for iconic gestures, it could be the case that deictic gestures were of special importance for establishing an association between sensorimotor experiences and new words referring to novel objects in our study.

Another important aspect of our study is that sensorimotor experience with the referents was primarily gained in the dimension of (vertical) space during word learning. Thus, we cannot say whether all sorts of sensorimotor experiences become associated with words during word learning, or whether there are more and less relevant experiences, whereby only experiences classified as relevant become associated with the words. Indeed, various studies show that infants have a concept of vertical space very early during development (Bowerman, 1996; Needham & Baillargeon, 1993). This suggests that vertical space is a very relevant and fundamental dimension for human beings. In this light, our results show that sensorimotor experience in a potentially generally relevant dimension becomes reactivated after initial word learning. For future studies it would be of great interest to investigate whether other sorts of experiences that might be less relevant also become as quickly associated with words during initial word learning. Related to that, it would be important to investigate what makes an experience relevant or less relevant during word learning. For example, lexical context could determine what experience becomes relevant during word learning. In the current study we fully avoided using verbal labels classifying the vertical spatial dimension during the word learning phase. However, because we have such clear verbal labels for classifying the vertical spatial dimension, this dimension might have become generally relevant to us. Based on the findings of the current study, it would certainly be interesting to further investigate how relevant associations are dissociated from irrelevant associations when acquiring language.

A third question that derives from our findings is whether children during language development associate sensorimotor experiences with words during word learning. Findings from our study provide evidence for the idea that adult language learners associate sensorimotor experience with words. Thus, we show that expert language users build associations between words and sensorimotor information after a short period of multimodal word learning. Whether this also holds for children during language development is an open question. Findings by Engelen and colleagues (2011) indicate that children in elementary school reactivate experiential traces during language comprehension. In line with that, a recent study by Hald, van den Hurk, and Bekkering (2015) showed that seven- to eight-year-old children learned motion verbs better with motion animations than with pictures that depicted the motion. Since children at that age can already be seen as proficient users of their L1, it would be of great importance to assess the role of sensorimotor information during language comprehension in younger children and infants. Related to the acquisition of experiential traces, Yu and Smith (2012) showed that children at the age of 1.5 years learned words corresponding to new objects through embodied attention. With this term, the authors refer to situations in which the child exclusively attends to one object, such that multisensory information related to the new object can be associated with the label that the parent provides for the respective object. Thus, existing evidence suggests that children learn words along with sensorimotor information, and our study suggests that this ability to quickly associate words with sensorimotor information is not lost in adult language users. However, future research is clearly needed to better understand the role of sensorimotor information during first language acquisition.

5. Conclusion

We showed that sensorimotor experiences that were associated with the referent of a word during word learning subsequently became reactivated when the word was processed in a situation in which no referent was present. Thus, this study provides direct evidence for the assumption underlying the Experiential-Trace-Model that associations between linguistic representations and sensorimotor experiences are not built only after years of co-occurrence but rather play a role in language processing right from the start.