Introduction

The ability to perform activities in everyday life depends on cognitive abilities such as attention, episodic memory, executive abilities, and prospective memory (Mlinac & Feng, 2016). The neuropsychological assessment of these cognitive abilities benefits from an ecologically valid approach to better understand the quality of an individual’s everyday functioning (Chaytor & Schmitter-Edgecombe, 2003). Ecological validity increases the probability that an individual’s cognitive performance will reflect how they would respond in real-life situations (Bailey, Henry, Rendell, Phillips, & Kliegel, 2010; Burgess et al., 2006; Chaytor & Schmitter-Edgecombe, 2003).

Verisimilitude and veridicality are the two predominant approaches for achieving the ecological validity of neuropsychological tests (Franzen & Wilhelm, 1996; Chaytor & Schmitter-Edgecombe, 2003; Spooner & Pachana, 2006). Verisimilitude refers to the degree to which a neuropsychological test resembles the complexity and cognitive demands of everyday tasks (Franzen & Wilhelm, 1996; Chaytor & Schmitter-Edgecombe, 2003; Spooner & Pachana, 2006). Veridicality refers to the strength of the relationship between the outcomes of neuropsychological tests and measures of everyday functioning (e.g., questionnaires pertinent to everyday functioning and independence; Franzen & Wilhelm, 1996; Chaytor & Schmitter-Edgecombe, 2003; Spooner & Pachana, 2006). While both approaches have their merits, the literature suggests that tests developed using the verisimilitude approach may be better predictors of real-world memory and attention (Higginson, Arnett, & Voss, 2000), executive functioning (e.g., multitasking, planning, and mental flexibility; Burgess, Alderman, Evans, Emslie, & Wilson, 1998), and prospective memory abilities (e.g., remembering to initiate a planned action in the future; Haines et al., 2019; Phillips, Henry, & Martin, 2008) than tests developed using the veridicality approach (Chaytor & Schmitter-Edgecombe, 2003; Spooner & Pachana, 2006).

Several laboratory-based test batteries that simulate real-life tasks exist in the neuropsychological literature, including those assessing attention (e.g., Test of Everyday Attention, TEA; Robertson, Ward, Ridgeway, & Nimmo-Smith, 1994), memory (e.g., Rivermead Behavioral Memory Test-III, RBMT-III; Wilson, Cockburn, & Baddeley, 2008), executive abilities (e.g., Behavioral Assessment of Dysexecutive Syndrome, BADS; Wilson, Alderman, Burgess, Emslie, & Evans, 1996), and prospective memory (e.g., Cambridge Prospective Memory Test, CAMPROMPT; Wilson, 2005). Yet such batteries tend to use simple, static stimuli within a highly controlled environment and do not fully resemble the complexity of real-life situations (Parsons, 2015; Rand, Rukan, Weiss, & Katz, 2009). Attempts to provide better assessments of everyday abilities have involved testing in real-life settings, such as performing errands in a shopping center or a pedestrianized street (e.g., Garden, Phillips, & MacPherson, 2001; Shallice & Burgess, 1991). However, such assessments cannot be standardized for use in other clinics or laboratories; they may not be feasible for individuals from challenging populations (e.g., psychiatric patients, stroke patients with paresis or paralysis); they are time-consuming and expensive; they require participant transport and consent from local businesses; and they lack experimental control over the external situation (e.g., Elkind, Rubin, Rosenthal, Skoff, & Prather, 2001; Logie, Trawley, & Law, 2011; Parsons, 2015; Rand et al., 2009).

Technologies such as video recordings of real-world locations and non-immersive virtual environments have also been considered for simulating real-life situations (Farrimond, Knight, & Titov, 2006; McGeorge et al., 2001; Paraskevaides et al., 2010). Non-immersive virtual reality (VR) tests such as the Edinburgh Virtual Errands Test (Logie et al., 2011), the Jansari Assessment of Executive Function (Jansari et al., 2014), the Virtual Multiple Errands Test within the Virtual Mall (Rand et al., 2009), and the Virtual Reality Shopping Task (Canty et al., 2014) attempt to simulate real-life tasks. Compared with assessments in real-world settings, they are considered more cost-effective, require less administration time, offer greater experimental control, and can easily be adapted for other clinical or research settings (Parsons, McMahan, & Kane, 2018; Werner & Korczyn, 2012; Zygouris & Tsolaki, 2015). Non-immersive VR tests can also offer automated scoring and standardized administration, enabling clinicians and researchers to administer them with only limited training. Finally, some non-immersive VR tests offer shorter versions that focus on the assessment of specific cognitive functions (Parsons et al., 2018; Werner & Korczyn, 2012; Zygouris & Tsolaki, 2015).

However, the user interface and procedure of non-immersive VR tests can be challenging for individuals without gaming backgrounds (Parsons et al., 2018; Zaidi, Duthie, Carr, & Maksoud, 2018), especially for older adults and clinical populations such as individuals with mild cognitive impairment or Alzheimer’s disease (Werner & Korczyn, 2012; Zygouris & Tsolaki, 2015). Immersive VR tests, which share the same advantages as non-immersive ones, may overcome these challenges (Rizzo, Schultheis, Kerns, & Mateer, 2004; Bohil, Alicea, & Biocca, 2011; Parsons, 2015; Teo et al., 2016). In addition, individuals without gaming experience have been found to perform better in immersive VR environments due to the first-person perspective and ergonomic/naturalistic interactions that are proximal to real-life actions (Zaidi et al., 2018).
VR tests have in the past resulted in VR-induced symptoms and effects (VRISE) such as nausea, dizziness, disorientation, fatigue, or instability (Bohil et al., 2011; de França & Soares, 2017; Palmisano, Mursic, & Kim, 2017), which can compromise neuropsychological (Mittelstaedt, Wacker, & Stelling, 2019; Nalivaiko, Davis, Blackmore, Vakulin, & Nesbitt, 2015; Nesbitt, Davis, Blackmore, & Nalivaiko, 2017) and neuroimaging data (Arafat, Ferdous, & Quarles, 2018; Gavgani et al., 2018; Toschi et al., 2017). However, certain contemporary VR head-mounted displays (HMDs) and VR software with naturalistic and ergonomic interaction and navigation within the virtual environment reduce VRISE or induce none at all (see Kourtesis, Collina, Doumas, & MacPherson, 2019a). Lastly, immersive VR has been found to provide deeper immersion in the virtual environment than non-immersive VR, and deeper immersion has been found to induce substantially fewer adverse VRISE (Kourtesis, Collina, Doumas, & MacPherson, 2019b; Weech, Kenny, & Barnett-Cowan, 2019).

We recently developed the Virtual Reality Everyday Assessment Lab (VR-EAL), an immersive virtual environment that simulates everyday tasks proximal to real life in order to assess prospective memory, episodic memory (immediate and delayed recognition), executive functions (i.e., multitasking and planning), and selective visual, visuospatial, and auditory attention (Kourtesis, Korre, Collina, Doumas, & MacPherson, 2020). In the VR-EAL, individuals are exposed to alternating tutorials (practice trials) and storyline tasks (assessments) so that they become familiar with both the immersive VR technology and the specific controls and procedures of each VR-EAL task. The VR-EAL also offers a shorter version (i.e., scenario) in which only episodic memory, executive function, selective visual attention, and selective visuospatial attention are assessed. Alternatively, the examiner can opt to assess only a specific cognitive function, in which case the examinee goes through the generic tutorial, the tutorial specific to that task, and the storyline task that assesses the chosen cognitive function (e.g., selective visual attention).

The VR-EAL endeavors to be the first immersive VR neuropsychological battery of everyday cognitive functions. Our previous work has shown that the VR-EAL does not induce VRISE (Kourtesis et al., 2020). However, we have yet to demonstrate the validity of the VR-EAL as a neuropsychological tool. In the current study, the full version of the VR-EAL was administered to participants and compared with established paper-and-pencil neuropsychological tests to assess the construct validity of the VR-EAL. We also aimed to replicate our previous finding that the VR-EAL does not induce VRISE, using the Virtual Reality Neuroscience Questionnaire (VRNQ; Kourtesis et al., 2019b). Finally, the VR-EAL and the paper-and-pencil tests were compared in terms of verisimilitude (i.e., ecological validity), pleasantness, and administration time.

Methods

Participants

Participants were recruited via social media and the internal mailing list of the University of Edinburgh. Forty-one participants (21 females) aged between 18 and 45 years (M = 29.15, SD = 5.80) were recruited: 18 considered themselves to be gamers (7 females) and 23 (14 females) considered themselves to be non-gamers. The mean education of the group was 13.80 years (SD = 2.36, range = 10–16). The study was approved by the Philosophy, Psychology and Language Sciences Research Ethics Committee of the University of Edinburgh. Written informed consent was obtained from each participant. All participants received verbal and written instructions regarding the procedures, possible adverse effects of immersive VR (e.g., VRISE), utilization of the data, and general aims of the study.

Materials

Hardware

An HTC Vive HMD with two lighthouse stations for motion tracking and two HTC Vive wands with six degrees of freedom (6DoF) for navigation and interaction within the virtual environment was used, in accordance with our previously published technological recommendations for immersive VR research (Kourtesis et al., 2019a). Spatialized (bi-aural) audio was delivered through a pair of Senhai Kotion Each G9000 headphones. The VR area measured 5 m², which provides adequate space for immersion and naturalistic interaction within virtual environments (Borrego, Latorre, Alcañiz, & Llorens, 2018). The HMD was connected to a laptop with an Intel Core i7 7700HQ 2.80 GHz processor, 16 GB RAM, a 4095 MB NVIDIA GeForce GTX 1070 graphics card, a 931 GB TOSHIBA MQ01ABD100 (SATA) hard disk, and Realtek High Definition Audio.

VR-EAL

The VR-EAL attempts to assess everyday cognitive functioning by measuring prospective memory, episodic memory (i.e., immediate and delayed recognition), executive functioning (i.e., planning, multitasking), and selective visual, visuospatial, and auditory (bi-aural) attention within a realistic immersive VR scenario lasting around 60 min (Kourtesis et al., 2020). See Table 1 and Figures 1 and 2 for a summary of the VR-EAL tasks assessing each cognitive ability, Table 2 for a description of the VR-EAL tasks, and Table 3 for their administration procedures and scoring. For a full description of the VR-EAL’s scenarios, tasks, and scoring, see Kourtesis et al. (2020). A brief video recording of the VR-EAL is available at https://www.youtube.com/watch?v=IHEIvS37Xy8&t=.

Table 1. VR-EAL tasks and score ranges

*The tasks are presented in the same order as they are performed within the scenario.

Fig. 1. VR-EAL Storyline: Scenes 3–12. Derived from Kourtesis et al. (2020).

Fig. 2. VR-EAL Storyline: Scenes 14–22. Derived from Kourtesis et al. (2020).

Table 2. VR-EAL tasks’ description

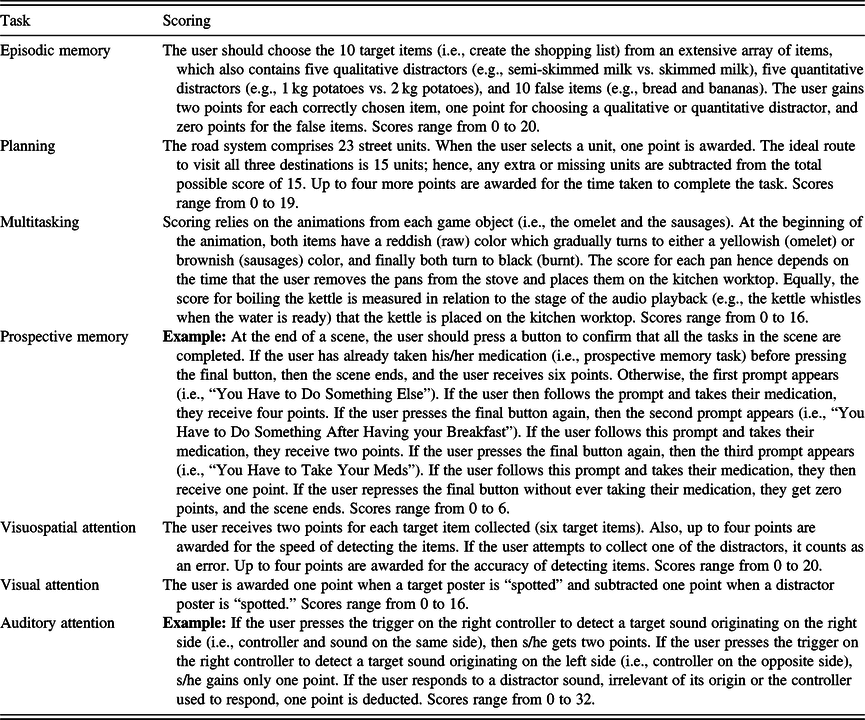

Table 3. VR-EAL task administration and scoring

Note: For all measures, higher scores indicate better performance.

Paper-and-Pencil Tests

Paper-and-pencil test batteries established as ecologically valid in terms of both verisimilitude and veridicality (i.e., CAMPROMPT, RBMT-III, BADS, and TEA) were selected to match the equivalent VR-EAL tasks and to examine the VR-EAL’s ecological and construct validity. Two additional neuropsychological tests that are ecologically valid in terms of veridicality only were also included to assess the validity of the VR-EAL’s visuospatial attention and multitasking tasks, because tests of visuospatial attention and multitasking that are ecologically valid in terms of both verisimilitude and veridicality are not available in the literature.

Prospective memory

The CAMPROMPT was administered to evaluate prospective memory using six prospective memory tasks (Wilson, 2005). Three tasks are event-based and three are time-based. The participant performs several distractor tasks (e.g., word-finder puzzles and general knowledge quizzes and questions) for 20 min while remembering to carry out the prospective memory tasks (e.g., when the participant encounters a question that includes the word “EastEnders,” s/he needs to give a book to the examiner). Reminder strategies (e.g., taking notes) are permitted to help the participant remember when and how to perform the prospective memory tasks. The CAMPROMPT provides three scores: a total score (out of 36), an event-based score (out of 18), and a time-based score (out of 18).

Episodic memory

Two subtests from the RBMT-III (Wilson et al., 2008) were administered to assess episodic memory. The story recall subtests were chosen because they yield two scores (immediate recall and delayed recall), whereas the recognition subtests provide a score only for delayed recognition. The immediate and delayed story recall tasks were thus used to match the VR-EAL’s immediate and delayed recognition tasks. The participant listens to a newspaper story read aloud by the examiner and recalls it immediately (immediate recall, out of 21) and after approximately 20 min (delayed recall, out of 21).

Executive function: planning

The Key Search task from the BADS (Wilson et al., 1996) was used as a test of planning (Wilson, Evans, Emslie, Alderman, & Burgess, 1998). Although the Key Search task assesses planning ability, it also involves other aspects of executive function (e.g., problem-solving and monitoring of behavior; Wilson et al., 1998). The participant draws the route they would take to find lost keys in a field. Both the quality of the route (e.g., whether it covers the whole field) and the time taken to draw it contribute to the score (max score = 16).

Executive function: multitasking

The Color Trails Test (CTT; D’Elia, Satz, Uchiyama, & White, 1996) was administered to assess processing speed and executive functioning. The CTT is a non-alphabetical adaptation (i.e., using colors and numbers) of the Trail Making Test (TMT; Reitan & Wolfson, 1993). The CTT has two parts (CTT-1 and CTT-2), in each of which the participant draws a line connecting consecutive numbers. In CTT-1, the numbers in the sequence are in a single color; comparable to the TMT-A (Reitan & Wolfson, 1993), CTT-1 assesses processing speed. In CTT-2, the numbers are displayed in two colors and the examinee alternates between the two colors for each number in the sequence; comparable to the TMT-B (Reitan & Wolfson, 1993), CTT-2 assesses task-switching, as well as inhibition and visual attention (D’Elia et al., 1996). The CTT was chosen to assess the validity of the VR-EAL’s multitasking task because these aspects of executive functioning have been found to be central to everyday multitasking (Logie et al., 2011). The completion time in seconds is the score for CTT-1 and CTT-2, and the difference between the two (i.e., CTT-2 minus CTT-1) is considered an index of executive function.
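The CTT difference score described above is a simple subtraction; the sketch below (the function name `ctt_difference_index` is hypothetical) makes its sign convention explicit. Because both parts are scored in seconds, a larger difference means more extra time was needed when alternating between colors, i.e., poorer performance, unlike the VR-EAL scores, for which higher is better. The usual rationale, assumed here rather than stated in the text, is that subtracting CTT-1 discounts the processing-speed demands shared by both parts.

```python
def ctt_difference_index(ctt1_seconds: float, ctt2_seconds: float) -> float:
    """Return CTT-2 minus CTT-1 completion time, in seconds.

    Larger values mean more additional time was needed for the
    alternating-color condition, i.e., poorer performance on this index.
    """
    if ctt1_seconds <= 0 or ctt2_seconds <= 0:
        raise ValueError("completion times must be positive numbers of seconds")
    return ctt2_seconds - ctt1_seconds


# Hypothetical completion times for one participant (not data from this study).
ctt1, ctt2 = 30.0, 68.5
print(ctt_difference_index(ctt1, ctt2))  # 38.5
```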

Selective visuospatial attention

The Ruff 2 and 7 Selective Attention Test (RSAT; Ruff, Niemann, Allen, Farrow, & Wylie, 1992) was used to assess selective visual attention. The participant is asked to identify target numbers (i.e., 2s and 7s) and ignore the distractors (either numbers or letters) in each block. The examinee must apply a different strategy to each type of block: automatic detection of 2s and 7s in blocks with letter distractors and controlled detection of 2s and 7s in blocks with number distractors. The RSAT yields two scores: a detection speed score (out of 80) and a detection accuracy score (out of 59). The scores take into account the number of detected 2s and 7s, as well as the number of misses and errors. The RSAT was chosen to match the VR-EAL selective visuospatial attention task because it requires different scanning strategies and shifting of attention between blocks, and because its scoring accounts for misses and errors.

Selective visual attention

The Map task from the TEA (Robertson et al., 1994) was administered to assess selective visual attention (i.e., the ability to detect visual targets while disregarding similar visual distractors). The participant must find as many restaurant symbols (version A) or gas station symbols (version B) as possible on a map of Philadelphia (USA) within 2 min. The total score (out of 80) is the number of symbols detected overall; one subscore is the number of symbols found in the first minute, and the other is the number found in the second minute.

Selective auditory attention

The Elevator Counting with Distraction task of the TEA (Robertson et al., 1994) was administered to measure selective auditory attention (i.e., the ability to select target sounds while ignoring competing auditory distractors). In each trial, the participant listens to a sequence of beeps and must count the normal-pitched beeps (i.e., targets) while disregarding the high-pitched and low-pitched beeps (i.e., distractors). The total score is the number of correct responses across the 10 trials (max score = 10).

Questionnaires

Questionnaires were administered to examine the quality of the VR software and VRISE, the participants’ gaming experience, and the verisimilitude and pleasantness of the tests. See Table 4 for a description of the questionnaires.

Table 4. Questionnaires’ administration and scoring

Procedure

Participants attended both the VR session and the paper-and-pencil session individually; the order of the sessions was pseudorandomized across participants. In the VR session, participants first completed an induction session that introduced them to the HMD and controllers (i.e., the HTC Vive HMD and 6DoF wands) prior to immersion. After completing the VR-EAL, participants completed the VRNQ and the VR version of the comparison questionnaire (i.e., assessing pleasantness and verisimilitude). During the paper-and-pencil session, participants completed the paper-and-pencil comparison questionnaires (i.e., pleasantness and verisimilitude) after each test. The duration of each session was timed using a stopwatch.

Statistical analyses

The internal consistency and reliability of the VR-EAL were examined using Cronbach’s alpha. Values of Cronbach’s alpha between 0.70 and 1.00 were taken to indicate good (i.e., 0.70) to excellent (i.e., 1.00) internal consistency and reliability (Nunnally & Bernstein, 1994).
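For readers who want to check the internal-consistency computation, the sketch below implements the standard Cronbach’s alpha formula, alpha = k / (k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items, using sample variances from Python’s `statistics` module. Treating each VR-EAL task score as an “item” is an assumption for illustration, since the text does not list which scores entered the analysis; the function names and the example matrix are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a participants-by-items matrix of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals),
    where k is the number of items and all variances are sample variances.
    """
    if len(scores) < 2 or len(scores[0]) < 2:
        raise ValueError("need at least two participants and two items")
    k = len(scores[0])
    if any(len(row) != k for row in scores):
        raise ValueError("every participant needs a score for every item")
    items = list(zip(*scores))  # regroup the matrix by item (column)
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - item_var_sum / total_var)


def meets_threshold(alpha: float, cutoff: float = 0.70) -> bool:
    """Apply the 0.70 lower bound for good internal consistency."""
    return alpha >= cutoff


# Hypothetical scores: 4 participants x 3 tasks (not data from this study).
example = [[1, 2, 3], [2, 3, 4], [3, 4, 5], [4, 5, 6]]
alpha = cronbach_alpha(example)
print(round(alpha, 2), meets_threshold(alpha))  # 1.0 True
```

The example items rise in lockstep across participants, so alpha reaches its maximum; items that vary independently of one another would pull it down, and it can even become negative.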

The Bayes factor (BF10) was used for statistical inference. A threshold of BF10 ≥ 10 was set in all analyses, which indicates strong evidence in favor of H1 (Marsman & Wagenmakers, 2017; Rouder & Morey, 2012; Wetzels & Wagenmakers, 2012); a BF10 of 10 roughly corresponds to a p-value < 0.01 (Bland, 2015; Cox & Donnelly, 2011; Held & Ott, 2018). BF10 is considered substantially more conservative than the p-value in evaluating the evidence against H0 (Bland, 2015; Cox & Donnelly, 2011; Held & Ott, 2018), especially in small samples (Held & Ott, 2018), as in the present study. Notably, BF10 can quantify evidence in either direction (i.e., toward H1 or toward H0), and it is insensitive to the stopping rule, which substantially mitigates the problem of multiple comparisons and yields reliable and more generalizable results (Dienes, 2016; Marsman & Wagenmakers, 2017; Wagenmakers et al., 2018).
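As a minimal sketch of how the inferential conventions above can be applied to a reported BF10, the hypothetical helper `annotate_bf10` flags whether BF10 meets the BF10 ≥ 10 criterion, converts it to BF01 = 1/BF10 (the evidence for H0 relative to H1), and assigns the star labels used in this article’s table notes (*BF10 > 10; **BF10 > 30; ***BF10 > 100). It only labels a Bayes factor computed elsewhere (e.g., in JASP); it does not compute one.

```python
def annotate_bf10(bf10: float, threshold: float = 10.0) -> dict:
    """Label a Bayes factor BF10 using the conventions stated in this article.

    - supports_h1: BF10 meets the inference threshold (BF10 >= 10)
    - bf01: 1 / BF10, the evidence for H0 relative to H1
    - stars: the table-note labels (* > 10, ** > 30, *** > 100)
    """
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    if bf10 > 100:
        stars = "***"
    elif bf10 > 30:
        stars = "**"
    elif bf10 > 10:
        stars = "*"
    else:
        stars = ""
    return {
        "bf10": bf10,
        "bf01": 1.0 / bf10,
        "supports_h1": bf10 >= threshold,
        "stars": stars,
    }


# Hypothetical Bayes factors (not values reported in this study).
print(annotate_bf10(45.2)["stars"])   # **
print(annotate_bf10(0.25)["bf01"])    # 4.0
```

The inference threshold is stated as BF10 ≥ 10, whereas the table notes use strict inequalities, so a BF10 of exactly 10 meets the criterion without receiving a star; the sketch keeps that difference visible rather than resolving it.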

Bayesian Pearson’s correlation analyses were conducted to examine associations between age, years of education, VR experience, gaming experience, and performance on the VR-EAL and paper-and-pencil tasks. Similarly, Bayesian Pearson’s correlation analyses were performed to assess the construct validity of the entire VR-EAL and the convergent validity between the VR-EAL tasks and the paper-and-pencil tasks. Furthermore, Bayesian paired-samples t-tests were performed to investigate differences between the VR-EAL and the paper-and-pencil tests in terms of verisimilitude, pleasantness, and administration time. Finally, post hoc analyses of the achieved statistical power of the Bayesian Pearson’s correlations and Bayesian paired-samples t-tests were performed using G*Power (Faul, Erdfelder, Lang, & Buchner, 2007; Faul, Erdfelder, Buchner, & Lang, 2009). All Bayesian analyses were performed using JASP (Version 0.8.1.2; JASP Team, 2018).
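The Bayesian correlations in this study were computed in JASP. As a rough, self-contained illustration of what a one-sided Bayes factor for a positive correlation measures, the sketch below swaps in Jeffreys’s approximation to the likelihood of a sample correlation r given a population correlation ρ, p(r | ρ) ∝ (1 − ρ²)^((n − 1)/2) / (1 − ρr)^(n − 3/2), and averages its ratio to the ρ = 0 case over a uniform prior on ρ in [0, 1], matching the positive alternative stated in the table notes. This is not JASP’s implementation, which works with the exact likelihood, so its values approximate rather than reproduce the reported ones; the function name `bf_plus0_jeffreys` and the Simpson-rule integration are our own choices.

```python
import math


def bf_plus0_jeffreys(r: float, n: int, steps: int = 2000) -> float:
    """Approximate one-sided Bayes factor BF+0 for a positive correlation.

    Uses Jeffreys's approximate likelihood ratio
        p(r | rho) / p(r | 0) = (1 - rho^2)^((n - 1) / 2) / (1 - rho * r)^(n - 3/2)
    averaged over a uniform prior on rho in [0, 1] with composite Simpson's rule.
    An approximation for illustration, not JASP's exact computation.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n < 3:
        raise ValueError("need at least 3 observations")
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals

    def likelihood_ratio(rho: float) -> float:
        if rho >= 1.0:
            return 0.0  # the (1 - rho^2) factor vanishes at rho = 1
        log_value = (0.5 * (n - 1) * math.log1p(-rho * rho)
                     - (n - 1.5) * math.log1p(-rho * r))
        return math.exp(log_value)  # may overflow for very large n

    h = 1.0 / steps
    total = likelihood_ratio(0.0) + likelihood_ratio(1.0)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * likelihood_ratio(i * h)
    return total * h / 3.0  # uniform prior density on [0, 1] equals 1


# Hypothetical correlations for a sample of n = 41 (not values from Table 7).
for r in (0.0, 0.3, 0.6):
    print(r, round(bf_plus0_jeffreys(r, 41), 2))
```

With n = 41, as in this sample, r = 0 yields a value below 1 (i.e., evidence favoring H0), and the value grows rapidly as r increases. For much larger samples the exponentials can overflow, and working with log Bayes factors would avoid this.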

Results

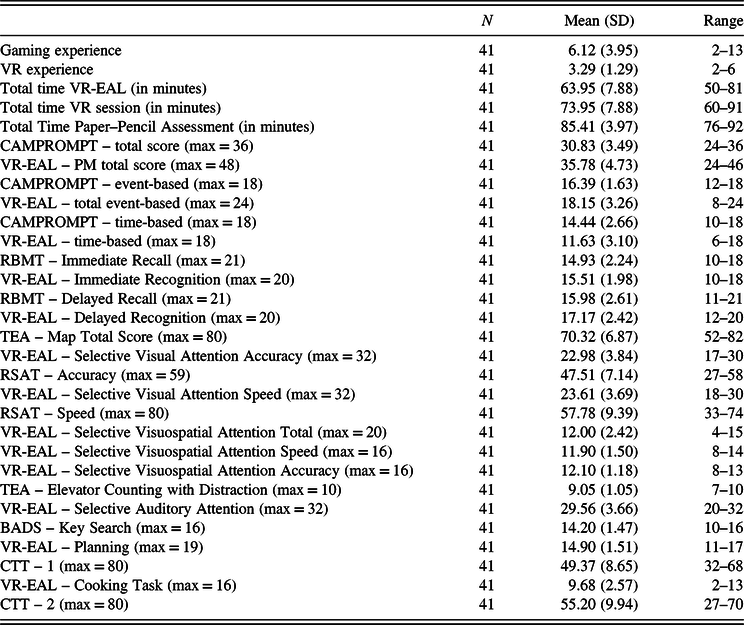

The descriptive statistics of the sample performing the VR-EAL, the paper-and-pencil tests, and questionnaires are displayed in Table 5.

Table 5. Descriptive statistics for the VR-EAL, paper-and-pencil tests, and questionnaires

BADS = Behavioral Assessment of Dysexecutive Syndrome; CAMPROMPT = Cambridge Prospective Memory Test; CTT = Color Trails Test; RBMT = Rivermead Behavioral Memory Test; TEA = Test of Everyday Attention; VR-EAL = Virtual Reality Everyday Assessment Lab.

Correlations Between Demographics and Performance

No significant correlations were found between age, education, VR experience, or gaming experience and performance on any of the paper-and-pencil tests or VR-EAL tasks. The only significant correlations were observed between gaming experience and VR experience, VR experience and VR session duration, gaming experience and VR session duration, gaming experience and the duration of the paper-and-pencil session, and the durations of the VR session and the paper-and-pencil session (see Table 6).

Table 6. Bayesian correlations between users’ experience and the sessions’ durations

The alternative hypothesis specifies that the correlation is positive. *BF10 > 10; **BF10 > 30; ***BF10 > 100; r = Pearson’s r; SP = Statistical Power at α = .05.

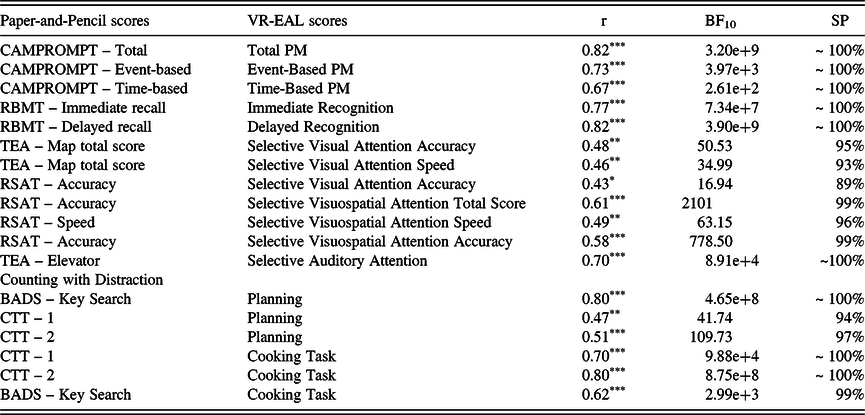

Convergent and Construct Validity of the VR-EAL

The VR-EAL scores were significantly positively correlated with their equivalent scores on the paper-and-pencil tests (see Table 7). These results support the convergent validity of the VR-EAL tasks, as well as the construct validity of the VR-EAL as an immersive VR neuropsychological battery. The reliability analysis yielded a Cronbach’s α of 0.79 for the VR-EAL, which indicates good internal consistency (Nunnally & Bernstein, 1994).

Table 7. Bayesian correlations between the VR-EAL and the Paper-and-Pencil tests

The alternative hypothesis specifies that the correlation is positive. *BF10 > 10; **BF10 > 30; ***BF10 > 100; r = Pearson’s r; SP = Statistical Power at α = .05; BADS = Behavioral Assessment of the Dysexecutive Syndrome; CAMPROMPT = Cambridge Prospective Memory Test; CTT = Color Trails Test; PM = Prospective Memory; RBMT = Rivermead Behavioral Memory Test; RSAT = Ruff 2 and 7 Selective Attention Test; TEA = Test of Everyday Attention; VR-EAL = Virtual Reality Everyday Assessment Lab.

Quality of VR-EAL and VRISE: VRNQ

The median of the VRNQ total score for VR-EAL was 128, which is substantially above the parsimonious cut-off of 120 (maximum score = 140). The medians of the VRNQ domains (i.e., user experience, game mechanics, in-game assistance, and VRISE) were between 31 and 33, again above their respective parsimonious cut-offs of 30 (maximum score = 35). Notably, the medians for all the individual VRISE items (i.e., nausea, dizziness, disorientation, fatigue, and instability) were 7 (i.e., absent feeling), except for fatigue, which was 6 (i.e., very mild feeling). No participant reported a VRISE subscore less than 5 (i.e., mild feeling).
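A minimal sketch of the VRNQ cut-off check described above: it takes per-participant domain scores, computes the median for each domain and for the total, and flags each median against the cut-offs stated in the text (30 of 35 per domain, 120 of 140 overall). The dictionary keys, the assumption that each domain sums five items rated 1 to 7 (hence scores of 5 to 35), and treating a median equal to the cut-off as meeting it are our assumptions for illustration; the example responses are invented, not data from this study.

```python
from statistics import median

# Cut-offs as stated in the text; constant names are hypothetical.
DOMAIN_CUTOFF = 30   # per domain, maximum 35
TOTAL_CUTOFF = 120   # total, maximum 140
DOMAINS = ("user_experience", "game_mechanics", "in_game_assistance", "vrise")


def vrnq_summary(responses: list[dict[str, int]]) -> dict[str, tuple[float, bool]]:
    """Median per VRNQ domain and for the total, each paired with a cut-off flag.

    Assumes each domain score is the sum of five items rated 1-7 (range 5-35)
    and that a median equal to the cut-off counts as meeting it.
    """
    summary: dict[str, tuple[float, bool]] = {}
    for domain in DOMAINS:
        scores = [response[domain] for response in responses]
        if any(not 5 <= score <= 35 for score in scores):
            raise ValueError(f"{domain} scores must lie between 5 and 35")
        domain_median = median(scores)
        summary[domain] = (domain_median, domain_median >= DOMAIN_CUTOFF)
    totals = [sum(response[d] for d in DOMAINS) for response in responses]
    total_median = median(totals)
    summary["total"] = (total_median, total_median >= TOTAL_CUTOFF)
    return summary


# Invented responses from three participants (not data from this study).
example = [
    {"user_experience": 33, "game_mechanics": 32, "in_game_assistance": 31, "vrise": 34},
    {"user_experience": 30, "game_mechanics": 31, "in_game_assistance": 29, "vrise": 33},
    {"user_experience": 34, "game_mechanics": 33, "in_game_assistance": 32, "vrise": 35},
]
print(vrnq_summary(example)["total"])  # (130, True)
```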

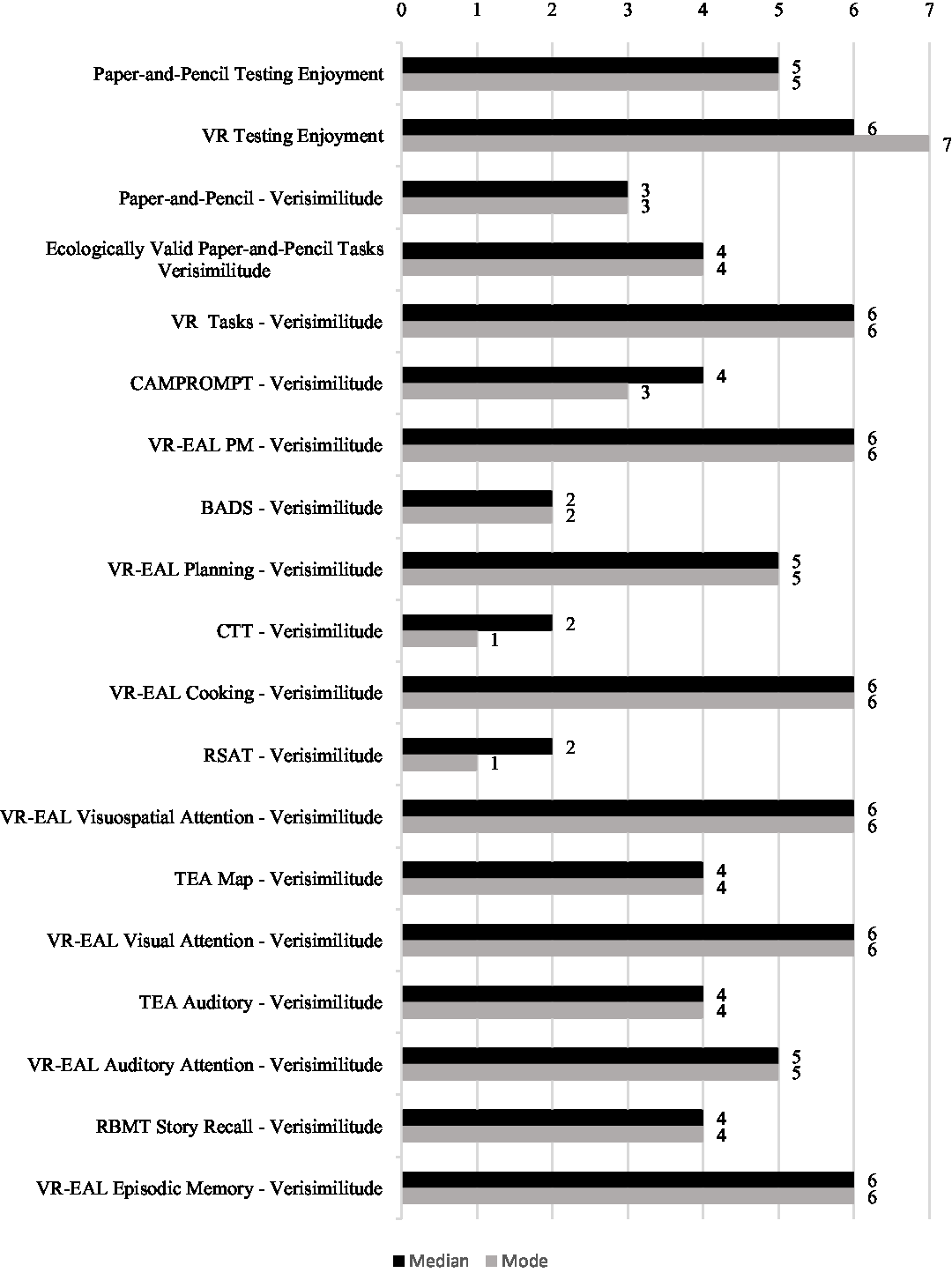

Comparison of the Testing Experience Between VR-EAL and Paper-and-Pencil Tests

The median enjoyment rating was 6 (i.e., very pleasant) for the VR-EAL and 5 (i.e., pleasant) for the paper-and-pencil assessments (see Figure 3). The median verisimilitude rating was 6 (i.e., very similar to everyday life) for the VR-EAL, 4 (i.e., neither similar nor dissimilar to everyday life) for the ecologically valid test batteries (i.e., CAMPROMPT, RBMT-III, BADS, and TEA), and 3 (i.e., dissimilar to everyday life) for the remaining paper-and-pencil tests (see Figure 3). The Bayesian t-tests demonstrated significant differences between the VR-EAL and the paper-and-pencil tests: the VR-EAL was rated as significantly more pleasant and more ecologically valid (i.e., higher verisimilitude) than the paper-and-pencil tests (see Table 8). In addition, the VR session was substantially shorter than the paper-and-pencil session (see Table 8).

Fig. 3. Self-report verisimilitude and enjoyment of the VR-EAL and paper-and-pencil tests. BADS = Behavioral Assessment of the Dysexecutive Syndrome; CAMPROMPT = Cambridge Prospective Memory Test; CTT = Color Trails Test; PM = Prospective Memory; RBMT = Rivermead Behavioral Memory Test; RSAT = Ruff 2 and 7 Selective Attention Test; TEA = Test of Everyday Attention; VR = Virtual Reality; VR-EAL = Virtual Reality Everyday Assessment Lab.

Table 8. Comparison between administration time and participants’ ratings of verisimilitude and enjoyment for the VR-EAL and paper-and-pencil tests

*BF10 > 10; **BF10 > 30; ***BF10 > 100; SP = Statistical Power at α = .05; BADS = Behavioral Assessment of the Dysexecutive Syndrome; CAMPROMPT = Cambridge Prospective Memory Test; CTT = Color Trails Test; PM = Prospective Memory; RBMT = Rivermead Behavioral Memory Test; RSAT = Ruff 2 and 7 Selective Attention Test; TEA = Test of Everyday Attention; VR = Virtual Reality; VR-EAL = Virtual Reality Everyday Assessment Lab.

Discussion

The VR-EAL was devised to assess cognitive functions (i.e., prospective memory, episodic memory, executive functions, and attentional processes) that are central to everyday functioning. As immersive VR software for research and clinical use, the VR-EAL aims to increase the likelihood that individuals’ performance will replicate how they would act in real-life situations (Higginson et al., 2000; Chaytor & Schmitter-Edgecombe, 2003; Phillips et al., 2008; Rosenberg, 2015; Mlinac & Feng, 2016; Haines et al., 2019). In the current study, we sought to establish the convergent, construct, and ecological validity of the VR-EAL tasks. All VR-EAL tasks correlated significantly with their corresponding ecologically valid paper-and-pencil tasks. The VR-EAL also showed good internal consistency, supporting its use in clinical and research settings (Nunnally & Bernstein, 1994). Therefore, the VR-EAL appears to be an effective, reliable, and ecologically valid tool for the assessment of everyday cognitive functioning that can be used for clinical and research purposes. Importantly, the VR-EAL is a highly immersive and ergonomic VR neuropsychological battery: immersive VR provides a more ecologically valid experience than non-immersive VR (Weech, Kenny, & Barnett-Cowan, 2019), and ergonomic interactions benefit non-gamers, whose performance becomes comparable to that of gamers (Zaidi et al., 2018).

Notably, the paper-and-pencil tests used in this study have been found to be ecologically valid in terms of both verisimilitude and veridicality, or of veridicality alone, evidencing their ability to predict everyday functioning. For example, the RBMT was highly accurate in predicting the everyday memory functioning of patients with traumatic brain injury (TBI; Makatura, Lam, Leahy, Castillo, & Kalpakjian, 1999). The RBMT has also been strongly associated with occupational therapists’ observations of general cognitive activities of daily living (ADL) in depressed and healthy older adults (Goldstein, McCue, Rogers, & Nussbaum, 1992). The RBMT and the TEA have been found to be better predictors of general functional impairment in patients with multiple sclerosis (MS) than traditional cognitive tests (Higginson et al., 2000). The TEA was also successful in detecting the effects of cognitive aging on attentional processes in healthy older adults (Robertson et al., 1994). The CAMPROMPT was a significant predictor of occupational performance (e.g., returning to work, withdrawal, or compromised performance) in MS patients (Honan, Brown, & Batchelor, 2015), and the BADS was significantly associated with everyday executive skills (Evans, Chua, McKenna, & Wilson, 1997) and general cognitive performance (Norris & Tate, 2000) in neurological patients and healthy individuals.
Similarly, Part B of the Trail Making Test (comparable to the CTT-2) was a significant predictor of the everyday executive skills of neurological patients (Burgess et al., 1998) and TBI patients (Chaytor, Schmitter-Edgecombe, & Burr, 2006). Lastly, the RSAT was found to be a key predictor of TBI patients’ ability to return to professional or academic environments after rehabilitation (Ruff et al., 1993).

Our finding that the VR-EAL tasks show convergent validity with corresponding paper-and-pencil tasks, which are established predictors of real-world performance, supports the VR-EAL’s ability to reflect performance outcomes in everyday life. However, the ecological validity of the VR-EAL would benefit from future work directly comparing the VR-EAL with actual real-world functioning. For example, performance on real-world tasks (e.g., household chores) has been shown to be significantly associated with self-ratings on instrumental activities of daily living (IADL) and independence questionnaires (Weakley, Weakley, & Schmitter-Edgecombe, 2019), and such real-world assessments produce reliable and generalizable outcomes (Bottari, Dassa, Rainville, & Dutil, 2010; Bottari, Shun, Le Dorze, Gosselin, & Dawson, 2014). Thus, the predictive ability and/or veridicality of the VR-EAL could be further examined by investigating its relationship with real-world tasks and/or established IADL questionnaires in healthy older adults and/or clinical populations.

Nevertheless, considering that the verisimilitude approach may be more effective than the veridicality approach in predicting everyday performance (Chaytor & Schmitter-Edgecombe, 2003; Spooner & Pachana, 2006), our findings suggest that the VR-EAL’s high verisimilitude is an advantage over other ecologically valid tests. Previous studies examining the ecological validity of other VR neuropsychological tools have not considered users’ perceptions of the tasks’ verisimilitude (e.g., Canty et al., 2014; Jansari et al., 2014; Logie et al., 2011; Rand et al., 2009). A further advantage of the VR-EAL is that participants rated it as more similar to the tasks that they perform in their daily life (i.e., more ecologically valid in terms of verisimilitude) than all tests in the paper-and-pencil neuropsychological battery and than the group of well-established ecologically valid tests with verisimilitude (i.e., CAMPROMPT, RBMT-Story Recall, BADS-Key Search, TEA-Map, and TEA-Elevator Counting with Distraction). Furthermore, when each VR-EAL task was compared with its corresponding paper-and-pencil test, the results indicated that the VR-EAL tasks are significantly more ecologically valid in terms of verisimilitude than the equivalent paper-and-pencil tests. Also, as far as we are aware, our study is the first to compare the pleasantness of the testing experience between immersive VR and paper-and-pencil tests. Here, participants also rated the full version of the VR-EAL as a more pleasant testing experience than the paper-and-pencil neuropsychological battery.
Moreover, the duration of the entire VR session (i.e., the induction and performance of the VR-EAL) was considerably shorter than the administration time of the paper-and-pencil neuropsychological battery. Therefore, the VR-EAL emerges as a substantially more enjoyable and ecologically valid testing experience, with a significantly shorter administration time than the equivalent paper-and-pencil neuropsychological battery.

Age and education did not correlate with performance on the VR-EAL or the paper-and-pencil tests. Whereas the paper-and-pencil scores were adjusted for age and education, the VR-EAL scores were not. Therefore, the VR-EAL may have the advantage that performance does not depend on age or education. However, this needs to be investigated further in a larger and more diverse population, as the sample of this study predominantly comprised younger adults aged 18–45 years with a relatively high level of education (i.e., 10–16 years).

Gaming experience was strongly and positively associated with VR experience, indicating that gamers are also more experienced immersive VR users. Also, VR and gaming experience were both negatively correlated with the duration of the VR session, with more experienced gamers completing the assessment faster than non-gamers. Interestingly, however, gaming experience was also correlated with the duration of the paper-and-pencil session, indicating that gamers also completed the paper-and-pencil assessment faster than non-gamers. Finally, the duration of the VR session correlated significantly with that of the paper-and-pencil session, which suggests that an individual’s speed of performing tasks affects the duration of both types of assessment (i.e., immersive VR and paper-and-pencil). Our findings are aligned with the literature showing that gamers have enhanced perceptual processing speed (Anguera et al., 2013; Dye, Green, & Bavelier, 2009; Kowal, Toth, Exton, & Campbell, 2018). However, in our sample, gaming ability was not associated with performance on the cognitive tests, indicating that gaming ability is not linked with improved overall cognition, which is also in line with the relevant literature (Kowal et al., 2018).

Another aim of the study was to provide immersive VR software for clinical and research use that induces minimal VRISE, since adverse symptoms associated with VR can significantly slow participants’ reaction times and impair their overall cognitive performance (Nalivaiko et al., 2015; Nesbitt et al., 2017; Mittelstaedt et al., 2019). Although VRISE are more frequent in immersive VR, they are also highly frequent in non-immersive VR (Sharples et al., 2008). However, VRISE have not been examined or reported in non-immersive VR studies of neuropsychological tools for clinical and research purposes (e.g., Canty et al., 2014; Jansari et al., 2014; Logie et al., 2011; Rand et al., 2009). Similarly, VRISE are under-reported or not examined in immersive VR studies of neuropsychological tools (Kourtesis et al., 2019a).

In contrast, the examination and reporting of VRISE were central to our effort to scrutinize the suitability of the VR-EAL as a neuropsychological tool for research and clinical purposes. Our current findings replicate those of our previous work, in which the VR-EAL did not induce VRISE in participants (Kourtesis et al., 2020). In this study, the VR-EAL exceeded the parsimonious cut-offs for the VRNQ scores (total score, user experience, game mechanics, in-game assistance, and VRISE). The VRNQ outcomes therefore indicate that the VR-EAL is suitable VR software for implementation in research and clinical settings, without inducing VRISE. Participants reported no adverse symptoms on any VRISE item except fatigue, and even then only very mild feelings of fatigue, which was expected given that the VR-EAL lasted approximately 60 min. However, fatigue was equally present during the paper-and-pencil session (80 min).

This study also has some limitations. The sample was moderately small (N = 41), although every statistical analysis had high statistical power (>90%). Moreover, as the current study is the first to provide validity evidence for the VR-EAL, the battery was administered only to younger adults and not to older adults. However, the eventual aim is to use the VR-EAL to assess cognitive impairments in healthy aging and dementias (Anderson & Craik, 2017), or in attention-deficit/hyperactivity disorder and autism (Karalunas et al., 2018). Future work should examine the performance and experiences of different clinical populations on the VR-EAL to provide further evidence of its clinical utility for assessing everyday cognitive functioning.
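The limitations note that every analysis had statistical power above 90% despite N = 41. Because power depends on the effect size, the sketch below illustrates that dependence for a Pearson correlation test; it is a hedged illustration, not the authors’ procedure. It assumes a two-sided test of ρ = 0 and uses the Fisher z approximation (atanh(r) is roughly normal with standard error 1/√(n − 3)); the function name is hypothetical, and dedicated power software using exact methods may give slightly different values.

```python
import math
from statistics import NormalDist


def correlation_power(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate two-sided power for testing H0: rho = 0 (Fisher z)."""
    if n <= 3:
        raise ValueError("n must exceed 3")
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    std_normal = NormalDist()
    # Critical value of the standard normal for a two-sided test.
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative.
    shift = math.atanh(r) * math.sqrt(n - 3)
    # Probability that the statistic falls in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


# Power at the study's sample size for a few illustrative effect sizes.
for effect in (0.3, 0.5):
    print(f"r = {effect}: power ~ {correlation_power(effect, n=41):.2f}")
```

Under this approximation, a two-sided test at α = .05 with N = 41 reaches .90 power only for correlations of roughly |r| ≥ .48, so high power at this sample size presupposes fairly large effects.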

In summary, this study provides evidence supporting the validity of the VR-EAL as an effective neuropsychological tool with enhanced ecological validity for the assessment of everyday cognitive functioning. In addition, the VR-EAL does not appear to induce VRISE (i.e., cybersickness). Therefore, our preliminary findings support the VR-EAL as an immersive VR assessment tool with the potential to be implemented in both research and clinical settings in the future.

Acknowledgments

The current study did not receive any financial support or grants.

Conflict of interest

The authors have nothing to disclose.

Supplementary Material

To view supplementary material for this article, please visit https://doi.org/10.1017/S1355617720000764