Published online by Cambridge University Press: 11 April 2005

Covariance structure analyses of a core neuropsychological test battery consisting of the Wechsler Adult Intelligence Scale–Revised, Wechsler Memory Scale–Revised, and Auditory Verbal Learning Test have previously identified a 5-factor model in a sample of cognitively normal White volunteers from Mayo's Older Americans Normative Studies (MOANS). The present study sought to replicate this factor structure in a sample of 289 cognitively normal, community-dwelling African American elders from Mayo's Older African Americans Normative Studies (MOAANS). The original 5-factor model was tested against 2 alternative 4-factor models and a 6-factor model generated on a substantive basis. Confirmatory factor analysis supported the construct validity of this core battery in older African Americans by replicating the original 5-factor model of Verbal Comprehension, Perceptual Organization, Attention/Concentration, Learning, and Retention as viable in the present sample. (JINS, 2005, 11, 184–191.)

Mayo's Older Americans Normative Studies (MOANS; Ivnik et al., 1992a, 1992b, 1992c; 1996; Lucas et al., 1998a, 1998b) provide age-appropriate norms for individuals over age 55 on a wide variety of commonly used clinical neuropsychological measures. The original MOANS series (Ivnik et al., 1992a, 1992b, 1992c) established normative estimates for a “core” battery of tests consisting of the Wechsler Adult Intelligence Scale–Revised (WAIS–R; Wechsler, 1981), Wechsler Memory Scale–Revised (WMS–R; Wechsler, 1987), and Auditory Verbal Learning Test (AVLT; Rey, 1964). Ivnik and colleagues (1992a, 1992b) chose these measures because of the importance of distinguishing normal from impaired memory ability and general cognitive functioning in dementia evaluations of older patients. Noting that the construct validity of some cognitive measures may change as a function of age, Smith and colleagues (1992) sought to empirically validate the conjoint factor structure of the WAIS–R, WMS–R, and AVLT in their sample of older participants. Factor analysis of MOANS age-corrected scaled scores resulted in a model containing five factors, which they labeled Verbal Comprehension (VC), Perceptual Organization (PO), Attention/Concentration (AC), Learning (LRN), and Retention (RET). The covariance structure of the MOANS core battery was later evaluated in a clinical sample of 417 neurological and psychiatric cases, composed predominantly of patients with a primary degenerative dementia (Smith et al., 1993). The original five-factor model was largely replicated in the clinical sample and was found to be superior to alternative three-, four-, or six-factor models.

One limitation of MOANS research to date has been the lack of ethnic diversity in the normative sample. Several investigators have argued that it is inappropriate to rely on traditional normative data derived from White samples when evaluating ethnic minority patients because of problems with test specificity and elevated risk of misdiagnosis (see Ardila, 1995; Artiola i Fortuny & Mullaney, 1998; Friedman et al., 2002; Lichtenberg et al., 1995; Loewenstein et al., 1994; Manly et al., 1998a, 1998b; Nabors et al., 2000). In response to these concerns, normative estimates and ethnicity-based regression corrections for African Americans have recently begun to appear in the literature (Diehr et al., 1998; Evans et al., 2000; Gladsjo et al., 1999; Heaton et al., 2003; Lichtenberg et al., 1998; Marcopulos et al., 1997; Marcopulos & McLain, 2003; Manly et al., 1998b; Unverzagt et al., 1996; Wechsler, 1997a; Wechsler, 1997b; Welsh et al., 1995). Investigators at Mayo Clinic have also responded to this need by extending the original MOANS normative projects to a cohort of African American elders.

These projects, known collectively as Mayo's Older African Americans Normative Studies (MOAANS; see Lucas et al., in press), share many similarities with Mayo's prior normative studies, including test selection, participant inclusion/exclusion criteria, and norming procedures. One important procedural similarity is the co-norming of all measures in the MOAANS test battery simultaneously within the same reference population at the same point in time. As noted previously by Ivnik and colleagues (1996), co-norming facilitates comparisons of a given patient's performances across cognitive domains and improves precision in evaluating cognitive profile patterns.

Co-norming alone, however, may not be sufficient to detect, analyze, and understand neurocognitive performances in African American patients. According to Helms (1992, 1997), neuropsychological test performances are only clinically meaningful to the extent that the underlying cognitive processes being measured are similar across populations under consideration. Whereas excellent normative information may provide a reliable index of relative standing for a particular individual on a given measure, poor performance on that measure may not be clearly interpretable if the underlying constructs are dissimilar across groups. In other words, the clinical meaningfulness of neuropsychological test results in African Americans depends not only on the availability of appropriate normative data, but also on the extent to which our assessment instruments measure the same constructs in African Americans as have previously been established in Whites.

To this end, the present study sought to examine whether the original factor structure of the MOANS core battery as previously established by Smith and colleagues (1992) in Whites could be replicated in a sample of cognitively normal older African Americans. A factor solution analogous to the original model might suggest “cultural equivalence” (Helms, 1992, 1997) of the underlying constructs of this core battery among older Whites and African Americans. Significant discrepancies in factor structure, however, would indicate the need to re-evaluate interpretive strategies when assessing older African Americans with these measures.

Potential study participants included 309 self-identified African American volunteers from MOAANS research projects. All participants provided informed consent approved by Mayo's Institutional Review Board. Recruitment procedures, sample characteristics, and other aspects of the MOAANS research program are detailed elsewhere (Lucas et al., in press). For purposes of this and all Mayo normative studies, cognitively normal adults were defined as community-dwelling, independently functioning individuals examined by their primary physician within 1 year of study entry who met the following selection criteria:

Patients with prior histories of dementia, stroke, movement disorder, multiple sclerosis, brain tumor, seizures, severe head trauma, schizophrenia, bipolar mood disorder, or major depression were excluded. Patients with prior histories of other conditions having the potential to affect cognition (e.g., heart attack, mild head injury) were allowed to participate if no evidence of residual cognitive dysfunction was evident on general medical evaluation and their physician judged the condition to be no longer active. Patients with active, chronic medical disease (e.g., diabetes, hypertension, thyroid dysfunction) were included if their physician judged that the condition was adequately controlled and not causing cognitive compromise.

As indicated above, MOAANS volunteers do not need to be completely medically healthy to participate. By applying a broader definition of normality and including participants with adequately controlled medical conditions (based on physician determination), the MOAANS normative sample provides a more accurate representation of the population of interest.

Twenty participants did not have complete data for all variables of interest and were excluded from these analyses, leaving a final sample of 289 study participants. Demographic characteristics of the sample are presented in Table 1. Participants included 73 men and 216 women with a mean age of 69.5 years (SD = 6.9, range = 56–84) and a mean education of 12.1 years (SD = 3.5, range = 0–20).

Demographic characteristics

Participants were administered the WAIS–R, WMS–R, and AVLT as part of a larger neuropsychological examination by psychometrists who were trained and supervised by licensed psychologists (J.A.L., T.J.F.). MOAANS normative data were used to transform raw scores to age-corrected scaled scores with a mean of 10 and standard deviation of 3.

Variables were chosen for the current measurement models based on the prior analyses by Smith and colleagues (1992, 1993). The only exception was the exclusion of delayed recall measures from WMS–R Verbal and Visual Paired Associate Learning (PAL) in the current analyses. These variables were excluded because data were unavailable for a large number of study participants, and including them would have reduced sample size and power to an unacceptable degree. All other variables from the previously reported factor studies were used, including (1) all subtests of the WAIS–R; (2) Learning over Trials (LOT) and Long-Term Percent Retention (LTPR) indices from the AVLT (see Ivnik et al., 1992c); and (3) Mental Control, Figure Memory, Logical Memory I, Verbal PAL I, Visual PAL I, Visual Reproduction I, and Visual Memory Span from the WMS–R. Delayed memory scores for WMS–R Logical Memory (LM) and Visual Reproduction (VR) subtests were included as percent retention scores (LM–PR, VR–PR) rather than standard LM–II and VR–II scores. As noted by Smith and colleagues (1992), percent retention scores allow one to define unique variance associated with delayed memory by controlling for initial learning (see Ivnik et al., 1992c for calculation of LM–PR and VR–PR).
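As a rough illustration of the percent retention idea, the sketch below expresses delayed recall as a percentage of immediate recall. The exact MOANS computation is given in Ivnik et al. (1992c) and is not reproduced here; the formula, the function name `percent_retention`, and the raw scores are assumptions made for illustration only.

```python
def percent_retention(immediate: float, delayed: float) -> float:
    """Delayed recall expressed as a percentage of immediate recall.

    Assumed formula (not taken from this paper): 100 * delayed / immediate.
    """
    if immediate <= 0:
        raise ValueError("immediate recall must be positive to compute retention")
    return 100.0 * delayed / immediate


# Hypothetical raw scores: two examinees with the same delayed recall
# but different initial learning retain different proportions.
lm_pr_a = percent_retention(immediate=20, delayed=15)
lm_pr_b = percent_retention(immediate=30, delayed=15)
print(lm_pr_a, lm_pr_b)
```

Under this assumed formula, equal delayed scores map to different retention values once initial learning is taken into account, which is the sense in which the index controls for acquisition.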

Standard administration procedures were followed for all measures except WMS–R Verbal and Visual PAL–I. In MOANS/MOAANS projects, administration of these subtests is limited to three learning trials. This procedure yields scores consistent with those employed by Smith and colleagues (1992, 1993), but differs from standard WMS–R administration, which allows up to six learning trials if criterion performance is not met. Thus, Verbal and Visual PAL–I scores in these analyses are not directly comparable to scores obtained from the standard WMS–R.

Confirmatory factor analysis was conducted using LISREL 8.20 (Jöreskog & Sörbom, 1998). Maximum likelihood was used to derive parameter estimates from the covariance matrix. The scale of the factor matrix (Phi) was standardized (i.e., diagonal elements fixed to 1; off-diagonal elements free to vary). Error terms (Theta-Delta) were assumed to be uncorrelated, except for immediate and delayed measures of the same subtests. The specific error terms allowed to covary in these analyses were: (1) LM–I and LM–PR, (2) VR–I and VR–PR, and (3) LOT and LTPR.
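In conventional LISREL notation, the measurement model just described can be summarized as follows. This is the general confirmatory factor model, not an equation reproduced from the original analyses; the symbols $x$ (observed indicators), $\xi$ (latent factors), $\delta$ (measurement errors), and $\Sigma$ (model-implied covariance matrix) are standard conventions assumed here.

$$
x = \Lambda_x \xi + \delta, \qquad \Sigma = \Lambda_x \Phi \Lambda_x^{\top} + \Theta_\delta, \qquad \operatorname{diag}(\Phi) = 1
$$

With the diagonal of $\Phi$ fixed to 1, its off-diagonal elements are factor correlations. $\Theta_\delta$ is diagonal except for the three error covariances freed above (LM–I with LM–PR, VR–I with VR–PR, and LOT with LTPR).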

When assessing the fit of the model, the chi-square statistic is not robust to violations of the multivariate normality assumption and is often affected by sample size (Hayduck, 1996; Hu & Bentler, 1995). Therefore, model fit was evaluated by supplementing the model chi-square with the following indices: (1) the relative chi-square (χ²/df; Wheaton et al., 1977); (2) the goodness-of-fit index (GFI; Bentler, 1983; Jöreskog & Sörbom, 1997); (3) the adjusted goodness-of-fit index (AGFI; Bentler, 1983; Jöreskog & Sörbom, 1997); (4) the standardized root mean squared residual (SRMR; Bentler, 1995); (5) the comparative fit index (CFI; Bentler, 1990); and (6) the expected cross-validation index (ECVI; Browne & Cudeck, 1993).

The relative chi-square minimizes the impact of sample size on the model chi-square statistic. Although there is no consensus regarding what magnitude of the relative chi-square ratio indicates acceptable model fit, a frequent convention is a relative chi-square less than 2.0 (Tabachnick & Fidell, 2001). The GFI is somewhat analogous to R² in multiple regression analyses, and is an index of the proportion of observed covariance explained by the model. For both the GFI and the AGFI, values equal to or greater than .90 suggest adequate model fit. Recently, Hu and Bentler (1998) recommended a two-index strategy consisting of the SRMR, which was found to be a sensitive index of factor covariance misspecification, and one of several incremental fit indices sensitive to factor loading misspecification. Given the present sample size and recommendations by Hu and Bentler (1999), the SRMR was supplemented with the CFI, which compares model fit relative to a null model with uncorrelated latent variables. A good-fitting model would be indicated by an SRMR less than or equal to .08 and a CFI value close to .95. Finally, the ECVI was used to assess how well each measurement model solution in this sample would cross-validate in an independent sample of comparable size (MacCallum & Austin, 2000). Smaller ECVI values suggest better model fit.
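The conventions above can be sketched as a simple screening routine. This is an illustrative sketch, not the procedure used in the LISREL analyses: the function names, the treatment of "close to .95" as an adjustable `cfi_cutoff`, and all input values are hypothetical. The ECVI expression, (χ² + 2q)/(N − 1) with q free parameters, is the commonly cited form attributed to Browne and Cudeck (1993) and is assumed here.

```python
def relative_chi_square(chi2: float, df: int) -> float:
    """Model chi-square divided by its degrees of freedom."""
    return chi2 / df


def ecvi(chi2: float, n_free_params: int, n_obs: int) -> float:
    """Expected cross-validation index, assumed form: (chi2 + 2q) / (N - 1)."""
    return (chi2 + 2 * n_free_params) / (n_obs - 1)


def screen_fit(chi2, df, gfi, agfi, srmr, cfi, cfi_cutoff=0.95):
    """Check each index against the conventional cut-offs named in the text."""
    return {
        "relative_chi2 < 2.0": relative_chi_square(chi2, df) < 2.0,
        "GFI >= .90": gfi >= 0.90,
        "AGFI >= .90": agfi >= 0.90,
        "SRMR <= .08": srmr <= 0.08,
        "CFI >= cutoff": cfi >= cfi_cutoff,  # cutoff is an assumption
    }


# Hypothetical fit statistics for a single model (not values from Table 3).
checks = screen_fit(chi2=180.0, df=120, gfi=0.93, agfi=0.91, srmr=0.05, cfi=0.96)
model_ecvi = ecvi(chi2=180.0, n_free_params=51, n_obs=289)
for name, passed in checks.items():
    print(f"{name}: {'met' if passed else 'not met'}")
print(f"ECVI = {model_ecvi:.3f}")
```

Because the ECVI has no absolute cut-off, it is only meaningful when compared across competing models fitted to the same data, with the smaller value preferred.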

The primary goal of this study was to test the previously reported five-factor model in a sample of cognitively normal African American elders. In addition, the fit of this model was examined relative to the fit of alternate models generated on a substantive basis and previously described by Smith et al. (1993). Therefore, a total of four different measurement models were submitted to confirmatory factor analysis. These included two separate four-factor models (A: VC, PO, AC, LRN; B: VC, PO, AC, RET), the original five-factor model (VC, PO, AC, LRN, RET), and a six-factor model (VC, PO, AC, Verbal LRN, Nonverbal LRN, and RET). The assignment of indicators to factors is presented in Table 2. Model enhancement was attained by evaluating the modification indices (MIs) and freeing parameters in order of the size of the respective MIs. Indiscriminate use of MIs and analogous post hoc specification searches is highly susceptible to capitalization on chance (MacCallum et al., 1992). Therefore, model enhancement was discontinued if modifications were not theoretically substantiated.

Parameter specifications for all models evaluated in the LISREL analyses

Multivariate skewness (z = −0.39, p = .70) and kurtosis (z = −0.98, p = .33) values suggested that the data met the assumption of multivariate normality. The six-factor model contained inadmissible parameter estimates and, as a result, the phi matrix was nonpositive definite. The LISREL boundary solution errors in the six-factor model were likely triggered by a correlation estimate of .97 between PO and Nonverbal LRN and an estimate of .95 between Verbal LRN and RET. Examination of the observed intercorrelations between indicators suggested model specification errors similar to those previously reported by Millis et al. (1999) in a standardization sample and Wilde et al. (2003) in a clinical sample when using the latest revision of the Wechsler Memory Scale (i.e., WMS–III; Wechsler, 1997b). Namely, numerous correlations among indicators of different factors were larger than the correlations between indicators of the same factor. In the current study, for example, the correlation estimate between Visual PAL–I and Figure Memory of the Nonverbal LRN factor was .14 while the correlation estimate between Visual PAL–I and LM–I was .23 and the correlation between Visual PAL–I and Verbal PAL–I was .31. A model containing separate verbal and nonverbal learning factors is thus not viable in this sample.

Table 3 provides the goodness-of-fit indices for the remaining measurement models. In all three models, modification indices suggested that freely estimating measurement error between WAIS–R Picture Arrangement and Picture Completion resulted in model enhancement. In addition, allowing LM–I to also load on the RET factor further improved the four-factor B model. Examination of the goodness-of-fit indices suggested that all three models provided a good fit to the data, resulting in relative chi-square ratios less than 2.0 and GFI values over .90. Similarly, the SRMR and CFI values in all three models were lower than .06 and above .93, respectively. These two indices have been previously suggested as highly sensitive markers of factor covariance and factor loading misspecifications. The ECVI seemed to favor both the five-factor and the four-factor A models, as lower values indicate better model fit.

Goodness-of-fit indices for four- and five-factor models of the MOAANS core battery

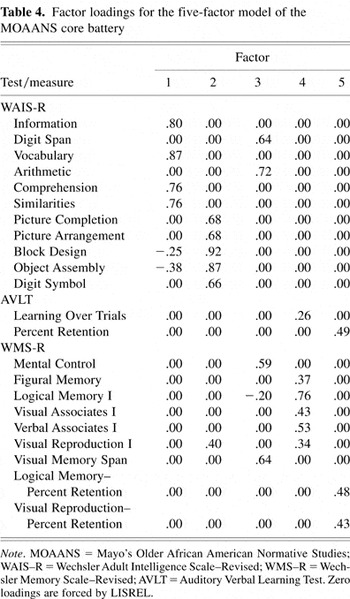

Because the four-factor A model is a nested case of the five-factor model, a chi-square difference test was calculated. This test indicated that the five-factor model is a significantly better-fitting model than the four-factor A model [χ²diff(2) = 8.13, p < .05]. Thus, the five-factor model initially described by Smith et al. (1992) in a large sample of cognitively normal older White individuals was virtually replicated in the current sample of older African Americans. Table 4 presents the standardized factor structure (loadings) for the current five-factor model. The correlations among the factors are presented in Table 5.
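The reported chi-square difference test can be checked with a few lines of code. The sketch below is an illustration under stated assumptions, not the software used in the study: it takes the reported difference (8.13) and its degrees of freedom (2) as given and evaluates the upper-tail probability with the closed form that holds for even degrees of freedom. The function name `chi2_sf_even_df` is hypothetical.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variate, valid for even df only.

    For df = 2k, P(X > x) = exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive, even df")
    half = x / 2.0
    terms = (half ** i / math.factorial(i) for i in range(df // 2))
    return math.exp(-half) * sum(terms)


# Values reported in the text: chi-square difference of 8.13 on 2 df.
p_value = chi2_sf_even_df(8.13, 2)
print(f"chi2_diff(2) = 8.13, p = {p_value:.4f}")
```

The resulting probability is approximately .017, consistent with the reported p < .05.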

Factor loadings for the five-factor model of the MOAANS core battery

Correlations among latent variables in the five-factor model of the MOAANS core battery

Smith and colleagues (1992) found that a five-factor model consisting of Verbal Comprehension (VC), Perceptual Organization (PO), Attention/Concentration (AC), Learning (LRN), and Retention (RET) best accounted for the covariance structure of the MOANS core battery in a sample of older White adults. Of the four potential models evaluated in the current study, a nearly identical five-factor solution provided the best fit to data obtained from a sample of African American elders.

Hu and Bentler (1999) have recently recommended a two-index strategy (i.e., SRMR and CFI) for rejecting reasonable proportions of misspecified models and optimally evaluating model fit. The SRMR and CFI values obtained in the five-factor model are consistent with suggested cut-off criteria and lend support to this factor structure. The six-factor model yielded a phi matrix that was nonpositive definite, potentially due to misspecification errors associated with the partitioning of the LRN factor into Verbal and Nonverbal components. Both four-factor models were feasible alternatives to the five-factor model, but failed to provide better model fit.

The current sample of African Americans was slightly younger (M age = 69.5 vs. 71.5) and less well educated (M education = 12.1 vs. 13.2 years) than the White sample in the original analyses by Smith et al. (1992). The current sample also had a somewhat greater proportion of women (74% vs. 60%). Despite these differences, the magnitudes of the factor loadings in the current five-factor solution were comparable to those of Whites in the prior study. Positive loadings on the VC factor ranged from .76 to .87 (vs. .71 to .86 in Whites), while the magnitudes of the negative loadings for Block Design (−.25) and Object Assembly (−.38) on this factor were virtually identical to those in Whites (−.26 and −.37, respectively). Loadings on the PO factor ranged from .40 to .92 and were also very similar to the range obtained previously in Whites (i.e., .46–.88).

A slight difference in factor loadings was observed on the AC factor. Whereas Smith and colleagues (1992) found that LM–I loaded more strongly on the AC factor than on the LRN factor in Whites, in the current sample of African Americans LM–I showed a stronger loading on the LRN factor. These results suggest that LM–I may be less affected by attentional processes and may provide a stronger index of learning among older African Americans. This finding may reflect the primacy of oral tradition and storytelling in the African American community (Banks-Wallace, 2002; Jones & Block, 1984).

Loadings on the LRN and RET factors in the current sample were comparable to those reported by Smith and colleagues (1992), ranging from .26 to .76 for LRN (vs. .20 to .76 in Whites) and from .43 to .49 for RET (vs. .40 to .63 in Whites). The viability of the RET factor in the current study substantiates Smith et al.'s (1992) observation that modeling a distinct information recall component (i.e., percent retention) may provide a theoretical advantage over models that rely solely on information acquisition or learning.

It should be noted that the present five-factor model is not entirely comparable to the original model reported by Smith and colleagues (1992). Specifically, data on WMS–R Verbal and Visual PAL–II subtests were not available for this sample, and the proposed factor measurement models had to be evaluated without these indicators. Their absence in the current five-factor measurement model precludes an unequivocal comparison with the original model, as it is not possible to ascertain the extent to which the inclusion of these two subtests would have modified the obtained factor solution. Given the theoretical interpretability of the current model and the goodness of fit to the data, however, the present solution provides evidence of a viable factor structure for the MOANS/MOAANS core battery in a sample of African American elders that is highly similar to that observed in Whites.

The current study was restricted to testing a model that has previously been specified using a set battery of tests. Alternate models that incorporate measures of other cognitive domains may result in different factorial solutions. Additionally, it is important to note that the current sample was predominantly female, with relatively low representation (7%) over 80 years of age. It is unclear whether comparable results would be obtained in a sample with proportionately greater representation of males and the oldest old.

Helms (1992, 1997) has noted that cultural equivalence of construct validity enhances test interpretation in ethnic minorities and reduces ambiguity that can occur when one (typically White) normative group is used to draw inferences about cognitive impairment in other (typically non-White) groups. Although factorial validity alone is not a sufficient determinant of the cultural equivalence of neuropsychological tests, the present results provide support for the psychometric equivalence of the factor structure of the MOANS/MOAANS core battery among White and African American elders. We are hopeful that future investigations will not only continue to expand the availability of normative data on diverse ethnic groups, but will also explore the cross-cultural equivalence of the underlying constructs assessed by the normed measures.
