Published online by Cambridge University Press: 22 March 2006

To elucidate the role of visual and nonvisual attribute knowledge on visual object identification, we present data from three patients, each with visual object identification impairments as a result of different etiologies. Patients were shown novel computer-generated shapes paired with different labels referencing known entities. On test trials they were shown the novel shapes alone and had to identify them by generating the label with which they were formerly paired. In all conditions the same triad of computer-generated shapes was used. In one condition, the labels (banjo, guitar, violin) referenced entities that were both visually similar and similar in terms of their nonvisual attributes within semantics. In separate conditions we used labels (spike, straw, pencil or snorkel, cane, crowbar) that referenced entities that were similar in terms of their visual attributes but were dissimilar in terms of their nonvisual attributes. The results revealed that nonvisual attribute information profoundly influenced visual object identification. Our patients performed significantly better when attempting to identify shape triads whose labels referenced objects with distinct nonvisual attributes versus shape triads whose labels referenced objects with similar nonvisual attributes. We conclude that the nonvisual aspects of meaning must be taken into consideration when assessing visual object identification impairments. (JINS, 2006, 12, 176–183.)

Objects in our world have both visual and nonvisual attributes. We not only know what violins look like but also what they do (you play different notes by pressing the fingers of the left hand down on the strings and moving a bow across these strings with the right hand). All would agree that visual attributes play a key role in visual identification. The question of interest is whether nonvisual attributes play any significant role in visual object identification. The visual discriminability hypothesis of Gaffan and Heywood (1993) proposes that it is solely the visual attributes of objects that influence object identification. For patients who have problems identifying line drawings of objects the key factor is visual attribute similarity. That is, objects that come from categories with many visually similar exemplars are the ones that pose difficulties for patients. They note that even monkeys, who presumably have no nonvisual attributes associated with line drawings, had more difficulty discriminating between entities drawn from categories with many visually similar exemplars versus entities drawn from categories with very few visually similar exemplars.

Damasio and colleagues have also advocated the importance of structural similarity in accounting for visual identification impairments. As noted by Damasio et al. (1982, p. 339), “the chance of an item being incorrectly identified depends on the existence of other items with a relative visuostructural similarity.” More recently, Tranel et al. (1997b) have refined this construct of visuostructural similarity to include measures of curvilinearity and structural overlap, a combination that they refer to as “homomorphy.” Unlike Gaffan and Heywood, these authors acknowledge that nonvisual semantic features (e.g., familiarity, value to the subject) can also play a role in visual identification of objects. However, similar to Gaffan and Heywood, they acknowledge that visual discriminability plays a crucial role in determining the ease with which various types of objects can be identified by patients.

A number of studies have suggested that when visually identifying an object, both the visual attributes and the nonvisual attributes of objects play a role (e.g., Dixon et al., 1997, 1999, 2002). Previously we have used the “ELM paradigm” (named for patient ELM) to show how these different types of attributes contribute to visual object identification in patients with temporal-lobe stroke and Alzheimer's disease. In the ELM paradigm, simple computer-generated shapes with empirically specifiable values on curvature, thickness, and tapering are generated. By combining these shape dimensions in different ways, shape sets can be formed that are either visually similar or visually distinct. Shapes from these different shape sets are then imbued with nonvisual semantic properties by pairing these shapes with labels that reference known entities. On learning trials, each shape is accompanied by a spoken label (e.g., shape A = “banjo,” B = “guitar,” C = “violin”). On test trials patients are presented the shape alone and asked to “name” it by generating the appropriate label. For some shape sets, the labels reference similar entities (as in the example above). For other shape sets, the labels reference distinct entities (e.g., shape A = “carriage,” B = “wrench,” C = “kite”).

By looking at the patterns of patients' errors one can independently assess the role of visual and nonvisual attributes on object identification. For example, to assess the role of visual attributes on object identification, Alzheimer's patients were presented with a visually distinct triad of shapes paired with the labels carriage, wrench, and kite (Dixon et al., 1999). Patients made few identification errors. In a different session the same labels were applied to a visually similar triad of shapes. Here patients made more errors than with the visually distinct shapes. By holding the labels constant, and manipulating the similarity of the shapes, Dixon and colleagues were able to conclude that visual similarity plays a role in patients' object identification.

To assess the role of nonvisual attributes on visual object identification, patients who had been presented with the visually similar shapes paired with carriage, wrench, and kite were then presented with exactly the same visually similar shapes, but now the shapes were paired with labels referencing entities with highly similar nonvisual attributes (banjo, guitar, violin). In this condition patients made more errors than when exactly the same shapes were paired with carriage, wrench, and kite. By holding the shapes constant, and manipulating the similarity of the labels (carriage, wrench, and kite vs. banjo, guitar, and violin), Dixon et al. were able to conclude that nonvisual attributes also play a role in visual object identification.

Clearly the semantic similarity of the labels made a difference to performance in these tasks. What is unclear in these studies is which aspect of semantics played a key role in driving patients' performance. Consider the poor performance for shapes attached to the labels banjo, guitar, and violin and the relatively good performance for shapes attached to the labels carriage, wrench, and kite. We surmised that it was the overlap in the nonvisual semantic attributes of the stringed instruments (the fact that they are all stringed instruments, they are all played by pressing the fingers of the left hand down on a fret board, etc.) that led to patients' poor performance in this condition. When the same shapes were paired with the labels carriage, wrench, and kite, we assumed it was the lack of overlap in the nonvisual attributes of carriage, wrench, and kite (the fact that these entities were used for entirely different things) that determined patients' performance. However, we cannot rule out that it was the visual attributes within semantics that determined patients' performance. That is, we cannot rule out that patients performed differently in these two conditions of the ELM task simply because within semantics people have knowledge that the stringed instruments look visually similar, and that carriage, wrench, and kite all look very different.

The present study sought to more clearly demonstrate that within semantics it is the similarity of nonvisual attributes that determines patients' visual identification performance in the ELM task. We assessed the role of nonvisual attribute knowledge on visual identification performance in three patients, each presenting with visual identification impairments as a result of different etiologies [stroke, dementia of the Alzheimer's type, herpes simplex viral encephalitis (HSVE)]. Our approach was to pair the same set of visually similar computer-generated shapes with different sets of labels as in the ELM paradigm. In one condition, the labels referenced entities that were both visually similar and similar in terms of their nonvisual attributes within semantics. For this set the labels banjo, guitar, and violin were used. In separate conditions we used labels that referenced entities that were similar in terms of their visual attributes but were dissimilar in terms of their nonvisual attributes. There were two such triads: snorkel, cane, crowbar; and spike, straw, pencil.

According to a conservative version of the visual discriminability hypothesis, if object identification is constrained only by the forms of the presented objects, then performance should be the same for all three triads, because each triad uses the same set of highly similar computer-generated shapes. A more liberal version of the visual discriminability hypothesis makes the same predictions. If the semantic similarity effects in previous studies using the ELM paradigm were attributable to the similarity of visual attributes, then in the current study patients should have equal difficulty identifying members of all three triads, because within each triad the labels reference objects that have overlapping visual semantics (e.g., violin, guitar, banjo have forms that are similar to one another, as do snorkel, cane, crowbar, and spike, straw, pencil). By contrast, if it is the nonvisual attributes of object representations within semantics that influence patients' performance, then patients should perform poorly on the banjo, guitar, violin set, but fare much better when attempting to identify members of either the snorkel, cane, crowbar triad or members of the spike, straw, pencil triad. Performance on the stringed instrument set should be poor because within semantics there are few nonvisual features that serve to disambiguate triad members (e.g., they are used for similar purposes, and are played in similar fashion, etc.). Performance on the snorkel, cane, and crowbar set and the spike, straw, and pencil set should be much better because even though the labels within each triad reference entities that have similar forms, these labels refer to entities that differ from one another on a wide variety of nonvisual attributes (e.g., what they are used for, how they are used, etc.). 
In sum, equivalent performance on all three triads supports Gaffan and Heywood's visual discriminability hypothesis (both conservative and liberal versions), whereas poorer performance on the banjo, guitar, violin triad relative to the snorkel, cane, crowbar and the spike, straw, pencil triads would support theories which enable nonvisual attributes of objects to play a role in visual identification.

All participants gave their informed consent prior to inclusion in the study. Ethical approval for this research was obtained from the Office of Research Ethics at the University of Waterloo.

ELM is a 72-year-old man who was admitted to hospital for heart failure in 1982. He was readmitted in 1985 and was found to have bilateral lesions deep in the mesiotemporal lobes. Previous testing of this patient has revealed category-specific visual recognition impairments (see Arguin et al., 1996 for complete patient profile).

Previous research using line drawings indicates that relative to age-matched controls ELM has deficits naming common mammals, birds, common insects, fruits, and vegetables, but can quickly and easily identify most man-made objects: in a confrontation naming task using line drawings he correctly named only 21% of the depictions of “biological” objects, but 92% of the depictions of man-made objects (Dixon et al., 1997). Importantly, however, he does have trouble identifying certain categories of man-made objects. He keeps a flag on his car to distinguish it from others in the parking lot. Furthermore, an in-depth study of his musical-instrument naming shows a profound deficit in naming stringed musical instruments (Dixon et al., 2002).

FS is a 55-year-old, right-handed male banker with 12 years of formal English education. In June 1997 he developed flu-like symptoms including muscle aches, headache, wheezing, and dry cough. He experienced tonic/clonic seizures and was admitted to hospital with a temperature. He was taken to the intensive care unit with generalized seizures and focal neurological findings related to cerebrovascular disease. Following a neurology consult he was administered Acyclovir.

The presentation was consistent with herpes simplex viral encephalitis. Electroencephalography demonstrated abundant epileptiform activity in keeping with diffuse, severe viral encephalitis. A computed tomography (CT) scan taken six days post-onset demonstrated hypodensity in the right anterior temporal lobe, left anterior temporal lobe, and right anterior frontal lobe consistent with herpes simplex encephalitis. Six months post-onset, the patient was seen again for a formal neuropsychological assessment. Psychometrically, he had borderline performance in short-term retention and recognition of geometric designs relative to a group of age-matched peers. He was disoriented to place (thought he was at an insurance company as opposed to a hospital), date, month, and year (reported year as 1993 rather than actual year of 1998). He misidentified the city he was in and what time of day it was. Information that had been well learned or significant for him premorbidly (e.g., the Prime Minister's name, FS's own age) was incorrectly reported. When information was presented to him and his memory was challenged, he confabulated.

Digit Span performance was Low Average with 6 digits forward and 5 backwards. Picture Arrangement and Block Design performance was impaired (Wechsler, 1997). On a standardized Visual Search Test (Trenerry et al., 1990) he did not show neglect. Verbal fluency for cued words was severely compromised (4 words after five minutes) and fraught with perseverations.

CS is a 60-year-old male, right-handed, retired engineer with post secondary education. Since 2000, CS had been experiencing symptoms of cognitive decline. Neuropsychological assessment confirmed a number of impairments, with the most pronounced deficits relating to very impaired mental tracking, multitasking skills, and cognitive flexibility; very compromised memory for novel visuospatial information; very weak written arithmetic problem-solving skills and mental manipulation of numeric information; and extremely impaired psychomotor performance. The pattern of test findings and clinical history are suggestive of dementia of the Alzheimer's type (DAT). Tests indicate no evidence of depression and CS reported no family history of neurological or psychiatric disorder.

To assess our patients' visual recognition deficits, we administered a standardized set of 260 line drawings from Snodgrass and Vanderwart (1980). Both patients were presented with each line drawing, in random order, one at a time, and asked to name each one. No time limit was imposed on responding to each picture. FS correctly named 197/260 (75%) of the standardized line drawings while CS correctly named 217/260 (84%).

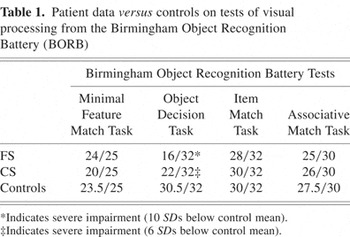

Components of the BORB (Riddoch & Humphreys, 1993) were used to assess aspects of FS's and CS's visual object identification difficulties. Patient performance was compared to control data reported in the BORB and is summarized in Table 1. For each test, impaired performance is indicated when scores are two standard deviations away from control means. The following tests were administered.

Table 1. Patient data versus controls on tests of visual processing from the Birmingham Object Recognition Battery (BORB)

The Minimal Feature Match Task (BORB test 7) assesses the ability to match canonical views of objects with noncanonical (unusual) views. This task requires the participant to analyze a target picture of an object taken from a standard viewpoint and then select from amongst distractors a picture of that same object taken from a different viewpoint.

The remaining tests (10, 11, and 12) assess the patient's ability to access stored knowledge of objects. In the Object Decision Task (test 10), patients are presented with line drawings depicting real and unreal objects and asked if the presented item could exist in real life or not. The unreal objects were created by having one feature of a real object combined with a feature from another object. An example of an unreal object would depict a swan's body combined with a snake's head.

In the Item Match Task (test 11) the participant is required to choose which one of the two probe pictures comes from the same category as the target stimuli. For example, the target picture of a daffodil is presented with the probe pictures of a leaf and a daisy. The correct response to this item would be the picture of the daisy because it is from the same class of objects (e.g., two different types of flowers). Riddoch and Humphreys (1993) argued that successful performance on this task can only be done by accessing some stored semantic knowledge about the presented items.

In the Associative Match Task (test 12) a target is presented that is functionally associated with one of the two probe pictures. For example, a picture of a wine bottle is presented as the target stimulus and the probe pictures include a picture of grapes and an onion.
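The two-standard-deviation criterion used to flag impaired BORB performance can be written as a simple cutoff. The following is a minimal sketch rather than part of the BORB itself: the function name and the example numbers are hypothetical, and it assumes that lower scores indicate poorer performance, so "two standard deviations away" is read as two standard deviations below the control mean.

```python
def is_impaired(score: float, control_mean: float, control_sd: float,
                n_sd: float = 2.0) -> bool:
    """Return True when a score lies at least n_sd control SDs below the control mean.

    Assumption: lower scores mean worse performance on the subtest.
    """
    cutoff = control_mean - n_sd * control_sd
    return score <= cutoff


# Hypothetical control norms (not taken from the BORB manual).
print(is_impaired(score=22, control_mean=27.0, control_sd=2.0))  # True  (cutoff is 23.0)
print(is_impaired(score=25, control_mean=27.0, control_sd=2.0))  # False
```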

In summary, FS and CS appear to have few problems in forming three-dimensional representations of visually presented objects. Both patients present with few problems accessing semantic knowledge about objects but do have problems on an object decision test, a task traditionally thought to assess the integrity of patients' structural descriptions (Riddoch & Humphreys, 1993). Our goal in testing FS and CS with the BORB was to capture a general picture of their object identification capabilities. Such relatively global assessments are not specifically designed to show that their semantic attribute knowledge was preserved for one class of objects (i.e., artifacts) and impaired for other classes of objects (i.e., animals), or conversely, to show that their visual attributes were more impaired than their functional attributes. The fact that both patients had relatively unimpaired performance in accessing semantic information about the entities that comprise these subtests suggests, however, that they do not have any such semantic-attribute retrieval impairments. Thus, FS's and CS's problems in the visual identification of objects appear to preferentially involve impaired structural descriptions as opposed to an impaired semantic system. The goal of the current experiments was to see whether the nonvisual attributes of objects that are stored in these relatively intact semantic stores could aid patients' performance in their visual identification of the objects in the ELM task.

Eight healthy elderly male participants, matched on age and education to our patients, were asked to rate the visual and nonvisual similarity of the object triads referenced by the labels used in the study (banjo, violin, guitar; snorkel, cane, crowbar; spike, straw, pencil); their ratings are summarized in Table 2. Each participant was provided with the list of labels contained within each triad and asked to imagine each triad of concepts (e.g., snorkel, cane, crowbar) and rate how visually similar the triad of objects are to one another. In another condition participants were asked to rate how similar the same triads were in terms of their nonvisual attributes (i.e., what the object does, what the object is used for, etc.). Participants were provided a 7-point Likert scale below the printed words, where 1 was labelled “not similar” and 7 was labelled “very similar.” The mean visual similarity ratings were 6.0 for the banjo, violin, guitar triad; 5.6 for the snorkel, cane, crowbar triad; and 5.3 for the spike, straw, pencil triad. These differences were not statistically significant (p > .4). The mean nonvisual similarity ratings were 6.1 for the banjo, violin, guitar triad; 1.8 for the snorkel, cane, crowbar triad; and 2.0 for the spike, straw, pencil triad. Planned comparisons indicated that the latter two triads did not differ from one another, t(7) = .607, p = .56, but the banjo, guitar, violin triad had higher nonvisual similarity ratings than either the snorkel, cane, crowbar triad, t(7) = 13.5, p < .001, or the spike, straw, pencil triad, t(7) = 10.4, p < .001.

Table 2. Control participant ratings of the visual and nonvisual similarity of the object triads
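Because the same eight raters judged every triad, the reported t(7) values are consistent with paired-samples comparisons. The sketch below shows how such a statistic could be computed; it is an illustration under that assumption, not the authors' analysis script. The function name is hypothetical, and the individual ratings are invented for illustration because the source reports only means.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    if se == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / se, len(diffs) - 1


# Hypothetical 1-7 nonvisual similarity ratings from eight raters (NOT the study data).
instruments = [6, 7, 6, 5, 7, 6, 6, 6]
tools = [2, 1, 2, 2, 3, 1, 2, 2]

t_value, df = paired_t(instruments, tools)
print(f"t({df}) = {t_value:.2f}")
```

With eight raters the degrees of freedom are 7, matching the form of the statistics reported in the text.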

The experiments were presented using a Macintosh Powerbook (170) computer controlled by Psychlab software (Bub & Gum, 1990).

Three computer shapes were generated, such that within this triad, shapes had multiple overlapping visual attributes. Shapes A, B, and C were all equally tapered. Shapes B and C were equally curved (1/3 the curvature of Shape A); Shapes A and B were equally thick (30 mm) and were both twice the width of Shape C (15 mm along the horizontal axis) (see Figure 1).

Figure 1. The computer-generated shape set.
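The overlap among the three shapes can be made explicit by listing, for each pair, the attributes on which the two shapes have identical values. The following is a sketch under stated assumptions: the class and function names are hypothetical, thickness values come from the text, curvature is expressed relative to Shape A, and the tapering value is a placeholder because the text says only that it was equal across shapes.

```python
from dataclasses import dataclass, fields
from fractions import Fraction
from itertools import combinations


@dataclass(frozen=True)
class Shape:
    name: str
    tapering: Fraction    # placeholder units; equal for all three shapes
    curvature: Fraction   # relative to Shape A's curvature
    thickness_mm: int     # 30, 30, and 15 mm, as given in the text


SHAPES = [
    Shape("A", Fraction(1), Fraction(1), 30),
    Shape("B", Fraction(1), Fraction(1, 3), 30),
    Shape("C", Fraction(1), Fraction(1, 3), 15),
]


def shared_attributes(s: Shape, t: Shape) -> list:
    """Names of the visual attributes on which two shapes have the same value."""
    return [f.name for f in fields(Shape)
            if f.name != "name" and getattr(s, f.name) == getattr(t, f.name)]


for s, t in combinations(SHAPES, 2):
    print(s.name, t.name, shared_attributes(s, t))
# A B ['tapering', 'thickness_mm']
# A C ['tapering']
# B C ['tapering', 'curvature']
```

Every pair shares at least one attribute value, which is the sense in which the triad has multiple overlapping visual attributes.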

The triad of shapes shown in Figure 1 was associated with three sets of labels. Label names are presented along with their word frequency and familiarity ratings (freq, fam), which were obtained from the MRC Psycholinguistic Database (2004). Labels referencing entities with visually similar forms but distinct nonvisual features included the “snorkel” (0, 0), “crowbar” (0, 0), and “cane” (12, 442) triad; and the “spike” (2, 471), “straw” (15, 508), and “pencil” (34, 598) triad. Labels referencing entities with visually similar forms and similar nonvisual features comprised the “banjo” (2, 0), “guitar” (19, 550), and “violin” (11, 468) triad.

Shapes were presented one at a time accompanied by a digitized recording of their preassigned label. Shapes were centrally presented while the name was simultaneously played over the computer's speakers. Each shape remained on-screen for 2000 ms, followed by a blank screen intertrial interval of 3000 ms. Six such learning trials were presented (two of each shape-label pairing). Learning trials were presented in pseudorandom order.

After a 500-ms READY prompt and a 500-ms blank interstimulus interval, shapes were centrally presented without their labels. Participants attempted to “name” the shape (give the label associated with the shape on learning trials). Naming was not under time pressure. Shapes remained on-screen until an answer was provided. Participants were provided with a printed list of that session's three possible labels to refer to as needed. All answers including “don't know” were recorded, and participants were encouraged to guess if they were not sure. Six such test trials were presented (two of each shape). A 1000-ms intertrial interval separated test trials.

This interleaved six-learning-six-test trial pattern was repeated until 144 learning and 144 test trials were completed in each of the three shape-label conditions. The critical data are the numbers of errors participants made on test trials for the various shape-label set pairings. Testing was conducted over three separate sessions for each patient with a minimum of 24 hours between sessions. The presentation of shape-label set pairings was counterbalanced across patients.
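The interleaved design implies 144 / 6 = 24 learning-test blocks per shape-label condition, with each shape tested 48 times. A minimal sketch of such a schedule follows. It is not the Psychlab script used in the study: the function name and seed are hypothetical, a plain shuffle stands in for the unspecified pseudorandom ordering, and shuffling the test trials is an assumption because the text states the ordering only for learning trials.

```python
import random


def build_schedule(shapes=("A", "B", "C"), total_per_phase=144,
                   reps_per_block=2, seed=0):
    """Return a list of blocks, each with six learning and six test trials."""
    rng = random.Random(seed)
    block_size = len(shapes) * reps_per_block      # 3 shapes x 2 repetitions = 6
    if total_per_phase % block_size:
        raise ValueError("total trials must be a multiple of the block size")
    schedule = []
    for _ in range(total_per_phase // block_size):  # 144 / 6 = 24 blocks
        learning = list(shapes) * reps_per_block
        test = list(shapes) * reps_per_block
        rng.shuffle(learning)   # stand-in for the pseudorandom learning order
        rng.shuffle(test)       # assumption: test order is also randomized
        schedule.append({"learning": learning, "test": test})
    return schedule


blocks = build_schedule()
print(len(blocks))                                  # 24
print(sum(b["test"].count("A") for b in blocks))    # 48
```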

Six healthy male participants, matched to the patients on age (mean age 64.5, SD = 5.0) and education, performed the shape-label identification task. As expected, healthy participants had few problems with the task. The mean numbers of confusion errors made by our participants were 3.7 (SD = 1.4) for the banjo, violin, guitar triad; 1.8 (SD = .75) for the snorkel, cane, crowbar triad; and 1.2 (SD = 1.2) for the spike, straw, pencil triad. Planned comparisons indicated that performance on the latter two triads did not differ, t(5) = .933, p = .39, but participants made more confusion errors on the banjo, guitar, violin triad than on either the snorkel, cane, crowbar triad, t(5) = 3.1, p < .05, or the spike, straw, pencil triad, t(5) = 2.7, p < .05.

As illustrated in Figure 2, ELM's error rate was 28% (41/144) on the banjo, guitar, violin triad and 8% (11/144) for the snorkel, cane, crowbar triad, and 13% (19/144) for the spike, straw, pencil triad. Performance on the latter two triads was not significantly different (χ2(1) = 2.13, p = ns). As expected, ELM made more errors on the banjo, guitar, violin triad, than on the snorkel, cane, crowbar (χ2(1) = 17.31, p < .001) or the spike, straw, pencil triad (χ2(1) = 8.07, p < .005).

Figure 2. Patient performance on the ELM paradigm.

As illustrated in Figure 2, FS's error rate was 54% (78/144) on the banjo, guitar, violin triad, and 15% (22/144) for the snorkel, cane, crowbar triad, and 8% (12/144) for the spike, straw, pencil triad. Performance on the latter two triads was not significantly different (χ2(1) = 3.37, p = ns). As expected, FS made more errors on the banjo, guitar, violin triad, than on the snorkel, cane, crowbar triad (χ2(1) = 48.6, p < .001) or the spike, straw, pencil triad (χ2(1) = 74.6, p < .001).

As illustrated in Figure 2, CS's error rate was 32% (46/144) on the banjo, guitar, violin triad, and 15% (21/144) for the snorkel, cane, crowbar triad, and 16% (23/144) for the spike, straw, pencil triad. Performance on the latter two triads was not significantly different (χ2(1) = 0.11, p = ns). As expected, CS made more errors on the banjo, guitar, violin triad, than on the snorkel, cane, crowbar (χ2(1) = 12.4, p < .001) or the spike, straw, pencil triad (χ2(1) = 10.24, p < .001).
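The text does not say which chi-square variant was computed. One reading that reproduces the three values reported for ELM is a one-way chi-square on the two error counts with equal expected frequencies. This is an inference from the numbers rather than a documented method, and it does not reproduce the values reported for FS and CS exactly, so the sketch below should be treated as illustrative only. The function name is hypothetical; the error counts are those given in the text.

```python
def chi_square_equal_expected(errors_a: int, errors_b: int) -> float:
    """One-way chi-square (df = 1) testing whether two error counts are equal."""
    expected = (errors_a + errors_b) / 2
    if expected == 0:
        raise ValueError("at least one error is needed")
    return sum((obs - expected) ** 2 / expected for obs in (errors_a, errors_b))


TEST_TRIALS = 144
ELM_ERRORS = {"banjo, guitar, violin": 41,
              "snorkel, cane, crowbar": 11,
              "spike, straw, pencil": 19}

for triad, errors in ELM_ERRORS.items():
    print(triad, f"{100 * errors / TEST_TRIALS:.0f}%")   # 28%, 8%, 13%

print(round(chi_square_equal_expected(41, 11), 2))  # 17.31
print(round(chi_square_equal_expected(41, 19), 2))  # 8.07
print(round(chi_square_equal_expected(11, 19), 2))  # 2.13
```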

The primary purpose of this experiment was to elucidate the role of nonvisual attribute knowledge on visual identification performance. The results reported here suggest that nonvisual attribute knowledge plays a critical role in determining visual identification performance. All three patients (ELM, FS, and CS) demonstrated significantly poorer identification performance on the banjo, violin, guitar triad as compared to the triads of snorkel, cane, crowbar and spike, straw, pencil. These results, albeit to a much lesser degree, were also apparent in our healthy participants. For each triad, the visual similarity of the computer-generated shapes was equated by using the same shapes within each triad. Furthermore, the similarity among the visual attributes of the objects referenced by the labels was also equal across all three triads (i.e., healthy participants gave equivalent visual similarity ratings for banjo, guitar, violin; snorkel, cane, crowbar; and spike, straw, pencil). However, the proximity of the nonvisual semantic attributes differed across the three triads (i.e., healthy participants gave high similarity ratings for banjo, guitar, and violin, but low ratings for the triads of snorkel, cane, crowbar and spike, straw, pencil). Since the computer-generated shapes were the same in all three triads and the visual semantic information among the entities was also similar, the differences in identification performance must be attributable to the nonvisual semantic information associated with the shapes in each triad.

These results are inconsistent with the idea that in visual identification only the visual attributes of the objects being identified play a significant role. Rather, they support theories that advocate for the contribution of both visual and nonvisual attributes in visual identification, such as the psychological distance account.

The psychological distance account by Dixon and colleagues (Dixon et al., 1997, 1999) proposes that semantic similarity effects are due to the proximity of nonvisual attributes within semantics. Since the snorkel, cane, crowbar and the spike, straw, pencil sets have disparate nonvisual attributes, Dixon and colleagues would predict better performance on these triads than on the triad where the nonvisual attributes are similar. The performance of patients on the shape-label task provides empirical support for theories that emphasize the importance of both visual and nonvisual semantic similarity as critical factors in understanding visual object identification.

Dixon and colleagues assumed that because in the ELM paradigm the computer-generated shapes are provided to the participants, these shapes stand for, and replace, the actual forms of the objects referenced by the labels. As such, Dixon and colleagues assume that when participants access semantics of the labels from the computer-generated shapes that are provided to the participant, they access only the nonvisual attributes (when shown the “guitar” computer-generated shape, they access nonvisual attributes such as what it is used for, how it is played, etc.). It could be argued, however, that in addition to accessing nonvisual attributes (e.g., how it is played), participants also access visual attributes (i.e., the form of a guitar). If participants accessed both nonvisual and visual attributes when performing the ELM task, then one would expect superior performance on a triad in which the labels referenced objects that are distinct in terms of both their visual and nonvisual semantics. Although such a triad was not presented in the current study, in an earlier paper by Schweizer and colleagues (2001), patient FS was tested using exactly the same shape triad used in this experiment but paired with the labels bell, eraser, cane (a set of labels that reference objects with nonoverlapping nonvisual semantic attributes, as well as nonoverlapping visual semantic attributes). If patients accessed visual attributes as well as nonvisual attributes, then one would predict superior performance on this set relative to the snorkel, cane, crowbar or spike, straw, pencil set because the differing visual features of bell, eraser, and cane would further separate these entities in multidimensional psychological space, rendering them less prone to errors.
FS's error rate was 10% (14/144) on the bell, eraser, cane triad, an error rate comparable to his performance in the current experiment using exactly the same shapes paired with snorkel, cane, crowbar (15%) and spike, straw, pencil (8%). These results are compatible with the idea that, at least for this patient, the computer-generated shapes do indeed stand for, and replace, the forms of the objects referenced by the labels, and that only the nonvisual semantic information was critical in determining identification performance. Further research is necessary, however, before concluding that all participants in the ELM paradigm only access the nonvisual features of objects referenced by the labels. Possibly, only patients like FS who have sustained damage to higher-order visual centers will limit their access to the nonvisual attributes of the objects referenced by the labels. By contrast, healthy observers, for whom higher aspects of vision are fully functional, may access both the nonvisual and visual aspects of the entities referenced by the labels in performing the ELM task. At present, what is unequivocal is that for these patients identifying these particular triads, the nonvisual attributes of the objects referenced by the labels clearly played a key role in patients' visual identification performance.

Other researchers have also acknowledged the importance of nonvisual knowledge in visual object identification in patients with category-specific deficits. Tranel et al. (1997a, 1997b) reported results from a large-scale study investigating the retrieval of what they refer to as “conceptual knowledge” in brain-damaged patients. Conceptual knowledge referred to attributes such as what an object does, what it is used for, familiarity, value to the perceiver, characteristic sounds that objects make, etc. They reported that patients with impaired concept retrieval for a particular item were unable to visually identify that item, suggesting that intact retrieval of conceptual knowledge is crucial to identifying visually presented objects.

A number of studies have demonstrated that patients' identification performance in several different domains can be influenced by nonvisual information. Providing nonvisual semantic information about faces can play a facilitating role in later face identification (Klatzky et al., 1982; Dixon et al., 1998). Further support for the notion that nonvisual semantic information influences visual discrimination comes from research on neurologically intact individuals. Gauthier and colleagues (2003) had participants associate arbitrary nonvisual semantic features (e.g., sticky, heavy, sweet) with novel creature-like objects called “YUFOs.” One group of participants was trained to associate each YUFO with dissimilar nonvisual features while the other group associated each YUFO with similar nonvisual features. After receiving equivalent amounts of training, each group performed a sequential matching task with the YUFOs. The results suggest that associating YUFOs with semantically dissimilar nonvisual features translated to better performance on the sequential matching task than associating the same objects with semantically similar nonvisual features. That is, participants were more likely to notice that YUFO 1 was different from YUFO 2 if the two YUFOs were highly distinct in terms of their nonvisual features (e.g., one was sticky, heavy, and sweet, and one was smooth, light, and sour). By contrast, if the two YUFOs had the same nonvisual features, participants were less likely to notice that the two YUFOs were actually different. Interestingly, Gauthier et al. (2003) pointed out that this effect was obtained with a task that could be performed using the visual information alone; it was never a requirement of the matching task that participants explicitly retrieve the nonvisual features. As a result, they concluded that access to semantic information may be an automatic process during visual discrimination, because the nonvisual semantic features associated with the novel objects significantly influenced visual discrimination judgments that could have been made on the basis of the visual properties alone. A recent neuroimaging study utilizing the same paradigm revealed that the brain regions involved in the processing of conceptual knowledge were activated when participants visually processed novel objects paired with arbitrary concepts (James & Gauthier, 2004).

Other research pointing to the importance of nonvisual object attributes in visual object identification comes from neuroimaging studies. Martin et al. (2000) suggest that for successful visual object identification to take place, one needs to access prior knowledge about the viewed object. A number of researchers have reported that naming tools selectively activates the left middle temporal gyrus and the left premotor region and suggest that these areas are responsible for storing knowledge about how particular objects are used (Martin et al., 1995; Perani et al., 1995; Martin et al., 1996; Chao et al., 1999; Chao & Martin, 2000). They suggest that activating the stored nonvisual knowledge about an object's function and its associated motor patterns is an important part of visual tool identification. In contrast, naming animals selectively activated the left medial occipital lobe and inferior temporal regions. Martin and colleagues concluded that this activity reflected “top-down activation, which would occur whenever information about visual attributes is needed to distinguish between category members” (Martin et al., 2000, p. 1028).

The weight of evidence presented by three patients, each with visual identification impairments as a result of different etiologies (stroke, DAT, and HSVE), favors the notion that nonvisual attribute information can profoundly influence visual object identification. All of our patients performed significantly better when attempting to identify members of either the snorkel, cane, crowbar triad or the spike, straw, pencil triad, relative to the guitar, violin, banjo triad. Thus, it appears that explaining the pattern of visual identification impairments solely by the proximity of either the presented forms of objects or the visual semantics of objects is inadequate. Taken together, converging lines of evidence, ranging from neuroimaging data to the behavioral evidence reported by other labs and in the current study, suggest that nonvisual attributes of objects play a significant role in visual identification.

This work was supported by a Natural Sciences and Engineering Research Council of Canada (NSERC) Doctoral Award to TAS and a Premier's Research Excellence Award to MJD.

Patient data versus controls on tests of visual processing from the Birmingham Object Recognition Battery (BORB).

Control participant ratings of the visual and nonvisual similarity of the object triads.

The computer-generated shape set.

Patient performance on the ELM paradigm.