INTRODUCTION

In Australia and internationally, neuropsychologists are primarily located in metropolitan areas (Australian Institute of Health and Welfare, 2018; Janzen & Guger, 2016; Psychology Board of Australia, 2018; Sweet, Benson, Nelson, & Moberg, 2015). Teleneuropsychology, defined as the provision of neuropsychological services via telecommunication technologies, particularly videoconference (Cullum & Grosch, 2013), has the potential to expand the reach of neuropsychological services to those in underserviced areas. Beyond this, videoconference-based consultations could increase access to neuropsychology for those with mobility restrictions and could increase engagement and representation in research. More recently, the potential of teleneuropsychology to accommodate infection control measures such as those put in place as a result of COVID-19 has also been realised. Emerging evidence supports the use of videoconferencing for various aspects of neuropsychological practice including taking a clinical history (e.g., Martin-Khan et al., 2012; Martin-Khan, Varghese, Wootton, & Gray, 2007; Schopp, Johnstone, & Merveille, 2000) and providing cognitive interventions (e.g., Burton & O’Connell, 2018; Lawson et al., 2020).

Neuropsychological assessment (in particular, test administration) remains at the core of neuropsychological practice in most settings (Ponsford, 2016). This is also the area of practice in which neuropsychologists are most apprehensive and least confident about the use of videoconference (Chapman et al., 2020). Researchers have compared in-person and videoconference-based neuropsychological test administration in several populations including healthy individuals (Cullum, Hynan, Grosch, Parikh, & Weiner, 2014; Hildebrand, Chow, Williams, Nelson, & Wass, 2004; Jacobsen, Sprenger, Andersson, & Krogstad, 2003; Rebchuk et al., 2019; Wadsworth et al., 2016, 2018), and those with mild cognitive impairment (MCI; Cullum et al., 2014; Wadsworth et al., 2016, 2018), Alzheimer’s disease (AD; Cullum et al., 2014; Wadsworth et al., 2018), unspecified dementia (Wadsworth et al., 2016), early psychosis (Stain et al., 2011), a history of alcohol abuse (Kirkwood, Peck, & Bennie, 2000), and developmental disorders (Temple, Drummond, Valiquette, & Jozsvai, 2010). Results from this body of research are broadly promising (for a review, see Brearly et al., 2017; Marra, Hamlet, Bauer, & Bowers, 2020). The authors of a recent meta-analysis demonstrated that for non-timed tests that allow for repetition, videoconference scores were one-tenth of a standard deviation below in-person scores (Brearly et al., 2017). In contrast, in-person and videoconference scores were equivalent for verbally mediated, timed tests that proscribe repetition (e.g., list learning tasks; Brearly et al., 2017). Client evaluations of acceptability have also been broadly positive (Hildebrand et al., 2004; Hodge et al., 2019; Kirkwood et al., 2000; Jacobsen et al., 2003; Parikh et al., 2013; Stain et al., 2011). However, to date, no research has compared performance scores between in-person and videoconference-based administrations of neuropsychological measures, nor examined patient acceptability of this method of assessment, in stroke survivors. It is important to investigate the suitability of videoconference-based neuropsychological test administration in stroke survivors because it cannot be assumed that the results of the above studies are generalisable to a stroke population who present with a diverse range of cognitive impairments. Further, many of the neuropsychological measures commonly used in the assessment of stroke survivors have not previously been evaluated for videoconference-based administration.

Cognitive impairment is very common following stroke (Lesniak, Bak, Czepiel, Seniow, & Czlonkowska, 2008). While estimates of the rate of post-stroke cognitive impairment vary from approximately 30% to 90% depending on various factors such as the method of recruitment used in the study (e.g., hospital or community recruitment) and the criteria used to define cognitive impairment, the most reliable estimates suggest that cognitive impairment occurs in over 70% of stroke survivors (Lesniak et al., 2008). It is important to assess post-stroke cognition in order to plan and guide effective multidisciplinary rehabilitation and because of its prognostic value with regard to long-term outcomes (Nys et al., 2006; Saxena, Ng, Koh, Yong, & Fong, 2007; Wagle et al., 2011). Indeed, the authors of Australian clinical guidelines recommend that (a) all stroke survivors should undergo cognitive screening and (b) where screening indicates likely cognitive impairment, a full neuropsychological evaluation should be undertaken (Stroke Foundation, 2017). However, reflecting the above-reported disparate distribution of neuropsychologists, these recommendations are not currently being met (Stroke Foundation, 2018, 2019). Based on the most recent Australian audit data, only 30% of acute stroke services have access to neuropsychology, which includes 46% of metropolitan services and just 10% of inner regional services and 13% of outer regional services (Stroke Foundation, 2019). Further, only 41% of rehabilitation services have access to neuropsychology (Stroke Foundation, 2018). Clearly, increasing access to neuropsychological services, by conducting neuropsychological assessments via videoconference, could benefit stroke survivors (and their carers) by increasing the identification, characterisation, and management of cognitive impairment post-stroke.

Aims and Hypotheses

Our primary aim was to compare performance across in-person and videoconference-based administrations of common neuropsychological tasks in community-based survivors of stroke. On the basis of previous research, we hypothesised that performance in the in-person and videoconference conditions would be comparable for all tests. The secondary aim was to evaluate the acceptability of videoconference-based neuropsychological assessment to participants. Again drawing on previous research, we hypothesised that participants would rate videoconference-based neuropsychological assessment as highly acceptable.

METHOD

The design, procedure, and sample for this study have previously been published in Chapman et al. (2019), which presents results comparing in-person and videoconference-based administrations of the Montreal Cognitive Assessment (MoCA). The study design is provided briefly below, with greater detail on aspects of the study not provided in the initial paper published from this research.

Design

In-person and videoconference sessions were conducted in a counterbalanced order (randomised crossover design). We aimed for an interval of 2 weeks between sessions to balance the impact of practice effects and natural changes/fluctuations in cognition. For the Hopkins Verbal Learning Test – Revised (HVLT-R), alternate forms (Forms 1 and 2; Brandt & Benedict, 2001) were used. Form versions were counterbalanced on an opposite schedule to condition order. For example, half of the participants who completed the in-person session first were administered Form 1 in this session and Form 2 in the videoconference session while the other half were administered the forms in the opposite order across sessions (see Figure 1).

Fig. 1. Participant recruitment, progression, and counterbalancing. HVLT-R = Hopkins Verbal Learning Test – Revised.

Participants

Participants were community-dwelling survivors of stroke recruited through community advertising and a stroke-specific database of former research participants. Participants were required to have a confirmed diagnosis of stroke and to be older than 18 years, proficient in English, and at least 3 months post-stroke, to avoid the most rapid period of spontaneous recovery (Skilbeck, Wade, Hewer, & Wood, 1983). People were excluded if they had (a) a recent or upcoming neuropsychological assessment for clinical purposes, (b) a concurrent neurological diagnosis other than stroke and/or a major psychiatric diagnosis(es), and/or (c) any sensory, motor or language impairment that would significantly preclude the standardised unadapted administration of tests. Sensory, motor, and language impairments were screened for in the initial contact with the participant and/or their carer. Where participants had language impairment but were still able to understand the study and provide consent, they were included and their capacity to complete each measure was assessed through the administration of the initial instructions and sample items. Where language impairment was likely to preclude valid administration of a measure, that measure was excluded for that participant. There were no exclusion criteria regarding access to technology, as we provided the required technology. Data were collected between November 2016 and February 2019 in Melbourne, Australia and surrounding regional areas.

Measures

Participant characteristics

Demographic data were obtained using a verbally administered questionnaire. Stroke information (e.g., mechanism and location of stroke) was sought from the participant’s medical records (from their acute treating hospital or general practitioner) with their written consent. The following measures were administered to characterise the sample.

Cognitive screen

The MoCA is a 30-point cognitive screening measure (Nasreddine et al., 2005). Items assess visuospatial/executive function, naming, attention, language, abstraction, delayed recall, and orientation (Nasreddine et al., 2005). Conventionally, scores of ≤25 are considered indicative of likely cognitive impairment (Nasreddine et al., 2005). However, some authors suggest that a cutoff of ≤24 is more sensitive and specific in chronic stroke samples (Pendlebury, Mariz, Bull, Mehta, & Rothwell, 2012, 2013). Research supports the psychometric properties of the MoCA for use following stroke (Burton & Tyson, 2015). To allow for comparison of MoCA performance across in-person and videoconference administrations (presented in Chapman et al., 2019), alternate versions were administered in both conditions (in the same counterbalanced design as the neuropsychological measures). As such, where MoCA scores are reported and used in this paper, an average of these two administrations has been used.

Computer proficiency

The Computer Proficiency Questionnaire (CPQ) is a 33-item self-report measure of frequency and ease of computer use across six categories (e.g., Computer Basics; Boot et al., 2015). Responses to items (e.g., “I can: Turn a computer on and off”) are provided on a 5-point scale ranging from 1 (never tried) to 5 (very easily); average scores in each of the six categories are summed to obtain a total score between 6 (low computer proficiency) and 30 (high computer proficiency; Boot et al., 2015). This measure has sound psychometric properties (Boot et al., 2015).
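The scoring rule described above can be illustrated with a minimal sketch. The function name `cpq_total`, the placeholder category labels, and the response values are all hypothetical; only the rule itself (average the 1 to 5 ratings within each category, then sum the six category averages) comes from the description above.

```python
from statistics import mean


def cpq_total(category_items):
    """Sum the mean rating of each category (items are rated 1 to 5)."""
    if len(category_items) != 6:
        raise ValueError("expected ratings for six categories")
    for name, ratings in category_items.items():
        if not ratings or any(r < 1 or r > 5 for r in ratings):
            raise ValueError(f"invalid ratings for category {name!r}")
    return sum(mean(ratings) for ratings in category_items.values())


# Hypothetical responses; labels other than "Computer Basics" are placeholders.
responses = {
    "Computer Basics": [5, 5, 4, 5, 5],
    "Category 2": [3, 4, 3, 3, 2],
    "Category 3": [4, 4, 5, 4, 4],
    "Category 4": [2, 2, 3, 1, 2],
    "Category 5": [5, 4, 4, 5, 5],
    "Category 6": [1, 1, 2, 1, 1],
}
print(round(cpq_total(responses), 2))
```

Because each category contributes its average rather than its sum, categories with different numbers of items carry equal weight in the total.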

Mood

The Hospital Anxiety and Depression Scale (HADS) is a 14-item self-report measure that assesses symptoms of anxiety (HADS-A) and depression (HADS-D; Zigmond & Snaith, 1983). Items (e.g., HADS-A: “I feel tense or ‘wound-up’”) are answered on a scale from 0 (indicating the least frequent occurrence; e.g., not at all) to 3 (indicating the most frequent occurrence; e.g., most of the time; Zigmond & Snaith, 1983). Item scores are summed within each subscale, and the subscale total reflects either normal (0–7), mild (8–10), moderate (11–14), or severe (15–21) symptomatology (Zigmond & Snaith, 1983). This measure has sound psychometric properties (Bjelland, Dahl, Haug, & Neckelmann, 2002; Zigmond & Snaith, 1983).
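As an illustration of the banding above, the following sketch sums one subscale and maps the total to its severity band. The function name `hads_band` is hypothetical, and the assumption of seven items per subscale is inferred from the 14-item total and the 0 to 21 subscale range rather than stated directly in the text.

```python
def hads_band(subscale_items):
    """Return (score, band) for one HADS subscale of seven 0-3 item ratings."""
    if len(subscale_items) != 7 or any(r not in (0, 1, 2, 3) for r in subscale_items):
        raise ValueError("expected seven item ratings between 0 and 3")
    score = sum(subscale_items)
    if score <= 7:
        band = "normal"
    elif score <= 10:
        band = "mild"
    elif score <= 14:
        band = "moderate"
    else:
        band = "severe"
    return score, band


# Hypothetical HADS-A responses for one participant.
print(hads_band([1, 2, 1, 0, 2, 1, 2]))
```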

Neuropsychological measures

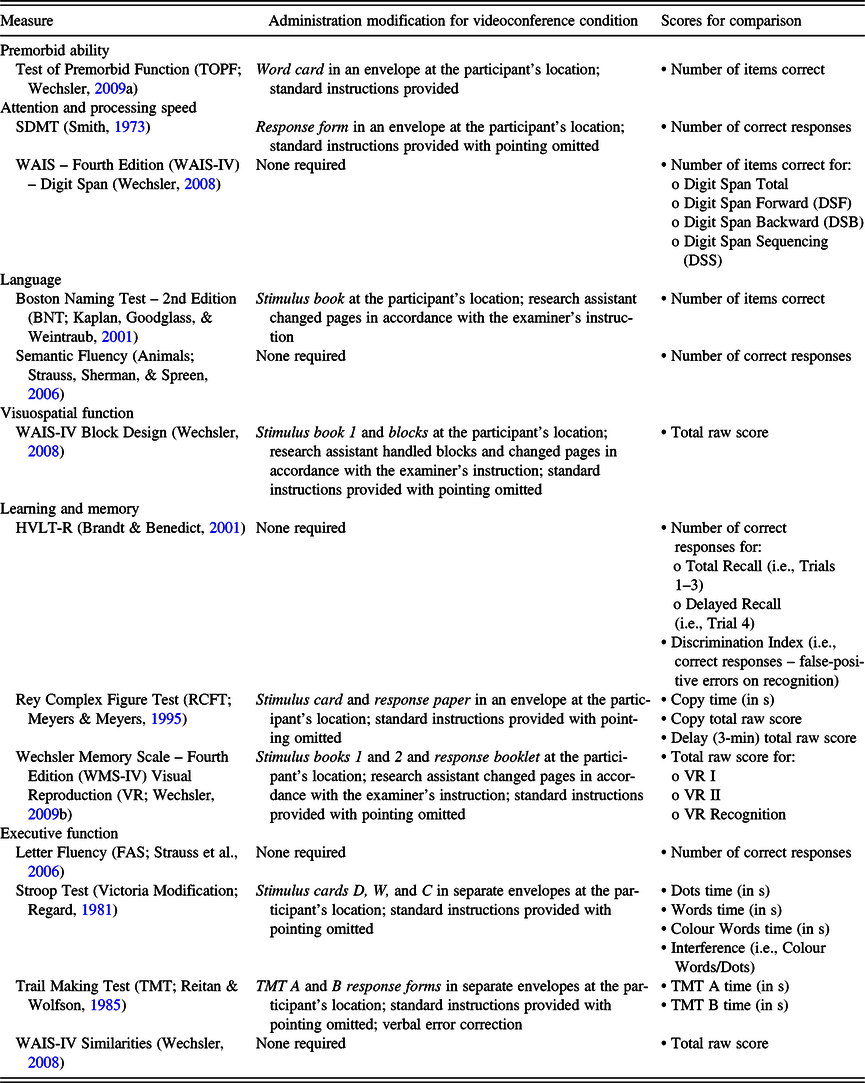

We evaluated neuropsychological measures across a range of cognitive domains, specifically, premorbid ability, attention and processing speed, language, visuospatial function, visual and verbal learning and memory, and executive function. We evaluated measures frequently used in the assessment of stroke survivors, with measure selection guided by the common data elements developed by the National Institute of Neurological Disorders and Stroke and Canadian Stroke Network (Hachinski et al., 2006). We refined the 60-min battery defined therein, based on consultation with experts in stroke rehabilitation (namely, Betina Gardner, Jennie Ponsford, and Renerus Stolwyk). In refining this battery, the oral Symbol Digit Modalities Test (SDMT; Smith, 1973) replaced the Wechsler Adult Intelligence Scale – Third Edition (WAIS-III; Wechsler, 1997) Digit Symbol Coding subtest to accommodate stroke survivors with upper limb impairments. Additional measures (e.g., Stroop Test [Victoria Modification]) were also added to expand the assessment of some domains. The neuropsychological battery is shown in Table 1. Although some measures assess multiple domains, they have been reported in only one domain.

Table 1. Neuropsychological measures including administration modifications utilised in the videoconference condition and scores for comparison

SDMT = Symbol Digit Modalities Test; WAIS = Wechsler Adult Intelligence Scale; HVLT-R = Hopkins Verbal Learning Test – Revised.

Acceptability of administration methods

We used a 14-item self-report survey of acceptability, modified from the measure developed by Parikh et al. (2013), including two questions about participants’ experience in the in-person condition, four questions about the videoconference condition, and eight questions comparing participants’ experience across conditions (see Supplementary Material).

Videoconference setup

Videoconference calls were established between two laptop computers and facilitated using a cloud-based software, Zoom (Copyright © 2019 Zoom Video Communications, Inc., San Jose, California, USA). Videoconference calls had an established bandwidth of 384 kb/s, which is sufficient for a one-to-one video call (Bartlett & Wetzel, 2010) and has been deemed appropriate in similar studies (e.g., Jacobsen et al., 2003). We utilised the integrated webcam of each laptop directed to obtain a portrait view of the researcher/participant. An additional USB-connected webcam on the participant’s laptop (located next to the integrated webcam) was directed so the researcher could observe the participant’s work station, and therefore, their performance of tasks. Cameras were switchable by the participant using a two-key command; they were trained to do this at the beginning of the videoconference session. The integrated webcam was used for verbal tasks, and the USB webcam was used where participants were required to interact with stimulus materials. Images depicting this setup are provided in the Supplementary Material for Chapman et al. (2019).

Procedure

This research was completed in accordance with the Helsinki Declaration. Ethics approval was provided by the Monash University Human Research Ethics Committee (CF16/130 – 2016000056). We obtained written informed consent and demographic data in the first session.

Both sessions were conducted by the same researcher (Jodie Chapman), at the same time of day (where possible), at the same location (participant’s home, university, or community location), and in a quiet, distraction-free room(s). In both conditions, neuropsychological tasks and the MoCA were administered in a predefined order, which minimised cross-task interference. All tests were administered in accordance with standardised administration instructions set out in test manuals. Modifications to standardised procedures in the videoconference condition are provided in Table 1. For three tasks (i.e., BNT, WAIS-IV Block Design, and WMS-IV VR), a research assistant was present to physically engage with test stimuli (e.g., turn pages) in the videoconference condition only. They were not required to provide instructions, time responses, or otherwise assist with task administration. There were several research assistants in this study who were all trained using a consistent protocol, although each participant was only exposed to one. In accordance with standard practice, task responses were recorded on response forms and scored after each session. Participants completed the CPQ, HADS, and acceptability survey at the end of the second session.

Data Analyses

Data analyses were conducted using IBM SPSS Statistics for Windows, Version 23.0. Scores compared for each measure are shown in Table 1. Raw scores, rather than standardised scores, were used for analyses as they have a greater range, allowing for a more nuanced comparison of performance. We used pairwise deletion of missing values for all analyses.

Comparing in-person and videoconference scores

A series of repeated-measures t tests were used to determine whether there was a significant difference between in-person and videoconference scores for each measure. Transformations were not conducted for non-normality as t tests are robust to violations of normality with sample sizes greater than 30 (Field, 2018). As we were looking for no difference between conditions (and therefore non-significant t tests), a less stringent alpha was more conservative in this instance (i.e., a Bonferroni adjustment was not applied).
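For readers who want to see the arithmetic behind a repeated-measures t test, the sketch below computes the t statistic, its degrees of freedom, and a paired-samples effect size from two lists of scores. The function name and data are hypothetical, and the effect size shown (d_z, the mean difference divided by the standard deviation of the differences) is only one of several Cohen's d variants; it is an assumption that this variant corresponds to the d values reported in this paper. The p value is omitted because it requires the t distribution, which the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_and_dz(in_person, videoconference):
    """Return (t, df, d_z) for paired scores, using in-person minus videoconference."""
    if len(in_person) != len(videoconference):
        raise ValueError("scores must be paired")
    diffs = [ip - vc for ip, vc in zip(in_person, videoconference)]
    n = len(diffs)
    sd_diff = stdev(diffs)                   # sample SD of the difference scores
    t = mean(diffs) / (sd_diff / sqrt(n))    # paired t statistic
    d_z = mean(diffs) / sd_diff              # effect size for paired designs
    return t, n - 1, d_z


# Hypothetical raw scores for six participants on one measure.
in_person = [24, 20, 27, 18, 22, 25]
videoconference = [22, 21, 25, 17, 22, 23]
t, df, d_z = paired_t_and_dz(in_person, videoconference)
print(f"t({df}) = {t:.2f}, d_z = {d_z:.2f}")
```

Note that for this effect size, t equals d_z multiplied by the square root of the number of pairs, so either value can be recovered from the other when the sample size is known.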

In keeping with similar studies (e.g., Cullum et al., 2014), intraclass correlation coefficient (ICC) estimates were used to assess the reliability of repeated (i.e., in-person and videoconference) administrations. We used single occasion, absolute agreement, two-way random effects ICC estimates and their 95% confidence intervals (Koo & Li, 2016). Bujang and Baharum (2017) suggest that the minimum sample size requirement for estimating ICCs to assess the reliability of different measurement methods varies between 18 and 50; therefore, our sample size was sufficient for these analyses. Normality was assessed for all variables using converging evidence from visual inspection of histograms and standardised skewness and kurtosis values. For most variables where issues of non-normality were identified, winsorising outliers remedied or significantly reduced these issues (Field, 2018). Analysis of relevant ICC estimates pre- and post-winsorising indicated only minimal influence of outliers on the results for these measures. Further, in most instances, winsorising outliers deflated rather than inflated the relevant correlations. We did not transform distributions with remaining issues of mild non-normality, as this would have significantly complicated the interpretation of results.
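The ICC form named above (two-way random effects, absolute agreement, single measurement) can be computed from the mean squares of a two-way ANOVA without replication. The sketch below is a minimal illustration with hypothetical data and a hypothetical function name; it uses the standard formula ICC = (MSR − MSE) / (MSR + (k − 1)MSE + k(MSC − MSE)/n), where MSR, MSC, and MSE are the mean squares for participants, conditions, and error, n is the number of participants, and k is the number of conditions. Confidence intervals are not computed here because they require the F distribution.

```python
def icc_absolute_single(scores):
    """Two-way random effects, absolute agreement, single measurement ICC.

    `scores` is a list of rows, one per participant, each holding one score
    per condition (here: [in-person, videoconference]).
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least 2 participants and 2 complete conditions")
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between conditions
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Hypothetical paired scores: [in-person, videoconference] per participant.
paired = [[24, 22], [20, 21], [27, 25], [18, 17], [22, 22], [25, 23]]
print(round(icc_absolute_single(paired), 3))
```

Because this is an absolute agreement coefficient, a constant shift between conditions lowers the estimate even when participants keep exactly the same rank order, which is why it suits a comparison of administration methods.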

Bland–Altman plots were constructed to further evaluate the agreement between conditions (Bland & Altman, 1986). In a Bland–Altman plot, an individual’s average score on a measure is plotted against their difference score on the measure (i.e., videoconference score minus in-person score; Bland & Altman, 1986). A Bland–Altman plot shows the bias (i.e., average difference) value and the 95% limits of agreement (i.e., limits within which 95% of difference scores will lie), both derived from the difference scores (Bland & Altman, 1986). In this study, for most measures, positive bias values represent superior performance in the videoconference condition, on average, while negative bias values represent superior performance in the in-person condition, on average. The opposite is true where higher numbers represent inferior performance on a measure (e.g., TMT). Winsorising outliers remedied non-normal difference distributions, where necessary (Field, 2018).
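The quantities plotted in a Bland–Altman analysis can be sketched as follows. The function name and data are hypothetical; the limits of agreement use the conventional formula of bias ± 1.96 standard deviations of the difference scores, and difference scores are taken as videoconference minus in-person, as described above.

```python
from statistics import mean, stdev


def bland_altman(in_person, videoconference):
    """Return plot points, bias, and 95% limits of agreement for paired scores."""
    if len(in_person) != len(videoconference):
        raise ValueError("scores must be paired")
    diffs = [vc - ip for ip, vc in zip(in_person, videoconference)]
    averages = [(vc + ip) / 2 for ip, vc in zip(in_person, videoconference)]
    bias = mean(diffs)
    sd_diff = stdev(diffs)
    return {
        "points": list(zip(averages, diffs)),   # (x, y) pairs for the plot
        "bias": bias,
        "lower_loa": bias - 1.96 * sd_diff,
        "upper_loa": bias + 1.96 * sd_diff,
    }


# Hypothetical raw scores for six participants on one measure.
result = bland_altman([24, 20, 27, 18, 22, 25], [22, 21, 25, 17, 22, 23])
print(result["bias"], round(result["lower_loa"], 2), round(result["upper_loa"], 2))
```

A negative bias in this sketch means that scores were, on average, lower in the videoconference condition; whether that reflects poorer performance depends on the scoring direction of the measure.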

If converging evidence from the above analyses indicated differences in test performance, we conducted further analyses. In this instance, we used multivariable models to evaluate the influence of participant characteristics on this outcome.

Acceptability of administration methods

For items where participants had to rate their satisfaction, ease of understanding during sessions or comfort with the videoconference equipment (items 1–5), numeric values from 1 (indicating the least favourable response; e.g., completely dissatisfied) to 5 (indicating the most favourable response; e.g., completely satisfied) were applied to response options. Averages were calculated, with higher scores representing greater satisfaction, understanding, or comfort. All other question responses were summarised by endorsement frequencies and percentages.

RESULTS

Sample Characteristics

Figure 1 displays participant recruitment, progression through the study, and counterbalancing. The most frequently reported reason for declining to participate was time constraints (due to rehabilitation commitments, return to work, etc.). Table 2 presents the demographic and clinical characteristics of the 48 participants. Years of education were calculated using the norms of Heaton, Miller, Taylor, and Grant (2004). Participants were on average in their mid-60s, Australian born, and had had a stroke over 5 years previously. Most participants had experienced an ischaemic stroke (68.8%). Half had experienced a left hemisphere stroke (50%), while fewer had experienced a right hemisphere stroke (33.3%) or bilateral strokes (12.5%). Stroke location was unknown for two (4.2%) participants. Sessions were completed on average 15.8 (SD = 9.7) days apart.

Table 2. Demographic and clinical characteristics of the sample

MoCA = Montreal Cognitive Assessment; CPQ = Computer Proficiency Questionnaire; HADS-A = Hospital Anxiety and Depression Scale – Anxiety; HADS-D = Hospital Anxiety and Depression Scale – Depression.

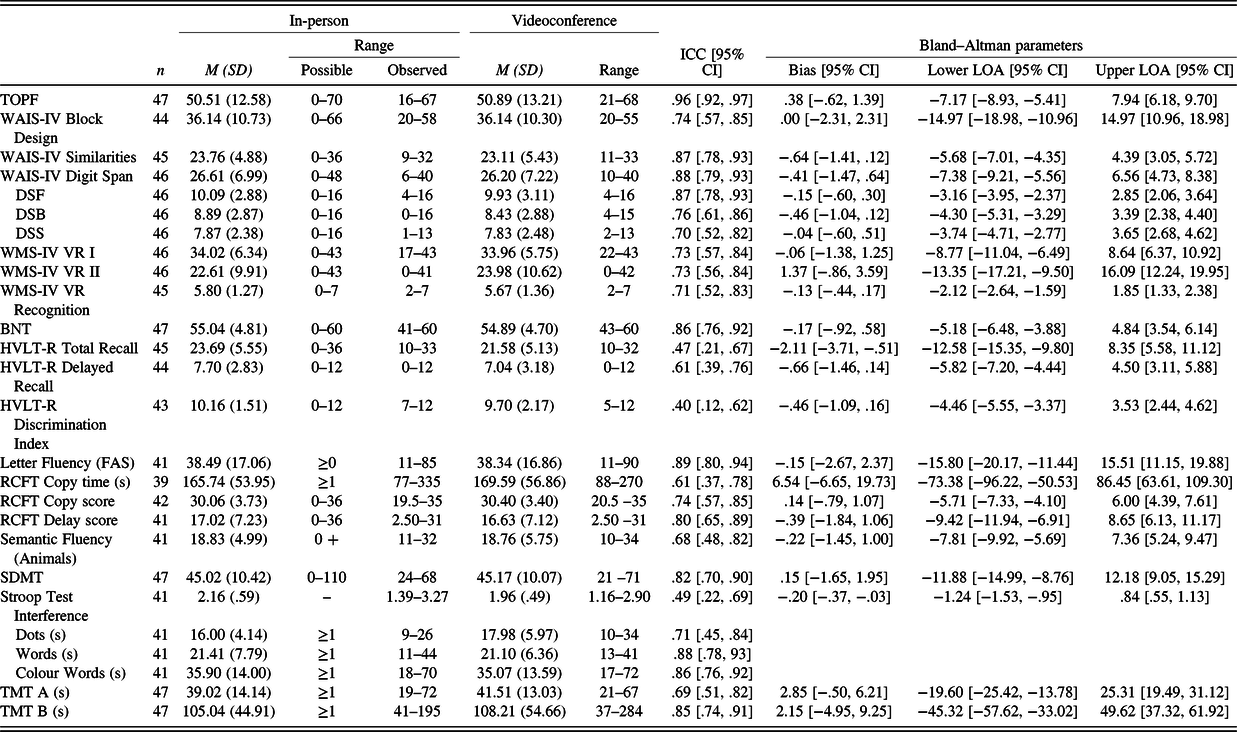

Comparing In-person and Videoconference Scores

Due to participant fatigue and time constraints, not all participants completed all measures. Further, due to language impairment, one participant did not complete all measures. Table 3 presents the number of participants who completed each measure and means and standard deviations of scores in the videoconference and in-person conditions. Mean scores were similar across conditions for most tests. Most pairwise differences were not statistically significant (ps > .05). However, pairwise comparisons indicated that, on average, participants remembered significantly fewer words across HVLT-R learning trials (i.e., Total Recall) in the videoconference condition than the in-person condition, with a small effect size, t(44) = 2.65, p = .011, d = 0.39 (Cohen, 1988). In addition, on average, Stroop Interference scores were superior in the videoconference condition with a small effect size, t(40) = 2.25, p = .030, d = 0.35 (Cohen, 1988).

Table 3. Means (M), standard deviations (SD) and ranges of in-person and videoconference scores, and ICCs and Bland–Altman parameters comparing in-person and videoconference scores

ICC = intraclass correlation coefficient; CI = confidence interval; LOA = limit of agreement; TOPF = Test of Premorbid Function; WAIS-IV = Wechsler Adult Intelligence Scale – Fourth Edition; DSF = Digit Span Forward; DSB = Digit Span Backward; DSS = Digit Span Sequencing; WMS-IV VR = Wechsler Memory Scale – Fourth Edition Visual Reproduction; BNT = Boston Naming Test; HVLT-R = Hopkins Verbal Learning Test – Revised; RCFT = Rey Complex Figure Test; SDMT = Symbol Digit Modalities Test; TMT = Trail Making Test.

ICC estimates and their 95% confidence intervals are also shown in Table 3. ICC estimates ranged from .40 to .96 across measures. Tests with the highest ICC estimates included the TOPF, WAIS-IV Digit Span, and FAS. In contrast, ICC estimates for the HVLT-R Total Recall and Discrimination Index were lower (i.e., .47 and .40, respectively). While the ICC estimate for Stroop Interference scores was low (.49), ICC estimates for Stroop components, particularly Words and Colour Words, were higher (i.e., .71–.86).

Parameters for the Bland–Altman plots (i.e., bias values, upper and lower 95% limits of agreement) and their associated 95% confidence intervals are presented in Table 3. The Bland–Altman plots are available in the online Supplementary Material. Bias values were close to 0 for most measures. However, the bias value for the WMS-IV VR II indicated superior average performance in the videoconference condition. In addition, the bias value for HVLT-R Total Recall indicated superior average performance in the in-person condition. Across measures, the 95% limits of agreement were relatively wide. In addition, most measures showed relative symmetry in points above and below 0, indicating participants did not perform better or worse in a particular condition. However, for the HVLT-R Total Recall, Delayed Recall, and Discrimination Index, more participants had negative difference values, indicating better performance in the in-person condition. In four Bland–Altman plots, there was an indication of unequal variance in difference values along the spectrum of average values (i.e., heteroscedasticity). For the HVLT-R Total Recall and Discrimination Index and the RCFT Copy score, those with lower average scores (indicating poorer performance) varied more across sessions than those with higher average scores. Similarly, those with higher average scores on TMT A (indicating poorer performance) varied more across sessions than those with lower average scores.

We ran further analyses to evaluate whether participant characteristics could explain the poorer performance on HVLT-R Total Recall in the videoconference condition (we assumed that results would be similar across HVLT-R scores, which showed a similar pattern of performance across conditions). In this multivariable regression analysis, participant characteristics included in the model (i.e., age, level of cognition [MoCA], computer proficiency [CPQ], and symptoms of anxiety [HADS-A] and depression [HADS-D]) did not significantly predict HVLT-R Total Recall difference scores, F(5, 35) = 1.64, p = .175, adj. R² = .074. Table 4 displays the regression coefficients. No individual predictor contributed significantly to the model (all ps > .05).

Table 4. Unstandardised (B) and standardised (β) regression coefficients, and squared semi-partial correlations (sr²) of predictors in the model predicting HVLT-R Total Recall difference scores

HVLT-R = Hopkins Verbal Learning Test – Revised; MoCA = Montreal Cognitive Assessment; CPQ = Computer Proficiency Questionnaire; HADS-A = Hospital Anxiety and Depression Scale – Anxiety; HADS-D = Hospital Anxiety and Depression Scale – Depression.

Acceptability of Administration Methods

Forty-five participants completed the acceptability survey. Table 5 shows the average ratings for satisfaction, ease of understanding during each condition, and comfort with the videoconference equipment. For other items, endorsement frequencies and percentages are shown. Average satisfaction ratings were comparable across conditions and reflected that participants were, on average, satisfied with both conditions. The majority of respondents reported equal comfort in both conditions and reported no preference for a particular condition. Of those who preferred the in-person condition (n = 19), the majority suggested that this was because this condition facilitated a better interpersonal connection with the examiner (n = 10). This was also the most frequently endorsed advantage of the in-person session by all participants. Other reasons included that the in-person session had less scope for technical glitches (n = 3) and allowed the participant to better read the examiner’s body language (n = 3). Of the three who preferred the videoconference condition, one participant started feeling more relaxed in this condition and another suggested that this type of interaction could eliminate future travel. In all, 24.4% of the sample reported the videoconference session as more interesting or fun. While the in-person session was the most preferred session, most participants reported being unwilling to wait for more than 3 months or travel long distances for this type of assessment.

Table 5. Means (M), standard deviations (SD), and endorsement frequencies for items on the acceptability measure

a Possible ratings range from 1 (least favourable) to 5 (most favourable).

b Participants selected all options that applied.

DISCUSSION

We report the first comparison of performance of a comprehensive neuropsychological battery administered in-person and via videoconference, and the first evaluation of the acceptability of videoconference-based neuropsychological assessment among stroke survivors. To our knowledge, we are the first to compare results across in-person and videoconference-based administrations for several neuropsychological measures including the TOPF, RCFT, Stroop Test, WAIS-IV Block Design, WAIS-IV Similarities, and WMS-IV VR. For most measures, converging evidence indicated that participants did not perform systematically better or worse in a particular condition. Therefore, our study provides preliminary evidence that test results across in-person and videoconference-based administrations are comparable for community-based survivors of stroke and could potentially be used interchangeably in clinical practice for this group. Inclusion of the Bland–Altman limits of agreement gives clinicians a resource to evaluate relative confidence in the comparability of results for each measure. In contrast, converging evidence indicated that participants performed more poorly on the HVLT-R in the videoconference condition than the in-person condition. In addition, Stroop Test Interference scores were superior in the videoconference condition relative to the in-person condition. This indicates that these measures should potentially be avoided via videoconference in clinical practice, or that appropriate considerations should be made (e.g., conservative clinical decision-making) when they are used in this way. We also found that participants were broadly accepting of videoconference-based neuropsychological assessment and were prepared to forgo in-person assessment in order to avoid travel and delays in access to a neuropsychologist.

The authors of a recent meta-analysis evaluating agreement between in-person and videoconference neuropsychological scores in non-stroke samples (e.g., healthy participants, those with dementia) demonstrated that videoconference scores were one-tenth of a standard deviation below in-person scores for non-timed tests that allow for repetition, which they defined as nonsynchronous dependent tests (Brearly et al., Reference Brearly, Shura, Martindale, Lazowski, Luxton, Shenal and Rowland2017). The current results are broadly consistent with these findings. For example, the BNT demonstrated an ICC estimate of .86 and the RCFT Delay score had an ICC estimate of .80. In this meta-analysis, it was also shown that in-person and videoconference scores were equivalent for synchronous dependent tasks, which are verbally mediated, timed tests that proscribe repetition (e.g., list learning tasks; Brearly et al., Reference Brearly, Shura, Martindale, Lazowski, Luxton, Shenal and Rowland2017). Interestingly, while we did demonstrate equivalent scores for several of these synchronous dependent tasks, for example, WAIS-IV Digit Span, verbal fluency tasks, and SDMT, the HVLT-R was not equivalent in our study. This may, in part, reflect the test–retest reliability of this measure broadly, which is shown to be sub-optimal, particularly for Delayed Recall (r = .66) and the Discrimination Index (r = .40; Benedict, Schretlen, Groninger, & Brandt, Reference Benedict, Schretlen, Groninger and Brandt1998), in comparison with other measures in this study. Indeed, it should be noted that most of the ICC estimates in our study did not meet the acceptable limit of ≥.90 for a psychological research context, as defined by Nunnally and Bernstein (Reference Nunnally and Bernstein1994). However, as discussed above, the participants did not perform systematically better or worse in a particular condition. 
Instead, current findings likely reflect the broader reliability of the individual measures we were evaluating. Indeed, for other measures, ICC estimates in our study seemed to match established reliability coefficients. For example, TMT A had a lower ICC estimate than TMT B, which is consistent with test–retest reliability estimates established for this measure in the literature (Strauss et al., Reference Strauss, Sherman and Spreen2006). Further, the WAIS-IV subtests evaluated had similar ICC estimates to established test–retest reliability coefficients presented in the WAIS-IV test manual (Wechsler, Reference Wechsler2008). However, participants in our sample did, on average, perform worse on the HVLT-R in the videoconference condition specifically, which would not be expected solely on the basis of poor test–retest reliability. This difference was not explained by participant characteristics such as age, cognitive impairment, computer proficiency, or depression or anxiety symptoms. This might have reflected the dependence of this test in particular on the highly synchronous transfer of both visual and verbal cues, which may have meant that it was harder to hear words in the videoconference condition or that there was a higher chance of mishearing words in this condition. It is also possible that due to this fact, participants were particularly anxious about, or preoccupied with, the videoconference scenario for this test, which could have affected their performance. It should be an aim of future research to replicate this finding, particularly in those with a more diverse range of cognitive abilities. Future research could also evaluate whether the use of a pre-recorded word list (rather than examiner reading), or verbal memory tasks that also present the written word, results in a similar trend. 
In addition, further research could evaluate whether other verbal learning tasks (with better reliability and semantic content; e.g., story memory tasks) result in more comparable results across conditions.
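To make the ICC estimates discussed above more concrete, the sketch below computes a single-measure, two-way, absolute-agreement intraclass correlation from a participants × conditions table. This particular ICC form is an assumption made for illustration; the form used in the study is not restated in this section. The function name and example data are hypothetical.

```python
def icc_absolute_agreement(scores):
    """Single-measure, two-way, absolute-agreement ICC.

    `scores` is a list of rows, one per participant, each row holding that
    participant's score in every condition (e.g., [in_person, videoconference]).
    The ICC form is an assumption made for illustration only.
    """
    n = len(scores)      # number of participants
    k = len(scores[0])   # number of conditions
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical data: identical scores across conditions versus a constant
# one-point offset in the second condition (illustration only).
identical = [[1, 1], [2, 2], [3, 3]]
offset = [[1, 2], [2, 3], [3, 4]]
print(icc_absolute_agreement(identical), icc_absolute_agreement(offset))
```

In this form, a systematic difference between conditions lowers the coefficient even when participants keep the same rank order, which is why an absolute-agreement ICC is usually read alongside the mean difference between conditions.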

A difference was also demonstrated between conditions for the Stroop Test Interference score, with the videoconference condition having superior (i.e., lower) scores. However, it seems that this difference was actually driven by marginally slower performance on the Dots trial in the videoconference condition. Interestingly, this finding was isolated to this trial, with both the Words and Colour Words trials having largely similar average results across conditions. While this may be a spurious finding, it may also be related to the fact that Dots is the first trial to be administered. That is, it is possible that participants were particularly concerned about the examiner’s capacity to hear them in this trial. Further research is needed to replicate this finding. Another finding was that the RCFT Copy and TMT A demonstrated more variable difference scores in those who were performing more poorly on these measures. However, this may reflect the variable psychometric properties of neuropsychological measures for people with different levels of cognitive function.

Our results regarding the acceptability of videoconference-based neuropsychological assessment were broadly consistent with previous research in other samples. Parikh et al. (Reference Parikh, Grosch, Graham, Hynan, Weiner, Shore and Cullum2013) had 40 participants who were either healthy or had diagnoses of AD or MCI complete an acceptability survey following neuropsychological assessment both in-person and via videoconference. Their results reflect 98% satisfaction with videoconference-based neuropsychological assessment, which was consistent with the high average satisfaction rating (4.7 out of 5) reported in our sample. In addition, in their sample, 60% of participants had no preference for a particular session, 30% preferred in-person assessment, and 10% preferred videoconference-based assessment (Parikh et al., Reference Parikh, Grosch, Graham, Hynan, Weiner, Shore and Cullum2013). These findings are broadly in keeping with our findings, with a slightly higher percentage of our sample (42.2%) reporting a preference for in-person assessment. Beyond Parikh et al.’s (Reference Parikh, Grosch, Graham, Hynan, Weiner, Shore and Cullum2013) findings, similar rates of preference across conditions have been reported in other studies (i.e., Hildebrand et al., Reference Hildebrand, Chow, Williams, Nelson and Wass2004; Jacobsen et al., Reference Jacobsen, Sprenger, Andersson and Krogstad2003; Stain et al., Reference Stain, Payne, Thienel, Michie, Carr and Kelly2011). Whilst interpersonal connection was the main driver of the preference for in-person assessments in our sample, it did not seem to outweigh the burden of travel or wait times. The vast majority of participants in our sample indicated that they would prefer videoconference-based consultations if it avoided travel of more than 3 hours or a wait time of more than 3 months. One limitation of our evaluation of acceptability is that the evaluation occurred after the second session. 
Whilst this was necessary to facilitate comparison of the conditions, this also may have been difficult for some participants with memory difficulties given the 2-week interval between sessions. Perhaps a more ecologically valid measure of acceptability would be gained by assessing the acceptability of each condition independently, directly after session completion. Further, as noted in Figure 1, a number of people declined to participate in this study. Whilst not explicitly stated by these individuals, it is possible that the use of videoconferencing in this study deterred them from participating. As such, this may have served to inflate evaluations of acceptability in this study.

This study has several strengths. First, we included neuropsychological measures that facilitated the assessment of all classically assessed domains of cognitive function. Some previous studies in non-stroke populations have limited their batteries to verbal tasks, which are particularly suitable to administration via videoconference. This, however, limits the assessment of some neuropsychological domains, particularly visuospatial function and nonverbal problem-solving, and therefore the comprehensiveness of the neuropsychological evaluation being validated. Second, the condition order was counterbalanced in the current study, as well as the HVLT-R form version, which reduced the potential influence of practice effects. Third, we used low-cost, easily accessible hardware and low-cost, easily accessible, and secure videoconference software. These features were considered important to maximise the likelihood of clinical translation. Most features of this setup would be readily available in health services or would require minimal funding. In addition, most healthcare providers, and possibly patients, would be familiar with this hardware and software.

This study also has a number of limitations. First, the study had the potential for bias due to the use of a single examiner. However, it should also be noted that the use of separate examiners could introduce the potential for bias due to inter-examiner differences. Further research should aim to counterbalance different examiners alongside the condition of participants. Second, we did not include a condition in which participants completed the neuropsychological measures in-person in both sessions. This would have provided a clearer comparison for the reliability and agreement statistics presented here. Third, whilst the inclusion of a comprehensive battery of neuropsychological measures was a clear strength of this study, we did not include a measure of hemispatial neglect (despite the prevalence of this syndrome following stroke) nor an established test battery that allows for profile analysis. These should be included in future research. The use of a comprehensive battery also necessitated the use of a research assistant to facilitate administration of some neuropsychological measures requiring a higher level of examiner control over stimulus materials (e.g., WAIS-IV Block Design). This administration modification was necessary to ensure the protection of copyright for these materials. However, this also may present additional barriers in terms of clinical translation. It should be the objective of future work to consider alternatives to test administration that do not require an assistant to be present, perhaps in consultation with the publishers of these tests. Finally, the participant sample was on average 5 years post-stroke, was relatively computer proficient, and mostly Australian born. In addition, because we used a community sample, we were unable to obtain a measure of stroke severity. However, MoCA results did indicate that our participants were, on average, only mildly cognitively impaired. 
The findings presented herein may not generalise beyond the relatively mildly impaired population of stroke survivors evaluated in the current study. Future research should aim to replicate the above findings in acute and subacute samples, in those who have more severe cognitive impairments and are more representative of the diverse stroke population, and in those who are less computer proficient. Further, future research should also evaluate the suitability of videoconference-based assessment for those with more severe cognitive impairments and/or behavioural disturbance who may present additional challenges when assessed in a videoconference context. Of course, the administration of neuropsychological tests is only one, albeit central, element of neuropsychological practice. As such, future research should also be designed to evaluate other aspects of neuropsychological practice, including clinical interviewing, assessing client presentation, intervention, and secondary consultation to other disciplines, via videoconference.

This study provides preliminary evidence to support videoconference-based administration of a number of common neuropsychological tasks in community-based survivors of stroke. Whilst further research in this area is warranted, particularly with regard to the HVLT-R, videoconference-based neuropsychological assessment stands to have substantial benefits for improving access to neuropsychological assessment and treatment for stroke. Indeed, the importance and value of telehealth have been highlighted in recent times, where restrictions put in place in response to the COVID-19 pandemic have limited the capacity of neuropsychologists around the world to engage in in-person assessment practices. Further, the conduct of neuropsychological assessments via videoconference may attenuate or eliminate some of the regional disparities in the availability of neuropsychological services.

ACKNOWLEDGEMENTS

This research was not supported by any specific grant from any funding agency. We would like to thank Aimee Brown, Dr Anna Carmichael, Alicia Tanasi, Dr Bleydy Dimech-Betancourt, Chanel Tara Alevizos, Dr David Lawson, Joanna Loayza, Dr Nicole Stefanac, Rebecca Kirkham, Rebecca Wallace, Ruchi Bhalla, and Dr Toni Withiel, all of whom assisted in data collection by being the research assistant at the participant site.

CONFLICT OF INTEREST

The authors declare that there are no conflicts of interest.

SUPPLEMENTARY MATERIAL

To view supplementary material for this article, please visit https://doi.org/10.1017/S1355617720001174.