1. Introduction

Flow control is a challenging problem both from the academic perspective and in terms of concrete applications, where problems such as laminar-to-turbulent transition, drag reduction and flow-induced vibration are of practical concern (Rowley et al. 2006; Kim & Bewley 2007; Bagheri & Henningson 2011; Luhar, Sharma & McKeon 2014; Brunton & Noack 2015; Jin, Illingworth & Sandberg 2020). The difficulties arise from the nonlinearity of flow dynamics, which is often avoided by considering a linearized system. Nevertheless, it is not clear how to model the neglected nonlinear terms, and effective methods to determine the optimal location of sensors and actuators are still a topic of research. Among the linear control strategies presently available, inverse feed-forward, wave-cancellation, optimal and robust control are popular choices. Throughout this work, by optimal and robust control we mean the corresponding linear control approaches.

In flows dominated by convection, wave cancellation has been proposed as a simple-but-effective control strategy. Waves are identified by upstream sensors, and actuators situated between the sensors and targets act to minimize perturbations at the target location (Sasaki et al. 2016). Although the approach is not guaranteed to be causal, in so far as computation of the actuation signal may require future sensor readings, causality can be imposed by ignoring the non-causal part of the control kernel. This has been shown to closely reproduce optimal control when there is sufficient distance between sensors, actuators and targets (Sasaki et al. 2018a). While this truncation can significantly reduce the effectiveness of the controller (Brito et al. 2021), the approach has been successfully used in numerous studies (Hanson et al. 2014; Sasaki et al. 2018b; Maia et al. 2021). A similar approach was used by Luhar et al. (2014), where opposition control based on the resolvent operator was performed. Adaptive control strategies are often similar to wave-cancellation approaches, but use additional downstream sensors to adapt the control law to changes in the flow (Fabbiane et al. 2014; Simon et al. 2016).

Optimal control, on the other hand, minimizes a quadratic cost functional (Bagheri et al. 2009b), frequently associated with the mean perturbation energy. This approach provides a maximal reduction of perturbation energy and has been used to control flow instabilities (Bewley & Liu 1998) or to reduce the receptivity of a flow to disturbances (Barbagallo, Sipp & Schmid 2009; Semeraro et al. 2011; Juillet, Schmid & Huerre 2013; Juillet, McKeon & Schmid 2014; Morra et al. 2020; Sasaki et al. 2020), to delay transition to turbulence, for instance. However, the robustness of optimal control may be hindered by feedback between actuators and sensors and/or by small errors in the flow model. In some cases, such issues may even cause the control law to further destabilize the system. While the model can be accurately known in some scenarios, for instance, when simulating transitional flows subject to small disturbances, applications in off-design conditions or in cases where nonlinear dynamics are important will generally reduce the accuracy with which the linear model represents the physical system. Robust control allows a balance to be struck between the cost-functional reductions and the control robustness: at the cost of achieving lower energy reduction than optimal control, robust control can tolerate higher modelling errors. This kind of approach has been used in several recent studies (Dahan, Morgans & Lardeau 2012; Jones et al. 2015; Jin et al. 2020). Robust control is particularly important for the control of oscillator systems where actuators are frequently situated upstream of sensors (Bagheri et al. 2009b). For this kind of configuration, optimal control is typically not robust (Schmid & Sipp 2016).

There are, however, many scenarios where optimal control is robust. Schmid & Sipp (2016) showed that this is the case for amplifier flows, where sensors are typically located upstream of actuators. Semeraro et al. (2011) arrived at a similar conclusion for the control of boundary layer disturbances, where the optimal control was found to be robust to changes in Reynolds numbers and pressure gradients. Our study is focused on this scenario: the development of optimal control for amplifier flows.

The usual approach to obtain optimal control laws involves solution of Riccati equations. The computational cost grows rapidly with the number of degrees of freedom (DOFs) of the system. As problems in fluid mechanics typically have many DOFs, methods based on the solution of Riccati equations are impractical. A standard way of dealing with this issue is to base control design on reduced-order models (ROMs) (Bagheri et al. 2009b). Several bases have been used to obtain ROMs, such as proper orthogonal decomposition modes (Noack & Eckelmann 1993), eigenmodes (Åkervik et al. 2007), and balanced modes (Bagheri et al. 2009b; Barbagallo et al. 2009). The eigensystem realization algorithm (ERA) (Juang & Pappa 1985) was shown to be equivalent to a ROM based on balanced modes, with only a fraction of the costs when external disturbances are low rank (Ma, Ahuja & Rowley 2011). A drawback of such techniques is that control laws obtained from these ROMs are not guaranteed to be optimal when applied to the full system. Model reduction with balanced modes has upper error bounds for modelling open-loop systems, but even when the open-loop system is accurately represented by the ROM, a control law based on the ROM can be ineffective when applied to the original problem (Åström & Murray 2010, p. 349).

Several approaches exist for obtaining estimation and control laws for the full system. Optimal estimation and control gains for the full system can be obtained iteratively (Semeraro et al. 2013; Luchini & Bottaro 2014). This, however, requires integration of a large auxiliary system for real-time application; this adds significant cost when used in a numerical simulation and is likely unfeasible for experimental implementation. Alternatively, the control law can be reduced a posteriori (design-then-reduce), instead of being designed based on a ROM (reduce-then-design). Another approach applicable to the full system is to use estimation strategies using an ensemble Kalman filter (Colburn, Cessna & Bewley 2011). However, this requires the integration of multiple realizations of the full system, which adds significant computational cost when applied to numerical solutions.

Control strategies are highly dependent on actuator and sensor placement, and the optimal choice is often unclear. As ROMs are frequently derived for a specific set of sensors and actuators, studies of the role of their placement for control often rely on the derivation of multiple ROMs. An example is the study of Belson et al. (2013), in which an ERA ROM was required for each sensor position. This highlights the role of ROMs in studies of sensor and actuator placement and how costly such studies can be. As sensor and actuator positioning substantially impacts the control strategy (Ilak & Rowley 2008; Illingworth, Morgans & Rowley 2011; Belson et al. 2013; Freire et al. 2020), it is of interest to be able to obtain control laws without having to rely on ROMs for each possible choice of sensor and actuator.

Most approaches used for flow control require simplified forcing assumptions, with the forcing frequently modelled as white-in-time noise. Although complex spatial-temporal forcing colour can be used in the Kalman-filter framework, the approach requires use of an expanded system that filters a white-noise input to create a coloured noise, with the extra assumption that the forcing cross-spectral density (CSD) is a rational function of the frequency (Åström & Wittenmark 2013). Simplified forcing-colour models are thus typically used to avoid this complexity. However, it has been shown that the use of realistic spatiotemporal forcing colour is crucial for accurate estimation of complex flows (Chevalier et al. 2006; Martini et al. 2020; Amaral et al. 2021). This is an indication that control can be considerably enhanced if realistic forcing models are used.

Another approach to estimation and control is possible using the Wiener–Hopf formalism. An optimal non-causal estimation method, known as a Wiener filter (Wiener 1942; Barrett & Moir 1987), and optimal control strategies, known as Wiener regulators (Youla, Jabr & Bongiorno 1976; Grimble 1979; Moir & Barrett 1989), can be obtained based on CSDs between sensors, actuators and flow states. Although well described in the control literature, their potential for flow control has not been appropriately explored. To the best of the authors’ knowledge, Martinelli (2009) is the only work in which the formalism has been used for flow control. Difficulties in solving the associated Wiener–Hopf problems limited that study to the use of one sensor and one actuator, and the significant cost of converging the CSDs probably hindered further application of the method.

Wiener–Hopf problems appear when causality constraints are imposed on the estimation and control kernels. The Wiener–Hopf method has been used in the fluid mechanics community to obtain solutions to linear problems with spatial discontinuities, such as acoustic scattering by edges (Noble 1959; Peake 2004). Solutions to this class of problem are typically based on a factorization of the Wiener–Hopf kernel into components that are regular on the upper/lower halves of the complex frequency plane. Such factorization can be achieved analytically only for scalar problems (Crighton & Leppington 1970; Peake 2004) and for some special classes of matrices (Daniele 1978; Rawlins & Williams 1981). Lacking general analytical solutions, several numerical approaches can be used (Tuel 1968; Daniele & Lombardi 2007; Atkinson & Shampine 2008; Kisil 2016). The potential of the method for estimation and control comes from the fact that the size of the matrices to be factorized scales with the number of sensors and actuators, in contrast to control strategies based on solutions of algebraic Riccati equations that scale with the system's size.

While the use of a linear control strategy for nonlinear systems has clear limitations, i.e. it is expected to work only when the disturbances around a reference flow can be reasonably approximated by a linear model, there are many examples in the literature (several of which are cited above) that show how the approach is useful in a broad variety of flows.

The objective of our study is to obtain a method for real-time estimation and control that avoids some of the drawbacks of previous approaches. To achieve this, we strategically combine three pre-existing tools: the Wiener–Hopf regulator for flow control (Martinelli 2009); a numerical method to solve matrix Wiener–Hopf problems (Daniele & Lombardi 2007); and matrix-free methods for obtaining the resolvent operator of large systems (Martini et al. 2020, 2021; Farghadan et al. 2021). Combining these tools provides a novel method that can, for the first time, simultaneously handle complex forcing colour and be applied directly to large systems without the need for a priori model reduction, allowing parametric investigation of sensor/actuator placement at low cost when forcing/targets are low rank. To the best of the authors’ knowledge, this is also the first time that the Wiener–Hopf regulator is constructed from first principles, i.e. from the linearized equations of motion and a model of the forcing, as in the linear quadratic Gaussian (LQG) framework. This construction provides physical insights into the structures that can be estimated and controlled. The approach can also be used to improve wave-cancelling strategies (Li & Gaster 2006; Fabbiane et al. 2014; Sasaki et al. 2018a; Brito et al. 2021; Maia et al. 2021), minimizing the effect of kernel truncation.

The method can be viewed as an extension of the resolvent-based estimation methods recently developed by Towne, Lozano-Durán & Yang (2020) and Martini et al. (2020). In the former study, a resolvent-based approach was developed to estimate space–time flow statistics from limited measurements. The central idea is to use the measurements to approximate the nonlinear terms that act as a forcing of the linearized Navier–Stokes equations, which in turn provide an estimate of the flow state upon application of the resolvent operator in the frequency domain. The latter study extends this resolvent-based methodology to obtain optimal estimates of the time-varying flow state and forcing. The estimator is derived in terms of transfer functions between the measurements and the forcing terms and can be written in terms of the resolvent operator and the CSD of the forcing. However, these methods are nominally non-causal, making them appropriate for flow reconstruction but limiting their applicability for flow control.

The method developed in the current paper follows that of Martini et al. (2020), but with additional constraints to enforce causality. It is these constraints that lead to the aforementioned Wiener–Hopf problem. The causality of the new resolvent-based estimator makes it applicable for real-time estimation, and we use a similar approach to develop an optimal resolvent-based controller. The resulting estimation and control methods are thus obtained directly for the full-rank flow system, without requiring ROMs, and they make use of the spatiotemporal forcing statistics, thus avoiding the simplified forcing assumptions that can lead to significant reductions in performance.

The paper is structured as follows. Optimal estimation and control kernels based on the Wiener–Hopf formalism are derived in § 2, and solutions are compared with those obtained from the algebraic Riccati equation using a linearized Ginzburg–Landau problem. Implementation of the method using numerical integration of the linearized system and using experimental data is described in § 3. An application to flow over a backward-facing step is presented in § 4. Final conclusions are drawn in § 5. An introduction to Wiener–Hopf problems (which appear in § 2) and their solution is presented in Appendix A.

2. Estimation and control using Wiener–Hopf methods

In what follows, we define the linear system considered and then derive optimal estimation and control kernels based on the Wiener–Hopf approach. Optimality here is defined in terms of quadratic cost functionals. A procedure to recover estimation and control gains from the proposed approach is also presented. The derivation for full-state control is presented in Appendix B.

2.1. System definition

We consider the linear time-invariant system

\begin{equation} \left.\begin{array}{c@{}}\dfrac{{\rm d} \boldsymbol{u}}{{\rm d} t} (t) ={{\boldsymbol{\mathsf{A}}}} \boldsymbol{u}(t) +{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}}\boldsymbol{f}(t) +{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{a}}}\boldsymbol{a}(t), \\ \boldsymbol{y}(t) ={{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}\boldsymbol{u}(t) + \boldsymbol{n}(t),\\ \boldsymbol{z}(t)= {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}}\boldsymbol{u}(t), \end{array}\right\}\end{equation}

where $\boldsymbol {u} \in \mathbb {C}^{n_u}$ represents the flow state, $\boldsymbol {f} \in \mathbb {C}^{n_f}$ is an unknown stochastic forcing, which can represent external disturbances and/or nonlinear interactions (McKeon & Sharma 2010), $\boldsymbol {a}\in \mathbb {C}^{n_a}$ represents flow actuation used for control, $\boldsymbol {y} \in \mathbb {C}^{n_y}$ is a set of system observables, $\boldsymbol {n} \in \mathbb {C}^{n_y}$ is measurement noise and $\boldsymbol {z} \in \mathbb {C}^{n_z}$ is a set of targets for the control problem, for instance, perturbations at a given position or surface loads, to be minimized. The system evolution is described by the matrix ${{\boldsymbol{\mathsf{A}}}} \in \mathbb {C}^{n_u\times n_u}$, representing the linearized Navier–Stokes operator. The matrices ${{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {f}}} \in \mathbb {C}^{n_u\times n_f}, {{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {a}}} \in \mathbb {C}^{n_u\times n_a}, {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {y}}} \in \mathbb {C}^{n_y\times n_u}$ and ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}} \in \mathbb {C}^{n_z\times n_u}$ determine the spatial support of external disturbances, actuators, sensors and targets, as in Bagheri, Brandt & Henningson (2009a). Forcing and sensor noise are modelled as stochastic zero-mean processes with two-point space–time correlations given by

with ${\dagger}$ representing the adjoint operator using a suitable inner product and $\langle \cdot \rangle$ representing the ensemble average. We emphasize that these forcing and noise statistics are more general than those assumed in the derivation of the Kalman filter and LQG control, in which they must be uncorrelated in time. A frequency-domain representation of the two-point correlation is given by the CSDs

Note that this differs from the standard definition, i.e. $\langle \hat {\boldsymbol {f}} \hat {\boldsymbol {f}}^{H} \rangle$, with $H$ representing the conjugate transpose of the matrices; this modified definition simplifies the derivations that follow.

Throughout this work, we assume the system to be stable, that is, all eigenvalues $\lambda$ of ${{\boldsymbol{\mathsf{A}}}}$, i.e. $\lambda$ for which ${{\boldsymbol{\mathsf{A}}}} \hat {\boldsymbol {u}} = -\mathrm {i} \lambda \hat {\boldsymbol {u}}$ has non-trivial solutions, lie in the lower half-plane. As discussed in § 1, optimal control strategies are best suited for amplifier flows, which satisfy this stability requirement.
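As a small illustration of this stability convention, the sketch below checks whether the eigenvalues lie in the lower half-plane. It assumes a dense NumPy matrix; the function name `is_stable` and the two test matrices are hypothetical and chosen only for illustration. Writing the standard eigenvalue problem as ${{\boldsymbol{\mathsf{A}}}} \hat {\boldsymbol {u}} = \mu \hat {\boldsymbol {u}}$ gives $\lambda = \mathrm{i}\mu$, so the condition is equivalent to $\mathrm{Re}(\mu) < 0$.

```python
import numpy as np

def is_stable(A):
    """True if every lambda with A u = -i*lambda*u lies in the lower half-plane."""
    mu = np.linalg.eigvals(A)   # standard eigenvalues: A u = mu u
    lam = 1j * mu               # mu = -i*lambda  =>  lambda = i*mu
    return bool(np.all(lam.imag < 0))

# Hypothetical 2x2 operators (upper triangular, so eigenvalues are the diagonal).
A_stable = np.array([[-0.5, 2.0], [0.0, -0.1]])
A_unstable = np.array([[0.2, 1.0], [0.0, -1.0]])

print(is_stable(A_stable), is_stable(A_unstable))  # True False
```

For the large operators considered in flow problems a dense eigenvalue solve would not be practical; the sketch only makes the sign convention concrete.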

It is useful to split (2.1) into two systems, one of which is driven by the forcing and includes sensor noise,

\begin{equation} \left.\begin{array}{c@{}}\dfrac{{\rm d} \boldsymbol{u}_1}{{\rm d} t}(t) ={{\boldsymbol{\mathsf{A}}}} \boldsymbol{u}_1(t) +{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}}\boldsymbol{f}(t), \\ \boldsymbol{y}_1(t) ={{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}\boldsymbol{u}_1(t) + \boldsymbol{n}(t),\\ \boldsymbol{z}_1(t) = {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}} \boldsymbol{u}_1(t), \end{array}\right\}\end{equation}

and another noiseless system driven by actuation only,

\begin{equation} \left.\begin{array}{c@{}} \dfrac{{\rm d} \boldsymbol{u}_2}{{\rm d} t}(t) = {{\boldsymbol{\mathsf{A}}}} \boldsymbol{u}_2(t) + {{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{a}}} \boldsymbol{a}(t), \\ \boldsymbol{y}_2(t) = {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}} \boldsymbol{u}_2(t) ,\\ \boldsymbol{z}_2(t) = {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}} \boldsymbol{u}_2(t). \end{array}\right\} \end{equation}

The original system can be recovered by adding the variables with subscripts ‘1’ and ‘2’, that is,

\begin{equation} \boldsymbol{u} = \boldsymbol{u}_1 + \boldsymbol{u}_2, \quad \boldsymbol{y} = \boldsymbol{y}_1 + \boldsymbol{y}_2, \quad \boldsymbol{z} = \boldsymbol{z}_1 + \boldsymbol{z}_2. \end{equation}

Figures 1 and 2 illustrate these systems.
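The splitting into a forced, noisy system and a noiseless actuated system relies only on linearity, which can be checked numerically. The sketch below is an illustration under stated assumptions: the three-state matrices, the forward-Euler integrator `simulate` and the random input signals are all hypothetical and are not taken from the paper.

```python
import numpy as np

def simulate(A, B, inp, dt):
    """Forward-Euler integration of du/dt = A u + B inp(t) from a zero initial state."""
    u = np.zeros((inp.shape[0] + 1, A.shape[0]))
    for k in range(inp.shape[0]):
        u[k + 1] = u[k] + dt * (A @ u[k] + B @ inp[k])
    return u

rng = np.random.default_rng(1)
dt, n_steps = 0.01, 500

# Hypothetical small stable system with one forcing, one actuator, one sensor, one target.
A = np.array([[-1.0, 1.0, 0.0], [0.0, -2.0, 1.0], [0.0, 0.0, -3.0]])
B_f = np.array([[1.0], [0.0], [1.0]])
B_a = np.array([[0.0], [1.0], [0.0]])
C_y = np.array([[1.0, 0.0, 0.0]])
C_z = np.array([[0.0, 0.0, 1.0]])

f = rng.standard_normal((n_steps, 1))                 # forcing
a = rng.standard_normal((n_steps, 1))                 # actuation
noise = 0.01 * rng.standard_normal((n_steps + 1, 1))  # sensor noise

u = simulate(A, np.hstack([B_f, B_a]), np.hstack([f, a]), dt)  # full system
u1 = simulate(A, B_f, f, dt)                                   # forcing only
u2 = simulate(A, B_a, a, dt)                                   # actuation only

y, z = u @ C_y.T + noise, u @ C_z.T
y1, z1 = u1 @ C_y.T + noise, u1 @ C_z.T   # noise is carried by system '1'
y2, z2 = u2 @ C_y.T, u2 @ C_z.T           # system '2' is noiseless

print(np.allclose(u, u1 + u2), np.allclose(y, y1 + y2), np.allclose(z, z1 + z2))
```

Because the time-stepping scheme is itself linear, the three checks are expected to hold to round-off regardless of the step size.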

Figure 1. Block diagram representing the system (2.1).

The derivations that follow make use of the frequency-domain form of these equations. Taking a Fourier transform of (2.6) and (2.7) yields the input–output relationships

where

is the resolvent operator. Note that the above input–output relations are exact within the linearized model used here, and thus have the same limitation as any linear modelling of the original nonlinear system, i.e. treating the nonlinear interactions as exogenous stochastic noise. This framework is shared by all linear control laws that have been developed.
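To make the frequency-domain input–output relations concrete, the following sketch evaluates a resolvent-based transfer function for a small dense system. It assumes the sign convention implied by the eigenvalue problem of § 2.1, i.e. a time dependence $\mathrm{e}^{-\mathrm{i}\omega t}$, so that the resolvent is taken here as $(-\mathrm{i}\omega {{\boldsymbol{\mathsf{I}}}} - {{\boldsymbol{\mathsf{A}}}})^{-1}$; this form, the helper `transfer` and the matrices are assumptions made for illustration.

```python
import numpy as np

def transfer(C, A, B, omega):
    """Evaluate C R(omega) B with R = (-i*omega*I - A)^{-1}, using a linear solve."""
    n = A.shape[0]
    return C @ np.linalg.solve(-1j * omega * np.eye(n) - A, B)

# Hypothetical small stable system.
A = np.array([[-1.0, 1.0, 0.0], [0.0, -2.0, 1.0], [0.0, 0.0, -3.0]])
B_f = np.array([[1.0], [0.0], [1.0]])
C_y = np.array([[1.0, 0.0, 0.0]])

omega = 0.7
f_hat = np.array([1.0 + 0.5j])

# State response to harmonic forcing f_hat * exp(-i*omega*t).
u_hat = transfer(np.eye(3), A, B_f, omega) @ f_hat

# The response should satisfy -i*omega*u_hat = A u_hat + B_f f_hat.
residual = -1j * omega * u_hat - (A @ u_hat + B_f @ f_hat)

R_yf = transfer(C_y, A, B_f, omega)   # forcing-to-sensor transfer function
print(np.abs(residual).max(), R_yf)
```

A linear solve is used instead of forming the inverse explicitly; for large systems the paper points to matrix-free methods, which this dense sketch does not attempt to reproduce.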

2.2. The estimation problem

Before deriving the optimal causal estimator that we seek, we first briefly review the derivation of the optimal non-causal estimator (Martini et al. 2020). We will see later that the two cases are closely related but that imposing causality leads to the appearance of an additional term. We focus on the uncontrolled estimation problem, i.e. $\boldsymbol {a}(t) = 0$, thus making (2.1) and (2.6) equivalent.

The optimal estimator minimizes the cost functional

defined in terms of the estimation error

where $\boldsymbol {z}$ is the target, i.e. the quantity to be estimated, $\tilde {\boldsymbol {z}}$ is its estimate and $\langle\, \cdot\, \rangle$ represents the expected value. Note that full-state estimation is recovered using ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}}={{\boldsymbol{\mathsf{I}}}}$.

We seek an estimate of the targets as a linear combination of sensor readings, in the form

where the subscript $nc$ is a reminder that the kernel, ${{\boldsymbol{\mathsf{T}}}}_{z,nc} \in \mathbb {C}^{n_z\times n_y }$, is, in general, non-causal, and $\hat {{{\boldsymbol{\mathsf{T}}}}}_{z,nc}$ is its Fourier transform. Expanding (2.14) leads to

In the above equations, and henceforth, the frequency dependence is omitted for clarity. The minimum is found by taking the derivative of the cost function (2.17) with respect to $\hat {{{\boldsymbol{\mathsf{T}}}}}_{z,nc}^{{\dagger} }(\omega )$ and setting it to zero. The optimal estimation kernel is obtained as the solution of

where

Note that the forcing CSD $\hat {{{\boldsymbol{\mathsf{F}}}}}$ appears explicitly in the equation, and is thus naturally handled by the approach.
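The role of the forcing and noise CSDs in the non-causal kernel can be illustrated frequency by frequency. The sketch below assumes the standard Wiener-filter reading of the problem, in which the kernel solves $\hat{T}\,S_{yy} = S_{zy}$ with sensor CSD $S_{yy}$ and target–sensor CSD $S_{zy}$ built from the resolvent, the forcing CSD and the noise CSD. The names `S_yy`, `S_zy`, `transfer` and `noncausal_kernel` are hypothetical, a Euclidean inner product is assumed so that the adjoint reduces to the conjugate transpose, and the matrices are illustrative only.

```python
import numpy as np

def transfer(C, A, B, omega):
    """C R(omega) B with R = (-i*omega*I - A)^{-1} (assumed sign convention)."""
    n = A.shape[0]
    return C @ np.linalg.solve(-1j * omega * np.eye(n) - A, B)

def noncausal_kernel(A, B_f, C_y, C_z, F, N, omega):
    """Solve T S_yy = S_zy at one frequency for forcing CSD F and noise CSD N."""
    R_yf = transfer(C_y, A, B_f, omega)
    R_zf = transfer(C_z, A, B_f, omega)
    S_yy = R_yf @ F @ R_yf.conj().T + N     # sensor CSD, including sensor noise
    S_zy = R_zf @ F @ R_yf.conj().T         # target-sensor CSD
    # T S_yy = S_zy  <=>  S_yy^T T^T = S_zy^T (plain transposes)
    return np.linalg.solve(S_yy.T, S_zy.T).T

# Hypothetical system: three states, two forcing components, two sensors.
A = np.array([[-1.0, 1.0, 0.0], [0.0, -2.0, 1.0], [0.0, 0.0, -3.0]])
B_f = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
C_y = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
F = np.eye(2)                               # white, uncorrelated forcing at this frequency

# With noiseless sensors that coincide with the targets the kernel is the identity.
T_clean = noncausal_kernel(A, B_f, C_y, C_y, F, np.zeros((2, 2)), omega=0.5)

# Sensor noise makes the estimator trust the readings less.
T_noisy = noncausal_kernel(A, B_f, C_y, C_y, F, 10.0 * np.eye(2), omega=0.5)

print(np.round(T_clean, 6))
print(np.linalg.norm(T_noisy, 2))
```

Replacing `F` with a frequency-dependent, spatially correlated CSD changes only the two lines that build `S_yy` and `S_zy`, which is one way to see why coloured forcing is handled without extra machinery in this formulation.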

Causality of the estimation kernel can be enforced in (2.14) using Lagrange multipliers (Martinelli 2009), as

\begin{align} J' &= J + \int_{-\infty}^{\infty} {\rm Tr}( \boldsymbol{\varLambda}_-(t) {{\boldsymbol{\mathsf{T}}}}_{z,c}(t)+\boldsymbol{\varLambda}^{{{\dagger}}}_- (t) {{\boldsymbol{\mathsf{T}}}}^{{{\dagger}}}_{z,c}(t))\,{\rm d} t \nonumber\\ &= J + \int_{-\infty}^{\infty} {\rm Tr}(\hat{\boldsymbol{\varLambda}}_- \hat{{{\boldsymbol{\mathsf{T}}}}}_{z,c}+ \hat{\boldsymbol{\varLambda}}^{{{\dagger}}}_- \hat{{{\boldsymbol{\mathsf{T}}}}} ^{{{\dagger}}}_{z,c}) \,{\rm d} \omega, \end{align}

where the Lagrange multipliers ${\boldsymbol {\varLambda }}_-,{\boldsymbol {\varLambda }}_+ \in \mathbb {C}^{n_y\times n_z}$ are required to be zero for $t > 0$, thus enforcing the condition ${{{\boldsymbol{\mathsf{T}}}}_{z,c}(t<0)=0 }$. The subscript $c$ is used to emphasize the causal nature of this kernel.

The functionals $J$ and $J'$ differ only by linear terms in the estimation kernel, and thus it is straightforward to see that taking the derivative of $J'$ and setting it to zero leads to

This apparently simple equation hides significant complexities. First, this is a single equation with two variables, ${\hat {{{\boldsymbol{\mathsf{T}}}}}_{z,c}}$ and ${\hat {\boldsymbol {\varLambda }}}$. Nevertheless, it admits a unique solution due to the requirements that ${{{\boldsymbol{\mathsf{T}}}}_{z,c}(t<0)=0 }$ and ${\boldsymbol {\varLambda }(t>0)=0 }$, which in the frequency domain impose restrictions on ${\hat {{{\boldsymbol{\mathsf{T}}}}}_{z,c}}$ and ${\hat {\boldsymbol {\varLambda }}}$, namely that these quantities are regular in the upper and lower complex half-planes, respectively. This restriction means that the values of ${\hat {{{\boldsymbol{\mathsf{T}}}}}_{z,c}}$ and ${\hat {\boldsymbol {\varLambda }}}$ for different frequencies have a non-trivial relation between them, and thus cannot be chosen independently. Equation (2.22), with the regularity constraints, constitutes a Wiener–Hopf problem. As discussed in § 1, analytical solutions are only known for special cases, and thus we resort to numerical methods. An introduction to Wiener–Hopf problems and numerical methods to solve them is presented in Appendix A. The solution of (2.22), once inverse Fourier transformed, is the optimal causal estimation kernel for the linear system at hand.

In previous studies (Martini et al. 2020; Amaral et al. 2021) we have shown that using the spatiotemporal forcing statistics considerably improves the accuracy of the estimation of a turbulent channel flow. The estimation method presented here preserves the ability to handle these complex forcing models, while being applicable in real time via the simple integration of

which is similar to (2.16a,b), but with integration restricted to positive $\tau$, implying that only present and past sensor measurements are used for estimation.
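As an illustration, the causal integration can be discretized with a rectangle rule. The following Python sketch assumes that the estimate takes the convolution form $\tilde{\boldsymbol{z}}(t)=\int_0^{\infty} {{\boldsymbol{\mathsf{T}}}}_{z,c}(\tau)\,\boldsymbol{y}(t-\tau)\,{\rm d}\tau$ and that the kernel has already been sampled on a uniform grid $\tau_k = k\,\Delta t$. The function name `causal_estimate` and the array layout are hypothetical choices made for this example and are not part of the method described here.

```python
import numpy as np


def causal_estimate(kernel, y, dt):
    """Rectangle-rule approximation of z(t_n) = sum_k T(tau_k) y(t_n - tau_k) dt.

    kernel: (n_tau, n_z, n_y) samples of the causal kernel at tau_k = k*dt >= 0
    y:      (n_t, n_y) sensor readings at t_n = n*dt
    Returns an (n_t, n_z) array of estimates.
    """
    n_tau, n_z, _ = kernel.shape
    n_t = y.shape[0]
    z = np.zeros((n_t, n_z), dtype=np.result_type(kernel, y))
    for n in range(n_t):
        # Only present and past readings (k <= n) contribute to z[n].
        k_max = min(n, n_tau - 1)
        for k in range(k_max + 1):
            z[n] += kernel[k] @ y[n - k] * dt
    return z


# Hypothetical scalar example: exponentially decaying kernel, constant reading.
dt = 0.1
tau = np.arange(50) * dt
kernel = np.exp(-tau)[:, None, None]
y = np.ones((20, 1))
z = causal_estimate(kernel, y, dt)
```

Because the sum only runs over $k\le n$, modifying sensor readings at later times leaves earlier estimates unchanged, which is the discrete counterpart of the causality constraint.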

2.3. Partial-knowledge control

Control can be divided into full-knowledge control, where the full state of the flow is assumed to be known, and partial-knowledge control, where the flow state needs to be estimated from limited, noisy sensor readings. In this section we derive the optimal partial-knowledge control; the full-knowledge case is considered in Appendix B for completeness.

Analogous to the estimation problem, we seek a control law that constructs an actuation signal as a linear function of sensor readings,

where $\boldsymbol {\varGamma }' \in \mathbb {C}^{ n_a\times n_y }$ is the control kernel and $\hat {\boldsymbol {\varGamma }}'$ is its Fourier transform. However, the derivation of the kernel is simplified if instead the actuation is expressed only in terms of $\boldsymbol {y}_1$, i.e. ignoring the influence of the actuator on the sensor,

where again $\boldsymbol {\varGamma } \in \mathbb {C}^{ n_a\times n_y }$ and $\hat {\boldsymbol {\varGamma }}$ is its Fourier transform.

This implies no loss of generality: as $\boldsymbol {y}_2$ is a function only of the previous actuation, which is known, it can be computed using the actuator impulse response and subtracted from $\boldsymbol {y}$ to obtain $\boldsymbol {y}_1$. The approach is equivalent to the formalism of an internal model control (Morari & Zafiriou 1989). The control kernel $\boldsymbol {\varGamma }$ is then chosen so as to minimize a cost functional that trades off the expected value of targets and actuation,

where ${{\boldsymbol{\mathsf{P}}}} \in \mathbb {C}^{ n_a\times n_a }$ is a positive-definite matrix containing actuation penalties. For simplicity, we do not include a state cost matrix. This does not imply any loss of generality, as any state cost matrix ${{\boldsymbol{\mathsf{Q}}}} \in \mathbb {C}^{ n_u\times n_u }$, which must be positive definite, can be absorbed into the definition of the targets as ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}}'= {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}} {{\boldsymbol{\mathsf{Q}}}}_c$, where ${{\boldsymbol{\mathsf{Q}}}} = {{\boldsymbol{\mathsf{Q}}}}_c {{\boldsymbol{\mathsf{Q}}}}_c^{H}$ is a Cholesky decomposition of ${{\boldsymbol{\mathsf{Q}}}}$.

Using the identity $\textrm {Tr}(\boldsymbol {\varPhi }\boldsymbol {\varPsi })=\textrm {Tr}(\boldsymbol {\varPsi }\boldsymbol {\varPhi } )$, valid for any matrices $\boldsymbol {\varPsi }$ and $\boldsymbol {\varPhi }$ with suitable sizes, the functional is rewritten as

The functional is expanded using

Before further expanding these terms, we define

As shown in Appendix B, these terms are the counterparts of $\hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}}$ and $\hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{r}}}}}}$ for the full-knowledge control problem. It is now straightforward to show that

The cost functional can then be expressed as

\begin{align} J &= \int_{-\infty}^{\infty} {\rm Tr}( {{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{z}\boldsymbol{f}} \hat{{{\boldsymbol{\mathsf{F}}}}}{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{z}\boldsymbol{f}}^{{{\dagger}}} + \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}}^{{{\dagger}}}\hat{\boldsymbol{\boldsymbol{\varGamma}}}_{nc} \hat{{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}}\hat{\boldsymbol{\boldsymbol{\varGamma}}}_{nc}^{{{\dagger}}} \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}} - \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}}^{{{\dagger}}} \hat{\boldsymbol{\boldsymbol{\varGamma}}}_{nc} \hat{{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{r}}}}}}^{{{\dagger}}} {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}}^{{{\dagger}}} - {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}} \hat{{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{r}}}}}} \hat{\boldsymbol{\boldsymbol{\varGamma}}}_{nc}^{{{\dagger}}} \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}} )\, {\rm d} \omega \nonumber\\ &\quad + \int_{-\infty}^{\infty}{\rm Tr}( {{\boldsymbol{\mathsf{P}}}} \hat{\boldsymbol{\boldsymbol{\varGamma}}}_{nc} \hat{{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}} \hat{\boldsymbol{\boldsymbol{\varGamma}}}_{nc}^{{{\dagger}}} )\, {\rm d} \omega, \end{align}

where the subscript ‘nc’ was added to emphasize the non-causal nature of the control that will be obtained. Using the cyclic property of the trace and isolating terms with $\hat {\boldsymbol {\boldsymbol {\varGamma }}}_{nc}^{{\dagger} }$, we obtain

where $\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}} = \hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}}\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}}^{{\dagger} } + {{\boldsymbol{\mathsf{P}}}}$ was used. The minimum of $J$ is found by differentiating (2.36) with respect to $\hat {\boldsymbol {\boldsymbol {\varGamma }}}_{nc}^{{\dagger} }$, leading to

which can be solved for the optimal control kernel as

From (2.38), it can be seen that the optimal non-causal control kernel is a combination of the optimal non-causal estimation of targets ($\hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{r}}}}}} \hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}}^{-1}$, solution of (2.18)) with the optimal full-knowledge, non-causal control described in Appendix B ($\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}^{-1}\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}}$, solution of (B3)), acting to minimize these targets with actuation inputs.
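The stationarity condition can be checked numerically at a single frequency. The sketch below assumes the $\hat{\boldsymbol{\varGamma}}_{nc}$-dependent part of the integrand of the cost functional written above, which after use of the cyclic trace property reads ${\rm Tr}(\hat{{\boldsymbol{\mathsf{H}}}}_l \hat{\boldsymbol{\varGamma}} \hat{{\boldsymbol{\mathsf{G}}}}_l \hat{\boldsymbol{\varGamma}}^{\dagger}) - 2\,{\rm Re}\,{\rm Tr}({{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}} \hat{{\boldsymbol{\mathsf{G}}}}_r \hat{\boldsymbol{\varGamma}}^{\dagger} \hat{{\boldsymbol{\mathsf{H}}}}_r)$. Under this assumption the minimizer satisfies $\hat{{\boldsymbol{\mathsf{H}}}}_l \hat{\boldsymbol{\varGamma}} \hat{{\boldsymbol{\mathsf{G}}}}_l = \hat{{\boldsymbol{\mathsf{H}}}}_r {{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}} \hat{{\boldsymbol{\mathsf{G}}}}_r$, which may be arranged differently from (2.38). The matrix sizes and random entries are hypothetical, $\hat{{\boldsymbol{\mathsf{G}}}}_l$ is taken to be Hermitian positive definite, and $\hat{{\boldsymbol{\mathsf{G}}}}_r$ is treated as an $n_u\times n_y$ matrix so that ${{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}\hat{{\boldsymbol{\mathsf{G}}}}_r$ is conformable.

```python
import numpy as np

rng = np.random.default_rng(0)


def random_complex(shape):
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)


def hermitian_pd(n):
    """Random Hermitian positive-definite matrix of size n."""
    m = random_complex((n, n))
    return m @ m.conj().T + n * np.eye(n)


n_a, n_y, n_z, n_u = 2, 3, 2, 5  # hypothetical sizes

H_r = random_complex((n_a, n_z))
P = hermitian_pd(n_a)              # actuation penalty
H_l = H_r @ H_r.conj().T + P       # H_l = H_r H_r^dagger + P
G_l = hermitian_pd(n_y)            # assumed Hermitian positive definite
G_r = random_complex((n_u, n_y))
C_z = random_complex((n_z, n_u))


def integrand(Gamma):
    """Gamma-dependent part of the cost integrand at one frequency."""
    quad = np.trace(H_l @ Gamma @ G_l @ Gamma.conj().T)
    cross = np.trace(C_z @ G_r @ Gamma.conj().T @ H_r)
    return (quad - 2.0 * cross.real).real


# Stationary point: H_l Gamma G_l = H_r C_z G_r.
Gamma_nc = np.linalg.solve(H_l, H_r @ C_z @ G_r) @ np.linalg.inv(G_l)

j_opt = integrand(Gamma_nc)
j_perturbed = [
    integrand(Gamma_nc + 1e-2 * random_complex((n_a, n_y))) for _ in range(20)
]
```

Every perturbed kernel yields a larger value of the integrand than `Gamma_nc`, since the difference equals ${\rm Tr}(\hat{{\boldsymbol{\mathsf{H}}}}_l\,\delta\,\hat{{\boldsymbol{\mathsf{G}}}}_l\,\delta^{\dagger})>0$ for any non-zero perturbation $\delta$.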

As this control kernel is, in general, not causal, it cannot be used in real-time applications. It does, however, provide upper bounds for the effectiveness of causal control, and it is the basis for the control method proposed by Sasaki et al. (2018b), where the kernel is truncated to its causal part. This approach was also successfully applied to experiments (Brito et al. 2021; Maia et al. 2021). However, truncating the non-causal control kernel is, in general, sub-optimal.
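In the time domain, the truncation referred to above amounts to zeroing the kernel at negative time lags. A minimal sketch, assuming a kernel sampled on a time grid that includes negative lags; the Gaussian-shaped kernel and the names `truncate_to_causal` and `gamma_nc` are hypothetical:

```python
import numpy as np


def truncate_to_causal(kernel, tau):
    """Zero the kernel samples at negative time lags (tau < 0).

    kernel: array whose first axis runs over the time lags in tau
    tau:    1-D array of time lags
    """
    mask = (tau >= 0.0).reshape((-1,) + (1,) * (kernel.ndim - 1))
    return kernel * mask


tau = np.linspace(-5.0, 5.0, 1001)
# Hypothetical non-causal scalar kernel with support on both sides of tau = 0.
gamma_nc = np.exp(-(tau - 0.5) ** 2)
gamma_trunc = truncate_to_causal(gamma_nc, tau)
```

Truncation simply discards the part of the kernel that would require future sensor readings and leaves the causal part unchanged, whereas the optimal causal kernel is obtained by re-solving the minimization under the causality constraint.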

To obtain the optimal causal control law, causality is again enforced via Lagrange multipliers. The modified cost functional reads as

\begin{align} J'& = J +\int_{-\infty}^{\infty} {\rm Tr}( \boldsymbol{\varLambda}_- (t) {\boldsymbol{\varGamma}}_c(t) + \boldsymbol{\varLambda}_-^{{{\dagger}}} (t) {\boldsymbol{\varGamma}}^{{{\dagger}}}_c(t) ) \, {\rm d} t \nonumber\\ & =J + \int_{-\infty}^{\infty} {\rm Tr}( \hat{\boldsymbol{\varLambda}}_- \hat{\boldsymbol{\boldsymbol{\varGamma}}}_c + \hat{\boldsymbol{\varLambda}}_-^{{{\dagger}}} \hat{\boldsymbol{\boldsymbol{\varGamma}}}^{{{\dagger}}}_c ) \,{\rm d} \omega, \end{align}

where the subscript $c$ emphasizes the causal nature of the control that will be obtained. Just as in the estimation problem, the linear terms added to $J$ contribute an additional term when taking the derivative of $J'$. The control kernel is now given by the solution of

Again, as in the estimation problem, the requirements that ${\boldsymbol {\varGamma }(t<0)=0}$ and ${\boldsymbol {\varLambda }_-(t>0)=0}$ make this a well-posed problem, and specifically another type of Wiener–Hopf problem. The procedure to solve this problem is equivalent to the one used for the estimation problem, and is presented in Appendix A.

The control kernel $\boldsymbol {\varGamma }'$ can now be recovered from $\boldsymbol {\varGamma }$. The expression for the actuation

can be rewritten as

\begin{equation} \hat{\boldsymbol{a}} = \underbrace{ ({{\boldsymbol{\mathsf{I}}}} + \hat{\boldsymbol{\boldsymbol{\varGamma}}} {{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{y}\boldsymbol{a}} )^{{-}1} \hat{\boldsymbol{\boldsymbol{\varGamma}}}}_{\hat{\boldsymbol{\boldsymbol{\varGamma}}}'} \hat{\boldsymbol{y}}, \end{equation}

thus recovering $\boldsymbol {\varGamma }'$.
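The relation between the two kernels can be verified numerically at a single frequency. The sketch below assumes that the sensor reading splits as $\hat{\boldsymbol{y}}=\hat{\boldsymbol{y}}_1+\hat{\boldsymbol{y}}_2$ with $\hat{\boldsymbol{y}}_2={{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{y}\boldsymbol{a}}\hat{\boldsymbol{a}}$ and that $\hat{\boldsymbol{a}}=\hat{\boldsymbol{\varGamma}}\hat{\boldsymbol{y}}_1$; the matrix sizes and random entries are hypothetical placeholders.

```python
import numpy as np

rng = np.random.default_rng(1)
n_a, n_y = 2, 3  # hypothetical numbers of actuators and sensors


def random_complex(shape):
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)


Gamma = random_complex((n_a, n_y))  # kernel acting on y_1
R_ya = random_complex((n_y, n_a))   # actuator-to-sensor response
y = random_complex(n_y)             # total sensor reading, y = y_1 + y_2

# Kernel acting on the total reading: Gamma' = (I + Gamma R_ya)^{-1} Gamma.
Gamma_prime = np.linalg.solve(np.eye(n_a) + Gamma @ R_ya, Gamma)
a = Gamma_prime @ y

# Remove the actuator contribution from the reading to recover y_1.
y_1 = y - R_ya @ a
```

With these definitions, `Gamma @ y_1` reproduces `a`, so applying $\hat{\boldsymbol{\varGamma}}'$ to the raw reading is equivalent to applying $\hat{\boldsymbol{\varGamma}}$ after the actuator response has been subtracted.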

The closed-loop control diagram is illustrated in figure 3, where the relation between $\hat {\boldsymbol {\boldsymbol {\varGamma }}}'$ and $\hat {\boldsymbol {\boldsymbol {\varGamma }}}$ is shown. As $\boldsymbol {y}_2$ is a function only of the previous actuation, it can be computed in real time and subtracted from $\boldsymbol {y}$ to obtain $\boldsymbol {y}_1$. This procedure is by construction included in ${\boldsymbol {\varGamma }}'$.

Figure 3. Closed-loop control diagram.

Note that it is the process of removing the actuator's response from the sensor readings that can lead to instabilities if the feedback is not accurately modelled (Belson et al. 2013). For amplifier flows, the actuators are typically located downstream of the sensors, the feedback tends to be small, and the optimal control is thus robust (Schmid & Sipp 2016). This explains the successful use of optimal control in many studies (Semeraro et al. 2011; Barbagallo et al. 2012; Morra et al. 2020; Sasaki et al. 2020; Maia et al. 2021). As our method targets amplifier flows, the issue of robustness is not further addressed.

2.4. Recovering Kalman and LQR control gains

We now demonstrate that gain matrices for the Kalman-filter estimation (${{\boldsymbol{\mathsf{L}}}}$) and LQR control (${{\boldsymbol{\mathsf{K}}}}$) can be recovered from the Wiener–Hopf formalism. As both methods have the same optimality properties, they amount to different approaches for obtaining the same result if applied to a system satisfying the common assumptions in the derivation of Kalman filters and LQR control, such as white-in-time disturbances, i.e. $\hat {{{\boldsymbol{\mathsf{F}}}}}(\omega ) = {{\boldsymbol{\mathsf{F}}}}_0$.

From the Kalman-filter estimation equation (Åström & Wittenmark 2013),

the response to an impulse in the $i$th sensor, $y_j(t) =\delta _{j,i}\delta (t)$, is such that $\tilde {\boldsymbol {u}}(0^{+}) = {{\boldsymbol{\mathsf{L}}}}_i$, where ${{\boldsymbol{\mathsf{L}}}}_i$ is the $i$th column of ${{\boldsymbol{\mathsf{L}}}}$. Thus, from (2.16a,b),

The LQR control gains can be recovered by emulating an initial condition $\boldsymbol {u}_0$ at $t=0$, which can be accomplished by setting ${{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {f}}}={{\boldsymbol{\mathsf{I}}}}$ and using $\boldsymbol {f}(t) = \boldsymbol {u}_0 \delta (t)$, or equivalently, $\hat {\boldsymbol {z}}_1 = {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}}{{\boldsymbol{\mathsf{R}}}} \boldsymbol {u}_0$. From the LQR framework,

Since $\boldsymbol {u}(t<0)=0$, it follows that $\boldsymbol {a}(t<0) =0$. Comparing with the solution of (A16), (B6) and (2.10), we have

\begin{align} \hat{\boldsymbol{a}}(\omega) & = \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}_+^{{-}1}(\omega) (\hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}_-^{{-}1} (\omega) \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}} (\omega) \hat{\boldsymbol{z}}(\omega) )_+ \nonumber\\ & = \underbrace{\hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}_+^{{-}1}(\omega) (\hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}_-^{{-}1} (\omega) \hat{{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}} (\omega) {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}} {{\boldsymbol{\mathsf{R}}}}(\omega) )_+}_{\hat{\boldsymbol{\varPi}}_c(\omega)} \boldsymbol{u}_0. \end{align}

Comparing (2.45) and (2.46), we have ${{\boldsymbol{\mathsf{K}}}} = \boldsymbol {\varPi }_c(0)$.

Knowledge of the LQR gains ${{\boldsymbol{\mathsf{K}}}}$ and the Kalman-filter gains ${{\boldsymbol{\mathsf{L}}}}$ may be useful to understand regions of the flow that require accurate estimation to obtain effective control strategies, as discussed by Freire et al. (2020).

2.5. Validation using a linearized Ginzburg–Landau problem

We here compare the kernels and gains computed with the present method to those obtained with the Kalman-filter and the LQR approaches. We use a linearized Ginzburg–Landau problem, for which standard tools can be used for the computation of estimation and control gains without resorting to model reduction. Such models have been used in many previous studies (Bagheri et al. 2009b; Lesshafft 2018; Cavalieri, Jordan & Lesshafft 2019). The model reads as

and we use the same parameters as in Martini et al. (2020), namely: $U=6 , \gamma =1-\mathrm {i}$ and $\mu (x)=\beta \mu _c(1-x/20)$, where $\beta =0.1$ was used and $\mu _c=U^{2} \textrm {Re} (\gamma ) / |\gamma |^{2}$ is the critical value for onset of absolute instability (Bagheri et al. 2009b).
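For reference, the parameter values quoted above can be evaluated directly. The short Python sketch below only computes $\mu_c$ and $\mu(x)$ from the stated formulas; the variable and function names are illustrative.

```python
import numpy as np

U = 6.0
gamma = 1.0 - 1.0j
beta = 0.1

# Critical value for the onset of absolute instability.
mu_c = U**2 * gamma.real / abs(gamma) ** 2


def mu(x):
    """Spatially varying parameter mu(x) = beta * mu_c * (1 - x/20)."""
    return beta * mu_c * (1.0 - np.asarray(x, dtype=float) / 20.0)
```

With these values, $\mu_c = 36/2 = 18$, so that $\mu(x) = 1.8\,(1 - x/20)$, which decreases linearly from $1.8$ at $x=0$ and changes sign at $x=20$.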

For this validation, we use ![]() $\hat {{{\boldsymbol{\mathsf{F}}}}}={{\boldsymbol{\mathsf{I}}}}$, two sensors located at

$\hat {{{\boldsymbol{\mathsf{F}}}}}={{\boldsymbol{\mathsf{I}}}}$, two sensors located at ![]() $x=5$ and

$x=5$ and ![]() $20$, an actuator at

$20$, an actuator at ![]() $x=15$, and a target at

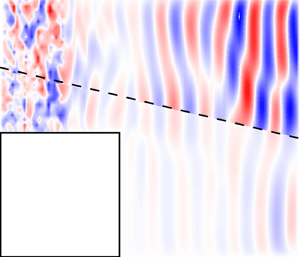

$x=15$, and a target at ![]() $x=30$. Figure 4 compares gains obtained from the proposed approach and from the algebraic Riccati equations. Both approaches produce identical gains, indicating their equivalence when white-noise forcing is assumed. Estimation and control kernels are shown in figure 5, again showing the equivalence between the two methods. The Kalman and LQG kernels are obtained as follows. From (2.16a,b), the state estimation is obtained as

$x=30$. Figure 4 compares gains obtained from the proposed approach and from the algebraic Riccati equations. Both approaches produce identical gains, indicating their equivalence when white-noise forcing is assumed. Estimation and control kernels are shown in figure 5, again showing the equivalence between the two methods. The Kalman and LQG kernels are obtained as follows. From (2.16a,b), the state estimation is obtained as

with the estimation kernel given by

\begin{equation} {{\boldsymbol{\mathsf{T}}}}_{u,kal}(\tau) = \begin{cases} {\rm e}^{ ({{\boldsymbol{\mathsf{A}}}}- {{\boldsymbol{\mathsf{L}}}} {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}})\tau} {{\boldsymbol{\mathsf{L}}}}, & \tau\ge 0, \\ 0, & \tau<0. \end{cases} \end{equation}

The LQG kernel is obtained from

with the solution reading as

and where the control kernel is given by

\begin{equation} {\boldsymbol{\varGamma}}'_{lqg}(\tau) = \begin{cases} {{\boldsymbol{\mathsf{K}}}} \, {\rm e}^{ ({{\boldsymbol{\mathsf{A}}}}-{{\boldsymbol{\mathsf{L}}}}{{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}-{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{a}}}{{\boldsymbol{\mathsf{K}}}}) \tau} {{\boldsymbol{\mathsf{L}}}}, & \tau\ge 0, \\ 0, & \tau<0. \end{cases} \end{equation}

Figures 4 and 5 show that the Riccati-based and proposed approaches provide the same results, illustrating their equivalence.
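The Kalman and LQG kernels given above can be evaluated directly once the gains are known. The sketch below is a minimal NumPy illustration and not the procedure used to produce figures 4 and 5: the $2\times 2$ system, the gains `L` and `K` and the helper `expm_eig` are hypothetical placeholders. In particular, the gains are arbitrary values rather than solutions of the algebraic Riccati equations, and the matrix exponential is computed by eigendecomposition, which assumes a diagonalizable matrix.

```python
import numpy as np


def expm_eig(M, t):
    """exp(M t) via eigendecomposition (M is assumed diagonalizable)."""
    w, V = np.linalg.eig(M)
    return (V * np.exp(w * t)) @ np.linalg.inv(V)


def kalman_kernel(A, L, C_y, tau):
    """T_{u,kal}(tau) = exp((A - L C_y) tau) L for tau >= 0, zero otherwise."""
    if tau < 0:
        return np.zeros_like(L, dtype=complex)
    return expm_eig(A - L @ C_y, tau) @ L


def lqg_kernel(A, L, C_y, B_a, K, tau):
    """Gamma'_lqg(tau) = K exp((A - L C_y - B_a K) tau) L for tau >= 0."""
    if tau < 0:
        return np.zeros((K.shape[0], L.shape[1]), dtype=complex)
    return K @ expm_eig(A - L @ C_y - B_a @ K, tau) @ L


# Hypothetical two-state system with one sensor and one actuator.
A = np.array([[-1.0, 2.0], [0.0, -3.0]])
C_y = np.array([[1.0, 0.0]])
B_a = np.array([[0.0], [1.0]])
L = np.array([[0.5], [0.2]])   # placeholder estimation gain
K = np.array([[0.3, 0.1]])     # placeholder control gain

T_0 = kalman_kernel(A, L, C_y, 0.0)
Gamma_0 = lqg_kernel(A, L, C_y, B_a, K, 0.0)
```

At $\tau=0$ the estimation kernel reduces to ${{\boldsymbol{\mathsf{L}}}}$ and the control kernel to ${{\boldsymbol{\mathsf{K}}}}{{\boldsymbol{\mathsf{L}}}}$, and both vanish for $\tau<0$, as required by causality.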

Figure 4. (a) Blue/red lines show the estimation gains corresponding to the first and second sensor obtained using the proposed method. Dashed black lines show results obtained from the algebraic Riccati equation. (b) Same for control gains.

Figure 5. (a,b) Kernels for the estimation of the system at different locations. Colour lines/black markers show results from the proposed method and from the algebraic Riccati equations. (c) Same for the control kernel. (d) Spatial support of the sensors, actuator and target. Vertical lines show locations for which the estimation kernels in (a,b) were computed.

3. Implementation

The size of the Wiener–Hopf problems (2.22) and (2.40) is independent of the size of the linear system $n_{u}$. The dominant cost to solve these problems is the Wiener–Hopf factorization of $\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}$ and $\hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}}$, which are matrices that scale with $n_a$ and $n_y$, respectively. Accordingly, a solution can be obtained with low cost for arbitrarily large systems, as long as the number of sensors and actuators remains reasonable. However, the coefficient matrices of the Wiener–Hopf problems are functions of the resolvent operator (2.13), and their construction thus requires the inversion of matrices of size $n_u$, which is unfeasible for large systems.

In § 3.1 we show a method to construct the coefficients of the Wiener–Hopf problems efficiently, with an approach to incorporate effective forcing models presented in § 3.2. In § 3.3 we show that the terms in the equations correspond to CSD matrices that can be obtained directly from numerical and physical experiments. The latter technique allows application of the tools developed in this paper when adjoint solvers are not available or in experimental set-ups.

3.1. Matrix-free implementation

In this section we apply methods developed in previous works (Martini et al. 2020, 2021) to construct the terms $\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}} , \hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}} , \hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}}$ and $\hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{r}}}}}}$, circumventing the need to construct the resolvent operator, which would make their construction prohibitive in any practical scenario. The approach consists of using time-domain solutions of the linearized equations to obtain the action of the resolvent operator on a vector, which in turn can be used to reconstruct the operator.

As an example, assume that the action of ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ on a vector $\hat {\boldsymbol {v}}_d$ can be efficiently computed. The operator can be constructed column-wise by setting $\hat {\boldsymbol {v}}_d=\boldsymbol {e}_i$, where $\boldsymbol {e}_i$ is an element of the canonical basis for the forcing space: the resulting vector ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}\boldsymbol {e}_i$ provides the $i$th column of ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$. If $n_f$ is small, ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ can be recovered by repeating the procedure for $i=1,\ldots, n_f$, and subsequently used for the construction of $\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}$.
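The basis-vector construction can be sketched in a few lines of NumPy. This is a minimal illustration, not the solver used in this work: `build_matrix_from_action` and `R_hidden` are hypothetical names, and the random matrix stands in for an operator that is accessible only through its action on a vector. Each product with a canonical basis vector returns one column of the operator.

```python
import numpy as np

def build_matrix_from_action(apply_op, n_inputs):
    """Recover a dense matrix from its action on the canonical basis vectors."""
    columns = []
    for i in range(n_inputs):
        e_i = np.zeros(n_inputs)
        e_i[i] = 1.0
        columns.append(apply_op(e_i))  # one "run" per basis vector
    return np.column_stack(columns)

# Hypothetical stand-in: a hidden n_y x n_f matrix available only via products.
rng = np.random.default_rng(0)
n_y, n_f = 3, 4
R_hidden = rng.standard_normal((n_y, n_f)) + 1j * rng.standard_normal((n_y, n_f))

R_yf = build_matrix_from_action(lambda v: R_hidden @ v, n_f)
```

The number of operator applications equals `n_f`, which is why the procedure is attractive only when the forcing space is small.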

To obtain the action of the resolvent operator on a vector for all resolved frequencies simultaneously, we use an approach based on the transient-response method, developed in Martini et al. (Reference Martini, Rodríguez, Towne and Cavalieri2021). The action of ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {f}}$ on a vector $\hat {\boldsymbol {v}}_d(\omega )$ is obtained using the system
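A sketch of this system, written by analogy with the adjoint relations given later in this section. The symbol ${{\boldsymbol{\mathsf{A}}}}$ for the linearized operator and the exact form of the time-domain equations are assumptions of this sketch; the frequency-domain relations follow from the definitions of ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {f}}$ implied by their adjoints. The time-domain form would read

$$\begin{gather}\frac{\mathrm{d}\boldsymbol{q}}{\mathrm{d}t} = {{\boldsymbol{\mathsf{A}}}}\boldsymbol{q} + {{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}} \boldsymbol{v}_d, \quad \boldsymbol{r}_d = {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}} \boldsymbol{q}, \quad \boldsymbol{s}_d = {{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}} \boldsymbol{q}, \end{gather}$$

with frequency-domain counterpart

$$\begin{gather}\hat{\boldsymbol{q}} = {{\boldsymbol{\mathsf{R}}}}{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}} \hat{\boldsymbol{v}}_d, \quad \hat{\boldsymbol{r}}_d = \underbrace{{{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}{{\boldsymbol{\mathsf{R}}}}{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}}}_{{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{y}\boldsymbol{f}}} \hat{\boldsymbol{v}}_d, \quad \hat{\boldsymbol{s}}_d = \underbrace{{{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{z}}}{{\boldsymbol{\mathsf{R}}}}{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}}}_{{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{z}\boldsymbol{f}}} \hat{\boldsymbol{v}}_d, \end{gather}$$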

where (3.2a–c) is obtained from a Fourier transform of (3.1a–c). Here $\hat {\boldsymbol {v}}_d$ is regarded as an input, with $\hat {\boldsymbol {r}}_d$ and $\hat {\boldsymbol {s}}_d$ as outputs of the system, whose dimensions are implied by the context. Using $\boldsymbol {v}_d(t)=\boldsymbol {e}_i\delta (t)$ ensures that the canonical basis is used for all frequencies. A time-domain solution of (3.1a–c) will be referred to as a forcing direct run. An actuator direct run is obtained by replacing ${{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {f}}}$ with ${{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {a}}}$, and provides the action of ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {a}}$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {a}}$ on a given vector.
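A forcing direct run with impulsive input can be illustrated on a toy system. The sketch below is an assumption-laden example rather than the implementation used here: the 2x2 matrices `A`, `B` and `C` are made up, the time marching uses the exact propagator of the toy system, and the Fourier convention $\hat{y}(\omega)=\int y(t)\,\mathrm{e}^{-\mathrm{i}\omega t}\,\mathrm{d}t$ is chosen for illustration, under which the resolvent is $(\mathrm{i}\omega {{\boldsymbol{\mathsf{I}}}}-{{\boldsymbol{\mathsf{A}}}})^{-1}$. A single time-domain solution yields the response at all resolved frequencies.

```python
import numpy as np

# Toy stable linear system (assumed for illustration): dq/dt = A q + B v(t), y = C q
A = np.array([[-1.0, 2.0], [-2.0, -1.0]])
B = np.array([[1.0], [0.0]])
C = np.array([[0.0, 1.0]])

dt, n_steps = 0.01, 3000
t = dt * np.arange(n_steps + 1)

# Exact one-step propagator exp(A dt) from the eigendecomposition of A
lam, V = np.linalg.eig(A)
step = (V * np.exp(lam * dt)) @ np.linalg.inv(V)

# Impulsive forcing v(t) = e_i delta(t) is equivalent to the initial state q(0) = B e_i
q = B[:, 0].astype(complex)
y = np.empty(n_steps + 1, dtype=complex)
for n in range(n_steps + 1):
    y[n] = (C @ q)[0]   # sensor reading at time t[n]
    q = step @ q        # march one time step

# Fourier transform of the reading (trapezoidal rule) at a set of frequencies
omegas = np.linspace(-5.0, 5.0, 21)
weights = np.full(n_steps + 1, dt)
weights[0] = weights[-1] = dt / 2
y_hat = np.exp(-1j * np.outer(omegas, t)) @ (weights * y)

# Reference: frequency-by-frequency solve with the resolvent (i w I - A)^{-1}
ref = np.array([(C @ np.linalg.solve(1j * w * np.eye(2) - A, B))[0, 0] for w in omegas])
```

Here `y_hat` and `ref` agree to within the quadrature error, while the time-domain route requires no linear solve per frequency.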

Similarly, the action of the operators ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}^{{\dagger} }$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {a}}^{{\dagger} }$ on a vector is constructed by time marching the adjoint system,

$$\begin{gather}\hat{\boldsymbol{q}} = {{\boldsymbol{\mathsf{R}}}}^{{{\dagger}}}{{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}^{{{\dagger}}} \hat{\boldsymbol{v}}_a, \quad \hat{\boldsymbol{r}}_a = \underbrace{{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{f}}}^{{{\dagger}}}{{\boldsymbol{\mathsf{R}}}}^{{{\dagger}}}{{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}^{{{\dagger}}}}_{{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{y}\boldsymbol{f}}^{{{\dagger}}}} \hat{\boldsymbol{v}}_a, \quad \hat{\boldsymbol{s}}_a = \underbrace{{{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol{a}}}^{{{\dagger}}}{{\boldsymbol{\mathsf{R}}}}^{{{\dagger}}}{{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol{y}}}^{{{\dagger}}}}_{{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol{y}\boldsymbol{a}}^{{{\dagger}}}} \hat{\boldsymbol{v}}_a, \end{gather}$$

referred to here as a sensor adjoint run. A target adjoint run is obtained by replacing ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {y}}}$ with ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}}$ and can be used to obtain the action of ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {f}}^{{\dagger} }$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {a}}^{{\dagger} }$.

The action of more complex terms can be obtained by solving (3.1a–c) and (3.3a–c) in succession. For example, if the output $\boldsymbol {r}_a$ of a sensor adjoint run is an input of a direct run, then the outputs of the latter will be given by ${ \hat {\boldsymbol {r}}_d = {{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}^{{\dagger} }\hat {\boldsymbol {v}}_a}$ and ${ \hat {\boldsymbol {s}}_d = {{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {f}}{{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}^{{\dagger} }\hat {\boldsymbol {v}}_a }$. The resulting system is referred to as a sensor adjoint-direct run. Following similar procedures, direct-adjoint, direct-adjoint-direct and adjoint-direct-adjoint runs are constructed. The terms whose action is obtained from each of these runs are illustrated in figure 6.
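The chaining of runs can be mimicked at a single frequency with dense matrices. In this hedged sketch every name and matrix is hypothetical (a random toy operator, full-rank forcing, two sensors and one target), and the explicit resolvent `R` is formed only because the system is tiny; in practice each function would correspond to a time-domain solution.

```python
import numpy as np

rng = np.random.default_rng(1)
n, n_y, n_z, omega = 6, 2, 1, 0.7

# Toy operators (assumed): full-rank forcing, a few sensors and targets
A = -np.eye(n) + 0.3 * rng.standard_normal((n, n))
R = np.linalg.inv(1j * omega * np.eye(n) - A)   # explicit only for this toy check
B_f = np.eye(n)
C_y = rng.standard_normal((n_y, n))
C_z = rng.standard_normal((n_z, n))

def sensor_adjoint_run(v_a):
    """Return r_a = R_yf^dagger v_a."""
    return B_f.conj().T @ (R.conj().T @ (C_y.conj().T @ v_a))

def forcing_direct_run(v_d):
    """Return (r_d, s_d) = (R_yf v_d, R_zf v_d)."""
    q = R @ (B_f @ v_d)
    return C_y @ q, C_z @ q

def sensor_adjoint_direct_run(v_a):
    """Feed the adjoint output into a direct run."""
    return forcing_direct_run(sensor_adjoint_run(v_a))

# n_y chained runs recover the small matrices without ever forming R_yf (n_y x n_f)
outputs = [sensor_adjoint_direct_run(e) for e in np.eye(n_y)]
RyfRyfH = np.column_stack([r for r, _ in outputs])
RzfRyfH = np.column_stack([s for _, s in outputs])
```

The cost scales with the number of sensors rather than with the dimension of the forcing space, which is the motivation for chained runs when the forcing is high rank.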

Figure 6. Illustration of the terms obtained from different combinations of direct and adjoint time-domain solutions. The left and right flowcharts represent a sensor adjoint(-direct-adjoint) system and an actuator direct(-adjoint-direct) system. Readings refer to inner products of the state with sensors/forcing/targets/actuators spatial supports, and F.T. to a Fourier transform. Diagrams for the target and forcing systems are equivalent to the left (right) diagrams with ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {y}}}({{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {a}}})$ exchanged by ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {z}}}({{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {f}}})$, and the $\boldsymbol {y}(\textit {a})$ subscripts of the rightmost terms in each quantity exchanged by $\boldsymbol {z}(f)$.

In practice, to link together direct and adjoint runs as described above, checkpoints of the output of one time integration are saved to disk and subsequently read and interpolated in the following run to be used as forcing terms. Details of this procedure, as well as the impact of different interpolation strategies, are described by Martini et al. (Reference Martini, Rodríguez, Towne and Cavalieri2021). Alternatively, Farghadan et al. (Reference Farghadan, Towne, Martini and Cavalieri2021) developed an approach that minimizes the data to be retained using streaming Fourier sums to obtain the action of the operator on a discrete set of frequencies.
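The idea behind a streaming Fourier sum can be sketched as follows. This is not the implementation of Farghadan et al.: the class name `StreamingFourierSum`, the rectangle-rule quadrature and the test signal are all assumptions made for illustration. Each new sample updates the Fourier coefficients at a fixed set of frequencies, so the time history never needs to be stored.

```python
import numpy as np

class StreamingFourierSum:
    """Accumulate y_hat(w_k) ~ sum_n y(t_n) exp(-i w_k t_n) dt one sample at a time."""

    def __init__(self, omegas, n_outputs, dt):
        self.omegas = np.asarray(omegas, dtype=float)
        self.dt = dt
        self.acc = np.zeros((self.omegas.size, n_outputs), dtype=complex)

    def update(self, t, sample):
        phase = np.exp(-1j * self.omegas * t)          # one factor per frequency
        self.acc += self.dt * np.outer(phase, sample)  # rank-one update, no history kept

dt, n_steps = 0.02, 1000
omegas = np.array([0.0, 0.5, 1.0, 2.0])
stream = StreamingFourierSum(omegas, n_outputs=2, dt=dt)

history = []  # kept here only to check the streaming result against a batch sum
for n in range(n_steps):
    t_n = n * dt
    y_n = np.array([np.exp(-t_n) * np.cos(2.0 * t_n), np.exp(-0.5 * t_n)])
    stream.update(t_n, y_n)
    history.append(y_n)

times = dt * np.arange(n_steps)
batch = dt * np.exp(-1j * np.outer(omegas, times)) @ np.array(history)
```

Memory use is proportional to the number of retained frequencies times the output size, independent of the number of time steps.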

The most effective approach for constructing the Wiener–Hopf problems depends on the rank of forcing and targets. In the following sections, we outline the best approach for four possible scenarios and discuss the associated computational cost and the types of parametric studies that can be easily conducted in each case.

3.1.1. Low-rank $\boldsymbol{\mathsf{B}}_f$ and $\boldsymbol{\mathsf{C}}_{z}$

In this scenario, the terms ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}, {{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {a}}^{{\dagger} }$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {f}}^{{\dagger} }$ are small matrices and can be obtained using $n_f$ direct and $n_z$ adjoint runs. With those terms, $\hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{l}}}}}} , \hat {{{\boldsymbol{\mathsf{G}}}}_{{{\boldsymbol{\mathsf{r}}}}}} , \hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{l}}}}}}$ and $\hat {{{\boldsymbol{\mathsf{H}}}}_{{{\boldsymbol{\mathsf{r}}}}}}$ can be constructed.

Note that as ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ is obtained from state readings in (3.1a–c), storing snapshots of $\boldsymbol {q}(t)$ allows for computing ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ for any ${{{\boldsymbol{\mathsf{C}}}}_{\boldsymbol {y}}}$ from inexpensive data post-processing. Using the same strategy in (3.3a–c) allows ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {a}}^{{\dagger} }$ to be computed for any ${{{\boldsymbol{\mathsf{B}}}}_{\boldsymbol {a}}}$. It is thus possible to inexpensively compute the control kernels for any sensor and actuator when the forcing and targets are low rank.
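The snapshot post-processing can be illustrated with a toy system. In this sketch the 3x3 operator `A`, the rank-one forcing `B_f`, the helper `R_yf_from_snapshots` and the Fourier convention (with resolvent $(\mathrm{i}\omega {{\boldsymbol{\mathsf{I}}}}-{{\boldsymbol{\mathsf{A}}}})^{-1}$) are assumptions made for illustration. One impulsive direct run is stored and Fourier transformed once; any sensor matrix can then be applied afterwards at negligible cost.

```python
import numpy as np

# Toy system (assumed for illustration): dq/dt = A q + B_f v(t), with n_f = 1
A = np.array([[-1.0, 2.0, 0.0], [-2.0, -1.0, 1.0], [0.0, 0.0, -0.5]])
B_f = np.array([[1.0], [0.0], [1.0]])

dt, n_steps = 0.01, 4000
t = dt * np.arange(n_steps + 1)
lam, V = np.linalg.eig(A)
step = (V * np.exp(lam * dt)) @ np.linalg.inv(V)   # exact propagator exp(A dt)

# One forcing direct run with impulsive input, storing state snapshots q(t)
Q = np.empty((3, n_steps + 1), dtype=complex)
Q[:, 0] = B_f[:, 0]
for n in range(n_steps):
    Q[:, n + 1] = step @ Q[:, n]

# Fourier transform the snapshots once (trapezoidal rule)
omegas = np.linspace(-3.0, 3.0, 13)
w = np.full(n_steps + 1, dt)
w[0] = w[-1] = dt / 2
Q_hat = (Q * w) @ np.exp(-1j * np.outer(t, omegas))   # shape (n_state, n_omega)

def R_yf_from_snapshots(C_y):
    """Column of R_yf at every frequency, for an arbitrary sensor matrix C_y."""
    return C_y @ Q_hat

# Two different sensor choices reuse the same stored run
C_single = np.array([[1.0, 0.0, 0.0]])
C_pair = np.array([[0.0, 1.0, 1.0], [1.0, 0.0, -1.0]])
R_single = R_yf_from_snapshots(C_single)
R_pair = R_yf_from_snapshots(C_pair)
```

Changing the sensor only changes the final matrix product, so sensor-placement studies require no additional time integration.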

3.1.2. High-rank $\boldsymbol{\mathsf{B}}_{f}$ and low-rank $\boldsymbol{\mathsf{C}}_{z}$

In this scenario, ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}$ and ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {z}\boldsymbol {f}}$ are large matrices, and it is impractical to construct and manipulate them. Instead, ${{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}} {{\boldsymbol{\mathsf{R}}}}_{\boldsymbol {y}\boldsymbol {f}}^{{\dagger} }$ and