Introduction

The developmental programming literature has established not only that environments encountered at age t predict health at age t+n but also that the strongest predictions appear when t defines critical or sensitive developmental periods.Reference Heindel and Vandenberg 1 , Reference Wadhwa, Buss, Entringer and Swanson 2 A smaller literature argues that these critical periods include gestation. This work posits that pregnant women program fetuses to survive stressors likely at birth, but that these programs can cause illness later in life.Reference Barker 3 , Reference Gluckman, Hanson, Cooper and Thornburg 4 Most notably, this literature has argued that a mother’s nutrition during gestation determines where her infant will fall on the distribution of ‘conserve or convert’ metabolic programs.Reference Claussnitzer, Dankel and Kim 5 , Reference Frayling, Timpson and Weedon 6 According to this argument, poorly nourished mothers program fetuses to conserve food energy as adipose tissue. Well-nourished mothers, conversely, would produce offspring more likely to convert food energy to heat. Natural selection presumably conserved mutations that select among a broad repertoire of fetal metabolic programs because food energy in human ecosystems varied widely over evolutionary time.Reference Bateson, Barker and Clutton-Brock 7 , Reference Gluckman and Hanson 8

The literature positing developmental programming in utero suggests that at least two circumstances can lead to adverse outcomes in contemporary populations. First, transient events may cause poor maternal nutrition during gestation, but the resulting children may enjoy a relatively calorically enriched environment.Reference Ravelli, van Der Meulen, Osmond, Barker and Bleker 9 This ‘mismatch,’ created, for example, by famine, increases the risk of obesity and the metabolic syndrome by placing energy-conserving children in energy-rich environments.Reference Ravelli, van Der Meulen, Osmond, Barker and Bleker 9 , Reference Veenendaal, Painter and de Rooij 10

A second circumstance under which developmental programming can cause adverse outcomes arises from the possibility that programming in utero becomes heritable. Mothers can presumably inherit a tendency to produce children with characteristics adaptive in the environments occupied by ancestors.Reference Neel 11 Economic development and migration in the modern world have, however, rearranged mothers in socioeconomic or geographic space so that many occupy environments unlike those occupied by their antecedents. A mother prone by inheritance to program energy-conserving fetuses, but who lives in a calorically rich environment, will have children at risk of obesity and the metabolic syndrome.

The developmental programming literature likely arose from many antecedents including the long-observed association between the diet of individual mothers and the morphology of their offspring.Reference Rinaudo and Wang 12 That association does not axiomatically imply that the food environment causes metabolic syndrome because some characteristic of the mother may determine both her diet and her infant’s morphology.Reference Speakman 13 Researchers have responded to this possibility by searching for historical ‘natural experiments’ in which pregnant women would not have had access to good nutrition regardless of their personal characteristics. The search inevitably led to the hypothesis that populations exposed to famine would produce offspring who would, on average, die younger than other cohorts born earlier and later in the same place.Reference Doblhammer, van den Berg and Lumey 14 , Reference Saxton, Falconi, Goldman-Mellor and Catalano 15

Well-documented famines in societies that have kept dependable vital statistics remain rare, leaving researchers with few opportunities to search for a signal of developmental programming in entire birth cohorts. Searches for such signals have, moreover, produced conflicting results.Reference Doblhammer, van den Berg and Lumey 14 – Reference Li and Lumey 17 A meta-analysis of 21 published studies on the effects of the 1959–1961 Chinese famine, for example, finds mixed results.Reference Li and Lumey 17 Indeed, the birth cohort perhaps most scrutinized for signals of developmental programming in utero – persons in gestation in the ‘Dutch Famine’ of 1944–1945 – has shown no excess mortality due to metabolic syndrome.Reference Lumey, Stein, Kahn and Van der 18 – 22

Conflicting findings in the famine research could arise from several artifacts.Reference Li and Lumey 17 , Reference Paneth and Susser 23 Data dependable enough to estimate age at death in spatially defined populations exposed to famine typically appear well after the onset of widespread migration. Migration likely distorts estimates of cohort life span. Persons exposed to famine may migrate out, while persons of the same age who did not experience the famine may migrate in. The few societies that have kept dependable registries of populations that aged in place have, moreover, nearly always begun benefiting from the introduction of modern public health and medical interventions during the lifetime of the birth cohorts exposed in utero to famine. These interventions plausibly induced temporal variation in cohort life expectancy that confounds measurement of the effects, if any, of developmental programming in utero. Studies of contemporary famines also suffer from the problem that exposed cohorts may not have lived long enough to exhibit premature death programmed in utero.Reference Li and Lumey 17 , 22

Further tests of famine-induced developmental programming in utero would seem a worthy addition to the Developmental Origins of Health and Disease research agenda if for no other reason than that the applied literatureReference Koletzko, Brigitte and Chourdakis 24 – Reference Wijesuriya, Williams and Yajnik 26 and public discourseReference Zimmer 27 , Reference Sawyer 28 appear to have accepted such programming as settled science that should shape public health interventions. The World Health OrganizationReference Delisle 29 and the United Nations Food and Agriculture OrganizationReference Kennedy, Nantel and Shetty 30 have, based on the assumption of developmental programming in utero of entire birth cohorts, urged altering culture that shapes diet and nutrition among women of reproductive age. These initiatives appear to have influenced physicians worldwide. A recent survey of physicians in Australia, for example, discovered that over 90% ‘would be comfortable recommending potential prevention strategies to reduce non-communicable disease related to early life exposure, with lifestyle modification and dietary supplements being the most frequently cited examples. Pregnant women and women planning pregnancy were the most frequently cited target groups for such prevention strategies.’Reference McMullan, Fuller and Caterson 31

We offer a test of famine-induced developmental programming in utero that determines whether average age at death (i.e., cohort life expectancy) fell among Swedes exposed in utero to the dire famine of 1773. We compare the exposed Swedes to others born from 1751 through 1800 because these 50 birth cohorts meet the standards for a rigorous test based on time-series methods that control for autocorrelation. The cohorts suffered a well-documented famine and did not benefit from public health interventions. These cohorts also aged in place. Outmigration of Swedes remained rare before the mid-19th century and then drew mostly from persons of reproductive age. 32 Variation in cohort life expectancy among Swedes born before 1801, therefore, is unlikely to have arisen from selective migration.

No controversy can arise over whether the Swedish Famine of 1773 would have induced developmental programming in utero of metabolic syndrome should such programming exist. Crop yields fell dramatically in the harvest of 1772, causing food shortages through most of 1773.Reference Utterström 33 The average age at which Swedes died in 1773 (i.e., period life expectancy) fell to 18 years – the lowest in Sweden’s history and more than 10 years lower than the next lowest year. We further note that Swedes in the 18th and 19th centuries died of non-communicable disease and recorded death by causes we would now include in metabolic syndrome. Heart disease and stroke among older persons, for example, were major killers then as now.Reference Bengtsson and Lindström 34 , Reference Brandstrom and Tedebrand 35

Method

Data

We used the highest quality life table data available to measure sex-specific average age at death for each annual birth cohort (i.e., cohort life expectancy) for the years 1751 through 1800. We extracted these data from the Human Mortality Database 36 that includes vital statistics that meet minimum standards, set by demographers unassociated with this paper, of accuracy and completeness. No earlier data for Sweden, indeed anywhere, meets standards for inclusion in the Database. Cohorts born after 1800 in Sweden experienced much outmigration making estimates of cohort life span less accurate than for those born earlier. 32 Moreover, 50 data points provide sufficient statistical power to use the time-series tests described below.

Analyses

Empirical tests of association have, since at least the work of Galton,Reference Stigler 37 measured the extent to which two or more variables move away from their statistically expected values in the same cases. ‘Cases’ in our tests include 50 years (i.e., 1751 through 1800) during which Sweden experienced the famine of 1773 and for which we know the average age at death for all the persons born in each year (i.e., cohort life expectancy) as well as the odds of death among Swedes of reproductive age.

Tests of association typically specify the mean of all observed values of a variable as the value expected for any case. Variables measured over time, however, often exhibit ‘autocorrelation’ in the form of secular trends, cycles, or the tendency to remain elevated or depressed, or to oscillate, after high or low values. The expected value of an autocorrelated series is not its mean, but rather the value predicted by autocorrelation. Following practice dating to Fisher,Reference Fisher 38 we solve this problem by identifying time-series models that best fit observed autocorrelation in our two series and by using the predicted values as statistically expected. We used the most developed and widely disseminated type of such modeling. The method, devised by Box and Jenkins,Reference Box and Jenkins 39 identifies which of a very large family of models best fits serial measurements. The Box and Jenkins approach attributes autocorrelation to integration as well as to ‘autoregressive’ and ‘moving average’ parameters. Integration describes secular trends and strong seasonality. Autoregressive parameters best describe patterns that persist for relatively long periods, while moving average parameters parsimoniously describe less persistent patterns.
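The difference between the mean and the value predicted by autocorrelation can be illustrated with a short sketch. It assumes the simplest case, a first-order autoregressive pattern estimated from the lag-1 autocorrelation, and uses a hypothetical ten-point series; the function names are illustrative and do not reproduce the Box and Jenkins identification procedure.

```python
import numpy as np

def lag1_autocorrelation(y):
    """Lag-1 autocorrelation of a series around its own mean."""
    y = np.asarray(y, dtype=float)
    d = y - y.mean()
    return float(np.sum(d[1:] * d[:-1]) / np.sum(d ** 2))

def ar1_expected(y):
    """Expected value for each year after the first, given the prior year.

    Assumes a first-order autoregressive pattern: the expected value is
    the mean plus the lag-1 autocorrelation times the previous year's
    departure from the mean.
    """
    y = np.asarray(y, dtype=float)
    r1 = lag1_autocorrelation(y)
    return y.mean() + r1 * (y[:-1] - y.mean())

# Hypothetical series (not the Swedish data) that drifts above and below 36.
series = [34, 35, 37, 38, 37, 35, 34, 35, 37, 38]
r1 = lag1_autocorrelation(series)
expected = ar1_expected(series)
print(f"mean = {np.mean(series):.1f}, lag-1 autocorrelation = {r1:.3f}")
print("expected values for years 2-10:", np.round(expected, 2))
```

In this hypothetical series the expected value for a year that follows a low year lies below the overall mean, which is the sense in which the mean is the wrong benchmark for an autocorrelated series.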

Our test proceeded through the following steps. First, we used Box and Jenkins methods to detect and model autocorrelation in the average age at death (i.e., cohort life expectancy) for Swedes born in the years 1751 through 1800. Second, we created an indicator variable for famine exposure scored 1 for 1773 and 0 otherwise. We scored 1773 as the exposed birth year because the famine, which began with the fall 1772 crop failure, did not affect most gestations in 1772, and those it did affect yielded births in 1773. Third, we estimated the general equation, shown below, formed by adding, as a predictor variable, the famine indicator variable to the time-series model for cohort life expectancy specified in step 1. We estimated the association between the famine and births in 1773, 1774 and 1775. We included 1774 because the famine extended through most of 1773 (i.e., until the harvest) implying that a fraction of births in 1774 would have been exposed to famine during gestation. We included 1775 as a falsification test because the fetal programming narrative would not predict an effect of famine on that birth cohort.
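One way to write the general equation is sketched here. The form assumes the standard Box and Jenkins intervention model and assumes that ω2 and ω3 attach to the famine indicator lagged one and two years, so that they capture the 1774 and 1775 birth cohorts; the symbols are those defined in the next paragraph.

```latex
Y_t = C + \omega_1 I_t + \omega_2 I_{t-1} + \omega_3 I_{t-2}
      + \frac{\left(1 - \theta B^{q}\right)}{\left(1 - \phi B^{p}\right)}\, a_t
```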

Y_t is Swedish cohort life expectancy for births in year t. C is a constant. ω1 through ω3 are the estimated effect parameters. I_t is the famine exposure variable (scored 1 for 1773 and 0 otherwise) for year t. θ is a moving average parameter. ϕ is an autoregressive parameter. B is the ‘backshift operator’ or value of a at year t − p or at year t − q. a_t is the residual of the model at year t. Box and Jenkins methods, applied in Step 1, determine not only which autoregressive and/or moving average parameters (i.e., θ and/or ϕ) appear in the final model, but the values of p and q as well. Developmental programming in utero predicts that ω1 will be significantly <0.
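Steps 2 and 3 can be sketched in code. The following is a minimal illustration, not the estimation routine used for the results reported here. It assumes a first-order autoregressive error (p = 1, no moving average term), estimates it by a grid search with least squares on quasi-differenced data rather than with full Box and Jenkins software, and runs on simulated data. The function names and the simulated series are hypothetical.

```python
import numpy as np

def famine_indicators(years, famine_year=1773, n_terms=3):
    """Columns I_t, I_{t-1}, I_{t-2}: scored 1 for births in the famine
    year, the next year, and the year after that; 0 otherwise."""
    years = np.asarray(years)
    return np.column_stack(
        [(years == famine_year + k).astype(float) for k in range(n_terms)]
    )

def fit_ar1_intervention(y, X, grid=np.linspace(-0.95, 0.95, 191)):
    """Fit y_t = C + X_t w + n_t with n_t = phi * n_{t-1} + a_t.

    For each candidate phi, both sides are quasi-differenced so that the
    remaining error is a_t, and C and w are found by least squares.
    The phi with the smallest residual sum of squares is kept.
    """
    y = np.asarray(y, dtype=float)
    Z = np.column_stack([np.ones(len(y)), X])  # constant plus indicators
    best = None
    for phi in grid:
        y_star = y[1:] - phi * y[:-1]
        Z_star = Z[1:] - phi * Z[:-1]
        beta, *_ = np.linalg.lstsq(Z_star, y_star, rcond=None)
        sse = float(np.sum((y_star - Z_star @ beta) ** 2))
        if best is None or sse < best[0]:
            best = (sse, float(phi), beta)
    _, phi, beta = best
    return {"phi": phi, "C": float(beta[0]), "omega": beta[1:]}

# Simulated data (an assumption for illustration, not the Swedish series):
# 50 cohorts around 36 years with AR(1) noise and a 4-year gain in 1773.
rng = np.random.default_rng(0)
years = np.arange(1751, 1801)
noise = np.zeros(len(years))
for t in range(1, len(years)):
    noise[t] = 0.7 * noise[t - 1] + rng.normal(scale=0.1)
X = famine_indicators(years)
y = 36.0 + X @ np.array([4.0, 0.0, 0.0]) + noise

fit = fit_ar1_intervention(y, X)
print("omega1, omega2, omega3:", np.round(fit["omega"], 2))
```

In this sketch a negative first element of `omega` would correspond to the shortened life span that developmental programming in utero predicts for the 1773 cohort.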

Results

Table 1 includes several pertinent statistics for Sweden during our test period, including those that show more Swedes died in 1773 than in any other test year. Infant mortality in 1773 reached a level never again seen in Sweden. And yet, even before adjusting for autocorrelation, life span for the cohort born in 1773 exceeded that for any other cohort during the test period. Table 1 further shows that the 1773 birth cohort exhibited exceptional life expectancy at ages 6 and 30, suggesting that whatever mechanisms accounted for the longevity of the famine cohort remained at work not only well past childhood but also past the likely threshold for the onset of metabolic disease.

Table 1 Swedish population, births, deaths, infant mortality, and cohort life expectancy (CLE) at birth and at ages 6 and 30

Column 1 of Table 2 shows the Box–Jenkins model identified in Step 1 for cohort life expectancy. As shown by the constant, cohorts born from 1751 through 1800 lived, on average, about 36 years. The autoregressive parameter (i.e., 0.717) shows that values at time t persist into following years although in geometrically decreasing proportions.
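The geometric decline implied by the autoregressive parameter can be worked out directly. The sketch below takes the reported value of 0.717 and, assuming a first-order autoregressive process, computes the share of a one-year departure from the expected value that carries into each of the next five years; the variable names are illustrative.

```python
import math

phi = 0.717  # autoregressive parameter reported in the text

# Share of a departure at year t that persists into year t + k.
carryover = {k: phi ** k for k in range(1, 6)}

# Years until the persisting share falls to one half.
half_life = math.log(0.5) / math.log(phi)

for k, share in carryover.items():
    print(f"t+{k}: {share:.3f}")
print(f"half-life: {half_life:.2f} years")
```

Under this assumption roughly half of a departure remains after about two years, and less than a fifth after five.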

Table 2 Coefficients (standard errors in parentheses) for models predicting cohort life expectancy for annual Swedish birth cohorts (1751–1800)

* P<0.0001; two-tailed test.

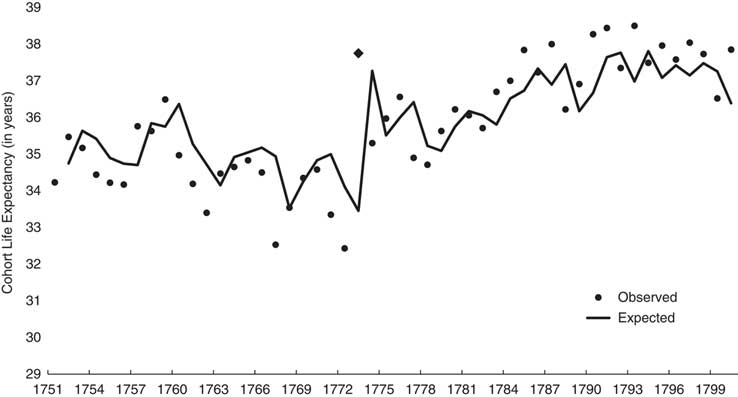

Figure 1 shows the observed and expected (i.e., from Box–Jenkins model shown in Table 2) values of cohort life expectancy. The differences between expected and observed values at 1773 and 1774 become, in effect, the variance in cohort life expectancy ‘available’ for association with the famine.

Fig. 1 Observed (dots; 1773 shown with trapezoid) and expected (line) annual cohort life expectancy for Sweden from 1751 through 1800.

Column 2 of Table 2 shows the results of Step 3 in which we estimated parameters for a model that includes the binary famine variable and Box–Jenkins parameters. The cohort born in 1773 lived 4.2 (s.e.=0.86) years longer than otherwise expected. Consistent with the reasoning of our falsification test, neither the 1774 nor 1775 birth cohort exhibited longevity different from expected. These results imply that whatever mechanisms the famine induced in the exposed cohort did not extend to future cohorts.

Column 3 of Table 2 shows the estimated parameters for the ‘pared’ model which omits the famine indicator variable for 1774 and 1775. The coefficient for the famine year of 1773 (i.e., 3.9) remains positively signed and more than twice its s.e. (i.e., 0.66).
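The size of the 1773 coefficients relative to their standard errors can be checked with simple arithmetic. The sketch below divides each coefficient reported above by its s.e. and compares the ratio with conventional two-tailed critical values; the use of a normal approximation (1.96 and 2.576) and the variable names are assumptions made for illustration.

```python
# Coefficient and standard error for the 1773 cohort, as reported in the text.
estimates = {
    "full model (column 2)": (4.2, 0.86),
    "pared model (column 3)": (3.9, 0.66),
}

# Two-tailed critical values under a normal approximation (an assumption).
CRITICAL_95 = 1.96
CRITICAL_99 = 2.576

ratios = {name: coef / se for name, (coef, se) in estimates.items()}
for name, ratio in ratios.items():
    print(f"{name}: coefficient/s.e. = {ratio:.2f}, "
          f"exceeds 95%: {ratio > CRITICAL_95}, "
          f"exceeds 99%: {ratio > CRITICAL_99}")
```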

We anticipate several questions regarding our estimations. Did, for example, males and females differ in their response to the famine? We repeated our test separately for male and female cohort life expectancy and found essentially the same associations discovered for the entire population. The famine test model (i.e., same as column 2 in Table 2) estimated a gain of 4.14 (s.e.=0.89) years for females and 4.25 (s.e.=0.8174) for males born in 1773.

Could outliers in the life expectancy series have inflated our s.e. and led to false acceptance of the null hypothesis for the cohorts born in 1774 and 1775? We used outlier detection and correction routinesReference Chang, Tiao and Chen 40 to answer this question. We found no outlying cases.

Did whatever mechanisms accounted for the longevity of the 1773 cohort reduce deaths only in the few years after infancy, or did they persist into later life as well? As Table 1 shows, life span remaining at ages 6 and 30 for the 1773 birth cohort both exceeded that for any other cohort in the test period. Applying the test procedures described earlier to cohort life expectancy at 30 produced an estimated surplus of 1.9 years.

Discussion

Prior research has not found strong support for the hypothesis, implied by developmental programming in utero, that birth cohorts in gestation during famines exhibit shorter life spans than other cohorts. Explanations of this weak support have included that migration and modern medicine have made the association difficult to observe in historical data. We offer a test in which neither medicine nor migration could obscure the presumed effect. We find no support for the hypothesis. Birth cohorts exposed in utero to one of history’s cruelest famines lived longer lives than otherwise expected.

Limitations of our test include that data from the last half of the 18th century may not generalize to modern societies exposed to famine. We note, however, that the argument for developmental programming in utero presumes that natural selection conserved the mechanism in humans well before the 18th century.Reference Bateson, Barker and Clutton-Brock 7 , Reference Gluckman and Hanson 8 We, moreover, know of no argument asserting that natural selection has extinguished such programming since 1800. Sweden between 1750 and 1800 provides the most reliable data currently available for estimating the association between cohort life expectancy and exposure in utero to famine. No other society with vital statistics dependable enough for inclusion in the Human Mortality Database provides data describing a population, exposed to famine, whose composition remained as unaffected by migration and modern medicine for the minimum of 50 years thought necessary to apply strong modeling methods. ‘Microdata’ describing the life course of individuals in small historic samples cannot, moreover, yield population-level estimates of cohort life span due to incomplete survival data and unknown external validity.Reference Dillon, Amorevieta-Gentil and Caron 41

We do not have access to the individual birth and death records that sum to the data we used in our test. Such data would allow the construction of monthly time-series of births and deaths that in turn could identify the life span of conception cohorts exposed in utero to the famine more accurately than we could with annual data. Without such data we cannot identify when in gestation exposure to famine confers the greatest longevity benefit.

Our data cannot rule out that developmental programming in utero made metabolic disease more common in the 1773 birth cohort than otherwise expected. Such added morbidity could not, however, account for the longer life span we found in this cohort. We also note that the literature which motivated us to conduct our test claims that developmental programming in utero leads not only to greater morbidity but also to early death.Reference Zimmer 27

We set out to test the hypothesis that the observed value of cohort life expectancy for 1773 would fall below the 95% confidence interval of its expected value. We found, rather, that the cohort’s observed life span rose above the 99% confidence interval of the expected value. We note that the distance between the expected and observed life span of the 1773 birth cohort likely reflects the effect of the famine on earlier birth cohorts as well as on that of 1773. Famine-induced deaths among persons born before 1773 likely lowered life span for earlier birth cohorts and thereby repressed the statistically expected value for the 1773 cohort. We doubt, however, that adjusting for the effect of the famine on earlier birth cohorts, assuming such adjustment were computationally possible and logically desirable, would lead to finding the observed value below the 95% confidence interval as predicted by developmental programming in utero. Reasons to doubt that outcome include that our interrupted time-series approach estimates the expected value of life span for the 1773 cohort, and its confidence interval, from cohorts born after the famine as well as from those born before. The 27 cohorts born after 1773 balance the influence of the 22 born before.

Developmental programming may not predict our findings, but evolutionary theory does. Much literature argues that natural selection has conserved mechanisms, collectively referred to as reproductive suppression, that avert maternal investment in less reproductively fit offspring including those that, if born, would least likely thrive in prevailing environments.Reference Beehner and Lu 42 – Reference Lummaa, Pettay and Russell 45 This literature assumes that pregnant women vary in their capacity to invest in offspring and that offspring vary in their need for maternal investment. Low-resource mothers with high-need offspring presumably had relatively few grandchildren because their children more frequently died before reproductive age than the children of other mothers. These assumptions lead to the inference that natural selection would have conserved mutations that spontaneously abort gestations in which the needs of the prospective offspring would otherwise exceed the resources of the prospective mother.

Reproductive suppression predicts that spontaneous abortion will increase when the fraction of pregnant women with relatively few resources increases even if the distribution of fetuses on the need for maternal resources remains unchanged. This circumstance, referred to in the literature as selection in utero, implies that fewer high need infants will be born when the environment weakens women of reproductive age.Reference Trivers and Willard 46 , Reference Bruckner and Catalano 47 The empirical literature reports that conception cohorts presumably subjected to deep selection in utero also exhibit unexpectedly great longevity.Reference Bruckner, Helle, Bolund and Lummaa 43 , Reference Catalano and Bruckner 48 – Reference Bruckner and Catalano 50 Our findings imply that the Swedish Famine of 1773 may have triggered selection in utero.

Our, and earlier, research failing to find relatively short life spans among birth cohorts in utero during famines does not detract from the developmental programming argument. Research supporting the argument for such programming in infancyReference Catalano and Bruckner 51 and adolescenceReference Falconi, Gemmill, Dahl and Catalano 52 includes tests that, like ours, apply time-series methods to historical life table data. Ours and similar findings, however, suggest that while gestation may present an opportunity for developmental programming, signals of that programming in the longevity of historic birth cohorts may prove difficult to detect given, among other phenomena, the countervailing influence of selection in utero.

Research finds plasticity in fetuses as well as evidence that the maternal stress response, particularly that induced by poor nutrition, influences that plasticity. Much literature, moreover, argues that the combination of fetal plasticity and the maternal stress response puts offspring at risk of poor health and early death. That literature often cites research into the health effects of famine to support this argument. The preponderance of that research, however, finds no association with cohort longevity. Critics of that research have noted that migration and medicine may have obscured the association in modern populations. We contribute to the literature by searching for that association in a population well described by life table data, unaffected by migration, unaided by modern medicine and stressed by one of history’s worst famines. We find that birth cohorts exposed in utero to that famine lived longer lives than otherwise expected.

Acknowledgments

None.

Financial Support

This research received no specific grant from any funding agency, commercial or not-for-profit sectors.

Conflicts of Interest

None.