Systematic reviews rank at the highest level of the evidence hierarchy in medical science (Reference Guyatt, Sackett and Sinclair1) and are often used for decision making in healthcare (Reference Wallace, Dahabreh, Schmid, Lau and Trikalinos2). Abridged forms of knowledge synthesis that convey key information compactly are also required, both to save time and because of the sheer volume of available evidence (Reference Dobbins, Jack, Thomas and Kothari3). Conducting full-fledged systematic reviews or HTAs is time-consuming and costly (Reference Perleth, Lühmann, Gibis and Droste4;Reference Oxman, Schünemann and Fretheim5). A systematic review usually takes 6 months to 1 year to complete (Reference Ganann, Ciliska and Thomas6), and an HTA takes at least 1 year. Because some questions in healthcare are urgent, decision makers sometimes need information within a shorter time (Reference Perleth, Lühmann, Gibis and Droste4).

Ganann et al. (Reference Ganann, Ciliska and Thomas6) discuss various methods that rapid reviews use to shorten the review process. Most of these are methodological restrictions compared with systematic reviews, and they frequently concern the search for and retrieval of literature. For instance, only easily accessible publications are included, fewer databases are searched, and reviewers avoid searching for gray literature, that is, informally published literature (7). Restrictions can also be applied to later stages of the review process: screening of titles and abstracts, screening of full texts, and data extraction (by omitting duplicate review), as well as quality assessment of the included publications. These restrictions may increase vulnerability to bias, particularly publication and language bias. In addition, without a second reviewer, human errors may go uncorrected (Reference Ganann, Ciliska and Thomas6).

According to Watt et al. (Reference Watt, Cameron and Sturm8), rapid reviews compared directly with traditional systematic reviews on the same topic often have narrower research questions and therefore include fewer publications. They also consider ethical, legal, social, and organizational issues less extensively. Thus, there are fundamental differences between rapid and systematic reviews, although Watt et al. observed that both forms of review reach comparable conclusions.

Estimating the validity of the results requires an assessment of methodological quality. To date, little is known about the quality of rapid reviews. It is also unclear whether quality instruments developed for systematic reviews can usefully be applied to rapid reviews, because no instruments designed specifically for rapid reviews exist.

OBJECTIVES

The aim of this study was to use the AMSTAR checklist to analyze the methodological restrictions of rapid reviews compared with systematic reviews, and to assess whether AMSTAR is feasible for rapid reviews. AMSTAR was applied to a sample of rapid reviews on surgical interventions identified through a systematic literature search. The results were then compared with those of an overview of systematic reviews on surgical interventions (Reference Martel, Duhaime and Barkun9), which also used AMSTAR for assessment.

METHODS

A systematic literature search without time limits was conducted in three databases: Medline, Embase, and the Cochrane Library. The following HTA databases were also searched: the Centre for Reviews and Dissemination (CRD), the German Agency for HTA (DAHTA), the Canadian Agency for Drugs and Technologies in Health (CADTH), the Ludwig Boltzmann Institute for Health Technology Assessment (LBI), the Australian Safety and Efficacy Register of New Interventional Procedures-Surgical (ASERNIP-S), and the Belgian Health Care Knowledge Centre (KCE). Search terms included rapid review, rapid report, rapid health technology assessment, rapid appraisal, short health technology assessment, evidence summary, expedited review, accelerated review, realist review, realist evaluation, and short systematic review. Further details are shown in Supplementary Tables 1–3.

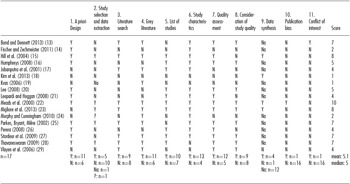

Table 1. Assessment of Rapid Reviews by Using AMSTAR

AMSTAR items: 1. Was an a priori design provided? 2. Was there duplicate study selection and data extraction? 3. Was a comprehensive literature search performed? 4. Was the status of publication (i.e., grey literature) used as an inclusion criterion? 5. Was a list of studies (included and excluded) provided? 6. Were the characteristics of the included studies provided? 7. Was the scientific quality of the included studies assessed and documented? 8. Was the scientific quality of the included studies used appropriately in formulating conclusions? 9. Were the methods used to combine the findings of studies appropriate? 10. Was the likelihood of publication bias assessed? 11. Was the conflict of interest stated? Y: Yes; N: No; na: not applicable; ?: can't answer.

Titles and abstracts were screened by applying predefined inclusion and exclusion criteria. Screening was done by two independent reviewers, and disagreements were resolved through discussion.

Rapid reviews were included if they were published in English, German, or Italian and contained a methodology section that explicitly identified the rapid methods used. We also included only publications dealing with surgical interventions, that is, invasive surgical procedures on the human body for therapeutic purposes, including laparoscopic surgery. Accompanying measures, dental treatment, and postsurgical care such as wound care were excluded. We restricted the sample to surgical reviews so that the rapid reviews would be comparable in content. Where economic analyses had also been conducted, only the medical part was included in the methodological analysis.

We also extracted the following characteristics of the sampled rapid reviews: the terms used to describe the accelerated review process, the databases searched, the language and length of the document, and publication status (i.e., whether the review was published in a scientific journal).

Methodological quality was assessed with the AMSTAR checklist, which was developed by Shea et al. (Reference Shea, Grimshaw and Wells10) and consists of 11 questions (see Table 1). Both the validity and the reliability of AMSTAR have been demonstrated (Reference Shea, Bouter and Peterson11;Reference Shea, Hamel and Wells12). According to Shea et al. (Reference Shea, Hamel and Wells12), summing the positively answered items gives a score between 0 and 11. In the present analysis, Item 1 (“Was an a priori design provided?”) was answered “yes” if the research questions and the inclusion and exclusion criteria were explicitly stated. One reviewer assessed methodological quality. The results for the rapid reviews were compared with those of an overview of systematic reviews that also used AMSTAR as its quality assessment instrument. This overview, published in 2012 by Martel et al. (Reference Martel, Duhaime and Barkun9), synthesized the results of 27 systematic reviews on laparoscopic surgery for colorectal cancer.
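The following Python sketch illustrates the scoring rule described above by computing the sum score for a single review. It assumes that answers are coded as in Table 1 (Y, N, na, ?) and that only “Y” earns a point, so “not applicable” and “can't answer” both score zero. The function name and the example answers are hypothetical and are not taken from the assessed reviews.

```python
from typing import Sequence

# Answer codes as used in Table 1: Y (yes), N (no), na (not applicable), ? (can't answer)
VALID_ANSWERS = {"Y", "N", "na", "?"}
N_ITEMS = 11  # number of AMSTAR items


def amstar_sum_score(answers: Sequence[str]) -> int:
    """Return the AMSTAR sum score (0 to 11) for one review.

    Assumption: only "Y" counts as a positively answered item;
    "N", "na", and "?" all contribute zero points.
    """
    if len(answers) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} answers, got {len(answers)}")
    unknown = set(answers) - VALID_ANSWERS
    if unknown:
        raise ValueError(f"unknown answer codes: {sorted(unknown)}")
    return sum(1 for a in answers if a == "Y")


# Hypothetical answers to Items 1-11 (not taken from Table 1)
example = ["Y", "N", "Y", "Y", "Y", "Y", "Y", "N", "na", "N", "N"]
print(amstar_sum_score(example))  # 6
```

Validating the answer codes up front makes a mistyped code fail loudly instead of silently scoring zero.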

RESULTS

The literature search in Embase and Medline was conducted on February 14, 2014, and yielded 3,493 results. The search in the Cochrane Library on February 24, 2014, yielded 1,735 results. After 135 full texts had been screened, five rapid reviews on surgical interventions were selected. Additional searches in the databases listed above identified another twelve rapid reviews on surgical interventions, as shown in Figure 1.

Figure 1. Rapid reviews about surgical interventions found during additional searches in the databases.

All but two of the rapid reviews were published in English. The reviews differ notably in length, publication type, and the terms used to describe rapid review methods. The shortest rapid review ran to five pages and the longest to 265 pages, with a median of 57 pages. Six reports (35.3 percent) were published as journal articles. Supplementary Table 1 gives an overview of the different terms used to describe rapid review methodology.

Table 1 and Figure 1 show in detail the assessment of rapid reviews using AMSTAR. Items 5 and 6, which concern the listing of included and excluded studies and the provision of characteristics of included studies, were mostly answered “yes.” Items 9, 10, and 11, which concern meta-analysis, publication bias, and the statement of conflicts of interest, were mostly answered “no” or “not applicable.” The last row of the table summarizes the answers.

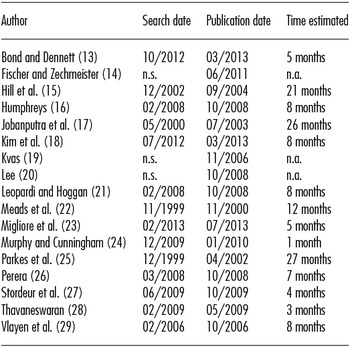

Table 2. Time Estimated between Date of Last Search and Publication Date

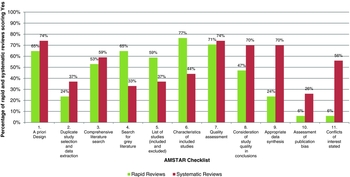

The score percentages of rapid reviews and systematic reviews are shown in Figure 2. Rapid and systematic reviews differ notably on Items 4, 5, 6, 8, 9, 10, and 11. Rapid reviews fulfil the following items more frequently than systematic reviews: a search for gray literature (Item 4, 65 percent versus 33 percent), a list of both included and excluded studies (Item 5, 59 percent versus 37 percent), and characteristics of included studies (Item 6, 77 percent versus 44 percent). However, compared with systematic reviews, rapid reviews less frequently consider study quality when formulating conclusions (Item 8, 47 percent versus 70 percent), conduct meta-analyses appropriately (Item 9, 14 percent versus 70 percent), assess the likelihood of publication bias (Item 10, 6 percent versus 26 percent), and state conflicts of interest (Item 11, 6 percent versus 56 percent). Both rapid and systematic reviews are weak with regard to duplicate study selection and data extraction (Item 2, 27 percent versus 34 percent). By contrast, both are strong with regard to a priori design (Item 1, 65 percent versus 74 percent) and quality assessment of included studies (Item 7, 71 percent versus 74 percent).

Figure 2. Score percentages of rapid reviews and systematic reviews.
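The item-level percentages compared above can be computed from a matrix of AMSTAR answers with one row per review. The text does not state whether “not applicable” answers were kept in the denominator, so the following hypothetical Python sketch makes that choice an explicit parameter. The data are illustrative only.

```python
from typing import Sequence


def item_yes_percentages(
    matrix: Sequence[Sequence[str]], exclude_na: bool = False
) -> list[float]:
    """Percentage of "Y" answers for each AMSTAR item across reviews.

    matrix[r][i] is review r's answer to item i + 1. If exclude_na is True,
    "na" answers are removed from the denominator (an assumption; the text
    does not say which convention was used).
    """
    n_items = len(matrix[0])
    percentages = []
    for i in range(n_items):
        column = [row[i] for row in matrix]
        if exclude_na:
            column = [a for a in column if a != "na"]
        if not column:  # every answer for this item was "na"
            percentages.append(float("nan"))
            continue
        yes = sum(1 for a in column if a == "Y")
        percentages.append(100.0 * yes / len(column))
    return percentages


# Three hypothetical reviews, two items each
matrix = [["Y", "na"], ["N", "Y"], ["Y", "N"]]
print([round(p, 1) for p in item_yes_percentages(matrix)])                   # [66.7, 33.3]
print([round(p, 1) for p in item_yes_percentages(matrix, exclude_na=True)])  # [66.7, 50.0]
```

When “na” is excluded, the second item's percentage rises from 33.3 to 50.0. The convention therefore matters when comparing results with published figures.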

The median score of the rapid reviews is 5 (mean 5.1), with an interquartile range of 4 to 7, whereas Martel et al. (Reference Martel, Duhaime and Barkun9) reported a mean score of 5.8 for systematic reviews. Only 5.9 percent of the rapid reviews were of good quality, that is, scored 9 or more. In contrast, 30 percent of the systematic reviews assessed were considered to be of good quality (Reference Martel, Duhaime and Barkun9).
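The summary statistics reported above (median, interquartile range, mean, and share of reviews scoring 9 or more) can be computed from a list of sum scores roughly as follows. This sketch uses made-up scores. The text does not say which quartile convention was used, so the “inclusive” method of Python's `statistics.quantiles` is an assumption, and other conventions can shift the quartiles in small samples.

```python
import statistics
from typing import Sequence

GOOD_QUALITY_THRESHOLD = 9  # "good quality" means a score of 9 or more, as in the text


def summarize_scores(scores: Sequence[int]) -> dict:
    """Mean, median, quartiles, and percentage of good-quality reviews."""
    # Quartile convention is an assumption; "inclusive" interpolates within the data range
    q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    good = sum(1 for s in scores if s >= GOOD_QUALITY_THRESHOLD)
    return {
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "q1": q1,
        "q3": q3,
        "pct_good": 100.0 * good / len(scores),
    }


# Hypothetical sum scores for nine reviews
summary = summarize_scores([2, 3, 4, 5, 5, 6, 7, 8, 9])
print(summary["median"], summary["q1"], summary["q3"])           # 5 4.0 7.0
print(round(summary["mean"], 2), round(summary["pct_good"], 1))  # 5.44 11.1
```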

Estimation of the Time Needed to Conduct Rapid Reviews

Time is presumably the main difference between full systematic reviews and rapid reviews, but none of the authors of the included publications reported how long their review took to complete.

Following the methods described by Harker and Kleijnen (Reference Harker and Kleijnen30), we estimated the time frame as the period between the last search date and the reported or approximate publication date of the review. The mean time between search and publication was 10.2 ± 8.1 months, and the median was 8 months, with an interquartile range of 6 months (see Table 2). Only 14.3 percent of the rapid reviews were published within 3 months of the last search, 21.4 percent within 4 to 6 months, and 42.9 percent within 7 months to 1 year. The remaining 21.4 percent were published more than a year after the last search date. Three publications did not report search dates (see Table 2). For comparison, we calculated the same time frames for the systematic reviews analyzed by Martel et al. (Reference Martel, Duhaime and Barkun9), where the mean time between search and publication was 20.1 ± 4.2 months.
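The time-frame estimate described above can be sketched as follows. The sketch makes several assumptions: the lag is counted in whole calendar months (ignoring the day of the month), reviews without a search date are excluded, and the categories are up to 3, 4 to 6, 7 to 12, and more than 12 months. The function names and dates are hypothetical.

```python
from datetime import date
from statistics import mean, median, stdev
from typing import Optional, Sequence


def months_between(last_search: date, publication: date) -> int:
    """Whole calendar months from last search to publication (day of month ignored)."""
    return (publication.year - last_search.year) * 12 + (publication.month - last_search.month)


def lag_category(months: int) -> str:
    """Assign a lag to one of the four categories used in the text."""
    if months <= 3:
        return "<=3"
    if months <= 6:
        return "4-6"
    if months <= 12:
        return "7-12"
    return ">12"


def summarize_lags(pairs: Sequence[tuple[Optional[date], date]]) -> dict:
    """Summarize search-to-publication lags; reviews without a search date are skipped."""
    lags = [months_between(s, p) for s, p in pairs if s is not None]
    counts: dict[str, int] = {}
    for m in lags:
        cat = lag_category(m)
        counts[cat] = counts.get(cat, 0) + 1
    return {
        "n": len(lags),
        "mean": mean(lags),
        "sd": stdev(lags),  # sample standard deviation; needs at least two lags
        "median": median(lags),
        "pct_by_category": {c: 100.0 * k / len(lags) for c, k in counts.items()},
    }


# Hypothetical (last search, publication) dates; None means no search date reported
pairs = [
    (date(2013, 1, 15), date(2013, 3, 1)),   # 2 months
    (date(2012, 6, 1), date(2012, 11, 30)),  # 5 months
    (date(2011, 9, 10), date(2012, 5, 1)),   # 8 months
    (date(2010, 2, 1), date(2011, 6, 1)),    # 16 months
    (None, date(2014, 1, 1)),                # excluded
]
result = summarize_lags(pairs)
print(result["n"], result["mean"], result["median"], round(result["sd"], 2))  # 4 7.75 6.5 6.02
```

Counting whole months is a coarse choice. Where only an approximate publication date is known, finer resolution would suggest a precision the data do not have.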

DISCUSSION

When assessed with the AMSTAR checklist, rapid reviews performed better than systematic reviews in searching for gray literature, listing included and excluded studies, and providing study characteristics. Systematic reviews, on the other hand, performed better in considering study quality when formulating conclusions, conducting appropriate meta-analyses, assessing publication bias, and stating conflicts of interest. Nevertheless, mean AMSTAR scores were similar.

Analyzing reviews with the AMSTAR checklist poses several difficulties. First, a checklist is generally meant not as an assessment instrument but as a means of controlling publication quality by checking that all relevant issues are covered (Reference Da Costa, Cevallos, Altman, Rutjes and Egger31;Reference Buchberger, von Elm and Gartlehner32). In addition, assigning points to reporting items in a scoring system can be misleading when the aim is to assess methodological quality, and such results should be interpreted with caution. Item 1, in particular, leaves room for interpretation. It is unclear whether an a priori design requires a protocol published in advance or whether a clearly defined research question with selection criteria suffices. In the present study, we applied the second interpretation. Furthermore, Item 5 (listing of included and excluded studies) and Item 6 (provision of characteristics of included studies) assess the reporting quality of reviews rather than their validity. This distinction matters because using reporting criteria as a surrogate for validity may misrepresent methodological quality (Reference Dreier, Borutta, Stahmeyer, Krauth and Walter33), especially when a scoring system is used.

In the present study, an a priori review design, as defined above, was found in 65 percent of the sampled reviews. Harker and Kleijnen (Reference Harker and Kleijnen30) conducted a systematic study of rapid review methodology and reported that 47 percent of the rapid reviews they analyzed did not contain a well-defined research question. Of these, approximately 30 percent provided only broad aims, whereas approximately 50 percent stated narrower aims from which a research question could be derived. Well-defined selection criteria were found in 50 percent of the reviews.

One weakness of rapid reviews observed in this study is the lack of duplicate study selection and data extraction. According to Buscemi et al. (Reference Buscemi, Hartling, Vandermeer, Tjosvold and Klassen34), data extraction by one reviewer with verification by a second reviewer generated 22 percent more errors than independent double extraction but took 36 percent less time. However, duplicate study selection and data extraction are only slightly more common in systematic reviews, at 34 percent versus 27 percent (Reference Martel, Duhaime and Barkun9). In contrast to our findings, Harker and Kleijnen (Reference Harker and Kleijnen30) found that two reviewers were involved in 60 percent of rapid reviews. Nonetheless, forgoing duplicate literature selection and data extraction must be seen in context: it is one of the common restrictions imposed in rapid reviews precisely to accelerate the process (Reference Ganann, Ciliska and Thomas6).

It would be worth investigating whether comprehensive literature searches, including gray literature and scanning of reference lists, can compensate for this restriction. The findings of Royle and Waugh (Reference Royle and Waugh35) support this hypothesis: they recommend manually searching relevant reference lists and consulting experts because this is more effective than exhaustive database searches. Above all, any limitations or restrictions that could lead to bias should be stated explicitly for the sake of transparency (Reference Oxman, Schunemann and Fretheim36).

Gray literature was included in 65 percent of the rapid reviews, whereas systematic reviews searched for gray literature much less frequently (Reference Martel, Duhaime and Barkun9). Because Martel et al. (Reference Martel, Duhaime and Barkun9) do not explain how they interpreted this AMSTAR item, these marked differences could be due to divergent interpretations. Interestingly, and in contrast to our findings, Ganann et al. (Reference Ganann, Ciliska and Thomas6) state that omitting the search for gray literature is a common restriction in rapid reviews.

Rapid reviews provided a list of included and excluded studies and the characteristics of the included studies more often than systematic reviews. Although these items assess reporting quality rather than validity, this indicates a high level of transparency, which may be important given the methodological restrictions of rapid reviews.

Both rapid and systematic reviews frequently assess the methodological quality of included studies, but rapid reviews much less often take the results of this assessment into account when formulating conclusions. This is remarkable because rapid reviews aim to provide guidance for time-sensitive and urgent requests, especially during disease outbreaks (Reference Palmer, Jansen, Leitmeyer, Murdoch and Forland37). It is therefore particularly important that recipients can rely on the conclusions stated in the review, which makes scrutiny of the validity of results indispensable.

Rapid reviews contain fewer meta-analyses and assessments of publication bias than systematic reviews. One possible explanation is that rapid reviews seldom consider study quality when formulating conclusions, even though such consideration is necessary for an adequate meta-analysis.

Very few rapid reviews state conflicts of interest. According to Martel et al. (Reference Martel, Duhaime and Barkun9), systematic reviews fulfil this AMSTAR item much more frequently. However, this comparison should be interpreted with caution, because it is unclear whether Martel et al. (Reference Martel, Duhaime and Barkun9) interpreted the item as strictly as we did.

Rapid and systematic reviews have similar mean AMSTAR scores. However, the Cochrane Handbook (Reference Higgins, Altman and Sterne38) advises reviewers against calculating such scores, because empirical evidence is lacking and the items would need to be weighted, which would create additional difficulties. Given these flaws, we calculated mean AMSTAR scores for comparative purposes only.

In summary, setting aside the general question of whether checklists are suitable for quality assessment, AMSTAR may be used for rapid reviews. However, we do not recommend calculating a sum score. Reporting the results in detail instead makes methodological restrictions, and thus potential sources of bias, transparent. This helps decision makers judge whether they can rely on the conclusions of a rapid review.
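A small hypothetical example shows what a sum score hides and item-by-item reporting preserves. The two invented answer profiles below reach the same score but fail different items. The Python sketch assumes that only “Y” counts as a point.

```python
from typing import Sequence


def sum_score(answers: Sequence[str]) -> int:
    # Only "Y" earns a point (assumption, as in a plain AMSTAR sum score)
    return sum(a == "Y" for a in answers)


def differing_items(a: Sequence[str], b: Sequence[str]) -> list[int]:
    """1-based AMSTAR item numbers on which two reviews' answers differ."""
    return [i + 1 for i, (x, y) in enumerate(zip(a, b)) if x != y]


def item_report(answers: Sequence[str]) -> str:
    """Item-by-item listing, as an alternative to a single sum score."""
    return "; ".join(f"Item {i}: {a}" for i, a in enumerate(answers, start=1))


# Two invented answer profiles for Items 1-11
review_a = ["Y", "Y", "Y", "N", "N", "N", "Y", "Y", "Y", "N", "N"]
review_b = ["N", "N", "Y", "Y", "Y", "Y", "Y", "N", "N", "N", "Y"]

print(sum_score(review_a), sum_score(review_b))  # 6 6
print(differing_items(review_a, review_b))       # [1, 2, 4, 5, 6, 8, 9, 11]
print(item_report(review_a))
```

Both reviews score 6 but disagree on 8 of the 11 items. For instance, review A reports duplicate selection and an appropriate meta-analysis, whereas review B reports neither.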

The estimated time between the last search date and publication varied widely, from 1 to 27 months. The authors explicitly describe all analyzed reviews as rapid, and an accelerated review process aims to give patients quicker access to innovative, effective technology within sustainable healthcare systems. It is therefore striking that only a minority of the reviews were published within 6 months of the last literature search, whereas most were published later (mean time 10.2 months). Harker and Kleijnen (Reference Harker and Kleijnen30) found similar results, whereas other sources reported shorter time frames of 1 to 6 months (Reference Perleth, Lühmann, Gibis and Droste4;Reference Ganann, Ciliska and Thomas6;Reference Watt, Cameron and Sturm39).

However, because no information about preparation time was available, the interval between completion and publication could not be determined, and the reasons for delay could not be assessed. For systematic reviews, we calculated a mean time of 20.5 months from last update to publication. Sampson et al. (Reference Sampson, Shojania and Garritty40) found a median time of 15 months from final search to publication and attributed this publication lag mostly to the peer review and publication process. Electronic publication with immediate release is recommended to minimize such delays. However, only a minority of the rapid reviews were published in peer-reviewed journals, so the peer review process does not explain their publication delay.

Moreover, electronic publication usually entails additional costs. Shojania et al. (Reference Shojania, Sampson and Ansari41) demonstrated that more than half of systematic reviews require an update. This finding is consistent with the fact that review organizations such as the Cochrane Collaboration struggle to keep even half of their systematic reviews up to date (Reference Bastian, Glasziou and Chalmers42). Besides the methodological restrictions described above, one possible solution to publication delay is to publish short summaries with clear recommendations. These summaries would describe the methods explicitly for the sake of transparency but omit extensive discussion. This could help decision makers and patients with urgent needs.

In addition, according to the definition given by EUnetHTA (43), the term “rapid” should be interpreted in terms of scope rather than exclusively in terms of time. Accordingly, rapid reviews are characterized by the omission of economic evaluation, ethical analysis, and organizational, social, and legal aspects. There is currently no commonly accepted definition of rapid reviews, and methodology varies greatly between publications, so standardization is needed. However, according to Watt et al. (Reference Watt, Cameron and Sturm39), developing an explicit methodology may be counterproductive, because one strength of rapid reviews is their flexibility in adapting to specific audiences.

Limitations

The first limitation of the present study is that only rapid reviews on surgical procedures were considered. The results should therefore be generalized with caution.

Second, the analysis focuses only on medical questions. Reviews containing additional economic analyses were omitted, and ethical, legal, social, and organizational aspects were not considered.

Third, the authors of the included publications did not report how long their rapid reviews took to complete. Our method of estimating the time frame may therefore distort the results, probably by overestimating the time needed.

Fourth, the analyzed rapid reviews included study designs other than randomized controlled trials (RCTs). Although its developers consider AMSTAR suitable for different types of reviews, it has only been tested on systematic reviews of RCTs (Reference Shea, Hamel and Wells12).

Finally, the authors of the present study did not assess the quality of the systematic reviews themselves; the results were taken from a recently published overview of reviews. Because some AMSTAR items leave room for interpretation, comparisons should be interpreted with caution. Future studies should preferably assess both rapid and systematic reviews themselves, so that the two forms of review can be compared directly.

CONCLUSIONS

Based on the considerations above, we conclude that AMSTAR can be used as a checklist for rapid reviews to describe their methodological restrictions compared with systematic reviews and to roughly estimate the validity of their results. We propose the following modifications:

First, Item 1 (a priori design) should be answered “yes” if a well-defined research question is accompanied by clearly formulated selection criteria, because review protocols are usually unavailable for rapid reviews. Second, items concerning reporting quality should be explicitly designated as such and reported separately to emphasize transparency. Third, the absence of duplicate study selection and data extraction should not affect the overall appraisal, because it is a frequent time-saving restriction in rapid reviews. Fourth, a statement of the review authors' own conflicts of interest should suffice for a positive answer to Item 11. Providing a statement of conflicts of interest for every included study seems unrealistic, especially because not every journal requires such statements, and there is no reason to expect review authors to supply this information in their reviews. Finally, when AMSTAR is used for rapid reviews, all items and the corresponding answers should be listed instead of a sum score, because a sum score does not provide sufficient detail about different types of bias. In addition, the risk of bias due to methodological restrictions should be addressed in a separate item.
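One way to encode the proposed modifications is a record that keeps every item answer and reports the reporting items (5 and 6) separately. It also sets the duplicate-review item (2) apart as an expected rapid-review restriction and adds a free-text field on bias from methodological restrictions. The following Python sketch is a hypothetical illustration of that structure, not a validated instrument. All names are invented, and the item groupings follow the proposals above.

```python
from dataclasses import dataclass

REPORTING_ITEMS = (5, 6)           # list of studies; characteristics of included studies
EXPECTED_RESTRICTION_ITEMS = (2,)  # duplicate study selection and data extraction


def item1_a_priori(question_defined: bool, criteria_defined: bool) -> str:
    """Proposed rule for Item 1: a defined question plus clear selection criteria suffice."""
    return "Y" if question_defined and criteria_defined else "N"


def item11_conflicts(review_authors_coi_stated: bool) -> str:
    """Proposed rule for Item 11: the review authors' own statement suffices."""
    return "Y" if review_authors_coi_stated else "N"


@dataclass
class RapidReviewAssessment:
    answers: dict[int, str]          # item number -> "Y", "N", "na", or "?"
    restriction_bias_note: str = ""  # hypothetical extra item: bias from rapid methods

    def report(self) -> dict:
        """Group all answers for item-level reporting; deliberately no sum score."""
        grouped: dict = {"methodological": {}, "reporting": {}, "expected_restrictions": {}}
        for item, answer in sorted(self.answers.items()):
            if item in REPORTING_ITEMS:
                grouped["reporting"][item] = answer
            elif item in EXPECTED_RESTRICTION_ITEMS:
                grouped["expected_restrictions"][item] = answer
            else:
                grouped["methodological"][item] = answer
        grouped["restriction_bias"] = self.restriction_bias_note
        return grouped


# Hypothetical rapid review
answers = {
    1: item1_a_priori(question_defined=True, criteria_defined=True),
    2: "N", 3: "Y", 4: "Y", 5: "Y", 6: "Y", 7: "Y", 8: "N", 9: "na", 10: "N",
    11: item11_conflicts(review_authors_coi_stated=True),
}
assessment = RapidReviewAssessment(answers, "Single database searched; English only")
print(assessment.report()["reporting"])  # {5: 'Y', 6: 'Y'}
```

Item 2 is still recorded, so readers can see that duplicate review was omitted. In line with the third proposal, however, it is kept apart from the methodological items.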

Policy Implications

Conducting full-fledged systematic reviews or HTAs is time-consuming and costly. Abridged forms of knowledge synthesis that convey key information compactly are required, both to save time and because of the sheer volume of available evidence.

Rapid reviews can be conducted in a shorter time frame, but their methodological restrictions may increase vulnerability to bias. Estimating the validity of their results requires a detailed analysis of methodological restrictions, that is, an assessment of methodological quality.

SUPPLEMENTARY MATERIAL

Supplementary Table 1: http://dx.doi.org/10.1017/S0266462316000465

Supplementary Table 2: http://dx.doi.org/10.1017/S0266462316000465

Supplementary Table 3: http://dx.doi.org/10.1017/S0266462316000465

CONFLICTS OF INTEREST

The authors do not have any conflicts of interest.