1 Introduction

The last few decades have seen a significant rise in the power of indicators, as documented by a considerable body of literature.¹ These studies show that the naive view, according to which indicators merely convey information describing some aspect of the world, fails to adequately explain the nature and effects of such devices. Rather than simply describing the phenomenon it measures, an indicator often affects the very field it covers. Among other things, an indicator can push the entities it evaluates to take into account, or even internalise, the standards and values against which they are measured. More generally, the various normative effects that indicators may produce have prompted some to describe them as a ‘technology of global governance’ (Davis et al., 2012b).

The prominent place of graphs, models and even rankings during the COVID-19 pandemic is certainly a defining feature of the crisis. Although news media have long been saturated with quantitative information, never before has so much attention been paid to numbers. In a way, this phenomenon seems to confirm the growing influence of metrics noted in the body of work mentioned above. It can indeed be seen as paradigmatic of what Alain Supiot (2017) has called ‘governance by numbers’. However, it may be too simplistic to interpret the omnipresence of indicators during the COVID-19 pandemic as the mere continuation of this trend. To understand how these instruments were used during the crisis, we need to consider the specific nature and history of public health and, in particular, of the management of infectious-disease epidemics. Indeed, from the beginning of the nineteenth century onwards, indicators, and quantification more broadly, were called upon to play a key role in managing health concerns and outbreaks at both the local and international levels. Although their function has evolved over time, numbers and statistics have long been recognised as an essential component of decision-making in public health policy. In this light, my aim is to show that the evolving role numbers have played in the history of the global management of epidemics can help us understand how indicators inform, influence and sometimes determine the content of the (often coercive) measures taken by governments around the world to combat the novel coronavirus. In particular, I intend to show that the normative power acquired by metrics during the pandemic can be understood in light of two rationales that have gained in importance over recent decades: epidemiological surveillance and performance assessment.

The paper proceeds as follows. First, I will trace the emergence of epidemiology and show how infectious disease became the subject of active discussion at the international level. The specific character of infectious disease, namely that it can spread rapidly from one country to another, led some (mostly Western) countries to stress the need for international co-operation, notably regarding the transmission of health data and statistics. Second, I will analyse the emergence, during the second half of the twentieth century, of two forms of quantification in the field of international health, epidemiological surveillance and performance assessment, and describe how each relates to decision-making. Third, in light of these two rationales, I will examine some of the quantitative instruments deployed during the COVID-19 pandemic and how they influence the content of public health policies. Lastly, I offer some concluding remarks.

2 Statistics and the birth of epidemiology

The history of public health and the history of statistics are closely intertwined. Historians agree that the nineteenth century marked the real beginning of epidemiology as it is understood today. Along with poverty, unemployment and criminality, disease was one of the fields affected by the avalanche of numbers, famously described by Hacking (1982), that occurred in the 1820s. The many significant changes that characterised the modern state were accompanied by the creation of major statistical offices. During this period of major social reform and public intervention, medical science was strongly shaped by the statistical practices of both states and insurance companies (Desrosières, 2002; Porter, 2005).

In its early years, epidemiology was concerned only with infectious diseases,² paying particular attention to environmental and social influences on health. The most prominent figure in this regard is undoubtedly William Farr (1807–1883), who served for forty years as superintendent of statistics at the General Register Office of England and Wales. He conceived of epidemiology in a very pragmatic way: his main goal was to understand epidemics by linking unhygienic conditions with illness in order to develop strategies against outbreaks (Hardy and Magnello, 2002). Convinced that vital phenomena such as births, deaths and disease followed regularities and patterns, Farr deemed it critical to collect data on causes of death as completely and precisely as possible, and to establish a precise and consistent classification of diseases (Donnelly, 2005). The gathering of data, then, has always been at the core of public health practice. Farr went further still, creating a performance indicator based on ‘healthy districts’: drawing on data from different cities, he compared their death rates, and the average rate of the healthiest districts was even set as a target for the other districts (Donnelly, 2005). Although Farr's methods were somewhat rudimentary, such a tool is in many respects analogous to what we would now call benchmarking.
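The benchmarking logic attributed to Farr can be illustrated with a minimal Python sketch. The district names and death rates below are invented, and the selection rule (averaging the `n_healthiest` districts with the lowest rates) is an assumption made for illustration; it is not necessarily the criterion Farr himself applied. The sketch simply derives a target rate from the healthiest districts and measures how far each district lies above it.

```python
from statistics import mean

# Hypothetical annual death rates per 1,000 inhabitants (invented figures,
# not Farr's data).
DEATH_RATES = {
    "District A": 17.0,
    "District B": 19.5,
    "District C": 22.0,
    "District D": 26.5,
    "District E": 31.0,
}


def healthy_district_benchmark(rates, n_healthiest=2):
    """Average death rate of the n districts with the lowest rates
    (assumed selection rule)."""
    lowest = sorted(rates.values())[:n_healthiest]
    return mean(lowest)


def excess_over_benchmark(rates, benchmark):
    """Gap between each district's rate and the benchmark; districts at or
    below the benchmark get 0."""
    return {name: max(0.0, rate - benchmark) for name, rate in rates.items()}


if __name__ == "__main__":
    target = healthy_district_benchmark(DEATH_RATES)
    print(f"Target rate: {target} per 1,000")
    for name, gap in excess_over_benchmark(DEATH_RATES, target).items():
        print(f"{name}: {gap} above target")
```

With these invented figures the target is 18.25 deaths per 1,000: District A already meets it, while District E exceeds it by 12.75. The point of the exercise is the one made in the text: the best performers define the norm against which everyone else is judged.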

At the international level, tackling epidemics had been a concern long before the creation of the World Health Organization (WHO) in 1948. A century earlier, Paris hosted the first international health conference aimed at controlling outbreaks, while the Congrès international d'hygiène met regularly (Paillette, 2012). At these conferences, delegates were mainly concerned with protecting the European continent from threats coming from abroad (Rasmussen, 2015). The rise of bacteriology, at the expense of miasma theory, as the paradigm for explaining infectious disease reinforced the view that national frontiers had to be controlled and secured against microbial enemies from abroad. The idea that diseases are caused by microorganisms that can circulate around the world, combined with the increasing movement of persons and goods, fostered a process of ‘bacterial globalisation’ (globalisation par les germes) (Rasmussen, 2015) and consequently strengthened the conviction that epidemics had to be dealt with internationally (Blouin Genest, 2015).

In the first decade of the twentieth century, a period marked by internationalism, the International Sanitary Office of the American Republics and the Office international d'hygiène publique were the first international health organisations to be created. Unsurprisingly, one of the missions of the latter was to collect and disseminate epidemiological and statistical information from member countries so as to become a world observatory on epidemics. The creation of the Health Organisation of the League of Nations in 1923 confirmed the trend towards the internationalisation of health statistics. As noted by Edgar Sydenstricker (1881–1936), who served as Chief of the Service of Epidemiological Intelligence and Public Health Statistics of this organisation:

‘Comparable vital statistics for all nations, and a worldwide exchange of current information on the prevalence of disease … are no longer academic ideals but have become necessities. They are as essential to a sound development of international cooperation for prevention of disease as vital statistics and disease notification are fundamental to local or national public health administration.’ (Sydenstricker, 1924)

The need for comparable information was indeed considered a key issue, and the substantial differences between countries in the way data were collected and classified were a nightmare for international epidemiologists. Extensive work was thus undertaken to achieve greater uniformity across the procedures of member states (Lothian, 1924). Alongside these health organisations, the Office international des épizooties (International Office of Epizootics, OIE) was created in 1924, independently of the League of Nations; it remains outside the UN system today. The reappearance of rinderpest in Western Europe in the 1920s prompted the creation of this institution, which took full part in the movement towards the internationalisation of norms and statistical information (OIE, 2007). For both human and animal infectious diseases, the widely held view was that the measures adopted to combat epidemics should cause minimal harm to the proper conduct of international trade.

In the aftermath of World War II, the WHO was established as part of the UN. The international public health experts who contributed to its creation shared the view that science was essential to improving health conditions at the global level and that medical science should be disseminated internationally (Lin, 2017). Accordingly, they thought that the future WHO Health Statistics Division should not only collect raw data on epidemics, but also gather and analyse all types of health-related data. Likewise, they considered that, alongside descriptive statistics, inferential statistics were needed to compare and assess the different public health interventions put in place around the world (Lin, 2017).

The mission and influence of international organisations such as the WHO and the OIE lie primarily in their expert function, notably with regard to health statistics. Although, unlike some other international organisations, they have minimal or no coercive power, they nevertheless exercise an undeniable normative authority, manifested in protocols, nomenclatures and standards relating to data collection and modes of quantification.³ On the one hand, these technical norms result from compromises between different visions and allow certain interests to prevail over others; on the other, they favour transnational comparison by imposing uniform categories despite the deep divergences in local knowledge and practices that may exist among nations.

3 Data production for surveillance and performance-assessment purposes

Over time, the WHO became increasingly involved in building the expertise of national public health officials: it launched projects in numerous countries to support the development of national statistical services and helped organise training in health statistics at various universities and institutes (WHO, 2008; Fisher, 2012). The introduction of computers was a key element in improving health-data processing, and the WHO strongly promoted the development of informatics. The WHO's emphasis on implementing properly co-ordinated health-information systems can be described in light of two rationales: surveillance and performance assessment. These two logics should not be sharply separated, but it is useful to stress their respective specificities and to trace their genealogies separately.

3.1 From epidemiological surveillance to preparedness

Let us first look at the surveillance rationale. This notion was put forward in the US during the 1950s and 1960s by Alexander Langmuir (1910–1993), chief epidemiologist at the Communicable Disease Center, now the Centers for Disease Control and Prevention (Weir and Mykhalovskiy, 2007). The term had previously been used to describe the follow-up of contacts of people affected by infectious diseases. Langmuir extended the idea to the surveillance of the disease itself, meaning the systematic collection, analysis and interpretation of data related to a particular disease. This comprised not only case detection, but also studies such as analyses of the distribution and spread of infections, or serological surveys. In addition, this broader notion of surveillance was meant to show that quarantine measures were not the only policy tool available to combat infectious disease. The emphasis was therefore placed on the rapid transmission of data so as to enable appropriate public health action. In the 1960s, the WHO adopted this new approach to communicable diseases, replacing the concept of epidemiological intelligence with that of epidemiological surveillance (WHO, 1968, p. 95).

However, during the same period, Western countries tended to believe that, thanks to progress in public health, infectious diseases were no longer a problem, at least not for them. Infectious-disease experts therefore had to reframe the notion of transmissible disease in order to rekindle public interest in the issue (Weir and Mykhalovskiy, 2007). One of their arguments was that accelerating globalisation at the end of the century was fostering the circulation of viruses. The concept of ‘emerging infections’, first formulated at an international conference organised in 1989 by the US National Institute of Allergy and Infectious Diseases and the National Institutes of Health, was developed to describe the growing threat posed both by known diseases with rising incidence and by diseases caused by new pathogenic agents coming from abroad (Weir and Mykhalovskiy, 2007). In response, international organisations such as the WHO and the OIE stressed the need for a better supranational structure for disease monitoring.

A major concern was that states communicated poorly about outbreaks. States were often reluctant to acknowledge an epidemic on their territory for fear of the adverse economic effects of such an announcement. In this regard, the surveillance system put in place by the International Health Regulations of 1969 relied entirely on the willingness (indeed, the openness) of member countries to report diseases within their borders. Moreover, the system dealt with only three diseases: cholera, plague and yellow fever. As such, it was seen as outdated with respect to the issues of the time (Youde, 2011).

In 1995, the WHO began work on a substantial reform of this framework, which culminated in the adoption of the International Health Regulations (2005) (IHR 2005), still in force today (WHO, 2016). Among other things, the IHR 2005 sets up a comprehensive surveillance system and requires state parties to implement national surveillance structures that must play a proactive role in monitoring all events within their territories (and not just specific diseases) that may constitute public health emergencies of international concern (Art. 5). Alongside this country-notification system, the regulations contain a major innovation: the possibility for the WHO to be informed of potential health emergencies by non-official sources (Art. 9) such as subnational agencies, non-governmental organisations (NGOs), news media or Internet sources (Youde, 2011). The inclusion of this procedure in the IHR 2005 can be explained by the earlier emergence of initiatives, public as well as private, such as ProMED-mail, the Global Public Health Intelligence Network, HealthMap or, more recently, the company BlueDot, which specialise in the early detection of disease outbreaks by screening various informal sources. The idea is that the WHO can rely on the work of these actors to detect and declare health emergencies without state approval. The concept of syndromic surveillance was developed to describe this constant, near-real-time monitoring of unstructured data aimed at detecting early signs of outbreaks (Roberts and Elbe, 2017). Thanks to the power of algorithms and big data, these new techniques were expected to track outbreaks more efficiently than classical epidemiological methods.

The OIE also saw its mission broadened: not only to fight animal diseases and epizootics but, more importantly, to improve ‘animal health throughout the world’ (Figuié, 2014). As with the WHO's IHR 2005, the new Terrestrial Animal Health Code and the OIE's Fourth Strategic Plan 2006–2010 extended countries' surveillance obligations as well as the range of information sources capable of alerting the OIE to a potential threat.

Another important step in the recent history of global health was the adoption, in 2007, of the ‘One world, One health’ approach by six organisations: the WHO, the OIE, the Food and Agriculture Organization of the UN (FAO), the World Bank (WB), the UN System Influenza Coordination and the United Nations Children's Fund. This approach aims to develop a more comprehensive framework for human, animal and ecosystem health and encourages ‘countries and actors … to transcend boundaries, to progressively construct a shared perception of health risks and to build coordinated responses to global health issues’ (Figuié, 2014, p. 476). In 2008, these organisations drew up a ‘Strategic Framework for Reducing Risks of Infectious Diseases at the Animal–Human–Ecosystems Interface’, which underlines the importance of ‘surveillance and emergency response systems at the national, regional and international levels’ (FAO et al., 2008, p. 17). The ‘One health’ approach is thus consistent with the idea that emerging infectious disease constitutes the main threat to ‘global health’, a vision that, according to some scholars, tends to concentrate efforts on specific diseases that threaten Western countries at the expense of other ills, such as endemic diseases, which nonetheless cause millions of deaths (Blouin Genest, 2015).

Along with surveillance, another concept at the core of global health is preparedness.⁴ This term refers to the idea that some catastrophic events will certainly occur, but that their precise timing and nature cannot be accurately predicted by probabilistic calculation. For this reason, the imperative of preparedness requires countries and their public services to ‘assume that the occurrence of the event may not be avoidable’ and to produce ‘knowledge about its potential consequences through imaginative practices like simulation and scenario planning’ (Lakoff, 2017, p. 19). Surveillance can be understood as one aspect of preparedness, insofar as preparedness involves monitoring any potential warning signal, whether of a health emergency or of a weakness in the public infrastructure that must be ready to respond to such an emergency.

3.2 Assessing the performance of health systems

Performance assessment is the second dimension that matters in describing the evolving relationship between public health and statistics in the second half of the twentieth century. To understand it, we need to go back to the 1980s, when international health governance underwent a series of structural changes, one of the most important being the rise of the WB as a major actor in this field. The WB's development-assistance policies started to include new areas such as education, health and population control. Health was no longer seen as a consequence of economic growth and development, but rather as a means of achieving them (Ruger, 2005).

The WHO, by contrast, lost some of its power, partly because of the decline in its regular budget and its resulting greater dependence on certain key donors (Brown et al., 2006). The end of the 1990s also saw the massive development of public–private partnerships involving companies, foundations, NGOs and international organisations (Gaudillière, 2016). All these elements fostered the emergence of a culture of performance and audit. It became commonplace to hold that countries must be assessed using various medical indicators in order to determine which ones should receive funding, and that metrics should also be used to identify the most cost-effective public health interventions. Managerial techniques based on private-sector practices were introduced not only in foundations such as the Bill & Melinda Gates Foundation or the Bloomberg Initiative,⁵ but also in international organisations. In 1993, the WB published its report ‘Investing in health’, in which various metrics were developed, such as the Global Burden of Disease and the DALY (Disability-Adjusted Life Year), which allowed the economic cost of diseases to be assessed and enabled policy-makers to identify the supposedly most efficient types of public intervention.⁶ ‘Development performance should be evaluated on the basis of measurable results,’ the WB stated in 1998.⁷

In this shifting landscape, the WHO initiated a series of reforms in order to reposition itself in the field of international health. Accepting that it was no longer the only major actor in global health, the WHO sought to become the co-ordinator on the world stage in the face of the threats posed by ‘emerging infectious diseases’. Gro Harlem Brundtland, appointed Director-General in 1998, fully adopted the rhetoric of the WB, announcing that the WHO was to become a ‘department of consequence’ (Brown et al., 2006, p. 70). The publication of the World Health Report 2000 – Health Systems: Improving Performance, in which national health-system performances were measured and ranked, is paradigmatic of this evolution (Gaudillière, 2016). By adopting such rhetoric, the WHO expressly endorsed the use of benchmarking to guide national policies. Various performance indicators produced by the WHO were also explicitly taken into account in the allocation of funds by the WB or the Millennium Challenge Corporation, and thereby became all the more influential (Fisher, 2012).

In addition, recent years have seen powerful private actors invest in global health metrics, most notably the Institute for Health Metrics and Evaluation (IHME), launched in 2007 mainly with funding from the Bill & Melinda Gates Foundation (Mahajan, 2019). The growing influence of the IHME is in line with the trust in data that has characterised the history of public health. However, it also marks a step towards the end of the monopoly of states and international organisations over global health data, since the IHME clearly seeks to compete with the WHO's metrics and claims that data sources other than those produced by states (such as companies, universities and citizens) have become essential (Mahajan, 2019).

Interestingly, the field of surveillance and preparedness has itself been affected by audit and benchmarking practices: in recent years, ratings and indicators have been created specifically to assess countries' preparedness and surveillance systems.⁸ There are too many such initiatives to analyse properly here, but some of the most pertinent can be mentioned. The principal actor on this issue is, unsurprisingly, the WHO. Under the IHR 2005 (in particular Art. 54 and Annex 1), state parties are required to report on the implementation of the regulations and must therefore provide a self-assessment of various capacity requirements. Following these provisions, the WHO has developed several tools, brought together in the ‘IHR Monitoring and Evaluation Framework’ (WHO, 2018c). Two of these are quantitative instruments: the State Party Self-assessment Annual Reporting (SPAR) Tool (WHO, 2018a), which is meant to monitor progress in implementing the IHR;⁹ and the Voluntary External Evaluation, generally carried out through the Joint External Evaluation (JEE) Tool (WHO, 2018b), which, as its name indicates, is an optional, external assessment made on an ad hoc basis.¹⁰

Both instruments are composed of subindicators scored on a harmonised scale ranging from 1 (no capacity) to 5 (sustainable capacity). The scores are attributed by experts (either national or foreign) responding to a questionnaire and are then aggregated into a final rating. The WHO specifies that these indicators ‘are not intended for use in ranking or comparing countries’ performances’ (WHO, 2018c, p. 8). Yet, despite this stated intention, the WHO e-SPAR website¹¹ expressly allows users to compare a country's score with the average for its region and for the world. Moreover, the WHO adds that these tools aim to ‘support countries in monitoring their progress in the development and maintenance of the national capacities required by the IHR’, and it has produced another document, entitled Benchmarks for International Health Regulations Capacities (WHO, 2019), which describes, for each SPAR and JEE subindicator, what action a country should take to reach a higher level on the 1-to-5 scale. All these instruments are thus overtly used by the WHO as a technology of governance aimed at guiding state parties towards allegedly better preparedness for health threats. Given its weak coercive power, it is hardly surprising that the WHO resorts to such instruments to try to ensure compliance with the IHR.
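The scoring logic just described can be sketched in a few lines of Python. Only the 1-to-5 scale comes from the text; everything else is an assumption for illustration: the capacity names and scores are hypothetical, each level is converted to a percentage (level × 20), and scores are combined with unweighted means. This is not presented as the WHO's documented aggregation formula for SPAR or the JEE. The last function mirrors the comparison with a regional or world average that the e-SPAR website offers.

```python
from statistics import mean

# Hypothetical subindicator scores on the 1 (no capacity) to 5 (sustainable
# capacity) scale, grouped by capacity. Names and values are invented.
COUNTRY_SCORES = {
    "Surveillance": [3, 4],
    "Laboratory": [2, 3, 3, 4],
    "Risk communication": [4],
}


def level_to_percent(level):
    """Map a 1-5 level to a percentage (assumed conversion: level x 20)."""
    if not 1 <= level <= 5:
        raise ValueError(f"level must be between 1 and 5, got {level}")
    return level * 20


def capacity_score(levels):
    """Unweighted mean of the subindicator percentages for one capacity."""
    return mean(level_to_percent(level) for level in levels)


def overall_score(scores):
    """Unweighted mean of the capacity scores (assumed aggregation rule)."""
    return mean(capacity_score(levels) for levels in scores.values())


def gap_to_reference(country_score, reference_scores):
    """Difference between a country's score and a reference group's average."""
    return country_score - mean(reference_scores)


if __name__ == "__main__":
    score = overall_score(COUNTRY_SCORES)
    # Hypothetical overall scores of other countries in the same region.
    regional_scores = [55, 65, 75]
    print(f"Overall score: {score}%")
    print(f"Gap to regional average: {gap_to_reference(score, regional_scores)} points")
```

Here the hypothetical country scores 70% overall, 5 points above the invented regional average of 65%. The choice of unweighted means is itself normative: it treats every capacity as equally important, and changing the weights would change both the scores and the behaviour the indicator rewards.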

Furthermore, as might be expected, several private initiatives pursuing the same goal have emerged. Without analysing each of them in detail, we can mention the ReadyScore developed by PreventEpidemics,¹² the Infectious Disease Vulnerability Index created by the RAND Corporation (Moore et al., 2016) and the Epidemic Preparedness Index developed by Metabiota, a company whose declared mission is to make ‘the world more resilient to epidemics’ (Oppenheim et al., 2019). The ReadyScore is based exclusively on data from the JEEs; the other two draw on various sources, mainly from the WHO and the WB. Lastly, the Global Health Security Index,¹³ probably the most elaborate of these, comprises a range of indicators as well as rankings. It claims to be based on the belief that

‘all countries are safer and more secure when their populations are able to access information about their country's existing capacities and plans and when countries understand each other's gaps in epidemic and pandemic preparedness so they can take concrete steps to finance and fill them’.¹⁴

Despite their individual characteristics, all of these projects are based in the US and share the same global health discourse, according to which: (1) the risk of epidemics is increasing (notably due to globalisation); (2) previous experience has shown that many countries, especially in the global South, have weaknesses in terms of preparedness; and (3) providing objective assessments of preparedness and resilience, and raising states' awareness of the issue, are therefore critical for improving global health security.

A clear confirmation of this trend can be found in a 2017 report by the International Working Group on Financing Preparedness (IWG), a group associated with the WB, entitled ‘From panic and neglect to investing in health security: financing pandemic preparedness at a national level’ (IWG, 2017). Mindful of the ‘preparedness problem’, the report reflects at length on the issue of investing in preparedness. Noting that ‘it can be challenging to convince politicians to spend money to help avoid something’ (IWG, 2017, p. 61), it points to indicators as a way of incentivising countries to allocate funds to enhancing their preparedness:

‘One way of doing this is by developing indices or measures based on preparedness that influence the inflow of private capital. Another way is by using measures of preparedness to influence the flows of development assistance, such as from the concessional financing from the World Bank.’ (IWG, 2017, p. 61)

Although it explicitly recognises the inherent limits of indicators, the report considers several contexts in which preparedness indices could be integrated into existing structures of international finance. Given the decisive influence of credit-rating agencies, the International Monetary Fund's Article IV consultations and the WB's Country Policy and Institutional Assessment, the report argues that including preparedness in these assessments could compel countries to improve their capacity to respond to health emergencies. Such incentive mechanisms would, of course, significantly increase the normative power of emergency-preparedness performance indicators.

***

This brief historical overview shows that the quantification of health data has been an essential component of public health since the beginning of the nineteenth century. This is especially true during epidemics, when supposedly solid and hardly questionable data offer strong support for the quick decisions that need to be taken. In addition, infectious diseases were a central topic of international co-operation, since the adequacy of the authorities' response depended directly on the effective transmission of information between states. Hence, the need for co-ordinated data coding and for knowledge networks has led international organisations to play a central role.

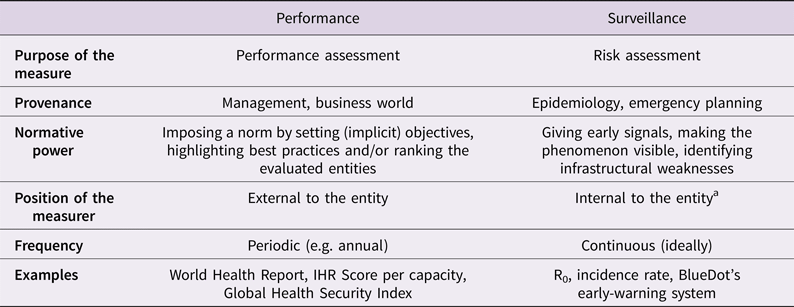

The second half of the twentieth century saw the emergence of two distinct ways of quantifying public health (and communicable diseases in particular): surveillance and preparedness on the one hand; performance assessment on the other. These two approaches interact differently with the decision-making process of public authorities. They are summarised in Table 1.

Table 1. Two types of indicators within global health

ᵃ On the notion of internal organisational measures, see Restrepo Amariles (2017).

The use of indicators for surveillance and preparedness purposes seeks to make visible a phenomenon, the outbreak and spread of a disease, that would otherwise be hard to discern. Such indicators form part of an alert system designed to warn the authorities of an ever-latent threat by identifying early signs of a potential catastrophe. Quantified data play a key role in this process, since surveillance requires the adequate and continuous transmission of information from laboratories and medical practitioners to the control authorities in accordance with standardised protocols (Fallon et al., 2020). Observing various indicators allows the level of risk to be quantified by examining outliers that may indicate the existence of a threat. In this regard, syndromic surveillance tools such as BlueDot, which aim to predict threats by screening unstructured data in real time, can be seen as the latest expression of surveillance indicators. Indicators also make it possible to test preparedness, notably at the hospital level, by simulating an emergency, in much the same way as stress tests are performed in banking (Lakoff, 2017; Stark, 2020). In the surveillance rationale, then, the function of quantitative information is to guide decision-making by identifying early signals of a threat or weaknesses in infrastructure, or by monitoring the epidemic once it has broken out.
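The idea of quantifying risk by spotting outliers can be illustrated with a generic moving-baseline threshold rule, sketched below in Python. This is an assumed, textbook-style technique chosen for illustration; it is not the algorithm used by BlueDot or by any other system named in the text, and the daily case counts are invented. A day is flagged when its count exceeds the mean of the preceding `window` days by more than `k` standard deviations; the `window`, `k` and `min_sd` values are arbitrary choices.

```python
from statistics import mean, stdev

# Hypothetical daily case counts reported to a surveillance authority.
DAILY_CASES = [4, 5, 3, 6, 4, 5, 4, 5, 6, 18, 25, 30]


def flag_outliers(counts, window=7, k=3.0, min_sd=1.0):
    """Return the indices of days whose count exceeds the threshold
    mean + k * SD computed over the preceding `window` days."""
    flagged = []
    for day in range(window, len(counts)):
        baseline = counts[day - window:day]
        # Floor the SD so that a perfectly flat baseline does not turn
        # any small increase into an alarm.
        sd = max(stdev(baseline), min_sd)
        threshold = mean(baseline) + k * sd
        if counts[day] > threshold:
            flagged.append(day)
    return flagged


if __name__ == "__main__":
    print(flag_outliers(DAILY_CASES))
```

With these figures, days 9 and 10 are flagged but day 11 is not: once the spike enters the baseline, it inflates both the mean and the standard deviation. This is one reason why alerts of this kind are usually treated as signals to be verified rather than as conclusions in themselves.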

Performance indicators work differently. They are produced by external actors (e.g. international organisations, private foundations, think-tanks) that seek to control the behaviour of the audited entities by stimulating competition, setting quantified targets and highlighting best practices with which these entities are invited to align themselves. This is all the more true when direct consequences (e.g. the allocation of funds) are attached to a rating or a position in a ranking. The audited actors may thus come to internalise the normative framework that underpins the criteria according to which the assessment is carried out. As we have seen, the field of surveillance and preparedness is itself now affected by this rationality, given the emergence of various tools dedicated to measuring the performance of countries in this regard. These performance indicators are generally published periodically.

This distinction should be understood as a theoretical framework and not applied too rigidly, since in various cases the line between the two types of indicators can become blurred. Most importantly, the distinction concerns the way in which data are analysed and used rather than the nature of the data themselves. The same kind of data (e.g. the number of beds in intensive-care units) can serve both surveillance and performance-assessment purposes. As we will see in the next section, differentiating between these two regimes of quantification helps to explain the importance of health indicators during the COVID-19 pandemic.

4 The two roles of indicators during the pandemic

That metrics play a major role in the management of the COVID-19 pandemic is beyond doubt. However, the questions of how and by whom these metrics are produced, and in what manner they inform, influence or sometimes determine the decision-making process, need to be addressed further. To do so, drawing on the distinction between performance and surveillance indicators, I will first examine the role of the international rankings and evaluation tools deployed during the crisis. Second, I will analyse how quantitative health data are used to combat the outbreak locally.

4.1 COVID-19 data for comparison and evaluation purposes

Since the very beginning of the pandemic, international comparison through numbers has been in full swing. A plethora of tools have emerged allowing health data specific to each country to be visualised and compared. The WHO created its ‘COVID-19 Situation Dashboard’Footnote 15 and the WB its ‘Understanding the COVID-19 pandemic through data’.Footnote 16 In November 2020, the WHO Regional Office for Europe even launched a Public Health and Social Measures (PHSM) Severity Index,Footnote 17 which allows the responses to COVID-19 implemented in the countries of the WHO European Region to be compared. Alongside indicators produced by international organisations or countries, initiatives have also been developed by universities (e.g. Johns Hopkins University,Footnote 18 Oxford's Our World in Data,Footnote 19 WorldPopFootnote 20), private groups (e.g. WorldometerFootnote 21) and news organisations (e.g. BNO NewsFootnote 22).Footnote 23 A number of these projects are highly influential. The Johns Hopkins Dashboard, for instance, is regularly cited by journalists and public authorities and is the main source of the WB's own dashboard.

What is exceptional about this profusion of indicators and rankings is, of course, not that some have been initiated by private actors. Many of the most influential indicators in other fields are not developed by public authorities: take the Shanghai Ranking or the credit-rating agencies, for instance. Yet never before have so many indicators sought to measure the same phenomenon, namely the evolution of the disease outbreak. The competition between countries in the fight against the pandemic has thus taken a decentralised form, since there was not one or a few indicators measuring a single object, as is generally the case, but rather a myriad of instruments. Comparison is not neutral, since it helps to determine which types of political measures are acceptable and which are not. For instance, a country that adopts very coercive measures (in comparison with other countries) at a time when the level of the epidemic on its territory is low can be strongly criticised for overreacting; by the same token, a country with a high number of confirmed cases that takes little action in response can be sharply criticised for its inertia. These indicators and rankings are used both to criticise a country's action and by political leaders to praise the quality of their own.Footnote 24 Their ‘retroaction’ effects (Desrosières, 2015) thus lie in their ability to limit the scope of possible responses to the crisis by forcing countries to take into account the actions of their neighbours and to justify themselves when they diverge significantly from other countries.

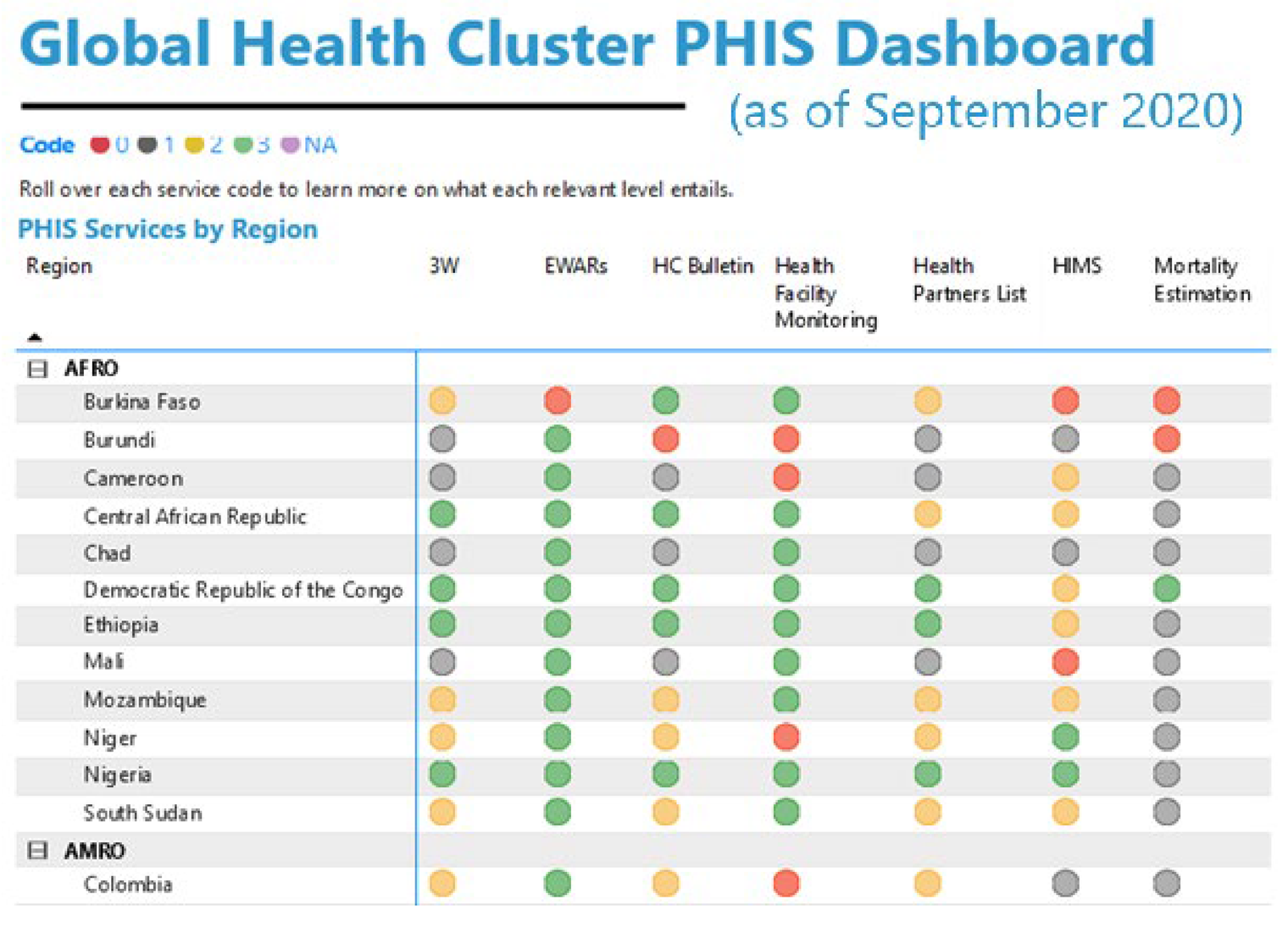

However, alongside these various metrics, one also needs to consider the ‘COVID-19 Strategic Preparedness and Response Plan’ put in place by the WHO, which notably aims to evaluate the nature of the actions implemented by Member States. From the beginning of the pandemic, the WHO set objectives to be achieved not only by states but also by the WHO itself. The ‘COVID-19 Monitoring and Evaluation Framework’ (WHO, 2020a) lists ‘key public health and essential health services and systems indicators to monitor preparedness, response, and situations during the COVID-19 pandemic’. Speaking unambiguously of ‘key performance indicators’, the framework allows the WHO, on the one hand, to track the progress of states towards these goals and, on the other, to be accountable itself to its partners, including donors. The WHO also encourages countries to conduct an ‘Intra-action Review’ so as to ‘identify current best practices, gaps and lessons learned’ and to ‘propose corrective actions’ (WHO, 2020b). This instrument is fully consistent with the reporting tools that the WHO developed to ensure the proper implementation of the IHR 2005 (such as the SPAR and JEE tools and the after-action review). Another telling illustration of the importance of this audit and benchmarking rationality is the Global Health Cluster PHIS Dashboard (Figure 1).

Figure 1. The Global Health Cluster PHIS Dashboard. Source: Screenshot from the Global Health Cluster PHIS Dashboard, available at https://app.powerbi.com/view?r=eyJrIjoiZGM1M2FkNjUtM2E2Yi00NTZjLTg1YmUtOGI3OWVhZTY1ZmIyIiwidCI6ImY2ZjcwZjFiLTJhMmQtNGYzMC04NTJhLTY0YjhjZTBjMTlkNyIsImMiOjF9.

The Health Cluster is a partnership led by the WHO that focuses on humanitarian emergencies and is mostly active in countries of the Global South. This dashboard lists various tools and technologies related to the collection and management of health information and assigns each technology a four-point code. In doing so, it strives to evaluate the ability of countries’ health-information systems to respond to the pandemic and indirectly encourages countries to invest in and develop such technological infrastructure. The pandemic thus gave rise to a variety of reporting and audit tools, developed by global health actors such as the WHO or private foundations, that are intended to help and guide countries towards an effective response against the spread of the virus.

4.2 The production of COVID-19 data for dealing locally with the epidemic

Alongside the use of metrics for comparison and evaluation purposes, the COVID-19 outbreak prompted countries to produce a massive amount of data in order to understand the spread of the disease and act accordingly. As explained earlier, an epidemic is by its nature particularly difficult to grasp without numbers. The materiality of other catastrophic events, such as earthquakes or floods, can be experienced quite directly. In an epidemic, we can of course see that many people die or that hospitals are filling up with sick people, but quantitative data are indispensable to get a sense of the bigger picture.Footnote 25 Moreover, when it comes to pandemics, a minimum level of harmonisation in the ways in which data are collected is imperative for the fight against the disease. In this respect, history shows that international health organisations came to play a key role in producing technical work and expertise addressed to states. The COVID-19 crisis is no exception: the WHO has published a large number of technical-guidance documents to advise countries in their struggle against the outbreak.

Consider, for example, the crucial question of certifying deaths due to COVID-19, where many difficulties may arise (comorbidities, absence of a confirmed diagnosis, etc.). Guidelines indicating in which cases COVID-19 should be recorded as a cause of death are thus essential for any cross-country comparison and for surveillance at the supranational level.Footnote 26 More generally, the WHO's recommendations cover sampling strategies for patient testing; case definitions (suspected, probable, confirmed); delimitation of age groups; contact tracing; periodicity of reporting; and so forth. These elements may appear innocuous, but they largely determine the content of the data that states publish and, consequently, the information provided by the international indicators mentioned above. In addition, these technical-guidance documents are often implemented via software, which further fosters standardisation. This is the case for the COVID-19 Surveillance Digital Data Package launched within the District Health Information Software 2 (DHIS2). Created at the end of the 1990s, this platform claims to be the world's largest health-management information system and has been implemented in dozens of low- and middle-income countries for the collection, analysis, visualisation and sharing of health data. The newly created COVID-19 package is specifically dedicated to the fight against the coronavirus and is already operational or in development in fifty countries. The package includes ‘standard metadata aligned with the WHO's technical guidance on COVID-19 surveillance and case definitions and implementation guidance to enable rapid deployment in countries’.Footnote 27

The whole socio-technical infrastructure that supports the life-cycles of global health data deserves our attention, as it shapes the construction of the national surveillance indicators that help to visualise and combat the epidemic. At national and local levels, the pandemic has in fact led these surveillance metrics to acquire a decisive normativity. Switzerland offers a striking example. In the ‘decree on the measures aiming at fighting the COVID-19 epidemic in particular situation’ (Le Conseil fédéral suisse, 2020), reference is expressly made to certain indicators to determine whether the cantonal authorities may strengthen or relax the health-policy measures imposed across the country. For instance, Article 7, paragraph 2 provides that a canton may extend the opening hours imposed on establishments such as shops and restaurants under certain conditions, including that the reproduction rate has been below 1 for at least seven consecutive days and that the number of new infections per 10,000 persons over the last seven days is below the national average. Paragraph 5 adds that if the reproduction rate exceeds 1 on three consecutive days (or if the two other conditions are no longer fulfilled), the canton must cancel the opening-hours extension.
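The conditional structure of Article 7 as summarised above can be expressed as two predicates. This is a hypothetical sketch, not the text of the decree: it models only the two conditions described in the paragraph, represents every other condition of Article 7 by a single hypothetical flag, `other_conditions_met`, and assumes that the inputs are daily R estimates (most recent last) and seven-day incidence figures per 10,000 persons for the canton and for the country.

```python
def may_extend_hours(daily_r, canton_incidence_7d, national_incidence_7d,
                     other_conditions_met):
    """Extension allowed if R < 1 on each of the last seven days, the canton's
    7-day incidence is below the national average, and the remaining
    conditions (a hypothetical placeholder flag) hold."""
    last_week = daily_r[-7:]
    r_below_one = len(last_week) == 7 and all(r < 1 for r in last_week)
    return (r_below_one
            and canton_incidence_7d < national_incidence_7d
            and other_conditions_met)


def must_cancel_extension(daily_r, canton_incidence_7d, national_incidence_7d,
                          other_conditions_met):
    """Extension must be cancelled if R > 1 on each of the last three days or
    if one of the other conditions is no longer fulfilled."""
    last_three = daily_r[-3:]
    r_above_one = len(last_three) == 3 and all(r > 1 for r in last_three)
    incidence_lost = canton_incidence_7d >= national_incidence_7d
    return r_above_one or incidence_lost or not other_conditions_met


# Hypothetical canton: R below 1 for a week, incidence below national average.
print(may_extend_hours([0.9] * 7, 2.0, 3.0, other_conditions_met=True))
# Three consecutive days with R above 1 trigger cancellation.
print(must_cancel_extension([0.9] * 4 + [1.1] * 3, 2.0, 3.0,
                            other_conditions_met=True))
```

Written this way, the choice of R as a trigger directly determines when the extension switches on or off, which is what gives the indicator its normative force.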

Yet the focus on one indicator rather than another is not neutral, as it captures some aspects of the epidemic and occludes others. Consider the reproduction numberFootnote 28 (also called R), which is referred to in this Swiss regulation and has, more broadly, received considerable attention from experts and political leaders since the beginning of the pandemic. Without going into the details of the inherent limitations of this measure,Footnote 29 let us note that, as Tufekci (2020) has clearly explained, because the reproduction number is an average, it does not capture the dispersion of SARS-CoV-2 transmission. Many studies have shown that transmission of this virus is overdispersed, with many infections occurring at superspreading events. By indicating only the average, the reproduction number fails to reflect the importance of clusters in the spread of the disease. Furthermore, because focusing on this variable implicitly suggests that the disease spreads uniformly through the population, it may favour the adoption of uniform public health measures rather than targeted actions. Moreover, as Tufekci notes, one's judgment of the severity of the measures taken in a country may vary depending on the variable used to make it. Hence, if we consider dispersion rather than the reproduction number, the fact that in March 2020 Sweden imposed a limit of fifty people on indoor gatherings and maintained this ban for an extended period (whereas many countries had lifted such measures) may nuance the frequent claim that Sweden was extremely lax in dealing with the outbreak.
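The gap between an average and its dispersion can be made concrete with two hypothetical groups of ten infected people (invented numbers, not data). Both have the same mean number of secondary infections (R = 1), but in the first every case infects exactly one person, while in the second two cases account for all onward transmission. As a simple, assumed measure of dispersion, the sketch computes the share of secondary infections caused by the most infectious 20% of cases; epidemiological studies usually estimate a dispersion parameter instead, which this sketch does not attempt.

```python
import math
from statistics import mean


def top_share(offspring, fraction=0.2):
    """Share of all secondary infections caused by the most infectious
    `fraction` of cases (an assumed, illustrative dispersion measure)."""
    ranked = sorted(offspring, reverse=True)
    k = max(1, math.ceil(fraction * len(ranked)))
    total = sum(ranked)
    return sum(ranked[:k]) / total if total else 0.0


# Hypothetical secondary-infection counts for ten cases each.
uniform = [1] * 10              # everyone infects one person
clustered = [0] * 8 + [2, 8]    # two cases drive all transmission

for name, data in [("uniform", uniform), ("clustered", clustered)]:
    print(name, "mean R:", mean(data), "top-20% share:", top_share(data))
```

An indicator reporting only the mean would treat the two groups identically, whereas the second calls for measures targeting the settings in which the few highly infectious cases transmit.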

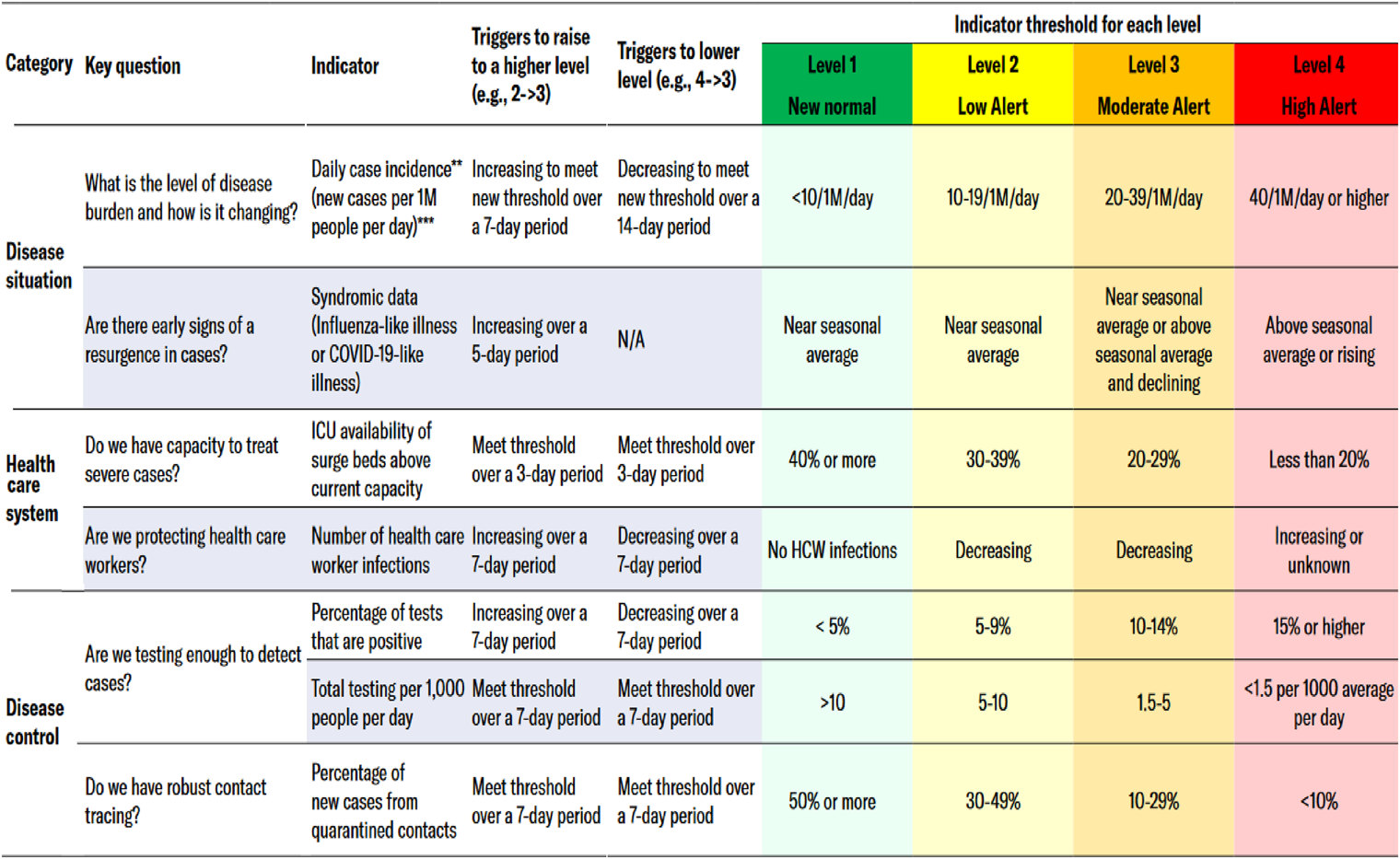

Finally, the pandemic has also prompted the creation of more complex decision tools, which can be described as multilayered indicators designed both to describe the level of the threat objectively and to define the political and legal measures that must be adopted as a result. They are multilayered in the sense that they usually take the form of a simple scale whose levels are determined by various indicators (such as the reproduction number). Their distinctive feature is that specific public health measures are identified for each level. Unsurprisingly, such regulatory tools are developed by some of the global health actors mentioned earlier. For example, Resolve To Save Lives, the powerful organisation that launched the Prevent Epidemics ReadyScore, promotes the use of alert-level systems aiming ‘to support clear decision-making, improve accountability and communicate with the public to increase healthy behavior change’.Footnote 30 The organisation provides an example of an alert-level system, illustrated in Figure 2, which is divided into four levels, from ‘new normal’ to ‘high alert’.

Figure 2. Prevent Epidemics’ COVID-19 alert-level indicators. Source: Screenshot from ‘Prevent Epidemics’ website, available at https://preventepidemics.org/wp-content/uploads/2020/05/Annex-2_Example-of-an-alert-level-system_US_FINAL.pdf.

The instrument comprises three categories (Disease Situation, Health Care System and Disease Control), each associated with a range of indicators such as the incidence rate, the percentage of positive tests or the availability of intensive-care beds. Thresholds are defined for each indicator and each level. In another tab, different public health measures are associated with the four levels. Another compelling example is the ‘COVID-Local’ project (Figure 3), developed notably by the Nuclear Threat Initiative (which also produces the Global Health Security Index). Its assessment of the threat level is divided into five categories (Infection Rate, Diagnostic Testing & Surveillance, Case & Contact Investigations, Healthcare Readiness and Protecting At-Risk Populations), each subdivided into a few indicators.

Figure 3. Nuclear Threat Initiative's COVID-Local project. Source: Screenshot from ‘Metrics for Phased Reopening’ from the ‘COVID LOCAL’ website, available at https://covid-local.org/metrics/.
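The threshold logic of the Prevent Epidemics instrument described above (thresholds for each indicator and each level, with measures attached to levels) can be sketched as follows. All cut-off values are hypothetical; intensive-care capacity is expressed here as occupancy so that higher values are worse for every indicator; and the aggregation rule (the overall alert level is the highest level reached by any single indicator) is an assumption, since the text does not say how the indicators are combined.

```python
from bisect import bisect_right

# Hypothetical cut-offs: the values at which levels 2, 3 and 4 begin.
# Level 1 corresponds to 'new normal' and level 4 to 'high alert'.
THRESHOLDS = {
    "incidence_per_100k": (10, 50, 100),  # weekly new cases per 100,000
    "test_positivity_pct": (3, 5, 10),    # % of tests that are positive
    "icu_occupancy_pct": (60, 75, 90),    # % of intensive-care beds in use
}


def indicator_level(value, cutoffs):
    """Level 1-4: one plus the number of cut-offs the value meets or exceeds."""
    return 1 + bisect_right(cutoffs, value)


def alert_level(readings):
    """Overall level, assumed here to be the worst level of any indicator."""
    return max(indicator_level(readings[name], cutoffs)
               for name, cutoffs in THRESHOLDS.items())


print(alert_level({"incidence_per_100k": 5,
                   "test_positivity_pct": 6,
                   "icu_occupancy_pct": 50}))
```

In this example a single indicator (test positivity) is enough to raise the overall level, which shows that the aggregation rule, and not only the thresholds, shapes the measures that follow.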

In Belgium, such an instrument, referred to as a ‘barometer’, was designed by an expert committee.Footnote 31 It was to include four levels (determined on the basis of the reproduction number, the daily number of new hospitalisations and modelling), each attached to a range of possible measures. It was even envisaged that the tool would be applied separately in each province and that the public health measures would be implemented automatically in a province once a defined threshold had been crossed on its territory. After many twists and turns, this version of the project was eventually abandoned, apparently because it proved too difficult to implement.

This kind of indicator is different from those that aim to assess the performance of an entity such as a state. Indeed, the goal of such indicators is not to put the evaluated entities in competition with each other. Rather, by fixing the metrics that must be monitored as well as the mitigation actions that must be taken, they purport to enable the authorities to react, ideally in real time and almost automatically, to the evolution of the outbreak.

5 Conclusion

Metrics, as the history of public health shows, have always played a key role in international health and in the management of infectious disease, and the COVID-19 crisis has only confirmed this. This paper has attempted to show that the multiple uses of data and the influence of indicators on decision-making during the pandemic can be understood in light of the two forms of health quantification, performance assessment and epidemiological surveillance, that accompanied the turn from international health to global health. The first explains the pervasiveness of rankings and evaluation practices since the beginning of the pandemic; the second, the normative function assigned to health indicators in the management of the outbreak.

However, despite their differences, the line between surveillance and performance-assessment purposes can easily become blurred.Footnote 32 The Belgian barometer project, for example, could clearly also have been used to stimulate competition between the audited provinces. In addition, beyond their respective characteristics, these two rationalities share the belief that numerous and accurate data ensure objectivity and are thus essential to the fight against the virus. On the one hand, quantified data allow the quality of countries’ responses to the crisis to be effectively assessed and the best reactions identified; on the other, they are taken to reflect unambiguously the level of the threat in a country. The imperative of evidence-based and data-driven policy-making, strongly promoted by the actors of global health, tends to set aside the political process that necessarily underlies the management of a crisis. The impression that this is a purely technical issue is even more vivid in the case of an epidemic, since the goal pursued is fairly consensual, namely to mitigate the outbreak and, in particular, to prevent hospitals from becoming overloaded. In this regard, the alert-level systems examined in section 4 are paradigmatic of this trend: not only are the indicators, the critical thresholds and the alert scale preset, but the political measures are also predetermined and tied to each alert level. Moreover, today's ubiquitous data sensors encourage the design of regulatory devices meant to ensure an almost automatic implementation of rules in accordance with the information the sensors provide.

However, one should not forget, first, that any metric is always the product of conventions, values and technologies that reveal some aspects of reality while occluding others; and, second, that the means to achieve the defined goal are far from self-evident or uncontentious. This is not to say, of course, that quantification is undesirable or useless in the fight against an epidemic. On the contrary, indicators are indispensable, since without them we would be almost completely in the dark. Nonetheless, it seems critical to recall the inherent precarity and partiality of the information they provide.Footnote 33 Given the growing importance of such normative quantitative devices during the pandemic, their influence is likely to continue to grow, especially in view of other impending global crises such as global warming. For this reason, these devices require close scrutiny.

Conflicts of Interest

None

Acknowledgements

I would like to thank the participants in the internal seminar of the Perelman Centre for the discussion that followed the presentation of an earlier version of this paper. I also wish especially to thank Catherine Fallon, who provided substantial comments and suggestions. Lastly, I thank Simon Mussell for proofreading the paper. In some passages, I draw on certain ideas that I developed more concisely in Genicot (2020). All errors or approximations are of course my own.