Introduction and background

Currently, two robotic rovers are exploring Mars, having arrived there in January 2004 (Squyres et al. 2004a,b; Alexander et al. 2006; Matthies et al. 2007). Two rovers are planned to be launched in the next few years, in order to explore Mars further (Volpe 2003; Barnes et al. 2006; Griffiths et al. 2006). Fink et al. (2005), Dubowsky et al. (2005), Yim et al. (2007) and others suggest mission concepts for planetary or astrobiological exploration with ‘tier-scalable reconnaissance systems’, microbots and marine/mud-plain robots, respectively. Volpe (2003), Fink et al. (2005), Castano et al. (2007), Halatci et al. (2007, 2008) and others have explored concepts within the area of scientific autonomy for such robotic rovers or missions on Mars and other planets or moons. We report upon progress made by our team in the development of scientific autonomy for the computer-vision systems for the exploration of Mars by robots. This work can also be applied to the exploration of the Moon, and the deserts and oceans of Earth, either by robots or as augmented-reality systems for human astronauts or aquanauts. One motivation for our work is to develop systems that are capable of recognizing (in real time) all the different areas of a series of images from a geological field site.
Such recognition of the complete diversity can be applied in multiple endeavours, including image downlink prioritization, target selection for in situ analysis with geochemical or biological analysis tools and sample selection for sample return missions.

Previous work by our team includes: (a) McGuire et al. (2004a) on the concept of uncommon maps based upon image segmentation, on the development of a real-time wearable computer system (using the NEO graphical programming language (Ritter et al. 1992, 2002)) to test these computer-vision techniques in the field, and on geological/astrobiological field tests of the system at Rivas Vaciamadrid near Madrid, Spain; (b) McGuire et al. (2005) on further tests of the wearable computer system at Riba de Santiuste in Guadalajara, Spain – this astrobiological field site was of a totally different character to the field site at Rivas Vaciamadrid (red-bed sandstones instead of gypsum-bearing outcrops), yet the computer vision system identified uncommon areas at both field sites rather well; and (c) Bartolo et al. (2007) on the development of a phone-camera system for ergonomic testing of the computer-vision algorithms.

Previous work to ours includes: (a) McGreevy (1992, 1994) on the concept of geologic metonymic contacts, on cognitive ethnographic studies of the practice of field geology, and on using head-mounted cameras and a virtual-reality visor system for the ethnographic studies of field geologists; (b) Gulick et al. (2001, 2004) on the development of autonomous image analysis for exploring Mars; and (c) Itti et al. (1998), Rae et al. (1999), McGuire et al. (2002), Ritter et al. (1992, 2002), Sebe et al. (2003) and others on the development or testing of saliency or interest maps for computer vision. Current, related work to ours includes that of Purser et al. (2009) and Ontrup et al. (2009) on using Web 2.0 to label and explore images from a deep-sea observatory, using textural features and self-organizing maps.

Herein, we extend our previous work by: (i) developing and testing a second computer-vision algorithm for robotic exploration by applying a Hopfield neural network algorithm for novelty detection (Bogacz et al. 1999, 2001); (ii) integrating a digital microscope into the wearable computer system; (iii) testing the novelty detection algorithm with the digital microscope on the gypsum outcrops at Rivas Vaciamadrid; (iv) developing a Bluetooth communications mode for the phone-camera system; and (v) testing the novelty detection algorithm with the Bluetooth-enabled phone camera connected to an ordinary netbook computer (Foing et al. 2009a–c; Gross et al. 2009; Wendt et al. 2009) at the Mars Desert Research Station (MDRS) in Utah, which is sponsored and maintained by the Mars Society.

Techniques

NEO graphical programming language

NEO/NST is a graphical programming language developed at Bielefeld University (Ritter et al. 1992, 2002) whose utilization (McGuire et al. 2004b) for the Cyborg Astrobiologist project was based primarily upon its graphical representation and readability, promoting ease of use, debugging and reuse. Previous computer-vision projects in Bielefeld, which use NEO, include the GRAVIS robot (McGuire et al. 2002; Ritter et al. 2003). The NEO language includes icons for each algorithmic subroutine, wires that send data to and from different icons, encapsulation to create meta-icons from sub-icons and wires, the capability to code icons either in compiled or interpreted C/C++ code and capabilities for iterative real-time debugging of graphical sub-circuits. NEO functions on operating systems that include Linux and Microsoft Windows. For the fieldwork for this project, the Microsoft Windows version of NEO was used exclusively. However, much of the developmental computer-vision programming was done at the programmer's desk using the Linux version of NEO.

Image segmentation

In 2002, three of the authors (Ormö, Díaz-Martínez and McGuire) decided that image segmentation is a low-level technique that was needed for the Cyborg Astrobiologist project, in order to distinguish between different geological units, and to delineate geological contacts. McGreevy (1992, 1994) naturally also considers geologic metonymic contacts to be an essential cognitive concept for the practice of field geology. Hence, a greyscale image segmentation meta-icon was programmed from a set of pre-existing compiled NEO icons together with a couple of newly created compiled NEO icons.

This image segmentation meta-icon is based upon a classic technique of using two-dimensional co-occurrence matrices (Haralick et al. 1973) to search for pairs of pixels in the image with common greyscale values to other pairs of pixels. Six to eight peaks in the two-dimensional co-occurrence matrix are identified using a NEO icon, and all pixels in each of these peaks are labelled as belonging to one segment or cluster of the greyscale image. Therefore, we are provided with 6–8 segments for each image; see McGuire et al. (2004a, 2005) for more details.
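The co-occurrence idea can be illustrated with a minimal Python sketch. This is not the NEO meta-icon. It assumes horizontally adjacent pixel pairs, a coarse quantization into eight grey levels, and it treats the most frequent matrix cells as the 'peaks' rather than performing a true local-maximum search. The function names `quantize`, `cooccurrence_counts` and `segment_by_peaks` are hypothetical.

```python
from collections import Counter


def quantize(row, levels=8, max_value=255):
    """Map grey values in 0..max_value onto `levels` coarse bins."""
    return [min(v * levels // (max_value + 1), levels - 1) for v in row]


def cooccurrence_counts(image, levels=8):
    """Count (left, right) grey-level pairs over horizontally adjacent pixels."""
    counts = Counter()
    for row in image:
        q = quantize(row, levels)
        counts.update(zip(q, q[1:]))
    return counts


def segment_by_peaks(image, n_peaks=6, levels=8):
    """Label each pixel with the peak its neighbour pair falls in (0 = no peak).

    Assumes every row holds at least two pixels. The "peaks" are simply the
    n_peaks most populated cells of the co-occurrence matrix (a simplification).
    """
    counts = cooccurrence_counts(image, levels)
    label_of = {cell: k + 1 for k, (cell, _) in enumerate(counts.most_common(n_peaks))}
    labels = []
    for row in image:
        q = quantize(row, levels)
        pairs = list(zip(q, q[1:]))
        pairs.append(pairs[-1])  # the last pixel reuses the pair to its left
        labels.append([label_of.get(pair, 0) for pair in pairs])
    return labels
```

With a toy image made of one dark and one bright band, the two flat regions receive different labels, and the pixel whose neighbour pair straddles the boundary stays unlabelled when only two peaks are requested.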

For the work described in this paper, which involves colour novelty detection, we needed to identify regions of relatively homogeneous colour (in red-green-blue (RGB) or hue-saturation-intensity (HSI) colour space), as opposed to relatively homogeneous greyscale values. So we implemented a full-colour image segmentation algorithm in NEO, based upon three-band spectral-angle matching. This spectral-angle matching thus provides full-colour image segmentation, a capability that was lacking in previous work within this project. In our implementation of spectral-angle matching, each pixel is represented as a 3-vector with its hue (H), saturation (S) and intensity (I) values. This 3-vector for a given pixel is compared to the 3-vectors for other pixels with elementary trigonometry by computing the angle between these two 3-vectors. If this angle is sufficiently small, the two pixels are assigned to be in the same colour segment or cluster. Otherwise, they are assigned to different colour segments or clusters. By evaluating the spectral angle between each unassigned pixel pair in the image, making assignments sequentially, each pixel is assigned to be in a colour segment. The matching angle is set before the mission, but can be modified if desired during the mission. This technique is commonly used in multispectral and hyperspectral image classification, so the novelty detection (which we describe later) that uses this spectral-angle matching technique for colour image segmentation is, in principle, directly extensible to multispectral imaging.
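A minimal Python sketch of spectral-angle matching follows. The angle between two 3-vectors is obtained from their normalized dot product. The greedy assignment shown here, in which each pixel is compared against the first pixel of every existing segment, is one plausible reading of 'making assignments sequentially' and is an assumption, as are the names `spectral_angle` and `segment_by_spectral_angle` and the default matching angle of 0.1 rad.

```python
import math


def spectral_angle(u, v):
    """Angle in radians between two band vectors, e.g. (H, S, I) triples."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        # Convention chosen for this sketch: two zero vectors match, otherwise they do not.
        return 0.0 if norm_u == norm_v else math.pi / 2
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))  # guard against rounding
    return math.acos(cosine)


def segment_by_spectral_angle(pixels, max_angle=0.1):
    """Greedy sequential clustering: returns one segment index per pixel."""
    references = []  # first pixel seen for each segment
    labels = []
    for pixel in pixels:
        for index, reference in enumerate(references):
            if spectral_angle(pixel, reference) <= max_angle:
                labels.append(index)
                break
        else:
            references.append(pixel)  # no segment is close enough: start a new one
            labels.append(len(references) - 1)
    return labels
```

Because the function works on vectors of any length, the same sketch applies unchanged to more than three bands.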

Interest points by uncommon mapping

Although this is not the focus of the current paper, this exploration algorithm of ‘interest points by uncommon mapping’ was tested further during both field missions described in this paper. In previous field missions, this was the only algorithm that was tested and evaluated (McGuire et al. 2004a, 2005). In summary, an uncommon map is made for each of the three bands of a colour HSI image, with the image segments that have the fewest pixels being given a numerical value corresponding to high uncommonness, and the image segments with the most pixels being given a numerical value corresponding to low uncommonness. Three uncommon maps are the result of this process. The three uncommon maps are summed together to produce an interest or saliency map. The interest map is then blurred with a smoothing operator in order to search for clusters of interesting pixels instead of solitary interesting pixels. The three highest peaks in the blurred interest map are identified and reported as ‘interest points’ to the human user.
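The uncommon-mapping pipeline can be sketched in Python as follows. The sketch assumes that uncommonness is scored linearly as one minus the fraction of the image covered by a segment, that the smoothing operator is a 3×3 box blur, and that the highest-valued pixels stand in for a proper peak search. These choices, and the names `uncommon_map`, `box_blur` and `interest_points`, are illustrative assumptions rather than the NEO implementation.

```python
from collections import Counter


def uncommon_map(labels):
    """Score each pixel by how rare its segment is (1 = rare, 0 = fills the image)."""
    counts = Counter(value for row in labels for value in row)
    total = sum(counts.values())
    return [[1.0 - counts[value] / total for value in row] for row in labels]


def box_blur(grid):
    """Average each cell with its neighbours in a 3x3 window clipped at the edges."""
    height, width = len(grid), len(grid[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [grid[j][i]
                      for j in range(max(0, y - 1), min(height, y + 2))
                      for i in range(max(0, x - 1), min(width, x + 2))]
            blurred[y][x] = sum(window) / len(window)
    return blurred


def interest_points(band_labels, n_points=3):
    """Sum the per-band uncommon maps, blur, and return the top (row, col) cells."""
    maps = [uncommon_map(labels) for labels in band_labels]
    height, width = len(maps[0]), len(maps[0][0])
    interest = [[sum(m[y][x] for m in maps) for x in range(width)]
                for y in range(height)]
    blurred = box_blur(interest)
    ranked = sorted(((blurred[y][x], y, x)
                     for y in range(height) for x in range(width)), reverse=True)
    return [(y, x) for _, y, x in ranked[:n_points]]
```

Here `band_labels` is a list of three segment-label grids, one per band. A small rare segment in a corner of an otherwise uniform image yields the top interest point inside that segment.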

Novelty detection

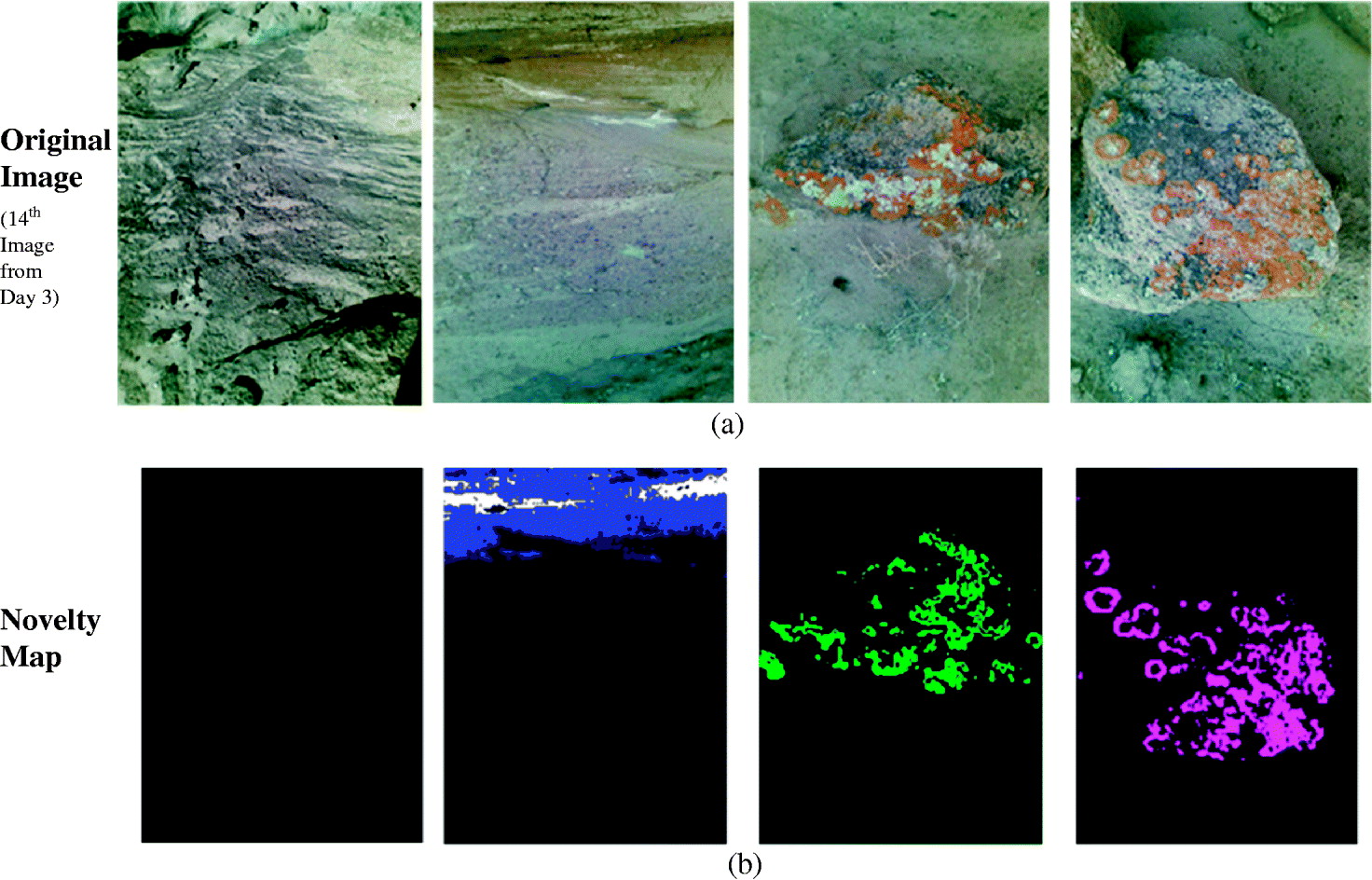

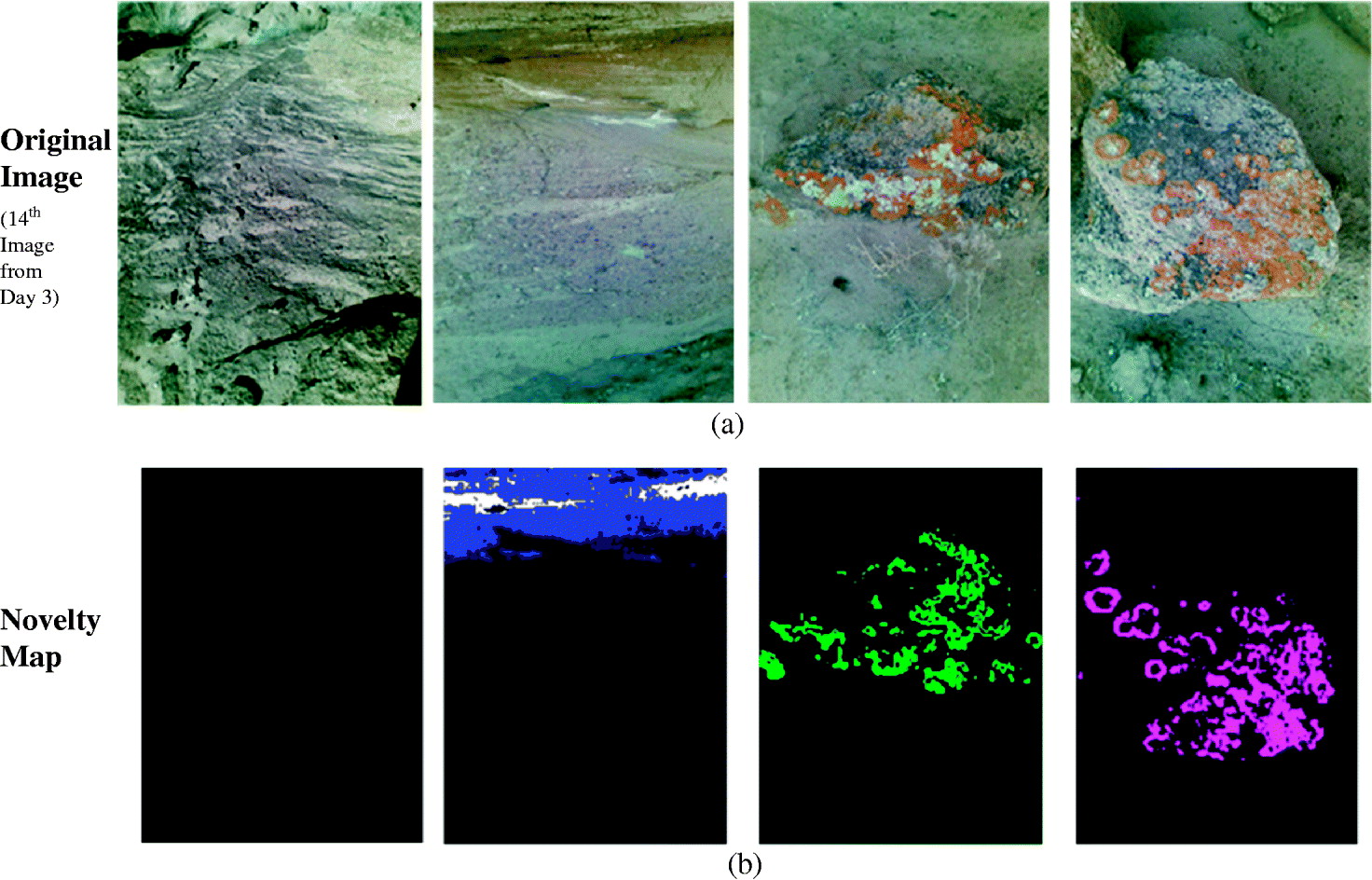

By utilizing the three-colour image segmentation maps for an image, we compute mean values for the hue, saturation and intensity (<H>, <S>, <I>) for each segment of each image that is acquired. This vector of three real numbers is then converted to a vector of three 6-bit binary numbers in order to feed it into an 18-neuron Hopfield neural network, as developed for novelty detection by Bogacz et al. (1999, 2001) and coded into NEO for this project. If the interaction energy of the 18-vector with the Hopfield neural network is high, then the pattern is considered to be novel and is then stored in the neural network. Otherwise, the pattern has a low familiarity energy, is considered to be familiar (not novel) and is not stored in the neural network. The novelty detection threshold is fixed at −N/4 = −4.5, as prescribed by Bogacz et al. (1999, 2001). See Fig. 1 for a summary and Fig. 2 for an offline example computed from data from the red-beds in Riba de Santiuste in Guadalajara, Spain. The familiar segments of the segmented image are coloured black, and the novel segments of the segmented image retain the colours that were used in the segmented image. In so doing, a quick inspection of the novelty maps allows the user to see what the computer considers to be novel (at least based upon this simple colour prescription without texture). See Fig. 3 for an example of the real-time novelty detection with the digital microscope during the Rivas Vaciamadrid mission in September 2005. As shown in Fig. 3, red-coloured sporing bodies of lichens were identified to be novel on the 18th image of the mission, and similar red-coloured sporing bodies of lichens were found to be familiar on the 19th image of the mission. This is ‘fast learning’.
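The novelty test can be sketched in Python under a few stated assumptions: the mean H, S and I values are scaled to the range 0 to 1, each is quantized to a plain 6-bit binary number whose bits are mapped to −1/+1, the network uses Hebbian weights scaled by 1/N with no self-connections, and the energy takes the standard Hopfield form E = −½ Σ w_ij x_i x_j. The class `NoveltyDetector` and the function `encode` are hypothetical names, not the NEO code.

```python
def encode(h, s, i, bits=6):
    """Quantize three values in [0, 1] to `bits` bits each, as a -1/+1 pattern."""
    pattern = []
    for value in (h, s, i):
        level = min(int(value * (1 << bits)), (1 << bits) - 1)
        for shift in reversed(range(bits)):
            pattern.append(1 if (level >> shift) & 1 else -1)
    return pattern


class NoveltyDetector:
    """Hopfield-style familiarity discrimination with threshold -N/4."""

    def __init__(self, n=18):
        self.n = n
        self.weights = [[0.0] * n for _ in range(n)]
        self.threshold = -n / 4.0  # -4.5 for N = 18

    def energy(self, x):
        """E = -1/2 * sum_ij w_ij x_i x_j (low energy means familiar)."""
        return -0.5 * sum(self.weights[i][j] * x[i] * x[j]
                          for i in range(self.n) for j in range(self.n))

    def store(self, x):
        """Hebbian update, scaled by 1/N, without self-connections."""
        for i in range(self.n):
            for j in range(self.n):
                if i != j:
                    self.weights[i][j] += x[i] * x[j] / self.n

    def observe(self, x):
        """Return True if x is novel; novel patterns are stored immediately."""
        novel = self.energy(x) > self.threshold
        if novel:
            self.store(x)
        return novel
```

In this sketch an empty network has zero energy for any pattern, so the first observation is always novel. A pattern that has been stored once has an energy of −(N−1)/2 = −8.5, which lies below the threshold, so a repeat of the same colour is familiar after a single presentation, in line with the 'fast learning' described above.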

Fig. 1. High-level pseudo program for the current novelty detection module of the Cyborg Astrobiologist. The number of neurons in the Hopfield neural network is N=18, corresponding to a threshold of −4.5 for familiarity energy.

Fig. 2. An example of colour-based novelty detection, using two images from an image sequence from a previous mission to Riba de Santiuste, processed in an offline manner with colour-based image segmentation and colour-based novelty detection. We have not yet implemented texture- and shape-based novelty detection. These images from Riba de Santiuste are approximately 0.30 m wide.

Fig. 3. Demonstration of real-time colour-based novelty detection with a field-capable digital microscope at Rivas Vaciamadrid. Image #1 was acquired by the panoramic camera prior to switching the input to be from the digital microscope, and this image corresponds to a cliff face exposure of about 10 m in width. The bottom three images acquired with the digital microscope (images #18, #19 and #23) are about 0.01 m wide. In image #18, the sporing bodies of lichens are identified as being novel, whereas in image #19, similar red sporing bodies are regarded as familiar. Black and orange lichens are also regarded as novel in image #23. See the text for discussion.

An alternative, complementary direction for the development of the Cyborg Astrobiologist project (in addition to the current project of novelty detection) would be to direct the computer to identify units of similar colour to a unit of a user-prescribed colour. When extended to multispectral or hyperspectral imaging cameras, this would allow the search for minerals of interest.

Hardware

Wearable computer

In prior articles in this journal, the technical specifications of the wearable Cyborg Astrobiologist computer system have been described (McGuire et al. 2004a, 2005). It consists of PC hardware with special properties to be both power saving and worn on the explorer's body. These special properties include an energy-efficient computer, a keyboard and trackball attached to the human's arm and a head-mounted display. We also use a colour video camera connected to the wearable computer by a FireWire communications cable, which is mounted on a tripod. The mounting on the tripod was necessary to reduce the vibrations in the images and to increase the repeatability of pointing the camera at the same location in the geological outcrops.

For the field experiments at Rivas Vaciamadrid discussed in this paper, the wearable computer system has been extended by having the option to replace the colour video camera with a universal serial bus (USB)-attached digital microscope.

Digital microscope

The digital microscope for the Rivas Vaciamadrid mission was chosen as an off-the-shelf USB microscope (model: Digital Blue QX5), which is often used for children's science experiments, but with sufficient capacity for professional scientific studies (e.g. King & Petruny 2008). This microscope has three magnifications (10×, 60×, 200×) and worked fine with the wearable computer, allowing contextual images of ~1 mm diameter sporing bodies of lichens. It imaged well in natural reflective lighting in the field. A small tripod was added to the microscope to facilitate alignment and focusing in the field. As a result of the field experience at Rivas Vaciamadrid in September 2005, we recommend that future preparatory work with the digital microscope should involve the development of a robotic alignment-and-focusing stage for the microscope. This would save considerable time in the field, whereas the alignment and focusing were done manually during the Rivas mission.

Phone camera

The phone camera for the mission to the MDRS in Utah was chosen as an off-the-shelf mobile phone camera (model: Nokia 3110 classic, with 1.3 megapixels), which is used quite commonly in everyday personal and professional communication. This phone camera has Bluetooth capabilities, as well as standard mobile phone communication capabilities, via the mobile-phone communication towers. The camera in the phone camera has 480×640 pixels, and the images are transmitted by Bluetooth in lossy JPEG format. This particular camera turned out commonly to have a slight (<5–10%) enhancement in the central ~50% of the image (relative to the edges of the image) in the red channel of the RGB images. This issue was not noticed for the green and blue channels, and our novelty detection software handled the red-channel enhancement rather robustly.

Netbook computer

For the mission to the MDRS, a small netbook computer was chosen for the automated processing of the phone-camera images. The ‘ASUS Eee PC 901’ computer is noted for its combination of a lightweight, power-saving processor, solid-state disk drive and relatively low cost. It features an Intel Atom ‘Diamondville’ central processing unit (CPU) clocked at 1.6 GHz, Bluetooth and an 802.11n Wi-Fi connection. Proper power management software and a six-cell battery enable battery life of up to seven hours. The computer is ‘paired’ with the mobile phone camera by the Bluetooth stack in such a way that the images are transferred automatically to a certain folder, which is observed by the automation software. As the ‘Class 1’ Bluetooth connection allows transmission distances of up to 100 m, the computer can either be carried by the human who is operating the phone camera, by another nearby colleague, or be stationary in, for instance, a nearby vehicle.

Bluetooth and automation

The communications and automation software between the phone camera and the processing computer can handle images with both a standard mobile phone network (Borg et al. 2003; Farrugia et al. 2004; Bartolo et al. 2007) and via Bluetooth communications (this work). The Bluetooth communication and automation software between the phone camera and the computer (netbook) was programmed in the MATLAB language and then compiled for use in the field. The MATLAB program uses: (a) Microsoft Windows system commands and MATLAB internal commands to control manipulation and conversion for the image files; (b) menu macro commands to send and receive images via Bluetooth; and (c) a ‘spawn call’ of the NEO program for the computer vision. The MATLAB program allows the user to choose to use either the novelty detection or the interest-point detection from uncommon mapping, and it also allows the user to set the novelty detection neural-network memory to ‘zero’. Future work with the Bluetooth automation may involve using a Bluetooth Application Programming Interface (API) instead of the MATLAB menu macro commands, in order to speed up the communications.
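The folder-observation step of such an automation loop can be sketched in Python. This is not the compiled MATLAB program used in the field. It only illustrates polling a folder for newly arrived JPEG files and handing each one to an analysis callback, which in the field system would correspond to the spawn call of NEO. The names `new_images` and `process_incoming` and the callback `analyse` are hypothetical.

```python
import os


def new_images(folder, seen, extensions=(".jpg", ".jpeg")):
    """Return paths of image files in `folder` not yet listed in `seen`.

    Files are returned in name order and added to `seen`, so a second call
    reports only files that arrived in the meantime.
    """
    fresh = []
    for name in sorted(os.listdir(folder)):
        if name.lower().endswith(extensions) and name not in seen:
            seen.add(name)
            fresh.append(os.path.join(folder, name))
    return fresh


def process_incoming(folder, seen, analyse):
    """Run the `analyse` callback once on every newly arrived image."""
    return [analyse(path) for path in new_images(folder, seen)]
```

A field loop would call `process_incoming` repeatedly with a short pause between calls, keeping the same `seen` set for the whole mission.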

Field sites

Rivas Vaciamadrid in Spain

On the 6th of September, 2005, two of the authors (McGuire and Souza-Egipsy) tested the wearable computer system for the fifth time at the Rivas Vaciamadrid geological site, which is a set of gypsum-bearing southward-facing stratified cliffs near the ‘El Campillo’ lake of Madrid's Southeast Regional Park (Castilla Cañamero 2001; Calvo et al. 1989; IGME 1975), outside the suburb of Rivas Vaciamadrid. This work involved a digital microscope and a novelty detection algorithm. Prior work at Rivas Vaciamadrid by our team is described in McGuire et al. (2004a):

‘The rocks at the outcrop are of Miocene age (15–23.5 Myrs before present), and consist of gypsum and clay minerals, also with other minor minerals present (anhydrite, calcite, dolomite, etc.). These rocks form the whole cliff face which we studied, and they were all deposited in and around lakes with high evaporation (evaporitic lakes) during the Miocene. They belong to the so-called Lower Unit of the Miocene. Above the cliff face is a younger unit (which we did not study) with sepiolite, chert, marls, etc., forming the white relief seen above from the distance, which is part of the so-called Middle Unit or Intermediate Unit of the Miocene. The color of the gypsum is normally grayish, and in-hand specimens of the gypsum contain large and clearly visible crystals.

We chose this locality for several reasons, including:

• It is a so-called ‘outcrop’, meaning that it is exposed bedrock (without vegetation or soil cover);

• It has distinct layering and significant textures, which will be useful for this study and for future studies;

• It has some degree of color differences across the outcrop;

• It has tectonic offsets, which will be useful for future high-level analyses of searching for textures that are discontinuously offset from other parts of the same texture;

• It is relatively close to our workplace;

• It is not a part of a noisy and possibly dangerous construction site or highway site.

The upper areas of the chosen portion of the outcrop at Rivas Vaciamadrid were mostly of a tan color and a blocky texture. There was some faulting in the more layered middle areas of the outcrop, accompanied by some slight red coloring. The lower areas of the outcrop were dominated by white and tan layering. Due to some differential erosion between the layers of the rocks, there was some shadowing caused by the relief between the layers. However, this shadowing was perhaps at its minimum for direct lighting conditions, since both expeditions were taken at mid-day and since the outcrop runs from East to West. Therefore, by performing our field-study at Rivas during mid-day, we avoided possible longer shadows from the sun that might occur at dawn or dusk’.

Mars Desert Research Station in Utah

For the last two weeks of February 2009, two of the authors (Gross and Wendt) tested the Bluetooth-enabled phone-camera system with the novelty detection algorithm at the MDRS field site in Utah (see Figs 4–7). The MDRS is located in the San Rafael Swell of Utah, 11 kilometres from Hanksville in a semi-arid desert. Surrounding the MDRS habitat, the Morrison Formation represents a sequence of Late Jurassic sedimentary rocks, also found in large areas throughout the western US. The deposits are known to be a famous source of dinosaur fossils in North America. Furthermore, the Morrison Formation was a major source for uranium mining in the US. The formation is named after Morrison, Colorado, where Arthur Lakes discovered the first fossils in 1877. The sequence is composed of red, greenish, grey or light grey mudstone, limestone, siltstone and sandstone, partially inter-bedded by white sandstone layers. Radiometric dating of the Morrison Formation shows ages of 146.8±1 Ma (top) to 156.3±2 Ma (base) (Bilbey 1998).

Fig. 4. (a) The MDRS in Utah. (b) Two ‘astronauts’ exploring a stream near the MDRS.

Fig. 5. (a) Panoramic view of the Morrison cliffs near the MDRS. (b) Two images of the Cyborg Astrobiologist exploring the Morrison cliffs equipped with a Bluetooth-enabled phone camera and a netbook. Note the impressive mobility (relative to many robotic astrobiologists) in the gully on the right image.

Fig. 6. Two close-up views ((a) and (b)) of the Cyborg Astrobiologist in action at the MDRS, together with an image (c) acquired by the Cyborg Astrobiologist with the Astrobiology phone camera at the location in (b). In other tests at the MDRS, the Cyborg Astrobiologist, when suited as an astronaut, was able to successfully, but less robustly, operate the Bluetooth-enabled phone camera (phone-camera operation was limited by the big-finger problem of the gloved astronaut).

Fig. 7. Two further images of the Cyborg Astrobiologist using the Bluetooth-enabled Astrobiology phone camera in the Utah desert. Shading of the phone-camera screen was sometimes required in order to view the details.

The Morrison Formation is divided into four subdivisions. From the oldest to the most recent they are the Windy Hill Member (shallow marine and tidal flat deposits), the Tidwell Member (lake and mudflat deposits), the Salt Wash Member (terrestrial deposition, semi-arid alluvial plain and seasonal mudflats) and the Brushy Basin Member (river and saline/alkaline lake deposits). The Cyborg system was tested on two of the Members, the Salt Wash and the Brushy Basin Members, once deposited in swampy lowlands, lakes, river channels and floodplains. The Brushy Basin Member is much finer grained than the Salt Wash Member and is dominated by mudstone rich in volcanic ash. The most significant difference of the Brushy Basin Member is the reddish colour, whereas the Salt Wash Member has a light, greyish colour. The deposits were accumulated by rivers flowing from the west into a basin that contained a giant, saline, alkaline lake called Lake T'oo'dichi' and extensive wetlands that were located just west of the Uncompahgre Plateau. The large alkaline, saline lake, Lake T'oo'dichi', occupied a large area of the eastern part of the Colorado Plateau during the deposition. The lake extended from near Albuquerque, New Mexico, to near the site of Grand Junction, Colorado, and occupied an area that encompassed the San Juan and ancestral Paradox basins, making it the largest ancient alkaline, saline lake known (Turner and Fishman 1991). The lake was shallow and frequently evaporated. Intermittent streams carried detritus from source areas to the west and southwest far out into the lake basin. Prevailing westerly winds carried volcanic ash to the lake basin from an arc region to the west and southwest (Kjemperud et al. 2008).
Today, the rough and eroded surface of the Brushy Basin and the Salt Wash Members in the area around the MDRS shows a variety of different morphologic features, making it ideal for testing the Cyborg Astrobiologist System.

A brief summary of the characteristics of the Brushy Basin and Salt Wash Members of the Morrison Formation is given here:

— colour variations within one unit due to changing depositional environment and/or volcanic input;

— diverse textures of the sediment surface (popcorn texture, due to bentonite);

— laminated sediment outcrops;

— eroded boulder fields in the Salt Wash Member;

— cross bedding in diverse outcrops;

— interbedded gypsum nodules;

— lichens and endolithic organisms;

— scarce vegetation.

Results

The main results of this development and testing are three-fold. Firstly, we have two rather robust systems (the wearable computer system with a digital microscope and the Bluetooth-enabled netbook/phone-camera system) that have different complementary hardware capabilities. The wearable computer system can be used either when advanced imaging/viewing hardware is required or when the most rapid communication is required. The netbook/phone-camera system can be utilized when higher mobility or ease-of-use is required. Secondly, our novelty detection algorithm and our uncommon-mapping algorithm are also both rather robust, and ready to be used in other exploration missions here on Earth, as well as being ready to be optimized and refined further for exploration missions on the Moon and Mars. Such further optimizations and refinements could include: optimization for speed both of computation and of communication, the incorporation of texture (for example, Freixenet et al. 2004) into the novelty detection and uncommon-mapping, the extension to more than three bands of colour information (i.e., multispectral image sensors) and improvements to the clustering used in the image-segmentation module, etc. Thirdly, the NEO graphical-programming language is a mature and easy-to-use language for programming robust computer-vision systems. This observation is based upon over eight years of experience of using NEO on this project (McGuire et al. 2004a,b, 2005; Bartolo et al. 2007; and the current work) and on the predecessor GRAVIS project (McGuire et al. 2002; Ritter et al. 1999, 2002).

Results at Rivas Vaciamadrid

We show one result for Rivas Vaciamadrid in Fig. 3. The deployment of the digital microscope is shown on the far left.

The four rows of the 4×4 sub-array of images represent selected images from the mission to Rivas Vaciamadrid, with the earliest images at the top and the latest images at the bottom.

The four columns of the 4×4 sub-array of images (from left to right) are:

1. the original acquired images from the panoramic camera and the digital microscope;

2. the full-colour image segmentation (using spectral-angle matching);

3. uncommon maps from the full-colour image segmentation;

4. novelty maps from the sequence of full-colour segmented images.

Of particular note from this series of images are the second to fourth rows of this sub-array of images. In the second row, corresponding to the 18th image in the image sequence, we have imaged the red sporing bodies of lichen for the first time during this mission. Each of the sporing bodies is approximately 1 mm in diameter. The uncommon map for this image finds the sporing bodies to be relatively uncommon coloured regions of the image. Impressively, the novelty map for this image finds the red sporing bodies to be the nearly unique areas of this image that are novel. The other area that was determined to be novel was a small area of brownish rock in the upper right-hand corner of the image, beneath the white-coloured parent body of the lichen. More impressively, the novelty map for the image in the third row, in the 19th image in the image sequence, does not find these red sporing bodies to be novel. The red sporing bodies have been observed previously, so the colours of the sporing bodies are now familiar to the Hopfield neural network. The neural network has learned these colours after only one image. In the fourth row (23rd image of the image sequence), the Hopfield neural network detects some black and orange lichens as also being novel.

Results at the Mars Desert Research Station

We show some of the results for the mission to the MDRS in Figs 8–12. The first images were taken at typical outcrops of the sediments with so-called popcorn structures (see Fig. 8). These structures are caused by the presence of bentonite in the sediments. Small channels exposed a cross-section through the stratigraphic sequence, so we imaged the ground through these diverse small channels and further up the geologic sequence. The system robustly detected colour changes from grey to red and white, with the novelty map lighting up when new colours were observed. In addition to this, the system recognized different structures as being novel, such as boulders of different colours and shadows. Generally, a terrain of uniform colour was completely familiar to the novelty detection system after three to six pictures (see Fig. 9). Objects whose colours were not familiar to the system were detected as being novel without exception (Figs 9–11). By far the best results of the Cyborg Astrobiologist were obtained from lichens and cross-cutting layerings of gypsum or ash (Figs 10 and 11). Lichens were detected very accurately as being novel, whether they were in the shadow or in bright sunlight, even after some prior observations of lichens of similar colours. During the tests, a library of pictures was developed and categorized for further investigations and instrument tests, for example in processing quality and recognition experiments. The library consists of 259 images of the desert landscape, most of them without vegetation and some with soils at different humidity levels. A subset of thumbnails of images from this library is shown in Fig. 8.

Fig. 9. The sequence of novelty maps acquired during the first two days of the tests at the MDRS in Utah. These novelty maps correspond to the image sequence in Fig. 8. Figure 10 shows expanded views of several images in this sequence. The coloured regions are the novel regions in this image sequence. The black regions are the familiar regions in the image sequence. See text for details.

Fig. 10. Examples of successful novelty detection during the field tests (Day #2). See the lower half of Figs 8 and 9 for the sequencing. The system recognized the colours in image #31 as known and returned a black image. Image #40 shows a mudstone outcrop, a colour unknown to the system. The returned image indicates the novelty in cyan and magenta. Image #53 shows yellow to orange lichen, growing on sandstone. The system clearly identifies the lichen as novel, whereas the surrounding rock is known and therefore coloured black in the resulting image.

Fig. 11. (a) Zoom-in on four of the images from the image sequence, from Day #3 at the MDRS. The widths of these four images are about 2, 4, 0.4 and 0.3 m, from left to right. (b) Zoom-in on the corresponding novelty maps (from Fig. 9). Note that the Cyborg Astrobiologist identified as familiar the ‘normally’ coloured image on the left, and it identified as novel the yellow-, orange- and green-coloured lichen in the three images on the right. The system sometimes requires several images before it recognizes a colour as being familiar.

Fig. 12. From left to right: images 86, 87, 88 and 89 from day 3 of the MDRS test campaign. The widths of these images are approximately 3, 0.3, 50 and 80 m, all measured half-way up the image. Three interest points (based upon uncommon mapping) are overlain upon each image in real time and transmitted back to the phone camera. Image 86 focuses on a piece of petrified wood. Image 87 focuses on lichen. Image 88 focuses on a cluster of aeolian or water-eroded features. Image 89 focuses on a channel.

The novelty detection system robustly separated images of rock or biological units that it had already observed from images of surface units that it had not yet observed. Sometimes, several images are required before the system learns that a certain colour has been observed before (see Fig. 11). Nonetheless, this shows that colour information can be used successfully to recognize familiar surface units, even with rather simple camera systems, such as a mobile-phone camera. For remotely operated exploration systems, this ability might be a great advantage due to its simplicity and robustness.

The hardware and software performed very well and were very stable. Some problems emerged when operating the system with the bulky gloves of the extravehicular activity (EVA) suit and when reading the phone-camera display in bright light. Further problems arose from cast shadows in rough terrain or when the sun was low: the system detected those sharp contrast transitions as novel.

The uncommon-mapping system for the generation of interest points (McGuire et al. 2004a, 2005) was also tested again at a few locations. We targeted broad scenes (see Fig. 12) in order to test the landscape capability of the system with the goal of identifying, for example, obstacles or layer boundaries that could stand in the way of a rover.

Summary and future work

We have developed a novelty detection algorithm for the robotic exploration of geological and astrobiological field sites and tested it in Spain and Utah, using both a field-capable digital microscope connected to a wearable computer and a hand-held phone camera connected to a netbook computer via Bluetooth.

The novelty detection algorithm can detect, for example, small features such as lichens as novel aspects of the image sequence in semi-arid desert environments. The algorithm is currently based upon a Hopfield neural network that stores average HSI colour values for each segment of an image segmented with full-colour segmentation. As implemented here, it can learn familiar colour features, sometimes from a single instance, although several instances are sometimes required. In our field tests, the algorithm never required many instances to learn familiar colour features.
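The idea of storing segment colours in a Hopfield network and testing new segments for familiarity can be illustrated with a minimal sketch. It is not the implementation used in the field tests. The colour encoding (`encode_hsi`, which quantizes each HSI channel and maps the result to a pseudo-random bipolar pattern), the network size, the number of bins and the energy threshold of -N/4 are all assumptions made for illustration. The sketch uses Hebbian storage and a Hopfield energy test in the spirit of familiarity discrimination.

```python
import numpy as np

N_UNITS = 256  # number of bipolar units (hypothetical choice)
N_BINS = 8     # quantization bins per HSI channel (hypothetical choice)


def encode_hsi(h, s, i, n_units=N_UNITS, n_bins=N_BINS):
    """Map a mean HSI colour (each channel in [0, 1]) to a +/-1 pattern.

    Hypothetical encoding: the quantized colour seeds a pseudo-random
    pattern, so colours in the same bins give identical patterns and
    colours in different bins give nearly orthogonal ones.
    """
    bins = [min(int(c * n_bins), n_bins - 1) for c in (h, s, i)]
    rng = np.random.default_rng(bins)
    return rng.choice([-1.0, 1.0], size=n_units)


class HopfieldNoveltyDetector:
    """Hebbian Hopfield memory with an energy-based familiarity test."""

    def __init__(self, n_units=N_UNITS):
        self.n_units = n_units
        self.weights = np.zeros((n_units, n_units))

    def energy(self, pattern):
        # Hopfield energy: about -N/2 for a stored pattern, near 0 otherwise.
        return -0.5 * float(pattern @ self.weights @ pattern)

    def is_novel(self, pattern):
        # Assumed threshold halfway between the two expected energy levels.
        return self.energy(pattern) > -self.n_units / 4.0

    def learn(self, pattern):
        # One-shot Hebbian update with no self-connections.
        self.weights += np.outer(pattern, pattern) / self.n_units
        np.fill_diagonal(self.weights, 0.0)

    def observe(self, pattern):
        """Report whether the pattern is novel, and store it if so."""
        novel = self.is_novel(pattern)
        if novel:
            self.learn(pattern)
        return novel


detector = HopfieldNoveltyDetector()
red_segment = encode_hsi(0.0, 0.9, 0.5)
print(detector.observe(red_segment))  # True: first sighting is novel
print(detector.observe(red_segment))  # False: familiar after one exposure
```

Because this sketch always stores a novel pattern in one step, it reproduces only the single-instance learning described above. It does not reproduce the cases in which the field system needed several images before a colour became familiar.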

In many machine-learning applications, learning a piece of information often requires the presentation of multiple, or even many, instances of similar pieces of information, although single-instance learning is possible in certain situations with particular algorithms (Aha et al. 1991; Maron & Lozano-Perez 1998; Zhang & Goldman 2002; Chen & Wang 2004). Humans are sometimes capable of learning a new piece of information with only a single instance (Read 1983), although multiple instances are often required (Gentner & Namy 2006). Our implementation of the novelty detection algorithm (Bogacz et al. 1999, 2001) is therefore competitive with both humans and other machine-learning systems, at least in terms of training requirements, for this application of the real-time detection of unfamiliar colours in images from a semi-arid desert.

In our next efforts, we intend to (a) extend this processing to include robust segmentation, based upon both colour and texture (for example, Freixenet et al. 2004), as well as novelty detection based upon both colour and textural features, (b) optimize the current system for speed of computation and communication and (c) extend our system to more sophisticated cameras (i.e., multispectral cameras).

In the near future, we will test the Bluetooth-enabled phone camera and novelty detection system at additional field sites with different types of geological and astrobiological images to those studied thus far.

Acknowledgements

We would like to acknowledge the support of other research projects that helped in the development of the Cyborg Astrobiologist. The integration of the camera phone with an automated mail watcher was carried out under the ‘Innovative Early Stage Design Product Prototyping’ (InPro) project, supported by the University of Malta under research grant (R30) 31-330. Many of the extensions to the GRAVIS interest-map software, programmed in the NEO language, were made as part of the Cyborg Astrobiologist project from 2002 to 2005 at the Centro de Astrobiologia in Madrid, Spain, with support from the Instituto Nacional de Técnica Aeroespacial (INTA) and Consejo Superior de Investigaciones Científicas (CSIC) and from the Spanish Ramon y Cajal program.

We thank the Mars Society for access to their unique facility in Utah, the European Space Agency (ESA)/European Space Research and Technology Centre (ESTEC) for facilitating the mission at the MDRS in February 2009 and Gerhard Neukum for his support of the Utah mission in February 2009.

PCM acknowledges support from a research fellowship from the Alexander von Humboldt Foundation, as well as from a Robert M. Walker fellowship in Experimental Space Sciences from the McDonnell Centre for the Space Sciences at Washington University in St. Louis.

PCM is grateful for conversations with: (a) Peter Halverson, which were part of the motivation for developing the Astrobiology phone camera; (b) Javier Gómez-Elvira and Jonathan Lunine, which were part of the motivation for developing the Cyborg Astrobiologist project; and (c) Babette Dellen, concerning the novelty detection neural-network algorithm.

CG and LW acknowledge support from the Helmholtz Association through the research alliance ‘Planetary Evolution and Life’.