Introduction

An obvious characteristic shared by sign language and co-speech gesture production is that they are expressed in the same modality (visual-gestural), relying primarily on the same articulators (the hands). Studies with hearing individuals who acquire American Sign Language (ASL) and English from birth (referred to as native or early signers) suggest that sign language experience impacts their co-speech gesture patterns. For example, when describing events in English, these ‘bimodal’ bilinguals produce more iconic gestures than monolingual non-signers, and more sign-like gestures, such as gestures that convey character perspective, which are similar to signs produced with role-shift in ASL (i.e., adopting the role of characters in a narrative by producing the facial expressions and/or body movements of the characters; Casey & Emmorey, 2009). Similarly, Gu, Zheng, and Swerts (2018) recently reported that hearing bimodal bilinguals fluent in Mandarin and in Chinese Sign Language (CSL) produced different time-related co-speech gestures compared to non-signing Mandarin speakers, which reflected the spatial timelines used in CSL.

Casey and Emmorey (2009) also found that, compared to non-signers, ASL–English bilinguals used a wider variety of handshapes in their co-speech gestures and produced more gestures with unmarked handshapes (i.e., handshapes that are frequent across sign languages and acquired early by signing children). These ASL–English bilinguals also produced a greater variety of both marked and unmarked handshapes compared to non-signers (marked handshapes are less common, acquired later, and have more phonological restrictions on their occurrence; Brentari, 1998). Casey and Emmorey (2009) hypothesized that the greater variety of handshapes observed in the co-speech gestures of bimodal bilinguals occurs because sign and gesture share the manual modality. Specifically, ASL–English bilinguals have experience using a variety of handshapes to express meaning with lexical signs, and thus they have a wider variety of handshape types available to them when producing meaningful co-speech gestures.

ASL–English bilinguals also produce clearly identifiable ASL signs when describing events in English, even when they know their addressee has no ASL knowledge (Casey & Emmorey, 2009; Naughton, 1996). Many of these ASL signs have no transparent iconic meaning, and thus would be unrecognizable by a non-signer (e.g., the handshape used to refer to vehicles [the thumb, index, and middle fingers all extended]; the signs NOW, LOOK-FOR – see the website asl-lex.org for videos of these signs). It is unlikely that such manual productions are meant to convey information to listeners who do not know ASL; rather, they appear to represent unintentional intrusions of ASL signs, reflecting a failure to suppress ASL (see also Pyers & Emmorey, 2008).

Even short-term exposure to sign language can influence co-speech gesture production. After one year of ASL study, novice adult signers showed higher iconic gesture production rates and more marked handshape varieties than at the start of the course, but there was no change in the rate of beat or deictic gestures (see Methods for definitions of these gesture types). In contrast, after one year of Romance language instruction, adult learners showed no change in their iconic co-speech gesture rate (Casey, Emmorey & Larrabee, 2012). Despite these different outcomes for ASL and Romance language learners, Casey et al. (2012) found no statistical difference between these groups in iconic gesture rates or number of marked handshape varieties. This finding suggests that short-term L2 ASL exposure does not robustly alter co-speech gesture patterns in adult learners.

Compared to early (native) ASL–English bilinguals, the modest effects on co-speech gesture experienced by novice adult learners may reflect the new learners’ lack of sign language proficiency. It is also possible that the impact of sign language experience depends (at least in part) on the age of acquisition. If age of acquisition is key, adults who become proficient in ASL as a second language (L2 signers) may experience minimal effects on their co-speech gesture, compared to early-exposed signers. On the other hand, if ASL fluency is a key factor influencing co-speech gesture patterns, then long-term exposure to, and use of, ASL, even when acquired in adulthood, should result in more robust co-speech gesture effects than found for novice adult learners. Further, it is possible that sign language experience has a greater impact on co-speech gesture patterns of L2 signers than on early signers. Such an effect might occur because, for L2 learners, the co-speech gesture system, which is already in place when ASL acquisition begins, incorporates newly learned manual articulations of ASL into that existing system. In contrast, child learners who simultaneously acquire ASL and English may develop separate systems for ASL and co-speech gesture (with English).

Whether a second language affects gesture patterns in a first language has not been widely studied. However, research with one group of unimodal bilinguals showed that, when speaking Japanese, native speakers with intermediate knowledge of English patterned more like monolingual English speakers than monolingual Japanese speakers, with respect to the use of character viewpoint (Brown, 2008) and the use of manner of motion gestures (Brown & Gullberg, 2008). Conversely, most studies have shown that L2 learners display traces of their L1 gesture patterns when speaking their L2 (e.g., Choi & Lantolf, 2008; Kellerman & van Hoof, 2003; Negueruela, Lantolf, Rehn Jordan & Gelabert, 2004; Özçalişkan, 2016). Because signed languages are visual-manual languages, when acquired as an L2 they may be more likely to influence L1 co-speech gesture, compared to acquisition of a second spoken language.

Some studies of gesture production in unimodal bilinguals have focused on the rate of co-speech gesture production in L2 compared to L1, testing the hypothesis that bilinguals use gesture to compensate for weaker proficiency in their L2; however, the evidence for this hypothesis is inconsistent (for review see Nicoladis, 2004). Other studies with unimodal bilinguals focused more on the content of co-speech gestures produced in L1 vs. L2 (e.g., whether path or manner gestures are produced; Choi & Lantolf, 2008) or the use of culturally-specific emblems (Gullberg, 2006). No studies (to our knowledge) have explored whether learning a spoken L2 affects the handshape complexity of L1 co-speech gestures. Form complexity (i.e., greater use of marked handshapes) is a domain where acquiring a signed language as an L2 might be more likely to impact L1 co-speech gesture than acquiring a second spoken language. There are approximately 40 distinct handshapes in the ASL phonological inventory, and, as noted for early bimodal bilinguals, the frequent use of such a wide variety of handshapes while signing could increase the variety of handshapes they can exploit to create co-speech gestures. Further, this effect could be considered an example of convergence between two manual systems (sign and co-speech gesture), comparable to convergence effects observed in unimodal bilinguals. For example, French–Dutch bilinguals exhibited a merged semantic system for placement verbs, with speech and gesture patterns that differed from monolinguals' (Alferink, 2015; Alferink & Gullberg, 2014).

In the present study we examined how acquisition of ASL as an L2 in adulthood impacts overall co-speech gesture rate, type of gesture production (the rate of iconic, deictic, or beat gestures), and manual form complexity (e.g., variety of handshapes and handshape markedness) in English (L1). With respect to the type of co-speech gesture, our previous research suggested that ASL acquisition is most likely to impact the rate of iconic, but not deictic, gestures (Casey & Emmorey, 2009; Casey et al., 2012). Here, we compared a group of native ASL–English bilinguals (early signers), a group of skilled, fluent signers who were first exposed to ASL at an average age of 18 years (L2 signers), and a control group of monolingual English speakers (non-signers), as they described cartoon clips to an interlocutor. Our goal was to examine whether fluent L2 signers and early ASL–English bilinguals share similar patterns of co-speech gesture production, and whether their patterns differ from those of non-signers. If so, such a finding would contrast with Casey et al. (2012), who found that the gesture production of adults with a year of ASL instruction did not differ from that of adult non-signers (Romance language learners). This result would also suggest that effects of sign language knowledge on co-speech gesture depend on longer-term sign language experience and proficiency. A second possibility is that ASL experience influences the co-speech gesture patterns of proficient L2 signers more than it does those of early signers. That is, L2 signers might show more robust effects, compared to native signers, because adult ASL learners are more likely to incorporate aspects of ASL into their existing co-speech gesture system than child ASL learners.

A third possibility is that co-speech gesture production is strongly affected only when sign language acquisition occurs early and simultaneously with English acquisition (i.e., native use of ASL and English). If so, then gesture production patterns of fluent L2 adult signers might not differ from those of non-signers, and would resemble those of the adult novice learners in Casey et al. (2012). Under this third account, modest effects for L2 signers would indicate that the co-speech gesture system is somewhat protected from intrusion when acquisition of two manual communication systems is sequential (i.e., co-speech gesture with English first, then ASL as an L2), rather than simultaneous, even with high fluency in ASL.

Method

Participants

Three groups of hearing, native English speakers participated in the study. One group was composed of fluent ASL signers who had learned ASL from birth from their Deaf parents and English from birth from their hearing relatives and surrounding community (early signers; n = 15, M age = 28 years [SD = 8 years]; 8 female); approximately half (n = 7) worked as interpreters. A second group of fluent signers learned ASL as a second language (L2 signers; n = 15, M age = 41 years [SD = 9 years]; 12 female). Participants in this group had their first exposure to ASL at an average age of 18 years (SD = 7), and all had been using ASL for ten or more years (M = 23 years; SD = 8 years) and were working interpreters, indicating a high level of proficiency. Data for the third group of participants were obtained from randomly selected monolingual English speakers (non-signers; n = 15, M age = 21 years [SD = 5 years]; 12 female) from the group of Romance language learners at the beginning (pre-instruction phase) of the Casey et al. (2012) longitudinal study. A one-way analysis of variance (ANOVA) revealed a significant difference in the ages of the three groups (F(2, 42) = 26.0, p < .001). Specifically, early signers and L2 signers were older than the non-signers (early signers vs. non-signers, t(28) = 2.82, p = .01; L2 signers vs. non-signers, t(28) = 7.30, p < .001), and the L2 group was older than the early signers (t(28) = 4.07, p < .001). We address possible effects of age in the Discussion section. No participants were fluent in any spoken language other than English, and none of the signing participants were fluent in any sign language other than ASL. All participants gave informed consent according to SDSU IRB procedures and were paid for participating.

Procedure

To avoid influencing participant responses, we made no mention of gesture prior to or during the experiment. Bilingual participants were told that the purpose of the study was to compare narrative styles across bilinguals; monolingual language-learners were told we were investigating factors that improve language learning. Participants viewed eight clips (scenes) from a seven-minute cartoon known to elicit co-speech gesture (Canary Row; McNeill, 1992). After viewing each individual clip, participants described the clip in English to a confederate interlocutor whom they did not know. Interlocutors interacted naturally with participants (e.g., provided feedback to indicate comprehension). For the signing participants, the interlocutor had no knowledge of ASL, and participants were told this to avoid any possibility of biasing them to use ASL signs in their narratives. For the non-signers, lab members who knew ASL served as interlocutors, but the participants were unaware of this. Since non-signers could not produce ASL signs, there was no concern that the interlocutor could bias them toward sign production in their narratives. All sessions were videotaped for later coding and analysis.

Gesture coding

For consistency, we coded the entire narrative (eight scenes) for ASL sign production and selected the same two scenes for gesture coding that were previously analyzed by Casey et al. (2012). This allowed us to compare gesture and sign production results across studies. For the gesture analyses, one scene shows Tweety (the bird) throwing a bowling ball down a drainpipe and the ball rolling into Sylvester's (the cat's) stomach, and the second scene shows Sylvester swinging on a rope and slamming into a wall next to Tweety's window. We coded participants’ cartoon descriptions for co-speech gesture productions by gesture type (iconic, deictic, beat, conventional, unclear); handshape type (marked, unmarked); handshape variety (the number of different handshapes); and the presence of ASL signs (bilinguals only). All reported gesture production rates reflect the number of gestures per second across narratives. Rates were calculated as the number of gestures produced summed across scenes and divided by the summed lengths of both scenes. Length of cartoon descriptions was measured in seconds, and included the entire time required for participants to complete their description of each clip, without regard to repetition or pauses.
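The rate calculation described above can be sketched in Python. This is a minimal illustration, not the authors' analysis script: the `SceneCoding` class, the `gesture_rate` function, and the example counts and durations are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SceneCoding:
    """Hypothetical record of one coded scene description."""
    n_gestures: int      # gestures coded in this scene
    duration_sec: float  # full description time, pauses and repetitions included


def gesture_rate(scenes):
    """Gestures per second: summed gesture count divided by summed duration."""
    total_gestures = sum(s.n_gestures for s in scenes)
    total_seconds = sum(s.duration_sec for s in scenes)
    if total_seconds <= 0:
        raise ValueError("total description length must be positive")
    return total_gestures / total_seconds


# Invented values for the two coded scenes of one participant.
scenes = [SceneCoding(n_gestures=12, duration_sec=20.0),
          SceneCoding(n_gestures=9, duration_sec=30.0)]
rate = gesture_rate(scenes)  # 21 gestures / 50 seconds = 0.42
mean_of_scene_rates = sum(s.n_gestures / s.duration_sec for s in scenes) / len(scenes)
print(rate, mean_of_scene_rates)
```

Pooling counts and durations before dividing weights each scene by its length, so the result (0.42 here) can differ from the unweighted mean of the two per-scene rates (0.45 here).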

Iconic gestures included those that represented the attributes, actions, or relationships of objects or characters (McNeill, 1992). For example, moving the hands sideways to indicate Sylvester rolling into a bowling alley was classified as an iconic gesture. This category of gestures also included imitations of gestures performed by characters in the cartoon (e.g., moving the index finger from side to side, mimicking Sylvester's gesture in the cartoon). Deictic gestures included pointing gestures produced with a fingertip or with the hand, whether the referent entities were physically present or not (e.g., some deictic gestures were toward the computer screen). We coded non-iconic gestures that bounced or moved in synchronization with speech as beats, which typically accompanied a stressed word and consisted of one or multiple movements. These gesture category assignments were not mutually exclusive – some gestures belonged to more than one category. For example, one participant moved her hands to describe the path along which Sylvester rolled down the street while also bouncing her hand in synchronization with her speech. This was therefore coded as both an iconic gesture and a beat gesture and was included in the rate calculations for both categories of gesture type. We considered any gestures typically used in American culture as conventional gestures, e.g., “well” (a one or two-handed gesture produced with a lax 5 handshape, palm(s) up, and a short outward movement; see Appendix A for illustration of the 5 handshape). One final gesture category, ‘unclear’, included all gestures that did not fit any other category (e.g., a gesture that looked like a beat, but was produced without accompanying speech). Following standard practice and to parallel our previous studies, conventional and unclear gestures were excluded from all except the overall gesture rate analysis.
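Because category assignments were not mutually exclusive, a single gesture can contribute to more than one per-type rate. The Python sketch below illustrates one way to tally this. The function name, the data layout (one set of labels per gesture), and the choice to count each gesture once in the overall rate are assumptions made for illustration, not details given in the coding scheme.

```python
from collections import Counter

# Gesture types that enter the per-type rate analyses.
RATE_TYPES = ("iconic", "deictic", "beat")


def rates_by_type(gestures, total_seconds):
    """Return (per-type rates, overall rate) in gestures per second.

    gestures: list of sets of category labels, one set per coded gesture.
    A gesture labeled both iconic and beat counts toward both per-type rates.
    The overall rate counts every gesture once (an assumption), including
    conventional and unclear gestures.
    """
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    counts = Counter(label for labels in gestures for label in labels)
    per_type = {t: counts[t] / total_seconds for t in RATE_TYPES}
    overall = len(gestures) / total_seconds
    return per_type, overall


# Invented example: five gestures in 50 seconds of description.
coded = [{"iconic"}, {"iconic", "beat"}, {"deictic"}, {"conventional"}, {"unclear"}]
per_type, overall = rates_by_type(coded, total_seconds=50.0)
print(per_type, overall)
```

In this invented example the iconic rate is 2/50, the beat and deictic rates are each 1/50, and the overall rate is 5/50, so the per-type rates need not sum to the overall rate.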

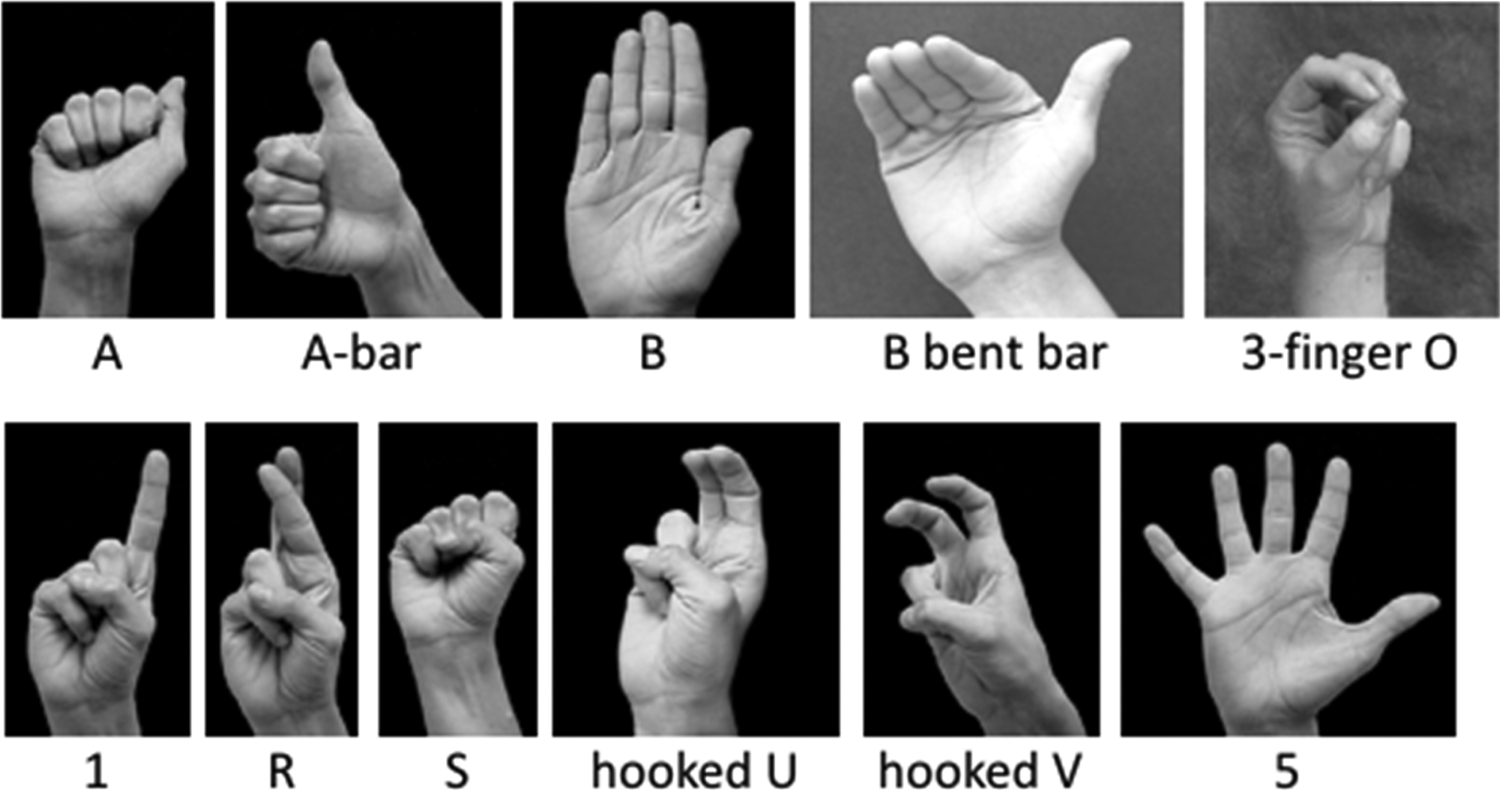

Within the coded gestures, we categorized all handshapes as either marked or unmarked. Unmarked handshapes (i.e., those that are easier to produce and the most common across sign languages) were A, A-bar, B, S, 1, and 5 (see Appendix A), following the cross-linguistic phonological analysis of Eccarius and Brentari (2007). All other handshapes were categorized as marked (i.e., less common and harder to produce), including phonologically distinct variations of unmarked handshapes (e.g., B bent bar; see Appendix A).
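The markedness classification and the two handshape measures (proportion of marked handshapes and handshape variety) can be illustrated with a short Python sketch. The unmarked set is taken from the list above; the function name, the one-handshape-per-gesture data layout, and the example labels for marked handshapes are hypothetical.

```python
# Unmarked handshapes as listed in the text; everything else counts as marked.
UNMARKED = {"A", "A-bar", "B", "S", "1", "5"}


def handshape_summary(handshapes):
    """Summarize one participant's gesture handshapes.

    handshapes: list of handshape labels, one per coded gesture (assumed layout).
    """
    if not handshapes:
        raise ValueError("at least one coded handshape is required")
    marked = [h for h in handshapes if h not in UNMARKED]
    unmarked = [h for h in handshapes if h in UNMARKED]
    return {
        "proportion_marked": len(marked) / len(handshapes),
        "marked_variety": len(set(marked)),      # number of different marked handshapes
        "unmarked_variety": len(set(unmarked)),  # number of different unmarked handshapes
    }


# Invented example; "hooked-V" and "C" are illustrative labels for marked handshapes.
summary = handshape_summary(["5", "5", "B", "1", "hooked-V", "C", "C", "5"])
print(summary)
```

Here three of eight gestures use a marked handshape (proportion .375), with two different marked handshapes and three different unmarked handshapes.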

We coded ASL sign production across the entire narrative (all eight scenes) for consistency with Casey et al. (2012). As in that study, limiting examination of sign production to only the two scenes selected for gesture coding revealed the same pattern of results as found for the entire narrative; however, including only these two scenes yielded too few signs for statistical analysis. ASL signs included identifiable lexical signs (e.g., MEASURE; see Figure 3) and classifier constructions that a non-signer would be unlikely to produce. Classifier constructions are morphologically complex forms in which the handshape can indicate the semantic class of a referent or information about the size and shape of a referent. For example, in ASL, a hooked V handshape is an animal classifier (see Appendix A for illustration). Because a non-signer would not commonly designate an animal with a hooked V handshape, it was coded as a sign. We excluded all signs that were similar to gestures produced by any non-signer in our previous studies (Casey & Emmorey, 2009; Casey et al., 2012). For this reason, we did not code the ASL signs LOOK-AT, TELEPHONE, and WRITE (see the website asl-lex.org for videos of these signs), or the classifier handshape used for movements of people (the one handshape; see Appendix A), as ASL signs.

Intercoder reliability between two trained, independent coders was 97.5% for gesture type (Cohen's kappa = .94). Agreement was 95% for beat gestures, 85% for deictic gestures, and 99% for iconic gestures. For handshape type, intercoder reliability was 95% (Cohen's kappa = .97).
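For readers unfamiliar with the two reliability measures, the Python sketch below shows how percent agreement and Cohen's kappa are computed from two coders' labels. It is a generic illustration with invented labels, not the authors' procedure or data, and the function name is hypothetical.

```python
from collections import Counter


def agreement_and_kappa(coder_a, coder_b):
    """Return (observed agreement, Cohen's kappa) for two equal-length label lists."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of items")
    n = len(coder_a)
    # Proportion of items on which the two coders chose the same label.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Invented labels for ten gestures; the coders disagree on one item.
a = ["iconic"] * 6 + ["beat"] * 2 + ["deictic"] * 2
b = ["iconic"] * 5 + ["beat"] * 3 + ["deictic"] * 2
observed, kappa = agreement_and_kappa(a, b)
print(observed, round(kappa, 3))
```

In this example the coders agree on 9 of 10 items (observed agreement .90), chance agreement is .40, and kappa is (.90 − .40) / (1 − .40), or about .83.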

Following ANOVA or MANOVA (see Results), we conducted post-hoc analyses using one-tailed independent t-tests (or Tukey HSD) for group comparisons where we had directional predictions (i.e., overall manual production rates, iconic gesture rates) and two-tailed independent t-tests for all comparisons where we had no specific directional predictions (i.e., deictic gesture rates, beat gesture rates, handshape types, handshape variety). Post-hoc t-tests are reported as Welch's t when variance between groups was unequal (as determined by Levene's test for equality of variance).
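To illustrate the unequal-variance case, the following Python sketch computes Welch's t and the Welch–Satterthwaite degrees of freedom from summary statistics. The function name `welch_t` is hypothetical, and Levene's test itself is not implemented here. The example inputs are the rounded means and SDs for marked handshape variety reported in the Results (L2 signers vs. non-signers, n = 15 per group), so the output only approximates the reported test.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite df from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of the mean, group 1
    v2 = sd2 ** 2 / n2  # squared standard error of the mean, group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Rounded summary values taken from the Results section of this article.
t, df = welch_t(7.4, 3.4, 15, 3.4, 1.7, 15)
print(round(t, 2), round(df, 1))  # approximately t = 4.08, df = 20.6
```

With these rounded inputs the sketch gives t of about 4.08 on about 20.6 degrees of freedom, close to the reported t(20) = 4.09. The degrees of freedom fall below the 28 of the pooled-variance test because the two group variances differ.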

Results

Cartoon descriptions were significantly longer for L2 signers than early signers and non-signers (L2 signers, M = 101.8 sec, SD = 33.9; early signers, M = 54.0 sec, SD = 20.1; non-signers, M = 52.2 sec, SD = 26.3; F(2, 42) = 15.91, p < .001, ηp² = .43; Tukey HSD, both p values < .001). The description length for the early signers and the non-signers did not differ from each other (p = .98). One early signer did not gesture at all and was excluded from further analysis.
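For a single effect, partial eta squared can be recovered from the F statistic and its degrees of freedom as (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies this identity to the description-length ANOVA reported above; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Description-length ANOVA reported above: F(2, 42) = 15.91.
eta_length = partial_eta_squared(15.91, 2, 42)
print(round(eta_length, 2))  # 0.43
```

The result, about .43, matches the reported effect size.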

Gesture rates

An ANOVA comparing Groups (early ASL signers, L2 signers, and non-signers) on their rates of all manual productions (collapsed across ASL signs and all gesture categories – iconic, deictic, beat, conventional, and unclear) revealed a significant main effect of Group (F(2, 41) = 4.19, p = .022, ηp² = .17; Figure 1). We included ASL signs in this first analysis because although participants were not instructed to use signs, signers can produce ASL signs as co-speech gestures. Post-hoc comparisons revealed that the early and L2 signers had comparable manual production rates (M = .59, SD = .21 for early signers; M = .61, SD = .14 for L2 signers; Tukey HSD, p = .98), and both signing groups had higher manual production rates than non-signers (M = .44, SD = .18; early signers vs. non-signers, p = .028; L2 signers vs. non-signers, p = .017). Thus, although one year of ASL instruction may not significantly affect co-speech gesture production rates in adult learners, relative to sign-naïve English speakers (Casey et al., 2012), our results show that individuals who became fluent L2 signers in adulthood show co-speech gesture production rates that are similar to those who acquired ASL in childhood, leading to higher gesture rates in both groups of fluent signers, relative to non-signing English speakers.

Fig. 1. Average gesture rates (gestures per second) for early ASL signers, L2 ASL signers, and non-signers. The histogram labeled “Total” includes all manual productions (all gestures and ASL signs). Error bars indicate standard error of the mean.

We next examined the effect of bilingual status on iconic, deictic, and beat gestures only. We excluded ASL signs from this analysis to eliminate any possible confound due to the fact that signers had the ability to produce signs as co-speech gestures, whereas non-signers did not. A Group (early signer, L2 signer, non-signer) x Gesture type (iconic, deictic, beat) multivariate analysis of variance (MANOVA) revealed a main effect of Group (F(6, 64) = 3.59, p = .011, Wilks' Λ = .667, ηp² = .18). We examined this effect in more detail by comparing groups for each gesture type separately. Post-hoc t-tests revealed a higher iconic gesture rate for early signers (M = .41, SD = .17) than non-signers (M = .30, SD = .12; t(27) = 1.99, p = .03); and for L2 signers (M = .39, SD = .09) relative to non-signers (t(28) = 2.10, p = .02). Iconic gesture rates were comparable between early and L2 signers (t < 1, p = .59). For deictic gestures, early signers showed a significantly higher rate (M = .10, SD = .05) than non-signers (M = .05, SD = .03; t(24) = 3.27, p = .003). Deictic gesture rates for L2 signers were numerically higher (M = .07, SD = .03) than those of non-signers (M = .05, SD = .03), though this difference did not reach significance (t(25) = 1.91, p = .068). Similar to iconic gesture rates, deictic gesture rates were comparable between early signers and L2 signers (t(25) = 1.75, p = .09). Lastly, for beat gestures, while rates did not differ between early signers (M = .06, SD = .04) and non-signers (M = .04, SD = .02; t(24) = 1.46, p = .163), L2 signers’ beat gesture rates (M = .08, SD = .05) were significantly higher than those of non-signers (t(25) = 2.86, p = .011, unequal variance). We found no difference for beat gesture rates between early signers and L2 signers (t(25) = 1.46, p = .156, unequal variance).

Handshapes

We next examined the effect of bilingual status on the proportion of gestures produced with marked handshapes. For direct comparison with our previous study, we conducted a univariate ANOVA on the proportion of marked handshapes, which revealed a significant main effect of Group, F(2, 41) = 4.14, p = .023, ηp² = .17. Post-hoc t-tests indicated that L2 signers produced a higher proportion of marked handshapes (M = .37, SD = .13) compared to early signers (M = .24, SD = .15; t(27) = 2.47, p = .02) and compared to non-signers (M = .26, SD = .11; t(28) = 2.46, p = .020). Early signers and non-signers showed comparable proportions of marked handshapes (t(27) < 1, p = .63).

To examine the effect of bilingual status on speakers’ handshape repertoire, we examined how many different varieties of marked and unmarked handshapes (i.e., the number of different marked and unmarked handshapes) were produced by each group (Figure 2). We conducted a 3 x 2 omnibus Group (early signers, L2 signers, non-signers) x Handshape type (marked, unmarked) multivariate analysis of variance (MANOVA), with the number of different handshapes as the dependent measure. This analysis revealed a significant Group x Handshape type interaction (F(4, 80) = 4.71, p = .002, Wilks’ Λ = .655, ηp² = .19). We examined the interaction with two separate one-way, univariate ANOVAs: one compared groups for the variety of marked handshapes and the other for the variety of unmarked handshapes. These analyses showed a significant effect of bilingual status on the variety of marked handshapes (F(2, 41) = 8.93, p = .001, ηp² = .30) and the variety of unmarked handshapes (F(2, 41) = 4.24, p = .021, ηp² = .17). For marked handshapes, L2 signers produced significantly more forms (M = 7.4, SD = 3.4) than both early signers (M = 4.6, SD = 2.6; t(27) = 2.50, p = .019) and non-signers (M = 3.4, SD = 1.7; t(20) = 4.09, p = .001, unequal variance). However, early signers and non-signers did not differ on this measure (t(27) = 1.44, p = .161). Thus, acquiring ASL as an L2 in adulthood, rather than ASL fluency alone, appears to increase the variety of marked handshapes used for co-speech gesture. For unmarked handshapes, variety was comparable between early signers (M = 4.4, SD = 1.2) and L2 signers (M = 4.7, SD = .80; t < 1, p = .414), but L2 signers produced significantly more unmarked varieties than non-signers (M = 3.7, SD = 1.1; t(28) = 3.016, p = .005). Early signers produced numerically (but not significantly) more unmarked handshape varieties than non-signers (t(27) = 1.804, p = .082).

Fig. 2. Group averaged counts of different marked and unmarked handshape varieties produced by early ASL signers, L2 ASL signers, and non-signers. The y-axis indicates the number of different co-speech gesture handshapes produced (bars represent standard error of the mean).

ASL sign rates

Lastly, we analyzed the production of ASL signs across the entire narrative (cartoon clips 1–8). Ten early ASL signers (out of 15) and fourteen L2 ASL signers (out of 15) produced at least one ASL sign across all of the cartoon narrative segments. Early signers produced an average of 2.1 signs and a total of 22 ASL tokens, whereas L2 signers produced an average of 8.0 ASL signs and a total of 69 ASL tokens across all scene descriptions. This pattern suggests that L2 signers may have been less able than early signers to suppress signs during speech. The average number of ASL signs per minute was greater in L2 signers (M = .92, SD = .9) than early ASL signers (M = .39, SD = .3), but this difference did not reach significance (t(27) = 1.99, p = .063, unequal variance). Figure 3 provides an example of an ASL sign produced by an L2 ASL signer; the signer produces the ASL lexical sign glossed as MEASURE while speaking the word “calculations” (see Appendix B for a list of all ASL signs that were produced by each group).

Fig. 3. An example of the ASL lexical sign MEASURE produced by an L2 signer with the spoken word “calculations” in the phrase “He's at this drawing board doing some calculations and measurements…”

Discussion

We examined how long-term sign language exposure affects co-speech gesture by comparing gesture production in early ASL–English bilinguals, fluent L2 ASL signers, and monolingual non-signers, as they retold a story depicted in an animated movie. Early and L2 signers gestured at higher rates compared to monolingual non-signers, but not all gesture types were equally affected by sign language experience.

Our finding of increased gesture rates for both early signers and L2 signers extends earlier findings that experience with a visual-manual language can influence gesture production. Specifically, Casey et al. (2012) demonstrated that gesture rates of novice adult learners increased after only one year of ASL instruction, compared to their pre-instruction rates. In contrast, after a year of Romance language instruction, the co-speech gesture production of novice learners remained stable, with no difference between their pre- and post-instruction rates. However, Casey et al. (2012) found no difference in gesture rate at the post-instruction time point (or at the pre-instruction time point) when the ASL and Romance language learners were directly compared. Thus, although only one year of ASL instruction is sufficient to affect gesture rates in adult learners, the lack of a post-instruction group difference indicates a relatively weak effect. In the present study, we found more robust group differences for highly proficient signers (increased gesture rates for both early signers and L2 signers), compared to sign-naïve speakers. This result indicates that proficiency with sign language, extensive exposure to sign language, or both, are key mediators of the observed co-speech gesture differences.

Moreover, this influence may be modality-related. That is, the fact that sign language and co-speech gesture rely on the manual articulators likely promotes increased gesturing when speaking English. We suggest that, for L2 acquisition, signed languages may have a greater impact on L1 gesture patterns than spoken languages. One possible mechanism for this effect, suggested by Casey et al. (2012), is that experience with manual production may lower the neural threshold for gesturing (Hostetter & Alibali, 2008), even for those with minimal sign language experience. The overlap in modality between co-speech gesture and sign language is unique to bimodal bilingual populations, and it is not clear how experience with two spoken languages affects gesture production in an L1. As noted in the introduction, the influence of a spoken L2 on L1 co-speech gesture has not been extensively studied (see Brown & Gullberg, 2008, for an exception). More commonly reported are influences of L1 on L2 co-speech gesture in unimodal bilinguals, which are often considered to be cultural, rather than language-related (e.g., Laurent & Nicoladis, 2015; Cavicchio & Kita, 2013; Smithson, Nicoladis & Marentette, 2011).

The robust difference in overall gesture production that we observed for signers, compared to non-signers, must be reconciled with Casey and Emmorey's (2009) finding of comparable overall gesture rates between early native signers and non-signers. This discrepancy may reflect methodological differences. For example, although both studies used the Canary Row cartoon to elicit natural co-speech gesture, participants in Casey and Emmorey (2009) viewed and then retold the entire seven-minute cartoon, whereas our participants viewed eight short clips from the cartoon, describing each clip immediately after viewing it. In addition, our results reflect gesture production from the two scenes analyzed by Casey et al. (2012) that Casey and Emmorey (2009) found to yield the greatest difference in gesture production between native signers and non-signers. Each of our groups produced co-speech gestures at higher rates than reported in the earlier studies, yielding more data points from which to distinguish effects. In this sense, Casey and Emmorey (2009) may have lacked sufficient power to detect a group difference.

In our present and previous studies, the L2 signers were older than the other participants, which could have led to differences in gesture patterns. However, existing research indicates either no effect of age on adults’ gesture production during narratives (Theocharopoulou, Cocks, Pring & Dipper, Reference Theocharopoulou, Cocks, Pring and Dipper2015; Feyereisen & Havard, Reference Feyereisen and Havard1999; Cohen & Borsoi, Reference Cohen and Borsoi1996) or increased production in younger, compared to older, adults (Theocharopoulou et al., Reference Theocharopoulou, Cocks, Pring and Dipper2015; Cohen & Borsoi, Reference Cohen and Borsoi1996). In contrast, our older L2 signers produced more gestures than the other, younger groups, making age an unlikely explanation for our findings. In addition, the L2 signers produced longer descriptions than the early signers and non-signers. We had no predictions about narrative length, and the reason for this difference is unclear. However, because we analyzed rates and proportions, rather than absolute number of gestures, differences in narrative length are unlikely to impact the results.

Despite methodological and participant differences, our findings and those of Casey and Emmorey (Reference Casey and Emmorey2009) converge when gesture production is examined at a more detailed level. We found no difference in production rates between early signers and L2 signers for iconic or deictic gestures. However, both signing groups produced these gesture types at higher rates than non-signers. Similarly, Casey and Emmorey (Reference Casey and Emmorey2009) reported that early signers produced a higher percentage of iconic gestures than non-signers. Increased iconic gesturing by signers may reflect the iconicity present in lexical, phonological, and spatial aspects of sign language. Many signs resemble their referents, and space is used iconically to indicate the location, position, and shape of referents. Detailed information about the features expressed iconically in ASL may prime action plans for iconic co-speech gestures, even when ASL is not the target language (Casey et al., Reference Casey, Emmorey and Larrabee2012). One way to test this hypothesis would be to assess whether viewing iconic (vs. non-iconic) signs just prior to re-telling a related story to monolingual English speakers increases the rate, or alters the form, of co-speech gestures produced by bimodal bilinguals.

Although speculative, it is also possible that signers visualized the narrative more vividly than non-signers, leading to increased gesturing as they retold the story depicted in the cartoon. Emmorey, Kosslyn, and Bellugi (Reference Emmorey, Kosslyn and Bellugi1993) suggested that the mental imagery demands of ASL (i.e., image generation, maintenance, and transformation) lead to faster and possibly more vivid mental image generation in signers than in non-signers (see also Emmorey & Kosslyn, Reference Emmorey and Kosslyn1996). Thus, compared to non-signers, participants with extensive ASL experience may more readily generate enhanced visualizations of the animated information, which, in turn, elicit increased gesture production to convey the visualized action.

In addition, as suggested by Casey et al. (Reference Casey, Emmorey and Larrabee2012), sign language experience may reduce the neural threshold for gesture production when speaking English. Specifically, Hostetter and Alibali (Reference Hostetter and Alibali2008) propose that increasing the strength of neural connections between premotor and motor cortex may reduce the neural threshold for gesture because stronger connections allow activation to spread more readily. Sign language experience may strengthen those neural connections, making gesture production more likely. Alternatively, the gesture threshold can be modulated by communicative motivation (Hostetter & Alibali, Reference Hostetter and Alibali2008). Sign language uses space to indicate the physical properties and positions of referents in space, and placement of the hands and fingers on the body is an important phonological parameter of ASL. Based on their extensive experience using linguistic space for effective communication, signers may have a lower gesture threshold, particularly when they feel gesture would benefit the communicative situation, such as when retelling the action of a story.

Consistent with this interpretation, there is ample evidence that both of a bilingual's languages are always active (Dijkstra, Grainger & van Heuven, Reference Dijkstra, Grainger and van Heuven1999; Giezen, Blumenfeld, Shook, Marian & Emmorey, Reference Giezen, Blumenfeld, Shook, Marian and Emmorey2015; Meade, Midgley, Sevcikova Sehyr, Holcomb & Emmorey, Reference Meade, Midgley, Sevcikova Sehyr, Holcomb and Emmorey2017; Morford, Wilkinson, Villwock, Pinar & Kroll, Reference Morford, Wilkinson, Villwock, Pinar and Kroll2011) and further, that unimodal bilinguals must constantly inhibit one language when speaking the other (Christoffels, Firk & Schiller, Reference Christoffels, Firk and Schiller2007; Van Assche, Duyck & Gollan, Reference Van Assche, Duyck and Gollan2013). Because it is impossible to articulate two spoken languages simultaneously, unimodal bilinguals sometimes switch back and forth between languages when speaking with each other (referred to as ‘code-switching’). In contrast, instead of switching between languages, simultaneous production of ASL and English occurs as a frequent and natural part of discourse between bimodal bilinguals (Baker & Van den Bogaerde, Reference Baker, Van den Bogaerde, Plaza Pust and Morales Lopez2008; Bishop, Reference Bishop2010; Petitto, Katerelos, Levy, Gauna, Tetreault & Ferraro, Reference Petitto, Katerelos, Levy, Gauna, Tetreault and Ferraro2001), a phenomenon referred to as ‘code-blending’ (Emmorey, Borinstein & Thompson, Reference Emmorey, Borinstein, Thompson, Cohen, McAlister, Rolstad and MacSwan2005; Emmorey, Borinstein, Thompson & Gollan, Reference Emmorey, Borinstein, Thompson and Gollan2008). Simultaneous word and sign production is possible because ASL and spoken English rely on different linguistic articulators.

Further, bimodal bilinguals produce signs unintentionally as co-speech gestures when conversing with non-signers, even when they know their interlocutor has no knowledge of sign language (Emmorey et al., Reference Emmorey, Borinstein, Thompson, Cohen, McAlister, Rolstad and MacSwan2005; Casey & Emmorey, Reference Casey and Emmorey2009). The unintentional intrusion of signs when speaking to sign-naïve addressees is consistent with our finding that both early signers and L2 signers mixed ASL signs in with their co-speech gestures when retelling the cartoon. Like iconic and other representational co-speech gestures, the signs our participants produced were semantically related to the accompanying speech. Code-blended signs and co-speech gestures are both meaningful manual productions that co-occur with speech, and this similarity may lead to sign intrusions and increased gesturing when speaking English. Although there is some evidence that signs can infiltrate the English conversation of novice learners (after one year of ASL instruction; Casey et al., Reference Casey, Emmorey and Larrabee2012), it is unclear whether those sign productions actually represent lexicalized signs, or rather, emblem-like (conventional) gestures in a pre-lexical stage. It may be that, in the very early stages of ASL acquisition, adult learners first incorporate newly learned signs into their existing gestural repertoire, and the transition to lexical entries occurs with more extensive ASL exposure.

Some evidence for this hypothesis comes from the work of Ortega and colleagues, who argued that hearing adult sign language learners may experience gestural interference when learning iconic signs (Ortega, Reference Ortega2013; Ortega & Özyürek, Reference Ortega and Ozyurek2013; Ortega & Morgan, Reference Ortega and Morgan2015a, Reference Ortega and Morgan2015b). Specifically, these authors found that, although the meanings of iconic signs are easily learned and remembered, their articulation is often less accurate than that of more arbitrary signs. Less accurate articulation of iconic signs may occur because the similarity between iconic signs and co-speech gestures interferes with learners’ focus on the exact phonological structure of the iconic signs (whose form is similar, but not identical, to that of co-speech gestures; Ortega & Özyürek, Reference Ortega and Ozyurek2013). The results from Ortega and colleagues suggest that hearing adult L2 learners of a sign language may initially acquire signs within their co-speech gesture system, rather than as a completely separate system.

The number and rate of signs produced when re-telling the cartoon clips were numerically greater for L2 signers compared to early signers, although this difference did not reach significance. The trend toward increased sign intrusion may reflect age of acquisition as it relates to the development of language-related manual articulation systems. That is, early signers acquire ASL and English simultaneously, which could lead to the development of more segregated systems of manual productions for signs and co-speech gesture. In this scenario, early learners may acquire and store manual signs as ASL lexical representations; simultaneously, manual co-speech gestures would be acquired as part of, and integrated with, English (e.g., the pattern of conflated manner and path gestures that is typical of English speakers; see Allen, Özyürek, Kita, Brown, Furman, Ishizuka & Fujii, Reference Allen, Özyürek, Kita, Brown, Furman, Ishizuka and Fujii2007). Perhaps when ASL is acquired later in life, L2 signers already have a developed co-speech gesture system in place, and that system expands as they incorporate the manual productions of ASL.

Supporting the notion that the ASL experience of L2 signers may have expanded or altered the internal structure of their gestural repertoire, we found that L2 signers produced a greater variety of marked handshapes during cartoon descriptions, relative to the other groups. Further, although the difference was not statistically significant, early signers also produced a greater variety of handshapes than non-signers (particularly for unmarked handshapes). Although L2 signers produced longer narratives than early signers and non-signers, there is no evidence to suggest that variation in handshape repertoire is related to narrative length, or that narrative length would differentially affect marked versus unmarked handshapes. Similarly, differences in handshape repertoire size do not appear to be related to co-speech gesture production rates: for marked handshapes, L2 signers produced a greater variety than early signers, despite comparable production rates, while early signers and non-signers produced equally varied marked handshapes, despite significantly different production rates. Handshapes only produced by L2 signers and not by the other groups included B bent bar, hooked U, 3-finger O, and R (see Appendix A for illustrations of these handshapes). Casey and Emmorey (Reference Casey and Emmorey2009) also reported increased handshape variety for early signers, compared to non-signers, and Casey et al. (Reference Casey, Emmorey and Larrabee2012) found increased handshape variety for novice learners after a year of ASL instruction (versus before instruction). However, Casey et al. (Reference Casey, Emmorey and Larrabee2012) identified no group difference in handshape variety between ASL learners and Romance language learners, either pre- or post-instruction. Taken together, these findings suggest that the influence of ASL knowledge on handshape repertoire may reflect proficiency and/or long-term experience with ASL. Signs and co-speech gestures both involve manual productions, and knowledge of sign language affords a larger inventory of handshapes to select from for co-speech gestures, relative to the inventory of non-signers. However, it is curious that handshape variety seemed less affected in our group of early signers, despite life-long sign language experience. This suggests that early sign language experience may not be a primary factor that influences handshape variety in co-speech gesture production.

Across groups, the pattern of beat gesture production diverged from that of the other two gesture types discussed above. Specifically, while early and L2 signers had comparable rates of beat gestures, only the L2 group produced beat gestures at a higher rate than non-signers. Beat gesture production rates for early signers fell between those of L2 signers and non-signers. That is, the early signers' rate did not differ reliably from that of either of the other groups. This result was somewhat unexpected, given an earlier report of decreased beat gesture production for early signers, relative to non-signers (Casey & Emmorey, Reference Casey and Emmorey2009). It is not clear why the findings for beat gestures are incongruous across studies. One could speculate that the discrepancy relates to the length of narrations, the segments selected for analysis, or individual subject differences. Nevertheless, both studies found that beat and deictic gestures were much less frequent than iconic gestures for all groups tested. Selecting a narrative to specifically elicit beat and deictic gesture production might shed light on what influence sign language experience has on production of these gesture types.

In summary, we have shown that sign language knowledge influences the co-speech gesture production of early signers and of fluent L2 signers who acquired ASL in adulthood. Comparable gesture production rates (overall and for specific gesture types) were found for early and L2 signers, and these rates exceeded those of non-signers. This finding indicates that age of acquisition is not a strong determinant of co-speech gesture rates. Rather, our finding that fluent L2 signers differed quantitatively and qualitatively from monolingual non-signers indicates that sign language proficiency, years of exposure, or both, exert a strong influence on co-speech gesture production. This conclusion is supported by Casey et al.’s (Reference Casey, Emmorey and Larrabee2012) report that adults with only one year of ASL instruction showed a co-speech gesture increase that was insufficient to differentiate them from adult novice Romance language learners. Our findings also indicate that the manual modality shared by sign and co-speech gesture leads to incorporation of ASL handshapes into the gestural repertoire of ASL–English bilinguals. Further, sign language experience appears to impact co-speech gesture whether acquired early in life or in adulthood, and there is a complex interaction between age of acquisition, proficiency (or length of exposure), and gesture type. More research is needed to determine the precise mechanisms that mediate the influence of sign language on co-speech gesture. Possible mechanisms include some combination of a) simultaneous activation of sign and speech; b) a reduced neural threshold for gesture production as a result of exposure to sign language (Hostetter & Alibali, Reference Hostetter and Alibali2008); and c) reduced inhibition of signs (weak suppression of ASL), which, like co-speech gestures, rely on manual articulation and thus do not interfere with speech.

Acknowledgements

This work was supported in part by a grant from the National Institute of Child Health and Human Development (R01 HD047736) to Karen Emmorey and San Diego State University. The authors would like to thank Heather Larrabee and Jennifer Petrich for help with data coding and analysis, and all of the participants, without whom this work would not be possible.

Appendix A: Handshapes produced by ASL signers

Appendix B: List of ASL signs produced across the eight cartoon descriptions by Early ASL signers and L2 ASL signers