Anecdotally, hearing people who learn American Sign Language (ASL) as a second language report that they gesture more when speaking English than they did before learning ASL. If true, this effect represents an unusual influence from a late-learned second language (L2) on first language (L1) production. Although co-speech gesture is often ignored as a component of language, a large body of evidence indicates that gesture creation interacts online with speech production processes and affects both language production and comprehension (e.g., Emmorey & Casey, 2001; Kelly, Barr, Church & Lynch, 1999; Kita & Özyürek, 2003; Krauss, 1998; McNeill, 2005). In the two studies reported here (Study 1 and Study 2), we first sought to confirm anecdotal reports that English speakers perceive a change in their gestures after learning ASL, and we then conducted a longitudinal study to determine whether co-speech gestures do in fact increase or change after just one academic year of ASL instruction.

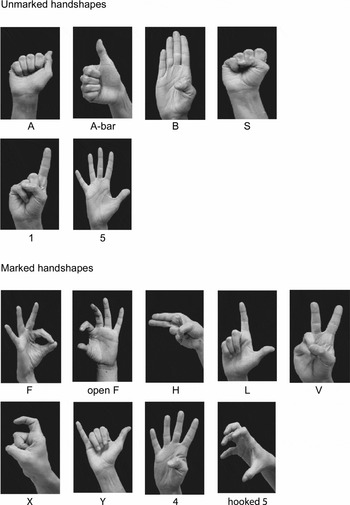

Previously, Casey and Emmorey (2009) found that early bimodal bilinguals (hearing people with deaf signing families who had early exposure to both ASL and English) produced more iconic gestures (i.e., representational gestures that bear a resemblance to their referent) and more gestures from a character viewpoint than monolingual English speakers. The increase in gestures that express character viewpoint (i.e., the gesturer's body is used to depict the actions of a character in the narrative; McNeill, 1992) is likely linked to the use of referential shift (also known as role shift) in ASL, a specific linguistic structure that marks character viewpoint within a narrative in which signers depict body gestures and facial expressions of a discourse referent (e.g., Friedman, 1975; Liddell & Metzger, 1998). The gestures of bimodal bilinguals also exhibited a greater variety of handshapes and more frequent use of unmarked handshapes compared to monolingual speakers. Unmarked handshapes are the most common handshapes in sign languages cross-linguistically (Battison, 1978), occur in approximately 70% of signs (Klima & Bellugi, 1979), and are acquired first by children learning a sign language as a native language (Boyes-Braem, 1990). In addition, Pyers and Emmorey (2008) found that when speaking English, bimodal bilinguals produced significantly more ASL-appropriate facial expressions (e.g., raised eyebrows to mark conditional clauses) than monolingual English speakers, and they synchronized their facial expressions with the English clause onset. Thus, highly fluent ASL–English bilinguals exhibit a clear influence of ASL on the nature of both facial and manual gestures that accompany spoken English. Casey and Emmorey (2009) argued that these differences result from dual language activation and suggested that information may be encoded for expression in ASL even when English is the target language.

For unimodal bilinguals (individuals who have acquired two spoken languages), the evidence is mixed with respect to the influence of a second language on co-speech gestures produced when speaking a first language. Pika, Nicoladis and Marentette (2006) found that English speakers who learned Spanish as young adults (and spent at least one year in a Spanish-speaking country) gestured more when speaking English than monolinguals. However, this difference could be the result of cultural exposure, rather than a linguistic effect from learning Spanish. Brown and Gullberg (2008) found that native Japanese speakers with intermediate knowledge of English gestured slightly differently in their native language than Japanese speakers without any knowledge of English. Specifically, compared to monolingual Japanese speakers, Japanese–English bilinguals (like monolingual English speakers) were less likely to produce a gesture that expressed manner of motion when their speech encoded manner information. In contrast, Choi and Lantolf (2008) found that advanced L2 Korean speakers and L2 English speakers retained their L1 co-speech gesture patterns when expressing manner of motion in their first language (English or Korean). Thus, there is little evidence for a strong influence of a second spoken language on the co-speech gesture patterns in a first spoken language.

We hypothesize that learning a signed language as an L2 will have a much stronger and more apparent effect on the co-speech gestures that accompany a spoken L1. The manual gestures that accompany a spoken L1 coincide with the signed modality, and this modality overlap may promote or trigger a heightened use of the hands when communicating in L1. We first investigate this idea by conducting a survey of university students after they had taken one academic year of ASL or one year of instruction in a Romance language (Spanish, French, or Italian). We chose Romance languages because they are hypothesized to be “high frequency gesture” languages (Pika et al., 2006, p. 319). We predict that the majority of ASL students will report increases and/or changes in their co-speech gesture, whereas learners of the spoken languages will not.

Study 1: Gesture questionnaire

Method

Participants

Two hundred ninety-eight students attending San Diego State University participated in the survey (205 females). The sample included 95 students of ASL (74 females), 56 students of French (38 females), 37 students of Italian (22 females), and 110 students of Spanish (71 females). Participants ranged in age from 17 to 47 years, with a mean age of 21 years. An additional 42 students were excluded from the analysis because they indicated they were non-native English speakers, and six were excluded because they failed to answer a question or were unclear/inconsistent in their answers.

Procedure

A short questionnaire was distributed to students during the last week of classes after they had taken two semesters of a foreign language, i.e., ASL, French, Italian, or Spanish. In addition to questions about age, gender, and native language, the survey consisted of the following three questions:

1. After learning ASL/French/Italian/Spanish, do you think you gesture while speaking English: less / more / the same (circle one)

2. Do you feel that gestures you make while talking have changed since learning ASL/French/Italian/Spanish? yes / no (circle one)

3. If yes, please explain how you think your gestures have changed.

The questionnaire was customized for each language, so that the students were asked only about the language they were learning (e.g., “After learning French, . . .”). The students filled out the short questionnaire in their language class.

Results and discussion

Table 1 provides the percentage of students from each language group who perceived an increase, decrease, or no change in their gestures at the end of an academic year of instruction. Seventy-five percent of ASL students felt that their co-speech gesture use had increased since learning ASL, whereas only 14% (28/203) of spoken language students perceived an increase in co-speech gesture. Of the spoken language learners, more Italian students felt their gestures increased, but a chi-square analysis revealed no significant difference across spoken language learners in response type, χ²(4, N = 203) = 5.73, p = .22. The vast majority of spoken language learners (85%; 172/203) felt that their rate of co-speech gesture had not changed.
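As a quick sanity check on the reported p value, the upper-tail probability of a chi-square statistic can be computed by hand. The sketch below assumes the four degrees of freedom come from a table of three spoken-language groups by three response types; the function name `chi2_sf_even_df` is hypothetical, and the closed form it uses holds only for even degrees of freedom.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable for even df.

    For even df the survival function has the closed form
    exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form only holds for positive even df")
    half = x / 2.0
    terms = sum(half ** k / math.factorial(k) for k in range(df // 2))
    return math.exp(-half) * terms


# Statistic and degrees of freedom as reported in the text.
p_value = chi2_sf_even_df(5.73, 4)
print(round(p_value, 2))
```

The result can be compared directly with the p value given in the text; the statistic itself cannot be recomputed here because the underlying cell counts are reported only in Table 1.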

Table 1. Percentage of students reporting a change in rate of gestures after one academic year of instruction.

Similarly, when asked about perceived changes in co-speech gesture (Question 2 in the questionnaire), 76% of ASL students (72/95) felt that their gestures had changed in some way, whereas only 14% of spoken language learners (28/203) felt that their co-speech gestures had changed at the end of their language course. More Italian learners (32%; 12/37) perceived a change in their co-speech gestures compared to Spanish learners (11%; 12/110), χ²(1, N = 147) = 9.39, p = .002, and compared to French learners (7%; 4/56), χ²(1, N = 93) = 10.00, p = .002. The Italian learners’ perception of their gestural production may have been influenced by the large number of emblematic gestures that are used by native Italian speakers (e.g., Poggi, 2002), particularly in Southern Italy (Kendon, 1995). Italian learners may have become aware of such gestures through exposure to Italian film, class conversations in Italian, or the popular media.
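The two pairwise comparisons can be recomputed from the counts given above. The sketch below is a minimal illustration, assuming a Pearson chi-square on a 2 × 2 table without continuity correction (the text does not say whether a correction was applied); the function name `chi2_2x2` is hypothetical.

```python
import math


def chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For df = 1 the upper-tail probability equals erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Counts from the text: (changed, not changed) per language group.
italian = (12, 37 - 12)
spanish = (12, 110 - 12)
french = (4, 56 - 4)

it_vs_sp = chi2_2x2(*italian, *spanish)
it_vs_fr = chi2_2x2(*italian, *french)
print(f"Italian vs Spanish: chi2 = {it_vs_sp[0]:.2f}, p = {it_vs_sp[1]:.3f}")
print(f"Italian vs French:  chi2 = {it_vs_fr[0]:.2f}, p = {it_vs_fr[1]:.3f}")
```

Under these assumptions the uncorrected statistics should land close to the values reported in the text, which suggests (but does not confirm) that no continuity correction was used.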

When asked to explain how their gestures had changed (Question 3 in the questionnaire), seven of the ASL students simply repeated that their rate of gesturing had increased and three failed to respond to this question. Of the remaining 62 ASL students, 15% (9/62) described a change in the form of their gestures. For example, several students indicated that they produced bigger gestures or used a larger, more exaggerated space. The ASL-learning students also described changes in how they used gestures (24%; 15/62). For example, they reported using more descriptive gestures and more gestures to express emotion or to explain what they were saying. However, the majority of ASL learners (63%; 39/62) indicated that they now sometimes produce ASL signs when they gesture.

For the spoken language learners who indicated that they felt their gestures had changed, three failed to provide an explanation and nine simply repeated that their gestures had increased. Of the remaining 16 students, 44% (7/16) described a change in the form of their gestures (e.g., more precise or more body movement), and 56% (9/16) described a change in how they used gesture (e.g., more descriptive or expressive gestures). None of the Italian students indicated that they now sometimes produced Italian gestures (e.g., the Italian “beautiful”, “evil eye”, or “money” gestures), and their qualitative gesture descriptions did not obviously differ from those of the other spoken language learners.

These results lend credence to anecdotal reports that exposure to ASL as a second language affects co-speech gestures in the first language – the great majority of ASL learners perceived a change in their co-speech gestures after just one year of instruction. The findings also indicate that this same academic exposure to a spoken language did not lead to a strong perception of changes in co-speech gesture, although students of Italian were more likely than other spoken language students to report a change. However, the perception of an increase in gesture rate for ASL learners might arise because these students are paying much more attention to their hands and to gesture in general – there may be no actual change in gesture rate, only the perception of change because the students have become more self-aware of their gestures. Similarly, the Italian learners may have been biased to report a change in gesture because they were influenced by the common belief that Italian speakers gesture profusely, and the ASL learners may have been biased simply because ASL is a manual language. In Study 2, we conducted a longitudinal study to determine whether we can detect an increase in gesture rate, a change in the type of gestures produced, and/or the production of ASL signs while speaking, after just one year of instruction. Again, Romance language learners served as the comparison group.

Study 2: Longitudinal study of gesture change

Method

Participants

Forty-one native English speakers participated in the study. Twenty-one (14 female) studied ASL and 20 (16 female) studied a Romance language for one year at the University of California, San Diego: Italian (N = 9), French (N = 4), or Spanish (N = 7). All participants acquired English as a first language and had no other native language, with the exception of one participant who was exposed to Chinese from birth for four years, but rated her fluency as “almost none to poor”. No participants were bilingual in a second language, and none had studied another language beyond basic university or high school courses. Participants ranged in age from 18 to 36 years, with a mean age of 19.5 years.

Procedure

Participants were told that we were interested in studying what makes someone good at learning languages; gesture was never mentioned. Participants were shown eight short clips of a seven-minute Tweety and Sylvester cartoon (Canary Row), which is commonly used to elicit co-speech gestures (e.g., McNeill, 1992), and they were asked to re-tell each clip individually to another person whom they did not know. All testing occurred in English. Each participant was tested twice: once when he or she started to study the language (after no more than three weeks of class) and once after one academic year of course work. The language courses consisted of six hours of instruction per week (three hours of informal conversation and three hours of grammar instruction), for a total of three 10-week quarters. During the spoken language conversation sessions, it is likely that students were exposed to co-speech gesture produced by the instructors who were native speakers. The interlocutor in the experiment was a confederate who interacted with the participant naturally, i.e., providing feedback to indicate comprehension. We ensured that the interlocutor either did not know the language the participant was studying or was not known by the participant to be a user of that language. All sessions were videotaped for later coding and analysis.

Gesture coding

We selected two scenes for coding that Casey and Emmorey (2009) found to exhibit the greatest difference between monolingual speakers and native ASL–English bilinguals: the third video clip (Tweety throws a bowling ball down a drainpipe and into Sylvester's stomach) and the seventh video clip (Sylvester swings on a rope and slams into a wall next to Tweety's window). Gesture productions were coded for the following properties: gesture type, handshape form, viewpoint, and the presence of ASL signs. Inter-rater agreement among three coders for the presence of a gesture ranged from 93% to 98% based on 78 independently coded gestures. In any case where there was a disagreement among coders (either here or below), the coders either came to an agreement or the gesture was not included in that analysis.
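The text reports agreement as a range across three coders but does not state how it was computed. One common reading is simple percent agreement for each pair of coders, which the following sketch illustrates. The coder labels and judgments are hypothetical toy data, not the study's codes, and `percent_agreement` is an illustrative helper.

```python
from itertools import combinations


def percent_agreement(codes_a: list, codes_b: list) -> float:
    """Percentage of items on which two coders assigned the same code."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("coders must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical judgments (True = gesture present) for ten candidate movements.
coders = {
    "coder_1": [True, True, False, True, True, False, True, True, True, False],
    "coder_2": [True, True, False, True, False, False, True, True, True, False],
    "coder_3": [True, True, False, True, True, False, True, False, True, False],
}

# One agreement score per pair of coders; the range is the min and max.
pairwise = {
    (x, y): percent_agreement(coders[x], coders[y])
    for x, y in combinations(coders, 2)
}
lowest, highest = min(pairwise.values()), max(pairwise.values())
print(f"agreement ranged from {lowest:.0f}% to {highest:.0f}%")
```

Percent agreement does not correct for chance; a chance-corrected index would be a different design choice than the one sketched here.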

Gestures were classified by type as iconic, deictic, beat, conventional, or unclassifiable. Gestures were coded as iconic when they represented the attributes, actions, or relationships of objects or characters (McNeill, 1992), for example, moving the hands downward to represent Tweety throwing a bowling ball down a pipe. Gestures that were imitations of gestures performed by characters in the cartoon were also coded as iconic, e.g., moving the index finger from side to side, mimicking Sylvester's gesture in the cartoon. Pointing gestures produced with a fingertip or with the hand were coded as deictics. Non-iconic gestures that bounced or moved in synchronization with speech were coded as beats. These gestures could consist of single or multiple movements, and they usually accompanied a stressed word. Gestures that are often used in American culture, e.g., thumbs up, shh, and so-so, were coded as conventional gestures. These gesture categories were not mutually exclusive: it was possible for a gesture to be coded as belonging to more than one category. For example, one participant moved her hands to show the path of Sylvester rolling down the street and also bounced her hand in synchronization with her speech. This gesture was coded as both an iconic and a beat gesture. Lastly, gestures that were unclear were coded as unclassifiable, e.g., a gesture that looked like a beat, but was produced without accompanying speech. Inter-rater agreement among three coders for gesture type ranged from 88% to 90% based on 76 independently coded gestures.

Gestures were coded as containing character viewpoint if they were produced from the perspective of a character, i.e., produced as if the gesturer were the character (McNeill, 1992), for example, moving two fists outward to describe Sylvester swinging on a rope. Character viewpoint gestures included both manual gestures and body gestures not involving the hands, for example, moving the body forward while describing Sylvester swinging into a wall. Gestures could also simultaneously contain both an observer viewpoint and a character viewpoint. For example, one participant produced an observer viewpoint gesture using a 5 handshape (see Appendix for illustrations of all handshapes discussed here) that represented Sylvester slamming into the wall, while simultaneously moving her body forward as if she were Sylvester. Instances such as this were coded as containing both an observer and a character viewpoint. However, as in Casey and Emmorey (2009), gestures that consisted solely of character facial expressions without any accompanying manual or body gesture were not included in the analysis. Inter-rater agreement between two coders for presence of character viewpoint was 93% based on 85 independently coded gestures.

Handshape form within all coded gestures was categorized as either unmarked or marked. Unmarked handshapes were A, A-bar, B, S, 1, and 5, following Eccarius and Brentari (2007) (see Appendix). All other handshapes were categorized as marked, including phonologically distinct variations of unmarked handshapes (e.g., hooked 5). Inter-rater agreement among three coders for handshape form ranged from 85% to 95% based on 91 independently coded gestures.
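The marked/unmarked split described above amounts to a membership test against a fixed set of six handshapes. The sketch below illustrates that rule and a count of distinct handshape types per retelling. The function names and the sample labels are hypothetical, and counting distinct labels is an assumption about how "number of handshape types" was tallied.

```python
# The six unmarked handshapes listed in the text; everything else is marked.
UNMARKED_HANDSHAPES = frozenset({"A", "A-bar", "B", "S", "1", "5"})


def classify_handshape(label: str) -> str:
    """Return 'unmarked' for the six listed handshapes, otherwise 'marked'.

    Variants such as 'hooked 5' are distinct labels and therefore marked.
    """
    return "unmarked" if label in UNMARKED_HANDSHAPES else "marked"


def count_handshape_types(labels: list[str]) -> dict[str, int]:
    """Count distinct handshape types, in total and per category."""
    types = set(labels)
    unmarked = {h for h in types if classify_handshape(h) == "unmarked"}
    return {
        "total": len(types),
        "unmarked": len(unmarked),
        "marked": len(types) - len(unmarked),
    }


# Hypothetical handshape labels coded in one participant's retelling.
retelling = ["5", "B", "5", "S", "hooked 5", "F", "1", "F"]
print(count_handshape_types(retelling))
```

Comparing such counts for the same participant before and after instruction would give the per-participant change in handshape variety that the handshape analyses examine.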

Productions were coded as ASL signs when they were identifiable lexical signs or classifier constructions that a non-signer would not be likely to produce. For each potential ASL sign, we examined the spoken language learners’ entire eight-episode retellings of the cartoon to determine whether a similar gesture was ever produced. If so, this gesture was not coded as an ASL sign. For example, the ASL signs LOOK (produced with a V handshape), PHONE, and WRITE, as well as the 1 and V classifier handshapes used to refer to movements of people and animals, were not coded as ASL signs because at least one non-signer produced a similar gesture.

Statistical analysis

One-tailed t-tests were used for comparisons in which there was a directional prediction, i.e., comparisons of the ASL learner group before versus after taking ASL. All other comparisons were two-tailed. We used paired and unpaired t-tests when the data were distributed normally and nonparametric Wilcoxon signed-rank tests (paired) and Mann-Whitney tests (unpaired) when the data were not distributed normally.
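For the paired before-versus-after comparisons, the t statistic is the mean of the within-participant differences divided by its standard error, with n − 1 degrees of freedom. The following sketch computes that statistic using only the standard library. The rates are hypothetical toy values, not the study's data, and `paired_t` is an illustrative helper.

```python
import math
import statistics


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Paired t statistic for post minus pre, with its degrees of freedom."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    # Standard error of the mean difference (sample SD over sqrt(n)).
    std_err = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff / std_err, len(diffs) - 1


# Hypothetical gestures-per-second rates for five participants.
pre_rates = [0.30, 0.45, 0.38, 0.52, 0.41]
post_rates = [0.36, 0.50, 0.47, 0.55, 0.49]

t_stat, df = paired_t(pre_rates, post_rates)
print(f"t({df}) = {t_stat:.2f}")
```

Converting the statistic to a p value requires the t distribution, which a statistics package can supply. When the observed difference is in the predicted direction, the one-tailed p value is half the two-tailed value.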

Results

Gesture rate

The ASL learners did not differ significantly from the Romance language learners in their rate of gesturing at the beginning of the academic year, t(39) = .51, p = .61. For the ASL learners, there was a significant increase in the rate of gesture after one year of language instruction, but no change for learners of spoken languages (see Table 2). ASL learners had a pre-instruction mean of .40 gestures per second and a post-instruction mean of .48 gestures per second, t(20) = 2.14, p = .02 (one-tailed). In contrast, Romance language learners had a pre-instruction mean of .44 gestures per second and a post-instruction mean of .43 gestures per second: t(19) = .24, p = .81. However, despite the increase in gesture rate, the ASL learners did not differ significantly from the Romance language learners at the end of the academic year, t(39) = .78, p = .44.

Table 2. Mean rate of gesture types before versus after one year of ASL or a Romance language (standard deviations in parentheses).

* p < .05.

Gesture type: Iconics, deictics, and beats

For ASL learners, there was a significant increase in the rate of iconic gestures per second after a year of ASL classes, t(20) = 1.80, p = .04 (one-tailed) – see Table 2. In contrast, the rate of iconic gestures for learners of Romance languages did not change, t(19) = .78, p = .44. For deictic gestures, neither language-learning group exhibited a change in gesture rate, both ps > .13. Similarly, there was no change in rate of beat gestures for either group, both ps > .27. For all three gesture types, the ASL and Romance language learners did not differ significantly in their pre-instruction gesture rate, all ps > .38, nor in their post-instruction rate, all ps > .22. Thus, although we observed a significant increase in iconic gestures for the ASL learners, the increase was not large enough to cause a between group difference in iconic gesture rate at the end of a year of instruction.

Character viewpoint

Iconic gestures were further analyzed for the presence of character viewpoint. Neither ASL learners nor learners of Romance languages showed a change in the use of character viewpoint after one year of a foreign language (see Table 3). For ASL learners, there was a non-significant increase in the percent of gestures expressing a character viewpoint after taking a year of ASL, t(17) = .77, p = .22 (one-tailed), whereas for learners of Romance languages, there was a non-significant decrease in gestures with a character viewpoint, t(19) = 1.7, p = .11. ASL learners did not differ from Romance language learners with respect to the use of character viewpoint either before, t(37) = .04, p = .97, or after, t(38) = 1.61, p = .12, taking a foreign language.

Table 3. Mean percentage of gestures displaying character viewpoint and means of handshape types (standard deviations in parentheses).

* p < .05; ** p < .01.

Handshape analyses

As seen in Table 3, ASL learners produced a wider variety of handshapes in their gestures after a year of ASL instruction, t(20) = 1.95, p = .03 (one-tailed), whereas the Romance language learners exhibited no change in the number of handshape types produced, t(19) = .79, p = .44. As with our other measures of gesture change, the language learning groups did not differ from each other in the number of handshape types at the beginning of the year, z = .75, p = .45, or at the end of the academic year, t(39) = .68, p = .50.

When we divided handshapes into marked versus unmarked categories (see Appendix), we observed a significant increase in the production of marked types after one year of ASL, t(20) = 2.79, p = .006 (one-tailed), but not after one year of a Romance language, t(19) = 1.12, p = .28 (see Table 3). The groups did not differ in the number of marked handshape types either before or after taking a year of a foreign language: t(39) = 1.35, p = .18 (before); z = 1.13, p = .26 (after). In contrast to the marked handshape types, we observed no difference in the production of unmarked types before versus after taking ASL, z = .59, p = .28 (one-tailed), or before versus after taking a Romance language, t(19) = 0.0, p = 1. Additionally, the groups did not differ with respect to the production of unmarked handshape types either before or after one year of instruction: z = .14, p = .89 (before); z = .08, p = .94 (after).

Production of ASL signs

For this analysis, we identified gestural productions that resembled ASL signs for the entire eight-episode cartoon for each ASL-learning participant. As described above, any gestures that were produced by spoken language learners that resembled ASL signs were not counted as ASL signs for the ASL learners. After taking one year of ASL, participants produced from none to five signs in their retelling of the cartoon. Five of the 21 ASL learners produced at least one ASL sign, and there were a total of 13 signs produced across participants. The sign types produced were the following: DOOR, MONKEY, ROLL, TRAIN, WALK, and a two-handed classifier construction with F handshapes describing a long, thin object. None of the spoken language learners produced a Spanish, Italian, or French word.

Italian language learners

In Study 1, we found that more Italian learners felt that their gestures had changed compared to learners of the other Romance languages. To determine whether the Italian learners exhibited a change in gesture rate or in any other gesture variable, we analyzed this group separately. No comparison revealed a significant difference in gestures before versus after taking Italian, and all but two of these comparisons were actually in the opposite direction, i.e., a decrease in gesture production.

Discussion

The perception that the rate of gesturing when speaking English increases for students learning American Sign Language (Study 1) was confirmed by a longitudinal study comparing co-speech gesture rates before versus after one year of academic instruction (Study 2). In contrast, students learning a Romance language (French, Spanish, or Italian) were unlikely to perceive a change in their co-speech gesture after a year of classes (see Table 1 above) and did not exhibit an increase in gesture rate. Exposure to ASL may increase co-speech gesture production for a number of reasons. Signing involves manual rather than vocal linguistic articulators, and students may become more comfortable and accustomed to moving their hands when communicating. This ease and practice with manual production may result in a lower gesture threshold for those becoming bilingual in ASL. According to the Gesture as Simulated Action model (Hostetter & Alibali, 2008), representational (iconic) gestures are only produced when the neural activation from a mentally simulated action is sufficiently strong to spread from premotor to motor cortex and surpass a gesture threshold defined as “the level of activation beyond which a speaker cannot inhibit the expression of simulated actions as gestures” (p. 503). Gesture thresholds are hypothesized to vary according to a speaker's individual neurology (e.g., strength of connections between premotor and motor regions), experiences, beliefs, and situational factors (e.g., whether gestures are felicitous or impolite). Within this model, experience and practice with sign language could lower the neural activation threshold, resulting in an increase in gesture production due to a decreased ability to inhibit motor movements.

Another possibility is that first year ASL students may become more accustomed to producing manual gestures while speaking because they often produce English translation equivalents (usually whispered words) when they sign. First year students of Romance languages may covertly produce English translation equivalents when speaking, but articulatory constraints prevent the simultaneous production of two spoken words (e.g., one cannot whisper or say “bird” while simultaneously saying “oiseau” in French). Given that the majority of co-speech gestures convey the same information expressed in speech (McNeill, 1992), the frequent simultaneous production of semantically equivalent words and signs may prime the co-speech gesture system to produce more manual gestures.

The learners of ASL exhibited only an increase in iconic gestures, with no change in the frequency of deictic or beat gestures (Table 2). Thus, ASL learners are not just moving their hands more as they speak. If that were the case, we would also expect an increase in beat gestures (i.e., non-representational gestures that co-occur with prosodic peaks in speech). Casey and Emmorey (2009) reported that native ASL–English bilinguals actually produced a lower proportion of beat gestures compared to non-signers and suggested that life-long experience with ASL may cause them to suppress the use of semantically non-transparent gestures. However, ASL–English bilinguals did produce more iconic gestures than non-signers. Thus, it appears that both life-long and short-term exposure to ASL increases the use of meaningful, representational gestures when speaking. In particular, learning how to describe visual scenes through the use of ASL classifier constructions that iconically depict the location, movement, or shape of referents may lead to an enhanced use of iconic gestures that function similarly. In fact, it is possible that more of the gestures produced by the ASL learners were actually ASL classifier constructions, but they were not coded as such because of our conservative coding criteria (i.e., classifier constructions or lexical signs that resembled gestures produced by a non-signer were not coded as ASL).

The lack of increase in deictic gestures may seem odd given that deictic gestures are common in sign languages both for pronominal use and spatial referencing. However, this result replicates that of Casey and Emmorey (2009), who compared native ASL–English bilinguals and non-signers using the same task. Thus, it would be somewhat surprising if novice learners showed an increase in deictic gestures when fluent bimodal bilinguals did not. Casey and Emmorey (2009) suggested that a difference in the use of deictic gestures might emerge if a more overtly spatial task were used, such as eliciting descriptions of spatial layouts or route directions.

Unlike native ASL–English bilinguals, the ASL students did not exhibit an increase in their use of gestures from a character viewpoint. Casey and Emmorey (2009) hypothesized that the increased use of such gestures in bilinguals arose from the frequent use of character viewpoint within role shifts during ASL narratives. We speculated that the bilingual production system encodes information for expression in ASL even when English is the selected language and that this engenders an increase in the production of gestures from a character viewpoint. However, it is unlikely that first year ASL students have achieved a level of proficiency and experience that would lead to the automatic encoding of ASL when speaking (or enough practice producing ASL narratives with role shift).

In addition to an increase in the use of iconic gestures, we also observed an increase in the variety of handshape types (mostly marked handshapes) that were produced after a year of ASL exposure (Table 3). On average, ASL learners added one new handshape to their gesture repertoire. The following six new handshape types were observed after exposure to ASL (see Appendix for handshape illustrations): F, open-F, L, V, 4, and modified X (index finger hooked with the thumb in the hook, other fingers closed). For the Romance language learners, only three new handshape types were observed at the end of the year: F, L, and Y. Life-long ASL–English bilinguals also produced a wide variety of handshape types in their co-speech gestures (Casey & Emmorey, 2009). These effects are likely due to the shared manual modality between ASL and gesture. Thus, when either simulated actions (Hostetter & Alibali, 2008) or spatio-motoric representations (Kita & Özyürek, 2003) are expressed as co-speech gesture, more handshape types are readily available and easily produced for speakers who have experience with a sign language.

Although we observed significant increases in gesture rate, production of iconic gestures, and number of handshape types for ASL learners only, these changes were not large enough to create significant group differences between ASL and Romance language learners at the end of the year. The well-known variability in individual rates of co-speech gesture production (e.g., Nagpal, Nicoladis & Marentette, 2011) may override and obscure the subtle changes that occur after just one year of ASL exposure in a group comparison with spoken language learners. We are currently exploring whether L2 learners who have become proficient in ASL exhibit significant group differences in the rate and form of gestures compared to English speaking non-signers.

We also observed that a few ASL learners produced one or more ASL signs when re-telling the cartoon story to an unfamiliar interlocutor. This finding is consistent with the fact that more than half (63%) of the ASL students in Study 1 spontaneously reported that after learning ASL, they sometimes produced signs when speaking English. These results are somewhat surprising because these students are in the earliest stage of becoming bilingual, whereas unintentional intrusions of ASL signs might be expected for highly proficient bilinguals who fail to fully suppress their L2. Additionally, none of the Romance language learners produced a foreign word when re-telling the cartoon story, and such an intrusion would likely disrupt the interaction. Thus, the fact that speakers with a low level of ASL fluency sometimes produced signs when speaking to a non-signer suggests that learning a visual-manual language might pose a unique challenge to language control even in the earliest stages of L2 acquisition. Furthermore, the production of an ASL sign is not disruptive because it is produced simultaneously with speech (which carries the primary message), and it is likely to be overlooked as a co-speech gesture (Casey & Emmorey, 2009).

Another possibility is that sign production while speaking does not reflect a failure to suppress ASL, but rather an increase in the repertoire of conventional gestures. Under this hypothesis, ASL learners have not begun to acquire a new lexicon (as have the Romance language learners), but instead have learned new emblematic gestures (akin to “quiet” (finger to lips), “stop” (palm outstretched), “good luck” (fingers crossed), or “thumbs up”). However, these new conventional gestures would only be shared with ASL signers. The gestures would not be conventional for the interlocutor in Study 2, and many would not be recognized as meaningful. For example, the ASL sign TRAIN (the dominant hand in an H handshape moves across the H handshape of the non-dominant hand) produced by one student is not iconic, and the meaning is not transparent (see Figure 1). Further research is necessary to determine if the ASL signs that ASL learners produce during co-speech gesture are genuine intrusions from the ASL lexicon or if they have merely become part of their gestural repertoire. And if ASL signs are initially part of a gestural repertoire, how does the transition from gestural to lexical representations occur? That is, how and when do gestural (emblem-like) representations become part of the stored ASL lexicon and acquire morpho-syntactic and phonological features?

Figure 1. Illustration of the sign TRAIN produced by an ASL learner while saying “ah you know [the train] wires that run through some cities”.

Finally, an increase in gesture rate following the acquisition of a sign language may have an impact beyond the nature of gestures that accompany the native spoken language. Several studies have found that co-speech gesture production aids memory, learning, and spatial cognition (e.g., Broaders, Cook, Mitchell & Goldin-Meadow, 2007; Chu & Kita, 2008, 2011; Cook, Yip & Goldin-Meadow, 2010; Goldin-Meadow, Cook & Mitchell, 2009; Wagner, Nusbaum & Goldin-Meadow, 2004). For example, Cook et al. (2010) found that when adults gestured while describing events, their immediate and delayed recall of those events was better than for comparison groups or conditions in which no gestures were produced. Chu and Kita (2008, 2011) found that co-speech gestures as well as “co-thought” gestures (gestures produced without speech while thinking about a task) facilitate performance on mental rotation tasks. A possible implication of our findings is that the increase in gesture rate that accompanies exposure to ASL might improve cognitive abilities by affecting how well events are encoded in memory and how mental rotation tasks are performed. Indeed, previous research has shown that fluent ASL–English bilinguals have better mental rotation abilities than non-signers (e.g., Emmorey, Kosslyn & Bellugi, 1993; Talbot & Haude, 1993).

In sum, the anecdotal impression that learning a sign language changes the nature of co-speech gesture was confirmed by a one-year longitudinal study of ASL students. Exposure to a sign language may lower the neural threshold for gesture production (Hostetter & Alibali, 2008) and may pose unique challenges for language control that are not present for spoken language learners. Lastly, learning a sign language also has the potential to facilitate cognitive processes that are linked to gesture production.

Appendix. Handshape types described in the article