1. Introduction

Behavioural and neurobiological studies of spatial cognition have provided considerable insight into how animals (including humans) navigate through the world, establishing that a variety of strategies, subserved by different neural systems, can be used in order to plan and execute trajectories through large-scale, navigable space. This work, which has mostly been done in simplified, laboratory-based environments, has defined a number of sub-processes such as landmark recognition, heading determination, odometry (distance-measuring), and context recognition, all of which interact in the construction and use of spatial representations. The neural circuitry underlying these processes has in many cases been identified, and it now appears that the basics of navigation are reasonably well understood.

The real world, however, is neither simple nor two-dimensional, and the addition of vertical space to the two horizontal dimensions adds a number of new problems for a navigator to solve. For one thing, moving against gravity imposes additional energy costs. Also, moving in a volumetric space is computationally complicated, because of the increased size of the representation needed, and because rotations in three dimensions interact. It remains an open question how the brain has solved these problems, and whether the same principles of spatial encoding operate in all three dimensions or whether the vertical dimension is treated differently.

Studies of how animals and humans navigate in environments with a vertical component are slowly proliferating, and it is timely to review the gathering evidence and offer a theoretical interpretation of the findings to date. In the first part of the article, we review the current literature on animal and human orientation and locomotion in three-dimensional spaces, highlighting key factors that contribute to and influence navigation in such spaces. The second part summarizes neurobiological studies of the encoding of three-dimensional space. The third and final part integrates the experimental evidence to put forward a hypothesis concerning three-dimensional spatial encoding – the bicoded model – in which we propose that in encoding 3D spaces, the mammalian brain constructs mosaics of connected surface-referenced maps. The advantages and limitations of such a quasi-planar encoding scheme are explored.

2. Theoretical considerations

Before reviewing the behavioural and neurobiological aspects of three-dimensional navigation it is useful to establish a theoretical framework for the discussion, which will be developed in more detail in the last part of this article. The study of navigation needs to be distinguished from the study of spatial perception per se, in which a subject may or may not be moving through the space. It is also important to distinguish between space that is encoded egocentrically (i.e., relative to the subject) and space that is encoded allocentrically (independently of the subject – i.e., relative to the world). The focus of the present article is on the allocentric encoding of the space that is being moved through, which probably relies on different (albeit interconnected) neural systems from the encoding of egocentric space.

An animal that is trying to navigate in 3D space needs to know three things – how it is positioned, how it is oriented, and in what direction it is moving. Further, all these things require a reference frame: a world-anchored space-defining framework with respect to which position, orientation, and movement can be specified. There also needs to be some kind of metric coordinate system – that is, a signal that specifies distances and directions with respect to this reference frame. The core question for animal navigation, therefore, concerns what the reference frame might be and how the coordinate system encodes distances and directions within the frame.

The majority of studies of navigation, at least within neurobiology, have up until now taken place in laboratory settings, using restricted environments such as mazes, which are characterized both by being planar (i.e., two-dimensional) and by being horizontal. The real world differs in both of these respects. First, it is often not planar – air and water, for example, allow movement in any direction and so are volumetric. In this article, therefore, we will divide three-dimensional environments into those that are locally planar surfaces, which allow movement only in a direction tangential to the surface, and those that are volumetric spaces (air, water, space, and virtual reality), in which movement (or virtual movement) in any direction is unconstrained. Second, even if real environments might be (locally) planar, the planes may not necessarily be horizontal. These factors are important in thinking about how subjects encode and navigate through complex spaces.

What does the vertical dimension add to the problem of navigation? At first glance, not much: The vertical dimension provides a set of directions one can move in, like any other dimension, and it could be that the principles that have been elucidated for navigation in two dimensions extend straightforwardly to three. In fact, however, the vertical dimension makes the problem of navigation considerably more complex, for a number of reasons. First, the space to be represented is much larger, since a volume is larger than a plane by a power of 3/2. Second, the directional information to be encoded is more complex, because there are three planes of orientation instead of just one. Furthermore, rotations in orthogonal planes interact such that sequences of rotations have different outcomes depending on the order in which they are executed (i.e., they are non-commutative), which adds to processing complexity. Third, the vertical dimension is characterized by gravity, and by consequences of gravity such as hydrostatic or atmospheric pressure, which add both information and effort to the computations involved. And finally, there are navigationally relevant cues available for the horizontal plane that are absent in the vertical: for example, the position of the sun and stars, or the geomagnetic field.
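The non-commutativity of three-dimensional rotations can be illustrated with a minimal sketch. The axis convention (x as the long body axis, z as vertical), the fixed world-axis rotations, and the function names are assumptions made for the example, not part of any model discussed here.

```python
import math

def rot_x(deg):
    """Rotation by `deg` degrees about the fixed x-axis (a roll, if x is the body's long axis)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

def rot_z(deg):
    """Rotation by `deg` degrees about the fixed z-axis (a yaw, if z is vertical)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def rotate(matrix, vector):
    """Apply a 3x3 matrix to a 3-vector, rounding away floating-point noise."""
    return tuple(
        round(sum(matrix[i][j] * vector[j] for j in range(3)), 6)
        for i in range(3)
    )

facing = (1.0, 0.0, 0.0)  # hypothetical starting direction along x

# Same two 90-degree rotations, applied in opposite orders.
yaw_then_roll = rotate(rot_x(90), rotate(rot_z(90), facing))
roll_then_yaw = rotate(rot_z(90), rotate(rot_x(90), facing))

print(yaw_then_roll)  # ends up pointing along +z
print(roll_then_yaw)  # ends up pointing along +y
```

In a single plane, by contrast, two successive rotations always sum to the same final heading regardless of order, which is why this problem does not arise in purely horizontal navigation.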

The internal spatial representation that the brain constructs for use in large-scale navigation is often referred to as a “cognitive map” (O'Keefe & Nadel 1978). The cognitive map is a supramodal representation that arises from the collective activity of neurons in a network of brain areas that process incoming sensory information and abstract higher-order spatial characteristics such as distance and direction. In thinking about whether cognitive maps are three-dimensional, it is useful to consider the various 3D mapping schemes that are possible.

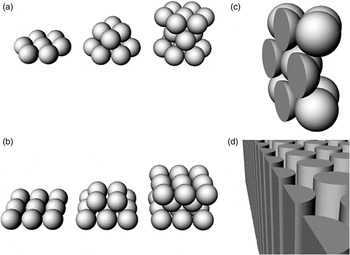

Figure 1 shows three different hypothetical ways of mapping spaces, which differ in the degree to which they incorporate a representation of the vertical dimension. In Figure 1a, the metric fabric of the map – the grid – follows the topography but does not itself contain any topographical information: the map is locally flat. Such a surface is called, in mathematics, a manifold, which is a space that is locally Euclidean – that is, for any point in the space, its immediate neighbours will be related to it by the usual rules of geometry, even if more-distant points are related in a non-Euclidean way. For example, the surface of a sphere is a manifold, because a very small triangle on its surface will be Euclidean (its interior angles add up to 180 degrees) but a large triangle will be Riemannian (the sum of its interior angles exceeds 180 degrees). Given a small enough navigator and large enough surface undulations, the ground is therefore a manifold. For the purposes of the discussion that follows, we will call a map that follows the topography and is locally Euclidean a surface map. A surface map in its simplest form does not, for a given location, encode information about whether the location is on a hill or in a valley (see Footnote 2). Although such a map accurately represents the surface distance a navigator would need to travel over undulating terrain, because it does not contain information about height (or elevation) above a reference plane it would fail to allow calculation of the extra effort involved in moving over the undulations, nor would it allow accurate calculation of shortcuts.
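The sphere example can be made quantitative with a standard result from spherical geometry (Girard's theorem), given here as an illustration. The symbols are introduced for this example only: α, β, and γ are the interior angles of a triangle drawn on a sphere of radius R, and A is the area the triangle encloses.

```latex
\alpha + \beta + \gamma = \pi + \frac{A}{R^{2}}
```

When the triangle is small relative to the sphere, A/R² approaches zero and the angle sum approaches π (180 degrees), which is the locally Euclidean case. At the other extreme, a triangle covering one octant of the sphere has A = πR²/2 and three right angles, giving a sum of 3π/2 (270 degrees).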

Figure 1. Different kinds of three-dimensional encoding. (a) A surface map, with no information about elevation. (b) An extracted flat map in which the horizontal coordinates have been generated, by trigonometric inference, from hilly terrain in (a). (c) A bicoded map, which is metric in the horizontal dimension and uses a non-metric scale (i.e., shading) in the vertical (upper panel = bird's eye view of the terrain, lower panel = cross-section at the level of the dotted line). (d) A volumetric map, which is metric in all three dimensions, like this map of dark matter in the universe.

A variant of the surface map is the extracted flat map shown in Figure 1b. Here, distances and directions are encoded relative to the horizontal plane, rather than to the terrain surface. For a flat environment, a surface map equates to an extracted flat map. For hilly terrain, in order to determine its position in x-y coordinates, the navigator needs to process the slope to determine, trigonometrically, the equivalent horizontal distance. To generate such a map it is therefore necessary to have some processing of information from the vertical dimension (i.e., slope with respect to position and direction), and so this is a more complex process than for a purely surface map. There is evidence, discussed later, that some insects can in fact extract horizontal distance from hilly terrain, although whether this can be used to generate a map remains moot.
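The trigonometric step can be written out as a worked equation. This is a sketch under the assumption that the path is divided into straight segments of surface length s_i, each with a constant slope angle θ_i relative to the horizontal plane; the symbols are introduced for this example only.

```latex
d_{\text{horizontal}} = \sum_{i} s_i \cos\theta_i
```

For instance, 10 m walked along a 30-degree slope corresponds to 10 cos 30° ≈ 8.66 m of horizontal distance. On flat ground every θ_i is zero, so the surface map and the extracted flat map coincide.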

In Figure 1c, the map now does include a specific representation of elevation, but the encoding method is different from that of the horizontal distances. Hence, we have termed this a bicoded map. In a bicoded map, vertical distance is represented by some non-spatial variable – in this case, colour. Provided with information about x-y coordinates, together with the non-spatial variable, navigators could work out where they were in three-dimensional space, but would not have metric information (i.e., quantitative information about distance) about the vertical component of this position unless they also had a key (some way of mapping the non-spatial variable to distances). Lacking a key, navigators could still know whether they were on a hill or in a valley, but could not calculate efficient shortcuts because they could not make the trigonometric calculations that included the vertical component. Such a map could be used for long-range navigation over undulating terrain, but determining the most energy-efficient route would be complicated because this would require chaining together segments having different gradients (and therefore energetic costs), as opposed to simply computing a shortest-distance route. Although the representational scheme described here is an artificial one used by human geographers, later on we will make the point that an analogous scheme – use of a non-metric variable to encode one of the dimensions in a three-dimensional map – may operate in the neural systems that support spatial representation.

Finally, the volumetric map shown in Figure 1d could be used for navigation in a volumetric space. Here, all three dimensions use the same metric encoding scheme, and the shortest distance between any two points can be calculated straightforwardly using trigonometry (or some neural equivalent), with – on Earth – elevation being simply the distance in the dimension parallel to gravity (i.e., perpendicular to the horizontal plane). However, calculating energy costs over an undulating surface would still be complicated, for the same reasons as above – that is, energy expenditure would have to be continuously integrated over the course of the planned journey in order to allow comparisons of alternate routes.
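The contrast between shortest distance and energy cost in a fully metric map can be sketched as follows. The cost model (path length plus a fixed penalty per unit of height gained), the `climb_penalty` value, and the waypoint coordinates are all hypothetical choices for illustration and are not drawn from any study.

```python
import math

def route_length(waypoints):
    """Total Euclidean length of a route through (x, y, z) waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))

def route_cost(waypoints, climb_penalty=5.0):
    """Hypothetical energy model: segment length plus a penalty per unit climbed.

    The cost is accumulated segment by segment, because it depends on the
    gradient of each leg and not only on the start and end points.
    """
    cost = 0.0
    for a, b in zip(waypoints, waypoints[1:]):
        climb = max(0.0, b[2] - a[2])  # only upward movement is penalised here
        cost += math.dist(a, b) + climb_penalty * climb
    return cost

# Two hypothetical routes between the same start and goal.
over_hill = [(0, 0, 0), (3, 0, 4), (6, 0, 0)]
around_hill = [(0, 0, 0), (3, 4, 0), (6, 0, 0)]

print(route_length(over_hill), route_length(around_hill))
print(route_cost(over_hill), route_cost(around_hill))
```

The two routes have the same length, so a shortest-distance computation cannot choose between them, whereas the integrated cost favours the flat detour under this particular penalty.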

The questions concerning the brain's map of space, then, are: What encoding scheme does it use for navigation either over undulating surfaces or in volumetric spaces? Is the vertical dimension encoded in the same way as the two horizontal ones, as in the volumetric map? Is it ignored entirely, as in a surface map, or is it encoded, but in a different way from the horizontal dimensions, as in the bicoded map? These questions motivate the following analysis of experimental data and will be returned to in the final section, where we will suggest that a bicoded map is the most likely encoding scheme, at least in mammals, and perhaps even including those that can swim or fly.

3. Behavioural studies in three dimensions

A key question to be answered concerning the representation of three-dimensional space is how information from the vertical dimension is incorporated into the spatial representation. We will therefore now review experimental studies that have explored the use of information from the vertical dimension in humans and other animals, beginning with an examination of how cues from the vertical dimension may be processed, before moving on to how these cues may be used in self-localization and navigation. The discussion is organized as follows, based on a logical progression through complexity of the representation of the third (usually vertical) dimension:

- Processing of verticality cues
- Navigation on a sloping surface
- Navigation in multilayer environments
- Navigation in a volumetric space

3.1. Processing of verticality cues

Processing of cues arising from the vertical dimension is a faculty possessed by members of most phyla, and it takes many forms. One of the simplest is detection of the vertical axis itself, using a variety of mechanisms that mostly rely on gravity, or on cues related to gravity, such as hydrostatic pressure or light gradients. This information provides a potential orienting cue which is useful not only in static situations but also for navigation in three-dimensional space. Additionally, within the vertical dimension itself gravity differentiates down from up and thus polarizes the vertical axis. Consequently, animals are always oriented relative to this axis, even though they can become disoriented relative to the horizontal axes. The importance of the gravity signal as an orienting cue is evident in reduced or zero-gravity environments, which appear to be highly disorienting for humans: Astronauts report difficulty in orienting themselves in three-dimensional space when floating in an unfamiliar orientation (Oman 2007).

The vertical axis, once determined, can then be used as a reference frame for the encoding of metric information in the domains of both direction and distance. The technical terms for dynamic rotations relative to the vertical axis are pitch, roll, and yaw, corresponding to rotations in the sagittal, coronal, and horizontal planes, respectively (Fig. 2A). Note that there is potential for confusion, as these rotational terms tend to be used in an egocentric (body-centered) frame of reference, in which the planes of rotation move with the subject. The static terms used to describe the resulting orientation (or attitude) of the subject are elevation, bank, and azimuth (Fig. 2B), which respectively describe: (1) the angle that the front-back (antero-posterior) axis of the subject makes with earth-horizontal (elevation); (2) the angle that the transverse axis of the subject makes with earth-horizontal (bank); and (3) the angle that the antero-posterior axis of the subject makes with some reference direction, usually geographical North (azimuth). Tilt is the angle between the dorso-ventral cranial axis and the gravitational vertical.

Figure 2. (a) Terminology describing rotations in the three cardinal planes. (b) Terminology describing static orientation (or “attitude”) in each of the three planes.
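The three static attitude terms defined above can be computed from body axes expressed in a world frame. The following is a minimal sketch under stated assumptions: the world frame is (east, north, up), both body axes are supplied as unit vectors, and azimuth is taken from the horizontal projection of the antero-posterior axis, measured clockwise from North. The function name and conventions are illustrative.

```python
import math

def attitude(forward, transverse):
    """Return (elevation, bank, azimuth) in degrees.

    forward    -- unit vector along the antero-posterior axis, as (east, north, up)
    transverse -- unit vector along the transverse (side-to-side) axis
    """
    f_east, f_north, f_up = forward
    t_up = transverse[2]
    elevation = math.degrees(math.asin(f_up))  # forward axis vs earth-horizontal
    bank = math.degrees(math.asin(t_up))       # transverse axis vs earth-horizontal
    azimuth = math.degrees(math.atan2(f_east, f_north)) % 360.0  # clockwise from North
    return elevation, bank, azimuth

# Hypothetical subject facing east, nose pitched up by 30 degrees, body level side to side.
nose = (math.cos(math.radians(30)), 0.0, math.sin(math.radians(30)))
side = (0.0, -1.0, 0.0)

elevation, bank, azimuth = attitude(nose, side)
print(elevation, bank, azimuth)
```

Because all three values are expressed relative to gravity and a world-fixed reference direction, they describe orientation allocentrically, unlike the egocentric pitch, roll, and yaw rotations.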

The other metric category, in addition to direction, is distance, which in the vertical dimension is called height – or (more precisely) elevation, when it refers to distance from a reference plane (e.g., ground or water level) in an upward direction, and depth when it refers to distance in a downward direction. Determination of elevation/depth is a special case of the general problem of odometry (distance-measuring).

There is scant information about the extent to which animals encode information about elevation. A potential source of such information could be studies of aquatic animals, which are exposed to particularly salient cues from the vertical dimension due to the hydrostatic pressure gradient. Many fish are able to detect this gradient via their swim bladders, which are air-filled sacs that can be inflated or deflated to allow the fish to maintain neutral buoyancy. Holbrook and Burt de Perera (2011a) have argued that changes in vertical position would also, for a constant gas volume, change the pressure in the bladder and thus provide a potential signal for relative change in height. Whether fish can actually use this information for vertical self-localization remains to be determined, however.
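The physical relationship behind this proposed signal can be sketched with the standard hydrostatic pressure formula. This illustrates the physics only and makes no claim about how fish transduce the signal; the fresh-water density, surface pressure, and function names are assumptions chosen for the example.

```python
import math

WATER_DENSITY = 1000.0       # kg/m^3, assumed fresh water
GRAVITY = 9.81               # m/s^2
SURFACE_PRESSURE = 101325.0  # Pa, assumed standard atmosphere at the surface

def pressure_at_depth(depth_m):
    """Absolute hydrostatic pressure (Pa) at a given depth below the surface."""
    return SURFACE_PRESSURE + WATER_DENSITY * GRAVITY * depth_m

def depth_change(pressure_before, pressure_after):
    """Change in depth (m, positive = deeper) implied by a change in pressure."""
    return (pressure_after - pressure_before) / (WATER_DENSITY * GRAVITY)

p_shallow = pressure_at_depth(1.0)
p_deep = pressure_at_depth(3.0)
print(depth_change(p_shallow, p_deep))  # recovers the 2 m descent
```

Because pressure rises linearly with depth, a pressure difference maps directly onto a relative change in vertical position, which is the kind of signal the argument above requires.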

The energy costs of moving vertically in water also add navigationally relevant information. These costs can vary across species (Webber et al. 2000); hence, one might expect that the vertical dimension would be more salient in some aquatic animals than in others. Thermal gradients, which are larger in the vertical dimension, could also provide extra information concerning depth, as well as imposing differential energetic costs in maintaining thermal homeostasis at different depths (Brill et al. 2012; Carey 1992).

Interestingly, although fish can process information about depth, Holbrook and Burt de Perera (2009) found that they appear to separate this from the processing of horizontal information. Whereas banded tetras learned the vertical and horizontal components of a trajectory equally quickly, the fish tended to use the two components independently, suggesting a separation either during learning, storage, or recall, or at the time of use of the information. When the two dimensions were in conflict, the fish preferred the vertical dimension (Fig. 3), possibly due to the hydrostatic pressure gradient.

Figure 3. The experiment by Holbrook and Burt de Perera (2009). (a) Line diagram of the choice maze (taken from the original article, with permission), in which fish were released into a tunnel and had to choose one of the exit arms to obtain a reward. (b) The conflict experiment of that study, in which the fish were trained with the arms at an angle, so that one arm pointed up and to the left and the other arm pointed down and to the right. During testing, the arms were rotated about the axis of the entrance tunnel so that one arm had the same vertical orientation but a different left-right position, and one had the same horizontal (left-right) position but a different vertical level. (c) Analysis of the choices showed that the fish greatly preferred the arm having the same vertical level. Redrawn from the original.

Several behavioural studies support the use of elevation information in judging the vertical position of a goal. For instance, rufous hummingbirds can distinguish flowers on the basis of their relative heights above ground (Henderson et al. 2001; 2006), and stingless bees can communicate the elevation as well as the distance and direction to a food source (Nieh & Roubik 1998; Nieh et al. 2003). Interestingly, honeybees do not appear to communicate elevation (Dacke & Srinivasan 2007; Esch & Burns 1995). Rats are also able to use elevation information in goal localization, and – like the fish in the Holbrook and Burt de Perera (2009) study – appear to separate horizontal and vertical components when they do so. For example, Grobéty & Schenk (1992a) found that in a lattice maze (Fig. 4A), rats learned to locate the elevation of a food item more quickly than they learned its horizontal coordinates, suggesting that elevation may provide an additional, more salient spatial cue, perhaps because of its added energy cost, or because of arousal/anxiety.

Figure 4. Rodent studies of vertical processing. (a) Schematic of the lattice maze of Grobéty and Schenk (1992a), together with a close-up photograph of a rat in the maze (inset). (b) Schematic and photograph of the pegboard maze used in the experiments of Jovalekic et al. (2011) and, as described later, by Hayman et al. (2011). In the Jovalekic et al. experiment, rats learned to shuttle directly back and forth between start and goal locations, as indicated in the diagram on the right. On test trials, a barrier (black bar) was inserted across the usual route, forcing the rats to detour. The great majority of rats preferred the routes indicated by the arrows, which had the horizontal leg first and the vertical leg second.

Jovalekic et al. (2011) also found a vertical/horizontal processing separation in rats, but in the opposite direction from Grobéty and Schenk, suggesting a task dependence on how processing is undertaken. Rats were trained on a vertical climbing wall (studded with protruding pegs to provide footholds; Fig. 4B) to shuttle back and forth between a start and goal location. When a barrier was inserted into the maze, to force a detour, the rats overwhelmingly preferred, in both directions, the route in which the horizontal leg occurred first and the vertical leg second. Likewise, on a three-dimensional lattice similar to the one used by Grobéty and Schenk, the rats preferred the shallow-steep route to the steep-shallow route around a barrier. However, the task in Jovalekic et al.'s study differed from that faced by Grobéty and Schenk's rats in that, in the Jovalekic et al. task, both the starting location and the goal were well learned by the time the obstacle was introduced. It may be that elevation potentiates initial learning, but that in a well-learned situation the animals prefer to solve the horizontal component first, perhaps due to an inclination to delay the more costly part of a journey.

Humans can estimate their own elevation, but with only a modest degree of accuracy. Garling et al. (1990) found that subjects were able to judge, from memory, which one of pairs of familiar landmarks in their hometown had a higher elevation, indicating that information about elevation is both observed and stored in the brain. It is not clear how this information was acquired, although there were suggestions that the process may be based partly on encoding heuristics, such as encoding the steepest and therefore most salient portions of routes first, as opposed to encoding precise metric information. Notwithstanding the salience of the vertical dimension, processing of the vertical dimension in humans appears poor (Montello & Pick 1993; Pick & Rieser 1982; Tlauka et al. 2007).

As with the fish and rodent studies above, a dissociation between vertical and horizontal processing was seen in a human study, in which landmarks arranged in a 3-by-3 grid within a building were grouped by most participants into (vertical) column representations, and by fewer participants into (horizontal) row representations (Büchner et al. 2007). Most participants' navigation strategies were consistent with their mental representations; as a result, when locating the given target landmark, a greater proportion of participants chose a horizontal-vertical route. The authors suggested that these results might have been reversed in a building that was taller than it was wide, unlike the building in their study. However, given the results from the rats of Jovalekic et al. (2011), in which the climbing wall dimensions were equal, it is also possible that this behaviour reflects an innate preference for horizontal-first routes.

In conclusion, then, studies of both human and nonhuman animals provide clear evidence for the use of elevation information in spatial computations, and also for differential processing of information from the vertical versus horizontal dimensions. The processing differences between the two dimensions may result from differential experience, differential costs associated with the respective dimensions, or differences in how these dimensions are encoded neurally.

Next, we turn to the issue of how the change in height with respect to change in distance – that is, the slope of the terrain – may be used in spatial cognition and navigation. This is a more complex computation than simple elevation, but its value lies in the potential for adding energy and effort to the utility calculations of a journey.

3.2. Navigation on a sloping surface

Surfaces extending into the vertical dimension locally form either slopes or vertical surfaces, and globally form undulating or crevassed terrain. Slopes have the potential to provide several kinds of information to a navigating animal (Restat et al. 2004), acting, for instance, as a landmark (e.g., a hill visible from a distance) or as a local organizing feature of the environment providing directional information. Additionally, as described earlier, the added vertical component afforded by slopes is both salient and costly, as upward locomotion requires greater effort than horizontal locomotion, and an extremely steep slope could be hazardous to traverse. As a result, it is reasonable to assume that slopes might influence navigation in several ways.

A number of invertebrates, including desert ants and fiddler crabs, appear able to detect slopes, sometimes with a high degree of accuracy (at least 12 degrees for the desert ant; Wintergerst & Ronacher 2012). It is still unknown how these animals perform their slope detection, which in the ant does not appear to rely on energy consumption (Schäfer & Wehner 1993), sky polarization cues (Hess et al. 2009), or proprioceptive cues from joint angles (Seidl & Wehner 2008). The degree to which slope is used in ant navigation has been under considerable investigation in recent years, making use of the ability of these animals to “home” (find their way back to the nest) using a process known as path integration (see Etienne & Jeffery 2004; Walls & Layne 2009; Wehner 2003) in which position is constantly updated during an excursion away from a starting point such as a nest.

Foraging ants will home after finding food, and the direction and distance of their return journey provide a convenient readout of the homing vector operative at the start of this journey. Wohlgemuth et al. (2001) investigated homing in desert ants and found that they could compensate for undulating terrain traversed on an outbound journey by homing, across flat terrain, the horizontally equivalent distance back to the nest, indicating that they had processed the undulations and could extract the corresponding ground distance. Likewise, fiddler crabs searched for their home burrows at the appropriate ground distance as opposed to the actual travelled distance (Walls & Layne 2009). Whether such encoding of slope is relative to earth-horizontal (i.e., the plane defined by gravity) or whether it simply relates the two surfaces (the undulating outbound one and the flat return one), remains to be determined. However, despite the ability of Cataglyphis to extract ground distance, Grah et al. (2007) found that the ants did not appear to encode specific aspects of the undulating trajectory in their representations of the journey, as ants trained on outbound sloping surfaces will select homing routes that have slopes even when these are inappropriate (e.g., if the two outbound slopes had cancelled out, leaving the ant at the same elevation as the nest with no need to ascend or descend). Thus, ants do not appear to incorporate elevation into their route memory; their internal maps seem essentially flat.
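Path integration with slope compensation can be sketched as a running sum of horizontally projected steps. This is an illustrative computation only and not a claim about the mechanism ants or crabs actually use; the step format, the compass convention (heading measured clockwise from north), and the example excursion are assumptions.

```python
import math

def home_vector(steps):
    """Integrate an outbound path and return (distance, bearing) back to the start.

    steps -- iterable of (surface_distance, heading_deg, slope_deg), where
             heading is clockwise from north and slope is relative to horizontal.
    """
    east = north = 0.0
    for surface_distance, heading_deg, slope_deg in steps:
        # Project each leg onto the horizontal plane before accumulating it.
        ground = surface_distance * math.cos(math.radians(slope_deg))
        east += ground * math.sin(math.radians(heading_deg))
        north += ground * math.cos(math.radians(heading_deg))
    distance = math.hypot(east, north)
    bearing = math.degrees(math.atan2(-east, -north)) % 360.0  # direction pointing home
    return distance, bearing

# Hypothetical excursion due north: up a 60-degree ramp, down the far side, then flat.
outbound = [(2.0, 0.0, 60.0), (2.0, 0.0, -60.0), (3.0, 0.0, 0.0)]

distance, bearing = home_vector(outbound)
surface_travelled = sum(step[0] for step in outbound)
print(surface_travelled, distance, bearing)
```

The integrator reports a home distance of 5 units although 7 units of surface were walked, matching the ground-distance homing described above. No elevation term is stored, so the resulting representation is flat, as the data of Grah et al. suggest.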

The ability of humans to estimate the slope of surfaces has been the subject of considerable investigation. Gibson and Cornsweet (1952) noted that geographical slant, which is the angle a sloping surface makes with the horizontal plane, is to be distinguished from optical slant, which is the angle the surface makes with the gaze direction. This is important because a surface appears to an observer to possess a relatively constant slope regardless of viewing distance, even though the angle the slope makes with the eye changes (Fig. 5A). The observer must somehow be able to compensate for the varying gaze angle in order to maintain perceptual slope constancy, presumably by using information about the angle of the head and eyes with respect to the body. However, despite perceptual slope constancy, perceptual slope accuracy is highly impaired – people tend to greatly overestimate the steepness of hills (Kammann 1967), typically judging a 5-degree hill to be 20 degrees, and a 30-degree hill to be almost 60 degrees (Proffitt et al. 1995).

Figure 5. Visual slant estimation. (a) Slope constancy: A subject viewing a horizontal surface with a shallow gaze (β1) versus a steep gaze (β2) perceives the slope of the ground to be the same (i.e., zero) in both cases despite the optical slant (β) being different in the two cases. In order to maintain constant slope perception, the nervous system must therefore have some mechanism of compensating for gaze angle. (b) The scale expansion hypothesis: An observer misperceives both the declination of gaze and changes in optical slant with a gain of 1.5, so that all angles are exaggerated but constancy of (exaggerated) slant is locally maintained. Increasing distance compression along the line of sight additionally contributes to slant exaggeration (Li & Durgin 2010).

This mis-estimation of slope has been linked to the well-established tendency of people to underestimate depth in the direction of gaze (Loomis et al. 1992): If subjects perceive distances along the line of sight to be foreshortened, then they would perceive the horizontal component of the slope to be less than it really is for a given vertical distance, and thus the gradient to be steeper than it is. However, this explanation fails to account for why subjects looking downhill also perceive the hill as too steep (Proffitt et al. 1995). Furthermore, if observers step back from the edge of a downward slope, there is a failure of slope constancy as well as accuracy: they see the slope as steeper than they do if they are positioned right at the edge (Li & Durgin 2009). Durgin and Li have suggested that all these perceptual distortions can collectively be explained by so-called scale expansion (Durgin & Li 2011; Li & Durgin 2010) – the tendency to overestimate angles (both gaze declination and optical slant) in central vision by a factor of about 1.5 (Fig. 5B). While this expansion may be beneficial for processing efficiency, it raises questions about how such distortions may affect navigational accuracy.
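The geometry of the foreshortening argument, and the simple gain described by the scale expansion account, can each be sketched in a few lines. These are toy calculations under stated assumptions and not the quantitative models of the cited authors: the compression factor of 0.5 is hypothetical, the gain is applied to a single angle in isolation, and the function names are illustrative.

```python
import math

def slope_under_foreshortening(actual_deg, compression):
    """Perceived slope if the horizontal extent is scaled by `compression`.

    compression < 1 means horizontal distance is perceived as shorter than it
    is, so the same vertical rise implies a steeper perceived gradient.
    """
    gradient = math.tan(math.radians(actual_deg))
    return math.degrees(math.atan(gradient / compression))

def expanded_angle(actual_deg, gain=1.5):
    """Angle after a constant perceptual gain (about 1.5 according to the text)."""
    return gain * actual_deg

# Hypothetical observer who perceives horizontal extent at half its true length.
perceived = slope_under_foreshortening(5.0, compression=0.5)
print(perceived)             # steeper than the true 5 degrees
print(expanded_angle(10.0))  # a 10-degree angle judged under a gain of 1.5
```

Foreshortening alone always steepens the perceived gradient in this sketch. The objections in the text concern whether it can account for the downhill and step-back observations, which this toy calculation does not address.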

Other, nonvisual distortions of slope perception may occur, as well. Proffitt and colleagues have suggested that there is an effect on slope perception of action-related factors, such as whether people are wearing a heavy backpack or are otherwise fatigued, or are elderly (Bhalla & Proffitt Reference Bhalla and Proffitt1999); whether the subject is at the top or bottom of the hill (Stefanucci et al. Reference Stefanucci, Proffitt, Banton and Epstein2005); and their level of anxiety (Stefanucci et al. Reference Stefanucci, Proffitt, Clore and Parekh2008) or mood (Riener et al. Reference Riener, Stefanucci, Proffitt and Clore2011) or even level of social support (Schnall et al. Reference Schnall, Harber, Stefanucci and Proffitt2012). However, the interpretation of many of these observations has been challenged (Durgin et al. Reference Durgin, Klein, Spiegel, Strawser and Williams2012).

Creem and Proffitt (Reference Creem and Proffitt1998; Reference Creem and Proffitt2001) have argued that visuomotor perceptions of slope are dissociable from explicit awareness. By this account, slope perception comprises two components: an accurate and stable visuomotor representation and an inaccurate and malleable conscious representation. This may be why subjects who appear to have misperceived slopes according to their verbal reports nevertheless tailor their actions to them appropriately. Durgin et al. (Reference Durgin, Hajnal, Li, Tonge and Stigliani2011) have challenged this view, suggesting that experimental probes of visuomotor perception are themselves inaccurate. The question of whether there are two parallel systems for slope perception therefore remains open.

How does slope perception affect navigation? One mechanism appears to be by potentiating spatial learning. For example, tilting some of the arms in radial arm mazes improves performance in working memory tasks in rats (Brown & Lesniak-Karpiak Reference Brown and Lesniak-Karpiak1993; Grobéty & Schenk Reference Grobéty and Schenk1992b; Figure 6a). Similarly, experiments in rodents involving conical hills emerging from a flat arena that rats navigated in darkness found that the presence of the slopes facilitated learning and accuracy during navigation (Moghaddam et al. Reference Moghaddam, Kaminsky, Zahalka and Bures1996; Fig. 6B). In these experiments, steeper slopes enhanced navigation to a greater extent than did shallower slopes. Pigeons walking in a tilted arena were able to use the 20-degree slope to locate a goal corner; furthermore, they preferred to use slope information when slope and geometry cues were placed in conflict (Nardi & Bingman Reference Nardi and Bingman2009b; Nardi et al. Reference Nardi, Nitsch and Bingman2010). In these examples, it is likely that slope provided compass-like directional information, as well as acting as a local landmark with which to distinguish between locations in an environment.

Figure 6. Behavioural studies of the use of slope cues in navigation, in rats (a and b) and in humans (c). (a) Schematic of the tilted-arm radial maze of Grobéty & Schenk (Reference Grobéty and Schenk1992b). (b) Schematic of the conical-hill arena of Moghaddam et al. (Reference Moghaddam, Kaminsky, Zahalka and Bures1996). (c) The virtual-reality sloping-town study of Restat et al. (Reference Restat, Steck, Mochnatzki and Mallot2004) and Steck et al. (Reference Steck, Mochnatzki, Mallot, Freksa, Brauer, Habel and Wender2003), taken from Steck et al. (Reference Steck, Mochnatzki, Mallot, Freksa, Brauer, Habel and Wender2003) with permission.

In humans, similar results were reported in a virtual reality task in which participants using a bicycle simulator navigated within a virtual town with eight locations (Restat et al. Reference Restat, Steck, Mochnatzki and Mallot2004; Steck et al. Reference Steck, Mochnatzki, Mallot, Freksa, Brauer, Habel and Wender2003; Fig. 6C). Participants experiencing the sloped version of the virtual town (4-degree slant) made fewer pointing and navigation errors than did those experiencing the flat version. This indicates that humans can also use slope to orient with greater accuracy – although, interestingly, this appears to be more true for males than for females (Nardi et al. Reference Nardi, Newcombe and Shipley2011). When slope and geometric cues were placed in conflict, geometric information was evaluated as more relevant (Kelly Reference Kelly2011). This is the opposite of the finding reported in pigeons discussed above. There are, of course, a number of reasons why pigeons and humans may differ in their navigational strategies, but one possibility is that different strategies are applied by animals that habitually travel through volumetric space rather than travel over a surface, in which geometric cues are arguably more salient.

The presence of sloping terrain adds complexity to the navigational calculations required in order to minimize energy expenditure. A study investigating the ability of human participants to find the shortest routes linking multiple destinations (the so-called travelling salesman problem, or “TSP”) in natural settings that were either flat or undulating found that total distance travelled was shorter in the flat condition (Phillips & Layton Reference Phillips and Layton2009). This was perhaps because in the undulating terrain, participants avoided straight-line trajectories in order to minimize hill climbing, and in so doing increased travel distance. However, it is not known to what extent they minimized energy expenditure. Interestingly, Grobéty & Schenk (Reference Grobéty and Schenk1992a) found that rats on the vertical plane made a far greater number of horizontal movements, partly because they made vertical translations by moving in a stair pattern rather than directly up or down, thus minimizing the added effort of direct vertical translations. This reflects the findings of the TSP in the undulating environments mentioned above (Phillips & Layton Reference Phillips and Layton2009) and is in line with the reported horizontal preferences in rats (Jovalekic et al. Reference Jovalekic, Hayman, Becares, Reid, Thomas, Wilson and Jeffery2011).
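The trade-off between distance and climbing effort described above can be sketched numerically. The example below is a hypothetical illustration, not a model from any of the cited studies: the Gaussian hill, the two candidate paths, and the `climb_penalty` weighting (cost of one unit of ascent relative to one unit of horizontal travel) are all assumptions chosen for clarity.

```python
import math

def hill_height(x, y, peak=1.0, width=1.0):
    """Height of a single Gaussian hill centred on the origin (assumed terrain)."""
    return peak * math.exp(-(x * x + y * y) / (2 * width ** 2))

def path_metrics(waypoints, steps_per_leg=200):
    """Return (horizontal length, total ascent) for a piecewise-straight path."""
    length = 0.0
    ascent = 0.0
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        length += math.hypot(x1 - x0, y1 - y0)
        prev_h = hill_height(x0, y0)
        for i in range(1, steps_per_leg + 1):
            t = i / steps_per_leg
            h = hill_height(x0 + t * (x1 - x0), y0 + t * (y1 - y0))
            ascent += max(0.0, h - prev_h)  # only uphill segments add effort
            prev_h = h
    return length, ascent

def travel_cost(waypoints, climb_penalty):
    """Horizontal distance plus a weighted cost for every unit climbed."""
    length, ascent = path_metrics(waypoints)
    return length + climb_penalty * ascent

straight = [(-2.0, 0.0), (2.0, 0.0)]             # directly over the hilltop
detour = [(-2.0, 0.0), (0.0, 1.5), (2.0, 0.0)]   # skirts the flank of the hill

for name, path in (("straight", straight), ("detour", detour)):
    length, ascent = path_metrics(path)
    print(name, round(length, 2), round(ascent, 2),
          round(travel_cost(path, climb_penalty=5.0), 2))
```

With no climbing penalty the straight path is cheaper, but once ascent is weighted heavily enough the longer detour wins, which mirrors the suggestion that participants in undulating terrain lengthened their routes to avoid hill climbing.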

In summary, then, the presence of sloping terrain not only adds effort and distance to the animal's trajectory, but also adds orienting information. However, the extent to which slope is explicitly incorporated into the metric fabric of the cognitive map, as opposed to merely embellishing the map, is as yet unknown.

3.3. Navigation in multilayer environments

Multilayer environments – such as trees, burrow systems, or buildings – are those in which earth-horizontal x-y coordinates recur as the animal explores, due to overlapping surfaces that are stacked along the vertical dimension. They are thus, conceptually, intermediate between planar and volumetric environments. The theoretical importance of multilayer environments lies in the potential for confusion, if – as we will argue later – the brain prefers to use a plane rather than a volume as its basic metric reference.

Most behavioural studies of spatial processing in multilayer environments have involved humans, and most human studies of three-dimensional spatial processing have involved multilayer environments (with the exception of studies in microgravity, discussed below). The core question concerning multilayer environments is the degree to which the layers are represented independently versus being treated as parts within an integrated whole. One way to explore this issue is to test whether subjects are able to path integrate across the layers. Path integration, introduced earlier, consists of self-motion-tracking using visual, motor, and sensory-flow cues in order to continuously update representations of current location and/or a homing vector (for a review, see Etienne & Jeffery Reference Etienne and Jeffery2004).
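As a reminder of what path integration entails computationally, the following minimal sketch accumulates a sequence of turns and distances into a position estimate and then derives a homing vector. It is a textbook-style planar illustration under idealized, noise-free assumptions; the function names and the step format are invented for the example and are not drawn from the cited review.

```python
import math

def integrate_path(steps, start_heading_deg=0.0):
    """Accumulate (turn_deg, distance) steps into a position estimate.
    Returns (x, y, heading_deg) relative to the starting point."""
    x = y = 0.0
    heading = math.radians(start_heading_deg)
    for turn_deg, distance in steps:
        heading += math.radians(turn_deg)      # angular self-motion
        x += distance * math.cos(heading)      # linear self-motion
        y += distance * math.sin(heading)
    return x, y, math.degrees(heading) % 360.0

def homing_vector(x, y, heading_deg):
    """Distance to the start and the turn (degrees, within +/-180,
    positive = anticlockwise) needed to face it."""
    distance = math.hypot(x, y)
    bearing_home = math.degrees(math.atan2(-y, -x))
    turn = (bearing_home - heading_deg + 180.0) % 360.0 - 180.0
    return distance, turn

# Outbound journey: 3 units forward, turn 90 degrees anticlockwise, 4 units forward.
x, y, heading = integrate_path([(0.0, 3.0), (90.0, 4.0)])
home_distance, home_turn = homing_vector(x, y, heading)
```

Note that this sketch has no vertical term at all: a navigator using it in a multilayer environment would need some additional means of keeping track of which layer it is on, which is precisely the issue at stake in the studies that follow.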

An early study of path integration in three dimensions explored whether mice could track their position across independent layers of an environment (Bardunias & Jander Reference Bardunias and Jander2000). The mice were trained to shuttle between their nest-box on one horizontal plane and a goal location on a parallel plane directly above the first, by climbing on a vertical wire mesh cylinder joining the two planes. When the entire apparatus was rotated by 90 degrees, in darkness while the animals were climbing the cylinder, all mice compensated for this rotation by changing direction on the upper plane, thus reaching the correct goal location. This suggests that they had perceived and used the angle of passive rotation, and mapped it onto the overhead space. A second control experiment confirmed that the mice were not using distal cues to accomplish this, thus suggesting that they were able to rely on internal path integration mechanisms in order to remain oriented in three dimensions.

However, it is not necessarily the case that the mice calculated a 3D vector to the goal, because in this environment the task of orienting in three dimensions could be simplified by maintaining a sense of direction in the horizontal dimension while navigating vertically. This is generally true of multilayer environments, and so to show true integration, it is necessary to show that subjects incorporate vertical angular information into their encoding. In this vein, Montello and Pick (Reference Montello and Pick1993) demonstrated that human subjects who learned two separate and overlapping routes in a multilevel building could subsequently integrate the relationship of these two routes, as evidenced by their ability to point reasonably accurately from a location on one route directly to a location on the other. However, it was also established that pointing between these vertically aligned spaces was less accurate and slower than were performances within a floor. Further, humans locating a goal in a multi-storey building preferred to solve the vertical component before the horizontal one, a strategy that led to shorter navigation routes and times (Hölscher et al. Reference Hölscher, Meilinger, Vrachliotis, Brösamle and Knauff2006). In essence, rats and humans appear in such tasks to be reducing a three-dimensional task to a one-dimensional (vertical) followed by a two-dimensional (horizontal) task. However, their ability to compute a direct shortest route was obviously constrained by the availability of connecting ports (stairways) between the floors, and so this was not a test of true integration.

Wilson et al. (Reference Wilson, Foreman, Stanton and Duffy2004) investigated the ability of humans to integrate across vertical levels in a virtual reality experiment in which subjects were required to learn the location of pairs of objects on three levels of a virtual multilevel building. Participants then indicated, by pointing, the direction of objects from various vantage points within the virtual environment. Distance judgements between floors were distorted, with relative downward errors in upward judgements and relative upward errors in downward judgements. This effect is interesting, as the sense of vertical space seems to be biased towards the horizontal dimension. However, in this study there was also a (slightly weaker) tendency to make rightward errors to objects to the left, and leftward errors to objects to the right. The results may therefore reflect a general tendency for making errors in the direction towards the center point of the spatial range, regardless of dimension (although this was more pronounced for the vertical dimension). Another interesting finding from this study is that there appears to be a vertical asymmetry in spatial memories, with a bias in favour of memories for locations that are on a lower rather than higher plane.

Tlauka et al. (Reference Tlauka, Wilson, Adams, Souter and Young2007) confirmed the findings of Wilson et al. (Reference Wilson, Foreman, Stanton and Duffy2004) and suggested that there might be a “contraction bias” due to uncertainty, reflecting a compressed vertical memory. They further speculated that such biases might be experience-dependent, as humans pay more attention to horizontal space directly ahead than to regions above or below it. This view is interesting and might explain the experimental results seen with rats in Jovalekic et al. (Reference Jovalekic, Hayman, Becares, Reid, Thomas, Wilson and Jeffery2011). Finally, young children appear to have more problems in such three-dimensional pointing tasks (Pick & Rieser Reference Pick, Rieser and Potegal1982), once again suggesting that experience may modulate performance in vertical tasks.

In the third part of this article, we will argue that the mammalian spatial representation may be fundamentally planar, with position in the vertical dimension (orthogonal to the plane of locomotion) encoded separately, and in a different (non-metric) way, from position in the horizontal (locomotor) plane. If spatial encoding is indeed planar, this has implications for the encoding of multilevel structures, in which the same horizontal coordinates recur at different vertical levels. On the one hand, this may cause confusion in the spatial mapping system: That is, if the representations of horizontal and vertical dimensions are not integrated, then the navigational calculations that use horizontal coordinates may confuse the levels. As suggested above, this may be why, for example, humans are confused by multilevel buildings. On the other hand, if there is, if not full integration, at least an interaction between the vertical and horizontal representations, then it is possible that separate horizontal codes are able to be formed for each vertical level, with a corresponding disambiguation of the levels. Further studies at both behavioural and neurobiological levels will be needed to determine how multiple overlapping levels are represented and used in navigation.

3.4. Navigation in a volumetric space

Volumetric spaces such as air and water allow free movement in all dimensions. Relatively little navigational research has been conducted in such environments so far. One reason is that it is difficult to track animal behaviour in volumetric spaces. The advent of long-range tracking methods that use global positioning system (GPS) techniques or ultrasonic tagging is beginning to enable research into foraging and homing behaviours over larger distances (Tsoar et al. Reference Tsoar, Nathan, Bartan, Vyssotski, Dell'Omo and Ulanovsky2011). However, many of these studies still address navigation only in the earth-horizontal plane, ignoring possible variations in flight or swim height. Laboratory studies have started to explore navigation in three-dimensional space, though the evidence is still limited.

One of the earliest studies of 3D navigation in rats, the Grobéty and Schenk (Reference Grobéty and Schenk1992a) experiment mentioned earlier, involved a cubic lattice, which allowed the animals to move in any direction at any point within the cube (see Fig. 4A). As noted earlier, it was found that rats learned the correct vertical location before the horizontal location, suggesting that these elements may be processed separately. Grobéty and Schenk suggested that the rats initially paid greater attention to the vertical dimension both because it is salient and because greater effort is required to make vertical as opposed to horizontal translations; therefore, minimizing vertical error reduced their overall effort.

By contrast, Jovalekic et al. (Reference Jovalekic, Hayman, Becares, Reid, Thomas, Wilson and Jeffery2011) found that rats shuttling back and forth between diagonally opposite low and high points within a lattice maze or on a vertical subset of it, the pegboard (see Fig. 4B), did not tend to take the direct route (at least on the upward journey) but preferred to execute the horizontal component of the journey first. However, the separation of vertical from horizontal may have occurred because of the constraints on locomotion inherent in the maze structure: the rats may have found it easier to climb vertically than diagonally. Another experiment, however, also found vertical/horizontal differences in behavior: Jovalekic et al. (Reference Jovalekic, Hayman, Becares, Reid, Thomas, Wilson and Jeffery2011) examined foraging behaviour in the Grobéty and Schenk lattice maze and found that rats tended to retrieve as much of the food as possible on one horizontal layer before moving to the next. These authors observed similar behaviour on a simplified version of this apparatus, the pegboard, where again, foraging rats depleted one layer completely before moving to the next. The implication is that rather than using a truly volumetric representation of the layout, the animals tend to segment the environment into horizontal bands.

A similar propensity to treat three-dimensional environments as mainly horizontal has been reported in humans by Vidal et al. (Reference Vidal, Amorim and Berthoz2004), who studied participants in a virtual 3D maze and found that they performed better if they remained upright and had therefore aligned their egocentric and allocentric frames of reference in one of the dimensions. Similar results were also reported by Aoki et al. (Reference Aoki, Ohno and Yamaguchi2005). Likewise, astronauts in a weightless environment tended to construct a vertical reference using visual rather than vestibular (i.e., gravitational) cues, and remain oriented relative to this reference (Lackner & Graybiel Reference Lackner and Graybiel1983; Tafforin & Campan Reference Tafforin and Campan1994; Young et al. Reference Young, Oman, Watt, Money and Lichtenberg1984).

Finally, one intriguing study has shown that humans may be able to process not only three- but also four-dimensional information (Aflalo & Graziano Reference Aflalo and Graziano2008). In this virtual-reality study, in addition to the usual three spatial dimensions, a fourth dimension was specified by the “hot” and “cold” directions such that turns in the maze could be forward/back, left/right, up/down or hot/cold, and the usual trigonometric rules specified how “movements” in the fourth (hot-cold) dimension related to movements in the other three. Participants were required to path integrate by completing a multi-segment trajectory through the maze and then pointing back to the start. They eventually (after extensive practice) reached a level of performance exceeding that which they could have achieved using three-dimensional reasoning alone. This study is interesting because it is highly unlikely that any animals, including humans, have evolved the capacity to form an integrated four-dimensional cognitive map, and so the subjects' performance suggests that it is possible to navigate reasonably well using a metrically lower-dimensional representation than the space itself. The question, then, is whether one can in fact navigate quite well in three dimensions using only a two-dimensional cognitive map. We return to this point in the next section.

4. Neurobiological studies in three dimensions

So far we have reviewed behavioural research exploring three-dimensional navigation and seen clear evidence that 3D information is used by animals and humans, although the exact nature of this use remains unclear. A parallel line of work has involved recordings from neurons that are involved in spatial representation. This approach has the advantage that it is possible to look at the sensory encoding directly and make fine-grained inferences about how spatial information is integrated. While most of this work has hitherto focused on flat, two-dimensional environments, studies of three-dimensional encoding are beginning to increase in number. Here, we shall first briefly describe the basis of the brain's spatial representation of two-dimensional space, and then consider, based on preliminary findings, how information from a third dimension is (or might be) integrated.

The core component of the mammalian place representation comprises the hippocampal place cells, first identified by O'Keefe and colleagues (O'Keefe & Dostrovsky Reference O'Keefe and Dostrovsky1971), which fire in focal regions of the environment. In a typical small enclosure in a laboratory, each place cell has one or two, or sometimes several, unique locations in which it will tend to fire, producing patches of activity known as place fields (Fig. 7A). Place cells have therefore long been considered to represent some kind of marker for location. Several decades of work since place cells were first discovered have shown that the cells respond not to raw sensory inputs from the environment such as focal odours, or visual snapshots, but rather, to higher-order sensory stimuli that have been extracted from the lower-order sensory data – examples include landmarks, boundaries, and contextual cues such as the colour or odour of the environment (for a review, see Jeffery Reference Jeffery2007; Moser et al. Reference Moser, Kropff and Moser2008).

Figure 7. Firing patterns of the three principal spatial cell types. (a) Firing of a place cell, as seen from an overhead camera as a rat forages in a 1 meter square environment. The action potentials (“spikes”) of the cell are shown as spots, and the cumulative path of the rat over the course of the trial is shown as a wavy line. Note that the spikes are clustered towards the South–East region of the box, forming a “place field” of about 40 cm across. (b) Firing of a head direction cell, recorded as a rat explored an environment. Here, the heading direction of the cell is shown on the x-axis and the firing rate on the y-axis. Note that the firing intensifies dramatically when the animal's head faces in a particular direction. The cell is mostly silent otherwise. (c) Firing of a grid cell (from Hafting et al. Reference Hafting, Fyhn, Molden, Moser and Moser2005), depicted as for the place cell in (a). Observe that the grid cell produces multiple firing fields in a regularly spaced array. Adapted from Jeffery and Burgess (Reference Jeffery and Burgess2006).

For any agent (including a place cell) to determine its location, it needs to be provided with information about direction and distance in the environment. Directional information reaches place cells via the head direction system (Taube Reference Taube2007; Taube et al. Reference Taube, Muller and Ranck1990a), which is a set of structures in areas surrounding the hippocampus whose neurons are selectively sensitive to the direction in which the animal's head is pointing (Fig. 7B). Head direction (HD) cells do not encode direction in absolute geocentric coordinates; rather, they seem to use local reference frames, determined by visual (or possibly also tactile) cues in the immediate surround. This is shown by the now well-established finding that rotation of a single polarizing landmark in an otherwise unpolarized environment (such as a circular arena) will cause the HD cells to rotate their firing directions by almost the same amount (Goodridge et al. Reference Goodridge, Dudchenko, Worboys, Golob and Taube1998; Taube et al. Reference Taube, Muller and Ranck1990b). Interestingly, however, there is almost always a degree of under-rotation in response to a landmark rotation. This is thought to be due to the influence of the prevailing “internal” direction sense, sustained by processing of self-motion cues such as vestibular, proprioceptive, and motor signals to motion. The influence of these cues can be revealed by removing a single polarizing landmark altogether – the HD cells will maintain their previous firing directions for several minutes, although they will eventually drift (Goodridge et al. Reference Goodridge, Dudchenko, Worboys, Golob and Taube1998).
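One simple way to picture how under-rotation could arise is to treat the cell's preferred direction as a weighted circular average of the direction indicated by the landmark and the direction maintained by the internal sense. The sketch below is a toy model offered purely as an illustration: the weighted-vector rule and the `landmark_weight` value of 0.9 are assumptions, not a mechanism or parameter reported in the studies cited.

```python
import math

def combined_heading(landmark_deg, internal_deg, landmark_weight=0.9):
    """Weighted circular mean of two direction estimates, in degrees.
    `landmark_weight` is a hypothetical parameter between 0 and 1."""
    w = landmark_weight
    x = (w * math.cos(math.radians(landmark_deg))
         + (1 - w) * math.cos(math.radians(internal_deg)))
    y = (w * math.sin(math.radians(landmark_deg))
         + (1 - w) * math.sin(math.radians(internal_deg)))
    return math.degrees(math.atan2(y, x)) % 360.0

# The landmark is rotated by 90 degrees while the internal sense still
# signals the original direction (0 degrees).
shift = combined_heading(landmark_deg=90.0, internal_deg=0.0)

# With the landmark removed entirely, only the internal estimate remains.
no_landmark = combined_heading(landmark_deg=90.0, internal_deg=0.0,
                               landmark_weight=0.0)
```

Under these assumptions the preferred direction follows the landmark most of the way but falls a few degrees short of the full 90-degree rotation, and reverts to the internally maintained direction when the landmark carries no weight.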

The internal (sometimes called idiothetic) self-motion cues provide a means of stitching together the directional orientations of adjacent regions of an environment so that they are concordant. This was first shown by Taube and Burton (Reference Taube and Burton1995), and replicated by Dudchenko and Zinyuk (Reference Dudchenko and Zinyuk2005). However, although there is this tendency for the cells to adopt similar firing directions in adjacent environments, especially if the animal self-locomoted between them, this is not absolute and cells may have discordant directions, flipping from one direction to the other as the animal transitions from one environment to the next (Dudchenko & Zinyuk Reference Dudchenko and Zinyuk2005). This tendency for HD cells to treat complex environments as multiple local fragments is one that we will return to later.

The source of distance information to place cells is thought to lie, at least partly, in the grid cells, which are neurons in the neighbouring entorhinal cortex that produce multiple place fields that are evenly spaced in a grid-like array, spread across the surface of the environment (Hafting et al. Reference Hafting, Fyhn, Molden, Moser and Moser2005; for review, see Moser & Moser Reference Moser and Moser2008) (Fig. 7c). The pattern formed by the fields is of the type known as hexagonal close-packed, which is the most compact way of tiling a plane with circles.
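The geometry of such an array is easy to generate. The sketch below lays out idealized field centres on a hexagonal lattice and checks that each interior field has six equidistant nearest neighbours. It is a geometric illustration only: the spacing of 0.4 m, the number of rings, and the function names are assumptions for the example, and real grid fields are of course noisier than this.

```python
import math

def grid_field_centres(spacing, n_rings=3, orientation_deg=0.0):
    """Idealized grid-field centres: integer combinations of two lattice
    vectors of equal length separated by 60 degrees."""
    theta = math.radians(orientation_deg)
    a1 = (spacing * math.cos(theta), spacing * math.sin(theta))
    a2 = (spacing * math.cos(theta + math.pi / 3),
          spacing * math.sin(theta + math.pi / 3))
    centres = []
    for i in range(-n_rings, n_rings + 1):
        for j in range(-n_rings, n_rings + 1):
            centres.append((i * a1[0] + j * a2[0], i * a1[1] + j * a2[1]))
    return centres

def nearest_neighbours(centres, point, spacing, tol=1e-6):
    """Centres lying exactly one grid spacing away from `point`."""
    return [c for c in centres
            if abs(math.hypot(c[0] - point[0], c[1] - point[1]) - spacing) < tol]

centres = grid_field_centres(spacing=0.4)
neighbours = nearest_neighbours(centres, (0.0, 0.0), spacing=0.4)

# Fraction of the plane covered by equal circles in this arrangement.
packing_density = math.pi / (2 * math.sqrt(3))
```

The constant neighbour distance is what makes the pattern a candidate distance metric, and the packing density of roughly 0.907 is the maximum achievable for equal circles in a plane.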

Grid cell grids always maintain a constant orientation for a given environment (Hafting et al. Reference Hafting, Fyhn, Molden, Moser and Moser2005), suggesting a directional influence that probably comes from the head-direction system, as suggested by the finding that the same manipulations that cause HD firing directions to rotate also cause grids to rotate. Indeed, many grid cells are themselves also HD cells, producing their spikes only when the rat is facing in a particular direction (Sargolini et al. Reference Sargolini, Fyhn, Hafting, McNaughton, Witter, Moser and Moser2006). More interesting, however, is the influence of odometric (distance-related) cues on grid cells. Because the distance between each firing field and its immediate neighbours is constant for a given cell, the grid cell signal can theoretically act as a distance measure for place cells, and it is assumed that this is what they are for (Jeffery & Burgess Reference Jeffery and Burgess2006) though this has yet to be proven. The source of distance information to the grid cells themselves remains unknown. It is likely that some of the signals are self-motion related, arising from the motor and sensory systems involved in commanding, executing, and then detecting movement through space. They may also be static landmark-related distance signals – this is shown by the finding that subtly rescaling an environment can cause a partial rescaling of the grid array in the rescaled dimension (Barry et al. Reference Barry, Hayman, Burgess and Jeffery2007), indicating environmental influences on the grid metric. It seems that when an animal enters a new environment, an arbitrary grid pattern is laid down, oriented by the (also arbitrary) HD signal. This pattern is then “attached” to that environment by learning processes, so that when the animal re-enters the (now familiar) environment, the same HD cell orientation, and same grid orientation and location, can be reinstated.

Given these basic building blocks of the mammalian spatial representation, we turn now to the question of how these systems may cope with movement in the vertical domain, using the same categories as previously: slope, multilayer environments, and volumetric spaces.

4.1. Neural processing of verticality cues

The simplest form of three-dimensional processing is detection of the vertical axis. Verticality cues are those that signal the direction of gravity, or equivalently, the horizontal plane, which is defined by gravity. The brain can process both static and dynamic verticality cues: Static information comprises detection of the gravitational axis, or detection of slope of the terrain underfoot, while dynamic information relates to linear and angular movements through space and includes factors such as effort. Both static and dynamic processes depend largely on the vestibular apparatus in the inner ear (Angelaki & Cullen Reference Angelaki and Cullen2008) and also on a second set of gravity detectors (graviceptors) located in the trunk (Mittelstaedt Reference Mittelstaedt1998). Detection of the vertical depends on integration of vestibular cues, together with those from the visual world and also from proprioceptive cues to head and body alignment (Angelaki et al. Reference Angelaki, McHenry, Dickman, Newlands and Hess1999; Merfeld et al. Reference Merfeld, Young, Oman and Shelhamer1993; Merfeld & Zupan Reference Merfeld and Zupan2002).

The vestibular apparatus is critical for the processing not only of gravity but also of movement-related inertial three-dimensional spatial cues. The relationship of the components of the vestibular apparatus is shown in Figure 8a, while the close-up in Figure 8b shows these core components: the otolith organs, of which there are two – the utricle and the saccule – and the semicircular canals. The otolith organs detect linear acceleration (including gravity, which is a form of acceleration) by means of specialized sensory epithelium. In the utricle the epithelial layer is oriented approximately horizontally and primarily detects earth-horizontal acceleration, and in the saccule it is oriented approximately vertically and primarily detects vertical acceleration/gravity. The two otolith organs work together to detect head tilt. However, because gravity is itself a vertical acceleration, there is an ambiguity concerning how much of the signal is generated by movement and how much by gravity – this “tilt-translation ambiguity” appears to be resolved by means of the semicircular canals (Angelaki & Cullen Reference Angelaki and Cullen2008). These are three orthogonal fluid-filled canals oriented in the three cardinal planes, one horizontal and two vertical, which collectively can detect angular head movement in any rotational plane.
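The tilt-translation ambiguity, and the way rotational information can in principle resolve it, can be illustrated with a deliberately simplified two-dimensional sketch. Everything here is an assumption made for the example: the single shear axis, the sign convention, the noise-free integration of canal signals, and the function names. It is not a description of the actual neural computation.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def utricle_shear(tilt_deg, forward_accel):
    """Shear signal along the head's forward axis (simplified 2D model):
    a gravity component from head tilt plus an inertial component."""
    tilt = math.radians(tilt_deg)
    return G * math.sin(tilt) + forward_accel * math.cos(tilt)

def estimate_tilt_from_canals(angular_velocity_deg_s, dt):
    """Integrate canal-like angular velocity samples to estimate tilt (degrees)."""
    return sum(w * dt for w in angular_velocity_deg_s)

def resolve_linear_accel(shear, tilt_estimate_deg):
    """Remove the gravity component implied by the tilt estimate."""
    tilt = math.radians(tilt_estimate_deg)
    return (shear - G * math.sin(tilt)) / math.cos(tilt)

# Scenario A: head tilted by 10 degrees, no translation.
shear_a = utricle_shear(tilt_deg=10.0, forward_accel=0.0)
# Scenario B: head upright, accelerating forward by the matching amount.
shear_b = utricle_shear(tilt_deg=0.0, forward_accel=G * math.sin(math.radians(10.0)))

# The otolith-like signal alone cannot tell the two scenarios apart,
# but the rotation history differs: a 10 deg/s rotation for 1 s versus none.
tilt_a = estimate_tilt_from_canals([10.0] * 10, dt=0.1)
tilt_b = estimate_tilt_from_canals([0.0] * 10, dt=0.1)
accel_a = resolve_linear_accel(shear_a, tilt_a)
accel_b = resolve_linear_accel(shear_b, tilt_b)
```

In this toy case the two scenarios yield identical shear signals, yet once the integrated rotation is taken into account the first is correctly attributed to tilt (zero linear acceleration) and the second to translation.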

Figure 8. The vestibular apparatus in the human brain. (a) Diagram showing the relationship of the vestibular apparatus to the external ear and skull. (b) Close-up of the vestibular organ showing the detectors for linear acceleration (the otolith organs – comprising the utricle and saccule) and the detectors for angular acceleration (the semicircular canals, one in each plane). (Taken from: http://www.nasa.gov/audience/forstudents/9-12/features/F_Human_Vestibular_System_in_Space.html)

Neural encoding of direction in the vertical plane (tilt, pitch, roll, etc.) is not yet well understood. Head direction cells have been examined in rats locomoting on a vertical plane, and at various degrees of head pitch, to see whether the cells encode vertical as well as horizontal direction. Stackman and Taube (Reference Stackman and Taube1998) found pitch-sensitive cells in the lateral mammillary nuclei (LMN). However, these were not true volumetric head direction cells, because their activity was not at all related to horizontal heading direction. Nor did they correspond to the vertical-plane equivalent of HD cells: Most of the observed cells had pitch preferences clustered around the almost-vertical, suggesting that these cells were doing something different from simply providing a vertical counterpart of the horizontal direction signal. By contrast, the HD cells in LMN were insensitive to pitch, and their firing was uniformly distributed in all directions around the horizontal plane. Thus, vertical and horizontal directions appear to be separately and differently represented in this structure.

In a more formal test of vertical directional encoding, Stackman et al. (Reference Stackman, Tullman and Taube2000) recorded HD cells as rats climbed moveable wire mesh ladders placed vertically on the sides of a cylinder. When the ladder was placed at an angular position corresponding to the cell's preferred firing direction, the cell continued to fire as the animal climbed the ladder, but did not fire as the animal climbed down again. Conversely, when the ladder was placed on the opposite side of the cylinder, the reverse occurred: now the cell remained silent as the animal climbed up, but started firing as the animal climbed down. This suggests that perhaps the cells were treating the plane of the wall in the same way that they usually treat the plane of the floor. Subsequently, Calton and Taube (Reference Calton and Taube2005) showed that HD cells fired on the walls of an enclosure in a manner concordant with the firing on the floor, and also informally observed that the firing rate seemed to decrease in the usual manner when the rat's head deviated from the vertical preferred firing direction. This observation was confirmed in a follow-up experiment in which rats navigated in a spiral trajectory on a surface that was either horizontal or else vertically aligned in each of the four cardinal orientations (Taube et al. Reference Taube, Wang, Kim and Frohardt2013). In the vertical conditions, the cells showed preferred firing directions on the vertical surface in the way that they usually do on a horizontal surface. When the vertical spiral was rotated, the cells switched to a local reference frame and maintained their constant preferred firing directions with respect to the surface.

What are we to make of these findings? In the Calton and Taube (2005) experiment, the HD cells seemed to be acting as if the walls were an extension of the floor – in other words, as if the pitch transformation, when the rat transitioned from horizontal to vertical, had never happened. A possible conclusion is that HD cells are insensitive to pitch, which accords with the LMN findings of Stackman and Taube (1998). This has implications for how three-dimensional space might be encoded and the limitations thereof, which we return to in the final section. In the spiral experiment described by Taube et al. (2013), it further appears that the reference frame provided by the locomotor surface became entirely disconnected from the room reference frame – when the rat was clinging to the vertical surface, the cells became insensitive to the rotations in the azimuthal plane that would normally modulate their firing on a horizontal plane. The idea of local reference frames is one that we will also come back to later.

The Calton and Taube (2005) experiment additionally replicated an observation made in microgravity, which is that head direction cells lose their directional specificity, or show greatly degraded directional firing, during inverted locomotion on the ceiling (Taube et al. 2004). In another study, head direction cells were monitored while animals were held by the experimenter and rotated by 360 degrees (relative to the horizontal plane) in the upright and in the inverted positions, as well as by 180 degrees in the vertical plane (Shinder & Taube 2010). Although the animal was restrained, head direction cells displayed clear directionality in the upright and vertical positions, and no directionality in the inverted position. Additionally, directionality in the vertical position was apparent until the animal was almost completely inverted. These findings confirm those of the previous studies (Calton & Taube 2005; Taube et al. 2004), that loss of HD cell directionality is a feature of the inverted position, at least in rats.

This breakdown in signalling may have arisen from an inability of the HD cells to reconcile visual evidence for the 180-degree heading-reversal with the absence of a 180-degree rotation in the plane to which they are sensitive (the yaw plane). This finding is consistent with a recent behavioural study by Valerio et al. (2010). Here, rats were trained to locomote while clinging upside-down to the underside of a circular arena, in order to find an escape hole. Animals were able to learn fixed routes to the hole but could not learn to navigate flexibly in a mapping-like way, suggesting that they had failed to form a spatial representation of the layout of the arena. Taking these findings together, then, it appears that the rodent cognitive mapping system is not able to function equally well at all possible orientations of the animal.

What about place and grid cells in vertical space? Processing of the vertical in these neurons has been explored in a recent study by Hayman et al. (2011). They used two environments: a vertical climbing wall (the pegboard), and a helix (Fig. 9). In both environments, place and grid cells produced vertically elongated fields, with grid fields being more elongated than place fields, thus appearing stripe-like (Fig. 9e). Hence, although elevation was encoded, its representation was not as fine-grained as the horizontal representation. Furthermore, the absence of periodic grid firing in the vertical dimension suggests that grid cell odometry was not operating in the same way as it does on the horizontal plane.

Figure 9. Adapted from Hayman et al. (2011). (a) Photograph of the helical maze. Rats climbed up and down either five or six coils of the maze, collecting food reward at the top and bottom, while either place cells or grid cells were recorded. (b) Left – The firing pattern of a place cell (top, spikes in blue) and a grid cell (bottom, spikes in red), as seen from the overhead camera. The path of the rat is shown in grey. The place cell has one main field with a few spikes in a second region, and the grid cell has three fields. Right – the same data shown as a heat plot, for clarity (red = maximum firing, blue = zero firing). (c) The same data as in (b) but shown as a firing rate histogram, as if viewed from the side with the coils unwound into a linear strip. The single place field in (b) can be seen here to repeat on all of the coils, as if the cell is not discriminating elevation, but only horizontal coordinates. The grid cell, similarly, repeats its firing fields on all the coils. (d) Photograph of the pegboard, studded with wooden pegs that allowed the rat to forage over a large vertical plane. (e) Left – The firing pattern of a place cell (top, spikes in blue) and a grid cell (bottom, spikes in red) as seen from a horizontally aimed camera facing the pegboard. The place cell produced a single firing field, but this was elongated in the vertical dimension. The grid cell produced vertically aligned stripes, quite different from the usual grid-like pattern seen in Fig. 8c. Right – the same data as a heat plot.

One possible explanation is that grid cells do encode the vertical axis, but at a coarser scale, such that the periodicity is not evident in these spatially restricted environments. If so, then the scale or accuracy of the cognitive map, at least in the rat, may be different in horizontal versus vertical dimensions, possibly reflecting the differential encoding requirements for animals that are essentially surface-dwelling. An alternative possibility, explored below, is that while horizontal space is encoded metrically, vertical space is perhaps encoded by a different mechanism, possibly even a non-grid-cell-dependent one. Note that because the rats remained horizontally oriented during climbing, it is also possible that it is not the horizontal plane so much as the current plane of locomotion that is represented metrically, while the dimension normal to this plane (the dorso-ventral dimension with respect to the animal) is represented by some other means. Indeed, given the HD cell data discussed above, this alternative seems not only possible but likely. Thus, if the rats had been vertically oriented in these experiments, perhaps fields would have had a more typical, hexagonal pattern.

It is clear from the foregoing that much remains to be determined about vertical processing in the navigation system – if there is a vertical HD-cell compass system analogous to the one that has been characterized for the horizontal plane, then it has yet to be found and, if elevation is metrically encoded, the site of this encoding is also unknown. The cells that might have been expected to perform these functions – head direction, place, and grid cells – do not seem to treat elevation in the same way as they treat horizontal space, which argues against the likelihood that the mammalian brain, at least, constructs a truly integrated volumetric map.

We turn now to the question of what is known about the use of three-dimensional cues in navigationally relevant computations – processing of slopes, processing of multilayer environments, and processing of movement in volumetric space.

4.2. Neural encoding of a sloping surface

Investigation of neural encoding of non-horizontal surfaces is only in the early stages, but preliminary studies have been undertaken in both the presence and the absence of gravity. In normal gravity conditions, a slope is characterized by its steepness with respect to earth-horizontal, which provides important constraints on path integration. If the route between a start location and a goal includes a hill, more ground has to be covered along the surface to achieve the same straight-line distance than would be needed on flat ground. Furthermore, routes containing slopes require more energy to traverse than do routes without slopes, requiring a navigator to trade off distance against effort in undulating terrain. Therefore, one might imagine that slope should be incorporated into the cognitive map, and neural studies allow for an investigation of this issue that is not feasible with behavioural studies alone.
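The geometric constraint that slope places on path integration can be illustrated with a short calculation. The sketch below assumes a uniform slope of constant steepness, which is a simplification of real terrain, and the function names are hypothetical. It shows how far an odometer that measures distance along the surface would overestimate horizontal displacement if slope were ignored.

```python
import math


def surface_distance(horizontal_distance, slope_deg):
    """Distance travelled along a uniform slope to cover a horizontal distance."""
    if not 0.0 <= slope_deg < 90.0:
        raise ValueError("slope_deg must be in [0, 90)")
    return horizontal_distance / math.cos(math.radians(slope_deg))


def horizontal_displacement(distance_on_surface, slope_deg):
    """Earth-horizontal displacement achieved by a given distance on the slope."""
    if not 0.0 <= slope_deg < 90.0:
        raise ValueError("slope_deg must be in [0, 90)")
    return distance_on_surface * math.cos(math.radians(slope_deg))


if __name__ == "__main__":
    for slope in (0.0, 15.0, 30.0, 45.0):
        extra = surface_distance(1.0, slope) - 1.0
        print(f"slope {slope:4.1f} deg: {extra * 100:5.1f}% extra ground per unit of horizontal distance")
```

On flat ground the two measures coincide. As the slope steepens they diverge by a factor of 1/cos(slope), so a path integrator working only from surface distance would need some estimate of steepness to recover earth-horizontal position.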

The effect of terrain slope has been explored for place cells but not yet for grid cells. The earliest place cell study, by Knierim and McNaughton (2001), investigated whether place fields would be modulated by the tilt of a rectangular track. A priori, one possible outcome of this experiment was that place fields would expand and contract as the tilted environment moved through intrinsically three-dimensional, ovoid place fields. However, fields that were preserved following the manipulation did not change their size, and many place cells altered their firing altogether on the track, evidently treating the whole tilted track as different from the flat track. One interpretation of these findings is that the track remained the predominant frame of reference (the “horizontal” from the perspective of the place cells), and that the tilt was signalled by the switching on and off of fields, rather than by parametric changes in place field morphology.

This study also showed that slope could be used as an orienting cue: In rotations of the tilted track, cells were more likely to be oriented by the track (as opposed to the plethora of distal cues) than in rotations of the flat track. This observation was replicated in a subsequent study by Jeffery et al. (2006), who found that place cells used the 30-degree slope of a square open arena as an orienting cue after rotations of the arena, and preferred the slope to local olfactory cues. Presumably, because the cells all reoriented together, the effect was mediated via an effect on the head direction system. Place cells have also been recorded from bats crawling on a near-vertical (70-degree tilt) open-field arena (Ulanovsky & Moss 2007). Firing fields were similar to rodent place fields on a horizontal surface, a pattern that is consistent with the fields using the surface of the environment as their reference plane (because if earth-horizontal were the reference, then fields should have been elongated along the direction of the slope, as in the Hayman et al. [2011] experiment; see Fig. 9e).

Grid cells have not yet been recorded on a slope. The pattern they produce will be informative, because their odometric properties will allow us to answer the question of whether the metric properties of place fields in horizontal environments arise from distances computed in earth-horizontal or with respect to the environment surface. The results discussed above lead us to predict the latter.

Only one place cell study has been undertaken in the absence of gravity, in an intriguing experiment by Knierim and colleagues, conducted on the Neurolab Space Shuttle mission of 1998 (Knierim et al. 2000; 2003). Rats were implanted with microelectrodes for place cell recording, and familiarized with running on a rectangular track on Earth, before being carried in the shuttle into space. Under microgravity conditions in space, the animals were then allowed to run on a 3D track, the “Escher staircase,” which bends through three-dimensional space such that three consecutive right-angle turns in the yaw plane of the animal, interspersed with three pitch rotations, lead the animal back to its starting point (Fig. 10a). The question was whether the cells could lay down stable place fields on the track, given that the visual cues might have conflicted with the path integration signal if the path integrator could not process the 3D turns. On the rats' first exposure to the environment on flight day 4, the cells showed somewhat inconsistent patterns, with those from one rat showing degraded spatial firing, those from a second rat showing a tendency to repeat their (also degraded) firing fields on each segment of the track, and those from a third looking like normal place fields. By the second recording session, on flight day 9, place fields from the first two rats had gained normal-looking properties, with stable place fields (Fig. 10b). This suggests either that the path integrator could adapt to the unusual conditions and integrate the yaw and pitch rotations, or else that the visual cues allowed the construction of multiple independent planar representations of each segment of the track.
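The potential conflict between visual cues and a yaw-only path integrator on such a track can be illustrated by composing rotations. The sketch below assumes that each pitch rotation is also a right angle (the text above specifies right angles only for the yaw turns), that rotations are applied in the animal's own body frame, and that yaw is a rotation about the dorso-ventral axis and pitch a rotation about the interaural axis. The matrix names and the `run_lap` helper are hypothetical.

```python
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Exact integer rotation matrices for 90-degree rotations.
YAW_90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]    # about the body z (dorso-ventral) axis
PITCH_90 = [[0, 0, 1], [0, 1, 0], [-1, 0, 0]]  # about the body y (interaural) axis


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def run_lap(n_corners=3):
    """Compose alternating yaw and pitch turns, as on one lap of the track.

    Returns the animal's final 3D orientation (as a rotation matrix) and
    the total yaw, in degrees, that a yaw-only integrator would register.
    """
    orientation = IDENTITY
    yaw_total_deg = 0
    for _ in range(n_corners):
        # Body-frame (intrinsic) rotations compose by right-multiplication.
        orientation = matmul(orientation, YAW_90)
        orientation = matmul(orientation, PITCH_90)
        yaw_total_deg += 90
    return orientation, yaw_total_deg


if __name__ == "__main__":
    final_orientation, yaw_total = run_lap()
    print("back at starting orientation:", final_orientation == IDENTITY)
    print("yaw registered by a yaw-only integrator:", yaw_total, "degrees")
```

Under these assumptions the animal's three-dimensional orientation returns exactly to its starting value after one lap, yet an integrator that tracks only yaw has registered 270 degrees rather than the 360 degrees that a closed loop on a flat surface would require. This mismatch is the kind of conflict with visual cues that the experiment was designed to probe.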

Figure 10. (a) The “Escher staircase” track, on which rats ran repeated laps while place fields were recorded. (b) The firing field of a place cell, showing a consistent position on the track, indicating either the use of visual cues or of three-dimensional path integration (or both) to position the field. (From Knierim et al. [2000] with permission.)