The regularity and near universality in the steps children take from very early in life toward the acquisition of a native language show how deeply embedded language is in our biology. Yet, the vast ways in which experience and learning contribute to and shape acquisition reveal language to be, simultaneously, perhaps our most experientially sensitive and responsive cognitive attribute. Perception, cognition, learning, social understanding, and culture all come together in contributing to the emergence of this quintessential human capability.

While we are prepared by evolution to acquire any language, it is of course the native language we must ultimately acquire; as such, we acquire language in interaction with other members of our community. The first window infants have into that acquisition comes via their perceptual systems: in listening to, watching, and perhaps imitating language long before they know any words. My research program traces the perceptual foundations of language acquisition, starting in infancy and even prenatal development. A question that has driven this work almost from its inception is when and how experience with the native language changes perceptual sensitivities, and just how these changing speech perception sensitivities set the stage for, and intersect with, successful language learning. While I have always argued that there are bidirectional influences between perceptual development and the achievement of various milestones in language development (see Figure 1), the emphasis in much of my writing has been on how attunement to the perceptual properties of the native language guides and bootstraps acquisition (see Wojcik, de la Cruz Pavía, & Werker, 2017, for the most recent such focus). The current review will also highlight the ways in which language function and use drive perceptual attunement.

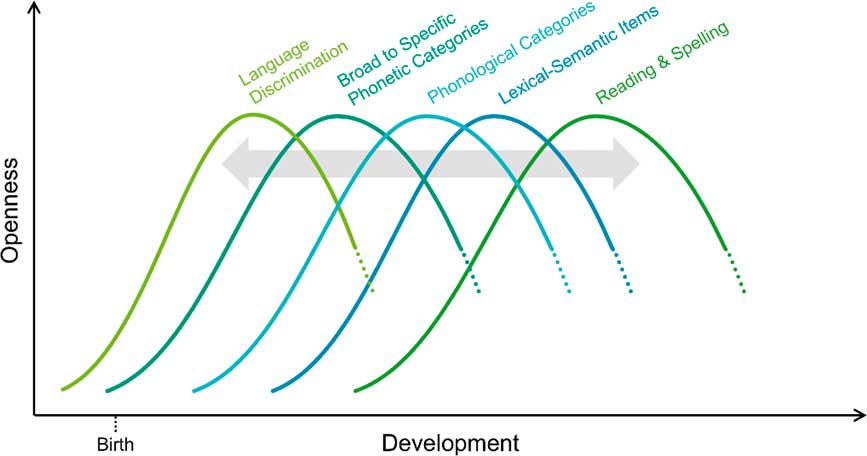

Figure 1 An illustration of the cascading and interacting nature of the steps in language acquisition in infancy. The curves indicate the opening, peak, and diminishment of critical or sensitive periods in the development and mastery of that particular milestone. The overlapping nature of the peaks points to their mutual influence and overlapping timing of emergence. For example, phonological categories and lexical items could emerge simultaneously and be mutually influential. The arrow indicates that these influences are not merely bidirectional, but can also occur across levels. For example, learning two words (lexical–semantic items) that differ in only a single phonetic feature might also influence the attunement to native phonetic categories.

To this end, I will first present descriptive findings on the character of early speech perception, the neural organization that gets us started, and the timing of how speech perception changes across the first year of life. Both monolingual and bilingual infants will be considered. I will then describe research in which we have attempted to identify the “mechanisms of change,” learning or otherwise, that underlie the movement from language-general to language-specific phonetic perception, and the relation between speech perception and language understanding. In the process, I will also outline broader theoretical frameworks we have proposed over the years to account for these changes, while also providing the reader with a sense of how these frameworks have been modified with the accrual of new data. Woven into the review will be the question of whether there are “critical” or “sensitive” periods in development during which input is most effective and why, as well as the question of whether speech perception is best characterized and studied as an acoustic-only phenomenon or whether to fully understand the development of speech perception we need to focus on speech as a multisensory phenomenon. The theme of the current review is to consider the extent to which speech perception development not only sets the stage for, but is also influenced by, the acquisition of meaning.

PERCEPTUAL FOUNDATIONS AT BIRTH

By the time they are born, human infants are already well prepared for language acquisition. At birth, babies show a preference for speech over other types of complex sounds (Vouloumanos & Werker, 2007) and use distinct brain systems when processing speech sounds (Dehaene-Lambertz, Dehaene, & Hertz-Pannier, 2002; May, Byers-Heinlein, Gervain, & Werker, 2011; May, Gervain, Carreiras, & Werker, 2017; Minagawa-Kawai et al., 2011; Peña et al., 2003), with some support for greater involvement of the left hemisphere (Dehaene-Lambertz, 2000; Peña et al., 2003; Perani et al., 2011). The boundaries on infants’ perceptual preference for language are revealing: they extend beyond human speech to other primate vocalizations (Vouloumanos, Hauser, Werker, & Martin, 2010), but not to noncommunicative vocalizations such as coughs, yawns, or sneezes (Shultz, Vouloumanos, Bennett, & Pelphy, 2014), leading to the suggestion that these areas of the human brain are broadly specialized for processing “communicative” sounds. However, in our work, neural activation in these same areas is also seen for natural sounds (e.g., all kinds of moving water) that have a similar fractal structure to speech (Gervain, Werker, Black, & Geffen, 2016), but not for either forward or backward Silbo Gomero, a whistled language that is used by shepherds in the Canary Islands (May et al., 2017). Silbo Gomero, a “surrogate” language that shares properties with its Spanish base, is never acquired as a first language, but once acquired, it is decidedly communicative, whereas water is not. Thus, appealing as the distinction is, it is not merely the communicative versus noncommunicative nature of speech that underlies its neural specialization, but something as well about the match between its properties and the resolving capabilities of the related brain structures. By 3 months of age, English-learning infants no longer include other primate calls in the class of vocalizations they prefer (Shultz et al., 2014; Vouloumanos et al., 2010), showing a rapid increase in the precision of the type of vocal communication to which they attend.

By birth, infants also show evidence of experiential influences on both speech perception and its neural foundations. Neonates prefer their mother’s voice (DeCasper & Fifer, 1980) and the language heard in utero (Mehler et al., 1988; Moon, Cooper, & Fifer, 1993), with neonates from a bilingual environment demonstrating a preference for both of their native languages (Byers-Heinlein, Burns, & Werker, 2010). Moreover, although the temporal and inferior frontal areas in the neonate brain are more activated to forward versus backward speech, the differential activation to forward speech is more pronounced for (and in some comparisons, only seen for) the language heard in utero (May et al., 2011, in press; Minagawa-Kawai et al., 2011). Thus, from the earliest ages tested, it is apparent that babies at birth are prepared to learn language (any language) but have also already begun the journey toward becoming a specialized listener to, and learner of, their native language.

Newborn infants have a number of other perceptual sensitivities that prepare them for language acquisition in general. They discriminate languages from different rhythmical classes (Mehler et al., 1988); show a preference for, and specialized processing of, well-formed syllables (Gómez et al., 2014); and perceptually discriminate the two major grammatical classes of words: content versus function words (Shi, Werker, & Morgan, 1999). All of these abilities become further attuned to the properties of the native language over the first months of life. For example, infants become better able to discriminate their own language from another language from within the same rhythmical class by 4 months of age if bilingual (Bosch & Sebastián-Gallés, 1997) or 5 months of age if monolingual (Nazzi, Jusczyk, & Johnson, 2000); develop a preference for the phonotactic regularities (Jusczyk, Luce, & Charles-Luce, 1994) and stress patterns (Jusczyk, Cutler, & Redanz, 1993) used in their native language by 8–9 months of age; and become experts by 8–9 months as well at precisely which grammatical words occur in their native language (Shi, Werker, & Cutler, 2006) and where (e.g., phrase initial or phrase final; Gervain, Nespor, Mazuka, Horie, & Mehler, 2008), and further, use this knowledge to segment words (Shi & Lepage, 2008) and phrases (Gervain et al., 2008; Gervain & Werker, 2013) in a language-specific manner. By the time they are 18 months old and beginning to learn words, infants selectively associate with objects only those forms that sound like words in their native language (e.g., MacKenzie, Graham, & Curtin, 2011; May & Werker, 2014).

From early in life, listening to speech selectively enhances and is enhanced by communicative and referential functions, with attunement in this process as well. In the presence of speech sounds, but not tones, infants as young as 3 months detect the category structure of a set of pictures (e.g., detect the category dinosaurs; Ferry, Hespos, & Waxman, 2013). At this young age, rhesus monkey calls also facilitate object categorization, but no longer do so by 4 months (Perszyk & Waxman, 2016). Similarly, at 5 months, infants look longer at pictures of human faces when hearing speech and at monkey faces when hearing primate calls, but do not look longer at duck faces when hearing duck sounds (Vouloumanos, Druhen, Hauser, & Huizink, 2009), which indicates they have some expectation of the source of potentially meaningful vocalizations. By 12 months, they show expectations that speech, but not nonspeech, can communicate unobservable intentions (Vouloumanos, Onishi, & Pogue, 2012) and potential ways to interact with objects (Martin, Onishi, & Vouloumanos, 2012); they also show an expectation for information from speakers of their native language (Begus, Gliga, & Southgate, 2016). Thus, in addition to the preference infants show for speech from early in life, they also seem more readily prepared to link speech (over other kinds of sounds) to meaning and communicative intent.

An important caveat to much of the above-mentioned work on the unique role speech sounds play in facilitating categorization and word learning is that, in the presence of referential cues, infants will treat nonspeech or nonnative speech as functionally linguistic. For example, if first familiarized to a video of two people conversing, but tones rather than words appear to come from the mouth of one of the two interlocutors, then infants will successfully detect the category structure of a set of objects when presented with tones in a categorization task soon thereafter (Ferguson & Waxman, 2016). As another example, if first familiarized with three known word–object pairings, which serve to indicate that the current task is one of object labeling, 18-month-old infants will better link nonnative word forms to objects (May & Werker, 2014). Thus, while the human brain may be specialized to detect speech and to use speech most naturally for categorization and labeling, if provided with evidence that the context of a sound presentation is the exchange of meaning, infants will accept other kinds of sounds as speech as well. This is another example of the two-way interaction between form and meaning: while learning about the form provides infants with candidate sounds and/or words for learning meanings, the search for meaning also influences the way that infants process and treat different kinds of sounds. Thus, again, higher order aspects of language use can “reach down” into perception.

PERCEPTUAL ATTUNEMENT TO PHONETIC CATEGORIES

Since the early 1970s, we have known that infants can discriminate syllables differing in single phonetic features, whether they be in the initial consonant; in place, manner, or voicing; or in the height or roundedness of the vowel (Eimas, Siqueland, Jusczyk, & Vigorito, 1971; see Kuhl, 2004, and Werker & Curtin, 2005, for reviews). These findings have been strengthened by more recent work using event-related potentials, magnetoencephalography, and functional near-infrared spectroscopy measures for phonetic discrimination (see Gervain & Mehler, 2010; Kuhl, 2010, for reviews). Discrimination is most robust when acoustic–phonetic variations cross, rather than come from within, known natural language phoneme distinctions (Eimas et al., 1971; Werker & Lalonde, 1988) and involves the same left hemisphere temporal areas activated in adult phonetic discrimination (Dehaene-Lambertz & Gliga, 2004).

It has also been known since the 1970s that, in infants younger than 6 months of age, perceptual sensitivity extends to nonnative phonetic contrasts (e.g., Streeter, 1976; Werker, Gilbert, Humphrey, & Tees, 1981), including acoustically similar nonnative distinctions that adults who are not speakers of that language have difficulty discriminating (see Werker, 1989, for a review). This pattern of broad-based initial sensitivities, which supports discrimination of both native and nonnative consonant distinctions in very young infants, changes across the first year of life such that infants show a decline in discrimination of nonnative phonetic distinctions (e.g., Werker & Tees, 1984) and improved discrimination of native ones (e.g., Kuhl et al., 2006; Narayan, Werker, & Beddor, 2010). Referred to as perceptual attunement or perceptual narrowing, this pattern of decline in nonnative and improvement in native discrimination has been shown as well for the perception of vowel distinctions (e.g., Kuhl, Williams, Lacerda, Stevens, & Lindblom, 1992; Polka & Werker, 1994), lexical tone differences (e.g., Mattock & Burnham, 2006; Yeung, Chen, & Werker, 2013), and even categorical perception of hand sign (Baker, Golinkoff, & Petitto, 2006; Palmer, Fais, Golinkoff, & Werker, 2012).

Studies with infants growing up bilingual show a similar pattern of change, but with (perhaps) some variation. While some studies indicate that during the period of perceptual attunement, bilingual infants go through a stage where they discriminate best the phoneme distinctions that are most common across their two languages and collapse less frequent ones (Bosch & Sebastián-Gallés, 2003), other studies (Sundara, Polka, & Molnar, 2008), even some with the same population of bilingual infants tested by Bosch and Sebastián-Gallés (2003), indicate that if more sensitive testing paradigms are used, the developmental trajectory is the same in bilingual and monolingual infants (Albareda-Castellot, Pons, & Sebastián-Gallés, 2011). While there are some differences reported across the first year of life, the data are nevertheless consistent in indicating that bilingual infants discriminate the phoneme contrasts of each of their native languages by the end of the first year of life (e.g., Burns, Yoshida, Hill, & Werker, 2007; Bosch & Sebastián-Gallés, 2003).

Perceptual attunement, or narrowing of phonetic discrimination from broad based to language specific, is the most common pattern of reorganization seen across the first year of life in phonetic discrimination (see Maurer & Werker, 2014). However, there are other patterns of change as well. Discrimination of some distinctions, such as the contrast between /ng/ and /n/ in the initial position, is not seen in young infants, and does not emerge until later in infancy, following several months of listening experience with that distinction in one’s native language (Narayan, Werker, & Beddor, 2010; see also Kuhl et al., 2006; Sato, Sogabe, & Mazuka, 2010). Similarly, some nonnative and never (or seldom) heard distinctions remain discriminable across the lifetime, failing to show a pattern of narrowing or decline. The best known example here is Zulu clicks, which adult non-click speakers can continue to discriminate across the lifetime (Best, McRoberts, & Sithole, 1997). A more recent example is rising versus falling (T2–T4) Mandarin tonal distinctions. Dutch-learning infants show a decline in discrimination of this distinction between 4–7 and 8–11 months of age, but do not fully stop discriminating, and then show an increase again after that age (Liu & Kager, 2014). Even in behavioral studies, earlier influences of the ambient language are apparent, for example, on the internal structure of native phonetic vowel categories (Kuhl, 1991), in preference for syllables containing native lexical tones among tone-language learning infants (see Yeung et al., 2013), and even for vowels heard in utero (Moon, Lagercrantz, & Kuhl, 2013). As well, the process of decline in discrimination of nonnative phonetic distinctions may appear earlier for vowels than for consonants (Polka & Werker, 1994).

MECHANISMS OF ATTUNEMENT

Timing

A continuing question in the literature, and one we have attempted to address as well, is what mechanisms underlie the establishment of native phonological categories. At the neurophysiological level, our initial findings (e.g., Werker & Tees, 1984) were taken as potential evidence for pruning of exuberant connectivity, with initial preparation for discrimination of both native and nonnative distinctions and subsequent refinement to just native ones. While this may be the case at some level of analysis, in the years following, animal model work has yielded increasingly sophisticated information about the number of different ways circuits can be kept open and closed (see Werker & Tees, 2005, for a review of some of these). One line of work in my lab, which was reviewed in-depth in Werker and Hensch (2015), examines the question of whether there are critical periods in speech perception development. Working within a model developed by Hensch (e.g., Hensch, 2005; Takesian & Hensch, 2013) delineating the molecular, neurophysiological, and experiential events that support the onset and closing of periods of plasticity in the brain, we have investigated the effect of different types of exposure and experience on the timing of speech perception development. This work indicates that while premature birth does not accelerate the timing of phonetic attunement (it corresponds to gestational rather than chronological age; see Peña, Werker, & Dehaene, 2012), exposure to certain classes of drugs may accelerate the onset of plasticity while experience with (untreated) maternal depression may delay it (Weikum, Oberlander, Hensch, & Werker, 2012). Bilingual babies also seem to show openness longer (Petitto et al., 2012; Pi Casaus, Sebastián-Gallés, Werker, & Bonatti, 2015; see also Sebastián-Gallés, Albareda, Weikum, & Werker, 2012, for visual language discrimination) but it is not known whether this reflects a timing delay at the circuit level, presumably caused by less exposure to/experience with each language, or an attentional advantage from simultaneously acquiring two languages. Given that this work was reviewed fairly comprehensively in Werker and Hensch (2015), it will not be the focus of the current paper.

Process

Another question we and others have addressed is what is the process by which infants become attuned to the phonetic categories that are used in their native languages? Do they learn them through simple passive learning? Through mapping sound onto meaning? What types of interactional contexts best support such learning?

Prior to the explosion of research showing sophisticated perceptual sensitivities to phonetic distinctions in young infants, it was assumed that a phonological system was built through establishing a lexicon. Here it was assumed that as children accrued a sufficient vocabulary, they would begin to establish lexical representations in which the words were highly similar phonologically, and that, through “contrast,” they would establish the repertoire of native phonemes (Trubetskoy, 1969/1958). The necessary lexicon was thought to include, for example, actual minimal pair words such as “goat” and “coat” for an English-learning infant (Barton, 1980), but also sufficient items that differed in distributed, individual features across words to eventually point to a matrix. However, with the advent of studies showing that infants begin life discriminating speech according to phonetic category boundaries, and that phonetic perceptual development includes narrowing and sharpening as well as building, this view began to fall into disfavor (see Brown & Matthews, 1997). Although many of us felt it important to make a distinction between phonetic category learning and phonological contrast (Pater, Stager, & Werker, 2004; Werker & Logan, 1985), the fact that native phonetic categories could be established prior to the establishment of a lexicon was deemed to require a new explanation. Thus, with the advent of findings from the infant speech perception field, researchers began to look for explanations that could occur prior to the establishment of a lexicon. Peter Jusczyk, in his word recognition and phonetic structure acquisition (WRAPSA) model, discussed the shrinking and stretching of perceptual space, in loose connection with familiarization with word forms (Jusczyk, 1993). Patricia Kuhl proposed a “magnet effect,” whereby highly familiar items, akin to category prototypes, would serve to attract similar variants and reduce discrimination among them (see Kuhl, 1993; Kuhl et al., 2008). Catherine Best put forward similar notions, but based on “perceptual assimilation” to similar articulatory gestures rather than acoustic distinctiveness (Best, 1994). However, while useful descriptive frameworks for the observed patterns of results, these frameworks lacked empirical operationalization and prediction.

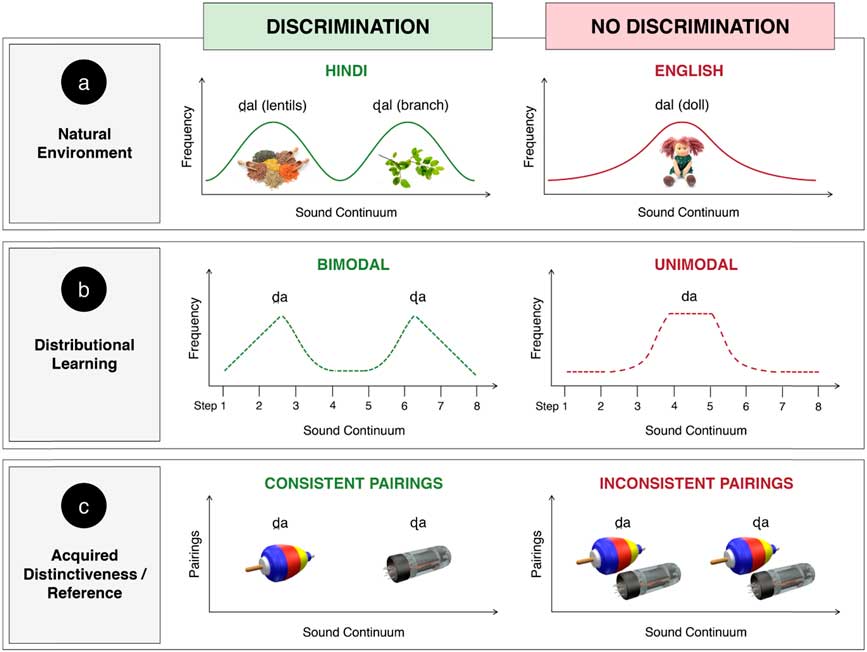

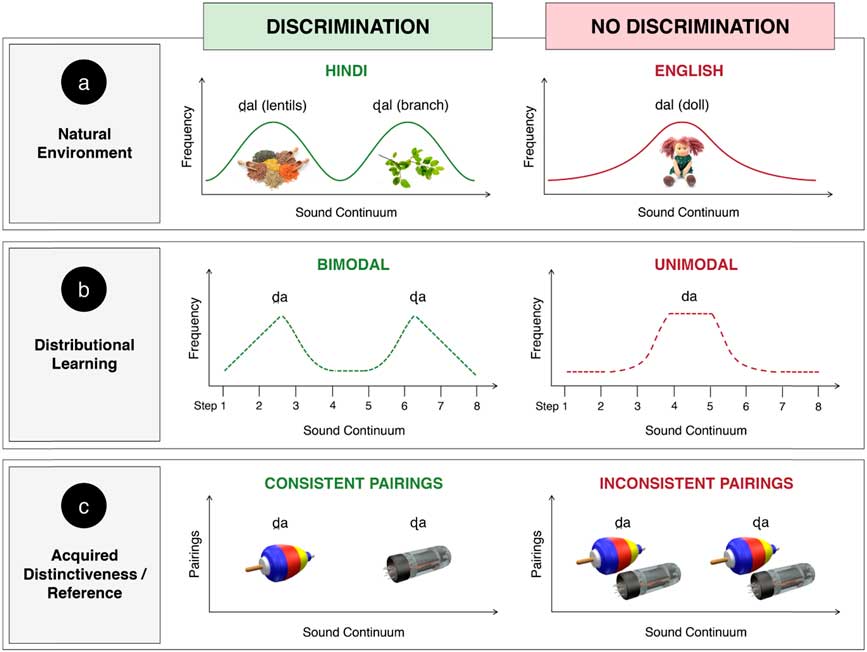

At around this time, Saffran, Aslin, and Newport (1996) published their seminal paper showing that infants are superb at tracking statistics in the input (in their case, transitional probabilities between syllables) as a means of pulling out words. Building on work she had initially completed with adults (Maye & Gerken, 2001), when she joined my lab, Jessica Maye asked whether infants could similarly use statistical regularities in the speech they hear, even without knowing how those speech sounds related to meaning, to pull out the phonetic categories of their native language (Maye, Werker, & Gerken, 2002). We reasoned that infants growing up in a language that distinguished a particular contrast would likely hear instances of those phones in a bimodal distribution, whereas infants growing up in a language that did not distinguish a contrast would likely hear those phones in something closer to a unimodal distribution (see Figure 2a and 2b for an example). We modeled this in a “distributional learning” paradigm wherein infants aged 6–8 months were familiarized to a d–t continuum that had acoustic cues unlike those used in English, and then tested their ability to discriminate the two categories. While all the infants heard all eight steps along this continuum, half were familiarized to a bimodal distribution in which there were more exemplars near the end points (in Figure 2b, Steps 2 and 7), and half were familiarized to a unimodal distribution in which there were more exemplars of the two midpoints (in Figure 2b, Steps 4 and 5). Following familiarization, both groups were tested with stimuli that both groups had experienced with the same frequency during the familiarization phase (in Figure 2b, Steps 1 and 8). Only infants familiarized to the bimodal distribution succeeded. In subsequent work, Maye, Weiss, and Aslin (2008) showed that infants not only could maintain or learn to collapse a phonetic category on the basis of distributional frequency but also could generalize what they had learned to a new phonetic contrast in which the same phonetic dimension (voice-onset time) was the critical distinguishing cue. Enhancement of initial sensitivity via distributional learning has also been shown for (a retroflex but not an alveolar–palatal) fricative discrimination in young infants (Cristia, McGuire, Seidl, & Francis, 2011), and for an acoustically impoverished ba/da contrast, but here only with the inclusion as well of visual cues (Teinonen, Aslin, Alku, & Csibra, 2008).
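
To make the logic of the two familiarization conditions concrete, the following is a minimal Python sketch of how token frequencies along an eight-step continuum might be laid out. The per-step counts are hypothetical and chosen only for illustration: they place the bimodal peaks at Steps 2 and 7 and the unimodal peaks at Steps 4 and 5, and they keep Steps 1 and 8 equally frequent in both conditions, as described above. They are not the frequencies used in the published studies, and the names `BIMODAL`, `UNIMODAL`, `familiarization_stream`, and `peaks` are assumptions introduced here.

```python
import random
from collections import Counter

# Hypothetical token counts per continuum step (1..8); illustration only.
# Both conditions contain the same total number of tokens, and the
# end-point steps (1 and 8) occur equally often in each.
BIMODAL = {1: 4, 2: 16, 3: 8, 4: 4, 5: 4, 6: 8, 7: 16, 8: 4}
UNIMODAL = {1: 4, 2: 4, 3: 8, 4: 16, 5: 16, 6: 8, 7: 4, 8: 4}


def familiarization_stream(counts, seed=0):
    """Expand per-step counts into a shuffled list of continuum steps."""
    tokens = [step for step, n in counts.items() for _ in range(n)]
    random.Random(seed).shuffle(tokens)  # fixed seed keeps the order reproducible
    return tokens


def peaks(counts):
    """Return the step(s) that occur most often in a condition."""
    top = max(counts.values())
    return sorted(step for step, n in counts.items() if n == top)


if __name__ == "__main__":
    for name, counts in (("bimodal", BIMODAL), ("unimodal", UNIMODAL)):
        stream = familiarization_stream(counts)
        print(name, "peaks at steps", peaks(counts), "| total tokens:", len(stream))
```

The point of the design is that any difference in later discrimination of Steps 1 and 8 cannot be attributed to how often those particular tokens were heard, only to the shape of the distribution around them.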

Figure 2 An illustration of speech contrast discrimination in infancy within the context of three “learning” environments: (a) natural, (b) distributional, and (c) acquired distinctiveness and/or reference. The top panel (a) illustrates the variability in the pronunciation of “da” an infant might hear in a natural language environment, depending on whether the infant is growing up learning Hindi (left), in which dental /d̪a/ and retroflex /ɖa/ are contrastive, or English (right), in which they are not. The middle panel (b) illustrates how the variability present in natural learning environments (Hindi-learning infants encounter /da/ phones in a bimodal distribution and English-learning infants encounter /da/ phones in a unimodal distribution) is modeled in studies of distributional learning, using relative frequencies of sounds that are created along an eight-step continuum from /d̪a/ to /ɖa/. In such studies, English-learning infants will only discriminate /d̪a/ from /ɖa/ if they are first familiarized with a bimodal distribution. The bottom panel (c) illustrates how the same variability is modeled in studies of acquired distinctiveness: two consistent sound–object pairings are presented to highlight the distinction between /d̪a/ and /ɖa/ in comparison to inconsistent pairings. English-learning infants will only discriminate if they are first familiarized with the consistent pairings. For other nonnative distinctions, ostensive or other cues to the referential nature of the word–object pairing are required in addition to acquired distinctiveness to enable discrimination. Figure and legend adapted from “How Do Infants Become Experts at Native-Speech Perception?” by J. F. Werker, H. H. Yeung, and K. A. Yoshida, 2012, Current Directions in Psychological Science, 21, 221–226.

The studies reviewed above provided support for the hypothesis that prior to establishing a lexicon to help with contrast, infants can track statistical regularities in the input (McMurray, Aslin, & Toscano, 2009). By the time they are 9–10 months of age and have begun to attune to the phonetic categories used in their native language, passive statistical learning is less effective. By this age, 4 rather than 2 minutes of prefamiliarization is required to lead to changes in category structure (Yoshida, Pons, Maye, & Werker, 2010), and even then, the results are not as robust or as generalizable as those reported with younger infants. By 18 months of age (Maye, personal communication) and in adulthood (Hayes-Harb, 2007; Maye & Gerken, 2001), while distributional learning can modify perceptual discrimination, listeners still fail overall to use these cues to discriminate nonnative phonetic distinctions. By 9–10 months, learning appears more robust when there is contingent social interaction, even without an explicitly presented bimodal versus unimodal massed presentation (Kuhl, Tsao, & Liu, 2003; Meltzoff & Kuhl, 2016), and/or when the phonetic category bifurcation or collapse co-occurs with the presentation of distinct objects (Yeung & Werker, 2009). Still working within a statistical learning frame, we first conceptualized this latter finding as a form of “acquired distinctiveness.” Building on the animal perceptual learning literature (see Kluender, Lotto, Holt, & Bloedel, 1998; Lawrence, 1949), we suggested that the pairing of two perceptually similar syllables with two more obviously distinct objects helped English-learning infants attend to, and pull apart, the two nonnative (Hindi) syllables and thus more successfully discriminate them (see Figure 2c). Subsequent work reviewed below, however, suggested that pairing two nonnative syllables with two different objects may actually involve more abstract phonological knowledge guiding perceptual learning.
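
One way to see why consistent pairings could, in principle, carry information that inconsistent pairings lack is to tabulate how well each object predicts the syllable heard with it. The Python sketch below does this for two hypothetical trial lists. The labels (`dental_da`, `retroflex_da`, `object_A`, `object_B`), the trial counts, and the function `syllable_given_object` are assumptions made for illustration; this is not the design or the analysis of the studies cited above.

```python
from collections import Counter


def syllable_given_object(trials):
    """Estimate P(syllable | object) from a list of (syllable, object) trials."""
    pair_counts = Counter(trials)
    object_counts = Counter(obj for _, obj in trials)
    return {
        (syl, obj): n / object_counts[obj]
        for (syl, obj), n in pair_counts.items()
    }


# Hypothetical familiarization trials (labels and counts are illustrative).
# Consistent: each syllable always appears with the same object.
consistent = [("dental_da", "object_A"), ("retroflex_da", "object_B")] * 4

# Inconsistent: each syllable appears equally often with both objects.
inconsistent = [
    ("dental_da", "object_A"),
    ("dental_da", "object_B"),
    ("retroflex_da", "object_A"),
    ("retroflex_da", "object_B"),
] * 2

if __name__ == "__main__":
    print(syllable_given_object(consistent))
    print(syllable_given_object(inconsistent))
```

In the consistent list, each object fully predicts its syllable; in the inconsistent list, the object is uninformative about which syllable accompanies it, even though both lists contain the same syllables and objects equally often.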

Two subsequent studies involved testing 9- to 10-month-old infants on distinctions that are not used for lexical contrast (to distinguish two words) in their native language, but that nonetheless occur as paralinguistic cues. In the first study, we tested English-learning infants on their sensitivity to a rising versus flat tone difference as used in Mandarin and Cantonese (Yeung, Chen, & Werker, Reference Yeung, Chen and Werker2014). As noted earlier, by 9–10 months, English-learning infants have difficulty discriminating this difference (Mattock & Burnham, Reference Mattock and Burnham2006; Mattock, Molnar, Polka, & Burnham, Reference Mattock, Molnar, Polka and Burnham2008; Yeung et al., Reference Yeung, Chen and Werker2014). In English, differences in tone are used functionally (e.g., to signal a question vs. a statement, to relinquish the floor in a conversation, for emphasis, and so on) but are not used to distinguish two words as they are in tone languages such as Mandarin. Thus, English-learning infants may have actively learned to ignore tone differences as signals of lexical contrast. In addition, with the two syllables differing only in rising versus level tone, simple pairing of the two syllables with two different objects was not sufficient to improve discrimination. Instead, the infants had to first be given some evidence that the two different syllables referred to objects. Specifically, they had to first be primed with three known word–object pairings (e.g., hearing the word dog when shown a picture of a dog) and then presented with the new word–object pairings. Only with the addition of these referential trials was there an improvement in tone discrimination (see Fennell & Waxman, Reference Fennell and Waxman2010, for the genesis of the referential manipulation).

In the second study, Yeung and Nazzi (Reference Yeung and Nazzi2014) used a similar paradigm and extended these findings to lexical stress. In French, stress is not used phonemically to contrast the meaning of individual words, and French infants aged 9–10 months do not discriminate stress differences as well as do infants from languages in which it is used to distinguish meaning (Höhle, Bijeljac-Babic, Herold, Weissenborn, & Nazzi, Reference Höhle, Bijeljac-Babic, Herold, Weissenborn and Nazzi2009; Skoruppa et al., Reference Skoruppa, Pons, Christophe, Bosch, Dupoux, Sebastián-Gallés and … Peperkamp2009). Like tone in English, stress is used only pragmatically in French, and so, using the same logic as before, the infants may have already learned to ignore stress on words as indicative of contrast. Here again the addition of cues signaling a referential context enabled infants to learn from the consistent word–object pairings and to improve in discrimination and lexical use of stress (Yeung & Nazzi, Reference Yeung and Nazzi2014). In this work, the referential manipulation included both presentation of known word–object pairs before the pairing of new words with unknown objects (as in Yeung et al., Reference Yeung, Chen and Werker2014) as well as ostensive pointing cues (see Wu, Gopnik, Richardson, & Kirkham, Reference Wu, Gopnik, Richardson and Kirkham2011), thus employing both reference and social interaction.

THE LINK BETWEEN CHANGING PERCEPTUAL SENSITIVITIES AND LATER LANGUAGE

An increasing body of work shows a link between changing speech perception sensitivities and their subsequent use in language processing and language learning. Word-recognition tasks indicate that infants can recognize known words and distinguish them from minimal pair distinctions as used in their native language as early as 10–12 months of age (e.g., Mani & Plunkett, Reference Mani and Plunkett2010). Word-learning tasks indicate that infants have difficulty using language-specific phonological knowledge to guide them in the initial stages of word learning (e.g., Stager & Werker, Reference Stager and Werker1997; Swingley & Aslin, Reference Swingley and Aslin2007), but that by 18 months of age, phonological knowledge does guide word learning across a variety of tasks (Swingley, Reference Swingley2007; Swingley & Aslin, Reference Swingley and Aslin2000; Werker, Fennell, Corcoran, & Stager, Reference Werker, Fennell, Corcoran and Stager2002). One task we used to assess minimal pair word learning is the Switch task. In this procedure, infants are habituated to two word–object pairings: object A with nonsense word A, and object B with nonsense word B. Following habituation, they are tested on whether they have learned the words by being shown, in sequence, a word–object pairing that matches their experience in the habituation phase and then a word–object pairing that is mismatched (e.g., object A with nonsense word B). Longer looking to the “switch” trial indicates that infants have learned the link. Our initial work indicated that while 14-month-old infants can perform reliably in this task when the nonsense syllables are phonologically distinct items such as “lif” and “neem,” they have difficulty with minimal pair items such as “bih” and “dih” (Stager & Werker, Reference Stager and Werker1997; Werker, Cohen, Lloyd, Stager, & Casasola, Reference Werker, Cohen, Lloyd, Stager and Casasola1998).
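The trial logic of the Switch task described above can be made concrete with a small sketch. This is only an illustration under stated assumptions: the class and function names are hypothetical, the looking times are invented, and the simple difference score is not the statistical analysis used in the cited studies.

```python
from dataclasses import dataclass
from statistics import mean

# Habituation pairings as described in the text: object A with word A,
# object B with word B. The labels are placeholders, not real stimuli.
HABITUATION = {"object A": "word A", "object B": "word B"}


def is_switch(obj: str, word: str) -> bool:
    """True when a test pairing violates the habituated word-object link."""
    return HABITUATION[obj] != word


@dataclass
class TestTrials:
    same_s: float    # looking time (s) on the trial matching habituation
    switch_s: float  # looking time (s) on the mismatched "switch" trial


def switch_effect(infants: list[TestTrials]) -> float:
    """Mean (switch - same) looking-time difference across infants.

    A positive value is the pattern the text interprets as evidence that
    the infants learned the link; this score is an assumed simplification.
    """
    return mean(t.switch_s - t.same_s for t in infants)


# Invented looking times for two hypothetical infants.
sample = [TestTrials(same_s=5.0, switch_s=8.0),
          TestTrials(same_s=6.0, switch_s=7.0)]
effect = switch_effect(sample)
```

Here `is_switch("object A", "word B")` flags the mismatched test pairing, and a positive `effect` corresponds to longer looking on the switch trial.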

These early studies indicated that infants fail to perform reliably on minimal pair distinctions until 18–20 months of age (Werker et al., Reference Werker, Fennell, Corcoran and Stager2002), and can continue to have difficulty until an even later age if they are growing up bilingual (Fennell, Byers-Heinlein, & Werker, Reference Fennell, Byers-Heinlein and Werker2007). From a number of studies that replicated and then extended these results, we now know that if the computational demands of the task are simplified, infants can learn minimal pair words at a younger age. For example, prefamiliarizing monolingual infants with either the word forms (Fennell & Werker, Reference Fennell and Werker2003) or the objects (Fennell, Reference Fennell2012) allows them to succeed at 14 months, as does testing them in a two-choice, side-by-side looking task where they can compare the two objects and look to the one that most closely matches the word with which it was previously paired (Yoshida, Fennell, Swingley, & Werker, Reference Yoshida, Fennell, Swingley and Werker2009). They can also succeed at this younger age if the minimally different syllables are presented in distinct syllabic contexts (Thiessen, Reference Thiessen2007), presented in stressed syllables (Archer, Ference, & Curtin, Reference Archer, Ference and Curtin2014), or if multiple speakers produce the words during the familiarization phase (Rost & McMurray, Reference Rost and McMurray2009). Similarly, we now know that bilingual-learning infants can pass these tasks as early as monolingual-learning infants if the nonsense words better match the phonetic form they are typically hearing in their day-to-day language environment (Fennell & Byers-Heinlein, Reference Fennell and Byers-Heinlein2014; Mattock, Polka, Rvachew, & Krehm, Reference Mattock, Polka, Rvachew and Krehm2010).
That is, if they are typically hearing speech from a bilingual adult, bilingual infants perform best when the nonsense words are pronounced by a bilingual, whereas monolingual infants perform best when the nonsense words are pronounced by a monolingual speaker of their language (and show difficulty if the words are produced by a bilingual speaker). By 18–24 months, monolingual and bilingual infants appear to perform similarly (Singh, Hui, Chan, & Golinkoff, Reference Singh, Hui, Chan and Golinkoff2014).

In attempting to understand the difficulty that 14- but not 18-month-old infants have in using native phonetic categories to guide word learning when they are not given extra support by contextually relevant cues, a number of us proposed frameworks for understanding the link between speech perception development and phonological/lexical acquisition. In our PRIMIR model (Curtin & Werker, Reference Curtin and Werker2007; Werker & Curtin, Reference Werker and Curtin2005), Suzanne Curtin and I built on the evidence showing that while young infants are sensitive to phonetic contrast, they are also sensitive to a number of other acoustic–phonetic cues in speech. They discriminate pitch (Nazzi, Floccia, & Bertoncini, Reference Nazzi, Floccia and Bertoncini1998), stress (Sansavini, Bertoncini, & Giovanelli, Reference Sansavini, Bertoncini and Giovanelli1997), and individual voices (as evident, e.g., in their preference for the mother’s voice; DeCasper & Fifer, Reference DeCasper and Fifer1980). All of these aspects of the speech signal are important as all of them are informative: they signal differences in emphasis, carry information about speaker identity, and so on. In many languages, however, including English, these cues are strictly paralinguistic and do not signal a difference in meaning between two words. In PRIMIR we suggest that 14-month-old infants might weigh these paralinguistic cues as heavily as they do lexically contrastive information, and thus can attend to the contrastive information only if it is highlighted and/or if the computational demands of the word-learning situation are minimized (see Werker & Fennell, Reference Werker and Fennell2004, for an earlier version of this as a “computational resources” explanation).
We reasoned that by 18 months, when they have a sufficiently large vocabulary, “phonological” categories would likely be prioritized, and so when encountering a word and an object together, infants would treat the word as a label and hence attend to the phonetic features rather than to other acoustic cues. This in turn would enable them to more easily use phonological contrast to guide their word learning. Proposals focusing on a shift to phonological contrast have been put forward by several other authors as well, ranging from those taking a lexical perspective (Heitner, Reference Heitner2004; Swingley, Reference Swingley2009) to those taking a more distributional perspective (Beckman & Edwards, Reference Beckman and Edwards2000; Thiessen, Kronstein, & Hufnagle, Reference Thiessen, Kronstein and Hufnagle2013).

In further support, working jointly with Swingley, we found that at 18 months of age it is phonological categories rather than just language-specific phonetic categories that guide word learning in the Switch task. We compared English- and Dutch-learning infants on their ability to link words differing in vowel color (/tam/ vs. /tem/) and words differing in short versus long vowels (/tam/ vs. /taam/) to two different objects. The vowel color distinction is phonemic in both English and Dutch, whereas the vowel duration distinction is phonemic in Dutch but not in English, yet English infants this age can discriminate vowel length differences in a nonsense syllable discrimination task (Mugitani, Pons, Fais, Werker, & Amano, Reference Mugitani, Pons, Fais, Werker and Amano2009). As predicted, both the English and Dutch infants succeeded at learning the word–object association when the words differed in vowel color, but only the Dutch infants succeeded when the words differed in vowel duration (Dietrich, Swingley, & Werker, Reference Dietrich, Swingley and Werker2007).

Building from these findings, Fennell and Waxman (Reference Fennell and Waxman2010) proposed that if 14-month-old infants were put in a situation wherein it was clear that the word–object pairings were about reference, then even at this younger age they should be able to use minimal pair phonetic differences to guide word learning. To test this prediction, infant performance following two “referential” manipulations was compared to their performance in the standard task. In one referential manipulation, prior to habituation to the two minimally different word pairs with two different objects, infants were first shown three known word–object pairings in an attempt to “inform” the infants that this task was about reference. This manipulation was sufficient to boost infants’ performance, enabling them to learn the two phonetically similar words. A second referential manipulation wherein infants were presented the test words in very short sentences rather than in isolation also boosted performance (Fennell & Waxman, Reference Fennell and Waxman2010). In our lab we found similar results: when the referent was indicated by a pointing interlocutor, 14-month-old infants also showed better performance (Fais et al., Reference Fais, Werker, Brown, Leibowich, Barbosa and Vatikiotis-Bateson2012). Thus, we have again an example of the bidirectional relationship between phonetic and lexical development.

The minimal pair word-learning task requires infants to use native phonological categories to guide word learning. As such, we reasoned it might provide a sensitive diagnostic for children at risk for a phonological delay. Thus, in two longitudinal studies we investigated whether performance in the standard minimal pair Switch word-learning task in the toddler years would predict later phonological knowledge and preliteracy. In the first study, with only 16 infants, we found a very strong relationship (Bernhardt, Kemp, & Werker, Reference Bernhardt, Kemp and Werker2007). However, in a second, more tightly controlled longitudinal study with a larger sample, including children from more diverse socioeconomic status backgrounds than in our usual studies, this predictive relation did not replicate, with the only hint of a relation being found among the poorest performing toddlers who also had low vocabulary (Kemp et al., Reference Kemp, Scott, Bernhardt, Johnson, Siegel and Werker2017). Although this raises the possibility that the task could help identify those slow talkers who might have an actual language delay, the finding applied to only 4 children and was discovered during exploratory post hoc analyses. Thus, the link is at best tentative and must be explored further.

IS THERE BIDIRECTIONALITY IN PERCEPTUAL AND LANGUAGE DEVELOPMENT?

At the time that we were initially conducting these speech perception and word learning studies, consensus knowledge indicated that infants only begin to recognize words toward the end of the first year of life (Fenson et al., Reference Fenson, Dale, Reznick, Bates, Thal, Pethick and … Stiles1994). While maternal reports (from studies using CDI vocabulary checklists) sometimes indicated understanding of a few very common words by 8–10 months of age, the numbers were small and in many cases no words at all were checked off as understood. Similarly, although a few experimental studies provided evidence that infants as young as 6 months old can look reliably more to very common referents (specifically feet vs. hands and other body parts; Tincoff & Jusczyk, Reference Tincoff and Jusczyk1999 and 2012, respectively) in the presence of the relevant word, and that infants as young as 4 months old can respond to their own names (Mandel, Jusczyk, & Pisoni, Reference Mandel, Jusczyk and Pisoni1995), these instances were seen as high-frequency exceptions. More recently, a growing number of studies have indicated that infants detect the match between words and objects for far more referents and at a far earlier age than anyone had expected. In a widely cited study, Bergelson and Swingley (Reference Bergelson and Swingley2012) reported that if highly familiar objects are used, infants as young as 6 months old show evidence of looking longer to matching than to nonmatching objects. This effect is particularly pronounced if familiar voices are used to produce the words (Bergelson & Swingley, Reference Bergelson and Swingleyin press). Such precocious word recognition finds a plausible mechanism in studies showing that as early as 3 months of age, neural responses indicate rapid learning of the association of words and objects (Friedrich & Friederici, Reference Friedrich and Friederici2017). 
What is still unknown is the extent to which these early word–object associative linkings carry the same referential weight as does lexical understanding in older infants, or whether they reveal at best “proto-words.”

With the increasing evidence that the foundations for referential word learning are in place far earlier than once expected, we can again consider the idea that establishment of native speech sound categories, even in the earliest stages of infant development, involves acquisition of sound categories within the context of the earliest appreciation of differential meaning. That is, rather than perceptual attunement being a prerequisite to word meaning, with the phonological categories of the native language subsequently being used to direct word learning, it seems increasingly likely that the appreciation of what words mean and of what phonetic distinctions are phonological in the native language emerge in parallel. In his writings, Heitner (Reference Heitner2004) credits Roger Brown with this insight in his 1958 “Word Game.” In my lab, it was an insight Henny Yeung also independently introduced in his (unpublished) master’s thesis (Reference Yeung2005), arguing, as does Heitner, that just as the presence of distinct words guides category learning (à la Waxman), so, too, does the presence of distinct objects guide speech sound categorization (see Swingley, Reference Swingley2009, for similar arguments). It was this hypothesis that guided the design and implementation of Yeung’s empirical work, reviewed above, showing that the presence of two distinct objects can help infants as young as 9 months old continue to distinguish nonnative contrasts (Yeung et al., Reference Yeung, Chen and Werker2014; Yeung & Nazzi, Reference Yeung and Nazzi2014; Yeung & Werker, Reference Yeung and Werker2009).

The suggestion that word–object co-occurrences can also guide speech perception development is different from the original Trubetskoy (Reference Trubetskoy1969/1958) notion, which argued that children must first establish a lexicon and only thereafter could a system of contrast be constructed. In the current approach, object categories and speech sound categories develop together in a mutually informative fashion. With the evidence that the presence of words drives categorization from early in life (Ferry et al., Reference Ferry, Hespos and Waxman2013; Waxman, Reference Waxman2004), the evidence that vocabulary learning begins far earlier than we previously expected (Bergelson & Swingley, Reference Bergelson and Swingley2012; Tincoff & Jusczyk, Reference Tincoff and Jusczyk1999, Reference Tincoff and Jusczyk2012), and the emerging evidence that the presence of distinct objects drives speech sound categorization (Yeung et al., Reference Yeung, Chen and Werker2014; Yeung & Nazzi, Reference Yeung and Nazzi2014; Yeung & Werker, Reference Yeung and Werker2009), we are well positioned to begin looking earlier in infancy, in the period during which perceptual attunement takes place, for the co-emergence of object and speech sound categorization. This is not to argue that statistical learning does not also play an important role. Distributional learning of language-specific phonetic (rather than phonological) categories could yield exactly those kinds of stable percepts on which a cyclical “word game” could then operate. However, rather than necessarily having to come first, statistical learning of native phonetic categories could also occur concurrently with semantically driven acquisition, with the statistical regularities and object mappings providing simultaneous converging sources of confirmation.
In this way, listening to speech and noting its functional significance could influence and be influenced by the development of native language phonetic categories, the emergence of native language phonological categories, and a conventionalized understanding of the kinds of semantic distinctions that are encoded in the native language.

A FINAL CONSIDERATION: SPEECH PERCEPTION IS MULTISENSORY

Visual influences

As noted at the outset, infant speech perception is not only auditory, but is, like adult speech perception, richly multisensory. Perceptual attunement has been shown to apply to speech perception in other sensory modalities (e.g., vision) as well as to multisensory speech perception (e.g., audiovisual integration). For example, young but not older infants can discriminate rhythmically distinct languages just by watching silent talking faces. When habituated to multiple speakers producing sentences in one language but with the sound turned off, and then shown those same speakers producing new sentences in either the old language or in a rhythmically distinct language, infants 4 and 6 months of age show a recovery in looking time to the language switch (Weikum et al., Reference Weikum, Vouloumanos, Navarra, Soto-Faraco, Sebastián-Gallés and Werker2007). By 8 months of age, monolingual-learning infants no longer do so, whereas bilingual-learning infants maintain the ability to discriminate the two languages visually (Sebastián-Gallés et al., Reference Sebastián-Gallés, Albareda, Weikum and Werker2012; Weikum et al., Reference Weikum, Vouloumanos, Navarra, Soto-Faraco, Sebastián-Gallés and Werker2007).

We have also known for some time that from as early as 4 months old (Kuhl & Meltzoff, Reference Kuhl and Meltzoff1982; Patterson & Werker, Reference Patterson and Werker1999) and even 2 months old (Patterson & Werker, Reference Patterson and Werker2003), infants can match heard with seen phonetic information. For example, when shown two side-by-side faces of the same person, one producing an /i/ and the other an /a/, infants will look to the side that matches what they hear when presented with an auditory /i/ or /a/. Preferential looking to the match in the first 6 months of life is seen not only for native speech but also for nonnative speech sounds (Danielson, Bruderer, Kandhadai, Vatikiotis-Bateson, & Werker, Reference Danielson, Bruderer, Kandhadai, Vatikiotis-Bateson and Werker2017; Kubicek et al., Reference Kubicek, de Boisferon, Dupierrix, Pascalis, Loevenbruck, Gervain and Schwarzer2014; Pons, Lewkowicz, Soto-Faraco, & Sebastián-Gallés, Reference Pons, Lewkowicz, Soto-Faraco and Sebastián-Gallés2009).

Just as auditory phonetic perception attunes across the first year of life, so does audiovisual (AV) matching. Spanish lacks a /v/ phone and hence lacks a ba-va phonetic distinction. Yet young Spanish infants will look preferentially to a face articulating /va/ or /ba/ when hearing the matching syllable, but stop doing so by 9–10 months of age (Pons et al., Reference Pons, Lewkowicz, Soto-Faraco and Sebastián-Gallés2009). We see related results for nonmatching speech: at 6 but not 11 months of age, infants look differently to the face when viewing mismatching, nonnative AV speech displays than when viewing matching ones (Danielson et al., Reference Danielson, Bruderer, Kandhadai, Vatikiotis-Bateson and Werker2017). This provides another example of AV perceptual attunement. Moreover, the presentation of matching AV speech seems to facilitate discrimination of an otherwise difficult native distinction at 6–8 months of age (Teinonen et al., Reference Teinonen, Aslin, Alku and Csibra2008) and of an otherwise nondiscriminable nonnative vowel difference at 9–11 months of age (Ter Schure, Junge, & Boersma, Reference Ter Schure, Junge and Boersma2016; but see Danielson et al., Reference Danielson, Bruderer, Kandhadai, Vatikiotis-Bateson and Werker2017, for a lack of facilitation in nonnative consonant discrimination).

AV interactions continue to influence speech processing as infants move into word recognition and word learning. At 12–13 months of age, infants detect auditory mispronunciations of familiar words in both auditory-only and AV matched conditions, but fail to detect mispronunciations when the AV display is mismatched (Weatherhead & White, Reference Weatherhead and White2017). By 18 months of age, if first taught a word–object match with only auditory information, infants will look correctly to the match in both an auditory-only (A-only) and in a visual-only (V-only) test condition (Havy, Foroud, Fais, & Werker, Reference Havy, Foroud, Fais and Werker2017). Infants of this age cannot, however, learn the pairing when given only visual facial information in the training phase, whereas adults can. In this study, we first presented infants with a frontal view of a woman pronouncing two nonsense words, but with no referent object. This brief AV exposure was followed by a training period wherein the woman turned toward an object and pointed at it while she produced the word. Two word–object pairings were taught. In the A-only training condition, her pointing arm covered the lower half of her face, preventing access to most of the visual phonetic information. In the V-only training condition, her pointing arm was below the face, thus showing the infants the articulatory movements, but the sound was turned off. The subsequent test phase assessed word recognition in a preferential looking task wherein the two objects were presented side by side, and the infant either heard (A-only) or saw (V-only) the woman presenting one of the words. Word learning was evident by longer looking to the match. As noted above, toddlers could learn the words in the A-only training condition (they subsequently demonstrated word recognition in both the A-only and V-only testing conditions), but they could not learn the words in the V-only training condition, whereas adults could.

While not the focus of this article, it should be noted that visual influences may also play a role in the perceptual bootstrapping of syntactic structure. Young infants can use both word frequency (Gervain et al., Reference Gervain, Nespor, Mazuka, Horie and Mehler2008) and prosody (Bernard & Gervain, Reference Bernard and Gervain2012) to parse an artificial language into constituent phrases, and bilingual-learning infants can use both simultaneously (Gervain & Werker, Reference Gervain and Werker2013). Current work in my lab is investigating the extent to which visual cues in talking faces also convey these prosodic differences. To date, we have found that adults can use cues such as head nods to parse language streams into syntactic phrases (de la Cruz Pavía, Werker, Vatikiotis-Bateson, & Gervain, Reference de la Cruz Pavía, Werker, Vatikiotis-Bateson and Gervain2016), and we are currently investigating this same question with infants.

Oral motor influences

A growing body of research indicates that oral-motor movements, or the somatosensory feedback from them, also impact speech perception. The first evidence came from a number of naturalistic observations, showing, for example, that infants are better able to discriminate sounds that are in their babbling repertoire (DePaolis, Vihman, & Keren-Portnoy, Reference DePaolis, Vihman and Keren-Portnoy2011), and are more likely to imitate sounds when they both hear the sounds and see the faces moving than when only listening (Coulon, Hemimou, & Streri, Reference Coulon, Hemimou and Streri2013; for a full review, see Guellaï, Streri, & Yeung, Reference Guellaï, Streri and Yeung2014). In the first study to test this empirically, Henny Yeung and I (2013) investigated the influence of oral-motor movements on auditory–visual matching. Our work with infants 4–5 months old revealed a robust influence: in this case, as a contrast effect. When watching two side-by-side faces, one articulating /i/ and the other /u/, and presented with the heard vowel of one or the other, the infants looked to the matching side. However, when a pacifier (or the parent’s fingertip) was held between the infants’ lips (resulting in a rounded lip configuration much like that required for producing an /u/), they looked to the visual /i/ when hearing the /u/, and when a teether (or the long side of the parent’s finger) was placed between the infants’ lips (resulting in a stretched lip configuration much like that required for producing an /i/), they looked more to the /u/ face when hearing the /i/ sound (Yeung & Werker, Reference Yeung and Werker2013). Control conditions confirmed that this effect occurred only when there was shared information between the oral-motor movement and the corresponding sound heard and seen.

More recently, we have found an oral-motor influence not just on AV matching, but on A-only speech perception as well. As discussed earlier, at 6 months of age, English-learning infants are able to discriminate the nonnative distinction between the dental /da/ and retroflex /Da/ phones produced in Hindi, even though they have not heard them in canonical syllable form in their language input (e.g., Werker & Tees, Reference Werker and Tees1984). We have recently shown that this discrimination is impaired if 6-month-old infants are prevented from moving their tongue tips in ways that correspond to the adult production of a dental /da/ versus a retroflex /Da/ (Bruderer, Danielson, Kandhadai, & Werker, Reference Bruderer, Danielson, Kandhadai and Werker2015). Specifically, when a flat teether was held in their mouths such that it interfered with tongue tip movement, infants no longer showed evidence of discrimination, whereas when a gummy teether was held in their mouths such that it still allowed tongue tip movement, discrimination remained intact. The fact that this effect was seen in 6-month-old infants even for a nonnative distinction, and even though infants this age are not yet babbling consonant–vowel syllables, and hence not yet fully imitating the sounds heard in their native language, suggests preparation prior to specific experience for linking heard speech and related self-produced motor movements (see Choi, Kandhadai, Danielson, Bruderer, & Werker, Reference Choi, Kandhadai, Danielson, Bruderer and Werker2017, for a discussion of the events in prenatal and early postnatal development that might support the mapping between heard speech and self-produced oral-motor movements even prior to babbling).

HOW DOES MULTISENSORY SPEECH PERCEPTION FIT INTO THE OVERALL PICTURE?

There are many ways in which the study of multisensory speech perception could change our approach to understanding the perceptual foundations of language acquisition. Given that the speech percept is multisensory from early in life and that perceptual attunement is found for both V-only and AV speech perception, perhaps work on critical/sensitive periods and influences on their timing should be focused on multisensory rather than A-only speech percepts. Issues concerning neural organization (and thus potential compensation, loss, and reorganization) come to the fore. Speech as a multisensory percept also has important implications for infants with different types of sensory impairments: a chapter unto itself.

In addition, a multisensory speech percept could be part of what contributes to the privileged status of speech for categorization and reference. It has been suggested that multisensory binding is an important part of what makes a representation a candidate for abstraction (Xu, Reference Xu2016). To the extent that the speech percept is multisensory from very early in life, or at least to the extent that the same phonetic content can be recognized across different sensory modalities, it has the qualities that favor abstraction. Thus, unlike other kinds of percepts where cross-modal recognition needs to be first established through experience and only then can the representation become a candidate for a symbol, the multimodal status of the speech percept may be part of what enables it to support categorization and potentially even reference from very early in life. This in turn may further support the bidirectional interface between phonetic attunement and phonological development.

SUMMARY

In summary, language acquisition begins long before the production or comprehension of the first word. In this article, I have reviewed some aspects of the intellectual journey my work has taken as my students, postdoctoral fellows, collaborators, and I have tried to understand the perceptual foundations of language acquisition. We have considered the perceptual biases infants have at birth that get them started, the mechanisms by which they become attuned to the phonetic and phonological categories of the native language, and the complex relation between speech and language. My current thinking is that the relation is a bidirectional one, with speech perception development guiding word learning and other aspects of language development, but with a preparation for reference and meaning also guiding speech perception development.

ACKNOWLEDGMENTS

Preparation of this paper, and the research from my laboratory described therein, was supported by grants from SSHRC and NSERC, and by my Canada Research Chair and Canadian Institutes for Advanced Research Fellowship. Special thanks to Savannah Nijeboer for editing and for creating the figures, and to Dr. Padmapriya Kandhadai for editing advice.