NOMENCLATURE

Acronyms

- AGA

Aerodromes and ground aids

- AI

Artificial Intelligence

- AIG

Aircraft accident and incident investigation

- AIR

Airworthiness of aircraft

- ANFIS

Adaptive Neuro-Fuzzy Inference System

- ANN

Artificial Neural Network

- ANS

Air navigation services

- ATSB

Australian Transport Safety Bureau

- CAA

Civil Aviation Authority

- CE

Critical Element

- DB

Database

- GASP

Global Aviation Safety Plan

- EI

Effective Implementation

- ICAO

International Civil Aviation Organisation

- LEG

Primary aviation legislation and civil aviation regulations

- OPS

Aircraft operations

- ORG

Civil aviation organisation

- PANS

Procedures for Air Navigation Services

- PEL

Personnel licensing and training

- PQ

Protocol Question

- SARP

Standards and Recommended Practice

- SSP

State Safety Program

- UI

User-Interface

- USOAP: CMA

Universal Safety Oversight Audit Program: Continuous Monitoring Approach

1.0 INTRODUCTION

The systematic process of aircraft accident and incident investigation evaluates and identifies the likely causes(1) of the accident or incident. An accident is an occurrence during flight operations where an aircraft was significantly damaged/missing/inaccessible, or a serious/fatal injury resulted. An incident is an occurrence of lesser severity than an accident where the safety of aircraft operation was also impacted(2).

The Convention on International Civil Aviation (Chicago Convention), signed in 1944, established the ICAO, now a specialised agency of the United Nations, to manage the administration and governance of international civil aviation and to encourage its development(3). The ICAO Member States work together with industry groups on Standards and Recommended Practices (SARPs), reaching consensus to facilitate harmonised regulations for security, efficiency, environmental protection and global aviation safety(4).

1.1 Learning from past accident investigations

In the interest of continuous improvement and accident prevention, ICAO requires the investigating Member State to release the final report, if possible, within 12 months so that other States can learn from the accident. ICAO Annex 13 also requires Member States to set up and maintain an accident and incident database to improve the analysis of actual or potential safety deficiencies and to determine any necessary preventive actions(2).

1.2 ICAO USOAP: CMA (2016 – 2018) six important non-compliance issues and their effects

ICAO conducts voluntary audits to determine a Member State’s safety oversight capability by assessing its Effective Implementation (EI)(5). This paper highlights the ICAO Universal Safety Oversight Audit Program (USOAP): Continuous Monitoring Approach (CMA) EI scores for four Member States that have complied and four Member States that have underperformed. EI is a common metric for the capability of a Member State’s safety oversight system. The key tenet underpinning this comparison is that all eight Member States should be aware of the prerequisites of the frameworks agreed through consensus and are expected to fulfill those prerequisites to comply with the audits.

In this light, the global average EI was 68.83% in 2019, with 46% of the audited Member States achieving the 2022 target of 75% EI established in the Global Aviation Safety Plan (GASP) 2020–2022 edition. The remaining 54% therefore underperformed against this target(5).

The 46% of the audited Member States that achieved the 2022 target of 75% EI can be inferred to have the necessary prerequisites to apply the related ICAO frameworks successfully, including the Annex 13 framework for aircraft accident investigations. In contrast, the 54% of Member States that underperformed can be inferred to have application issues, primarily due to a lack of prerequisites.

Sub-section 3.2 further describes these two inferences and highlights four Member States that have complied and four that have underperformed. These two inferences are also supported by the USOAP CMA (2016 – 2018) results (sub-section 3.3), including an overview of the six highlighted important non-compliance issues and summaries of their effects in the aircraft accident and incident investigation area. One of the six issues is that more than 60% of the Member States do not have a comprehensive and detailed investigator training program. According to ICAO, this has led to many shortcomings, including the lack of preservation of essential, volatile evidence, poor investigation management, and poor investigation reporting and/or safety recommendations(6).

1.3 Objectives of this paper

This paper aims to close a specific training prerequisite gap (deficiency in the investigation evidence analysis) that is primarily responsible for two of the highlighted effects (shortcomings) in sub-section 3.3 (poor investigation reporting and safety recommendations). The proposal takes the form of a global collaborative information technology system based on an Expert System (ES) framework with an embedded inference engine to generate probable cause(s) from past accidents.

The proposed ES framework has two objectives. Objective one is to improve the quality of evidence analysis, the consistency of conclusions, and timeliness. These improvements will subsequently enhance investigation reporting and safety recommendations, closing the two focused gaps, which is especially beneficial to new or untrained investigators. The proposal will also help more experienced investigators by providing additional consistency and faster evidence analysis. Objective two is to support on-the-job field training in evidence analysis through self-discovery and to help build confidence. The self-discovery element comes from the ES’s ability to trace the evidence used to reach a conclusion through its inference engine, which is trained on past accidents.

This ES framework proposal is a matter of relative global urgency because building up the necessary infrastructure prerequisites in the investigator evidence-analysis area is a long-term undertaking, especially for the underperforming Member States.

1.4 Organisation of the paper

The rest of this paper is organised as follows. Section 2 provides a brief description of the ICAO Annex 13 framework. Section 3 highlights the ICAO USOAP CMA’s EI scores and the audit results (2016 – 2018), including six important non-compliance issues and summaries of their effects (shortcomings). Section 4 discusses and proposes an expert system framework to support aircraft accident and incident investigations. Section 5 concludes this paper.

2.0 ICAO ANNEX 13 FRAMEWORK

In 1951, the ICAO Council implemented the SARPs for aircraft accident inquiries according to the Convention on International Civil Aviation (Chicago, 1944) in ICAO Annex 13. Annex 13 requires the contracting States to collaborate to facilitate and improve air navigation by achieving the highest practicable uniformity in the regulations, procedures, standards and organisation relating to aircraft, airways, auxiliary services and personnel, and it imposes an obligation to investigate aircraft accidents or incidents(4). Annex 13 also provides safety data and information to support ICAO Member States in their safety management efforts(5). If a Member State’s practices differ from the SARPs, ICAO is to be notified(4).

Table 1 lists the ICAO Manual of Aircraft Accident and Incident Investigation guidelines to assist the Member States in harmonising their investigation frameworks(2).

Table 1 ICAO Manual of Aircraft Accident and Incident Investigation(2)

The ICAO Annex 13 framework expects a preliminary accident investigation report, which may be public or confidential, within 30 days and a final report within 12 months(7). Table 2 provides a brief outline of the final report format.

Table 2 ICAO Annex 13 Final Report format for aircraft accident and incident investigation(2)

3.0 ICAO ANNEX 13 EFFECTIVE IMPLEMENTATIONS AND AUDIT RESULTS

3.1 Effective Implementation (EI) scores

ICAO USOAP: CMA determines the Member State’s capabilities for safety oversight and monitors the Effective Implementation (EI) of the Critical Elements (CEs) in their safety oversight system to support the Global Aviation Safety Plan (GASP) objectives(5). EI is a common metric for the Member States’ Safety Oversight Systems, and the EI scores of the CEs are a measure and indication of a State’s capability for safety oversight. Higher EI scores indicate a higher maturity of the Member State’s safety oversight system.

EI is computed for any audited group of applicable Protocol Questions (PQs) using the following equation and is expressed as a percentage(6):
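The equation is not reproduced in this text. As a hedged reconstruction, the EI definition that ICAO publishes for USOAP CMA is commonly stated as the share of satisfactory PQs among the applicable PQs; the wording of the numerator and denominator below is an assumption chosen for readability rather than a quotation from reference (6).

```latex
\[
\mathrm{EI}\,(\%) \;=\; \frac{\text{number of satisfactory PQs}}{\text{total number of applicable PQs}} \times 100
\]
```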

ICAO establishes the Protocol Questions (PQs) to standardise the conduct of audits under USOAP: CMA. PQs are based on the safety-related ICAO Standards and Recommended Practices (SARPs) established in the Annexes to the Chicago Convention, Procedures for Air Navigation Services (PANS) and the ICAO guidance material(5). Each standardised PQ assesses the EI of one of the eight critical elements (CEs) in one of the eight audit areas (Table 3). This standardisation ensures transparency, quality, consistency, reliability, and fairness in the conduct and implementation of USOAP CMA activities(5).
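To make the PQ-to-score relationship concrete, the following is a minimal Python sketch that groups PQ results by audit area or by critical element and computes an EI for each group. It assumes that EI is the percentage of applicable PQs assessed as satisfactory. The `ProtocolQuestion` record layout, the function names and the sample data are hypothetical and are not taken from any ICAO schema or dataset.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtocolQuestion:
    """One audited PQ (hypothetical record layout, not an ICAO schema)."""
    audit_area: str        # e.g. "AIG" or "OPS"
    critical_element: int  # 1 to 8
    applicable: bool       # PQs that do not apply to the State are excluded
    satisfactory: bool


def effective_implementation(pqs):
    """Return EI in percent for a group of PQs, or None if none are applicable."""
    applicable = [pq for pq in pqs if pq.applicable]
    if not applicable:
        return None
    satisfactory = sum(pq.satisfactory for pq in applicable)
    return 100.0 * satisfactory / len(applicable)


def ei_by(pqs, key):
    """Group PQs by an attribute name ('audit_area' or 'critical_element')."""
    groups = defaultdict(list)
    for pq in pqs:
        groups[getattr(pq, key)].append(pq)
    return {name: effective_implementation(group) for name, group in groups.items()}


# Invented sample, for illustration only.
sample = [
    ProtocolQuestion("AIG", 3, True, True),
    ProtocolQuestion("AIG", 4, True, False),
    ProtocolQuestion("AIG", 4, False, False),  # not applicable, so ignored
    ProtocolQuestion("OPS", 3, True, True),
]
```

Calling `ei_by(sample, "audit_area")` gives one score per audit area, and `ei_by(sample, "critical_element")` gives one score per CE, which mirrors the two axes of Table 3.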

Table 3 ICAO Audit Areas and Critical Elements

3.2 ICAO USOAP CMA audit results (2016 – 2018)

Figures 1 and 2 show the ICAO audit results for each of the eight audit areas, including Accident Investigation, for Member States one to four, which perform above the current global average EI score, and Member States five to eight, which underperform, respectively. Among the global average EI scores for the eight audit areas, the Accident Investigation area has the lowest, at 56.15%(8). Only the assessed Member State receives the assessment report, while others are given a summary report(9).

Figure 1. ICAO USOAP CMA Audit Results for typical Member States performing well(8).

Figure 2. ICAO USOAP CMA Audit Results for typical Member States underperforming(8).

The ICAO USOAP CMA audit results in Figs. 1 and 2 can be explained as follows. The four Member States that performed above the global average EI score in all eight audit areas show that the SARPs agreed globally through consensus, including the ICAO Annex 13 framework, can be applied successfully when States are well prepared. The four Member States that underperformed can be inferred to have an issue in applying the frameworks, mainly due to a lack of prerequisites. The evidence supporting these explanations comes from the six highlighted important non-compliance issues and their effects (shortcomings) described in sub-section 3.3.

3.2.1 ICAO audit EI results

The average Effective Implementation (EI) score for the audited Member States was 68.83% in 2019, with 46% achieving the 2022 target of 75% EI established in the 2020 – 2022 edition GASP(5). Therefore, 54% of the Member States performed below this 2022 target EI.
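The aggregation behind these figures can be sketched in a few lines of Python. The sketch assumes that a State "achieves" the target when its EI is at or above 75%, which is an interpretation rather than a rule stated in the source. The function name and the five scores are invented for illustration and are not ICAO data.

```python
from statistics import mean

GASP_2022_TARGET = 75.0  # percent EI, the GASP 2020-2022 target cited above


def summarise(ei_scores, target=GASP_2022_TARGET):
    """Return (average EI, % of States at or above target, % of States below)."""
    if not ei_scores:
        raise ValueError("at least one EI score is required")
    meeting = sum(score >= target for score in ei_scores)
    share_meeting = 100.0 * meeting / len(ei_scores)
    return mean(ei_scores), share_meeting, 100.0 - share_meeting


# Invented scores for five hypothetical States, not ICAO data.
average, meeting, below = summarise([92.0, 81.5, 74.9, 60.0, 40.0])
```

The share below the target is simply the complement of the share meeting it, which is how the 54% figure follows from the 46% figure.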

3.3 ICAO audit EI results (2016 – 2018) for aircraft accident and incident investigation (AIG) audit area

The ICAO USOAP CMA (2016 – 2018) audit EI results for the aircraft accident and incident investigation (AIG) audit area highlighted six important non-compliance issues. A brief overview of these six issues is presented as follows:

3.3.1 Issue 1 – Establishing an independent accident investigation authority and investigation processes(6)

The ICAO Annex 13 framework, sub-chapter 3.2, requires a Member State to form an accident investigation authority that is independent of State aviation authorities and other entities that could interfere with the investigation’s conduct or objectivity(2). The authority must be strictly objective and totally impartial and must also be perceived to be so. It should be established so that it can withstand political or other interference or pressure. Member States that have achieved this objective have enabled their accident investigation authorities to report directly to Congress, Parliament or a ministerial level of government(10).

The first identified issue is that less than 50% of the Member States have formed an autonomous accident investigation authority. Without such an independent authority, State aviation authorities and other entities could interfere with the investigation’s conduct or objectivity(6).

3.3.2 Issue 2 – Ensuring the effective investigation of aircraft serious incidents as per Annex 13(6)

The ICAO Annex 13 framework defines a Serious Incident as an incident involving circumstances indicating a high probability of an accident and associated with an aircraft’s operation(2). Chapter 5 (Investigation) of the framework provides comprehensive requirements and recommendations relating to the responsibility for instituting and conducting serious incident investigations; the same chapter also covers accident investigations(2).

As an example of a Recommendation, Chapter 5, sub-section 5.1.1 states: “The State of Occurrence should institute an investigation into the circumstances of a serious incident. Such a State may delegate the whole or any part of the conducting of such investigation to another State or a regional accident and incident investigation organization by mutual arrangement and consent. In any event the State of Occurrence should use every means to facilitate the investigation.”(2).

As an example of a Requirement, Chapter 5, sub-section 5.1.2 states: “The State of Occurrence shall institute an investigation into the circumstances of a serious incident when the aircraft is of a maximum mass of over 2,250 kg. Such a State may delegate the whole or any part of the conducting of such investigation to another State or a regional accident and incident investigation organization by mutual arrangement and consent. In any event the State of Occurrence shall use every means to facilitate the investigation.”(2).
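The difference between the two quoted provisions can be expressed as a simple decision rule. The Python sketch below is a deliberate simplification that encodes only the 2,250 kg threshold from the quoted sub-sections 5.1.1 and 5.1.2; it ignores delegation and every other Annex 13 provision. The enum and function names are hypothetical.

```python
from enum import Enum

MASS_THRESHOLD_KG = 2250  # threshold from the quoted sub-section 5.1.2


class Obligation(Enum):
    SHALL = "shall institute an investigation (requirement, 5.1.2)"
    SHOULD = "should institute an investigation (recommendation, 5.1.1)"


def serious_incident_obligation(max_mass_kg: float) -> Obligation:
    """Classify the State of Occurrence's obligation for a serious incident.

    Simplified illustration: only the aircraft's maximum mass is considered.
    """
    if max_mass_kg <= 0:
        raise ValueError("maximum mass must be positive")
    if max_mass_kg > MASS_THRESHOLD_KG:  # "over 2,250 kg" is a strict comparison
        return Obligation.SHALL
    return Obligation.SHOULD
```

An aircraft of exactly 2,250 kg falls under the recommendation, because the quoted requirement applies only to a maximum mass "of over" that value.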

The second-identified issue is that more than 60% of the Member States have not formed a process to ensure that aircraft serious incidents are investigated as per Annex 13. In most cases, there is insufficient or no guidance (such as actions to be taken, timelines, and personnel to be involved in assessing and decision-making) to support the assessment upon incident notification before deciding whether to investigate independently as per Annex 13(6).

3.3.3 Issue 3 – Providing sufficient training to aircraft accident investigators(6)

The outcome of an accident investigation primarily depends on the skill and experience of the investigators. Besides the relevant technical skill, an investigator requires certain personal attributes, such as integrity and impartiality in recording facts, analytical ability, perseverance in pursuing inquiries, and tact in dealing with the different people involved in aircraft accidents, which are traumatic events(10).

The ICAO Training Guidelines for Aircraft Accident Investigators (Circular 298) discusses in Chapter 2 the experience and employment background required for training as an aircraft accident investigator. Circular 298 also outlines the progressive training considered necessary to qualify a person for various investigation roles. Chapter 3 (Training guidelines) provides four phases of training: phase one, initial training; phase two, on-the-job training; phase three, basic accident investigation courses; and phase four, advanced accident investigation courses and additional training. Chapter 4 (Accident investigation course guidelines) provides three levels of courses: basic, advanced, and specialty courses(11).

Phase one: Initial training aims to familiarise new investigators with the legislation in their State and the accident investigation authority’s procedures and requirements. Phase one subjects should include Administrative arrangements (e.g., legislation, international agreements, and aircraft accident investigation manuals and procedures); Initial response procedures (e.g., on-call procedures, notification of other national authorities, accident site jurisdiction, and security); Investigation procedures (e.g., authority and responsibility, investigation management and use of specialists)(11).

Phase two: On-the-job training for new investigators begins after the initial training, enabling them to practise the procedures and tasks learned in phase one. The aim is to familiarise the investigator with accident site investigation techniques and tasks, factual information collection, factual information analysis and final report development(11).

Phase three: Basic accident investigation courses. After phase two, the investigator should attend a basic accident investigation course, preferably within the first year of training, as detailed in Chapter 4. Some examples of the topics covered in the basic course are as follows: ICAO Standards and Recommended Practices (SARPs); Annex 13; accident notification procedures; investigation management; evidence protection; information gathering techniques; factual information analysis methods; and report writing (including preparing safety recommendations)(11).

Phase four: Advanced accident investigation courses and additional training. The trained investigator should attend an advanced accident investigation course to update their knowledge of basic techniques and increase their knowledge in special areas. Additional training may cover a variety of aircraft types(11).

The third-identified issue is that more than 60% of the Member States have not developed a comprehensive and detailed training program to train their investigators. Only a small number of Member States provide the training their investigators need to conduct their tasks effectively. Insufficient training has contributed to many shortcomings, including the lack of preservation of essential, volatile evidence; poor investigation management; and poor investigation reporting and/or safety recommendations(6).

3.3.4 Issue 4 – Ensuring proper coordination and separation between the “Annex 13” investigation and the judicial investigation(6)

Appropriate legislation provisions and formal arrangements to properly coordinate investigation activities between the investigation authority and the judicial authority are essential to ensure the necessary separation between the two investigations (e.g., conducting interviews with witnesses and analysing the information collected).

There is also a requirement to govern the coordination of activities at the accident scene, such as the securing and custody of evidence and victim identification; Cockpit Voice Recorder (CVR) and Flight Data Recorder (FDR) read-outs; and relevant examinations and tests. This ensures that investigators have ready access to all relevant evidence and that activities such as flight recorder read-out analysis and other necessary examinations and testing are not impeded or significantly delayed by judicial proceedings(6).

The fourth-identified issue is that less than 50% of the Member States have effective and formal means, such as suitable legislation provisions and formal arrangements, to enable appropriately coordinated investigation activities between the judicial authority and the investigation authority(6).

3.3.5 Issue 5 – Establishing and implementing a State’s mandatory and voluntary incident reporting systems(6)

The provisions for the Member States’ mandatory and voluntary safety reporting systems are found in Annex 19 (Safety Management) and originated in Annex 13(12).

Annex 19 requires Member States to establish a safety data collection and processing system (SDCPS) to capture, store, aggregate and enable the analysis of safety data and information in order to identify hazards across the aviation system. However, having reporting systems and databases for collecting safety data and information is not in itself sufficient to ensure its availability for analysis. States must also put in place laws, regulations, processes and procedures to ensure that the safety data and information identified in Annex 19 are reported and collected from service providers (aviation industry organisations) and others to feed the SDCPS(12).

The fifth-identified issue covers both the State’s mandatory and voluntary incident reporting systems.

Less than 50% of the Member States have established an effective mandatory incident reporting system per ICAO Annex 19 (Safety Management). Many States’ regulations have not clarified the types of occurrence that aviation domain service providers must report, or within what timescale. Regarding the timescale, Annex 13 advises that accidents and serious incidents be reported “as soon as possible and by the quickest means available.” If a State does not have an effective mandatory reporting system, the effectiveness of the Civil Aviation Authority (CAA)’s continuous surveillance program will be affected. It will also limit the State’s ability to follow a data-driven approach to implementing the State Safety Program (SSP)(6).

Less than 70% of the Member States have effectively adopted a voluntary incident reporting system per ICAO Annex 19 (Safety Management). An effective voluntary incident reporting system requires proper legislation and procedures. The authority also needs to make significant efforts in managing the voluntary system to encourage the reporting of safety data and information not captured by the mandatory incident reporting system(6).

3.3.6 Issue 6 – Establishing an aircraft accident and incident database and performing safety data analyses at State level(6)

ICAO Annex 13 requires States to establish and maintain an accident and incident database to provide an effective analysis of information on actual or potential safety deficiencies and to determine any required preventive actions. Annex 13 also recommends that the State authorities responsible for implementing the State Safety Program (SSP) should have access to the accident and incident database to support their safety responsibilities. Annex 13 further points out that the accident and incident database may be included in a safety database, and that further provisions on a safety database are contained in Annex 19(2).

ICAO Annex 19 requires States to establish a safety data collection and processing system (SDCPS) to capture, store, aggregate and enable the analysis of safety data and information. In Annex 19, SDCPS refers to processing and reporting systems, safety databases, schemes for exchange of information and recorded information (e.g., data and information related to accident and incident investigations, and mandatory and voluntary safety reporting systems)(13).

The sixth-identified issue is that almost 60% of the Member States have not established an accident and incident database to enable effective analysis of actual or potential safety deficiencies and determination of any preventive actions. Many Member States do not have qualified technical staff to administer their database properly. Data that has been collected is not shared with the stakeholders for identifying actual or potential safety deficiencies and adverse trends and determining any preventive action. This affects the State’s ability to implement a State Safety Program (SSP) effectively(6).

4.0 A PROPOSED EXPERT SYSTEM FRAMEWORK TO SUPPORT AIRCRAFT ACCIDENT AND INCIDENT INVESTIGATIONS

In light of the preceding overviews of the six highlighted non-compliance issues and their effects, this paper aims to address the third highlighted issue (the lack of a comprehensive and detailed investigator training program). More specifically, this paper addresses the deficiency in the Accident Investigator Core-Evidence-Analysis Process within accident and serious incident investigations.

This takes the form of a proposed solution to capture and access aircraft accident knowledge using an Expert System framework underpinned by a global knowledge database. The rationale for focusing on this third highlighted issue is given in sub-section 4.1.

The aim is to support aircraft accident investigating authorities with access to global case studies and an intelligent inference engine to provide possible cause(s) based on the available evidence. The ES will offer a consistent investigation analysis workflow, and it will assist with training, reporting and, in general, more efficient use of aircraft accident data available globally.
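One simple way to picture "possible cause(s) based on the available evidence" is case retrieval: rank the causes recorded in past cases by how closely each case's evidence matches the current evidence. The Python sketch below uses Jaccard similarity over sets of evidence tags. This technique is swapped in purely for illustration, since the inference method is not specified at this point in the paper. The function names, the tag vocabulary and the two past cases are invented and do not describe real occurrences.

```python
from collections import defaultdict


def jaccard(a, b):
    """Jaccard similarity between two sets of evidence tags."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_probable_causes(evidence, past_cases, top_n=3):
    """Score each recorded cause by its best-matching past case."""
    best = defaultdict(float)
    for case in past_cases:
        score = jaccard(evidence, case["evidence"])
        for cause in case["causes"]:
            best[cause] = max(best[cause], score)
    # Highest score first; ties are broken alphabetically for stable output.
    ranked = sorted(best.items(), key=lambda item: (-item[1], item[0]))
    return [(cause, score) for cause, score in ranked if score > 0][:top_n]


# Invented cases for illustration only; they do not describe real occurrences.
PAST_CASES = [
    {"evidence": {"icing_conditions", "stall_warning", "low_airspeed"},
     "causes": ["airframe icing", "loss of control in flight"]},
    {"evidence": {"fuel_low_warning", "engine_flameout"},
     "causes": ["fuel exhaustion"]},
]

ranking = rank_probable_causes({"icing_conditions", "low_airspeed"}, PAST_CASES)
```

Because each ranked cause is tied to the past case that produced its score, an investigator can go back to the matching case studies rather than accept the ranking at face value.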

4.1 Justification to focus on “lack of comprehensive and detailed training program” out of the six identified non-compliance issues by USOAP CMA (2016 – 2018)

This paper aims to address the “lack of comprehensive and detailed investigators training program,” with a lower-level focus on the deficiency in the “Accident Investigator Core-Evidence-Analysis Process.” The outcome is a proposed global collaborative information technology system based on an Expert System framework with an embedded inference engine to generate probable cause(s) based on past accidents.

4.1.1 Challenges to rank which of the six non-compliance issues is more important

The USOAP: CMA safety oversight system focuses on the whole spectrum of civil aviation activities and comprises eight audit areas and eight critical elements. ICAO standardises the audits with Protocol Questions (PQs) taken from the SARPs established in its Annexes, where each PQ contributes to assessing the EI of one of the eight critical elements (CEs) in one of the eight audit areas (sub-section 3.1)(5). In this light, the eight audit areas and eight critical elements are inter-related and are integral parts of a State’s overall safety oversight system capability. A deficiency in one or more audit areas or critical elements will lower the overall standing of the State’s safety oversight system capability.

For example, suppose a Member State has addressed the first-identified issue (sub-section 3.3) by forming an autonomous accident investigation authority. In that case, the third-identified issue, developing a comprehensive and detailed training program, will still need to be addressed to train its aircraft accident and incident investigators per the Annex 13 framework. Likewise, the second-identified issue, forming a process to ensure that aircraft serious incidents are investigated per Annex 13, will also need to be addressed alongside the first and third issues. Otherwise, there will be insufficient or no guidance to support the assessment process upon incident notification before deciding whether to investigate independently.

Therefore, the six identified non-compliance issues (sub-section 3.3) in the aircraft accident and incident investigation (AIG) audit area are inter-related, and it is virtually impossible to rank them by importance.

4.1.2 Justification to focus on the Accident Investigator Core-Evidence-Analysis Process

In a comprehensive and detailed training program for accident investigators, new investigators’ training topics are covered in four wide-ranging phases. Each topic is an important and necessary part of the overall program for training a well-rounded, effective accident investigator. Therefore, it is also virtually impossible to rank which training topic is more important in addressing the third highlighted non-compliance issue, which is the focus of this paper.

For example, after phase one (initial training to familiarise new investigators with, e.g., relevant legislation and investigation procedures) and phase two (on-the-job training for practising procedures), the new investigator should attend the basic accident investigation courses (phase three), preferably within the first year of training. Topics covered in the basic course include: ICAO Standards and Recommended Practices (SARPs); Annex 13; accident notification procedures; investigation management; protection of evidence; information gathering techniques; methods of analysing the factual information gathered; report writing (including preparing safety recommendations); and many others(11). It would be a challenge to justify ranking one topic (e.g., protection of evidence) above another (e.g., methods of analysing the factual information gathered).

On this basis, there is certainly justification for focusing on any of the six identified issues, each of which can be considered a significant research area. This paper has chosen to focus on the “lack of comprehensive and detailed training program issue: Accident Investigator Core-Evidence-Analysis Process” because its outcome directly affects the quality of “investigation reporting and safety recommendations,” which received a poor audit result. It is also the author’s area of research interest.

4.2 Addressing the gaps in the investigator training

4.2.1 The Gaps

The ICAO USOAP CMA audit assessment report is sent only to the assessed Member State, while others are given a summary report(9). Therefore, this paper addresses the gaps based on the third highlighted non-compliance issue from the ICAO USOAP CMA audit (2016 – 2018), described in the preceding sub-section 3.3 and reiterated as follows.

More than 60% of the Member States have not developed a comprehensive and detailed training program to train their investigators. Only a small number of Member States provide the training their investigators need to conduct their tasks effectively. Insufficient training has contributed to many shortcomings, including the lack of preservation of essential, volatile evidence; poor investigation management; and poor investigation reporting and/or safety recommendations(6).

Specifically, this paper aims to close the gap for one specific shortcoming: poor investigation reporting and/or safety recommendations, to which insufficient training contributes.

4.2.2 Overview of an aircraft accident and incident investigation framework: An example from ATSB

Figure 3 shows an example of the aircraft accident and incident investigation framework from the Australian Transport Safety Bureau (ATSB); Australia is an ICAO Member State(14). The framework shows that phase 3, examination and analysis, is followed by phase 4, report and review(15).

Figure 3. A brief outline of the Australian aircraft accident and incident investigation framework(15).

During the “initiate investigation” component, the decision whether to investigate depends on the potential for revealing safety benefits or aviation safety improvements.

If the decision is to investigate, evidence collection begins, including collating site observations, conducting interviews and securing evidence. Initial evidence gathering may include observing sites; gathering relevant wreckage, materials and recorded data; collecting information related to human performance; conducting or procuring tests and examination reports; interviewing involved parties, witnesses, and experts; and obtaining operational records, technical documents, and data on similar past occurrences.

The examination and analysis then follow, comprising reviews, examinations and laboratory tests, follow-up interviews, establishing the sequence of events, analysis, agreeing on findings, and preparing preliminary reports where the investigation takes at least twelve months. ATSB investigators may carry out the following actions: conduct data analysis; reconstruct and simulate events; examine vehicle, company, government, and other records; conduct laboratory examinations of selected wreckage and test selected components and systems; review the research literature on human factors linked to the evidence; review reports from specialists; conduct additional interviews; and analyse the sequence of events.

The investigation team drafts the report for internal technical and administrative reviews, review by external involved parties, and ATSB Commission review. Parties directly involved will also receive the pre-public release or advance final report. If a critical safety issue has been identified during the investigation, ATSB will notify the relevant parties immediately and address the issue through safety action or a safety recommendation. Most ATSB reports will contain a safety summary.

Dissemination is the release of the final report on the ATSB website, along with any safety recommendations(15).

4.2.3 Addressing the Gaps – with two objectives

This paper proposes an Expert System framework (sub-section 4.8) with two objectives to close the gap in one specific shortcoming: poor investigation reporting and/or safety recommendations caused by insufficient training. This shortcoming can be traced back to a deficiency in the preceding phase, the evidence analysis phase. Therefore, addressing the deficiency in the evidence analysis phase also addresses the specific shortcoming in focus. The preceding sub-section provides an example of such an investigation process.

Objective one: To support the investigation evidence-analysis phase of an accident: The proposed Expert System framework emulates the human expert evidence analysis process to provide a more timely, consistent, and reasonably accurate evidence analysis and conclusion. The accuracy of the inferred conclusion will directly benefit the subsequent investigation reporting and/or safety recommendation phase (i.e., closing the gaps in focus). Such benefits will be most apparent to new or untrained aircraft accident and incident investigators and to underperforming Member States, especially when they need to collaborate with other Member States to conduct an aircraft accident or incident investigation following the Annex 13 framework.

Objective two: To support the on-the-job ‘field’ training through self-discovery: A new or untrained investigator is likely to have difficulty conducting a reasonably consistent, accurate, and timely analysis of a given set of evidence of good standing, because this requires understanding and applying an accident causation model such as the Reason Model. Applying such a Model can include identifying the relevant evidence and justifying its influence and importance; defining safety factor hypotheses; testing safety factor hypotheses; and risk analysis to determine the safety issue for entering the final report(Reference Walker and Bills16).

In contrast, a trained Expert System already holds expert knowledge in its inference/reasoning engine in the form of pairs of evidence sets and findings/conclusions (safety issues and/or safety recommendations/messages). The evidence included in the past accident reports used to train the Expert System can be inferred to be of good standing. The conclusion paired with each set of evidence can be inferred to have passed the definition and testing of safety factor hypotheses and the risk analysis used to determine the safety issue/conclusion.

Assume that a set of new evidence of good standing has been entered into the Expert System for inference/reasoning. The Expert System compares this new evidence with the evidence sets already in its trained inference engine and finds the most closely matched set. The conclusion inferred from the closely matched evidence is then displayed along with that evidence, so the investigator can trace the set of evidence used to infer the conclusion.
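The matching step described above can be illustrated with a minimal sketch. It assumes that each piece of evidence is reduced to a short tag and that closeness is measured with the Jaccard similarity between tag sets; both choices, the `PastCase` structure, and all case data are hypothetical illustrations rather than part of the proposed framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PastCase:
    """One evidence/conclusion pair from a past report (hypothetical structure)."""
    report_id: str
    evidence: frozenset
    conclusion: str


def jaccard(a, b):
    """Share of evidence items common to both sets (0.0 = disjoint, 1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def closest_case(new_evidence, cases):
    """Return the best-matching past case and its similarity score."""
    best = max(cases, key=lambda c: jaccard(new_evidence, c.evidence))
    return best, jaccard(new_evidence, best.evidence)


# Made-up cases for illustration only; not taken from any real report.
CASES = [
    PastCase("R-001", frozenset({"airframeOverspeed", "flapsExtended", "lateDescent"}),
             "incorrectConfiguration"),
    PastCase("R-002", frozenset({"stickShaker", "lowAirspeed", "highAngleOfAttack"}),
             "StallWarning"),
]

new = frozenset({"lowAirspeed", "stickShaker", "nightApproach"})
match, score = closest_case(new, CASES)
shared = sorted(new & match.evidence)  # the evidence that supports the match
print(match.report_id, match.conclusion, score, shared)
```

Returning the shared evidence alongside the conclusion is what lets the investigator trace why a particular past case was selected.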

The Expert System can also link its inferred conclusion to multimedia resources (e.g., pictures and videos), general accident information (e.g., aircraft manufacturers and Models), and past similar accident reports if available. These combinations of resources will further provide a richer experience to help the investigator to understand, through self-discovery, how the inference/reasoning engine arrived at the inferred conclusion.

The ability to link the inferred conclusion to past similar accident reports, if available, will further help the investigator learn from similar accidents. In this light, ICAO requires the Member State to release the final report, if possible, within 12 months to enable other States to learn from such an aircraft accident and prevent a similar accident in the future(2). Finding such reports is usually a manual process that takes a long time: the investigator searches through various databases and/or investigation agencies’ websites, downloads several potentially similar past accident reports, and then likely reviews each downloaded report to refine the selection. However, a new or untrained investigator is unlikely to select and use the specific past similar reports efficiently, meaningfully and confidently.

4.3 Expert Systems applications in the current industries

Expert System is a branch of artificial intelligence (AI) developed in the mid-1960s, as mentioned by Turban and Aronson(Reference Turban, Aronson and Aronson17). An Expert System consists of applications and hardware that emulate human experts’ decision-making ability, aiming to solve complex problems using reasoning knowledge(Reference Tan18).

Expert Systems have been applied in disciplines such as engineering, manufacturing, medicine, management, the military, education and training(Reference Tan19). For example, in the medical discipline, Expert Systems provided more time to assess decisions, more consistent decisions and shorter decision-making processes(Reference Sheikhtaheri, Sadoughi and Hashemi Dehaghi20). Expert Systems were significant in providing decision-making support to military commanders in the command-and-control process of military program training(Reference Tan19,Reference Liao21). In education, automated academic advising has been shown to be equivalent to human advisors with about 93% accuracy, promising better teaching environments, especially for students(Reference Tan19). Experts’ knowledge and experiential learning can also be preserved, so they are not lost after the experts leave the organisation(Reference Tan19).

In any successful Expert System development, its hypothesis must be validated. For instance, in the medical discipline, the Expert System diagnosis needs to match the human expert’s diagnosis. If the test results show a significant correlation between the two, that is, the Expert System diagnoses have a good level of similarity to the human diagnoses, the development can be considered successful(Reference Ameneh and Fatemeh Mohammadi22). In this light, when the Expert System is used in the field, presumably as alternative diagnosis support, the ultimate decision remains with the human expert if s/he is responsible for the diagnosis and is available on or off-site. This also shows the need for traceability as to how the Expert System reaches its conclusion.

4.4 Working principles of a general Expert System architecture

A general Expert System architecture consists of three main components: User-Interface (UI), Knowledge Base, and Inference-(reasoning)-Engine, as shown in Fig. 4. The user interacts with the Expert System through the UI by providing queries in human-readable forms. The UI then passes these queries to the Inference Engine (e.g., a Rule-Based Inference-Engine) to simulate human experts’ problem-solving strategy. The UI then displays the results to the user(Reference Jabbar and Khan23).

Figure 4. A general Expert System architecture(Reference Jabbar and Khan23).

The Inference Engine uses these queries to work with the human expert knowledge associated with the IF-THEN rules stored in the Knowledge Base.

The Inference Engine performs a three-step Match, Select and Execute inference (reasoning) process, applying each combination of human expert knowledge and IF-THEN rule stored in the Knowledge Base to the facts stored in a separate database (DB) to develop a result for the user. When the IF part of a rule is Matched with a fact, this rule is Selected, and the action stated in its THEN part is Executed. The Selected rule may change the set of facts by adding new facts to the DB. The entire process produces inference chains. The three-step process continues until the results converge(Reference Jabbar and Khan23).

Selection of the Inference (reasoning) Engine Model (algorithm/classifier) type for the Expert System will depend on the requirements, such as whether the Expert System needs to learn new knowledge or to trace how it reached a conclusion.
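The Match, Select and Execute cycle can be sketched as a simple forward-chaining loop. The sketch below is a minimal illustration under stated assumptions: facts are plain strings, each rule has AND-joined antecedents and a single ADD-fact action, and the rule names and contents are hypothetical.

```python
def forward_chain(rules, facts):
    """Run Match-Select-Execute until no rule adds a new fact (convergence)."""
    facts = set(facts)
    chain = []  # inference chain: (rule name, fact added)
    changed = True
    while changed:
        changed = False
        for name, antecedents, consequent in rules:
            # Match: every IF condition is present in the fact base.
            if antecedents <= facts and consequent not in facts:
                # Select and Execute: fire the rule and add its THEN fact.
                facts.add(consequent)
                chain.append((name, consequent))
                changed = True
    return facts, chain


# Hypothetical rules for illustration only.
RULES = [
    ("R1", frozenset({"airframeOverspeed", "flapsExtended"}), "incorrectConfiguration"),
    ("R2", frozenset({"incorrectConfiguration", "lowAltitude"}), "UnstableApproach"),
]

facts, chain = forward_chain(RULES, {"airframeOverspeed", "flapsExtended", "lowAltitude"})
for name, added in chain:
    print(f"{name} fired and added fact: {added}")
```

The recorded chain shows that R2 could only fire because R1 had first added a new fact, which is the kind of trace an explanation facility would present to the user.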

The System Interface links the database to User Interface and Inference Engine. Results from the Selected rule are stored in the working memory. The explanation facility explains the inference (reasoning) process as to why a particular result has been reached. The set of rules that leads to the result is then presented to the user in a simplified way. This also allows the user to view the Inference (reasoning) process followed by the System in reaching the result. The Developer-Interface allows a developer (Knowledge Engineer) to edit the Knowledge Base, rules and facts(Reference Jabbar and Khan23-Reference Nagori and Trivedi25).

4.5 Requirements for Expert System to support aircraft accident and incident investigations

To address the third issue highlighted by ICAO (the lack of a comprehensive and detailed training program for investigators, leading to poor investigation reporting and/or safety recommendations, which can be traced back to the Core-Evidence-Analysis Process area), the Expert System would need to be able to learn new expert knowledge from aircraft accident and incident data available globally, amongst other resources, and explain what evidence has been used to establish a finding. Therefore, it is reasonable to base the proposed Expert System’s inference model/s (algorithm/classifier) on supervised machine learning (e.g., neural network), a rule-based approach (e.g., fuzzy logic system), or a combination of the two (e.g., a hybrid neuro-fuzzy system).

The suggested Expert System requirements relevant to supporting investigation agencies, together with their rationales, are listed as follows.

I. The Expert System performance must be measurable in terms of validating the correlation and consistency in reaching a conclusion between the Expert System inference/reasoning model and the human expert analysis/reasoning ability. The expert system must provide a confidence level in its conclusion.

Rationale. This is important as it enables the Member States investigators to conduct their investigation per the Standards and Recommended Practices (SARPs) workflows with better confidence and the ability to defend their conclusion.

II. The Expert System must be able to prompt for further information as part of the Expert System inference/reasoning process prior to reaching a conclusion.

Rationale. This is important as the answers to the relevant questions will provide the Expert System inference/reasoning model with more evidence to reach an accurate conclusion.

III. The Expert System must be able to explain how the conclusion was reached. This means that the Expert System inference/reasoning model workflow must be traceable.

Rationale. This is important as investigators need to know how the Expert System reaches a particular conclusion to be able to defend the conclusion.

IV. The Expert System must be scalable to ongoing aviation accident and incident investigation requirements in terms of ease of adding (Learning) new aircraft accident and incident expert knowledge and must be able to handle incomplete and unreliable data sets.

Rationale. It is important to minimise the Expert System downtime whenever new knowledge is added to the Expert System.

V. The Expert System must be able to accept evidence information in a wide range of formats, including multimedia (image, audio, video, text, file).

Rationale. This is important as such integration will provide the investigators with a fuller picture of the scenario.

VI. The Expert System must be able to detect aircraft accident and incident trends and identify similar aircraft occurrences, including their probable cause with an associated confidence level and/or safety recommendations.

Rationale. This is important as the investigators will be able to review the trends that are similar to the occurrence in question.

VII. The Expert System must include secure data storage to keep the aviation accident and incident investigation data internally safe to maintain the integrity of the database, and all data entry must follow a security protocol.

Rationale. This is important as most aviation accident and incident investigations are confidential and also require secure collaboration.
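Requirement I above asks that the agreement between the Expert System and the human expert be measurable. One possible way to quantify this, offered here only as an illustrative assumption and not as the validation method of this paper, is to report the observed agreement together with Cohen's kappa, which corrects the agreement for chance. The label lists below are made up.

```python
from collections import Counter


def agreement_and_kappa(system, expert):
    """Return (observed agreement, Cohen's kappa) for two equal-length label lists."""
    if len(system) != len(expert) or not system:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(system)
    observed = sum(s == e for s, e in zip(system, expert)) / n
    sys_counts, exp_counts = Counter(system), Counter(expert)
    # Agreement expected by chance from each rater's label frequencies.
    expected = sum(sys_counts[k] * exp_counts[k] for k in sys_counts) / (n * n)
    kappa = 1.0 if expected == 1.0 else (observed - expected) / (1.0 - expected)
    return observed, kappa


# Made-up conclusions for four occurrences.
system = ["lossOfControl", "controlissue", "lossOfControl", "StallWarning"]
expert = ["lossOfControl", "controlissue", "StallWarning", "StallWarning"]

observed, kappa = agreement_and_kappa(system, expert)
print(f"observed agreement = {observed:.2f}, kappa = {kappa:.2f}")
```

This sketch covers only the agreement part of Requirement I; the confidence level attached to an individual conclusion would have to come from the chosen inference model itself.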

4.6 Technologies

The technologies to be adopted depend on the type of problem to be solved and the methods applicable to that problem type. The problem to be solved refers to the type of data processing task selected. The method that can be used with the selected data processing task refers to the type of Learning algorithm(Reference Qiu26).

4.6.1 Machine learning-based Expert Systems

Figure 5 shows the Machine Learning data processing task types: Supervised Learning (classification and regression) and Unsupervised Learning (clustering). Examples of Learning algorithm types are Support Vector Machines, Linear Regression, K-Means and Neural Networks(27).

Figure 5. Machine learning types and algorithms(27).

4.6.1.1 Data processing tasks: Supervised – Classification and Regression; Unsupervised – Clustering

In Supervised Learning, the Model is trained on known input and output data before it can predict future outputs. In Unsupervised Learning, by contrast, the aim is to locate the hidden patterns or intrinsic structures in the input data(27).

Supervised Learning requires training using labelled data with inputs and desired outputs. The labelled data is part of a given dataset (which can be stored in a spreadsheet or .csv format) and includes a set of feature variables (i.e., data points) and the right answers (i.e., labels) corresponding to the data points(Reference Rebala, Ravi and Churiwala28). This means that a labelled dataset includes the class labels (responses/desired outputs/categories).

Unsupervised Learning does not require labelled data; the dataset contains only inputs, without desired targets(Reference Qiu26).

An example of aircraft evidence (labelled inputs) is (airframeOverspeedDuration>5sec and airframeOverspeed>5knot), which is related to the labelled output (with the tag expertCoding) lossOfControl. Other examples of labelled outputs are controlissue, incorrectConfiguration, StallWarning and UnstableApproach.
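A labelled dataset of this kind might be stored and loaded as sketched below. This is a minimal illustration: the column names (other than the expertCoding tag), their units and all row values are assumptions made for the example.

```python
import csv
import io

# Made-up rows; column names other than expertCoding are assumptions.
CSV_TEXT = """airframeOverspeedDuration_s,airframeOverspeed_kt,expertCoding
6.0,8.0,lossOfControl
1.0,2.0,controlissue
7.5,12.0,lossOfControl
"""


def load_labelled(text, label_column="expertCoding"):
    """Split each CSV row into a numeric feature dict and its class label."""
    features, labels = [], []
    for row in csv.DictReader(io.StringIO(text)):
        labels.append(row.pop(label_column))
        features.append({name: float(value) for name, value in row.items()})
    return features, labels


X, y = load_labelled(CSV_TEXT)
print(X[0], "->", y[0])
```

Each row pairs the input feature values (the data points) with the expert-assigned label, which is the form a supervised learning algorithm expects.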

Once the problem to be solved has been determined, the next step is to select the appropriate learning algorithm method for the data type to be used.

Classification is a supervised learning task in which a Model learns from training data to identify the category or class of the input features or data; the trained Model is called the classifier(Reference Rebala, Ravi and Churiwala28). During the Supervised Learning phase, the selected algorithm has to learn the key characteristics within each set of data points and the corresponding right answers (i.e., labelled responses). After the learning process, when a new query (i.e., a set of new data points in a format similar to the training data) is given to the trained algorithm, the algorithm should be able to predict the right answer(Reference Rebala, Ravi and Churiwala28). Classification algorithms usually apply to nominal (discrete/non-numerical) response values (output classes/categories)(27). An example of a categorical response is predicting whether an aircraft will have an accident or incident within a flight (a classification problem). The possible output classes are controlissue or lossOfControl.
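The learn-then-predict cycle can be illustrated with one of the simplest classifiers, a nearest-centroid classifier. The choice of classifier, the two numeric features and the training values are all assumptions made for this sketch; the paper does not prescribe a specific algorithm.

```python
from collections import defaultdict
from math import dist


def fit_centroids(X, y):
    """Learn one mean feature vector (centroid) per class label."""
    grouped = defaultdict(list)
    for point, label in zip(X, y):
        grouped[label].append(point)
    return {
        label: tuple(sum(col) / len(points) for col in zip(*points))
        for label, points in grouped.items()
    }


def predict(centroids, point):
    """Assign the class whose centroid is closest to the new data point."""
    return min(centroids, key=lambda label: dist(centroids[label], point))


# Made-up training data: (overspeed duration in s, overspeed in kt).
X_train = [(6.0, 8.0), (7.5, 12.0), (1.0, 2.0), (0.5, 1.0)]
y_train = ["lossOfControl", "lossOfControl", "controlissue", "controlissue"]

model = fit_centroids(X_train, y_train)
print(predict(model, (6.5, 9.0)))
```

The training step summarises the key characteristics of each class, and the prediction step applies that summary to a new query in the same format as the training data.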

Regression tasks predict continuous responses (outputs). In regression, the goal is to predict a continuous (numeric) measurement for an observation; that is, the response (output) variables are real numbers. Examples are temperature changes or power demand fluctuations(27).

Clustering is used to identify classes or clusters in a set of data objects, placing objects that are similar to each other in the same group(Reference Chug and Dhall29). Inferences can be drawn from datasets consisting of input data without labelled outputs (responses) through exploratory data analysis to find hidden patterns or groupings in the data. Clustering applications include gene sequence analysis, market research, and object recognition(27).
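As a minimal illustration of a regression task, the sketch below fits a straight line by ordinary least squares and predicts a continuous value. The temperature readings are made up for the example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up readings: hour of day vs. temperature in degrees Celsius.
hours = [0.0, 1.0, 2.0, 3.0]
temps = [10.0, 12.0, 14.0, 16.0]

slope, intercept = fit_line(hours, temps)
forecast = slope * 4.0 + intercept  # predicted temperature at hour 4
print(slope, intercept, forecast)
```

Unlike the classification case, the prediction here is a real number rather than a class label.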
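Clustering can be illustrated with a plain k-means sketch, one of the algorithm types named earlier. The observations are made up and unlabelled, and the deterministic start from the first k points is a simplification chosen to keep the example reproducible.

```python
from math import dist


def k_means(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centre, then re-average."""
    centres = list(points[:k])  # deterministic start: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centres[i]))
            clusters[nearest].append(p)
        # Keep the old centre if a cluster ends up empty.
        centres = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centres[i]
            for i, cluster in enumerate(clusters)
        ]
    return centres, clusters


# Made-up, unlabelled 2-D observations forming two loose groups.
points = [(1.0, 1.0), (8.0, 9.0), (1.5, 2.0), (9.0, 8.0), (1.0, 1.5), (8.5, 9.5)]
centres, clusters = k_means(points, k=2)
for centre, members in zip(centres, clusters):
    print(centre, members)
```

No labels are supplied at any point; the two groups emerge only from the distances between the observations.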

4.6.2 Rule-based and Fuzzy Logic (Rule-Based) Expert Systems

4.6.2.1 Rule-based

In a Rule-based Expert System, the Inference Engine processes queries using a Knowledge Base that is represented by the combination and association of human expert knowledge and classical logic (1 (true) or 0 (false))(Reference Nagori and Trivedi25).

Each rule and human-expert-knowledge combination is an independent piece of expert knowledge and consists of two parts: the IF part, called the antecedent (premise or condition), and the THEN part, called the consequent (conclusion or action). Examples of the action include ADD fact, REMOVE fact, MODIFY attribute, and QUERY the user for input. A rule can have multiple antecedents (IF conditions) joined by the keywords AND, OR, or a combination of both. An example of such domain expert knowledge, which combines the Human-Expert-Knowledge and IF-THEN rule and is stored in the Knowledge Base, is as follows(Reference Nagori and Trivedi25):

4.6.2.2 Fuzzy Logic (Rule-based)

The Fuzzy Logic System is based on Fuzzy logic (Fuzzy set theory), which operates on multi-valued logic. It expresses expert knowledge using vague and imprecise terms such as heavily reduced or moderately difficult(Reference Nagori and Trivedi25).

In the Fuzzy Logic System, expert knowledge is represented as a set of fuzzy logic IF-THEN conditional-action rules stored in the Knowledge Base (KB)(30). For instance, a single fuzzy IF-THEN rule and human expert knowledge combination may be represented as follows:

IF x is A THEN y is B

where

A and B are linguistic values defined by fuzzy sets in the input space ranges X and Y, respectively;

the IF part of the rule, x is A, is called the Antecedent, Premise or Condition; and

the THEN part of the rule, y is B, is called the Consequent, Conclusion or Action.

Further examples of the fuzzy IF-THEN rule and human expert knowledge combination forming the Knowledge Base may be represented as follows:

A benefit of the Fuzzy Logic System is that fuzzy logic aims to model human thinking, experience, and intuition in decision-making based on inaccurate data, and it is suitable for expressing vagueness and uncertainty(Reference Sremac31). The fuzzy rule-based inference model is also traceable, as pointed out by Bobek and Misiak(Reference Bobek and Misiak32). Therefore, when this Model (algorithm) is implemented in the Expert System, it can explain how a conclusion was reached and what evidence was used to reach it.
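The traceability of a fuzzy rule base can be illustrated with a minimal sketch. It assumes triangular membership functions and the minimum operator for AND; the fuzzy sets, their break-points, the rules and the input values are all hypothetical and serve only to show how each fired rule exposes the evidence behind it.

```python
def triangular(x, left, peak, right):
    """Triangular membership function: degree in [0, 1] that x belongs to the set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical fuzzy sets; every break-point is an illustrative assumption.
SETS = {
    ("duration_s", "long"): (2.0, 8.0, 14.0),
    ("overspeed_kt", "high"): (3.0, 10.0, 17.0),
    ("overspeed_kt", "low"): (-1.0, 0.0, 5.0),
}

# Each rule: (name, [(variable, linguistic value), ...] joined by AND, conclusion).
RULES = [
    ("F1", [("duration_s", "long"), ("overspeed_kt", "high")], "lossOfControl"),
    ("F2", [("overspeed_kt", "low")], "controlissue"),
]


def evaluate(inputs):
    """Return (rule, conclusion, firing strength, memberships) for each fired rule."""
    trace = []
    for name, antecedents, conclusion in RULES:
        degrees = {
            f"{var} is {term}": triangular(inputs[var], *SETS[(var, term)])
            for var, term in antecedents
        }
        strength = min(degrees.values())  # fuzzy AND taken as the minimum
        if strength > 0.0:
            trace.append((name, conclusion, strength, degrees))
    return sorted(trace, key=lambda item: item[2], reverse=True)


result = evaluate({"duration_s": 5.0, "overspeed_kt": 6.5})
for name, conclusion, strength, degrees in result:
    print(name, conclusion, strength, degrees)
```

Because every conclusion is returned with the rule that produced it and the membership degree of each input, the investigator can see which evidence contributed and how strongly.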

4.6.3 Hybrid-based Expert Systems

The standalone Artificial Neural Network and Fuzzy Logic System (i.e., their inference/reasoning functions) can be combined to create the Neuro-Fuzzy System, also known as the Hybrid System.

The Neuro-Fuzzy System is a machine learning technique in which the learning algorithm of the Artificial Neural Network (ANN) automatically tunes the Fuzzy Logic System component. It thus combines the human-like reasoning ability of the Fuzzy Logic System with the learning capability of the ANN, where the ANN improves the performance of the Fuzzy Inference System.

The Adaptive Neuro-Fuzzy Inference System (ANFIS) is one implementation variant of the Neuro-Fuzzy System(Reference Kour, Manhas and Sharma33).
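The idea of a learning algorithm tuning a fuzzy rule base can be sketched in a deliberately reduced form. The example below assumes a zero-order Sugeno-type system with two fixed Gaussian membership functions and tunes only the rule outputs (consequents) by gradient descent; a full ANFIS also adapts the membership function parameters, which is omitted here. All parameter values and the training data are made up.

```python
from math import exp


def gaussian(x, centre, width):
    """Gaussian membership degree of x in a fuzzy set."""
    return exp(-((x - centre) ** 2) / (2.0 * width ** 2))


def predict(x, centres, width, consequents):
    """Zero-order Sugeno output: firing-strength-weighted average of rule outputs."""
    weights = [gaussian(x, c, width) for c in centres]
    total = sum(weights)  # assumes x lies close enough to a centre that total > 0
    return sum(w * q for w, q in zip(weights, consequents)) / total


def train(data, centres, width, epochs=200, rate=0.5):
    """Tune the rule consequents by gradient descent on the squared error."""
    consequents = [0.0] * len(centres)
    for _ in range(epochs):
        for x, target in data:
            weights = [gaussian(x, c, width) for c in centres]
            total = sum(weights)
            error = predict(x, centres, width, consequents) - target
            # d(output)/d(consequent_i) is the normalised firing strength.
            consequents = [q - rate * error * w / total
                           for q, w in zip(consequents, weights)]
    return consequents


# Made-up data: a low input maps to 0.0 and a high input maps to 1.0.
CENTRES, WIDTH = [0.0, 10.0], 2.0
learned = train([(0.0, 0.0), (10.0, 1.0)], CENTRES, WIDTH)
print(learned, predict(5.0, CENTRES, WIDTH, learned))
```

The rule structure stays readable as IF-THEN statements while the numeric outputs are learned from data, which is the combination of traceability and learning that motivates the hybrid approach.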

4.7 Limitations of Expert Systems

A limitation of the Expert System is that its intelligence and accuracy (i.e., its inference model) depend on the quality of the system requirements, which must reflect the end user’s needs in the given discipline, and on the developers engaged during the development phase.

Generally, for applications in any discipline, the system requirements need to consider the type of problem to be solved, which determines the type of data processing task required based on the dataset type. Once the data processing task type has been defined, the method (algorithm) to be used with it needs to be defined next. In this light, the proposed Expert System framework aims to solve a supervised machine learning classification problem.

No single best method (algorithm) exists for a specific dataset (e.g., aircraft accident or incident evidence), and no one-size-fits-all solution exists either. The best algorithm/classifier type depends on the specific data type, and finding the right algorithm is partly a matter of trial and error. Choosing a suitable learning algorithm requires trading off one benefit against another, including model speed, accuracy and complexity(27).

Besides this, the selection of the inference model (i.e., algorithm/classifier) also needs to consider its other attributes. For example, the standalone Neural Network can learn from expert knowledge and work with imprecise data, but it does not have an explanation facility(Reference Nagori and Trivedi25). Most neural network architectures are black boxes(Reference Nitin34,Reference Abraham35). The standalone Fuzzy Logic System’s rule-based Inference is traceable(Reference Bobek and Misiak32), and its probabilistic reasoning can deal with uncertainty(Reference Nagori and Trivedi25). However, it cannot learn on its own to update the Knowledge Base(Reference Nagori and Trivedi25). Therefore, maintaining the Knowledge Base as new expert knowledge becomes available will take longer. Rule-based systems can also have difficulty dealing with missing values in the referenced dataset used to build the Model, as highlighted by Henderson(Reference Henderson36).

For a machine learning-based inference/reasoning model, the Expert System also depends on the relevance, quality, and size of the training dataset. The training dataset embeds the expert knowledge and the analysis workflow, such as the Reason Model, within the labelled input feature variables (data points) and the desired labelled outputs/responses.

Therefore, any aircraft accident and incident agency considering adopting an Expert System platform to support their investigations should review the preceding limitations. These are important considerations due to the resources required to develop and maintain the global expert knowledge dataset to keep it up to date for effective support of investigations.

4.8 Expert System framework for aircraft accident and incident investigations

Sub-section 4.1 describes the justification to focus on the third highlighted non-compliance issue: the lack of comprehensive and detailed training programs, and focusing on one specific shortcoming: poor investigation reporting and/or safety recommendations. This shortcoming can be traced back to the deficiency in the investigation’s evidence analysis phase and led to the proposed Expert System framework.

Sub-section 4.2 reiterates the third non-compliance issue highlighted by the ICAO USOAP: CMA (sub-section 3.3), gives an example overview of the ATSB aircraft accident and incident investigation framework, and then addresses the gaps in focus with two objectives.

This sub-section provides details of the proposed Expert System framework for aircraft accident and incident investigations.

4.8.1 The proposed Expert System

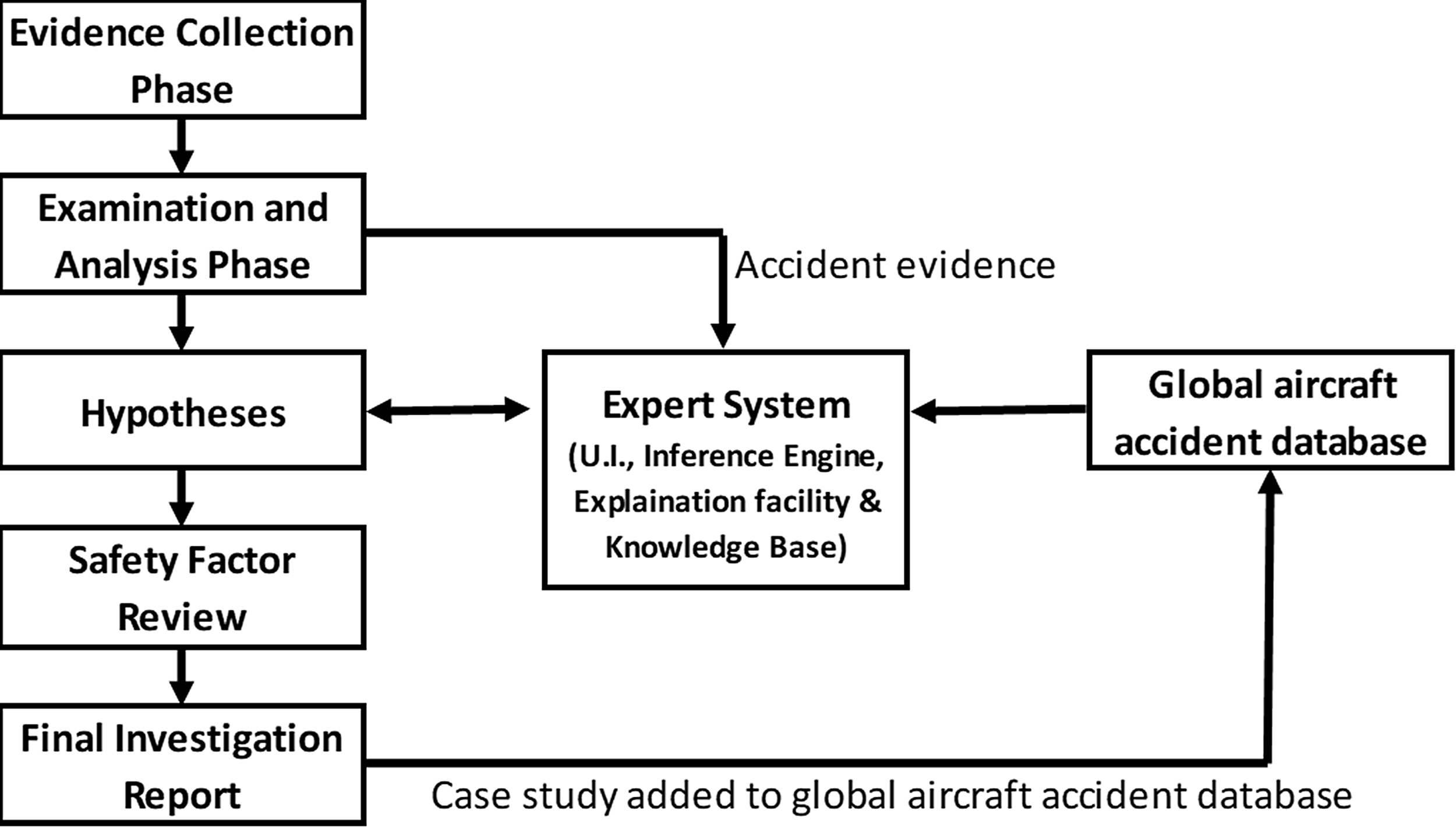

Figure 6 shows the proposed Expert System framework that will incorporate the preceding suggested requirements as an additional tool that the aircraft accident investigators can use to support their role in the investigations’ evidence analysis phase. The evidence used and the conclusion inferred from that evidence will flow into the final phase: investigation reporting and/or safety recommendations. Therefore, a high-quality (consistency and accuracy) evidence analysis phase will directly benefit the investigation’s final phase.

Figure 6. Proposed aircraft investigation framework with integrated Expert System support.

The proposed Expert System framework is similar to common practice, with an additional tool added to support data analysis and decision-making. A global accident database holds data from many aircraft accident and incident reports (prepared by human experts) used to train the Expert System. Its inferred conclusion will also be linked to resources in a wide range of multimedia formats (accident-related images, audio, videos, texts, files).

The Expert System block in the proposed framework is also similar to the general Expert System architecture discussed in sub-section 4.4 (working principles), consisting of three main components: User-Interface (UI), Knowledge Base, and Inference-(reasoning)-Engine, as shown in Fig. 6.

The user’s queries will be entered into the Expert System using the UI. The UI passes the queries to the Inference-(reasoning)-Engine, which is the core evidence analysis component responsible for emulating the Examination and Analysis Phase and Hypothesis testing of the traditional human-based workflow. The inferred conclusion of the analysis will be displayed using the UI.

The added value of the proposed Expert System framework is manifold. The Expert System can ask for additional information if required to reach a hypothesis with reasonable confidence. Its intelligence comes from its ability to learn from human experts’ expertise and experience, primarily inferred from past aircraft accident and incident reports. The Expert System can explain how it reaches a hypothesis of a probable cause (traceability). This is useful for training new or inexperienced investigators and for generating confidence.

The Expert System can also support the aircraft accident investigation process by rapidly correlating available evidence, in multiple formats, with past accidents. It is unbiased, can learn from events, quickly identify trends, and add new data to the database. This will also support more experienced investigators in terms of the consistency of findings.

When key investigators are absent, whether on leave or having left the agency, the expert knowledge stored in the Expert System means that the agency can still progress the investigations at hand with minimal expected impact.

5.0 CONCLUSIONS

In 1951, ICAO first adopted the SARPs for aircraft accident inquiries and designated them as Annex 13. Today, 193 Member States adopt the Annex 13 framework for their aircraft accident and incident investigations. ICAO also conducts the USOAP: CMA safety audits to determine the capability of the Member States’ Safety Oversight Systems. The (2016 – 2018) results of the eight audit areas (sub-section 3.2) show that 46% of the audited Member States complied (audit 2022 target results: 75% EI). This suggests that the Member States that are well prepared with the necessary prerequisites (infrastructure and resources) can apply Annex 13 and the other audited frameworks successfully. In contrast, the underperformance of the remaining 54% of the Member States can be attributed primarily to their lack of the necessary prerequisites to apply ICAO Annex 13 and the other audited frameworks.

Subsection 3.3 provides evidence of the Member States’ misalignments in applying Annex 13. The evidence comes from the ICAO USOAP: CMA audit results (2016 – 2018), which highlight six important non-compliance issues in the aircraft accident and incident investigation area (also known as the Accident Investigation Group (AIG) per ICAO USOAP: CMA).

This paper proposes an Expert System framework with two objectives to close the gap in one specific shortcoming: poor investigation reporting and/or safety recommendations. This specific shortcoming arises from the third AIG non-compliance issue highlighted by ICAO: more than 60% of the Member States have not developed a comprehensive and detailed training program to train their investigators. The shortcoming can also be traced back to a deficiency in the preceding phase, the evidence analysis phase, which led to the proposal of an Expert System.

The two objectives, namely objective one, to support the investigation evidence-analysis phase of an accident, and objective two, to support the on-the-job ‘field’ training through self-discovery, are expected to provide a reasonably acceptable level of improvement. This is despite the underperforming Member States’ lack of the infrastructure and resources necessary to train new and untrained investigators in the core evidence analysis phase.

The Expert System’s ability to continuously learn new knowledge from past accidents will keep it up-to-date and enhance its effectiveness as a supporting tool for the 193 Member States’ aircraft investigators, especially the new or inexperienced investigators.

Expert Systems are not new. Many disciplines, such as medicine and engineering, have been using them for years, but there is a lack of published research on Expert Systems for aircraft accident and incident investigations. Therefore, this research is expected to stimulate more research activity in this area.

Many Member States have a unified investigation workflow for various transport modes such as aviation, rail, and marine. With this research, it is assumed that the identified Expert System architecture and workflow can be applied to other transport modes with similar results within the same Member States.

Finally, the proposed Expert System framework is a matter of relative global urgency because establishing the infrastructure prerequisites for the Core-Evidence-Analysis Process takes a long time, especially for the Member States that have underperformed and lack the resources.

ACKNOWLEDGEMENT

This research is supported by an Australian Government Research Training Program Scholarship.